1. Introduction

Ozone, a common air pollutant, is produced by a series of complex photochemical reactions, and severe ozone pollution can be extremely destructive to both human health and the ecological balance. Unlike ozone at the surface, whose production and variation follow a different pattern, ozone in the mesosphere is not only sensitive to the dynamics of the upper and lower atmosphere but is also considered an atmospheric constituent that can be actively altered by human influence.

Ozone at the cold-point mesopause (O3-CPM) refers to the ozone concentration near the mesopause, the boundary between the mesosphere and the thermosphere. This region plays a critical role in atmospheric radiative balance and chemical processes, influencing both climate dynamics and space weather. O3-CPM is particularly sensitive to solar ultraviolet radiation and atmospheric circulation patterns, which makes it a valuable indicator for studying long-term atmospheric changes and anthropogenic impacts on the upper atmosphere.

Accurate prediction of ozone is therefore important for reducing greenhouse gas emissions and maintaining ecological balance [1]. Specifically, predicting O3-CPM is essential for understanding the coupling processes between atmospheric layers, assessing the impact of space weather on Earth’s environment, and evaluating the long-term effects of human activities on the upper atmosphere.

However, ozone in the mesopause region is influenced by many factors and takes part in complex chemical reactions, which makes predicting O3-CPM a challenging task. The variation in O3-CPM is driven by multiple factors, including solar cycle activity, atmospheric wave dynamics, temperature fluctuations, and chemical interactions with species such as atomic oxygen and hydrogen. Moreover, increasing evidence suggests that anthropogenic emissions, such as greenhouse gases and halocarbons, can indirectly affect mesospheric ozone through changes in atmospheric temperature and circulation [1,2]. For instance, rising CO2 levels lead to mesospheric cooling, which may alter O3 production and loss rates. A considerable amount of research has been devoted to ozone prediction in the troposphere [3,4,5,6,7], whereas relatively little research has addressed the prediction of O3-CPM. Recent studies have further explored the dynamics of mesospheric ozone and the application of hybrid models [8,9,10], highlighting the growing recognition of the complex interplay among solar activity, atmospheric chemistry, and anthropogenic influences in the mesosphere. Because machine learning algorithms can achieve good prediction results with high computational efficiency from a relatively small amount of input data [11], machine learning models are increasingly being applied to forecast variations in ozone.

Traditional machine learning models have good predictive ability for nonlinearly varying data, and many scholars have used them for ozone prediction. For example, ref. [12] discussed the potential of random forest and RNN models to replace traditional atmospheric prediction models. The reliability of support vector machines in predicting ozone changes has also attracted the attention of several scholars [13,14]. Additionally, recurrent neural networks (RNNs), artificial neural networks [15], long short-term memory (LSTM) networks [16], and seasonal autoregressive integrated moving average (SARIMA) models [17,18,19,20] have been widely used in ozone prediction. Although these models offer significant advantages over traditional statistical models for ozone prediction [21,22], a common drawback is their reliance on a single model. When the influencing factors are complex, the accuracy of such single models may decrease over longer prediction horizons [23]. Therefore, most scholars favor linear or nonlinear combinations of multiple models. By integrating models that capture different aspects of the data, overall performance can often be enhanced [24]. Numerous studies have demonstrated that decomposing a complex problem into multiple manageable subproblems through hybrid models can simplify the modeling process while improving prediction accuracy and model robustness. In ref. [5], the original ozone series was first decomposed using complete ensemble empirical mode decomposition (CEEMD) and then predicted using an optimized hybrid model, effectively overcoming the limitations of a single model and improving prediction accuracy. Time series of meteorological factors often exhibit unpredictable and stochastic components due to seasonal changes and unexpected events such as human activities. Therefore, prediction models that capture both periodic trends and stochastic components well tend to achieve high prediction accuracy [25,26,27,28]. In addition, ref. [29] verified, by predicting surface ozone concentration, the effectiveness of long short-term memory networks based on multistage differential embedding in capturing the linear trend and periodicity of time series. Ref. [30] hybridized the SARIMA and SVM models; the hybrid model could handle both linear and nonlinear features, which effectively improved the accuracy of ozone prediction.

This paper proposes a hybrid SSA-SARIMA-GSVR model for forecasting O3-CPM, building on traditional decomposition-and-combination approaches. Singular spectrum analysis (SSA) is used to extract the components of the original series. A reconstruction threshold (RT) is then introduced to group components with distinct characteristics into two reconstructed sequences. To account for the seasonal cyclic variation and the major trends of the O3-CPM time series, the SARIMA model is used to predict the RT reconstructed sequence. Given the potential of support vector regression (SVR) optimized by the Grey Wolf Optimizer (GWO) to capture stochastic components and noise, this GSVR model is applied to predict the N-RT reconstructed sequence. Comparing the hybrid model with single and dual hybrid models shows that SSA-SARIMA-GSVR exploits the respective advantages of the SARIMA and GSVR models without significantly increasing computation time and provides high-precision predictions of O3-CPM. All experiments were conducted using conda 23.7.4 and Python 3.11.

3. Construction of SSA-SARIMA-GSVR Hybrid Model

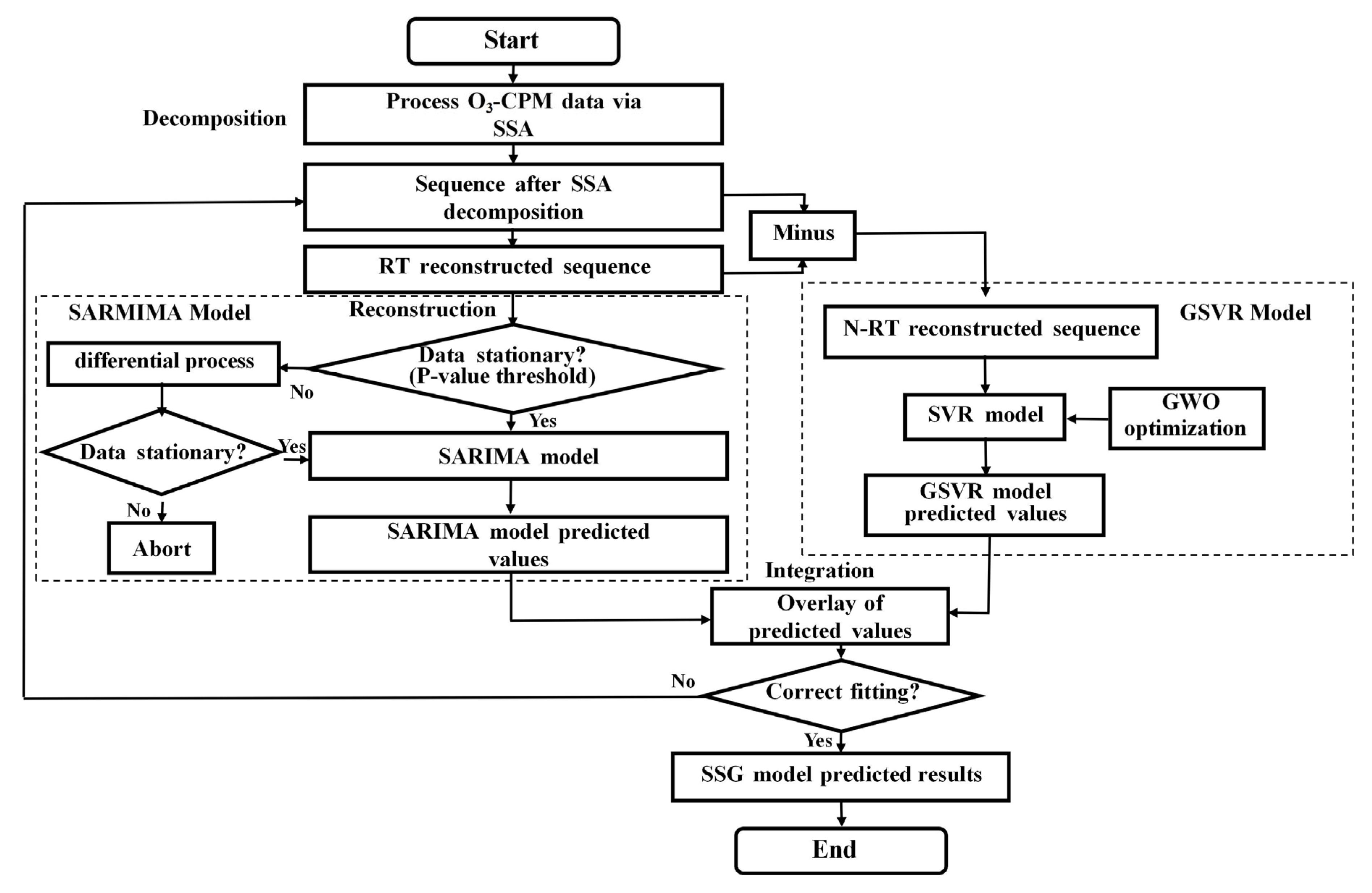

Figure 1 depicts the general structure of the proposed SSA-SARIMA-GSVR hybrid model. Similar to the traditional fusion approach for time series forecasting models, the SSA-SARIMA-GSVR model also follows the logic of decomposition followed by combination.

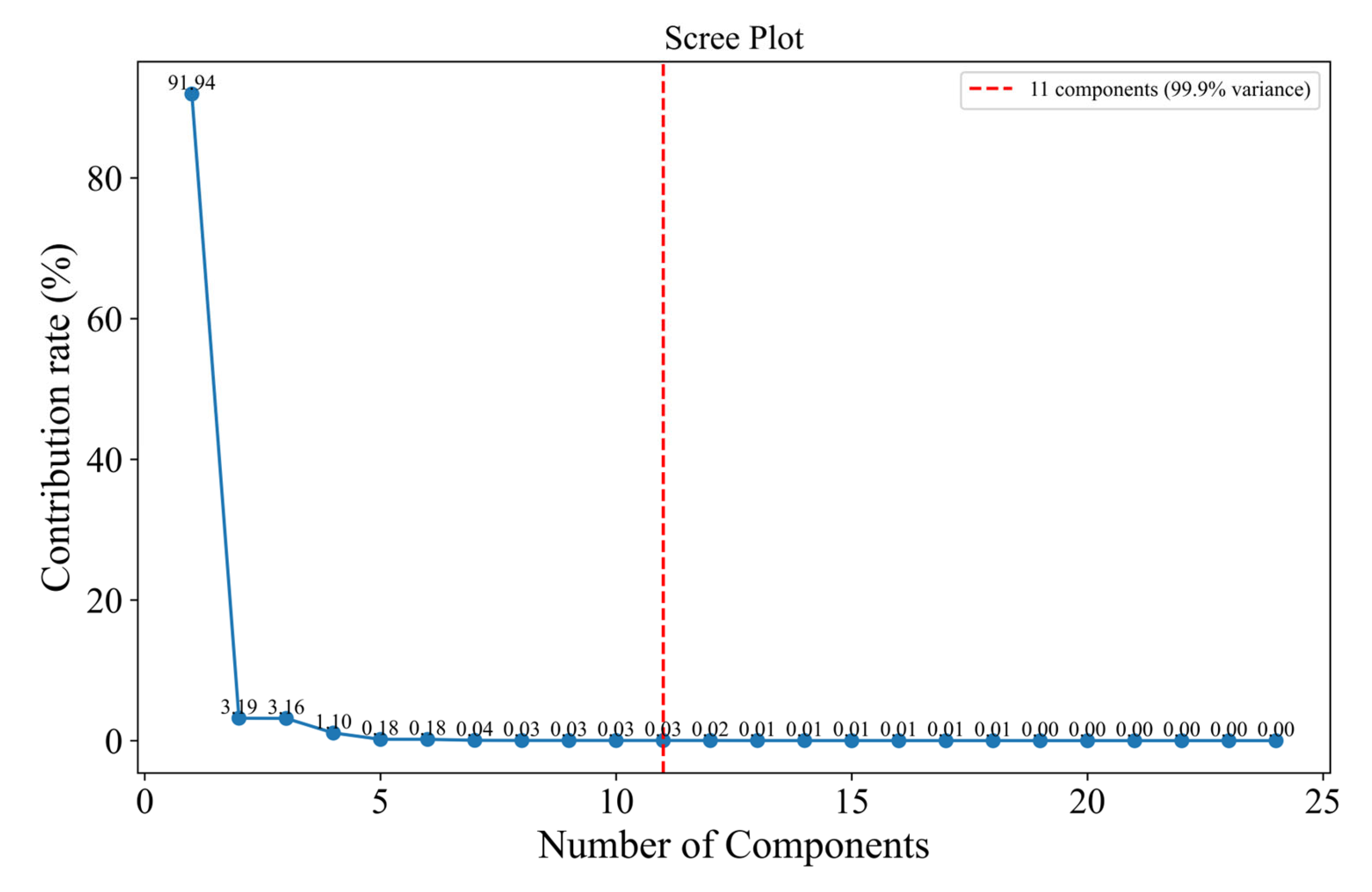

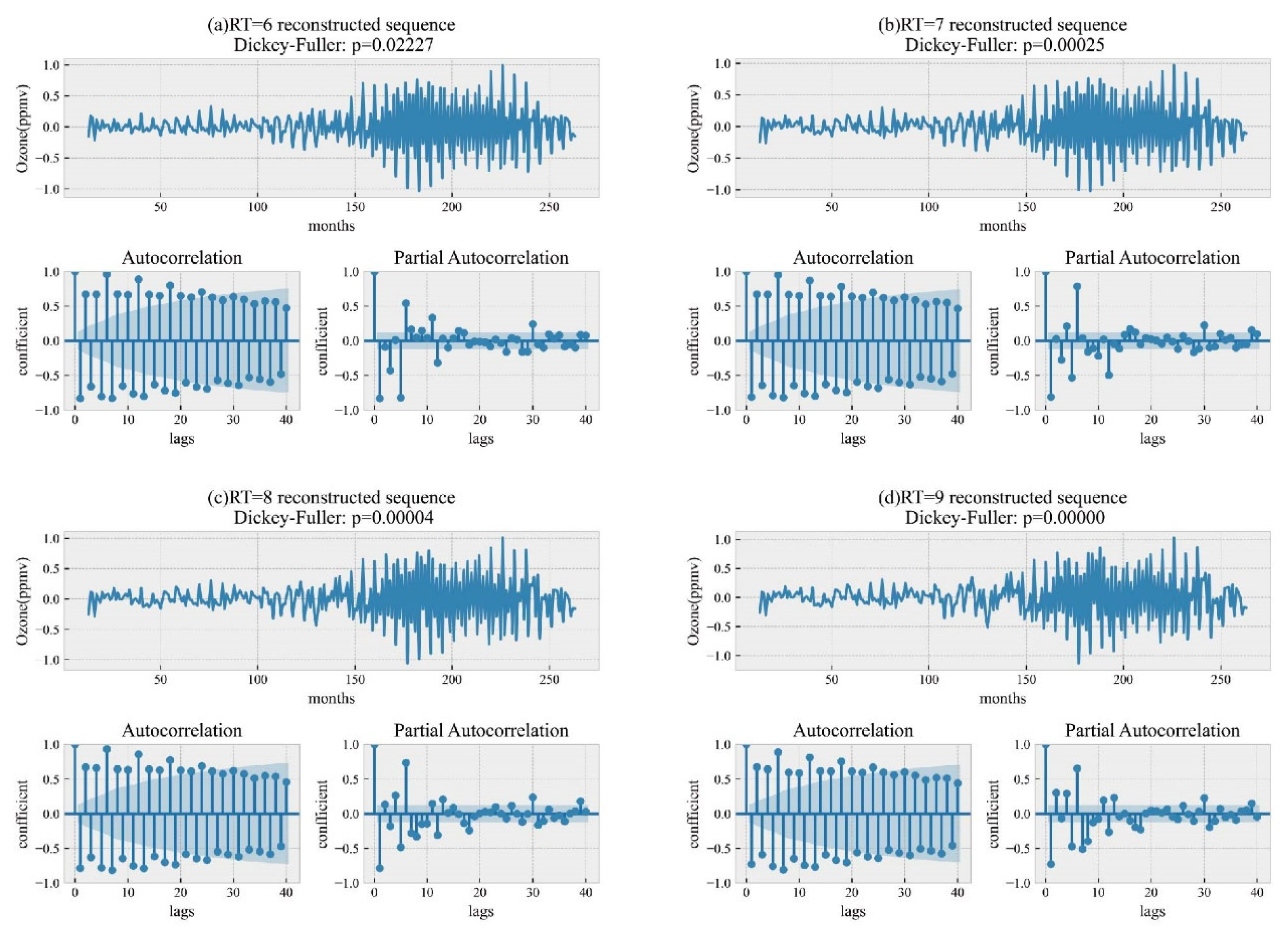

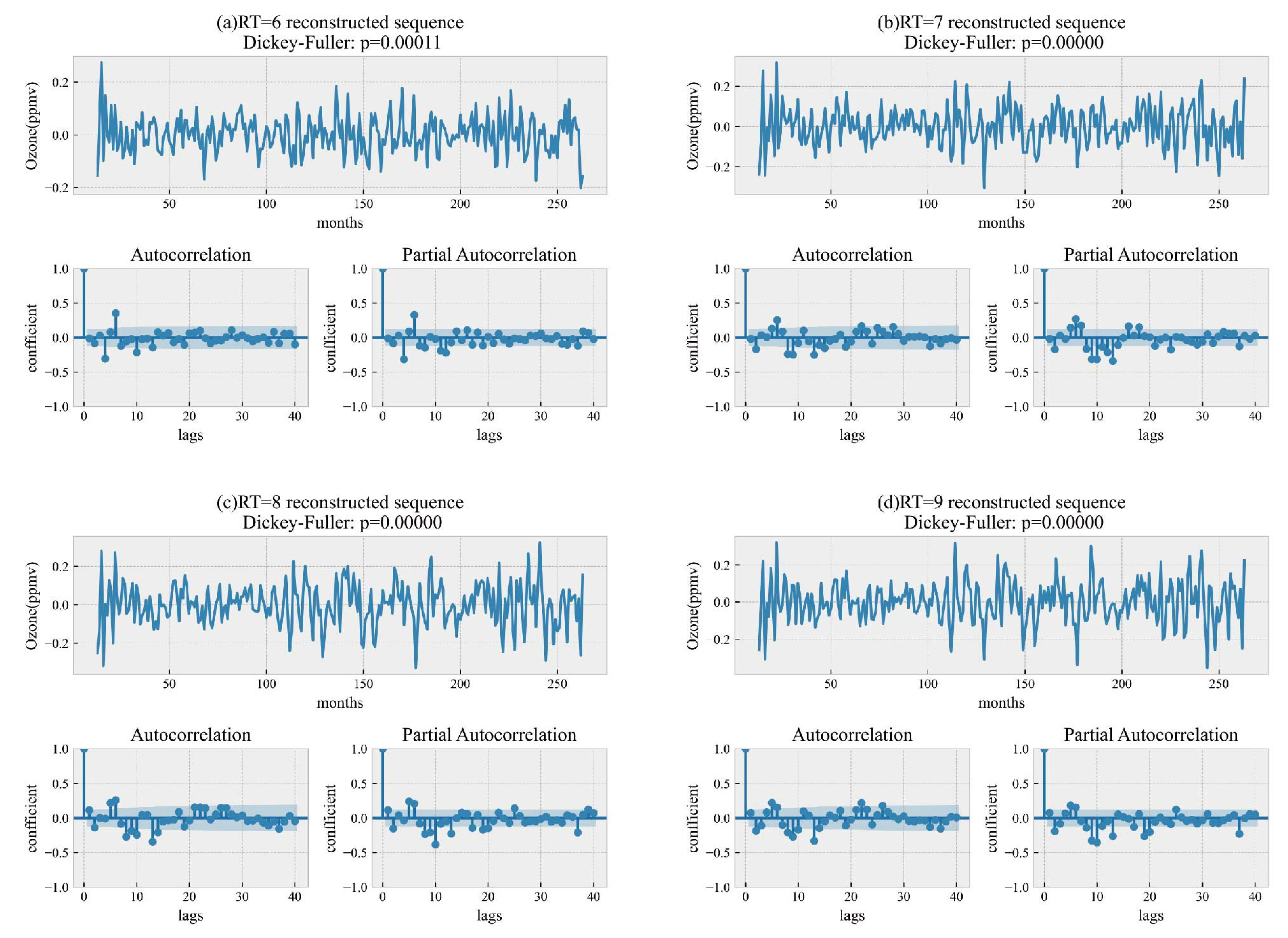

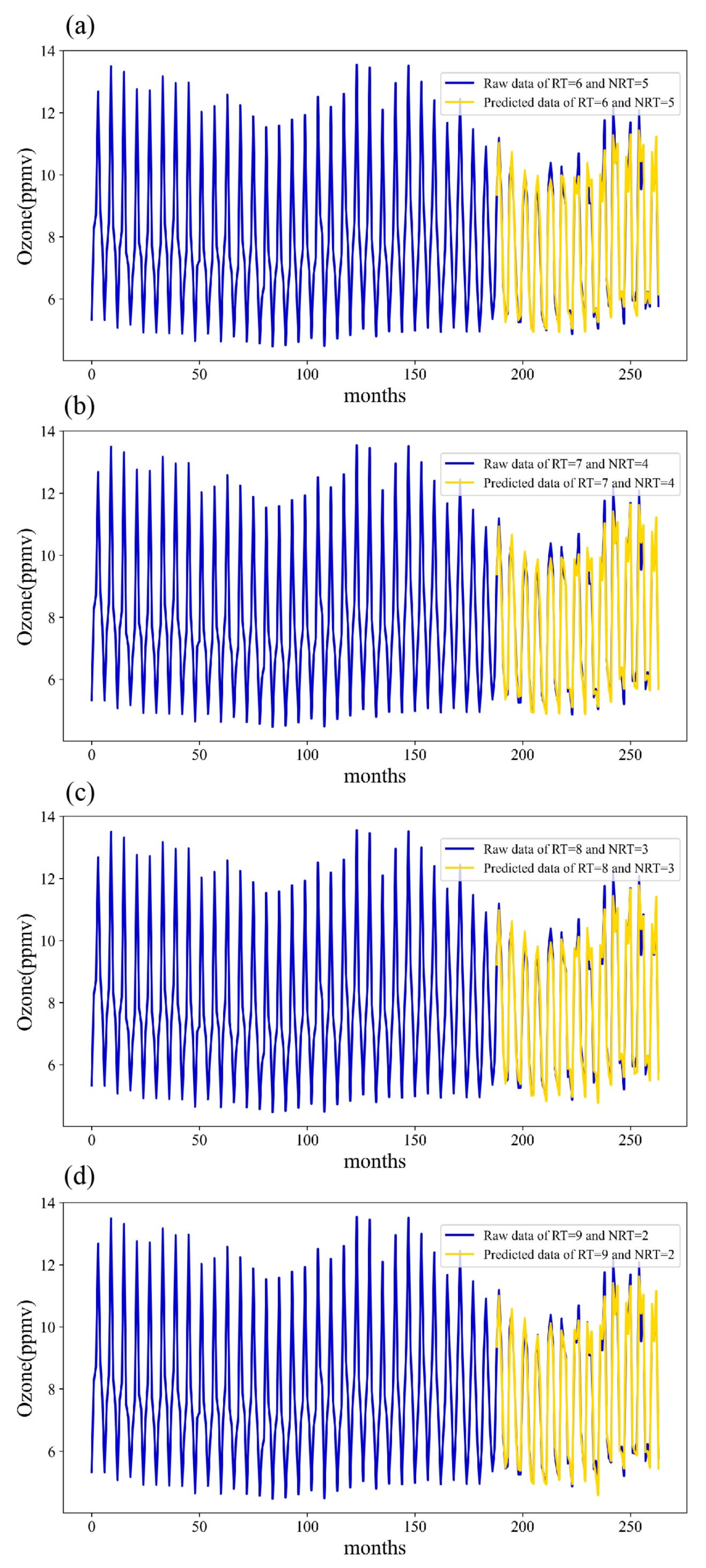

However, it introduces the concept of a reconstruction threshold (RT) to further categorize the N components obtained from the SSA decomposition. RT is defined as the number of leading SSA components whose cumulative contribution to the total variance of the original time series reaches or exceeds a predefined threshold η (e.g., 99.5%). Formally, if the eigenvalues associated with the SSA components (the squared singular values of the trajectory matrix) are ordered as λ1 ≥ λ2 ≥ ... ≥ λN, then RT is the smallest integer k such that

$$\frac{\sum_{i=1}^{k} \lambda_i}{\sum_{i=1}^{N} \lambda_i} \geq \eta .$$

The first k components of the SSA decomposition are regarded as the primary components when RT = k.

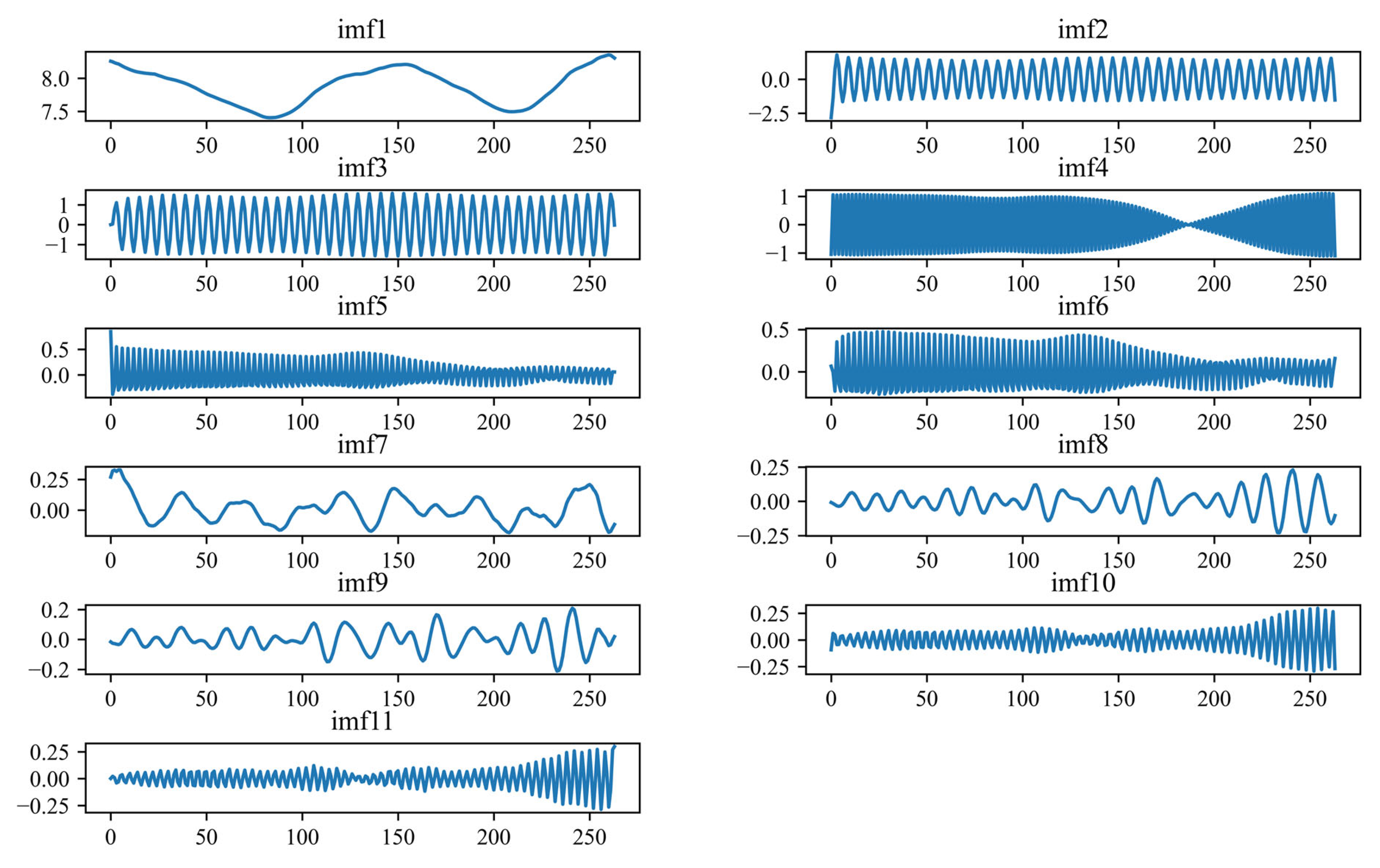
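The RT rule above can be sketched in a few lines, assuming the eigenvalues of the SSA components are already available (for example, as squared singular values of the trajectory matrix). The function name `reconstruction_threshold` and the default η = 0.995 (the example threshold from the text) are illustrative choices, not the authors' code.

```python
import numpy as np

def reconstruction_threshold(eigenvalues, eta=0.995):
    """Smallest k whose leading eigenvalues account for at least `eta` of the total."""
    # Sort in descending order: lambda_1 >= lambda_2 >= ... >= lambda_N
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]
    # Cumulative contribution ratio of the first k components, k = 1..N
    ratio = np.cumsum(lam) / lam.sum()
    # First index where ratio >= eta (side="left"); +1 turns a 0-based index into a count
    k = int(np.searchsorted(ratio, eta, side="left")) + 1
    # Guard against floating-point round-off when eta is 1.0
    return min(k, lam.size)
```

For instance, with eigenvalues `[50, 30, 15, 4, 1]` and `eta=0.9`, the cumulative ratios are 0.50, 0.80, 0.95, ..., so the function returns 3.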

Reconstructing these components yields the noise-reduced RT time series, and reconstructing the (k+1)th to Nth components yields the noisy N-RT sequence. Because the SARIMA model is proficient at capturing the major trends and periodic components retained in the noise-reduced RT series, it is used to predict the RT reconstructed series. In addition, a kernel-based GSVR model is introduced to capture the noise and random components in the N-RT sequence. Assigning each reconstructed sequence to the model best suited to it allows more focused predictions of the decomposed components of the O3-CPM series and thereby increases the final prediction accuracy of the SSA-SARIMA-GSVR model. In this study, the original O3-CPM sequence is decomposed into 11 components using SSA. After analysis, the RT reconstruction of the first eight components is used as the input to the SARIMA model, and the N-RT reconstruction of the remaining three components is used as the input to the GSVR model. The final forecast is obtained by superimposing (summing) the outputs of the two models.
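A minimal basic-SSA sketch (embedding, singular value decomposition, diagonal averaging) illustrates how the RT and N-RT sequences can be formed. The window length of 11 (chosen only so that 11 components are produced, matching the count in the text), the synthetic input series, and the helper names `ssa_components` and `split_rt` are assumptions; the actual window length and data are not given in this section. Because the elementary components sum back to the original series, the RT and N-RT sequences also sum to it, which is what allows the two model forecasts to be superimposed.

```python
import numpy as np

def ssa_components(x, window):
    """Basic SSA: return the elementary components (one per row) and their eigenvalues."""
    x = np.asarray(x, dtype=float)
    k = x.size - window + 1
    # Trajectory (Hankel) matrix: column j holds the lagged window x[j : j + window]
    traj = np.column_stack([x[j:j + window] for j in range(k)])
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    components = []
    for i in range(s.size):
        elem = s[i] * np.outer(u[:, i], vt[i])  # rank-1 elementary matrix
        # Diagonal averaging: after flipping the rows, each diagonal collects the entries
        # elem[r, c] that share the time index r + c, so its mean is the value at that time.
        flipped = elem[::-1]
        components.append([flipped.diagonal(off).mean()
                           for off in range(-(window - 1), k)])
    return np.array(components), s ** 2  # eigenvalues = squared singular values

def split_rt(components, k):
    """Sum the first k components (RT) and the remaining ones (N-RT)."""
    return components[:k].sum(axis=0), components[k:].sum(axis=0)

# Illustrative monthly-like series: linear trend + annual cycle + noise (synthetic data)
rng = np.random.default_rng(0)
t = np.arange(120)
series = 0.01 * t + np.sin(2 * np.pi * t / 12) + 0.1 * rng.standard_normal(t.size)

components, eigenvalues = ssa_components(series, window=11)
rt, n_rt = split_rt(components, k=8)  # first 8 components -> RT, last 3 -> N-RT
# In the hybrid model, SARIMA would forecast `rt`, GSVR would forecast `n_rt`,
# and the two forecasts would be added together (forecasters not shown here).
```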

6. Discussion

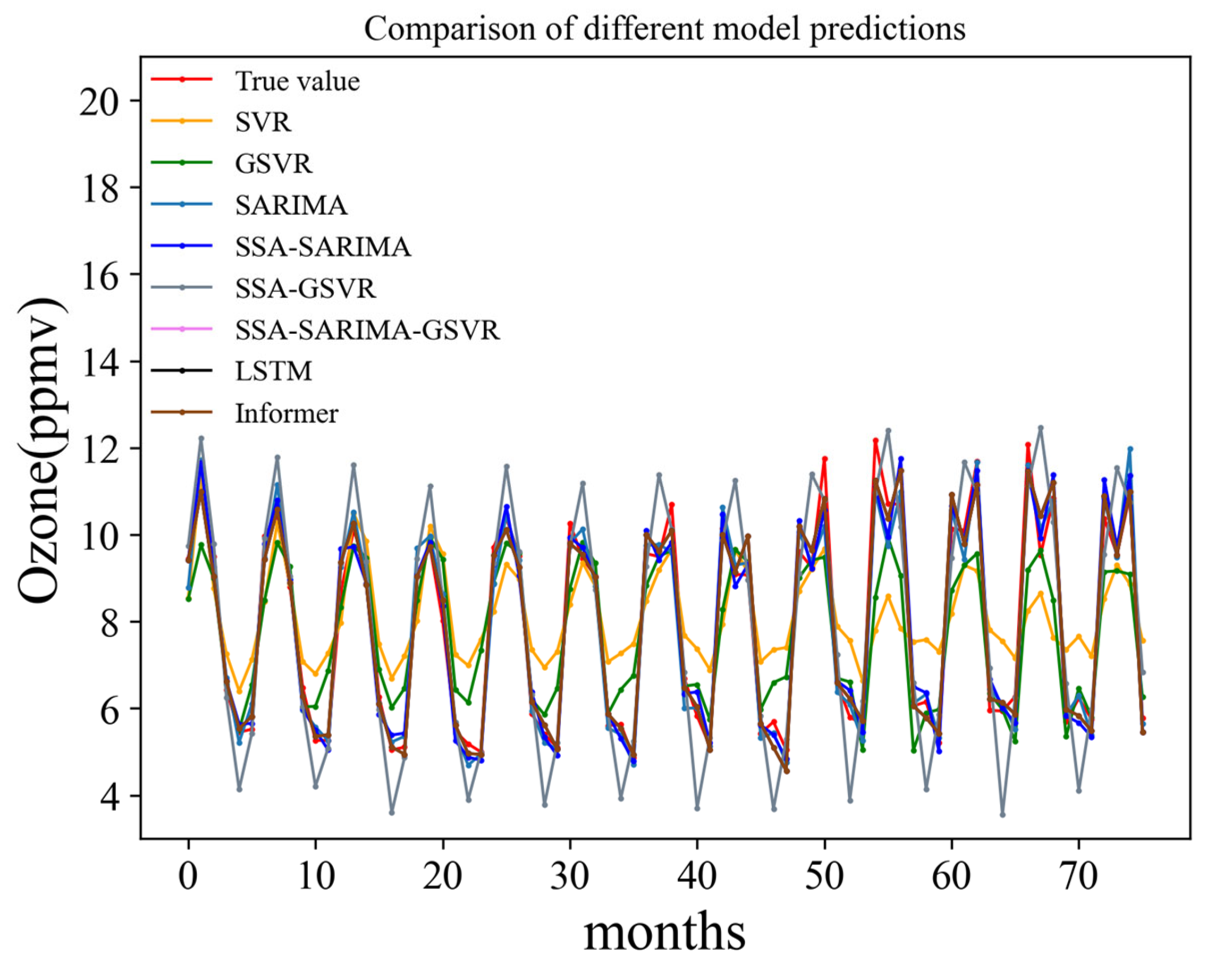

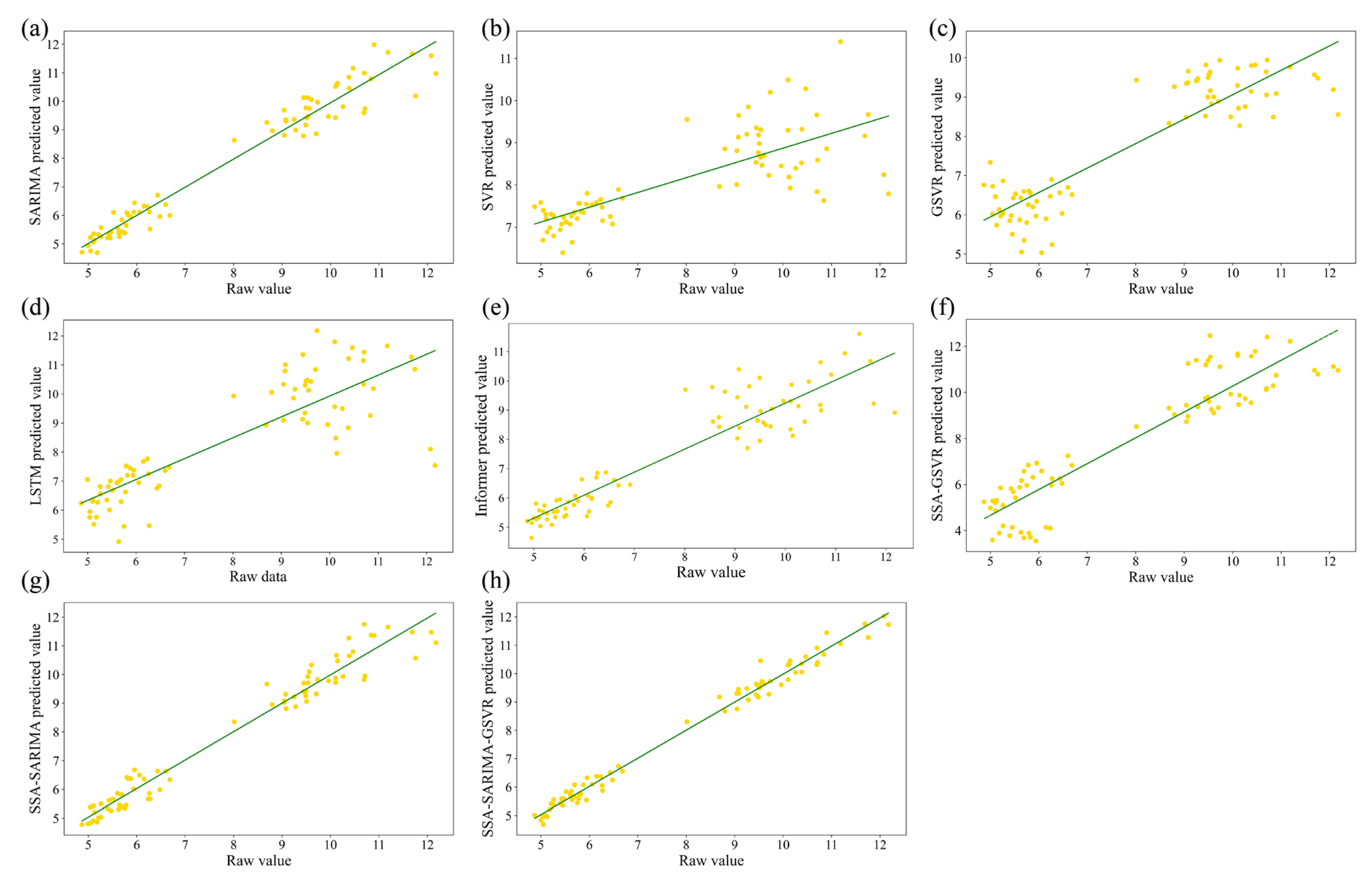

Owing to its flexibility in capturing trends and seasonal patterns, the SARIMA model exhibits the best predictive performance among the five single prediction models. It has the highest goodness of fit, with an R² of 0.957, together with the lowest RMSE (0.476), MAPE (0.045), MAE (0.366), TIC (0.029), and SSE (17.25).

Table 8 lists the seven metrics used to evaluate model performance. Larger values of R² and IA indicate a better model; for the other metrics, smaller values indicate lower prediction error. As Table 8 shows, the SSA-SARIMA hybrid model reduces RMSE, MAE, TIC, and SSE by 6.7%, 1.6%, 6.9%, and 13.2%, respectively, compared with the best single model, SARIMA. These results show that SSA decomposition effectively separates the major trends and cycles in the original series and reduces noise, thereby improving the forecasting ability of the SARIMA model. In addition, the runtimes of the SSA-GSVR and SSA-SARIMA models did not increase significantly compared with the single models. The runtime of the proposed SSA-SARIMA-GSVR model increased by only 12.58 s compared with the SARIMA model (from 86.90 s to 99.48 s, an increase of about 14.5%). This is because, unlike other time series decomposition methods such as variational mode decomposition (VMD), SSA does not require an iterative process. The SSA step therefore adds relatively little computational complexity, and the computation time of the model remains relatively stable. This increase is marginal given the substantial improvement in prediction accuracy (e.g., a 45.8% reduction in RMSE). For practical O3-CPM forecasting, which typically deals with monthly data and long-term trends, an additional runtime of this magnitude is negligible and does not affect the model’s operational utility.
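The formulas behind Table 8 are not reproduced in this section, so the sketch below uses common textbook definitions of the seven metrics, which we assume match the paper's: TIC is taken as Theil's inequality coefficient and IA as Willmott's index of agreement. MAPE is returned as a fraction, which appears consistent with the value of 0.045 quoted above. The function name `evaluate` is hypothetical.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """Compute seven forecast metrics using common textbook definitions (assumed)."""
    y = np.asarray(y_true, dtype=float)
    f = np.asarray(y_pred, dtype=float)
    err = y - f
    y_mean = y.mean()
    sse = float(np.sum(err ** 2))                      # sum of squared errors
    rmse = float(np.sqrt(np.mean(err ** 2)))           # root mean squared error
    mae = float(np.mean(np.abs(err)))                  # mean absolute error
    mape = float(np.mean(np.abs(err / y)))             # fraction, not percent; needs y != 0
    # Theil's inequality coefficient: 0 means a perfect forecast
    tic = rmse / float(np.sqrt(np.mean(y ** 2)) + np.sqrt(np.mean(f ** 2)))
    r2 = 1.0 - sse / float(np.sum((y - y_mean) ** 2))  # coefficient of determination
    # Willmott's index of agreement: 1 means perfect agreement
    ia = 1.0 - sse / float(np.sum((np.abs(f - y_mean) + np.abs(y - y_mean)) ** 2))
    return {"R2": r2, "IA": ia, "RMSE": rmse, "MAE": mae,
            "MAPE": mape, "TIC": tic, "SSE": sse}
```

Under these definitions SSE equals the number of test points times RMSE², so the two metrics always move together for a fixed test set.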

However, simply combining SSA with the SARIMA model yields only a limited improvement in prediction accuracy for the O3-CPM sequence. It is therefore necessary to introduce the GSVR model to predict specifically the stochastic components and other irregular elements contained in the O3-CPM sequence. In addition, the SSA-SARIMA model predicted better than the SSA-GSVR model. The main reason is that the RT reconstructed sequence used for SARIMA prediction after SSA decomposition carries most of the information about trends and periodicities, which contributes substantially to predicting the sequence. In contrast, the N-RT sequence, which the GSVR model is well suited to predict, consists largely of nonlinear fluctuations that contribute little to the overall prediction. These factors explain why the SSA-GSVR model is less accurate than the SSA-SARIMA model.

Although SARIMA is already the best single model, the SSA-SARIMA model still improves on it: its R² and IA are 0.2% and 0.5% higher, respectively, than those of the SARIMA model. Compared with the best dual hybrid model, the SSA-SARIMA-GSVR hybrid model reduces RMSE, MAPE, MAE, TIC, and SSE by 40.9%, 39.1%, 41.1%, 40.7%, and 65.4%, respectively. The improvement of the proposed SSA-SARIMA-GSVR model over the SARIMA model is even larger: its RMSE, MAPE, MAE, TIC, and SSE are reduced by 45.8%, 37.8%, 42.1%, 44.8%, and 70.0%, respectively, while R² and IA are improved by 3.1% and 0.8%, respectively. This indicates that the predictive performance of the SSA-SARIMA-GSVR model, based on the decomposition-and-categorization combination approach, surpasses that of every single and dual hybrid model compared in this study.
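The percentages in this section are read as relative changes with respect to a baseline model. This convention is our assumption, but it agrees with the runtime figures quoted earlier (86.90 s to 99.48 s, about 14.5%). A small helper makes the arithmetic explicit; the name `relative_change` is hypothetical.

```python
def relative_change(baseline, new):
    """Percentage change of `new` relative to `baseline`; negative values are reductions."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (new - baseline) / baseline

# Runtimes quoted in the text: SARIMA 86.90 s, SSA-SARIMA-GSVR 99.48 s
runtime_change = relative_change(86.90, 99.48)
print(f"runtime change: {runtime_change:+.1f}%")  # prints "runtime change: +14.5%"
```

Under this convention, a reported "reduction of X%" in an error metric corresponds to `relative_change(baseline, new) == -X`, and an "improvement" in R² or IA corresponds to a positive value.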

The superior performance of the SSA-SARIMA-GSVR hybrid model can be attributed to its effective “divide-and-conquer” strategy. The SSA decomposition acts as a noise filter and feature extractor, separating the original complex time series into more manageable sub-sequences. The SARIMA model then precisely forecasts the relatively smooth and predictable RT component, which contains the dominant trend and seasonality. Concurrently, the GSVR model, empowered by the GWO optimizer, effectively captures the intricate nonlinear patterns and stochastic noise within the N-RT component, which are challenging for linear models like SARIMA. The final integration of these two distinct forecasts synthesizes the strengths of both linear and nonlinear modeling paradigms, leading to a more comprehensive and accurate prediction that neither model could achieve alone. This synergistic combination effectively mitigates the limitations inherent in single-model approaches. Furthermore, to directly address the contribution of each module:

- (1) The SSA module serves as an adaptive filter, playing the critical role of deconstructing the original series into semantically meaningful components. Without this decomposition, the subsequent specialized prediction would not be possible.
- (2) The SARIMA module’s primary contribution is its proficiency in modeling the smoothed, linear RT component, which encapsulates the dominant trend and stable seasonality. Its high accuracy on this subset forms the stable backbone of the final forecast.
- (3) The GSVR module’s key contribution lies in its ability to capture the complex, nonlinear patterns and stochastic signals within the N-RT component. It acts as a fine-tuning mechanism, correcting deviations and adding refinements that the linear SARIMA model cannot represent.

The superiority of the SSA-SARIMA-GSVR model is inherently rooted in this complementary design, where each module is assigned to the part of the problem it is best suited to solve.
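The GSVR module relies on the Grey Wolf Optimizer to tune SVR hyperparameters. This section does not specify the search space or fitness function, so the following is a minimal, generic GWO sketch using the standard alpha, beta, and delta leader update, demonstrated on a simple test function. In a GSVR setting the objective would instead be, for example, the validation error of an SVR (such as scikit-learn's `sklearn.svm.SVR`) trained with candidate values of `C`, `gamma`, and `epsilon`; that setup, the function name, and the population and iteration counts are our assumptions rather than the paper's configuration.

```python
import numpy as np

def grey_wolf_optimize(objective, lower, upper, n_wolves=20, n_iter=100, seed=0):
    """Minimize `objective` over the box [lower, upper] with a basic Grey Wolf Optimizer."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    fitness = np.array([objective(w) for w in wolves])
    # Alpha, beta, delta: the three best positions found so far
    top = np.argsort(fitness)[:3]
    leaders, leader_fit = wolves[top].copy(), fitness[top].copy()
    for it in range(n_iter):
        a = 2.0 - 2.0 * it / n_iter  # control parameter, decreases linearly from 2 toward 0
        guided = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random((n_wolves, dim)) - a
            C = 2.0 * rng.random((n_wolves, dim))
            D = np.abs(C * leader - wolves)  # distance of each wolf to this leader
            guided += leader - A * D         # candidate position guided by this leader
        # New position = average of the three leader-guided candidates, kept inside the box
        wolves = np.clip(guided / 3.0, lower, upper)
        fitness = np.array([objective(w) for w in wolves])
        # Keep the best three among the previous leaders and the updated pack
        pool = np.vstack([leaders, wolves])
        pool_fit = np.concatenate([leader_fit, fitness])
        top = np.argsort(pool_fit)[:3]
        leaders, leader_fit = pool[top].copy(), pool_fit[top].copy()
    return leaders[0], float(leader_fit[0])

# Usage on a simple test function (sphere); a GSVR objective would replace it
best_x, best_f = grey_wolf_optimize(lambda w: float(np.sum(w ** 2)), [-5.0, -5.0], [5.0, 5.0])
```

Keeping the three best positions across iterations, rather than only within the current pack, ensures the returned solution never gets worse as the search proceeds.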

In summary, the SSA-SARIMA-GSVR model is not an arbitrary combination of three algorithms. It uses singular spectrum analysis to decompose the original O3-CPM time series and categorize its components into two classes. This exploits the ability of the SARIMA model to capture seasonal and cyclical features as well as the major trends of the original series, and the strength of the GSVR model in capturing stochastic components and noise, while adding only modest computation time. We therefore believe that the SSA-SARIMA-GSVR hybrid model proposed in this paper predicts the O3-CPM time series more efficiently and accurately.