1. Introduction

Synthetic Aperture Radar (SAR) systems provide excellent maritime surveillance capabilities through all-weather, day-and-night imaging [1]. Unlike optical sensors, SAR actively transmits microwave signals and processes the returned echoes to create high-resolution images, which makes it invaluable for ship monitoring, navigation safety, and the detection of illegal maritime activities [2]. However, SAR imagery introduces unique challenges, including multiplicative speckle noise, sea clutter interference, and geometry-dependent distortions, that distinguish SAR ship detection from its optical counterpart [3].

SAR ship detection faces several challenges that do not arise with optical imagery. Speckle noise and sea clutter degrade image contrast, leading to poorly defined ship boundaries and ambiguous target shapes. Radar imaging geometry and polarization effects also cause significant variations in ship appearance across incidence angles and sea conditions, which complicates feature extraction. Dense harbor environments add further difficulty: closely spaced vessels require robust multi-scale representation and precise localization amid strong background interference. As a result, optical detection models often perform poorly when applied directly to SAR data, and specialized architectures are needed for noise suppression, multi-scale fusion, and orientation robustness [4,5,6].

Traditional SAR ship detection relied primarily on constant false alarm rate (CFAR) algorithms and statistical thresholding [7,8]. Although these methods adapt to local background statistics, they struggle with heterogeneous sea clutter and low signal-to-noise ratio conditions. Deep learning has transformed SAR ship detection by learning hierarchical features automatically from large datasets, removing the need for hand-crafted features [9,10]. Two-stage detectors such as Faster R-CNN [11] and Cascade R-CNN [12] deliver high accuracy at the cost of computational complexity, while single-stage approaches such as YOLO [13] and RetinaNet [14] prioritize efficiency for real-time deployment. The arbitrary orientations of ships in SAR imagery have been addressed by rotation-aware detectors, including RoI Transformer [15], R3Det [16], and S2ANet [17].

Recent research has explored multi-scale and attention-driven architectures to improve SAR ship detection. Notable examples include AIS-FCANet [18], which integrates frequency and spatial attention mechanisms for sea clutter suppression and small-target enhancement, and MSRIHL-CNN [19], which incorporates rotation-invariant Haar-like features for orientation-robust learning. Other significant contributions include the Dense Attention Pyramid Network [20], which employs dense connections for progressive feature aggregation across scales; the Non-local Channel Attention Network [21], which captures long-range spatial dependencies through non-local operations; and the Feature Balancing and Refinement Network [14], which addresses feature imbalance in multi-scale detection through adaptive refinement. Beyond attention-driven approaches, the community has explored other directions: sea–land awareness methods (SLA-Net [22]) explicitly model the maritime–terrestrial distinction to reduce coastal false alarms; traditional feature fusion techniques (Laplace and LBP [23]) combine hand-crafted and learned features for speckle robustness; bio-inspired methods [24] mimic biological attention mechanisms for target localization; and weakly supervised approaches [25] enable training with limited annotations to reduce labeling costs. Despite this diversity, spanning attention architectures (SwER [26] with efficient residual connections, FASC-Net [27] with feature augmentation for small-target detection), rotation-aware detectors (RoI Transformer [15] for rotated bounding box prediction, S2ANet [17] with alignment convolution for oriented detection), and specialized methodologies, existing methods share three fundamental limitations: high model complexity, insufficient small-object discrimination, and a weak SAR-specific design rationale. These approaches rely heavily on dense convolutions and large parameter sets, creating computational bottlenecks that make them unsuitable for resource-constrained edge platforms. Furthermore, they lack an explicit rationale connecting architectural choices to SAR-specific characteristics, such as inherent feature redundancy and the balance between spatial detail preservation and semantic understanding. This leaves a research gap for SAR-oriented lightweight networks that achieve competitive accuracy by exploiting SAR image properties rather than by scaling up the model.

To address these limitations, we develop a lightweight yet accurate SAR ship detection network that directly handles SAR-specific challenges. In this context, “lightweight” is defined by three key metrics:

A small parameter count (typically <1 M) to minimize the memory footprint.

Low computational complexity measured in FLOPs (floating-point operations) for efficient processing.

High inference speed (FPS) on resource-constrained edge devices for real-time deployment.

Our design is based on two key observations grounded in SAR image characteristics. First, SAR ship targets typically appear as compact, high-intensity regions within relatively homogeneous backgrounds, creating substantial feature redundancy across convolutional maps. This characteristic allows feature reuse through efficient operations that maintain semantic integrity while significantly reducing computation. Second, effective multi-scale SAR detection requires careful preservation of both fine-grained spatial details and high-level semantic representations. Conventional uniform compression strategies often disrupt this balance, making layer-adaptive optimization necessary for maintaining both local precision and global contextual understanding.

We present LGNet (Lightweight Ghost-enhanced Detection Network), a SAR-oriented ship detection framework designed for edge deployment. Our key contributions are as follows:

SAR-adapted Ghost-enhanced lightweight architecture: We develop a novel Ghost-enhanced backbone with an efficient stem structure (GHBlock + HGStem) that systematically exploits inherent feature redundancy in SAR imagery, addressing specific SAR challenges, including speckle noise suppression and sea clutter discrimination, while maintaining robust representational capacity.

Layer-wise Adaptive Magnitude-based Pruning (LAMP) strategy: We introduce a pruning approach that assigns layer-specific sparsity levels based on each layer’s contribution to multi-scale ship detection, enabling extreme model compression while preserving both fine-grained spatial information and high-level semantic features.

Comprehensive efficiency validation with practical deployment: LGNet achieves over 75% reduction in parameters and 59% reduction in FLOPs compared to YOLOv8n while maintaining superior detection performance, with real-world edge deployment validation achieving 135.39 FPS on resource-constrained hardware for practical maritime surveillance applications.

Through these contributions, LGNet demonstrates that model compactness and high detection accuracy can coexist, paving the way for practical deployment of SAR-based maritime surveillance systems on resource-constrained platforms.

2. Materials and Methods

2.1. Overall Design of LGNet

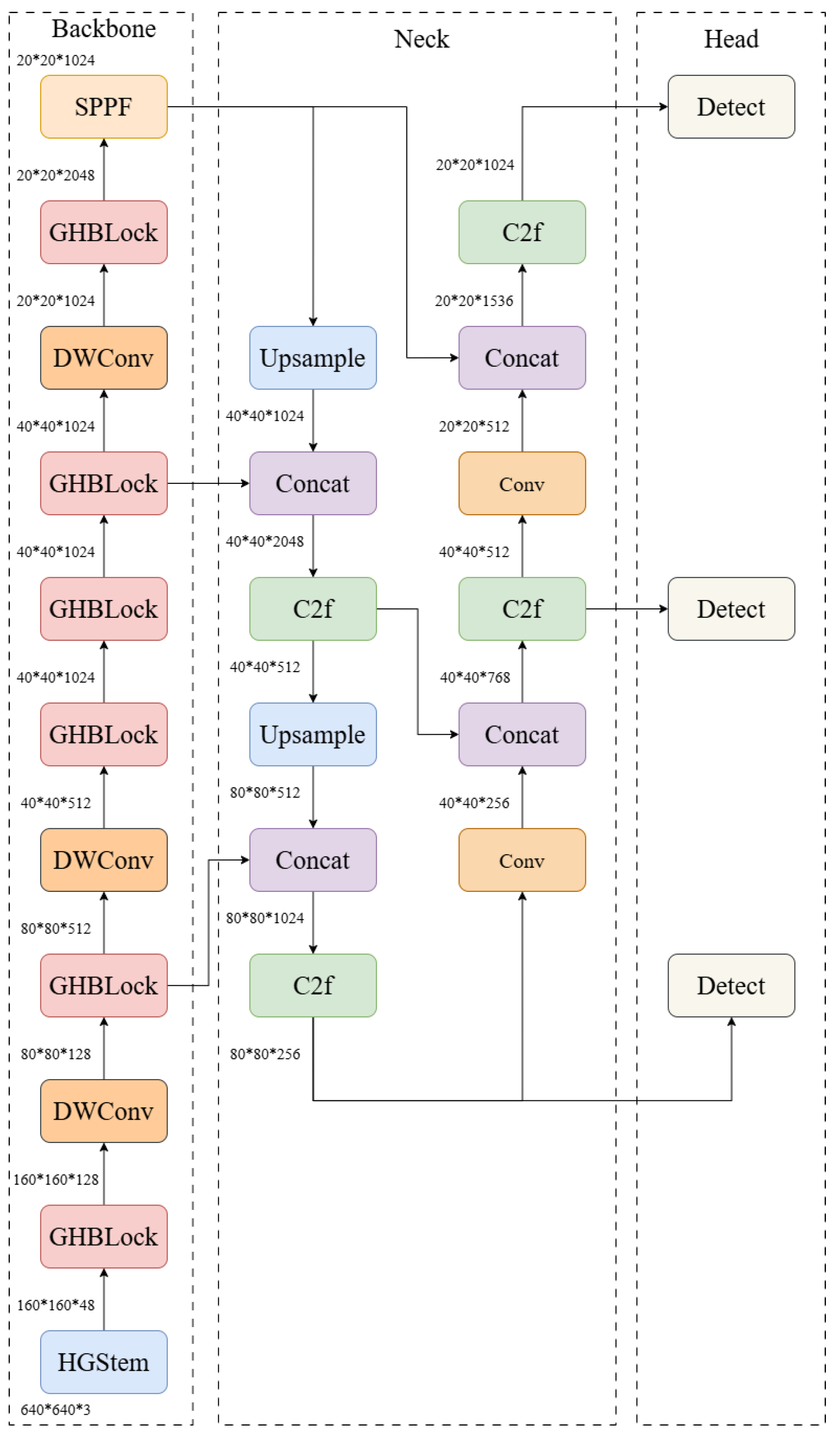

LGNet targets extreme parameter efficiency for resource-constrained edge platforms (typically <2 GB of memory and <10 GFLOPS of compute), where conventional CNN detectors (3–100 M parameters, 8–200 GFLOPs) are computationally prohibitive. The architecture follows the three-part detector paradigm (backbone, neck, and head), with lightweight operators integrated throughout, as shown in Figure 1. The Ghost-enhanced backbone extracts hierarchical features using HGStem, alternating GHBlock/DWConv stages, and SPPF pooling. A feature pyramid neck then fuses multi-scale representations through upsampling and concatenation. Finally, three parallel detection heads process different spatial resolutions (20 × 20, 40 × 40, 80 × 80) to capture ships across various scales.

Backbone Design. The backbone architecture replaces YOLOv8n’s Conv-C2f structure with a lightweight pipeline comprising HGStem, alternating GHBlock/DWConv stages, and SPPF. The network begins with HGStem (640 × 640 × 3 → 160 × 160 × 48), which performs efficient shallow feature extraction via depthwise separable convolutions. GHBlock and DWConv modules then alternate to extract hierarchical features with progressive spatial downsampling. Early stages (160 × 160 → 80 × 80) capture mid-level features, intermediate layers (80 × 80 → 40 × 40) balance spatial details with semantic abstraction, and deeper stages (40 × 40 → 20 × 20) extract high-level contextual information essential for robust SAR ship detection. SPPF pooling concludes the backbone by expanding receptive fields and aggregating multi-scale context. This design achieves substantial compression compared to YOLOv8n’s baseline: parameters decrease from 3.0 M to 2.3 M (a 23.3% reduction) and FLOPs decrease from 8.1 G to 6.8 G (a 16.0% reduction) while preserving feature representation quality.

Neck Design. The neck employs a feature pyramid architecture that integrates semantic information from deep layers with spatial details from shallow layers. Bidirectional feature pathways enable upsampling (top-down) and downsampling (bottom-up) flows with concatenation and C2f fusion across multiple scales (20 × 20, 40 × 40, 80 × 80). This ensures each detection scale benefits from both high-level contextual understanding and fine-grained spatial information for effective multi-scale ship detection.

Head Design. Three parallel detection heads process different spatial resolutions (80 × 80, 40 × 40, 20 × 20) to accommodate ships of varying sizes. Each head predicts bounding box coordinates and class probabilities through decoupled regression and classification branches, following the anchor-free YOLOv8 detection paradigm.
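The three head resolutions follow directly from the 640 × 640 input and the downsampling strides. The sketch below assumes strides of 8, 16, and 32 (consistent with 640/80, 640/40, and 640/20) and an anchor-free head that makes one prediction per grid cell; the helper name `head_grids` is hypothetical.

```python
def head_grids(img_size=640, strides=(8, 16, 32)):
    """Return (stride, grid_side) pairs and the total number of grid cells.

    Assumes a square input and one prediction per grid cell at each scale.
    """
    grids = []
    for s in strides:
        if img_size % s != 0:
            raise ValueError(f"input size {img_size} is not divisible by stride {s}")
        grids.append((s, img_size // s))
    total_cells = sum(side * side for _, side in grids)
    return grids, total_cells


grids, total = head_grids(640)
for stride, side in grids:
    print(f"stride {stride:>2}: {side} x {side} grid")
print("total predictions per image:", total)
```

Under these assumptions the fine 80 × 80 grid contributes most of the candidate boxes, which is where small ships are expected to be detected.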

SAR-Specific Design Rationale. The lightweight architectural design explicitly addresses SAR-specific challenges through the following design considerations:

Speckle noise robustness: Ghost convolution-based feature reuse generates multiple feature maps from a small set of intrinsic features through cheap linear transformations, which reduces sensitivity to local intensity variations and helps suppress the speckle noise inherent in SAR imagery.

Multi-scale ship detection: The alternating GHBlock–DWConv structure preserves both shallow fine-detail features (for small ship boundaries) and deep semantic features (for large ship classification), preventing the uniform compression that degrades multi-scale performance in standard lightweight networks.

Computational efficiency: By replacing the backbone’s standard convolutions with Ghost convolutions and depthwise separable convolutions, LGNet avoids redundant computation arising from the feature redundancy characteristic of SAR imagery, where ship targets appear as compact high-intensity regions against relatively homogeneous backgrounds.

Robustness under compression: The lightweight design does not degrade robustness; in low-SNR, cluttered SAR imagery, LGNet’s efficient operators demonstrate superior stability compared to parameter-heavy alternatives, validating that extreme compression and robust detection are compatible when architectural lightweighting is carefully orchestrated.

This architectural lightweighting establishes a strong foundation for subsequent LAMP-based pruning, enabling the dual lightweighting strategy that achieves >70% compression while maintaining state-of-the-art accuracy.

2.2. GhostConv Mechanism

Ghost convolution addresses the inherent feature redundancy in SAR ship imagery. While optical images require dense feature representations due to rich textural and spectral variations, SAR imagery has properties that allow substantial compression. Three key characteristics support this approach: spatial compactness, where ship targets appear as localized high-intensity regions with simple geometric patterns against homogeneous backgrounds; limited spectral diversity, as single-channel SAR intensity lacks the multi-spectral complexity of optical imagery; and cross-channel similarity, where speckle noise and sea clutter create correlated features across channels. These properties show that core intrinsic features can generate sufficient discriminative representations through efficient linear transformations, providing the theoretical foundation for Ghost convolution in SAR applications.

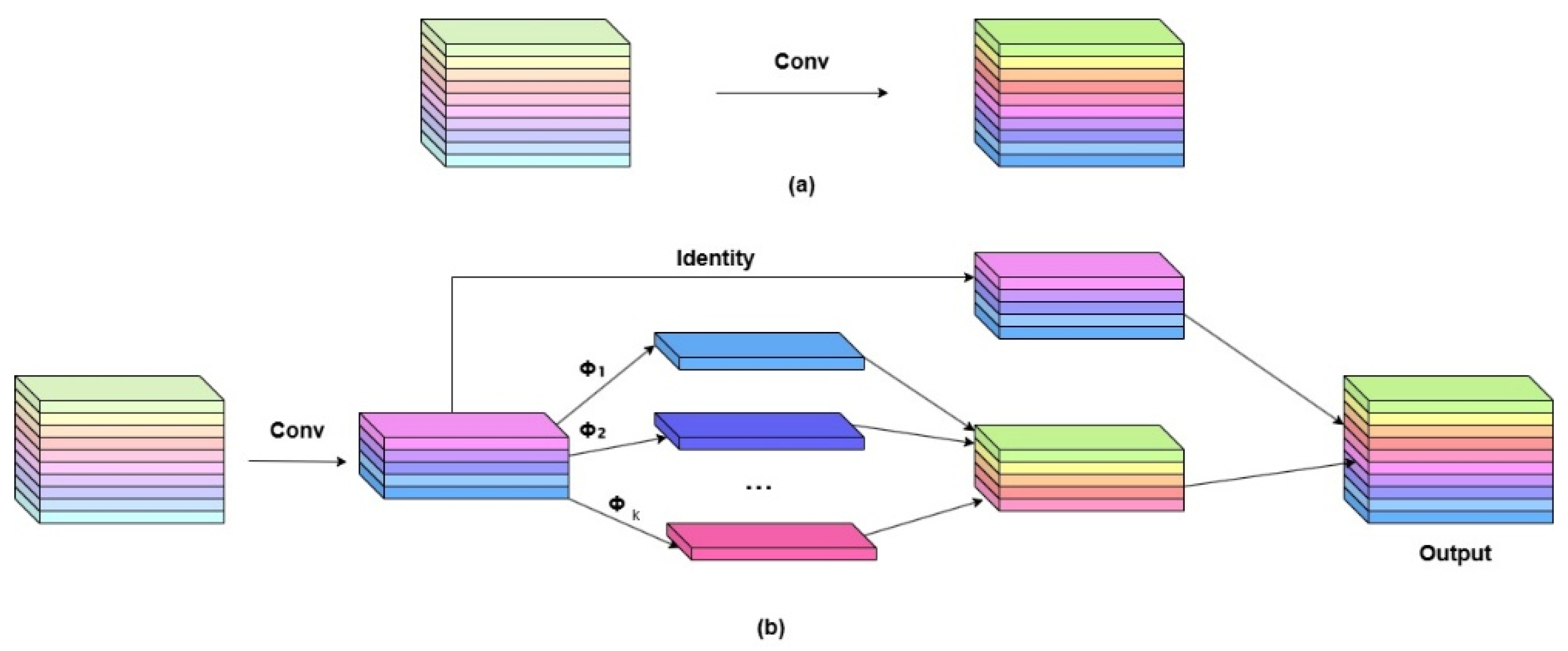

As illustrated in Figure 2, standard convolution and Ghost convolution differ in how they generate features. In standard convolution (Figure 2a), every output channel is produced by a full convolution, requiring $N \cdot C \cdot K^2$ parameters to produce $N$ output channels from $C$ input channels with kernel size $K$. Ghost convolution (Figure 2b) adopts a two-stage decomposition. First, a reduced set of primary (intrinsic) features with $N/s$ channels, where $s$ is the Ghost ratio (typically $s = 2$), is extracted via standard convolution. Next, these primary features are transformed through a series of cheap linear operations $\Phi$, such as depthwise convolutions, to generate $(s-1)N/s$ additional Ghost features. Finally, the primary features, passed through an identity connection, are concatenated with the Ghost features along the channel dimension to produce the output. This decomposition exploits the observation that many feature channels can be approximated as linear transformations of a smaller intrinsic feature set, which substantially reduces computational cost while maintaining representational capacity.

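The computational complexity and parameterization are summarized as follows. Let the input be $X \in \mathbb{R}^{C \times H \times W}$ and the output be $Y \in \mathbb{R}^{N \times H' \times W'}$ with $N$ channels. Denote the standard kernel size by $K$ and the linear-transform kernel size by $D$, and let $s$ be the Ghost ratio (typically $s = 2$).

Primary features ($N/s$ channels) via partial standard convolution:

$$\mathrm{FLOPs}_{\text{primary}} = \frac{N}{s} \cdot H' W' \cdot C K^2$$

Ghost features via cheap linear transforms:

$$\mathrm{FLOPs}_{\text{cheap}} = (s-1)\,\frac{N}{s} \cdot H' W' \cdot D^2$$

Total complexity of GhostConv:

$$\mathrm{FLOPs}_{\text{Ghost}} = \frac{N}{s}\, H' W' \left( C K^2 + (s-1) D^2 \right)$$

Parameter count of GhostConv:

$$\mathrm{Params}_{\text{Ghost}} = \frac{N}{s} \left( C K^2 + (s-1) D^2 \right)$$

Compared with a standard convolution ($N H' W' C K^2$ FLOPs and $N C K^2$ parameters), the speedup and compression ratios are

$$r_{\text{FLOPs}} = r_{\text{Params}} = \frac{s\, C K^2}{C K^2 + (s-1) D^2}.$$

For $D = K = 3$, $s = 2$, and sufficiently large $C$, we have $r_{\text{FLOPs}} = r_{\text{Params}} = \frac{2C}{C+1} \approx 2$.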
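The formulas above can be checked numerically. This minimal sketch counts FLOPs as multiply-accumulates (matching the expressions above), ignores bias and batch normalization, and uses illustrative layer sizes ($C = N = 256$, a 40 × 40 output) that are assumptions rather than values taken from LGNet.

```python
def standard_conv_cost(c_in, n_out, k, h_out, w_out):
    """FLOPs (multiply-accumulates) and parameters of a standard K x K convolution."""
    params = n_out * c_in * k * k
    flops = params * h_out * w_out  # each weight is applied once per output position
    return flops, params


def ghost_conv_cost(c_in, n_out, k, d, s, h_out, w_out):
    """FLOPs and parameters of a Ghost convolution with Ghost ratio s."""
    if n_out % s != 0:
        raise ValueError("n_out must be divisible by the Ghost ratio s")
    m = n_out // s                      # intrinsic (primary) channels
    primary_params = m * c_in * k * k   # standard K x K convolution
    cheap_params = (s - 1) * m * d * d  # depthwise D x D linear transforms
    params = primary_params + cheap_params
    flops = params * h_out * w_out
    return flops, params


std_f, std_p = standard_conv_cost(256, 256, 3, 40, 40)
gh_f, gh_p = ghost_conv_cost(256, 256, 3, 3, 2, 40, 40)
print(f"FLOPs ratio:  {std_f / gh_f:.3f}")
print(f"Params ratio: {std_p / gh_p:.3f}")
```

With $K = D$ both ratios equal $sC/(C + s - 1)$, here $512/257$, which approaches $s = 2$ as $C$ grows.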

Implementation Details. The Ghost convolution module operates through a three-stage computational pipeline:

$$Y' = X * f', \qquad G = \Phi(Y'), \qquad Y = \operatorname{Concat}(Y', G),$$

where $f'$ denotes the primary convolution kernels, $Y' \in \mathbb{R}^{(N/s) \times H' \times W'}$ are the intrinsic features, and $G \in \mathbb{R}^{((s-1)N/s) \times H' \times W'}$ are the Ghost features.

In the LGNet architecture, Ghost convolution is configured as follows: (i) primary convolution: a standard $K \times K$ convolution generating $N/s$ intrinsic feature channels; (ii) Ghost transformation: the cheap operation $\Phi$ is implemented as a $D \times D$ depthwise convolution followed by batch normalization and ReLU activation, producing an additional $(s-1)N/s$ Ghost feature channels with minimal computational overhead (only $(s-1)\frac{N}{s} D^2$ parameters, compared with $N C K^2$ for standard convolution); (iii) Ghost ratio: fixed at $s = 2$ to balance compression rate and representational capacity, giving a theoretical 2× speedup while maintaining feature expressiveness. The concatenated output combines intrinsic and Ghost features into the complete feature representation, with approximately 50% fewer parameters than a standard convolution.
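To make the three-stage pipeline concrete, the toy sketch below runs a Ghost module on nested Python lists without any deep-learning framework. For brevity it assumes a 1 × 1 primary convolution, a 3 × 3 depthwise cheap operation with zero padding and stride 1, no batch normalization or activation, and $s = 2$; the function names are hypothetical.

```python
def conv1x1(x, weights):
    """Primary convolution. x: C maps (H x W); weights: m rows of C coefficients."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(row[c] * x[c][i][j] for c in range(len(x))) for j in range(w)]
             for i in range(h)] for row in weights]


def depthwise3x3(x, kernels):
    """Cheap operation: one 3x3 kernel per channel, zero padding, stride 1."""
    h, w = len(x[0]), len(x[0][0])
    out = []
    for fmap, k in zip(x, kernels):
        res = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ii, jj = i + di, j + dj
                        if 0 <= ii < h and 0 <= jj < w:
                            acc += k[di + 1][dj + 1] * fmap[ii][jj]
                res[i][j] = acc
        out.append(res)
    return out


def ghost_module(x, primary_w, cheap_k):
    intrinsic = conv1x1(x, primary_w)          # Y' = X * f'   (N/s maps)
    ghosts = depthwise3x3(intrinsic, cheap_k)  # G = Phi(Y')   ((s-1)N/s maps)
    return intrinsic + ghosts                  # Concat(Y', G); Y' kept via identity


# Usage: 3 input channels, N = 4 outputs (2 intrinsic + 2 Ghost).
x = [[[float(c + i + j) for j in range(4)] for i in range(4)] for c in range(3)]
primary_w = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0]]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
y = ghost_module(x, primary_w, [identity, identity])
print(len(y), "output channels")
```

Using identity kernels as the cheap operation is only a sanity check: each Ghost map then reproduces its intrinsic map, whereas learned depthwise kernels would produce shifted or filtered variants of it.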

2.3. GHBlock: Ghost-Enhanced Hierarchical Block

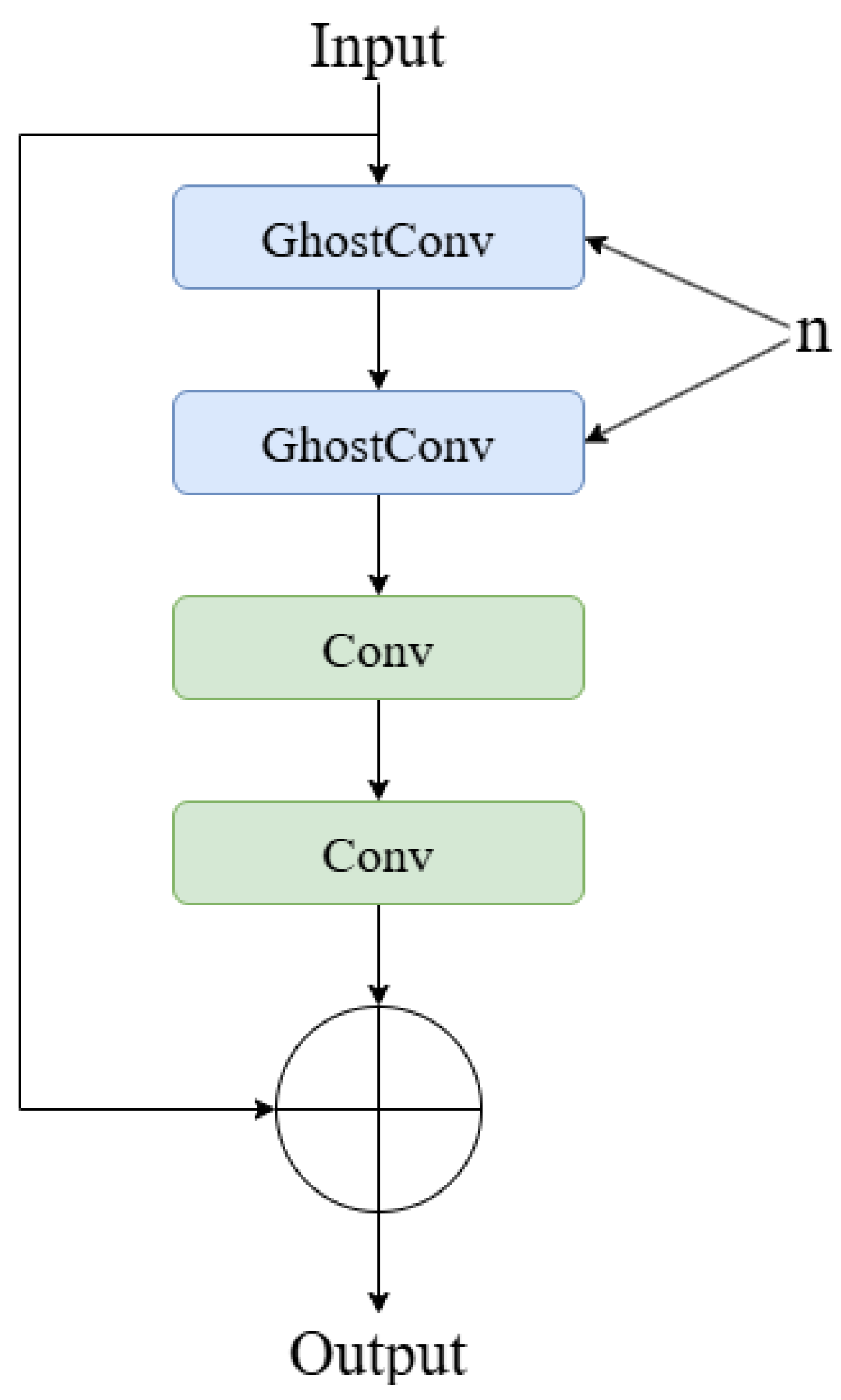

We introduce GHBlock as the core building unit of LGNet’s backbone, extending the Ghost convolution paradigm through hierarchical feature refinement. GHBlock combines Ghost-based feature extraction with lightweight 1 × 1 squeeze-and-excitation convolutions for channel refinement, enabling multi-scale feature aggregation at minimal computational cost.

The block architecture comprises four sequential operations, as shown in Figure 3: hierarchical Ghost convolution for multi-level feature extraction, dense-style concatenation to preserve intermediate representations, dual-stage channel refinement through squeeze-and-excitation operations, and optional residual connections for enhanced gradient flow.

Module Design and Integration. GHBlock replaces YOLOv8n’s C2f modules across four hierarchical stages, as depicted in Figure 1, with the following configurations:

Stage 1 (160 × 160): GHBlock[48, 128, 3] for shallow feature extraction.

Stage 2 (80 × 80): GHBlock[96, 512, 3] for mid-level semantics.

Stage 3 (40 × 40): GHBlock[192, 1024, 1, True, *] with aggressive compression (18 blocks in total).

Stage 4 (20 × 20): GHBlock[384, 2048, 1, True, False] for deep semantic extraction.

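Mathematical Formulation. Given input $X \in \mathbb{R}^{C_1 \times H \times W}$ and target output $Y \in \mathbb{R}^{C_2 \times H \times W}$, where $c_m$ represents the intermediate channel width, $n$ denotes the number of GhostConv layers, and $t$ specifies the channel squeeze ratio, the forward pass proceeds through four stages:

Hierarchical Ghost Feature Extraction. Multiple GhostConv layers are stacked to extract hierarchical features:

$$F_0 = X, \qquad F_i = \operatorname{GhostConv}_i(F_{i-1}) \in \mathbb{R}^{c_m \times H \times W}, \quad i = 1, \dots, n,$$

where each $\operatorname{GhostConv}_i$ operation follows the decomposition strategy defined in Equations (9)–(11), producing $c_m$ output channels per layer.

Dense-like Feature Aggregation. The input and all intermediate features are concatenated to preserve multi-scale information:

$$F_{\text{cat}} = \operatorname{Concat}(F_0, F_1, \dots, F_n) \in \mathbb{R}^{(C_1 + n c_m) \times H \times W}$$

Channel Squeeze-and-Excitation. Two successive 1 × 1 convolutions perform channel refinement: first, a squeeze operation reduces the channel count to $C_2/t$, a factor $t$ below the output width; then an excitation operation restores it to $C_2$:

$$Z = \operatorname{Conv}^{\text{sq}}_{1\times1}(F_{\text{cat}}) \in \mathbb{R}^{(C_2/t) \times H \times W}, \qquad \tilde{Y} = \operatorname{Conv}^{\text{ex}}_{1\times1}(Z) \in \mathbb{R}^{C_2 \times H \times W}$$

Optional Residual Connection. When channel dimensions match ($C_1 = C_2$) and shortcut=True, a residual connection enhances gradient flow:

$$Y = \begin{cases} \tilde{Y} + X, & C_1 = C_2 \text{ and shortcut} = \text{True}, \\ \tilde{Y}, & \text{otherwise.} \end{cases}$$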
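The four stages can be traced at the level of channel widths. This sketch assumes that the input $X$ is part of the dense concatenation (as in HGNetV2-style blocks), that the first two GHBlock configuration arguments are $c_m$ and $C_2$, and that Stage 1 receives the 48-channel HGStem output; the Stage 3 input width of 1024 is a hypothetical example of a repeated block. The helper `ghblock_shapes` is illustrative, not part of any library.

```python
def ghblock_shapes(c1, cm, c2, n=6, t=2, shortcut=False):
    """Trace channel widths through one GHBlock (spatial size is unchanged)."""
    ghost_layers = []
    c_in = c1
    for _ in range(n):                # F_i = GhostConv_i(F_{i-1}): c_in -> cm
        ghost_layers.append((c_in, cm))
        c_in = cm
    concat = c1 + n * cm              # X concatenated with F_1 ... F_n
    squeeze = c2 // t                 # 1x1 squeeze convolution output
    residual = shortcut and c1 == c2  # identity added only when widths match
    return {"ghost_layers": ghost_layers, "concat": concat,
            "squeeze": squeeze, "out": c2, "residual": residual}


stage1 = ghblock_shapes(48, 48, 128)                     # GHBlock[48, 128, 3]
stage3 = ghblock_shapes(1024, 192, 1024, shortcut=True)  # hypothetical repeated block
print(stage1["concat"], stage1["squeeze"], stage1["residual"])
print(stage3["residual"])
```

The trace makes the role of the squeeze convolution visible: the concatenation widens the tensor to $C_1 + n c_m$ channels, and the 1 × 1 squeeze brings it back down before the excitation restores $C_2$.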

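Computational Complexity. The total computational cost of GHBlock comprises three components: (i) hierarchical feature extraction via $n$ GhostConv layers, (ii) the channel squeeze operation, and (iii) the channel excitation operation:

$$\mathrm{FLOPs}_{\text{GHBlock}} = \sum_{i=1}^{n} \mathrm{FLOPs}^{(i)}_{\text{Ghost}} + HW\,(C_1 + n c_m)\,\frac{C_2}{t} + HW\,\frac{C_2}{t}\,C_2,$$

where each GhostConv layer contributes $\mathrm{FLOPs}^{(i)}_{\text{Ghost}} = \frac{c_m}{s}\, HW \left( c^{(i)}_{\text{in}} K^2 + (s-1) D^2 \right)$ FLOPs, with $c^{(1)}_{\text{in}} = C_1$ and $c^{(i)}_{\text{in}} = c_m$ for $i > 1$. The total parameter count (ignoring batch normalization and biases) is

$$\mathrm{Params}_{\text{GHBlock}} = \sum_{i=1}^{n} \frac{c_m}{s} \left( c^{(i)}_{\text{in}} K^2 + (s-1) D^2 \right) + (C_1 + n c_m)\,\frac{C_2}{t} + \frac{C_2^2}{t}.$$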

With typical configurations (n = 6, s = 2, t = 2, K = D = 3), GHBlock achieves approximately 40–50% parameter reduction compared to an equivalent standard convolution-based block while maintaining comparable feature representation capacity through hierarchical Ghost feature aggregation and dense-like connectivity.
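The parameter formula can be evaluated for a concrete block. This sketch assumes that the "equivalent standard convolution-based block" is the same block with each GhostConv replaced by a $K \times K$ convolution, ignores batch normalization and biases, and uses the Stage 1 widths ($C_1 = c_m = 48$, $C_2 = 128$) with $n = 6$, $s = t = 2$, $K = D = 3$.

```python
def ghost_params(c_in, c_out, k=3, d=3, s=2):
    """Parameters of one GhostConv: K x K primary conv plus D x D depthwise cheap ops."""
    m = c_out // s
    return m * c_in * k * k + (s - 1) * m * d * d


def conv_params(c_in, c_out, k=3):
    """Parameters of a standard K x K convolution."""
    return c_out * c_in * k * k


def block_params(c1, cm, c2, n=6, t=2, ghost=True, k=3, d=3, s=2):
    """Parameter count of a GHBlock (ghost=True) or the same block with standard convs."""
    total, c_in = 0, c1
    for _ in range(n):
        total += ghost_params(c_in, cm, k, d, s) if ghost else conv_params(c_in, cm, k)
        c_in = cm
    total += (c1 + n * cm) * (c2 // t)  # 1x1 squeeze convolution
    total += (c2 // t) * c2             # 1x1 excitation convolution
    return total


ghost = block_params(48, 48, 128)
standard = block_params(48, 48, 128, ghost=False)
print(ghost, standard, f"reduction: {1 - ghost / standard:.1%}")
```

Only the $n$ stacked convolutions change between the two variants; the 1 × 1 squeeze and excitation layers are identical, so the relative saving depends on how much of the block they account for at a given width (roughly 40% with these Stage 1 widths).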

2.4. LAMP Pruning and Adaptive Fine-Tuning

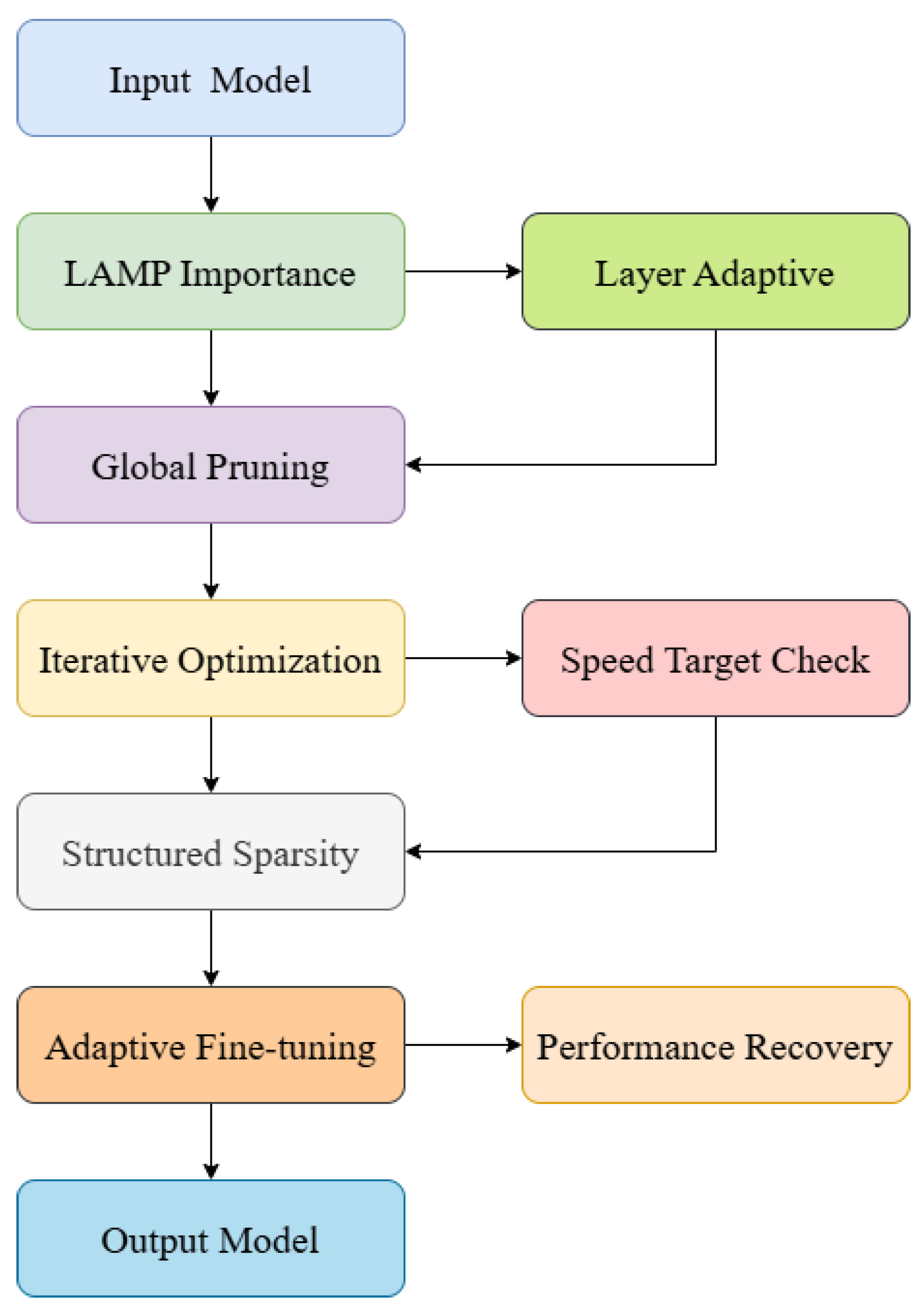

We implement Layer-wise Adaptive Magnitude-based Pruning (LAMP) with adaptive fine-tuning to achieve extreme compression while preserving detection performance. The complete pipeline, illustrated in Figure 4, comprises seven sequential stages: importance estimation, layer-adaptive scaling, global pruning, iterative optimization with speed validation, structured sparsity generation, adaptive fine-tuning for accuracy recovery, and performance recovery evaluation.

LAMP Importance Estimation. The pruning process begins by calculating importance scores that guide the subsequent pruning decisions. Each score combines a magnitude-based term, computed from the weights $W^{(l)}$ of layer $l$, with a depth-aware layer-adaptive factor $\alpha_l$.

Layer Adaptive Scaling. The layer-adaptive factor $\alpha_l$ accounts for the hierarchical importance of different network depths. It is governed by the global tuning coefficient $\beta = 1.5$, the total number of layers $L$, and a depth weight assigned to layer $l$. This scaling preserves critical semantic features in deeper layers while allowing aggressive compression of redundant shallow features.
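The section does not reproduce the scoring equations, so the sketch below uses the original LAMP score of Lee et al. (ICLR 2021): with a layer's weights sorted by ascending magnitude, the score of the $u$-th weight is $w_u^2 / \sum_{v \ge u} w_v^2$. The depth factor $\alpha_l = 1 + \beta\, l / L$ is a hypothetical linear form chosen only to illustrate "deeper layers score higher"; it is not the authors' published formula.

```python
def lamp_scores(weights):
    """LAMP score of each weight in one layer (Lee et al., ICLR 2021).

    Weights are ranked by ascending magnitude; the u-th weight scores
    w_u^2 / sum_{v >= u} w_v^2, so the largest nonzero weight scores exactly 1.
    """
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    scores = [0.0] * len(weights)
    tail = 0.0  # running sum of squared magnitudes, from the largest downwards
    for idx in reversed(order):
        sq = weights[idx] ** 2
        tail += sq
        scores[idx] = sq / tail if tail > 0 else 0.0
    return scores


def depth_factor(layer_idx, num_layers, beta=1.5):
    """Hypothetical alpha_l: grows linearly with depth so deeper layers score higher."""
    return 1.0 + beta * layer_idx / num_layers


def scaled_lamp_scores(weights, layer_idx, num_layers, beta=1.5):
    alpha = depth_factor(layer_idx, num_layers, beta)
    return [alpha * s for s in lamp_scores(weights)]


print(lamp_scores([3.0, -1.0, 2.0]))
print(scaled_lamp_scores([3.0, -1.0, 2.0], layer_idx=10, num_layers=10))
```

The normalization by the tail sum is what makes scores comparable across layers of very different sizes, which the global pruning step relies on.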

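Global Pruning. Based on the importance scores, global pruning determines which weights to remove across all layers simultaneously. It selects a pruning mask $S$ that minimizes the loss of the pruned model $\mathcal{M}_S$ on the validation set $\mathcal{D}_{\text{val}}$, plus a regularization term weighted by the coefficient $\lambda$, subject to the FLOPs constraint

$$\mathrm{FLOPs}(\mathcal{M}_S) \le \frac{\mathrm{FLOPs}(\mathcal{M})}{\rho},$$

where $\mathcal{M}$ is the unpruned model and $\rho = 2.0$ is the target compression ratio.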
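A minimal way to satisfy the FLOPs constraint is a greedy global pass: rank every channel of every layer together and remove the lowest-scored ones until $\mathrm{FLOPs}(\mathcal{M}_S) \le \mathrm{FLOPs}(\mathcal{M})/\rho$. This sketch is an assumption-laden simplification: each channel is given a fixed FLOPs cost, the effect of removing a channel on the next layer's input is ignored, and the loss and regularization terms of the objective are not evaluated.

```python
def global_prune(layers, rho=2.0):
    """Greedy global channel pruning under a FLOPs budget.

    layers: list of dicts with
        "scores": importance score per output channel (comparable across layers)
        "flops_per_channel": FLOPs saved by removing one channel of that layer
    Returns (keep_masks, achieved_speedup).
    """
    total = sum(len(layer["scores"]) * layer["flops_per_channel"] for layer in layers)
    budget = total / rho
    # Ranking all channels of all layers together is what makes the pruning global.
    candidates = sorted(
        (score, li, ci)
        for li, layer in enumerate(layers)
        for ci, score in enumerate(layer["scores"])
    )
    masks = [[True] * len(layer["scores"]) for layer in layers]
    kept = [len(layer["scores"]) for layer in layers]
    current = total
    for _, li, ci in candidates:
        if current <= budget:
            break
        if kept[li] == 1:  # keep at least one channel so no layer disappears
            continue
        masks[li][ci] = False
        kept[li] -= 1
        current -= layers[li]["flops_per_channel"]
    return masks, total / current


layers = [
    {"scores": [0.1, 0.9, 0.5, 0.2], "flops_per_channel": 10},
    {"scores": [0.3, 0.8], "flops_per_channel": 20},
]
masks, speedup = global_prune(layers, rho=2.0)
print(masks, speedup)
```

Because the two layers receive different sparsity (half of each here, but only because of the scores), the same routine naturally allocates layer-specific pruning ratios rather than a uniform one.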

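Iterative Optimization & Speed Target Check. The pruning process follows a progressive schedule with continuous speed monitoring: the pruning ratio $p_t$ at iteration $t$ increases gradually toward the maximum pruning ratio $p_{\max}$, at a rate controlled by a schedule parameter $\tau$. At each iteration, the speedup is checked against the target:

$$\mathrm{Speedup}_t = \frac{\mathrm{FLOPs}(\mathcal{M})}{\mathrm{FLOPs}(\mathcal{M}_{S_t})} \ge \rho,$$

where $\mathcal{M}$ and $\mathcal{M}_{S_t}$ denote the unpruned model and the model pruned at iteration $t$, and $\rho = 2.0$ is the target ratio. The iterative process continues until the target 2× speedup is achieved while maintaining acceptable accuracy.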
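The exact schedule is not given in this section, so the sketch below swaps in the widely used cubic schedule of Zhu and Gupta (2017), $p_t = p_{\max}\left(1 - (1 - t/T)^3\right)$, and pairs it with a speedup check. It also assumes a toy cost model in which pruning a fraction $p$ of channels in consecutive layers scales FLOPs by $(1-p)^2$; a real pipeline would prune the model at each step and measure its FLOPs instead.

```python
def cubic_schedule(t, total_steps, p_max):
    """Pruning ratio at step t: rises quickly at first, then levels off at p_max."""
    frac = min(t / total_steps, 1.0)
    return p_max * (1.0 - (1.0 - frac) ** 3)


def toy_speedup(p):
    """Assumed cost model: both input and output channels shrink by (1 - p)."""
    return 1.0 / (1.0 - p) ** 2


def prune_until_speedup(target=2.0, p_max=0.5, total_steps=10):
    """Increase the pruning ratio step by step until the speedup target is met."""
    for t in range(1, total_steps + 1):
        p = cubic_schedule(t, total_steps, p_max)
        s = toy_speedup(p)  # placeholder for "prune to ratio p, then measure FLOPs"
        if s >= target:
            return t, p, s
    return None  # target not reachable within p_max


t, p, s = prune_until_speedup()
print(f"stopped at step {t}: pruning ratio {p:.3f}, speedup {s:.2f}x")
```

Stopping as soon as the target is met keeps the network no sparser than necessary, which leaves more capacity for the subsequent fine-tuning stage to recover accuracy.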

Structured Sparsity. The global pruning results in structured sparsity patterns that eliminate entire channels or filters, ensuring hardware-friendly acceleration.

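Adaptive Fine-tuning. The compressed model undergoes adaptive fine-tuning to recover performance using a composite loss function:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{det}} + \lambda_{\text{KD}}\, \mathcal{L}_{\text{KD}} + \lambda_{\text{reg}}\, \mathcal{L}_{\text{reg}},$$

where $\mathcal{L}_{\text{det}}$ is the standard detection loss, $\mathcal{L}_{\text{KD}}$ is the knowledge distillation loss from the original model, $\mathcal{L}_{\text{reg}}$ provides regularization, and $\lambda_{\text{KD}}$ and $\lambda_{\text{reg}}$ are the corresponding weights.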
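The section does not specify the form of the distillation or regularization terms. As an assumption for illustration, the sketch below uses Hinton-style distillation (temperature-softened KL divergence between teacher and student class scores, scaled by $T^2$) and an L2 weight penalty, with $\lambda_{\text{KD}} = 0.1$ and $\lambda_{\text{reg}} = 0.0005$ taken from the implementation configuration; the temperature of 2.0 is hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return temperature ** 2 * kl


def l2_reg(weights):
    return sum(w * w for w in weights)


def total_loss(det_loss, student_logits, teacher_logits, weights,
               lambda_kd=0.1, lambda_reg=0.0005, temperature=2.0):
    """L_total = L_det + lambda_KD * L_KD + lambda_reg * L_reg."""
    return (det_loss
            + lambda_kd * kd_loss(student_logits, teacher_logits, temperature)
            + lambda_reg * l2_reg(weights))


print(total_loss(1.0, [0.0, 0.0], [1.0, 0.0], [1.0, 2.0]))
```

The small $\lambda_{\text{KD}}$ keeps the teacher's soft targets as guidance rather than the main objective, so the hard detection labels still dominate the gradient.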

Performance Recovery. The final stage verifies performance recovery through comprehensive metrics, including accuracy retention and efficiency gains.

Implementation Configuration. The pipeline is configured with $\beta = 1.5$ for deeper-layer emphasis, $\rho = 2.0$ for 2× acceleration, $\lambda_{\text{KD}} = 0.1$ for knowledge distillation, and $\lambda_{\text{reg}} = 0.0005$ for regularization. Fine-tuning employs 300 epochs with an SGD optimizer and batch size 8, and mosaic augmentation is disabled during the final 20 epochs (close-mosaic) to improve small-target detection.

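Evaluation Metrics. Compression effectiveness is measured through the parameter reduction ratio, the FLOPs speedup, and the accuracy retention (the mAP of the fine-tuned pruned model relative to that of the unpruned model).

This pipeline achieves >98.5% performance retention while delivering a 2× speedup and a significant memory reduction.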
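These metrics reduce to simple ratios. The sketch below evaluates them on the rounded values reported for the LAMP experiment on SSDD (2.3 M to 0.74 M parameters, 6.8 to 3.3 GFLOPs, mAP@50 0.972 to 0.979). Because the inputs are rounded, the parameter reduction (about 67.8%) and speedup (about 2.06×) differ slightly from the reported 67.90% and 2.03×, which are presumably computed from exact counts.

```python
def compression_metrics(params_before, params_after, flops_before, flops_after,
                        map_before, map_after):
    """Reduction ratios, FLOPs speedup, and accuracy retention of a pruned model."""
    return {
        "param_reduction": 1.0 - params_after / params_before,
        "flops_reduction": 1.0 - flops_after / flops_before,
        "speedup": flops_before / flops_after,
        "retention": map_after / map_before,
    }


# Rounded values (M params, GFLOPs, mAP@50) before and after pruning + fine-tuning.
m = compression_metrics(2.3, 0.74, 6.8, 3.3, 0.972, 0.979)
for name, value in m.items():
    print(f"{name:>16}: {value:.4f}")
```

A retention above 1.0 simply means the fine-tuned pruned model scores higher than the unpruned one on that metric.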

3. Results

3.1. Experimental Settings and Dataset Description

Experiments were performed on a workstation with an NVIDIA GeForce RTX 2080 Ti GPU (22 GB memory) using PyTorch 2.2.2, CUDA 11.8, Python 3.12.11, and the Ultralytics YOLOv8 framework (v8.1.9). Training employed 100 epochs with batch size 8, SGD optimization (momentum 0.9), and an initial learning rate of 0.01. Input images were resized to 640 × 640 resolution for both training and inference unless noted otherwise.

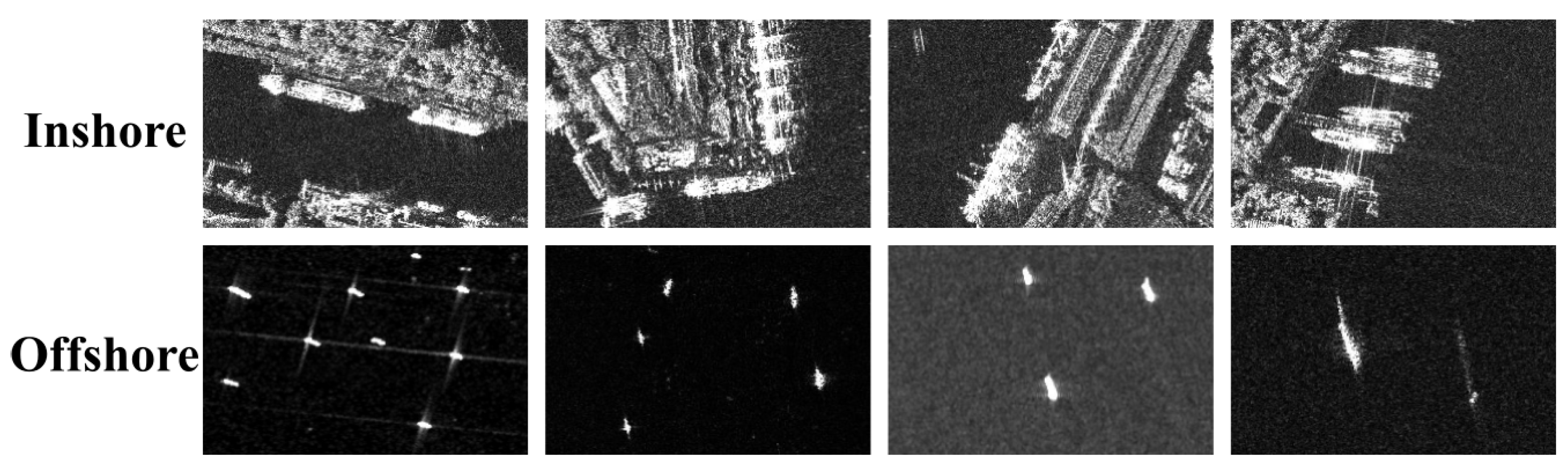

We utilize the SAR Ship Detection Dataset (SSDD) [28] as our primary evaluation benchmark for ablation studies, component analysis, and hyperparameter validation. SSDD comprises 1160 SAR images with horizontal bounding box ship annotations, acquired from three different sensors across varied viewing geometries and sea conditions. The dataset encompasses C and X frequency bands with spatial resolutions from 1 to 15 m. We adopt a standard 4:1 train–test split, yielding 928 training and 232 test images containing a total of 2587 ship instances. SSDD categorizes scenes into Inshore (coastal environments with complex clutter) and Offshore (open-ocean scenarios with homogeneous backgrounds), as illustrated in Figure 5.

Table 1 details the characteristics of both SSDD and our cross-dataset validation benchmark, RSDD-SAR [29].

SSDD serves as the primary benchmark for systematic component analysis and hyperparameter validation. RSDD-SAR is used exclusively to evaluate cross-dataset generalization against state-of-the-art oriented detection methods.

3.2. Evaluation Metrics

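Primary metrics:

Mean Average Precision (mAP):

$$\mathrm{AP} = \int_0^1 P(R)\, dR, \qquad \mathrm{mAP@[0.5{:}0.95]} = \frac{1}{10} \sum_{\tau \in \{0.50,\, 0.55,\, \dots,\, 0.95\}} \mathrm{AP}_{\tau},$$

where $P(R)$ is precision as a function of recall, $\mathrm{AP}_{\tau}$ is the AP at IoU threshold $\tau$, and mAP@[0.5:0.95] averages AP over the IoU thresholds $\tau \in \{0.50, 0.55, \dots, 0.95\}$. Because SSDD contains a single class (ship), mAP equals AP. mAP@50 denotes AP at $\tau = 0.5$, and mAP@95 is used as shorthand for mAP@[0.5:0.95].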
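For a single class, mAP@[0.5:0.95] can be computed by greedily matching score-sorted detections to unmatched ground-truth boxes at each IoU threshold, building the precision–recall curve, and integrating it. This sketch uses all-point interpolation; evaluators that use other interpolation schemes (for example, COCO-style 101-point sampling) can give slightly different values. The function names and input format are hypothetical.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """Single-class AP with all-point interpolation.

    dets: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits = []
    for img, _, box in sorted(dets, key=lambda d: -d[1]):  # highest score first
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            overlap = iou(box, gt)
            if not matched[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= thr:
            matched[img][best_j] = True
            hits.append(1)  # true positive
        else:
            hits.append(0)  # false positive
    tp, precisions, recalls = 0, [], []
    for k, h in enumerate(hits, start=1):
        tp += h
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        # interpolated precision: best precision at this or any higher recall
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def map_50_95(dets, gts):
    thresholds = [0.50 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


gts = {0: [(0, 0, 10, 10)]}
print(map_50_95([(0, 0.9, (0, 0, 10, 10))], gts))  # perfect box
print(map_50_95([(0, 0.8, (0, 0, 10, 5))], gts))   # IoU = 0.5
```

The second usage line shows why mAP@[0.5:0.95] rewards tight boxes: a detection with IoU 0.5 counts only at the loosest threshold, so it contributes one tenth of the score a perfectly aligned box would.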

Frames Per Second (FPS):

$$\mathrm{FPS} = \frac{N_{\text{frames}}}{T_{\text{total}}},$$

where $N_{\text{frames}}$ is the total number of processed frames and $T_{\text{total}}$ is the total inference time in seconds. FPS measures real-time inference speed; higher values indicate faster processing for practical deployment. Additional metrics include Params (learnable parameters, in millions) and GFLOPs (billions of floating-point operations, measuring computational cost).
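The FPS formula is trivial, but how $T_{\text{total}}$ is measured matters. This sketch (a hypothetical helper, not the authors' benchmarking code) excludes warm-up runs, counts every frame in a batch, and accepts an optional `sync` callable; when timing GPU inference, passing something like `torch.cuda.synchronize` ensures queued kernels have finished before the clock is read.

```python
import time


def fps_from_counts(n_frames, total_seconds):
    """FPS = N_frames / T_total."""
    return n_frames / total_seconds


def benchmark(infer, batch, n_iters=50, n_warmup=5, sync=None):
    """Time repeated calls of infer(batch) and return frames per second.

    sync: optional callable that blocks until queued device work has finished.
    """
    for _ in range(n_warmup):  # warm-up runs are excluded from timing
        infer(batch)
    if sync is not None:
        sync()
    start = time.perf_counter()
    for _ in range(n_iters):
        infer(batch)
    if sync is not None:
        sync()
    elapsed = time.perf_counter() - start
    return fps_from_counts(n_iters * len(batch), elapsed)


# Usage with a stand-in "model" that sleeps 1 ms per batch of 8 frames.
fps = benchmark(lambda b: time.sleep(0.001), batch=[None] * 8, n_iters=20)
print(f"{fps:.1f} FPS")
```

Because FPS is computed per frame, the same model can report very different values at batch size 1 and batch size 8, so the batch size should always be stated alongside the number.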

3.3. Ablation Study on Lightweight Components

We evaluate the contributions of lightweight modules on SSDD, starting from the YOLOv8n baseline. Table 2 presents both individual and combined component analysis.

Parameters are measured in millions; GFLOPs are measured at 640 × 640 input resolution. LGNet backbone integrates all proposed components with Ghost convolutions.

The full LGNet backbone achieves 23.3% parameter reduction (3.0 M→2.3 M) and 16.0% computational savings (8.1 G→6.8 G) compared to YOLOv8n while maintaining competitive accuracy (mAP@95: 0.671 vs. 0.683, −1.2 pp).

Individual Component Analysis: Each lightweight module shows distinct characteristics. HGStem achieves the highest precision (0.963) but with slight mAP@95 degradation (0.676). Both GHBlock and DWConv deliver strong performance with the highest mAP@50 (0.974) among individual components, where DWConv also provides the best mAP@95 (0.687) with minimal computational overhead.

Component Combination Strategy: The combination analysis shows complementary benefits. GHBlock + DWConv achieves the most aggressive compression (2.3 M parameters, 6.3 G FLOPs) while maintaining reasonable accuracy. The complete LGNet backbone offers the best overall balance, with the highest recall (0.923), a strong mAP@50 (0.972), and a compact parameter budget (2.3 M), showing the effectiveness of the complete lightweight architecture design.

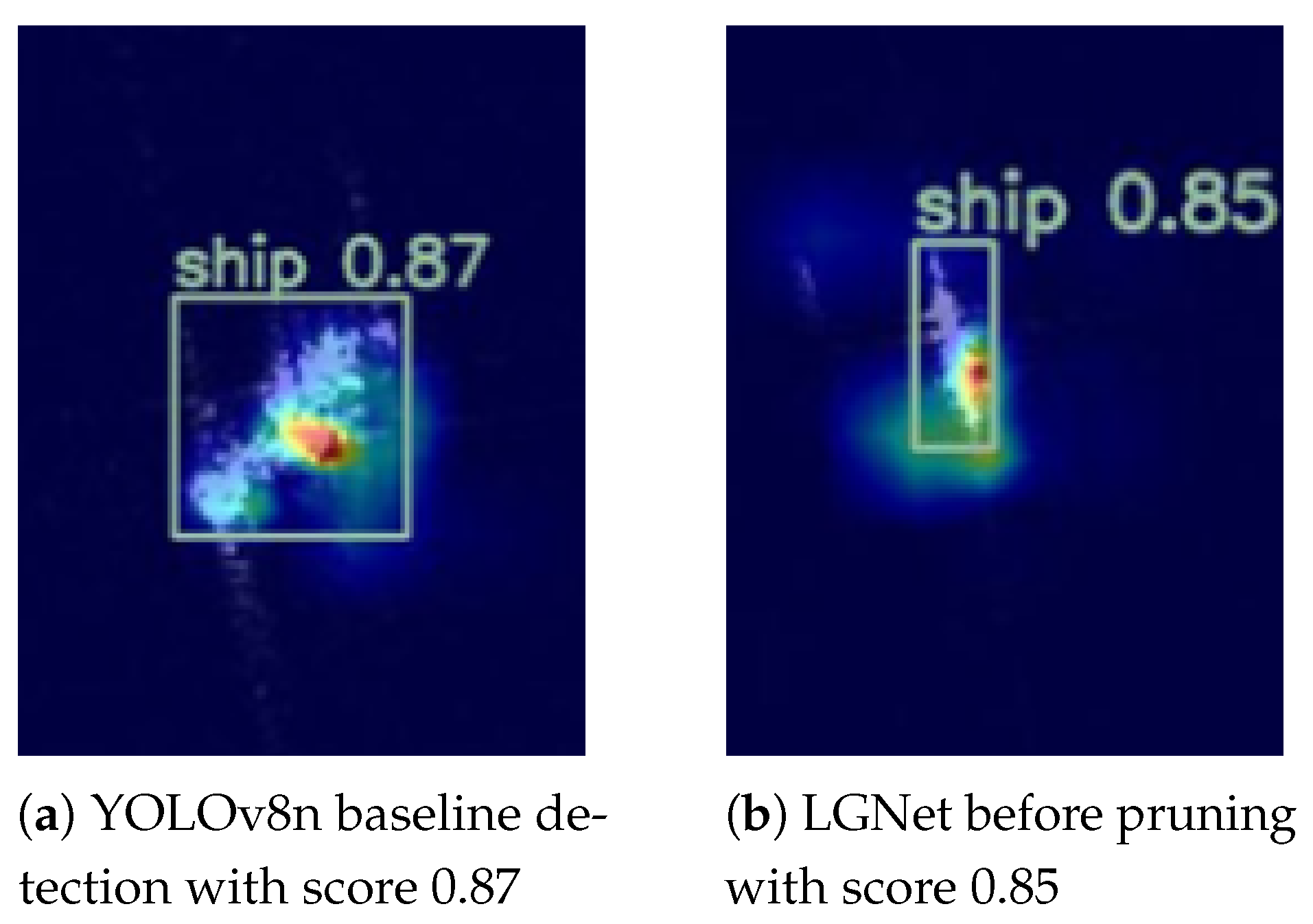

Visual Validation: Figure 6 presents activation heatmaps generated with HiResCAM (High-Resolution Class Activation Mapping) to visualize model attention patterns. HiResCAM highlights discriminative regions by multiplying the gradients of the target score with respect to the feature maps of a chosen layer element-wise by those feature maps and summing over channels; unlike Grad-CAM, it does not average the gradients spatially. Compared with the YOLOv8n baseline (detection score: 0.87), LGNet achieves comparable target localization (detection score: 0.85) despite a 23.3% parameter reduction (3.0 M→2.3 M) and 16.0% computational savings (8.1 G→6.8 G). The slight score decrease reflects an acceptable trade-off between model efficiency and detection performance in the lightweight architecture design.
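The difference between HiResCAM and Grad-CAM is easiest to see on a tiny example. This sketch operates on plain nested lists for a layer with two channels; the activations and gradients are made-up values chosen only to show that spatially averaging the gradients (Grad-CAM) can highlight different locations than the element-wise product (HiResCAM).

```python
def hirescam(acts, grads):
    """HiResCAM: sum over channels of the element-wise product gradient * activation."""
    h, w = len(acts[0]), len(acts[0][0])
    return [[sum(g[i][j] * a[i][j] for a, g in zip(acts, grads)) for j in range(w)]
            for i in range(h)]


def gradcam(acts, grads):
    """Grad-CAM: weight each channel by its spatially averaged gradient, then ReLU."""
    h, w = len(acts[0]), len(acts[0][0])
    weights = [sum(map(sum, g)) / (h * w) for g in grads]
    return [[max(0.0, sum(wk * a[i][j] for wk, a in zip(weights, acts))) for j in range(w)]
            for i in range(h)]


acts = [[[1, 0], [0, 1]], [[0, 2], [2, 0]]]    # two 2x2 feature maps
grads = [[[1, 1], [0, 0]], [[0, 0], [1, -1]]]  # d(score)/d(activation)
print("HiResCAM:", hirescam(acts, grads))
print("Grad-CAM:", gradcam(acts, grads))
```

Here the second channel's gradients average to zero, so Grad-CAM ignores it entirely, while HiResCAM still credits the location where that channel's activation and gradient are both positive.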

3.4. LAMP Pruning Results

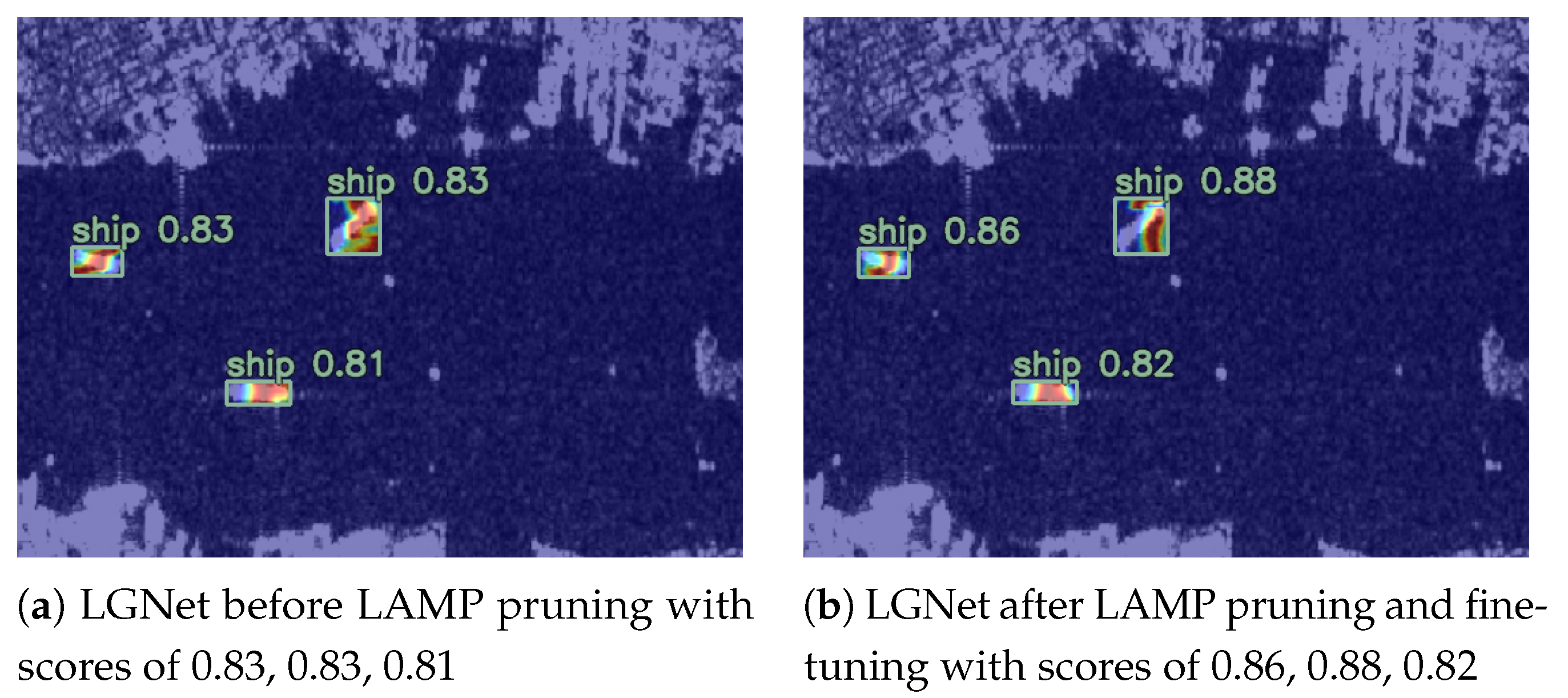

Experimental Performance Analysis. Building on the lightweight LGNet architecture, we apply LAMP-based structured channel pruning to achieve extreme model compression while maintaining detection accuracy. Table 3 demonstrates the complete three-stage pipeline: baseline performance, post-pruning degradation, and fine-tuning recovery.

The pruning process achieves 2.03 × speedup (FLOPs reduction: 51.47%) and 67.90% parameter reduction.

The results reveal that Global LAMP pruning achieves 2.03 × speedup with 67.90% parameter reduction (2.3 M→0.74 M) and 51.47% FLOP reduction (6.8 G→3.3 G). Despite a significant initial accuracy drop (mAP@50: 0.972→0.590), the 200-epoch adaptive fine-tuning with knowledge distillation successfully recovers and even improves performance beyond the original baseline: P: 0.945→0.971 (+2.6 pp), R: 0.923→0.936 (+1.3 pp), mAP@50: 0.972→0.979 (+0.7 pp), mAP@95: 0.671→0.719 (+4.8 pp).

Hyperparameter Configuration and Rationale Analysis. The success of LAMP pruning depends critically on carefully chosen hyperparameters that balance compression aggressiveness with accuracy preservation. Table 4 presents our optimized configuration.

This configuration is optimized specifically for SAR ship detection tasks with validation on SSDD and cross-dataset evaluation on RSDD-SAR.

Three critical parameters govern the compression–accuracy trade-off:

Global pruning enables cross-layer importance comparison, allowing LAMP to allocate varying sparsity levels based on layer-wise semantic importance—achieving 67.90% compression compared to only 42% with uniform layer-wise pruning.

Knowledge distillation weight ($\lambda_{\text{KD}} = 0.1$) balances soft-target guidance from the teacher model against hard-target supervision, transferring important semantic relationships while preventing over-dependence on the teacher.

Regularization ($\lambda_{\text{reg}} = 0.0005$) maintains optimization stability in the greatly reduced parameter space and helps prevent catastrophic forgetting during extended fine-tuning.

LAMP Integration with SAR Ship Detection and Technical Contributions. The LAMP pruning strategy is specifically adapted for SAR imagery characteristics and ship detection requirements. Unlike natural images, SAR data exhibits unique challenges, including speckle noise, geometric distortions, and limited training samples, making aggressive pruning particularly risky for performance preservation.

Our key technical contributions for LAMP-SAR integration include the following: (1) layer-adaptive importance scoring that accounts for the hierarchical nature of SAR feature extraction—early layers capture fundamental radar backscattering patterns while deeper layers encode complex ship signatures and contextual information; (2) structured channel pruning that maintains the spatial coherence essential for SAR target detection, avoiding the fragmented feature maps that harm small ship detection accuracy; (3) knowledge distillation with SAR-specific loss weighting that preserves the subtle discriminative features crucial for distinguishing ships from clutter and wake interference.

The final compressed model achieves substantial efficiency gains (2.03× speedup, 67.90% parameter reduction) while delivering better detection performance (+4.8 pp mAP@95). This suggests that LAMP pruning, when configured for SAR characteristics, not only reduces computational burden but can also act as a regularizer that improves generalization on challenging maritime surveillance tasks. Combining extreme compression with improved accuracy makes the approach well suited to resource-constrained SAR applications such as real-time maritime monitoring and autonomous vessel detection systems (Figure 7).

Training dynamics analysis further confirms convergence stability. Pre-pruning training exhibits rapid initial learning (epochs 1–50) followed by refinement (epochs 51–80) and plateau (epochs 81–100), with validation mAP@50 stabilizing at >0.96 and loss converging smoothly without oscillation. Post-pruning fine-tuning demonstrates two-phase behavior: rapid recovery (epochs 1–50, mAP@95: 0.391→0.65) through knowledge distillation guidance, followed by gradual refinement (epochs 51–200) with a close-mosaic strategy contributing to final improvement. The smooth convergence without significant oscillation validates hyperparameter stability across both training stages.

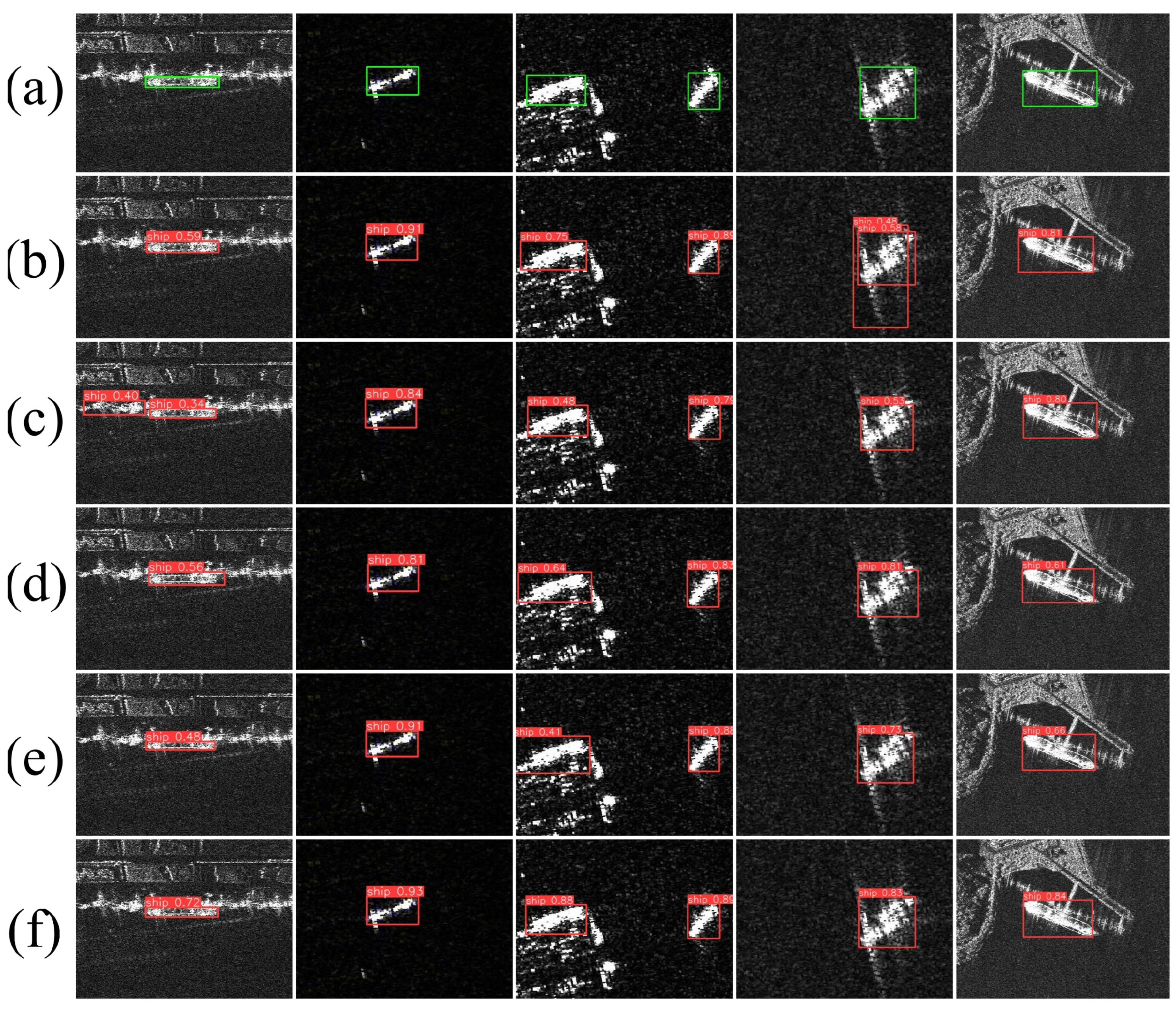

3.5. Comparative Analysis

LGNet produces concentrated target responses while suppressing background interference. Compared with YOLOv5n, Faster R-CNN, FASC-Net, and RLE-YOLO, LGNet better preserves small targets while maintaining precise localization (Figure 8).

As shown in Figure 8, qualitative comparison across six representative scenarios reveals LGNet’s superior performance in handling SAR-specific challenges. In the first scenario (column 1), featuring large vessels in harbor environments with strong land clutter, all methods achieve successful detection, but LGNet (row f) demonstrates the tightest bounding box alignment with ground truth (row a), indicating more precise localization capability. The second scenario (column 2) presents a critical small-target detection challenge where YOLOv5n (row b) produces a noticeably larger bounding box with reduced confidence, while Faster R-CNN (row c), FASC-Net (row d), and RLE-YOLO (row e) maintain reasonable detection quality. LGNet achieves the most compact and accurate bounding box, demonstrating superior small-target discrimination ability.

The third scenario (column 3) represents the most challenging case with severe speckle noise and heterogeneous sea clutter that creates multiple false-alarm candidates. Here, YOLOv5n exhibits slight localization deviation, while Faster R-CNN shows marginal bounding box drift. FASC-Net and RLE-YOLO maintain stable detection, but LGNet provides the most precise localization with minimal background interference, validating its robust noise suppression capability through Ghost-enhanced feature extraction. In the fourth scenario (column 4), featuring another small ship target, all methods successfully detect the vessel, yet LGNet consistently delivers the tightest bounding box fit, demonstrating its advantage in preserving fine-grained spatial details through hierarchical multi-scale feature aggregation.

The fifth scenario (column 5) presents a complex case with oblique ship orientation and strong land–background interference. Here, YOLOv5n produces slightly loose bounding boxes, Faster R-CNN maintains reasonable accuracy, and FASC-Net and RLE-YOLO achieve competitive results. LGNet again shows the most precise boundary delineation, suppressing complex background interference while keeping target localization tight. The remaining scenario tests medium-sized ship detection in a relatively clean background, where all methods perform well, confirming baseline detection capability.

Overall, LGNet exhibits three key advantages: (1) superior small-target preservation with consistently tighter and more accurate bounding boxes across all scenarios; (2) enhanced robustness to speckle noise and sea clutter through Ghost convolution-based feature redundancy exploitation, which reduces sensitivity to local intensity variations; (3) precise localization capability in complex backgrounds via hierarchical GHBlock modules and multi-scale feature pyramid integration that balances fine-grained spatial details with high-level semantic understanding. These qualitative observations align with the quantitative superiority demonstrated in Table 5, where LGNet achieves the highest mAP@95 (71.9%) while maintaining the smallest model footprint (0.74 M parameters, 3.3 GFLOPs) and fastest inference speed (681 FPS).

On SSDD, LGNet outperforms YOLOv8n and RLE-YOLO by 3.5–3.6 pp in mAP@95 while running 13% faster than YOLOv8n (Table 5).

LGNet achieves the best mAP@95 (71.9%) on SSDD with the smallest model size (0.74 M parameters) and lowest computational cost (3.3 GFLOPs), giving the best accuracy–efficiency balance among the compared methods. Parameters are reported in millions; GFLOPs are measured at 640 × 640 input resolution. FPS was measured on an NVIDIA GeForce RTX 2080 Ti GPU with batch size 8, so that all methods are compared under identical hardware conditions.
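
The FPS protocol described above (identical hardware, batch size 8) can be approximated with a simple timing harness. This is a generic sketch, not the authors' benchmarking script: `infer` stands in for a model call, `fake_infer` is a hypothetical placeholder workload, and the warm-up count is an assumption. With GPU frameworks that execute asynchronously, `infer` must block until results are ready (in PyTorch, for example, by calling `torch.cuda.synchronize()`); otherwise the timer captures only kernel-launch overhead.

```python
import time
from typing import Callable, Sequence


def throughput_fps(num_images: int, elapsed_s: float) -> float:
    """Images processed per second over a timed interval."""
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return num_images / elapsed_s


def benchmark(infer: Callable[[Sequence], object], images: Sequence,
              batch_size: int = 8, warmup_batches: int = 10) -> float:
    """Run `infer` over `images` in fixed-size batches and return images/s.

    Warm-up batches run first and are excluded from timing, so one-off costs
    (lazy initialization, kernel selection, caching) do not distort the result.
    """
    batches = [images[i:i + batch_size] for i in range(0, len(images), batch_size)]
    for batch in batches[:warmup_batches]:
        infer(batch)
    start = time.perf_counter()
    for batch in batches:
        infer(batch)
    elapsed = time.perf_counter() - start
    return throughput_fps(len(images), elapsed)


# Hypothetical stand-in for a detector call: some CPU work per image.
def fake_infer(batch):
    return [sum(i * i for i in range(2000)) for _ in batch]


print(f"{benchmark(fake_infer, list(range(256))):.0f} images/s")
```

Whether pre-processing and non-maximum suppression fall inside the timed region can change the reported FPS, so that choice should be kept identical across all compared methods.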

Cross-dataset evaluation on RSDD-SAR (Table 1) uses the same hyperparameters as SSDD (Table 4) and the same training protocol (100 epochs of pre-pruning training followed by 200 epochs of post-pruning fine-tuning). The results (Table 6) validate the architecture’s robustness across dataset scales and annotation types.

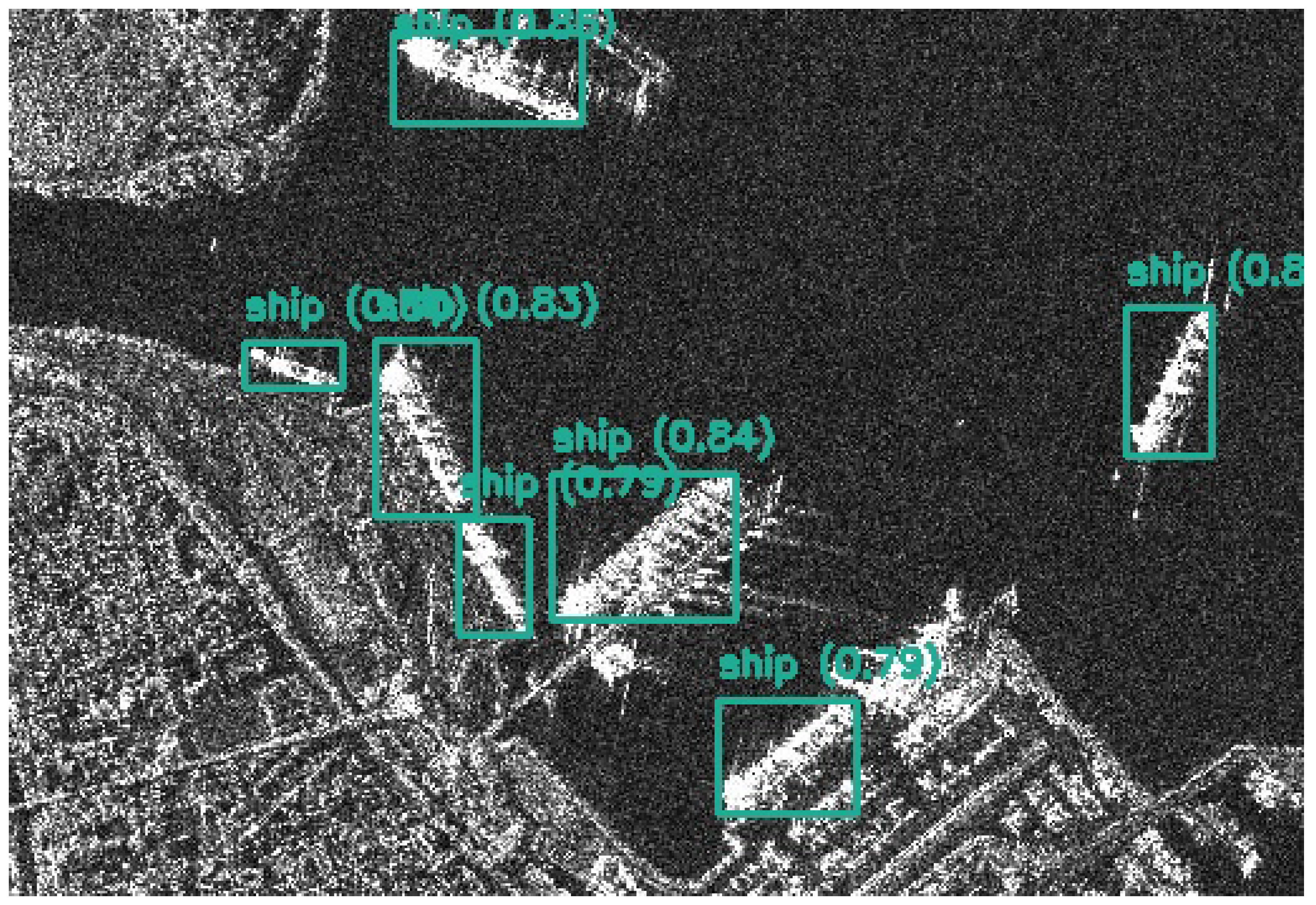

LGNet achieves the highest mAP@50 (94.4%) among all evaluated methods on RSDD-SAR, surpassing the second-best method, LSD-Det (+4.1 pp), as well as Rotated-RTMDet-s (+4.2 pp), Oriented RepPoints (+4.9 pp), R3Det (+5.5 pp), S2ANet (+6.0 pp), SPG-OSD (+25.1 pp), and the YOLOv8n baseline (+28.5 pp). Although LSD-Det achieves higher precision (92.4%) and recall (90.3%), these values are measured at a single operating point, whereas mAP@50 summarizes precision over the full recall range; LGNet’s best mAP@50 therefore indicates stronger overall detection performance and supports its effectiveness for cross-dataset generalization in SAR ship detection (Figure 9).
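
A short AP computation makes the difference between an operating-point metric and mAP concrete. The sketch below computes single-class AP for one image with axis-aligned boxes, greedy matching, and all-point interpolation. It is illustrative only: dataset-level AP pools the ranked detections from every image, RSDD-SAR’s oriented boxes would need a rotated-polygon IoU, and COCO-style tools use a 101-point interpolation that can give slightly different numbers. Reading this paper’s “mAP@95” as the average over IoU thresholds 0.50:0.05:0.95 is an assumption.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # axis-aligned (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                      iou_thr: float) -> float:
    """Single-class AP for one image: greedy matching, all-point interpolation."""
    if not gts:
        return 0.0
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)  # highest score first
    matched = [False] * len(gts)
    tp: List[int] = []
    for _, box in ranked:
        # Match to the best still-unmatched ground truth at or above the threshold.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
        tp.append(1 if best_j >= 0 else 0)
    # Precision and recall after each ranked detection.
    recalls, precisions, cum_tp = [], [], 0
    for k, hit in enumerate(tp, start=1):
        cum_tp += hit
        recalls.append(cum_tp / len(gts))
        precisions.append(cum_tp / k)
    # Envelope: each precision becomes the max precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the interpolated precision-recall curve.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_ap(dets, gts, thresholds: Sequence[float]) -> float:
    """AP averaged over IoU thresholds: [0.5] for mAP@50, COCO_THRESHOLDS for 0.50:0.95."""
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95

# Toy example: one exact hit, one false alarm, one slightly offset hit (IoU ~0.68).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
print(mean_ap(dets, gts, [0.5]), mean_ap(dets, gts, COCO_THRESHOLDS))
```

The offset box counts as a hit at IoU 0.50 but not at the stricter thresholds, which is why the 0.50:0.95 average rewards the tight localization discussed in the qualitative comparison.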

4. Discussion

4.1. Experimental Validation and Performance Analysis

Our experimental evaluation shows that LGNet achieves superior performance across multiple benchmarks and deployment scenarios. The validation combined comprehensive ablation studies on SSDD (Table 2 and Table 3) with cross-dataset evaluation on RSDD-SAR (Table 6) to verify the architecture’s robustness and generalization capability.

The architectural design validation confirms that the lightweight approach is effective. Individual component analysis shows that GHBlock achieves the best balance between accuracy and efficiency (mAP@95: 0.682, 2.7 M parameters, 7.3 GFLOPs), while DWConv delivers the highest individual accuracy (0.687). Integrating Ghost convolutions throughout the backbone yields substantial computational benefits: replacing the standard C2f modules gives a 45.2% FLOPs reduction and a 45.1% parameter reduction with a 1.89× measured speedup, at a cost of less than 1 pp in mAP. The complete LGNet backbone achieves a 23.3% parameter reduction and 16.0% computational savings relative to the YOLOv8n baseline.
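
The near-halving of parameters and FLOPs reported above follows from how a Ghost module is built in GhostNet: a primary convolution produces only c_out/s “intrinsic” feature maps, and cheap depthwise operations generate the remaining maps from them. The sketch below counts convolution weights only (no bias or BatchNorm); the ratio s = 2 and cheap-kernel size d = 3 are assumptions rather than LGNet’s documented settings, and the function names are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k


def ghost_module_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a Ghost module producing c_out channels.

    A primary k x k convolution yields m = c_out // s intrinsic maps; a
    depthwise d x d 'cheap operation' then generates (s - 1) ghost maps
    from each intrinsic map, and both sets are concatenated.
    """
    m = c_out // s
    primary = c_in * m * k * k
    cheap = (s - 1) * m * d * d  # one d x d filter per generated ghost map
    return primary + cheap


for c_in, c_out, k in [(64, 64, 3), (128, 128, 3), (256, 256, 1)]:
    std = standard_conv_params(c_in, c_out, k)
    ghost = ghost_module_params(c_in, c_out, k)
    print(f"{c_in}->{c_out}, k={k}: {std} vs {ghost} ({1 - ghost / std:.1%} fewer)")
```

For these layer sizes the per-layer saving is about 48–50%, close to the ideal 1 − 1/s. The measured 45.1% reduction for the C2f replacement is plausibly lower because those modules also contain layers that are not Ghost modules, although that attribution is an assumption.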

The LAMP pruning strategy proves highly effective for extreme model compression. Despite the initial accuracy drop after pruning (mAP@50: 0.970→0.590), the 200-epoch adaptive fine-tuning with knowledge distillation recovers and surpasses the baseline performance (final mAP@95: 0.719, a 4.8 pp improvement). This aggressive compression achieves a 67.90% parameter reduction (2.3 M→0.74 M) and a 51.47% FLOPs reduction (6.8 G→3.3 G), showing that layer-adaptive pruning can maintain detection capability while substantially reducing model complexity.
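
LAMP (layer-adaptive magnitude-based pruning) rescales each weight’s squared magnitude by the total squared magnitude of the weights that outrank it in the same layer, so one global threshold can be applied across layers without wiping out layers whose weights are uniformly small. The sketch below computes the original weight-level LAMP score and a global unstructured mask. LGNet’s pruning presumably removes whole channels, where the same normalization would be applied to per-channel importance scores instead, so this illustrates the scoring rule rather than the authors’ pipeline; the function names are hypothetical.

```python
from typing import Dict, List


def lamp_scores(weights: List[float]) -> List[float]:
    """LAMP score for each weight of one layer, returned in the original order.

    Weights are ranked by ascending magnitude; a weight's score is its squared
    magnitude divided by the sum of squared magnitudes of itself and every
    larger-ranked weight in the same layer.
    """
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    sq = [weights[i] ** 2 for i in order]
    scores = [0.0] * len(weights)
    tail = 0.0
    # Walk from the largest weight down, accumulating the suffix sum.
    for rank in range(len(order) - 1, -1, -1):
        tail += sq[rank]
        scores[order[rank]] = sq[rank] / tail if tail > 0 else 0.0
    return scores


def global_lamp_masks(layers: Dict[str, List[float]],
                      sparsity: float) -> Dict[str, List[bool]]:
    """Keep-masks that prune the `sparsity` fraction of weights with the lowest LAMP scores."""
    all_scores = [(s, name, i) for name, w in layers.items()
                  for i, s in enumerate(lamp_scores(w))]
    n_prune = int(sparsity * len(all_scores))
    pruned = {(name, i) for _, name, i in sorted(all_scores)[:n_prune]}
    return {name: [(name, i) not in pruned for i in range(len(w))]
            for name, w in layers.items()}


layers = {"conv1": [0.1, -0.4, 0.2, 0.05], "conv2": [2.0, 1.0, -3.0, 0.5]}
print(global_lamp_masks(layers, sparsity=0.5))
```

In this toy example, plain global magnitude pruning at 50% sparsity would remove all four `conv1` weights, since they are the four smallest overall, whereas LAMP keeps the two largest weights of each layer. The top weight of every layer always receives the maximum score of 1, which is what protects small-magnitude layers.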

Benchmarking confirms LGNet’s advantages across evaluation scenarios. On SSDD, LGNet achieves the best mAP@95 (71.9%) with the smallest model size (0.74 M parameters) and lowest computational cost (3.3 GFLOPs), outperforming traditional two-stage detectors by 10.1–12.6 pp with a 98.2% parameter reduction. Cross-dataset evaluation on RSDD-SAR confirms robust generalization: LGNet achieves the highest mAP@50 (94.4%) among all evaluated methods, surpassing recent oriented detectors including LSD-Det (+4.1 pp), Rotated-RTMDet-s (+4.2 pp), and R3Det (+5.5 pp).

4.2. Edge Deployment Validation

Real-world deployment on the Huawei Atlas AIpro-20T confirms LGNet’s practical feasibility for edge computing. The model runs at 135.39 FPS (7.39 ms latency) on the SSDD test set with 640 × 640 input, demonstrating real-time capability on resource-constrained hardware (Figure 10). This result supports the effectiveness of the dual-strategy lightweight approach for practical maritime surveillance applications, where computational resources and power consumption are critical constraints.

The edge deployment results highlight LGNet’s suitability for autonomous systems such as unmanned aerial vehicles (UAVs), autonomous surface vessels, and satellite-based monitoring platforms. The ultra-compact model (0.74 M parameters) fits on devices with limited memory capacity (<2 GB), while its low computational requirement (3.3 GFLOPs per 640 × 640 inference) keeps the per-frame workload within reach of low-power edge accelerators.
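
The memory and compute figures above can be checked with simple arithmetic. Weight storage is the parameter count times bytes per parameter, and an idealized frame-rate ceiling is peak throughput (GFLOPS, operations per second) divided by per-frame cost (GFLOPs, operations per inference). The numeric formats and the 10 GFLOPS device are illustrative assumptions; real deployments also need memory for activations and the runtime, and quantized execution on an accelerator does not map one-to-one onto the reported GFLOPs count.

```python
def weight_memory_mb(num_params: float, bytes_per_param: int) -> float:
    """Storage for the weights alone, in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


def compute_bound_fps(peak_gflops_per_s: float, gflops_per_frame: float) -> float:
    """Idealized frame-rate ceiling: peak throughput divided by per-frame cost."""
    return peak_gflops_per_s / gflops_per_frame


def effective_gflops_per_s(fps: float, gflops_per_frame: float) -> float:
    """Throughput actually sustained at a measured frame rate."""
    return fps * gflops_per_frame


def latency_ms(fps: float) -> float:
    """Per-frame latency implied by a frame rate when frames run one at a time."""
    return 1000.0 / fps


params = 0.74e6          # LGNet parameter count reported above
gflops_per_frame = 3.3   # reported cost per 640 x 640 inference

for fmt, nbytes in [("FP32", 4), ("FP16", 2), ("INT8", 1)]:
    print(f"{fmt} weights: {weight_memory_mb(params, nbytes):.2f} MB")
print(f"hypothetical 10 GFLOPS device: <= {compute_bound_fps(10, gflops_per_frame):.1f} FPS")
print(f"135.39 FPS -> {latency_ms(135.39):.2f} ms/frame, "
      f"~{effective_gflops_per_s(135.39, gflops_per_frame):.0f} GFLOPS sustained")
```

Weights occupy about 3 MB even in FP32, far below a 2 GB memory budget. Conversely, a device sustaining only 10 GFLOPS would cap LGNet at about 3 FPS, so the 135.39 FPS measured on the Atlas AIpro-20T corresponds to an effective throughput of roughly 447 GFLOPS in the units of the reported GFLOPs count.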

4.3. Limitations and Failure Case Analysis

While LGNet achieves strong overall performance, certain limitations deserve acknowledgment. Failure case analysis reveals challenges in specific SAR detection scenarios.

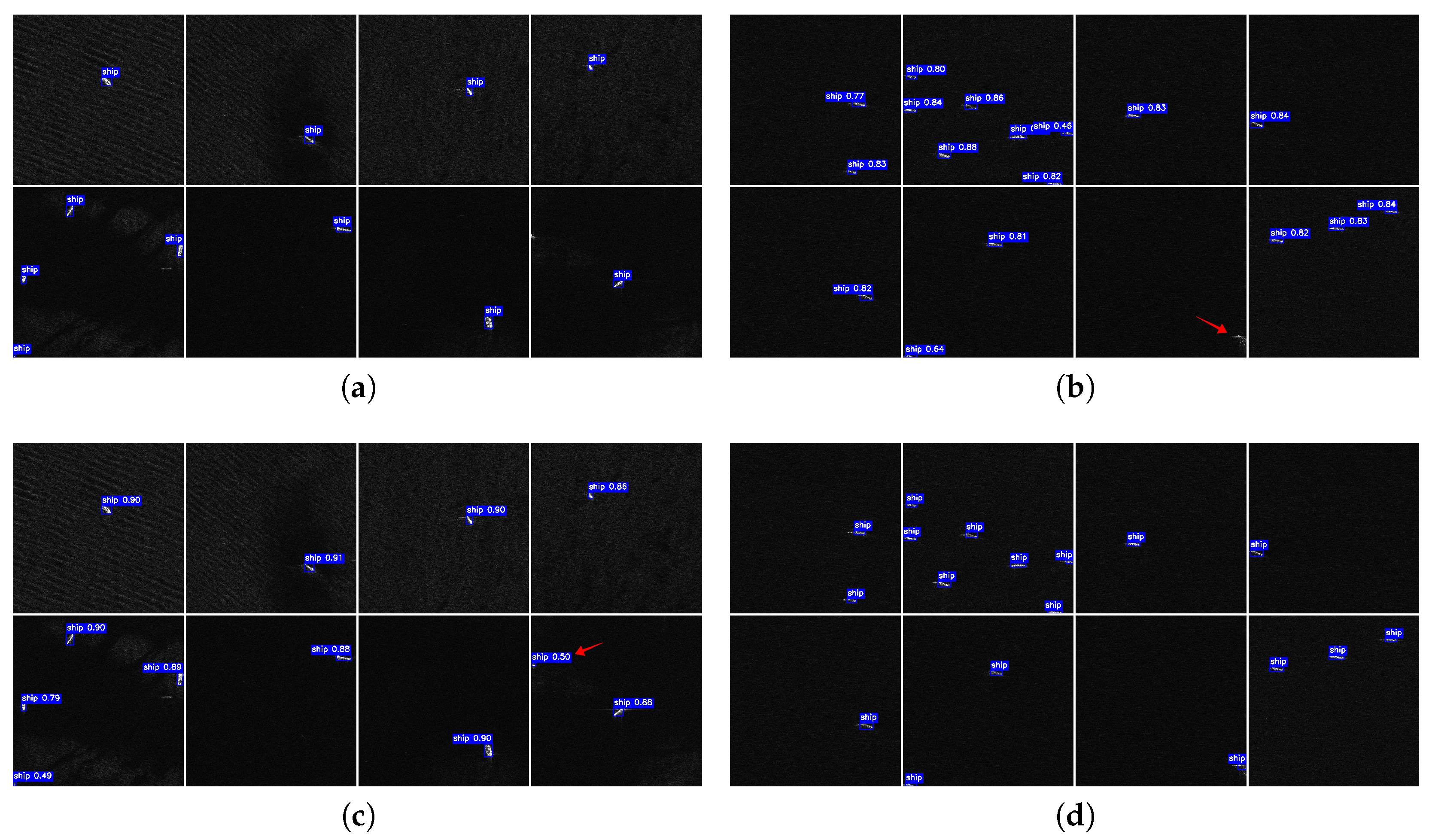

Figure 9 shows typical error patterns: a false positive in image (b) (second row, fourth column), where a non-ship target is misclassified as a vessel, and a false negative in image (d) (first row, second column), where a ship remains undetected.

The lightweight architecture has particular difficulty in densely clustered harbor environments, where overlapping vessel signatures complicate the delineation of individual targets. Small or partially occluded targets also challenge the compressed model, whose reduced feature capacity may not retain the fine-grained signatures needed to identify such vessels.

The model occasionally produces false positives in complex coastal environments, where man-made structures (piers, oil platforms, large debris) produce SAR signatures similar to those of ships. False negatives occur primarily under low signal-to-noise ratio conditions or when ships are only partially visible at image boundaries. These limitations reflect the inherent trade-off between model compression and representational capacity, suggesting that certain detection scenarios may require specialized handling or ensemble approaches.

Cross-sensor and cross-domain generalization requires further evaluation, particularly for different frequency bands (L-band, S-band), polarization configurations (HH, VV, VH, HV), and extreme weather conditions (heavy precipitation, high sea states). The current evaluation focuses on C-band and X-band SAR data under typical maritime conditions, limiting the assessment of broader operational robustness.

4.4. Contribution to SAR-Based Ship Detection

LGNet’s integration with SAR imagery advances maritime surveillance capabilities. SAR sensors offer unique advantages, including all-weather operation, day–night independence, and cloud penetration, but they also introduce computational challenges through complex preprocessing requirements, large data volumes, and specialized feature patterns.

LGNet addresses these SAR-specific challenges through targeted innovations. Ghost convolution efficiently exploits redundant feature patterns characteristic of maritime SAR scenes while minimizing computational overhead. GHBlock’s hierarchical aggregation enables robust multi-scale detection essential for SAR imagery, where vessel appearances vary dramatically with imaging geometry and target characteristics.

The LAMP pruning strategy proves well suited to SAR detection: the semantic information preserved after aggressive compression remains sufficient for accurate ship classification. This is particularly valuable for SAR applications, where conventional deep learning models often demand computational resources beyond the capabilities of maritime edge computing platforms.

Successful deployment on edge hardware points toward a shift in SAR-based maritime surveillance: distributed monitoring networks in which individual sensors perform intelligent detection on board rather than relying on ground-based processing centers. This capability is especially valuable in remote maritime areas, for autonomous vessel navigation, and in real-time threat assessment, where communication bandwidth and latency constraints rule out cloud-based processing.

Future research directions should focus on expanding the model’s robustness across diverse SAR acquisition parameters, incorporating temporal and polarimetric information for enhanced detection reliability, and developing specialized architectures for emerging maritime surveillance challenges such as small vessel trafficking detection and illegal fishing monitoring in protected waters.

5. Conclusions

We present LGNet, an ultra-lightweight SAR ship detection network that combines architectural innovation with intelligent compression to achieve high efficiency without sacrificing accuracy. The dual-strategy approach—Ghost-enhanced architecture with adaptive pruning—shows that substantial model compression and high detection performance can coexist in SAR applications.

Comprehensive evaluation validates LGNet’s effectiveness across diverse SAR scenarios, achieving superior performance on SSDD (mAP@95: 71.9%) and RSDD-SAR (mAP@50: 94.4%) with remarkable efficiency (0.74 M parameters, 3.3 GFLOPs). This represents a significant advance in computational efficiency for maritime surveillance without compromising detection quality.

LGNet generalizes well across SAR datasets and annotation formats, maintaining consistent performance on both horizontal (SSDD) and oriented (RSDD-SAR) bounding box detection tasks. This adaptability supports the architecture’s suitability for varied SAR imaging conditions and operational requirements within the sensor and condition range evaluated here.

Real-time deployment on edge hardware (Huawei Atlas AIpro-20T, 135.39 FPS) confirms LGNet’s practical viability for resource-constrained maritime surveillance systems. The model’s exceptional efficiency makes it particularly suited for autonomous platforms and distributed monitoring networks operating in challenging maritime environments.