KFGOD: A Fine-Grained Object Detection Dataset in KOMPSAT Satellite Imagery

Highlights

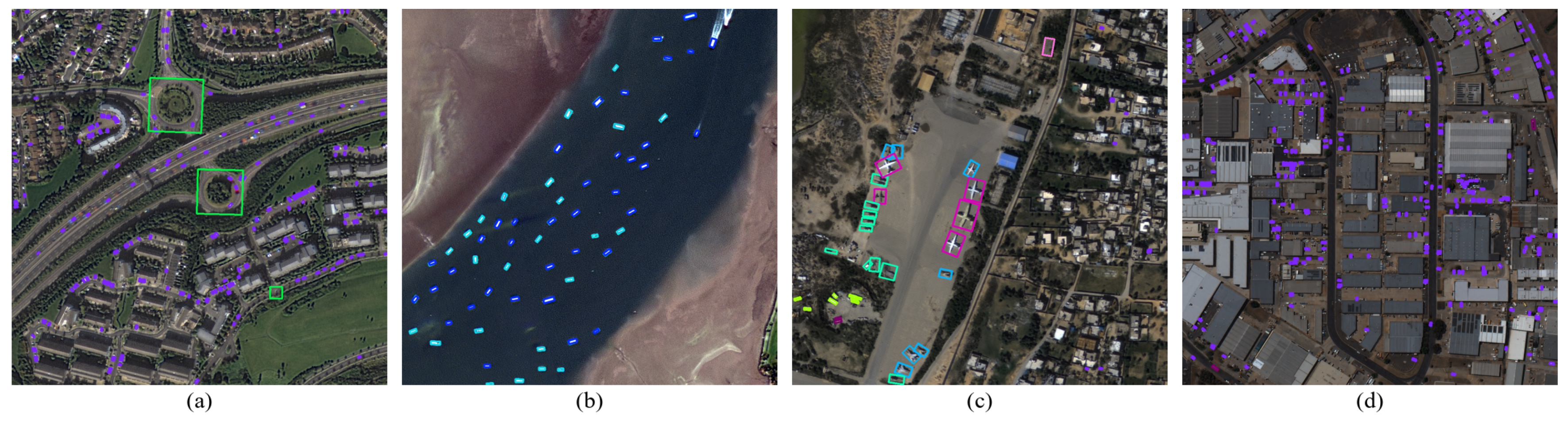

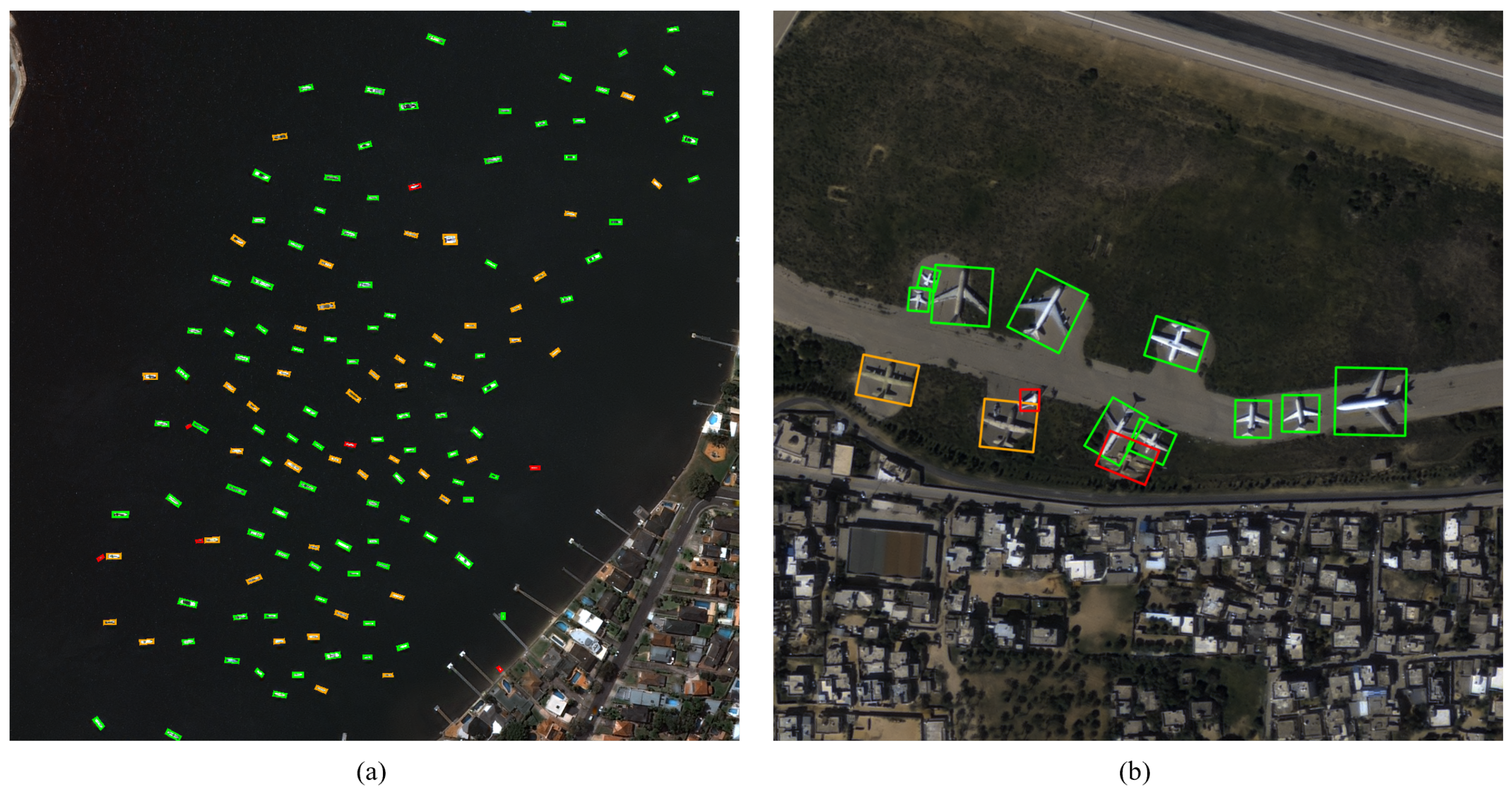

- KFGOD provides approximately 880K object instances across 33 fine-grained classes from homogeneous KOMPSAT-3/3A imagery (0.55–0.7 m resolution), with dual OBB+HBB annotations.

- Because all imagery comes from KOMPSAT-3/3A, KFGOD offers a well-controlled, sensor-consistent benchmark that minimizes sensor-induced domain gaps and enables a fair comparison of detection algorithms.

- Benchmark results (best mAP of 63.9%) confirm that KFGOD is a challenging benchmark and highlight open, real-world research problems in fine-grained, long-tail, and oriented object detection.

- Multi-format label support and demonstrated real-world use cases (e.g., Korea Coast Guard maritime surveillance) show that KFGOD is practically useful and generalizes well to diverse high-resolution satellite imagery.
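
The dual OBB+HBB annotation mentioned in the highlights can be illustrated with a minimal sketch. It assumes an oriented box is stored as four (x, y) corner points and that the horizontal box is the axis-aligned rectangle enclosing them; the function name `obb_to_hbb` and the sample coordinates are hypothetical, and the source does not state how KFGOD derives its HBB labels.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def obb_to_hbb(corners: Sequence[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) enclosing the four OBB corners.

    Assumption: the OBB is given as four (x, y) corner points in pixels.
    """
    if len(corners) != 4:
        raise ValueError("expected four (x, y) corner points")
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical example: a square rotated by 45 degrees.
example_obb = [(10.0, 0.0), (20.0, 10.0), (10.0, 20.0), (0.0, 10.0)]
example_hbb = obb_to_hbb(example_obb)
print(example_hbb)
```

For a rotated object the enclosing HBB covers more background than the OBB, which is one reason oriented annotations are useful for elongated targets such as ships.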

Abstract

1. Introduction

2. Related Work

3. Dataset Construction

3.1. Image Acquisition and Preprocessing

3.1.1. KOMPSAT-3/3A Data Collection

3.1.2. Image Preprocessing

3.2. Annotation Strategy

3.2.1. Annotation Protocol

3.2.2. Quality Control

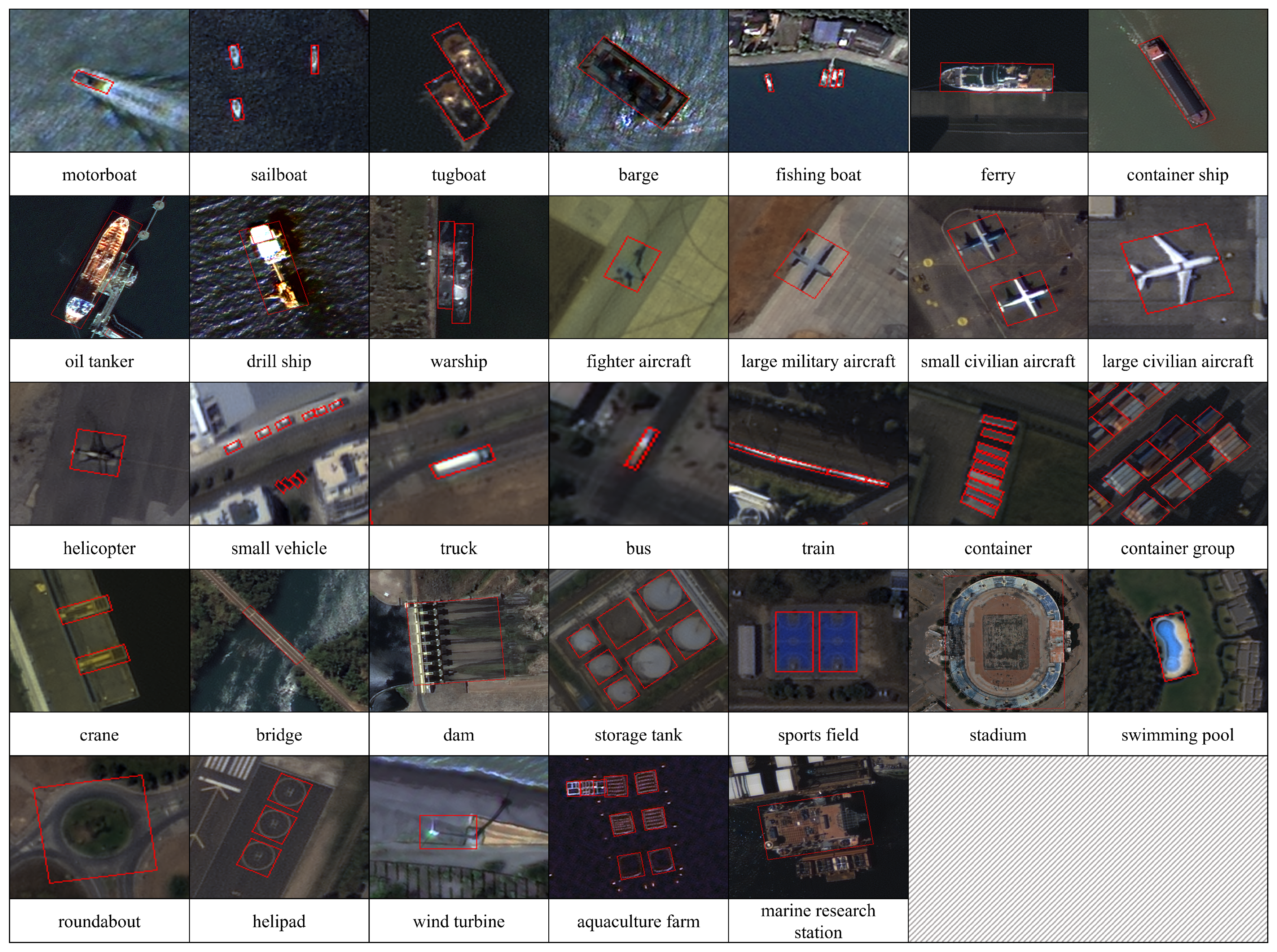

3.3. Class Definition

4. Dataset Characteristics

4.1. Dataset Split

4.2. Overall Class Distribution

4.3. Class Frequency by Image

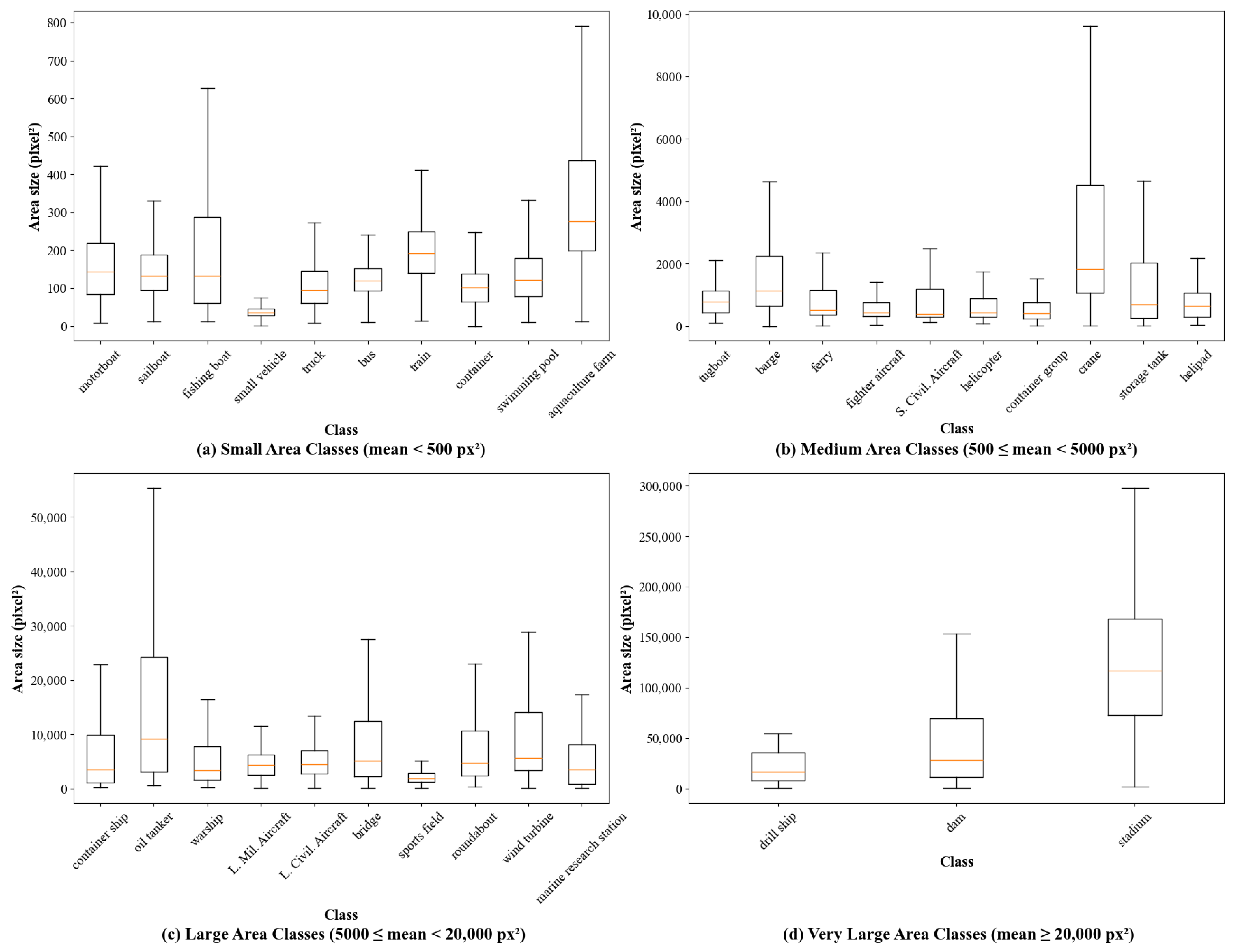

4.4. Instance Size Distribution

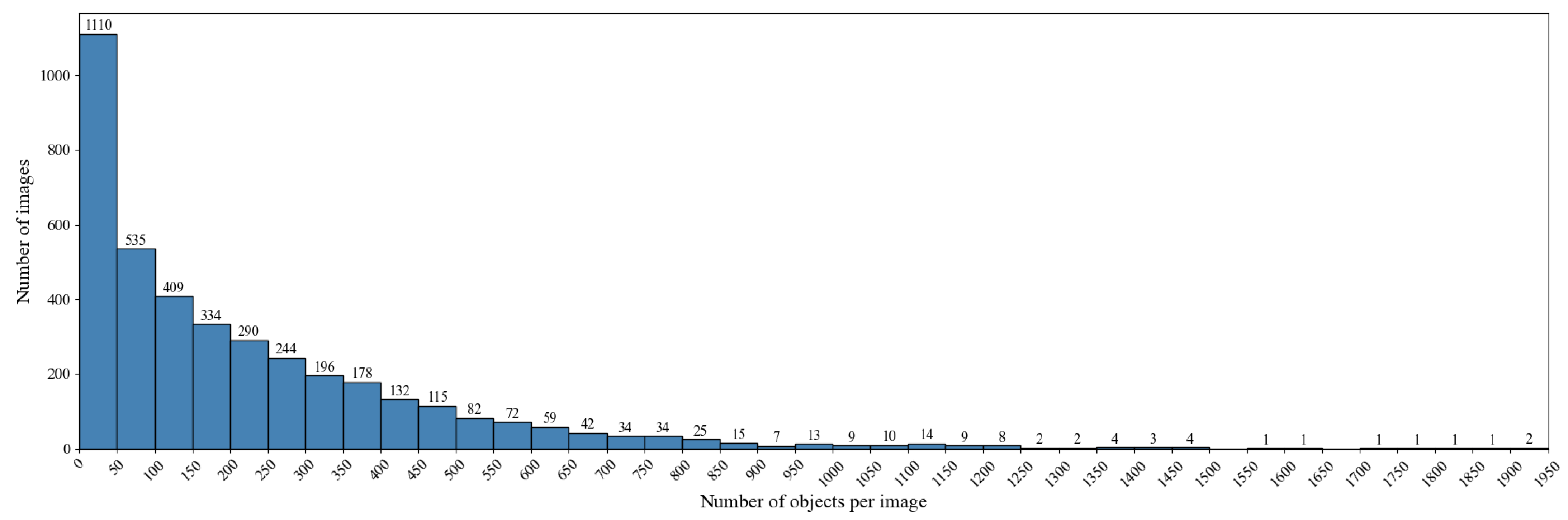

4.5. Instance Density per Image

5. Experimental Evaluation

5.1. Experimental Setup

5.1.1. Dataset and Preprocessing

5.1.2. Baseline Models

5.1.3. Implementation Details

5.2. Evaluation Tasks and Metrics

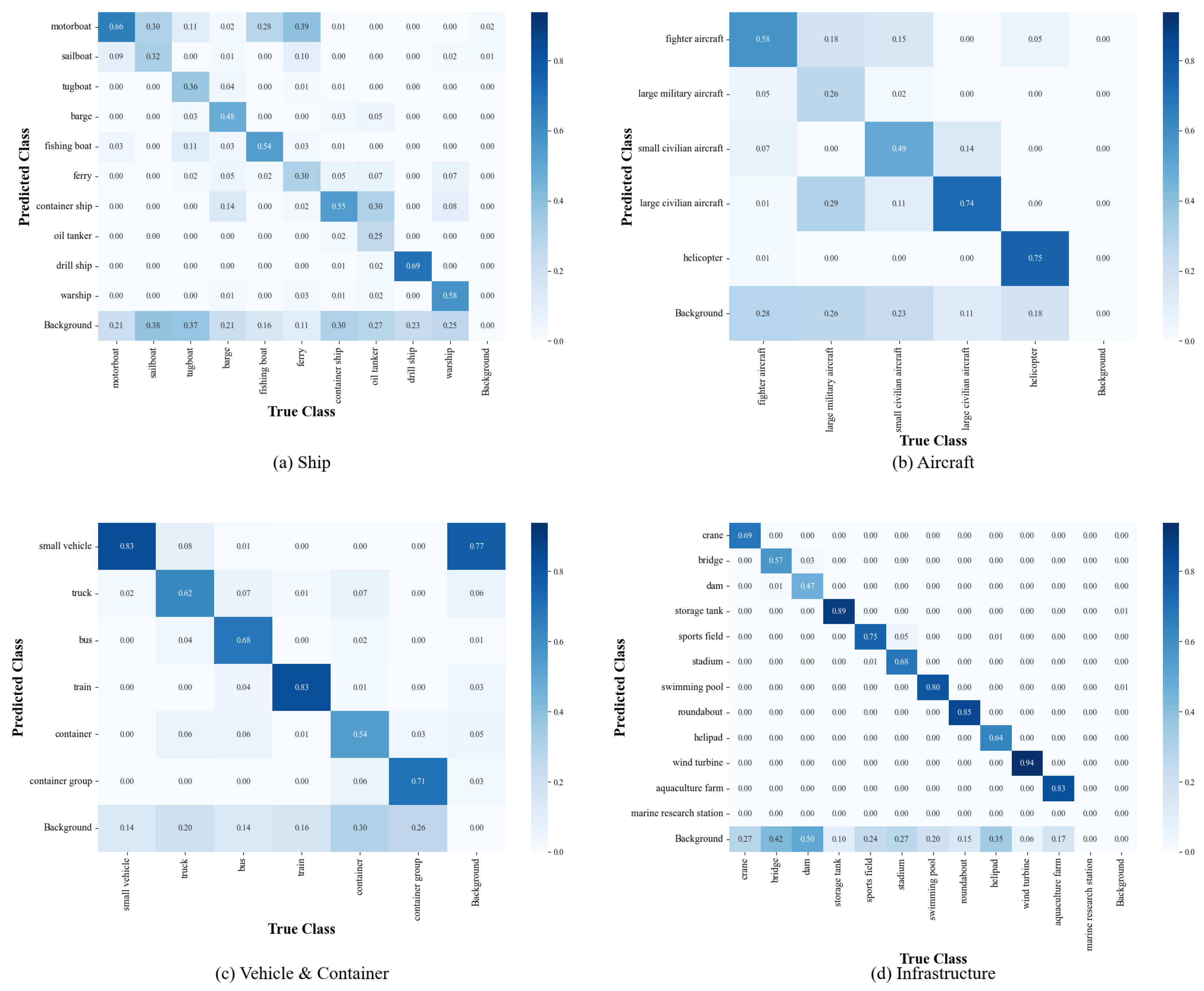

5.3. Benchmark Results and Analysis

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

2. Gui, S.; Song, S.; Qin, R.; Tang, Y. Remote sensing object detection in the deep learning era—A review. Remote Sens. 2024, 16, 327.

3. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

4. Lam, D.; Kuzma, R.; McGee, K.; Dooley, S.; Laielli, M.; Klaric, M.; Bulatov, Y.; McCord, B. xView: Objects in Context in Overhead Imagery. arXiv 2018, arXiv:1802.07856.

5. Christie, G.; Fendley, N.; Wilson, J.; Mukherjee, R. Functional map of the world. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6172–6180.

6. Minetto, R.; Segundo, M.P.; Rotich, G.; Sarkar, S. Measuring human and economic activity from satellite imagery to support city-scale decision-making during COVID-19 pandemic. IEEE Trans. Big Data 2020, 7, 56–68.

7. Chen, Y.; Qin, R.; Zhang, G.; Albanwan, H. Spatial temporal analysis of traffic patterns during the COVID-19 epidemic by vehicle detection using planet remote-sensing satellite images. Remote Sens. 2021, 13, 208.

8. Golej, P.; Horak, J.; Kukuliac, P.; Orlikova, L. Vehicle detection using panchromatic high-resolution satellite images as a support for urban planning. Case study of Prague’s centre. GeoScape 2022, 16, 108–119.

9. Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

10. Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T.; et al. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.

11. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

14. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

15. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

16. Jakubik, J.; Roy, S.; Phillips, C.; Fraccaro, P.; Godwin, D.; Zadrozny, B.; Szwarcman, D.; Gomes, C.; Nyirjesy, G.; Edwards, B.; et al. Foundation models for generalist geospatial artificial intelligence. arXiv 2023, arXiv:2310.18660.

17. Tong, K.; Wu, Y.; Zhou, F. Recent advances in small object detection based on deep learning: A review. Image Vis. Comput. 2020, 97, 103910.

18. Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards large-scale small object detection: Survey and benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

19. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.S.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

20. Shi, F.; Zhang, T.; Zhang, T. Orientation-aware vehicle detection in aerial images via an anchor-free object detection approach. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5221–5233.

21. Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.S. Tiny object detection in aerial images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3791–3798.

22. Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

23. Li, X.; Men, F.; Lv, S.; Jiang, X.; Pan, M.; Ma, Q.; Yu, H. Vehicle detection in very-high-resolution remote sensing images based on an anchor-free detection model with a more precise foveal area. ISPRS Int. J. Geo-Inf. 2021, 10, 549.

24. Liu, Z.; Wang, H.; Weng, L.; Yang, Y. Ship rotated bounding box space for ship extraction from high-resolution optical satellite images with complex backgrounds. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1074–1078.

25. Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

26. Rufener, M.C.; Ofli, F.; Fatehkia, M.; Weber, I. Estimation of internal displacement in Ukraine from satellite-based car detections. Sci. Rep. 2024, 14, 31638.

27. Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

28. Mundhenk, T.N.; Konjevod, G.; Sakla, W.A.; Boakye, K. A large contextual dataset for classification, detection and counting of cars with deep learning. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 785–800.

29. Chen, K.; Wu, M.; Liu, J.; Zhang, C. FGSD: A dataset for fine-grained ship detection in high resolution satellite images. arXiv 2020, arXiv:2003.06832.

30. Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

31. Azimi, S.M.; Bahmanyar, R.; Henry, C.; Kurz, F. EAGLE: Large-scale vehicle detection dataset in real-world scenarios using aerial imagery. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 6920–6927.

32. Al-Emadi, N.; Weber, I.; Yang, Y.; Ofli, F. VME: A Satellite Imagery Dataset and Benchmark for Detecting Vehicles in the Middle East and Beyond. Sci. Data 2025, 12, 500.

33. Higuchi, A. Toward more integrated utilizations of geostationary satellite data for disaster management and risk mitigation. Remote Sens. 2021, 13, 1553.

34. Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739.

35. Zhang, Y.; Yuan, Y.; Feng, Y.; Lu, X. Hierarchical and Robust Convolutional Neural Network for Very High-Resolution Remote Sensing Object Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5535–5548.

36. Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate Object Localization in Remote Sensing Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

37. Jocher, G.; Qiu, J. Ultralytics YOLO11, Version 11.0.0; Ultralytics: 2024. Available online: https://github.com/ultralytics/ultralytics (accessed on 17 November 2025).

38. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

39. Gupta, A.; Dollar, P.; Girshick, R. LVIS: A Dataset for Large Vocabulary Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

40. Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3520–3529.

41. Zhou, Y.; Yang, X.; Zhang, G.; Wang, J.; Liu, Y.; Hou, L.; Jiang, X.; Liu, X.; Yan, J.; Lyu, C.; et al. MMRotate: A rotated object detection benchmark using PyTorch. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 7331–7334.

42. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

43. Lee, D.H.; Choi, K. Small Ship Detection for Marine Search and Rescue: Construction of High-Resolution Training Data and Performance Evaluation Using Microsatellite Imagery. Korean J. Remote Sens. 2024, 40, 943–955.

44. Kim, H.O.; Ha, J.S.; Jeong, S.; Kim, Y.; Park, S.; Oh, H. High Resolution Land Application Approach Using Microsatellite Constellation (NEONSAT) in Korea. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 7168–7170.

45. Oh, H.; Shin, D.B.; Chung, D.W. KOMPSAT-3/3A Image-text Dataset for Training Large Multimodal Models. GEO DATA 2025, 7, 27–35.

46. Xie, X.; Cheng, G.; Li, W.; Lang, C.; Zhang, P.; Yao, Y.; Han, J. Learning Discriminative Representation for Fine-Grained Object Detection in Remote Sensing Images. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 8197–8208.

47. Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-Balanced Loss Based on Effective Number of Samples. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9260–9269.

| Dataset | Source | Instances a | Images | Image Width (px) | Categories | Annotation | Format | Fine-Grained b |
|---|---|---|---|---|---|---|---|---|
| NWPU VHR-10 [30] | Google Earth | 3775 | 800 | ∼1000 | 10 | HBB | JPG | N |
| VEDAI [27] | Google Earth | 3640 | 1210 | 512, 1024 | 9 | OBB | PNG | Y |
| UCAS-AOD [34] | Google Earth | 6029 | 910 | ∼1000 | 2 | OBB | PNG | N |
| HRSC2016 [24] | Google Earth | 2976 | 1070 | ∼1100 | 1 | OBB | BMP | N |
| DOTA [9] | Google Earth, Satellite JL-1, GF-2 | 188,282 | 2806 | 800–4000 | 15 | OBB + HBB | PNG | N |
| HRRSD [35] | Google Earth, Baidu Map | 55,740 | 21,761 | 152–10,569 | 13 | HBB | JPG | N |
| RSOD [36] | Google Earth, Tianditu | 6950 | 976 | ∼1000 | 4 | HBB | JPG | N |
| xView [4] | WorldView-3 | 1 M | 1127 | 2000–4000 | 60 | HBB | PNG | Y |
| DIOR [3] | Google Earth | 192,472 | 23,463 | 800 | 20 | HBB | JPG | N |
| FGSD [29] | Google Earth | 5634 | 2612 | 930 | 43 | OBB | JPG | Y |
| FAIR1M [10] | Gaofen, Google Earth | 1.02 M | 42,796 | 600–10,000 | 37 | OBB | TIFF | Y |
| KFGOD (Ours) | KOMPSAT-3, KOMPSAT-3A | 882,399 | 4003 | 1024 | 33 | OBB + HBB | PNG | Y |

| Characteristic | KOMPSAT-3 | KOMPSAT-3A |
|---|---|---|
| Sensor | Optical | Optical |
| Orbital Altitude | 685 km | 528 km |
| Spatial Resolution | Pan: 70 cm, MS: 4 m | Pan: 55 cm, MS: 3.2 m |
| Band Configuration | Panchromatic, Blue, Green, Red, NIR | Panchromatic, Blue, Green, Red, NIR, IR |
| Image Size | 24,000 × 24,000 (px) | 24,000 × 24,000 (px) |
| Orbit Type | Sun-Synchronous | Sun-Synchronous |

| Continent | KOMPSAT-3 | KOMPSAT-3A | Total Patches |
|---|---|---|---|
| Asia | 237 | 1360 | 1597 |
| Africa | 48 | 222 | 270 |
| North America | 57 | 367 | 424 |
| South America | 136 | 204 | 340 |
| Europe | 171 | 689 | 860 |
| Australia | 87 | 431 | 518 |
| Total | 733 | 3270 | 4003 |

| Category | Class | Abbr. | Class | Abbr. |
|---|---|---|---|---|
| Ship | motorboat | MB | sailboat | SB |
| | tugboat | TB | barge | BG |
| | fishing boat | FB | ferry | FR |
| | container ship | CS | oil tanker | OT |
| | drill ship | DS | warship | WS |
| Aircraft | fighter aircraft | FA | large military aircraft | LM |
| | small civilian aircraft | SC | large civilian aircraft | LC |
| | helicopter | HC | | |
| Vehicle | small vehicle | SV | truck | TR |
| | bus | BS | train | TN |
| Container | container | CT | container group | CG |
| Infrastructure | crane | CR | bridge | BR |
| | dam | DM | storage tank | ST |
| | sports field | SF | stadium | SD |
| | swimming pool | SP | roundabout | RA |
| | helipad | HP | wind turbine | WT |
| | aquaculture farm | AF | marine research station | MR |

| Class | MB | SB | TB | BG | FB | FR | CS | OT | DS | WS | FA | LM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Train | 31,469 | 5536 | 409 | 1218 | 4231 | 1678 | 768 | 195 | 55 | 320 | 827 | 325 |
| Val | 3105 | 967 | 70 | 198 | 538 | 195 | 184 | 17 | 17 | 66 | 95 | 17 |
| Test | 5296 | 594 | 104 | 239 | 625 | 209 | 109 | 40 | 17 | 23 | 198 | 16 |
| Total | 39,870 | 7097 | 583 | 1655 | 5394 | 2082 | 1061 | 252 | 89 | 409 | 1120 | 358 |

| Class | SC | LC | HC | SV | TR | BS | TN | CT | CG | CR | BR | DM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Train | 820 | 1265 | 605 | 501,394 | 42,776 | 11,133 | 17,332 | 24,005 | 18,362 | 1754 | 497 | 262 |
| Val | 80 | 170 | 81 | 70,055 | 6153 | 1356 | 3712 | 3781 | 3292 | 283 | 79 | 47 |
| Test | 159 | 258 | 197 | 69,617 | 6441 | 1200 | 1950 | 4481 | 3294 | 296 | 83 | 26 |
| Total | 1059 | 1693 | 883 | 641,066 | 55,370 | 13,689 | 22,994 | 32,267 | 24,948 | 2333 | 659 | 335 |

| Class | ST | SF | SD | SP | RA | HP | WT | AF | MR | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Train | 5486 | 2049 | 118 | 7982 | 842 | 989 | 181 | 1618 | 11 | 686,512 |
| Val | 1041 | 325 | 20 | 1269 | 146 | 114 | 22 | 144 | 2 | 97,641 |
| Test | 606 | 370 | 20 | 1100 | 155 | 165 | 16 | 357 | 3 | 98,264 |
| Total | 7133 | 2744 | 158 | 10,351 | 1143 | 1268 | 219 | 2119 | 16 | 882,399 |
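
The long-tail shape of the per-class totals above can be quantified with a short calculation. The sketch below copies the "Total" row for each class and reports the head-to-tail instance ratio and the share held by the most frequent class. The share is computed against the sum of the per-class values entered here, and the variable names are illustrative only.

```python
# Per-class instance totals copied from the "Total" rows of the table above.
class_totals = {
    "MB": 39870, "SB": 7097, "TB": 583, "BG": 1655, "FB": 5394, "FR": 2082,
    "CS": 1061, "OT": 252, "DS": 89, "WS": 409, "FA": 1120, "LM": 358,
    "SC": 1059, "LC": 1693, "HC": 883, "SV": 641066, "TR": 55370, "BS": 13689,
    "TN": 22994, "CT": 32267, "CG": 24948, "CR": 2333, "BR": 659, "DM": 335,
    "ST": 7133, "SF": 2744, "SD": 158, "SP": 10351, "RA": 1143, "HP": 1268,
    "WT": 219, "AF": 2119, "MR": 16,
}

# Most and least frequent classes by instance count.
head_class = max(class_totals, key=class_totals.get)
tail_class = min(class_totals, key=class_totals.get)

# Imbalance ratio: instances of the head class per instance of the tail class.
imbalance_ratio = class_totals[head_class] / class_totals[tail_class]

# Fraction of all listed instances that belong to the head class.
head_share = class_totals[head_class] / sum(class_totals.values())

print(f"head={head_class}, tail={tail_class}")
print(f"imbalance ratio ~ {imbalance_ratio:,.0f}:1")
print(f"head-class share ~ {head_share:.1%}")
```

An imbalance of this magnitude is the kind of setting that re-weighting schemes such as the class-balanced loss [47] are designed for.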

| Group | Abbr. (Class Name) | # Instances | # Images |
|---|---|---|---|
| Rare | MR (marine research station) | 11 | 9 |
| Common | DS (drill ship) | 55 | 30 |
| | AF (aquaculture farm) | 1618 | 41 |
| | LM (large military aircraft) | 325 | 50 |
| | WS (warship) | 320 | 59 |
| | FA (fighter aircraft) | 827 | 89 |
| | HC (helicopter) | 605 | 92 |
| Frequent | SD (stadium) | 118 | 101 |
| | SC (small civilian aircraft) | 820 | 102 |
| | WT (wind turbine) | 181 | 103 |
| | OT (oil tanker) | 195 | 108 |
| | SB (sailboat) | 5536 | 110 |
| | TB (tugboat) | 409 | 140 |
| | FB (fishing boat) | 4231 | 158 |
| | BG (barge) | 1218 | 200 |
| | CS (container ship) | 768 | 228 |
| | LC (large civilian aircraft) | 1265 | 235 |
| | DM (dam) | 262 | 244 |
| | TN (train) | 17,332 | 246 |
| | FR (ferry) | 1678 | 250 |
| | BR (bridge) | 497 | 297 |
| | CR (crane) | 1754 | 300 |
| | HP (helipad) | 989 | 354 |
| | ST (storage tank) | 5486 | 373 |
| | SF (sports field) | 2049 | 418 |
| | RA (roundabout) | 842 | 542 |
| | MB (motorboat) | 31,469 | 565 |
| | SP (swimming pool) | 7982 | 567 |
| | CG (container group) | 18,362 | 571 |
| | CT (container) | 24,005 | 850 |
| | BS (bus) | 11,133 | 1127 |
| | TR (truck) | 42,776 | 2228 |
| | SV (small vehicle) | 501,394 | 2739 |
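
The grouping in the table above is consistent with an LVIS-style split [39] on the number of images containing each class. The sketch below assumes thresholds of at most 10 images for Rare and at most 100 for Common, with everything else Frequent. These cut-offs are an assumption inferred from the table rows (MR with 9 images is Rare, HC with 92 is Common, SD with 101 is Frequent) and are not stated explicitly in this section; the function name is hypothetical.

```python
def frequency_group(num_images: int, rare_max: int = 10, common_max: int = 100) -> str:
    """Assign a class to Rare / Common / Frequent by its image count.

    The default thresholds follow the LVIS convention and are an assumption.
    """
    if num_images <= rare_max:
        return "Rare"
    if num_images <= common_max:
        return "Common"
    return "Frequent"


# A few image counts copied from the table above.
image_counts = {"MR": 9, "DS": 30, "HC": 92, "SD": 101, "SV": 2739}
groups = {abbr: frequency_group(n) for abbr, n in image_counts.items()}
print(groups)
```

Grouping by image count instead of instance count explains why AF (1618 instances in 41 images) falls under Common while SD (118 instances in 101 images) is Frequent.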

| Model | Backbone | Optimizer | Learning Rate | Total Batch Size |
|---|---|---|---|---|
| RoI Transformer | ResNet-101 | SGD | 0.005 | 16 |
| | Swin-S | AdamW | 0.0001 | 16 |
| | HiViT | AdamW | 0.0001 | 8 |
| | LSKNet | AdamW | 0.0001 | 8 |
| Oriented R-CNN | ResNet-101 | SGD | 0.005 | 16 |
| | Swin-S | AdamW | 0.0001 | 16 |
| | HiViT | AdamW | 0.0001 | 8 |
| | LSKNet | AdamW | 0.0001 | 8 |
| YOLOv11 | — | AdamW | 0.00027 | 16 |

| Model | Backbone | mAP |
|---|---|---|
| RoI Transformer | ResNet101 | 0.467 |
| | Swin Transformer Small | 0.505 |
| | HiViT-B | 0.533 |
| | LSKNet-S | 0.522 |
| Oriented R-CNN | ResNet101 | 0.507 |
| | Swin Transformer Small | 0.536 |
| | HiViT-B | 0.544 |
| | LSKNet-S | 0.509 |
| YOLOv11 | nano | 0.482 |
| | small | 0.554 |
| | medium | 0.602 |
| | large | 0.622 |
| | x-large | 0.639 |

| Coarse Category | Abbr. | RoI Transformer (R101) | RoI Transformer (Swin-S) | RoI Transformer (HiViT) | RoI Transformer (LSKNet) | Oriented R-CNN (R101) | Oriented R-CNN (Swin-S) | Oriented R-CNN (HiViT) | Oriented R-CNN (LSKNet) | YOLOv11 (Nano) | YOLOv11 (Small) | YOLOv11 (Medium) | YOLOv11 (Large) | YOLOv11 (x-Large) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ship | MB | 58.9 | 58.8 | 67.7 | 67.4 | 57.9 | 62.6 | 68.6 | 64.5 | 64.2 | 66.2 | 72.4 | 71.2 | 72.4 |
| | SB | 46.4 | 62.3 | 55.3 | 55.2 | 52.2 | 58.7 | 67.4 | 56.1 | 33.8 | 24.8 | 42.2 | 43.6 | 53.1 |
| | TB | 51.6 | 46.2 | 49.5 | 43.0 | 51.1 | 44.5 | 54.6 | 38.5 | 8.6 | 25.5 | 52.9 | 43.8 | 43.3 |
| | BG | 47.5 | 53.5 | 62.3 | 60.9 | 58.1 | 56.6 | 60.0 | 56.0 | 36.2 | 55.7 | 55.3 | 62.6 | 63.3 |
| | FB | 43.8 | 54.6 | 59.9 | 56.3 | 49.4 | 60.5 | 62.2 | 53.6 | 46.9 | 56.4 | 58.2 | 67.0 | 64.2 |
| | FR | 17.9 | 29.0 | 36.1 | 36.6 | 30.7 | 30.9 | 37.1 | 39.8 | 25.2 | 31.3 | 21.2 | 25.6 | 27.8 |
| | CS | 34.4 | 45.6 | 44.0 | 54.5 | 45.9 | 51.0 | 46.7 | 54.4 | 47.7 | 57.8 | 60.2 | 60.3 | 64.8 |
| | OT | 44.3 | 51.4 | 54.0 | 66.9 | 58.1 | 60.0 | 61.1 | 66.6 | 24.5 | 26.5 | 41.7 | 34.7 | 45.5 |
| | DS | 40.9 | 39.3 | 40.9 | 50.0 | 33.4 | 62.4 | 39.9 | 46.2 | 39.1 | 60.8 | 61.7 | 55.2 | 61.0 |
| | WS | 45.3 | 56.5 | 74.1 | 57.7 | 48.7 | 66.2 | 63.5 | 55.7 | 24.2 | 48.7 | 54.4 | 63.7 | 72.4 |
| Aircraft | FA | 65.7 | 65.5 | 75.2 | 65.0 | 70.8 | 63.5 | 74.9 | 57.0 | 51.8 | 71.5 | 73.1 | 76.1 | 77.0 |
| | LM | 23.7 | 18.4 | 20.6 | 23.5 | 20.7 | 25.2 | 18.6 | 11.5 | 28.7 | 36.0 | 30.7 | 43.1 | 35.5 |
| | SC | 55.1 | 50.4 | 55.9 | 55.0 | 61.0 | 58.1 | 56.7 | 54.8 | 63.5 | 65.8 | 61.8 | 68.4 | 67.9 |
| | LC | 84.6 | 81.8 | 82.7 | 85.1 | 85.6 | 84.0 | 81.6 | 87.0 | 92.3 | 92.0 | 89.8 | 90.9 | 85.4 |
| | HC | 47.6 | 48.9 | 55.1 | 48.8 | 55.6 | 61.0 | 61.9 | 50.3 | 72.7 | 79.5 | 84.3 | 89.7 | 92.0 |
| Vehicle | SV | 16.0 | 22.6 | 22.0 | 22.2 | 15.5 | 20.4 | 14.3 | 21.7 | 53.7 | 60.9 | 66.3 | 65.9 | 69.0 |
| | TR | 36.2 | 40.1 | 39.6 | 42.4 | 38.7 | 39.7 | 39.7 | 41.1 | 24.3 | 35.2 | 41.0 | 44.1 | 47.0 |
| | BS | 47.5 | 52.2 | 61.4 | 60.2 | 50.7 | 58.6 | 64.4 | 61.1 | 40.0 | 49.3 | 61.1 | 69.1 | 69.6 |
| | TN | 37.0 | 44.6 | 45.9 | 44.9 | 44.6 | 46.7 | 46.2 | 46.2 | 52.4 | 69.6 | 73.9 | 73.7 | 75.4 |
| Container | CT | 25.2 | 26.9 | 31.7 | 28.2 | 27.3 | 27.6 | 33.9 | 27.5 | 18.5 | 25.4 | 31.1 | 35.0 | 35.3 |
| | CG | 40.4 | 42.3 | 44.2 | 38.7 | 43.8 | 42.9 | 42.8 | 38.5 | 48.1 | 54.3 | 51.5 | 58.2 | 57.5 |
| Infrastructure | CR | 23.2 | 36.5 | 34.9 | 31.8 | 23.0 | 29.9 | 32.7 | 30.6 | 21.9 | 34.0 | 41.7 | 41.6 | 51.7 |
| | BR | 39.3 | 42.9 | 40.1 | 34.0 | 49.7 | 42.4 | 46.8 | 40.5 | 60.3 | 61.1 | 65.0 | 66.1 | 66.0 |
| | DM | 30.2 | 33.6 | 37.3 | 30.4 | 39.9 | 32.6 | 43.2 | 33.2 | 43.8 | 45.3 | 43.7 | 46.8 | 46.7 |
| | ST | 71.2 | 71.4 | 71.6 | 71.4 | 71.1 | 70.7 | 71.4 | 70.8 | 79.0 | 83.8 | 87.8 | 87.6 | 87.4 |
| | SF | 57.8 | 57.5 | 54.8 | 54.3 | 60.6 | 61.7 | 55.4 | 53.1 | 55.2 | 65.6 | 71.3 | 74.6 | 73.5 |
| | SD | 69.0 | 70.1 | 73.2 | 84.5 | 81.2 | 79.7 | 79.9 | 82.5 | 78.0 | 72.9 | 81.7 | 76.9 | 80.4 |
| | SP | 63.2 | 63.5 | 63.7 | 68.7 | 62.1 | 64.4 | 64.8 | 63.4 | 64.4 | 73.5 | 77.5 | 78.8 | 81.6 |
| | RA | 90.3 | 90.2 | 89.9 | 89.0 | 90.1 | 87.8 | 89.7 | 90.3 | 92.2 | 97.0 | 96.5 | 97.9 | 97.2 |
| | HP | 60.6 | 57.7 | 62.8 | 62.1 | 61.4 | 65.8 | 63.9 | 55.5 | 51.0 | 59.3 | 68.0 | 72.7 | 77.4 |
| | WT | 54.5 | 71.7 | 72.7 | 54.5 | 54.5 | 70.7 | 70.2 | 49.4 | 65.4 | 55.8 | 70.5 | 78.1 | 76.3 |
| | AF | 72.0 | 79.8 | 81.4 | 80.5 | 80.9 | 80.4 | 81.3 | 81.1 | 82.7 | 86.6 | 89.8 | 87.1 | 89.5 |
| | MR | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.7 | 0.0 | 7.7 | 3.6 | 1.2 |
| mAP | | 46.7 | 50.5 | 53.3 | 52.2 | 50.7 | 53.6 | 54.4 | 50.9 | 48.2 | 55.4 | 60.2 | 62.2 | 63.9 |

| Class | MB | SB | TB | BG | FB | FR | CS | OT | DS | WS | FA | LM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AP | 65.9 | 49.8 | 44.4 | 63.0 | 56.0 | 41.2 | 53.5 | 47.8 | 31.4 | 52.4 | 74.7 | 43.7 |

| Class | SC | LC | HC | SV | TR | BS | TN | CT | CG | CR | BR | DM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AP | 63.6 | 90.0 | 73.3 | 67.4 | 48.6 | 57.1 | 81.5 | 39.5 | 41.8 | 30.7 | 37.9 | 38.1 |

| Class | ST | SF | SD | SP | RA | HP | WT | AF | MR | mAP |
|---|---|---|---|---|---|---|---|---|---|---|
| AP | 78.7 | 63.7 | 68.8 | 63.5 | 68.1 | 61.2 | 73.9 | 82.4 | 2.1 | 56.2 |
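
The mAP reported in the last table is the unweighted (macro) mean of the 33 per-class AP values, so every class counts equally regardless of how many instances it has. A minimal sketch that recomputes it from the table rows (the variable names are illustrative):

```python
# Per-class AP (%) copied from the table above, in table order.
per_class_ap = {
    "MB": 65.9, "SB": 49.8, "TB": 44.4, "BG": 63.0, "FB": 56.0, "FR": 41.2,
    "CS": 53.5, "OT": 47.8, "DS": 31.4, "WS": 52.4, "FA": 74.7, "LM": 43.7,
    "SC": 63.6, "LC": 90.0, "HC": 73.3, "SV": 67.4, "TR": 48.6, "BS": 57.1,
    "TN": 81.5, "CT": 39.5, "CG": 41.8, "CR": 30.7, "BR": 37.9, "DM": 38.1,
    "ST": 78.7, "SF": 63.7, "SD": 68.8, "SP": 63.5, "RA": 68.1, "HP": 61.2,
    "WT": 73.9, "AF": 82.4, "MR": 2.1,
}

# Macro average: each class contributes equally to the mean.
mean_ap = sum(per_class_ap.values()) / len(per_class_ap)
print(f"mAP = {mean_ap:.1f}")  # matches the 56.2 in the table
```

Because the average is unweighted, a near-zero AP on a rare class such as MR lowers the mAP by as much as a comparable loss on a frequent class would.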

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, D.H.; Hong, J.H.; Seo, H.W.; Oh, H. KFGOD: A Fine-Grained Object Detection Dataset in KOMPSAT Satellite Imagery. Remote Sens. 2025, 17, 3774. https://doi.org/10.3390/rs17223774
