4.2.1. Model Performance Analysis

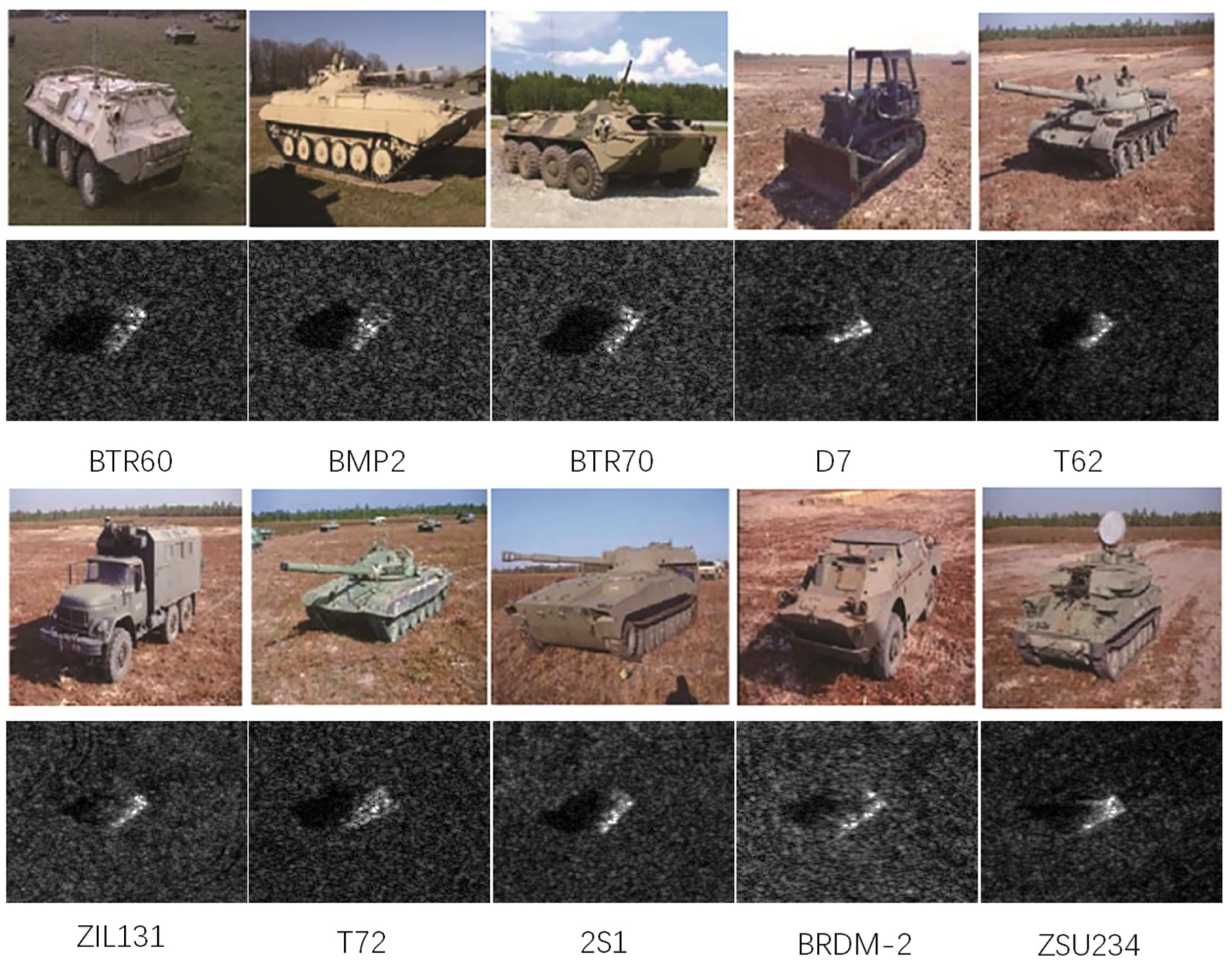

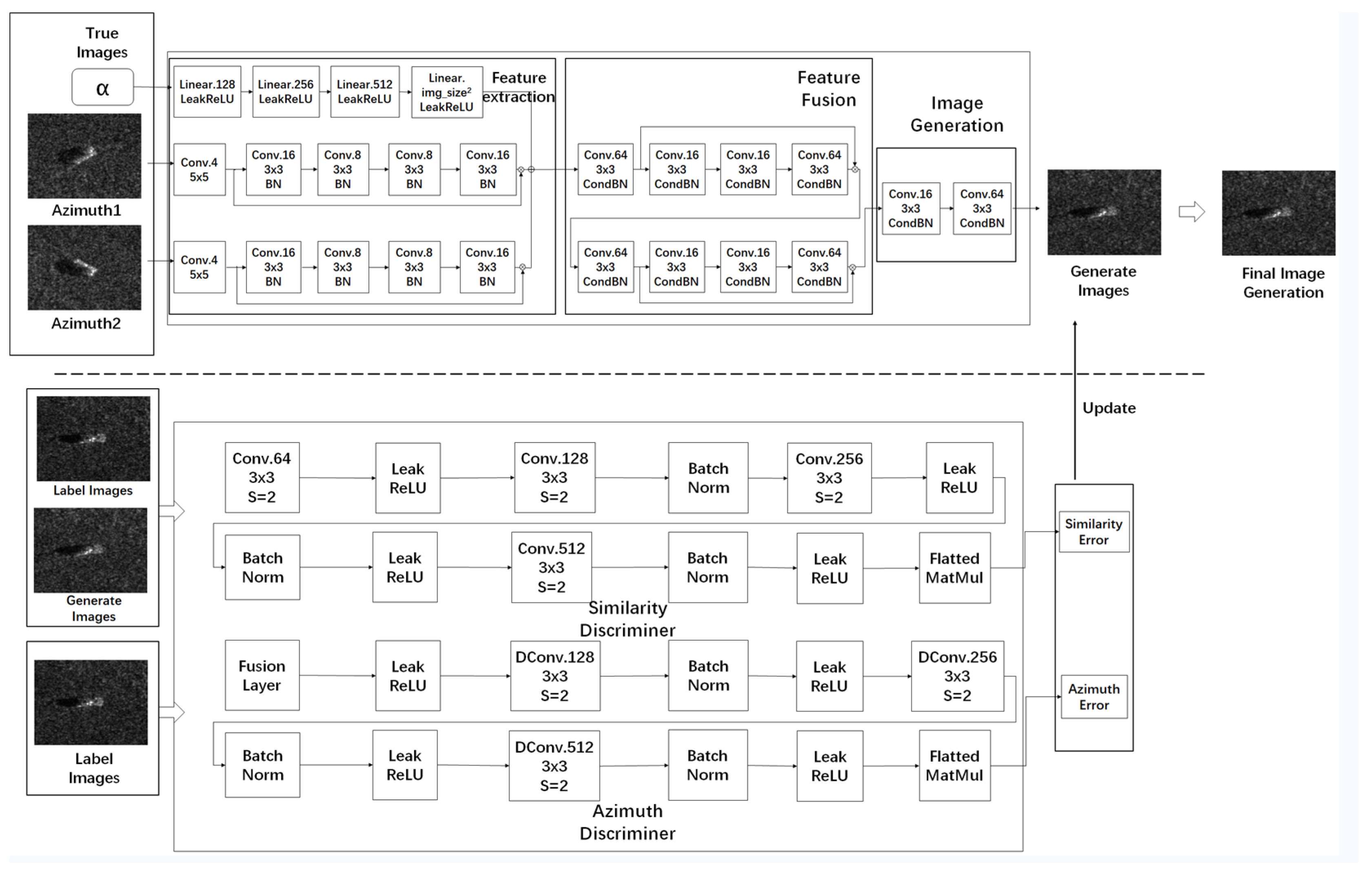

To demonstrate the SAR image generation performance of the proposed algorithm,

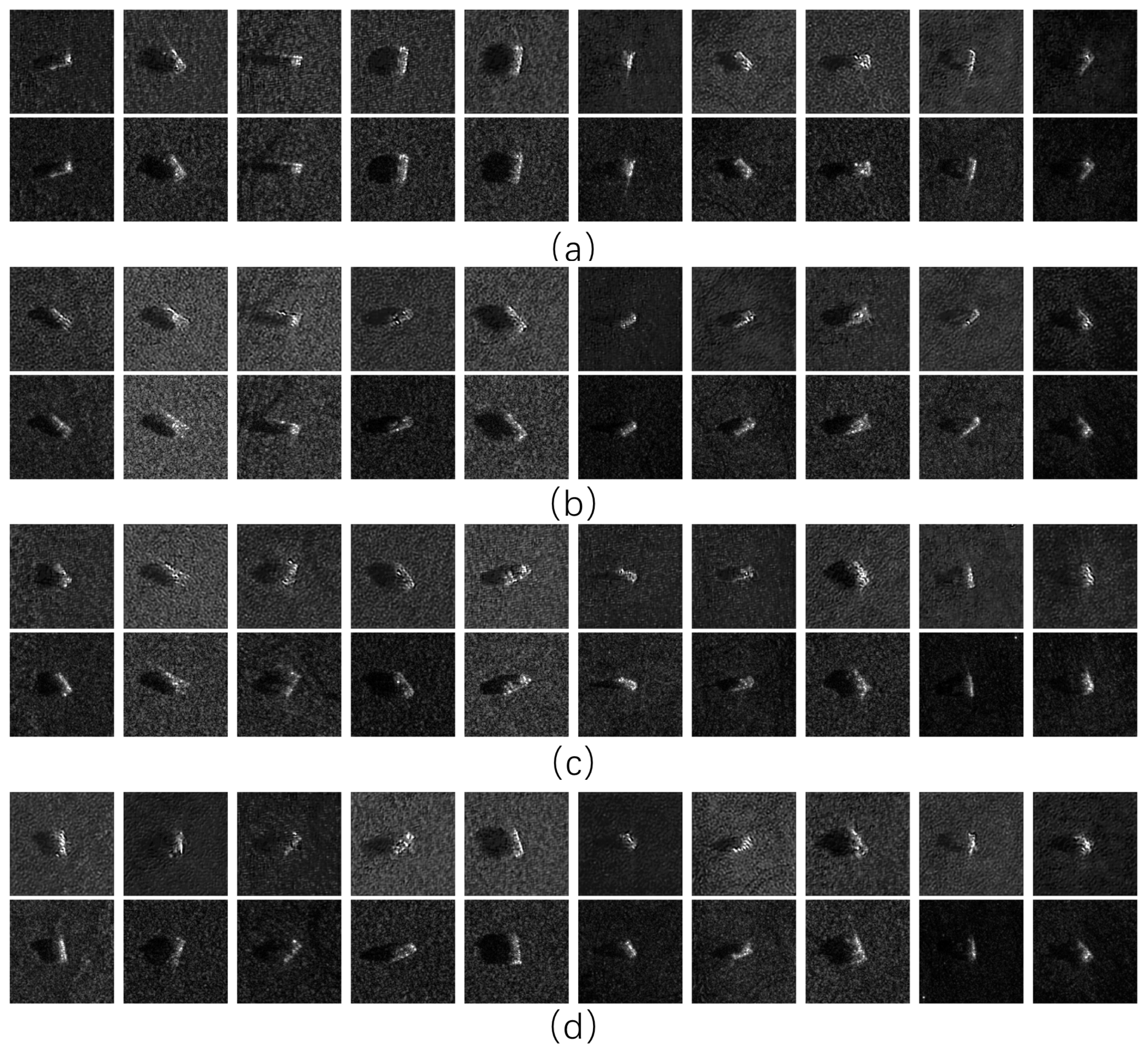

Figure 3 presents a comparison between generated and real synthetic aperture radar images. The generative model was trained on MSTAR dataset with 17° depression angle and maximum azimuth angle separation of 50° between input image pairs. Subsequently, the model was applied to input image pairs at a 15° depression angle with four different azimuth separations (5°, 10°, 20°, 50°), using α = 0.5.
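The training constraint above limits which image pairs the model sees. The source does not describe how pairs were sampled. The sketch below shows one plausible filter, assuming that pairs come from images of the same target and that azimuth wraps at 360°. The function names are hypothetical.

```python
import itertools

def azimuth_gap(a_deg: float, b_deg: float) -> float:
    """Smallest absolute angular difference between two azimuths, in degrees (0 to 180)."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)

def make_training_pairs(azimuths, max_gap_deg=50.0):
    """Return index pairs (i, j), i < j, whose wrapped azimuth gap is at most max_gap_deg."""
    return [
        (i, j)
        for i, j in itertools.combinations(range(len(azimuths)), 2)
        if azimuth_gap(azimuths[i], azimuths[j]) <= max_gap_deg
    ]

# Hypothetical azimuths (degrees) for chips of one target class.
azimuths = [0.0, 30.0, 60.0, 340.0, 180.0]
pairs = make_training_pairs(azimuths)  # -> [(0, 1), (0, 3), (1, 2), (1, 3)]
```

The wrap-around matters. Without it, the 0° and 340° chips would look 340° apart instead of 20°, and valid pairs near north would be discarded.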

Visual inspection shows that when the azimuth interval between input image pairs is small (below 20°), the generated images accurately preserve key features such as vehicle scattering centers, azimuthal orientation, background structures, and shadow patterns. At an interval of 20°, the generated images keep the correct azimuthal orientation but begin to show positional deviations of the scattering centers relative to the ground truth. At 50°, notable discrepancies appear in azimuthal orientation, geometric features, and background details. These comparisons indicate that the perceptual quality of ACC-GAN outputs decreases as the azimuth interval of the input pairs increases.

Table 4 reports quantitative results for the generated full images and center images when the model is applied at a 15° depression angle. Generation performance is stable when the azimuth separation between input pairs is small and degrades gradually as the interval increases. Specifically, for azimuth intervals of 5–10°, the generated images are highly similar to the real SAR targets. They reach SSIM values of 0.66–0.69 and MS-SSIM above 0.90, with low MSE (0.0055–0.0059) and small azimuth estimation errors (1.8°–2.4°). These results indicate that the generated images preserve both local and global scattering characteristics.
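Two of the Table 4 metrics are simple enough to state directly. The sketch below shows MSE and a wrapped azimuth error, assuming that pixel intensities are scaled to [0, 1] and that some azimuth estimator (not described in this section) has already produced angle estimates for the generated images. SSIM is available in libraries such as scikit-image (`skimage.metrics.structural_similarity`). The helper names here are hypothetical.

```python
import numpy as np

def mse(generated, reference) -> float:
    """Mean squared error between two images assumed to be scaled to [0, 1]."""
    g = np.asarray(generated, dtype=np.float64)
    r = np.asarray(reference, dtype=np.float64)
    return float(np.mean((g - r) ** 2))

def azimuth_error(estimated_deg, true_deg) -> float:
    """Mean absolute azimuth error in degrees, wrapped so 359 deg vs. 1 deg counts as 2 deg."""
    diff = np.abs(np.asarray(estimated_deg, dtype=np.float64)
                  - np.asarray(true_deg, dtype=np.float64)) % 360.0
    return float(np.mean(np.minimum(diff, 360.0 - diff)))

# Hypothetical usage: azimuths estimated from generated images vs. ground truth.
err = azimuth_error([359.0, 12.0], [1.0, 10.0])  # -> 2.0
```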

When the azimuth interval expands to 20°, structural similarity drops moderately (SSIM = 0.63, MS-SSIM = 0.88). Generation quality nonetheless remains acceptable: geometric consistency is preserved and the angular deviation stays limited (angle error = 3.2°). Performance deteriorates notably at 50°. There, the azimuth error rises to approximately 5.2°, the similarity metrics decline clearly (SSIM < 0.60, MS-SSIM = 0.84), and FID increases to 13.7. This indicates that accurate scattering structures are harder to reconstruct under large viewpoint differences.

The center-image results also generally outperform their full-image counterparts in SSIM (up to 0.71 vs. 0.69 at 5°) and LPIPS (0.0267 vs. 0.0621, where lower is better). FID, however, is slightly higher for center images at larger azimuth intervals. Restricting the evaluation to the central region excludes most peripheral distortions. The resulting scores suggest that the model represents the target itself more stably than the surrounding background.
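This section does not define the "center image" region. The sketch below assumes a fixed square crop around the image center, applied to both the generated and the reference chip before computing a metric. The 128 × 128 chip size and 64 × 64 crop size are hypothetical values chosen for illustration.

```python
import numpy as np

def center_crop(image: np.ndarray, size: int) -> np.ndarray:
    """Return the central size x size patch of an image whose first two axes are (H, W)."""
    h, w = image.shape[:2]
    if size > h or size > w:
        raise ValueError("crop size exceeds image dimensions")
    top = (h - size) // 2
    left = (w - size) // 2
    return image[top:top + size, left:left + size]

# Hypothetical usage: compare only the central 64 x 64 region of 128 x 128 chips.
generated = np.random.rand(128, 128)
reference = np.random.rand(128, 128)
center_mse = float(np.mean((center_crop(generated, 64) - center_crop(reference, 64)) ** 2))
```

Because the crop discards the border, background clutter and edge artifacts no longer contribute to the score. This is consistent with the better center-image SSIM and LPIPS values reported above.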

Overall, these findings show that ACC-GAN can reliably synthesize high-fidelity SAR images and maintain effective azimuth control when the angular separation between input views is below 20°, preserving both perceptual similarity and structural coherence in that regime.

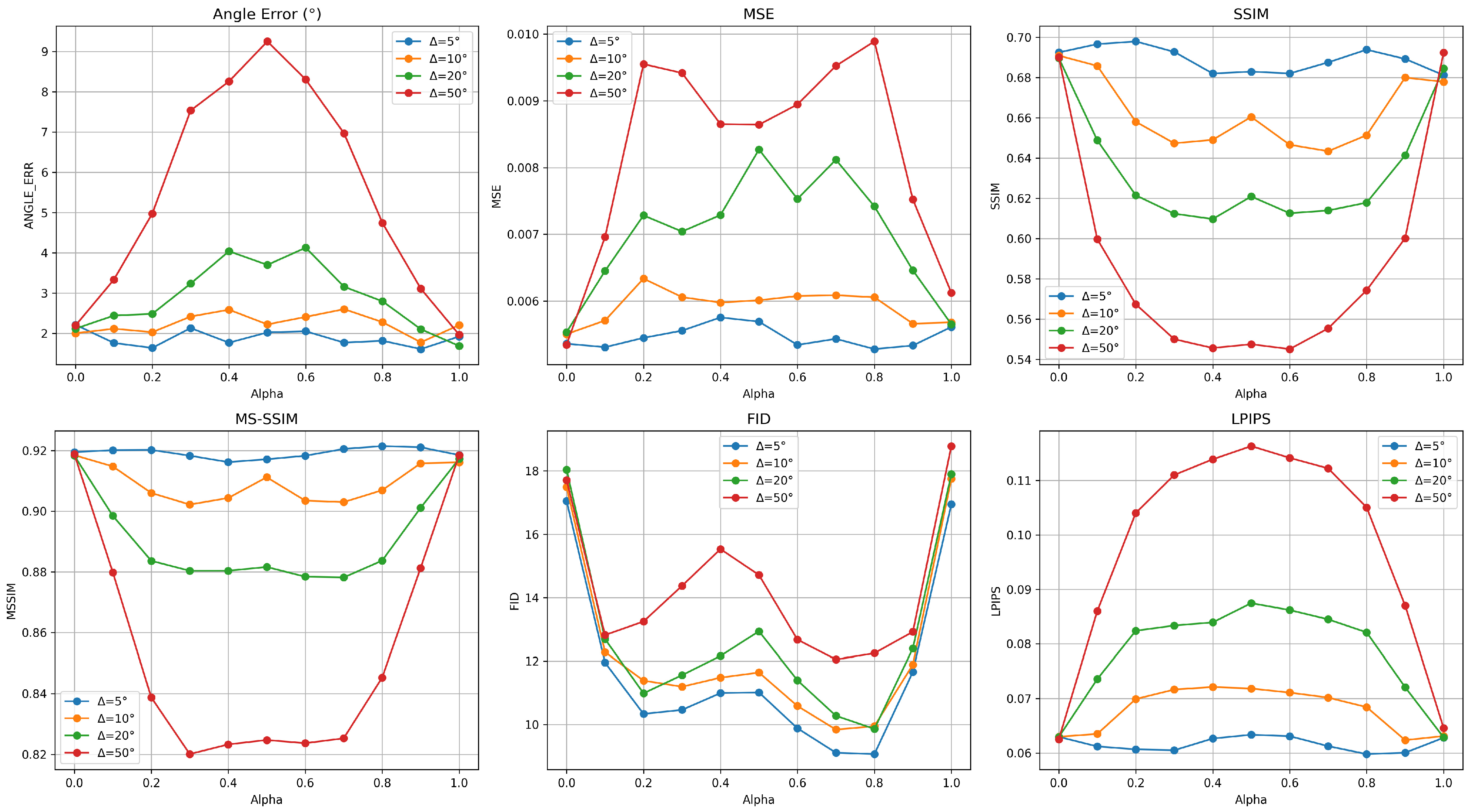

Figure 4 illustrates how α regulates feature fusion across different azimuth intervals, evaluated on full images. When α approaches 0 or 1, the generated image is dominated by features from the input whose azimuth is closest to the target, and all quality metrics reach their best values. Angle error, MSE, and perceptual distortion (FID, LPIPS) are lowest, and structural similarity (SSIM, MS-SSIM) is highest. This boundary behavior shows that single-view dominance sidesteps the difficulty of interpolating between views. At α ≈ 0.5, by contrast, both inputs contribute with equal weight and quality degrades the most, because conflicting geometric and appearance features produce structural inconsistencies and perceptual artifacts.

At intermediate α values, fusion quality depends mainly on the feature disparity between the input images. For small azimuth intervals (Δ ≤ 20°), the metrics remain relatively stable across the entire α range, indicating that the features of the two inputs are compatible enough to fuse. For large azimuth intervals (Δ > 20°), however, mid-range α values cause severe degradation. Angle error, MSE, FID, and LPIPS rise sharply, while SSIM and MS-SSIM drop substantially. This suggests that large geometric and appearance disparities exceed the model's generation capability, so the fused features yield images that fall outside the learned feature manifold.
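This section does not specify how α enters the network. One plausible reading is linear interpolation of the encoder features from the two inputs, with the target azimuth placed at the same fraction along the arc between them. The following is a hypothetical sketch of that reading, not ACC-GAN's actual implementation. The feature shape and function names are assumptions.

```python
import numpy as np

def fuse_features(feat_a: np.ndarray, feat_b: np.ndarray, alpha: float) -> np.ndarray:
    """Hypothetical linear fusion: alpha = 0 keeps input A only, alpha = 1 keeps input B only."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * feat_a + alpha * feat_b

def target_azimuth(theta_a: float, theta_b: float, alpha: float) -> float:
    """Azimuth a fraction alpha along the shorter arc from theta_a to theta_b, in [0, 360)."""
    delta = (theta_b - theta_a + 180.0) % 360.0 - 180.0  # signed shortest difference
    return (theta_a + alpha * delta) % 360.0

# At alpha = 0.5 each input contributes half of the fused features, the regime
# where Figure 4 shows the largest degradation for wide azimuth intervals.
feat_a = np.random.rand(256, 8, 8)  # hypothetical encoder feature maps
feat_b = np.random.rand(256, 8, 8)
fused = fuse_features(feat_a, feat_b, alpha=0.5)
theta = target_azimuth(350.0, 10.0, alpha=0.5)  # -> 0.0
```

Under this reading, α near 0 or 1 reduces the blend to a single input's features, which matches the boundary behavior described above. At α = 0.5, any mismatch between the two feature maps is averaged directly into the result.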

To further validate the cross-depression-angle generalization of ACC-GAN, we conducted an additional 17° → 30° experiment (training at 17°, testing at 30°) alongside the standard 17° → 15° experiment.

As summarized in Table 5, the model maintains comparable generation performance at the two depression angles. MSE and LPIPS differ only marginally, while SSIM and MS-SSIM remain above 0.63 and 0.86, respectively. FID is slightly higher at 30°, indicating a modest distributional shift, but overall reconstruction fidelity does not degrade noticeably. These results suggest that the generator generalizes robustly to moderate variations in depression angle and that the network captures intrinsic scattering representations rather than overfitting to a single imaging geometry.

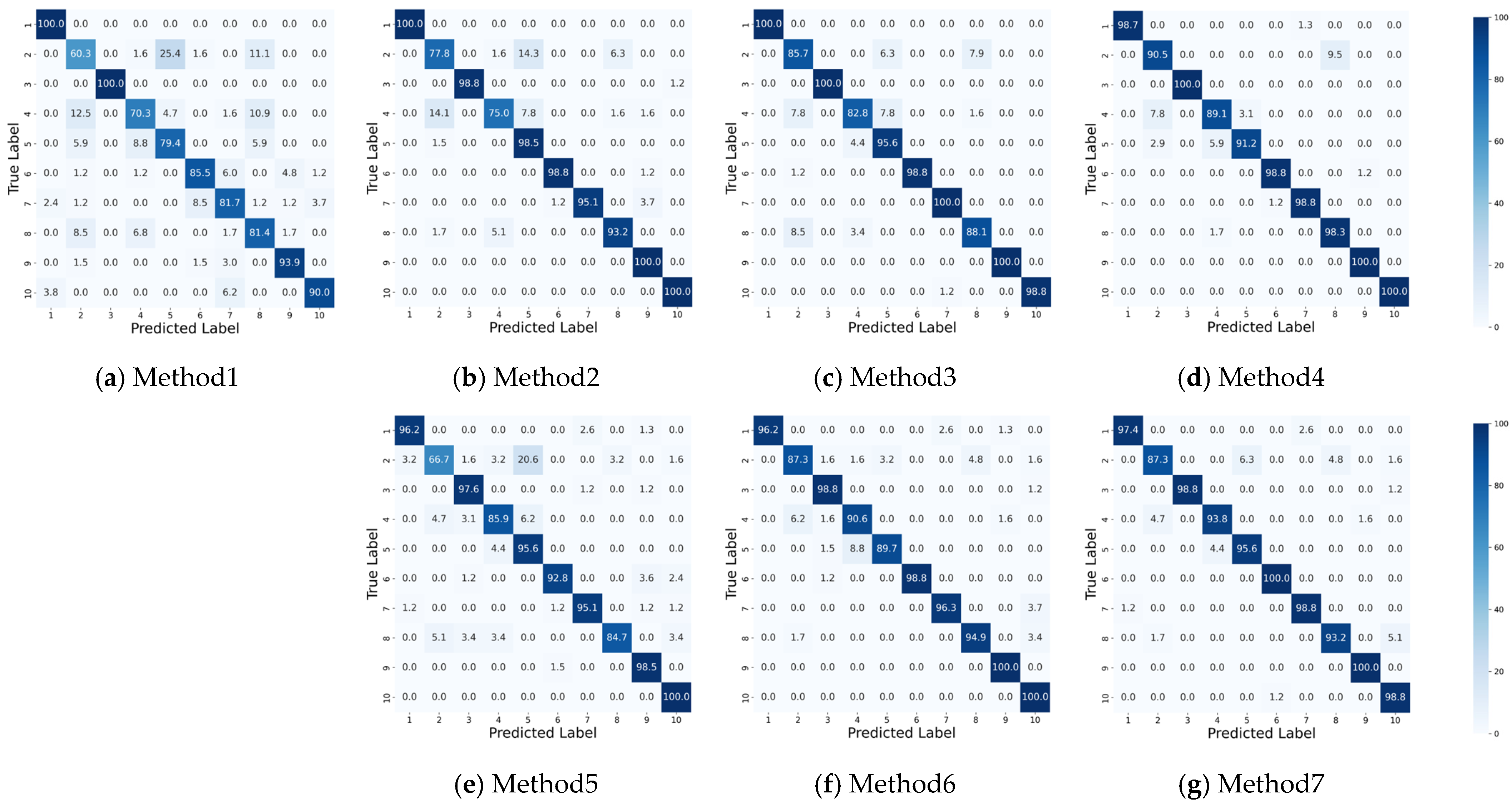

4.2.2. Quantitative Results for Different Generation Methods

To comprehensively evaluate the effectiveness of ACC-GAN, we compare it against two representative SAR image generation methods:

(1) cGAN, a classic conditional generative adversarial network that conditions the generator on auxiliary information.

(2) ACGAN [33], a recent method designed specifically for multi-view SAR image synthesis.

For a fair comparison, all methods were trained on the same MSTAR 17° dataset with identical preprocessing. cGAN was conditioned on azimuth by concatenating angle embeddings with the generator and discriminator inputs, while ACGAN followed its original configuration. ACC-GAN was trained with a maximum azimuth interval of 50°. All models were evaluated on the same MSTAR 15° dataset.
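The cGAN baseline setup above specifies only that angle embeddings are concatenated with the generator and discriminator inputs. The sketch below fills in the unstated details with assumptions. It uses a (sin, cos) angle embedding, appends that embedding to a 1-D latent vector for the generator, and tiles it as constant channels for a single-channel discriminator input. These choices and the function names are hypothetical.

```python
import numpy as np

def angle_embedding(theta_deg: float) -> np.ndarray:
    """Encode an azimuth as (sin, cos) so that 0 deg and 360 deg map to the same vector."""
    t = np.deg2rad(theta_deg)
    return np.array([np.sin(t), np.cos(t)])

def condition_generator_input(noise: np.ndarray, theta_deg: float) -> np.ndarray:
    """Append the angle embedding to a 1-D latent vector: (D,) -> (D + 2,)."""
    return np.concatenate([noise, angle_embedding(theta_deg)])

def condition_discriminator_input(image: np.ndarray, theta_deg: float) -> np.ndarray:
    """Tile the embedding as constant maps and stack them as channels: (H, W) -> (3, H, W)."""
    h, w = image.shape
    emb = angle_embedding(theta_deg)
    maps = np.broadcast_to(emb[:, None, None], (emb.size, h, w))
    return np.concatenate([image[None, :, :], maps], axis=0)

z = condition_generator_input(np.random.randn(100), theta_deg=90.0)          # shape (102,)
d_in = condition_discriminator_input(np.zeros((128, 128)), theta_deg=45.0)   # shape (3, 128, 128)
```

The (sin, cos) form avoids a discontinuity at 0°/360°, which a raw angle value would introduce.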

Table 6 compares ACC-GAN with the conditional GAN baselines, which were trained on MSTAR 17° and evaluated at 15° as described above. ACC-GAN improves substantially and consistently on every evaluation metric. In perceptual quality, it reduces FID by 50.0% (11.63 vs. 23.27) and LPIPS by 33.6% (0.0782 vs. 0.1177) relative to ACGAN, indicating markedly more realistic and visually coherent SAR imagery. The margin over the basic cGAN baseline is even larger: FID is reduced by 75.8% and LPIPS by 65.1%. This underscores the value of stronger conditional control mechanisms for multi-view SAR synthesis.
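The relative changes quoted in this subsection follow from the Table 6 values. The small check below reproduces them. For lower-is-better metrics (FID, LPIPS), the change is measured as a reduction from the baseline. For higher-is-better metrics (MS-SSIM), it is measured as a gain over the baseline. The function name is illustrative only.

```python
def relative_improvement(ours: float, baseline: float, lower_is_better: bool) -> float:
    """Percentage by which `ours` improves on `baseline`; positive means ours is better."""
    if lower_is_better:
        return 100.0 * (baseline - ours) / baseline
    return 100.0 * (ours - baseline) / baseline

# ACC-GAN vs. ACGAN values quoted from Table 6.
print(f"FID:     {relative_improvement(11.63, 23.27, lower_is_better=True):.1f}%")      # 50.0%
print(f"LPIPS:   {relative_improvement(0.0782, 0.1177, lower_is_better=True):.1f}%")    # 33.6%
print(f"MS-SSIM: {relative_improvement(0.8993, 0.7772, lower_is_better=False):.1f}%")  # 15.7%
```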

In structural fidelity, ACC-GAN outperforms both baselines in preserving target geometry across viewpoint changes. The 15.7% improvement in MS-SSIM over ACGAN (0.8993 vs. 0.7772) is particularly noteworthy. This multi-scale metric better captures the complex scattering structures of SAR targets and is less sensitive to speckle noise. The high MS-SSIM confirms that ACC-GAN maintains structural consistency at multiple scales, a critical requirement for downstream SAR interpretation tasks. The 10.7% reduction in MSE further indicates improved pixel-level accuracy. Together, these results suggest that the dual-discriminator architecture and encoder–decoder fusion mechanism balance global realism with fine-grained detail preservation.
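For reference, the standard multi-scale SSIM definition is given below. This section does not state which MS-SSIM implementation or weights were used, so the formula describes the metric in general rather than the exact evaluation setup. Let $x$ and $y$ be the generated and reference images. Scale $j = 1$ is the original resolution, and each subsequent scale is low-pass filtered and downsampled by a factor of 2 up to scale $M$ (the original formulation uses $M = 5$):

$$
\mathrm{MS\text{-}SSIM}(x, y) = \left[l_M(x, y)\right]^{w_M} \prod_{j=1}^{M} \left[c_j(x, y)\right]^{w_j} \left[s_j(x, y)\right]^{w_j}
$$

Here $l_M$ is the luminance comparison, evaluated only at the coarsest scale. The terms $c_j$ and $s_j$ are the contrast and structure comparisons at scale $j$, and $w_j$ are per-scale weights (unrelated to the fusion weight α). Because contrast and structure are compared at every scale, the metric rewards consistency from fine scattering details up to the coarse target outline. This is the property the comparison above relies on.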