1. Introduction

Remote sensing image segmentation is a vital task in the interpretation of remote sensing data and holds significant value in various domains, including land use planning, urban development [1,2], disaster monitoring [3,4], resource exploration, military surveillance, and ecological monitoring [5,6]. Semantic segmentation, in particular, involves classifying each pixel in an image into a specific land cover category, enabling a comprehensive understanding of the landscape [7,8,9,10,11,12,13,14]. Advancing research in semantic segmentation of remote sensing images and achieving accurate object delineation are essential for promoting the development and application of remote sensing technology.

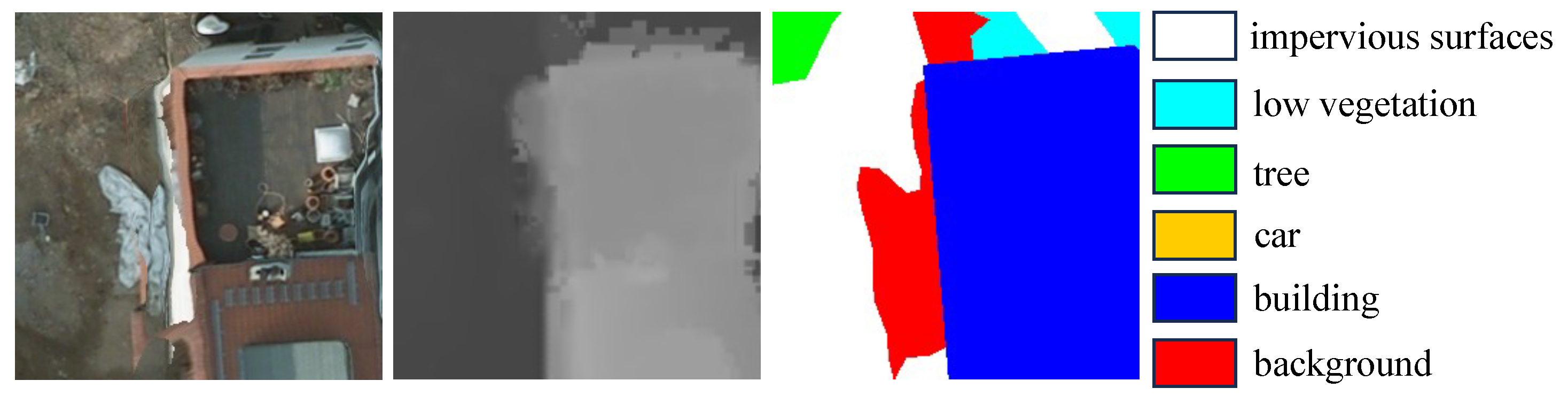

The fine-grained and multi-scale characteristics of remote sensing images pose significant obstacles to improving the accuracy of single-modality semantic segmentation. To address the inherent limitations of single-modality information, researchers have increasingly adopted multimodal approaches. For instance, RGB images capture visual color information, while Digital Surface Models (DSM) provide height data for ground objects. Due to differences in imaging mechanisms, modality heterogeneity, and suboptimal fusion strategies, multimodal fusion may suffer from insufficient feature complementarity and the loss of crucial information, ultimately leading to reduced segmentation accuracy. Moreover, visually similar objects in RGB imagery are difficult to distinguish, increasing the likelihood of misclassification. In such cases, incorporating height information from DSM can effectively differentiate objects and enhance segmentation accuracy. As shown in Figure 1, although buildings and impervious surfaces exhibit similar visual characteristics in the RGB image, their height differences are clearly distinguishable in the DSM image, which helps to reduce segmentation confusion. This highlights the advantage of DSM in capturing edge information, which contributes to more accurate object and boundary recognition.

In recent years, several multimodal remote sensing segmentation methods have adopted dual-branch architectures to capture and fuse RGB and DSM features, achieving promising segmentation performance [15,16,17,18,19,20,21,22,23,24,25]. As network depth increases, these fusion strategies often suffer from issues such as the loss of edge information and blurred object boundaries. Blurred boundaries can obscure the distinction between different targets, potentially causing the model to misclassify two entirely different objects as the same class or as unrelated classes. Moreover, partial overlap and mixing between target regions may occur, disrupting the original feature distribution. This negatively impacts both feature extraction and fusion, ultimately leading to a decline in segmentation accuracy. Such issues are critical and should not be overlooked in multimodal fusion tasks.

As a classic frequency-domain technique, wavelet transform has been widely applied in multimodal image processing. It decomposes features into low- and high-frequency components, where the low-frequency components capture global contextual information, and the high-frequency components preserve edge details. For instance, BSANet [26] employs wavelet transform for upsampling and downsampling in its dual-branch design. DWU-Net [27] and XNet [28] decompose images into high- and low-frequency components and apply separate U-Nets for each. HaarFuse [29] extracts features from optical and infrared images using wavelet convolution and fuses them via wavelet decomposition. WaveCRNet [30] introduces wavelet priors via a wavelet attention module embedded in its PID network, and reconstructs features using dual-tree complex wavelet transforms. WaveFusion [31] combines wavelet transform and self-attention to enhance fused features, while HaarNet [32] applies wavelet decomposition to RGB and depth features before convolutional fusion. These approaches collectively reflect the growing trend of integrating frequency-domain analysis with dual-branch architectures, which has become a prevailing framework for improved multimodal representation. Our work follows this direction but introduces a novel design for deeper-level feature fusion and enhancement, aiming to preserve more complete modality-specific information and improve overall fusion effectiveness.

To address issues such as boundary blurring and the loss of edge information during fusion, we introduce wavelet transform and propose a multimodal remote sensing segmentation network that integrates RGB and DSM data, aiming to further enhance segmentation accuracy [33,34]. Specifically, we design a hybrid branch fusion module, where RGB features are processed through a convolutional branch and DSM features are processed through a wavelet-based branch, effectively combining information from both the convolutional and frequency domains. In addition, we introduce a spatial-channel attention module to strengthen the model’s capacity for multi-scale contextual modeling, thereby improving feature representation and segmentation performance.

Although numerous studies [26,27,28,29,30,31,32,35,36,37] have explored the integration of wavelet transform into dual-branch architectures to improve multimodal image fusion and segmentation performance, most existing methods either treat wavelet transform as a preprocessing or auxiliary operation during the early encoding stages, or impose a tightly coupled fusion strategy that directly integrates features from different modalities. However, these designs often lack deeper-level fusion and enhancement mechanisms. As network depth increases, such limitations may lead to redundant or diluted representations across modalities and hinder the preservation of critical feature information.

Unlike previous methods, our approach introduces several significant differences. First, we adopt a decoupled design that maintains independent encoding paths for RGB and DSM in both the external dual-branch encoders and the internal hybrid fusion module. The proposed framework performs progressive fusion and enhancement of features from each encoder level. The fused features are not fed back into the original encoder paths but are passed through a decoder with progressive upsampling to produce the final segmentation map. This architecture not only preserves modality-specific representations by decoupling multimodal fusion from single-modality feature extraction, but also facilitates effective cross-modal feature interaction, while avoiding the redundancy and mutual interference that can arise from feeding fused features back into the encoder paths.

Second, unlike previous methods that apply wavelet transforms uniformly to all modalities, we selectively integrate the wavelet attention module into the DSM branch within the fusion module. Since DSM features are less rich than RGB features, applying wavelet transforms to both RGB and DSM can degrade feature quality and obscure the distinct information inherent to each modality, ultimately reducing segmentation accuracy. Therefore, we apply the wavelet transform specifically to the DSM branch to extract both high-frequency edge information and low-frequency structural information, leveraging the DSM modality’s advantage in providing clear boundary delineation. The RGB branch, in contrast, retains a convolutional structure to better capture its strengths in texture and structural feature extraction. Furthermore, unlike other methods, our wavelet attention module introduces wavelet transforms into the Transformer [

38] architecture. Combined with a gating mechanism, it not only captures long-range dependencies but also filters salient DSM features and controls the flow of effective information.

In summary, our design differs from existing approaches in terms of fusion strategy, branch decoupling, and the selective application of wavelet transform. Without compromising modality independence, it enhances boundary modeling capability and improves segmentation accuracy. The main contributions of this work are summarized as follows:

1. To retain more edge information during the fusion process, a Multimodal Spatial–Frequency Fusion Network (MSFFNet) is constructed, which fuses edge features in the wavelet domain and spatial features in the convolutional domain, thereby enhancing edge representation as well as improving segmentation accuracy.

2. To highlight edge information in the frequency domain, a Hybrid Branch Fusion Module (HBFM) is constructed, which decomposes DSM features into multiple sub-components by wavelet transform, from which high-frequency edge features are separately extracted and processed. Processing these features in the frequency domain prevents mixing with irrelevant features and alleviates edge information loss during the fusion process.

3. To enhance spatial contextual information, a Multi-Scale Contextual Attention Module (MSCAM) is constructed, which aggregates multi-scale spatial information and applies self-attention to refine channel-wise features, improving intra-class consistency while effectively distinguishing inter-class differences.

The rest of this paper is structured as follows: Section 2 reviews related works, Section 3 details the proposed method, Section 4 presents experimental evaluations to validate its effectiveness, Section 5 discusses the results, and Section 6 summarizes the conclusions.

3. Method

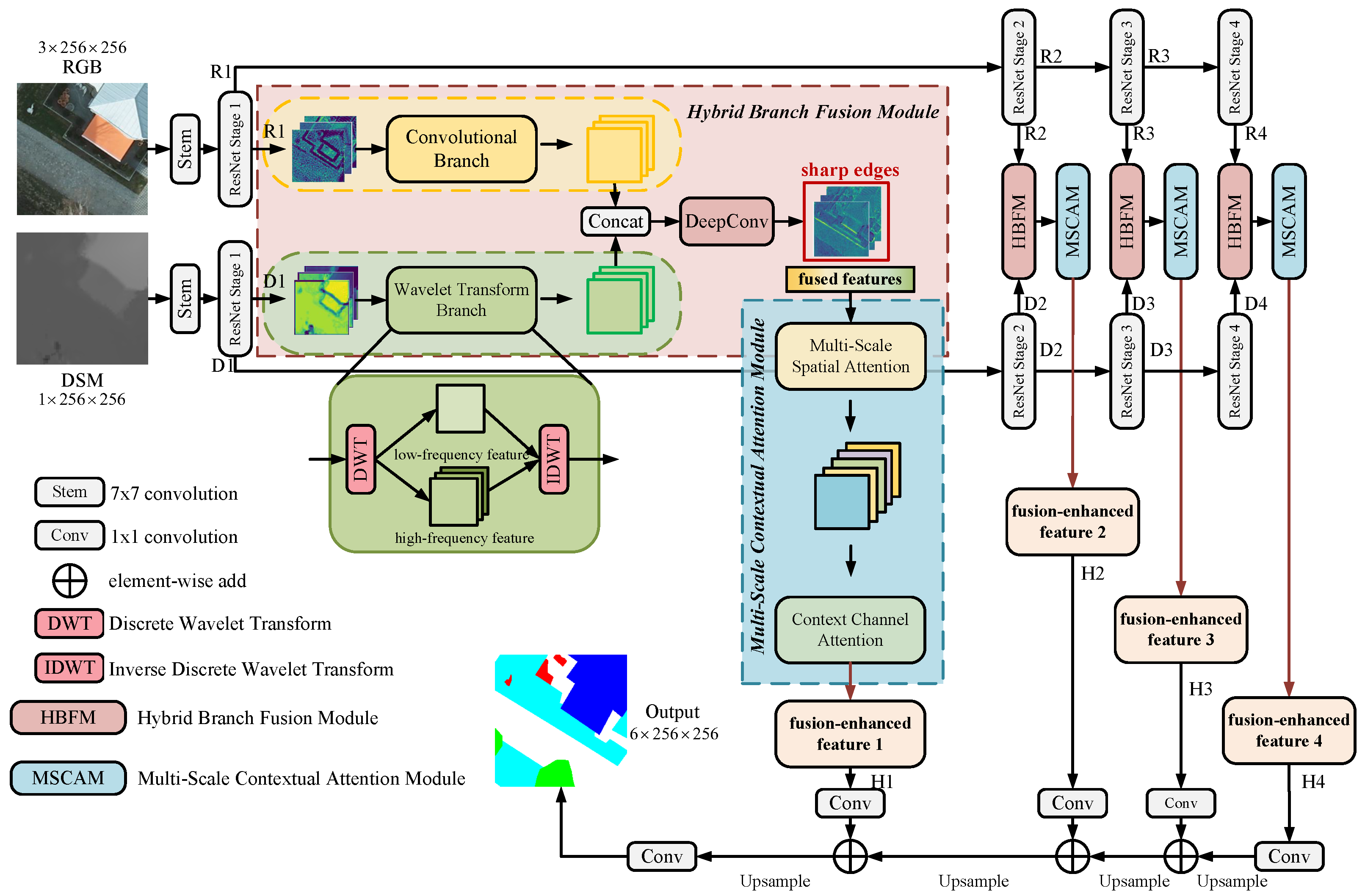

3.1. Overall Framework

Multimodal remote sensing image segmentation requires an efficient fusion strategy. For two heterogeneous modalities, inappropriate fusion approaches can lead to information loss or redundancy. To address these issues, MSFFNet selectively extracts and fuses critical information from RGB and DSM in both spatial and frequency domains to enhance edge features, as illustrated in Figure 2.

The input to the network consists of RGB and DSM images. We use ResNet50 [85] as the encoder and Feature Pyramid Network (FPN) [86] as the decoder. The RGB image is processed through a ResNet-based encoder, generating four hierarchical feature maps denoted as P1, P2, P3, and P4. Similarly, the DSM data is passed through another ResNet encoder to produce four corresponding feature levels, D1, D2, D3, and D4. These two independent encoder branches enable each modality to fully extract its intrinsic features. Subsequently, the multi-level features from the RGB and DSM encoders are fused via the proposed HBFM and MSCAM modules, resulting in four fused feature maps, H1, H2, H3, and H4. The fused features are then fed into an FPN, followed by conventional convolutional layers to generate the final segmentation map, as illustrated in Figure 2. It is noteworthy that the fused features are not fed back into the encoder branches. This decoupled design not only preserves the integrity of the modality-specific representations and avoids feature redundancy but also facilitates the complementary integration of heterogeneous information from different modalities. The following sections provide a detailed explanation. The symbols used in this paper are summarized in Table A1 in Appendix A.

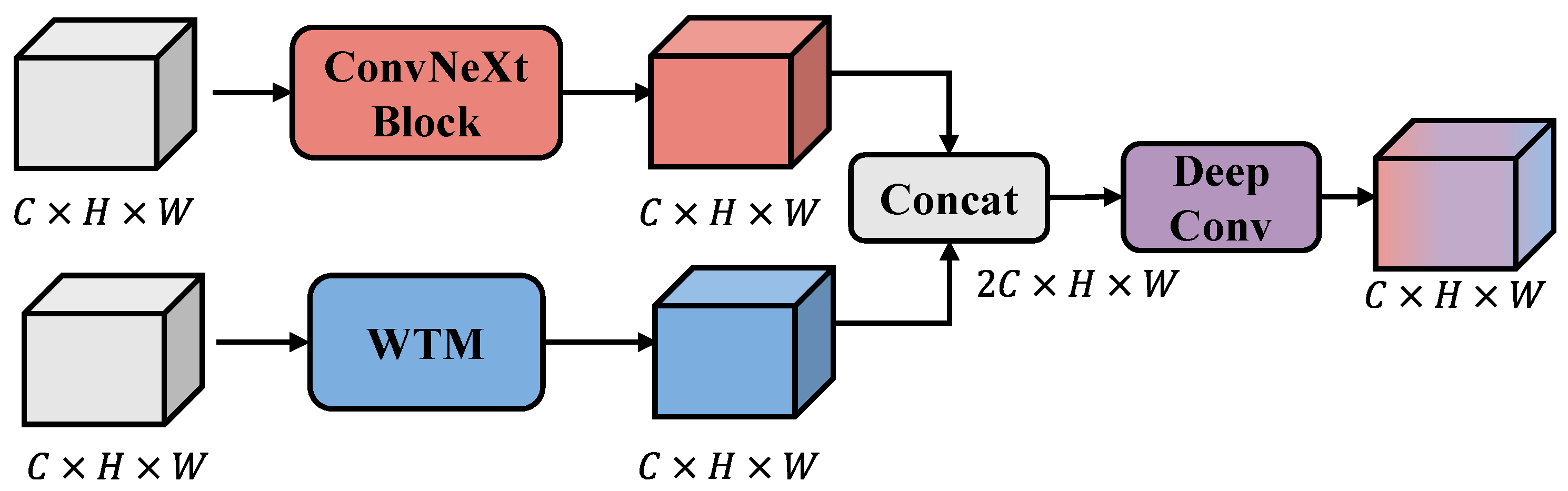

3.2. Hybrid Branch Fusion Module

We propose a Hybrid Branch Fusion Module (HBFM), in which convolutional and wavelet branches independently process the RGB and DSM modalities. The resulting features are subsequently fused through a deep convolutional module, as illustrated in Figure 3.

RGB images typically contain richer structural, textural, and edge information, making convolutional processing particularly effective for capturing salient and diverse features. DSM images, by contrast, are single-channel grayscale representations with less spectral information but offer more precise boundary information—especially in scenarios where optical features are visually ambiguous. To exploit this complementarity, we utilize the frequency-domain capabilities of wavelet transform to further enhance edge features in DSM data. Accordingly, we assign the convolutional branch to process RGB features and the wavelet-based branch to handle DSM features. This design not only preserves the modality-specific representations but also facilitates the generation of more comprehensive and complementary fused features, thereby alleviating information loss during the fusion process.

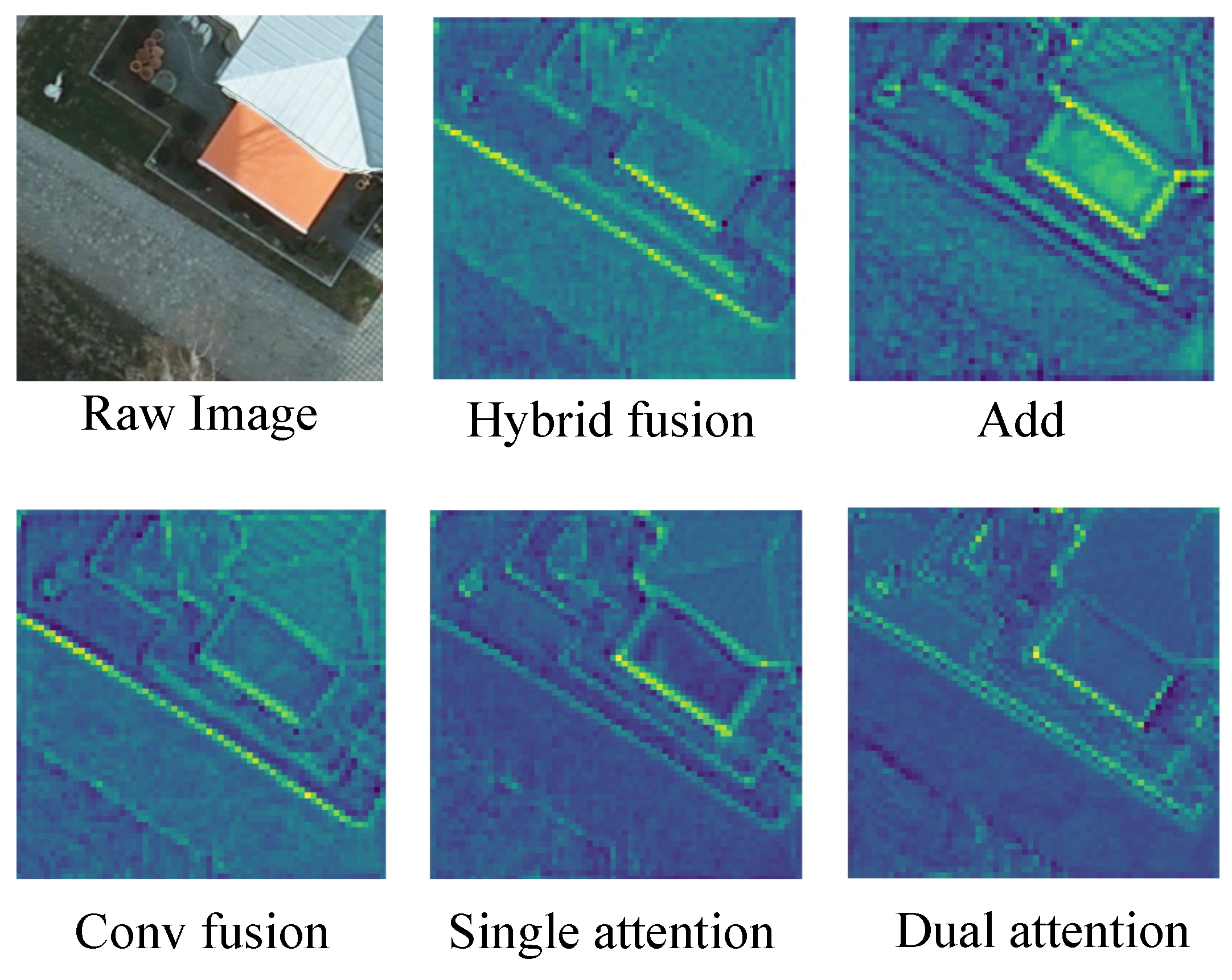

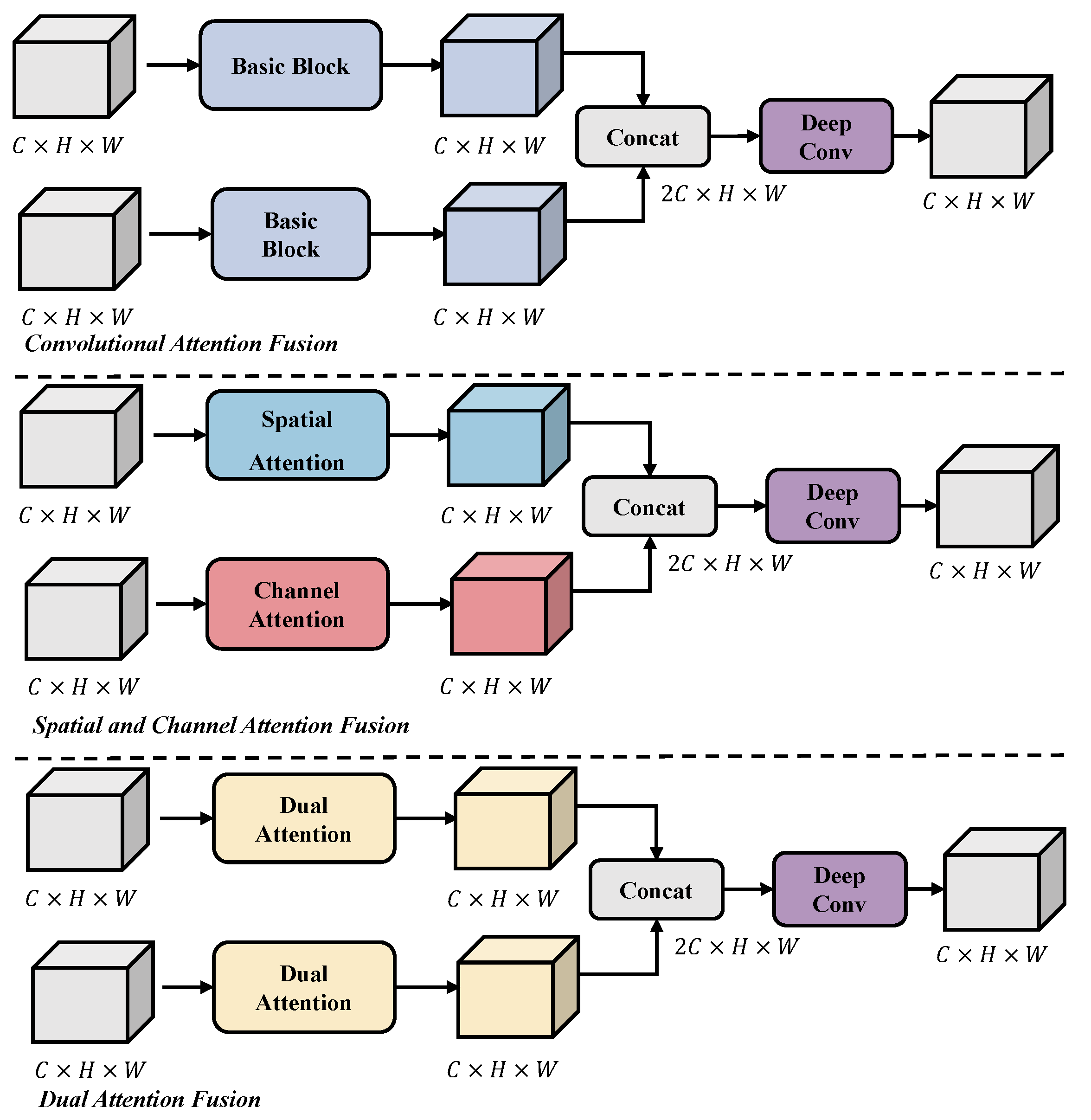

As shown in Figure 4, we compare our hybrid fusion method with several alternative fusion strategies by analyzing feature maps after the first encoder stage. The top row shows the input image, our hybrid fusion result, and addition-based fusion. The bottom row presents feature maps from convolution-only, spatial attention, channel attention, and dual-attention fusion. While all methods retain some edge details, our hybrid approach preserves clearer boundaries, particularly for impervious surfaces and low-lying vegetation. Moreover, when the boundaries between objects are blurred or indistinct, different targets may exhibit highly similar feature representations. As a result, the model is prone to misclassifying distinct objects as a single category, which adversely affects the segmentation performance. The feature map comparison therefore highlights the module’s ability to retain modality-specific information and reduce feature loss during fusion. Additional quantitative results are provided in Section 4.

The input to HBFM consists of RGB features and DSM features. The RGB features pass through the convolution branch, which is composed of ConvNeXt blocks [87], and the DSM features pass through the wavelet transform branch. The outputs of the two branches are concatenated along the channel dimension and fed into the depthwise convolution (Deep Conv) fusion network, whose detailed structure is illustrated in Figure 5.

The Deep Conv fusion network is built from convolution modules, each composed of a convolution, a GELU activation function, and batch normalization. Depending on the parameters of the convolution module, the configuration of the activation function and batch normalization may change. Its depthwise convolution uses a number of groups equal to the number of channels. In the hybrid branch module, the first pointwise convolution after concatenation includes the activation function and batch normalization, with C output channels. The depthwise convolution and the second pointwise convolution also include activation functions and batch normalization. The last pointwise convolution does not include an activation function but has batch normalization.
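The fusion described above can be summarized in a compact formula. The following is only a sketch: the symbol names ($F_{\mathrm{rgb}}$, $F_{\mathrm{dsm}}$, $\mathcal{B}_{\mathrm{conv}}$, $\mathcal{B}_{\mathrm{wav}}$, $\mathrm{Cat}$, $\mathrm{DC}$, $\mathrm{PW}_k$, $\mathrm{DW}$) are assumptions introduced here for illustration and may differ from the notation in Table A1.

```latex
% Hedged sketch; all symbol names are assumed for illustration.
F_{\mathrm{out}} = \mathrm{DC}\Big(\mathrm{Cat}\big(\mathcal{B}_{\mathrm{conv}}(F_{\mathrm{rgb}}),\; \mathcal{B}_{\mathrm{wav}}(F_{\mathrm{dsm}})\big)\Big),
\qquad
\mathrm{DC} = \mathrm{PW}_3 \circ \mathrm{PW}_2 \circ \mathrm{DW} \circ \mathrm{PW}_1
```

Under this reading, $\mathrm{PW}_1$, $\mathrm{DW}$, and $\mathrm{PW}_2$ each include GELU and batch normalization, while $\mathrm{PW}_3$ includes batch normalization only, matching the layer configuration stated in the text.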

3.2.1. Convolution Branch

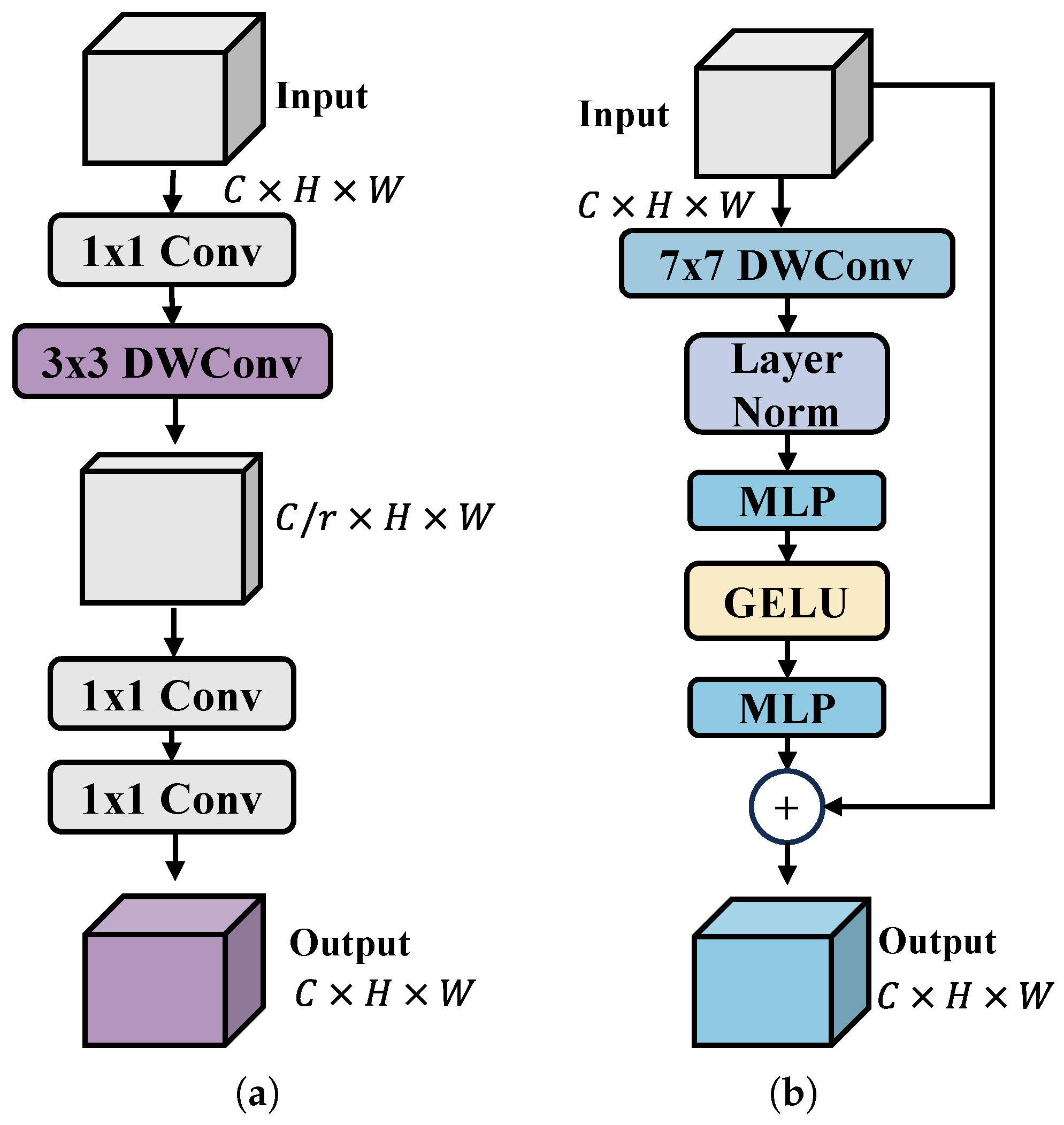

Although Transformer-based models have achieved impressive results in semantic segmentation in recent years and research on convolutional neural networks has decreased, we believe that the convolutional structure still has irreplaceable advantages. Compared to Transformers, its inductive bias and local receptive field are key to capturing local features. We use ConvNeXt Blocks as the main components of the convolution branch, and the detailed structure is shown in Figure 5. The branch takes the RGB features as input. Within each block, the depthwise convolution uses a number of groups equal to the number of channels, normalization is performed by layer normalization, the feed-forward part is a multi-layer perceptron, and the activation is the GELU function. The convolution branch stacks three identical ConvNeXt Blocks in the third-level fusion module, while only one ConvNeXt Block is used in the other fusion modules.

The convolution branch processes RGB features using a depthwise convolution followed by layer normalization. A feed-forward network with GELU activation then expands and reduces the feature dimensions. Residual connections are employed to preserve information. This branch integrates concepts from both convolutional networks and Transformers, employing an inverted bottleneck structure and large-kernel convolutions to expand the receptive field. By leveraging the local-detail capturing capability of convolutions, it enhances the extraction of edge, texture, and shape features while maintaining a balance between accuracy and computational efficiency.
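One ConvNeXt-style block, as described above, can be written as a single residual update. This is a hedged sketch: the symbols ($X$, $Y$, $\mathrm{DWConv}_{k\times k}$, $W_1$, $W_2$) are assumed for illustration, and the kernel size $k$ is left unspecified here.

```latex
% Hedged sketch; symbol names and the placement of the residual are assumptions
% that follow the standard ConvNeXt block layout.
Y = X + \mathrm{MLP}\big(\mathrm{LN}\big(\mathrm{DWConv}_{k\times k}(X)\big)\big),
\qquad
\mathrm{MLP}(Z) = W_2\,\mathrm{GELU}(W_1 Z)
```

Here $W_1$ expands the channel dimension and $W_2$ reduces it again, which corresponds to the inverted bottleneck mentioned in the text.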

3.2.2. Wavelet Transform Branch

Wavelet Transform Branch: The Transformer architecture captures long-range dependencies between targets through self-attention, making it advantageous for extracting global features. However, some studies have found that the performance of Transformers is largely due to their token mixer and channel mixer frameworks. Considering that DSM images contain limited height information, we drew inspiration from GestFormer [

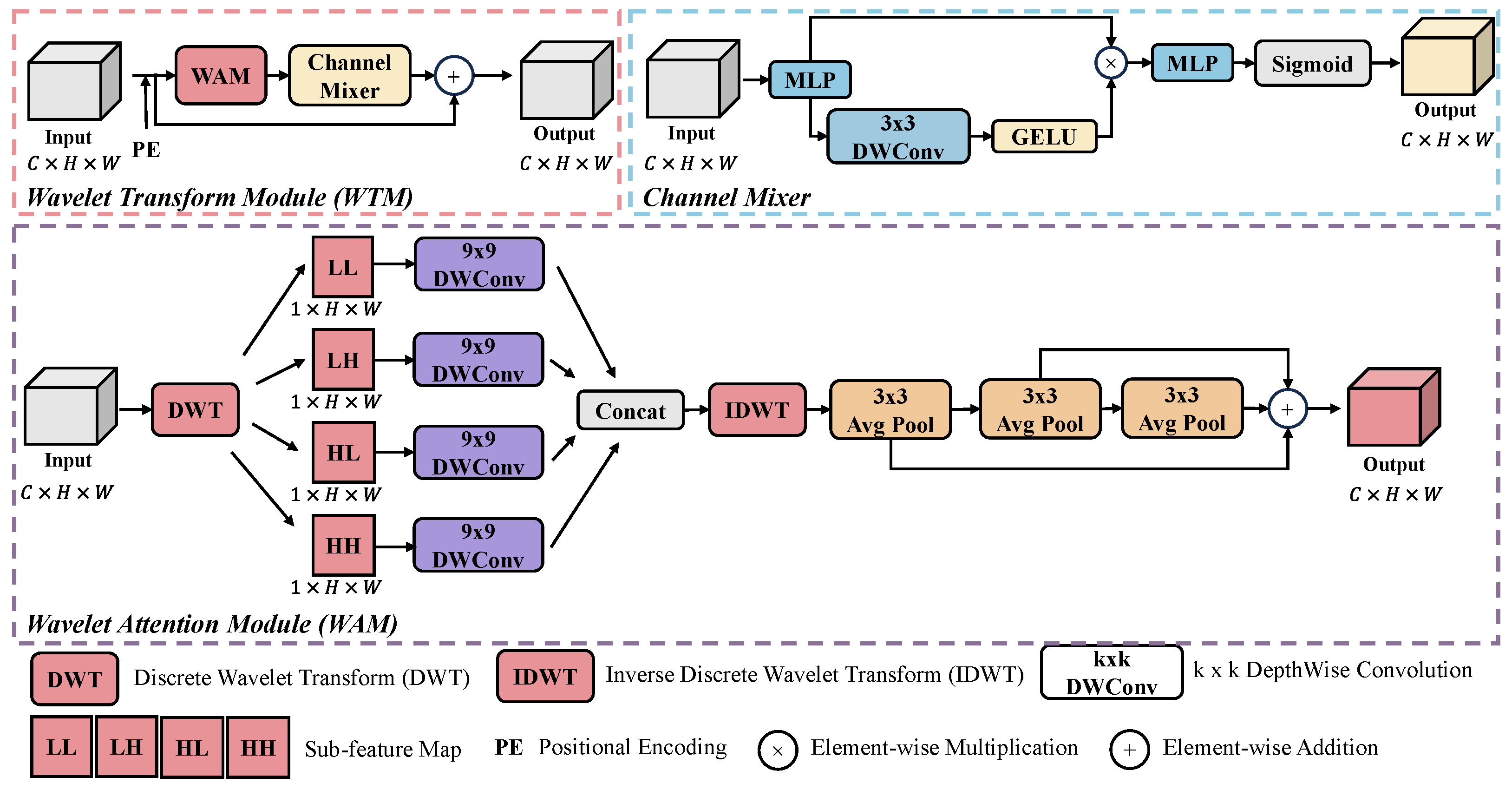

34] and improved the multi-scale wavelet pooling Transformer. We propose a novel Wavelet Transform Module (WTM) that extracts key spatial and edge-related information. The Wavelet Transform Module consists of a Wavelet Attention Module (WAM) and a channel mixer, as shown in

Figure 6. Before the Wavelet Attention Module, we introduce positional encoding. The WTM acts as a token mixer based on wavelet transform and multi-scale pooling. Additionally, we employ a convolutional gated linear unit as the channel mixer to control the flow of detailed information.

3.2.3. Wavelet Attention Module

Pooling has been shown to effectively select key features that benefit segmentation models [88]. In a similar manner, we incorporate wavelet transform to extract multi-scale features while preserving edge details, effectively mitigating the fine-grained challenges in remote sensing image segmentation. The WAM consists of two main components: a feature processing part that utilizes Discrete Wavelet Transform (DWT) and Inverse Discrete Wavelet Transform (IDWT), and a pooling mechanism for feature selection, as illustrated in Figure 6. Given the input X, the WAM applies DWT, enhances the resulting sub-bands with four large-kernel convolutional layers, reconstructs the features with IDWT, and then passes them through three average pooling layers.

Before applying the pooling operation, we use DWT to decompose the input into multiple sub-band features from a frequency perspective. To further enhance each sub-band feature, we employ large-kernel depthwise convolution. First, the input feature X undergoes DWT and is decomposed into four sub-band features: LL, LH, HL, and HH. The LL component retains approximate information, while LH, HL, and HH capture horizontal, vertical, and diagonal details, respectively. These sub-band features can be categorized into low-frequency features (LL), which preserve the global structural information, and high-frequency features (LH, HL, HH), which capture edge details and local textures. Next, a large-kernel depthwise convolution is applied to enhance each of the four sub-band features. The enhanced features are then concatenated and processed using IDWT to reconstruct the fused representation.
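To make the decompose, enhance, and reconstruct sequence concrete, the sketch below implements a single-level 2D Haar DWT and its inverse in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the Haar wavelet is assumed (the wavelet family is not restated here), the function names are hypothetical, and the learned large-kernel depthwise convolution is replaced by a per-sub-band scalar gain. Sub-band naming conventions (LH versus HL) also vary between libraries.

```python
def haar_dwt2(x):
    """Single-level 2D Haar DWT of a 2D list with even height and width.

    Returns four half-resolution sub-bands: LL, LH, HL, HH.
    """
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, len(x), 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, len(x[0]), 2):
            a, b = x[i][j], x[i][j + 1]          # top-left, top-right
            c, d = x[i + 1][j], x[i + 1][j + 1]  # bottom-left, bottom-right
            r_ll.append((a + b + c + d) / 2)  # scaled local average (approximation)
            r_lh.append((a + b - c - d) / 2)  # top minus bottom: horizontal edges
            r_hl.append((a - b + c - d) / 2)  # left minus right: vertical edges
            r_hh.append((a - b - c + d) / 2)  # diagonal differences
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuilds the full-resolution map."""
    h, w = len(ll), len(ll[0])
    x = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, u, v, t = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            x[2 * i][2 * j] = (s + u + v + t) / 2
            x[2 * i][2 * j + 1] = (s + u - v - t) / 2
            x[2 * i + 1][2 * j] = (s - u + v - t) / 2
            x[2 * i + 1][2 * j + 1] = (s - u - v + t) / 2
    return x


def enhance(band, gain=1.0):
    """Hypothetical stand-in for the learned depthwise convolution."""
    return [[gain * v for v in row] for row in band]


def wavelet_enhance(x, gains=(1.0, 1.0, 1.0, 1.0)):
    """DWT -> per-sub-band enhancement -> IDWT."""
    bands = haar_dwt2(x)
    bands = [enhance(b, g) for b, g in zip(bands, gains)]
    return haar_idwt2(*bands)
```

With unit gains the pipeline reconstructs its input exactly, and raising the gains of the three high-frequency sub-bands sharpens the step between neighbouring pixels. This is the intuition behind enhancing edge information in the wavelet domain.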

After processing with Discrete Wavelet Transform and enhancement via depthwise convolution, the transformed features are fed into a sequential pooling structure. Unlike PoolFormer, our WAM improves the original multi-scale pooling module by replacing its parallel structure with a spatial pyramid pooling design. This modification not only increases computational efficiency but also enhances the ability to aggregate multi-scale information. Our improved multi-scale pooling structure is capable of capturing features of varying sizes and shapes, effectively handling scale variations in different ground objects. Specifically, the pooling structure consists of three sequential average pooling layers, where the outputs of each pooling layer are retained, averaged together, and subsequently used as the final output of the WAM.
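The sequential pooling structure can be sketched as follows. This is a minimal illustration, assuming a 3 × 3 window, stride 1, and size-preserving borders; none of these settings is stated in the text, and the function names are hypothetical.

```python
def avg_pool2d(x, k=3):
    """Stride-1 average pooling that keeps the spatial size.

    Border windows average only over positions inside the map
    (an assumption; zero padding would be another option).
    """
    h, w = len(x), len(x[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [x[p][q]
                    for p in range(max(0, i - r), min(h, i + r + 1))
                    for q in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = sum(vals) / len(vals)
    return out


def sequential_pool(x, num_layers=3, k=3):
    """Apply pooling layers in sequence, keep every intermediate output,
    and return the element-wise mean of those outputs."""
    outputs = []
    current = x
    for _ in range(num_layers):
        current = avg_pool2d(current, k)  # each layer pools the previous output
        outputs.append(current)
    h, w = len(x), len(x[0])
    return [[sum(o[i][j] for o in outputs) / num_layers for j in range(w)]
            for i in range(h)]
```

Because each layer pools the output of the previous one, the effective receptive field grows with depth while a single small window is reused. This is one way a sequential design can cover several scales at lower cost than parallel pooling branches with different window sizes.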

3.2.4. Channel Mixer

While channel attention mechanisms have played a significant role in the field of computer vision, their coarse-grained nature limits their ability to aggregate global information compared to various self-attention-based token mixers. We adopt the convolutional Gated Linear Unit (GLU) as the channel mixer to regulate the flow of channel-wise information [89], as illustrated in Figure 6. This channel mixer integrates channel attention mechanisms with a gated linear unit and further enhances positional information using depthwise convolutions to improve model stability. Compared to multilayer perceptrons, the GLU demonstrates superior performance in channel mixing.

The input X is first processed by a feed-forward neural network and then split into two parts along the channel dimension. One part undergoes depthwise convolution, where the number of groups equals the number of channels, followed by GELU activation, and is then multiplied with the other part. The resulting product is passed through a second feed-forward network and a Sigmoid function, yielding the final output of the channel mixer. The convolutional GLU utilizes a gated channel attention mechanism to capture fine-grained features from the local neighborhood, adjust the channels, and enrich contextual information.
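The channel mixer steps described above can be condensed into the following sketch. The symbol names ($U$, $V$, $\mathrm{FFN}_1$, $\mathrm{FFN}_2$, $\mathrm{Split}$, $\odot$) are assumptions introduced for illustration; only the order of operations is taken from the description.

```latex
% Hedged sketch of the convolutional GLU; symbol names are assumed.
[U,\, V] = \mathrm{Split}\big(\mathrm{FFN}_1(X)\big),
\qquad
Y = \sigma\Big(\mathrm{FFN}_2\big(\mathrm{GELU}(\mathrm{DWConv}(U)) \odot V\big)\Big)
```

In this reading, $\mathrm{GELU}(\mathrm{DWConv}(U))$ acts as a spatially aware gate on $V$, which is how the mixer controls which channel-wise information is passed on.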

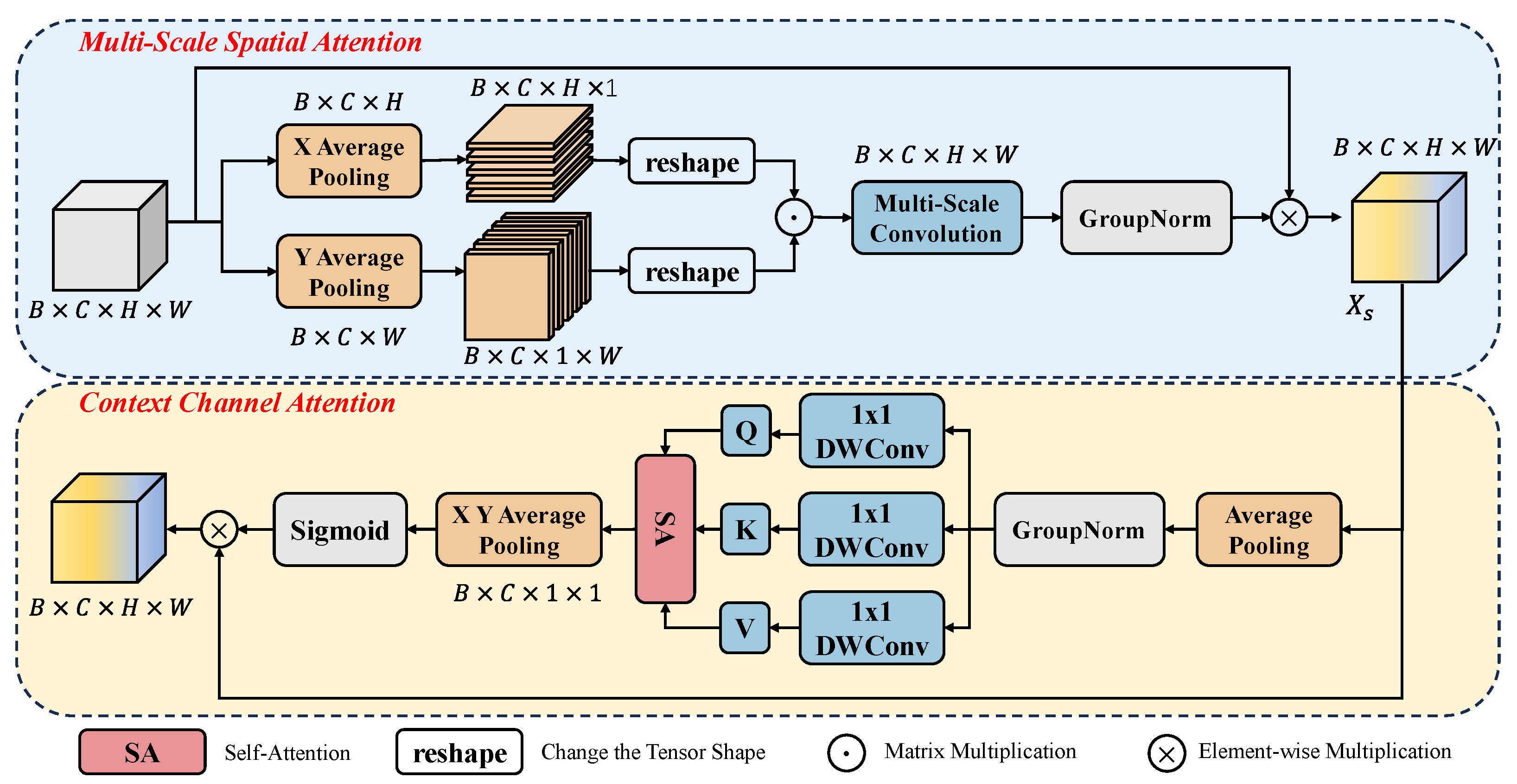

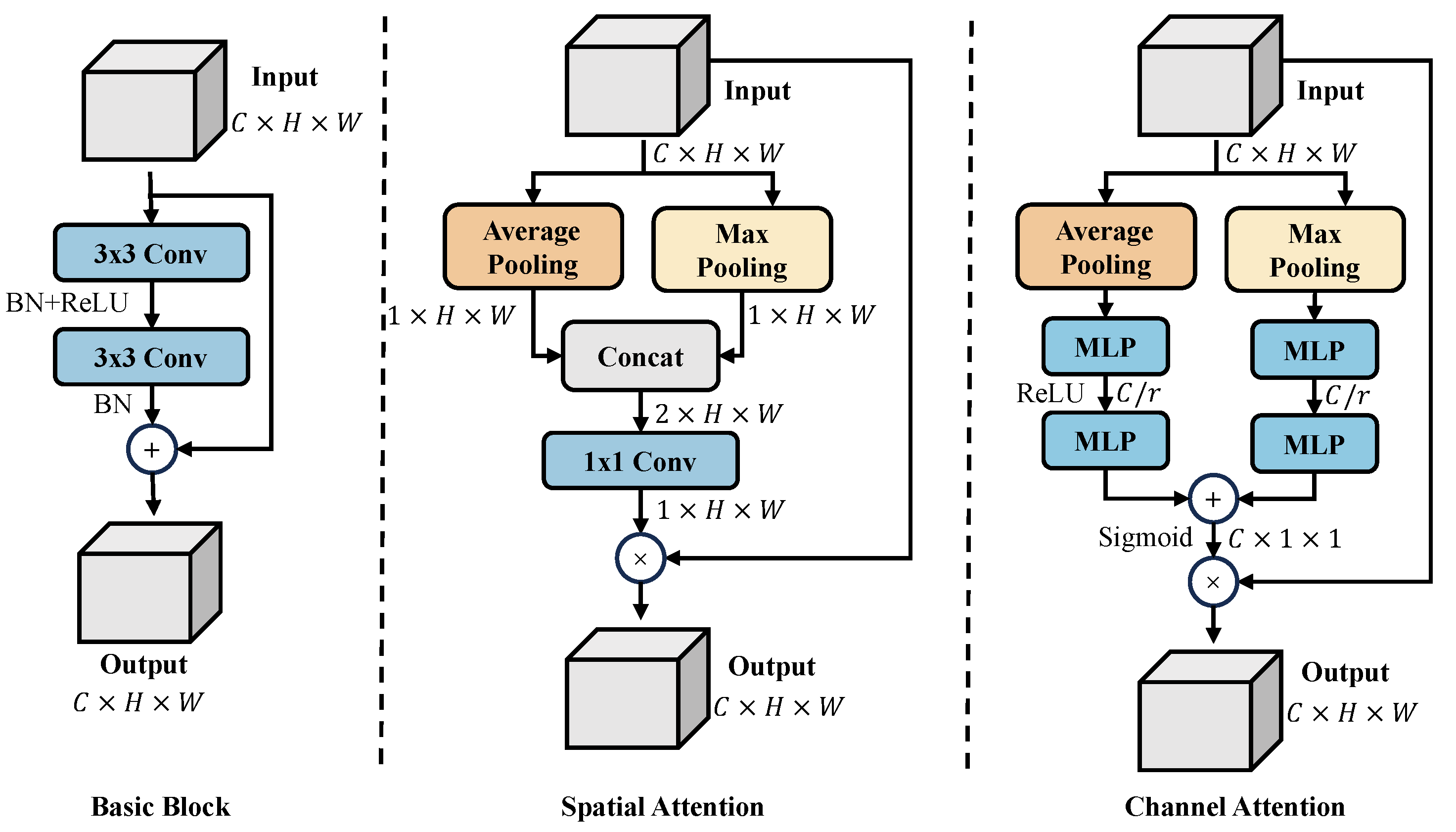

3.3. Multi-Scale Context Attention Module

Remote sensing images often contain rich details of ground objects, and the presence of multi-scale features poses significant challenges for segmentation. Combining spatial and channel attention to enhance feature representations is an effective strategy to address this issue. Inspired by the dual attention architecture [90], we propose a multi-scale context attention module that combines spatial and channel attention mechanisms. This design effectively guides the model to focus on spatially informative features, thereby improving segmentation accuracy.

MSCAM is primarily composed of two parts: multi-scale spatial attention and contextual channel attention, with the overall structure shown in Figure 7. Some previous attention mechanisms perform average pooling and max pooling along the channel dimension to generate spatial weights for feature maps. While this method is simple and computationally efficient, it fails to capture deeper features and is prone to losing important target features. In contrast, we perform global average pooling on the input in both the horizontal and vertical directions, obtaining two corresponding pooling results. We then adjust the dimensions of both results and multiply them to obtain the preliminary spatial weights.
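The directional pooling step can be illustrated for a single channel as below. This is a sketch under the assumption that the multiplication of the two reshaped pooling results is the outer product of the per-row and per-column means; the function name is hypothetical.

```python
def directional_pool_weights(x):
    """Preliminary spatial weights for one H x W channel.

    Averages along the width (one value per row) and along the height
    (one value per column), then multiplies the two vectors so that
    position (i, j) receives row_mean[i] * col_mean[j].
    """
    h, w = len(x), len(x[0])
    row_mean = [sum(row) / w for row in x]                        # H values
    col_mean = [sum(x[i][j] for i in range(h)) / h for j in range(w)]  # W values
    return [[row_mean[i] * col_mean[j] for j in range(w)] for i in range(h)]


weights = directional_pool_weights([[1.0, 2.0], [3.0, 4.0]])
# row means are 1.5 and 3.5, column means are 2.0 and 3.0
# -> [[3.0, 4.5], [7.0, 10.5]]
```

Compared with pooling along the channel dimension, this keeps a separate weight map per channel while still summarizing each row and each column of the feature map.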

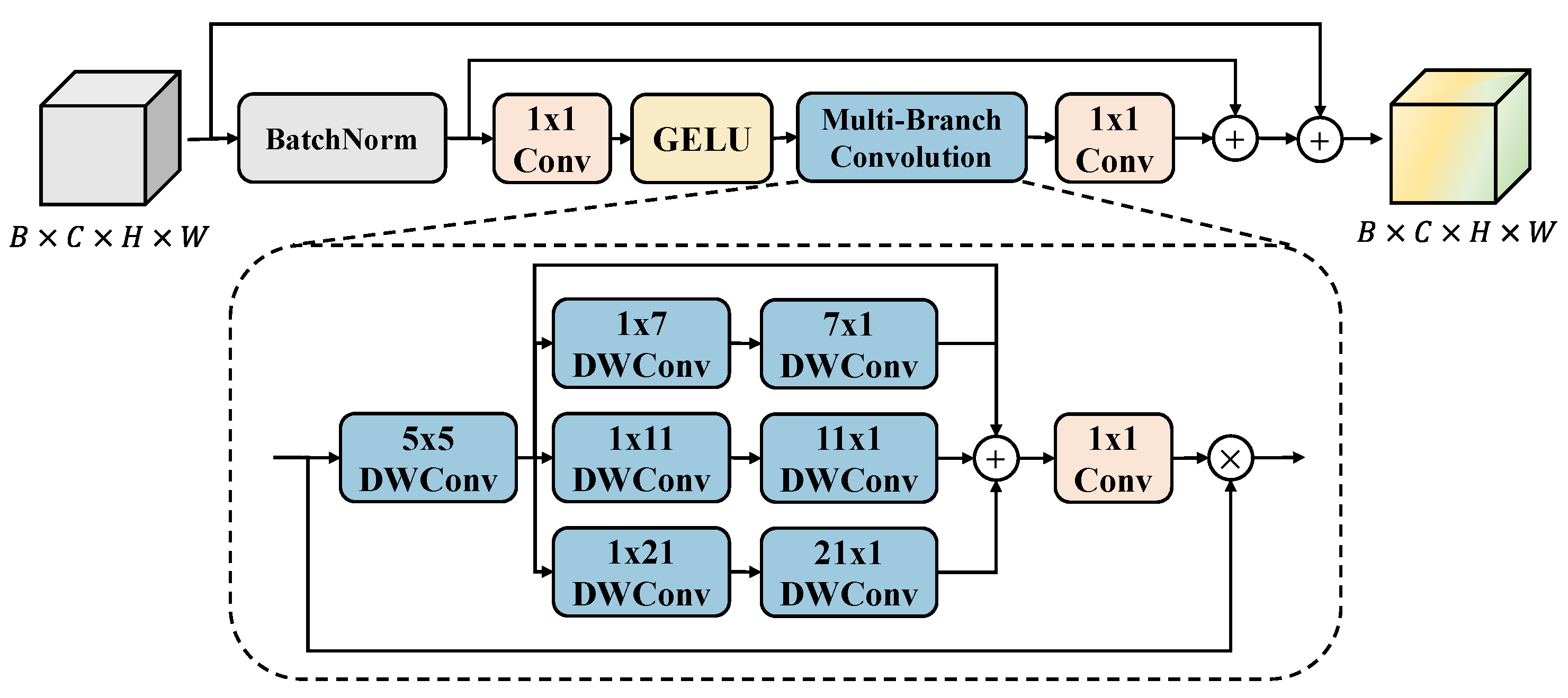

After obtaining the preliminary spatial weights, we further explore the salient information in the features to prevent the loss of key features. We adopt a multi-branch depthwise strip convolution module to aggregate local information and capture contextual relationships, thereby enhancing the model’s ability to recognize multi-scale objects [91]. The structure of the multi-scale convolution is shown in Figure 8. The preliminary spatial weights are first normalized by batch normalization, then passed through an activation function and a pointwise convolution, and then through a depthwise convolution. Multi-branch depthwise strip convolutions are subsequently applied to obtain multi-scale features, followed by a convolution that adjusts the channels. The kernel sizes of the branches are 7, 11, and 21, respectively, and ⊗ denotes element-wise multiplication. Finally, group normalization [92] is applied to the output of the multi-scale convolution, and the result is multiplied element-wise by the input X to obtain the multi-scale spatial attention weights.

After obtaining the spatial weights, we downsample the feature map using average pooling with a kernel size of 7, followed by group normalization. Then, a depthwise convolution is applied to compute the single-head self-attention. The results are pooled over the height (H) and width (W) dimensions by global average pooling. Finally, channel weights are computed using the Sigmoid function. The output of the multi-scale context attention module is obtained by multiplying the spatial weights with the channel weights.

MSCAM leverages multi-scale convolutions to capture multi-scale features and uses the self-attention mechanism to connect contextual information, further enhancing the spatial feature representation. The spatial feature processing enables the fused features to better reflect the characteristics of different targets, thereby improving the model’s performance.

3.4. Loss Function

We adopt a joint loss function to supervise the training process of our model. This joint loss is composed of the cross-entropy loss and the Dice loss, which are computed independently and then combined with equal weights. Both loss functions are widely used in semantic segmentation tasks. Their combination enhances feature learning and contributes to improved segmentation accuracy.
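A minimal sketch of such a joint loss is given below in plain Python. It assumes that "equal weights" means a plain sum with both weights set to 1, that the Dice term is a soft Dice averaged over classes, and that a smoothing constant of 1 is used; the function names and these settings are illustrative assumptions rather than the paper's configuration.

```python
import math


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-likelihood.

    probs[n][c] is the predicted probability of class c at pixel n;
    labels[n] is the ground-truth class index of pixel n.
    """
    return -sum(math.log(p[y] + eps) for p, y in zip(probs, labels)) / len(labels)


def dice_loss(probs, labels, num_classes, smooth=1.0):
    """One minus the soft Dice coefficient, averaged over classes."""
    total = 0.0
    for c in range(num_classes):
        inter = sum(p[c] for p, y in zip(probs, labels) if y == c)
        pred_sum = sum(p[c] for p in probs)
        true_sum = sum(1 for y in labels if y == c)
        total += (2 * inter + smooth) / (pred_sum + true_sum + smooth)
    return 1.0 - total / num_classes


def joint_loss(probs, labels, num_classes, w_ce=1.0, w_dice=1.0):
    """Weighted sum of the two terms; equal weights by default (assumed)."""
    return (w_ce * cross_entropy(probs, labels)
            + w_dice * dice_loss(probs, labels, num_classes))
```

The cross-entropy term penalizes each pixel independently, while the Dice term measures region overlap per class and is less sensitive to class imbalance, which is the usual motivation for combining the two.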

5. Discussion

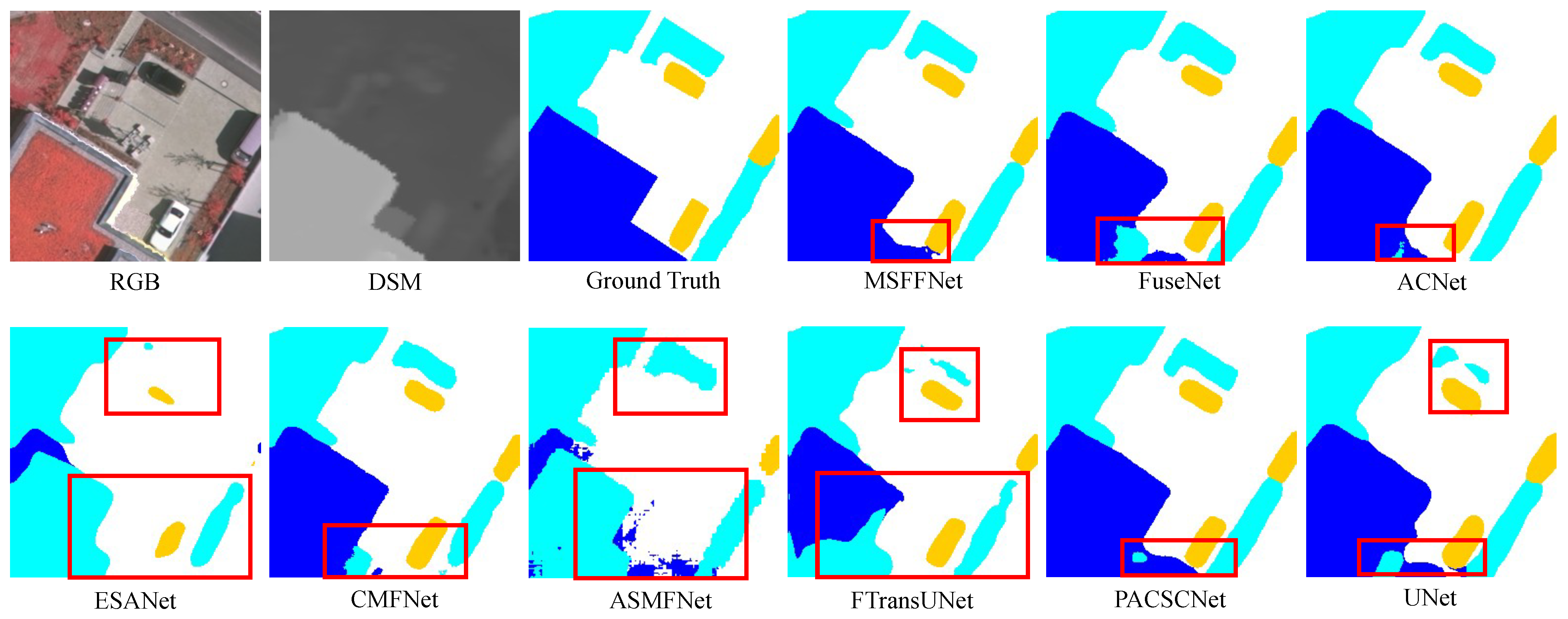

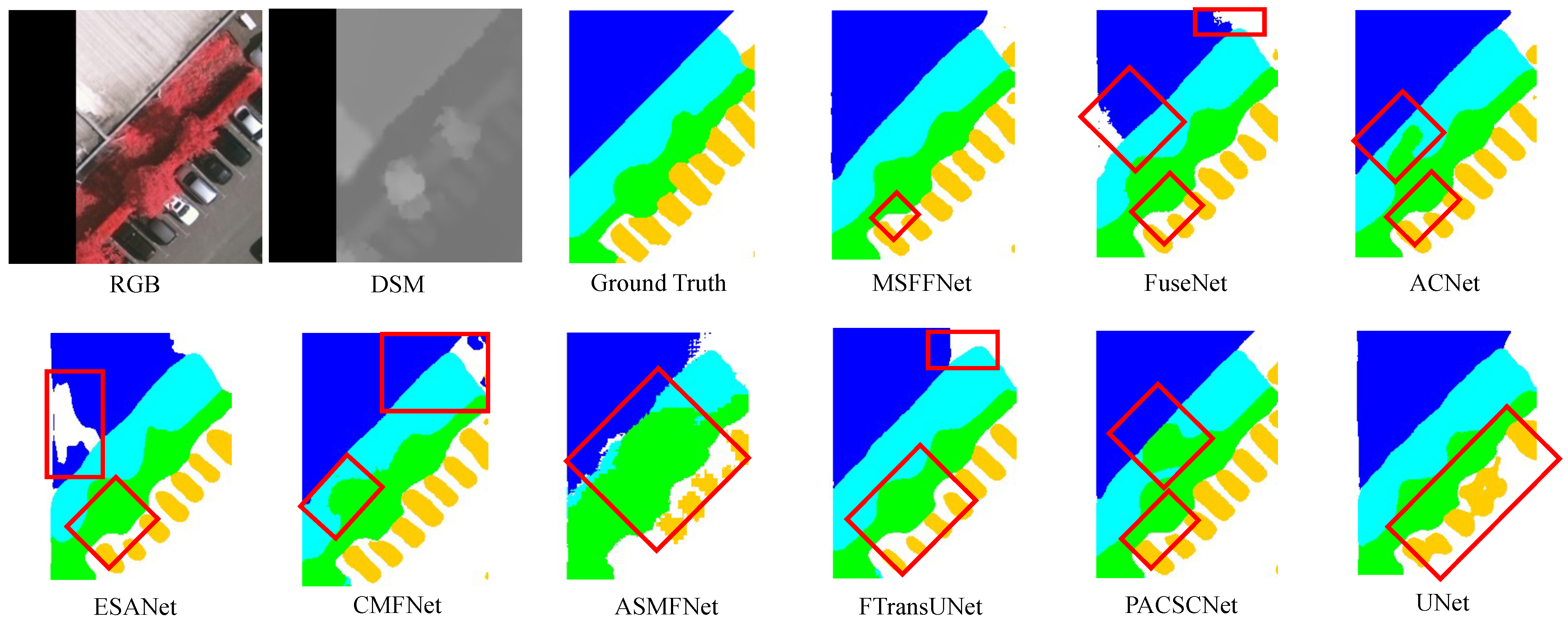

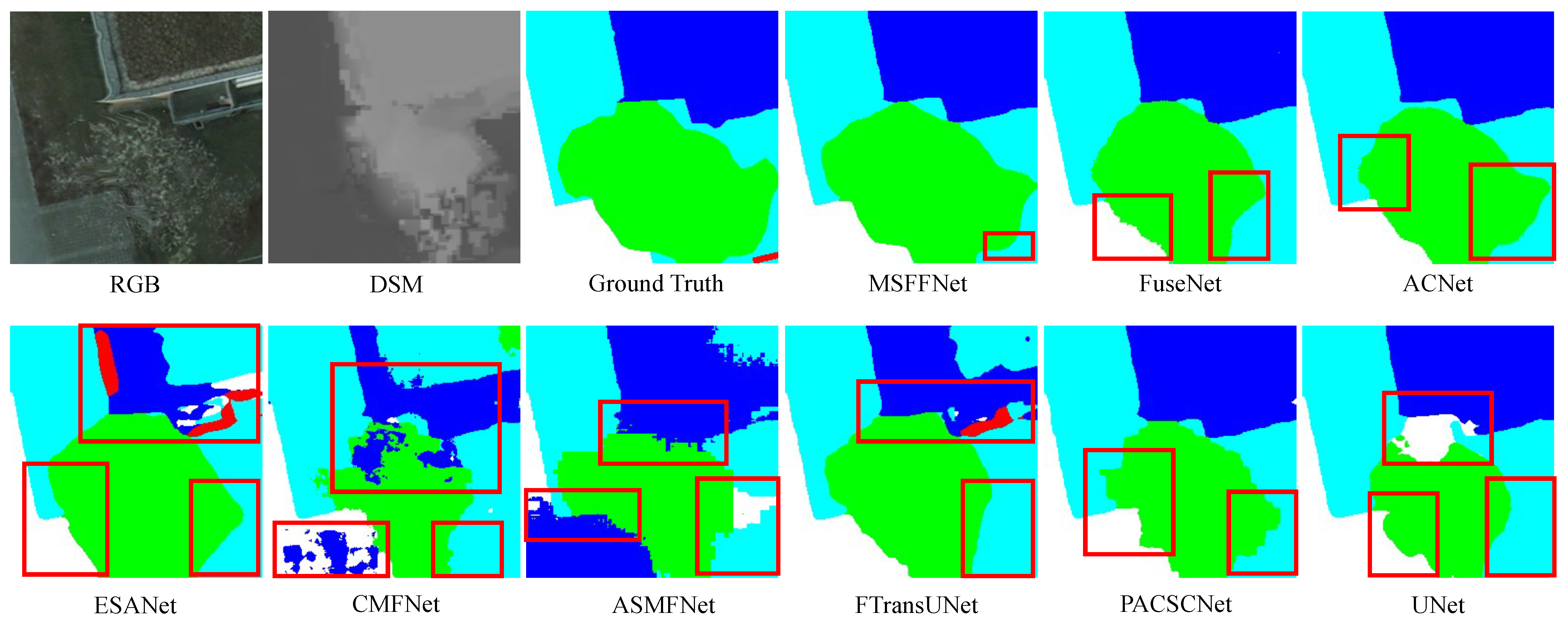

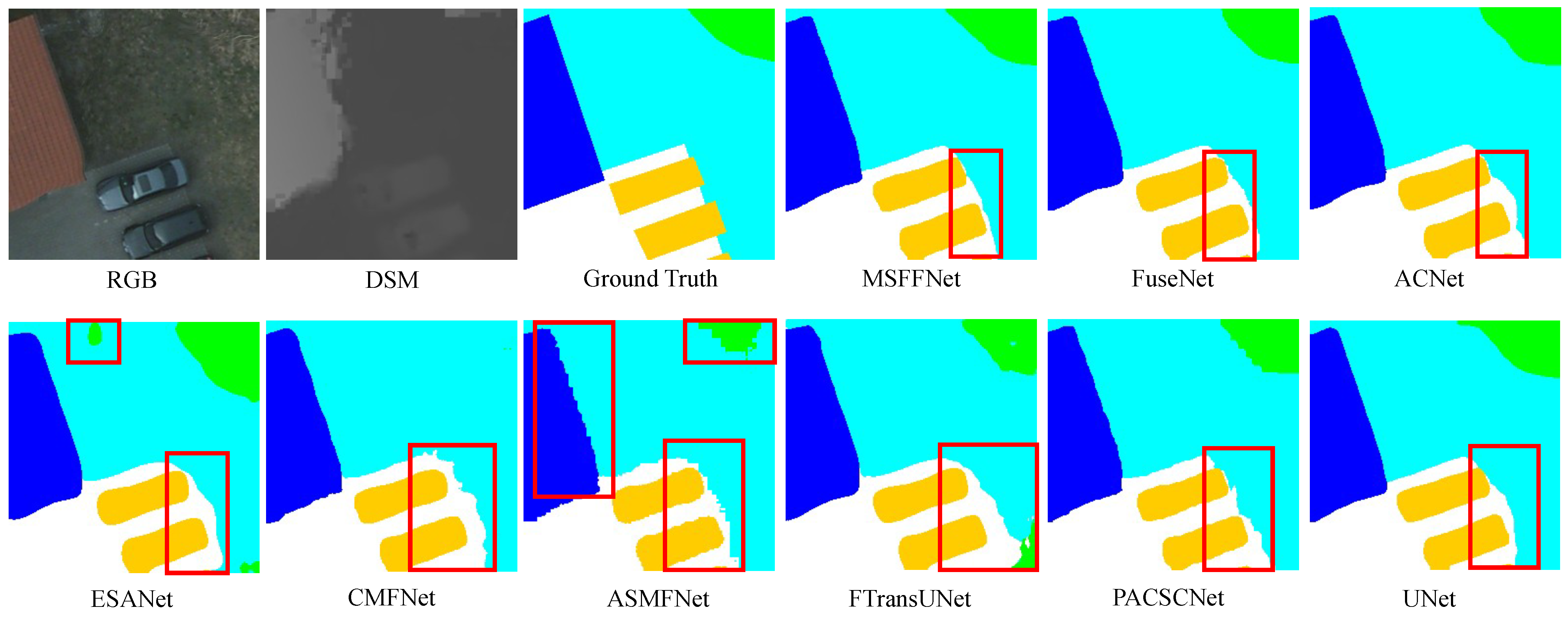

A series of experiments shows that our model, which incorporates DSM data and combines the HBFM and MSCAM into a multimodal segmentation network, improves the semantic segmentation accuracy of remote sensing images. In the model comparison experiments, our model achieved excellent performance. In the ablation experiments, the proposed improvements were effective in enhancing the segmentation capability of the model. In the scene segmentation visualization experiments, our model’s segmentation results were closer to the ground truth than those of other models and successfully preserved edge information. We also designed different fusion strategies and tested their impact on the multimodal model. Compared to the other fusion strategies, HBFM ranked first in OA, mIoU, and mF1 on both datasets.

Although our proposed model achieves neither the lowest FLOPs and parameter count nor the highest FPS, its moderate computational complexity represents a reasonable trade-off for the substantial improvement in segmentation accuracy. The dual-branch design and Transformer-based fusion modules inevitably increase computational cost, yet they enable more effective cross-modal interaction and fine-grained feature representation. Consequently, the model attains the highest overall accuracy among all compared methods, confirming that the additional complexity is justified by its performance gains. Nevertheless, the relatively high computational demand may limit its applicability in real-time or resource-constrained scenarios. Future work will focus on enhancing model efficiency while preserving high segmentation accuracy.