SynerCD: Synergistic Tri-Branch and Vision-Language Coupling for Remote Sensing Change Detection

Highlights

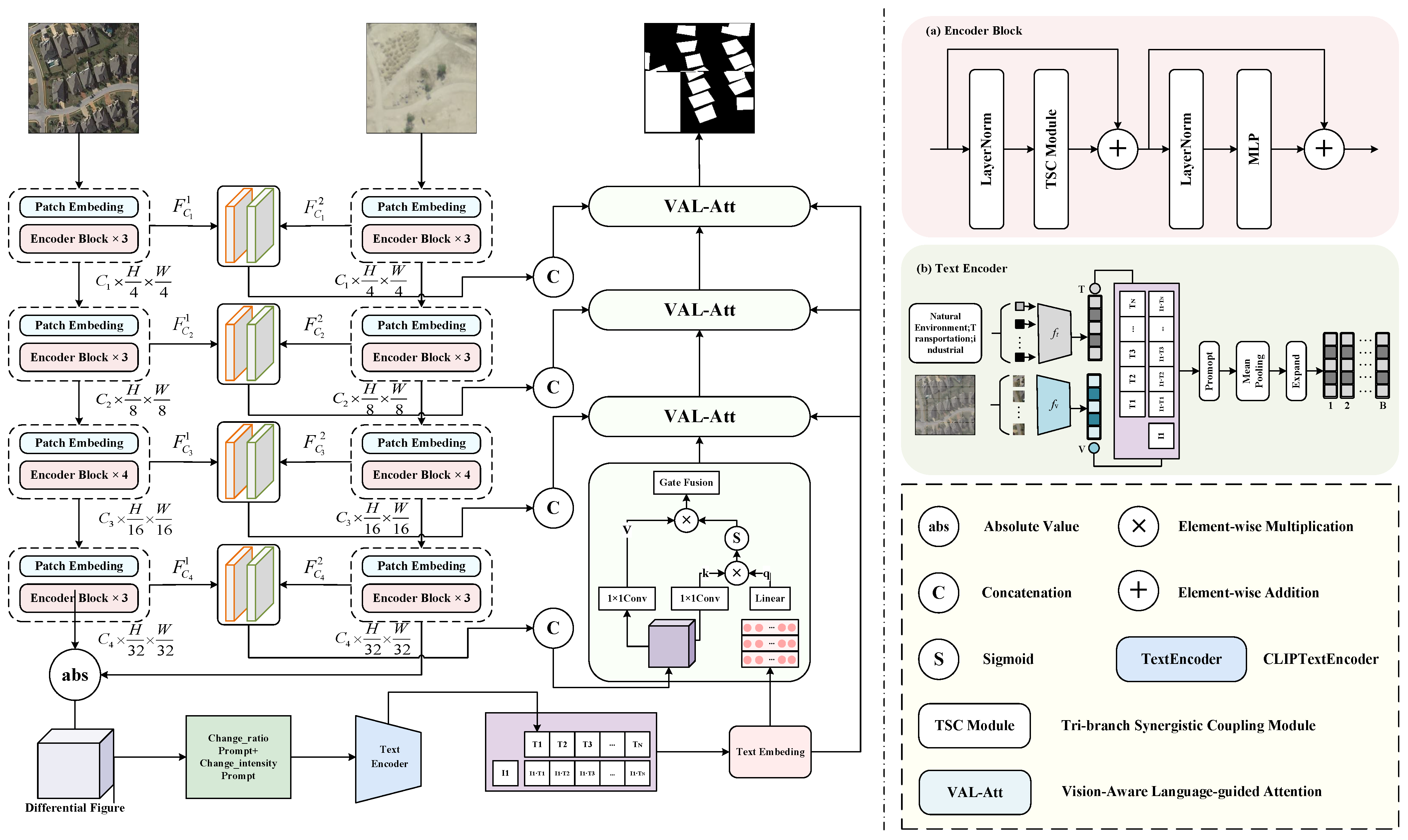

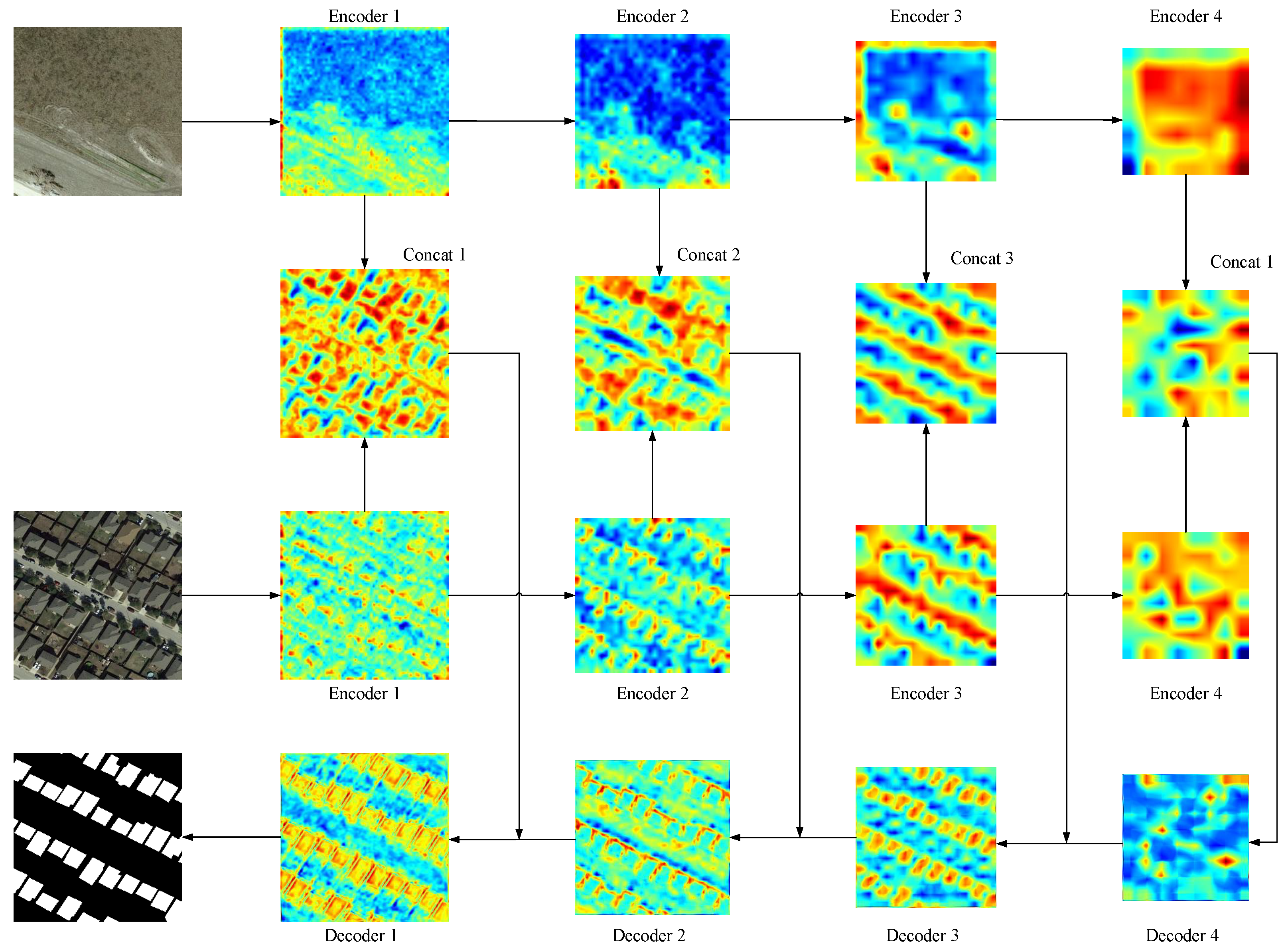

- We propose SynerCD, a Siamese encoder–decoder framework that integrates frequency-domain analysis with vision-language alignment for robust remote sensing change detection.

- Experiments on four public benchmarks (LEVIR-CD, CDD-CD, SYSU-CD, and WHU-CD) demonstrate superior localization accuracy and cross-modal semantic adaptability compared with state-of-the-art methods.

- A novel Tri-Branch Synergistic Coupling (TSC) module combines Mamba-based sequence modeling and frequency decomposition to capture fine-grained structural and spectral variations.

- A Vision-Aware Language-Guided Attention (VAL-Att) module leverages CLIP prompts to dynamically align visual and semantic representations, enhancing sensitivity to subtle or ambiguous changes.

Abstract

1. Introduction

- We design a tri-branch encoder that models local details, global frequency information, and structure-preserving features via channel decoupling. By integrating Mamba and wavelet-based enhancement, the module enables effective spatial-frequency collaborative modeling.

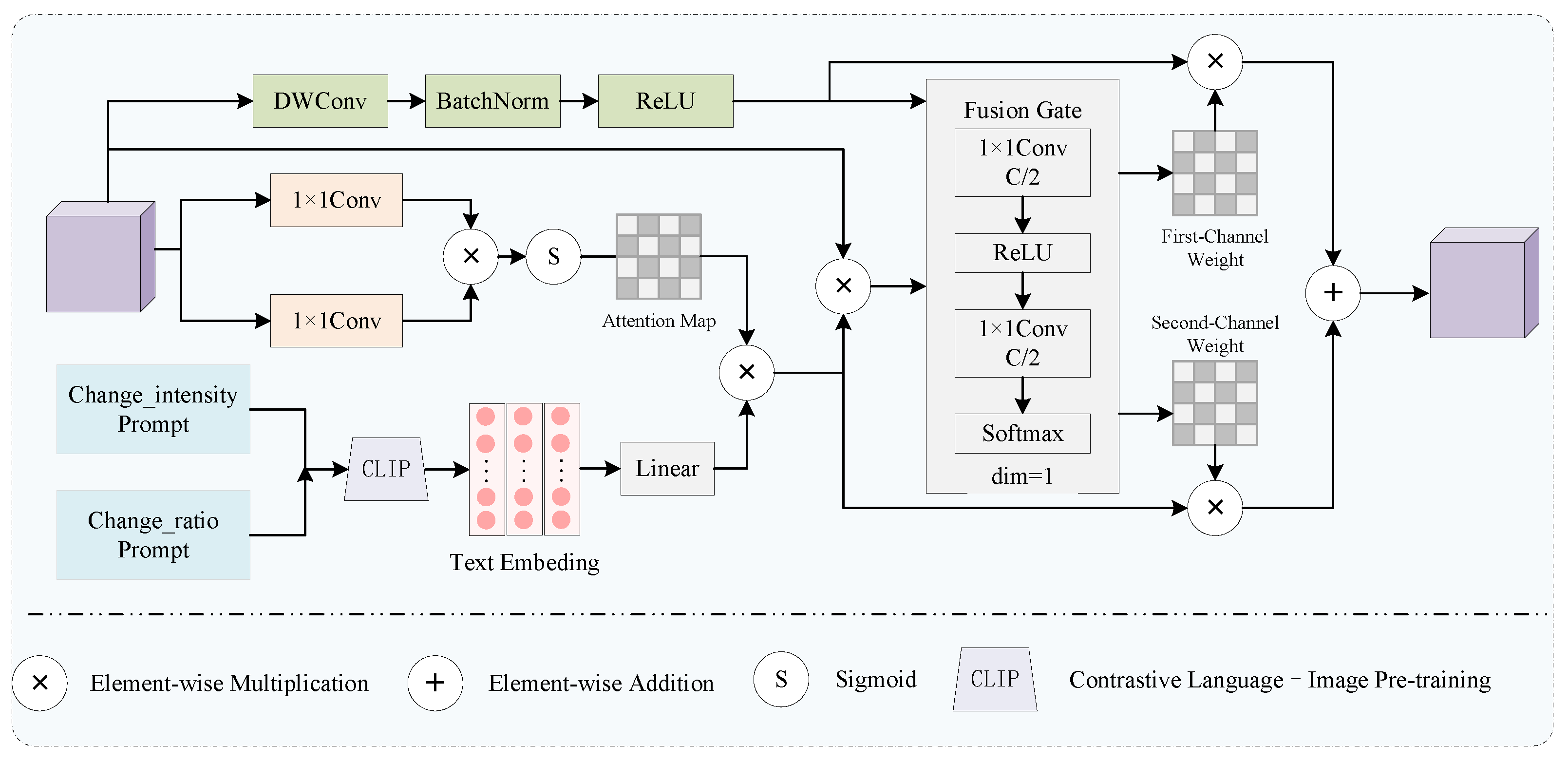

- We propose a language-guided attention fusion module that leverages CLIP-encoded semantic prompts to guide image attention, allowing dynamic modulation of modality contributions and enhancing the model’s responsiveness to ambiguous and non-salient changes.

- We construct a unified framework that integrates channel decoupling, semantic guidance, and frequency-domain enhancement, offering a new paradigm for RSCD with improved discriminative power and semantic awareness.
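
The idea of letting a text embedding steer image attention, as described in the second contribution, can be illustrated with a minimal NumPy sketch. This is not the VAL-Att module itself: the function name `language_guided_attention`, the temperature value, and the residual weighting are assumptions made for illustration, and a plain vector stands in for a CLIP-encoded prompt.

```python
import numpy as np

def language_guided_attention(visual_tokens, text_embedding, temperature=0.07):
    """Reweight visual tokens by their cosine similarity to a text embedding.

    visual_tokens:  (N, D) array, one row per spatial location.
    text_embedding: (D,) array standing in for an encoded prompt (assumption).
    Returns the reweighted tokens (N, D) and the attention weights (N,).
    """
    # L2-normalise both modalities so the dot product is a cosine similarity.
    v = visual_tokens / (np.linalg.norm(visual_tokens, axis=1, keepdims=True) + 1e-8)
    t = text_embedding / (np.linalg.norm(text_embedding) + 1e-8)

    # Softmax over tokens (shifted by the maximum for numerical stability).
    logits = (v @ t) / temperature
    logits -= logits.max()
    weights = np.exp(logits)
    weights /= weights.sum()

    # Residual modulation: tokens aligned with the prompt are amplified.
    attended = visual_tokens * (1.0 + weights[:, None])
    return attended, weights

# Toy example: three 2-D tokens and a prompt aligned with the first axis.
tokens = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
prompt = np.array([1.0, 0.0])
attended, w = language_guided_attention(tokens, prompt)
```

In this toy case the first token, which points in the same direction as the prompt, receives the largest weight, and the orthogonal second token receives the smallest.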

2. Methodology

2.1. Tri-Branch Synergistic Coupling Module

2.2. Vision-Aware Language-Guided Attention

2.3. Loss Function

2.4. Gradient Dynamics in Corner Cases

2.5. Gradient Stability and Loss Convergence

3. Experiments

3.1. Datasets

3.2. Evaluation Metrics

3.3. Implementation Details

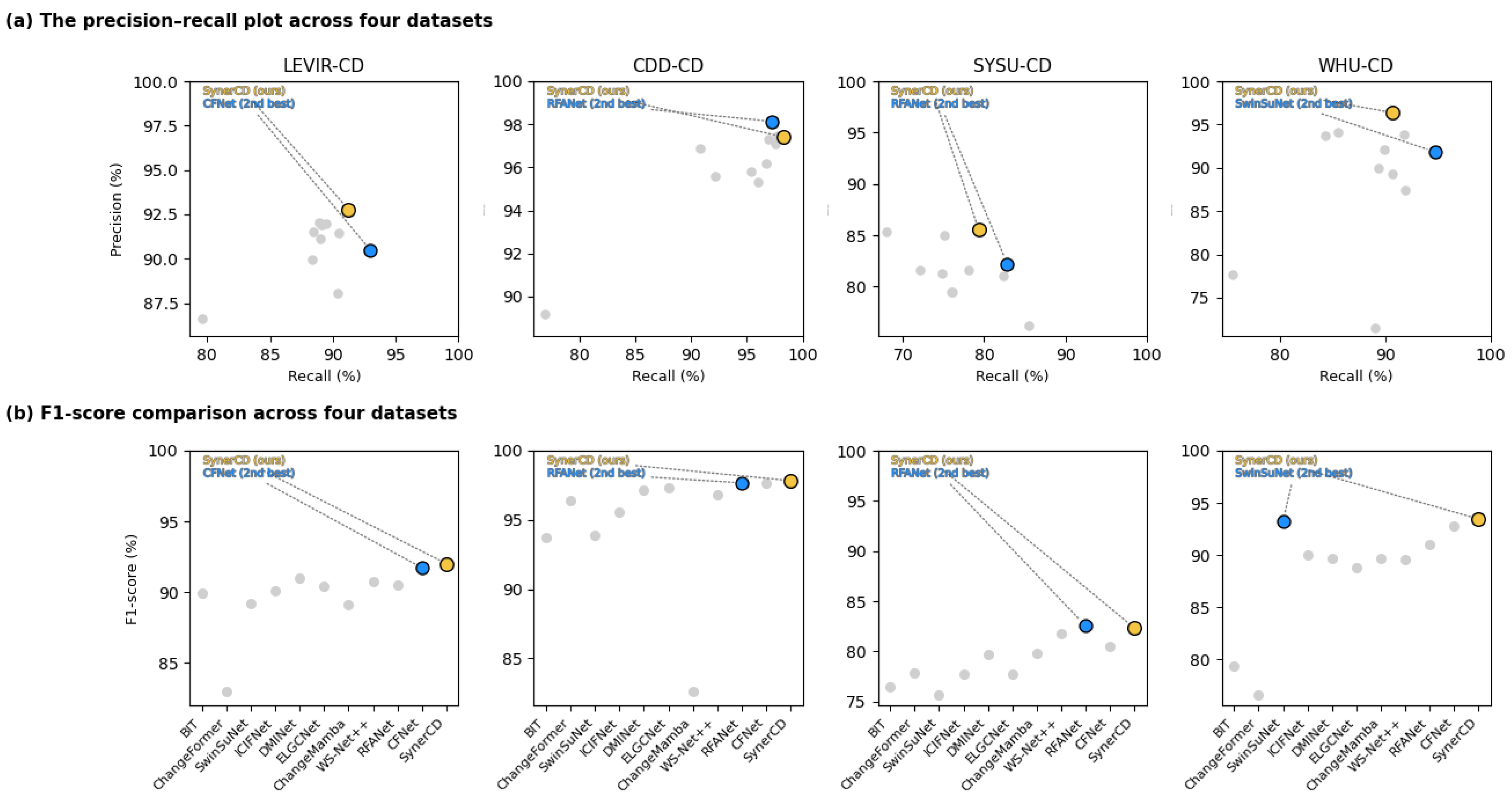

3.4. Comparison with State-of-the-Art Methods

3.4.1. Quantitative Comparison

3.4.2. Complexity Comparison

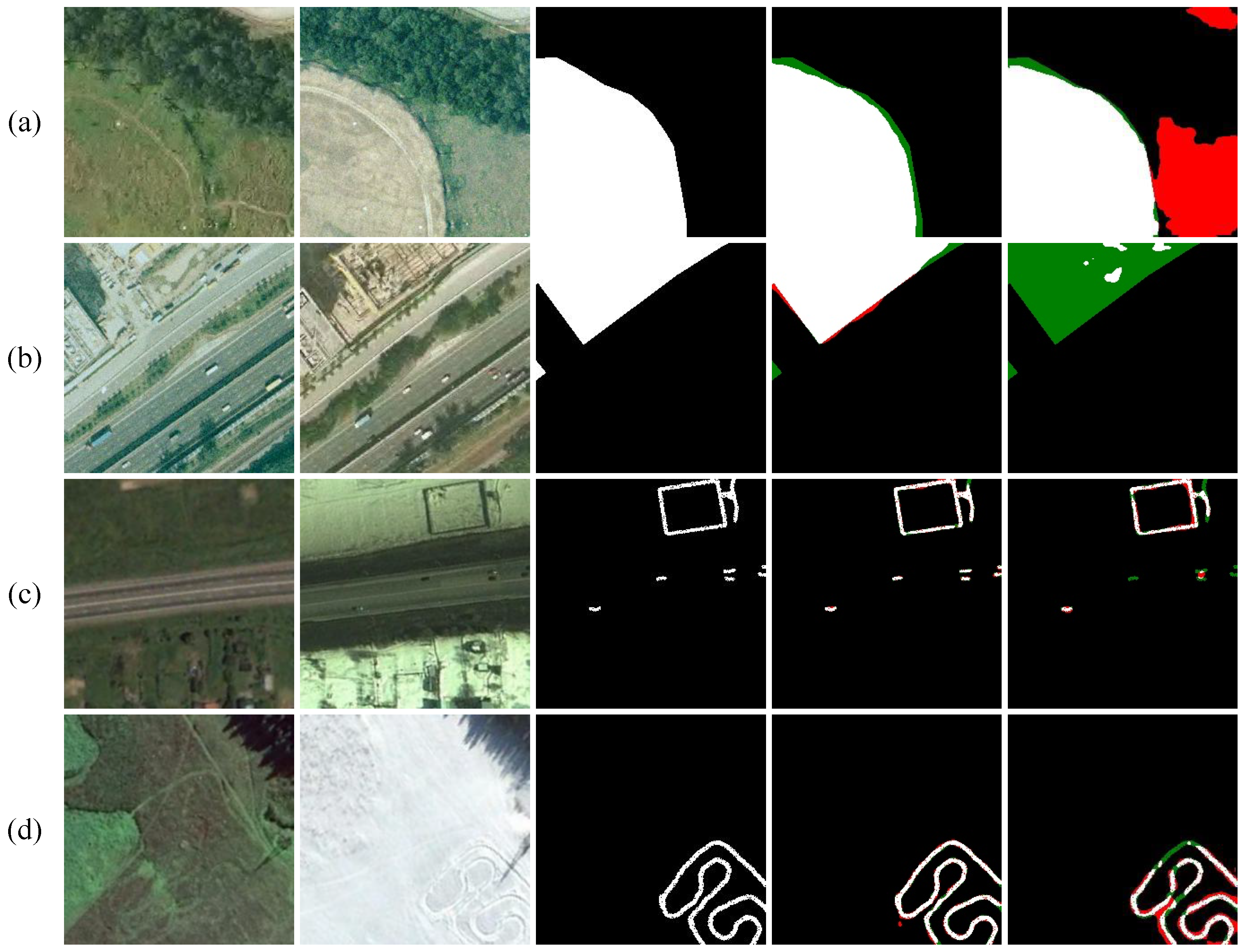

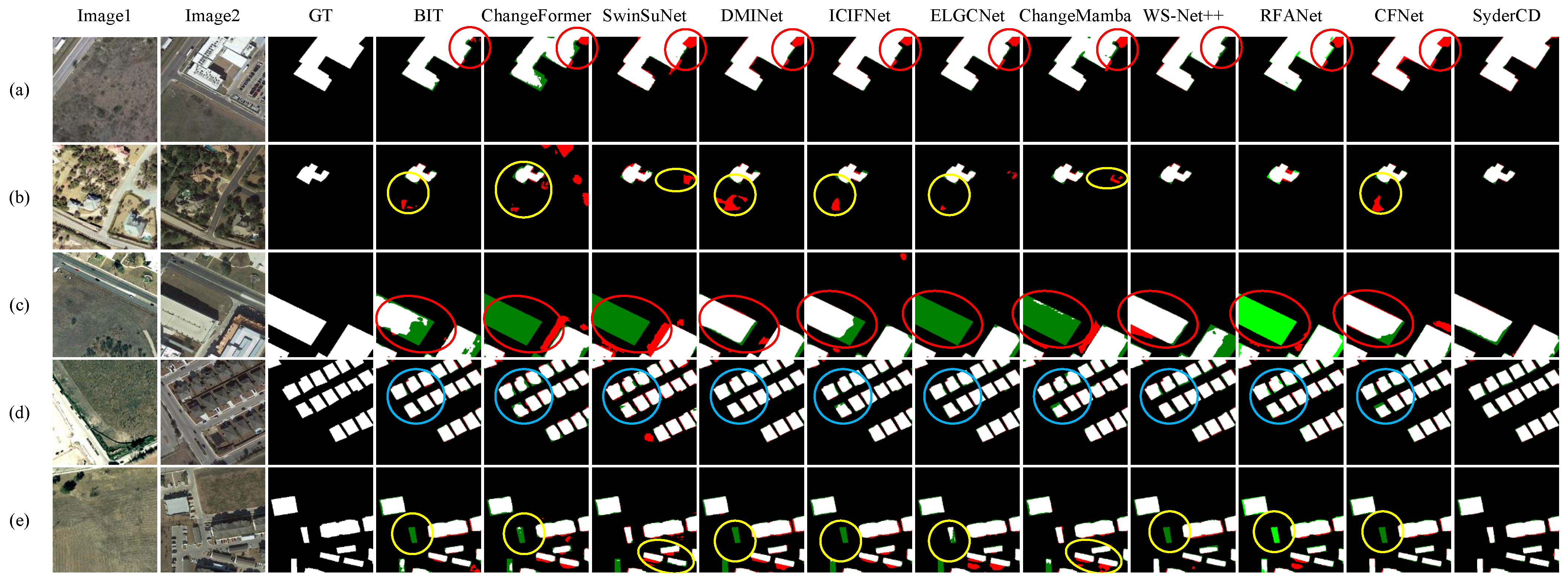

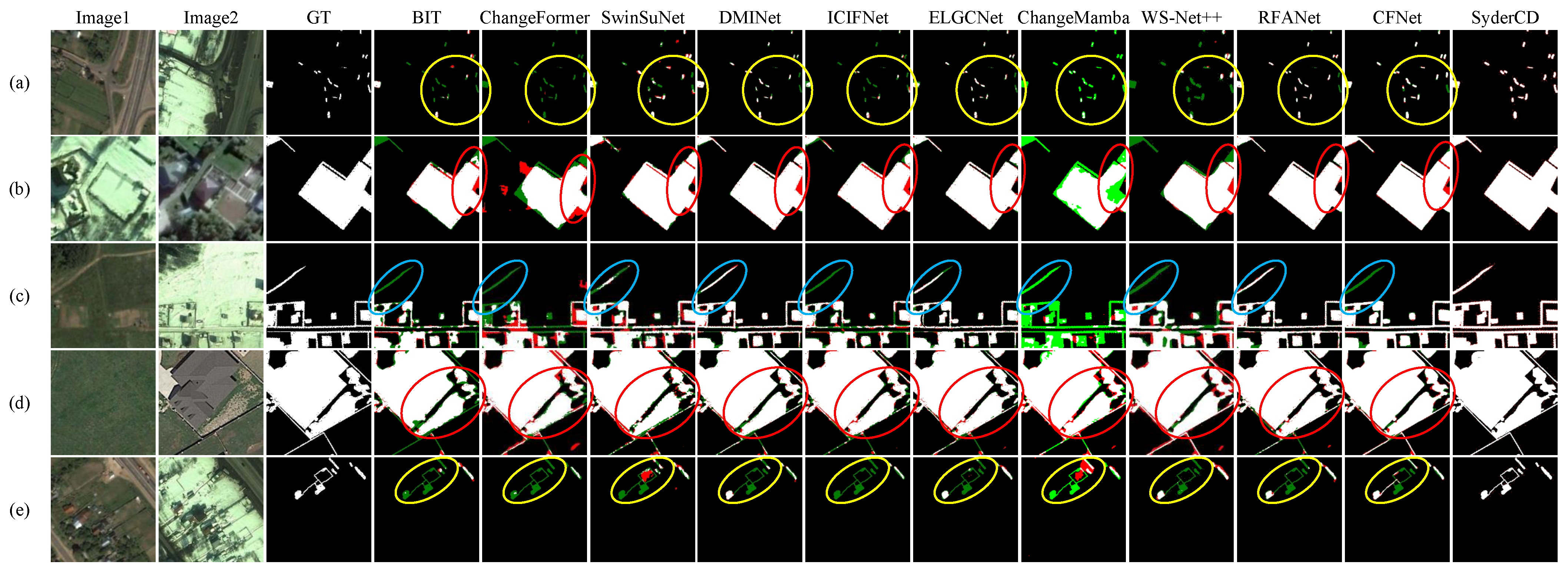

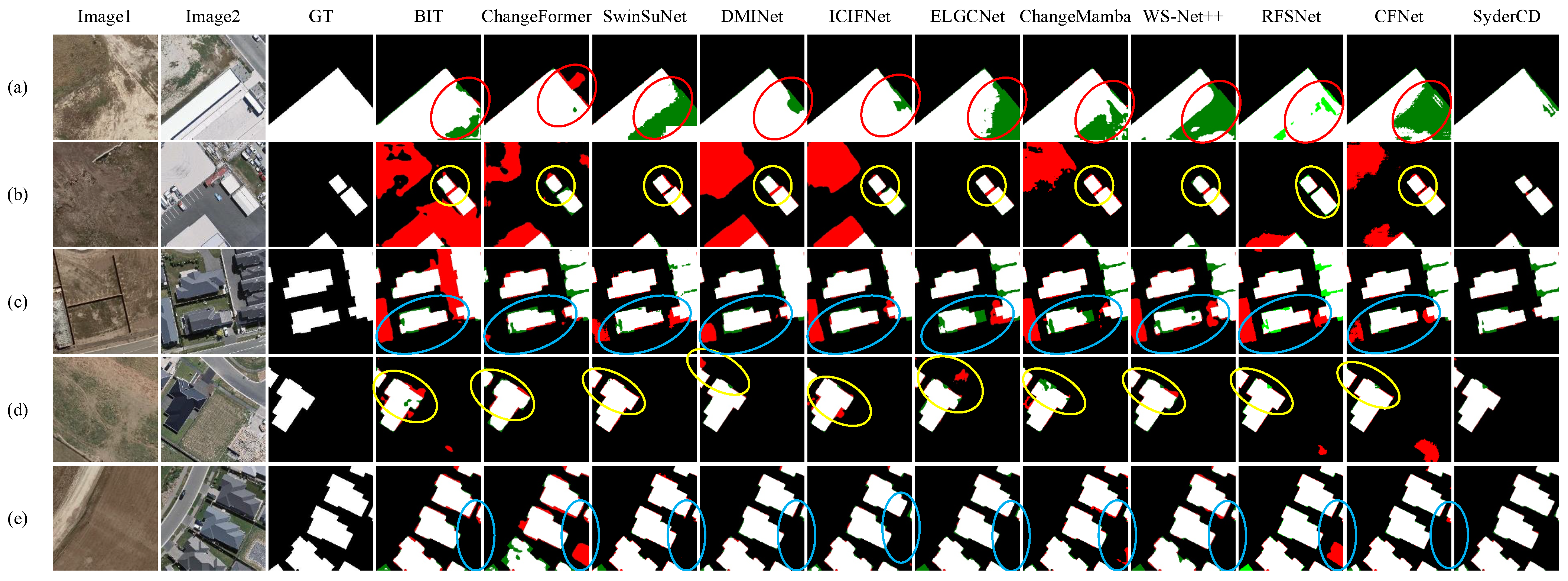

3.4.3. Qualitative Comparison

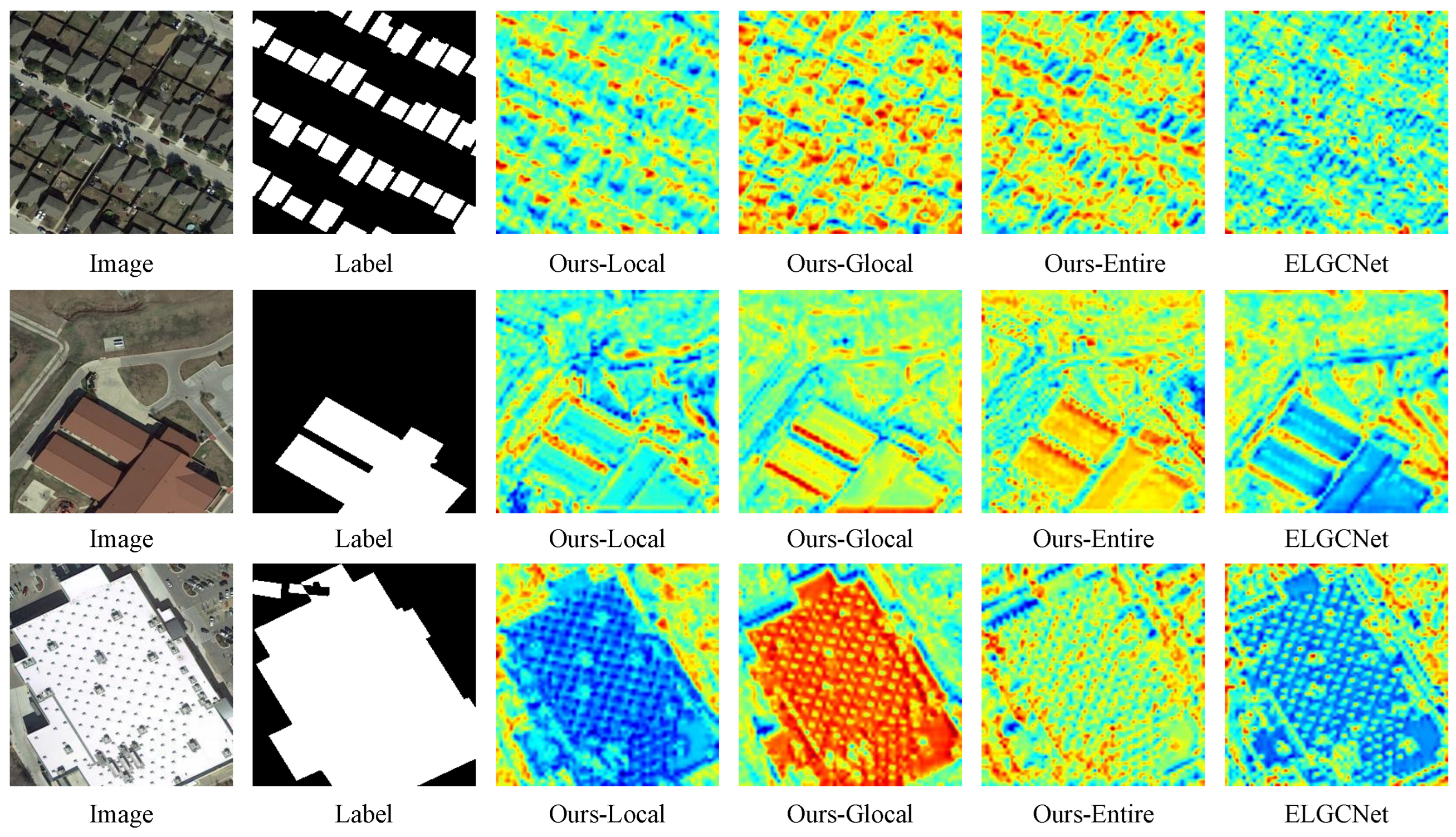

3.5. Ablation Studies

3.5.1. Ablation of Two Modules

3.5.2. Ablation of Encoder Blocks

3.5.3. Ablation of Different Frequency Transform Methods

3.5.4. Ablation of Different Backbones in the Encoder

3.5.5. Ablation of Different Attention Mechanisms in the Decoder

3.5.6. Ablation of Different Loss Functions in the Decoder

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Miao, J.; Li, S.; Bai, X.; Gan, W.; Wu, J.; Li, X. RS-NormGAN: Enhancing change detection of multi-temporal optical remote sensing images through effective radiometric normalization. ISPRS J. Photogramm. Remote Sens. 2025, 221, 324–346.

2. Daudt, R.C.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067.

3. Zhang, M.; Shi, W. A feature difference convolutional neural network-based change detection method. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7232–7246.

4. Hou, X.; Bai, Y.; Li, Y.; Shang, C.; Shen, Q. High-resolution triplet network with dynamic multiscale feature for change detection on satellite images. ISPRS J. Photogramm. Remote Sens. 2021, 177, 103–115.

5. Li, Z.; Tang, C.; Wang, L.; Zomaya, A.Y. Remote sensing change detection via temporal feature interaction and guided refinement. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5628711.

6. Peng, D.; Bruzzone, L.; Zhang, Y.; Guan, H.; He, P. SCDNET: A novel convolutional network for semantic change detection in high resolution optical remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102465.

7. Chen, J.; Yuan, Z.; Peng, J.; Chen, L.; Huang, H.; Zhu, J.; Liu, Y.; Li, H. DASNet: Dual attentive fully convolutional Siamese networks for change detection in high-resolution satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1194–1206.

8. Shi, Q.; Liu, M.; Li, S.; Liu, X.; Wang, F.; Zhang, L. A deeply supervised attention metric-based network and an open aerial image dataset for remote sensing change detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5604816.

9. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

10. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

11. Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. RS-Mamba for large remote sensing image dense prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633314.

12. Chen, H.; Song, J.; Han, C.; Xia, J.; Yokoya, N. ChangeMamba: Remote sensing change detection with spatio-temporal state space model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4409720.

13. Zhang, H.; Chen, K.; Liu, C.; Chen, H.; Zou, Z.; Shi, Z. CDMamba: Remote sensing image change detection with mamba. arXiv 2024, arXiv:2406.04207.

14. Ma, X.; Yang, J.; Che, R.; Zhang, H.; Zhang, W. DDLNet: Boosting remote sensing change detection with dual-domain learning. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–6.

15. Zhu, Y.; Fan, L.; Li, Q.; Chang, J. Multi-scale discrete cosine transform network for building change detection in very-high-resolution remote sensing images. Remote Sens. 2023, 15, 5243.

16. Xiong, F.; Li, T.; Yang, Y.; Zhou, J.; Lu, J.; Qian, Y. Wavelet Siamese Network with semi-supervised domain adaptation for remote sensing image change detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633613.

17. Dong, Z.; Cheng, D.; Li, J. SpectMamba: Remote sensing change detection network integrating frequency and visual state space model. Expert Syst. Appl. 2025, 287, 127902.

18. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

19. Wang, T.; Bai, T.; Xu, C.; Zhang, E.; Liu, B.; Zhao, X.; Zhang, H. MDS-Net: An Image-Text Enhanced Multimodal Dual-Branch Siamese Network for Remote Sensing Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 12421–12438.

20. Qiu, J.; Liu, W.; Zhang, H.; Li, E.; Zhang, L.; Li, X. A Novel Change Detection Method Based on Visual Language from High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 4554–4567.

21. Dong, S.; Wang, L.; Du, B.; Meng, X. ChangeCLIP: Remote sensing change detection with multimodal vision-language representation learning. ISPRS J. Photogramm. Remote Sens. 2024, 208, 53–69.

22. Zheng, W.; Yang, J.; Chen, J.; He, J.; Li, P.; Sun, D.; Chen, C.; Meng, X. Cross-Temporal Knowledge Injection with Color Distribution Normalization for Remote Sensing Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 6249–6265.

23. Jiang, Z.; Wang, B.; Xu, X.; Zhang, Y.; Zhang, P.; Wu, Y.; Yang, H. Feature Enhancement and Feedback Network for Change Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2025, 22, 2500905.

24. Liu, G.; Yuan, Y.; Zhang, Y.; Dong, Y.; Li, X. Style transformation-based spatial–spectral feature learning for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2020, 60, 5401515.

25. Lin, H.; Zhao, C.; He, R.; Zhu, M.; Jiang, X.; Qin, Y.; Gao, W. CGA-Net: A CNN-GAT Aggregation Network based on Metric for Change Detection in Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 8360–8376.

26. Hoxha, G.; Chouaf, S.; Melgani, F.; Smara, Y. Change captioning: A new paradigm for multitemporal remote sensing image analysis. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5627414.

27. Yin, K.; Liu, F.; Liu, J.; Xiao, L. Vision-Language Joint Learning for Box-Supervised Change Detection in Remote Sensing. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 10254–10258.

28. Guo, Z.; Chen, H.; He, F. MSFNet: Multi-scale Spatial-frequency Feature Fusion Network for Remote Sensing Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 1912–1925.

29. Zhang, X.; Chen, H.; Zhao, Y.; He, M.; Han, X. Change detection of buildings in remote sensing images using a spatially and contextually aware Siamese network. Expert Syst. Appl. 2025, 276, 127110.

30. Feng, Y.; Zhuo, L.; Zhang, H.; Li, J. Hybrid-MambaCD: Hybrid Mamba-CNN Network for Remote Sensing Image Change Detection With Region-Channel Attention Mechanism and Iterative Global-Local Feature Fusion. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5907912.

31. Li, Z.; Zhang, Z.; Li, M.; Zhang, L.; Peng, X.; He, R.; Shi, L. Dual Fine-Grained network with frequency Transformer for change detection on remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2025, 136, 104393.

32. Wang, Q.; Zhang, M.; Ren, J.; Li, Q. Exploring Context Alignment and Structure Perception for Building Change Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5609910.

33. Zhang, K.; Zhao, X.; Zhang, F.; Ding, L.; Sun, J.; Bruzzone, L. Relation Changes Matter: Cross-Temporal Difference Transformer for Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5611615.

34. Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

35. Lebedev, M.; Vizilter, Y.V.; Vygolov, O.; Knyaz, V.A.; Rubis, A.Y. Change detection in remote sensing images using conditional adversarial networks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 565–571.

36. Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

37. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

38. Chinchor, N. MUC-4 evaluation metrics. In Proceedings of the 4th Message Understanding Conference (MUC-4), McLean, VA, USA, 16–18 June 1992; Association for Computational Linguistics: Stroudsburg, PA, USA, 1992; pp. 22–29.

39. Van Rijsbergen, C. Information retrieval: Theory and practice. In Proceedings of the Joint IBM/University of Newcastle upon Tyne Seminar on Data Base Systems, Newcastle upon Tyne, UK, 4–7 September 1979; Butterworth-Heinemann: Oxford, UK, 1979; pp. 1–14.

40. Jaccard, P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull. Société Vaudoise Sci. Nat. 1901, 37, 547–579.

41. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

42. Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

43. Bandara, W.G.C.; Patel, V.M. A transformer-based siamese network for change detection. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 207–210.

44. Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure transformer network for remote sensing image change detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5224713.

45. Feng, Y.; Xu, H.; Jiang, J.; Liu, H.; Zheng, J. ICIF-Net: Intra-Scale Cross-Interaction and Inter-Scale Feature Fusion Network for Bitemporal Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4410213.

46. Feng, Y.; Jiang, J.; Xu, H.; Zheng, J. Change Detection on Remote Sensing Images Using Dual-Branch Multilevel Intertemporal Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4401015.

47. Noman, M.; Fiaz, M.; Cholakkal, H.; Khan, S.; Khan, F.S. ELGC-Net: Efficient Local-Global Context Aggregation for Remote Sensing Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4701611.

48. You, Z.H.; Chen, S.B.; Wang, J.X.; Luo, B. Robust feature aggregation network for lightweight and effective remote sensing image change detection. ISPRS J. Photogramm. Remote Sens. 2024, 215, 31–43.

49. Wu, F.; Dong, S.; Meng, X. CFNet: Optimizing Remote Sensing Change Detection Through Content-Aware Enhancement. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 14688–14704.

50. Zhang, H.; Teng, Y.; Li, H.; Wang, Z. STRobustNet: Efficient Change Detection via Spatial-Temporal Robust Representations in Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5612215.

| Range | Subtle | Medium | Severe |
|---|---|---|---|
| | No significant changes (subtle) | No significant changes (medium) | No significant changes (severe) |
| | Slight changes (subtle) | Slight changes (medium) | Slight changes (severe) |
| | Moderate changes (subtle) | Moderate changes (medium) | Moderate changes (severe) |
| | Large-scale changes (subtle) | Large-scale changes (medium) | Large-scale changes (severe) |
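
The twelve prompt strings in the table above form a regular grid of four change extents by three severity levels, so they can be generated rather than typed by hand. The sketch below only reproduces the strings shown in the table. The names `EXTENTS`, `SEVERITIES`, and `build_prompts` are hypothetical, and the rule that maps an image pair to one of these prompts is not covered here.

```python
from itertools import product

# Row and column labels as they appear in the prompt table.
EXTENTS = [
    "No significant changes",
    "Slight changes",
    "Moderate changes",
    "Large-scale changes",
]
SEVERITIES = ["subtle", "medium", "severe"]

def build_prompts():
    """Return the 4 x 3 grid of prompt strings in row-major order."""
    return [f"{extent} ({severity})" for extent, severity in product(EXTENTS, SEVERITIES)]

prompts = build_prompts()
```

Each resulting string, for example `"Slight changes (medium)"`, could then be passed to a text encoder to obtain the semantic embedding.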

| Datasets | Spatial Resolution | Size/Image | Train Samples | Val Samples | Test Samples |
|---|---|---|---|---|---|
| LEVIR-CD [34] | 0.5 m | 256 × 256 | 7120 | 1024 | 2048 |
| CDD-CD [35] | 0.3 m | 256 × 256 | 10,000 | 3000 | 3000 |
| SYSU-CD [8] | 0.5 m | 256 × 256 | 12,000 | 4000 | 4000 |
| WHU-CD [36] | 0.2 m | 256 × 256 | 5895 | 794 | 745 |

| Models | Types | Params (M) | FLOPs (G) | Inference Time (s) | LEVIR-CD Kappa (%) | LEVIR-CD F1 (%) | LEVIR-CD Pre. (%) | LEVIR-CD Rec. (%) | LEVIR-CD IoU (%) | LEVIR-CD OA (%) | CDD-CD Kappa (%) | CDD-CD F1 (%) | CDD-CD Pre. (%) | CDD-CD Rec. (%) | CDD-CD IoU (%) | CDD-CD OA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BIT21 [42] | Transformer | 3.5 | 10.63 | 0.27 | 89.43 | 89.96 | 91.53 | 88.45 | 81.76 | 99.00 | 92.93 | 93.74 | 96.88 | 90.80 | 88.21 | 98.57 |
| ChangeFormer22 [43] | Transformer | 41.03 | 202.79 | 1.61 | 82.11 | 82.98 | 86.65 | 79.61 | 70.91 | 98.34 | 95.96 | 96.44 | 96.18 | 96.70 | 93.12 | 99.16 |
| SwinSuNet22 [44] | Transformer | 28.77 | 9.05 | 0.39 | 88.60 | 89.18 | 88.04 | 90.36 | 80.48 | 98.88 | 92.98 | 93.87 | 95.60 | 92.20 | 88.45 | 98.44 |
| ICIFNet22 [45] | Transformer | 25.41 | 23.84 | 0.08 | 89.52 | 90.05 | 91.12 | 89.01 | 81.90 | 99.00 | 95.00 | 95.58 | 95.80 | 95.37 | 91.54 | 98.96 |
| DMINet23 [46] | CNN | 6.24 | 14.55 | 0.02 | 90.47 | 90.96 | 91.46 | 90.46 | 83.41 | 99.08 | 96.73 | 97.12 | 97.30 | 96.94 | 94.40 | 99.32 |
| ELGCNet24 [47] | Transformer | 10.56 | 187.98 | 1.14 | 89.93 | 90.43 | 92.02 | 88.90 | 82.54 | 99.04 | 96.96 | 97.32 | 97.12 | 97.51 | 94.78 | 99.37 |
| ChangeMamba24 [12] | Mamba | 20.47 | 12.81 | 0.09 | 88.57 | 89.14 | 89.98 | 88.32 | 80.42 | 98.90 | 80.48 | 82.61 | 89.17 | 76.95 | 70.38 | 96.18 |
| WS-Net++24 [16] | CNN | 33.82 | 237.54 | 0.03 | 90.24 | 90.73 | 92.00 | 89.49 | 83.03 | 99.07 | 95.46 | 96.79 | 95.31 | 96.04 | 92.38 | 98.98 |
| RFANet24 [48] | CNN | 2.86 | 3.16 | 0.54 | 89.97 | 90.47 | 91.92 | 89.07 | 82.60 | 99.04 | 97.34 | 97.68 | 98.14 | 97.22 | 95.46 | 99.40 |
| CFNet25 [49] | CNN | 6.98 | 3.84 | 0.10 | 91.28 | 91.72 | 90.49 | 92.98 | 84.71 | 99.17 | 97.29 | 97.63 | 97.49 | 97.76 | 95.36 | 99.41 |
| STRobustNet25 [50] | CNN | 20.47 | 12.81 | 0.09 | 90.22 | 90.71 | 92.15 | 89.32 | 83.01 | 99.07 | 92.52 | 93.40 | 94.25 | 92.56 | 87.61 | 98.46 |
| SynerCD | Transformer + Mamba | 13.71 | 30.52 | 0.15 | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 | 97.55 | 97.84 | 97.41 | 98.28 | 95.78 | 99.49 |
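
The six accuracy columns in this table are standard binary-classification metrics. As a reading aid, the sketch below computes them from pixel counts using their usual definitions, then checks that the SynerCD row on LEVIR-CD is internally consistent. The function names and the toy confusion counts are illustrative assumptions, not values from the paper.

```python
def change_metrics(tp, fp, fn, tn):
    """Standard binary change-detection metrics from confusion counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    oa = (tp + tn) / total
    # Chance agreement for Cohen's kappa, from the row/column marginals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (oa - pe) / (1 - pe)
    return {"precision": precision, "recall": recall, "f1": f1,
            "iou": iou, "oa": oa, "kappa": kappa}

def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)

def iou_from_f1(f1):
    """IoU implied by F1 for a single class (inputs and output in percent)."""
    return 100 * f1 / (200 - f1)

# Toy confusion counts (hypothetical, for illustration only).
m = change_metrics(tp=80, fp=10, fn=20, tn=890)

# Consistency check on the SynerCD / LEVIR-CD row: Pre. 92.78, Rec. 91.25.
f1 = f1_from_pr(92.78, 91.25)   # about 92.01, matching the F1 column
iou = iou_from_f1(f1)           # about 85.20, matching the IoU column
```

Because F1 and IoU are both functions of the same three counts, any row whose reported values break these identities likely contains a transcription error.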

| Model | Types | SYSU-CD Kappa (%) | SYSU-CD F1 (%) | SYSU-CD Pre. (%) | SYSU-CD Rec. (%) | SYSU-CD IoU (%) | SYSU-CD OA (%) | WHU-CD Kappa (%) | WHU-CD F1 (%) | WHU-CD Pre. (%) | WHU-CD Rec. (%) | WHU-CD IoU (%) | WHU-CD OA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BIT21 [42] | Transformer | 69.86 | 76.52 | 81.57 | 72.07 | 61.98 | 89.57 | 78.34 | 79.36 | 71.58 | 89.05 | 65.78 | 98.02 |
| ChangeFormer22 [43] | Transformer | 71.43 | 77.88 | 81.30 | 74.74 | 63.78 | 89.99 | 75.54 | 76.57 | 77.63 | 75.54 | 62.04 | 98.03 |
| SwinSuNet22 [44] | Transformer | 69.18 | 75.62 | 85.32 | 67.91 | 60.80 | 89.68 | 92.94 | 93.25 | 91.85 | 94.69 | 87.35 | 99.42 |
| ICIFNet22 [45] | Transformer | 71.03 | 77.70 | 79.53 | 75.96 | 63.54 | 89.72 | 89.51 | 89.96 | 89.29 | 90.64 | 81.75 | 99.14 |
| DMINet23 [46] | CNN | 74.00 | 79.75 | 84.98 | 75.12 | 66.31 | 91.00 | 89.16 | 89.63 | 87.49 | 91.88 | 81.21 | 99.09 |
| ELGCNet24 [47] | Transformer | 71.00 | 77.69 | 79.43 | 76.03 | 63.52 | 89.70 | 88.27 | 88.75 | 93.70 | 84.29 | 79.77 | 99.09 |
| ChangeMamba24 [12] | Mamba | 73.45 | 79.80 | 81.62 | 78.06 | 66.39 | 90.68 | 89.18 | 89.64 | 89.95 | 89.34 | 81.23 | 99.12 |
| WS-Net++24 [16] | CNN | 76.02 | 81.73 | 81.04 | 82.42 | 69.10 | 91.31 | 89.15 | 89.59 | 94.08 | 85.51 | 81.14 | 99.15 |
| RFANet24 [48] | CNN | 77.12 | 82.54 | 82.24 | 82.83 | 70.27 | 91.73 | 90.60 | 90.99 | 92.16 | 89.86 | 83.47 | 99.24 |
| CFNet25 [49] | CNN | 75.00 | 80.56 | 76.20 | 85.44 | 67.44 | 91.33 | 92.47 | 92.80 | 93.79 | 91.82 | 86.57 | 99.38 |
| STRobustNet25 [50] | CNN | 73.12 | 79.62 | 77.73 | 81.59 | 66.14 | 90.15 | 89.48 | 89.96 | 93.26 | 86.88 | 81.75 | 99.09 |
| SynerCD | Transformer + Mamba | 77.14 | 82.33 | 85.58 | 79.31 | 69.96 | 91.97 | 93.17 | 93.45 | 96.36 | 90.71 | 87.71 | 99.49 |

| Num. | TSC: WEMamba | TSC: LoFiConv | VAL-Att | LEVIR-CD Kappa (%) | LEVIR-CD F1 (%) | LEVIR-CD Recall (%) | LEVIR-CD IoU (%) | CDD-CD Kappa (%) | CDD-CD F1 (%) | CDD-CD Recall (%) | CDD-CD IoU (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | 84.79 | 85.57 | 86.07 | 98.52 | 90.96 | 92.01 | 90.66 | 85.21 |
| 2 | ✓ | | | 91.18 | 91.63 | 91.37 | 84.55 | 96.88 | 97.25 | 97.68 | 94.64 |
| 3 | | ✓ | | 91.07 | 91.52 | 90.70 | 84.55 | 96.88 | 97.25 | 97.68 | 94.64 |
| 4 | ✓ | ✓ | | 91.42 | 91.86 | 91.42 | 84.94 | 97.24 | 97.57 | 98.07 | 95.25 |
| 5 | | | ✓ | 91.35 | 91.79 | 91.00 | 84.82 | 97.42 | 97.72 | 98.10 | 95.55 |
| 6 | ✓ | | ✓ | 91.47 | 91.90 | 91.07 | 85.01 | 97.48 | 97.77 | 98.20 | 95.65 |
| 7 | | ✓ | ✓ | 91.39 | 91.83 | 90.98 | 84.89 | 97.51 | 97.78 | 98.17 | 95.70 |
| 8 | ✓ | ✓ | ✓ | 91.58 | 92.01 | 92.78 | 85.20 | 97.55 | 97.84 | 98.28 | 95.78 |

| Models | Kappa (%) | F1 (%) | Recall (%) | Precision (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|---|
| 3333 | 91.20 | 91.65 | 92.46 | 98.85 | 84.58 | 99.16 |
| 3343 | 91.32 | 91.76 | 92.33 | 91.19 | 84.77 | 99.17 |
| 3353 | 91.34 | 91.78 | 92.56 | 91.02 | 84.81 | 99.17 |
| 3363 | 91.40 | 91.84 | 92.55 | 91.13 | 84.91 | 99.18 |
| 4434 | 91.24 | 91.68 | 92.60 | 90.78 | 84.64 | 99.16 |
| 4444 | 91.41 | 91.84 | 92.56 | 91.13 | 84.91 | 99.18 |
| 4454 | 91.48 | 91.91 | 92.50 | 91.32 | 85.03 | 99.16 |
| 4464 | 91.50 | 91.93 | 92.67 | 91.20 | 85.06 | 99.18 |
| 4474 | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 |

| Method | LEVIR-CD Kappa (%) | LEVIR-CD F1 (%) | LEVIR-CD Pre. (%) | LEVIR-CD Rec. (%) | LEVIR-CD IoU (%) | LEVIR-CD OA (%) | CDD-CD Kappa (%) | CDD-CD F1 (%) | CDD-CD Pre. (%) | CDD-CD Rec. (%) | CDD-CD IoU (%) | CDD-CD OA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DCT | 91.45 | 91.89 | 92.81 | 90.98 | 84.99 | 99.18 | 97.51 | 97.81 | 97.43 | 98.18 | 95.71 | 99.48 |
| FFT | 91.47 | 91.91 | 92.66 | 91.17 | 85.02 | 99.18 | 97.48 | 97.78 | 97.38 | 98.18 | 95.65 | 99.47 |
| DWT | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 | 97.55 | 97.84 | 97.41 | 98.28 | 95.78 | 99.49 |
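
The DWT row refers to a discrete wavelet transform, which splits a feature map into one low-frequency approximation and three high-frequency detail sub-bands. A single-level 2-D Haar transform is the simplest instance and can be sketched in NumPy as follows. The wavelet family and number of levels used by SynerCD are not stated here, so Haar and the function names `haar_dwt2` and `haar_idwt2` are assumptions for illustration.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level orthonormal 2-D Haar transform (even height and width)."""
    a = x[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2.0  # approximation (low frequency)
    lh = (a + b - c - d) / 2.0  # top-minus-bottom difference
    hl = (a - b + c - d) / 2.0  # left-minus-right difference
    hh = (a - b - c + d) / 2.0  # diagonal difference
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuild the full-resolution array."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=ll.dtype)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

image = np.arange(16.0).reshape(4, 4)
ll, lh, hl, hh = haar_dwt2(image)
restored = haar_idwt2(ll, lh, hl, hh)
```

The transform is lossless and energy-preserving, so the detail sub-bands can be enhanced or filtered separately before the inverse transform restores the original resolution.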

| Model | Kappa (%) | F1 (%) | Recall (%) | Precision (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|---|
| ResNet18 | 90.81 | 91.27 | 91.64 | 90.91 | 83.95 | 99.11 |
| ResNet50 | 90.98 | 91.43 | 93.17 | 89.75 | 84.21 | 99.14 |
| PVT | 91.42 | 91.85 | 92.41 | 91.30 | 84.93 | 99.18 |
| SwinTransformer | 91.32 | 91.76 | 92.85 | 90.69 | 84.77 | 99.17 |
| ELGCA | 91.26 | 91.70 | 92.81 | 90.62 | 84.67 | 99.16 |
| SynerCD | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 |

| Model | Kappa (%) | F1 (%) | Recall (%) | Precision (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|---|
| SA | 90.89 | 91.34 | 91.73 | 90.97 | 84.07 | 99.12 |
| MHSA | 91.03 | 91.48 | 92.02 | 90.96 | 84.31 | 99.14 |
| CBAM | 91.43 | 91.86 | 92.61 | 91.13 | 84.95 | 99.18 |
| ShuffleAtt | 91.49 | 91.92 | 92.50 | 91.35 | 85.05 | 99.18 |
| SkAtt | 91.40 | 91.83 | 92.90 | 90.79 | 84.90 | 99.18 |
| SynerCD | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 |

| Model | Kappa (%) | F1 (%) | Recall (%) | Precision (%) | IoU (%) | OA (%) |
|---|---|---|---|---|---|---|
| CE Loss | 90.94 | 91.40 | 91.86 | 90.94 | 84.16 | 99.13 |
| Dice Loss | 91.32 | 91.76 | 92.49 | 91.04 | 84.77 | 99.17 |
| CE+Focal | 91.37 | 91.80 | 92.70 | 90.92 | 84.97 | 99.17 |
| CE+BCL | 91.44 | 91.87 | 92.85 | 90.92 | 84.97 | 99.18 |
| SynerCD | 91.58 | 92.01 | 92.78 | 91.25 | 85.20 | 99.19 |
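
For readers less familiar with the baseline rows, the sketch below gives the usual textbook forms of binary cross-entropy, Dice, and focal loss on per-pixel change probabilities. It does not reproduce the loss used by SynerCD, whose composition is not given in this table, and the batch-balanced contrastive loss (BCL) is omitted. The unweighted sum used for the combined term and the default `gamma=2.0` are assumptions.

```python
import numpy as np

def bce_loss(prob, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities and 0/1 labels."""
    prob = np.clip(prob, eps, 1 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def dice_loss(prob, target, eps=1e-7):
    """1 - Dice coefficient; depends on overlap rather than per-pixel error."""
    intersection = np.sum(prob * target)
    return float(1 - (2 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps))

def focal_loss(prob, target, gamma=2.0, eps=1e-7):
    """Cross-entropy down-weighted by (1 - p_t)^gamma for easy pixels."""
    prob = np.clip(prob, eps, 1 - eps)
    p_t = np.where(target == 1, prob, 1 - prob)  # probability of the true class
    return float(-np.mean((1 - p_t) ** gamma * np.log(p_t)))

# Four pixels: two changed (label 1) and two unchanged (label 0).
p = np.array([0.9, 0.1, 0.8, 0.2])
y = np.array([1.0, 0.0, 1.0, 0.0])

ce = bce_loss(p, y)
dice = dice_loss(p, y)
ce_plus_focal = ce + focal_loss(p, y)  # unweighted sum (assumption)
```

Dice and focal terms are commonly paired with cross-entropy in change detection because changed pixels are usually a small minority of each image.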

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tong, Y.; Zheng, P.; Tang, W.; Cheng, S.; Wang, L. SynerCD: Synergistic Tri-Branch and Vision-Language Coupling for Remote Sensing Change Detection. Remote Sens. 2025, 17, 3694. https://doi.org/10.3390/rs17223694
