Polarization Compensation and Multi-Branch Fusion Network for UAV Recognition with Radar Micro-Doppler Signatures

Highlights

- A rotor–polarization coupling model and corresponding compensation algorithm are developed to correct time-varying polarization rotation induced by UAV rotor motion, effectively enhancing harmonic visibility and micro-Doppler continuity under low-SNR conditions.

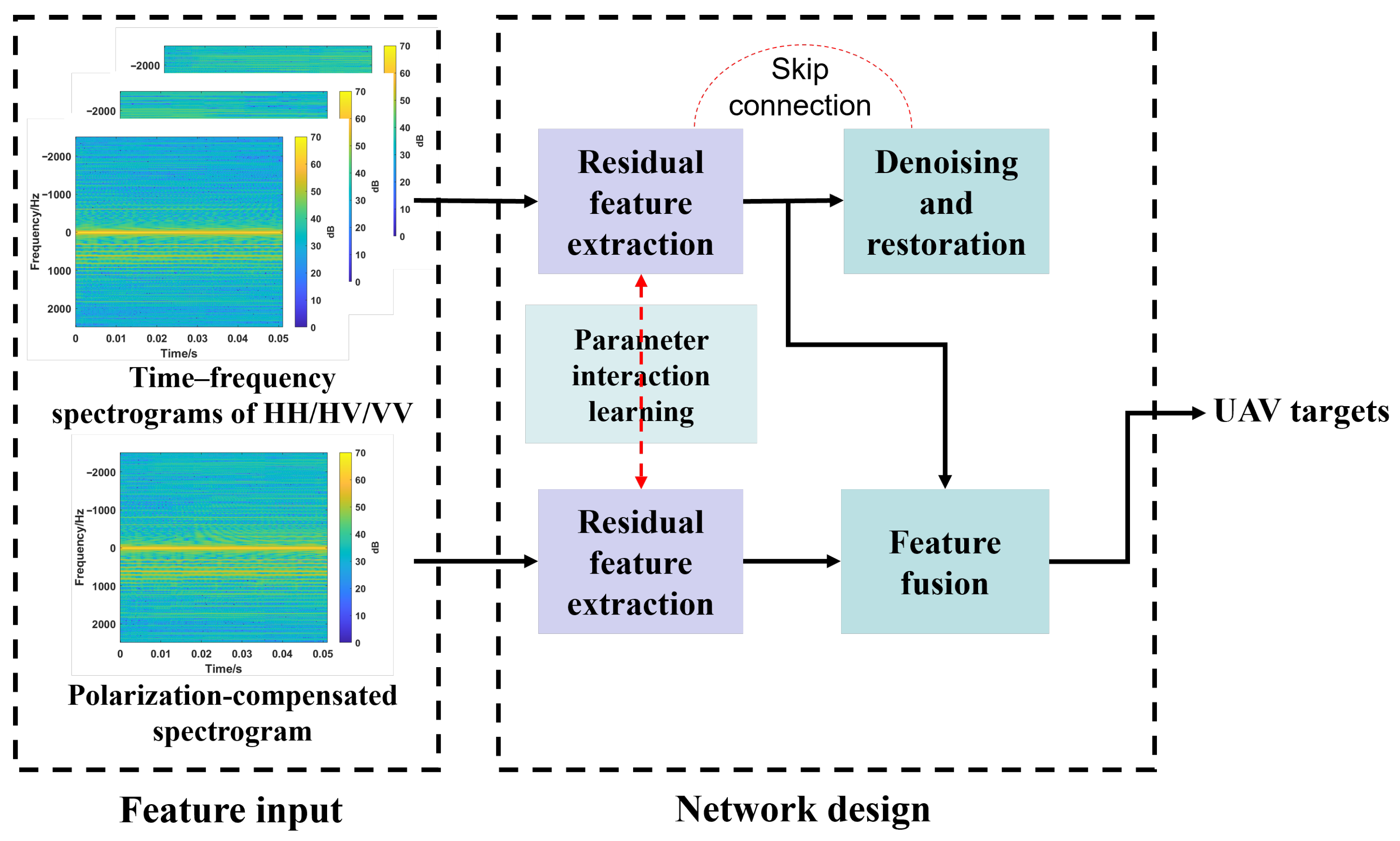

- A Multi-branch Polarization-Aware Fusion Network (MPAF-Net) integrating U-Net-based denoising, polarization-compensated feature extraction, and multi-head attention fusion achieves over 5% improvement in UAV recognition accuracy compared with conventional polarimetric representations.

- The proposed framework significantly improves the stability and discriminability of UAV polarimetric micro-Doppler features, offering a practical solution for cluttered, low-SNR radar environments.

- The results contribute to the advancement of polarization-based recognition techniques for rotor-type UAVs, enhancing radar sensing capability for small aerial vehicles.

Abstract

1. Introduction

2. Materials and Methods

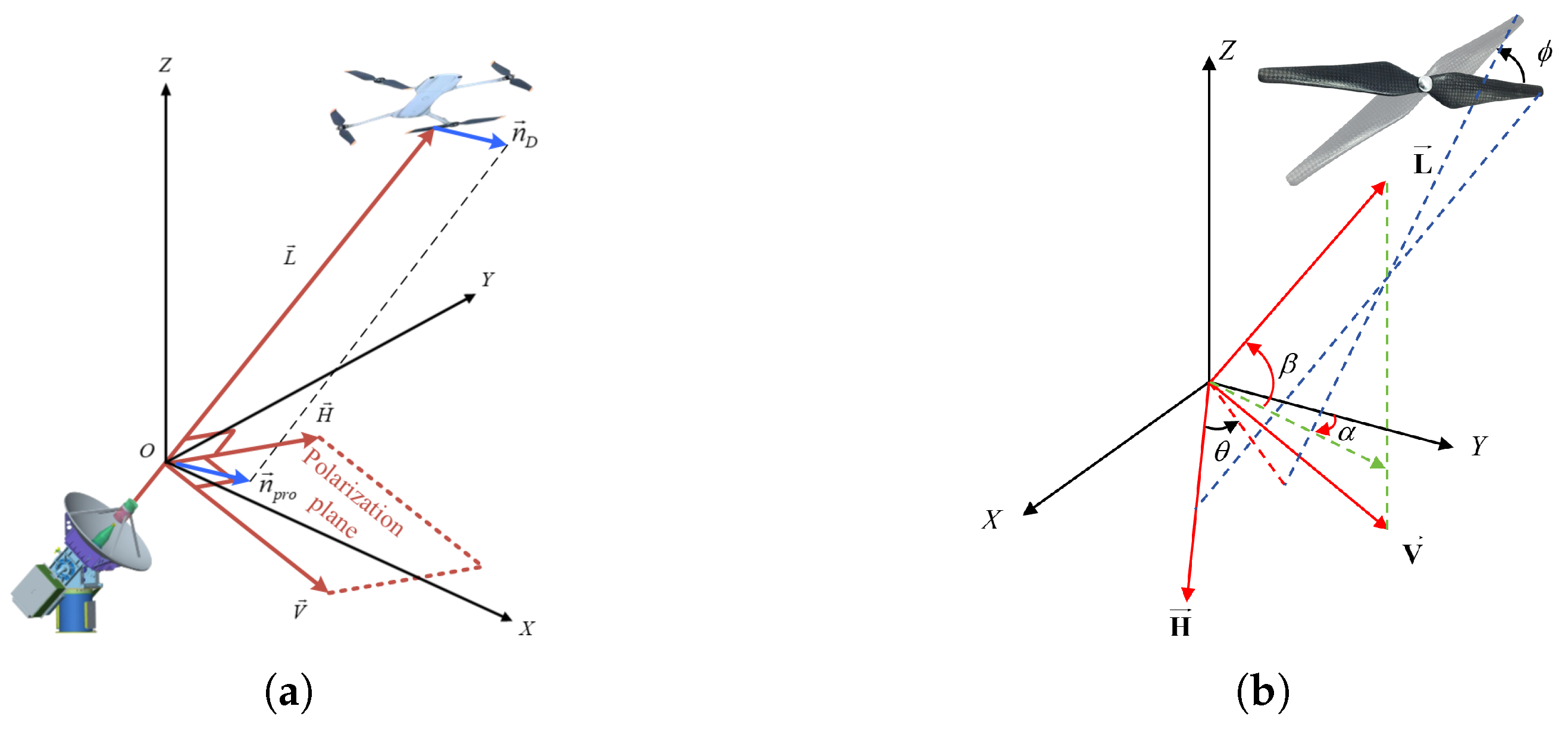

2.1. Modeling of Polarization Rotation Angle

2.2. Estimation of UAV Rotor Rotational Speed

2.3. Compensation of Blade Phase

2.4. CNN-Based UAV Recognition

2.4.1. U-Net-Based Denoising of UAV Polarimetric Time–Frequency Features

2.4.2. Interactive Learning for Polarimetric Deep Feature Extraction

- (1) Partial Weight Sharing

- (2) Feature-Wise FiLM Modulation

- (3) Cross-Branch Consistency Regularization
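As a minimal sketch of item (2), feature-wise linear modulation scales and shifts each channel of a feature map using parameters predicted from a conditioning vector. The function name, the array shapes, and the single linear layers `w_gamma` and `w_beta` are assumptions for illustration only; how the two branches actually exchange conditioning information is not specified here.

```python
import numpy as np

def film_modulate(features, cond, w_gamma, w_beta):
    """Apply FiLM: out[c] = gamma[c] * features[c] + beta[c].

    features: (C, H, W) feature map from one branch
    cond:     (D,) conditioning vector, assumed to come from the other branch
    w_gamma, w_beta: (C, D) hypothetical linear maps predicting gamma and beta
    """
    gamma = w_gamma @ cond  # per-channel scale, shape (C,)
    beta = w_beta @ cond    # per-channel shift, shape (C,)
    # Broadcast the per-channel parameters over the spatial axes.
    return gamma[:, None, None] * features + beta[:, None, None]

# Toy usage with random data (all sizes are arbitrary).
rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 8, 8))
cond = rng.standard_normal(16)
w_g = rng.standard_normal((4, 16))
w_b = rng.standard_normal((4, 16))
out = film_modulate(feats, cond, w_g, w_b)
```

Because the modulation is per channel, it adds only 2C parameters' worth of conditioning per layer, which is one reason it is a lightweight way to let one branch influence another.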

2.4.3. Multi-Head Attention Enhanced Feature Fusion

3. Results and Discussion

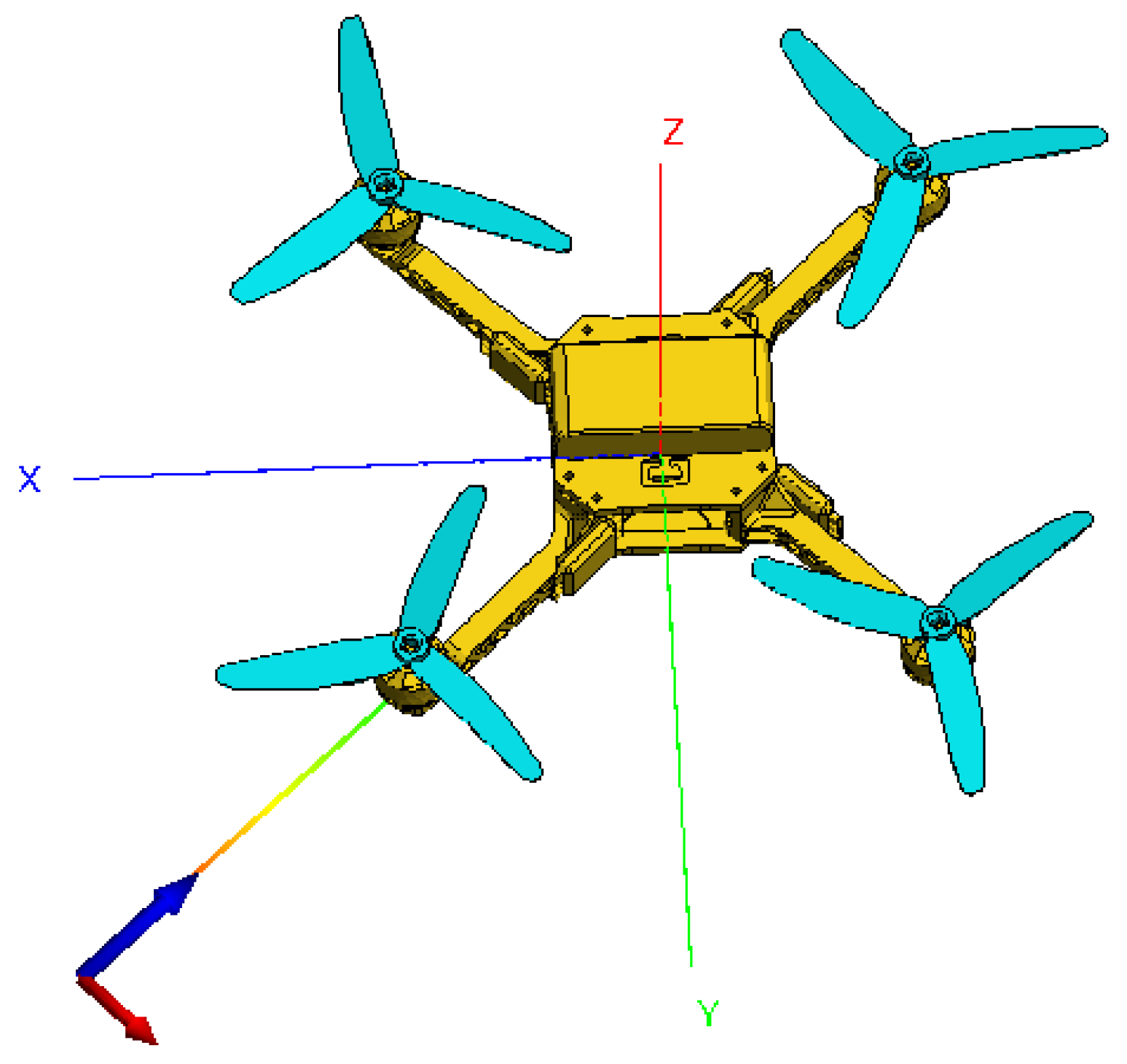

3.1. Experimental Validation via Simulation

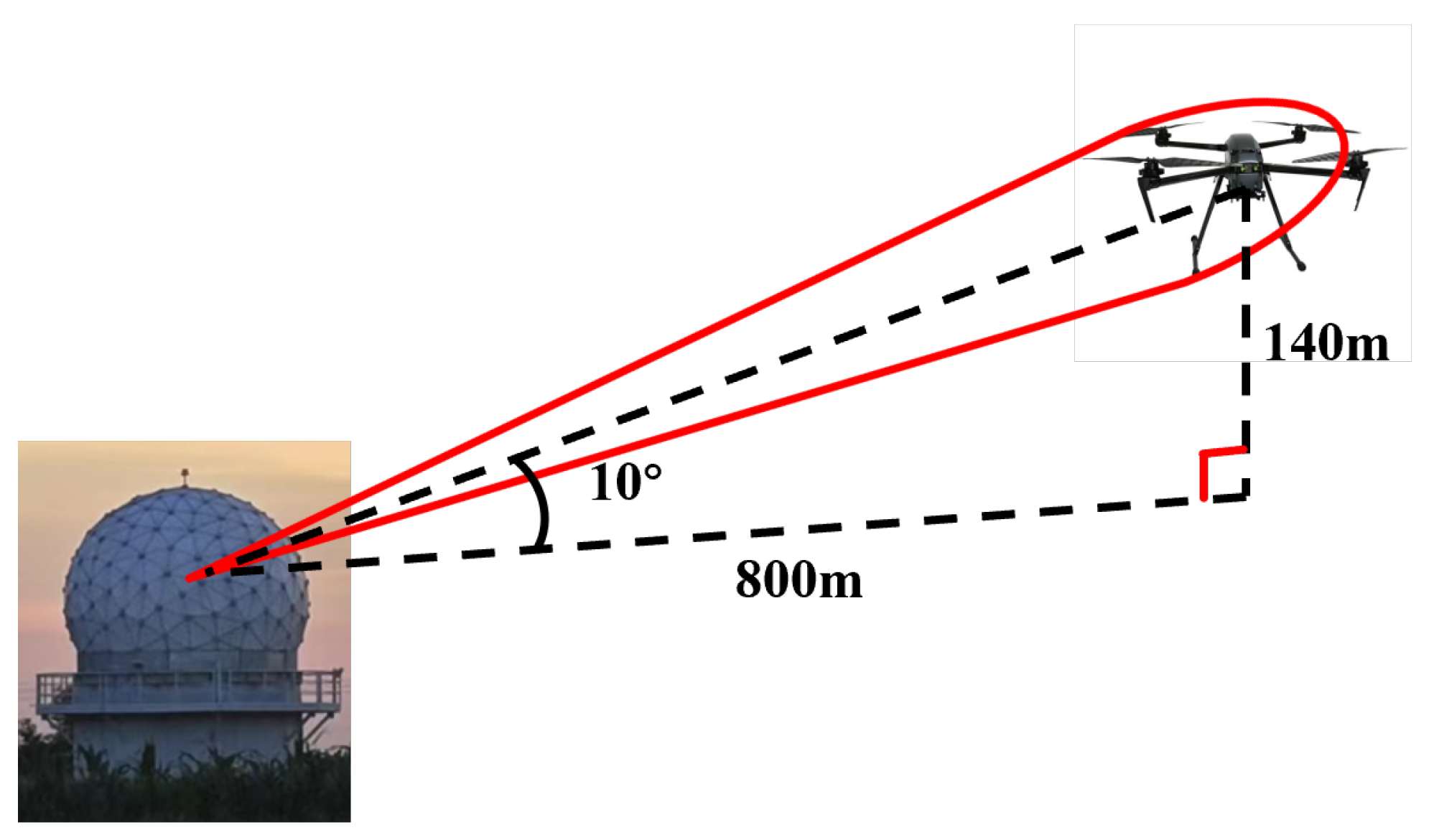

3.2. Measured Data Acquisition

3.3. Experimental Results and Analysis

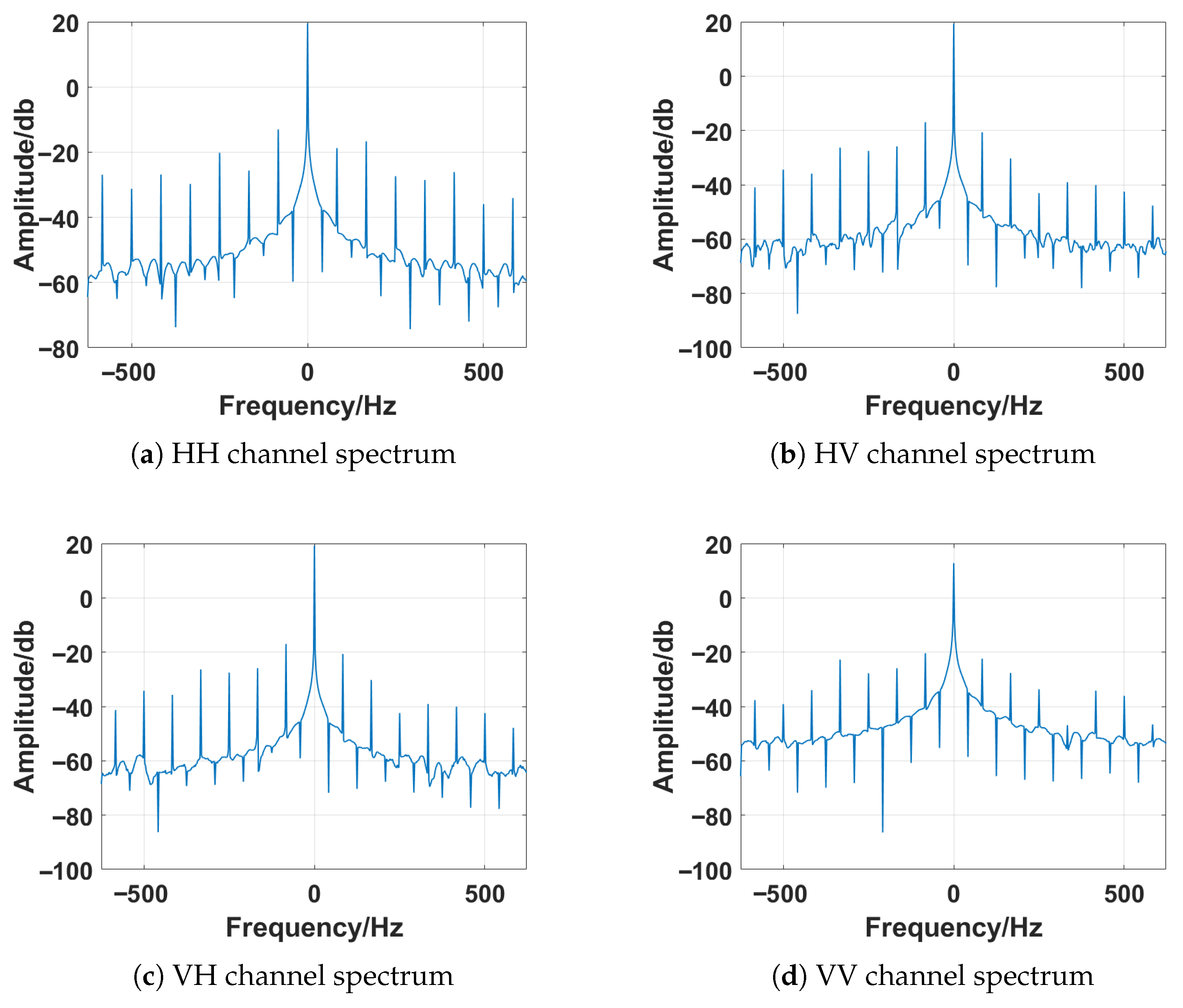

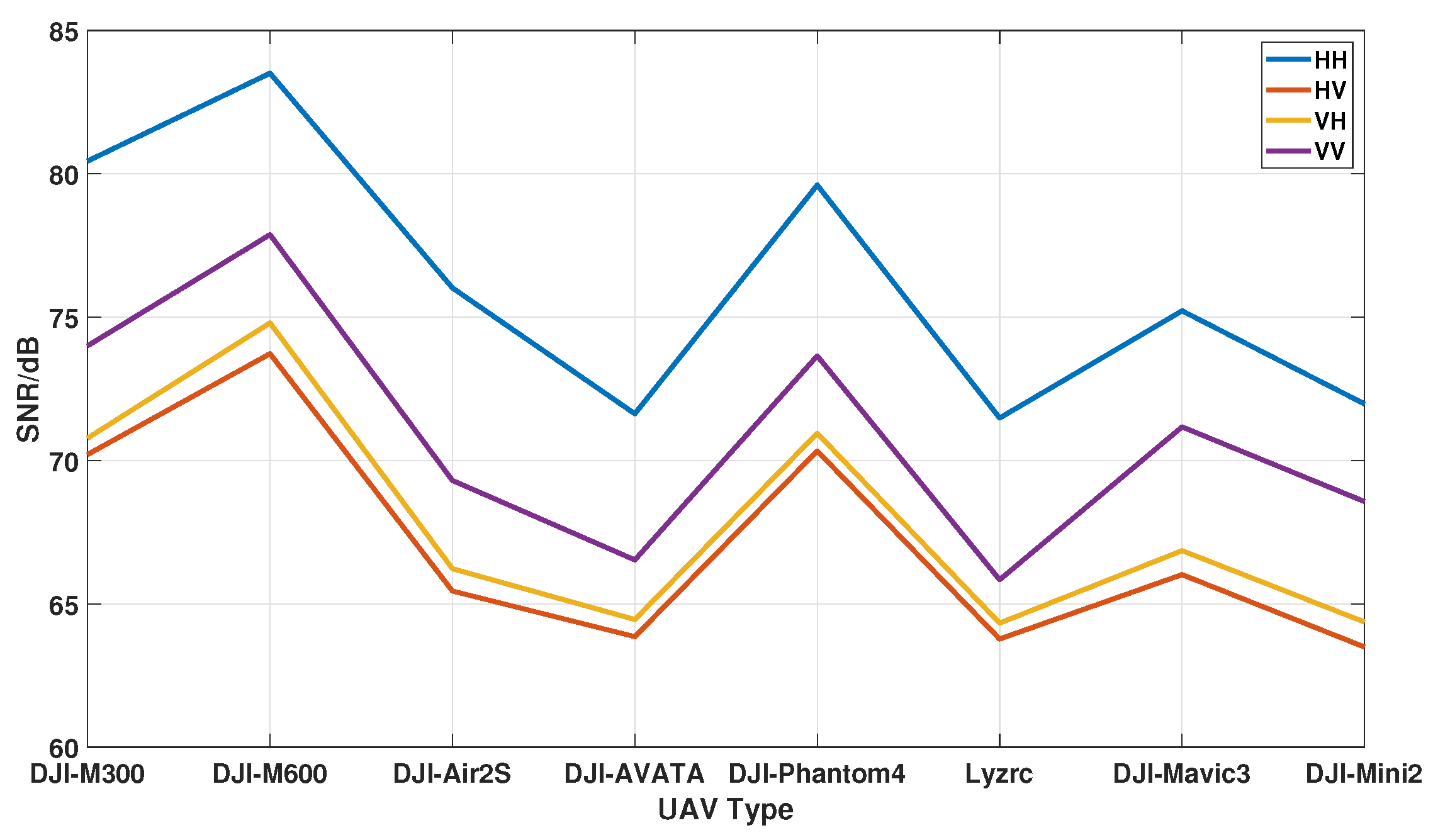

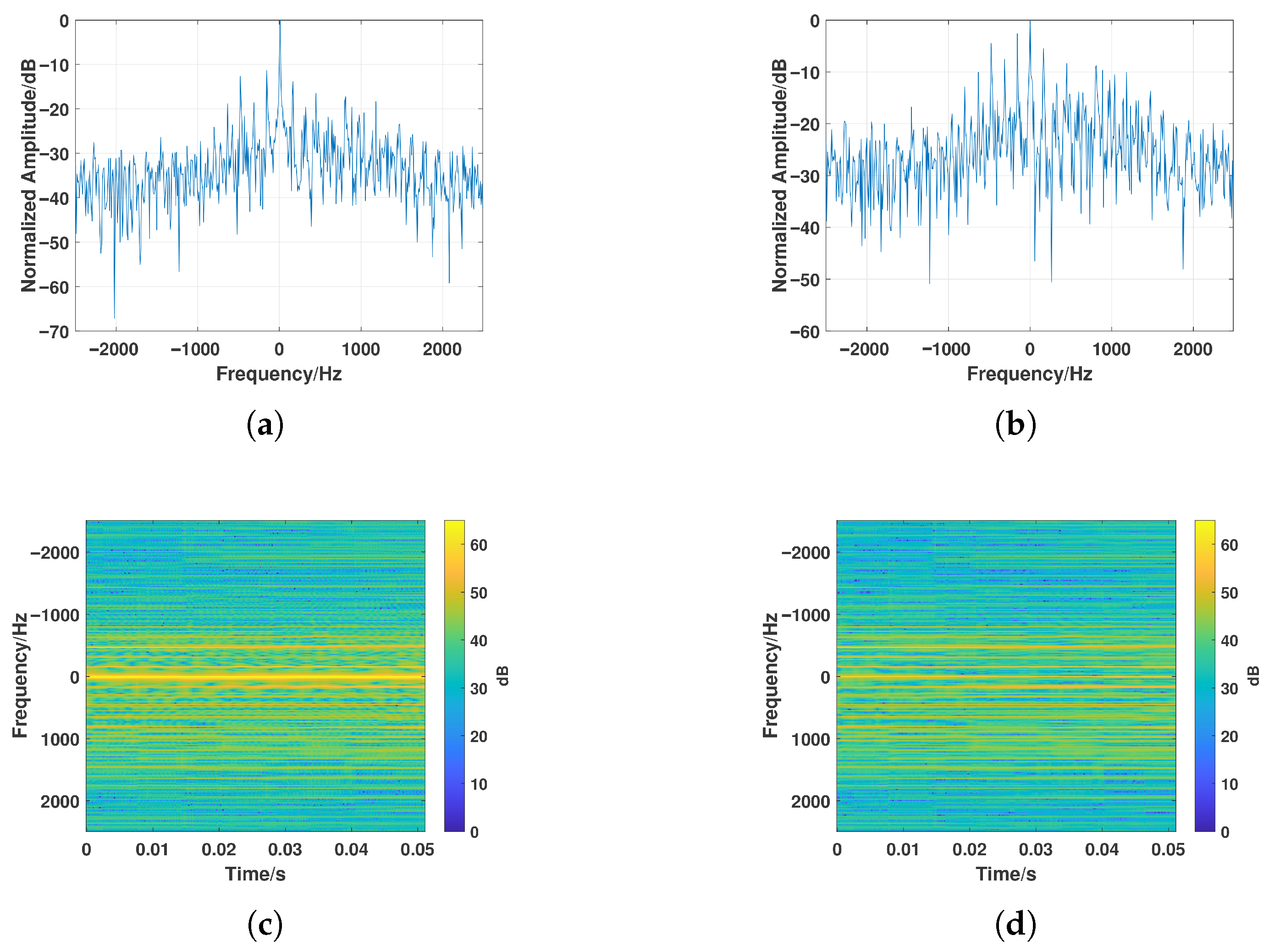

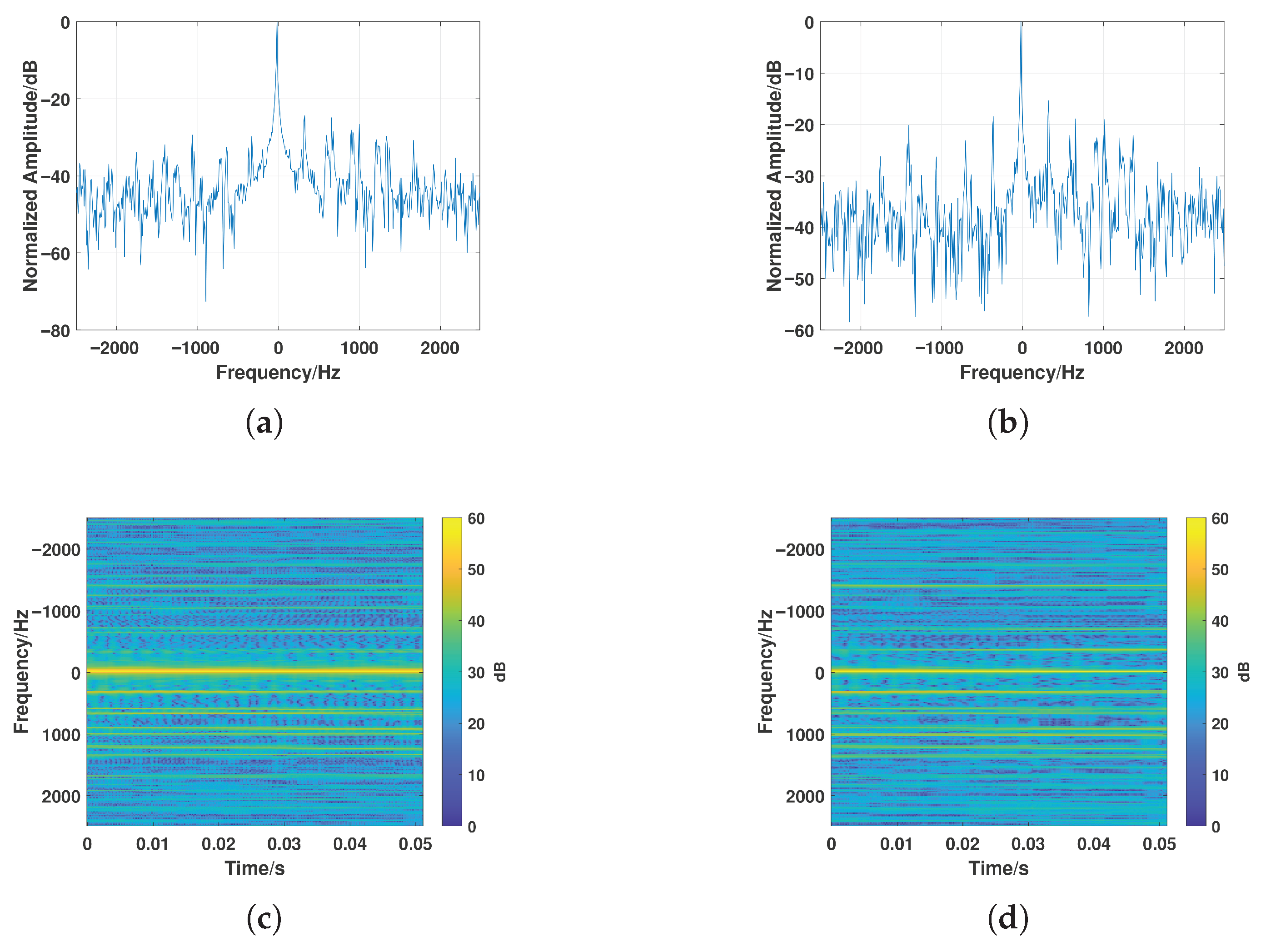

3.3.1. Main Doppler SNR Analysis

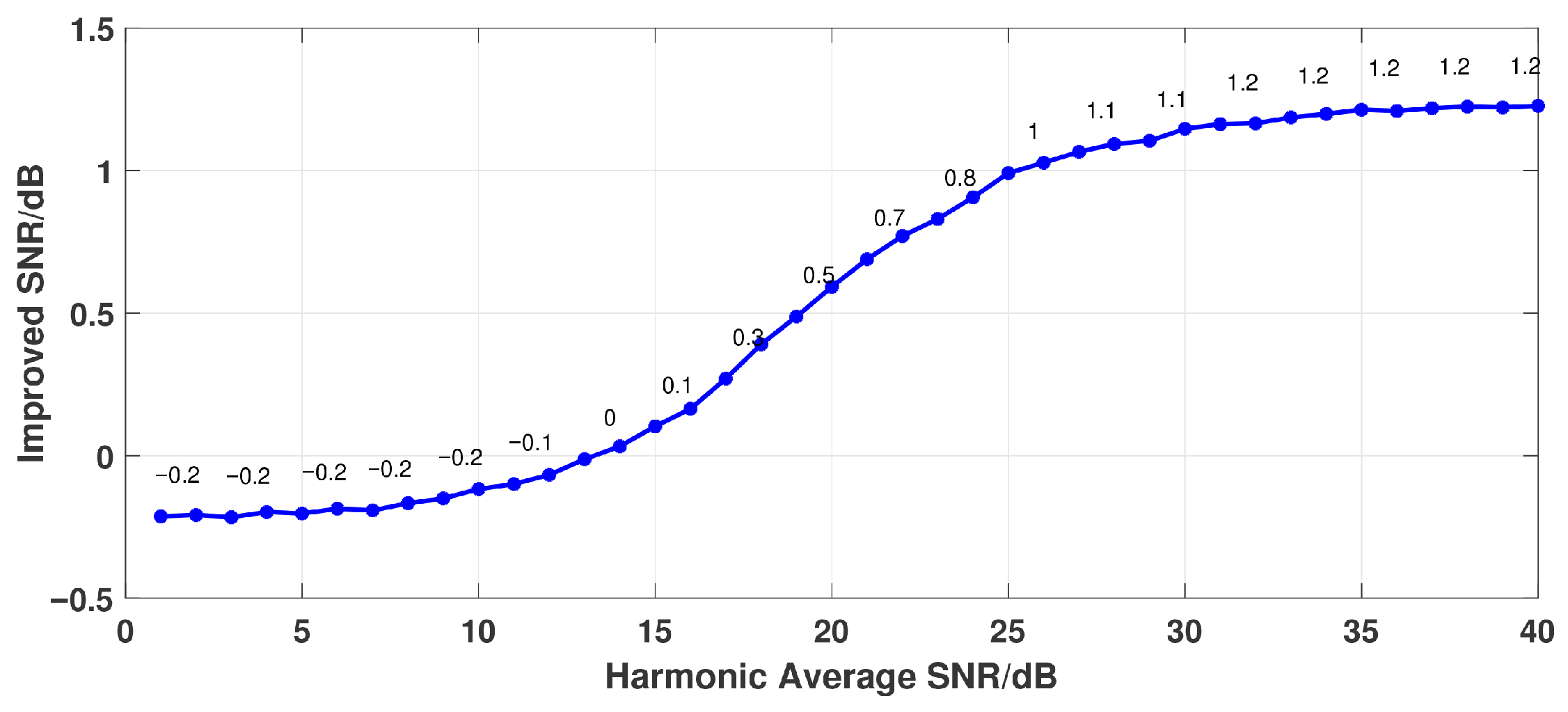

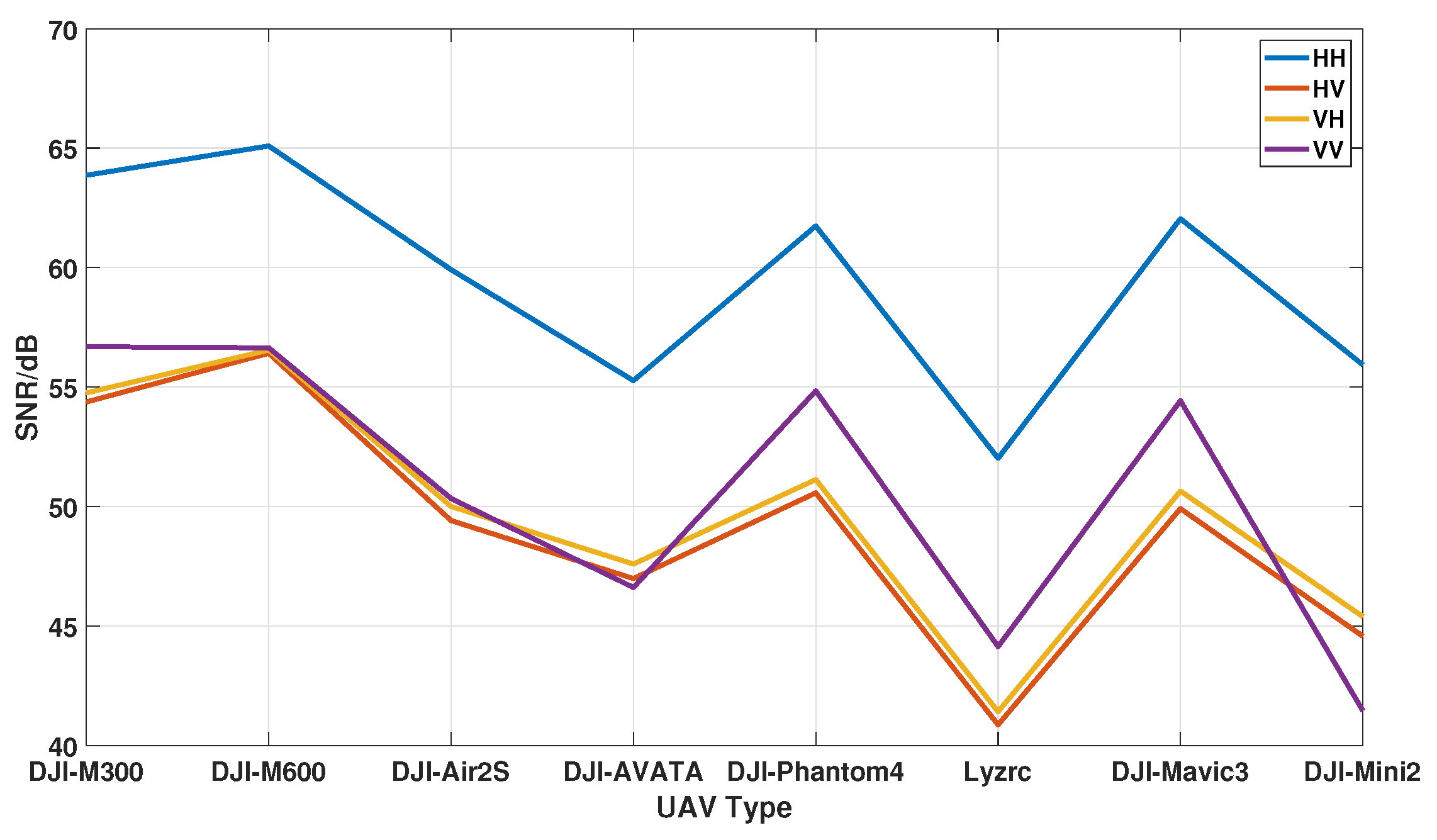

3.3.2. Harmonic SNR Analysis

3.3.3. Noise Injection and Compensation Analysis

- (4) construct the noisy signal as
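The defining equation for the noisy signal is not reproduced in this text. One common construction, assumed here and not confirmed as the authors' procedure, adds complex white Gaussian noise whose power is set from the measured signal power and the target SNR. The function name, the 200 Hz test tone, and the record length are hypothetical; only the 5000 Hz sampling rate is taken from the pulse repetition frequency listed in the radar parameters.

```python
import numpy as np

def add_noise_at_snr(signal, snr_db, rng=None):
    """Return signal plus complex white Gaussian noise at the requested SNR (dB)."""
    rng = np.random.default_rng() if rng is None else rng
    signal = np.asarray(signal, dtype=complex)
    sig_power = np.mean(np.abs(signal) ** 2)
    noise_power = sig_power / (10.0 ** (snr_db / 10.0))
    # Split the noise power equally between real and imaginary parts.
    noise = np.sqrt(noise_power / 2.0) * (
        rng.standard_normal(signal.shape) + 1j * rng.standard_normal(signal.shape)
    )
    return signal + noise

# Toy usage: a single complex tone sampled at the PRF (tone frequency is arbitrary).
t = np.arange(50000) / 5000.0
clean = np.exp(2j * np.pi * 200.0 * t)
noisy = add_noise_at_snr(clean, snr_db=0.0, rng=np.random.default_rng(1))

# Empirical check of the achieved SNR.
measured_snr_db = 10 * np.log10(
    np.mean(np.abs(clean) ** 2) / np.mean(np.abs(noisy - clean) ** 2)
)
```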

3.3.4. Classification Performance

- Single-Branch (SB): A baseline model where the U-Net reconstruction and polarization-compensated feature extraction are merged into a single processing branch without explicit feature interaction.

- Early Fusion (EF): The three polarimetric spectrograms are concatenated at the input level and fed into a single deep network, corresponding to early fusion of polarization cues.

- Late Fusion (LF): Independent networks are trained for the U-Net reconstruction and the compensated feature extraction, and their features are fused at the classifier layer.

- Proposed MPAF-Net: The full framework with dual-branch structure, partial weight sharing, and FiLM modulation.
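The structural difference between the early-fusion and late-fusion variants above can be sketched as follows. This is a toy illustration under stated assumptions: `toy_encoder` is a hypothetical stand-in for a deep network (global average pooling followed by a linear map), and the array sizes and the names `denoised` and `compensated` are invented for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_encoder(x, weight):
    """Stand-in for a deep network: per-channel global average pooling + linear map."""
    pooled = x.mean(axis=(1, 2))     # (C,)
    return np.tanh(weight @ pooled)  # (F,)

# Early fusion (EF): three polarimetric spectrograms are stacked as input
# channels and passed through a single network.
specs = [rng.standard_normal((64, 128)) for _ in range(3)]
early_in = np.stack(specs, axis=0)                                # (3, 64, 128)
early_feat = toy_encoder(early_in, rng.standard_normal((32, 3)))  # (32,)

# Late fusion (LF): the U-Net reconstruction and the compensated features are
# encoded by independent networks and concatenated just before the classifier.
denoised = rng.standard_normal((1, 64, 128))     # hypothetical U-Net branch input
compensated = rng.standard_normal((1, 64, 128))  # hypothetical compensated branch input
feat_a = toy_encoder(denoised, rng.standard_normal((32, 1)))
feat_b = toy_encoder(compensated, rng.standard_normal((32, 1)))
late_feat = np.concatenate([feat_a, feat_b])                      # (64,)
```

In EF the network can mix polarimetric channels from its first layer, whereas in LF the branches interact only at the classifier, which is the gap the partial weight sharing and FiLM modulation of the proposed model are meant to close.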

- Single-channel HH and VV inputs achieve over 91% accuracy at 20 dB but drop to about 40% at 0 dB, revealing strong noise sensitivity.

- Sinclair and Pauli fusions improve over single-channel results, particularly at 0 dB with a gain of about 14%, confirming the complementary nature of multi-polarimetric features.

- The proposed compensation + U-Net method achieves 66.7% accuracy at 0 dB, about 10 percentage points higher than the best baseline. At 10 dB and 20 dB, it reaches 88.6% and 97.2%, respectively, maintaining superior performance across all SNR conditions.

- Performance-Complexity Trade-off: At a high SNR of 20 dB, the gain of the proposed method over the "Compensated" baseline is marginal, so the added computational cost of the deep network may not be fully justified in high-SNR environments. Its primary advantage is the significant improvement in low-SNR regimes (e.g., 0–10 dB), which are typically more challenging and more critical in practice. This trade-off should be weighed when deploying the method under varying noise conditions.
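For readers unfamiliar with the Pauli representation referred to in the comparison above, the standard Pauli scattering vector is formed from the Sinclair matrix elements as k = (1/√2)[S_HH + S_VV, S_HH − S_VV, 2·S_HV]. The sketch below assumes the two cross-polarized channels are averaged to enforce reciprocity; how the Pauli components are applied to the spectrograms in this work is not stated here, so the function name and the per-sample usage are illustrative assumptions.

```python
import numpy as np

def pauli_vector(s_hh, s_hv, s_vh, s_vv):
    """Return the 3-component Pauli scattering vector for each sample.

    Inputs are complex arrays of equal shape (one value per sample or per
    time-frequency cell). HV and VH are averaged, assuming reciprocity.
    """
    s_x = 0.5 * (s_hv + s_vh)
    return np.stack([s_hh + s_vv, s_hh - s_vv, 2.0 * s_x]) / np.sqrt(2.0)

# Toy usage with random complex samples.
rng = np.random.default_rng(0)
hh, hv, vh, vv = (
    rng.standard_normal(1000) + 1j * rng.standard_normal(1000) for _ in range(4)
)
k = pauli_vector(hh, hv, vh, vv)

# The transform preserves total power (span) of the reciprocal Sinclair matrix.
span_pauli = np.sum(np.abs(k) ** 2, axis=0)
span_sinclair = np.abs(hh) ** 2 + np.abs(vv) ** 2 + 2 * np.abs(0.5 * (hv + vh)) ** 2
```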

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MPAF-Net | Multi-branch Polarization-Aware Fusion Network |
| UAV | unmanned aerial vehicle |
| SNR | signal-to-noise ratio |
| CNN | convolutional neural network |
| SAR | synthetic aperture radar |
| RCS | radar cross-section |
| STFT | short-time Fourier transform |
| MHA | multi-head attention |
| GF | glass fiber |
| MLFMM | Multi-Level Fast Multipole Method |
| MOM | Method of Moments |
| FiLM | Feature-wise Linear Modulation |


| Parameter | Value |
|---|---|
| Model origin coordinates | [0, 0, 0] |
| Fuselage dimensions (L × W × H) | 30 cm × 25 cm × 10 cm |
| Number of blades | 2 |
| Fuselage material | PC/ABS (20% ABS) |
| Rotor material | PA6 + 30% GF |
| Mesh accuracy | Coarse |

| Parameter | Value |
|---|---|
| Carrier frequency | Ka-band (34.5–35.5 GHz) |
| Bandwidth | 1 GHz |
| Detection range | 300–1600 m |
| Pulse repetition frequency | 5000 Hz |

| Mode | Description |
|---|---|
| Fixed mode | UAV hovers at 200 m altitude and 1000 m range, west of the phased array radar. |
| Multi-azimuth mode | UAV performs circular flights at a radius of 50 m, centered at a 1050 m range. |
| Multi-elevation mode | UAV returns to the fixed mode position, then ascends vertically to 500 m before descending. |
| Longitudinal mode | UAV flies uniformly along the radar beam direction, referenced to the polarimetric radar. |
| Tangential mode | UAV flies uniformly along a trajectory perpendicular to the radar beam direction. |
| Fast maneuvering mode | UAV performs random fast turns, rapid approaching, and receding maneuvers. |

| Model | HH | HV | VH | VV |
|---|---|---|---|---|
| M300 | 80.25 | 70.28 | 70.78 | 74.00 |
| M600 | 83.24 | 74.02 | 74.80 | 77.88 |
| Air2s | 76.62 | 66.04 | 66.23 | 69.30 |
| Avata | 72.32 | 64.26 | 64.45 | 66.53 |
| Phantom4 | 79.72 | 70.41 | 70.95 | 73.65 |
| Lyzrc-L100 | 71.75 | 64.13 | 64.33 | 65.84 |
| Mavic3 | 75.67 | 66.35 | 66.86 | 71.17 |
| Mini2 | 72.10 | 63.58 | 64.37 | 68.57 |

| Model | HH | HV | VH | VV |
|---|---|---|---|---|
| M300 | 63.26 | 53.80 | 54.20 | 56.26 |
| M600 | 64.92 | 55.33 | 55.45 | 54.91 |
| Air2s | 59.20 | 49.17 | 49.92 | 49.54 |
| Avata | 52.01 | 44.64 | 45.36 | 44.80 |
| Phantom4 | 61.27 | 50.11 | 50.69 | 54.85 |
| Lyzrc-L100 | 51.82 | 40.59 | 41.13 | 44.27 |
| Mavic3 | 61.40 | 49.68 | 50.45 | 54.71 |
| Mini2 | 55.79 | 44.35 | 45.16 | 41.12 |

| SNR (dB) | SB | EF | LF | Proposed |
|---|---|---|---|---|
| 0 | 41.5 | 53.7 | 56.4 | 66.7 |
| 10 | 74.3 | 80.3 | 81.7 | 88.6 |
| 20 | 91.6 | 93.1 | 94.0 | 97.2 |
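The margin of the proposed model over the strongest ablation variant can be read directly from the table above. The short script below is a worked arithmetic check on those tabulated accuracies only; variable names are illustrative.

```python
import numpy as np

# Accuracies (%) copied from the table above, ordered by SNR = 0, 10, 20 dB.
snr_db = np.array([0, 10, 20])
acc = {
    "SB": np.array([41.5, 74.3, 91.6]),
    "EF": np.array([53.7, 80.3, 93.1]),
    "LF": np.array([56.4, 81.7, 94.0]),
    "Proposed": np.array([66.7, 88.6, 97.2]),
}

# Best non-proposed variant at each SNR, and the proposed model's margin over it.
best_baseline = np.maximum.reduce([acc["SB"], acc["EF"], acc["LF"]])
gain = acc["Proposed"] - best_baseline

for s, g in zip(snr_db, gain):
    print(f"{s:>2} dB: +{g:.1f} percentage points")
```

The margin shrinks from 10.3 points at 0 dB to 6.9 points at 10 dB and 3.2 points at 20 dB, consistent with the observation that the benefit is concentrated in the low-SNR regime.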

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, L.; Chen, Z.; Yu, T.; Yan, Y.; Cai, J.; Wang, R. Polarization Compensation and Multi-Branch Fusion Network for UAV Recognition with Radar Micro-Doppler Signatures. Remote Sens. 2025, 17, 3693. https://doi.org/10.3390/rs17223693
