1. Introduction

Hyperspectral object tracking (HOT) constitutes a key problem in computer vision, with broad applications in intelligent surveillance [1], traffic monitoring [2], and medical imaging analysis [3]. Given an initial target annotation in the first frame, a tracker must localize the target throughout subsequent frames despite appearance variation, motion dynamics, partial occlusion, illumination changes, and background clutter. While deep RGB-based trackers have achieved notable advances [4,5,6], their reliance on only three broad spectral channels inherently limits discriminability, especially in low-contrast scenes, camouflage settings, and spectrally ambiguous backgrounds frequently encountered in remote sensing and geoscience monitoring.

Hyperspectral imaging (HSI) acquires contiguous narrow-band reflectance measurements, typically spanning tens to over one hundred bands, providing rich joint spatial–spectral information capable of distinguishing targets with similar spatial texture but differing spectral signatures [7]. This fine-grained spectral resolution is particularly advantageous in scenarios involving small or partially occluded objects embedded in spectrally heterogeneous or cluttered environments. Consequently, HOT has emerged as a promising extension of traditional RGB tracking for improved robustness and semantic discrimination in complex environments.

A central challenge in hyperspectral object tracking is obtaining features that reliably distinguish the target from its background under varying conditions. Early HOT methods [8,9,10] mainly rely on manually designed features, such as material abundances, spectral gradient histograms, or specific convolution kernels, to construct the spectral or spatial representation of the target. While these feature representation strategies achieved some success, they lack adaptability and struggle to handle rapid target appearance changes or severe occlusions. In contrast, deep learning has transformed RGB tracking by leveraging large-scale annotated datasets [11,12] to learn hierarchical, discriminative representations.

Due to the scarcity of large labeled hyperspectral video datasets, directly training deep HOT architectures end-to-end often leads to overfitting and unstable optimization. To mitigate this data limitation, a prevalent line of work transfers backbones pretrained on large-scale RGB tracking datasets (e.g., GOT-10K [13], TrackingNet [14], LaSOT [15]) to hyperspectral scenarios by first converting the high-dimensional hyperspectral data into one or more three-channel false-color composites [16]. Single-composite methods usually follow two paradigms: (i) selecting three key bands using conventional dimensionality reduction techniques, such as PCA, or learning-based selection networks [17], and (ii) constructing a three-channel surrogate image by replicating existing bands. Each option has drawbacks. Band selection is sensitive to the chosen criterion and may exclude narrow yet discriminative spectral responses critical for small or low-contrast targets. Replication yields zero inter-channel diversity, preventing the pretrained filters from exploiting cross-channel interactions. Adjacent or sequential grouping preserves high local spectral correlation, causing redundant channel responses and underutilizing potential complementary cues. PCA emphasizes directions of maximal global variance; thus, minority-band features with high target–background discriminability but low energy risk being attenuated or entangled in principal components dominated by background structure. Collectively, these methods reduce spectral separability, distort the statistical assumptions underlying RGB pretraining, and constrain downstream feature discrimination.

Alternatively, hyperspectral bands can be randomly grouped to form false-color composites, with the intention of reducing inter-channel correlation. However, this strategy treats all bands as equally important. In reality, different wavelengths vary significantly in their contribution to hyperspectral image formation and downstream tasks, as demonstrated by band selection studies [18]. Consequently, random grouping often mixes weakly informative or noisy bands with discriminative ones, which negatively impacts hyperspectral tracking and increases training difficulty. To mitigate this problem, Li et al. introduced the Band Attention Ensemble Network (BAE-Net) [19], which employs a band attention module to estimate spectral importance and groups bands accordingly before constructing false-color images. This line of work highlights that incorporating band importance into grouping leads to more reliable representations than purely random partitioning.

Building on the idea of leveraging multiple spectral views, recent multi-branch trackers such as SST-Net [20] and BRRF-Net [21] extend this paradigm by generating several composites and processing them in parallel. Although methods like BAE-Net already consider band importance during grouping, most of these trackers still fuse the outputs of different branches through uniform averaging, effectively assuming equal reliability across composites. In practice, a branch dominated by weak or interference-prone bands can produce an outlier response map; equal weighting then propagates this error and increases the risk of drift. Compounding the issue, adverse imaging factors (e.g., motion blur, defocus, low illumination) erode high-level spatial structure and channel discriminability. Existing refinement modules rarely perform coupled spatial–channel enhancement, limiting their ability to recover degraded details. Although adaptive update mechanisms alleviate gradual appearance evolution, they do not explicitly quantify band importance or implement reliability-aware suppression of low-quality branches.

Despite these advances, hyperspectral tracking still faces two key challenges. First, severe spectral redundancy can cause the tracker to overemphasize noisy or weakly discriminative bands, thereby diluting target–background contrast and increasing false responses. Second, spatial degradation from factors such as blur or low resolution weakens high-level structural integrity, raising the risk of localization drift. We tackle these issues with a Band and Context Refinement Network (BCR-Net), which unifies explicit spectral importance estimation, contextual feature enhancement, and reliability-aware fusion. Specifically, the Band Importance Modeling Module (BIMM) leverages the linear self-representation property of hyperspectral imagery: a U-Net-based unrolled gradient descent solver produces a sparse band coefficient matrix that captures complementary relations while suppressing redundancy. This matrix is then used to (i) quantify per-band importance and (ii) construct multiple complementary false-color composites, allowing an RGB-pretrained backbone to harvest transferable semantic and spatial priors. Building upon the resulting high-level features, the Contextual Feature Refinement Module (CFRM) applies cross-dimensional attention to jointly enhance spatial detail fidelity and channel-wise discriminability. Finally, we perform dynamic reliability-aware fusion: branch-level tracking outputs are adaptively weighted by importance scores derived from the coefficient matrix, effectively attenuating unstable predictions from low-importance or interference-prone composites.

The main contributions of this study can be summarized as follows:

We propose a unified hyperspectral tracking framework that integrates spectral band importance modeling with RGB-pretrained backbones. By selectively emphasizing informative bands and guiding feature construction, the framework achieves stable representations, efficient adaptation, and enhanced discriminative power during tracking.

We reformulate the spectral self-expression prior into a parameterized module that learns band-wise importance and guides feature construction, explicitly addressing spectral redundancy and improving the discriminative power of the features.

We employ a Contextual Feature Refinement Module to enhance multi-scale feature representations, improving discriminative power for target–background separation.

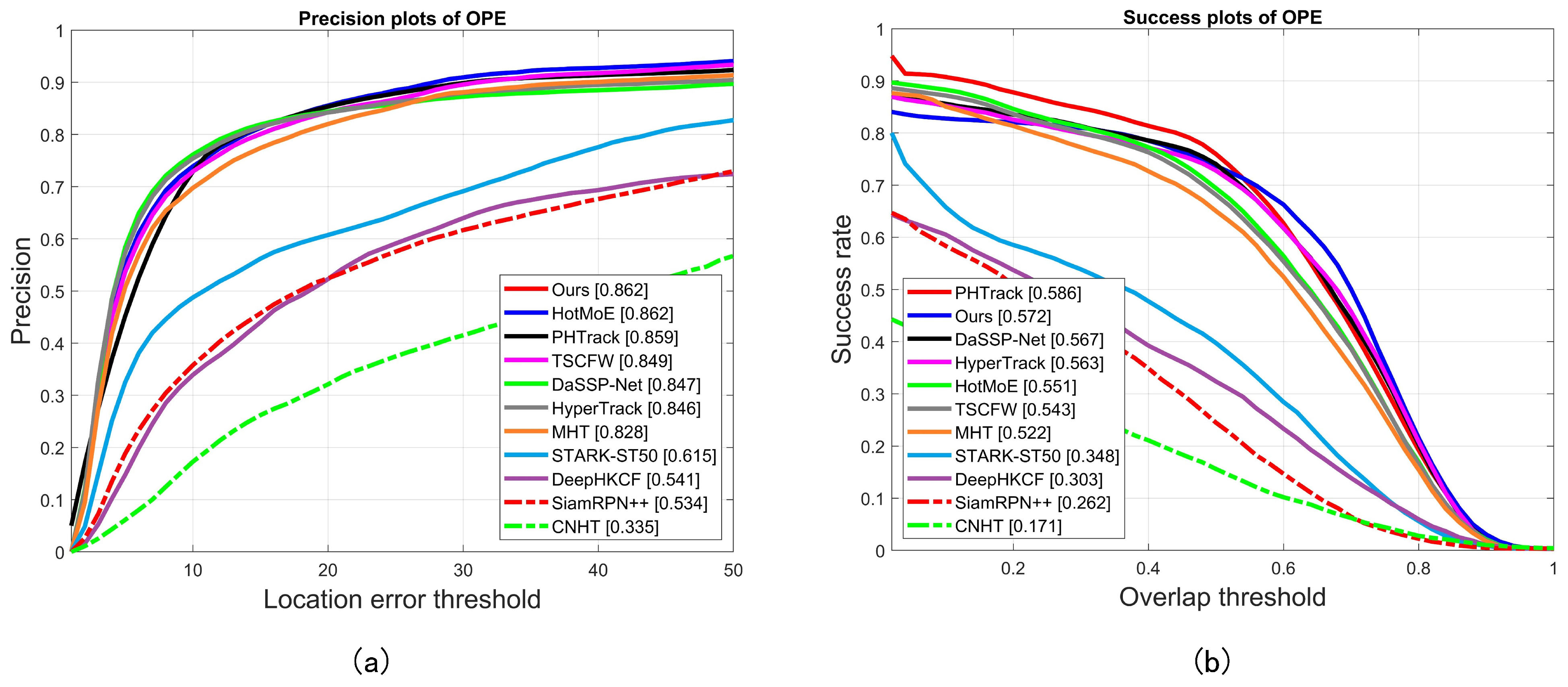

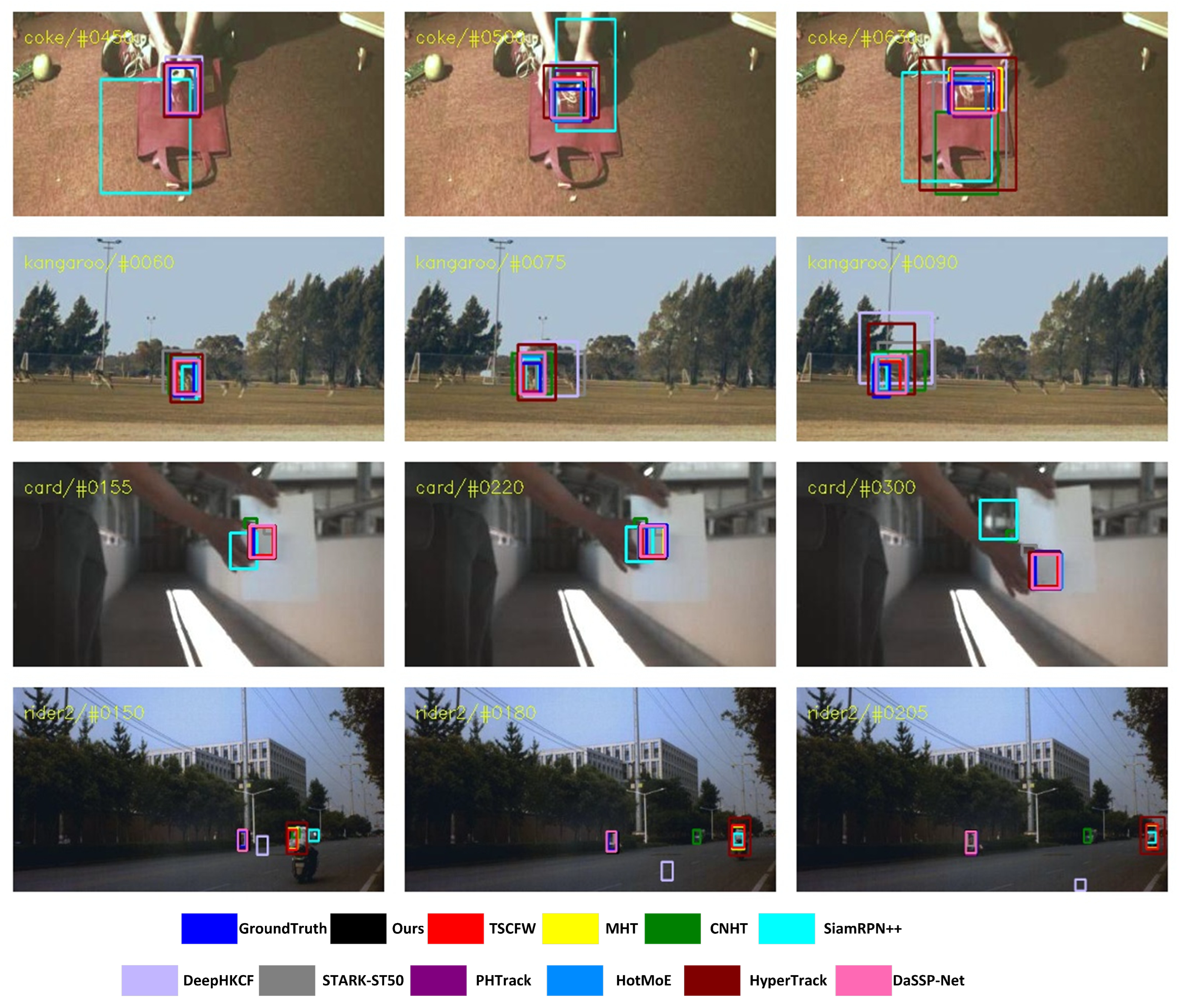

Extensive experiments on two public hyperspectral video datasets demonstrate that BCR-Net is competitive with existing methods, achieving strong performance in both tracking accuracy and robustness.

The remainder of this paper is organized as follows. Section 2 reviews related work, Section 3 describes the proposed tracker architecture, Section 4 presents comparative analyses with other trackers and includes ablation studies, and Section 5 concludes the paper.

2. Related Works

Current hyperspectral object tracking (HOT) methods have primarily evolved along two directions: correlation filter-based (CF) approaches and deep learning-based (DL) approaches. In the following, we review each category, highlighting their key techniques, advantages, and limitations.

2.1. Correlation Filter-Based HOT

CF methods constitute one of the earliest and most widely adopted strategies for HOT. These methods track targets by learning correlation filters that model the relationship between the target’s spectral–spatial characteristics and its surrounding context. Qian et al. [22] proposed using normalized 3D spectral–spatial cubes as convolution filters to capture local spectral–spatial patterns for hyperspectral object tracking. Zhang et al. [8] introduced a method that combines convolutional features from VGGNet with HOG descriptors and fuses their responses through correlation filters to improve tracking robustness under complex backgrounds. To address occlusions and illumination variations, Guo et al. [23] developed a method that incorporates adaptive weighting and background-aware correlation filters to suppress anomalies in response maps.

To enhance feature extraction efficiency, Chen et al. [24] proposed using fast spatial–spectral convolution kernels with closed-form solutions in the Fourier domain for real-time spectral–spatial feature learning. A tensor-based sparse correlation filter incorporating spatial–spectral weighted regularization was proposed by Hou et al. [10] to reduce background interference and enhance spectral discriminability. Building on these approaches, Lou et al. [25] proposed combining deep and spatial–spectral features by extracting 3D gradient-based spatial features through tensor singular spectrum analysis and convolutional features from a VGGNet pretrained on RGB images, thereby improving tracking accuracy by exploiting the complementarity of deep and enhanced spectral–spatial representations.

Overall, CF methods for HOT provide advantages such as low computational overhead, ease of implementation, and effective use of spectral–spatial priors. Nevertheless, their heavy reliance on handcrafted or predefined features limits adaptability in challenging scenarios, including occlusions, rapid appearance variations, and cluttered environments.

2.2. Deep Learning-Based HOT

DL methods have recently emerged as a major direction in HOT, offering the ability to learn adaptive spectral–spatial representations. The critical challenge, however, lies in the scarcity of labeled hyperspectral video data, which makes it impractical to train large-scale backbone networks tailored specifically for HOT. To mitigate this limitation, many studies transfer knowledge from RGB-pretrained models by dividing hyperspectral bands into groups and generating false-color images for training. Uzkent et al. [26] proposed reducing hyperspectral data to three channels and applying an RGB-pretrained VGGNet to extract robust features for target representation. Wang et al. [27] proposed a strategy that selects representative bands using joint entropy and utilizes RGB-pretrained Siamese networks to achieve effective semantic feature extraction under constrained training conditions.

Building upon RGB-pretrained backbones, many existing methods exploit spectral–spatial correlations to enhance feature discrimination. Wu et al. [21] reorganized hyperspectral bands into groups based on learned correlations and fused them for robust representation, but the final fusion relied on uniform averaging without fully exploiting learned band importance. Liu et al. [16] designed a dual Siamese framework with a hyperspectral target-aware module and spatial–spectral cross-attention for adaptive fusion, yet lacked reliability-aware weighting and coupled spatial–channel refinement. Zhao et al. [28] developed a transformer-based fusion network to capture intra- and inter-band interactions across modalities, effectively integrating hyperspectral and RGB information. Chen et al. [29] proposed a spectral awareness module with feature interaction to model band dependencies and strengthen fusion, but did not incorporate importance learning into a unified fusion strategy. Jiang et al. [30] introduced a channel-adaptive dual Siamese architecture to flexibly handle sequences with varied spectral channels. Xiong et al. [31] introduced SSTCF, which employs spatial–spectral features and a low-rank constraint to enhance global spectral structure, while incorporating temporal consistency to maintain filter stability across consecutive frames. Gao et al. [32] proposed SP-HST, a parameter-efficient method that freezes a pre-trained RGB network and trains only a lightweight branch to generate spectral prompts, which provide complementary HSI information fused with the frozen RGB features for the final tracking decision. Wang et al. [33] presented SSF-Net, which employs spectral angle awareness to capture region-level spectral relationships, achieving more robust spatial–spectral representations for hyperspectral tracking.

Overall, DL-based HOT methods have demonstrated strong effectiveness in leveraging spectral–spatial fusion and knowledge transfer from pre-trained RGB models. Although the above-mentioned methods adopt band regrouping to improve hyperspectral tracking, they often fail to fully exploit the varying importance of spectral bands and suffer from degraded feature discrimination under complex conditions, which limits their efficiency and robustness. Therefore, in this paper, we propose BCR-Net to address these limitations through BIMM and CFRM, enabling adaptive band grouping and contextual refinement.

3. Proposed Method

Here, we describe the Band and Context Refinement Network (BCR-Net), which combines the Band Importance Modeling Module (BIMM) with the Contextual Feature Refinement Module (CFRM).

3.1. Overall Architecture

Since hyperspectral images contain high-dimensional spectral information that cannot be directly processed by conventional RGB-based object trackers, we propose BCR-Net for hyperspectral tracking. It first learns the importance of each spectral channel and groups the hyperspectral data into multiple false-color images accordingly. High-level semantic features are then extracted from the grouped images using a pretrained RGB backbone. Finally, the features from different groups are adaptively fused using importance-guided weighting, enabling robust spectral–spatial representation and accurate target localization.

Figure 1 illustrates the overall framework, which adopts a Siamese network architecture and includes three main components: (i) a dynamic spectral feature learning module, (ii) a backbone network for feature extraction, and (iii) an integrated prediction module. Input data is fed into two parallel branches: the template branch representing the initial target patch, and the search branch containing candidate regions from later frames.

The dynamic spectral feature learning module first analyzes inter-band relationships and channel importance through an auxiliary reconstruction task. This step projects the original C-dimensional spectral cube into multiple three-channel false-color images that preserve discriminative information while reducing redundancy. These false-color groups are then fed into a pretrained RGB backbone (e.g., ResNet-50), shared by both template and search branches, to obtain deep semantic features. On top of the backbone output, a context-aware feature refinement module further processes the features to capture fine-grained spatial details and inter-channel correlations, ensuring the extracted representations remain robust for subsequent tracking.

Finally, the integrated prediction module aggregates the similarity maps produced by different false-color branches. To balance their contributions, each branch is adaptively weighted according to the spectral importance scores estimated in the dynamic spectral feature learning stage. The response map guides target localization, complemented by a dynamic template update that adjusts for temporal appearance changes.

3.2. Band Importance Modeling Module

Hyperspectral data are usually converted into three-channel composites to fit RGB-pretrained CNNs, but sequential grouping produces highly correlated channels, and independent processing overlooks spectral structure, both of which weaken discriminability. To overcome these issues, we adopt an importance-guided grouping strategy that ranks bands by significance and assembles them into complementary composites, reducing redundancy while preserving informative cues for robust multi-branch tracking.
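As a rough illustration of importance-guided grouping, the sketch below ranks bands by a given importance score and splits the ranking into three-band groups, each of which would index one false-color composite. The chunking rule (consecutive triples of the ranking, dropping any leftover low-importance bands), the per-group weight (normalized sum of member scores), and the helper name `group_bands` are assumptions made for this example; the grouping rule actually used by BCR-Net is not specified in this passage.

```python
def group_bands(importance, group_size=3):
    """Rank bands by importance and split the ranking into false-color groups.

    Assumption: groups are consecutive chunks of the ranking, and leftover
    (least important) bands that do not fill a group are dropped.
    """
    order = sorted(range(len(importance)), key=lambda b: importance[b], reverse=True)
    usable = len(order) - len(order) % group_size
    groups = [order[i:i + group_size] for i in range(0, usable, group_size)]
    # One score per group, normalized so the branch weights sum to one.
    scores = [sum(importance[b] for b in g) for g in groups]
    total = sum(scores)
    weights = [s / total for s in scores]
    return groups, weights


# Seven hypothetical bands; the weakest one (index 1) is left out.
importance = [0.9, 0.1, 0.5, 0.7, 0.3, 0.8, 0.2]
groups, weights = group_bands(importance)
```

Each inner list holds the band indices that would be stacked into one three-channel image, and `weights` could later serve as per-branch reliabilities.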

For a hyperspectral image $\mathbf{X} \in \mathbb{R}^{C \times N}$, with $C$ spectral bands stored as rows over $N$ pixels, we model intrinsic dependencies between spectral bands by leveraging the prior that each band can be roughly represented as a linear combination of the others. Accordingly, we learn a band correlation matrix via a spectral self-expression model, expressed as a non-negative reconstruction coefficient matrix $\mathbf{W} \in \mathbb{R}^{C \times C}$. Each row of $\mathbf{W}$ indicates how a band is reconstructed from the others, capturing inter-band dependencies, and summing the coefficients provides a quantitative measure of band importance, with larger values highlighting more discriminative spectral channels. Mathematically, the spectral self-expression model is formulated as

$$\min_{\mathbf{W}} \; \|\mathbf{X} - \mathbf{W}\mathbf{X}\|_F^2 \quad \text{s.t.} \quad \mathbf{W} \ge 0, \;\; \mathrm{diag}(\mathbf{W}) = \mathbf{0}, \tag{1}$$

where $\|\cdot\|_F$ denotes the Frobenius norm. The non-negativity constraint $\mathbf{W} \ge 0$ ensures physically meaningful importance values, while the zero-diagonal constraint $\mathrm{diag}(\mathbf{W}) = \mathbf{0}$ prevents self-reconstruction and stabilizes the estimated band importance.
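To make the self-expression prior concrete, the following NumPy sketch solves a constrained least-squares problem of this kind (minimize the Frobenius reconstruction error of the bands from each other, subject to non-negative coefficients and a zero diagonal) with plain projected gradient descent. This is a classical stand-in, not the learned U-Net solver described in the paper. The row-wise band layout, the step size, the synthetic data, and the use of column sums as the importance score are all assumptions of the example.

```python
import numpy as np


def self_expression(X, steps=500):
    """Projected gradient descent for min ||X - W X||_F^2, W >= 0, diag(W) = 0.

    X has shape (C, N): one row per band, one column per pixel (assumed layout).
    """
    C = X.shape[0]
    G = X @ X.T                                   # (C, C) Gram matrix of the bands
    lr = 1.0 / (2.0 * np.linalg.norm(G, 2))       # 1 / Lipschitz constant of the gradient
    W = np.zeros((C, C))
    for _ in range(steps):
        grad = 2.0 * (W @ G - G)                  # gradient of the Frobenius objective
        W = np.maximum(W - lr * grad, 0.0)        # project onto W >= 0
        np.fill_diagonal(W, 0.0)                  # project onto diag(W) = 0
    return W


rng = np.random.default_rng(0)
base = rng.random((3, 50))                        # three independent synthetic bands
mixed = np.array([[0.5, 0.5, 0.0],
                  [0.2, 0.0, 0.8]]) @ base        # two bands that mix the first three
X = np.vstack([base, mixed])                      # (5 bands, 50 pixels)

W = self_expression(X)
importance = W.sum(axis=0)                        # assumed score: contribution to other bands
```

Because both constraints act coordinate-wise, clipping negatives and zeroing the diagonal after each step is an exact projection onto the feasible set.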

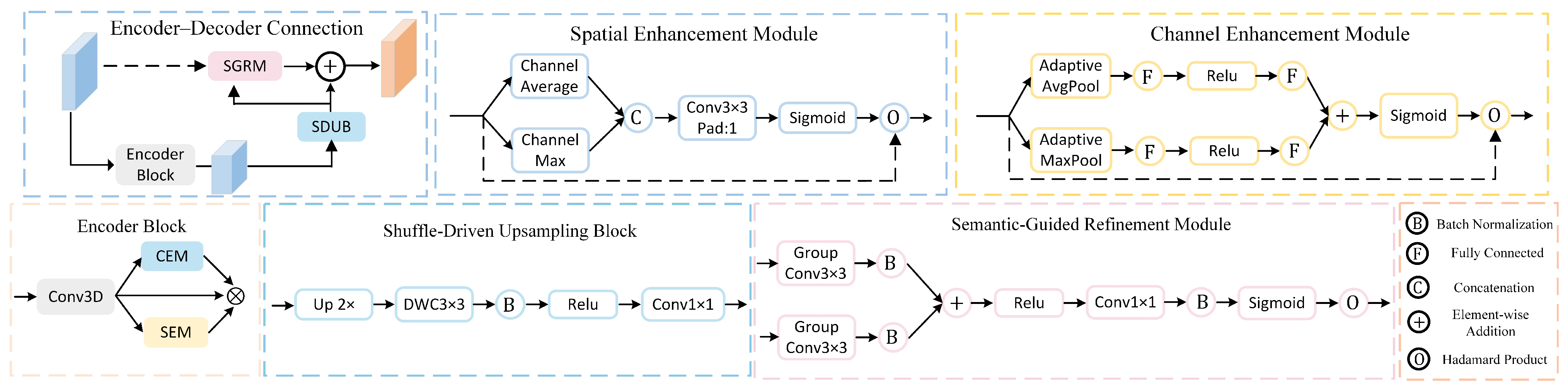

To implement the spectral self-expression problem in Equation (1), we employ an encoder–decoder architecture based on U-Net.

Figure 2 depicts the connection between the last encoder block and the first decoder block, as well as the internal structure of each submodule. The reconstruction coefficient matrix is approximated by aggregating learned spectral–spatial features in a differentiable, end-to-end manner. The encoder captures multi-scale spectral–spatial representations, encoding both local patterns and global inter-band dependencies, while channel and spatial enhancement modules emphasize informative features. The decoder restores spatial resolution and fuses multi-scale features via a Semantic-Guided Refinement Module, where high-level features guide adaptive weighting of low-level features. From the decoder output, a global pooling operation aggregates feature correlations to produce an initial channel weight matrix, providing a coarse approximation of the reconstruction coefficients. Non-negativity and zero-diagonal constraints are enforced via simple activation and masking, and a lightweight Channel Weight Refinement module further optimizes this initial matrix to emphasize informative bands while suppressing redundancies.

3.2.1. Encoder of BIMM

In hyperspectral image reconstruction, the encoder must model both spectral and spatial correlations to extract discriminative features that distinguish the object from its background. We integrate two complementary modules: the Channel Enhancement Module (CEM) for modeling spectral dependencies and alleviating inter-band redundancy, and the spatial enhancement module (SEM) for identifying important spatial regions and enhancing structural details. This design adaptively enhances informative features while suppressing redundancy, improving discrimination and robustness.

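Given an input feature map $\mathbf{F}$, CEM first applies adaptive average pooling $\mathrm{AvgPool}(\cdot)$ and max pooling $\mathrm{MaxPool}(\cdot)$ to obtain global channel descriptors $\mathbf{f}_{\mathrm{avg}}$ and $\mathbf{f}_{\max}$. Each descriptor is then passed through two fully connected layers: the first reduces the channel dimension to $C/r$ (with reduction ratio $r$), and the second restores it, with a ReLU activation in between. The outputs are summed and passed through a Softplus activation to generate the channel weight matrix $\mathbf{W}_c$, which is broadcast to match the original feature dimensions and multiplied element-wise (⊙) with $\mathbf{F}$:

$$\mathbf{W}_c = \mathrm{Softplus}\Big(\mathrm{FC}_2\big(\mathrm{ReLU}(\mathrm{FC}_1(\mathbf{f}_{\mathrm{avg}}))\big) + \mathrm{FC}_2\big(\mathrm{ReLU}(\mathrm{FC}_1(\mathbf{f}_{\max}))\big)\Big), \qquad \mathrm{CEM}(\mathbf{F}) = \mathbf{W}_c \odot \mathbf{F}.$$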
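The channel enhancement step can be sketched in NumPy as follows. The example assumes a single `(C, H, W)` feature map, a bottleneck MLP shared by the two pooled descriptors, and no bias terms; these choices, the function names, and the random weights are illustrative assumptions rather than details confirmed by the text.

```python
import numpy as np


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)


def channel_enhance(F, W1, W2):
    """F: (C, H, W); W1: (C // r, C); W2: (C, C // r).

    Returns the re-weighted features and the per-channel weights.
    """
    f_avg = F.mean(axis=(1, 2))                   # global average descriptor, shape (C,)
    f_max = F.max(axis=(1, 2))                    # global max descriptor, shape (C,)

    def mlp(v):
        # Reduce to C // r, apply ReLU, restore to C (shared weights: an assumption).
        return W2 @ np.maximum(W1 @ v, 0.0)

    w = softplus(mlp(f_avg) + mlp(f_max))         # strictly positive channel weights
    return F * w[:, None, None], w


rng = np.random.default_rng(0)
F = rng.standard_normal((8, 4, 4))
W1 = 0.1 * rng.standard_normal((2, 8))            # reduction ratio r = 4 (assumed)
W2 = 0.1 * rng.standard_normal((8, 2))
enhanced, w = channel_enhance(F, W1, W2)
_, w_zero = channel_enhance(F, np.zeros_like(W1), np.zeros_like(W2))
```

Unlike a Sigmoid gate, Softplus leaves the weights unbounded above, so a channel can be amplified as well as attenuated; with all-zero MLP weights every channel receives the same weight, log 2.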

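While CEM captures spectral relationships, it does not directly model spatial structures. To address this, the SEM module enhances spatial saliency by highlighting important positions and suppressing irrelevant regions. For an input $\mathbf{F}$, SEM computes channel-wise max and average maps, $\mathbf{F}_{\max}$ and $\mathbf{F}_{\mathrm{avg}}$, concatenates them, and applies a $k \times k$ convolution with padding, followed by a Sigmoid activation ($\sigma$) to generate the spatial weight map $\mathbf{M}_s$. The output is then obtained via element-wise multiplication (⊙) with $\mathbf{F}$:

$$\mathbf{M}_s = \sigma\big(\mathrm{Conv}_{k \times k}([\mathbf{F}_{\max};\, \mathbf{F}_{\mathrm{avg}}])\big), \qquad \mathrm{SEM}(\mathbf{F}) = \mathbf{M}_s \odot \mathbf{F},$$

where $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension.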
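A minimal NumPy sketch of the spatial enhancement step is given below, assuming a `(C, H, W)` feature map, zero "same" padding, and a two-input, one-output kernel. The 7×7 kernel size used in the usage lines is an assumed value, as the kernel size is not recoverable from the text, and `spatial_enhance` is a hypothetical name.

```python
import numpy as np


def spatial_enhance(F, kernel):
    """F: (C, H, W); kernel: (2, k, k) with odd k.

    Returns the gated features and the (H, W) spatial weight map.
    """
    k = kernel.shape[-1]
    pad = k // 2
    H, W = F.shape[1:]
    stacked = np.stack([F.max(axis=0), F.mean(axis=0)])          # (2, H, W)
    padded = np.pad(stacked, ((0, 0), (pad, pad), (pad, pad)))   # zero padding keeps H x W
    logits = np.zeros((H, W))
    for dy in range(k):
        for dx in range(k):
            # Cross-correlation, i.e. the "convolution" used in deep-learning layers.
            tap = kernel[:, dy, dx, None, None] * padded[:, dy:dy + H, dx:dx + W]
            logits += tap.sum(axis=0)
    m = 1.0 / (1.0 + np.exp(-logits))                            # Sigmoid gate in (0, 1)
    return F * m[None], m


rng = np.random.default_rng(0)
F = rng.standard_normal((4, 6, 6))
kernel = 0.1 * rng.standard_normal((2, 7, 7))                    # assumed 7x7 kernel
enhanced, m = spatial_enhance(F, kernel)
_, m_zero = spatial_enhance(F, np.zeros((2, 7, 7)))
```

With an all-zero kernel the gate is 0.5 everywhere, which makes a convenient sanity check.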

By stacking multiple 3D convolution layers, each followed by SEM and CEM, the encoder progressively captures and enhances spectral–spatial features. Specifically, SEM strengthens important spatial structures, while CEM emphasizes discriminative spectral channels. Writing $\mathbf{E}_{l-1}$ for the input to the $l$-th encoder layer, its output is expressed as

$$\mathbf{E}_l = \mathrm{CEM}\big(\mathrm{SEM}\big(\mathrm{Conv3D}(\mathbf{E}_{l-1})\big)\big).$$

This design combines convolution with spectral–spatial enhancement in a complementary way, leading to more discriminative feature representations and laying a strong foundation for decoding and reconstruction.

3.2.2. Decoder of BIMM

A high-quality decoder is essential for restoring spatial resolution, reconstructing boundary details, and maintaining semantic consistency. In this work, the decoder addresses both feature reconstruction and semantic refinement by integrating two complementary modules. The Shuffle-Driven Upsampling Block (SDUB) progressively upsamples features while preserving fine-grained spatial–spectral information, ensuring accurate reconstruction of target details. The Semantic-Guided Refinement Module (SGRM) captures global spectral–spatial dependencies and enhances salient semantic features, thereby improving the overall discriminability of the reconstructed output. This design effectively recovers feature scales, fuses cross-level information, and enhances salient regions.

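The SDUB is designed to efficiently enhance spatial resolution while preserving feature quality. It takes either the last encoder output (for the first decoder layer) or the previous decoder feature map (for subsequent layers) and first performs bilinear interpolation to double the spatial size. Local channel features are then extracted via a depthwise separable convolution ($\mathrm{DWConv}$), followed by a channel rearrangement ($\mathrm{Shuffle}$) to promote cross-channel fusion, and finally a convolution ($\mathrm{Conv}$) adjusts the output to the target channel dimension $C_{\mathrm{out}}$. Formally, the operation on an input $\mathbf{F}$ is expressed as

$$\mathrm{SDUB}(\mathbf{F}) = \mathrm{Conv}\big(\mathrm{Shuffle}\big(\mathrm{DWConv}\big(\mathrm{Up}_{\times 2}(\mathbf{F})\big)\big)\big),$$

where $\mathrm{Up}_{\times 2}$ denotes bilinear upsampling by a factor of two.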
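The channel rearrangement step in SDUB can be illustrated with a ShuffleNet-style channel shuffle. Reading "channel rearrangement" as this particular operation is an assumption suggested by the module name, and the group count is a free parameter of the example.

```python
import numpy as np


def channel_shuffle(x, groups):
    """Interleave channels across groups. x: (C, H, W), C divisible by groups."""
    C, H, W = x.shape
    assert C % groups == 0, "channel count must be divisible by the number of groups"
    # (groups, C/groups, H, W) -> swap the two channel axes -> flatten back to C.
    return x.reshape(groups, C // groups, H, W).transpose(1, 0, 2, 3).reshape(C, H, W)


x = np.arange(6).reshape(6, 1, 1)          # channel i holds the value i
shuffled = channel_shuffle(x, groups=2)    # channel order becomes 0, 3, 1, 4, 2, 5
```

After the shuffle, neighbouring channels come from different groups, so a subsequent grouped or depthwise operation sees information from every group.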

While SDUB efficiently upsamples feature maps, effectively fusing multi-level features remains challenging. Low-level decoder features contain fine-grained details but are noisy and semantically weak, whereas high-level encoder features provide rich semantic context but suffer from reduced spatial resolution and blurred boundaries. Simple concatenation or addition often preserves noise and dilutes semantics, resulting in suboptimal reconstruction.

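In response, we introduce the Semantic-Guided Refinement Module (SGRM) to refine low-level decoder features using high-level encoder information. Specifically, for the $l$-th decoding layer, let $\mathbf{E}_l$ and $\tilde{\mathbf{D}}_l$ denote the encoder features and the previous decoder features after SDUB upsampling, respectively. Both are processed by grouped convolutions with batch normalization, spatially aligned via bilinear interpolation if needed, and fused through element-wise addition followed by ReLU to form the intermediate attention tensor $\mathbf{A}_l$:

$$\mathbf{A}_l = \mathrm{ReLU}\big(\mathcal{G}_e(\mathbf{E}_l) + \mathcal{G}_d(\tilde{\mathbf{D}}_l)\big),$$

where $\mathcal{G}_e$/$\mathcal{G}_d$ are grouped convolutions with batch normalization.

The intermediate tensor $\mathbf{A}_l$ passes through a convolution with batch normalization and Sigmoid activation ($\sigma$), and the resulting spatial attention map modulates $\tilde{\mathbf{D}}_l$ via element-wise multiplication to produce the fused decoder feature:

$$\mathbf{D}_l = \sigma\big(\mathrm{BN}(\mathrm{Conv}(\mathbf{A}_l))\big) \odot \tilde{\mathbf{D}}_l.$$

The decoder reconstructs multi-level semantic information by applying SDUB and SGRM in a nested manner:

$$\mathbf{D}_l = \mathrm{SGRM}\big(\mathbf{E}_l,\ \mathrm{SDUB}(\mathbf{D}_{l-1})\big).$$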

In this structure, SDUB upsamples the previous decoder output, while SGRM fuses it with the corresponding encoder features, enabling efficient multi-scale feature reconstruction with enhanced spatial details and semantic consistency.

3.2.3. Channel Weight Refinement of BIMM

To capture inter-channel dependencies in the decoder features, we apply global average pooling ($\mathrm{GAP}$) to the decoder output $\mathbf{D}$ to obtain a channel-wise summary $\mathbf{g} = \mathrm{GAP}(\mathbf{D})$. The correlation matrix of these pooled features is then mapped through a Softplus function to produce the initial channel weight matrix $\mathbf{W}_0$:

$$\mathbf{W}_0 = \mathrm{Softplus}\big(\mathbf{g}\,\mathbf{g}^{\top}\big),$$

where $\mathbf{g}\,\mathbf{g}^{\top}$ captures the similarity between different feature channels.

However, $\mathbf{W}_0$ is a heuristic estimate that may contain noisy or suboptimal correlations, limiting its ability to highlight discriminative channels. To address this, we propose a single-step structural optimization module that refines $\mathbf{W}_0$ while preserving its non-negativity and zero-diagonal constraints. A lightweight MLP predicts a gradient-like update for each element, simulating conventional gradient descent in a learnable, end-to-end manner. The refined weight matrix is obtained by applying this update to $\mathbf{W}_0$ and passing the result through a diagonal suppression function that explicitly zeros out the diagonal elements of the matrix.
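The two stages of channel weight refinement can be sketched as below. The sketch assumes that the pooled-feature correlation is an outer product of the pooled channel vector, that the learned update is subtracted like a gradient step, and that non-negativity is restored by clipping. The `update_fn` argument is a placeholder for the lightweight MLP, replaced here by a fixed shrinkage so that the example runs without training; none of these specifics are confirmed by the text.

```python
import numpy as np


def softplus(x):
    return np.logaddexp(0.0, x)


def zero_diagonal(W):
    """Diagonal suppression: return a copy of W with its diagonal set to zero."""
    out = W.copy()
    np.fill_diagonal(out, 0.0)
    return out


def initial_weights(D):
    """D: (C, H, W) decoder output -> (C, C) non-negative, zero-diagonal matrix."""
    g = D.mean(axis=(1, 2))                         # global average pooling per channel
    return zero_diagonal(softplus(np.outer(g, g)))  # pairwise channel similarity


def refine(W0, update_fn, step=1.0):
    """One gradient-like step, then re-impose W >= 0 and diag(W) = 0."""
    W = W0 - step * update_fn(W0)
    return zero_diagonal(np.maximum(W, 0.0))


rng = np.random.default_rng(0)
D = rng.standard_normal((5, 8, 8))
W0 = initial_weights(D)
W1 = refine(W0, update_fn=lambda W: 0.1 * W)        # stand-in for the learned MLP
```

Re-applying the clipping and masking after the update keeps the refined matrix inside the same feasible set as the initial estimate.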

This single-step optimization captures the main structural patterns in channel correlations, promoting sparsity and highlighting important channels. By refining the initial estimate into a task-aligned, discriminative weight matrix, the decoder emphasizes informative channels and suppresses irrelevant ones, enhancing both feature discrimination and robustness while remaining consistent with the initial estimation.

3.3. Contextual Feature Refinement Module

Previous studies by Chen et al. [34] show that different backbone layers capture complementary information: shallow layers (e.g., conv3 in ResNet-50) retain high spatial resolution and encode local structures, aiding precise target localization, while deeper layers (e.g., conv4 and conv5) provide strong semantic abstraction, capturing high-level concepts and appearance variations. Accordingly, we select conv3, conv4, and conv5 for multi-scale feature fusion, forming a hierarchically complementary framework. To enhance context perception without increasing parameters, dilated convolutions with rates 2 and 4 are applied in conv4 and conv5, respectively. Spatial downsampling is removed in these stages to maintain consistent spatial sizes, ensuring alignment for multi-layer fusion and preserving both fine-grained details and semantic richness.

We further introduce a context-aware feature optimization module to enhance separability between template and search features. Channel-wise semantics are captured via global average and max pooling, whose outputs are concatenated along the channel dimension, while spatial dependencies are modeled by compressing the feature map into query and key matrices to compute a correlation score over all $N$ pixels. Learnable convolution layers propagate the contextual information, and the resulting channel and spatial cues are combined via an element-wise Hadamard product to highlight salient regions and suppress background noise, mapping the input at the $j$-th pixel to the refined output at the same position.

By jointly modeling channel semantics and spatial dependencies in this integrated manner, the Contextual Feature Refinement Module strengthens salient target representations and suppresses redundant background responses, providing robust, contextually enriched features for subsequent multi-branch tracking and prediction.

3.4. Training and Tracking

3.4.1. Offline Training

During training, the ResNet-50 backbone remains frozen, while the remaining modules, including the dynamic spectral feature learning module and multiple Box-Adaptive prediction heads, are optimized jointly in an end-to-end manner. Training pairs are constructed by sampling template and search regions from hyperspectral videos. The template and search regions are cropped from the original images and resized to their respective fixed input sizes before being fed into the network.

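To exploit multi-scale information from different backbone stages, a hierarchical fusion strategy is applied. Feature maps from conv3, conv4, and conv5 are extracted for classification and regression, and their outputs are combined using learnable weights $\alpha_k$ and $\beta_k$ to produce the final multi-level response maps:

$$\mathbf{P}^{\mathrm{cls}} = \sum_{k=3}^{5} \alpha_k \mathbf{P}^{\mathrm{cls}}_k, \qquad \mathbf{P}^{\mathrm{reg}} = \sum_{k=3}^{5} \beta_k \mathbf{P}^{\mathrm{reg}}_k,$$

where $\mathbf{P}^{\mathrm{cls}}_k$ and $\mathbf{P}^{\mathrm{reg}}_k$ denote the classification and regression outputs from the $k$-th convolutional block, and the weights $\alpha_k$ and $\beta_k$ (initialized to 1) control each layer’s contribution.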
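The hierarchical fusion amounts to a weighted sum of same-sized response maps, one per backbone stage. A small NumPy sketch, with constant stand-in maps and a hypothetical helper name `fuse_levels`, might look like this:

```python
import numpy as np


def fuse_levels(responses, weights):
    """Weighted sum of same-shaped response maps, one per backbone stage."""
    return sum(w * r for w, r in zip(weights, responses))


# Constant stand-ins for the conv3, conv4, and conv5 classification outputs.
cls_maps = [np.full((2, 2), v) for v in (1.0, 2.0, 3.0)]
alpha = [1.0, 1.0, 1.0]                    # weights start at 1, as stated in the text
fused = fuse_levels(cls_maps, alpha)
```

In a trained model the weights would be learned parameters; starting them at 1 makes the initial fusion a plain sum of the three stages.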

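For label assignment, we follow the ellipse sampling strategy [34]. In the search frame, the target is represented by a bounding box with top-left $(g_{x_1}, g_{y_1})$, center $(g_{x_c}, g_{y_c})$, and bottom-right $(g_{x_2}, g_{y_2})$ coordinates, having width $g_w$ and height $g_h$. Two ellipses are generated at the box center: an outer ellipse $E_1$ with semi-axes $a_1$ and $b_1$, and an inner ellipse $E_2$ with semi-axes $a_2$ and $b_2$, where each semi-axis is a fixed fraction of $g_w$ or $g_h$:

$$E_m:\; \frac{(p_i - g_{x_c})^2}{a_m^2} + \frac{(p_j - g_{y_c})^2}{b_m^2} = 1, \qquad m \in \{1, 2\},$$

where $(p_i, p_j)$ represents a candidate position. Points within $E_2$ are labeled positive, points outside $E_1$ negative, and those in between are ignored to prevent ambiguous supervision.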
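The three-way labeling rule can be sketched in a few lines of Python. The outer and inner semi-axis fractions (one half and one quarter of the box size) are assumed defaults in the spirit of the cited ellipse strategy; the exact values used in this work are not recoverable from the text, and the treatment of points lying exactly on a boundary is likewise an assumption.

```python
def ellipse_label(px, py, cx, cy, w, h, outer=0.5, inner=0.25):
    """Return 1 (positive), 0 (negative), or -1 (ignored) for a candidate point.

    (cx, cy) is the box center and (w, h) the box size; `outer` and `inner`
    are the semi-axis fractions of the two ellipses (assumed values).
    """
    def ellipse_value(scale):
        # Left-hand side of the ellipse equation; <= 1 means inside or on the ellipse.
        return ((px - cx) / (scale * w)) ** 2 + ((py - cy) / (scale * h)) ** 2

    if ellipse_value(inner) <= 1.0:
        return 1
    if ellipse_value(outer) > 1.0:
        return 0
    return -1


# Box centered at (50, 50) with width 40 and height 20.
center_label = ellipse_label(50, 50, 50, 50, 40, 20)    # inside the inner ellipse
between_label = ellipse_label(65, 50, 50, 50, 40, 20)   # between the two ellipses
far_label = ellipse_label(80, 50, 50, 50, 40, 20)       # outside the outer ellipse
```

Leaving the band between the two ellipses unlabeled avoids penalizing the classifier on points that only partially cover the target.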

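For each positive point, the regression target encodes its horizontal and vertical offsets relative to the left, top, right, and bottom boundaries of the ground-truth box:

$$d_l = p_i - g_{x_1}, \qquad d_t = p_j - g_{y_1}, \qquad d_r = g_{x_2} - p_i, \qquad d_b = g_{y_2} - p_j.$$

Here, $d_l$ and $d_r$ indicate the horizontal distances to the left and right edges of the ground-truth box, while $d_t$ and $d_b$ correspond to the vertical distances to the top and bottom edges, respectively, for a positive point $(p_i, p_j)$ and a box with top-left corner $(g_{x_1}, g_{y_1})$ and bottom-right corner $(g_{x_2}, g_{y_2})$.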
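As a small worked example of this encoding, the sketch below computes the four edge distances for a point inside a box and inverts them to recover the box, which is how a predicted offset vector is turned back into a bounding box at inference. The function names and the sample coordinates are hypothetical.

```python
def regression_target(px, py, x1, y1, x2, y2):
    """Distances from (px, py) to the left, top, right, and bottom box edges."""
    return (px - x1, py - y1, x2 - px, y2 - py)


def decode_box(px, py, target):
    """Invert regression_target: recover (x1, y1, x2, y2) from the four distances."""
    d_left, d_top, d_right, d_bottom = target
    return (px - d_left, py - d_top, px + d_right, py + d_bottom)


target = regression_target(50, 40, 30, 30, 70, 60)   # point (50, 40), box (30, 30)-(70, 60)
box = decode_box(50, 40, target)
```

All four distances are positive whenever the point lies strictly inside the box, which holds for every positively labeled point.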

To jointly capture hyperspectral characteristics and dynamic target behavior, a multi-task loss function is adopted, combining spectral reconstruction, classification, and regression objectives. In this loss, $Z$ and $X$ correspond to the features extracted from the template and search regions, respectively, each with its own band correlation matrix. Each sample carries an importance weight, two coefficients balance the classification and regression losses, and a third coefficient controls the influence of spectral reconstruction. The first term in the loss guides the dynamic spectral feature learning module to capture physically meaningful band relationships, while the second and third terms supervise classification and precise bounding box regression.

3.4.2. Online Tracking

The initial target template is extracted from the first frame to initialize the tracking process. Using the weights provided by the dynamic spectral feature learning module, the spectral bands are ordered and grouped to generate multiple false-color images. Subsequently, these template images are fed into the contextual feature module to extract multi-scale target features, which serve as references for tracking in the following frames.

For each new frame, the search region is extracted based on the previous target state. Its spectral bands are similarly ranked and grouped into false-color search images. The feature extraction network generates feature maps for each search image, which are paired with the corresponding template feature maps. Each pair is fed into the Box-Adaptive Head, which applies a convolution to the search and template features and combines them through a cross-correlation operation (⋆) to produce classification scores and regression offsets. Each response map provides an independent target estimate, and the final position is determined by fusing these estimates based on the relative importance of each spectral band.
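The benefit of importance-weighted fusion over uniform averaging can be seen in a toy NumPy example. Here each branch contributes one response map and one non-negative importance score; normalizing the scores to sum to one and taking the weighted sum is an assumed fusion rule, and the maps and scores are made up for illustration.

```python
import numpy as np


def fuse_branches(response_maps, branch_scores):
    """response_maps: (B, H, W); branch_scores: (B,) non-negative importance values."""
    weights = np.asarray(branch_scores, dtype=float)
    weights = weights / weights.sum()                    # normalize to sum to one
    maps = np.asarray(response_maps, dtype=float)
    fused = np.tensordot(weights, maps, axes=1)          # weighted sum over branches
    return fused, weights


reliable = np.zeros((3, 3))
reliable[1, 1] = 1.0                                     # peak on the true target
outlier = np.zeros((3, 3))
outlier[0, 0] = 1.2                                      # stronger peak on a distractor

uniform, _ = fuse_branches([reliable, outlier], [1.0, 1.0])
weighted, weights = fuse_branches([reliable, outlier], [3.0, 1.0])
uniform_peak = np.unravel_index(uniform.argmax(), uniform.shape)
weighted_peak = np.unravel_index(weighted.argmax(), weighted.shape)
```

With equal weights the distractor wins (0.6 against 0.5), whereas down-weighting the low-importance branch moves the fused peak back to the target.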

Target localization is achieved by finding the location with the highest response in the classification map and reconstructing the bounding box using the corresponding regression values. To avoid unstable predictions, a cosine window is applied to discourage large positional shifts, and a scale regularization is introduced to prevent abrupt size fluctuations. These strategies collectively improve robustness and maintain tracking accuracy across frames.
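The effect of the cosine window can be sketched as follows. Blending the score map with a 2D Hanning window is a common way to penalize large displacements in Siamese trackers; the blending form and the `window_influence` value of 0.4 are assumptions of this example, and the scale regularization is omitted.

```python
import numpy as np


def localize(cls_map, window_influence=0.4):
    """Blend the score map with a cosine (Hanning) window and return the peak (row, col)."""
    h, w = cls_map.shape
    window = np.outer(np.hanning(h), np.hanning(w))      # 1 at the center, 0 at the border
    penalized = (1.0 - window_influence) * cls_map + window_influence * window
    return np.unravel_index(np.argmax(penalized), penalized.shape)


scores = np.zeros((5, 5))
scores[2, 2] = 0.8                                       # target near the previous position
scores[0, 4] = 0.9                                       # slightly stronger distant distractor

raw_peak = localize(scores, window_influence=0.0)        # no window: picks the distractor
windowed_peak = localize(scores)                         # with window: stays on the target
```

Because the search region is centered on the previous target position, the window favors candidates that imply a small displacement between frames.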