A Multi-Scale Windowed Spatial and Channel Attention Network for High-Fidelity Remote Sensing Image Super-Resolution

Highlights

- We propose MSWSCAN, a multi-scale windowed spatial–channel attention network that better preserves high-frequency textures and structures.

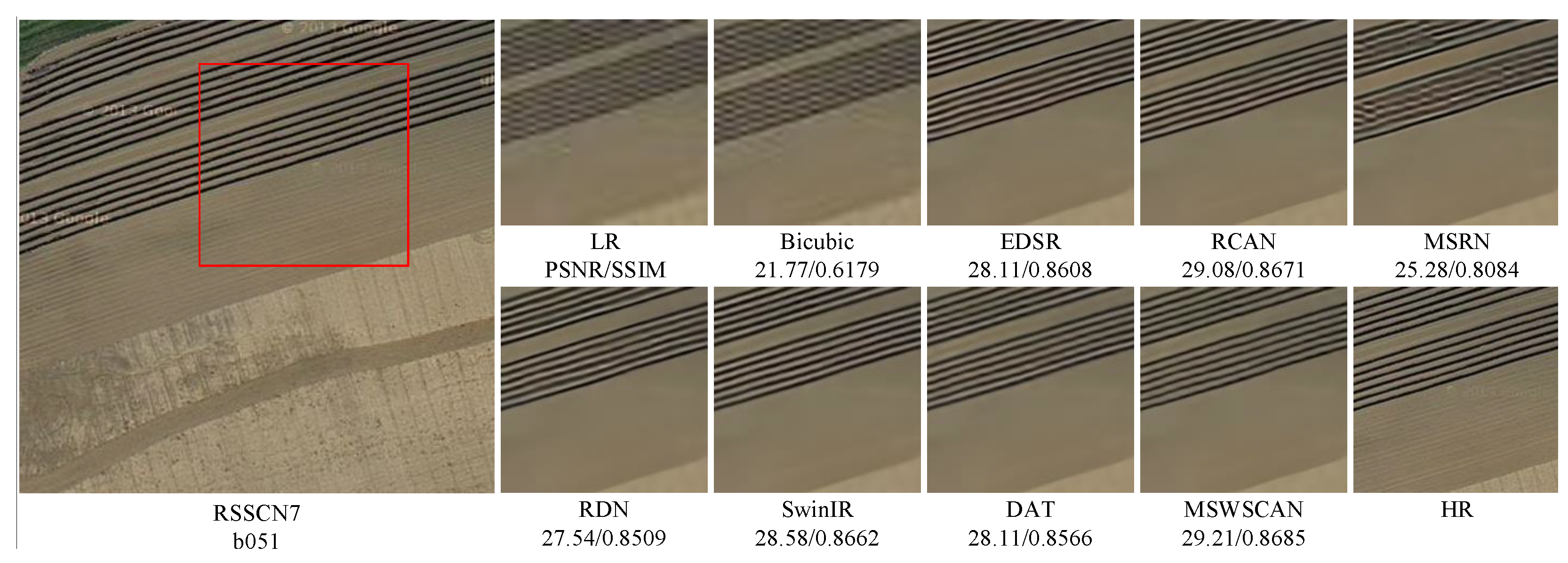

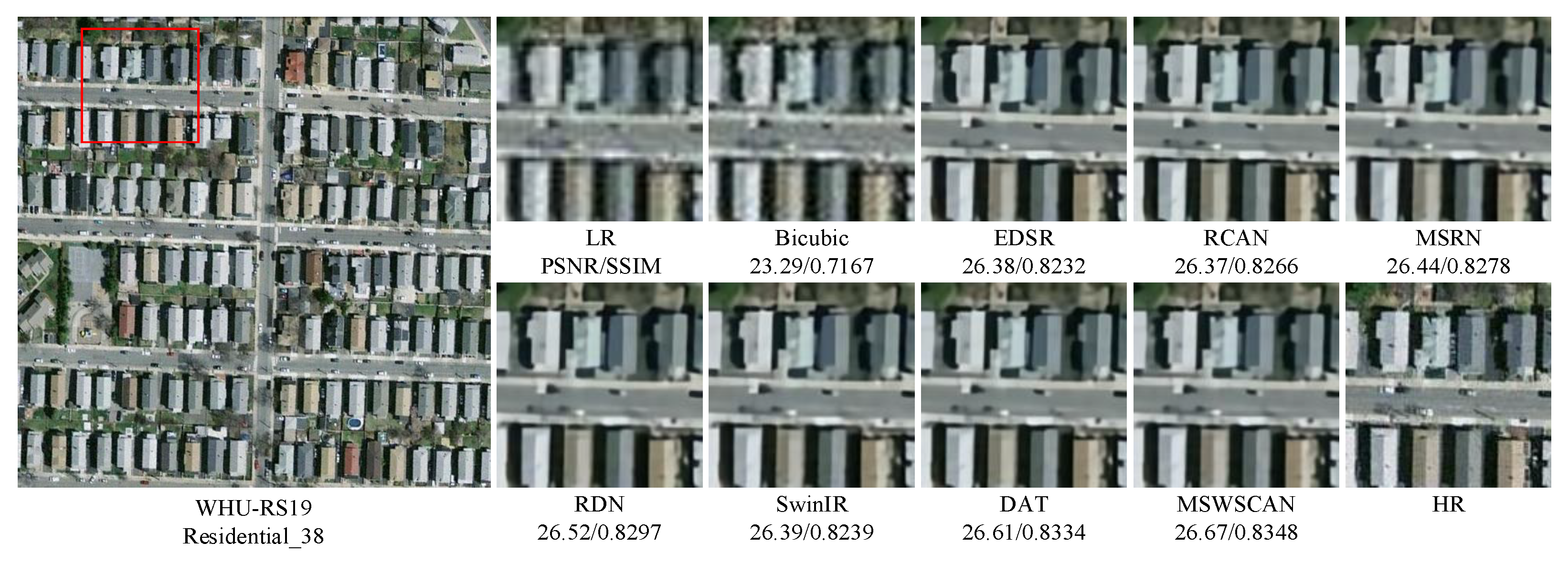

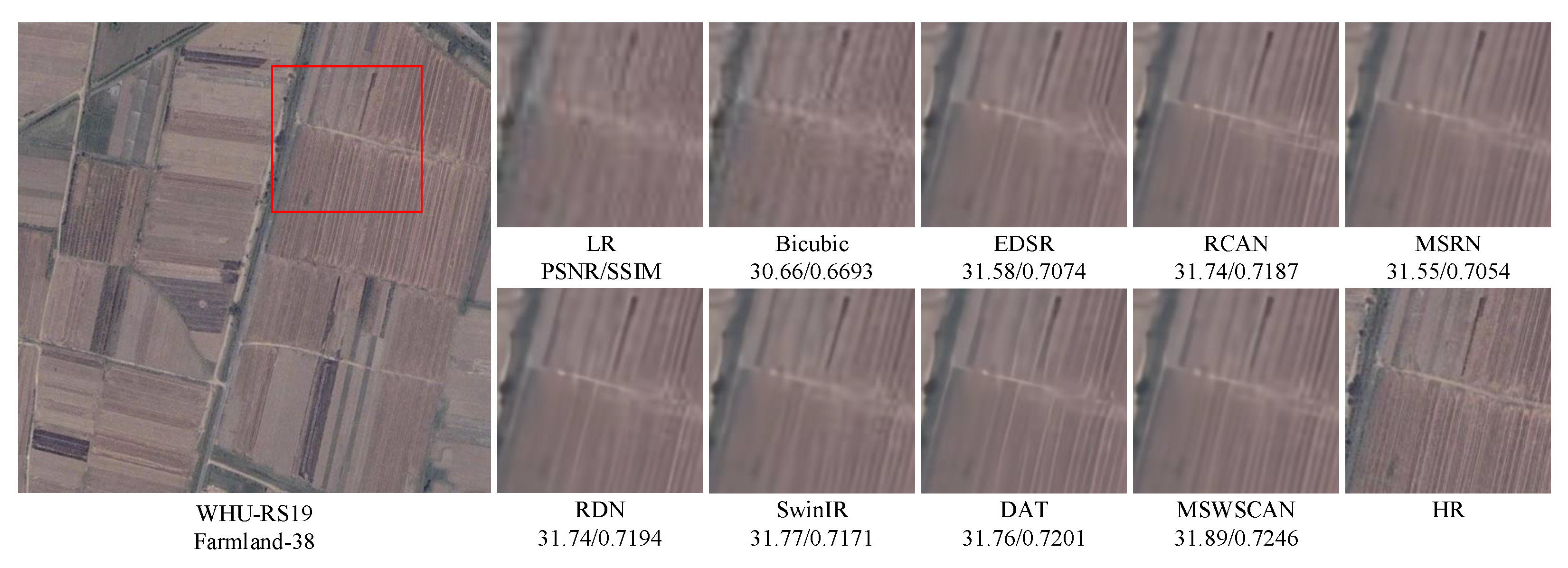

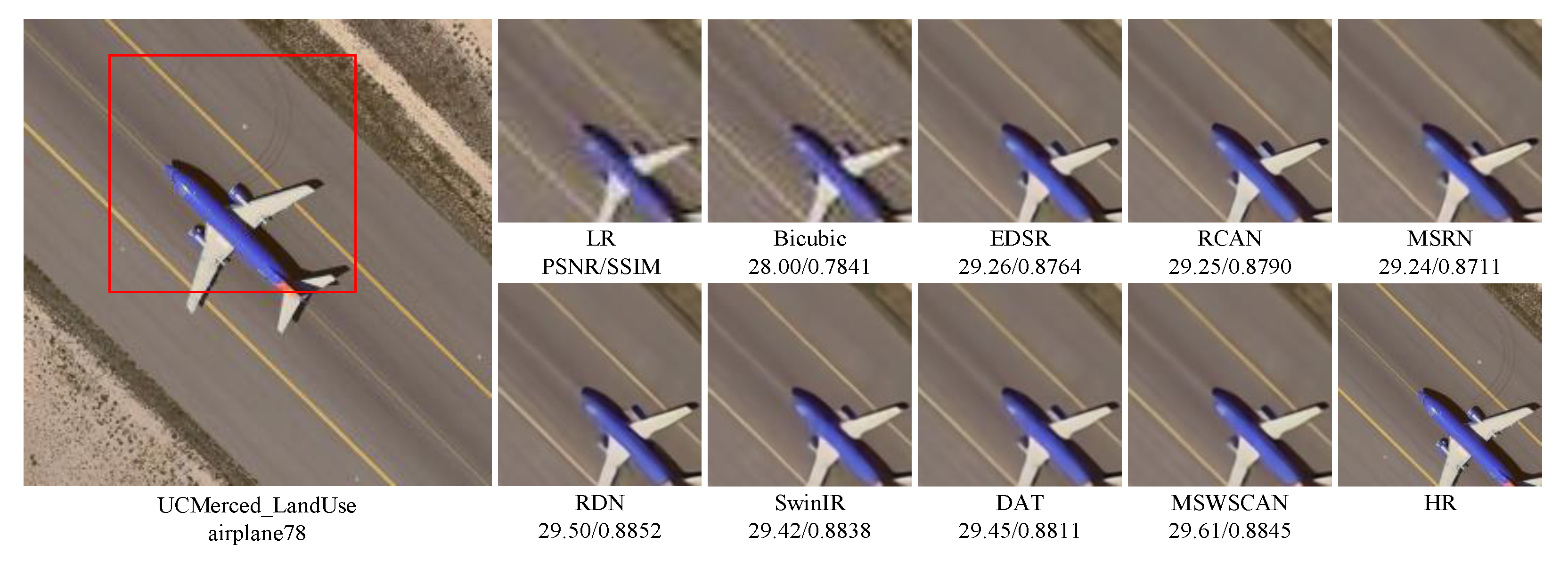

- It achieves the highest PSNR among the compared methods at ×2, ×3, and ×4 on standard benchmarks (WHU-RS19, RSSCN7, UCMerced_LandUse), with reduced artifacts.

- Clearer, sharper reconstructions can improve downstream tasks (e.g., building extraction, urban planning, environmental monitoring).

- The attention and multi-scale designs are general and can be reused in other remote sensing (RS) super-resolution models.

Abstract

1. Introduction

- Multi-scale Windowed Spatial and Channel Attention Network: We design a multi-scale module that uses convolutions with different kernel sizes to extract features with diverse receptive fields and aggregates these multi-scale features to enhance the model's ability to capture high-frequency information.

- Windowed Spatial and Channel Attention Modules: By alternating windowed spatial attention (WSA) and channel attention (CA) modules, the model dynamically re-weights features along both the spatial and channel dimensions, improving its ability to learn fine-grained details and high-frequency features and thus reducing artifacts and structural distortions.

- Feature Fusion Layer: A feature fusion layer (FFL) is introduced before the upsampling and reconstruction module; it integrates features from different depths to strengthen the representational capacity of deep features and improve the quality of the reconstructed images.

- Experimental Validation: Extensive experiments on the WHU-RS19, RSSCN7, and UCMerced_LandUse datasets demonstrate that the proposed Multi-scale Windowed Spatial and Channel Attention Network effectively enhances texture details in remote sensing images, alleviates artifacts and structural distortions, and outperforms the compared methods in peak signal-to-noise ratio (PSNR) at every scale and in structural similarity index (SSIM) in most settings.
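PSNR, the primary metric in the comparison tables, is defined as 10·log10(MAX²/MSE) in decibels. The following is a minimal NumPy sketch, assuming 8-bit images (MAX = 255). The evaluation protocol (for example, RGB versus Y-channel scoring or border cropping) is not given in this section, so the `crop` parameter is a hypothetical option rather than the paper's setting.

```python
import numpy as np

def psnr(reference: np.ndarray, estimate: np.ndarray,
         max_val: float = 255.0, crop: int = 0) -> float:
    """Peak signal-to-noise ratio in dB between two equally shaped images.

    `crop` optionally removes `crop` pixels from every border before scoring
    (a common SR convention; whether MSWSCAN's evaluation does this is assumed).
    """
    if reference.shape != estimate.shape:
        raise ValueError("images must have the same shape")
    ref = reference.astype(np.float64)
    est = estimate.astype(np.float64)
    if crop > 0:
        ref = ref[crop:-crop, crop:-crop, ...]
        est = est[crop:-crop, crop:-crop, ...]
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

# A uniform error of one grey level gives MSE = 1, so PSNR = 10*log10(255^2).
hr = np.full((8, 8), 100, dtype=np.uint8)
sr = np.full((8, 8), 101, dtype=np.uint8)
print(round(psnr(hr, sr), 2))  # 48.13
```

Because PSNR is logarithmic in MSE, a 0.05 dB gain (e.g., 31.59 vs. 31.54 dB for MSWSCAN vs. DAT at ×2 in the first comparison table) corresponds to roughly a 1.1% reduction in MSE.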

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

3. Materials and Methods

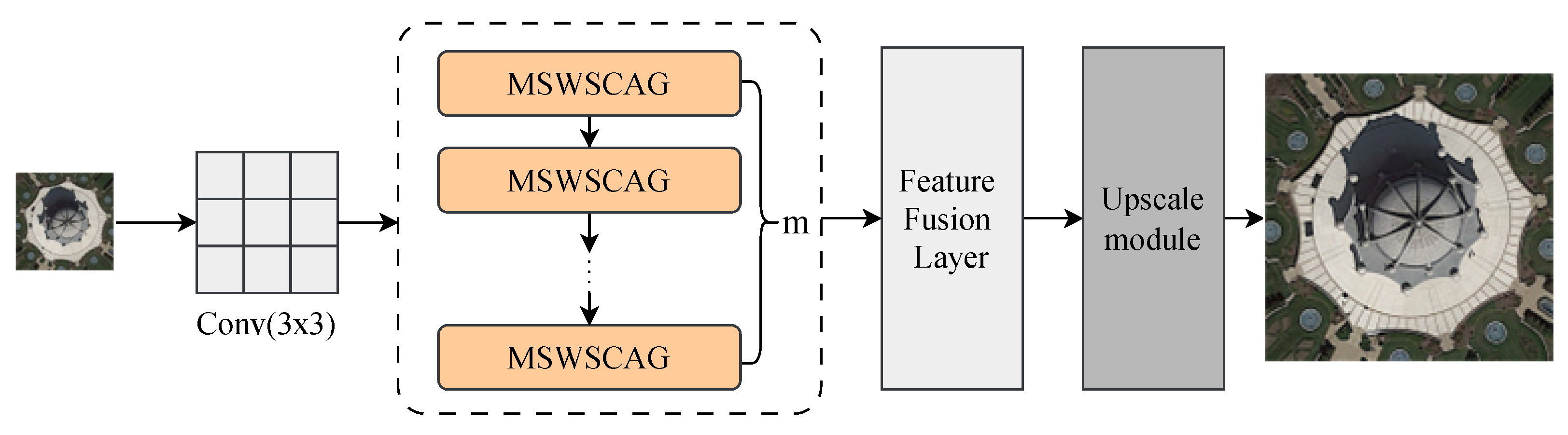

3.1. Network Architecture Design

3.1.1. Shallow Feature Extraction Module

3.1.2. Deep Feature Extraction Module

3.1.3. Feature Fusion Layer (FFL)
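The FFL is described as integrating features from different network depths before upsampling. One common way to realize this, used here purely as an assumption in the style of RDN's global feature fusion, is to concatenate the outputs of every cascaded MSWSCAG along the channel axis, mix them with a 1×1 convolution, and add a long skip from the shallow features. The function names and the residual connection are hypothetical.

```python
import numpy as np

def conv1x1(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C_in, H, W) map; weight (C_out, C_in), bias (C_out,)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def feature_fusion(group_outputs, shallow, weight, bias):
    """Hypothetical FFL: concat per-depth features, fuse with 1x1 conv, add shallow skip."""
    stacked = np.concatenate(group_outputs, axis=0)  # (N*C, H, W)
    fused = conv1x1(stacked, weight, bias)           # (C, H, W)
    return fused + shallow                           # long residual (assumed)

rng = np.random.default_rng(0)
# N = 6 groups: the depth whose ablation scores match the main MSWSCAN results.
C, H, W, N = 4, 8, 8, 6
shallow = rng.standard_normal((C, H, W))
outputs = [rng.standard_normal((C, H, W)) for _ in range(N)]
w = rng.standard_normal((C, N * C)) * 0.1
b = np.zeros(C)
print(feature_fusion(outputs, shallow, w, b).shape)  # (4, 8, 8)
```

The 1×1 fusion lets every output channel draw on all depths at once, at a cost of N·C·C + C weights.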

3.1.4. Upsampling and Reconstruction

3.2. Multi-Scale Windowed Spatial and Channel Attention Group (MSWSCAG) Module

- One MS module.

- One LayerNorm (LN) layer.

- One WSCAG module.

- Two convolutional layers.
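The MS module listed above is described as running convolutions with different kernel sizes in parallel and aggregating the resulting multi-scale features. The following is a minimal NumPy sketch under stated assumptions: three branches with hypothetical kernel sizes 3, 5, and 7, "same" zero padding, a ReLU per branch, and aggregation by channel concatenation followed by a 1×1 fusion. The actual kernel sizes, branch count, and aggregation rule are not given in this section.

```python
import numpy as np

def conv2d_same(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """'Same' 2-D cross-correlation, as in deep-learning frameworks.

    x: (C_in, H, W); weight: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = weight.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    _, h, w = x.shape
    out = np.zeros((c_out, h, w))
    for i in range(k):  # accumulate one kernel tap at a time
        for j in range(k):
            patch = xp[:, i:i + h, j:j + w]  # shifted (C_in, H, W) view
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], patch)
    return out

def multi_scale_block(x, branch_weights, fuse_weight):
    """Hypothetical MS module: parallel k x k convs, concat, 1x1 fusion."""
    feats = [np.maximum(conv2d_same(x, wk), 0.0) for wk in branch_weights]
    cat = np.concatenate(feats, axis=0)  # (num_branches * C, H, W)
    return np.einsum("oc,chw->ohw", fuse_weight, cat)

rng = np.random.default_rng(0)
C = 4
x = rng.standard_normal((C, 16, 16))
kernel_sizes = (3, 5, 7)  # hypothetical
branch_ws = [rng.standard_normal((C, C, k, k)) * 0.1 for k in kernel_sizes]
fuse_w = rng.standard_normal((C, C * len(kernel_sizes))) * 0.1
y = multi_scale_block(x, branch_ws, fuse_w)
print(y.shape)  # (4, 16, 16)
```

Each k×k branch sees a k×k neighbourhood, so a single block mixes several receptive-field sizes; the trade-off is that branch parameters grow with k².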

3.3. Windowed Spatial and Channel Attention Group (WSCAG) Module

3.3.1. WSCAG Module

3.3.2. WSCA Module

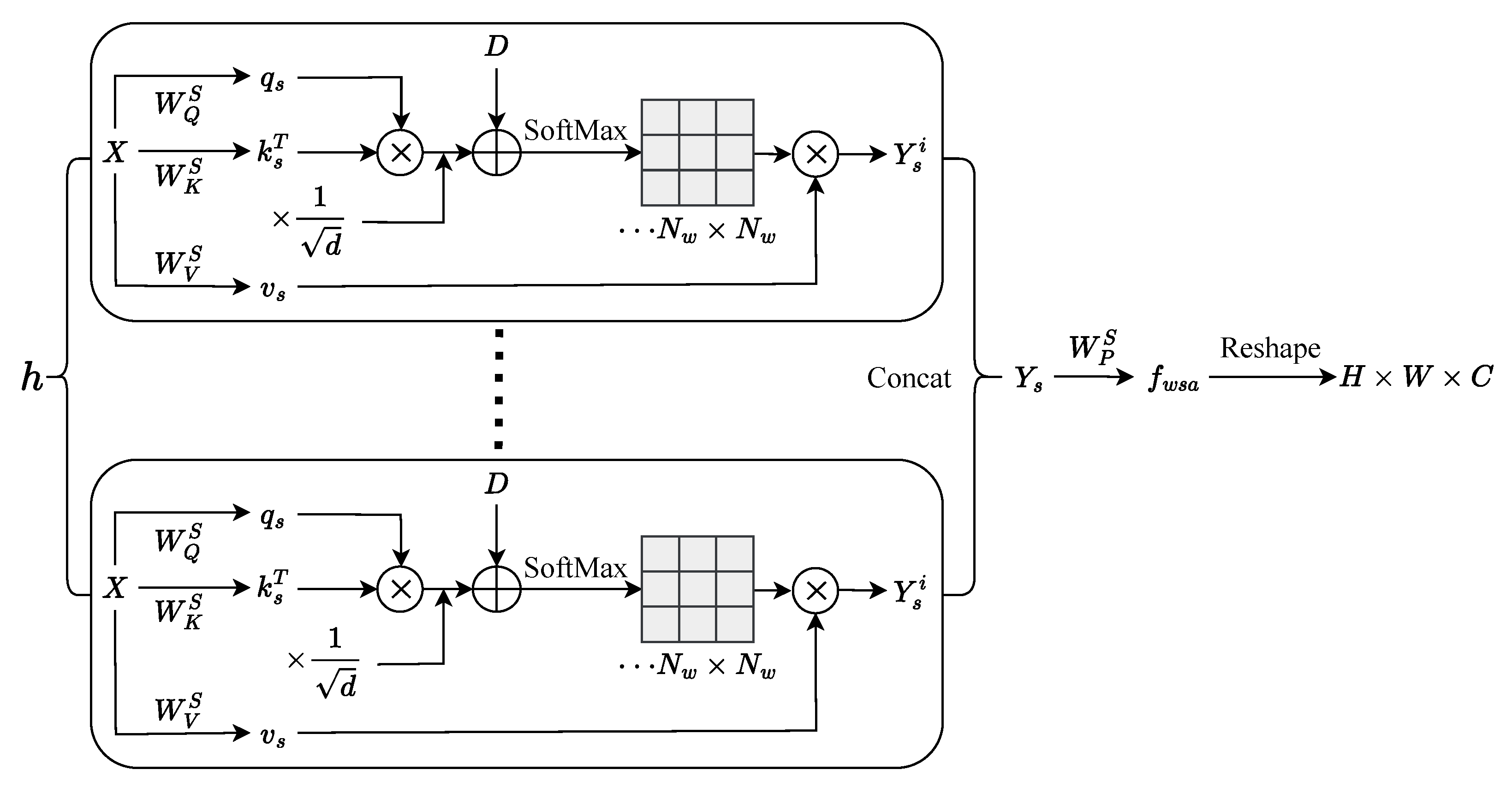

3.3.3. WSA Module

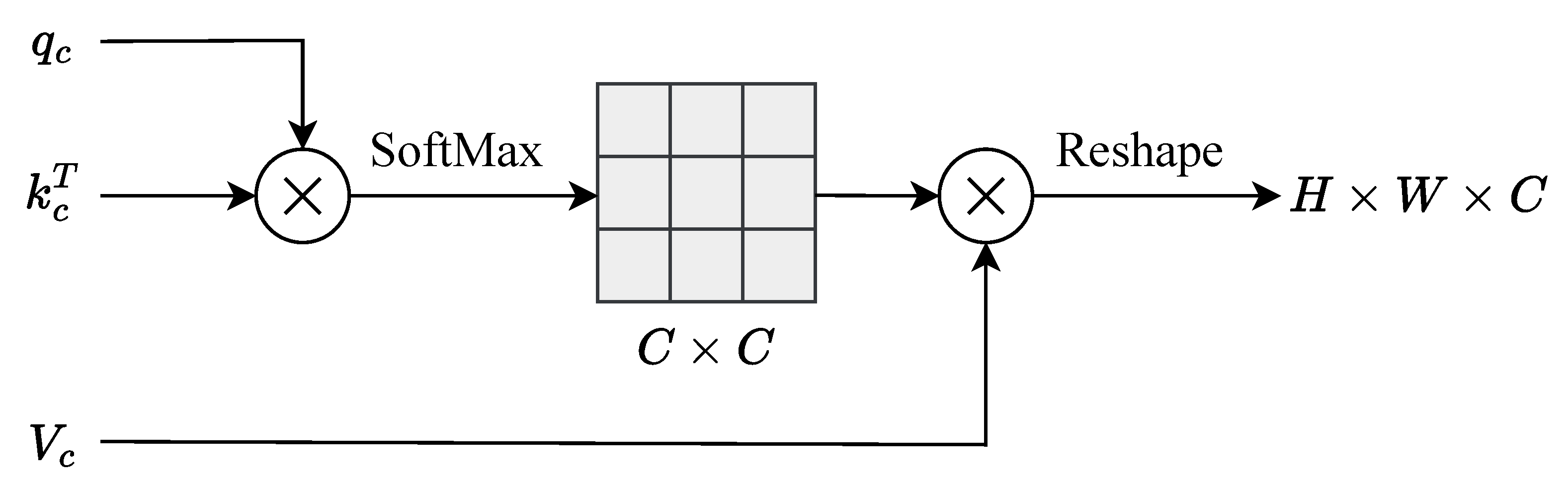
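The WSA module restricts spatial attention to local windows. Assuming a Swin-style formulation, as in SwinIR and DAT (both among the compared methods), the feature map is split into non-overlapping M×M windows and scaled dot-product self-attention is computed independently inside each window. For a fixed M, the cost therefore grows linearly with image area rather than quadratically. The sketch below uses a single head, no relative position bias, no window shifting, and hypothetical projection matrices. It is not the paper's exact module.

```python
import numpy as np

def window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """(H, W, C) -> (num_windows, m*m, C); H and W must be divisible by m."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)

def window_reverse(windows: np.ndarray, m: int, h: int, w: int) -> np.ndarray:
    """Inverse of window_partition: (num_windows, m*m, C) -> (H, W, C)."""
    c = windows.shape[-1]
    x = windows.reshape(h // m, w // m, m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def window_self_attention(x, wq, wk, wv, m):
    """Single-head self-attention computed independently within each m x m window."""
    h, w, _ = x.shape
    win = window_partition(x, m)                              # (nW, m*m, C)
    q, k, v = win @ wq, win @ wk, win @ wv                    # (nW, m*m, d)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])  # (nW, m*m, m*m)
    out = softmax(scores) @ v                                 # (nW, m*m, d)
    return window_reverse(out, m, h, w)

rng = np.random.default_rng(0)
H, W, C, M = 8, 8, 4, 4  # hypothetical sizes; M is the window size
x = rng.standard_normal((H, W, C))
wq, wk, wv = (rng.standard_normal((C, C)) * 0.5 for _ in range(3))
y = window_self_attention(x, wq, wk, wv, M)
print(y.shape)  # (8, 8, 4)
```

Because attention never crosses window borders, perturbing a pixel changes outputs only inside its own window. Shifted windows (as in Swin) are the usual remedy for this isolation.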

3.3.4. CA Module
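A common channel attention design in SR networks is RCAN-style squeeze-and-excitation. Global average pooling summarizes each channel, a two-layer bottleneck with reduction ratio r produces per-channel weights through a sigmoid, and the features are rescaled channel-wise. The sketch below assumes this formulation and a hypothetical r. The paper's CA module may differ in its details.

```python
import numpy as np

def channel_attention(x, w_down, b_down, w_up, b_up):
    """RCAN/SE-style channel attention on x of shape (C, H, W).

    w_down: (C // r, C), w_up: (C, C // r).
    Returns (x rescaled per channel, channel weights of shape (C,)).
    """
    s = x.mean(axis=(1, 2))                       # squeeze: (C,) channel descriptors
    z = np.maximum(w_down @ s + b_down, 0.0)      # bottleneck + ReLU: (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w_up @ z + b_up)))  # excitation: sigmoid weights in (0, 1)
    return x * a[:, None, None], a

rng = np.random.default_rng(0)
C, r = 8, 4  # hypothetical channel count and reduction ratio
x = rng.standard_normal((C, 16, 16))
w_down, b_down = rng.standard_normal((C // r, C)), np.zeros(C // r)
w_up, b_up = rng.standard_normal((C, C // r)), np.zeros(C)
y, weights = channel_attention(x, w_down, b_down, w_up, b_up)
print(y.shape, weights.shape)  # (8, 16, 16) (8,)
```

Window attention re-weights positions, while this block re-weights whole feature maps. Alternating the two is what lets the network emphasize both where and which features matter.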

4. Results

4.1. Experimental Setup

4.1.1. Data Setup

4.1.2. Model Configuration

4.1.3. Training Configuration

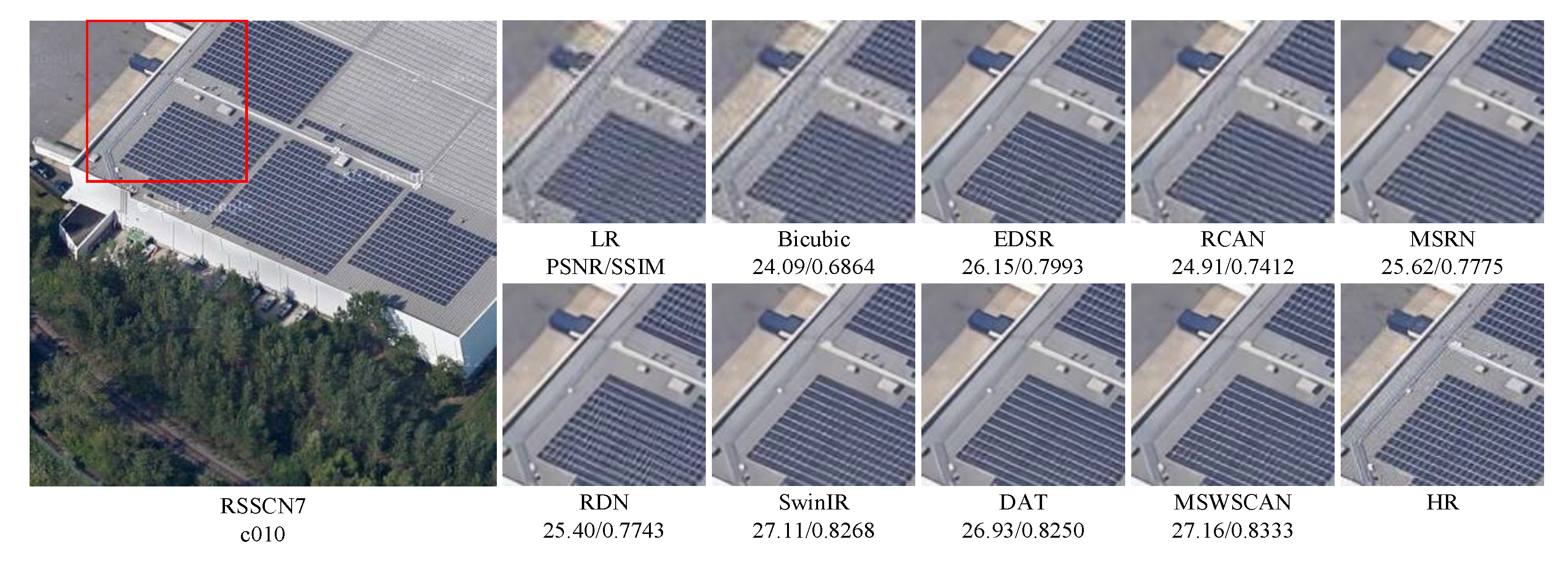

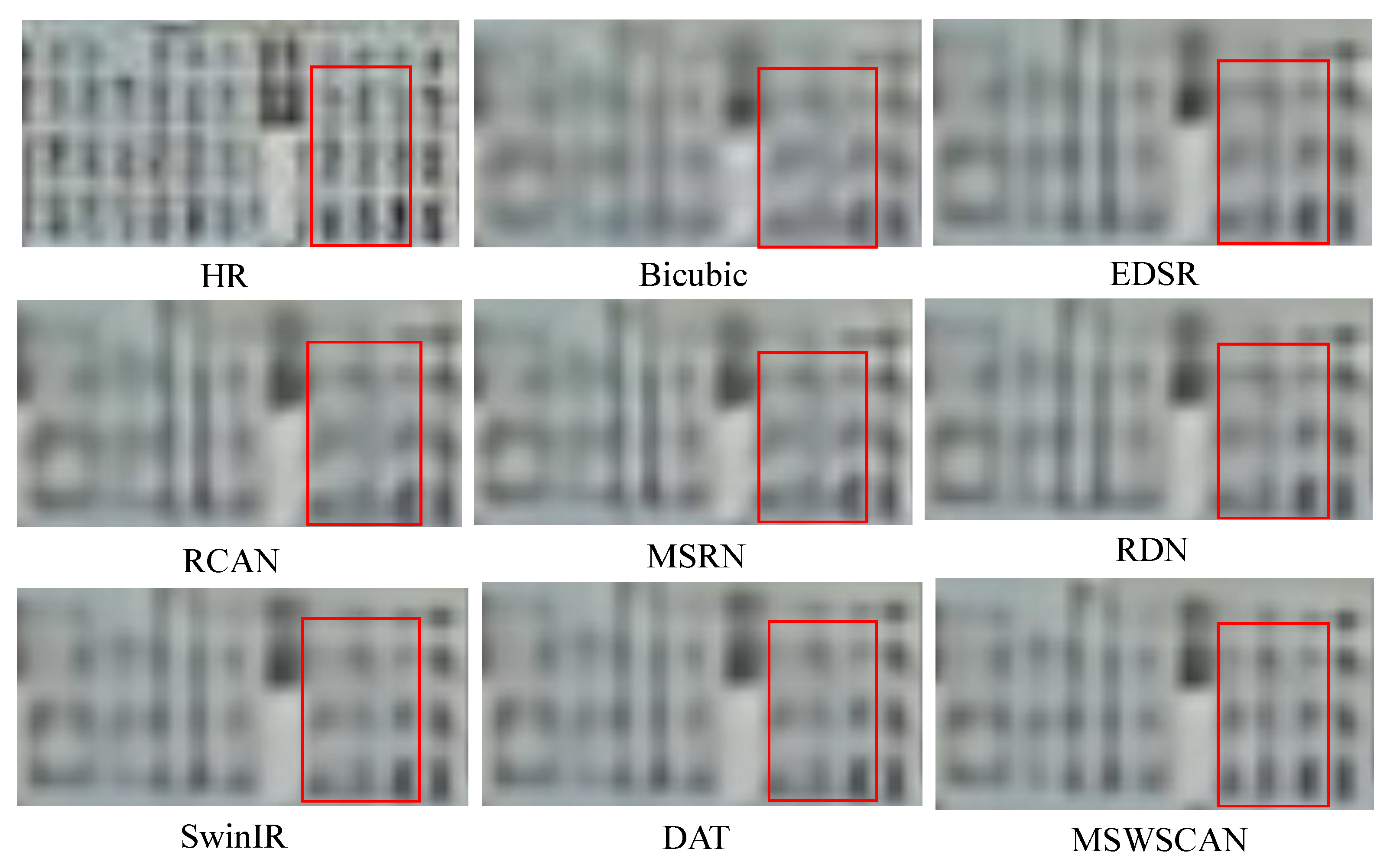

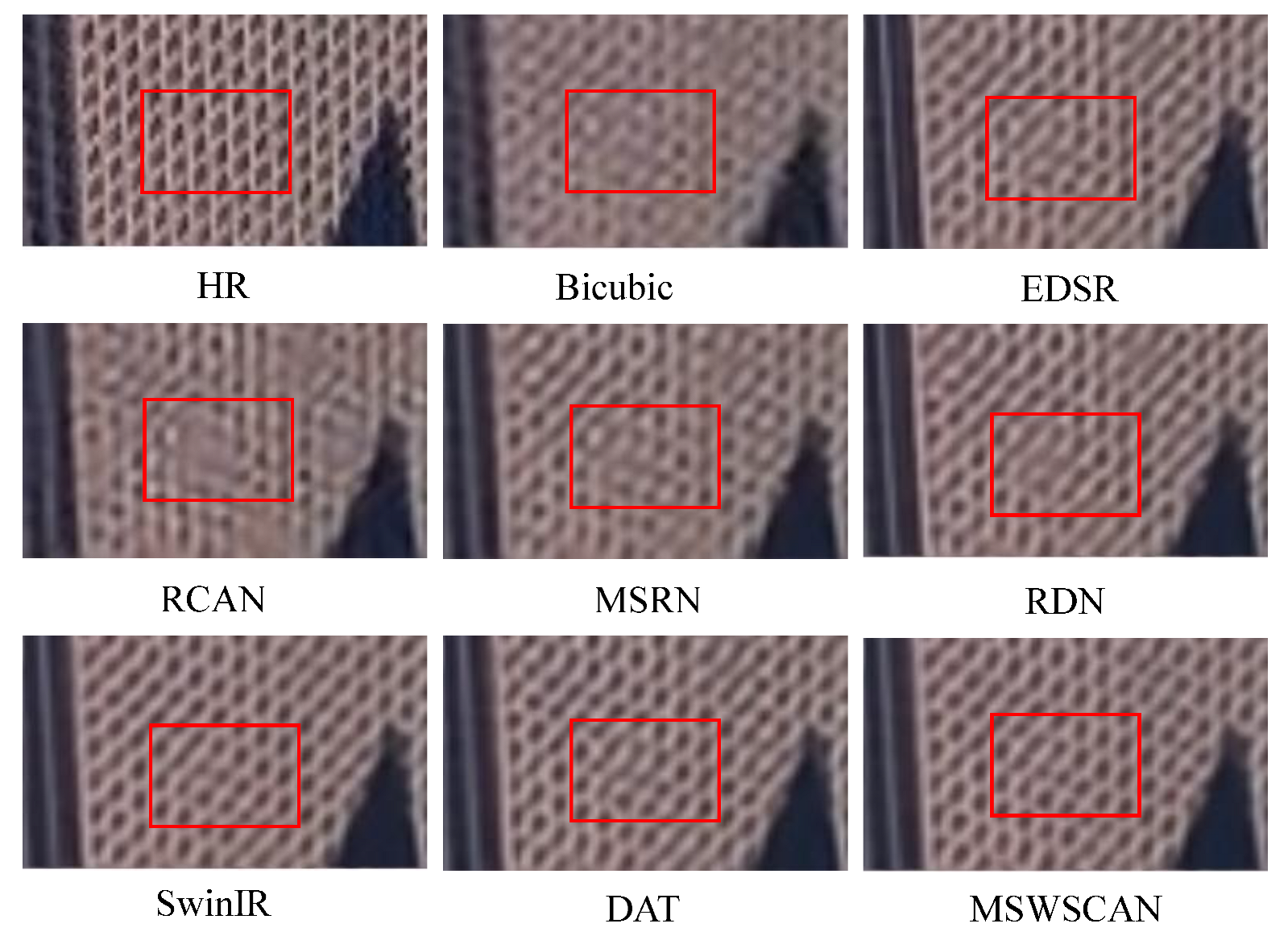

4.2. Analysis of Experimental Results

Limitations and Failure Cases

4.3. Component Ablation Analysis

4.3.1. The Impact of the Number of Cascaded MSWSCAG Modules on Network Performance

4.3.2. Influence of MSWSCAG Internal Module on Network Performance

4.3.3. Influence of Feature Fusion Layer on Network Performance

4.3.4. Complexity and Runtime

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Brown, L.G. A survey of image registration techniques. ACM Comput. Surv. (CSUR) 1992, 24, 325–376.

- Yang, S.; Kim, Y.; Jeong, J. Fine edge-preserving technique for display devices. IEEE Trans. Consum. Electron. 2008, 54, 1761–1769.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Tsai, R.Y.; Huang, T.S. Multiframe image restoration and registration. Adv. Comput. Vis. Image Process. 1984, 1, 317–339.

- Malkin, K.; Robinson, C.; Hou, L.; Soobitsky, R.; Czawlytko, J.; Samaras, D.; Saltz, J.; Joppa, L.; Jojic, N. Label super-resolution networks. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 7–11 May 2018.

- Li, Z.; Zhang, H.; Lu, F.; Xue, R.; Yang, G.; Zhang, L. Breaking the resolution barrier: A low-to-high network for large-scale high-resolution land-cover mapping using low-resolution labels. ISPRS J. Photogramm. Remote Sens. 2022, 192, 244–267.

- Li, Z.; He, W.; Li, J.; Lu, F.; Zhang, H. Learning without Exact Guidance: Updating Large-scale High-resolution Land Cover Maps from Low-resolution Historical Labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 27717–27727.

- Irani, M.; Peleg, S. Improving resolution by image registration. CVGIP Graph. Model. Image Process. 1991, 53, 231–239.

- Kim, S.P.; Bose, N.K.; Valenzuela, H.M. Recursive reconstruction of high resolution image from noisy undersampled multiframe. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1013–1027.

- Rhee, S.; Kang, M.G. Discrete cosine transform based regularized high-resolution image reconstruction algorithm. Opt. Eng. 1999, 38, 1348–1356.

- Nguyen, N.; Milanfar, P. An efficient wavelet-based algorithm for image superresolution. In Proceedings of the 2000 International Conference on Image Processing (Cat. No. 00CH37101), Vancouver, BC, Canada, 10–13 September 2000; pp. 351–354.

- Stark, H.; Oskoui, P. High-resolution image recovery from image-plane arrays, using convex projections. J. Opt. Soc. Am. A 1989, 6, 1715–1726.

- Schultz, R.R.; Stevenson, R.L. A Bayesian approach to image expansion for improved definition. IEEE Trans. Image Process. 1994, 3, 233–242.

- Elad, M.; Feuer, A. Restoration of a single superresolution image from several blurred, noisy, and undersampled measured images. IEEE Trans. Image Process. 1997, 6, 1646–1658.

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep back-projection networks for super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1664–1673.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV. Springer: Berlin/Heidelberg, Germany, 2014; pp. 184–199.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II. Springer: Berlin/Heidelberg, Germany, 2016; pp. 391–407.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1874–1883.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

- Qiu, Y.; Wang, R.; Tao, D.; Cheng, J. Embedded block residual network: A recursive restoration model for single-image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4180–4189.

- Liu, J.; Zhang, W.; Tang, Y.; Tang, J.; Wu, G. Residual feature aggregation network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2359–2368.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Li, J.; Fang, F.; Mei, K.; Zhang, G. Multi-scale residual network for image super-resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 517–532.

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.-H. Deep Laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

- Dai, T.; Cai, J.; Zhang, Y.; Xia, S.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11065–11074.

- Niu, B.; Wen, W.; Ren, W.; Zhang, X.; Yang, L.; Wang, S.; Zhang, K.; Cao, X.; Shen, H. Single image super-resolution via a holistic attention network. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XII. Springer: Berlin/Heidelberg, Germany, 2020; pp. 191–207.

- Zhang, Y.; Wei, D.; Qin, C.; Wang, H.; Pfister, H.; Fu, Y. Context reasoning attention network for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4278–4287.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

- Chen, Z.; Zhang, Y.; Gu, J.; Kong, L.; Yang, X.; Yu, F. Dual aggregation transformer for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 12312–12321.

- Guo, Y.; Chen, J.; Wang, J.; Chen, Q.; Cao, J.; Deng, Z.; Xu, Y.; Tan, M. Closed-loop matters: Dual regression networks for single image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5407–5416.

- Mao, X.; Shen, C.; Yang, Y.B. Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In Proceedings of the 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016; Volume 29.

- Tai, Y.; Yang, J.; Liu, X.; Xu, C. MemNet: A persistent memory network for image restoration. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4539–4547.

| Model (RSSCN7) | PSNR (dB)/SSIM (×2) | PSNR (dB)/SSIM (×3) | PSNR (dB)/SSIM (×4) |
|---|---|---|---|
| Bicubic | 30.03/0.7721 | 27.78/0.6626 | 26.93/0.6165 |
| EDSR | 31.43/0.8161 | 28.37/0.6986 | 28.04/0.6628 |
| RCAN | 31.08/0.8024 | 28.39/0.6993 | 28.06/0.6632 |
| MSRN | 31.44/0.8142 | 28.35/0.7090 | 28.08/0.6637 |
| RDN | 31.48/0.8173 | 28.41/0.6995 | 28.06/0.6652 |
| SwinIR | 31.47/0.8169 | 28.41/0.6993 | 28.04/0.6648 |
| DAT | 31.54/0.8186 | 28.43/0.7009 | 28.09/0.6675 |
| MSWSCAN | 31.59/0.8204 | 28.48/0.7020 | 28.13/0.6696 |

| Model (WHU-RS19) | PSNR (dB)/SSIM (×2) | PSNR (dB)/SSIM (×3) | PSNR (dB)/SSIM (×4) |
|---|---|---|---|
| Bicubic | 32.52/0.8488 | 29.54/0.7462 | 28.22/0.6829 |
| EDSR | 34.76/0.8932 | 31.41/0.8015 | 29.70/0.7408 |
| RCAN | 34.00/0.8804 | 31.40/0.8054 | 29.81/0.7452 |
| MSRN | 34.70/0.8914 | 31.33/0.8006 | 29.79/0.7412 |
| RDN | 34.78/0.8939 | 31.39/0.8402 | 29.83/0.7443 |
| SwinIR | 34.79/0.8936 | 31.43/0.8024 | 29.85/0.7436 |
| DAT | 34.84/0.8941 | 31.47/0.8059 | 29.90/0.7472 |
| MSWSCAN | 34.89/0.8952 | 31.53/0.8078 | 29.94/0.7470 |

| Model (UCMerced_LandUse) | PSNR (dB)/SSIM (×2) | PSNR (dB)/SSIM (×3) | PSNR (dB)/SSIM (×4) |
|---|---|---|---|
| Bicubic | 29.38/0.8125 | 26.70/0.6995 | 25.00/0.6210 |
| EDSR | 32.05/0.8698 | 28.01/0.7612 | 26.93/0.6993 |
| RCAN | 31.20/0.8522 | 28.03/0.7635 | 27.01/0.7039 |
| MSRN | 31.93/0.8675 | 27.97/0.7598 | 26.91/0.6991 |
| RDN | 32.09/0.8704 | 28.01/0.7627 | 27.00/0.7026 |
| SwinIR | 32.11/0.8713 | 28.00/0.7629 | 27.02/0.7028 |
| DAT | 32.17/0.8721 | 28.07/0.7651 | 27.04/0.7056 |
| MSWSCAN | 32.23/0.8739 | 28.12/0.7672 | 27.11/0.7089 |

| Dataset | N | Params (M) | PSNR (dB)/SSIM (×2) | PSNR (dB)/SSIM (×3) | PSNR (dB)/SSIM (×4) |
|---|---|---|---|---|---|
| RSSCN7 | 2 | 5.51 | 31.24/0.7715 | 28.14/0.6472 | 27.81/0.6205 |
| | 4 | 10.39 | 31.48/0.8016 | 28.35/0.6758 | 27.98/0.6492 |
| | 6 | 15.26 | 31.59/0.8204 | 28.48/0.7020 | 28.13/0.6696 |
| | 8 | 20.14 | 31.62/0.8216 | 28.49/0.7018 | 28.12/0.6697 |
| WHU-RS19 | 2 | 5.51 | 34.52/0.8397 | 31.11/0.7505 | 29.57/0.6921 |
| | 4 | 10.39 | 34.75/0.8712 | 31.36/0.7821 | 29.80/0.7232 |
| | 6 | 15.26 | 34.89/0.8952 | 31.53/0.8078 | 29.94/0.7470 |
| | 8 | 20.14 | 34.91/0.8951 | 31.55/0.8086 | 29.92/0.7465 |
| UCMerced_LandUse | 2 | 5.51 | 31.88/0.8152 | 27.78/0.7099 | 26.64/0.6496 |
| | 4 | 10.39 | 32.04/0.8498 | 27.98/0.7437 | 26.96/0.6840 |
| | 6 | 15.26 | 32.23/0.8739 | 28.12/0.7672 | 27.11/0.7089 |
| | 8 | 20.14 | 32.25/0.8745 | 28.13/0.7672 | 27.12/0.7096 |
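In the depth ablation, the M values grow by almost exactly the same amount for every two added MSWSCAG modules. This is about 2.44 M per module, which agrees with the 2.44 M listed for the full MSWSCAG in the internal-module ablation. Reading M as millions of parameters (an interpretation consistent with that per-module figure), the short worked check below fits a line to the four points. The split into "per-module" and "base" parameters is an inference from the numbers, not a breakdown given in the source.

```python
# Depth ablation: N cascaded MSWSCAG modules -> M (read as millions of parameters).
depth_to_params = {2: 5.51, 4: 10.39, 6: 15.26, 8: 20.14}

depths = sorted(depth_to_params)
# Parameter increment per added module between consecutive depths.
per_module = [
    (depth_to_params[b] - depth_to_params[a]) / (b - a)
    for a, b in zip(depths, depths[1:])
]
print([round(p, 3) for p in per_module])

# Least-squares line M = base + slope * N over the four points.
n = len(depths)
mean_n = sum(depths) / n
mean_m = sum(depth_to_params.values()) / n
slope = sum((d - mean_n) * (depth_to_params[d] - mean_m) for d in depths) / sum(
    (d - mean_n) ** 2 for d in depths
)
base = mean_m - slope * mean_n  # parameters outside the MSWSCAG stack
print(round(slope, 3), round(base, 3))
```

The fit gives about 2.438 M per module plus about 0.64 M for the remaining layers. Going from N = 6 to N = 8 adds roughly 4.9 M parameters, yet changes PSNR by at most +0.03 dB (and slightly negatively in some cells). This is consistent with the N = 6 configuration used in the main comparison tables.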

| Group | MS | WSCA | MSWSA | MSCA | MSWSCAG |
|---|---|---|---|---|---|
| MS | √ | × | √ | √ | √ |
| WSA | × | √ | √ | × | √ |
| CA | × | √ | × | √ | √ |
| Parameters (M) | 1.37 | 0.86 | 1.80 | 1.80 | 2.44 |
| PSNR/SSIM | 32.05/0.8677 | 32.11/0.8719 | 32.15/0.8721 | 32.14/0.8722 | 32.23/0.8739 |

| Feature Fusion Layer (FFL) | × | √ |
|---|---|---|
| Parameters (k) | 4.16 | 4.16 |
| PSNR/SSIM | 34.86/0.8944 | 34.89/0.8952 |

Inference at HR 256 × 256; training at LR 64 × 64 with batch size b = 2.

| Model | Inference Lat (ms) | Inference Thr (img/s) | Inference Mem (GB) | Training Iter (ms) | Training It/s | Training Mem (GB) | Training Epoch (min) |
|---|---|---|---|---|---|---|---|
| MSWSCAN | 558.38 | 1.79 | 3.03 | 198.98 | 5.03 | 6.39 | 3.31 |
| SwinIR | 315.15 | 3.17 | 2.61 | 140.42 | 7.12 | 4.97 | 2.34 |
| DAT | 544.59 | 1.84 | 3.15 | 181.25 | 5.52 | 7.59 | 3.02 |
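The throughput columns in the complexity table match the reciprocal of the per-image latency and per-iteration time (1000 / ms), which implies one image per inference pass. The epoch times are consistent with roughly 1,000 training iterations per epoch. The check below recomputes these quantities from the table; the 1,000-iteration figure is inferred from the numbers, not stated in the source.

```python
# Values from the complexity table: (latency ms, img/s, iter ms, it/s, epoch min).
rows = {
    "MSWSCAN": (558.38, 1.79, 198.98, 5.03, 3.31),
    "SwinIR": (315.15, 3.17, 140.42, 7.12, 2.34),
    "DAT": (544.59, 1.84, 181.25, 5.52, 3.02),
}

for name, (lat_ms, thr, iter_ms, its, epoch_min) in rows.items():
    derived_thr = 1000.0 / lat_ms   # images per second, one image per pass
    derived_its = 1000.0 / iter_ms  # training iterations per second
    iters_per_epoch = epoch_min * 60.0 * 1000.0 / iter_ms
    print(f"{name}: {derived_thr:.2f} img/s (table {thr}), "
          f"{derived_its:.2f} it/s (table {its}), ~{iters_per_epoch:.0f} iters/epoch")
```

On these figures, MSWSCAN's inference latency is about 1.77× that of SwinIR and about 2.5% above that of DAT.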

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, X.; Xiang, X.; Wang, J.; Wang, L.; Gao, X.; Chen, Y.; Liu, J.; He, P.; Han, J.; Li, Z. A Multi-Scale Windowed Spatial and Channel Attention Network for High-Fidelity Remote Sensing Image Super-Resolution. Remote Sens. 2025, 17, 3653. https://doi.org/10.3390/rs17213653
