Machine and Deep Learning for Wetland Mapping and Bird-Habitat Monitoring: A Systematic Review of Remote-Sensing Applications (2015–April 2025)

Highlights

- Across 121 studies (2015–April 2025), machine learning, especially Random Forest, is the most widely used approach, while deep learning achieves higher accuracy for complex wetlands, particularly when fusing Sentinel-1 radar with Sentinel-2 optical imagery.

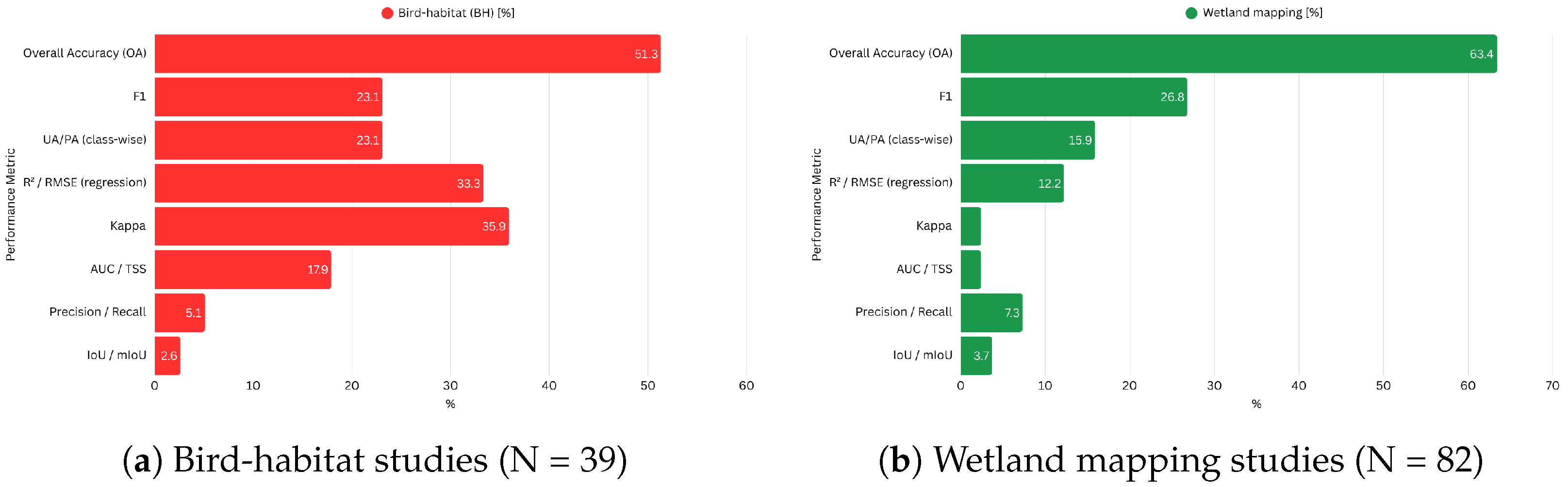

- Coverage is uneven: China and coastal wetlands dominate, bird-habitat studies are few, and validation still leans on overall accuracy with limited class-level reporting.

- Prioritize SAR–optical fusion and fit-for-purpose deep learning models for heterogeneous wetlands; report class-level metrics and use external validation to improve comparability and transfer.

- Address geographic and thematic gaps and link mapping outputs to bird-habitat variables; use UAV imagery for micro-habitats while minimizing disturbance.

Abstract

1. Introduction

2. Materials and Methods

2.1. Scope and Time Window

2.2. Research Protocol

2.2.1. Definition of the Research Objective

- Which remote-sensing technologies are used for wetland and bird-habitat monitoring?

- Which ML/DL models are most frequently used?

- Which wetland types and regions are over-/underrepresented?

2.2.2. Search Strategy Development

2.2.3. Definition of Inclusion and Exclusion Criteria

2.2.4. Risk of Bias Assessment

- The study involves ML algorithms.

- The study answers the questions that were defined above in the research objective section.

- The study provides details on the remote-sensing datasets that are used.

- The study provides a detailed and clear methodology description.

- The study uses appropriate remote sensing and ML techniques for classification.

- The study reports performance metrics.

- The study compares its results with benchmark models or traditional methods.
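One way to apply the checklist above consistently across studies is to record a yes/no answer per criterion and tally the result. The sketch below is illustrative only: the criterion keys are hypothetical paraphrases of the seven items, and equal weighting is an assumption, not a scoring rule stated in this review.

```python
from typing import Dict

# Hypothetical keys paraphrasing the seven checklist items above.
CRITERIA = (
    "uses_ml",
    "answers_research_questions",
    "describes_rs_datasets",
    "clear_methodology",
    "appropriate_techniques",
    "reports_metrics",
    "compares_with_baseline",
)


def quality_score(answers: Dict[str, bool]) -> float:
    """Return the fraction of checklist criteria a study satisfies (0.0 to 1.0)."""
    missing = [c for c in CRITERIA if c not in answers]
    if missing:
        # Refuse to score a study that was only partially assessed.
        raise ValueError(f"unanswered criteria: {missing}")
    # Equal weighting of criteria is an assumption made for this sketch.
    return sum(answers[c] for c in CRITERIA) / len(CRITERIA)


# Example: a study that meets every criterion except the baseline comparison.
study = {c: True for c in CRITERIA}
study["compares_with_baseline"] = False
score = quality_score(study)
print(f"{score:.2f}")
```

Raising an error on unanswered criteria keeps partially assessed studies from silently receiving a low score.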

2.2.5. Data Extraction

3. Results

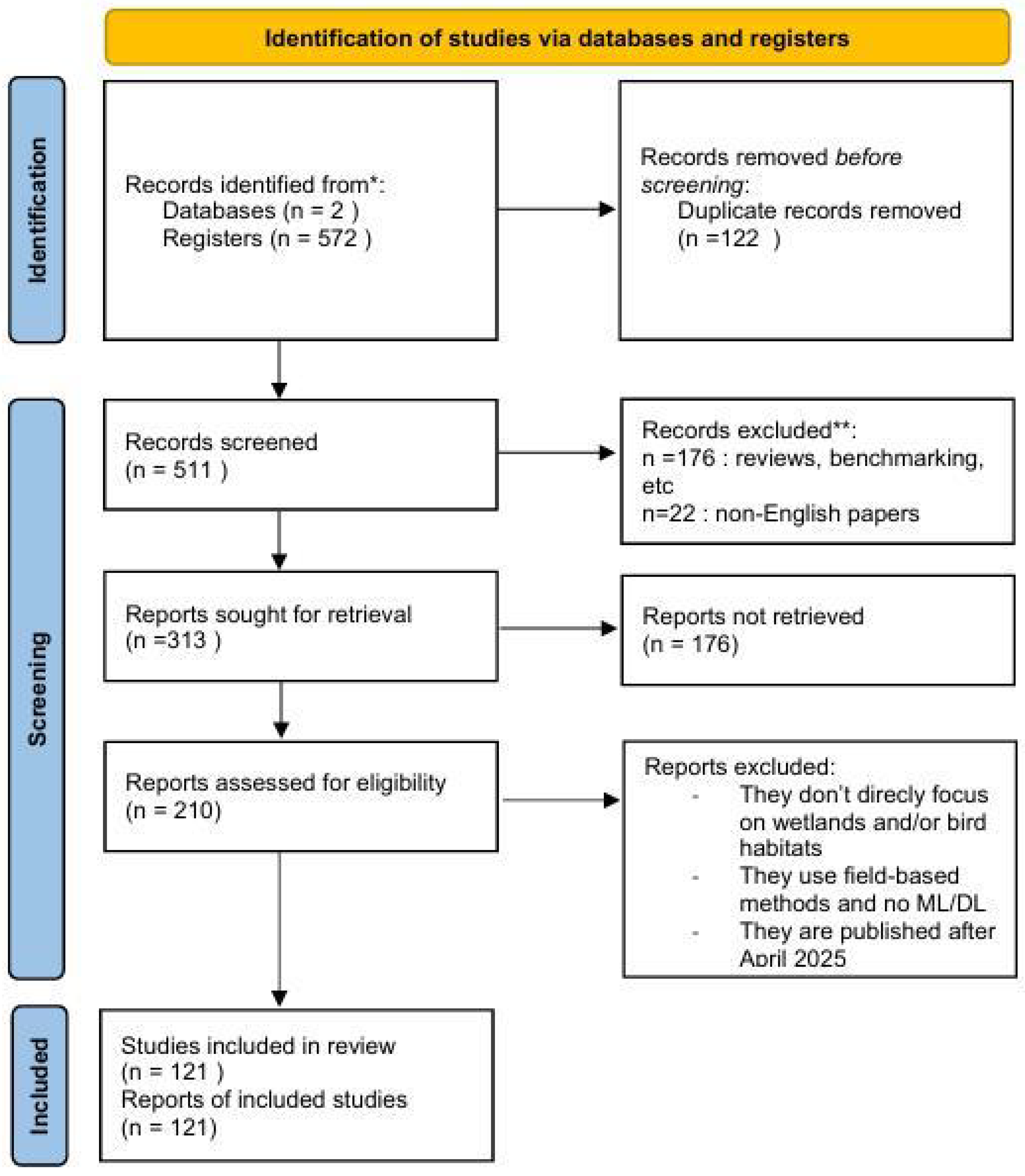

3.1. Statistical Overview and Study Characteristics

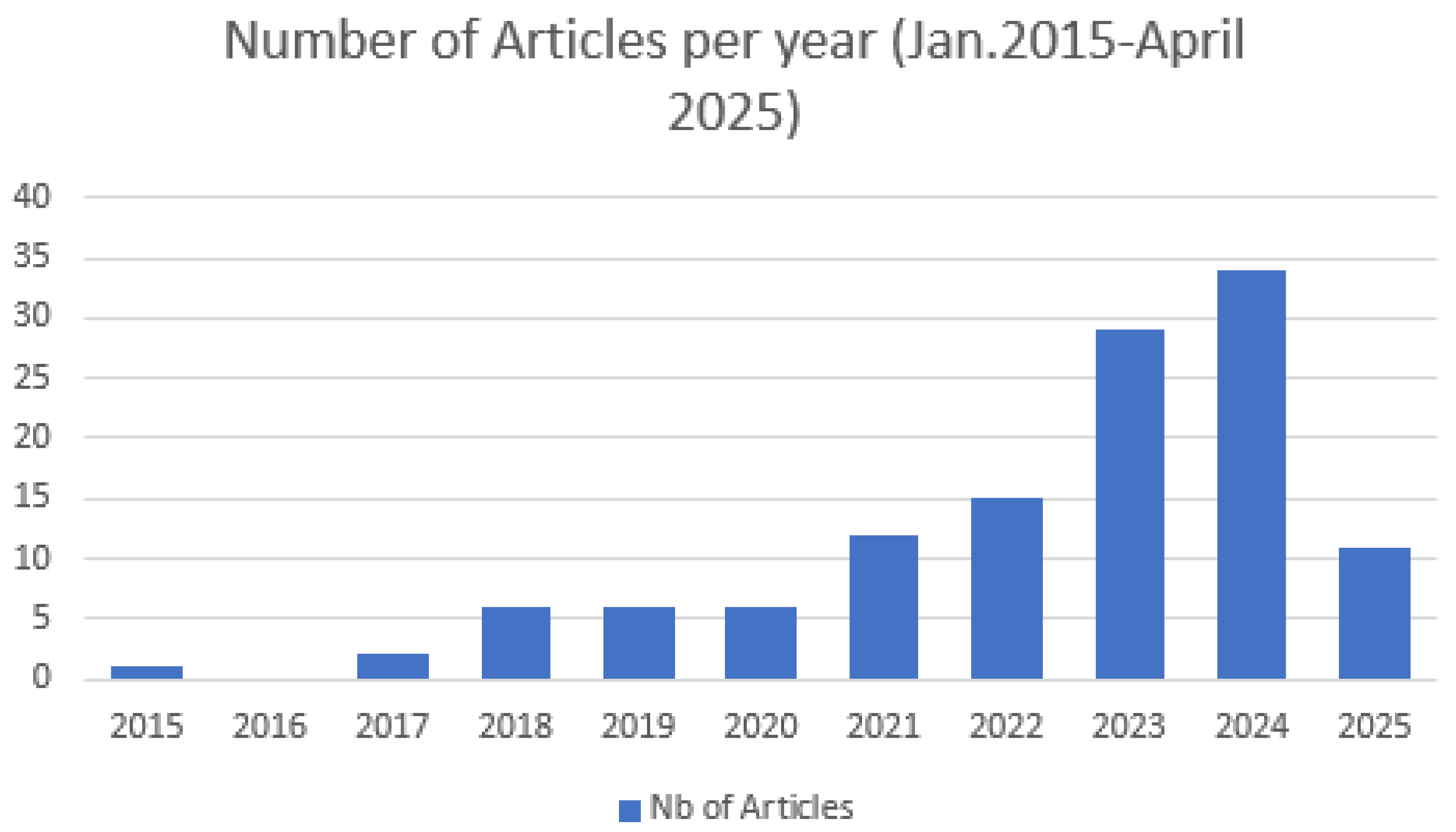

3.2. Temporal Distribution

3.3. Study Geographic Distribution

3.4. Wetland and Bird-Habitat Classes

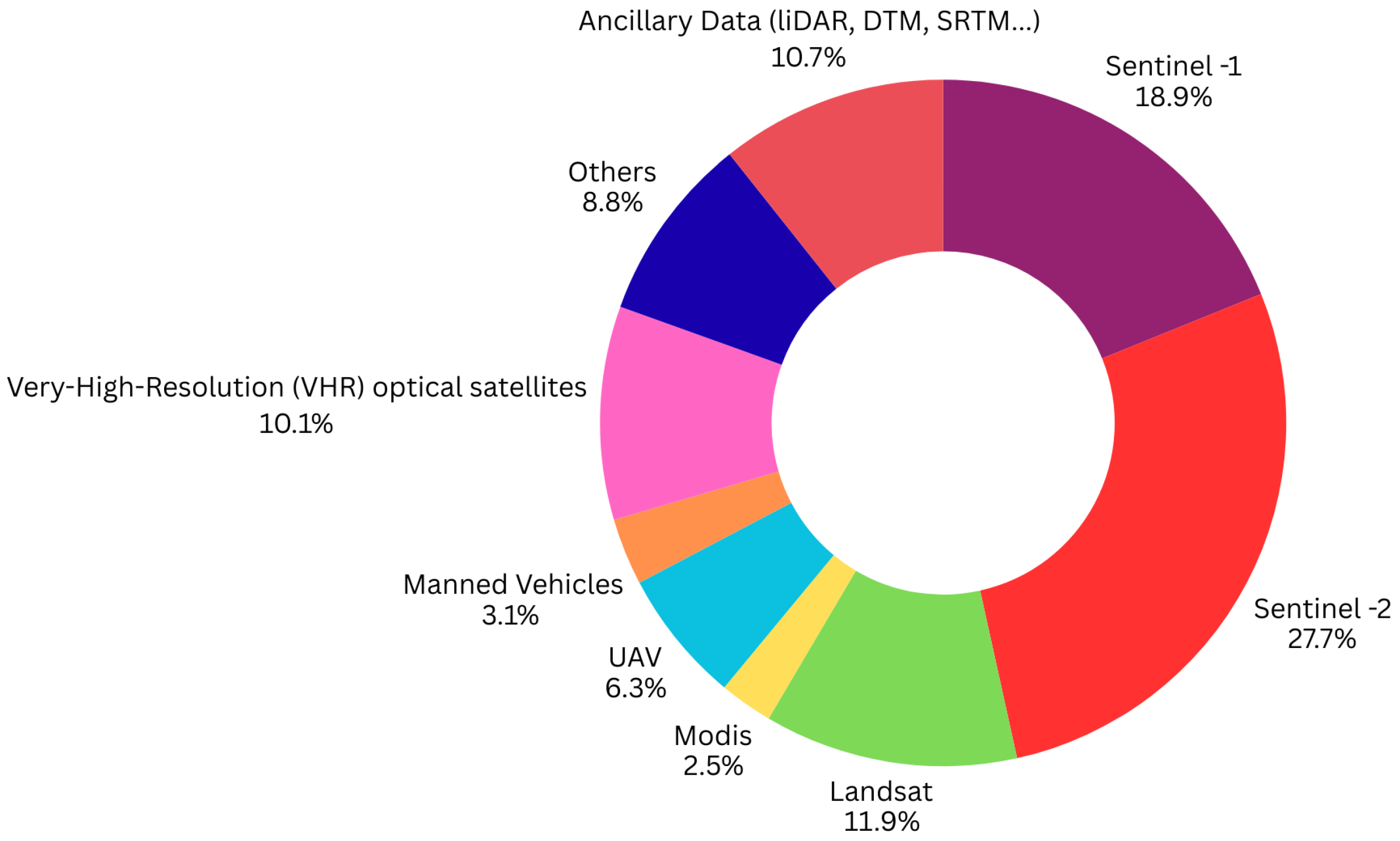

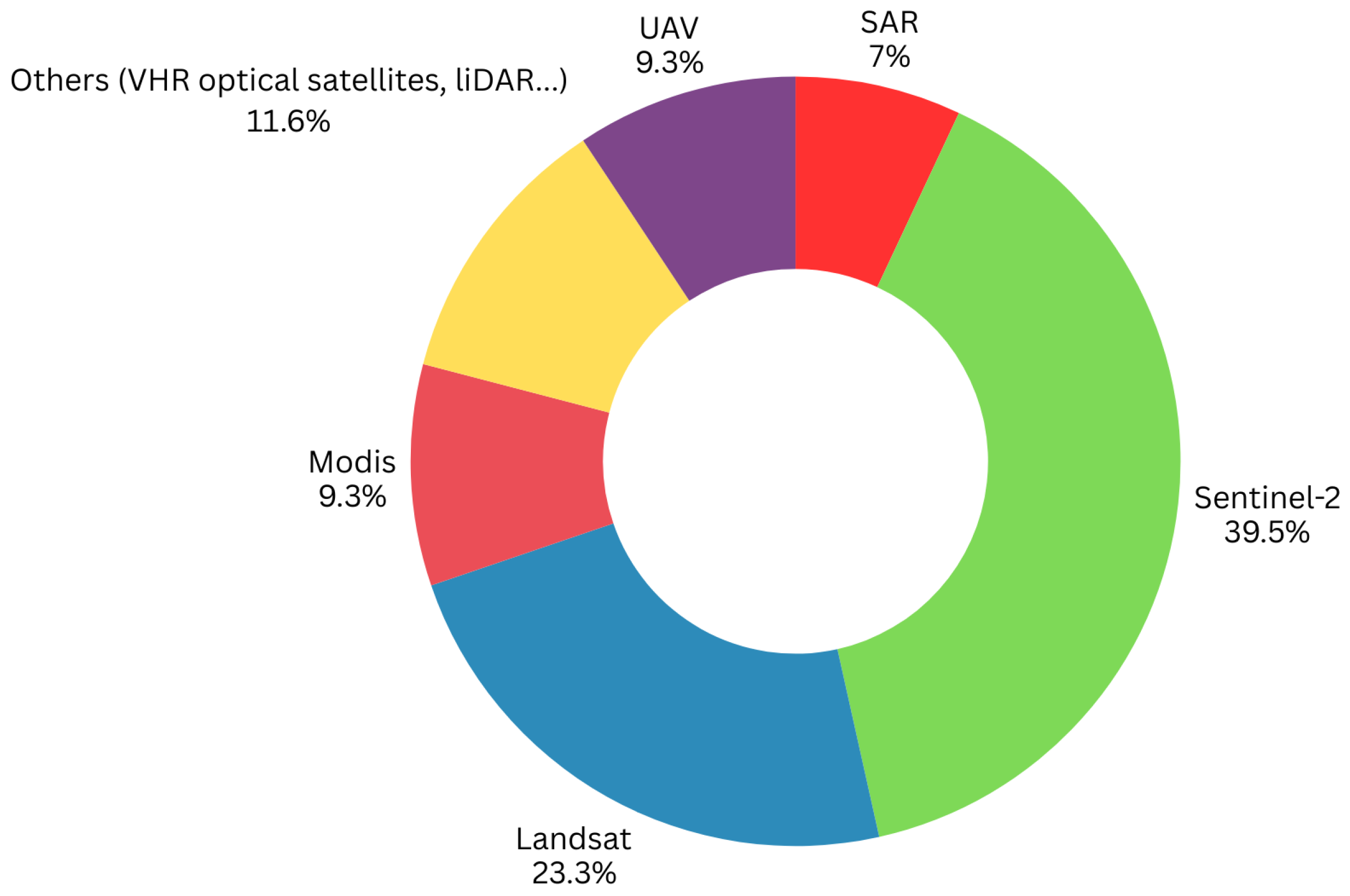

3.5. Data Sources and Acquisition Techniques

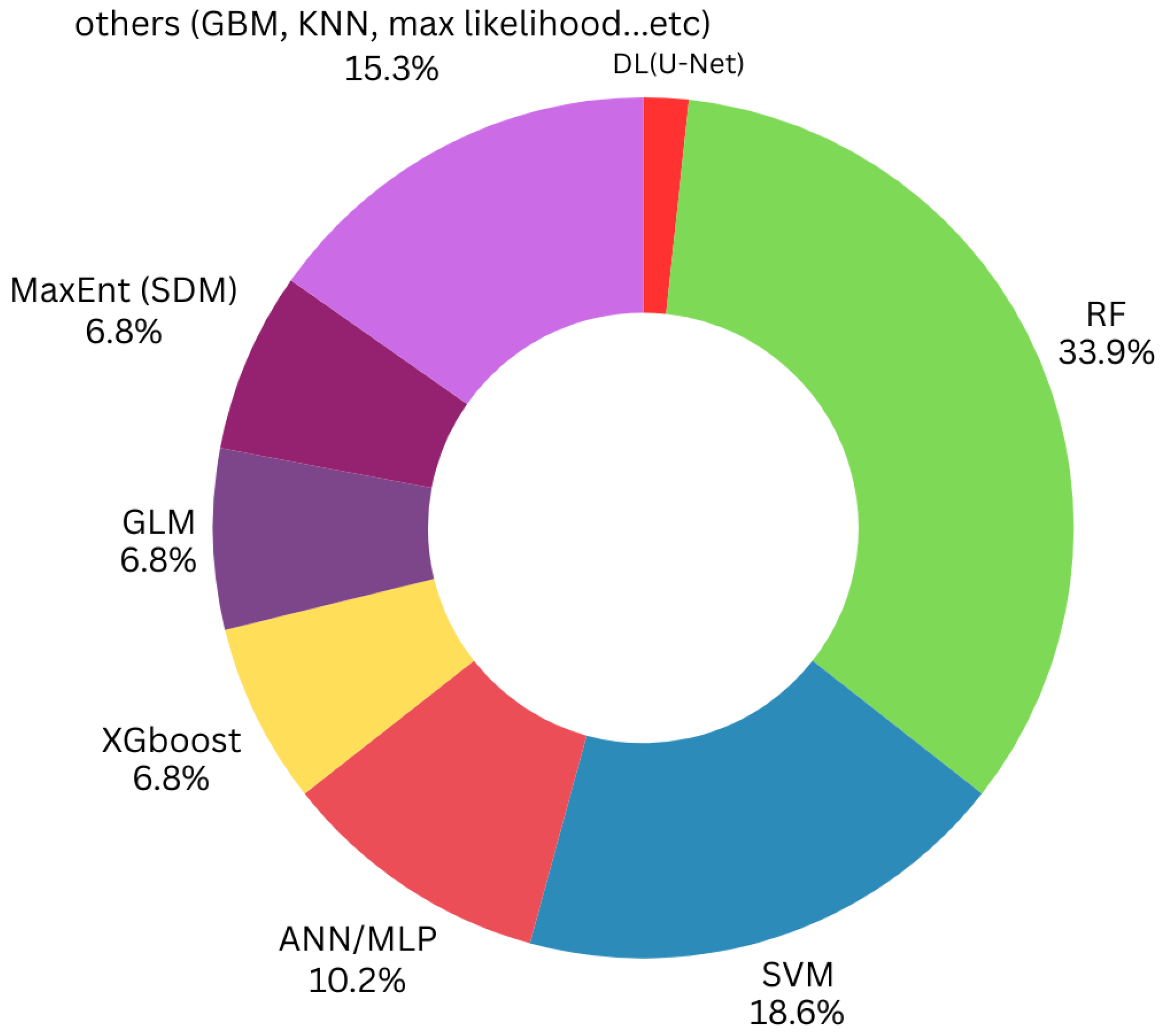

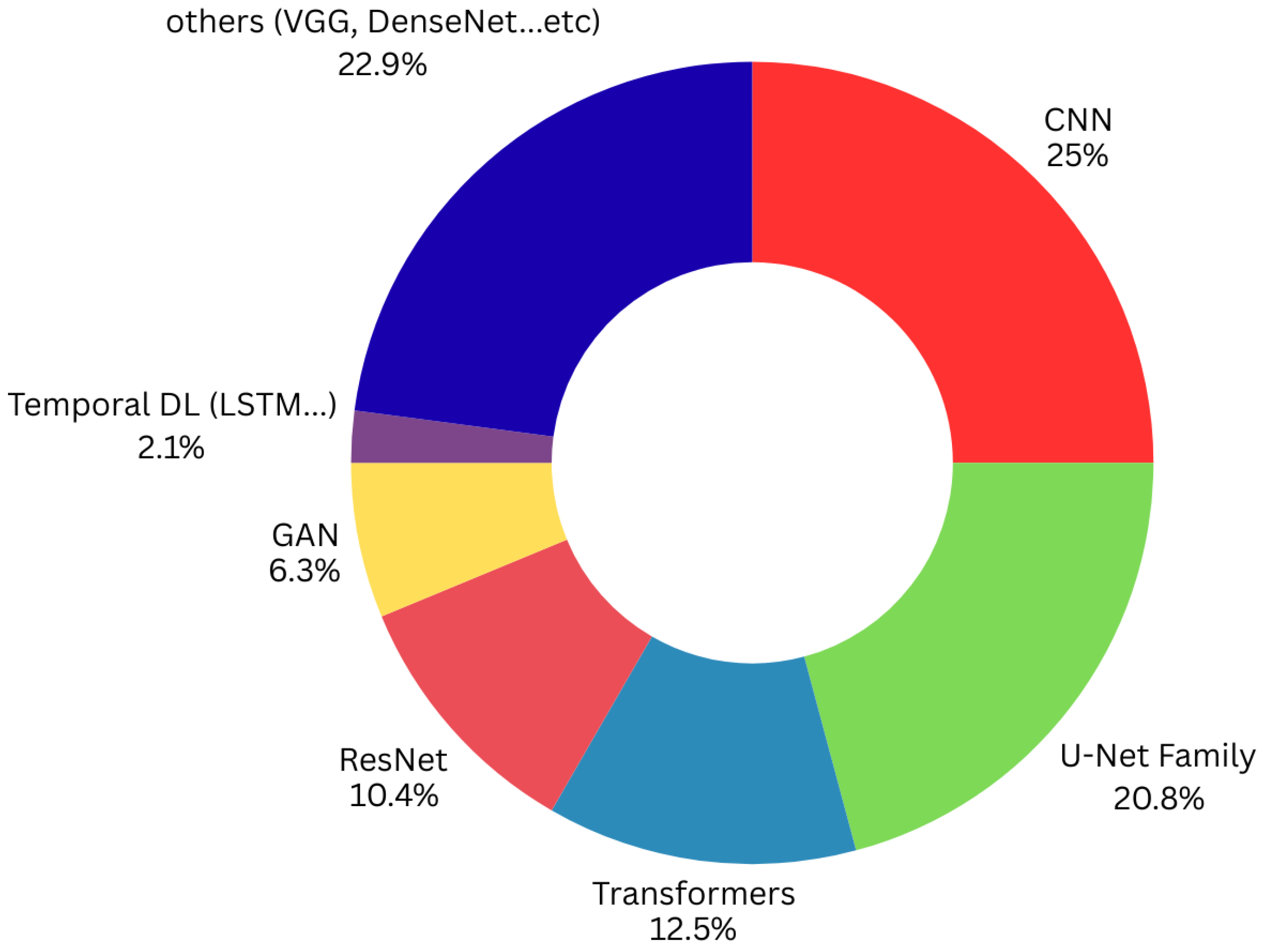

3.6. Applied Methodologies and Classification Approaches

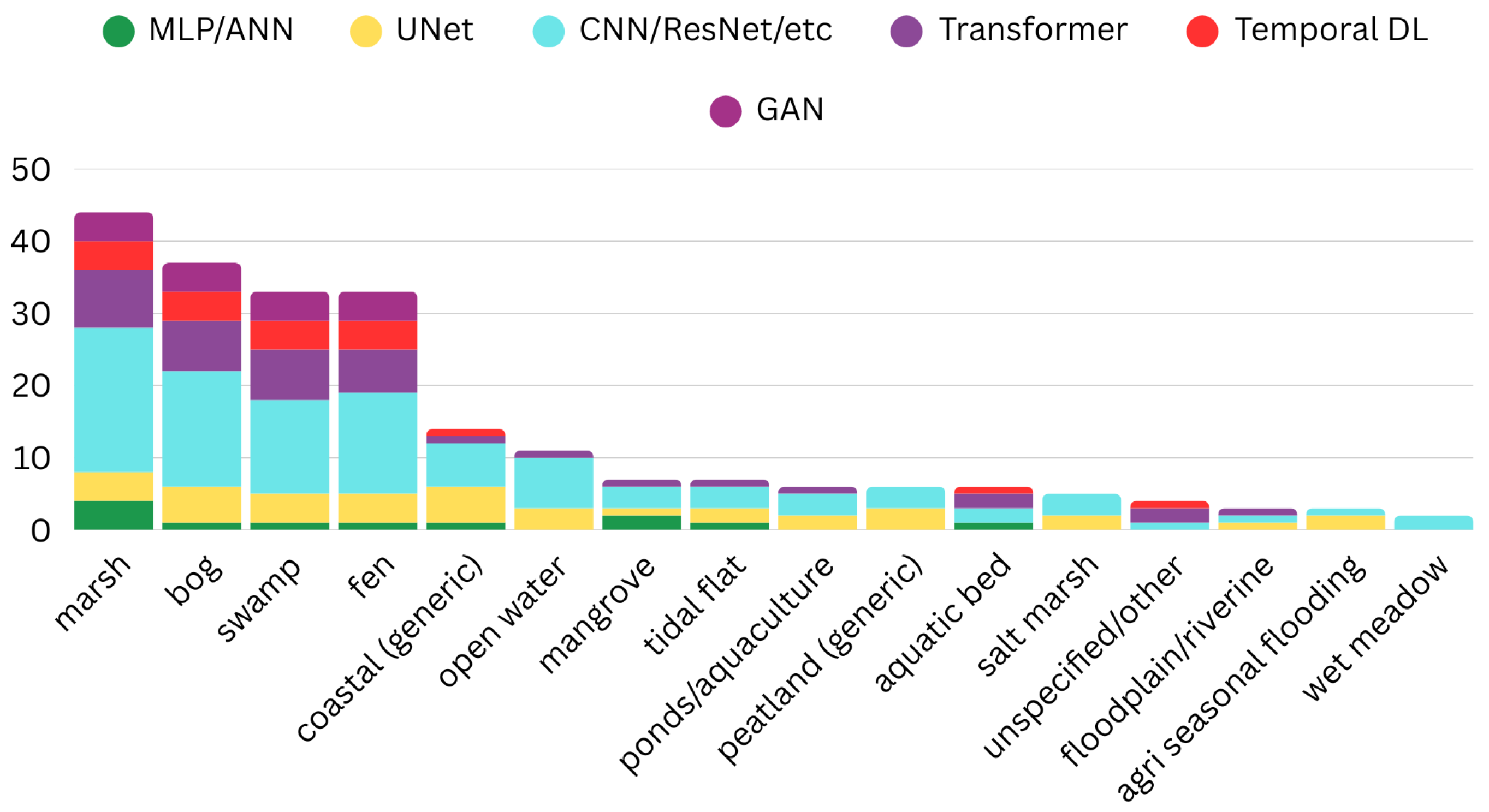

3.6.1. Methods Usage by Wetland Type

- ML usage by wetland type

- DL usage by wetland type

3.6.2. Machine Learning Applied to Wetland Mapping and Bird-Habitat Monitoring

3.6.3. Deep Learning Models Applied to Wetland Mapping and Bird-Habitat Monitoring

| Deep Learning Architecture | Main Input | Wetland Type(s) | References |
|---|---|---|---|
| U-Net/Residual U-Net | Sentinel-2 MSI; UAV RGB/HSI; S1 + S2 fusion; DEM/LiDAR auxiliaries | Mangroves; salt marsh; tidal flats; freshwater marshes; peatlands | [49,133,142,144,145] |
| CNN (encoder/backbone) | Sentinel-2; Landsat; S1 + S2 multi-sensor stacks | Salt marsh; mangroves; boreal/estuarine mixes | [63,67,135] |
| Transformer (ViT/Swin/SegFormer) | S1 + S2 multi-temporal stacks; multi-source fusion | Boreal/estuarine; freshwater marsh | [108,135,146] |
| 3D CNN | S1 + S2 cubes or UAV HSI cubes (space–time/spectral) | Floodplain; marsh mosaics | [140] |
| ConvLSTM/TempCNN | Sentinel-2 time series; S1 coherence/backscatter series | Marsh/swamp phenology; hydroperiod mapping | [75,135] |
| R-CNN | UAV RGB/multispectral; very-high-resolution optical | Nest/colony detection; micro-habitats in coastal/inland wetlands | [116,117] |
| GAN (augmentation/synthesis) | S1 + S2 patches; class-balanced synthetic samples | Historical/boreal/estuarine wetlands; class-imbalance scenarios | [63,140] |
| Ensembles (CNN ensembles/WetNet) | S1 + S2; ancillary DEM/shoreline masks | Salt marsh; boreal/estuarine; general wetland classes | [63,143] |
| DNN/Deep MLP | High-dimensional feature stacks (indices, texture, topography) | Mangroves; mixed inland wetlands | [141] |
| Hybrid CNN–ViT (attention fusion) | S1 + S2; optionally UAV/LiDAR-derived structure | Coastal wetlands and shoreline–wetland transitions | [146] |
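Several rows in the table list "S1 + S2" stacks as the main input. A minimal sketch of feature-level (early) fusion for a single pixel is given below, assuming co-registered data, Sentinel-2 surface reflectance scaled to 0–1, and Sentinel-1 backscatter in dB. The band keys, the feature order, and the function names are hypothetical choices for illustration. This is not the pipeline of any reviewed study.

```python
from typing import Dict, List

# Illustrative feature order; not taken from any reviewed study.
FEATURE_NAMES = ["blue", "green", "red", "nir", "ndvi", "ndwi", "vv_db", "vh_db", "vv_minus_vh_db"]


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when the denominator is zero."""
    denom = a + b
    return (a - b) / denom if denom != 0 else 0.0


def fuse_pixel(s2: Dict[str, float], s1_db: Dict[str, float]) -> List[float]:
    """Build one fused feature vector from optical reflectance and SAR backscatter."""
    ndvi = normalized_difference(s2["nir"], s2["red"])    # vegetation index
    ndwi = normalized_difference(s2["green"], s2["nir"])  # McFeeters open-water index
    vv, vh = s1_db["vv"], s1_db["vh"]
    # A difference in dB corresponds to the VV/VH ratio in linear power units.
    return [s2["blue"], s2["green"], s2["red"], s2["nir"], ndvi, ndwi, vv, vh, vv - vh]


# Example values for a vegetated pixel (made up for illustration).
s2_pixel = {"blue": 0.04, "green": 0.06, "red": 0.05, "nir": 0.30}
s1_pixel = {"vv": -12.0, "vh": -19.0}
features = fuse_pixel(s2_pixel, s1_pixel)
print(dict(zip(FEATURE_NAMES, features)))
```

A vector like this could feed a Random Forest or a deep MLP directly. Convolutional and transformer models would instead take the same channels as image patches or time-series cubes.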

3.7. Model Training, Validation, and Performance

3.7.1. Training Data and Labeling Strategies

3.7.2. Validation Protocols and Performance

3.7.3. Model Selection and Tuning

4. Discussion

- Sensor Usage and Data Availability

- Wetland Types and Regional Biases

- Dominant Approaches and Model Robustness

- Validation and Transferability Challenges

5. Limitations

5.1. Geographic Skew

5.2. Wetland Type Imbalance

5.3. Label Scarcity

5.4. UAV Availability

5.5. Analytical and Reporting Limitations

6. Recommendations for Practice and Future Research

- (1) Standardize performance reporting and validation.

- (2) Adopt SAR–optical fusion as a default data input.

- (3) Match model families to wetland classes.

- (4) Integrate avian ecology explicitly.

- (5) Leverage UAV × DL for micro-habitats.

- (6) Test transferability and manage domain shift.

- (7) Address geographic and typological gaps.
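Recommendation (1) can be illustrated with a short sketch that derives class-level metrics alongside overall accuracy from a single confusion matrix. The function name, the class labels, and the example counts are hypothetical. The matrix is assumed to have reference classes in rows and predicted classes in columns.

```python
from typing import Dict, Sequence


def class_level_report(cm: Sequence[Sequence[int]], labels: Sequence[str]) -> Dict[str, object]:
    """Summarize a square confusion matrix (rows = reference, columns = predicted)."""
    n = len(labels)
    total = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(n)]
    row_sums = [sum(cm[i]) for i in range(n)]                       # reference totals
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted totals

    oa = sum(diag) / total
    # Cohen's kappa: agreement beyond what the marginal totals give by chance.
    pe = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total * total)
    kappa = (oa - pe) / (1 - pe) if pe < 1 else 0.0

    per_class = {}
    for i, name in enumerate(labels):
        tp = diag[i]
        fn = row_sums[i] - tp
        fp = col_sums[i] - tp
        precision = tp / (tp + fp) if tp + fp else 0.0  # user's accuracy
        recall = tp / (tp + fn) if tp + fn else 0.0     # producer's accuracy
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        per_class[name] = {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

    miou = sum(c["iou"] for c in per_class.values()) / n
    return {"oa": oa, "kappa": kappa, "miou": miou, "per_class": per_class}


# Made-up counts for three wetland classes.
labels = ["water", "marsh", "mudflat"]
cm = [
    [50, 2, 3],
    [4, 30, 6],
    [1, 9, 15],
]
report = class_level_report(cm, labels)
print(f"OA={report['oa']:.3f}  kappa={report['kappa']:.3f}  mIoU={report['miou']:.3f}")
for name, m in report["per_class"].items():
    print(name, {k: round(v, 3) for k, v in m.items()})
```

In this made-up example the mudflat class has a recall of 0.6 while overall accuracy is about 0.79, which is the kind of gap that overall accuracy alone hides.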

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| κ | Kappa coefficient |
| 3D GAN | 3D Generative Adversarial Network |
| AI | Artificial Intelligence |
| AUC | Area under the curve |
| C5.0 Decision Tree | C5.0 Classification Algorithm |
| CA-Markov | Cellular Automata-Markov Chain Model |
| CNN | Convolutional Neural Network |
| CNN ensemble | Ensemble of Convolutional Neural Networks |
| CNN-Vision Transformer fusion | Combined CNN and Vision Transformer Architecture |
| Coordinate Attention | Attention Mechanism Using Spatial Coordinates |
| DL | Deep Learning |
| DTW-Kmeans++ | Dynamic Time Warping with K-means++ |
| Deep CNN | Deep Convolutional Neural Network |
| DenseNet | Densely Connected Convolutional Network |
| Fractional-order derivatives | Mathematical Feature Transformation Method |
| GAN | Generative Adversarial Network |
| GBM | Gradient Boosting Machine |
| GEE | Google Earth Engine |
| IEEE | Institute of Electrical and Electronics Engineers |
| IoU | Intersection Over Union |
| InSAR | Interferometric Synthetic Aperture Radar |
| K-means | K-means Clustering |
| KNN | K-Nearest Neighbors |
| LiDAR | Light Detection and Ranging |
| LightGBM | Light Gradient Boosting Machine |
| mIoU | Mean Intersection Over Union |
| ML | Machine Learning |
| MaxEnt | Maximum Entropy Model |
| MDPI | Multidisciplinary Digital Publishing Institute |
| NDVI | Normalized Difference Vegetation Index |
| NDWI | Normalized Difference Water Index |
| OA | Overall Accuracy |
| OBIA | Object-Based Image Analysis |
| PICO | Population, Intervention, Comparison, Outcome |
| RF | Random Forest |
| RFE | Recursive Feature Elimination |
| Residual Attention U-Net | U-Net with Residual and Attention Mechanisms |
| Residual U-Net | U-Net with Residual Connections |
| SAR | Synthetic Aperture Radar |
| SHAP-DNN | SHapley Additive Explanations for Deep Neural Networks |
| SPIDER | Sample-Phenomenon of Interest-Design-Evaluation-Research Type |
| SVM | Support Vector Machine |
| UAV-LiDAR | Unmanned Aerial Vehicle-Mounted Light Detection and Ranging |
| VHR | Very-High-Resolution |
| ViT | Vision Transformer |
| XGBoost | Extreme Gradient Boosting |

References

- Dornelas, M.; Gotelli, N.J.; McGill, B.; Shimadzu, H.; Moyes, F.; Sievers, C.; Magurran, A.E. Assemblage time series reveal biodiversity change but not systematic loss. Science 2014, 344, 296–299.

- Pimm, S.L.; Jenkins, C.N.; Abell, R.; Brooks, T.M.; Gittleman, J.L.; Joppa, L.N.; Raven, P.H.; Roberts, C.M.; Sexton, J.O. The biodiversity of species and their rates of extinction, distribution, and protection. Science 2014, 344, 1246752.

- Newbold, T. Future effects of climate and land-use change on terrestrial vertebrate community diversity under different scenarios. Proc. R. Soc. B Biol. Sci. 2018, 285, 20180792.

- Cepic, M.; Bechtold, U.; Wilfing, H. Modelling human influences on biodiversity at a global scale–A human ecology perspective. Ecol. Model. 2022, 465, 109854.

- Huang, J.; Wang, R.Y.; Chen, L. Analysis of Bird Habitat Suitability in Chongming Island Based on GIS and Fragstats. Int. J. For. Anim. Fish. Res. 2023, 7, 1–23.

- Demarquet, Q.; Rapinel, S.; Dufour, S.; Hubert-Moy, L. Long-Term Wetland Monitoring Using the Landsat Archive: A Review. Remote Sens. 2023, 15, 820.

- Mahendiran, M.; Azeez, P.A. Ecosystem Services of Birds: A Review of Market and Non-market Values. Entomol. Ornithol. Herpetol. Curr. Res. 2018, 7, 1000209.

- Fair, J.M.; Paul, E.; Jones, J. Guidelines to the Use of Wild Birds in Research; Ornithological Council: Washington, DC, USA, 2010; pp. 1–215.

- Gregory, R.D.; Strien, A.V. Wild bird indicators: Using composite population trends of birds as measures of environmental health. Ornithol. Sci. 2010, 9, 3–22.

- Fraixedas, S.; Lindén, A.; Piha, M.; Cabeza, M.; Gregory, R.; Lehikoinen, A. A state-of-the-art review on birds as indicators of biodiversity: Advances, challenges, and future directions. Ecol. Indic. 2020, 118, 106728.

- Hunter, D.; Marra, P.P.; Perrault, A.M. Migratory Connectivity and the Conservation of Migratory Animals. Environ. Law 2011, 41, 317–354.

- Xu, Y.; Si, Y.; Takekawa, J.; Liu, Q.; Prins, H.H.; Yin, S.; Prosser, D.J.; Gong, P.; de Boer, W.F. A network approach to prioritize conservation efforts for migratory birds. Conserv. Biol. 2020, 34, 416–426.

- Runge, C.A.; Watson, J.E.; Butchart, S.H.; Hanson, J.O.; Possingham, H.P.; Fuller, R.A. Protected areas and global conservation of migratory birds. Science 2015, 350, 1255–1258.

- IJsseldijk, L.L.; ten Doeschate, M.T.; Brownlow, A.; Davison, N.J.; Deaville, R.; Galatius, A.; Gilles, A.; Haelters, J.; Jepson, P.D.; Keijl, G.O.; et al. Spatiotemporal mortality and demographic trends in a small cetacean: Strandings to inform conservation management. Biol. Conserv. 2020, 249, 108733.

- Sauer, J.R.; Pardieck, K.L.; Ziolkowski, D.J.; Smith, A.C.; Hudson, M.A.R.; Rodriguez, V.; Berlanga, H.; Niven, D.K.; Link, W.A. The first 50 years of the North American Breeding Bird Survey. Condor 2017, 119, 576–593.

- Dickinson, J.L.; Shirk, J.; Bonter, D.; Bonney, R.; Crain, R.L.; Martin, J.; Phillips, T.; Purcell, K. The current state of citizen science as a tool for ecological research and public engagement. Front. Ecol. Environ. 2012, 10, 291–297.

- Ralph, C.J.; Geupel, G.R.; Pyle, P.; Martin, T.E.; DeSante, D.F. Handbook of Field Methods for Monitoring Landbirds; General Technical Report PSW-GTR-144; USDA Forest Service, Pacific Southwest Research Station: Albany, CA, USA, 1993; pp. 1–41.

- Lieury, N.; Devillard, S.; Besnard, A.; Gimenez, O.; Hameau, O.; Ponchon, C.; Millon, A. Designing cost-effective capture-recapture surveys for improving the monitoring of survival in bird populations. Biol. Conserv. 2017, 214, 233–241.

- Markova-Nenova, N.; Engler, J.O.; Cord, A.F.; Wätzold, F. A Cost Comparison Analysis of Bird-Monitoring Techniques for Result-Based Payments in Agriculture. 2023, Volume 32. Available online: https://mpra.ub.uni-muenchen.de/id/eprint/116311 (accessed on 8 September 2025).

- Witmer, G.W. Wildlife population monitoring: Some practical considerations. Wildl. Res. 2005, 32, 259–263.

- Wang, D.; Shao, Q.; Yue, H. Surveying wild animals from satellites, manned aircraft and unmanned aerial systems (UASs): A review. Remote Sens. 2019, 11, 1308.

- Sauer, J.R.; Link, W.A.; Fallon, J.E.; Pardieck, K.L.; Ziolkowski, D.J. The North American Breeding Bird Survey 1966–2011: Summary Analysis and Species Accounts. N. Am. Fauna 2013, 79, 1–32.

- Tibbetts, J.H. Remote sensors bring wildlife tracking to new level. BioScience 2017, 67, 411–417.

- Stephenson, P.J. Technological advances in biodiversity monitoring: Applicability, opportunities and challenges. Curr. Opin. Environ. Sustain. 2020, 45, 36–41.

- Stephenson, P.J. Integrating Remote Sensing into Wildlife Monitoring for Conservation. Environ. Conserv. 2019, 46, 181–183.

- Kuiper, S.D.; Coops, N.C.; Hinch, S.G.; White, J.C. Advances in remote sensing of freshwater fish habitat: A systematic review to identify current approaches, strengths and challenges. Fish Fish. 2023, 24, 829–847.

- Pettorelli, N.; Laurance, W.F.; O’Brien, T.G.; Wegmann, M.; Nagendra, H.; Turner, W. Satellite remote sensing for applied ecologists: Opportunities and challenges. J. Appl. Ecol. 2014, 51, 839–848.

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Hodgson, J.C.; Mott, R.; Baylis, S.M.; Pham, T.T.; Wotherspoon, S.; Kilpatrick, A.D.; Raja Segaran, R.; Reid, I.; Terauds, A.; Koh, L.P. Drones count wildlife more accurately and precisely than humans. Methods Ecol. Evol. 2018, 9, 1160–1167.

- Lahoz-Monfort, J.J.; Magrath, M.J. A Comprehensive Overview of Technologies for Species and Habitat Monitoring and Conservation. BioScience 2021, 71, 1038–1062.

- Drakshayini; Mohan, S.T.; Swathi, M.; Kadali, N. Leveraging Machine Learning and Remote Sensing for Wildlife Conservation: A Comprehensive Review. Int. J. Adv. Res. 2023, 11, 636–647.

- Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned aerial vehicles (UAVs) and artificial intelligence revolutionizing wildlife monitoring and conservation. Sensors 2016, 16, 97.

- Xie, J.; Hu, K.; Zhu, M.; Yu, J.; Zhu, Q. Investigation of Different CNN-Based Models for Improved Bird Sound Classification. IEEE Access 2019, 7, 175353–175361.

- Pinto-Ledezma, J.N.; Cavender-Bares, J. Predicting species distributions and community composition using satellite remote sensing predictors. Sci. Rep. 2021, 11, 16448.

- Yuan, S.; Liang, X.; Lin, T.; Chen, S.; Liu, R.; Wang, J.; Zhang, H.; Gong, P. A comprehensive review of remote sensing in wetland classification and mapping. arXiv 2025, arXiv:2504.10842.

- Liu, Y.; Zhang, H.; Cui, Z.; Zuo, Y.; Lei, K.; Zhang, J.; Yang, T.; Ji, P. Precise Wetland Mapping in Southeast Asia for the Ramsar Strategic Plan 2016–24. Remote Sens. 2022, 14, 5730.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Besnard, A.G.; Davranche, A.; Maugenest, S.; Bouzillé, J.B.; Vian, A.; Secondi, J. Vegetation maps based on remote sensing are informative predictors of habitat selection of grassland birds across a wetness gradient. Ecol. Indic. 2015, 58, 47–54.

- Ozesmi, S.L.; Bauer, M.E. Satellite remote sensing of wetlands. Wetl. Ecol. Manag. 2002, 10, 381–402.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Cooke, A.; Smith, D.; Booth, A. Beyond PICO: The SPIDER tool for qualitative evidence synthesis. Qual. Health Res. 2012, 22, 1435–1443.

- Frandsen, T.F.; Bruun Nielsen, M.F.; Lindhardt, C.L.; Eriksen, M.B. Using the full PICO model as a search tool for systematic reviews resulted in lower recall for some PICO elements. J. Clin. Epidemiol. 2020, 127, 69–75.

- Rezac, S.J.; Salkind, N.J.; McTavish, D.; Loether, H. Inclusion and exclusion criteria in research studies: Definitions and why they matter. Teach. Sociol. 2018, 29, 257.

- Meline, T. Selecting Studies for Systematic Review: Inclusion and Exclusion Criteria. Contemp. Issues Commun. Sci. Disord. 2006, 33, 21–27.

- Wang, L.; Qu, J.J. NMDI: A normalized multi-band drought index for monitoring soil and vegetation moisture with satellite remote sensing. Geophys. Res. Lett. 2007, 34, 1–5.

- Di Vittorio, C.A.; Georgakakos, A.P. Land cover classification and wetland inundation mapping using MODIS. Remote Sens. Environ. 2018, 204, 1–17.

- NICE. The Guidelines Manual: Appendices B-I Audit and Service Improvement. 2012. Available online: https://www.nice.org.uk/process/pmg6/resources/the-guidelines-manual-appendices-bi-pdf-3304416006853 (accessed on 8 September 2025).

- Wang, D.; Mao, D.; Wang, M.; Xiao, X.; Choi, C.Y.; Huang, C.; Wang, Z. Identify and map coastal aquaculture ponds and their drainage and impoundment dynamics. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104246.

- Jia, M.; Wang, Z.; Mao, D.; Ren, C.; Song, K.; Zhao, C.; Wang, C.; Xiao, X.; Wang, Y. Mapping global distribution of mangrove forests at 10-m resolution. Sci. Bull. 2023, 68, 1306–1316.

- Wang, M.; Mao, D.; Wang, Y.; Li, H.; Zhen, J.; Xiang, H.; Ren, Y.; Jia, M.; Song, K.; Wang, Z. Interannual changes of urban wetlands in China’s major cities from 1985 to 2022. ISPRS J. Photogramm. Remote Sens. 2024, 209, 383–397.

- Zhang, Q.; Liu, M.; Zhang, Y.; Mao, D.; Li, F.; Wu, F.; Song, J.; Li, X.; Kou, C.; Li, C.; et al. Comparison of Machine Learning Methods for Predicting Soil Total Nitrogen Content Using Landsat-8, Sentinel-1, and Sentinel-2 Images. Remote Sens. 2023, 15, 2907.

- Wang, M.; Mao, D.; Wang, Y.; Xiao, X.; Xiang, H.; Feng, K.; Luo, L.; Jia, M.; Song, K.; Wang, Z. Wetland mapping in East Asia by two-stage object-based Random Forest and hierarchical decision tree algorithms on Sentinel-1/2 images. Remote Sens. Environ. 2023, 297, 113793.

- Zhao, C.; Jia, M.; Zhang, R.; Wang, Z.; Ren, C.; Mao, D.; Wang, Y. Mangrove species mapping in coastal China using synthesized Sentinel-2 high-separability images. Remote Sens. Environ. 2024, 307, 114151.

- Chen, C.; Zhu, G.; Chen, X. Wetland Scene Segmentation of Remote Sensing Images Based on Lie Group Feature and Graph Cut Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 1345–1361.

- Deng, Y.C.; Jiang, X. Wetland Protection Law of the People’s Republic of China: New Efforts in Wetland Conservation. Int. J. Mar. Coast. Law 2023, 38, 141–160.

- Wu, P.; Zhan, W.; Cheng, N.; Yang, H.; Wu, Y. A Framework to Calculate Annual Landscape Ecological Risk Index Based on Land Use/Land Cover Changes: A Case Study on Shengjin Lake Wetland. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11926–11935.

- Zhang, Z.; Fan, Y.; Zhang, A.; Jiao, Z. Baseline-Based Soil Salinity Index (BSSI): A Novel Remote Sensing Monitoring Method of Soil Salinization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 202–214.

- Mao, D.; Wang, Z.; Wang, Y.; Choi, C.Y.; Jia, M.; Jackson, M.V.; Fuller, R.A. Remote Observations in China’s Ramsar Sites: Wetland Dynamics, Anthropogenic Threats, and Implications for Sustainable Development Goals. J. Remote Sens. 2021, 2021, 9849343.

- Ke, L.; Tan, Q.; Lu, Y.; Wang, Q.; Zhang, G.; Zhao, Y.; Wang, L. Classification and spatio-temporal evolution analysis of coastal wetlands in the Liaohe Estuary from 1985 to 2023: Based on feature selection and sample migration methods. Front. For. Glob. Change 2024, 7, 1406473.

- Zhao, C.; Jia, M.; Wang, Z.; Mao, D.; Wang, Y. Identifying mangroves through knowledge extracted from trained random forest models: An interpretable mangrove mapping approach (IMMA). ISPRS J. Photogramm. Remote Sens. 2023, 201, 209–225.

- Cao, J.; Liu, K.; Zhuo, L.; Liu, L.; Zhu, Y.; Peng, L. Combining UAV-based hyperspectral and LiDAR data for mangrove species classification using the rotation forest algorithm. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102414.

- Jia, M.; Zhang, R.; Zhao, C.; Zhou, Y.; Ren, C.; Mao, D.; Li, H.; Sun, G.; Zhang, H.; Yu, W.; et al. Synergistic estimation of mangrove canopy height across coastal China: Integrating SDGSAT-1 multispectral data with Sentinel-1/2 time-series imagery. Remote Sens. Environ. 2025, 323, 114719.

- Jamali, A.; Mahdianpari, M.; Brisco, B.; Granger, J.; Mohammadimanesh, F.; Salehi, B. Comparing solo versus ensemble convolutional neural networks for wetland classification using multi-spectral satellite imagery. Remote Sens. 2021, 13, 2046.

- Banks, S.; White, L.; Behnamian, A.; Chen, Z.; Montpetit, B.; Brisco, B.; Pasher, J.; Duffe, J. Wetland Classification with Multi-Angle/Temporal SAR Using Random Forests. Remote Sens. 2019, 11, 670.

- Valenti, V.L.; Carcelen, E.C.; Lange, K.; Russo, N.J.; Chapman, B. Leveraging Google Earth Engine User Interface for Semiautomated Wetland Classification in the Great Lakes Basin at 10 m with Optical and Radar Geospatial Datasets. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6008–6018.

- Lemenkova, P. Support Vector Machine Algorithm for Mapping Land Cover Dynamics in Senegal, West Africa, Using Earth Observation Data. Earth 2024, 5, 420–462.

- Guo, M.; Yu, Z.; Xu, Y.; Huang, Y.; Li, C. Me-net: A deep convolutional neural network for extracting mangrove using sentinel-2A data. Remote Sens. 2021, 13, 1292.

- Ghorbanian, A.; Ahmadi, S.A.; Amani, M.; Mohammadzadeh, A.; Jamali, S. Application of Artificial Neural Networks for Mangrove Mapping Using Multi-Temporal and Multi-Source Remote Sensing Imagery. Water 2022, 14, 244.

- Jie, L.; Wang, J. Research on the extraction method of coastal wetlands based on sentinel-2 data. Mar. Environ. Res. 2024, 198, 106429.

- Campbell, A.D. Mapping aboveground biomass and carbon in salt marshes across the contiguous United States. J. Appl. Remote Sens. 2024, 18, 032404.

- Aslam, R.W.; Naz, I.; Shu, H.; Yan, J.; Quddoos, A.; Tariq, A.; Davis, J.B.; Al-Saif, A.M.; Soufan, W. Multi-temporal image analysis of wetland dynamics using machine learning algorithms. J. Environ. Manag. 2024, 371, 123123.

- Data, U.S.; Random, Z.B. Fine-Resolution Wetland Mapping in the Yellow River Basin Using Sentinel-1/2 Data via Zoning-Based Random Forest with Remote-Sensing Feature Preferences. Water 2024, 16, 2415.

- Hardy, A.; Ettritch, G.; Cross, D.E.; Bunting, P.; Liywalii, F.; Sakala, J.; Silumesii, A.; Singini, D.; Smith, M.; Willis, T.; et al. Automatic Detection of Open and Vegetated Water Bodies Using Sentinel 1 to Map African Malaria Vector Mosquito Breeding Habitats. Remote Sens. 2019, 11, 593.

- Nath, R.; Ramachandran, A.; Tripathi, V.; Badola, R. Spatio-temporal habitat assessment of the Gangetic floodplain in the Hastinapur wildlife sanctuary. Ecol. Inform. 2022, 72, 101851.

- Sun, C.; Li, J.; Liu, Y.; Liu, Y.; Liu, R. Plant species classification in salt marshes using phenological parameters derived from Sentinel-2 pixel-differential time-series. Remote Sens. Environ. 2021, 256, 112320.

- Avci, C.; Budak, M.; Yagmur, N.; Balcik, F.B. Comparison between random forest and support vector machine algorithms for LULC classification. Int. J. Eng. Geosci. 2023, 8, 1–10.

- Isoaho, A.; Elo, M.; Marttila, H.; Rana, P.; Lensu, A.; Räsänen, A. Monitoring changes in boreal peatland vegetation after restoration with optical satellite imagery. Sci. Total Environ. 2024, 957, 177697.

- DeLancey, E.R.; Simms, J.F.; Mahdianpari, M.; Brisco, B.; Mahoney, C.; Kariyeva, J. Comparing deep learning and shallow learning for large-scale wetland classification in Alberta, Canada. Remote Sens. 2020, 12, 2.

- Dragoş, M.; Petrescu, A.; Merciu, G.L. Analysis of vegetation from satelite images correlated to the bird species presence and the state of health of the ecosystems of Bucharest during the period from 1991 to 2006. Geogr. Pannonica 2017, 21, 9–25.

- Munizaga, J.; Garc, M.; Ureta, F.; Novoa, V.; Rojas, O.; Rojas, C. Mapping Coastal Wetlands Using Satellite Imagery and Machine Learning in a Highly Urbanized Landscape. Sustainability 2022, 14, 5700.

- Zhipeng, G.; Jiang, W.; Peng, K.; Deng, Y.; Wang, X. Wetland Mapping and Landscape Analysis for Supporting International Wetland Cities: Case Studies in Nanchang City and Wuhan City. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8858–8870.

- Lou, A.; He, Z.; Zhou, C.; Lai, G. Long-term series wetland classification of Guangdong-Hong Kong-Macao Greater Bay Area based on APSMnet. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103765.

- Lao, C.; Yu, X.; Zhan, L.; Xin, P. Monitoring soil salinity in coastal wetlands with Sentinel-2 MSI data: Combining fractional-order derivatives and stacked machine learning models. Agric. Water Manag. 2024, 306, 109147.

- Ji, P.; Su, R.; Wu, G.; Xue, L.; Zhang, Z.; Fang, H.; Gao, R.; Zhang, W.; Zhang, D. Projecting Future Wetland Dynamics Under Climate Change and Land Use Pressure: A Machine Learning Approach Using Remote Sensing and Markov Chain Modeling. Remote Sens. 2025, 17, 1089.

- Zhao, C.; Jia, M.; Zhang, R.; Wang, Z.; Mao, D.; Zhong, C.; Guo, X. Distribution of Mangrove Species Kandelia obovata in China Using Time-series Sentinel-2 Imagery for Sustainable Mangrove Management. J. Remote Sens. 2024, 4, 0143.

- Li, S.; Meng, W.; Liu, D.; Yang, Q.; Chen, L.; Dai, Q.; Ma, T.; Gao, R.; Ru, W.; Li, Y.; et al. Migratory Whooper Swans Cygnus cygnus Transmit H5N1 Virus between China and Mongolia: Combination Evidence from Satellite Tracking and Phylogenetics Analysis. Sci. Rep. 2018, 8, 7049.

- Zhang, C.; Xiao, X.; Wang, X.; Qin, Y.; Doughty, R.; Yang, X.; Meng, C.; Yao, Y.; Dong, J. Mapping wetlands in Northeast China by using knowledge-based algorithms and microwave (PALSAR-2, Sentinel-1), optical (Sentinel-2, Landsat), and thermal (MODIS) images. J. Environ. Manag. 2024, 349, 119618.

- Wellmann, T.; Lausch, A.; Scheuer, S.; Haase, D. Earth observation based indication for avian species distribution models using the spectral trait concept and machine learning in an urban setting. Ecol. Indic. 2020, 111, 106029.

- Regos, A.; Tapia, L.; Gil-Carrera, A.; Domínguez, J. Monitoring protected areas from space: A multi-temporal assessment using raptors as biodiversity surrogates. PLoS ONE 2017, 12, e0181769.

- Gaspar, L.P.; Scarpelli, M.D.A.; Oliveira, E.G.; Alves, R.S.c.; Gomes, A.M.; Wolf, R.; Ferneda, R.V.; Kamazuka, S.H.; Gussoni, C.O.A.; Ribeiro, M.C. Predicting bird diversity through acoustic indices within the Atlantic Forest biodiversity hotspot. Front. Remote Sens. 2023, 4, 1283719.

- Bay, P.P. Artificial Intelligence for Computational Remote Sensing: Quantifying Patterns of Land Cover Types around Cheetham. J. Mar. Sci. Eng. 2024, 12, 1279.

- Radović, A.; Kapelj, S.; Taylor, L.T. Utilizing Remote Sensing Data for Species Distribution Modeling of Birds in Croatia. Diversity 2025, 17, 399.

- Awoyemi, A.G.; Alabi, T.R.; Ibáñez-Álamo, J.D. Remotely sensed spectral indicators of bird taxonomic, functional and phylogenetic diversity across Afrotropical urban and non-urban habitats. Ecol. Indic. 2025, 170, 112966.

- Roilo, S.; Spake, R.; Bullock, J.M.; Cord, A.F. A cross-regional analysis of red-backed shrike responses to agri-environmental schemes in Europe. Environ. Res. Lett. 2024, 19, 034004.

- Alírio, J.; Sillero, N.P.; Garcia, N.; Campos, J.J.; Arenas-Castro, S.; Pôças, I.; Duarte, L.; Teodoro, A.C.M. Montrends: A Google Earth Engine application for analysing species habitat suitability over time. Ecol. Inform. 2024, 13197, 51.

- Bekkema, M.E.; Eleveld, M. Mapping grassland management intensity using sentinel-2 satellite data. GI_Forum 2018, 6, 194–213.

- Ivanova, I.; Stankova, N.; Borisova, D.; Spasova, T.; Dancheva, A. Dynamics and development of Alepu marsh for the period 2013-2020 based on satellite data. In Proceedings of the Earth Resources and Environmental Remote Sensing/GIS Applications XII, Online, 13–17 September 2021; Volume 11863, pp. 1–9. [Google Scholar] [CrossRef]

- Abbott, B.N.; Wallace, J.; Nicholas, D.M.; Karim, F.; Waltham, N.J. Bund Removal to Re-Establish Tidal Flow, Remove Aquatic Weeds and Restore Coastal Wetland Services-North Queensland, Australia. PLoS ONE 2020, 15, e0217531. [Google Scholar] [CrossRef]

- Hayri Kesikoglu, M.; Haluk Atasever, U.; Dadaser-Celik, F.; Ozkan, C. Performance of ANN, SVM and MLH techniques for land use/cover change detection at Sultan Marshes wetland, Turkey. Water Sci. Technol. 2019, 80, 466–477. [Google Scholar] [CrossRef] [PubMed]

- Yang, Z.; Na, X. Mapping herbaceous wetlands using combined phenological and hydrological features from time-series Sentinel-1/2 imagery. Int. J. Digit. Earth 2025, 18, 2498600. [Google Scholar] [CrossRef]

- Minotti, P.G.; Rajngewerc, M.; Alí Santoro, V.; Grimson, R. Evaluation of SAR C-band interferometric coherence time-series for coastal wetland hydropattern mapping. J. South Am. Earth Sci. 2021, 106, 102976. [Google Scholar] [CrossRef]

- Wang, Q.; Cui, G.; Liu, H.; Huang, X.; Xiao, X.; Wang, M.; Jia, M.; Mao, D.; Li, X.; Xiao, Y.; et al. Spatiotemporal Dynamics and Potential Distribution Prediction of Spartina alterniflora Invasion in Bohai Bay Based on Sentinel Time-Series Data and MaxEnt Modeling. Remote Sens. 2025, 17, 975. [Google Scholar] [CrossRef]

- Cao, J.; Liu, Q.; Yu, C.; Chen, Z.; Dong, X.; Xu, M.; Zhao, Y. Extracting waterline and inverting tidal flats topography based on Sentinel-2 remote sensing images: A case study of the northern part of the North Jiangsu radial sand ridges. Geomorphology 2024, 461, 109323. [Google Scholar] [CrossRef]

- Cao, H.; Han, L.; Liu, Z.; Li, L. Monitoring and driving force analysis of spatial and temporal change of water area of Hongjiannao Lake from 1973 to 2019. Ecol. Inform. 2021, 61, 101230. [Google Scholar] [CrossRef]

- Villoslada, M.; Berner, L.T.; Juutinen, S.; Ylänne, H.; Kumpula, T. Upscaling vascular aboveground biomass and topsoil moisture of subarctic fens from Unoccupied Aerial Vehicles (UAVs) to satellite level. Sci. Total Environ. 2024, 933, 173049. [Google Scholar] [CrossRef] [PubMed]

- Sun, C.; Fagherazzi, S.; Liu, Y. Classification mapping of salt marsh vegetation by flexible monthly NDVI time-series using Landsat imagery. Estuar. Coast. Shelf Sci. 2018, 213, 61–80. [Google Scholar] [CrossRef]

- Zheng, J.Y.; Hao, Y.Y.; Wang, Y.C.; Zhou, S.Q.; Wu, W.B.; Yuan, Q.; Gao, Y.; Guo, H.Q.; Cai, X.X.; Zhao, B. Coastal Wetland Vegetation Classification Using Pixel-Based, Object-Based and Deep Learning Methods Based on RGB-UAV. Land 2022, 11, 2039. [Google Scholar] [CrossRef]

- Wang, Y.; Jin, S.; Dardanelli, G. Vegetation Classification and Evaluation of Yancheng Coastal Wetlands Based on Random Forest Algorithm from Sentinel-2 Images. Remote Sens. 2024, 16, 1124. [Google Scholar] [CrossRef]

- Jamali, A.; Mahdianpari, M.; Brisco, B.; Mao, D.; Salehi, B.; Mohammadimanesh, F. 3DUNetGSFormer: A deep learning pipeline for complex wetland mapping using generative adversarial networks and Swin transformer. Ecol. Inform. 2022, 72, 101904. [Google Scholar] [CrossRef]

- Heath, J.T.; Grimmett, L.; Gopalakrishnan, T.; Thomas, R.F.; Lenehan, J. Integrating Sentinel 2 Imagery with High-Resolution Elevation Data for Automated Inundation Monitoring in Vegetated Floodplain Wetlands. Remote Sens. 2024, 16, 2434. [Google Scholar] [CrossRef]

- Gannod, M.; Masto, N.; Owusu, C.; Highway, C.; Brown, K.E.; Blake-Bradshaw, A.; Feddersen, J.C.; Hagy, H.M.; Talbert, D.A.; Cohen, B. Semantic Segmentation with Multispectral Satellite Images of Waterfowl Habitat. In Proceedings of the International FLAIRS Conference, Miami, FL, USA, 16–19 May 2021. [Google Scholar]

- Bu, F.; Dai, Z.; Mei, X.; Chu, A.; Cheng, J.; Lan, L. Machine learning-based mapping wetland dynamics of the largest freshwater lake in China. Glob. Ecol. Conserv. 2025, 59, e03585. [Google Scholar] [CrossRef]

- Yao, H.; Fu, B.; Zhang, Y.; Li, S.; Xie, S.; Qin, J.; Fan, D.; Gao, E. Combination of Hyperspectral and Quad-Polarization SAR Images to Classify Marsh Vegetation Using Stacking Ensemble Learning Algorithm. Remote Sens. 2022, 14, 5478. [Google Scholar] [CrossRef]

- Hemati, M.; Mahdianpari, M.; Shiri, H. Integrating SAR and optical data for aboveground biomass estimation of coastal wetlands using machine learning: Multi-scale approach. Remote Sens. 2024, 16, 831. [Google Scholar]

- Jiang, W.; Zhang, M.; Long, J.; Pan, Y.; Ma, Y. HLEL: A wetland classification algorithm with self-learning capability, taking the Sanjiang Nature Reserve I as an example. J. Hydrol. 2023, 627, 130446. [Google Scholar] [CrossRef]

- Niedzielko, J.; Kope, D.; Jaroci, A. Testing Textural Information Base on LiDAR and Hyperspectral Data for Mapping Wetland Vegetation: A Case Study of Warta River Mouth National Park (Poland). Remote Sens. 2023, 15, 3055. [Google Scholar]

- Prentice, R.M.; Villoslada, M.; Ward, R.D.; Bergamo, T.F.; Joyce, C.B. Synergistic use of Sentinel-2 and UAV-derived data for plant fractional cover distribution mapping of coastal meadows with digital elevation models. Biogeosciences 2024, 21, 1411–1431. [Google Scholar] [CrossRef]

- Gasela, M.; Kganyago, M.; Jager, G.D. Using resampled nSight-2 hyperspectral data and various machine learning classifiers for discriminating wetland plant species in a Ramsar Wetland site, South Africa. Appl. Geomat. 2024, 16, 429–440. [Google Scholar] [CrossRef]

- Xiang, H.; Xi, Y.; Mao, D.; Mahdianpari, M.; Zhang, J.; Wang, M.; Jia, M.; Yu, F.; Wang, Z. Mapping potential wetlands by a new framework method using random forest algorithm and big earth data: A case study in China’s Yangtze River Basin. Glob. Ecol. Conserv. 2023, 42, e02397. [Google Scholar] [CrossRef]

- Jafarzadeh, H.; Mahdianpari, M.; Gill, E.W. Wet-GC: A Novel Multimodel Graph Convolutional Approach for Wetland Classification Using Sentinel-1 and 2 Imagery with Limited Training Samples. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5303–5316. [Google Scholar] [CrossRef]

- Turnbull, A.; Soto, M.; Michael, B. Delineation and Classification of Wetlands in the Northern Jarrah Forest, Western Australia Using Remote Sensing and Machine Learning. Wetlands 2024, 44, 52. [Google Scholar] [CrossRef]

- Tu, C.; Li, P.; Li, Z.; Wang, H.; Yin, S.; Li, D.; Zhu, Q.; Chang, M.; Liu, J.; Wang, G. Synergetic Classification of Coastal Wetlands over the Yellow River Delta with GF-3 Full-Polarization SAR and Zhuhai-1 OHS Hyperspectral Remote Sensing. Remote Sens. 2021, 13, 4444. [Google Scholar] [CrossRef]

- Mallick, J.; Talukdar, S.; Pal, S.; Rahman, A. A novel classifier for improving wetland mapping by integrating image fusion techniques and ensemble machine learning classifiers. Ecol. Inform. 2021, 65, 101426. [Google Scholar] [CrossRef]

- Musungu, K.; Dube, T.; Smit, J.; Shoko, M. Using UAV multispectral photography to discriminate plant species in a seep wetland of the Fynbos Biome. Wetl. Ecol. Manag. 2024, 32, 207–227. [Google Scholar] [CrossRef]

- Zheng, G.; Wang, Y.; Zhao, C.; Dai, W.; Kattel, G.R. Quantitative Analysis of Tidal Creek Evolution and Vegetation Variation in Silting Muddy Flats on the Yellow Sea. Remote Sens. 2023, 15, 5107. [Google Scholar] [CrossRef]

- Gxokwe, S.; Dube, T.; Mazvimavi, D. Leveraging Google Earth Engine platform to characterize and map small seasonal wetlands in the semi-arid environments of South Africa. Sci. Total Environ. 2022, 803, 150139. [Google Scholar] [CrossRef] [PubMed]

- Chignell, S.M.; Evangelista, P.H.; Luizza, M.W.; Skach, S.; Young, N.E. An integrative modeling approach to mapping wetlands and riparian areas in a heterogeneous Rocky Mountain watershed. Remote Sens. Ecol. Conserv. 2018, 4, 150–165. [Google Scholar] [CrossRef]

- Bartold, M.; Kluczek, M. Estimating of chlorophyll fluorescence parameter Fv/Fm for plant stress detection at peatlands under Ramsar Convention with Sentinel-2 satellite imagery. Ecol. Inform. 2024, 81, 102603. [Google Scholar] [CrossRef]

- Wen, L. Coastal Wetland Mapping Using Ensemble Learning Algorithms: A Comparative Study of Bagging, Boosting and Stacking Techniques. Remote Sens. 2020, 12, 1683. [Google Scholar] [CrossRef]

- Zhao, J.Q.; Wang, Z.; Zhang, Q.; Niu, Y.; Lu, Z.; Zhao, Z. A novel feature selection criterion for wetland mapping using GF-3 and Sentinel-2 Data. Ecol. Indic. 2025, 171, 113146. [Google Scholar] [CrossRef]

- Yang, Y.; Meng, Z.; Zu, J.; Cai, W.; Wang, J.; Su, H.; Yang, J. Fine-Scale Mangrove Species Classification Based on UAV Multispectral and Hyperspectral Remote Sensing Using Machine Learning. Remote Sens. 2024, 16, 3093. [Google Scholar] [CrossRef]

- Zhen, J.; Mao, D.; Shen, Z.; Zhao, D.; Xu, Y.; Wang, J.; Jia, M.; Wang, Z.; Ren, C. Performance of XGBoost Ensemble Learning Algorithm for Mangrove Species Classification with Multisource Spaceborne Remote Sensing Data. J. Remote Sens. 2024, 4, 0146. [Google Scholar] [CrossRef]

- Li, H.; Cui, G.; Liu, H.; Wang, Q.; Zhao, S.; Huang, X.; Zhang, R.; Jia, M.; Mao, D.; Yu, H.; et al. Dynamic Analysis of Spartina alterniflora in Yellow River Delta Based on U-Net Model and Zhuhai-1 Satellite. Remote Sens. 2025, 17, 226. [Google Scholar] [CrossRef]

- Chen, B.; Zhang, Q.; Yang, N.; Wang, X.; Zhang, X.; Chen, Y.; Wang, S. Deep Learning Extraction of Tidal Creeks in the Yellow River Delta Using GF-2 Imagery. Remote Sens. 2025, 17, 676. [Google Scholar] [CrossRef]

- Jamali, A.; Mahdianpari, M. Swin Transformer for Complex Coastal Wetland Classification Using the Integration of Sentinel-1 and Sentinel-2 Imagery. Water 2022, 14, 178. [Google Scholar] [CrossRef]

- Li, H.; Wang, C.; Cui, Y.; Hodgson, M. Mapping salt marsh along coastal South Carolina using U-Net. ISPRS J. Photogramm. Remote Sens. 2021, 179, 121–132. [Google Scholar] [CrossRef]

- Pouliot, D.; Latifovic, R.; Pasher, J.; Duffe, J. Assessment of Convolution Neural Networks for Wetland Mapping with Landsat in the Central Canadian Boreal Forest Region. Remote Sens. 2019, 11, 772. [Google Scholar] [CrossRef]

- Jamali, A.; Mahdianpari, M.; Mohammadimanesh, F.; Brisco, B. A Synergic Use of Sentinel-1 and Sentinel-2 Imagery for Complex Wetland Classification Using Generative Adversarial Network (GAN) Scheme. Water 2021, 13, 3601. [Google Scholar] [CrossRef]

- Marjani, M.; Mohammadimanesh, F.; Mahdianpari, M.; Gill, E.W. A novel spatio-temporal vision transformer model for improving wetland mapping using multi-seasonal sentinel data. Remote Sens. Appl. Soc. Environ. 2025, 37, 101401. [Google Scholar] [CrossRef]

- Jamali, A.; Mahdianpari, M.; Mohammadimanesh, F.; Homayouni, S. A deep learning framework based on generative adversarial networks and vision transformer for complex wetland classification using limited training samples. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103095. [Google Scholar] [CrossRef]

- Mainali, K.; Evans, M.; Saavedra, D.; Mills, E.; Madsen, B.; Minnemeyer, S. Convolutional neural network for high-resolution wetland mapping with open data: Variable selection and the challenges of a generalizable model. Sci. Total Environ. 2023, 861, 160622. [Google Scholar] [CrossRef]

- Hosseiny, B.; Mahdianpari, M.; Brisco, B.; Mohammadimanesh, F.; Salehi, B. WetNet: A Spatial–Temporal Ensemble Deep Learning Model for Wetland Classification Using Sentinel-1 and Sentinel-2. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14. [Google Scholar] [CrossRef]

- Bakkestuen, V.; Venter, Z.; Ganerød, A.J.; Framstad, E. Delineation of Wetland Areas in South Norway from Sentinel-2 Imagery and LiDAR Using TensorFlow, U-Net, and Google Earth Engine. Remote Sens. 2023, 15, 1203. [Google Scholar] [CrossRef]

- Ghaznavi, A.; Saberioon, M.; Brom, J.; Itzerott, S. Comparative performance analysis of simple U-Net, residual attention U-Net, and VGG16-U-Net for inventory inland water bodies. Appl. Comput. Geosci. 2024, 21, 100150. [Google Scholar] [CrossRef]

- Cui, B.; Wu, J.; Li, X.; Ren, G.; Lu, Y. Combination of deep learning and vegetation index for coastal wetland mapping using GF-2 remote sensing images. Natl. Remote Sens. Bull. 2023, 27, 6–16. [Google Scholar] [CrossRef]

- Marjani, M.; Mahdianpari, M.; Mohammadimanesh, F.; Gill, E.W. CVTNet: A Fusion of Convolutional Neural Networks and Vision Transformer for Wetland Mapping Using Sentinel-1 and Sentinel-2 Satellite Data. Remote Sens. 2024, 16, 2427. [Google Scholar] [CrossRef]

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87. [Google Scholar] [CrossRef]

- Jia, M.; Mao, D.; Wang, Z.; Ren, C.; Zhu, Q.; Li, X.; Zhang, Y. Tracking long-term floodplain wetland changes: A case study in the China side of the Amur River Basin. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102185. [Google Scholar] [CrossRef]

- Dutt, R.; Ortals, C.; He, W.; Curran, Z.C.; Angelini, C.; Canestrelli, A.; Jiang, Z. A Deep Learning Approach to Segment Coastal Marsh Tidal Creek Networks from High-Resolution Aerial Imagery. Remote Sens. 2024, 16, 2659. [Google Scholar] [CrossRef]

- Vincent, W.F.; Pina, P.; Freitas, P.; Vieira, G.; Mora, C. A trained Mask R-CNN model over PlanetScope imagery for very-high resolution surface water mapping in boreal forest-tundra. Remote Sens. Environ. 2024, 304, 114047. [Google Scholar] [CrossRef]

- Cai, F.; Tang, B.-H.; Sima, O.; Chen, G.; Zhang, Z. Fine Extraction of Plateau Wetlands Based on a Combination of Object-Oriented Machine Learning and Ecological Rules: A Case Study of Dianchi Basin. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5364–5377. [Google Scholar] [CrossRef]

- Mohseni, F.; Amani, M.; Mohammadpour, P.; Kakooei, M.; Jin, S.; Moghimi, A. Wetland Mapping in Great Lakes Using Sentinel-1/2 Time-Series Imagery and DEM Data in Google Earth Engine. Remote Sens. 2023, 15, 3495. [Google Scholar] [CrossRef]

- Sun, Z.; Jiang, W.; Ling, Z.; Zhong, S.; Zhang, Z.; Song, J.; Xiao, Z. Using Multisource High-Resolution Remote Sensing Data (2 m) with a Habitat–Tide–Semantic Segmentation Approach for Mangrove Mapping. Remote Sens. 2023, 15, 5271. [Google Scholar] [CrossRef]

- Hu, X.; Zhang, P.; Zhang, Q.; Wang, J. Improving wetland cover classification using artificial neural networks with ensemble techniques. GISci. Remote Sens. 2021, 58, 603–623. [Google Scholar] [CrossRef]

- Habib, W.; McGuinness, K.; Connolly, J. Mapping artificial drains in peatlands—A national-scale assessment of Irish raised bogs using sub-meter aerial imagery and deep learning methods. Remote Sens. Ecol. Conserv. 2024, 10, 551–562. [Google Scholar] [CrossRef]

- Zhuo, W.; Wu, N.; Shi, R.; Liu, P.; Zhang, C.; Fu, X.; Cui, Y. Aboveground biomass retrieval of wetland vegetation at the species level using UAV hyperspectral imagery and machine learning. Ecol. Indic. 2024, 166, 112365. [Google Scholar] [CrossRef]

- Masood, M.; He, C.; Shah, S.A.; Rehman, S.A.U. Land Use Change Impacts over the Indus Delta: A Case Study of Sindh Province, Pakistan. Land 2024, 13, 1080. [Google Scholar] [CrossRef]

- Fu, C.; Song, X.; Xie, Y.; Wang, C.; Luo, J.; Fang, Y.; Cao, B.; Qiu, Z. Research on the Spatiotemporal Evolution of Mangrove Forests in the Hainan Island from 1991 to 2021 Based on SVM and Res-UNet Algorithms. Remote Sens. 2022, 14, 5554. [Google Scholar] [CrossRef]

- Vas, E.; Lescroël, A.; Duriez, O.; Boguszewski, G.; Grémillet, D. Approaching birds with drones: First experiments and ethical guidelines. Biol. Lett. 2015, 11, 20140754. [Google Scholar] [CrossRef]

- Chen, L.; Letu, H.; Fan, M.; Shang, H.; Tao, J.; Wu, L.; Zhang, Y.; Yu, C.; Gu, J.; Zhang, N.; et al. An Introduction to the Chinese High-Resolution Earth Observation System: Gaofen-1∼7 Civilian Satellites. J. Remote Sens. 2022, 2022, 9769536. [Google Scholar] [CrossRef]

- Lichun, M.; Ram, P. Wetland conservation legislations: Global processes and China’s practices. J. Plant Ecol. 2024, 17, rtae018. [Google Scholar] [CrossRef]

- Ye, S.; Pei, L.; He, L.; Xie, L.; Zhao, G.; Yuan, H.; Ding, X.; Pei, S.; Yang, S.; Li, X.; et al. Wetlands in China: Evolution, Carbon Sequestrations and Services, Threats, and Preservation/Restoration. Water 2022, 14, 1152. [Google Scholar] [CrossRef]

- McEvoy, J.F.; Hall, G.P.; McDonald, P.G. Evaluation of unmanned aerial vehicle shape, flight path and camera type for waterfowl surveys: Disturbance effects and species recognition. PeerJ 2016, 4, e1831. [Google Scholar] [CrossRef]

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| The study must focus on wetland monitoring and/or bird-habitat assessment. | Studies focusing exclusively on terrestrial ecosystems or general land cover mapping without relation to wetlands or bird habitats. |
| The study must apply ML/DL algorithms for wetland and/or bird-habitat monitoring. | Studies that rely solely on traditional field-based methods without remote sensing or ML/DL integration. |
| The study must explore remote sensing technologies. | Studies using only conventional ground surveys, expert-based manual mapping, or MODIS imagery alone. |
| The study was published from 2015 onward. | The study was published before 2015. |
| The study must be written in English for better accessibility. | Studies written in languages other than English. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zerrouk, M.; Ait El Kadi, K.; Sebari, I.; Fellahi, S. Machine and Deep Learning for Wetland Mapping and Bird-Habitat Monitoring: A Systematic Review of Remote-Sensing Applications (2015–April 2025). Remote Sens. 2025, 17, 3605. https://doi.org/10.3390/rs17213605
