1. Introduction

The increasing frequency and intensity of natural disasters, amplified by climate change and anthropogenic pressures, present one of the most significant challenges to global sustainable development in the 21st century [1,2]. These events inflict severe damage on socio-economic systems, threaten human security, and cause long-term degradation of ecological environments [3,4]. Recent global assessments by the United Nations Office for Disaster Risk Reduction (UNDRR) highlight a concerning trend of rising direct economic losses and impacts on livelihoods, even as mortality rates from major events in highly developed countries have, in some cases, been mitigated through improved early warning systems (EWS) [5,6]. This underscores the critical role of timely and accurate early warnings as a cornerstone of disaster risk reduction, a fact emphatically recognized by the UN’s “Early Warnings for All” initiative, which aims to ensure global coverage by 2027 [7,8].

Concurrently, we are living in the golden age of Earth observation. The advent of satellite constellations, unmanned aerial vehicles (UAVs), and the paradigm of remote sensing big data have revolutionized our capacity to monitor the planet with unprecedented spatial, temporal, and spectral resolution [9,10,11,12]. Satellites such as Sentinel-1/2, Landsat 8/9, and the Gaofen series provide continuous, synoptic data streams that are indispensable for pre-disaster risk assessment, real-time impact analysis, and post-disaster recovery monitoring [13,14,15]. For instance, Synthetic Aperture Radar (SAR) imagery enables all-weather detection of flood extents and of ground deformation caused by earthquakes or landslides [16,17], while multispectral and hyperspectral data are pivotal for monitoring wildfires, droughts, and agricultural disasters [18,19].

However, a profound and persistent gap exists between the explosive growth of data acquisition capabilities and the translation of these data into actionable, decision-ready knowledge [20,21]. This is the “data-rich, information-poor” dilemma. In the specific context of disaster early warning, remote sensing data are often siloed, serving primarily as a physical sensor for quantifying environmental anomalies, such as changes in water surface area, land surface temperature, or vegetation indices [22,23]. Conversely, sector-specific disaster management agencies rely heavily on statistical databases such as the Emergency Events Database (EM-DAT) and DesInventar, which catalog impacts in terms of human casualties (deaths, injuries) and economic losses [24,25]. The integration between these two worlds, the physical world observed by satellites and the socio-economic world documented by statistics, remains semantically weak and operationally fragmented [26,27]. There is no unified framework to seamlessly convert a “0.5 intensity anomaly” detected by a satellite into a probabilistic estimate of “potential economic loss” or to reconcile it with existing industry warning levels (e.g., Blue, Yellow, Orange, Red). This disconnect hinders the formation of a closed-loop, knowledge-driven early warning system where remote sensing can dynamically inform and validate statistical models, and vice versa.

In the quest to bridge this gap, Knowledge Graphs (KGs) have emerged as a transformative technology for representing and reasoning over complex, interconnected information [28,29]. A KG structures knowledge as a network of entities (nodes) and their relationships (edges), typically expressed as semantic triples (subject-predicate-object), enabling powerful associative querying and logical inference [30,31]. The application of KGs in geoscience and disaster management is a rapidly advancing frontier. Early research focused on constructing spatiotemporal KGs for specific disaster types, for example to model the evolution of agro-meteorological disasters [32] or to organize emergency response procedures for earthquakes [33]. Subsequent studies explored predictive capabilities; for example, Ma et al. [34] integrated multi-source spatio-temporal data into a KG for wildfire and landslide prediction. Furthermore, domain-specific KGs have been built to fuse building vulnerability data with remote sensing-derived exposure information for seismic risk assessment [35].

Despite these promising developments, a critical analysis reveals three salient limitations in the current state of the art:

(1) Static Knowledge Representation vs. Dynamic Knowledge Generation: Existing disaster KGs predominantly function as static knowledge bases, organizing established facts and historical relationships [36,37]. They lack embedded computational mechanisms to dynamically generate new warning knowledge from real-time or near-real-time data streams, particularly from quantitative remote sensing analyses [38].

(2) The “Semantic Gap” in Multi-Source Data Fusion: While many studies advocate multi-source data fusion, few have established a formal, ontology-driven mechanism for knowledge conversion between heterogeneous domains [39,40]. The semantic mismatch between continuous remote sensing indices (e.g., anomaly intensity) and discrete, impact-based industry warning levels remains a significant, unaddressed challenge [41]. This limits the KG’s ability to act as a true interoperability platform.

(3) Underdeveloped Operational Warning Reasoning: The reasoning capabilities of many current KGs are limited to simple semantic queries or rely on predefined, static rules [42,43]. KGs that integrate quantitative assessment models (e.g., for intensity, impact, and trend) with explicit, operational rule sets that automatically trigger warning decisions remain scarce, so the loop from “data” to “decision” is not closed [44].

To address these interconnected research gaps, this study proposes the construction of a Remote Sensing Early Warning Knowledge Graph (RSEW-KG) for natural disasters. Our work moves beyond traditional knowledge organization to establish a dynamic, operational system. The core contributions are: (1) We design a comprehensive, formal ontology for natural disaster early warning that provides a unified semantic schema for representing and linking entities and concepts from both the remote sensing and sectoral statistical domains. (2) We propose a suite of quantitative, remote sensing-derived evaluation indicators for disaster intensity, impact, and trend. More importantly, we establish a transparent, operational knowledge conversion mechanism, defined by explicit mapping rules, that bridges the semantic gap between these remote sensing-based assessments and established sector-specific warning levels. (3) We implement a complete pipeline for multi-source knowledge fusion and storage in the Neo4j graph database, and we develop and demonstrate a rule-based reasoning engine for early warning decision-making. The framework covers 17 types of natural disasters, with a detailed case study of wildfire events.

2. Materials and Methods

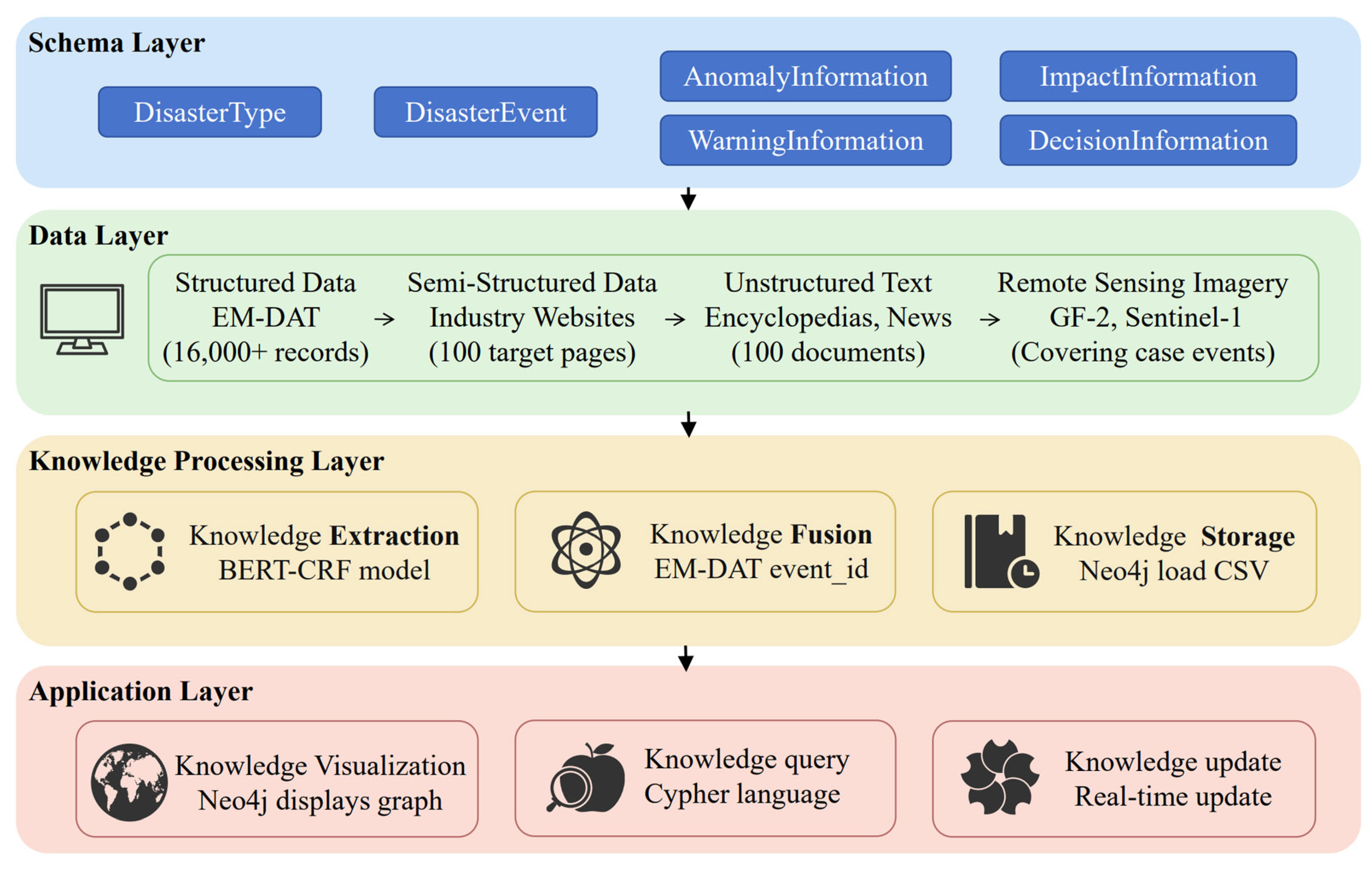

2.1. Overall Framework of the Knowledge Graph

A knowledge graph is a structured semantic knowledge base that uses graph representations to express concepts in the physical world and their interrelationships. Its fundamental units are triples, structured as {(entity, relation, entity)} or {(entity, attribute, attribute value)} [30]. Entities are interconnected through relationships, forming a networked knowledge structure. In the natural disaster domain, the volume of disaster data is immense, event descriptions are complex, and remote sensing monitoring information is diverse; moreover, the close interrelationships among entities make this a typical complex knowledge system. The remote sensing early warning knowledge graph constructed in this study adopts a hybrid approach that combines top-down (schema layer design) and bottom-up (data layer construction) methodologies (Figure 1). The overall framework comprises:

- (1) Schema Layer: Defines the core concepts, attributes, and relationships of the knowledge graph, serving as its semantic foundation.
- (2) Data Layer: Stores specific instances of entities, attribute values, and relationships, forming the knowledge body of the graph.
- (3) Knowledge Processing Layer: Responsible for extracting, integrating, and storing multi-source raw data, representing the core technical component of knowledge graph construction.
- (4) Application Layer: Provides services such as knowledge visualization, querying, and rule-based early warning reasoning, embodying the ultimate value of the knowledge graph.

Figure 1. The framework of the Remote Sensing Early Warning Knowledge Graph (RSEW-KG).


2.2. Schema Layer Construction: Ontology Design

The schema layer forms the abstract logical structure of the knowledge graph. Drawing on the ISO 19115 [45] geographic information standard [38] and related disaster management standards, this study designed an ontology model whose core classes and key attributes are defined as follows.

DisasterType: Covers 17 types of natural disasters, including earthquakes, floods, and wildfires. To comprehensively encompass the various disaster categories, the classification adopts the system co-developed by the Centre for Research on the Epidemiology of Disasters (CRED) and Munich Re, together with the “Peril Classification and Hazard Glossary” established by Integrated Research on Disaster Risk (IRDR) [24]. Disasters are categorized into six major groups: geophysical, hydrological, meteorological, climatological, biological, and extraterrestrial.

DisasterEvent: Represents specific disaster instances. Core attributes include event ID (‘event_id’) and name (‘event_name’). Each disaster type encompasses all corresponding disaster events.

AnomalyInformation: Describes basic information about disaster occurrence. Because the International Organization for Standardization (ISO) has standardized the description of geographic information for spatial data [46], the core attributes are aligned with geographic information and disaster management standards, notably ISO 19115 [45], and include occurrence time (‘occur_date’), longitude and latitude (‘longitude’, ‘latitude’), and specific location (‘location’).

ImpactInformation: Based on the economic loss assessment guidelines from the United Nations Office for Disaster Risk Reduction’s (UNDRR) “Sendai Framework for Disaster Risk Reduction 2015–2030” [47], impact information is characterized from three perspectives: human, economic, and environmental losses. Core attributes include number of deaths (‘deaths’), direct economic loss (‘economic_loss’), and affected area (‘affected_area’).

WarningInformation: A major challenge in disaster management is the multiplicity of severity definitions established by different sectors, based on event intensity or impact alone or in combination with other criteria, which leads to problems of specificity and exclusivity in assessment. To address this, this study proposes a comprehensive evaluation method that uses quantitative indicators derived from remote sensing data to assess intensity, impact, and trend. Intensity reflects the degree to which a remotely sensed surface anomaly deviates from normal conditions and is graded by comprehensively weighting deviations across multiple spatial scales (pixel to scene). Intensity is classified into five levels, 0 (none), 1 (slight), 2 (moderate), 3 (high), and 4 (severe), with tentative thresholds of <0.1, 0.1–0.3, 0.3–0.5, 0.5–0.7, and >0.7. Impact refers to the effects of surface anomalies on human life, property (e.g., buildings and infrastructure), and the environment (e.g., vegetation, water, atmosphere, crops), and is classified into five levels (0 to 4) based on a comprehensive assessment of casualties and economic losses. Trend describes the development trajectory of surface anomalies from hours to days after occurrence, manifested as abnormal changes in remote sensing features (e.g., spectrum, texture, temperature, geometry, structure). Trends are classified into five levels (0 to 4), covering scenarios that range from the anomaly intensity or impact disappearing or decreasing to rapidly increasing.
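The tentative intensity thresholds amount to a simple lookup from a continuous value to a discrete level. The minimal Python sketch below assumes that each lower bound is inclusive (so 0.3 maps to level 2, and a value of exactly 0.7 maps to level 4), a boundary convention the text leaves open; the names `INTENSITY_THRESHOLDS` and `intensity_level` are hypothetical.

```python
from bisect import bisect_right

# Tentative thresholds from the text; lower bounds are ASSUMED inclusive.
INTENSITY_THRESHOLDS = (0.1, 0.3, 0.5, 0.7)
INTENSITY_LABELS = ("none", "slight", "moderate", "high", "severe")


def intensity_level(lsai: float) -> int:
    """Map a continuous anomaly intensity value to a discrete level 0-4."""
    # bisect_right counts how many thresholds are <= lsai, which is the level.
    return bisect_right(INTENSITY_THRESHOLDS, lsai)


for value in (0.05, 0.1, 0.42, 0.9):
    level = intensity_level(value)
    print(value, level, INTENSITY_LABELS[level])
```

Using a sorted threshold tuple keeps the grading rule in one place, so revising the tentative thresholds later does not require touching the lookup logic.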

DecisionInformation: To strengthen the leading role of remote sensing in detecting, diagnosing, and warning of surface anomalies, and to ensure consistent assessment of event severity and impact, a systematic decision-making and dissemination mechanism is designed on the basis of the warning information. Drawing on sector-specific classifications of surface anomaly events, it achieves knowledge conversion from “sector” to “remote sensing” through integrated evaluation of intensity, impact, and trend using remote sensing methods. A value of 0 indicates that no warning is issued, and 1 indicates that a warning is issued. The criterion for issuance (value = 1) is: intensity ≥ 1 or impact ≥ 1 or trend ≥ 3.
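The issuance criterion reduces to a single Boolean expression over the three graded levels. The sketch below is illustrative: the `WarningInformation` dataclass mirrors the ontology class, but its field names, the 0–4 range check, and the function name `decide` are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarningInformation:
    """Graded remote sensing assessment of one event (each level in 0-4)."""
    intensity: int
    impact: int
    trend: int


def decide(w: WarningInformation) -> int:
    """Return 1 (issue a warning) or 0 (no warning) per the stated criterion."""
    for name in ("intensity", "impact", "trend"):
        level = getattr(w, name)
        if not 0 <= level <= 4:
            raise ValueError(f"{name} level must be in 0..4, got {level}")
    # intensity >= 1 OR impact >= 1 OR trend >= 3
    return int(w.intensity >= 1 or w.impact >= 1 or w.trend >= 3)


print(decide(WarningInformation(intensity=0, impact=0, trend=2)))  # 0
print(decide(WarningInformation(intensity=0, impact=0, trend=3)))  # 1
```

For the Coimbra wildfire discussed in Section 3.1 (intensity 4, impact 3, trend 3), `decide` returns 1. Note the asymmetry: a trend level of 3 triggers a warning on its own, whereas trend levels 1 and 2 do not.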

These core classes are interconnected through object properties (e.g., ‘DisasterEvent’ hasAnomaly ‘AnomalyInformation’, ‘DisasterEvent’ hasImpact ‘ImpactInformation’).
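The object properties can be treated as a whitelist of typed triples against which data-layer links are checked before loading. In this sketch, `hasAnomaly` and `hasImpact` come from the text and `hasDecision` from the Cypher query in Section 3.2; `hasEvent` and `hasWarning` are hypothetical relation names, as is the `conforms` helper.

```python
# Allowed (domain class, relation, range class) triples of the schema layer.
# hasEvent and hasWarning are HYPOTHETICAL names used only for illustration.
SCHEMA = {
    ("DisasterType", "hasEvent", "DisasterEvent"),
    ("DisasterEvent", "hasAnomaly", "AnomalyInformation"),
    ("DisasterEvent", "hasImpact", "ImpactInformation"),
    ("DisasterEvent", "hasWarning", "WarningInformation"),
    ("DisasterEvent", "hasDecision", "DecisionInformation"),
}


def conforms(subject_class: str, relation: str, object_class: str) -> bool:
    """Check whether a typed data-layer triple is permitted by the schema."""
    return (subject_class, relation, object_class) in SCHEMA


print(conforms("DisasterEvent", "hasImpact", "ImpactInformation"))  # True
print(conforms("ImpactInformation", "hasImpact", "DisasterEvent"))  # False
```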

2.3. Data Layer Construction: Knowledge Acquisition and Fusion

2.3.1. Multi-Source Data Acquisition and Processing

This study integrates four types of data (Table 1), processed as follows:

Structured Data (EM-DAT): Acquired directly through its official API, cleaned, and converted into CSV format. Serves as the primary source for ‘DisasterEvent’ and ‘ImpactInformation’.

Semi-Structured Data (Web Pages): Targeted crawling of specific disaster warning websites using the Python Scrapy framework (version 2.11.0). HTML page structures were parsed to extract summaries of disaster events.

Unstructured Text (Encyclopedias, News): For such data, a BERT-CRF model was employed for Named Entity Recognition (NER). Specifically, the BERT-base-Chinese pre-trained model from Hugging Face’s Transformers library was fine-tuned on a custom-labeled corpus of Chinese disaster reports. The training corpus contained approximately 20,000 sentences annotated with entity types including disaster names, locations, dates, and impacts. The subsequent CRF layer was implemented using the pytorch-crf library to learn and enforce label transition constraints, improving the consistency of the predicted entity sequences. This combined architecture effectively captures contextual semantic information while ensuring valid label sequences.
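The CRF layer’s role of enforcing valid label transitions can be illustrated without the neural part. The sketch below assumes a BIO tagging scheme and hypothetical entity labels (`LOC`, `DIS`), neither of which the text specifies; it checks the hard transition constraint that a CRF over BIO tags is typically trained to respect, then decodes a valid tag sequence into entity spans.

```python
def is_valid_bio(tags):
    """True if no I-X tag follows anything other than B-X or I-X."""
    prev = "O"
    for tag in tags:
        if tag.startswith("I-"):
            entity = tag[2:]
            if prev not in (f"B-{entity}", f"I-{entity}"):
                return False
        prev = tag
    return True


def extract_entities(tokens, tags):
    """Group character tokens into (entity_type, text) spans.

    Assumes `tags` has already passed is_valid_bio.
    """
    spans, current_type, current = [], None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("I-"):
            current.append(token)  # continue the open span
            continue
        if current_type is not None:
            spans.append((current_type, "".join(current)))  # close the span
        if tag.startswith("B-"):
            current_type, current = tag[2:], [token]
        else:  # "O": outside any entity
            current_type, current = None, []
    if current_type is not None:
        spans.append((current_type, "".join(current)))
    return spans


# Hypothetical character-level example: "四川发生地震" (an earthquake occurred in Sichuan).
tokens = list("四川发生地震")
tags = ["B-LOC", "I-LOC", "O", "O", "B-DIS", "I-DIS"]
print(is_valid_bio(tags))              # True
print(extract_entities(tokens, tags))  # [('LOC', '四川'), ('DIS', '地震')]
print(is_valid_bio(["O", "I-LOC"]))    # False
```

Character-level tokens are joined without spaces, which suits Chinese text and the BERT-base-Chinese tokenizer’s per-character behavior; word-level input would need a separator.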

Remote Sensing Imagery (GF-2, Sentinel-1): Downloaded from platforms such as the China Centre for Resources Satellite Data and Application and the ESA Copernicus Open Access Hub. Imagery was not directly used as nodes; instead, quantitative analysis was performed on the images, and the results (intensity, impact, and trend values) were used as attribute values of the ‘WarningInformation’ entity.

Table 1. Multi-Source Data Sources and Processing Methods.


| Data Type | Source | Sample Size | Processing Method and Use |
|---|---|---|---|
| Structured Data | EM-DAT | 16,000+ records | Data cleaning; core source for DisasterEvent and ImpactInformation |
| Semi-Structured Data | Industry Websites | 100 target pages | Web crawling and parsing; supplementary event details |
| Unstructured Text | Wikipedia, News | 100 documents | Entity extraction via BERT-CRF; supplements anomaly and impact information |
| Remote Sensing Data | GF-2, Sentinel-1 | Covering case events | Quantitative remote sensing inversion; generates attribute values for WarningInformation |

2.3.2. Knowledge Fusion and Storage

To address redundancy and semantic conflicts during the integration of multi-source heterogeneous data, a systematic fusion workflow with explicit rules was designed and implemented. The workflow consists of entity alignment using authoritative sources as primary keys and conflict resolution based on defined priority rules, and it culminates in batch construction of the graph database.

- (1) Entity Alignment and Primary Key Definition

Given that the EM-DAT database provides authoritative and structured records of disaster events globally, its officially assigned event_id is defined as the core primary key and canonical source for DisasterEvent entities within the knowledge graph. Event information from other data sources is aligned with EM-DAT records through spatiotemporal fuzzy matching. Specifically, based on the occurrence time and geographical location (toponym string similarity or geocoordinate proximity) of disaster events, scattered data records are associated under a unified event_id, thereby achieving entity resolution at the data layer.
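The spatiotemporal fuzzy matching can be sketched as a date window followed by either geocoordinate proximity or toponym similarity. All thresholds below (7 days, 100 km, a similarity ratio of 0.8) and the names `EventRecord`, `same_event`, and `align` are hypothetical, and `difflib.SequenceMatcher` stands in for whatever string-similarity measure the pipeline actually uses. The EM-DAT entry reuses the date and coordinates reported for event 2017-0417-PRT in Section 3.1; the other two records are invented.

```python
import math
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class EventRecord:
    occur_date: date
    location: str
    latitude: Optional[float] = None
    longitude: Optional[float] = None


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km on a spherical Earth (radius 6371 km)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def same_event(a, b, max_days=7, max_km=100.0, min_name_sim=0.8):
    """Hypothetical rule: date window first, then coordinates or toponym."""
    if abs((a.occur_date - b.occur_date).days) > max_days:
        return False
    coords = (a.latitude, a.longitude, b.latitude, b.longitude)
    if None not in coords:  # prefer geocoordinate proximity when available
        return haversine_km(*coords) <= max_km
    # otherwise fall back to toponym string similarity
    ratio = SequenceMatcher(None, a.location.lower(), b.location.lower()).ratio()
    return ratio >= min_name_sim


def align(record, emdat_events):
    """Return the EM-DAT event_id the record belongs to, or None."""
    for event_id, canonical in emdat_events.items():
        if same_event(record, canonical):
            return event_id
    return None


emdat = {"2017-0417-PRT": EventRecord(date(2017, 10, 15), "Coimbra", 40.2678, -8.2713)}
web_record = EventRecord(date(2017, 10, 16), "coimbra")  # no coordinates
news_record = EventRecord(date(2017, 10, 14), "Portugal", 40.2, -8.4)
print(align(web_record, emdat), align(news_record, emdat))
```

A linear scan is enough to show the logic; for thousands of EM-DAT records, candidates would normally be pre-filtered by year and country before pairwise comparison.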

- (2) Conflict Resolution Rules for Multi-Source Data

When inconsistencies exist for the same attribute (e.g., “direct economic loss,” “number of deaths”) across different sources, the following tiered priority rules are applied for resolution to ensure the accuracy and consistency of the knowledge graph data. The highest priority is assigned to authoritative structured databases. Data from EM-DAT is preferentially adopted. Secondary priority is assigned to official sector-specific websites. Real-time data from relevant government management departments or professional agencies serves as an important supplement. The lowest priority is assigned to unstructured or encyclopedic sources. Information from such sources is used only to fill attribute gaps when data from higher-priority sources is unavailable.
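The tiered priority rule can be expressed as keeping, for each attribute, the non-missing value from the best-ranked source. The source keys, the `resolve` function, and the sample values below are hypothetical; the numbers are illustrative and do not describe any real event.

```python
# Tiered priority from the text: lower rank wins. Source keys are HYPOTHETICAL.
SOURCE_RANK = {"emdat": 0, "sector_website": 1, "unstructured": 2}


def resolve(candidates):
    """Resolve attribute conflicts from (source, attribute, value) tuples.

    For each attribute, the value from the highest-priority source wins;
    lower-priority sources only fill attributes no better source provides.
    """
    best = {}
    for source, attribute, value in candidates:
        if value is None:  # a missing value never overrides anything
            continue
        rank = SOURCE_RANK[source]
        if attribute not in best or rank < best[attribute][0]:
            best[attribute] = (rank, value)
    return {attribute: value for attribute, (_, value) in best.items()}


# Illustrative values only.
candidates = [
    ("unstructured", "deaths", 12),
    ("emdat", "deaths", 10),
    ("sector_website", "economic_loss", None),
    ("unstructured", "economic_loss", 3.5e6),
]
print(resolve(candidates))  # {'deaths': 10, 'economic_loss': 3500000.0}
```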

- (3) Technical Implementation for Graph Storage

The cleansed and fused data are organized into multiple structured CSV files according to the predefined ontological schema. Subsequently, utilizing the Neo4j graph database’s LOAD CSV command and executing pre-written Cypher scripts, nodes are created in batches, node properties are set, and semantic relationships (e.g., hasAnomaly, hasImpact) are established, thereby efficiently persisting the integrated knowledge system into the graph storage in a structured manner.
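The batch import can be sketched as one generated Cypher statement per ontology class and per relationship type. The helper names, the CSV file names, and the `impact_id` key column are hypothetical; `LOAD CSV WITH HEADERS`, `MERGE`, `MATCH`, and `SET` are standard Cypher, and by default Neo4j resolves `file:///` URLs against its import directory. `MERGE` rather than `CREATE` keeps re-imports idempotent when the same key appears again.

```python
def node_load_cypher(csv_file, label, key, properties):
    """Build a LOAD CSV statement that upserts one node per CSV row.

    `properties` maps column name -> Cypher cast function (or None to keep
    the string). LOAD CSV yields every field as a string, so numeric
    attributes need toInteger()/toFloat().
    """
    assignments = ", ".join(
        f"n.{col} = {cast}(row.{col})" if cast else f"n.{col} = row.{col}"
        for col, cast in properties.items()
    )
    lines = [
        f"LOAD CSV WITH HEADERS FROM 'file:///{csv_file}' AS row",
        f"MERGE (n:{label} {{{key}: row.{key}}})",
    ]
    if assignments:  # an empty SET clause would be invalid Cypher
        lines.append(f"SET {assignments}")
    return "\n".join(lines)


def relation_load_cypher(csv_file, src, src_key, rel, dst, dst_key):
    """Build a LOAD CSV statement that links existing nodes by key columns."""
    return (
        f"LOAD CSV WITH HEADERS FROM 'file:///{csv_file}' AS row\n"
        f"MATCH (a:{src} {{{src_key}: row.{src_key}}})\n"
        f"MATCH (b:{dst} {{{dst_key}: row.{dst_key}}})\n"
        f"MERGE (a)-[:{rel}]->(b)"
    )


print(node_load_cypher("impact.csv", "ImpactInformation", "impact_id",
                       {"deaths": "toInteger", "economic_loss": "toFloat",
                        "affected_area": "toFloat"}))
print(relation_load_cypher("event_impact.csv", "DisasterEvent", "event_id",
                           "hasImpact", "ImpactInformation", "impact_id"))
```

Loading all nodes before any relationships ensures that every `MATCH` in the relationship statements finds its endpoints.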

2.4. Core Innovation: Remote Sensing Early Warning Knowledge Generation and Conversion Mechanism

2.4.1. Quantitative Methods for Remote Sensing Evaluation Indicators

This study decomposes complex early warning knowledge elements into three core dimensions (intensity, impact, and trend), each evaluated with a different mathematical model. For surface anomaly intensity assessment, the study moves beyond the limitations of traditional single indicators and proposes a multi-scale weighted comprehensive evaluation method [48,49].

Specifically, a mathematical model for the Land Surface Anomaly Intensity Index (LSAI) was constructed:

$$\mathrm{LSAI} = \mathrm{PI} \otimes \mathrm{SI} \otimes \mathrm{OI} \otimes \mathrm{LI}$$

where LSAI is Land Surface Anomaly Intensity, PI is Pixel Intensity, SI is Structure Intensity, OI is Object Intensity, LI is Landscape Intensity. The computation of these constituent components (PI, SI, OI, LI) follows established methodologies in remote sensing anomaly characterization, which involve analyzing spectral changes, texture variations, and object- and landscape-level spatial patterns. The integration via the operator ⊗ represents a weighted fusion based on regression analysis of historical cases.

Weight allocation for each indicator was determined through regression analysis of historical disaster cases, with pixel- and structure-level indicators often playing dominant roles. Higher weights for pixel- and structure-level indicators may be due to significant changes in object tone and texture caused by events such as landslides, while object- and landscape-level indicators represent comprehensive information at grid scale, resulting in more continuous anomaly extents with smaller variations in aspect ratio, patch count, and anomaly area proportion across grids. Thus, object- and landscape-level indicators receive lower weights than pixel- and structure-level indicators. For example, in landslide monitoring, pixel-level NDVI changes and structure-level texture variations often have greater discriminative power than regional statistical features.
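The exact form of ⊗ and the regression target are not specified. A minimal sketch, assuming ⊗ is a linear weighted sum and that each historical case supplies its four component scores together with a reference intensity value, fits the weights by ordinary least squares with NumPy’s `linalg.lstsq`. The function names are hypothetical, clipping negative weights is a crude assumption, and the weights used to generate the synthetic cases are illustrative, chosen only to mirror the pixel- and structure-level dominance described above.

```python
import numpy as np


def fit_weights(components, reference):
    """Fit weights for columns [PI, SI, OI, LI] by ordinary least squares.

    Negative coefficients are clipped to zero (an assumption) and the
    result is rescaled to sum to 1.
    """
    w, *_ = np.linalg.lstsq(components, reference, rcond=None)
    w = np.clip(w, 0.0, None)
    return w / w.sum()


def lsai(components, weights):
    """Linear weighted fusion used here as a stand-in for the ⊗ operator."""
    return components @ weights


# Synthetic "historical cases": 50 cases x 4 component scores in [0, 1].
rng = np.random.default_rng(0)
cases = rng.random((50, 4))
true_w = np.array([0.4, 0.3, 0.2, 0.1])  # illustrative weights only
reference = cases @ true_w                # noise-free reference intensities
w = fit_weights(cases, reference)
print(np.round(w, 3))  # [0.4 0.3 0.2 0.1]
print(lsai(np.array([0.8, 0.6, 0.2, 0.1]), w))
```

With noise-free synthetic data the fit recovers the generating weights exactly; with real cases, residuals and the stability of the weights across disaster types would need to be checked.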

For surface anomaly impact assessment, the study utilizes basic information from remotely sensed images (type, extent, intensity, etc.) and prior knowledge of surface conditions under normal circumstances (cover type, feature distribution, etc.) to evaluate the impact of surface anomalies on the environment, personnel, and property, estimating losses (number of affected people, property value, environmental damage). A conceptual model for surface anomaly impact assessment is proposed, describing impact using both an impact index and impact values:

The impact index characterizes the degree to which an individual is affected, ranging from 0 to 1, calculated as:

where ‘PriorKnowledge’ represents prior knowledge about the type and distribution of affected objects under normal conditions (minimizing dependency). Impact values describe quantities such as population size, property value, and ecological value, using ‘Densityobject’ to represent the distribution and density properties of affected object types (e.g., quantity, value). The formula is:
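One plausible reading of the index/value distinction, offered as an assumption rather than as the authors’ formulas, treats the impact index as a per-cell fraction in [0, 1] and obtains an impact value by weighting it with the density of the affected object type (the role of Densityobject) over the affected area. The function name, grid layout, and example numbers below are hypothetical.

```python
import numpy as np


def impact_value(impact_index, density, cell_area_km2):
    """Aggregate affected quantity over a grid of equal-area cells.

    impact_index: per-cell degree of being affected, in [0, 1]
    density:      per-cell density of the affected object type (e.g. people
                  per km^2 or property value per km^2), i.e. prior knowledge
    Returns sum(index * density) * cell area, an expected count or value.
    """
    impact_index = np.asarray(impact_index, dtype=float)
    density = np.asarray(density, dtype=float)
    if impact_index.shape != density.shape:
        raise ValueError("impact_index and density must have the same shape")
    if np.any((impact_index < 0) | (impact_index > 1)):
        raise ValueError("impact index must lie in [0, 1]")
    return float(np.sum(impact_index * density) * cell_area_km2)


index = [[0.0, 0.5], [1.0, 0.2]]  # illustrative 2 x 2 grid
people_per_km2 = [[100, 200], [50, 0]]
print(impact_value(index, people_per_km2, cell_area_km2=0.25))  # 37.5
```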

For surface anomaly trends, the study adopts a grid dynamics model approach. Under discrete time, space, and state, a grid dynamics model with local spatial interactions and temporal causal relationships is constructed. Building on deep learning-driven grid dynamics models, a method is established for characterizing spatiotemporal changes under multiple driving factors, and multi-branch spatiotemporal prediction networks are explored. Trend characterization factors for surface anomalies are then generated from the grid dynamics models along two lines:

- (1) For typical gaseous surface anomalies, time-series datasets are established based on grid dynamics models, and spectral angle, buffer zone, mathematical morphology, and time-series curve analysis methods are studied to characterize anomaly trends dynamically.
- (2) For typical liquid surface anomalies, time-series datasets are established based on grid dynamics models, and knowledge production methods for dynamic trend characterization factors are developed using time-series analysis.
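The update rules of the (deep learning-driven) grid dynamics model are not given, so the toy cellular automaton below is a deliberately simple stand-in, not the authors’ model. It only illustrates the two properties named above: discrete time, space, and state, and updates driven by local spatial neighbors at the previous time step. The 4-connected neighborhood, the spread threshold, and the function names are assumptions.

```python
import numpy as np


def step(grid, threshold=1):
    """One synchronous update on a binary grid (1 = anomalous, 0 = normal).

    A cell is anomalous at t+1 if it was anomalous at t or if at least
    `threshold` of its four edge neighbors were anomalous at t.
    """
    padded = np.pad(grid, 1)  # zero padding: outside the scene counts as normal
    neighbors = (padded[:-2, 1:-1] + padded[2:, 1:-1]    # above + below
                 + padded[1:-1, :-2] + padded[1:-1, 2:])  # left + right
    return ((grid == 1) | (neighbors >= threshold)).astype(grid.dtype)


def simulate(grid, steps, threshold=1):
    """Run the automaton and return the anomaly area (cell count) per step."""
    areas = [int(grid.sum())]
    for _ in range(steps):
        grid = step(grid, threshold)
        areas.append(int(grid.sum()))
    return areas


grid = np.zeros((7, 7), dtype=int)
grid[3, 3] = 1  # single anomalous seed cell
areas = simulate(grid, steps=3)
print(areas)  # [1, 5, 13, 25]
growth = [b / a for a, b in zip(areas, areas[1:])]
print(growth)  # step-to-step growth ratio, one possible raw input for trend grading
```

A learned model would replace the fixed threshold rule with transition probabilities conditioned on driving factors, but the time-stepped, neighbor-driven structure stays the same.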

2.4.2. Knowledge Conversion Mechanism and Early Warning Rules

Current research on “early warning” and “level” definitions in both industry and remote sensing still faces key issues. Industry-defined “event level” standards primarily assess the severity or impact of disasters or environmental damage events after they occur. Although this aligns broadly with the definition of early warning knowledge, the specific standards differ significantly: definitions vary across industries (some are based on event intensity, others on impact degree, and others on a combination), which leads to a lack of specificity and exclusivity in level classification. As a full-coverage technical means, remote sensing monitoring requires unified monitoring, diagnosis, and warning for various surface anomaly events. This necessitates a severity and impact assessment system based on remote sensing characteristics that applies to multiple anomaly events, ensuring comprehensive and consistent warning level determination. The core issues are the mismatch between the post-event assessment nature of industry standards and the pre-warning requirements of remote sensing monitoring, and the conflict between the specificity of industry standards and the universality of remote sensing monitoring.

Therefore, this study investigates industry classifications for different surface anomaly events and primarily adopts remote sensing methods to evaluate intensity, impact, and trend. It develops a knowledge conversion mechanism from “industry” to “remote sensing” for early warning, establishing definitions and classification rules for the surface anomaly early warning knowledge elements (intensity, impact, trend, and warning issuance). This yields a consistent understanding of surface anomaly levels and early warning knowledge across event types, thereby improving the surface anomaly early warning knowledge graph. This is achieved by defining an industry standard mapping function $L_{\text{industry}} \in \{I_1, I_2, \ldots, I_n\}$ and a feature mapping function $\Phi$ that transforms it into remote sensing observable parameters:

where $P$ represents the industry’s original parameters for intensity, impact, and trend, respectively. This mapping relationship is embodied in a rule base, as shown in Table 2.

The early warning issuance rule (I ≥ 1 OR Im ≥ 1 OR T ≥ 3) was formulated based on the principle of ‘caution for any significant anomaly.’ A value of 1 in Intensity or Impact represents the lowest threshold of a detectable, non-negligible event as defined by sectoral standards (e.g., corresponding to the ‘Blue’ warning level). The stricter threshold for Trend (T ≥ 3) is designed to capture situations where the anomaly’s development momentum indicates a high probability of rapid escalation to a severe level, even if its current intensity or impact is not yet critical. This logic ensures that warnings are triggered not only for present severity but also for impending high risk, enhancing the lead time for response.

3. Results of Remote Sensing Early Warning Knowledge Graph

3.1. Visualization of Knowledge Graph

The remote sensing early warning knowledge graph for natural disasters is a significant application of knowledge graph technology in disaster management. Constructing this knowledge graph provides a unified descriptive framework that encompasses various natural disaster types and their related event data. It integrates anomaly information, impact information, early warning information, and decision-making information, enabling the extraction of key knowledge from massive data. This helps to improve the understanding and management of natural disaster events and enhances the intelligence level of emergency management and decision-making.

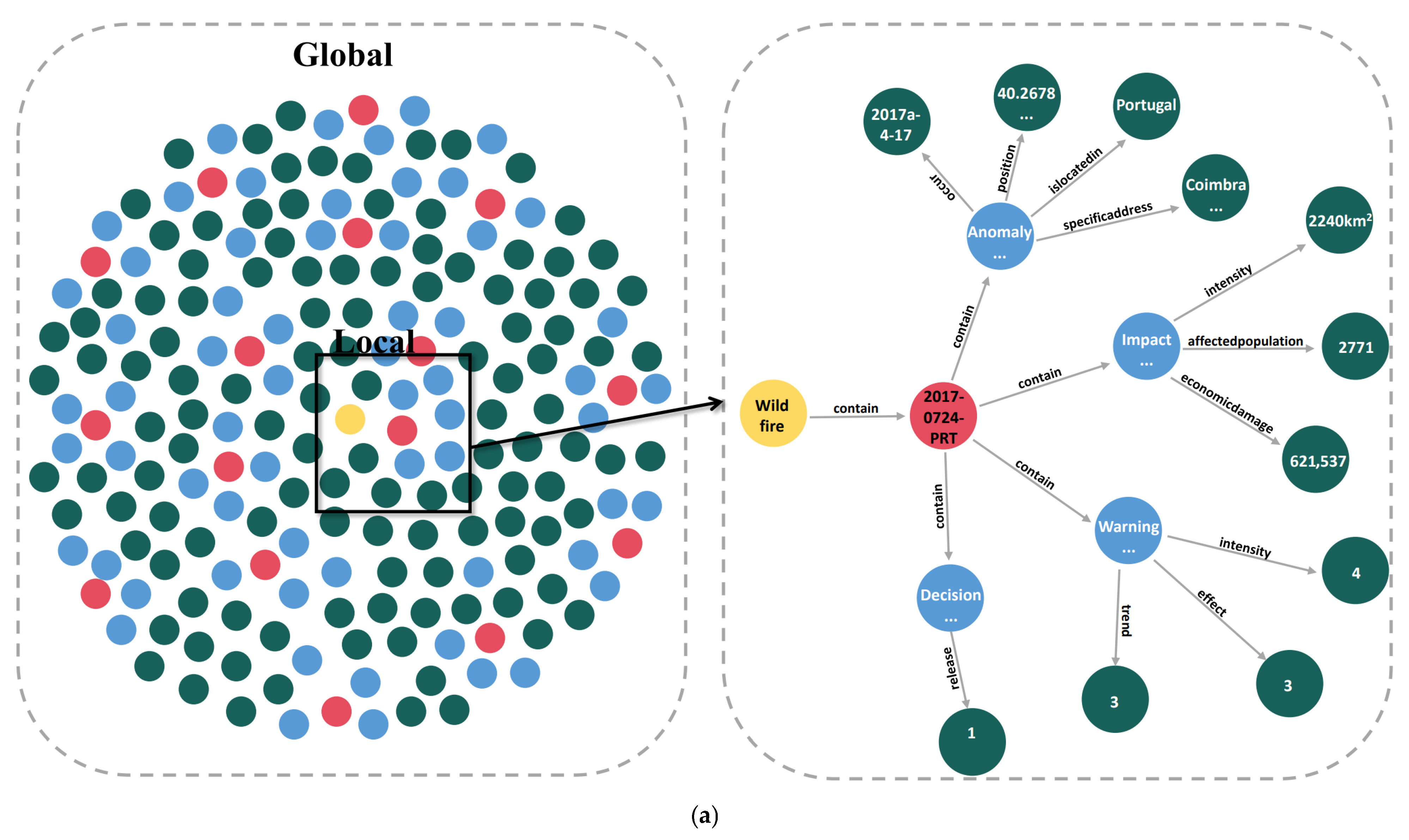

The surface anomaly remote sensing early warning knowledge graph categorizes disasters into six major categories: Geophysical, Hydrological, Meteorological, Climatological, Biological, and Extraterrestrial, covering 17 types of disasters (Figure 2).

Using wildfire disasters as an example, we applied the combined top-down and bottom-up approach. First, we constructed the schema layer for wildfire disasters in a top-down manner, establishing six core elements: disaster type, disaster event, anomaly information, impact information, early warning information, and decision-making information. The resulting wildfire disaster knowledge graph provides a comprehensive and clear description of wildfire disaster events and expresses the relationships between disaster statistics and remote sensing data, forming a unified description of concepts in the wildfire early warning management domain. The graph supports the sharing of domain information and can improve the intelligence of disaster management and the efficiency of early warning responses.

Next, we constructed the database from the bottom up, acquiring multi-source data from EM-DAT, disaster web pages, and the Land Observation Satellite Data Service Platform of the China Resources Satellite Application Center. The EM-DAT database contains 309 wildfire disaster events from 2000 to the present, including information on the occurrence time, location, impact scope, and losses to population and economy. Based on the ontology structure of the schema layer, we performed entity and attribute extraction for wildfire events. We downloaded relevant high-resolution satellite data and calculated the intensity, impact, and trend indicators for the disaster events. According to the constructed knowledge conversion mechanism between industry and remote sensing, we categorized the intensity, impact, and trend into different levels.

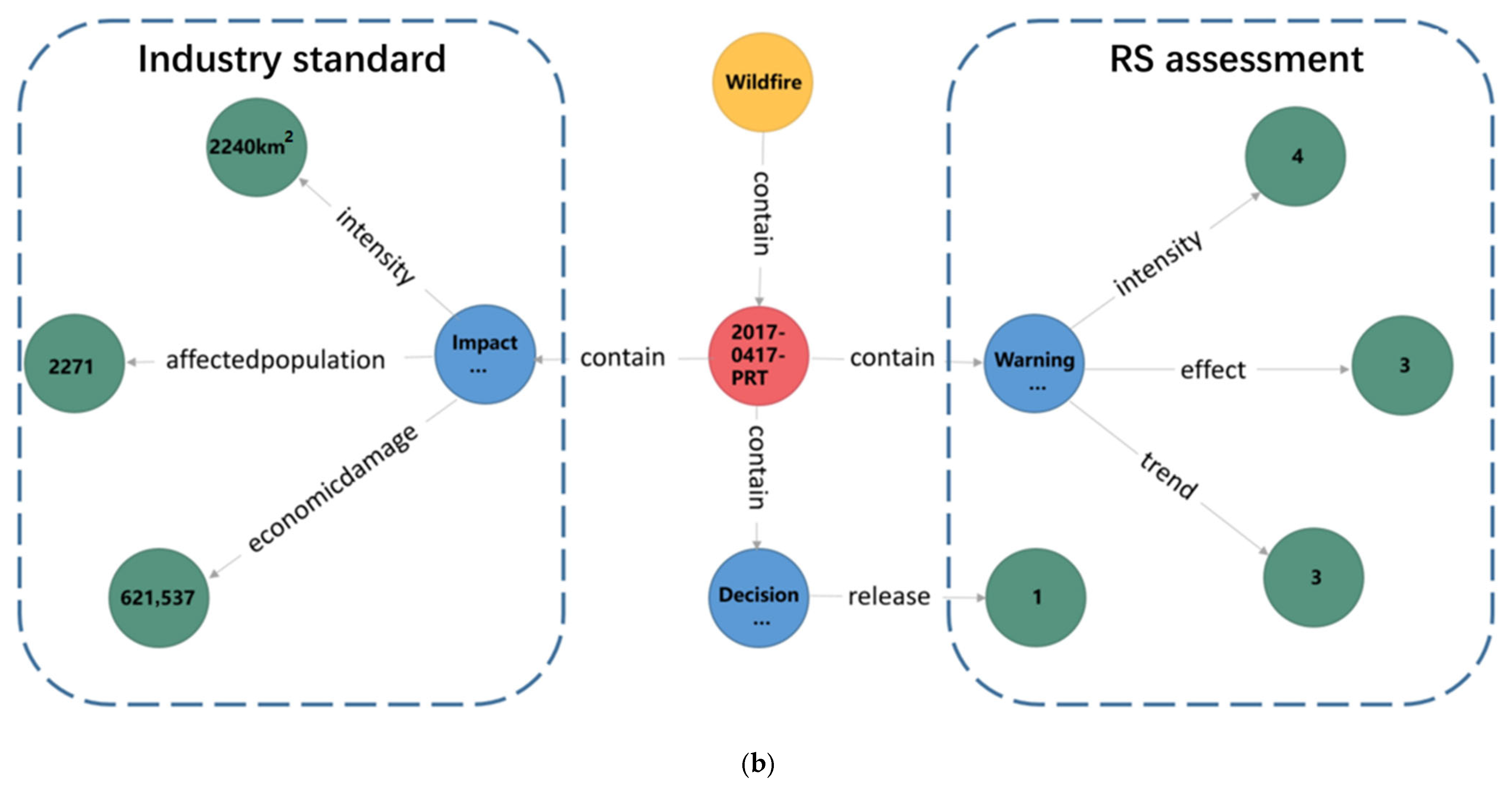

We used the Neo4j graph database to store over 4500 nodes and relationships. A portion of the nodes and relationships in the remote sensing early warning knowledge graph for the wildfire disaster type is shown in Figure 3a, which displays part of the events related to wildfire disasters. The red node represents the forest fire event numbered 2017-0417-PRT; the event name is taken from the EM-DAT database, and each event has a unique identifier. Connected to the “2017-0417-PRT” node are the anomaly information, impact information, early warning information, and decision-making information nodes (in blue). The green nodes represent the specific attributes of each type of information (Figure 3b).

In the anomaly information, the event occurred on 15 October 2017 in Coimbra, Portugal, at 40.2678°N latitude and 8.2713°W longitude. In the impact information, the event affected an area of 2240 km², with 2771 affected people and USD 621,537,000 in economic losses. We collected and processed multispectral data from the Gaofen-2 satellite for this event and used it to test the early warning rule. Quantitative analysis of the imagery yielded an intensity level of 4, an impact level of 3, and a trend level of 3. Under the decision rule established in our methodology (issue a warning if intensity ≥ 1 or impact ≥ 1 or trend ≥ 3), each of the three conditions was satisfied, so the event met the criterion for warning issuance. Consequently, the ‘DecisionInformation’ attribute was set to 1, demonstrating how the rule-based reasoning mechanism operates on a real event. This process is visually summarized in Figure 3b.

By integrating multi-source heterogeneous data, including remote sensing data, disaster statistics, and web resources, the wildfire disaster knowledge graph helps reveal the characteristics, impacts, and evolution patterns of wildfire disaster events. It also supports early warning and decision-making for wildfires, improving the scientific rigor and accuracy of emergency management. Overall, the wildfire disaster knowledge graph contributes to disaster prevention and mitigation, promotes information sharing, and helps optimize early warning responses.

3.2. Querying and Updating of Knowledge Graph

Neo4j provides visualization and retrieval functions for the knowledge graph. In the Cypher query language, the ‘MATCH’ clause is the most basic query clause and the primary means of retrieving data from a Neo4j database: it specifies the graph pattern to search for. For example, querying all wildfire events for which an early warning has been issued, that is, all event nodes whose decision information is set to 1, returns the results shown in Figure 4. The corresponding Cypher query is:

> MATCH (de:DisasterEvent)-[:hasDecision]->(di:DecisionInformation {decision: 1})
> RETURN de.event_name

Additionally, clicking on any event, such as event “2019-0545-AUS,” displays all information about that event (Figure 4).

Beyond querying, the knowledge graph implements a rule-based reasoning engine for early warning decision-making. This reasoning mechanism is directly integrated into the graph’s data structure. For instance, the warning issuance rule defined in Section 2.4.2 (intensity ≥ 1 or impact ≥ 1 or trend ≥ 3) is operationalized as an automated check. When new or updated WarningInformation nodes are inserted into the graph, this rule is evaluated. If the conditions are met, the corresponding DecisionInformation node is automatically created or updated with a value of 1 (warning issued), thereby closing the loop from data to decision.
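How the check is wired into Neo4j is not specified. One possible realization, sketched here, is a single Cypher statement run after each insert or batch load through the official Python driver (`neo4j` package), whose `Session.run(query, parameters)` returns records indexable by key. The `hasWarning` relationship and the `intensity`, `impact`, and `trend` property names are hypothetical; `hasDecision` and `decision` follow the query shown in Section 3.2. The statement assumes one WarningInformation node per event.

```python
# Cypher for the issuance rule. hasWarning and the intensity/impact/trend
# property names are HYPOTHETICAL; hasDecision/decision follow Section 3.2.
WARNING_RULE_CYPHER = """
MATCH (de:DisasterEvent)-[:hasWarning]->(w:WarningInformation)
WHERE $event_ids IS NULL OR de.event_id IN $event_ids
WITH de,
     CASE WHEN w.intensity >= 1 OR w.impact >= 1 OR w.trend >= 3
          THEN 1 ELSE 0 END AS flag
MERGE (de)-[:hasDecision]->(di:DecisionInformation)
SET di.decision = flag
RETURN de.event_id AS event_id, flag
"""


def apply_warning_rule(session, event_ids=None):
    """Evaluate the rule in the database and return {event_id: flag}.

    `session` is expected to behave like a neo4j driver session, i.e. expose
    run(query, parameters) returning records indexable by key. Passing
    event_ids restricts the check to newly inserted or updated events.
    """
    result = session.run(WARNING_RULE_CYPHER, {"event_ids": event_ids})
    return {record["event_id"]: record["flag"] for record in result}


# Usage against a live database (connection details are placeholders):
# from neo4j import GraphDatabase
# with GraphDatabase.driver(uri, auth=(user, password)) as driver:
#     with driver.session() as session:
#         print(apply_warning_rule(session, ["2017-0417-PRT"]))
```

Because `MERGE` reuses an existing `hasDecision` link, re-running the statement after an update overwrites the previous decision instead of duplicating DecisionInformation nodes.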

The remote sensing early warning knowledge graph for natural disasters supports real-time data updates. Data can be inserted into Neo4j’s graph storage either individually or in batches. For the current multi-source heterogeneous data, the knowledge graph can rapidly integrate other disaster databases, Wikipedia, and similar data sources by converting them into a unified standard format and organizing them in a graph structure. The graph is also extensible, accommodating data that change and grow over time. Real-time updates allow the knowledge graph to reflect the latest disaster situation quickly, helping emergency management departments obtain accurate disaster information without delay. This timeliness is particularly critical for sudden-onset disasters such as earthquakes, floods, and wildfires, where it helps decision-makers track disaster trends and changes and can thereby help reduce the losses and impacts caused by disasters.
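Batch ingestion of heterogeneous records can be sketched as a normalization step followed by chunking. In this minimal sketch, the source names and field names are hypothetical placeholders (only `event_name` is taken from the query above), and each batch is assumed to be passed as the `$rows` parameter of a Cypher `UNWIND ... MERGE` statement, a common Neo4j bulk-loading pattern; the database call itself is omitted.

```python
from itertools import islice

# Hypothetical per-source field mappings onto one unified schema.
FIELD_MAPS = {
    "database_a": {"id": "event_name", "type": "hazard_type", "yr": "year"},
    "web_b": {"title": "event_name", "category": "hazard_type", "year": "year"},
}

# Statement each batch could be sent with (not executed in this sketch).
BATCH_CYPHER = (
    "UNWIND $rows AS row "
    "MERGE (e:DisasterEvent {event_name: row.event_name}) "
    "SET e += row"
)


def normalize(record: dict, source: str) -> dict:
    """Rename source-specific fields to the unified schema and tag the source."""
    mapping = FIELD_MAPS[source]
    unified = {target: record[field] for field, target in mapping.items() if field in record}
    unified["source"] = source
    return unified


def batches(rows, size):
    """Yield consecutive lists of at most `size` rows."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


raw = [
    ({"id": "event-A", "type": "wildfire", "yr": 2019}, "database_a"),
    ({"title": "event-B", "category": "flood", "year": 2020}, "web_b"),
    ({"id": "event-C", "type": "earthquake", "yr": 2021}, "database_a"),
]
rows = [normalize(rec, src) for rec, src in raw]
print([len(b) for b in batches(rows, 2)])  # [2, 1]
```

Using `MERGE` on the event name means re-ingesting an updated record modifies the existing node instead of creating a duplicate, which suits the real-time update scenario described above.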

Figure 4.

Querying events in the knowledge graph with decision information of 1.


4. Discussion

4.1. Core Contributions and Limitations

Current research on knowledge graphs in remote sensing has largely overlooked the dynamic generation and formal representation of early warning knowledge. A central challenge stems from the fact that severity classifications for disaster events often vary across industries—some rely primarily on physical intensity metrics, others on socioeconomic impact, and still others on hybrid criteria. This inconsistency complicates the integration of sector-specific data with remote sensing observations, which itself requires a unified framework for anomaly assessment to ensure consistent monitoring, diagnosis, and warning processes.

In response, this study establishes a knowledge conversion mechanism between domain-specific standards and remote sensing-based evaluations. We introduced consistent definitions and grading criteria for four core elements of early warning—intensity, impact, trend, and warning issuance—enabling a harmonized understanding of disaster severity and supporting integrated warning decisions. This approach enhances existing remote sensing knowledge graphs through the structured incorporation of early warning semantics. That said, the proposed classification rules remain preliminary and warrant further validation through larger-scale empirical tests and iterative refinement in operational settings.

Compared to existing disaster knowledge graph research, our work demonstrates several distinctive advantages:

Dynamic Knowledge Generation vs. Static Representation: While many existing KGs (e.g., Ma et al. [34] and Ji et al. [30]) primarily serve as static repositories of historical disaster facts or predefined relationships, our RSEW-KG embeds quantitative remote sensing models to dynamically generate warning knowledge from real-time or near-real-time data streams. This enables the graph to evolve beyond a passive knowledge base into an active decision-support tool.

Explicit Semantic Bridging Between Domains: Prior studies (e.g., Li et al. [20]) have highlighted the challenge of multi-source data fusion but often lack a formal mechanism for converting between heterogeneous semantic spaces. Our study explicitly addresses this “semantic gap” by proposing a rule-based mapping function (Equation (4)) that translates continuous remote sensing indices (e.g., LSAI) into discrete, impact-based industry warning levels (Table 2). This provides a reproducible and transparent interoperability platform that is largely absent in earlier KG implementations for disaster monitoring [39,40].

Operationalizable Warning Reasoning with Quantitative Rules: Unlike KG frameworks that support only semantic querying or rely on static rules (e.g., Riedel et al. [23]), our system integrates quantitative assessment models (for intensity, impact, and trend) with explicit, operational warning rules (e.g., intensity ≥ 1 or impact ≥ 1 or trend ≥ 3). This closes the loop from data acquisition to warning decision, a capability that is still underdeveloped in current spatiotemporal KGs for disaster prediction [22,34].
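The threshold-based translation from a continuous index to discrete levels can be sketched as below. The cut points are hypothetical placeholders, not the calibrated values of Table 2 or the exact form of Equation (4); the sketch also assumes that a higher LSAI indicates a more severe anomaly, that higher levels are more severe, and that a value equal to a cut point is assigned to the higher level.

```python
from bisect import bisect_right

# HYPOTHETICAL ascending cut points; replace with the calibrated thresholds.
LSAI_CUTS = [0.2, 0.4, 0.6]


def lsai_to_level(lsai: float, cuts=LSAI_CUTS) -> int:
    """Map a continuous LSAI value to a discrete level 0..len(cuts).

    bisect_right counts the cut points <= lsai, so a value exactly on a
    cut point falls into the higher level.
    """
    return bisect_right(cuts, lsai)


print([lsai_to_level(x) for x in (0.1, 0.2, 0.5, 0.9)])  # [0, 1, 2, 3]
```

Keeping the cut points in a sorted list, separate from the mapping logic, makes the conversion transparent and easy to recalibrate for other regions or hazard types.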


However, several limitations related to data foundations warrant careful consideration. First, the accuracy and accessibility of underlying data directly affect the reliability of assessments. For instance, global disaster databases (e.g., EM-DAT) may exhibit reporting biases, with systematic under-reporting of small-scale events or those in remote regions. Similarly, statistical data on economic losses can be incomplete or inconsistently compiled across jurisdictions. Such data gaps and inaccuracies propagate through our knowledge graph, potentially leading to underestimation of impact or miscalibration of warning rules. Second, the timeliness of data acquisition remains an operational challenge. While satellite constellations improve observational frequency, the latency between image acquisition, processing, and entity fusion can still hinder truly real-time warning issuance. This is particularly critical for rapidly evolving disasters such as flash floods or wildfires, where even short delays can significantly reduce mitigation opportunities. These data-related constraints—spanning quality, coverage, and latency—form a fundamental boundary for the current system’s operational effectiveness and decision-making confidence.

While the constructed knowledge graph successfully integrates multi-source disaster data and supports intuitive visual querying, its reasoning is currently limited to explicit, rule-based warning checks; it lacks support for more general knowledge reasoning. This limitation impedes the system’s ability to infer implicit relationships, anticipate event evolution, or autonomously generate new insights, all of which are essential for advanced decision support.

4.2. Considerations on Data Resolution and Timeliness

Two additional considerations that impact the practical application of our framework deserve mention. First, the spatial resolution of remote sensing imagery inherently influences assessment granularity. While our quantitative indicators are conceptually scalable, higher-resolution data (e.g., GF-2) enables finer-scale damage detection, whereas coarser-resolution data (e.g., MODIS) may be limited to monitoring large-scale phenomena. Second, data timeliness directly affects early warning efficacy. Dependence on optical imagery entails latency due to acquisition schedules and cloud cover, whereas integrating all-weather SAR data could improve responsiveness but requires further adaptation. These factors represent important boundary conditions for operational deployment.

Furthermore, the geographical applicability of the proposed knowledge conversion rules (e.g., Table 2) warrants careful consideration. The current rules, exemplified by the wildfire case, are primarily calibrated based on case studies and industry standards from specific regions (e.g., China and Portugal). The applicability of these thresholds and the weighting of indicators in the LSAI model (Equation (1)) may vary across different geographical contexts due to factors like ecosystem types (e.g., boreal vs. tropical forests), infrastructure density, and regional socio-economic characteristics. Future work should focus on validating and adapting these rules for a wider range of geographical settings to enhance the framework’s global robustness.

4.3. Preliminary Performance Evaluation

A preliminary evaluation was conducted to assess system performance. Query efficiency tests using 10 complex Cypher queries showed an average response time of 128 ms (maximum: 350 ms), indicating responsiveness suitable for interactive use. Validation on 50 historical wildfire events yielded an accuracy of 73.2%, a precision of 68.5%, a recall of 81.0%, and an F1-score of 74.3%. Because recall exceeds precision, the system favors detecting events at the cost of some false alarms.
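As a consistency check on the reported metrics, the F1-score is the harmonic mean of precision and recall. Recomputing it from the rounded values gives about 74.2%, close to the reported 74.3%; a difference of this size can arise because the precision and recall are themselves rounded.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


print(f"{f1_score(0.685, 0.810):.4f}")  # 0.7423
```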

Compared to traditional methods relying on sparse ground sensors, historical statistics, and manual reports—which often suffer from limited coverage, delayed response, and subjectivity—our framework provides synoptic spatial coverage, objective quantitative assessment, and near real-time monitoring capability through formal integration of multi-source data within a unified ontological schema.

5. Summary and Prospect

This study developed a remote sensing early warning knowledge graph encompassing 17 types of natural disasters, achieving effective integration of multi-source heterogeneous data—including remote sensing imagery, structured databases, and literature resources. The graph incorporates key semantic elements such as anomaly, impact, warning, and decision information, providing a unified framework for disaster knowledge representation. Through visualized interrelations between disaster events and attributes, users can intuitively explore complex disaster scenarios. Furthermore, by introducing remote sensing-derived quantitative assessments of disaster intensity, impact, and trend, the study establishes a knowledge conversion mechanism between industrial standards and remote sensing data, thereby facilitating data-driven warning decisions.

Looking ahead, several promising directions are envisioned to advance the capabilities and applications of the remote sensing early warning knowledge graph:

Advancing Graph Reasoning for Complex Disaster Chains: Future work will focus on enhancing the graph’s reasoning capabilities to infer implicit relationships and model complex disaster cascades (e.g., earthquake-induced landslides). While the current system effectively represents explicit associations, future research will develop a unified, ontology-driven rule inference framework. This framework will integrate spatio-temporal reasoning with domain-specific physical models (e.g., for assessing post-earthquake landslide susceptibility based on seismic intensity, slope, and lithology) to automatically deduce multi-hazard risks. The key challenge lies in creating a rule schema that maintains universality while ensuring scientific rigor across different disaster types—an endeavor requiring deep collaboration with domain experts.

Enhancing Graph Reasoning Capabilities: Future research could adopt methods based on Graph Neural Networks (GNNs) to capture complex relationships between nodes and edges in the knowledge graph. By utilizing feature extraction and representation learning of the graph structure, we can enhance the reasoning capabilities of the knowledge graph. Techniques such as Graph Convolutional Networks (GCN) and Graph Attention Networks (GAT) can be employed to process the complex structural information within the graph.
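As a point of reference for the GCN option, the standard layer-wise propagation rule of a graph convolutional network is shown below, where $A$ is the adjacency matrix of a graph with $N$ nodes, $H^{(l)}$ is the node feature matrix at layer $l$ (with $H^{(0)}$ the input features), $W^{(l)}$ is a trainable weight matrix, and $\sigma$ is a nonlinearity such as ReLU. This basic form treats all edges as a single relation type; respecting typed relations such as hasDecision would require a relational variant.

```latex
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I_N,
\qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
```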

Implementing Interactive Features: Future research should also focus on implementing interactive features in the knowledge graph, making it accessible not only to professionals but also to non-experts, thus serving as a powerful tool for science popularization. This includes improving the knowledge graph’s question-and-answer system, enabling users to query and interact through natural language to obtain the information they need. By providing an intuitive user interface and user-friendly interactive design, the knowledge graph can help the public understand and respond to natural disasters, thereby enhancing disaster awareness and mitigation capabilities across society. This interactivity not only aids in the dissemination and popularization of knowledge but also increases the practical value and impact of the knowledge graph in real-world applications.