Few-Shot Class-Incremental SAR Target Recognition with a Forward-Compatible Prototype Classifier

Highlights

- We propose a Forward-Compatible Prototype Classifier (FCPC) by emphasizing the model’s forward compatibility to continually learn new concepts from limited samples without forgetting the previously learned ones.

- A Nearest-Class-Mean (NCM) classifier is proposed for prediction by comparing the semantics of unknown targets with prototypes of all classes based on the cosine criterion.

- The proposed method continually learns new concepts from limited samples while retaining previously learned ones, which improves SAR ATR capability.

- The proposed method equips DL-based SAR ATR systems with Few-Shot Class-Incremental Learning (FSCIL) ability, as required in real-world SAR ATR scenarios.

Abstract

1. Introduction

- We explored the FSCIL problem in the SAR ATR field and designed an FCPC framework to further improve the model’s forward compatibility with unpredictable targets in the dynamic world before and after deployment.

- We designed a Virtual-class Semantic Synthesizer (VSS) for virtual-class synthesis based on real-class semantics, a Decoupled Margin Adaptation (DMA) strategy that lets our method evolve continually, and an NCM classifier for general prediction.

- We demonstrated the state-of-the-art performance of the FCPC against several advanced benchmarks on three FSCIL datasets derived from SAR ATR datasets.

2. Related Works

2.1. SAR Target Recognition

2.2. Few-Shot Class-Incremental Learning

2.3. FSCIL of SAR ATR

3. Materials and Methods

3.1. Problem Statement

3.2. Motivations

- Forward-Compatible ability means that a DL-based SAR ATR system can not only represent targets of known categories but can also incorporate new concepts rapidly once optimization has taken place. According to the Attributed Scattering Center (ASC) theory, target information in SAR imagery can be regarded as a specific composition of various basic scattering parts, e.g., dihedrals, trihedrals, and cylinders. Furthermore, deep target semantics can be seen as collections of various convolutional activations, which implicitly represent high-level class-agnostic structures. Hence, an SAR ATR system with strong forward compatibility should be able to capture diverse cues of incoming targets.

- Stable-Discriminating ability means that an SAR ATR system can accurately identify targets of different classes captured in diverse scenarios and postures. In contrast to the rich and stable target cues in optical images, only target-specific structures and parts can be observed in SAR images; owing to the particular SAR imaging mechanism, these provide sparse and inconsistent information. Unlike single target instances, prototypes derived from the average of target features at diverse postures can provide class-related information in a general and stable way for better identification.

3.3. Overall Framework

- At the base learning stage, a CNN-based feature extractor is trained on sufficient data to learn an embedding space that identifies base classes while extracting general patterns of unknown ones. Motivated by the dynamic real-world SAR ATR scenarios and the prominent partiality of targets in SAR imagery, the model’s forward compatibility with unknown targets is promoted by a Virtual-class Semantic Synthesizer (VSS), in which numerous virtual classes with soft labels are synthesized from pre-encoded real-class embeddings. After both real and virtual classes are post-encoded and the model is optimized with a cross-entropy (CE) loss, a well-spanned embedding space with base-class prototypes is obtained.

- At incremental learning stages, where new-class data continually appear with few-shot samples, the model’s forward compatibility is dynamically released by a Decoupled Margin Adaptation (DMA) strategy that fine-tunes only the high-level semantic parameters. As a result, the similarity of few-shot novel-class samples to their own class prototypes and their discrepancy from the prototypes of other classes are both improved effectively. After optimization, the prototypes of novel classes are obtained for further inference.

- For inference at session t, given the general patterns provided by class prototypes, a Nearest-Class-Mean (NCM) classifier identifies unknown targets by comparing their semantic features with the prototypes of all classes.

3.4. Forward Compatibility

3.4.1. Virtual-Class Semantic Synthesizer

- Virtual Class Generation (VCG): Exploiting the rich and diverse target parts in the pre-encoded features, numerous virtual classes are synthesized by mixing up real-class semantic parts within a batch of data. In Algorithm 1, the larger the number of repetitions M, the more diverse the components of the generated virtual classes; the synthesized virtual-class instances are saved for training. In Equation (1), a mixing coefficient is sampled from a distribution to control the overlap between real and virtual classes. Notably, instead of synthesizing targets in the input space, the intermediate spatial manifolds of different categories produced by the pre-encoder are adopted, owing to their distinctly component-aware characteristics and limited background interference.

- Soft Label (SL): The more mixing cues the virtual labels carry, the more diversified the connections the model can learn from the virtual samples. Therefore, instead of assigning new one-hot labels to virtual targets, a Gaussian soft-labeling function of the mixing coefficient, given by Equation (2), is designed to generate soft supervision. In particular, the labels for virtual targets reach their highest values when the mixing coefficient reaches 0.5.

Algorithm 1. The procedure of VSS.
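The two VSS steps described above (mixing real-class features into virtual classes, then assigning Gaussian soft labels) can be illustrated with a minimal NumPy sketch. It is not the authors' Algorithm 1, whose details are not reproduced in this text. Several choices are assumptions made only for illustration: linear mixing of feature maps, a Beta(`alpha`, `alpha`) distribution for the mixing coefficient, one virtual class per unordered pair of real classes, and the rule that the label mass not assigned to the virtual class is split between the two source classes. The function names and the parameters `alpha`, `sigma`, and `repeats` are hypothetical.

```python
import numpy as np

def pair_index(a, b, num_real):
    """Index of the virtual class for the unordered real-class pair (a, b) (assumed layout)."""
    a, b = min(a, b), max(a, b)
    return num_real + a * num_real - a * (a + 1) // 2 + (b - a - 1)

def gaussian_weight(lam, sigma=0.2):
    """Gaussian soft-label weight that peaks at 1.0 when lam == 0.5 (assumed form)."""
    return float(np.exp(-((lam - 0.5) ** 2) / (2.0 * sigma ** 2)))

def synthesize_virtual(feats, labels, num_real, repeats=2, alpha=2.0, sigma=0.2, seed=0):
    """Mix feature maps of different real classes within one batch.

    feats:  (B, C, H, W) pre-encoded feature maps
    labels: (B,) integer real-class labels in [0, num_real)
    Returns virtual features and soft labels over real + virtual classes.
    """
    rng = np.random.default_rng(seed)
    num_total = num_real + num_real * (num_real - 1) // 2
    mixed, soft = [], []
    for _ in range(repeats):                      # more repeats -> more diverse mixtures
        perm = rng.permutation(len(feats))
        for i, j in enumerate(perm):
            a, b = int(labels[i]), int(labels[j])
            if a == b:                            # only mix samples of different classes
                continue
            lam = rng.beta(alpha, alpha)          # assumed sampling distribution
            mixed.append(lam * feats[i] + (1.0 - lam) * feats[j])
            w = gaussian_weight(lam, sigma)
            y = np.zeros(num_total)
            y[pair_index(a, b, num_real)] = w     # virtual-class share, highest at lam = 0.5
            y[a] += (1.0 - w) * lam               # remaining mass goes to the source classes
            y[b] += (1.0 - w) * (1.0 - lam)
            soft.append(y)
    if not mixed:
        return np.empty((0,) + feats.shape[1:]), np.empty((0, num_total))
    return np.stack(mixed), np.stack(soft)
```

Each soft label sums to one, so it can be used directly as the target of a cross-entropy loss over the real and virtual classes.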

3.4.2. Decoupled Margin Adaptation

- Decoupled Adaptation: As the target cues provided by few-shot samples are extremely rare and unstable, directly tuning all parameters of the model can easily lead to overfitting. In response, a decoupled adaptation (DA) strategy divides the model’s parameters into low-level and high-level parameters according to their layers. During incremental learning, only the high-level parts containing abstract information are tuned, while the low-level ones carrying general cues are frozen, balancing the representation of class-agnostic patterns against rapid adaptation to class-specific patterns.

- Prototype Margin Loss: Given the rare and unstable target cues provided by few-shot instances, a Prototype Margin Loss (PML) is designed to update the model’s parameters only when necessary, avoiding inappropriate adaptation. Unlike the cross-entropy (CE) loss, the PML ensures robust feature learning under limited data. Equation (4) involves the deep embeddings of a novel class and the parameterized prototype of that class. Additionally, another class prototype, selected among those with the top J nearest distances, is used for comparison. The cosine distance d is adopted because of its strong connection to azimuth-aware target structures, as demonstrated in [29]. The parameter m controls the discrepancy margin. By minimizing the PML, compact intraclass feature spaces and well-separated interclass feature spaces can be learned.
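To make the margin idea concrete, the sketch below implements one plausible form of a prototype margin loss in NumPy. It is an assumption-laden illustration, not the paper's Equation (4): a hinge on the cosine distance to the sample's own prototype versus the distance to each of the `top_j` nearest other prototypes, with nearness measured from the sample embedding. The function names and the default values of `margin` and `top_j` are hypothetical.

```python
import numpy as np

def cosine_distance(x, protos):
    """Cosine distance 1 - cos(x, p) between one embedding and every prototype row."""
    x = x / (np.linalg.norm(x) + 1e-12)
    p = protos / (np.linalg.norm(protos, axis=1, keepdims=True) + 1e-12)
    return 1.0 - p @ x

def prototype_margin_loss(embeddings, targets, protos, margin=0.2, top_j=2):
    """Hinge-style margin loss against the top-J nearest non-target prototypes (assumed form)."""
    losses = []
    for x, c in zip(embeddings, targets):
        d = cosine_distance(x, protos)
        others = np.delete(np.arange(len(protos)), c)
        nearest = others[np.argsort(d[others])[:top_j]]   # hardest competing classes
        # The loss is zero once the own prototype is closer by at least `margin`,
        # so well-placed samples trigger no parameter update.
        losses.append(np.maximum(0.0, d[c] - d[nearest] + margin).mean())
    return float(np.mean(losses))

protos = np.eye(3)
print(prototype_margin_loss(np.array([[1.0, 0.0, 0.0]]), [0], protos))  # 0.0
```

The hinge is what gives the "update only when necessary" behaviour described in the text: a sample that already satisfies the margin contributes no gradient.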

3.5. Nearest-Class-Mean Classifier
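As described in the overall framework, inference compares the semantic features of an unknown target with the prototypes of all classes under the cosine criterion. A minimal NumPy sketch of such an NCM classifier follows; it assumes prototypes are plain per-class feature means and that features are already extracted as vectors. The function names are hypothetical and the code is not taken from the paper.

```python
import numpy as np

def class_prototypes(features, labels):
    """Return the sorted class ids and the mean feature vector (prototype) of each class."""
    classes = np.unique(labels)
    protos = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, protos

def ncm_predict(queries, classes, protos):
    """Assign each query to the class whose prototype has the highest cosine similarity."""
    q = queries / (np.linalg.norm(queries, axis=1, keepdims=True) + 1e-12)
    p = protos / (np.linalg.norm(protos, axis=1, keepdims=True) + 1e-12)
    return classes[np.argmax(q @ p.T, axis=1)]

features = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
labels = np.array([0, 0, 1, 1])
classes, protos = class_prototypes(features, labels)
print(ncm_predict(np.array([[2.0, 0.1], [0.2, 3.0]]), classes, protos))  # [0 1]
```

In an incremental session, the prototypes of novel classes would simply be appended to `classes` and `protos`, so no classifier weights need to be retrained.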

4. Results

4.1. Dataset Preparation

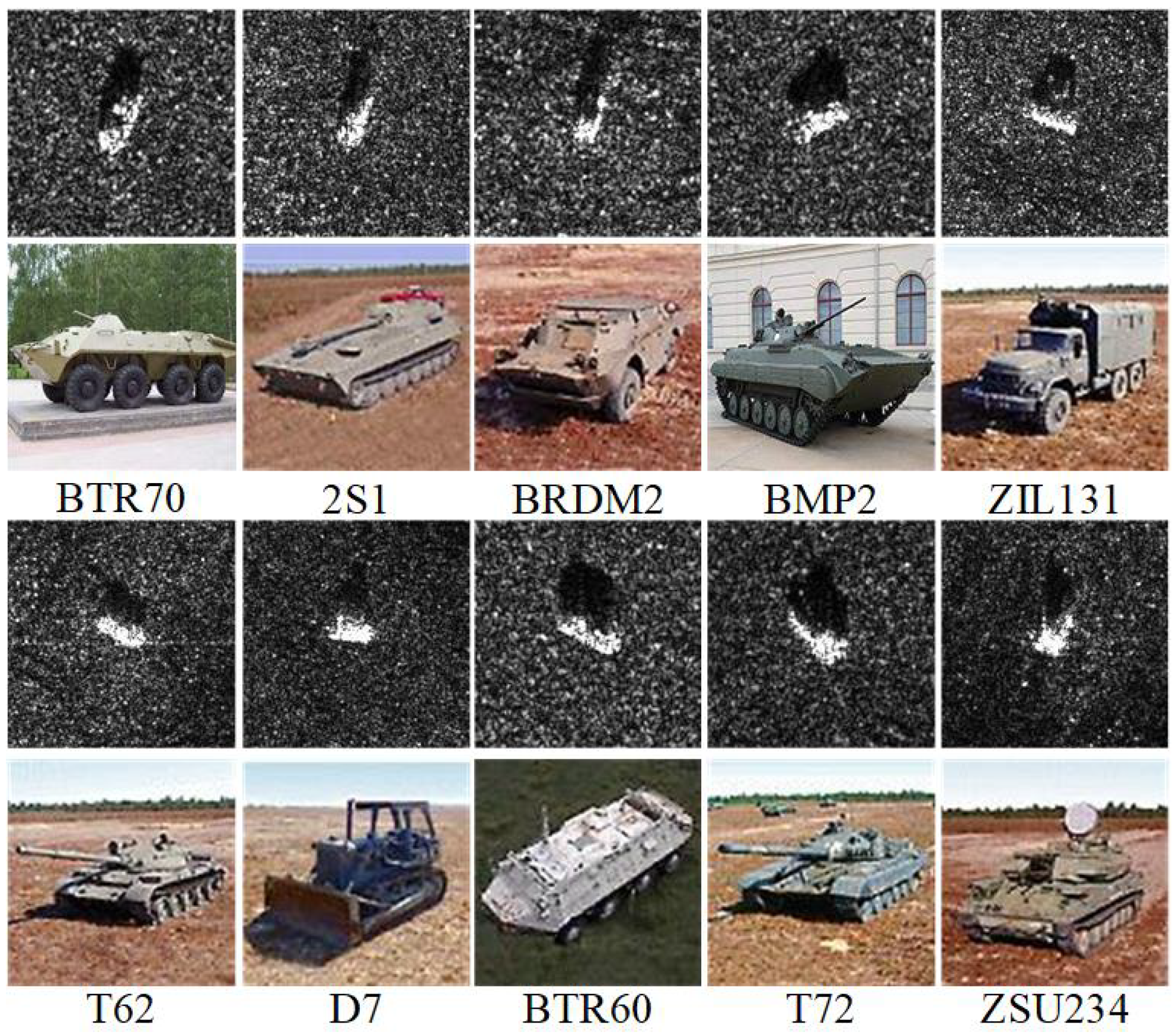

4.1.1. MSTAR-FSCIL

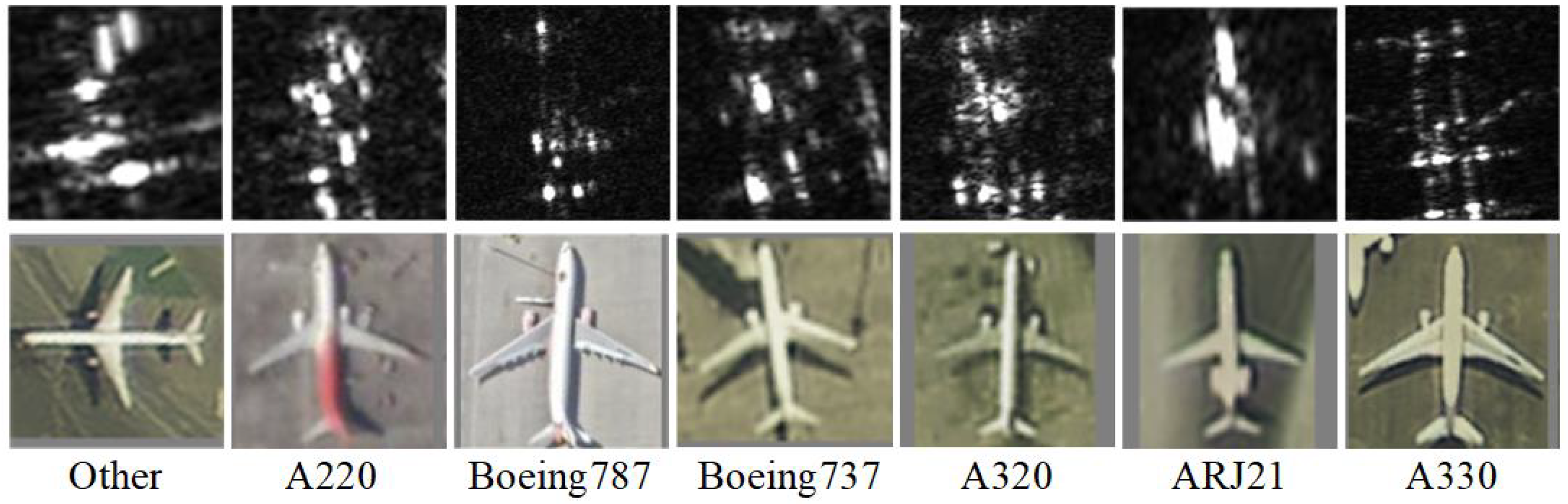

4.1.2. SAR-AIRcraft-FSCIL

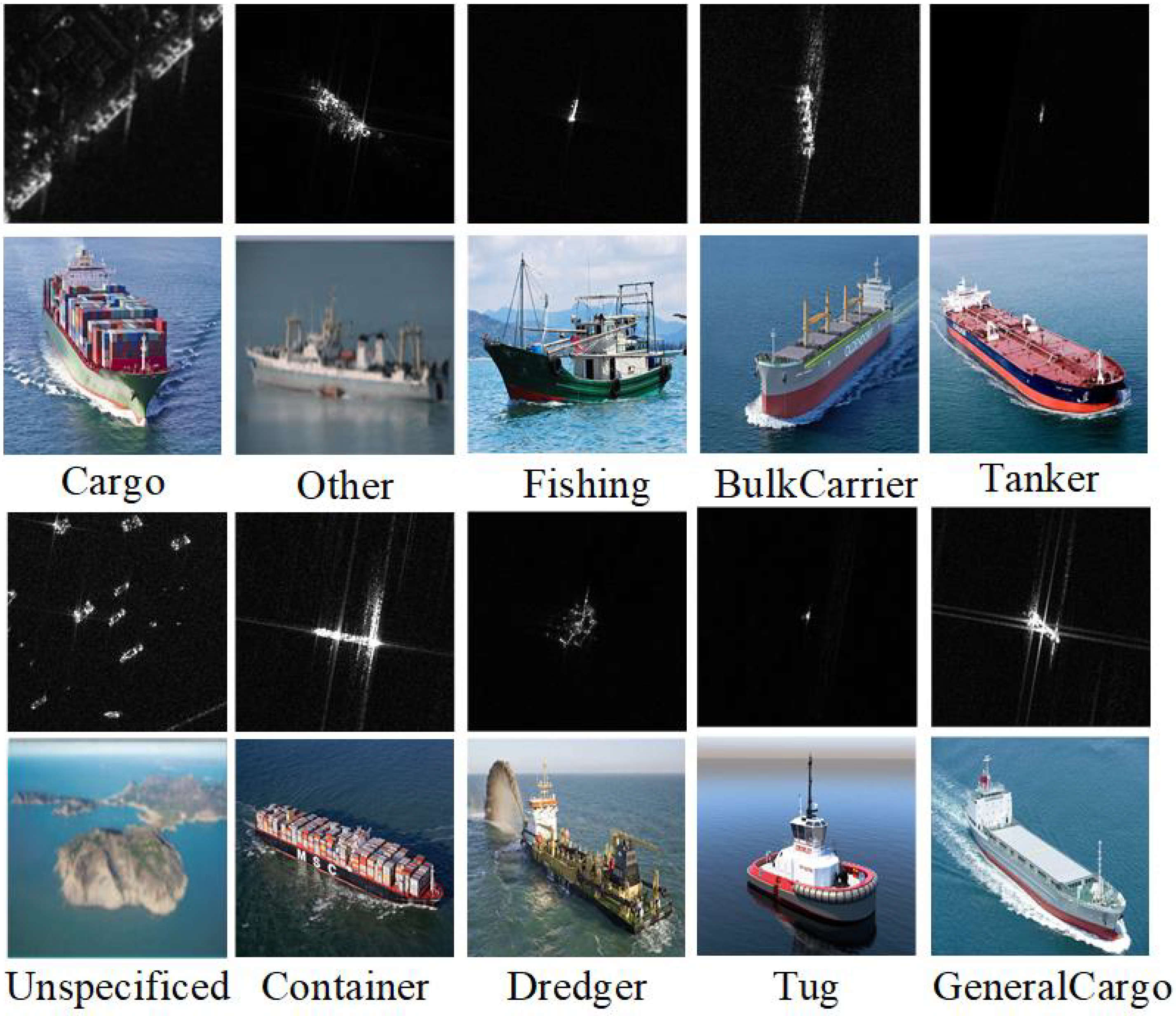

4.1.3. FUSAR-FSCIL

4.2. Implementation Details

4.3. Evaluation Metrics and Benchmarks

4.4. Ablation Study

4.4.1. Effects of VSS

- Quantitative performance: The contributions of the two submodules, namely the VCG and the SL, are verified progressively. Compared with the baseline in the first row of Table 4, our method with the VCG achieves 79.28%, 71.26%, and 35.51% in Avg Acc, Avg HA, and PD, respectively (second row). The corresponding results for the method with the SL are 77.64%, 65.11%, and 36.40% (third row). Finally, as indicated in the fourth row, the scores of our method with the VSS, i.e., with both the VCG and the SL, reach 82.49%, 76.59%, and 32.83%. These results are 7.23%, 15.22%, and 8.42% better than the baseline, respectively, demonstrating the effectiveness of the VSS and its submodules.

- Qualitative performance: The feature diversity and significance are given in Figure 5. The former is quantified by non-sparsity, measured as the ratio of non-zero feature channels to the total number of channels. The latter is represented by the L2-norm, which corresponds to the overall magnitude of the target features. Larger values correspond to more diverse and more important features. Here, our method, facilitated by the VCG and the SL, achieves progressively more competitive scores in non-sparsity (Figure 5a) and L2-norm (Figure 5b). Notably, the most diverse features are captured when both the VCG and the SL are used. More intuitively, the t-SNE embeddings of class features extracted by our method with the VSS are shown in Figure 6. Compared with the baseline, features extracted by our method with the VSS are distributed more uniformly, implicitly reflecting the diverse and rich target cues it acquires.
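The two feature statistics defined above are simple to compute. The following NumPy sketch assumes each target is represented by one feature vector with a value per channel (for example after global pooling, which is an assumption here); the function names are hypothetical.

```python
import numpy as np

def non_sparsity(features, eps=0.0):
    """Per-sample ratio of non-zero feature channels to the total number of channels."""
    return (np.abs(features) > eps).mean(axis=1)

def l2_norm(features):
    """Per-sample L2-norm, i.e., the overall magnitude of the feature vector."""
    return np.linalg.norm(features, axis=1)

features = np.array([[0.0, 3.0, 4.0, 0.0],
                     [1.0, 0.0, 0.0, 0.0]])
print(non_sparsity(features))  # [0.5  0.25]
print(l2_norm(features))       # [5. 1.]
```

Averaging either quantity over a test set gives a single score per model variant, which is one way such values could be compared across ablation settings.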

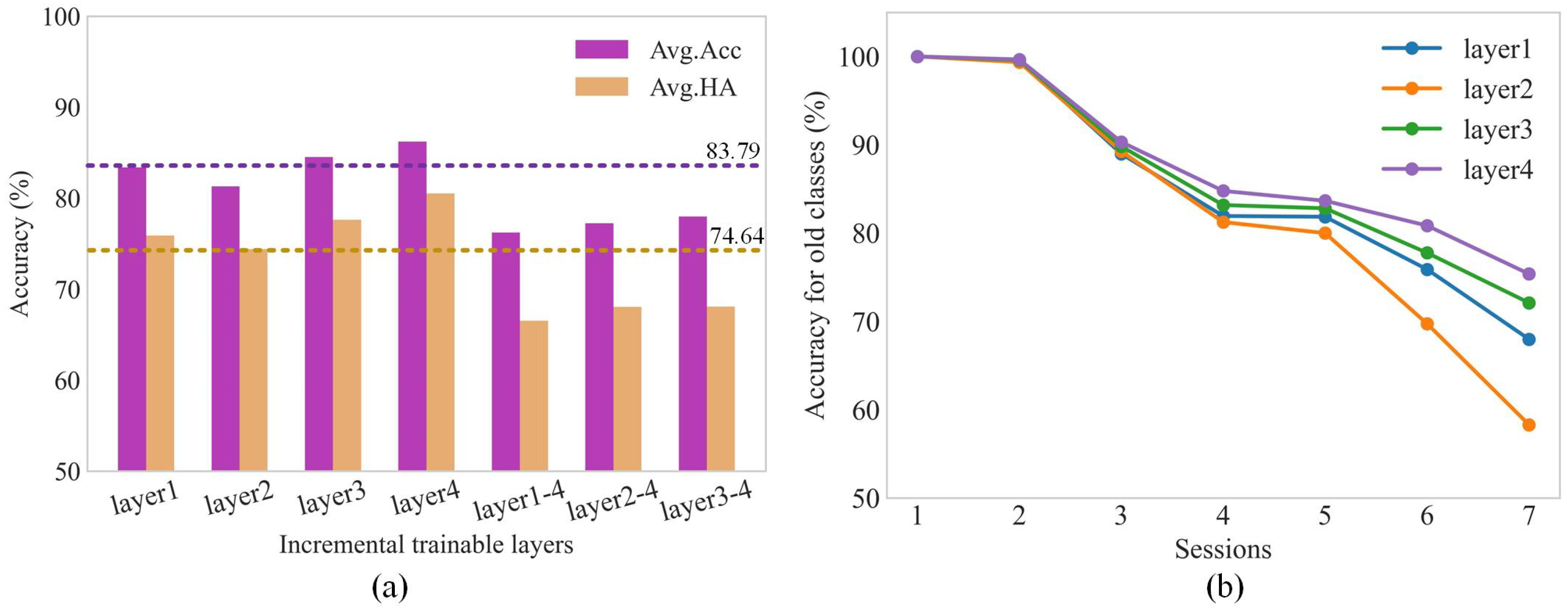

4.4.2. Effects of DMA

- DA: The DA is employed to balance the model’s stability for class-agnostic knowledge and its plasticity for class-specific knowledge by leveraging the hierarchical features from different convolutional layers. As shown in the fifth row of Table 4, our method with the DA achieves more competitive results compared to the version without this module, with Avg Acc, Avg. HA, and PD values of 82.75%, 77.64%, and 32.50%, respectively. Additionally, the effects of varying the locations of the DA are explored in Figure 7a; the method with fewer and deeper trainable parameters achieves higher performance in terms of Avg Acc and Avg HA compared to configurations with shallower and more trainable layers. Furthermore, as demonstrated in Figure 7b, the model with the trainable layer4 achieves the best performance in classifying old classes at each session. This is attributed to its ability to balance static extraction of low-level general features and dynamic adaptation for class-aware semantic features, resulting in lower drifts in old-class features compared to other variants.

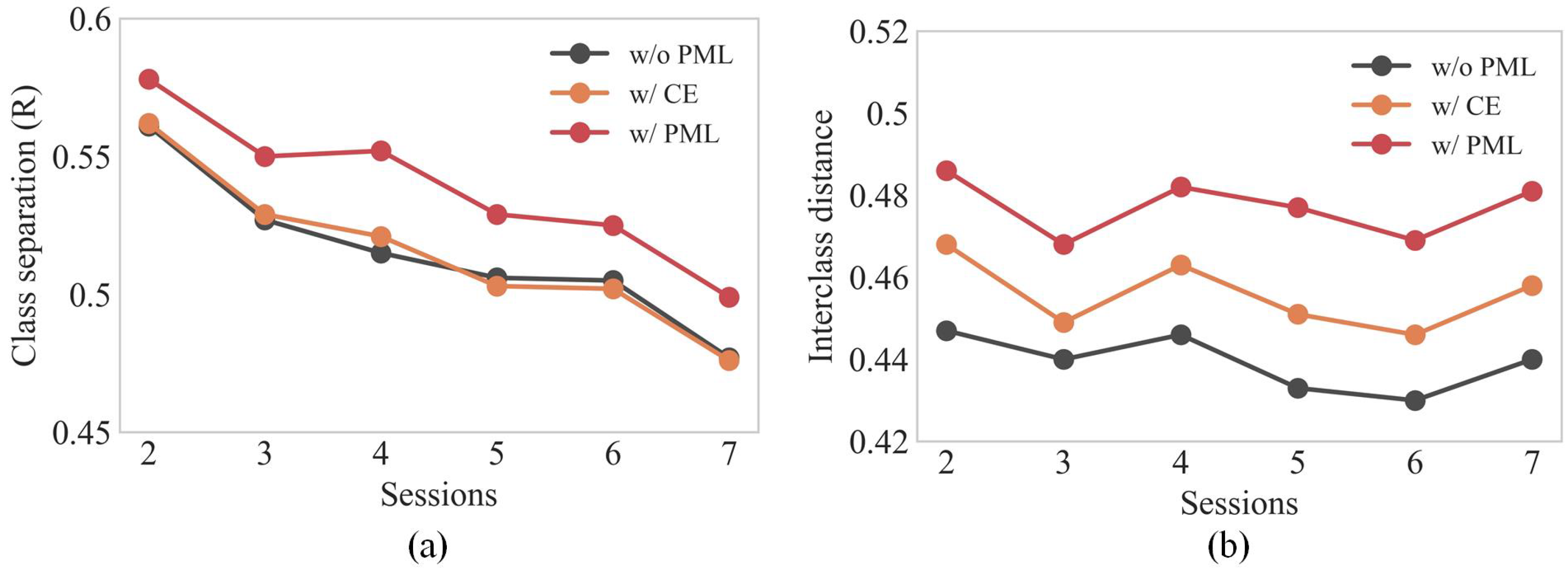

- PML: The PML guarantees our method’s proper adaptability to incoming targets; its contribution is shown in the last row of Table 4. Notably, the Avg Acc, Avg HA, and PD of our method with the PML reach 83.03%, 78.13%, and 32.15%, which are 0.28%, 0.49%, and 0.35% better than those of the method without the PML (fifth row), demonstrating the effectiveness of the module. Furthermore, session-wise class separations (R) [40] and interclass distances are investigated in Figure 8a. Here, the R scores among all classes learned by our method with the PML are consistently higher than those of the methods without the module or with the CE loss across all sessions. In addition, as shown in Figure 8b, benefiting from the clear margin constraint that the PML imposes between target samples and selected class weights, the interclass distances learned with the PML are significantly larger than those learned without it or with the CE loss. Hence, clear class separations can be learned with the PML.
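The decoupled adaptation examined above amounts to choosing which parameters stay frozen and which remain trainable during incremental sessions. The sketch below shows that partition on parameter names only, in plain Python. It assumes ResNet-style names in which the deepest block is called `layer4` (the name used in the text); every other name in the example list is hypothetical, as is the function `split_parameters`.

```python
def split_parameters(param_names, trainable_prefixes=("layer4",)):
    """Split parameter names into (frozen, trainable) by layer-name prefix."""
    prefixes = tuple(trainable_prefixes)
    trainable = [n for n in param_names if n.startswith(prefixes)]
    frozen = [n for n in param_names if not n.startswith(prefixes)]
    return frozen, trainable

# Hypothetical parameter names of a ResNet-style backbone.
names = [
    "conv1.weight",
    "layer1.0.conv1.weight",
    "layer2.0.conv1.weight",
    "layer3.0.conv1.weight",
    "layer4.0.conv1.weight",
    "layer4.1.conv2.weight",
]
frozen, trainable = split_parameters(names)
print(trainable)  # ['layer4.0.conv1.weight', 'layer4.1.conv2.weight']
```

In a deep-learning framework, the `frozen` group would have gradient updates disabled while only the `trainable` group is passed to the optimizer; passing more prefixes (for example `("layer3", "layer4")`) corresponds to the shallower variants compared in Figure 7a.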

4.5. Benchmark Performance

4.5.1. Quantitative Evaluation

- Firstly, owing to the techniques specially designed to enhance forward compatibility and stable discriminability, our method achieves competitive performance in terms of Avg Acc compared to other benchmarks. On the MSTAR-FSCIL dataset, the Avg Acc of our method reaches 83.03%, surpassing Oracle, SAVC, and CPL by 4.35%, 4.83%, and 4.47%, respectively. Similarly, on the SAR-AIRcraft-FSCIL dataset, our method outperforms Oracle, SAVC, and CPL by 6.64%, 4.30%, and 0.86%, respectively. On the FUSAR-FSCIL dataset, the Avg Acc of our method reaches 60.40%, which is 2.12% higher than the second-ranked solution, demonstrating the effectiveness of our method in coping with the FSCIL problem in the SAR ATR field.

- Secondly, our method effectively addresses the catastrophic forgetting issue, resulting in competitive performance on the PD metric. Specifically, our method achieves PD scores of 32.15% and 27.57% on the MSTAR-FSCIL and SAR-AIRcraft-FSCIL datasets, respectively. Unlike most IL and FSCIL methods, which commonly rely on less-forgetting losses and replay strategies to mitigate forgetting, our approach with the DMA strategy balances static feature representation and dynamic class adaptation, overcoming the limitations of the sparse and ambiguous information within limited samples.

- Thirdly, our method achieves competitive generalization ability in solving the FSCIL problem in comparison to public benchmarks. For further validation, a combined dataset with ten sessions (one base + nine incremental) is constructed by combining the MSTAR-FSCIL and SAR-AIRcraft-FSCIL datasets. Methods are first trained on the base classes of the two datasets and then optimized incrementally on the novel classes of the MSTAR-FSCIL and the SAR-AIRcraft-FSCIL. The results are given in Table 8. Our method achieves an average accuracy (Avg Acc) of 77.40%, which is 0.67% higher than the second-ranked solution (SAVC), verifying its strong generalization ability for continual learning across diverse categories.
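The Avg Acc and PD values quoted above can be reproduced from the per-session accuracies listed in the benchmark tables. The sketch below assumes that Avg Acc is the arithmetic mean over sessions and that PD is the first-session accuracy minus the last-session accuracy; both assumptions match the tabulated values. The function names are hypothetical.

```python
def average_accuracy(session_acc):
    """Mean accuracy over all sessions (Avg Acc)."""
    return sum(session_acc) / len(session_acc)

def performance_drop(session_acc):
    """Accuracy drop from the first (base) session to the last session (PD)."""
    return session_acc[0] - session_acc[-1]

# Per-session accuracies (%) of the proposed method, taken from the benchmark tables.
fcpc_mstar = [99.47, 94.48, 85.28, 82.10, 78.31, 74.26, 67.32]
fcpc_aircraft = [99.37, 91.09, 79.15, 71.80]

print(round(average_accuracy(fcpc_mstar), 2), round(performance_drop(fcpc_mstar), 2))        # 83.03 32.15
print(round(average_accuracy(fcpc_aircraft), 2), round(performance_drop(fcpc_aircraft), 2))  # 85.35 27.57
```

A lower PD indicates less forgetting, which is why the PD columns in the tables are marked with a downward arrow.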

4.5.2. Qualitative Evaluation

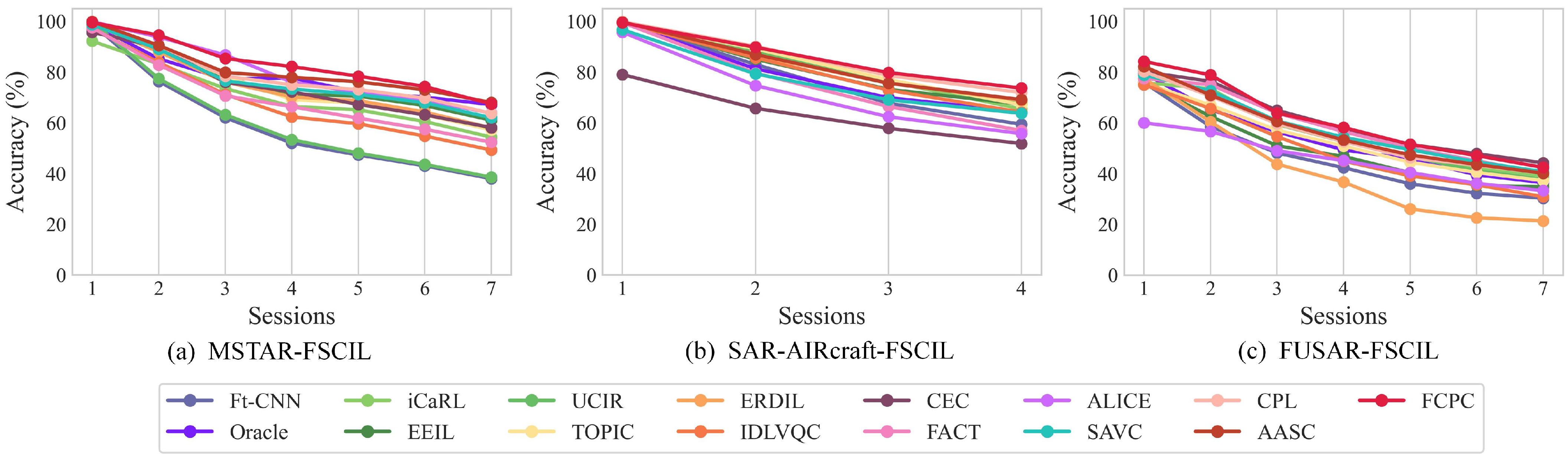

- Session performance curves: The accuracy (Acc) line charts for all compared methods evaluated on the three datasets are illustrated in Figure 9a–c. Overall, our method with the designed modules consistently achieves the most competitive Acc across all sessions. Furthermore, the richer and clearer the target-discriminating cues from abundant base classes, the stronger the forward compatibility that can be obtained with our method. For instance, the MSTAR-FSCIL dataset, which contains more diverse target components provided by its full-azimuth targets, strengthens our method’s forward compatibility. Consequently, our method obtains more competitive performance on the MSTAR-FSCIL dataset than on the SAR-AIRcraft-FSCIL dataset.

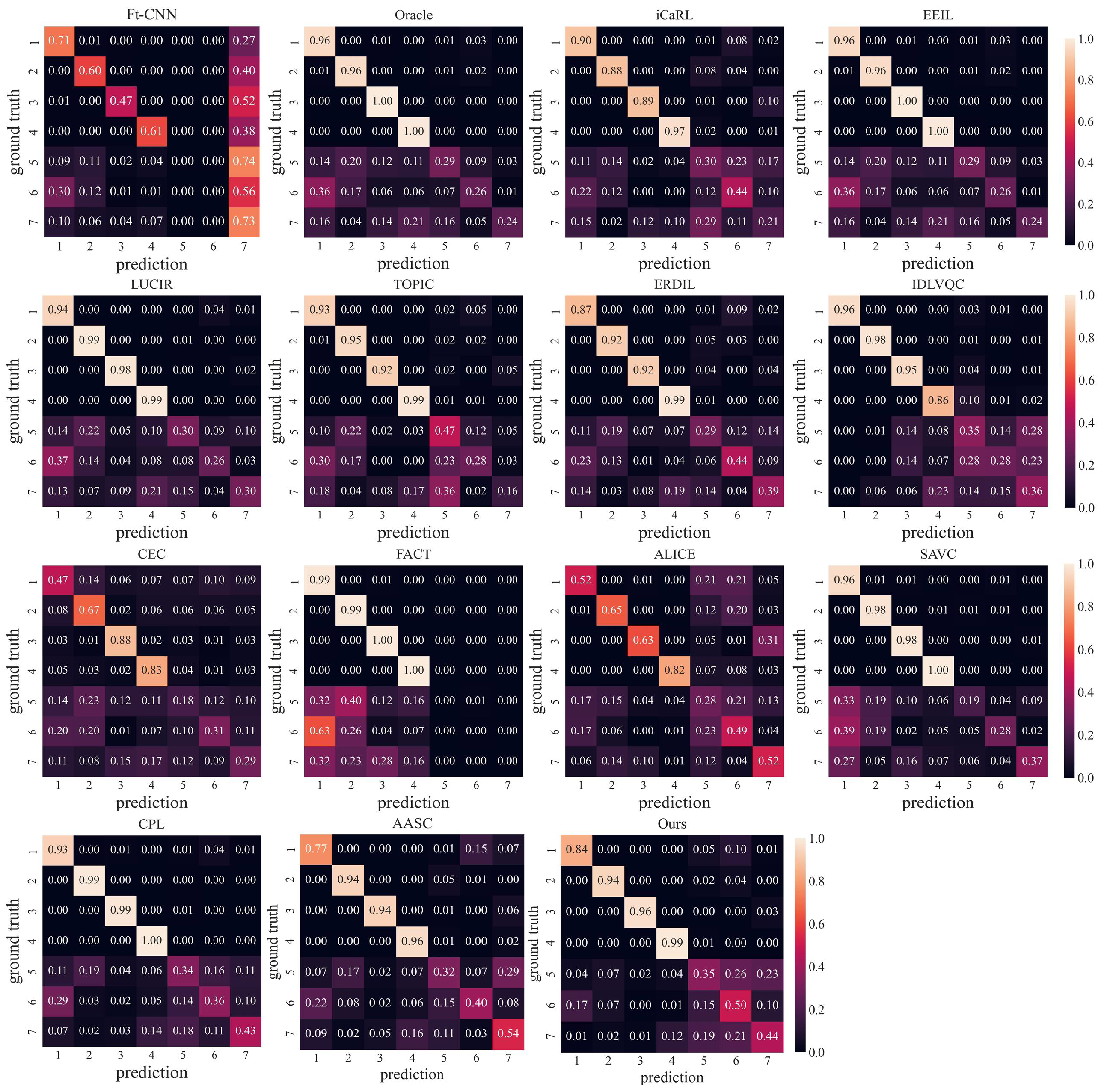

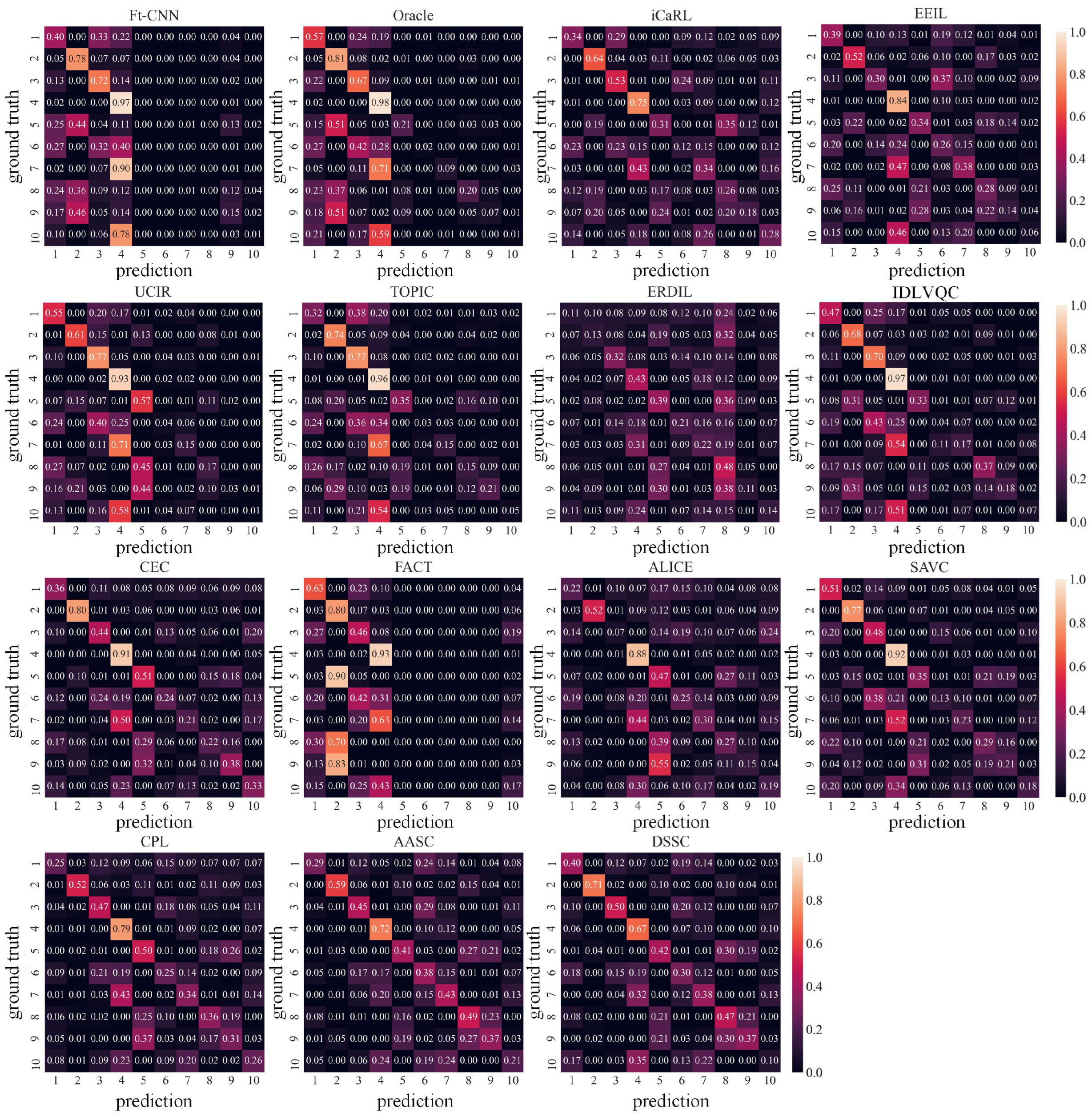

- Confusion matrix: The normalized confusion matrices on the final session of the three datasets are shown in Figure 10, Figure 11 and Figure 12, respectively. Overall, benefiting from the emphasis on both the model’s forward compatibility with incoming classes and its stable discriminability based on limited samples, the diagonal blocks of the matrices predicted by our method, for both base and incremental classes, are more uniform and brighter than those of the other benchmarks. For example, most approaches perform well on base classes but fail to recognize the new ones, yielding biased confusion matrices. In addition, the clearer and more discerning the cues provided by limited samples, the more competitive and balanced the results the compared benchmarks can reach. For example, the compared benchmarks generally perform better on the MSTAR-FSCIL dataset than on the others, thanks to its more distinct target cues under ideal conditions compared with those of the SAR-AIRcraft-FSCIL.

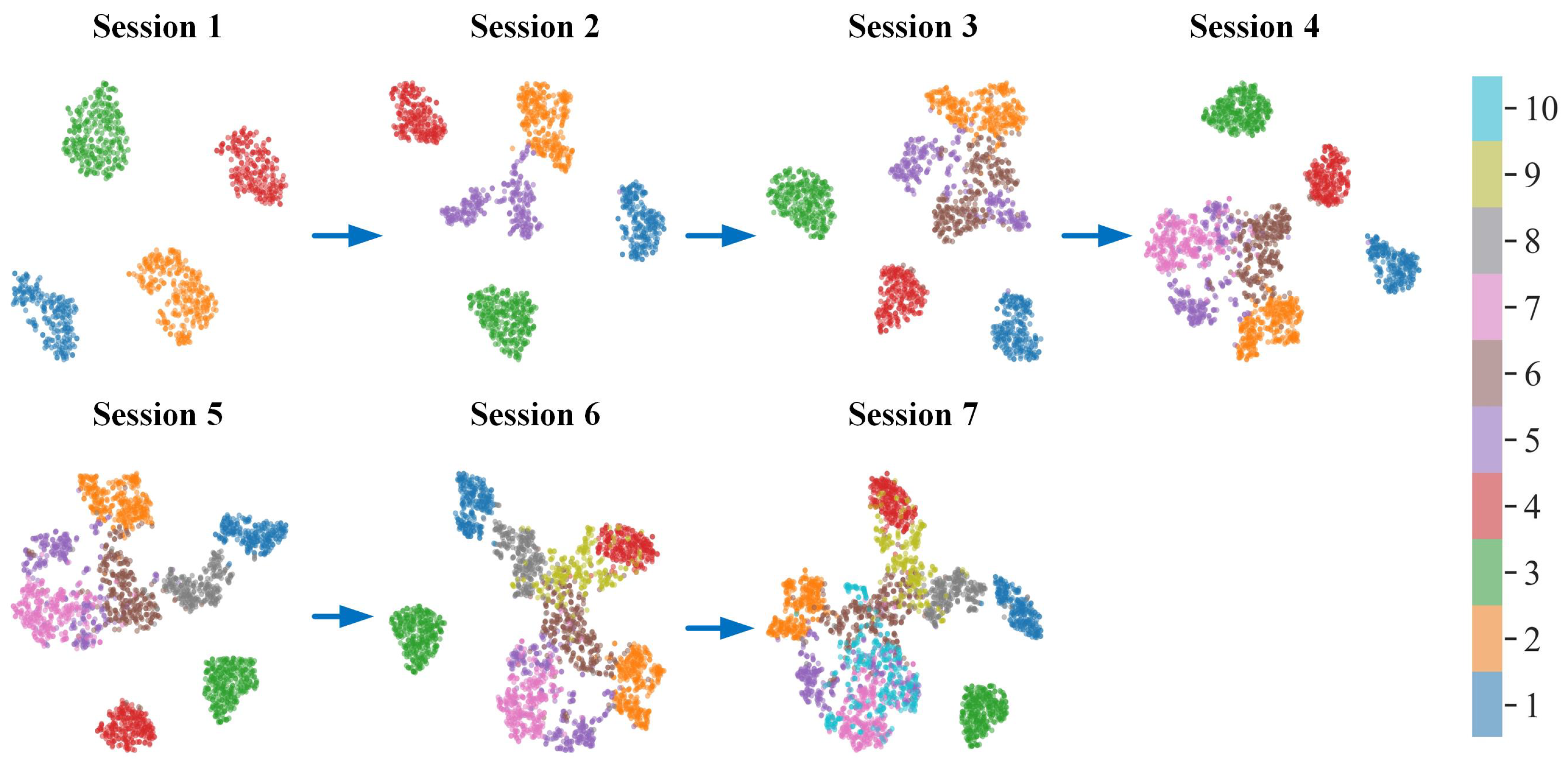

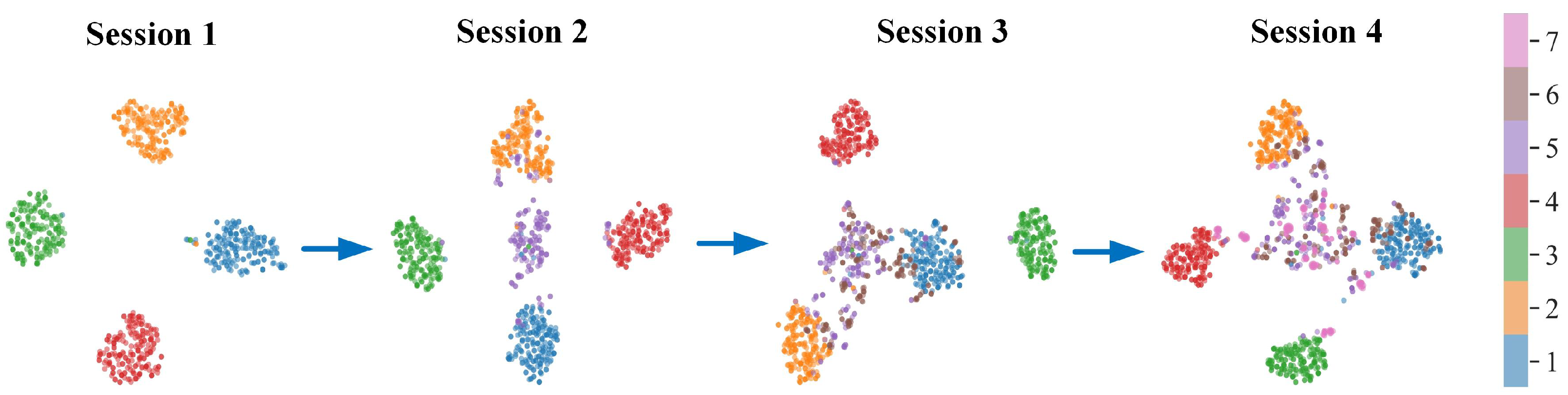

- Session-wise t-SNE results: Considering the limited testing samples of the FUSAR-FSCIL dataset, t-SNE visualization is conducted only on the MSTAR-FSCIL and SAR-AIRcraft-FSCIL datasets, and the results are shown in Figure 13 and Figure 14, respectively. First, our method, with its special consideration of forward compatibility, can reserve more space for new classes, leading to more separated distributions of interclass high-dimensional features than those produced by the baseline. Second, the clearer the target-discerning cues provided by new-class samples, the better the distinguishing ability our method can possess. As shown in Figure 13 and Figure 14, the t-SNE results for new classes in the MSTAR-FSCIL are more separated than those in the SAR-AIRcraft-FSCIL. Specifically, with our method the interclass features are more separated while the intraclass ones are more compact, verifying the importance of unleashing the model’s forward compatibility before deployment.

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yadav, R.; Nascetti, A.; Azizpour, H.; Ban, Y. Unsupervised flood detection on SAR time series using variational autoencoder. Int. J. Appl. Earth Obs. Geoinf. 2024, 126, 103635.

- Hou, X.; Bai, Y.; Xie, Y.; Ge, H.; Li, Y.; Shang, C.; Shen, Q. Deep collaborative learning with class-rebalancing for semi-supervised change detection in SAR images. Knowl.-Based Syst. 2023, 264, 110281.

- Liu, L.; Fu, L.; Zhang, Y.; Ni, W.; Wu, B.; Li, Y.; Shang, C.; Shen, Q. CLFR-Det: Cross-level feature refinement detector for tiny-ship detection in SAR images. Knowl.-Based Syst. 2024, 284, 111284.

- Shang, R.; He, J.; Wang, J.; Xu, K.; Jiao, L.; Stolkin, R. Dense connection and depthwise separable convolution based CNN for polarimetric SAR image classification. Knowl.-Based Syst. 2020, 194, 105542.

- Zhao, Y.; Zhao, L.; Liu, Z.; Hu, D.; Kuang, G.; Liu, L. Attentional Feature Refinement and Alignment Network for Aircraft Detection in SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5220616.

- Chen, S.; Wang, H.; Xu, F.; Jin, Y.Q. Target classification using the deep convolutional networks for SAR images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817.

- Zhao, Y.; Zhao, L.; Xiong, B.; Kuang, G. Attention receptive pyramid network for ship detection in SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2738–2756.

- McCloskey, M.; Cohen, N.J. Catastrophic interference in connectionist networks: The sequential learning problem. In Psychology of Learning and Motivation; Elsevier: Amsterdam, The Netherlands, 1989; Volume 24, pp. 109–165.

- Zhou, D.W.; Wang, F.Y.; Ye, H.J.; Ma, L.; Pu, S.; Zhan, D.C. Forward compatible few-shot class-incremental learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9046–9056.

- Peng, C.; Zhao, K.; Wang, T.; Li, M.; Lovell, B.C. Few-shot class-incremental learning from an open-set perspective. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 382–397.

- Zhao, Y.; Zhao, L.; Zhang, S.; Liu, L.; Ji, K.; Kuang, G. Decoupled Self-Supervised Subspace Classifier for Few-Shot Class-Incremental SAR Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 15845–15861.

- Novak, L.M.; Owirka, G.J.; Brower, W.S.; Weaver, A.L. The automatic target-recognition system in SAIP. Linc. Lab. J. 1997, 10, 187–202.

- Novak, L.M.; Owirka, G.J.; Brower, W.S. Performance of 10-and 20-target MSE classifiers. IEEE Trans. Aerosp. Electron. Syst. 2000, 36, 1279–1289.

- Novak, L.M.; Halversen, S.D.; Owirka, G.; Hiett, M. Effects of polarization and resolution on SAR ATR. IEEE Trans. Aerosp. Electron. Syst. 1997, 33, 102–116.

- Ikeuchi, K.; Wheeler, M.D.; Yamazaki, T.; Shakunaga, T. Model-based SAR ATR system. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery III, Orlando, FL, USA, 8–12 April 1996; International Society for Optics and Photonics: Bellingham, WA, USA, 1996; Volume 2757, pp. 376–387.

- Hummel, R. Model-based ATR using synthetic aperture radar. In Proceedings of the IEEE International Radar Conference, Alexandria, VA, USA, 12 May 2000; pp. 856–861.

- Diemunsch, J.R.; Wissinger, J. Moving and stationary target acquisition and recognition (MSTAR) model-based automatic target recognition: Search technology for a robust ATR. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery V, Orlando, FL, USA, 13–17 April 1998; International Society for Optics and Photonics: Bellingham, WA, USA, 1998; Volume 3370, pp. 481–492.

- Li, J.; Yu, Z.; Yu, L.; Cheng, P.; Chen, J.; Chi, C. A comprehensive survey on SAR ATR in deep-learning era. Remote Sens. 2023, 15, 1454.

- Li, W.; Yang, W.; Liu, T.; Hou, Y.; Li, Y.; Liu, Z.; Liu, Y.; Liu, L. Predicting gradient is better: Exploring self-supervised learning for SAR ATR with a joint-embedding predictive architecture. ISPRS J. Photogramm. Remote Sens. 2024, 218, 326–338.

- Li, W.; Yang, W.; Liu, L.; Zhang, W.; Liu, Y. Discovering and explaining the noncausality of deep learning in SAR ATR. IEEE Geosci. Remote Sens. Lett. 2023, 20, 4004605.

- Yu, X.; Yu, H.; Liu, Y.; Ren, H. Enhanced prototypical network with customized region-aware convolution for few-shot SAR ATR. Remote Sens. 2024, 16, 3563.

- Peng, B.; Peng, B.; Xia, J.; Liu, T.; Liu, Y.; Liu, L. Towards assessing the synthetic-to-measured adversarial vulnerability of SAR ATR. ISPRS J. Photogramm. Remote Sens. 2024, 214, 119–134.

- Wang, C.; Xu, R.; Huang, Y.; Pei, J.; Huang, C.; Zhu, W.; Yang, J. Limited-data SAR ATR causal method via dual-invariance intervention. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5203319.

- Tao, X.; Hong, X.; Chang, X.; Dong, S.; Wei, X.; Gong, Y. Few-shot class-incremental learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12183–12192.

- Dong, S.; Hong, X.; Tao, X.; Chang, X.; Wei, X.; Gong, Y. Few-shot class-incremental learning via relation knowledge distillation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 1255–1263.

- Chen, K.; Lee, C.G. Incremental few-shot learning via vector quantization in deep embedded space. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Zhang, C.; Song, N.; Lin, G.; Zheng, Y.; Pan, P.; Xu, Y. Few-shot incremental learning with continually evolved classifiers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12455–12464.

- Wang, L.; Yang, X.; Tan, H.; Bai, X.; Zhou, F. Few-shot class-incremental SAR target recognition based on hierarchical embedding and incremental evolutionary network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5204111.

- Zhao, Y.; Zhao, L.; Ding, D.; Hu, D.; Kuang, G.; Liu, L. Few-Shot Class-Incremental SAR Target Recognition via Cosine Prototype Learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5212718.

- Zhao, Y.; Zhao, L.; Zhang, S.; Ji, K.; Kuang, G.; Liu, L. Azimuth-aware Subspace Classifier for Few-Shot Class-Incremental SAR ATR. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5203020.

- Kong, L.; Gao, F.; He, X.; Wang, J.; Sun, J.; Zhou, H.; Hussain, A. Few-shot class-incremental SAR target recognition via orthogonal distributed features. IEEE Trans. Aerosp. Electron. Syst. 2024, 61, 325–341.

- Karantaidis, G.; Pantsios, A.; Kompatsiaris, I.; Papadopoulos, S. Few-Shot Class-Incremental Learning For Efficient SAR Automatic Target Recognition. arXiv 2025, arXiv:2505.19565.

- Zhao, Y.; Zhao, L.; Zhang, S.; Ji, K.; Kuang, G. Few-shot class-incremental SAR target recognition via decoupled scattering augmentation classifier. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 7584–7587.

- Potter, L.C.; Moses, R.L. Attributed scattering centers for SAR ATR. IEEE Trans. Image Process. 1997, 6, 79–91.

- Wang, Z.; Kang, Y.; Zeng, X.; Wang, Y.; Zhang, D.; Sun, X. SAR-AIRcraft-1.0: High-resolution SAR aircraft detection and recognition dataset. J. Radars 2023, 12, 906.

- Hou, X.; Ao, W.; Song, Q.; Lai, J.; Wang, H.; Xu, F. FUSAR-Ship: Building a high-resolution SAR-AIS matchup dataset of Gaofen-3 for ship detection and recognition. Sci. China Inf. Sci. 2020, 63, 140303.

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

- Castro, F.M.; Marín-Jiménez, M.J.; Guil, N.; Schmid, C.; Alahari, K. End-to-End Incremental Learning. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 233–248.

- Hou, S.; Pan, X.; Loy, C.C.; Wang, Z.; Lin, D. Learning A Unified Classifier Incrementally via Rebalancing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 831–839.

- Song, Z.; Zhao, Y.; Shi, Y.; Peng, P.; Yuan, L.; Tian, Y. Learning with Fantasy: Semantic-Aware Virtual Contrastive Constraint for Few-Shot Class-Incremental Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 24183–24192.

| Session | Order | Type | Serial No. | Train | Test |
|---|---|---|---|---|---|
| Base | 1 | BTR70 | c71 | 233 | 196 |
| Base | 2 | 2S1 | b01 | 299 | 274 |
| Base | 3 | BRDM2 | E-71 | 298 | 274 |
| Base | 4 | BMP2 | 9563 | 233 | 196 |
| Incremental | 5 | ZIL131 | E12 | 5 | 274 |
| Incremental | 6 | T62 | A51 | 5 | 273 |
| Incremental | 7 | D7 | 92v13015 | 5 | 274 |
| Incremental | 8 | BTR60 | k10yt7532 | 5 | 195 |
| Incremental | 9 | T72 | 132 | 5 | 196 |
| Incremental | 10 | ZSU234 | d08 | 5 | 274 |

| Session | Order | Type | Train | Test |
|---|---|---|---|---|
| Base | 1 | Other | 2000 | 200 |
| Base | 2 | A220 | 2000 | 200 |
| Base | 3 | Boeing787 | 2000 | 200 |
| Base | 4 | Boeing737 | 2000 | 200 |
| Incremental | 5 | A320 | 5 | 200 |
| Incremental | 6 | ARJ21 | 5 | 200 |
| Incremental | 7 | A330 | 5 | 200 |

| Session | Order | Type | Train | Test |
|---|---|---|---|---|
| Base | 1 | Cargo | 240 | 30 |
| Base | 2 | Other | 240 | 30 |
| Base | 3 | Fishing | 240 | 30 |
| Base | 4 | BulkCarrier | 240 | 30 |
| Incremental | 5 | Tanker | 5 | 30 |
| Incremental | 6 | Unspecified | 5 | 30 |
| Incremental | 7 | Container | 5 | 30 |
| Incremental | 8 | Dredger | 5 | 30 |
| Incremental | 9 | Tug | 5 | 30 |
| Incremental | 10 | GeneralCargo | 5 | 30 |

| Forward-Compatible | | | | Stable Discriminating | MSTAR-FSCIL (%) | | |
|---|---|---|---|---|---|---|---|
| VCG | SL | DA | PML | NCM | Avg Acc | Avg HA | PD ↓ |
| - | - | - | - | ✓ | 75.26 | 61.37 | 41.25 |
| ✓ | - | - | - | ✓ | 79.28 | 71.26 | 35.51 |
| - | ✓ | - | - | ✓ | 77.64 | 65.11 | 36.40 |
| ✓ | ✓ | - | - | ✓ | 82.49 | 76.59 | 32.83 |
| ✓ | ✓ | ✓ | - | ✓ | 82.75 | 77.64 | 32.50 |
| ✓ | ✓ | ✓ | ✓ | ✓ | 83.03 | 78.13 | 32.15 |

| Methods | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 | Session 6 | Session 7 | Avg Acc | PD ↓ |
|---|---|---|---|---|---|---|---|---|---|
| Ft-CNN | 98.94 | 76.21 | 62.01 | 52.02 | 47.55 | 43.08 | 38.12 | 59.70 | 60.82 |
| Oracle | 98.94 | 85.05 | 77.17 | 78.30 | 73.86 | 70.34 | 67.10 | 78.68 | 31.84 |
| iCaRL [37] | 92.12 | 83.08 | 73.46 | 66.41 | 65.04 | 60.42 | 54.27 | 70.69 | 37.85 |
| EEIL [38] | 98.94 | 81.76 | 70.77 | 62.78 | 63.35 | 61.55 | 56.68 | 70.83 | 42.26 |
| LUCIR [39] | 99.89 | 90.07 | 76.97 | 72.60 | 70.33 | 66.67 | 60.89 | 76.77 | 39.00 |
| TOPIC [24] | 91.10 | 85.27 | 72.77 | 63.25 | 61.24 | 56.03 | 50.03 | 68.53 | 41.07 |
| ERDIL [25] | 98.94 | 87.92 | 76.02 | 70.14 | 68.66 | 64.17 | 57.94 | 74.83 | 41.00 |
| IDLVQC [26] | 97.02 | 83.75 | 71.12 | 62.27 | 59.54 | 54.71 | 49.20 | 68.23 | 47.82 |
| CEC [27] | 90.54 | 80.52 | 72.27 | 72.53 | 66.97 | 61.76 | 57.97 | 71.79 | 32.57 |
| FACT [9] | 98.85 | 87.36 | 67.14 | 64.24 | 49.50 | 46.69 | 45.01 | 65.54 | 53.84 |
| ALICE [10] | 97.55 | 86.83 | 72.36 | 66.73 | 63.05 | 59.36 | 54.44 | 71.47 | 43.11 |
| SAVC [40] | 97.10 | 89.43 | 79.64 | 75.66 | 72.47 | 68.48 | 64.64 | 78.20 | 32.46 |
| CPL [29] | 99.89 | 90.41 | 78.32 | 74.93 | 73.04 | 69.66 | 63.66 | 78.56 | 36.23 |
| AASC [30] | 99.89 | 89.73 | 76.33 | 73.64 | 71.46 | 66.97 | 62.31 | 77.19 | 37.58 |
| Ours (FCPC) | 99.47 | 94.48 | 85.28 | 82.10 | 78.31 | 74.26 | 67.32 | 83.03 | 32.15 |

| Methods | Session 1 | Session 2 | Session 3 | Session 4 | Avg Acc | PD ↓ |
|---|---|---|---|---|---|---|
| Ft-CNN | 99.62 | 82.93 | 67.66 | 59.32 | 77.38 | 40.30 |
| Oracle | 99.47 | 81.24 | 69.83 | 64.31 | 78.71 | 35.16 |
| iCaRL [37] | 99.50 | 88.82 | 76.20 | 65.37 | 82.47 | 34.13 |
| EEIL [38] | 99.62 | 85.00 | 73.13 | 67.26 | 81.25 | 32.36 |
| LUCIR [39] | 99.75 | 87.68 | 75.76 | 68.23 | 82.86 | 31.52 |
| TOPIC [24] | 99.64 | 89.09 | 76.95 | 67.06 | 83.18 | 32.58 |
| ERDIL [25] | 99.62 | 86.50 | 75.51 | 68.80 | 82.61 | 30.82 |
| IDLVQC [26] | 99.37 | 85.53 | 72.80 | 64.10 | 80.45 | 35.27 |
| CEC [27] | 78.97 | 65.64 | 57.76 | 51.75 | 63.53 | 27.22 |
| FACT [9] | 99.44 | 79.55 | 66.36 | 56.88 | 75.56 | 42.56 |
| ALICE [10] | 95.63 | 74.62 | 62.30 | 55.66 | 72.05 | 39.97 |
| SAVC [40] | 99.04 | 83.44 | 73.74 | 67.97 | 81.05 | 31.07 |
| CPL [29] | 99.37 | 88.55 | 77.98 | 72.06 | 84.49 | 27.31 |
| AASC [30] | 99.50 | 89.12 | 77.18 | 69.28 | 83.77 | 30.22 |
| Ours (FCPC) | 99.37 | 91.09 | 79.15 | 71.80 | 85.35 | 27.57 |

| Methods | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 | Session 6 | Session 7 | Avg Acc | PD ↓ |
|---|---|---|---|---|---|---|---|---|---|
| Ft-CNN | 75.83 | 58.53 | 48.11 | 42.29 | 35.96 | 32.22 | 30.30 | 46.18 | 45.53 |
| Oracle | 79.50 | 65.20 | 56.44 | 49.33 | 45.75 | 39.48 | 36.40 | 53.16 | 43.10 |
| iCaRL [37] | 75.83 | 73.40 | 59.33 | 52.57 | 47.13 | 42.41 | 39.13 | 55.69 | 36.70 |
| EEIL [38] | 75.83 | 62.53 | 50.94 | 46.76 | 40.33 | 35.41 | 34.73 | 49.50 | 41.10 |
| LUCIR [39] | 80.83 | 71.47 | 60.28 | 53.19 | 46.67 | 41.11 | 37.73 | 55.90 | 43.10 |
| TOPIC [24] | 75.67 | 67.00 | 57.11 | 50.86 | 44.46 | 40.41 | 37.20 | 53.24 | 38.47 |
| ERDIL [25] | 77.50 | 60.40 | 43.72 | 36.62 | 26.04 | 22.59 | 21.30 | 41.17 | 56.20 |
| IDLVQC [26] | 75.00 | 65.60 | 54.56 | 44.90 | 39.08 | 35.56 | 30.83 | 49.36 | 44.17 |
| CEC [27] | 84.17 | 71.53 | 60.61 | 54.62 | 48.75 | 44.30 | 40.43 | 57.77 | 43.74 |
| FACT [9] | 77.50 | 75.00 | 63.83 | 56.52 | 50.00 | 45.04 | 40.10 | 58.28 | 37.40 |
| ALICE [10] | 60.00 | 56.60 | 49.00 | 45.10 | 40.42 | 36.15 | 33.23 | 45.79 | 26.77 |
| SAVC [40] | 78.83 | 72.60 | 60.72 | 54.29 | 49.42 | 44.56 | 40.73 | 57.31 | 38.10 |
| CPL [29] | 80.17 | 70.53 | 59.61 | 52.62 | 46.25 | 43.23 | 40.13 | 56.08 | 40.04 |
| AASC [30] | 82.23 | 70.92 | 60.53 | 53.21 | 47.35 | 43.55 | 39.33 | 56.73 | 42.90 |
| Ours (FCPC) | 80.17 | 76.33 | 64.94 | 57.86 | 51.46 | 47.85 | 44.20 | 60.40 | 35.97 |

| Methods | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 | Session 6 | Session 7 | Session 8 | Session 9 | Session 10 | Avg Acc | PD ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ft-CNN | 90.13 | 80.29 | 72.01 | 65.24 | 59.85 | 55.22 | 50.96 | 47.82 | 44.99 | 42.40 | 60.89 | 47.73 |
| Oracle | 91.77 | 88.48 | 83.35 | 81.09 | 77.58 | 71.17 | 70.97 | 67.53 | 63.77 | 61.28 | 75.70 | 30.49 |
| iCaRL [37] | 85.31 | 82.21 | 73.11 | 67.42 | 65.62 | 62.09 | 58.18 | 56.42 | 53.57 | 50.72 | 65.46 | 34.59 |
| EEIL [38] | 90.13 | 80.03 | 73.08 | 68.74 | 63.97 | 60.20 | 57.72 | 56.08 | 53.92 | 51.98 | 65.59 | 38.15 |
| LUCIR [39] | 91.19 | 89.07 | 83.54 | 78.03 | 75.79 | 72.68 | 70.88 | 68.28 | 65.21 | 62.88 | 75.76 | 28.31 |
| TOPIC [24] | 87.50 | 84.22 | 73.50 | 68.09 | 65.46 | 63.15 | 59.61 | 58.00 | 55.12 | 51.29 | 66.59 | 36.21 |
| ERDIL [25] | 89.30 | 85.12 | 74.54 | 67.59 | 64.16 | 62.01 | 59.11 | 58.24 | 56.32 | 53.01 | 66.94 | 36.29 |
| IDLVQC [26] | 84.22 | 82.39 | 73.59 | 65.43 | 62.26 | 60.93 | 58.93 | 58.13 | 55.02 | 53.84 | 65.47 | 30.38 |
| CEC [27] | 91.18 | 88.98 | 79.86 | 73.52 | 70.79 | 66.62 | 64.35 | 61.92 | 58.78 | 57.15 | 71.31 | 34.03 |
| FACT [9] | 90.19 | 87.61 | 81.15 | 76.00 | 73.46 | 68.46 | 65.11 | 61.83 | 59.59 | 58.35 | 72.18 | 31.84 |
| ALICE [10] | 90.19 | 86.51 | 83.35 | 77.65 | 74.54 | 70.53 | 66.19 | 64.34 | 60.87 | 59.33 | 73.35 | 30.86 |
| SAVC [40] | 88.37 | 85.11 | 81.20 | 81.45 | 77.71 | 74.42 | 73.68 | 70.50 | 68.66 | 66.21 | 76.73 | 22.16 |
| Ours (FCPC) | 91.19 | 87.87 | 81.80 | 80.56 | 78.50 | 75.16 | 73.53 | 71.06 | 68.52 | 65.83 | 77.40 | 25.36 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guan, D.; Feng, R.; Xie, Y.; Zheng, X.; Li, B.; Xiang, D. Few-Shot Class-Incremental SAR Target Recognition with a Forward-Compatible Prototype Classifier. Remote Sens. 2025, 17, 3518. https://doi.org/10.3390/rs17213518
