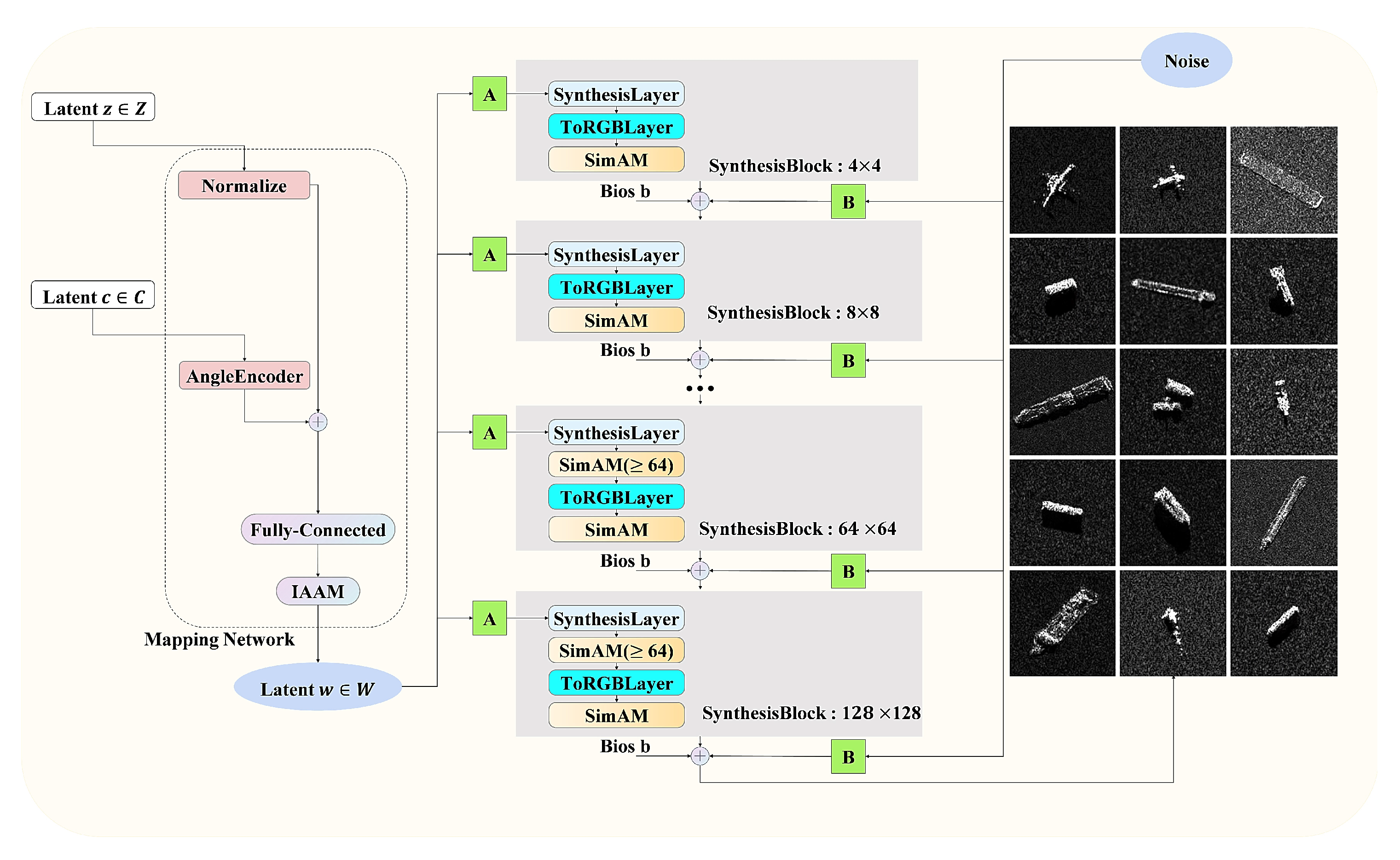

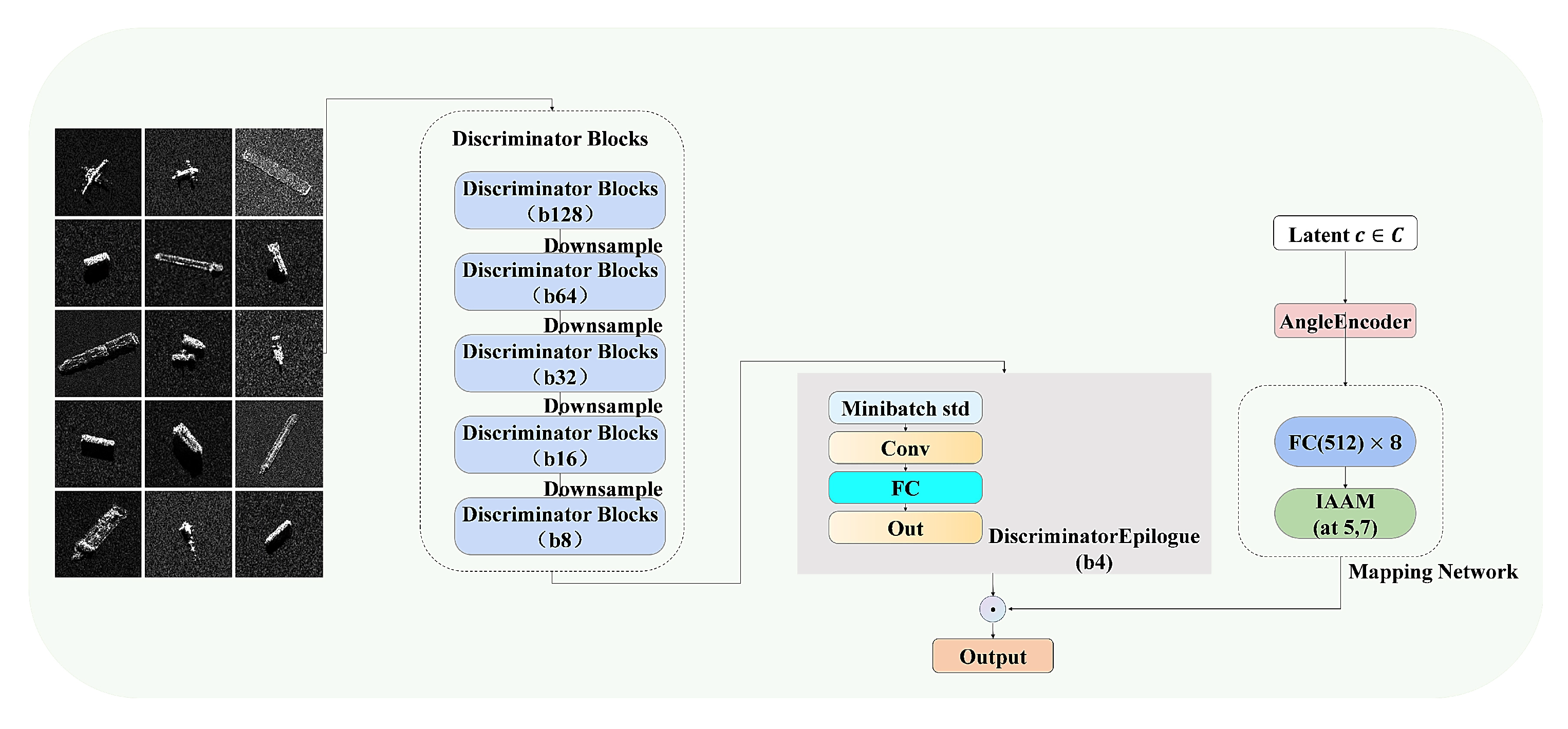

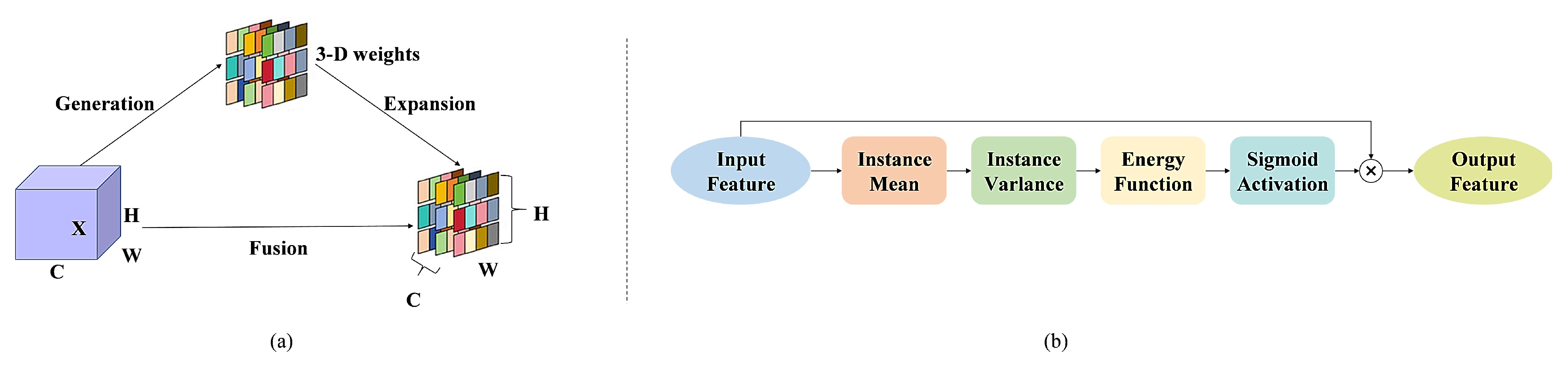

2.1. Multi-Target Selection and Detailed Modeling

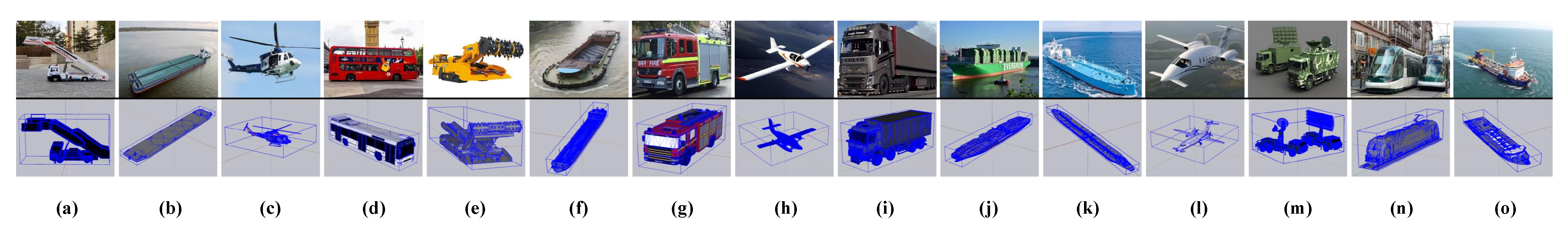

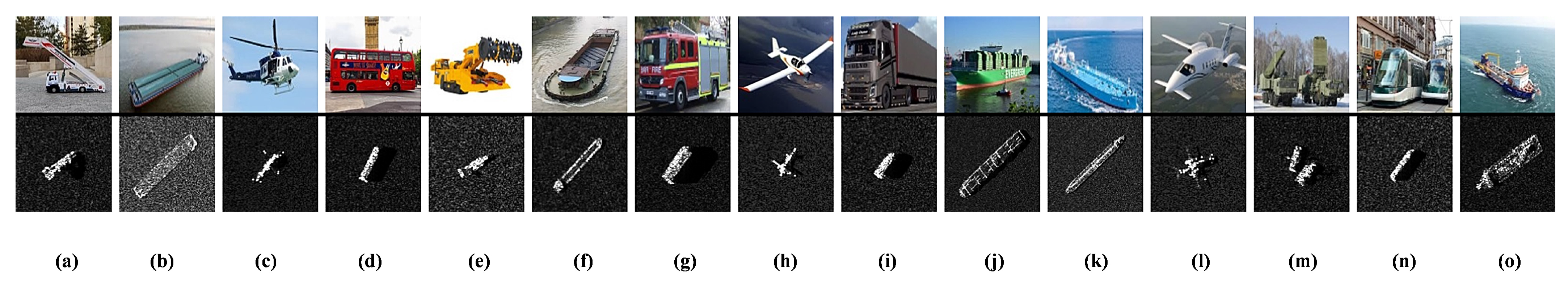

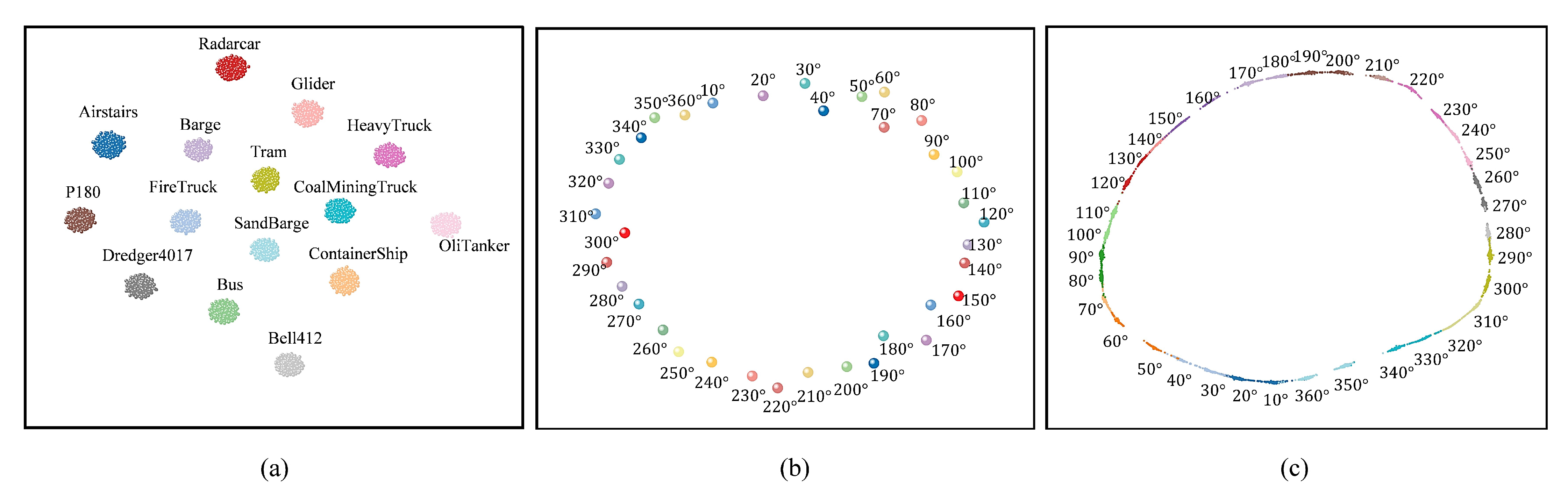

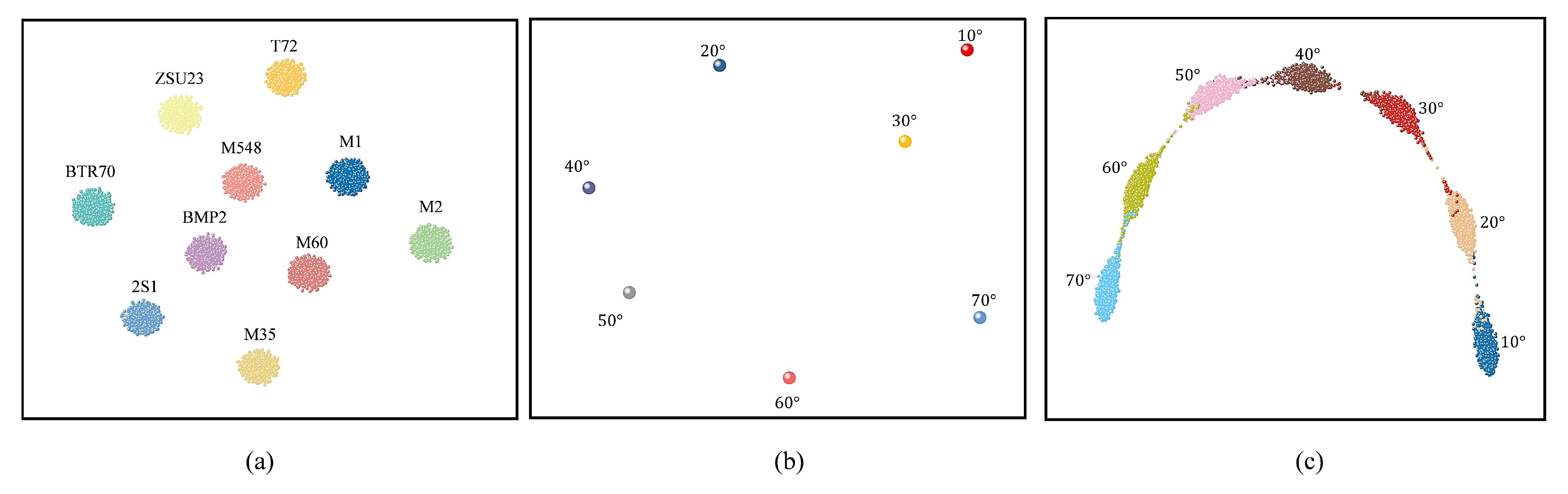

Currently, research on GAN-based SAR image generation predominantly revolves around the MSTAR dataset. To address the limited diversity of multi-angle synthetic aperture radar (SAR) image datasets for typical civilian targets, and considering the wide range of SAR applications in both military and civilian contexts, this paper develops a dataset of 15 common and representative civilian targets comprising ground, maritime, and aerial targets. The dimensions and categories of the typical targets are shown in Table 1.

Subsequently, geometric modeling was conducted for the 15 selected typical targets using scaled-down models. Stepwise precision-controlled mapping techniques were employed to perform accurate measurements and high-fidelity reverse 3D modeling of these reduced-scale targets [32,33]. Compared with conventional 3D geometric reconstruction approaches, the scaled-model-based method offers several advantages, including adaptable dimensions, high controllability, efficient data storage and processing, and strong interpretability.

Because real targets and scenes are often large, whereas SAR imaging simulations are constrained by computational resources and processing time, scaled models are used to reduce target size to meet the practical requirements of such experiments. This approach significantly simplifies subsequent calculations and processing [34]. Scaling also makes it easy to adjust target dimensions for different simulation scenarios.

The detailed modeling workflow consists of three main stages: (1) data acquisition; (2) data preprocessing; (3) surface reconstruction. Data acquisition involves capturing geometric information of the target using specialized instruments; data preprocessing converts this information into 3D coordinates, shape, texture, and other relevant descriptors; surface reconstruction entails combining multiple modeling techniques according to the physical characteristics of the target and the requirements of the simulation scenario to generate the desired 3D model. Throughout this process, 3D model files in the .3ds format (a common format for storing 3D mesh data) and scene description files in the .pov format (used by the POV-Ray ray-tracing software) were produced for each typical target.
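As a rough illustration of the scaling and export steps, the sketch below uniformly scales a triangle mesh, reports its bounding-box extent, and writes it as a POV-Ray `mesh` declaration. The helper names (`scale_mesh`, `bounding_box_size`, `to_pov_mesh`), the 1:100 factor, and the plain vertex/index representation are hypothetical; parsing .3ds files and the authors' actual conversion toolchain are not shown.

```python
# Hypothetical helpers: uniformly scale a triangle mesh and emit it as a
# POV-Ray "mesh" declaration. This is not the authors' toolchain.

def scale_mesh(vertices, factor):
    """Multiply every vertex coordinate by a uniform scale factor."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


def bounding_box_size(vertices):
    """Return the (dx, dy, dz) extent of the mesh, e.g. to check target dimensions."""
    xs, ys, zs = zip(*vertices)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def to_pov_mesh(vertices, triangles, name="Target"):
    """Write triangles (index triples into `vertices`) as POV-Ray scene text."""
    lines = [f"#declare {name} = mesh {{"]
    for a, b, c in triangles:
        corners = ", ".join(
            "<{:.6g}, {:.6g}, {:.6g}>".format(*vertices[idx]) for idx in (a, b, c)
        )
        lines.append(f"  triangle {{ {corners} }}")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # A single right triangle 10 m on a side, reduced to an assumed 1:100 model.
    verts = scale_mesh([(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)], 0.01)
    print(bounding_box_size(verts))
    print(to_pov_mesh(verts, [(0, 1, 2)]))
```

A real target model would contain thousands of triangles and would usually be written with POV-Ray's more compact `mesh2` syntax; the plain `triangle` form is used here only for readability.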

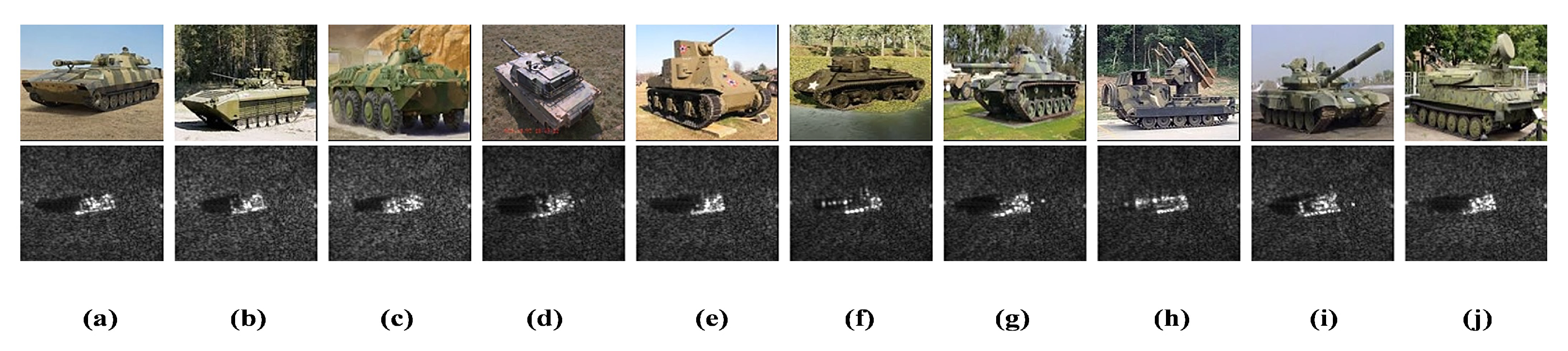

Figure 1 presents optical images of all targets alongside their corresponding 3D models.

2.2. Multi-Target Electromagnetic Modeling and Echo Simulation

To construct a multi-angle dataset of typical civilian targets, this study performs electromagnetic scattering modeling for the 15 representative targets. The scattering computations use a ray-tracing method [35] based on the Phong shading optical model to determine the three-dimensional coordinates of the target scattering points, combined with the physical optics (PO) method to compute the scattering intensity of these points [36,37]. Together, these approaches enable efficient calculation of both the spatial coordinates and the intensities of scattering points in a three-dimensional coordinate system (range, azimuth, and elevation). The PO method, also referred to as the Kirchhoff approximation under the scalar assumption, is applied to slightly rough surfaces that satisfy the following conditions [38]:

$$
kl > 6, \qquad l^{2} > 2.76\, s\lambda, \qquad m < 0.25
$$

where $k = 2\pi/\lambda$ is the wavenumber (rad m$^{-1}$) corresponding to the radar wavelength $\lambda$ (m); $l$ is the surface correlation length representing the horizontal roughness scale (m); $s$ is the root-mean-square (RMS) surface height (m); and $m$ is the surface slope parameter (dimensionless). These conditions ensure that the PO approximation is valid for slightly rough surfaces. The incoherent backscatter coefficient for $pp$ polarization is given by [39]:

$$
\sigma^{0}_{pp} = 2k^{2}\cos^{2}\theta\,\Gamma_{p}\,\exp\!\left[-(2ks\cos\theta)^{2}\right]\sum_{n=1}^{\infty}\frac{(2ks\cos\theta)^{2n}}{n!}\,I_{n}
$$

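where $\sigma^{0}_{pp}$ denotes the normalized radar cross-section (NRCS) for $pp$-polarization; $\Gamma_{p}$ is the Fresnel reflectivity for $p$-polarization ($p = v$ or $h$); $\theta$ is the local incidence angle; and $I_{n}$ represents the integral term derived from the surface autocorrelation function $\rho(\xi)$ (not the image intensity used in SAR backscattering computation):

$$
I_{n} = \int_{0}^{\infty} \rho^{n}(\xi)\, J_{0}(2k\xi\sin\theta)\,\xi\,\mathrm{d}\xi
$$

with $J_{0}$ the zeroth-order Bessel function of the first kind. For a surface with a Gaussian autocorrelation function,

$$
I_{n} = \frac{l^{2}}{2n}\exp\!\left[-\frac{(kl\sin\theta)^{2}}{n}\right]
$$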

where $n$ is a roughness-related integer parameter introduced in the series expansion of the incoherent term; $l$ is the surface correlation length; and $\theta$ is the local incidence angle. For the exponential autocorrelation function,

$$
I_{n} = \frac{n\,l^{2}}{\left[n^{2} + (2kl\sin\theta)^{2}\right]^{3/2}}
$$

where $k$ is the wavenumber; $l$ is the surface correlation length; $n$ is the roughness-related integer parameter; and $\theta$ is the incidence angle.
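To make the series concrete, the sketch below checks the PO validity conditions and evaluates the incoherent backscatter coefficient for both autocorrelation models, truncating the sum over $n$ at a finite number of terms. The roughness values, the reflectivity `gamma_h = 0.6`, the 30° incidence angle, and the truncation length are assumed purely for illustration, and the RMS slope $m = \sqrt{2}\,s/l$ applies only to the Gaussian case.

```python
import math


def po_valid(k, l, s, wavelength, m):
    """Scalar-approximation PO validity: kl > 6, l^2 > 2.76*s*lambda, m < 0.25."""
    return k * l > 6.0 and l ** 2 > 2.76 * s * wavelength and m < 0.25


def integral_gaussian(n, k, l, theta):
    """I_n for rho(xi) = exp(-xi^2 / l^2)."""
    return l ** 2 / (2.0 * n) * math.exp(-((k * l * math.sin(theta)) ** 2) / n)


def integral_exponential(n, k, l, theta):
    """I_n for rho(xi) = exp(-|xi| / l)."""
    return n * l ** 2 / (n ** 2 + (2.0 * k * l * math.sin(theta)) ** 2) ** 1.5


def sigma0_pp(k, s, l, theta, gamma_p, acf="gaussian", n_terms=40):
    """Incoherent PO backscatter coefficient (linear units), series truncated at n_terms."""
    integral = integral_gaussian if acf == "gaussian" else integral_exponential
    x = (2.0 * k * s * math.cos(theta)) ** 2
    coeff = 1.0  # holds x^n / n!, updated incrementally to avoid large factorials
    total = 0.0
    for n in range(1, n_terms + 1):
        coeff *= x / n
        total += coeff * integral(n, k, l, theta)
    return 2.0 * k ** 2 * math.cos(theta) ** 2 * gamma_p * math.exp(-x) * total


if __name__ == "__main__":
    wavelength = 0.031           # X-band wavelength (m), assumed
    k = 2 * math.pi / wavelength
    s, l = 0.002, 0.05           # assumed RMS height and correlation length (m)
    m = math.sqrt(2) * s / l     # RMS slope for a Gaussian correlation function
    gamma_h = 0.6                # assumed Fresnel reflectivity
    theta = math.radians(30)
    print("PO valid:", po_valid(k, l, s, wavelength, m))
    for acf in ("gaussian", "exponential"):
        sigma = sigma0_pp(k, s, l, theta, gamma_h, acf=acf)
        print(acf, "sigma0 (dB):", 10 * math.log10(sigma))
```

Building $x^{n}/n!$ incrementally keeps the terms finite even for large $ks$, and because the factor $(2ks\cos\theta)^{2n}/n!$ decays quickly for slightly rough surfaces, a few dozen terms are normally more than enough.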

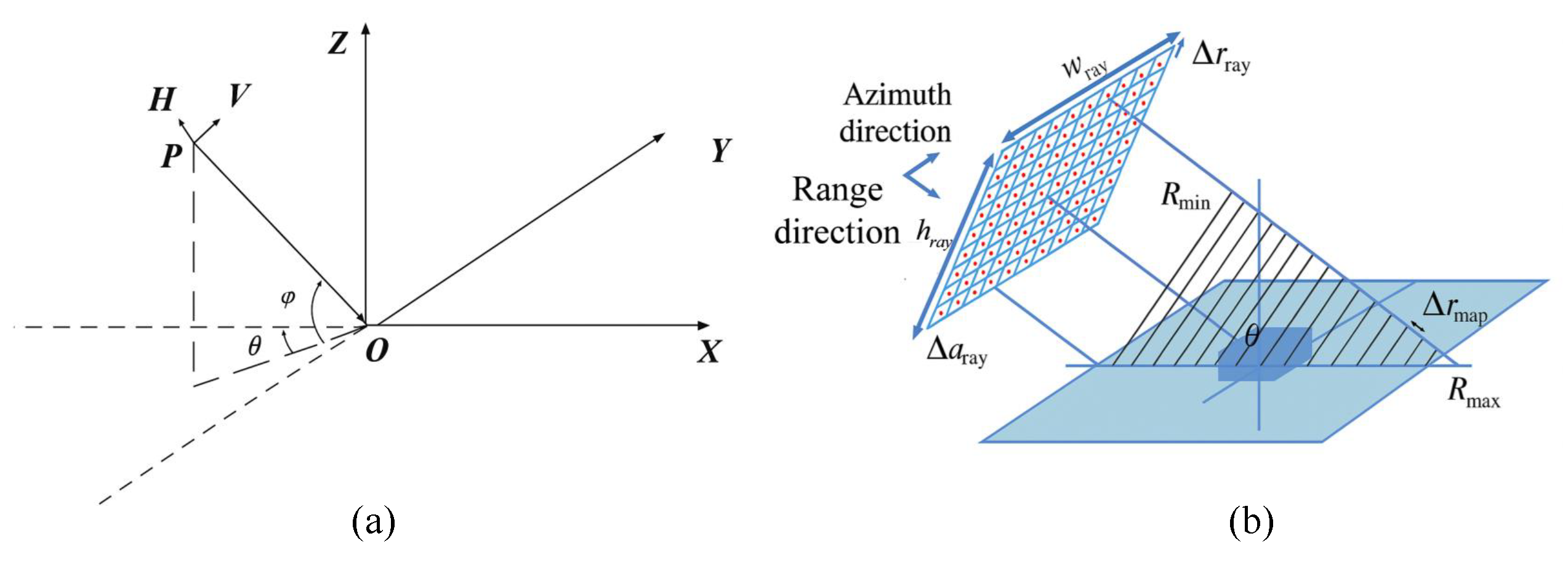

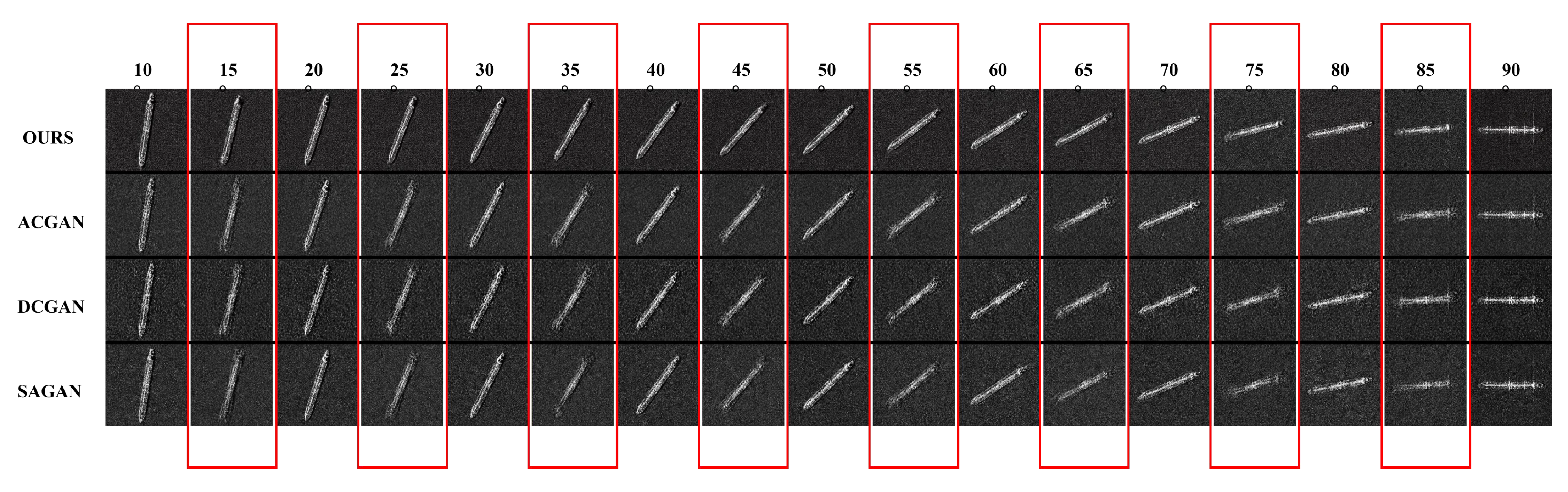

The electromagnetic scattering computation proceeds as follows. Using the geometric modeling results of the typical targets (i.e., the target geometric model files in .pov format) together with the radar system parameters required for the ray-tracing simulation (X-band, HH polarization, and an angular coverage from 10° to 360° at 10° intervals), the backscattering coefficient map is generated via the ray-tracing method [40]. This map is then converted into a header file from which the electromagnetic scattering model computes the target's scattering parameters. After ray tracing, the final electromagnetic modeling results of the scene targets are obtained through image processing operations, including cropping and rotation. The coordinate system configuration and a schematic of the ray-tracing process are illustrated in Figure 2.
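The Phong shading model used by the ray tracer can be illustrated for a single facet in a monostatic geometry, where the radar is both the light source and the viewer. In that case the mirror direction $R = 2(N\cdot L)N - L$ satisfies $R\cdot V = 2\cos^{2}\theta_i - 1$. The sketch below sweeps the 36 aspect angles from 10° to 360°; the diffuse/specular weights, the Phong exponent, the 30° depression angle, and the look-vector convention are all assumptions, and it does not reproduce the PO intensities or the shadowing tests of the actual simulator.

```python
import math


def look_vector(azimuth_deg, depression_deg):
    """Unit vector from the radar toward the scene (z up); assumed geometry."""
    az = math.radians(azimuth_deg)
    dep = math.radians(depression_deg)
    return (math.cos(dep) * math.cos(az), math.cos(dep) * math.sin(az), -math.sin(dep))


def phong_backscatter(normal, look, kd=0.6, ks=0.4, alpha=20):
    """Monostatic Phong intensity of one facet with unit normal `normal`."""
    to_radar = tuple(-c for c in look)
    cos_i = sum(n * r for n, r in zip(normal, to_radar))
    if cos_i <= 0.0:
        return 0.0  # facet faces away from the radar (no occlusion test here)
    diffuse = kd * cos_i
    # Viewer == light source, so R.V = 2*cos^2(i) - 1 = cos(2i).
    cos_spec = 2.0 * cos_i ** 2 - 1.0
    specular = ks * max(cos_spec, 0.0) ** alpha
    return diffuse + specular


if __name__ == "__main__":
    aspects = range(10, 361, 10)  # 10° to 360° in 10° steps -> 36 looks
    roof = (0.0, 0.0, 1.0)        # horizontal facet
    wall = (1.0, 0.0, 0.0)        # vertical facet facing +x
    for az in aspects:
        look = look_vector(az, 30.0)
        print(az, round(phong_backscatter(roof, look), 3),
              round(phong_backscatter(wall, look), 3))
```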

Current spaceborne SAR simulation methods typically integrate satellite platform models, ground scene models, and radar signal models to interactively simulate the reception of actual echo signals. However, the tight coupling and complex interdependencies among these sub-models limit the modularity and scalability of the simulation system. Furthermore, the implementation of individual models often suffers from code redundancy and convoluted logic, resulting in poor maintainability and reusability. As observation scenes grow larger and resolution requirements become more demanding, the computational cost of echo data simulation grows rapidly, significantly extending the total simulation time. A major challenge in advancing SAR simulation technology is therefore to reduce computational complexity and time cost while preserving the imaging quality of the simulated outputs [41].

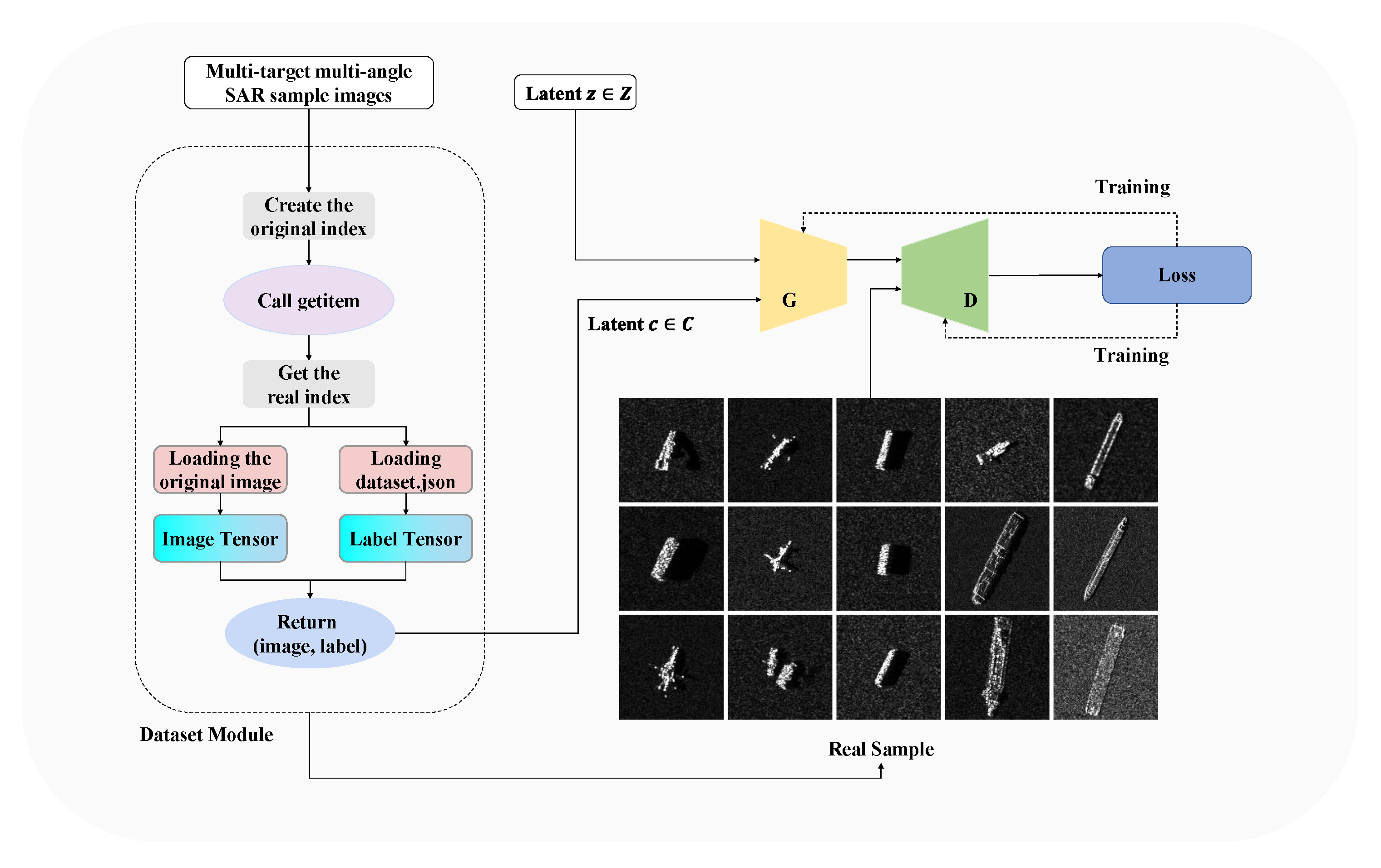

To address the aforementioned challenges, this paper proposes a novel multi-mode SAR echo simulation system characterised by high scalability and reusability. The system decomposes the echo simulation task into four core sub-algorithms that operate collaboratively, thereby reducing inter-model coupling at the architectural level and significantly enhancing system flexibility and parallelisation capabilities.

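Satellite orbit dynamics modeling: Accurately generates satellite orbit and attitude information based on the spatial positioning constraints of the target region and the input satellite parameters. The satellite equation of motion is [42]:

$$
\ddot{\mathbf{r}} = -\frac{\mu}{\lVert\mathbf{r}\rVert^{3}}\,\mathbf{r} + \mathbf{a}_{p}
$$

where $\mathbf{r}$ is the satellite position vector, $\mu$ is the Earth's gravitational constant, and $\mathbf{a}_{p}$ represents the perturbation acceleration (such as the gravitational pull of the Sun and Moon, atmospheric drag, etc.). The position of the satellite in the Earth-centered inertial (ECI) frame can be calculated from the six orbital elements $(a, e, i, \Omega, \omega, M)$:

$$
\mathbf{r} = \mathbf{R}_{z}(\Omega)\,\mathbf{R}_{x}(i)\,\mathbf{R}_{z}(\omega)
\begin{bmatrix} a(\cos E - e) \\ a\sqrt{1-e^{2}}\,\sin E \\ 0 \end{bmatrix}
$$

where $a$ and $e$ are the semi-major axis and eccentricity, $\mathbf{R}_{z}$ and $\mathbf{R}_{x}$ are elementary rotation matrices about the $z$- and $x$-axes, the eccentric anomaly $E$ is solved iteratively from Kepler's equation $M = E - e\sin E$, and the mean anomaly is $M = n(t - t_{p})$, with mean motion $n = \sqrt{\mu/a^{3}}$ and $t_{p}$ the time of perigee passage.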
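A minimal sketch of the Keplerian step (two-body motion only, so the perturbation term $\mathbf{a}_{p}$ is ignored) is shown below: Newton's method solves Kepler's equation, and the perifocal position is rotated into the ECI frame with $\mathbf{R}_{z}(\Omega)\mathbf{R}_{x}(i)\mathbf{R}_{z}(\omega)$. The function names, the Newton starting guess, and the tolerance are illustrative choices, not the paper's implementation.

```python
import math

MU_EARTH = 3.986004418e14  # Earth's gravitational parameter GM (m^3 s^-2)


def solve_kepler(mean_anomaly, e, tol=1e-12, max_iter=50):
    """Newton iteration for Kepler's equation M = E - e*sin(E), elliptic orbits (e < 1)."""
    M = math.fmod(mean_anomaly, 2.0 * math.pi)
    E = M if e < 0.8 else math.pi  # common starting guess
    for _ in range(max_iter):
        step = (E - e * math.sin(E) - M) / (1.0 - e * math.cos(E))
        E -= step
        if abs(step) < tol:
            break
    return E


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def matmul(A, B):
    return [[sum(A[r][k] * B[k][col] for k in range(3)) for col in range(3)] for r in range(3)]


def matvec(A, v):
    return [sum(A[r][k] * v[k] for k in range(3)) for r in range(3)]


def eci_position(a, e, inc, raan, argp, t, t_p, mu=MU_EARTH):
    """Two-body ECI position (m) at time t from classical orbital elements."""
    n = math.sqrt(mu / a ** 3)          # mean motion (rad/s)
    E = solve_kepler(n * (t - t_p), e)  # eccentric anomaly
    r_pf = [a * (math.cos(E) - e), a * math.sqrt(1.0 - e ** 2) * math.sin(E), 0.0]
    R = matmul(matmul(rot_z(raan), rot_x(inc)), rot_z(argp))
    return matvec(R, r_pf)


if __name__ == "__main__":
    # Assumed sun-synchronous-like orbit, sampled once a minute.
    for t in range(0, 301, 60):
        print(t, [round(c / 1e3, 3) for c in eci_position(7.07e6, 0.001, math.radians(98.0),
                                                          0.5, 1.0, float(t), 0.0)])
```

In a full simulator the perturbation acceleration would typically be added by numerically integrating the equation of motion rather than using this closed-form two-body solution.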

Beam pointing trajectory deduction: Dynamically determines the instantaneous beam direction by integrating the platform's three-axis attitude (pitch, yaw, and roll), the antenna configuration, and the orbital trajectory, so that the computed beam orientation reflects the platform's full three-dimensional motion during imaging. The beam direction vector can be expressed as

$$
\mathbf{b} = \mathbf{R}(\Omega, i, \omega)\,\mathbf{b}_{0}
$$

where $\mathbf{R}(\Omega, i, \omega)$ is the coordinate rotation matrix; $\Omega$, $i$, and $\omega$ are the right ascension of the ascending node, the orbital inclination, and the argument of perigee in the orbital elements, respectively; and $\mathbf{b}_{0}$ is the initial antenna pointing vector.
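The rotation can be sketched as follows, taking $\mathbf{R}(\Omega, i, \omega) = \mathbf{R}_{z}(\Omega)\mathbf{R}_{x}(i)\mathbf{R}_{z}(\omega)$ and, to reflect the attitude terms mentioned in the text, an optional attitude rotation applied to $\mathbf{b}_{0}$ first. The z-y-x (yaw-pitch-roll) convention, the frame in which $\mathbf{b}_{0}$ is expressed, and all function names are hypothetical.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A, B):
    return [[sum(A[r][k] * B[k][col] for k in range(3)) for col in range(3)] for r in range(3)]


def matvec(A, v):
    return [sum(A[r][k] * v[k] for k in range(3)) for r in range(3)]


def attitude_matrix(roll, pitch, yaw):
    """Hypothetical yaw-pitch-roll (z-y-x) attitude rotation."""
    return matmul(matmul(rot_z(yaw), rot_y(pitch)), rot_x(roll))


def beam_direction(raan, inc, argp, b0, roll=0.0, pitch=0.0, yaw=0.0):
    """b = R(Omega, i, omega) @ R_att @ b0, returned as a unit vector."""
    orbit_rot = matmul(matmul(rot_z(raan), rot_x(inc)), rot_z(argp))
    b = matvec(orbit_rot, matvec(attitude_matrix(roll, pitch, yaw), b0))
    norm = math.sqrt(sum(c * c for c in b))
    return [c / norm for c in b]


if __name__ == "__main__":
    # Assumed right-looking antenna vector with small attitude errors (rad).
    b0 = [0.0, -math.sin(math.radians(30)), -math.cos(math.radians(30))]
    print(beam_direction(0.5, math.radians(98.0), 1.0, b0, roll=1e-3, pitch=-5e-4, yaw=2e-4))
```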

Error modeling and injection: Enhances the realism and engineering applicability of the simulation by incorporating typical non-ideal factors, such as orbital errors, attitude disturbances, and system delays, into the simulation chain. The effect of a velocity error on the Doppler frequency is

$$
\Delta f_{d} = \frac{2}{\lambda}\,\mathbf{u}^{\mathsf{T}}\Delta\mathbf{v}
$$

where $\Delta f_{d}$ is the Doppler frequency error, $\lambda$ is the wavelength, $\mathbf{u}$ is the target pointing unit vector, and $\Delta\mathbf{v}$ is the satellite velocity error vector. This formula shows that the Doppler error is governed mainly by the velocity error along the flight direction and varies with the viewing angle. The impact of position error on the cubic Doppler compensation follows from the Taylor expansion of the slant range in azimuth time $\eta$:

$$
R(\eta) \approx R(0) + \dot{R}\,\eta + \frac{1}{2}\ddot{R}\,\eta^{2} + \frac{1}{6}\dddot{R}\,\eta^{3}
$$

where $R$ is the relative distance between the satellite and the target, and $\dot{R}$, $\ddot{R}$, and $\dddot{R}$ are the first, second, and third derivatives of the equivalent slant range, respectively. A position measurement error $\Delta\mathbf{r}$ alters the coefficients of each order, thereby degrading the accuracy of the cubic compensation.
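Both error mechanisms can be evaluated numerically. The sketch below computes $\Delta f_{d}$ directly and obtains the Taylor coefficients of $R(\eta) = \lVert\mathbf{r}_{s}(\eta) - \mathbf{r}_{t}\rVert$ by differentiating $R^{2} = \mathbf{d}\cdot\mathbf{d}$ three times (so $R\dot{R} = \mathbf{d}\cdot\mathbf{v}$, and so on). The geometry, the 10 m position error, and the function names are assumptions for illustration only.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def doppler_error(wavelength, u, dv):
    """Delta f_d = (2 / lambda) * u . dv, in Hz."""
    return 2.0 / wavelength * dot(u, dv)


def range_derivatives(r_sat, v_sat, a_sat, j_sat, r_tgt):
    """R and its first three time derivatives for a fixed target.

    Derived from R^2 = d.d with d = r_sat - r_tgt:
      R*R1 = d.v,  R1^2 + R*R2 = v.v + d.a,  3*R1*R2 + R*R3 = 3 v.a + d.j
    """
    d = [s - t for s, t in zip(r_sat, r_tgt)]
    R = math.sqrt(dot(d, d))
    R1 = dot(d, v_sat) / R
    R2 = (dot(v_sat, v_sat) + dot(d, a_sat) - R1 ** 2) / R
    R3 = (3.0 * dot(v_sat, a_sat) + dot(d, j_sat) - 3.0 * R1 * R2) / R
    return R, R1, R2, R3


if __name__ == "__main__":
    wavelength = 0.031
    u = (0.0, 0.6, -0.8)   # assumed line-of-sight unit vector
    dv = (0.05, 0.02, 0.0) # assumed velocity error (m/s)
    print("Doppler error (Hz):", doppler_error(wavelength, u, dv))

    r_tgt = (0.0, 0.0, 0.0)
    v, acc, jerk = (7500.0, 0.0, 0.0), (0.0, 0.0, -8.0), (0.0, 0.0, 0.0)
    nominal = range_derivatives((0.0, 400e3, 600e3), v, acc, jerk, r_tgt)
    shifted = range_derivatives((0.0, 400e3, 610e3), v, acc, jerk, r_tgt)  # 10 m error
    for name, a, b in zip(("R", "dR", "d2R", "d3R"), nominal, shifted):
        print(name, "change:", b - a)
```

The Doppler centroid, Doppler rate, and cubic phase coefficient follow by scaling $\dot{R}$, $\ddot{R}$, and $\dddot{R}$ by $-2/\lambda$, so the printed changes translate directly into compensation errors.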

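Parallel computing optimization for echo generation: Improves simulation efficiency through pulse coherence processing in the range-frequency domain combined with multi-threaded decomposition, enabling parallel simulation of the echo reception process. The echo signal of a single point target can be simplified as follows [43]:

$$
s(\tau,\eta) = A_{0}\, w_{r}\!\left(\tau - \frac{2R(\eta)}{c}\right) w_{a}\!\left(\frac{\eta - \eta_{c}}{T_{a}}\right)
\exp\!\left[-j\frac{4\pi R(\eta)}{\lambda}\right]
\exp\!\left[j\pi K_{r}\left(\tau - \frac{2R(\eta)}{c}\right)^{2}\right]
$$

where $\tau$ and $\eta$ are the range (fast) time and azimuth (slow) time, $A_{0}$ is the complex amplitude including the target scattering and system gain, $w_{r}$ and $w_{a}$ are the range and azimuth envelope functions, $R(\eta)$ is the instantaneous slant range, $c$ is the speed of light, $\lambda$ is the radar wavelength, $\eta_{c}$ is the reference azimuth time, $T_{a}$ is the azimuth window, and $K_{r}$ is the range chirp rate.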

This approach effectively balances simulation performance with computational resource utilisation while maintaining accuracy, thereby offering theoretical support and engineering feasibility for the rapid simulation of large-scale, high-resolution SAR imaging tasks.
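The point-target echo model and the per-pulse decomposition can be sketched as below: each azimuth line is generated independently and the lines are distributed over a thread pool. The radar parameters, the hyperbolic range history for a straight-line pass, and the rectangular $w_{r}$ and $w_{a}$ are assumptions. In CPython, pure-Python threads mainly illustrate the task split; real speedups would need processes or vectorized/native code.

```python
import cmath
import math
from concurrent.futures import ThreadPoolExecutor

C = 299_792_458.0  # speed of light (m/s)

# Illustrative, assumed parameters (not taken from the paper).
PARAMS = {
    "wavelength": 0.031,  # radar wavelength (m)
    "v": 7000.0,          # effective platform velocity (m/s)
    "r0": 800e3,          # closest-approach slant range (m)
    "kr": 1.0e12,         # range chirp rate (Hz/s)
    "tp": 10e-6,          # pulse length (s)
    "fs": 20e6,           # range sampling rate (Hz)
    "n_range": 512,       # range samples per pulse
    "eta_c": 0.0,         # reference azimuth time (s)
    "t_a": 0.1,           # azimuth window length (s)
    "a0": 1.0 + 0.0j,     # complex amplitude
}


def slant_range(eta, p):
    """Hyperbolic range history R(eta) of a point target for a straight-line pass."""
    return math.sqrt(p["r0"] ** 2 + (p["v"] * eta) ** 2)


def echo_line(eta, p):
    """One received pulse (range line) at azimuth time eta."""
    r = slant_range(eta, p)
    delay = 2.0 * r / C
    tau0 = 2.0 * p["r0"] / C                                # centre of the range window
    in_window = abs((eta - p["eta_c"]) / p["t_a"]) <= 0.5  # rectangular w_a
    line = []
    for k in range(p["n_range"]):
        t = tau0 + (k - p["n_range"] // 2) / p["fs"] - delay
        if in_window and abs(t) <= p["tp"] / 2:            # rectangular w_r
            phase = -4.0 * math.pi * r / p["wavelength"] + math.pi * p["kr"] * t ** 2
            line.append(p["a0"] * cmath.exp(1j * phase))
        else:
            line.append(0j)
    return line


def simulate_echo(etas, p, workers=4):
    """Split the raw-data matrix by azimuth line across worker threads (order preserved)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda eta: echo_line(eta, p), etas))


if __name__ == "__main__":
    prf = 300.0
    etas = [(j - 32) / prf for j in range(64)]
    raw = simulate_echo(etas, PARAMS)
    print(len(raw), len(raw[0]), sum(1 for v in raw[32] if v != 0))
```

Because every azimuth line depends only on its own $\eta$, the same decomposition carries over unchanged to process pools or GPU kernels; for extended scenes, the echoes of individual scatterers are summed line by line.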

2.3. Implementation of a Multi-Target SAR Imaging Method

The core processing chain of spaceborne SAR imaging typically involves range compression (RC), secondary range compression (SRC), azimuth matched filtering, and range cell migration correction (RCMC). Time-domain imaging methods are often associated with high computational complexity and low efficiency. As a result, mainstream SAR imaging algorithms are predominantly implemented in the frequency domain, including the range-Doppler (RD) algorithm, the chirp scaling (CS) algorithm, and the ω-K algorithm [44,45]. These approaches perform key operations, including SRC, RCMC, and azimuth compression, in the Doppler domain.
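Range compression, the first step of the chain above, is a matched filter that is usually applied in the frequency domain. The sketch below uses a minimal pure-Python radix-2 FFT (input length must be a power of two) and normalized units ($f_s = 1$); the chirp rate, pulse length, and delay are assumed values. The compressed output peaks at the sample where the echo starts, with amplitude equal to the pulse energy.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    twiddled = [cmath.exp(-2j * math.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + twiddled[k] for k in range(n // 2)]
            + [even[k] - twiddled[k] for k in range(n // 2)])


def ifft(spectrum):
    """Inverse FFT via the conjugation identity."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([s.conjugate() for s in spectrum])]


def lfm_pulse(n_samples, fs, kr):
    """Baseband linear FM replica exp(j*pi*kr*t^2), with t centred on the pulse."""
    return [cmath.exp(1j * math.pi * kr * ((k - n_samples / 2) / fs) ** 2)
            for k in range(n_samples)]


def range_compress(echo, replica):
    """Frequency-domain matched filter: IFFT(FFT(echo) * conj(FFT(replica)))."""
    padded = list(replica) + [0j] * (len(echo) - len(replica))
    E, H = fft(echo), fft(padded)
    return ifft([e * h.conjugate() for e, h in zip(E, H)])


if __name__ == "__main__":
    replica = lfm_pulse(64, 1.0, 0.0075)  # time-bandwidth product of about 31
    echo = [0j] * 256
    for k, v in enumerate(replica):       # echo delayed by 100 samples
        echo[100 + k] = v
    out = range_compress(echo, replica)
    peak = max(range(len(out)), key=lambda i: abs(out[i]))
    print(peak, abs(out[peak]))
```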

This paper adopts the chirp scaling algorithm as the core method for the imaging process due to its advantages in high image quality, robustness against noise, and tolerance to incomplete data segments. These characteristics make it particularly suitable for application scenarios with constrained data acquisition conditions. The algorithm utilises chirp scaling to simultaneously accomplish range migration correction and range compression in the frequency domain, followed by azimuth compression to achieve fully focused two-dimensional imagery. The fundamental concept can be summarised as follows:

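where $f_{\tau}$ is the range frequency, $f_{\eta}$ is the azimuth frequency, and $K$ is the chirp rate. Two-dimensional focusing is then achieved through azimuth compression:

$$
s_{\mathrm{img}}(\tau,\eta) = \mathrm{IFFT}_{2}\left\{ S(f_{\tau}, f_{\eta})\, H_{\mathrm{az}}(f_{\eta}) \right\}
$$

where $S(f_{\tau}, f_{\eta})$ is the two-dimensional spectrum after chirp scaling, range compression, and RCMC, $H_{\mathrm{az}}(f_{\eta})$ represents the azimuth matched filter, and $\mathrm{IFFT}_{2}\{\cdot\}$ represents the two-dimensional inverse Fourier transform.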
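The core of the chirp scaling step is a phase multiply in the range-Doppler domain that equalizes the range cell migration of all targets to that of a reference range. The sketch below computes this phase using the common textbook formulation (migration factor $D(f_{\eta})$, scaling factor $C_{s} = D(f_{\eta,\mathrm{ref}})/D(f_{\eta}) - 1$, and modified chirp rate $K_{m}$). It is not necessarily the authors' exact implementation, and the numerical parameters are assumed.

```python
import cmath
import math

C = 299_792_458.0  # speed of light (m/s)


def migration_factor(f_eta, wavelength, v):
    """D(f_eta) = sqrt(1 - (lambda * f_eta / (2 V))^2)."""
    return math.sqrt(1.0 - (wavelength * f_eta / (2.0 * v)) ** 2)


def modified_chirp_rate(kr, f_eta, r0, wavelength, v):
    """Effective range chirp rate K_m in the range-Doppler domain (includes the SRC term)."""
    f0 = C / wavelength
    d = migration_factor(f_eta, wavelength, v)
    return kr / (1.0 - kr * C * r0 * f_eta ** 2 / (2.0 * v ** 2 * f0 ** 3 * d ** 3))


def chirp_scaling_phase(tau, f_eta, kr, r_ref, wavelength, v, f_eta_ref=0.0):
    """Phase multiplier that equalizes range migration to that of r_ref."""
    d = migration_factor(f_eta, wavelength, v)
    d_ref = migration_factor(f_eta_ref, wavelength, v)
    cs = d_ref / d - 1.0                        # chirp scaling factor C_s(f_eta)
    km = modified_chirp_rate(kr, f_eta, r_ref, wavelength, v)
    tau_ref = 2.0 * r_ref / (C * d)             # reference-range migration curve
    return cmath.exp(1j * math.pi * km * cs * (tau - tau_ref) ** 2)


if __name__ == "__main__":
    # Assumed X-band geometry; phase of the multiplier at a few Doppler frequencies.
    for f_eta in (0.0, 500.0, 1000.0, 1500.0):
        ph = chirp_scaling_phase(5.34e-3, f_eta, 1e12, 800e3, 0.031, 7000.0)
        print(f_eta, round(cmath.phase(ph), 6))
```

After this multiply, the remaining steps (range FFT, bulk RCMC with range compression and SRC, range IFFT, and azimuth compression with residual phase correction) are each a single phase multiply in the appropriate domain, which is what keeps the algorithm FFT-efficient.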

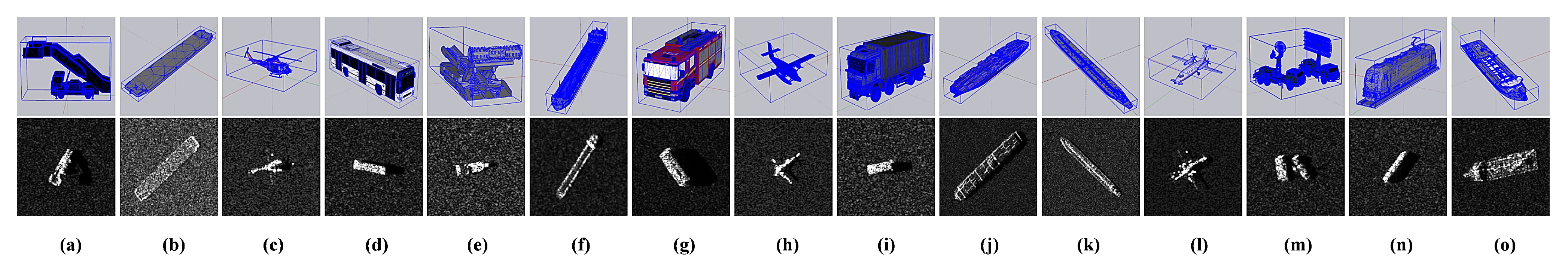

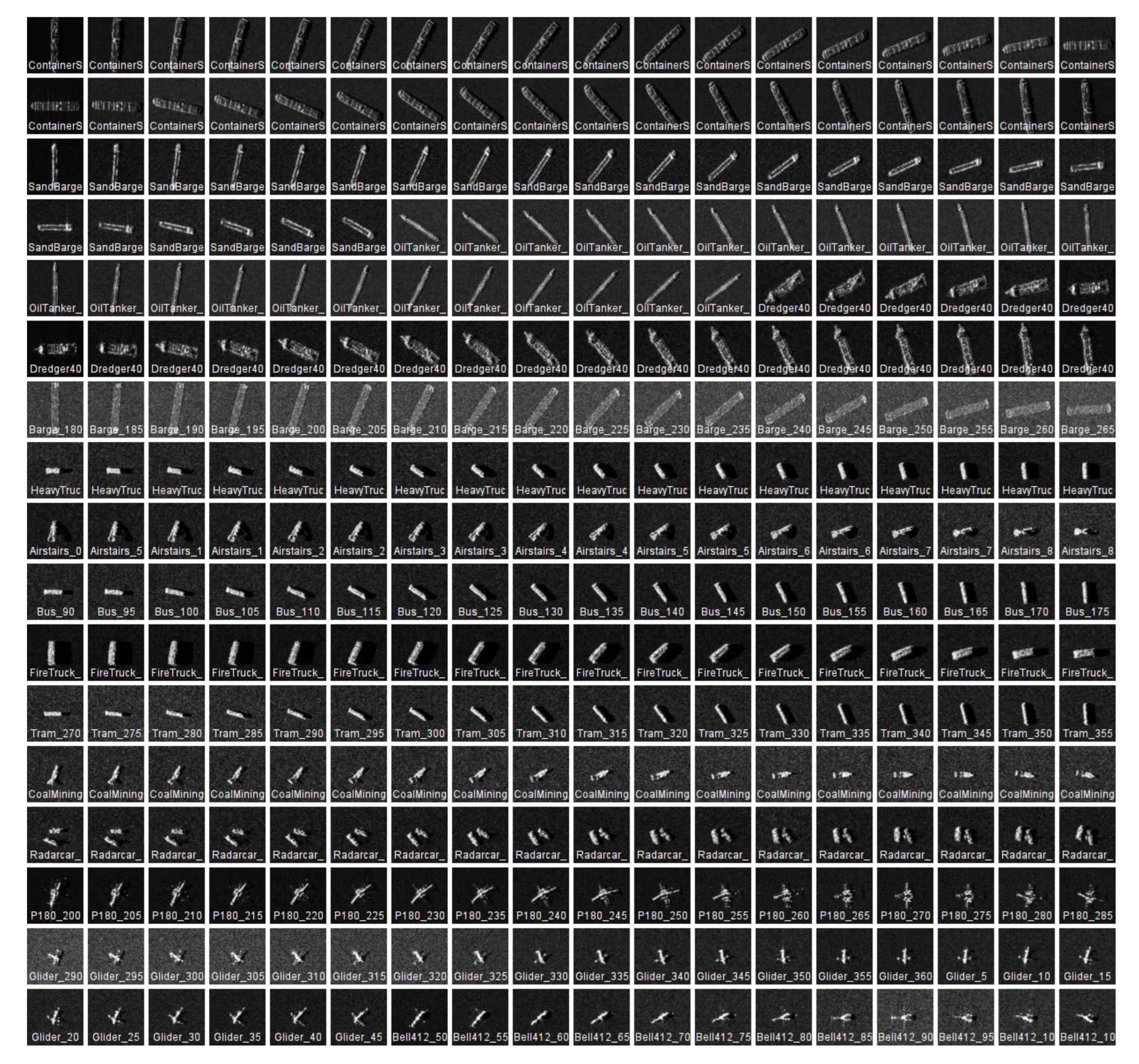

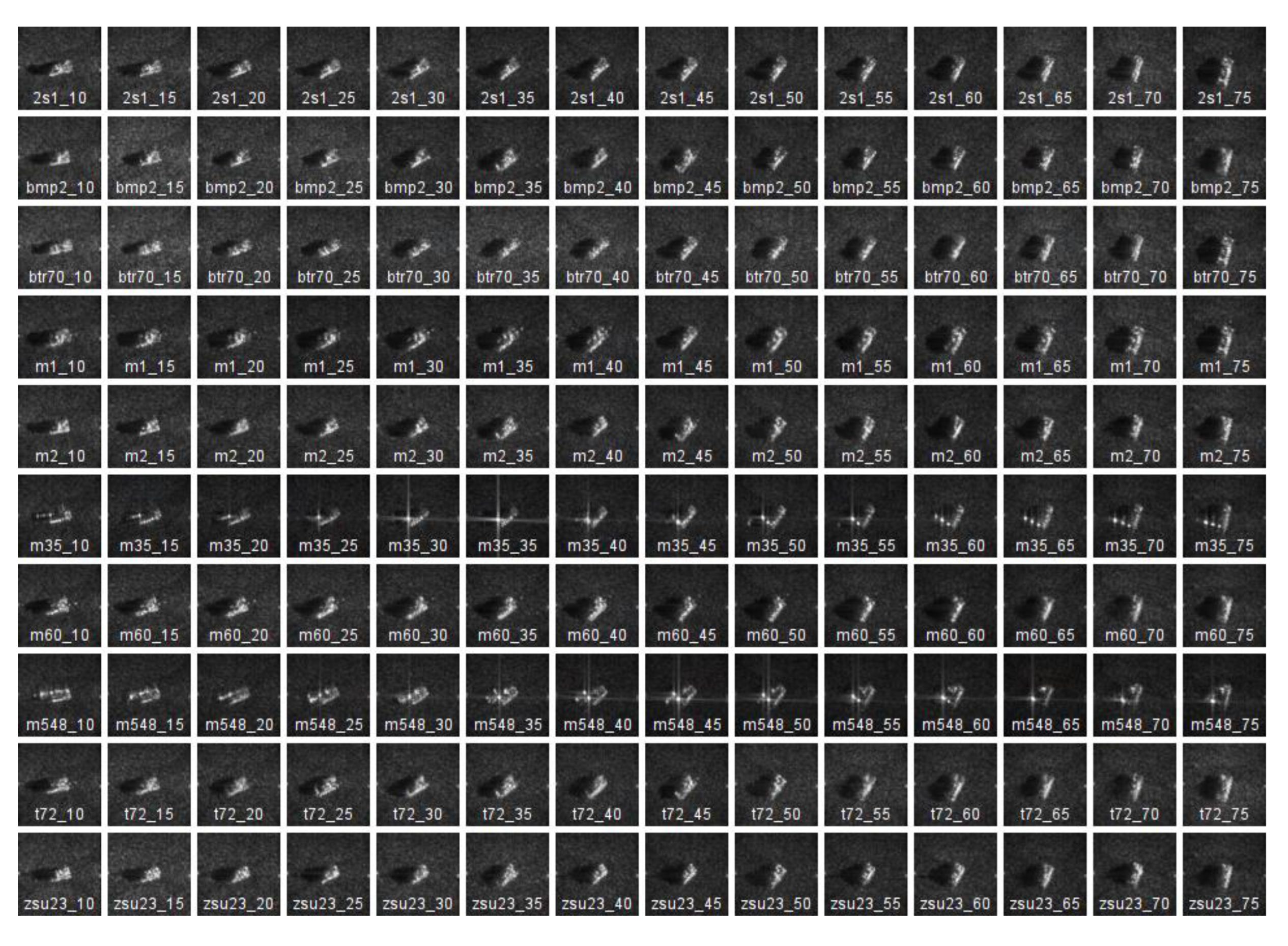

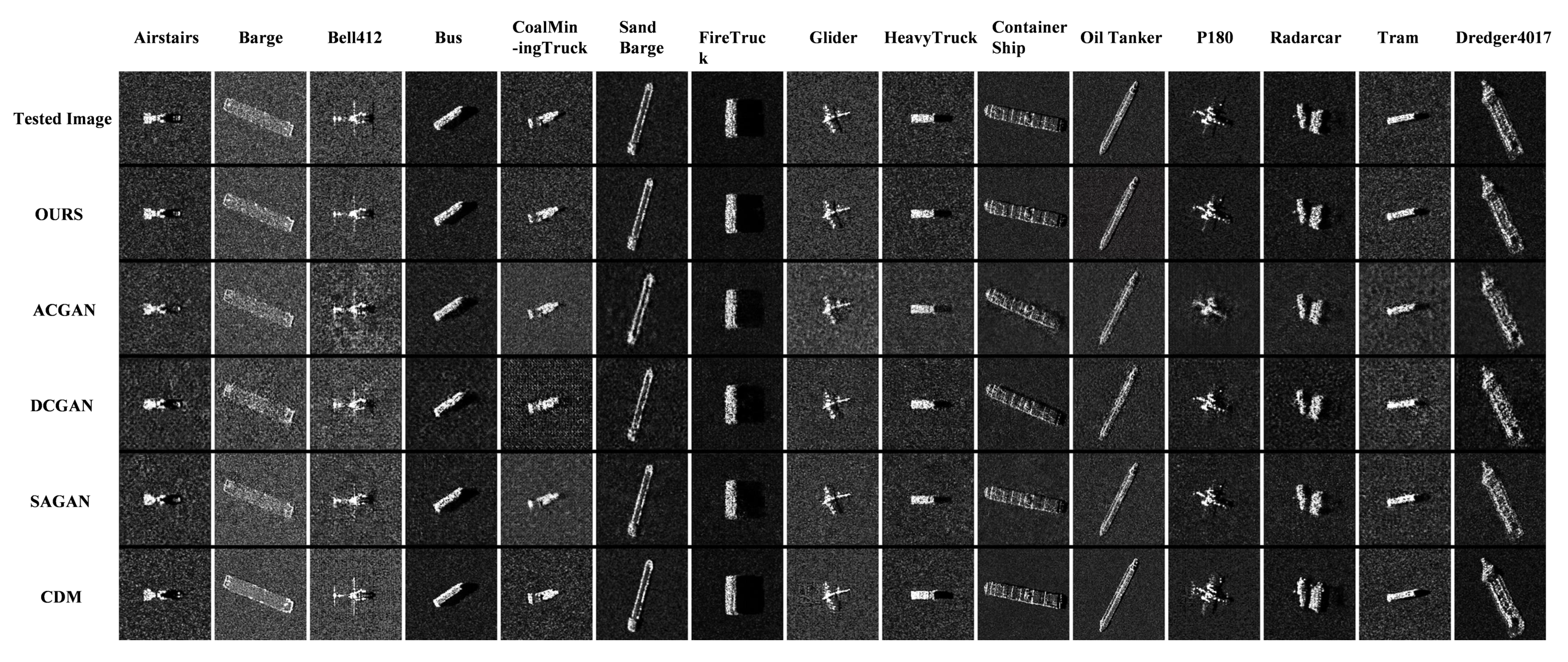

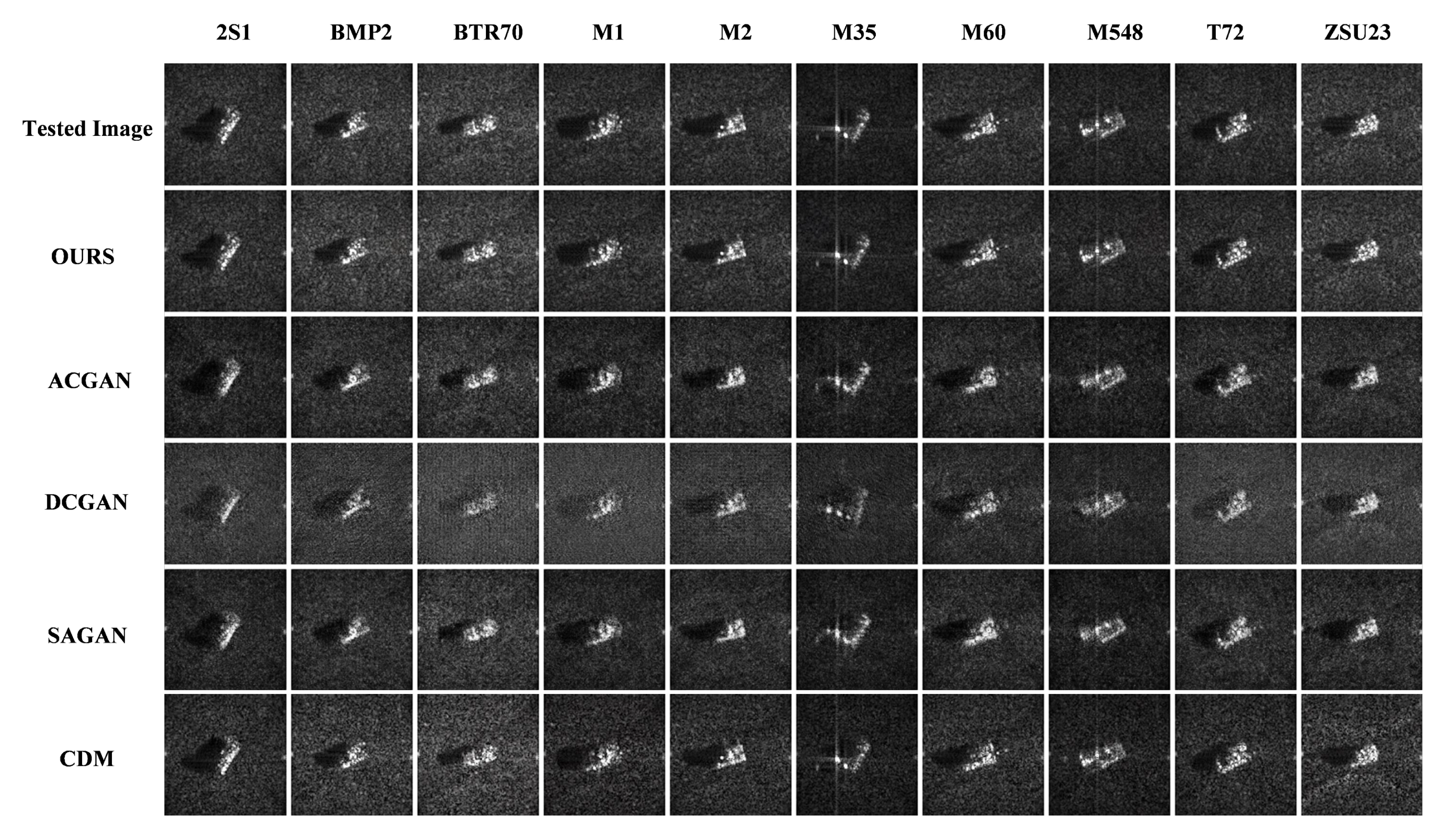

Finally, a multi-target dataset encompassing 15 typical targets across ground, maritime, and aerial categories was constructed. SAR images were generated at 10° intervals, yielding a total of 5940 images. A comparison between the 3D models and their corresponding SAR images for all targets is provided in Figure 3. Each image was formatted to 128 × 128 pixels, with amplitude values ranging from 0 to 255.
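The text does not state how images were brought to 128 × 128 pixels or how amplitudes were mapped to 0 to 255. The sketch below assumes a center crop followed by linear min-max scaling (no dB conversion or clipping). Both helpers are hypothetical and operate on plain lists of rows.

```python
def center_crop(img, size=128):
    """Crop a 2-D list-of-rows image to size x size around its centre."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the crop size")
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


def to_uint8_range(img):
    """Linearly map amplitudes to integers in [0, 255] (min -> 0, max -> 255)."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    scale = 255.0 / (hi - lo) if hi > lo else 0.0
    return [[int(round((v - lo) * scale)) for v in row] for row in img]


if __name__ == "__main__":
    amplitude = [[float(i * j) for j in range(160)] for i in range(200)]  # synthetic input
    chip = to_uint8_range(center_crop(amplitude))
    print(len(chip), len(chip[0]), min(map(min, chip)), max(map(max, chip)))
```

A logarithmic (dB) mapping with percentile clipping is a common alternative for SAR amplitudes because it keeps a few bright scatterers from compressing the rest of the image into low gray levels.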