Semantic Segmentation of High-Resolution Remote Sensing Images Based on RS3Mamba: An Investigation of the Extraction Algorithm for Rural Compound Utilization Status

Abstract

Highlights

- Semantic segmentation of high-spatial-resolution remote sensing images is shown to support high-precision estimation of rural homestead utilization and vacancy rates.

- A high-precision extraction algorithm framework suitable for rural homesteads in regularly shaped areas is proposed.

- The approach offers a feasible technical route for acquiring rural homestead spatial information rapidly and accurately, overcoming the low efficiency of traditional manual surveys.

- The proposed algorithm framework can offer key technical support and data references for rural planning, homestead management, and optimal allocation of land resources.

1. Introduction

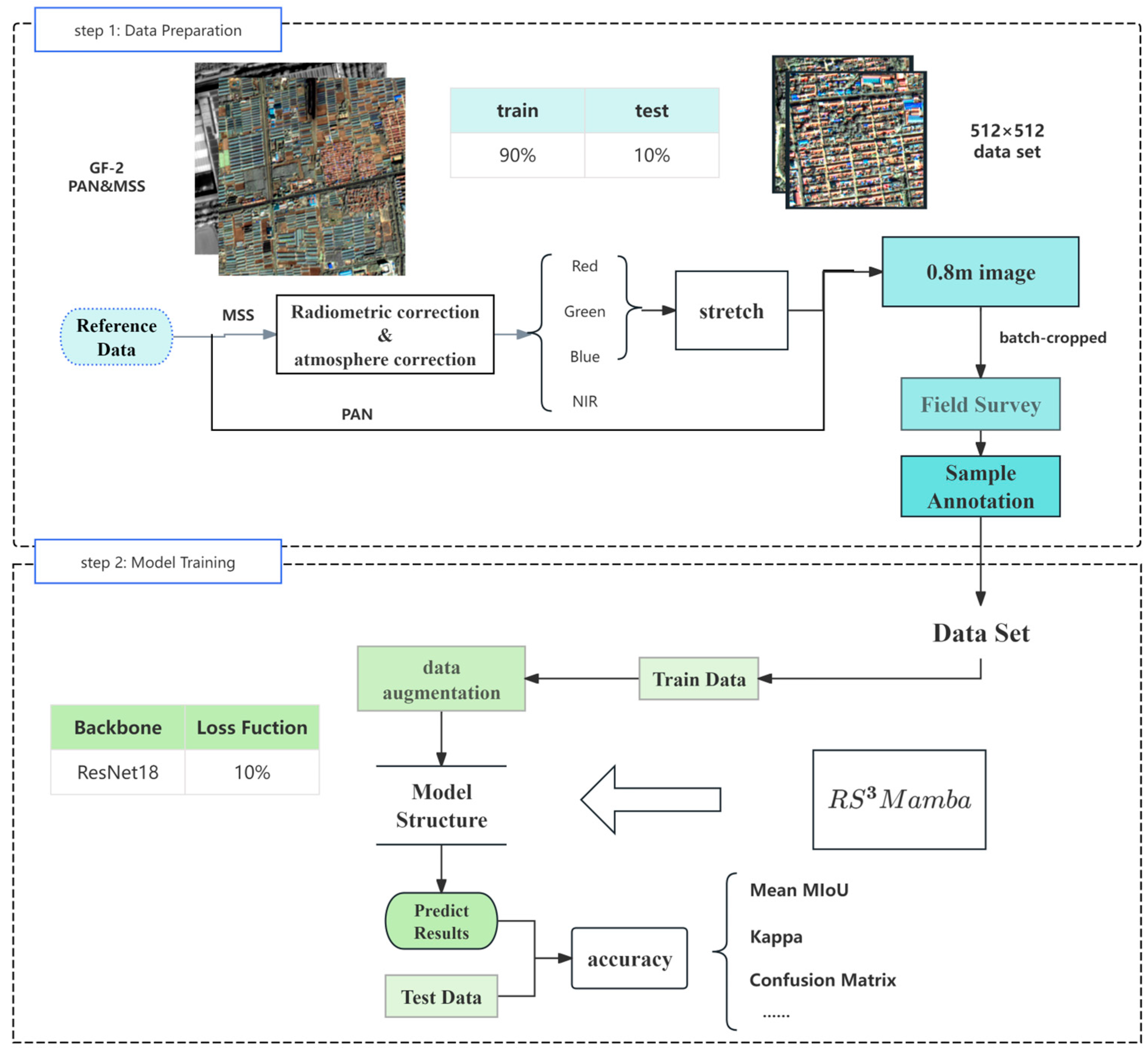

2. Data Processing and Algorithm Design

2.1. Preprocessing

2.2. Study Area and Data Source

2.3. Algorithm

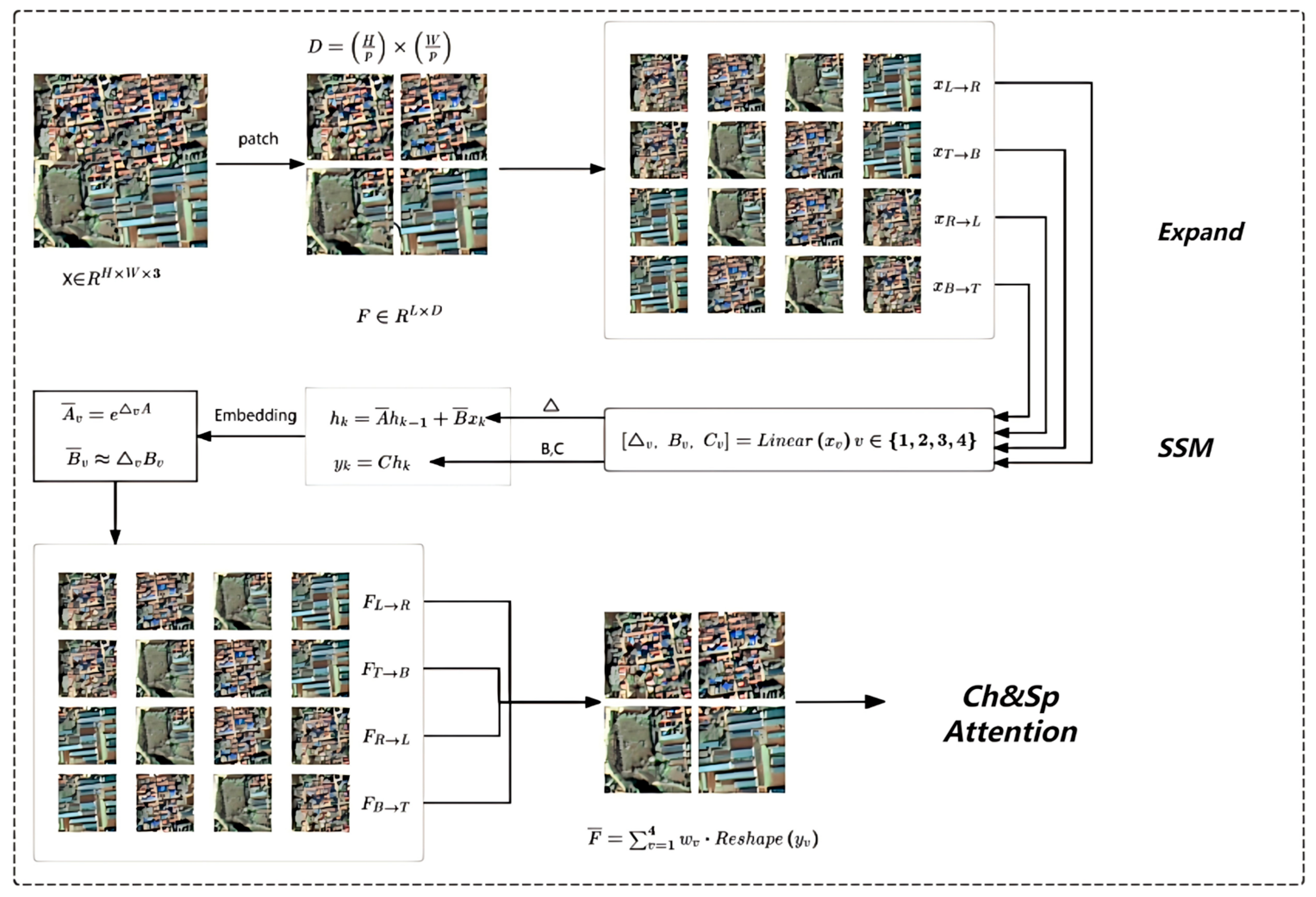

2.3.1. Auxiliary Branch Based on Mamba

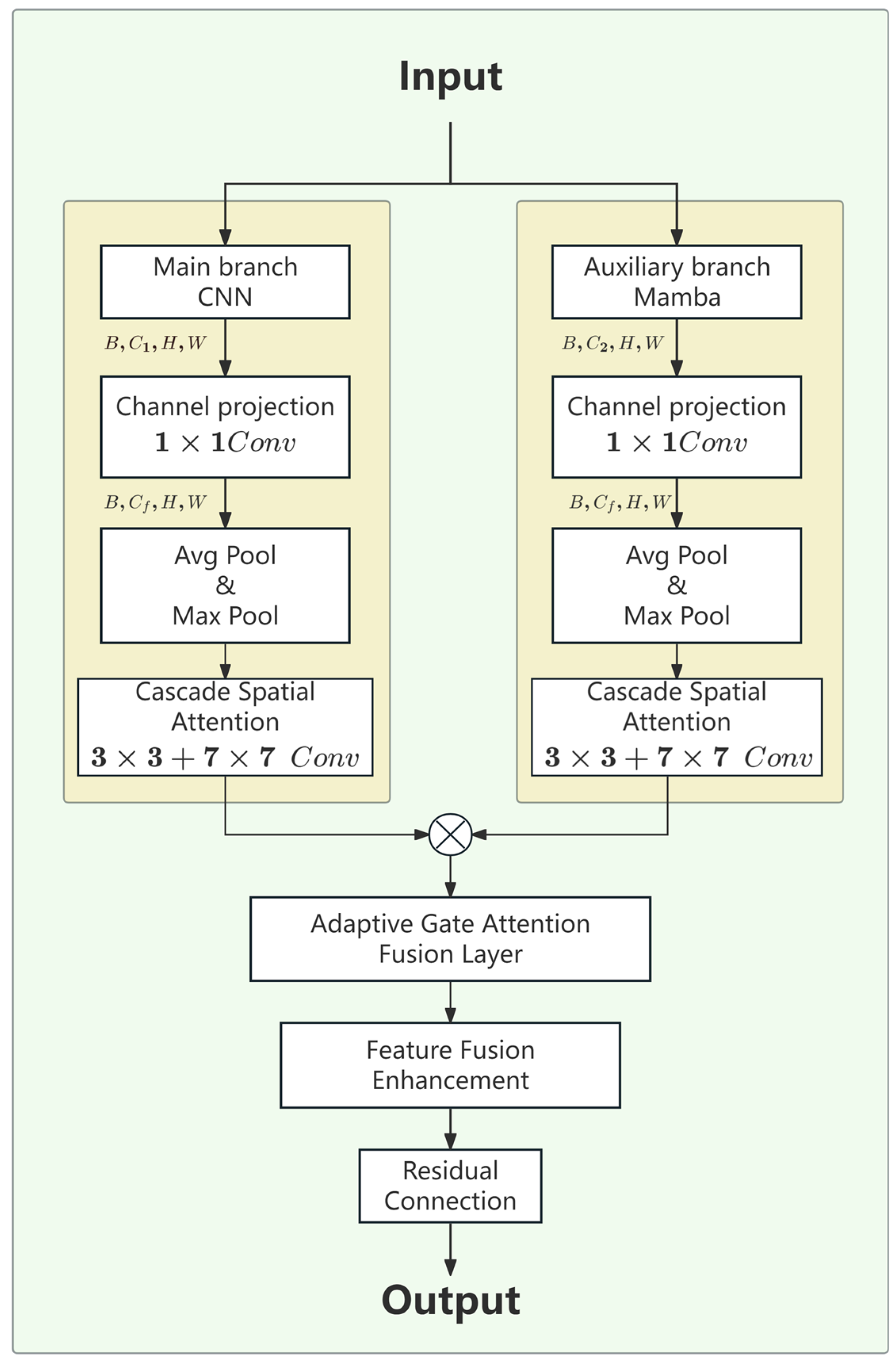

2.3.2. Multiscale Attention Feature Fusion Module

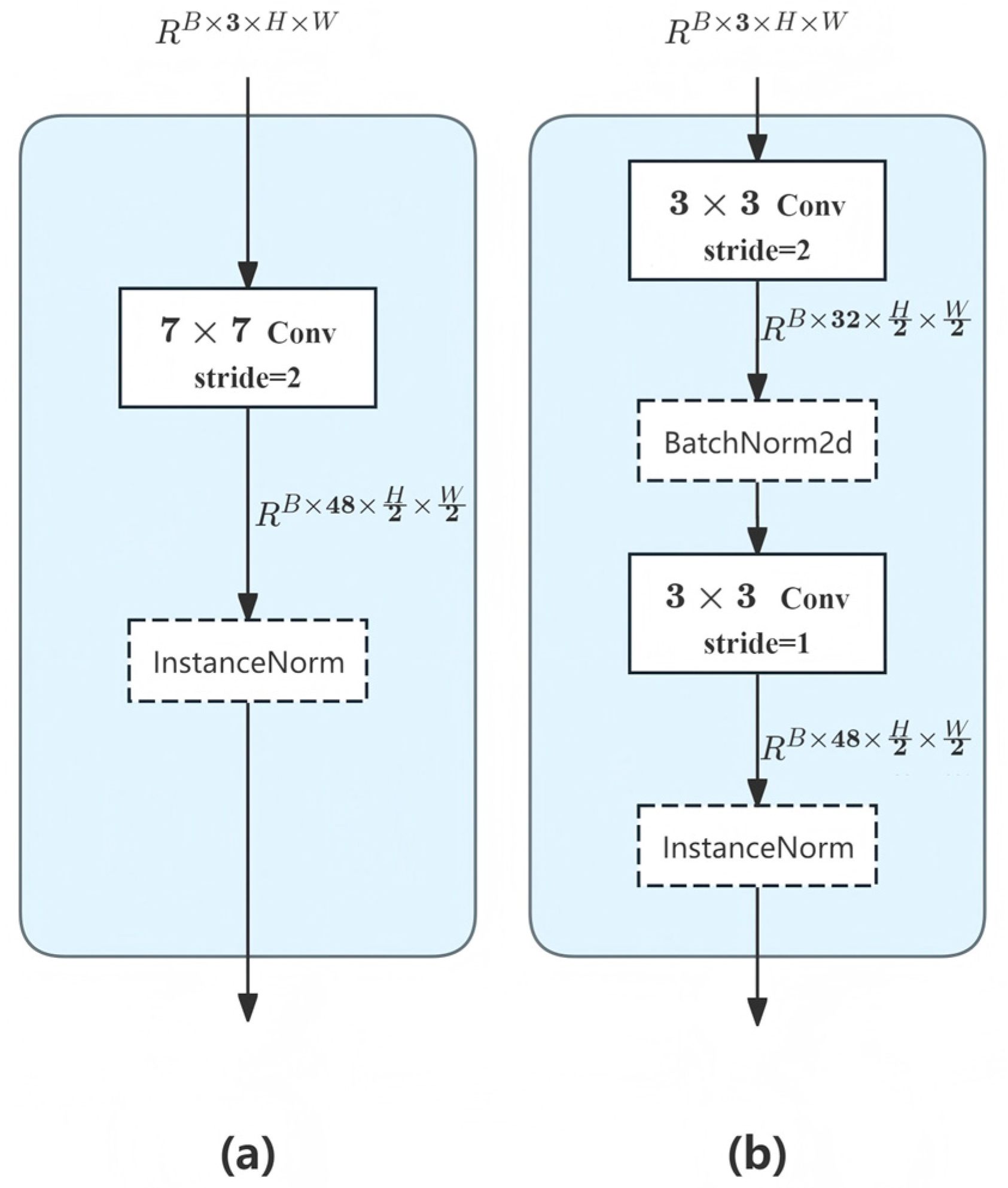

2.3.3. Model Enhancement

- (1) Loss Function

- (2) Optimizer

- (3) Predictive reprocessing

2.4. Parameter

3. Feature Extraction Process and Implementation

3.1. Dataset Labeling

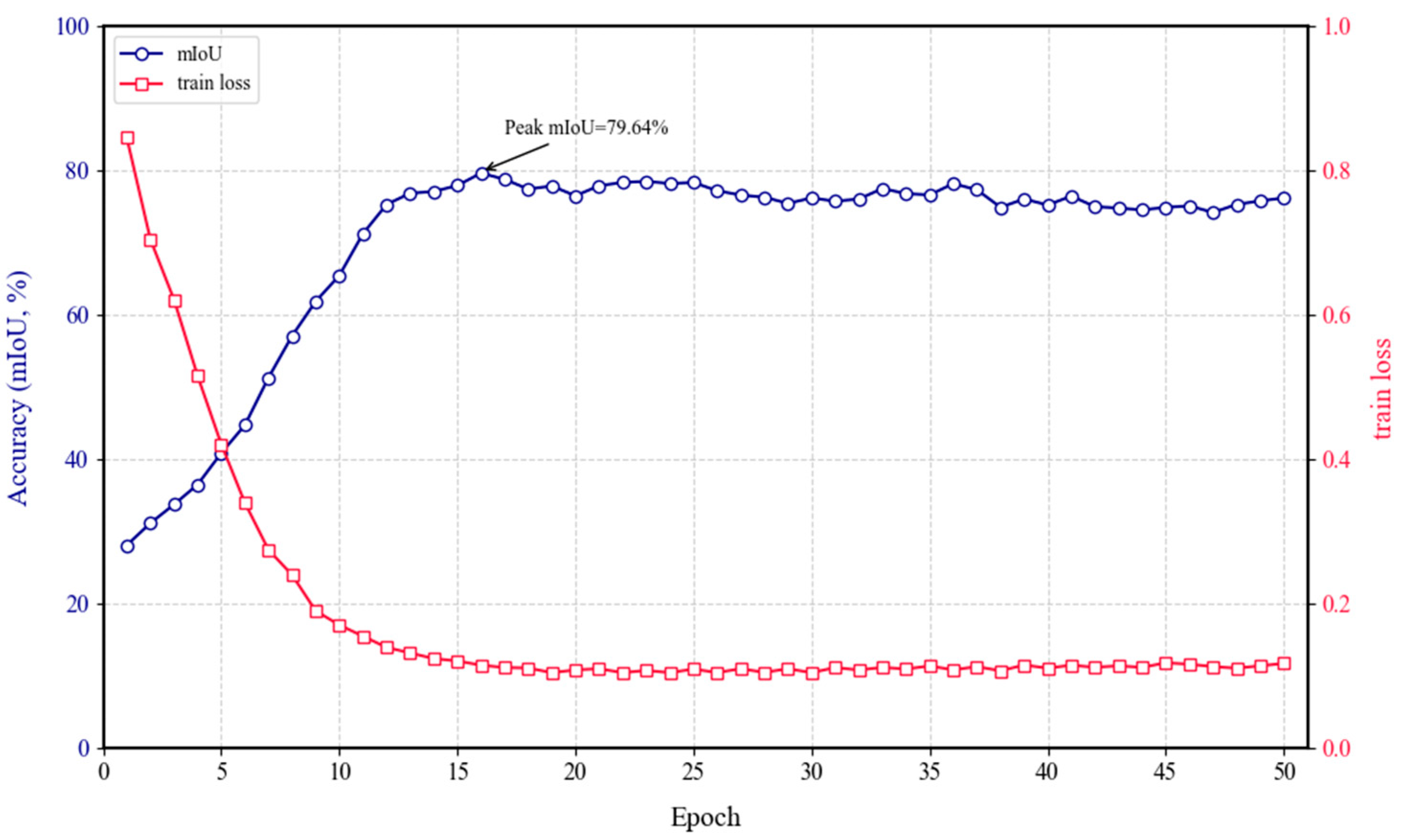

3.2. Model Performance Evaluation

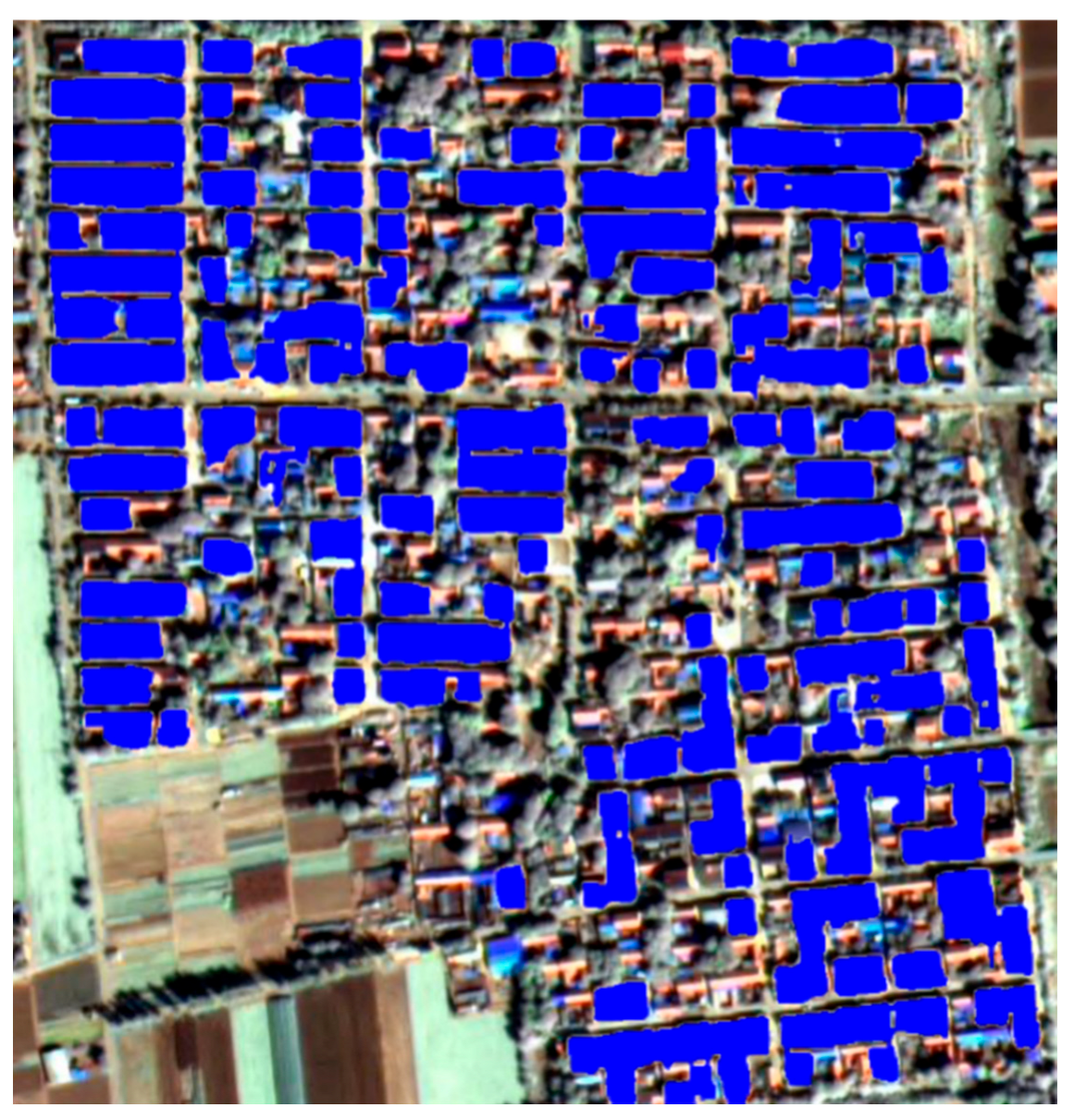

3.3. Realization of Results

4. Discussion

4.1. Comparison of Base Algorithm Accuracy

4.2. Local Visualization Analysis

4.3. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, C.; Xu, M. Characteristics and Influencing Factors on the Hollowing of Traditional Villages—Taking 2645 Villages from the Chinese Traditional Village Catalogue (Batch 5) as an Example. Int. J. Environ. Res. Public Health 2021, 18, 12759.

2. Smith, G. The Hollow State: Rural Governance in China. China Q. 2010, 203, 601–618.

3. Liu, Y.; Wang, A.; Hou, J.; Chen, X.; Xia, J. Comprehensive Evaluation of Rural Courtyard Utilization Efficiency: A Case Study in Shandong Province, Eastern China. J. Mt. Sci. 2020, 17, 2280–2295.

4. Wen, Q.; Li, J.; Ding, J.; Wang, J. Evolutionary Process and Mechanism of Population Hollowing out in Rural Villages in the Farming-Pastoral Ecotone of Northern China: A Case Study of Yanchi County, Ningxia. Land Use Policy 2023, 125, 106506.

5. Liu, Y.-S.; Liu, Y. Progress and Prospect on the Study of Rural Hollowing in China. Geogr. Res. 2010, 29, 35–42.

6. Carr, P.J.; Kefalas, M.J. Hollowing Out the Middle: The Rural Brain Drain and What It Means for America; Beacon Press: Boston, MA, USA, 2009; ISBN 978-0-8070-4239-7.

7. Guo, B.; Bian, Y.; Pei, L.; Zhu, X.; Zhang, D.; Zhang, W.; Guo, X.; Chen, Q. Identifying Population Hollowing Out Regions and Their Dynamic Characteristics across Central China. Sustainability 2022, 14, 9815.

8. Liu, Y.; Liu, Y.; Chen, Y.; Long, H. The Process and Driving Forces of Rural Hollowing in China under Rapid Urbanization. J. Geogr. Sci. 2010, 20, 876–888.

9. Sun, H.; Liu, Y.; Xu, K. Hollow Villages and Rural Restructuring in Major Rural Regions of China: A Case Study of Yucheng City, Shandong Province. Chin. Geogr. Sci. 2011, 21, 354–363.

10. Chen, Y.; Wang, Y.; Xiong, S.; Lu, X.; Zhu, X.X.; Mou, L. Integrating Detailed Features and Global Contexts for Semantic Segmentation in Ultra-High-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

11. Zeng, Q.; Zhou, J.; Tao, J.; Chen, L.; Niu, X.; Zhang, Y. Multiscale Global Context Network for Semantic Segmentation of High-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

12. Wu, Z.; Li, J.; Wang, Y.; Hu, Z.; Molinier, M. Self-Attentive Generative Adversarial Network for Cloud Detection in High Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1792–1796.

13. Zang, N.; Cao, Y.; Wang, Y.; Huang, B.; Zhang, L.; Mathiopoulos, P.T. Land-Use Mapping for High-Spatial Resolution Remote Sensing Image Via Deep Learning: A Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5372–5391.

14. Li, Z. Research on Key Technology for Acquiring Building Information of Hollow Village Based on UAV High Resolution Imagery. Ph.D. Thesis, Southwest Jiaotong University, Chengdu, China, 2018. (In Chinese).

15. Fan, R.; Wang, L.; Feng, R.; Zhu, Y. Attention Based Residual Network for High-Resolution Remote Sensing Imagery Scene Classification. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: New York, NY, USA, 2019; pp. 1346–1349.

16. Chiu, W.-T.; Lin, C.-H.; Jhu, C.-L.; Lin, C.; Chen, Y.-C.; Huang, M.-J.; Liu, W.-M. Semantic Segmentation of Lotus Leaves in UAV Aerial Images via U-Net and DeepLab-Based Networks. In Proceedings of the 2020 International Computer Symposium (ICS), Tainan, Taiwan, 17–19 December 2020; IEEE: New York, NY, USA, 2020; pp. 535–540.

17. Qian, Z.; Cao, Y.; Shi, Z.; Qiu, L.; Shi, C. A Semantic Segmentation Method for Remote Sensing Images Based on Deeplab V3. In Proceedings of the 2021 2nd International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE), Zhuhai, China, 24–26 September 2021; IEEE: New York, NY, USA, 2021; pp. 396–400.

18. Zhang, R.; Zhang, Q.; Zhang, G. LSRFormer: Efficient Transformer Supply Convolutional Neural Networks with Global Information for Aerial Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

19. Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and Multiscale Transformer Fusion Network for Remote-Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

20. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

21. Wang, T.; Chen, J.; Liu, L.; Guo, L. A Review: How Deep Learning Technology Impacts the Evaluation of Traditional Village Landscapes. Buildings 2023, 13, 525.

22. Zhao, H.; Li, X.; Gu, Y.; Deng, W.; Huang, Y.; Zhou, S. Integrating Time-Series Nighttime Light Data With Static Remote Sensing and Village View Images for Hollow Villages Identification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 9151–9165.

23. Meng, C.; Song, Y.; Ji, J.; Jia, Z.; Zhou, Z.; Gao, P.; Liu, S. Automatic classification of rural building characteristics using deep learning methods on oblique photography. Build. Simul. 2022, 15, 1161–1174.

24. Wang, M.; Xu, W.; Cao, G.; Liu, T. Identification of Rural Courtyards’ Utilization Status Using Deep Learning and Machine Learning Methods on Unmanned Aerial Vehicle Images in North China. Build. Simul. 2024, 17, 799–818.

25. Wang, Y.; Cao, L.; Deng, H. MFMamba: A Mamba-Based Multi-Modal Fusion Network for Semantic Segmentation of Remote Sensing Images. Sensors 2024, 24, 7266.

26. Gao, F.; Jin, X.; Zhou, X.; Dong, J.; Du, Q. MSFMamba: Multiscale Feature Fusion State Space Model for Multisource Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–16.

27. Li, Y.; Li, D.; Xie, W.; Ma, J.; He, S.; Fang, L. Semi-Mamba: Mamba-Driven Semi-Supervised Multimodal Remote Sensing Feature Classification. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 9837–9849.

28. Zhan, Z.; Zhang, X.; Liu, Y.; Sun, X.; Pang, C.; Zhao, C. Vegetation Land Use/Land Cover Extraction From High-Resolution Satellite Images Based on Adaptive Context Inference. IEEE Access 2020, 8, 21036–21051.

29. Huang, X.; Liu, H.; Zhang, L. Spatiotemporal Detection and Analysis of Urban Villages in Mega City Regions of China Using High-Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3639–3657.

30. Li, Y.; Xu, W.; Chen, H.; Jiang, J.; Li, X. A Novel Framework Based on Mask R-CNN and Histogram Thresholding for Scalable Segmentation of New and Old Rural Buildings. Remote Sens. 2021, 13, 1070.

31. Liu, Y.; Long, H. Land use transitions and their dynamic mechanism: The case of the Huang-Huai-Hai Plain. J. Geogr. Sci. 2016, 26, 515–530.

32. Fu, Z.; Yang, Y.; Wang, L.; Zhu, X.; Lv, H.; Qiao, J. Geographical Types and Driving Mechanisms of Rural Hollowing-Out in the Yellow River Basin. Agriculture 2024, 14, 365.

33. Ma, X.; Zhang, X.; Pun, M.-O. RS3Mamba: Visual State Space Model for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

34. Chen, K.; Chen, B.; Liu, C.; Li, W.; Zou, Z.; Shi, Z. RSMamba: Remote Sensing Image Classification with State Space Model. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

35. Shawky, O.A.; Hagag, A.; El-Dahshan, E.-S.A.; Ismail, M.A. Remote Sensing Image Scene Classification Using CNN-MLP with Data Augmentation. Optik 2020, 221, 165356.

36. Li, B.; Guo, Y.; Yang, J.; Wang, L.; Wang, Y.; An, W. Gated Recurrent Multiattention Network for VHR Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5606113.

37. Llugsi, R.; El Yacoubi, S.; Fontaine, A.; Lupera, P. Comparison between Adam, AdaMax and AdamW Optimizers to Implement a Weather Forecast Based on Neural Networks for the Andean City of Quito. In Proceedings of the 2021 IEEE Fifth Ecuador Technical Chapters Meeting (ETCM), Cuenca, Ecuador, 12–15 October 2021.

38. Su, H.; Wang, Y.; Zhang, Z.; Dong, W. Characteristics and Influencing Factors of Traditional Village Distribution in China. Land 2022, 11, 1631.

39. Wang, D.; Zhu, Y.; Zhao, M.; Lv, Q. Multi-Dimensional Hollowing Characteristics of Traditional Villages and Its Influence Mechanism Based on the Micro-Scale: A Case Study of Dongcun Village in Suzhou, China. Land Use Policy 2021, 101, 105146.

40. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation; Springer: Berlin/Heidelberg, Germany, 2018; pp. 801–818.

41. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

42. Yu, M.; He, L.; Shen, Z.; Lv, M. STRD-Net: A Dual-Encoder Semantic Segmentation Network for Urban Green Space Extraction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

| Predicted Class\True Class | True Background | True Building | Row Sum |
|---|---|---|---|
| Predicted Background | 97.10 | 23.43 | 100.00 |
| Predicted Building | 2.90 | 76.57 | 100.00 |
| Column Sum | 100.00 | 100.00 | |
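The entries in this confusion matrix appear to be percentages normalized by true class, since each column sums to 100. Under that reading, the diagonal gives per-class recall (97.10% for background, 76.57% for building). The sketch below shows one way such a table could be produced from raw pixel counts; the counts are hypothetical values chosen only to reproduce the percentages above, and `column_normalize` is an illustrative helper, not the authors' code.

```python
def column_normalize(counts):
    """Convert raw counts (rows = predicted, columns = true) into
    percentages of each true-class column."""
    n_rows, n_cols = len(counts), len(counts[0])
    col_totals = [sum(counts[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [
        [100.0 * counts[r][c] / col_totals[c] for c in range(n_cols)]
        for r in range(n_rows)
    ]

# Hypothetical pixel counts (not from the paper):
# rows = predicted background / building, columns = true background / building.
raw_counts = [
    [9710, 2343],
    [290, 7657],
]

pct = column_normalize(raw_counts)
recall_background = pct[0][0]  # share of true background predicted as background
recall_building = pct[1][1]    # share of true building predicted as building

for row in pct:
    print(" ".join(f"{value:6.2f}" for value in row))
```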

| Method | Building (F1/IoU) | Background (F1/IoU) | mF1 | mIoU | FLOPs (G) |
|---|---|---|---|---|---|
| DeepLabv3 [40] | 0.6025/0.5551 | 0.9083/0.7991 | 0.7454 | 0.6771 | 70.5 |
| SwinT-UNet [20] | 0.6449/0.5421 | 0.9023/0.8243 | 0.7736 | 0.6832 | 5.8 |
| SwinT-UNet (150 epochs) | 0.7210/0.5636 | 0.9655/0.9332 | 0.8432 | 0.7484 | - |
| ConvLSR-Net [18] | 0.6653/0.5684 | 0.9164/0.8824 | 0.7909 | 0.7254 | 35.7047 |
| Transformer [41] | 0.6605/0.5102 | 0.8869/0.8478 | 0.7737 | 0.6784 | 6.21 |
| STRD-Net [42] | 0.6871/0.6048 | 0.9134/0.8914 | 0.8003 | 0.7481 | 1033.85 |
| RS3Mamba+ | 0.8131/0.7626 | 0.9772/0.9055 | 0.8815 | 0.7964 | 327.31 |
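Assuming each paired entry in the Building and Background columns is a per-class F1 score followed by a per-class IoU (an interpretation; the table itself does not label the pair), mF1 and mIoU can be read as unweighted means over the two classes. The short Python check below works through the SwinT-UNet [20] row as an example; `class_mean` is an illustrative helper name.

```python
def class_mean(building, background):
    """Unweighted mean of two per-class scores."""
    return (building + background) / 2

# SwinT-UNet [20] row, values copied from the table above.
f1_building, iou_building = 0.6449, 0.5421
f1_background, iou_background = 0.9023, 0.8243

m_f1 = round(class_mean(f1_building, f1_background), 4)
m_iou = round(class_mean(iou_building, iou_background), 4)

print(m_f1, m_iou)
```

For this row the computed means agree with the reported mF1 of 0.7736 and mIoU of 0.6832.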

| Model Name | Building IoU | Background IoU | mF1 | mIoU | Kappa |
|---|---|---|---|---|---|
| Backbone | 0.626 | 0.7646 | 0.7124 | 0.6953 | 0.6851 |
| Backbone + Mamba | 0.7251 | 0.8272 | 0.8692 | 0.7762 | 0.7739 |
| Backbone + Gated-Attention | 0.6797 | 0.7912 | 0.8125 | 0.7355 | 0.7251 |
| RS3Mamba+ (Ours) | 0.7626 | 0.9055 | 0.8815 | 0.7964 | 0.7889 |
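The metrics reported in this table (per-class IoU, F1, and Cohen's Kappa) are commonly derived from a binary confusion matrix. The following is a minimal Python sketch using the standard textbook definitions; the function name and the pixel counts are hypothetical, and the paper's exact evaluation code may differ.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard segmentation metrics for a building/background task.

    tp: building pixels predicted as building
    fp: background pixels predicted as building
    fn: building pixels predicted as background
    tn: background pixels predicted as background
    """
    total = tp + fp + fn + tn
    iou_building = tp / (tp + fp + fn)
    iou_background = tn / (tn + fp + fn)
    f1_building = 2 * tp / (2 * tp + fp + fn)
    f1_background = 2 * tn / (2 * tn + fp + fn)
    # Cohen's Kappa: observed agreement corrected for chance agreement.
    observed = (tp + tn) / total
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (observed - expected) / (1 - expected)
    return {
        "building_iou": iou_building,
        "background_iou": iou_background,
        "mF1": (f1_building + f1_background) / 2,
        "mIoU": (iou_building + iou_background) / 2,
        "kappa": kappa,
    }

# Hypothetical pixel counts for illustration only (not from the paper).
metrics = binary_metrics(tp=80, fp=10, fn=20, tn=890)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because Kappa discounts agreement expected by chance, it is typically lower than overall pixel accuracy when the background class dominates, which is why it is a useful companion to mIoU for imbalanced building extraction.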

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fang, X.; Liu, Z.; Xie, S.; Ge, Y. Semantic Segmentation of High-Resolution Remote Sensing Images Based on RS3Mamba: An Investigation of the Extraction Algorithm for Rural Compound Utilization Status. Remote Sens. 2025, 17, 3443. https://doi.org/10.3390/rs17203443
