An Improved Instance Segmentation Approach for Solid Waste Retrieval with Precise Edge from UAV Images

Abstract

Highlights

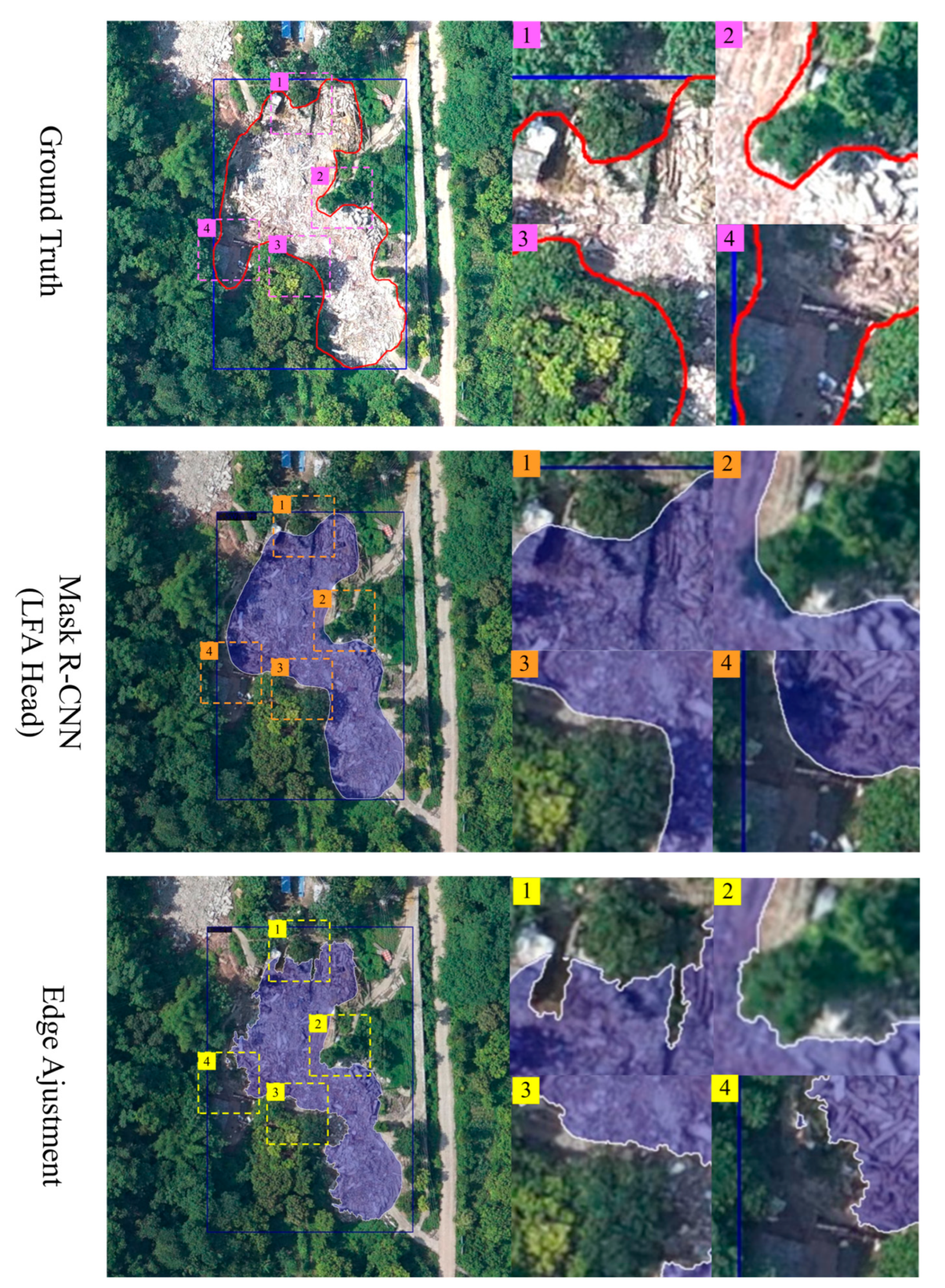

- Proposes WMNet-SW, which fuses an improved Mask R-CNN (with anchor/RoI optimization and a Layer Feature Aggregation mask head) with the watershed transform to retrieve solid waste (SW) with precise edges from high-resolution UAV images.

- Outperforms the baseline deep learning models, captures fine edge details, and even mitigates limitations in the training ground truth (GT).

- Provides a practical solution for retrieving SW with precise edges from UAV imagery, contributing to the protection of the regional environment and ecosystem health.

1. Introduction

- (1)

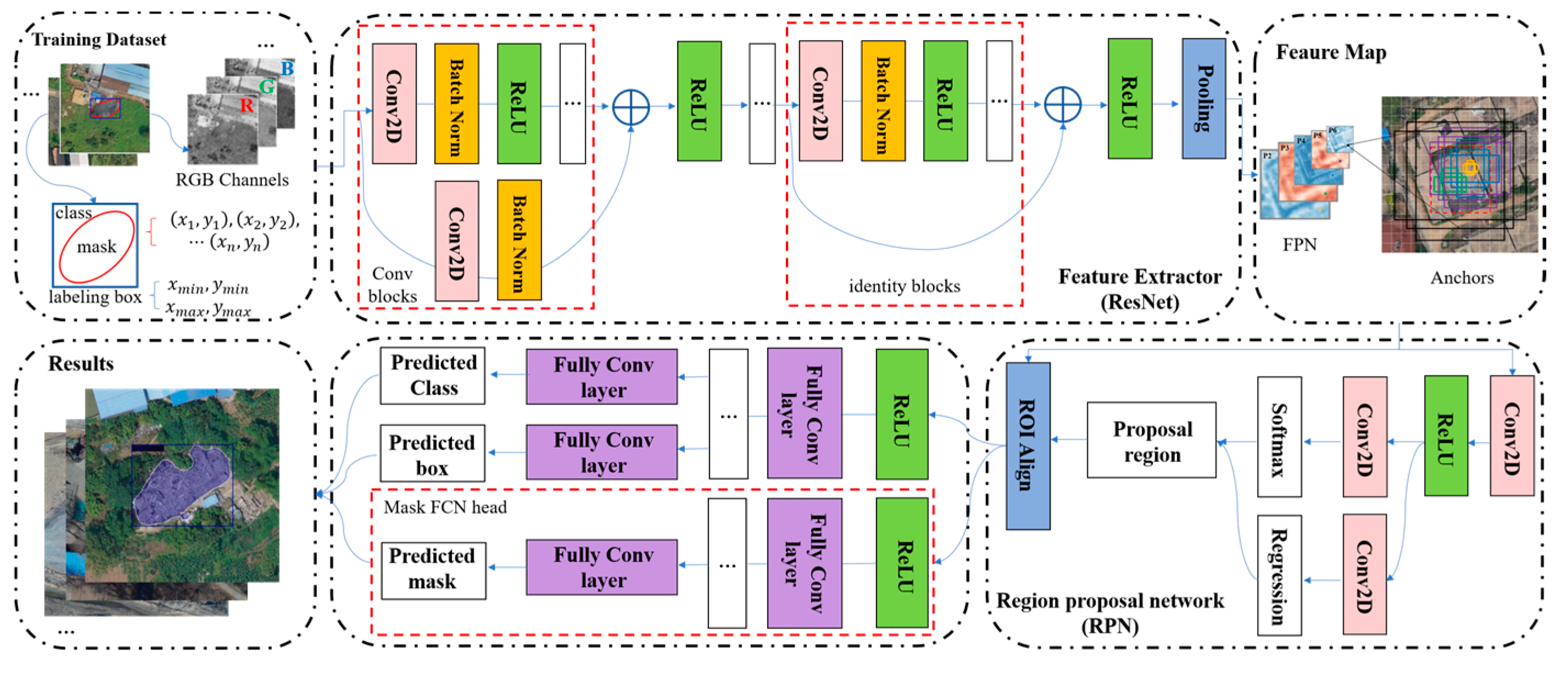

- A hyperparameter optimization strategy that enhances the adaptability of Mask R-CNN to specialized target detection tasks is proposed. Compared to common object detection tasks, the model with parameters optimized for specialized targets demonstrates superior detection accuracy and enhanced robustness.

- (2)

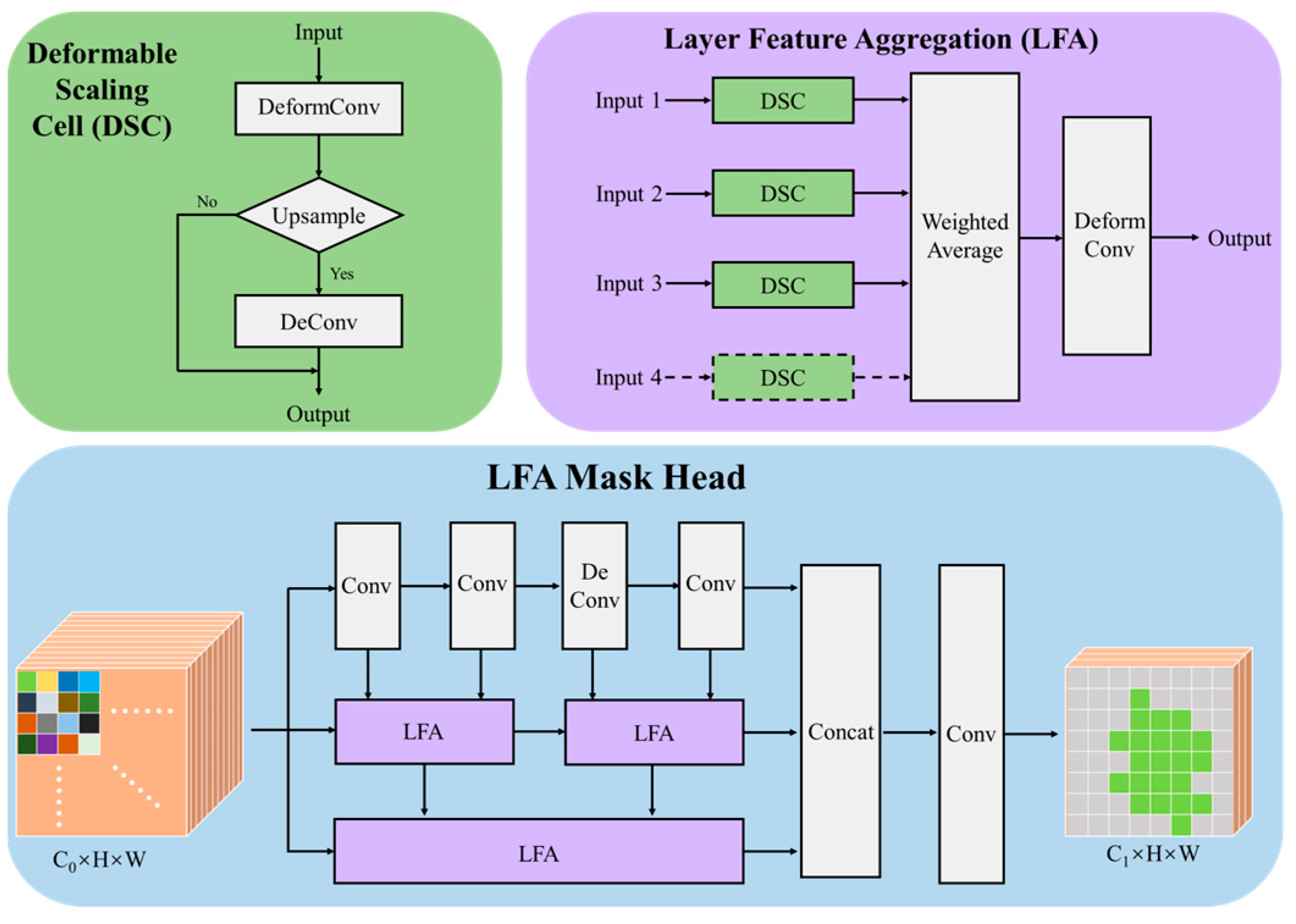

- Two novel and plug-and-play modules based on multi-scale feature fusion are proposed: Deformable Scaling Cell (DSC) and Layer Feature Aggregation (LFA), which together form an innovative LFA mask head to better extract solid waste samples in complex geographical environments. In particular, the new mask head simultaneously extracts coarse-to-fine multi-scale features and enables cross-scale feature synthesis.

- (3)

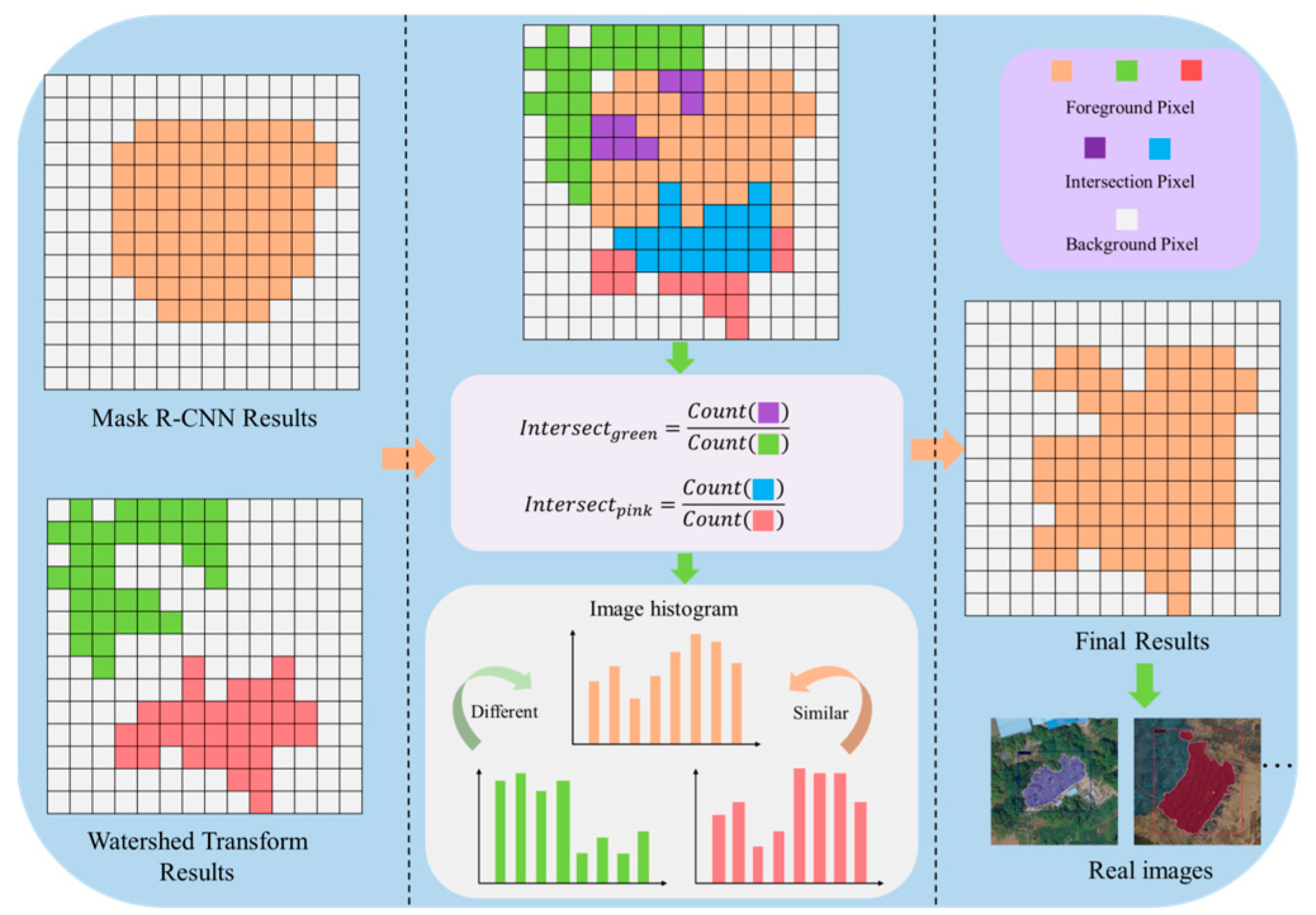

- A precise edge adjustment module based on overlay analysis and image histogram analysis is proposed, which employs spatial coincidence and spectral consistency metrics to integrate deep learning and watershed outputs, thereby refining the edges while preventing fragmentation and over-sharpening of edges.
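One way to picture the precise edge adjustment idea is the minimal sketch below, which is not the authors' implementation. It assumes the watershed segments are already available as an integer label image, measures spatial coincidence as the fraction of each segment covered by the deep learning mask, and measures spectral consistency as a histogram intersection on a single grayscale band. The function names, both thresholds, and the 16-bin histogram are hypothetical choices made for illustration only.

```python
import numpy as np


def hist_intersection(a, b, bins=16):
    """Histogram intersection of two intensity samples in [0, 255].

    Returns a value in [0, 1]; 1.0 means identical normalized histograms.
    """
    ha, _ = np.histogram(a, bins=bins, range=(0, 256))
    hb, _ = np.histogram(b, bins=bins, range=(0, 256))
    ha = ha / max(ha.sum(), 1)
    hb = hb / max(hb.sum(), 1)
    return float(np.minimum(ha, hb).sum())


def refine_mask(gray, dl_mask, ws_labels, min_overlap=0.5, min_similarity=0.5):
    """Snap a deep learning mask to watershed segment boundaries.

    gray      : 2-D grayscale image (assumed single band, 0..255)
    dl_mask   : 2-D binary mask predicted by the network
    ws_labels : 2-D integer label image from a watershed transform
                (label 0 is treated as boundary/background by assumption)
    The thresholds are illustrative, not values from the paper.
    """
    dl_mask = dl_mask.astype(bool)
    refined = np.zeros_like(dl_mask)
    if not dl_mask.any():
        return refined
    reference = gray[dl_mask]  # spectral reference: pixels inside the mask
    for label in np.unique(ws_labels):
        if label == 0:
            continue
        segment = ws_labels == label
        # Spatial coincidence: share of the segment covered by the mask.
        overlap = (segment & dl_mask).sum() / segment.sum()
        if overlap < min_overlap:
            continue
        # Spectral consistency: segment histogram vs. mask histogram.
        if hist_intersection(gray[segment], reference) >= min_similarity:
            refined |= segment
    return refined


# Toy example: a bright object in columns 0-2, a mask that overshoots to column 3.
gray = np.full((6, 6), 50, dtype=np.uint8)
gray[:, :3] = 200
dl_mask = np.zeros((6, 6), dtype=bool)
dl_mask[:, :4] = True
ws_labels = np.ones((6, 6), dtype=int)
ws_labels[:, 3:] = 2
refined = refine_mask(gray, dl_mask, ws_labels)
```

In this toy case the refined mask keeps the first watershed segment and drops the overshooting column, because the mask covers only a third of the second segment. Accepting or rejecting whole segments is what keeps the result from fragmenting.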

2. Materials and Methods

2.1. Data Resource and Processing

2.2. WMNet-SW for Automatic Extraction of Solid Waste

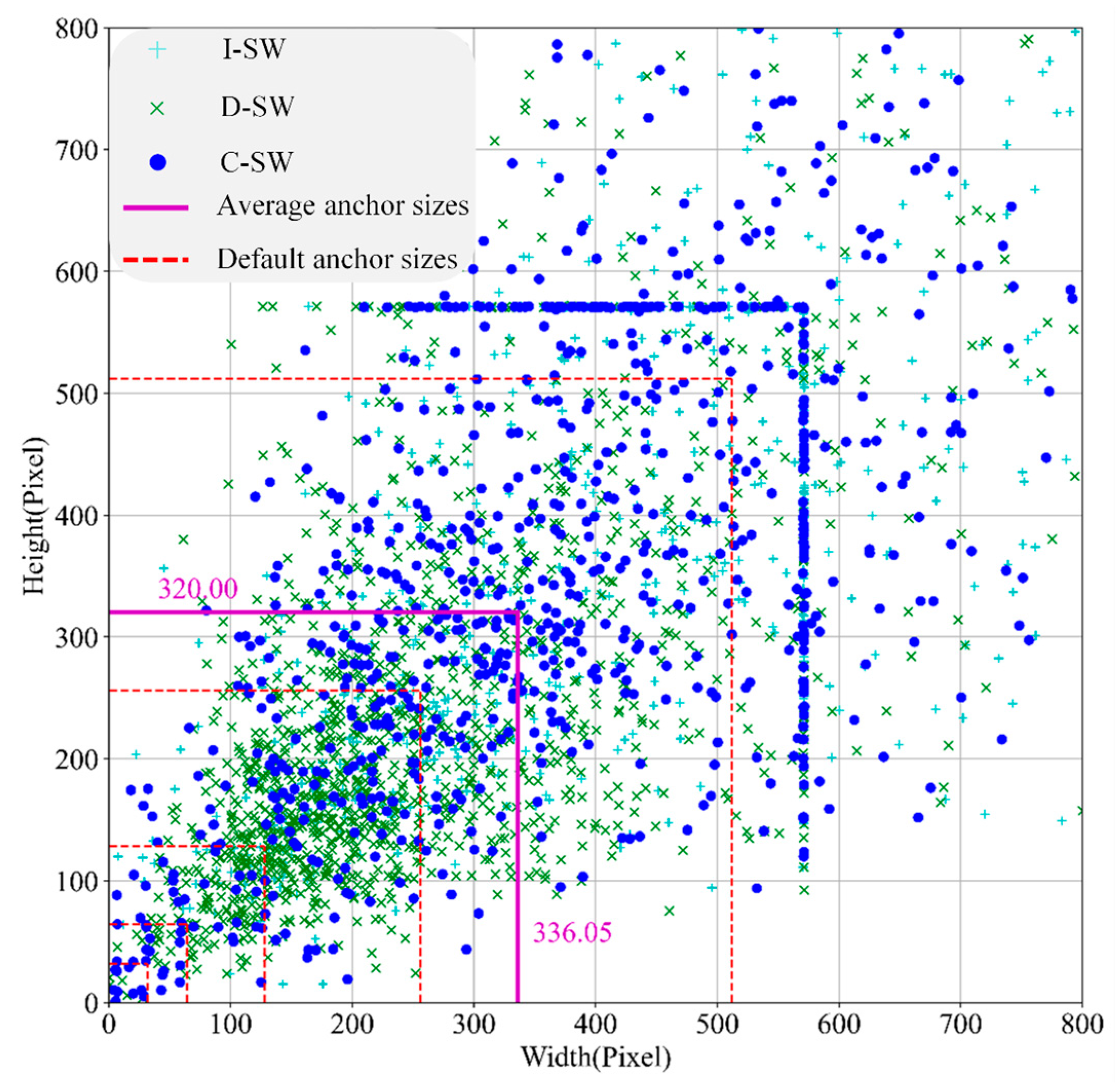

2.2.1. Mask R-CNN Optimization

2.2.2. Layer Feature Aggregation Mask Head

2.2.3. Precise Edge Adjustment

3. Results

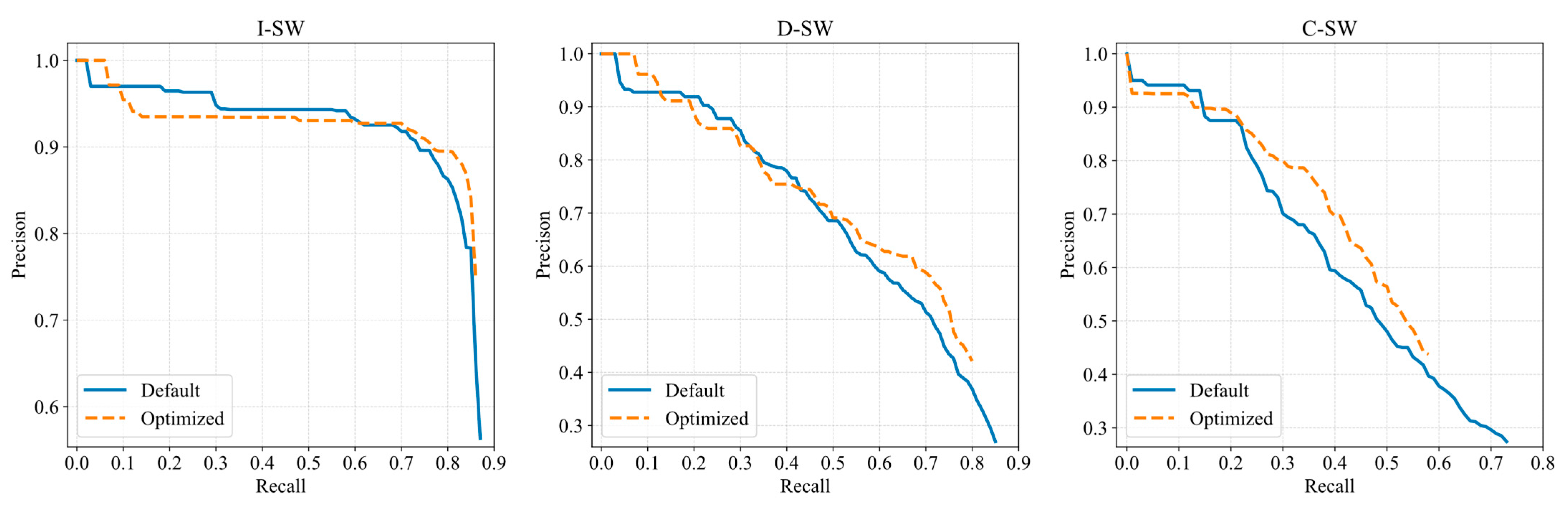

3.1. Mask R-CNN Optimization Results

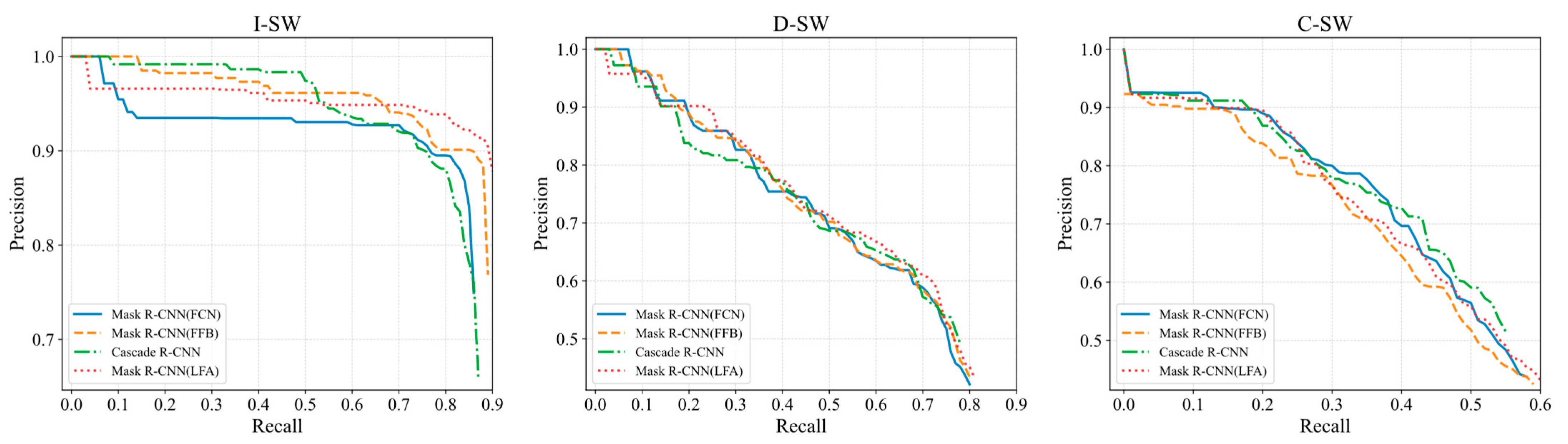

3.2. LFA Mask Head Results

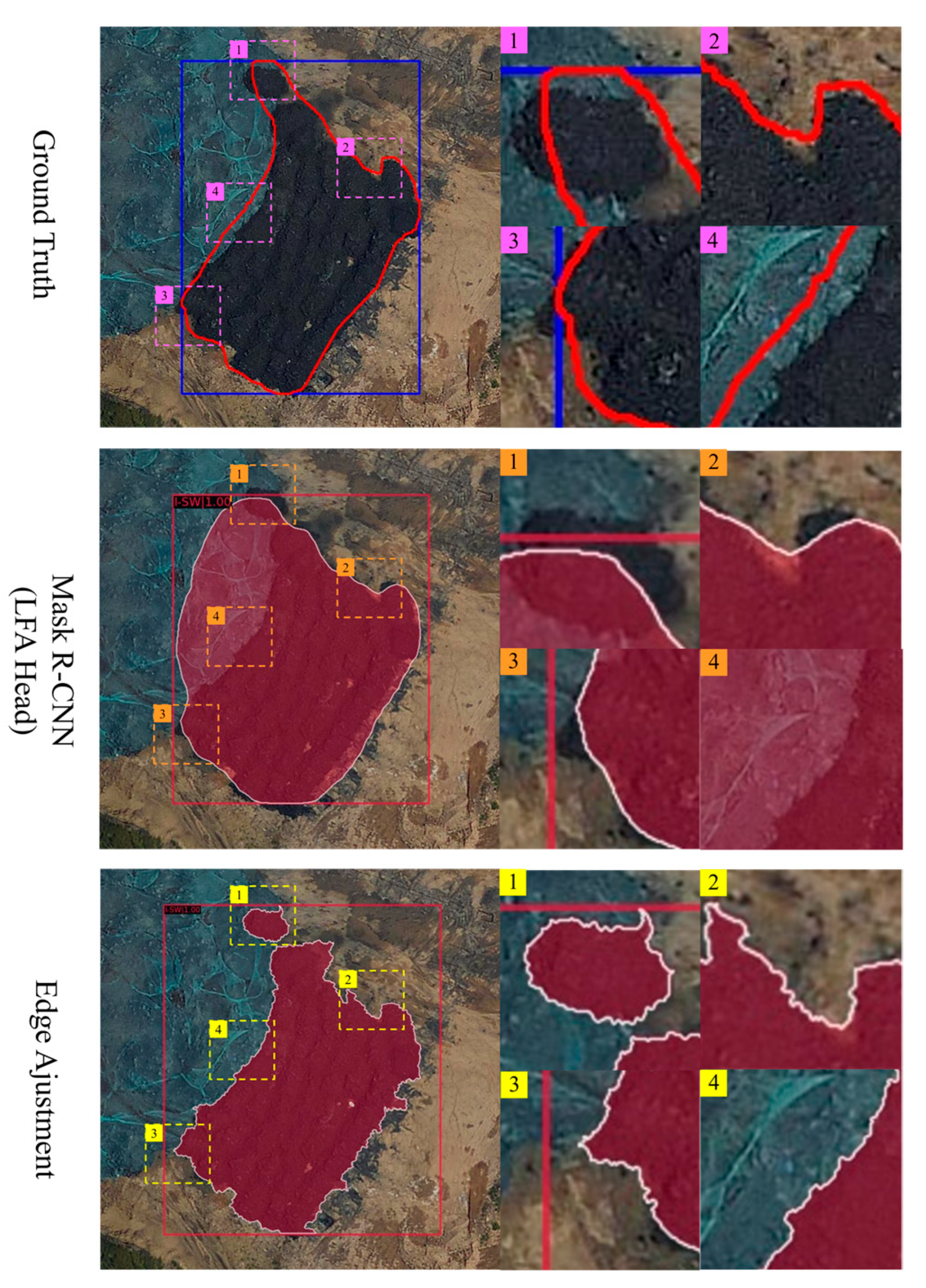

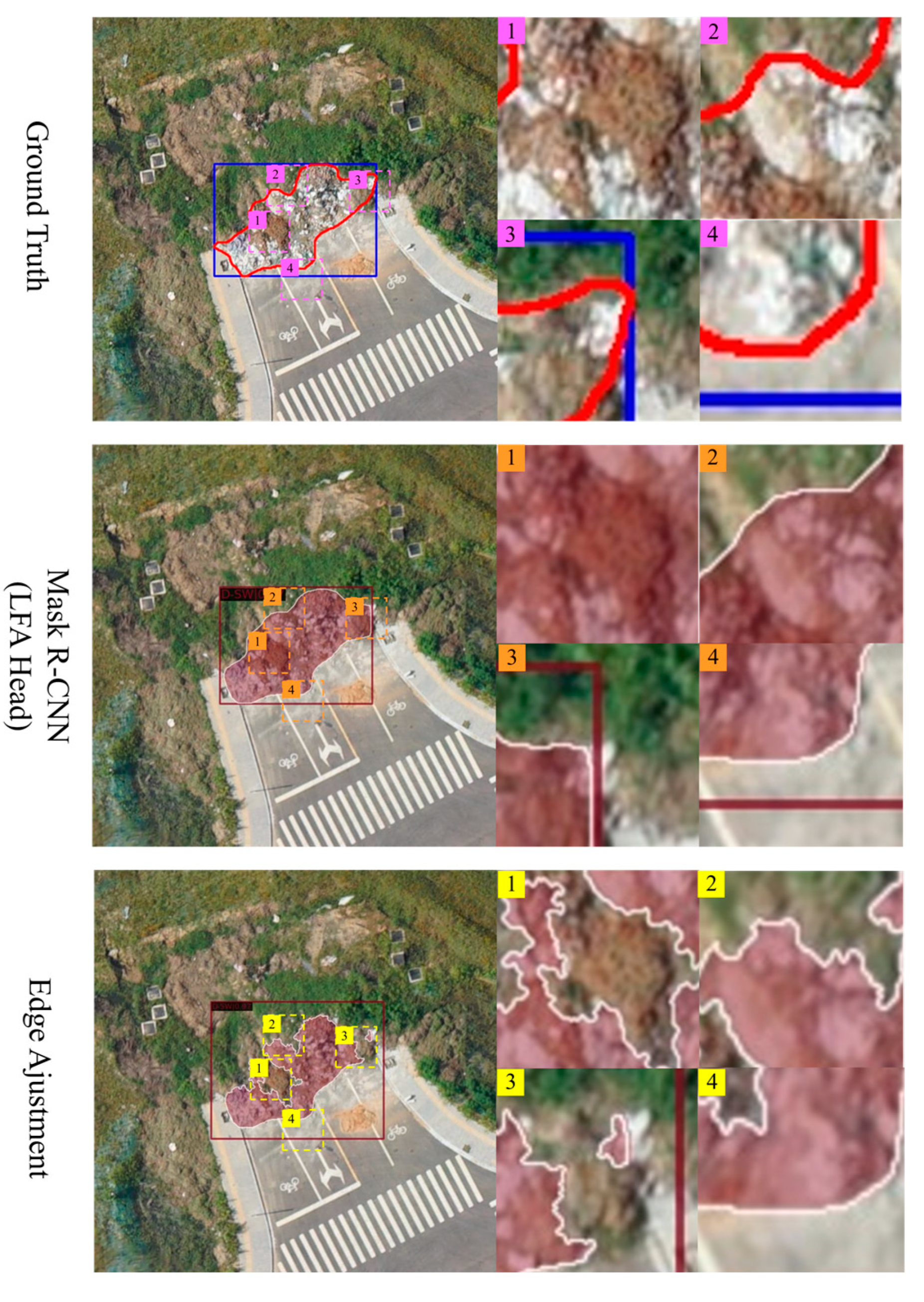

3.3. Evaluation of Precise Edge Adjustment

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Type | Images | Instances | Train Instances | Validation Instances |
|---|---|---|---|---|
| I-SW | 1194 | 1269 | 908 | 361 |
| D-SW | 610 | 1467 | 1039 | 428 |
| C-SW | 1815 | 1642 | 1098 | 544 |

| Model | Params (Aspects: [1:1, 1:2, 2:1]) | Type | Precision (%) | Recall (%) | F1 | AP (%) |
|---|---|---|---|---|---|---|
| Default | Scales: [32, 64, 128, 256, 512]; RoIs: 1000 | I-SW | 56.35 | 87.81 | 0.68 | 81.00 |
| | | D-SW | 26.96 | 85.98 | 0.41 | 60.99 |
| | | C-SW | 27.43 | 73.16 | 0.40 | 47.13 |
| | | Avg | 36.91 | 82.32 | 0.50 | 63.04 |
| Optimized | Scales: [48, 96, 192, 384, 768]; RoIs: 900 | I-SW | 74.94 | 86.70 | 0.80 | 80.24 |
| | | D-SW | 42.41 | 80.37 | 0.55 | 60.92 |
| | | C-SW | 43.77 | 58.09 | 0.50 | 44.89 |
| | | Avg | 53.61 | 75.06 | 0.62 | 62.02 |
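To make the two anchor settings in the table concrete, the sketch below expands each list of scales into anchor widths and heights for the three aspect ratios. It assumes the usual convention that an anchor of scale s has area s² and that a ratio is width divided by height; the table does not state which convention the authors use, and `anchor_shapes` is a hypothetical helper, not part of any Mask R-CNN library.

```python
import numpy as np


def anchor_shapes(scales, ratios):
    """Return an array of (width, height) pairs, one per (scale, ratio).

    Assumption: area is kept at scale**2 and ratio = width / height.
    """
    shapes = []
    for s in scales:
        for r in ratios:
            w = s * np.sqrt(r)
            h = s / np.sqrt(r)
            shapes.append((w, h))
    return np.array(shapes)


RATIOS = [1.0, 0.5, 2.0]  # the 1:1, 1:2 and 2:1 aspects from the table
DEFAULT_SCALES = [32, 64, 128, 256, 512]
OPTIMIZED_SCALES = [48, 96, 192, 384, 768]

default_anchors = anchor_shapes(DEFAULT_SCALES, RATIOS)
optimized_anchors = anchor_shapes(OPTIMIZED_SCALES, RATIOS)

for (dw, dh), (ow, oh) in zip(default_anchors, optimized_anchors):
    print(f"default {dw:7.1f} x {dh:7.1f}  ->  optimized {ow:7.1f} x {oh:7.1f}")
```

Each optimized scale is 1.5 times its default counterpart, so under these assumptions every anchor grows by the same factor in both width and height while keeping its aspect ratio.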

| Model | Type | Precision (%) | Recall (%) | F1 | AP (%) |
|---|---|---|---|---|---|
| Mask R-CNN (FCN Head) | I-SW | 74.94 | 86.70 | 0.80 | 80.24 |
| | D-SW | 42.41 | 80.37 | 0.55 | 60.92 |
| | C-SW | 43.77 | 58.09 | 0.50 | 44.89 |
| | Avg | 53.61 | 75.06 | 0.62 | 62.02 |
| Mask R-CNN (FFB Head) | I-SW | 76.85 | 89.75 | 0.82 | 85.54 |
| | D-SW | 43.36 | 80.84 | 0.56 | 61.17 |
| | C-SW | 42.46 | 59.19 | 0.49 | 43.18 |
| | Avg | 54.22 | 76.59 | 0.63 | 63.3 |
| Cascade R-CNN | I-SW | 65.62 | 87.26 | 0.75 | 83.00 |
| | D-SW | 49.12 | 78.27 | 0.60 | 59.66 |
| | C-SW | 51.54 | 55.7 | 0.53 | 43.87 |
| | Avg | 55.43 | 73.74 | 0.63 | 62.18 |
| Mask R-CNN (LFA Head) | I-SW | 87.84 | 90.30 | 0.89 | 85.96 |
| | D-SW | 43.32 | 81.07 | 0.56 | 62.22 |
| | C-SW | 43.14 | 60.48 | 0.50 | 45.08 |
| | Avg | 58.10 | 77.29 | 0.65 | 64.42 |
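The F1 column can be related to the precision and recall columns with a short calculation. The sketch below assumes F1 is the standard harmonic mean of precision and recall, which the table does not state explicitly, and applies it to the three per-class rows of the LFA head; `f1_score` is a hypothetical helper written for this example.

```python
def f1_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, both given in percent."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    if p + r == 0:
        return 0.0
    return 2 * p * r / (p + r)


# (precision %, recall %) for Mask R-CNN with the LFA head, copied from the table.
lfa_rows = {
    "I-SW": (87.84, 90.30),
    "D-SW": (43.32, 81.07),
    "C-SW": (43.14, 60.48),
}

lfa_f1 = {name: round(f1_score(p, r), 2) for name, (p, r) in lfa_rows.items()}
print(lfa_f1)  # {'I-SW': 0.89, 'D-SW': 0.56, 'C-SW': 0.5}
```

Under this assumption the computed values agree with the tabulated F1 scores of 0.89, 0.56 and 0.50 for the LFA head.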

| Model | Type | Box AP | Box mAP50 | Box mAP75 | Box mAPM | Box mAPL | Seg AP | Seg mAP50 | Seg mAP75 | Seg mAPM | Seg mAPL | Training Time |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Mask R-CNN (FCN Head) | I-SW | 0.504 | 0.659 | 0.409 | 0.265 | 0.412 | 0.397 | 0.620 | 0.265 | 0.285 | 0.315 | 4.20 h |
| | D-SW | 0.364 | | | | | 0.284 | | | | | |
| | C-SW | 0.311 | | | | | 0.241 | | | | | |
| | Avg | 0.393 | | | | | 0.307 | | | | | |
| Mask R-CNN (FFB Head) | I-SW | 0.547 | 0.660 | 0.447 | 0.305 | 0.431 | 0.450 | 0.633 | 0.317 | 0.282 | 0.340 | 10.62 h |
| | D-SW | 0.376 | | | | | 0.294 | | | | | |
| | C-SW | 0.319 | | | | | 0.241 | | | | | |
| | Avg | 0.414 | | | | | 0.328 | | | | | |
| Cascade R-CNN | I-SW | 0.560 | 0.641 | 0.486 | 0.291 | 0.451 | 0.411 | 0.622 | 0.291 | 0.263 | 0.325 | 9.50 h |
| | D-SW | 0.387 | | | | | 0.282 | | | | | |
| | C-SW | 0.337 | | | | | 0.246 | | | | | |
| | Avg | 0.428 | | | | | 0.313 | | | | | |
| Mask R-CNN (LFA Head) | I-SW | 0.553 | 0.664 | 0.459 | 0.306 | 0.442 | 0.440 | 0.644 | 0.317 | 0.292 | 0.342 | 7.49 h |
| | D-SW | 0.386 | | | | | 0.303 | | | | | |
| | C-SW | 0.331 | | | | | 0.255 | | | | | |
| | Avg | 0.423 | | | | | 0.332 | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Y.; Chen, Z. An Improved Instance Segmentation Approach for Solid Waste Retrieval with Precise Edge from UAV Images. Remote Sens. 2025, 17, 3410. https://doi.org/10.3390/rs17203410
