SAM-Based Few-Shot Learning for Coastal Vegetation Segmentation in UAV Imagery via Cross-Matching and Self-Matching

Abstract

Highlights

- A few-shot semantic segmentation network that combines a meta-learner with a base learner is proposed.

- A few-shot semantic segmentation network for coastal zone images is proposed, combining multi-layer similarity comparison with SAM-based refinement.

- A base learner is added and lexical semantic information is introduced to improve the meta-learner. Combining the two branches yields more accurate segmentation results.

- A cross-matching module and a self-matching module are introduced, and the SAM large vision model is used to refine the segmentation results, yielding predictions with smoother edges and clearer contours.


1. Introduction

- (1) Proposing the core method: A novel few-shot semantic segmentation (FSS) method tailored specifically to coastal region analysis is introduced, filling the gap in targeted research on few-shot segmentation for this scenario.

- (2) Designing a dedicated network: An FSS network suited to coastal scenarios is constructed. Its core advantage lies in integrating two mechanisms, cross-matching and self-matching, to adapt to the complex geographical and semantic features of coastal zones.

- (3) Verifying performance and value: Experimental verification shows that the method outperforms existing methods on coastal-related tasks and provides an efficient, implementable solution for large-scale coastal monitoring.
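
The outline above names cross-matching and self-matching without giving their internals, so the following is only a generic illustration of the idea and not the authors' modules. It assumes dense feature maps of shape `(C, H, W)`, cosine similarity as the matching score, and hypothetical helper names (`cross_matching`, `self_matching`): cross-matching compares query pixels against masked support pixels, while self-matching reuses the query's own confident pixels as a pseudo-support.

```python
import numpy as np

def pairwise_cosine(a: np.ndarray, b: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Cosine similarity between rows of a (N, C) and rows of b (M, C) -> (N, M)."""
    a_n = a / (np.linalg.norm(a, axis=1, keepdims=True) + eps)
    b_n = b / (np.linalg.norm(b, axis=1, keepdims=True) + eps)
    return a_n @ b_n.T

def cross_matching(query_feat: np.ndarray, support_feat: np.ndarray,
                   support_mask: np.ndarray) -> np.ndarray:
    """For each query pixel, the best cosine match among foreground support pixels.

    query_feat, support_feat: (C, H, W); support_mask: (H, W) boolean.
    Returns an (H, W) similarity map (all zeros if the mask is empty).
    """
    c, h, w = query_feat.shape
    q = query_feat.reshape(c, -1).T                               # (H*W, C)
    s = support_feat.reshape(c, -1).T[support_mask.reshape(-1)]   # (N_fg, C)
    if s.shape[0] == 0:
        return np.zeros((h, w))
    return pairwise_cosine(q, s).max(axis=1).reshape(h, w)

def self_matching(query_feat: np.ndarray, coarse_mask: np.ndarray) -> np.ndarray:
    """Match the query against its own confident foreground pixels (assumed scheme)."""
    return cross_matching(query_feat, query_feat, coarse_mask)
```

In this sketch a coarse mask could be obtained by thresholding the cross-matching map before calling `self_matching`; the threshold and the way the two maps are fused are left open because the outline does not state them.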

2. Related Work

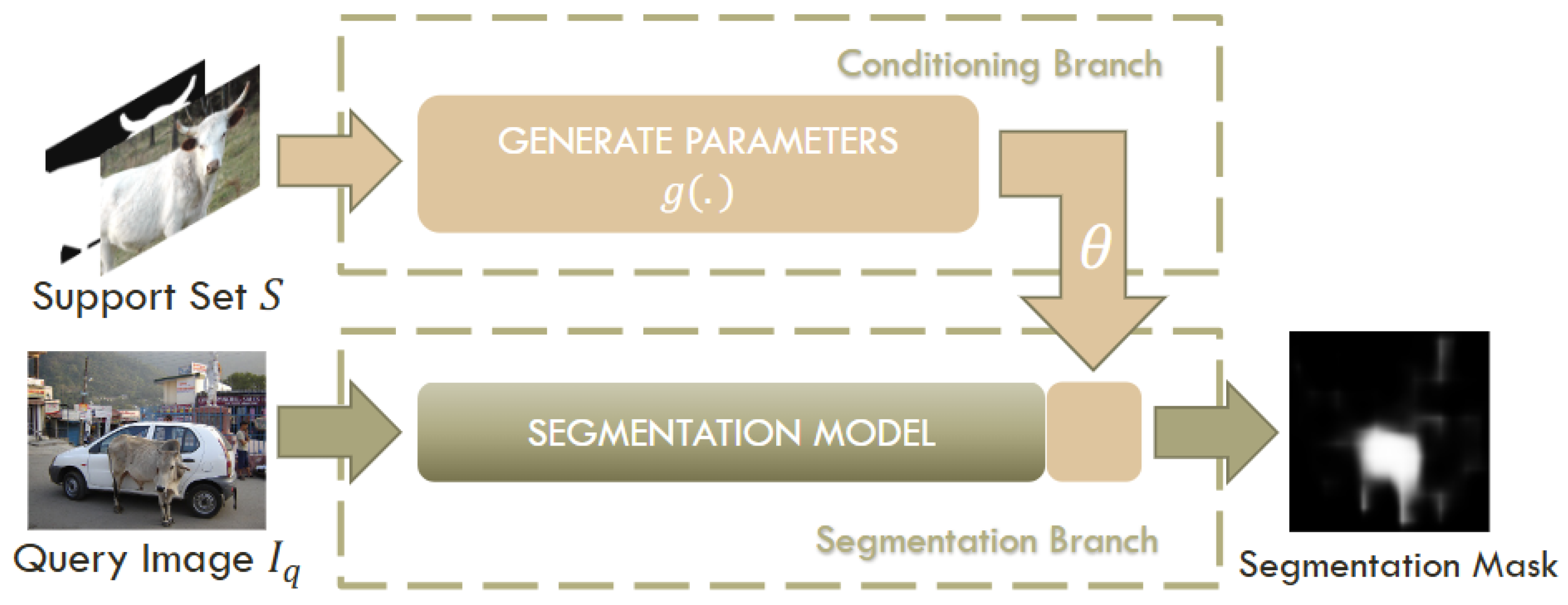

2.1. Few-Shot Learning

2.2. Double-Branch Structure of Methods

2.3. Prototype-Based Methods

2.4. Matching-Based Methods

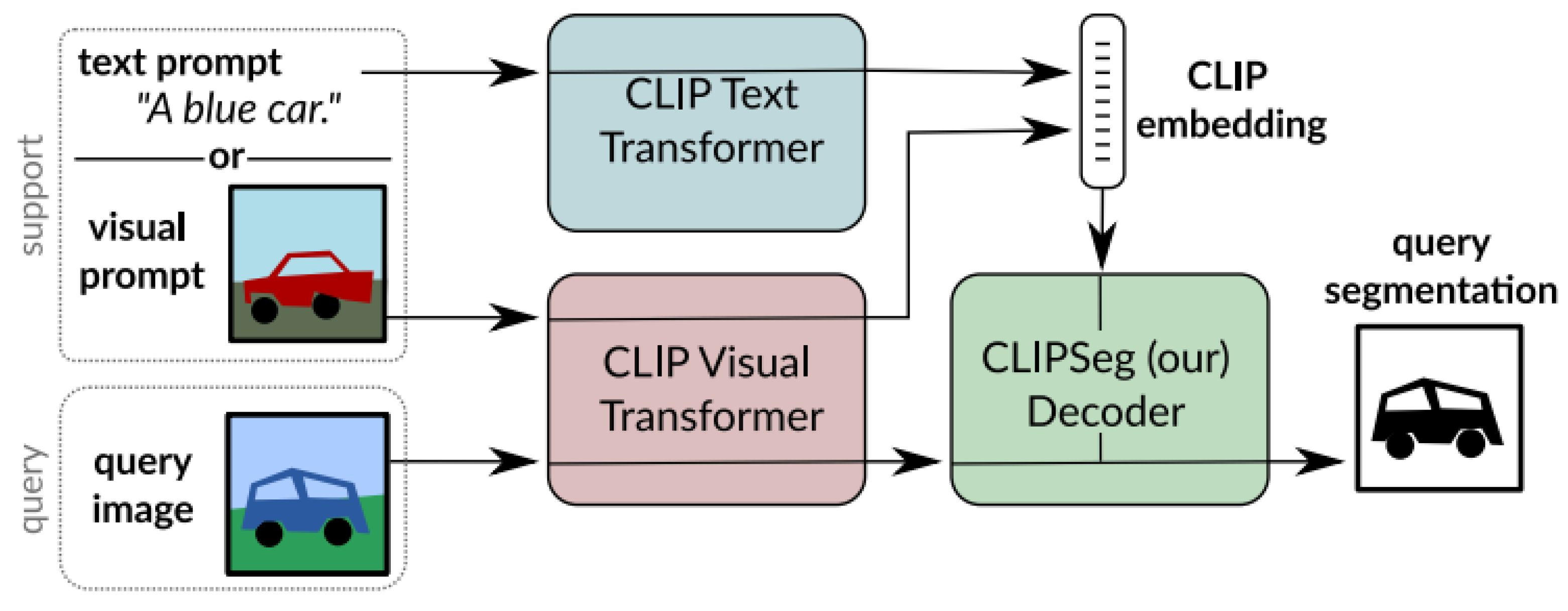

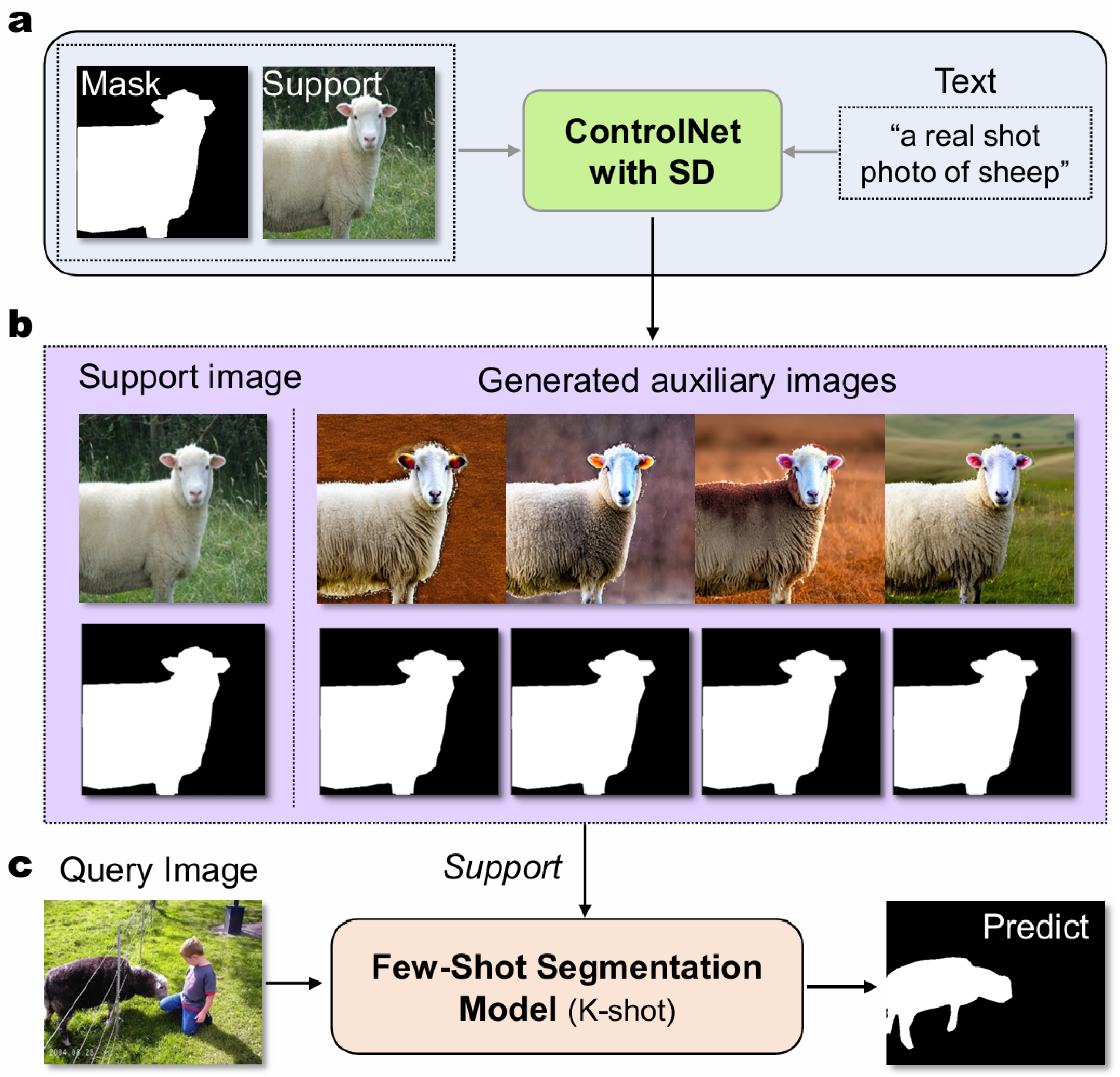

2.5. Methods Utilizing Foundation Models

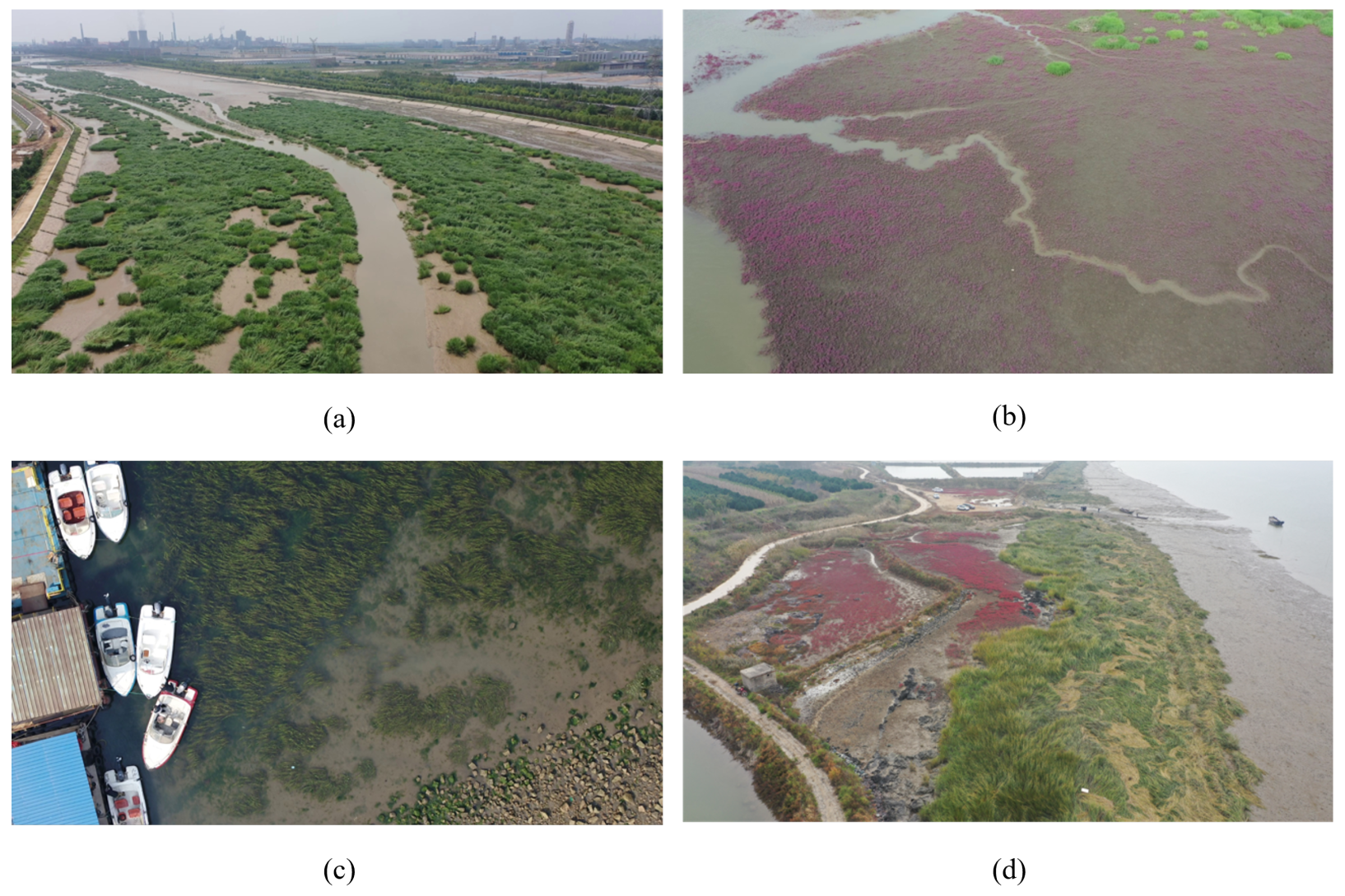

3. Scene Challenge Analysis

3.1. Segmentation Challenges Caused by UAV Imaging

3.2. Segmentation Challenges Arising from Vegetation Characteristics

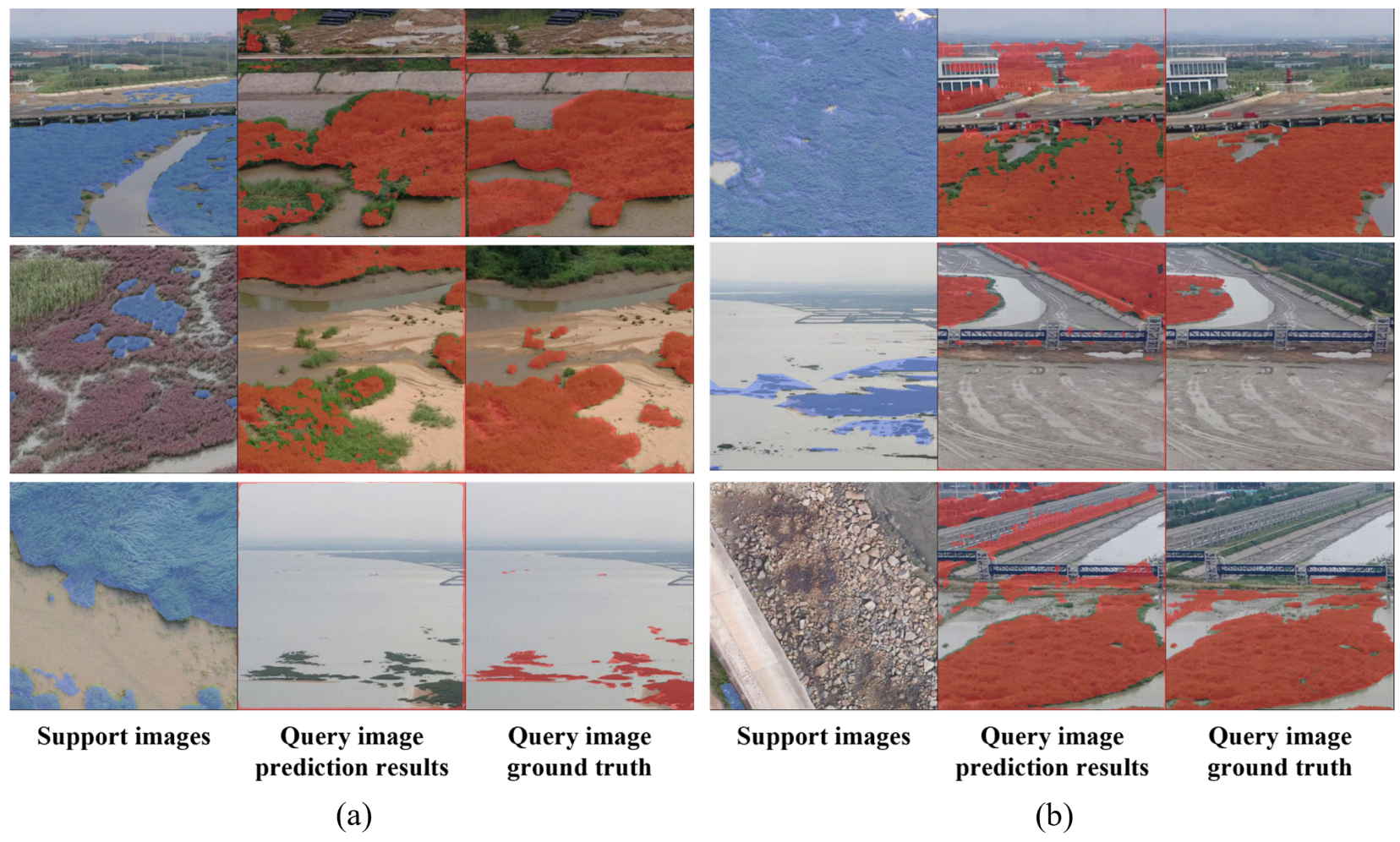

3.3. Visualization Analysis

4. Suggested Approach

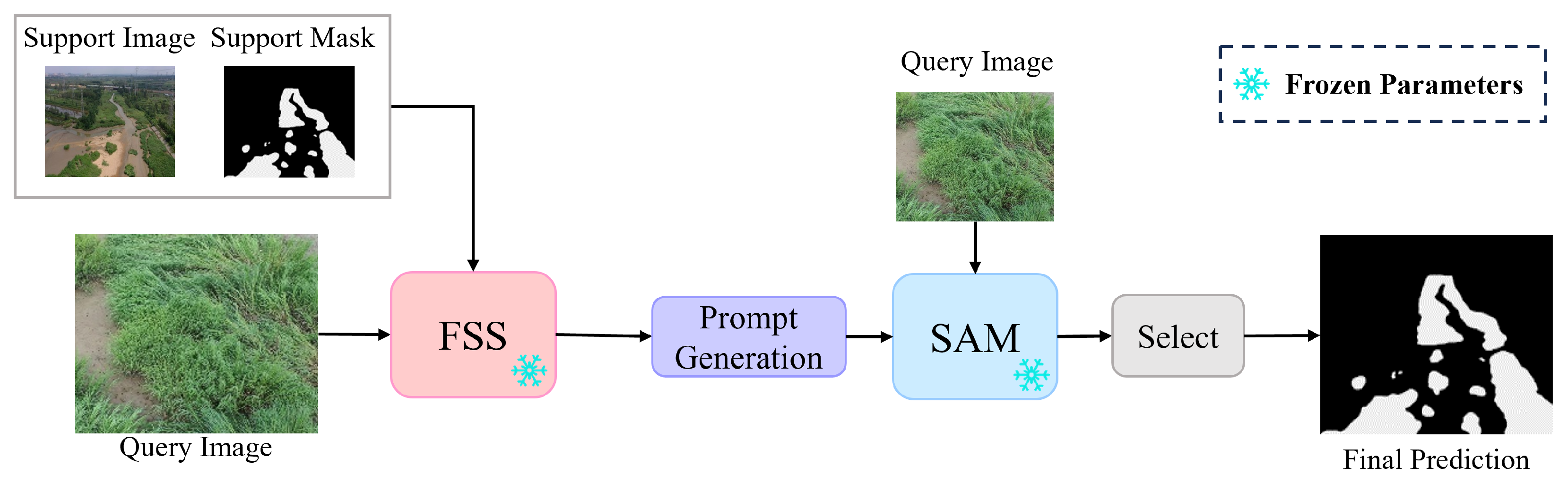

4.1. Overall Suggested Approach Framework

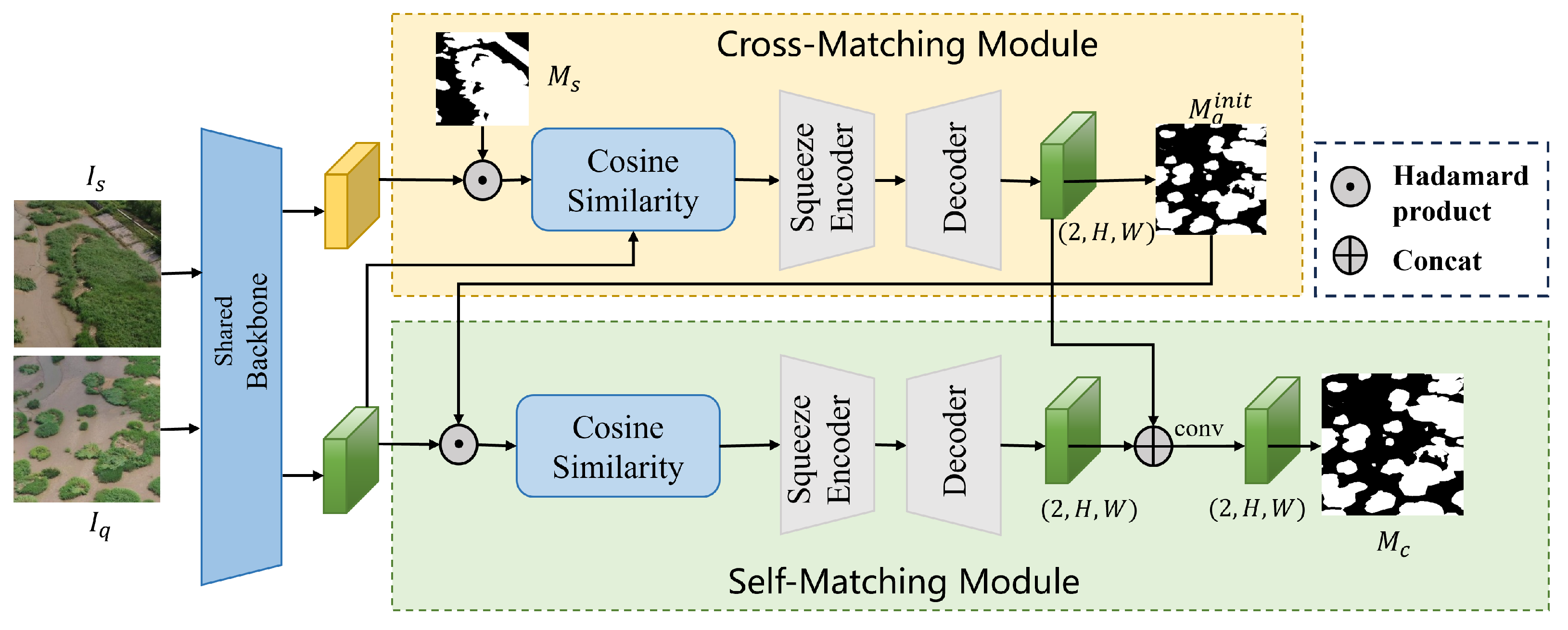

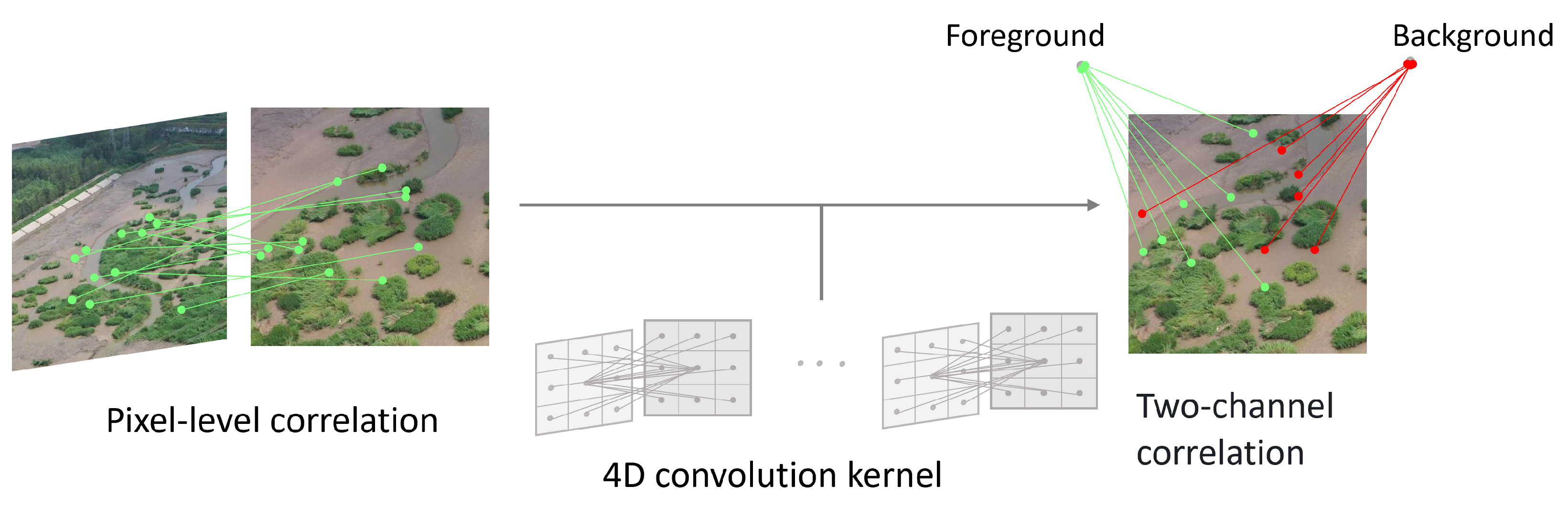

4.2. Cross-Matching Module

4.3. Self-Matching Module

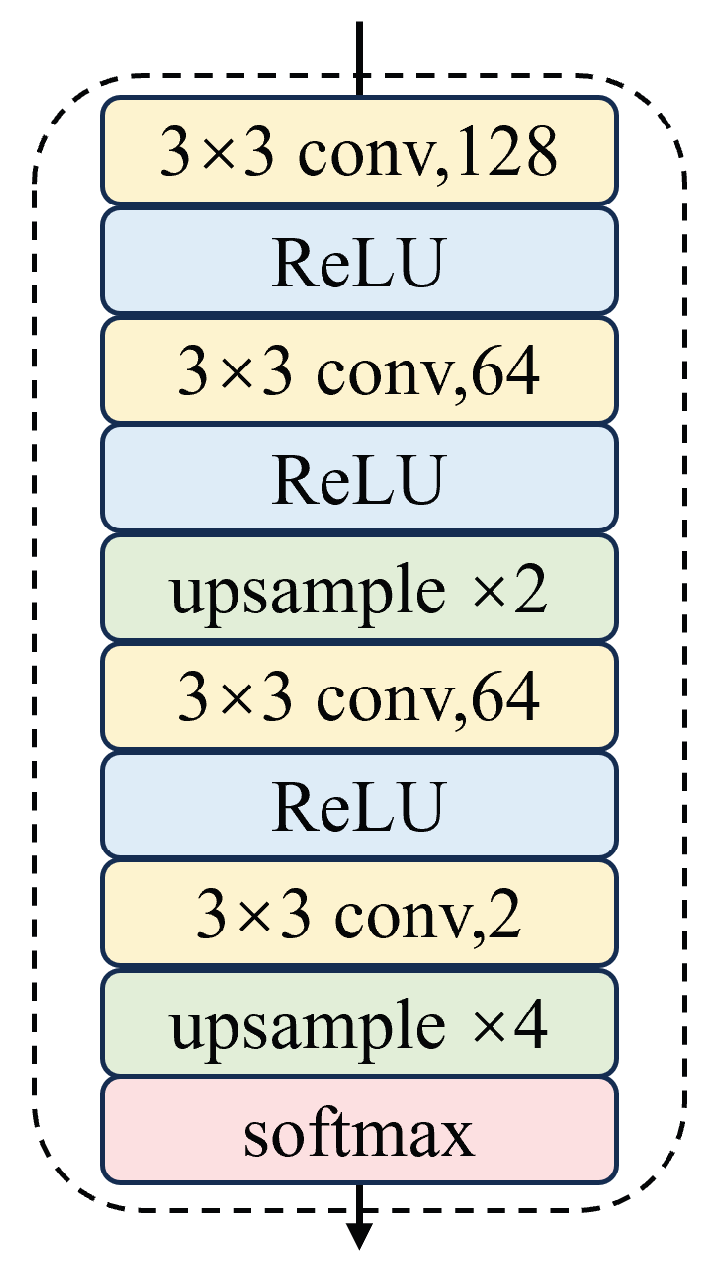

4.4. Refinement Using SAM

- (1) FSS predicts correctly, SAM predicts correctly: In this case, the SAM prediction outperforms the FSS prediction in terms of accuracy.

- (2) FSS predicts correctly, SAM predicts incorrectly: Here, the SAM prediction performs worse than the FSS prediction. This situation can be mitigated.

- (3) FSS predicts incorrectly: FSS predicts the wrong location for the target object. Consequently, the generated prompts are invalid, and the corresponding SAM output is also not useful.
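
The three cases above suggest a selection step that falls back to the FSS mask when the SAM output disagrees with it. The sketch below is one plausible reading, not the authors' procedure: it assumes the agreement score is the IoU between the two binary masks and that a threshold decides which mask to keep. The function names and the default value are hypothetical; 0.75 is used only because it is the best-scoring T in the threshold table, and whether T plays this role is itself an assumption.

```python
import numpy as np

def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over union of two binary masks of equal shape."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # two empty masks are treated as full agreement
    return float(np.logical_and(a, b).sum() / union)

def select_prediction(fss_mask: np.ndarray, sam_mask: np.ndarray,
                      threshold: float = 0.75) -> np.ndarray:
    """Keep the SAM mask only when it agrees with the FSS mask (assumed rule)."""
    if mask_iou(fss_mask, sam_mask) >= threshold:
        return sam_mask  # cases where SAM refines a correct FSS prediction
    return fss_mask      # SAM drifted away from the FSS prediction: fall back
```

Under this rule, case (2) is mitigated because a SAM mask that departs strongly from a correct FSS mask is rejected. Case (3) is not addressed: if the FSS mask itself is wrong, neither branch of the selection can recover the target.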

5. Experiment and Evaluation

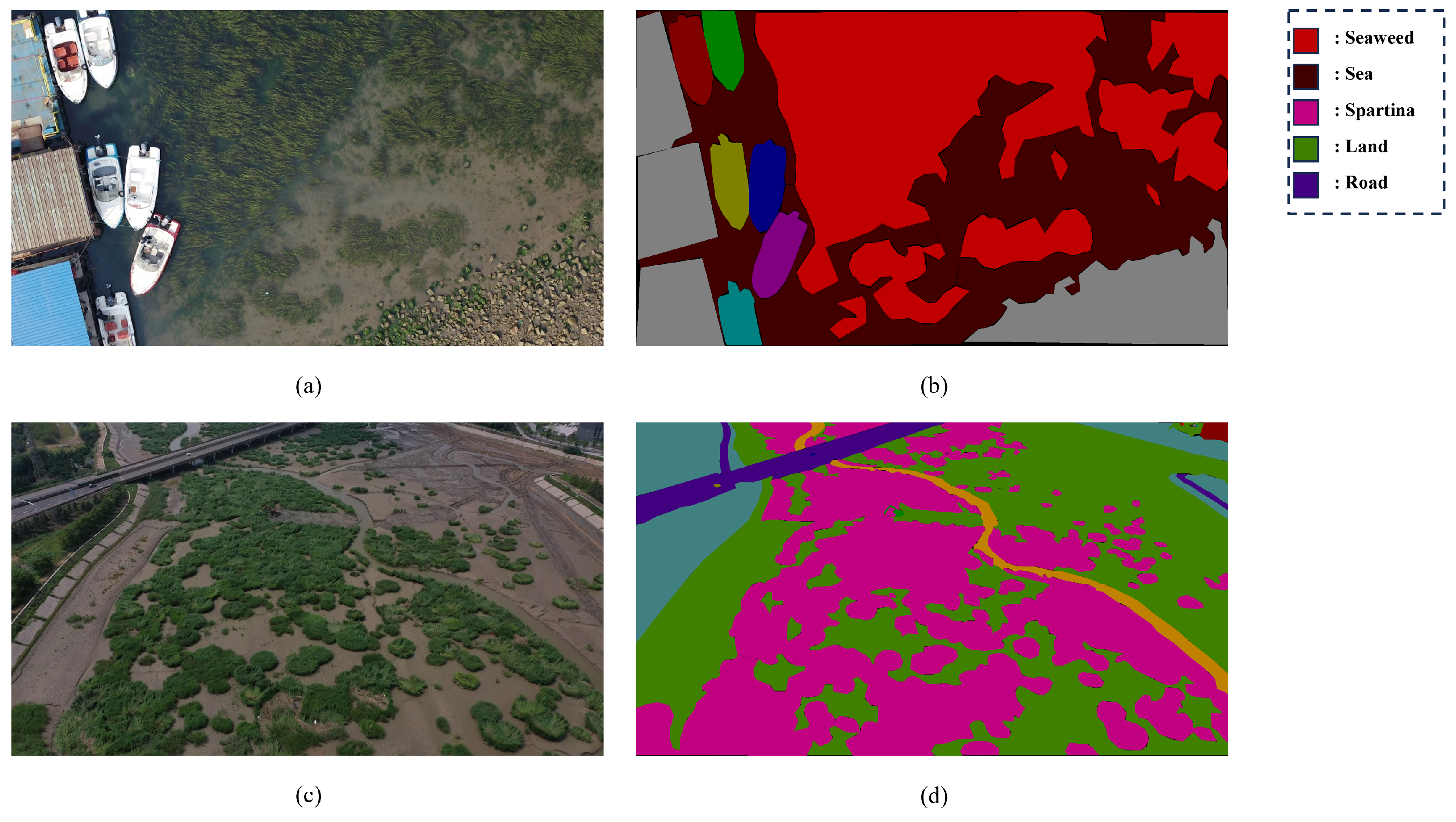

5.1. Dataset Description

5.2. Experimental Details

5.3. Comparative Experiments

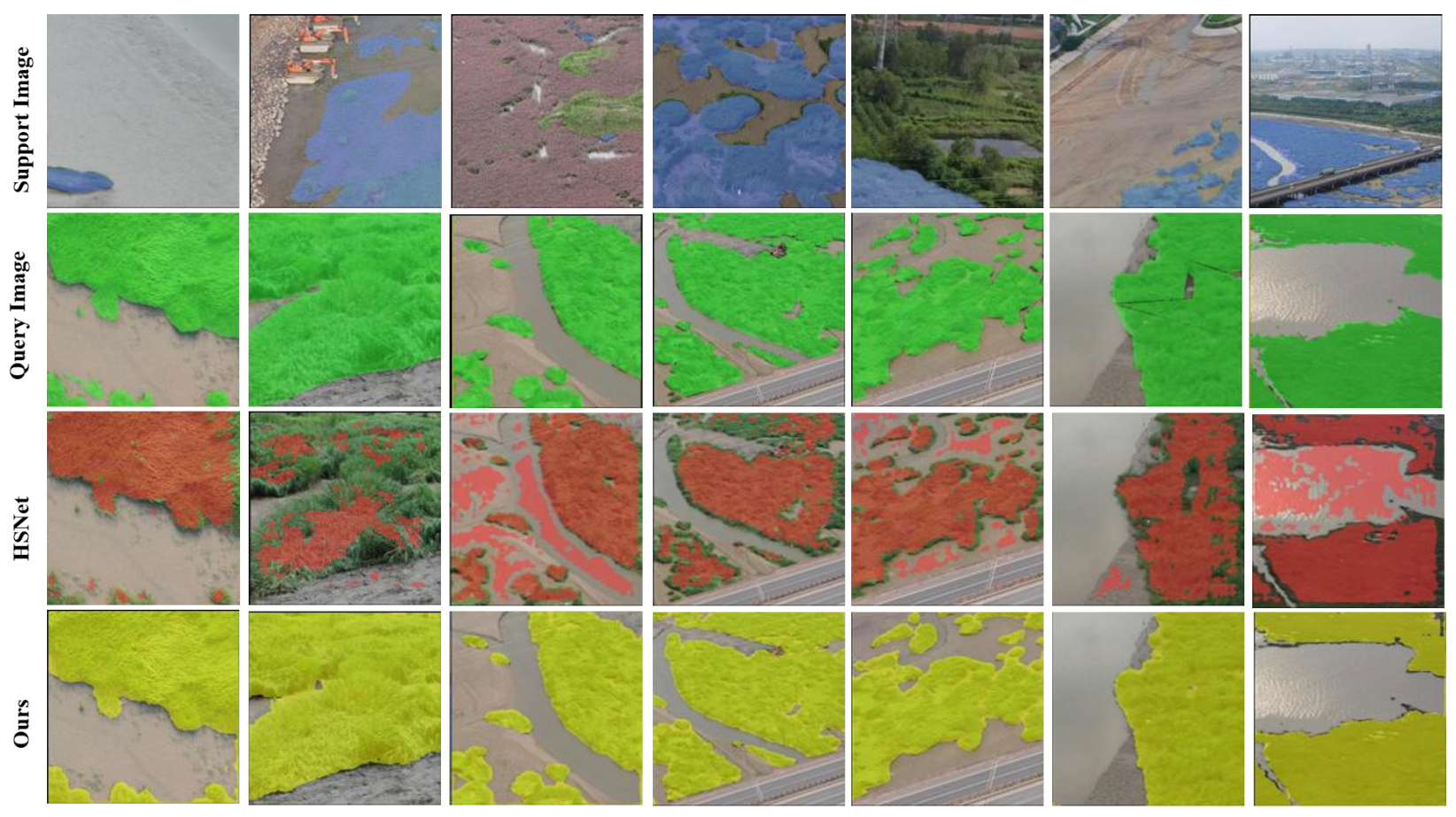

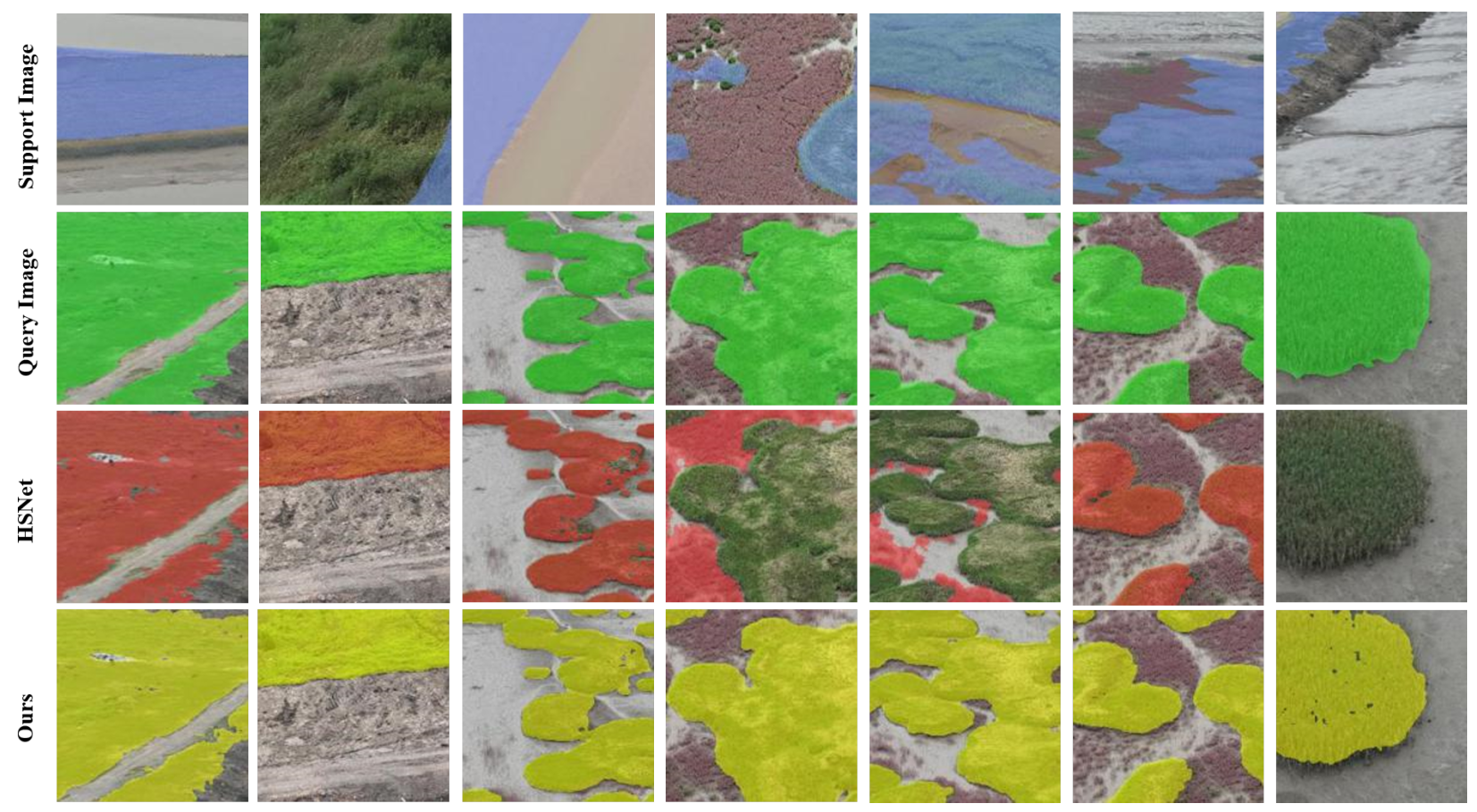

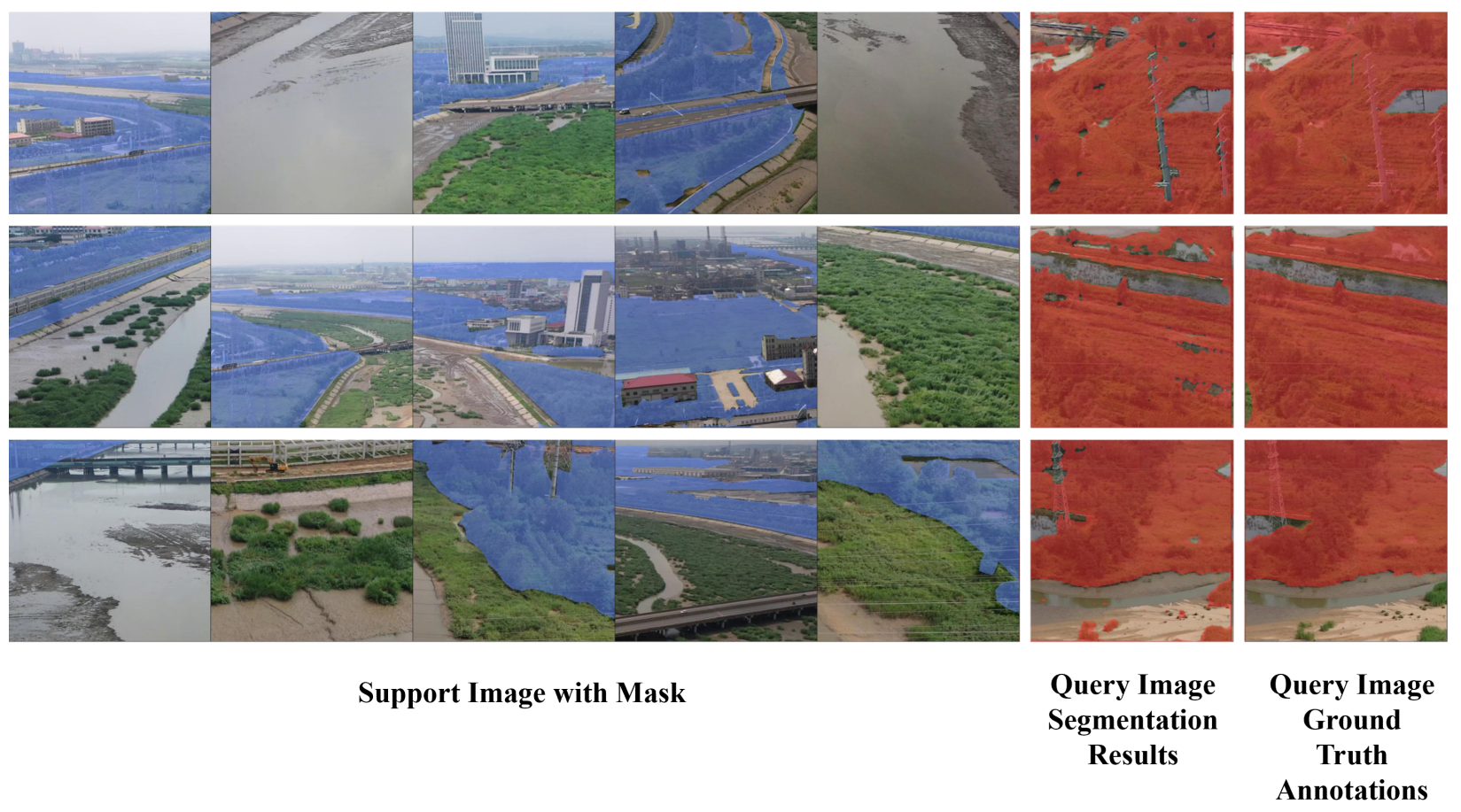

5.3.1. Qualitative Experiments

5.3.2. Quantitative Experiments

5.4. Ablation Study

6. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, Z.; Hu, F.; Xiao, K.; Chen, X.; Ye, S.; Sun, L.; Qin, S. Research Progress on the Delineation of Coastal Zone Scope and Boundaries. J. Appl. Oceanogr. 2025, 44, 15–20.

2. Zhang, S. UAV-Based Research on Semantic Segmentation Methods for Coastal Zone Remote Sensing Images. Master’s Thesis, Liaoning Technical University, Fuxin, China, 2022.

3. Jiang, X.; He, X.; Lin, M.; Gong, F.; Ye, X.; Pan, D. Advances in the Application of Marine Satellite Remote Sensing in China. Acta Oceanol. Sin. 2019, 41, 113–124.

4. Li, X.; Li, X.; Liu, W.; Wei, B.; Xu, X. A UAV-based Framework for Crop Lodging Assessment. Eur. J. Agron. 2021, 123, 126201.

5. Ghassoun, Y.; Gerke, M.; Khedar, Y.; Backhaus, J.; Bobbe, M.; Meissner, H.; Tiwary, P.K.; Heyen, R. Implementation and Validation of a High Accuracy UAV-Photogrammetry Based Rail Track Inspection System. Remote Sens. 2021, 13, 384.

6. Dou, Y.; Yao, F.; Wang, X.; Qu, L.; Chen, L.; Xu, Z.; Ding, L.; Bullock, L.B.; Zhong, G.; Wang, S. PanopticUAV: Panoptic Segmentation of UAV Images for Marine Environment Monitoring. Comput. Model. Eng. Sci. 2024, 138, 1001–1014.

7. Liu, Y.; Yao, F.; Ding, L.; Xu, Z.; Yang, X.; Wang, S. An Image Segmentation Method Based on Transformer and Multi-Scale Feature Fusion for UAV Marine Environment Monitoring. In Proceedings of the 2023 8th International Conference on Image, Vision and Computing (ICIVC), Dalian, China, 27–29 July 2023; pp. 328–336.

8. Wang, S.; Wang, X.; Qu, L.; Yao, F.; Liu, Y.; Li, C.; Wang, Y.; Zhong, G. Semantic Segmentation Benchmark Dataset for Coastal Ecosystem Monitoring Based on Unmanned Aerial Vehicle (UAV). J. Image Graph. 2024, 29, 2162–2174.

9. Wang, S.; Wang, D.; Liang, Q.; Jin, Y.; Liu, L.; Zhang, T. Review on Few-Shot Learning. Space Control Technol. Appl. 2023, 49, 1–10.

10. Li, K.; Chen, J.; Li, G.; Jiang, X. Survey on Research of Few-Shot Learning. Mech. Electr. Eng. Technol. 2023, 1–10.

11. Ravi, S.; Larochelle, H. Optimization as a Model for Few-Shot Learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

12. Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

13. Antoniou, A.; Edwards, H.; Storkey, A. How to Train Your MAML. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

14. Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-SGD: Learning to Learn Quickly for Few-Shot Learning. arXiv 2017, arXiv:1707.09835.

15. Elsken, T.; Staffler, B.; Metzen, J.H.; Hutter, F. Meta-Learning of Neural Architectures for Few-Shot Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12365–12375.

16. Graves, A. Neural Turing Machines. arXiv 2014, arXiv:1410.5401.

17. Cai, Q.; Pan, Y.; Yao, T.; Yan, C.; Mei, T. Memory Matching Networks for One-Shot Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4080–4088.

18. Munkhdalai, T.; Yuan, X.; Mehri, S.; Trischler, A. Rapid Adaptation with Conditionally Shifted Neurons. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 3664–3673.

19. Kaiser, Ł.; Nachum, O.; Roy, A.; Bengio, S. Learning to Remember Rare Events. arXiv 2017, arXiv:1703.03129.

20. Wei, T.; Li, X.; Liu, H. A Survey on Semantic Image Segmentation Under the Few-Shot Learning Dilemma. Comput. Eng. Appl. 2023, 59, 1–11.

21. Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese Neural Networks for One-Shot Image Recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 6–11 July 2015; Volume 2, pp. 1–30.

22. Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One-Shot Learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

23. Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-Shot Learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

24. Shaban, A.; Bansal, S.; Liu, Z.; Essa, I.; Boots, B. One-Shot Learning for Semantic Segmentation. arXiv 2017, arXiv:1709.03410.

25. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

26. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

27. Rakelly, K.; Shelhamer, E.; Darrell, T.; Efros, A.; Levine, S. Conditional Networks for Few-Shot Semantic Segmentation. In Proceedings of the ICLR, Vancouver, BC, Canada, 30 April–3 May 2018.

28. Dong, N.; Xing, E.P. Few-Shot Semantic Segmentation with Prototype Learning. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018; Volume 3, p. 4.

29. Zhang, X.; Wei, Y.; Yang, Y.; Huang, P. SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation. IEEE Trans. Cybern. 2020, 50, 3855–3865.

30. Wang, K.; Liew, J.H.; Zou, Y.; Zhou, Y.; Feng, J. PANet: Few-Shot Image Semantic Segmentation with Prototype Alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9197–9206.

31. Zhang, C.; Lin, G.; Liu, F.; Yao, T.; Shen, T. CANet: Class-Agnostic Segmentation Networks with Iterative Refinement and Attentive Few-Shot Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5217–5226.

32. Zhang, C.; Lin, G.; Liu, F.; Guo, J.; Wu, W.; Yao, T. Pyramid Graph Networks with Connection Attentions for Region-Based One-Shot Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9587–9595.

33. Yang, B.; Liu, C.; Li, B.; Jiao, J.; Ye, Q. Prototype Mixture Models for Few-Shot Semantic Segmentation. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VIII. Springer: Berlin/Heidelberg, Germany, 2020; pp. 763–778.

34. Min, J.; Kang, D.; Cho, M. Hypercorrelation Squeeze for Few-Shot Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6941–6952.

35. Shi, X.; Wei, D.; Zhang, Y.; Lu, J.; Ning, J.; Chen, Y.; Ma, J.; Zheng, Y. Dense Cross-Query-and-Support Attention Weighted Mask Aggregation for Few-Shot Segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 151–168.

36. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026.

37. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, S.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

38. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv 2022, arXiv:2112.10752.

39. Lüddecke, T.; Ecker, A.S. Image Segmentation Using Text and Image Prompts. arXiv 2022, arXiv:2112.10003.

40. Wang, X.; Zhang, X.; Cao, Y.; Wang, D.; Shen, T.; Huang, T. SegGPT: Segmenting Everything in Context. arXiv 2023, arXiv:2304.03284.

41. Tan, W.; Chen, S.; Yan, B. DiffSS: Diffusion Model for Few-Shot Semantic Segmentation. arXiv 2023, arXiv:2307.00773.

42. Chen, S.; Meng, F.; Zhang, R.; Qiu, H.; Li, H.; Wu, Q.; Xu, L. Visual and Textual Prior Guided Mask Assemble for Few-Shot Segmentation and Beyond. arXiv 2023, arXiv:2308.07539.

43. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

44. Hastie, T.; Tibshirani, R.; Friedman, J. Linear Methods for Classification. In The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 101–137.

45. Ruder, S. An Overview of Multi-Task Learning in Deep Neural Networks. arXiv 2017, arXiv:1706.05098.

46. Tian, Z.; Zhao, H.; Shu, M.; Yang, L.; Li, X.; Jia, J. Prior Guided Feature Enrichment Network for Few-Shot Segmentation. arXiv 2020, arXiv:2008.01449.

47. Wu, J.; Hovakimyan, N.; Hobbs, J. GenCo: An Auxiliary Generator from Contrastive Learning for Enhanced Few-Shot Learning in Remote Sensing. In ECAI 2023; IOS Press: Amsterdam, The Netherlands, 2023.

48. Naik, H.; Chan, A.H.H.; Yang, J.; Delacoux, M.; Couzin, I.D.; Kano, F.; Nagy, M. 3D-POP—An Automated Annotation Approach to Facilitate Markerless 2D-3D Tracking of Freely Moving Birds with Marker-based Motion Capture. arXiv 2023, arXiv:2303.13174.

49. Chen, Y.; Pan, Y.; Yang, H.; Yao, T.; Mei, T. VP3D: Unleashing 2D Visual Prompt for Text-to-3D Generation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

50. Chen, X.; Shi, M.; Zhou, Z.; He, L.; Tsoka, S. Enhancing Generalized Few-Shot Semantic Segmentation via Effective Knowledge Transfer. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Philadelphia, PA, USA, 25 February–4 March 2025.

51. Xu, Q.; Zhao, W.; Lin, G.; Long, Y. Self-Calibrated Cross Attention Network for Few-Shot Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 655–665.

52. Liu, H.; Peng, P.; Chen, T.; Wang, X.; Yao, Y.; Hua, Z. FECANet: Boosting Few-Shot Semantic Segmentation with Feature-Enhanced Context-Aware Network. IEEE Trans. Multimed. 2023, 25, 8580–8592.

| T | 1-Shot (Fold-0) | 5-Shot (Fold-0) |
|---|---|---|
| 0.85 | 52.23 | 54.62 |
| 0.75 | 53.09 | 55.54 |
| 0.65 | 52.64 | 53.83 |

| Fold Number | Category |
|---|---|
| Fold-0 | Spartina, Suaeda |
| Fold-1 | Tamarix, reed |
| Fold-2 | seaweed, vegetation |
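
The fold table can be expressed as a small configuration. The sketch below assumes the usual few-shot cross-validation protocol, in which the classes of one fold are held out for testing and the classes of the remaining folds are used for training; the outline does not confirm this protocol, and `FOLDS` and `split_classes` are hypothetical names.

```python
# Class names copied from the fold table; the split logic is an assumed protocol.
FOLDS = {
    0: ["Spartina", "Suaeda"],
    1: ["Tamarix", "reed"],
    2: ["seaweed", "vegetation"],
}

def split_classes(test_fold: int) -> tuple[list[str], list[str]]:
    """Return (train_classes, test_classes) when `test_fold` is held out."""
    if test_fold not in FOLDS:
        raise ValueError(f"unknown fold: {test_fold}")
    test_classes = list(FOLDS[test_fold])
    train_classes = [
        name
        for fold, names in sorted(FOLDS.items())
        if fold != test_fold
        for name in names
    ]
    return train_classes, test_classes

train, test = split_classes(0)
print(train)  # ['Tamarix', 'reed', 'seaweed', 'vegetation']
print(test)   # ['Spartina', 'Suaeda']
```

Keeping the held-out classes disjoint from the training classes is what makes each per-fold score a measure of generalization to unseen categories.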

| Backbone | Methods | 1-Shot Fold-0 | 1-Shot Fold-1 | 1-Shot Fold-2 | 1-Shot Mean | 5-Shot Fold-0 | 5-Shot Fold-1 | 5-Shot Fold-2 | 5-Shot Mean |
|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | PANet (ICCV’19) [30] | 32.36 | 29.11 | 32.05 | 31.17 | 33.82 | 32.40 | 39.12 | 35.11 |
| | CANet (CVPR’19) [31] | 39.73 | 37.98 | 30.93 | 22.88 | 43.45 | 40.53 | 50.12 | 44.70 |
| | PMMs (ECCV’20) [33] | 40.87 | 36.07 | 44.65 | 40.53 | 43.31 | 36.61 | 47.43 | 42.45 |
| | PFENet (TPAMI’20) [46] | 38.75 | 37.24 | 42.09 | 39.36 | 39.57 | 38.43 | 46.14 | 41.38 |
| | HSNet (ICCV’21) [34] | 47.52 | 56.66 | 43.04 | 49.07 | 56.52 | 51.40 | 45.53 | 51.15 |
| | GenCo (ECAI’23) [47] | 51.25 | 50.56 | 45.04 | 48.95 | 54.11 | 55.66 | 45.11 | 51.63 |
| | POP (CVPR’23) [48] | 30.54 | 37.93 | 46.32 | 38.26 | 42.08 | 41.83 | 51.41 | 45.11 |
| | VP (CVPR’24) [49] | 42.93 | 40.71 | 32.78 | 38.81 | 51.01 | 42.51 | 39.64 | 44.39 |
| | EKT (AAAI’25) [50] | 47.34 | 53.06 | 52.29 | 50.90 | 51.51 | 53.09 | 57.44 | 54.01 |
| | Ours | 53.09 | 60.21 | 47.97 | 53.76 | 55.54 | 65.35 | 47.52 | 56.13 |
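
The Mean columns in the comparison table appear to be the arithmetic mean of the three per-fold scores, which can be checked directly from the rows. The sketch below reproduces that arithmetic for the "Ours" row with ResNet50; the helper names and the 0.01 tolerance are illustrative choices, not part of the source.

```python
def fold_mean(scores: list[float]) -> float:
    """Arithmetic mean of per-fold scores, rounded to two decimals."""
    if not scores:
        raise ValueError("scores must not be empty")
    return round(sum(scores) / len(scores), 2)

def matches_reported(scores: list[float], reported: float, tol: float = 0.01) -> bool:
    """True if the reported mean agrees with the per-fold mean within `tol`."""
    return abs(sum(scores) / len(scores) - reported) <= tol

ours_1shot = [53.09, 60.21, 47.97]   # Fold-0, Fold-1, Fold-2 (ResNet50, 1-shot)
ours_5shot = [55.54, 65.35, 47.52]   # Fold-0, Fold-1, Fold-2 (ResNet50, 5-shot)

print(fold_mean(ours_1shot))                # 53.76
print(matches_reported(ours_5shot, 56.13))  # True
```

The tolerance absorbs the difference between rounding and truncation at the second decimal, since the 5-shot mean is 56.1367 before rounding.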

| Backbone | Methods | 1-Shot Fold-0 | 1-Shot Fold-1 | 1-Shot Fold-2 | 1-Shot Mean | 5-Shot Fold-0 | 5-Shot Fold-1 | 5-Shot Fold-2 | 5-Shot Mean |
|---|---|---|---|---|---|---|---|---|---|
| ResNet101 | PANet (ICCV’19) [30] | 33.86 | 31.11 | 33.85 | 32.94 | 35.52 | 34.20 | 41.12 | 36.95 |
| | CANet (CVPR’19) [31] | 41.63 | 39.98 | 52.73 | 44.78 | 45.25 | 42.13 | 52.12 | 46.50 |
| | PMMs (ECCV’20) [33] | 41.43 | 39.88 | 52.43 | 44.58 | 45.02 | 39.24 | 50.65 | 44.97 |
| | PFENet (TPAMI’20) [46] | 40.65 | 39.24 | 43.89 | 41.26 | 41.27 | 40.33 | 48.14 | 43.24 |
| | HSNet (ICCV’21) [34] | 51.70 | 49.41 | 54.23 | 51.78 | 58.12 | 53.20 | 49.66 | 53.66 |
| | GenCo (ECAI’23) [47] | 54.29 | 52.25 | 55.62 | 54.05 | 59.88 | 50.59 | 65.70 | 58.72 |
| | Ours | 54.19 | 54.25 | 57.32 | 55.25 | 61.48 | 52.39 | 67.70 | 60.52 |

| Backbone | Methods | FB-IoU (%), 1-Shot | FB-IoU (%), 5-Shot |
|---|---|---|---|
| ResNet50 | HSNet (ICCV’21) [34] | 62.30 | 66.12 |
| | DCAMA (ECCV’22) [35] | 63.45 | 66.83 |
| | SCCAN (ICCV’23) [51] | 65.10 | 67.55 |
| | FECANet (TMM’23) [52] | 65.53 | 68.02 |
| | Ours | 66.21 | 69.05 |
| ResNet101 | HSNet (ICCV’21) [34] | 63.72 | 64.55 |
| | DCAMA (ECCV’22) [35] | 64.50 | 65.83 |
| | SCCAN (ICCV’23) [51] | 65.71 | 68.03 |
| | Ours | 67.83 | 70.62 |
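
FB-IoU (foreground-background IoU) is commonly defined as the average of the IoU of the foreground region and the IoU of the background region. The sketch below computes it for a single pair of binary masks. This per-image form is an assumption for illustration: benchmark code often accumulates intersections and unions over the whole test set instead, and the outline does not say which variant was used.

```python
import numpy as np

def binary_iou(pred: np.ndarray, target: np.ndarray) -> float:
    """IoU of two boolean masks; two empty masks count as a perfect match."""
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    return 1.0 if union == 0 else float(inter / union)

def fb_iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean of foreground IoU and background IoU, as a percentage."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    fg = binary_iou(pred, target)      # foreground: pixels marked as the class
    bg = binary_iou(~pred, ~target)    # background: everything else
    return 100.0 * (fg + bg) / 2.0

pred = np.array([[1, 1], [0, 0]])
target = np.array([[1, 0], [0, 0]])
print(round(fb_iou(pred, target), 2))  # 58.33
```

In the example the foreground IoU is 1/2 and the background IoU is 2/3, so the score is 100 × (0.5 + 0.6667) / 2 ≈ 58.33. Because the background usually dominates the image, FB-IoU tends to be higher than a foreground-only score for the same prediction.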

| CMM | SMM | SAM (with Select) | Fold-0 | Fold-1 | Fold-2 | Mean |
|---|---|---|---|---|---|---|
| | | | 47.52 | 56.66 | 43.04 | 49.07 |
| ✓ | | | 48.05 | 57.74 | 53.89 | |
| | ✓ | | 48.67 | 57.03 | 44.68 | |
| ✓ | ✓ | | 51.14 | 58.72 | 45.88 | |
| ✓ | ✓ | ✓ | 53.09 | 60.21 | 47.97 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, Y.; Guo, Z.; Li, C.; Li, W.; Wang, S. SAM-Based Few-Shot Learning for Coastal Vegetation Segmentation in UAV Imagery via Cross-Matching and Self-Matching. Remote Sens. 2025, 17, 3404. https://doi.org/10.3390/rs17203404
