An Automated Framework for Abnormal Target Segmentation in Levee Scenarios Using Fusion of UAV-Based Infrared and Visible Imagery

Abstract

Highlights

- We reframe levee monitoring as an unsupervised anomaly detection task, unifying diverse hazards and response elements into a single “abnormal targets” category for comprehensive situational awareness.

- We propose a novel, fully automated and training-free framework that integrates multi-modal fusion with an adaptive segmentation module, where Bayesian optimization automatically tunes a mean-shift algorithm.

- Our framework provides a practical solution for a challenging, data-scarce domain by eliminating the need for labelled training data, a major bottleneck for traditional supervised methods.

- The proposed method demonstrates superior performance over all baselines in complex, real-world scenarios, proving the effectiveness of synergistic multi-modal fusion and adaptive unsupervised learning for disaster management.

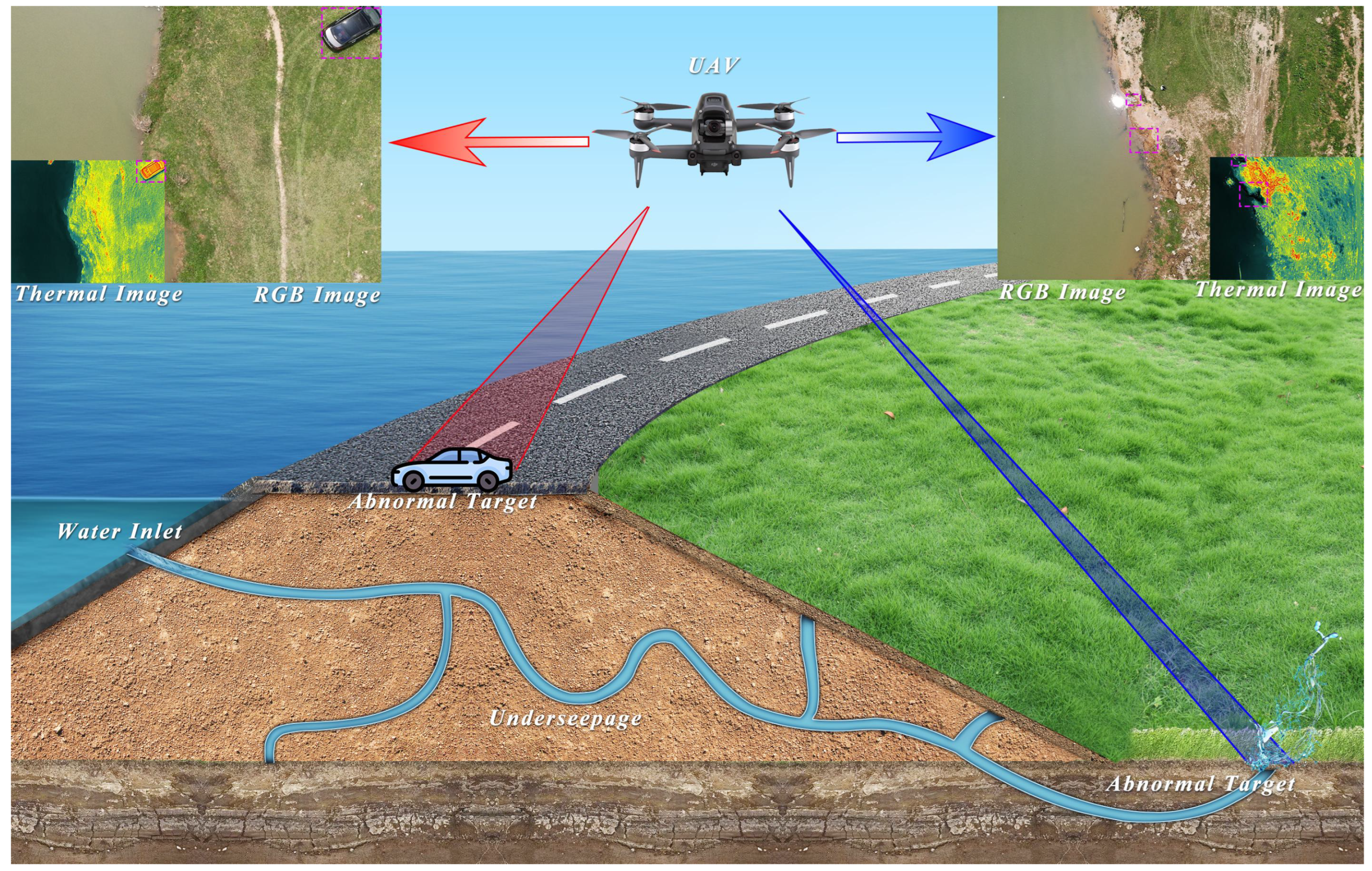

1. Introduction

- We are the first to frame levee monitoring as an unsupervised anomaly detection task. The definition of abnormal targets unifies diverse critical objects for comprehensive situational awareness.

- We propose a novel, fully automated framework for levee hazard identification, which integrates UAV-based multi-modal image registration, fusion, and segmentation, demonstrating a complete automatic solution.

- We introduce an innovative unsupervised segmentation module that couples the mean-shift algorithm with Bayesian optimization. This approach eliminates the need for manual annotation and enables adaptive segmentation across diverse scenes, significantly enhancing the framework’s practicality and scalability.

- We validate the proposed framework on a real-world levee dataset, providing empirical evidence that the fusion of thermal and visible imagery substantially improves the identification accuracy of potential piping hazards compared to using either modality alone.

2. Related Work

2.1. Levee Safety Monitoring and Situational Awareness

2.1.1. Traditional and Geophysical Methods

2.1.2. UAV-Based Monitoring with Supervised Learning

2.2. Unsupervised Anomaly Segmentation

2.3. Multi-Modal Fusion for Levee Anomaly Segmentation

2.3.1. The Synergy of Thermal and Visible Data

2.3.2. Deep Learning-Based Fusion

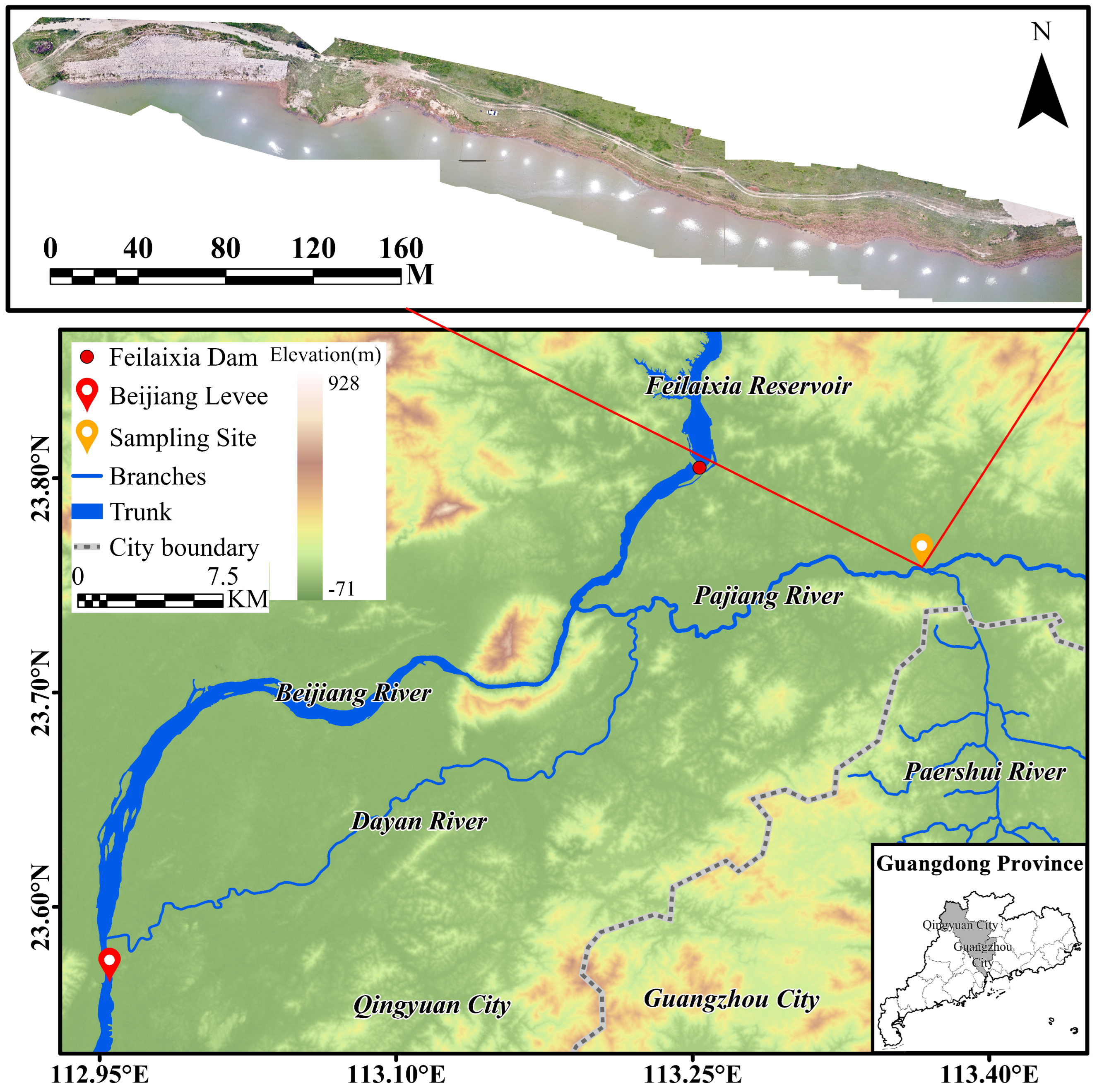

3. Study Site and Dataset

3.1. Study Site

3.2. Sensors and Dataset

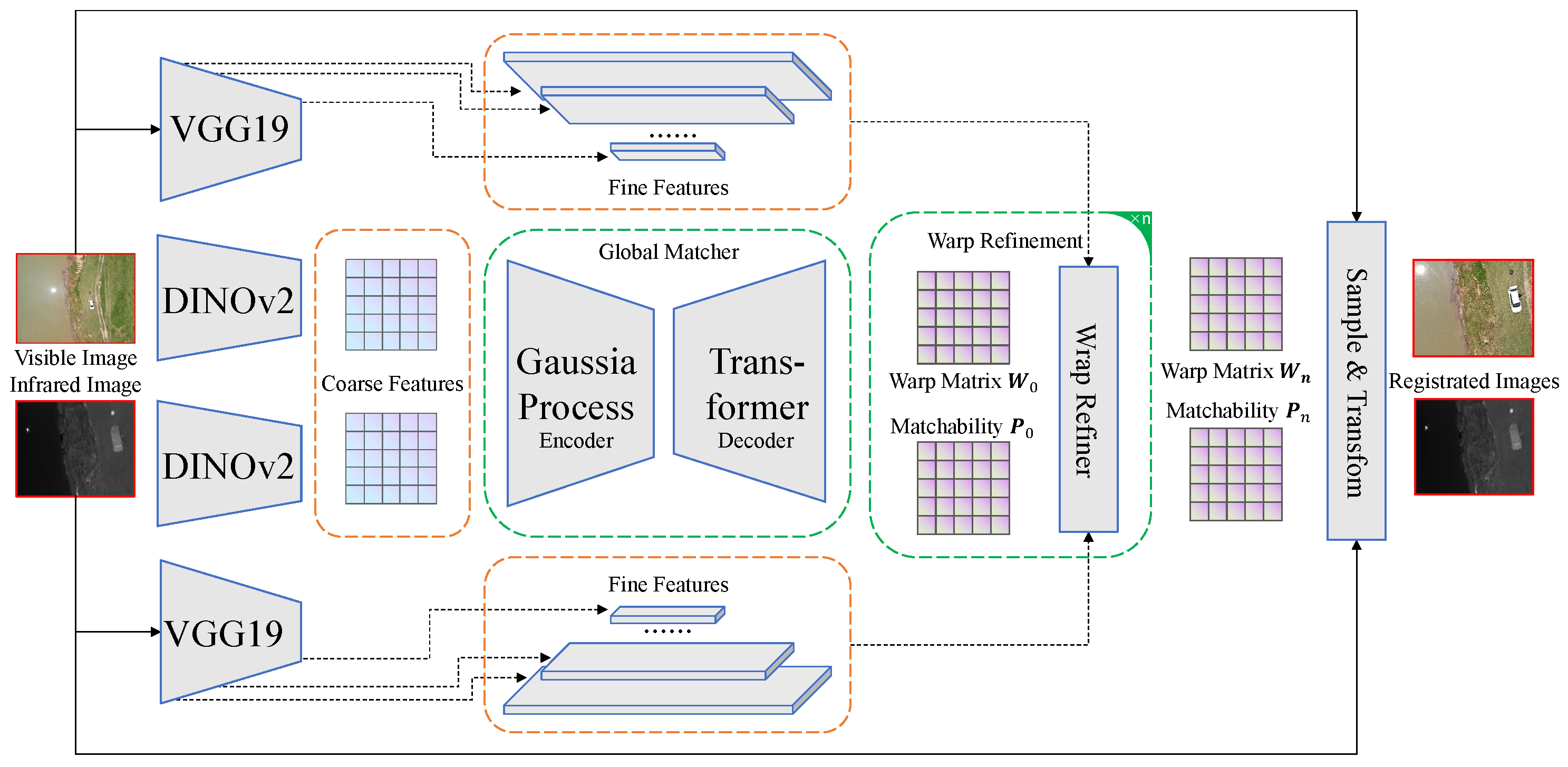

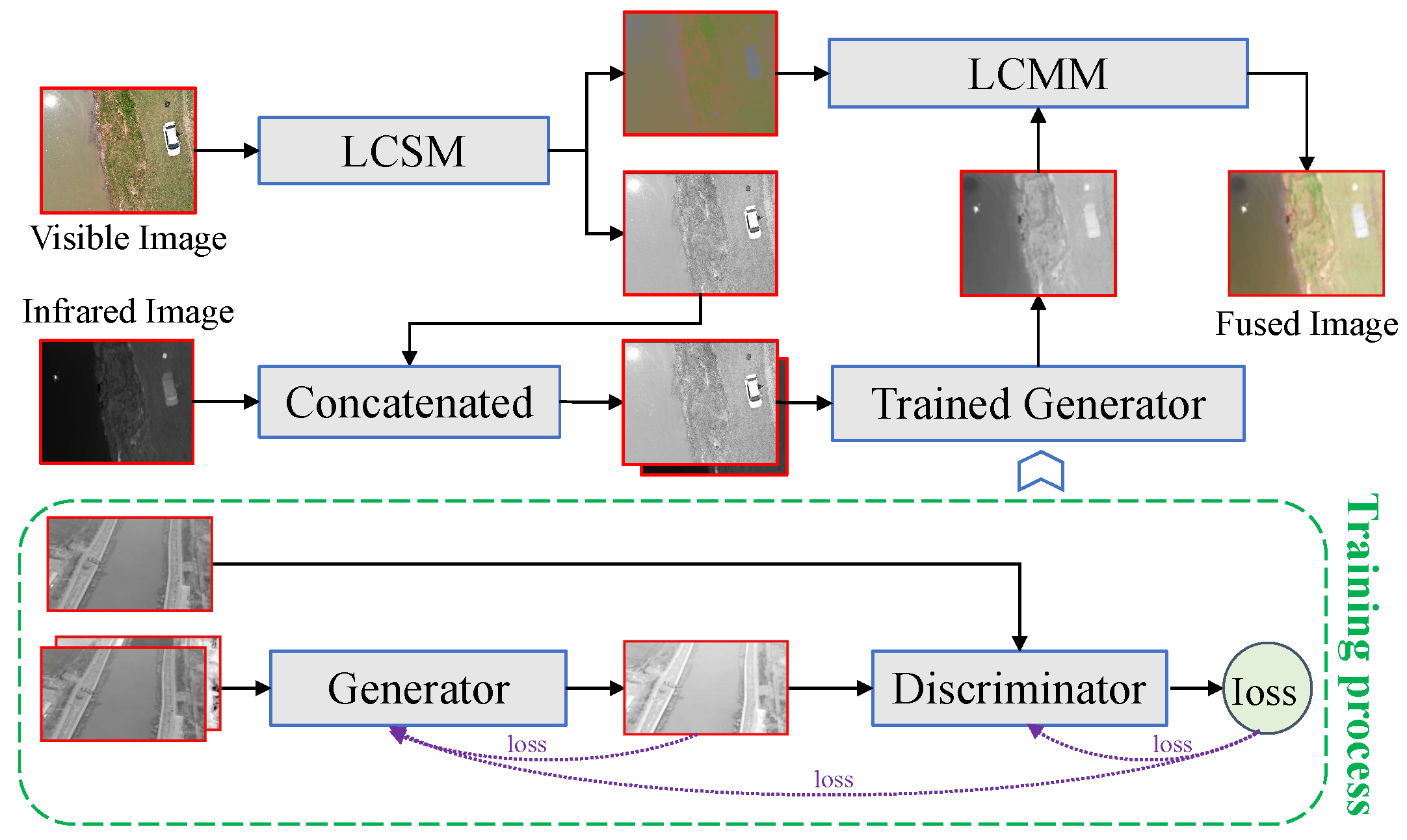

4. Methods

4.1. Framework

4.2. Registration of Infrared and Visible Images

4.3. Fusion of Infrared and Visible Images

4.4. Segmentation of Fused Images

1. Choose a sample point $x_i$ randomly from the dataset.

2. Calculate the kernel density estimate at $x_i$ and find the local maximum density position of $x_i$.

3. If the local maximum density position of $x_i$ is not in the cluster, add $x_i$ to the cluster.

4. If the local maximum density position of $x_i$ is in the cluster, choose a new sample point randomly from the dataset and repeat step 2.

5. Repeat steps 2 and 3 until all data points are in the cluster.
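The procedure above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: it assumes a flat (uniform) kernel, a fixed `bandwidth`, and a merge tolerance of half the bandwidth, and the function names `shift_to_mode` and `mean_shift` are hypothetical. Instead of drawing sample points at random, it visits every point in order, which mainly affects how the clusters are numbered.

```python
import numpy as np


def shift_to_mode(x, data, bandwidth, max_iter=100, tol=1e-4):
    """Move x uphill to a local density maximum using a flat kernel."""
    for _ in range(max_iter):
        # Points within one bandwidth of x form the kernel window.
        in_window = np.linalg.norm(data - x, axis=1) <= bandwidth
        new_x = data[in_window].mean(axis=0)
        if np.linalg.norm(new_x - x) < tol:
            break
        x = new_x
    return x


def mean_shift(data, bandwidth):
    """Label each point with the index of the mode it converges to."""
    modes = []
    labels = np.empty(len(data), dtype=int)
    for i, point in enumerate(data):
        mode = shift_to_mode(point, data, bandwidth)
        # Reuse an existing mode if this one lands close to it
        # (assumed tolerance: half the bandwidth).
        for k, known in enumerate(modes):
            if np.linalg.norm(mode - known) < bandwidth / 2:
                labels[i] = k
                break
        else:
            modes.append(mode)
            labels[i] = len(modes) - 1
    return labels, np.array(modes)


# Toy data: two well-separated groups of 2-D points.
data = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                 [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
labels, modes = mean_shift(data, bandwidth=1.0)
```

The only free parameter here is `bandwidth`, which is why the segmentation quality depends so strongly on how it is chosen.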

4.5. Hyperparameter Optimization

4.6. Evaluation

- Instance Extraction: First, unique instance IDs are extracted from both the ground truth (GT) and predicted (Pred) segmentation masks. Let $G = \{g_1, g_2, \ldots, g_m\}$ represent the set of ground truth instances and $P = \{p_1, p_2, \ldots, p_n\}$ denote the set of predicted instances, where $m$ and $n$ are the numbers of instances in GT and Pred, respectively.

- IoU Matrix Construction: For each pair of ground truth instance $g_i$ and predicted instance $p_j$, we calculate their IoU:

  $$\mathrm{IoU}(g_i, p_j) = \frac{|g_i \cap p_j|}{|g_i \cup p_j|},$$

  where $|g_i \cap p_j|$ is the number of overlapping pixels and $|g_i \cup p_j|$ is the total number of pixels in either instance. These scores are stored in an IoU matrix $M \in \mathbb{R}^{m \times n}$, where $M_{ij} = \mathrm{IoU}(g_i, p_j)$.

- Greedy Matching: The algorithm processes each predicted instance in turn:

  - For $p_j$, find the ground truth instance $g_{i^*}$ with the maximum IoU score in column $j$ of matrix $M$.

  - If $M_{i^* j} > \tau$, where $\tau$ is a predefined threshold, establish a match between $g_{i^*}$ and $p_j$.

  - Once matched, $g_{i^*}$ is removed from consideration for other predicted instances to ensure one-to-one matching.
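The matching procedure above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: instance ID 0 is assumed to mark background, the threshold defaults to 0.5, the comparison is strict, and the names `iou_matrix` and `greedy_match` are hypothetical.

```python
import numpy as np


def iou_matrix(gt, pred):
    """Return GT ids, Pred ids and the m x n IoU matrix (assumes 0 = background)."""
    gt_ids = [i for i in np.unique(gt) if i != 0]
    pred_ids = [j for j in np.unique(pred) if j != 0]
    M = np.zeros((len(gt_ids), len(pred_ids)))
    for a, g in enumerate(gt_ids):
        for b, p in enumerate(pred_ids):
            inter = np.logical_and(gt == g, pred == p).sum()
            union = np.logical_or(gt == g, pred == p).sum()
            M[a, b] = inter / union
    return gt_ids, pred_ids, M


def greedy_match(M, tau=0.5):
    """Match each predicted instance to its best still-unmatched GT instance."""
    M = M.copy()
    matches = []
    for j in range(M.shape[1]):
        if M.shape[0] == 0:
            break  # no ground truth instances to match against
        i = int(np.argmax(M[:, j]))
        if M[i, j] > tau:
            matches.append((i, j))
            M[i, :] = -1.0  # remove the matched GT row from consideration
    return matches


# Toy instance masks: two GT instances, two predicted instances.
gt = np.array([[1, 1, 0, 0],
               [1, 1, 0, 2],
               [0, 0, 2, 2]])
pred = np.array([[1, 1, 0, 0],
                 [1, 0, 0, 2],
                 [0, 0, 0, 2]])
gt_ids, pred_ids, M = iou_matrix(gt, pred)
matches = greedy_match(M, tau=0.5)
```

Because the predictions are processed in a fixed order, the result can depend on that order when two predictions overlap the same ground truth instance; an optimal assignment would require a different matching strategy.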

5. Results

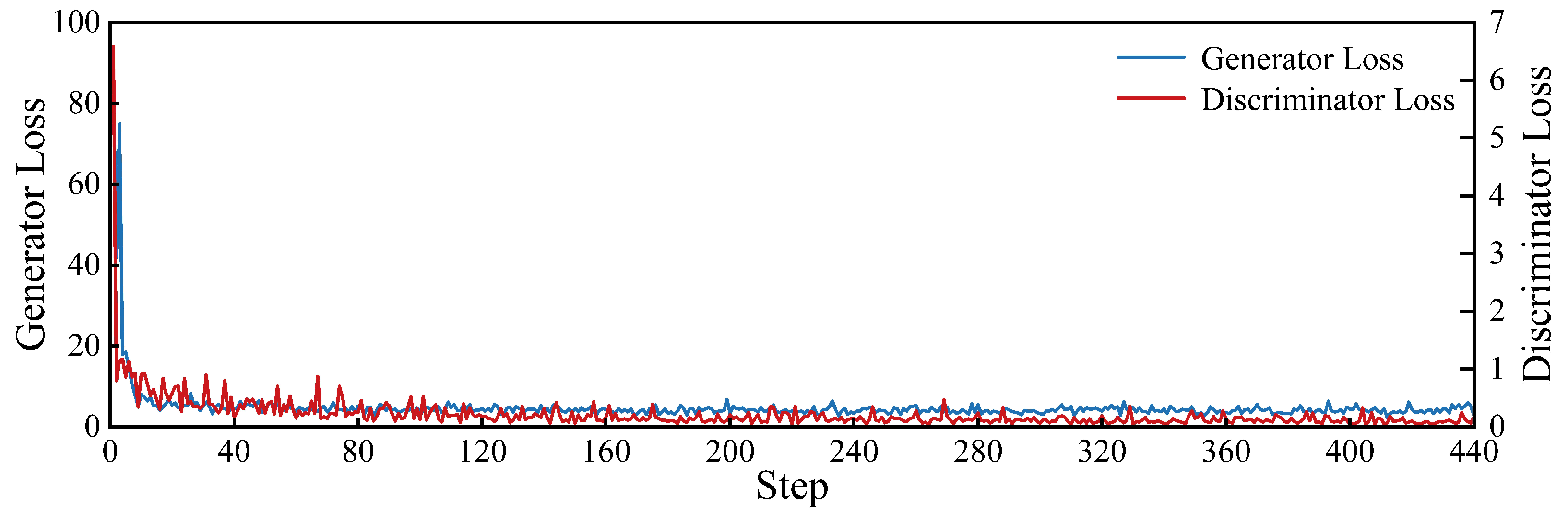

5.1. Model Training

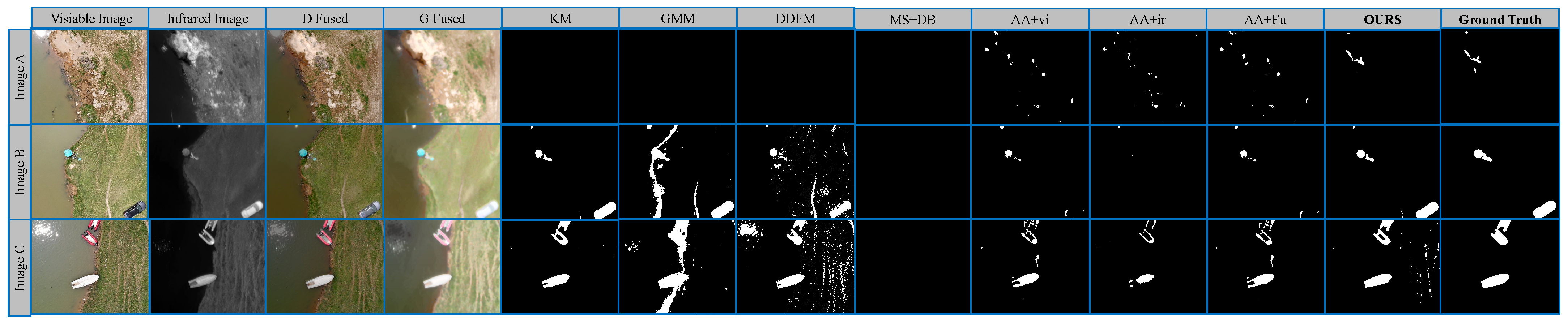

5.2. Comparative Experiments

- Segmentation Module (SM): We replaced the mean-shift algorithm with K-means [38] and with a Gaussian Mixture Model (GMM) [39]. Because the hyperparameter of these algorithms must be an integer, the optimisation module was reduced to an exhaustive search: we computed the segmentation result and its EI for each value between 1 and 6, and selected the result with the minimum EI as the final result. Detailed experimental results can be found in E 2-2 (K-means) and E 2-1 (GMM).

- Fusion Module (FM): We compare the FGM with DDFM [29], a diffusion-based fusion model. The rest of the framework remains unchanged. Detailed experimental results can be found in E 2-3.

- Optimisation Module (OM): We compare the proposed EI with the DB index [33]. This experiment is designed to test whether the proposed EI is a better optimisation objective than a traditional clustering validity index. Detailed experimental results can be found in E 2-4.
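The exhaustive search used for the integer hyperparameter in the segmentation-module comparison can be sketched as below. The helper name `select_integer_hyperparameter` is hypothetical, and `toy_score` is only a stand-in for "segment with this value, then compute the EI"; the definition of the EI is not reproduced in this section, so no attempt is made to implement it here.

```python
def select_integer_hyperparameter(score_fn, candidates=range(1, 7)):
    """Evaluate every candidate value and return the one with the lowest score."""
    scores = {k: score_fn(k) for k in candidates}
    best_k = min(scores, key=scores.get)
    return best_k, scores


# Hypothetical stand-in for the real objective (segmentation followed by EI).
def toy_score(k):
    return (k - 3) ** 2 + 0.5


best_k, scores = select_integer_hyperparameter(toy_score)
```

With only six candidate values, evaluating all of them is cheaper and more reliable than fitting a surrogate model, which is presumably why Bayesian optimization is not used for these two baselines.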

5.3. Ablation Studies

- Fusion Module (FM): To verify the fusion module, we removed the fusion process and segmented the infrared and visible images separately. The results are shown in E 3-1 (infrared only) and E 3-2 (visible only).

- LCSM-LCMM Module (LM): As the FGM can only process single-channel images, we conduct the fusion on the greyscale visible images and the infrared images. The rest remains unchanged and the results are shown in E 3-3.

- Optimisation Module (OM): We replace the BO with an empirical, adaptive rule for setting the hyperparameter of the MS algorithm; the results can be found in E 3-4. The baseline proceeds as follows:

- First, sample 100 pixels from the image and record them as set $S$.

- Next, calculate the distance in RGB space between every pair of distinct points in the sample set $S$ and record the distances as set $D$.

- Finally, calculate the $q$-quantile of the distance set $D$ for a fixed quantile level $q$ and record it as $d_q$. The bandwidth, a hyperparameter of the MS algorithm, is then set from $d_q$.
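The quantile-based baseline for the bandwidth can be sketched as below. The quantile level and the exact mapping from the quantile to the bandwidth are not given in this section, so `q=0.3` and the choice to use the quantile directly as the bandwidth are placeholders, and the function name `quantile_bandwidth` is hypothetical.

```python
import numpy as np


def quantile_bandwidth(image, q, n_samples=100, seed=0):
    """Estimate a mean-shift bandwidth from pairwise RGB distances."""
    rng = np.random.default_rng(seed)
    pixels = image.reshape(-1, 3).astype(float)
    # Sample set: pixels drawn without replacement.
    n = min(n_samples, len(pixels))
    sample = pixels[rng.choice(len(pixels), size=n, replace=False)]
    # Distance set: Euclidean RGB distance for every unordered pair.
    diff = sample[:, None, :] - sample[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    distances = dist[np.triu_indices(n, k=1)]
    # Assumption: the q-quantile itself is used as the bandwidth.
    return float(np.quantile(distances, q))


# Random stand-in for an RGB image; q=0.3 is a placeholder value.
image = np.random.default_rng(1).integers(0, 256, size=(32, 32, 3))
h = quantile_bandwidth(image, q=0.3)
```

Unlike Bayesian optimization, this rule needs a single pass over 100 sampled pixels, but it adapts to the colour spread of the image only and never looks at the resulting segmentation.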

5.4. Computational Efficiency Analysis

6. Discussion

6.1. Discussion on Fusion Module

6.2. Discussion on Segmentation and Optimisation Module

6.3. Discussion on Synergistic Effects of the Proposed Framework

6.4. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| mIoU | Mean Intersection over Union |
| TIR | Thermal Infrared Remote Sensing |
| SURF | Speeded-Up Robust Features |
| DFOV | Diagonal Field of View |
| RoMa | Robust Dense Feature Matching |
| MS | Mean-Shift |
| FGM | FusionGAN Model |
| BO | Bayesian Optimization |
| GP | Gaussian Process |
| LCSM | Luminance/Chrominance Separation Module |
| LCMM | Luminance/Chrominance Merging Module |
| DI | Dunn’s Index |
| DB | Davies–Bouldin Index |
| EI | Entropy Index |
| GT | Ground Truth |
| Pred | Prediction |
| DDFM | Denoising Diffusion image Fusion Model |

References

1. Kreibich, H.; Loon, A.F.; Schröter, K.; Ward, P.J.; Mazzoleni, M.; Sairam, N.; Abeshu, G.W.; Agafonova, S.; Aghakouchak, A.; Aksoy, H. The challenge of unprecedented floods and droughts in risk management. Nature 2022, 608, 80–86.

2. Ceccato, F.; Simonini, P. The effect of heterogeneities and small cavities on levee failures: The case study of the Panaro levee breach (Italy) on 6 December 2020. J. Flood Risk Manag. 2023, 16, e12882.

3. Vorogushyn, S.; Lindenschmidt, K.E.; Kreibich, H.; Apel, H.; Merz, B. Analysis of a detention basin impact on dike failure probabilities and flood risk for a channel-dike-floodplain system along the river Elbe, Germany. J. Hydrol. 2012, 436, 120–131.

4. Ghorbani, A.; Revil, A.; Bonelli, S.; Barde-Cabusson, S.; Girolami, L.; Nicoleau, F.; Vaudelet, P. Occurrence of sand boils landside of a river dike during flooding: A geophysical perspective. Eng. Geol. 2024, 329, 107403.

5. Li, R.; Wang, Z.; Sun, H.; Zhou, S.; Liu, Y.; Liu, J. Automatic Identification of Earth Rock Embankment Piping Hazards in Small and Medium Rivers Based on UAV Thermal Infrared and Visible Images. Remote Sens. 2023, 15, 4492.

6. Nam, J.; Chang, I.; Lim, J.S.; Song, J.; Lee, N.; Cho, H.H. Active adaptation in infrared and visible vision through multispectral thermal Lens. Int. Commun. Heat Mass Transf. 2024, 158, 107898.

7. Duan, Q.; Chen, B.; Luo, L. Rapid and Automatic UAV Detection of River Embankment Piping. Water Resour. Res. 2025, 61, e2024WR038931.

8. Su, H.; Ma, J.; Zhou, R.; Wen, Z. Detect and identify earth rock embankment leakage based on UAV visible and infrared images. Infrared Phys. Technol. 2022, 122, 104105.

9. Zhou, R.; Wen, Z.; Su, H. Automatic recognition of earth rock embankment leakage based on UAV passive infrared thermography and deep learning. ISPRS J. Photogramm. Remote Sens. 2022, 191, 85–104.

10. Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

11. Falkner, S.; Klein, A.; Hutter, F. BOHB: Robust and Efficient Hyperparameter Optimization at Scale. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Dy, J., Krause, A., Eds.; Proceedings of Machine Learning Research (PMLR); Volume 80, pp. 1437–1446.

12. Bersan, S.; Koelewijn, A.R.; Simonini, P. Effectiveness of distributed temperature measurements for early detection of piping in river embankments. Hydrol. Earth Syst. Sci. 2018, 22, 1491–1508.

13. Loke, M.; Wilkinson, P.; Chambers, J.; Uhlemann, S.; Sorensen, J. Optimized arrays for 2-D resistivity survey lines with a large number of electrodes. J. Appl. Geophys. 2015, 112, 136–146.

14. Baccani, G.; Bonechi, L.; Bongi, M.; Casagli, N.; Ciaranfi, R.; Ciulli, V.; D’Alessandro, R.; Gonzi, S.; Lombardi, L.; Morelli, S.; et al. The reliability of muography applied in the detection of the animal burrows within River Levees validated by means of geophysical techniques. J. Appl. Geophys. 2021, 191, 104376.

15. Wang, T.; Chen, J.; Li, P.; Yin, Y.; Shen, C. Natural tracing for concentrated leakage detection in a rockfill dam. Eng. Geol. 2019, 249, 1–12.

16. Wieland, M.; Martinis, S.; Kiefl, R.; Gstaiger, V. Semantic segmentation of water bodies in very high-resolution satellite and aerial images. Remote Sens. Environ. 2023, 287, 113452.

17. Chen, B.; Duan, Q.; Luo, L. From manual to UAV-based inspection: Efficient detection of levee seepage hazards driven by thermal infrared image and deep learning. Int. J. Disaster Risk Reduct. 2024, 114, 104982.

18. Jha, S.B.; Babiceanu, R.F. Deep CNN-based visual defect detection: Survey of current literature. Comput. Ind. 2023, 148, 103911.

19. Fanwu, M.; Tao, G.; Di, W.; Xiangyi, X. Unsupervised surface defect detection using dictionary-based sparse representation. Eng. Appl. Artif. Intell. 2025, 143, 110020.

20. Wang, S.; Wang, X.; Zhang, L.; Zhong, Y. Auto-AD: Autonomous hyperspectral anomaly detection network based on fully convolutional autoencoder. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

21. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

22. Su, S.; Yan, L.; Xie, H.; Chen, C.; Zhang, X.; Gao, L.; Zhang, R. Multi-Level Hazard Detection Using a UAV-Mounted Multi-Sensor for Levee Inspection. Drones 2024, 8, 90.

23. Tang, Y.; He, H.; Wang, Y.; Mao, Z.; Wang, H. Multi-modality 3D object detection in autonomous driving: A review. Neurocomputing 2023, 553, 126587.

24. Salvi, M.; Loh, H.W.; Seoni, S.; Barua, P.D.; García, S.; Molinari, F.; Acharya, U.R. Multi-modality approaches for medical support systems: A systematic review of the last decade. Inf. Fusion 2024, 103, 102134.

25. Tang, H.; Liu, G.; Qian, Y.; Wang, J.; Xiong, J. EgeFusion: Towards Edge Gradient Enhancement in Infrared and Visible Image Fusion With Multi-Scale Transform. IEEE Trans. Comput. Imaging 2024, 10, 385–398.

26. Li, X.; Tan, H.; Zhou, F.; Wang, G.; Li, X. Infrared and visible image fusion based on domain transform filtering and sparse representation. Infrared Phys. Technol. 2023, 131, 104701.

27. Li, H.; Wu, X.J. DenseFuse: A Fusion Approach to Infrared and Visible Images. IEEE Trans. Image Process. 2019, 28, 2614–2623.

28. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. In Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS ’20), Red Hook, NY, USA, 6–12 December 2020.

29. Zhao, Z.; Bai, H.; Zhu, Y.; Zhang, J.; Xu, S.; Zhang, Y.; Zhang, K.; Meng, D.; Timofte, R.; Van Gool, L. DDFM: Denoising diffusion model for multi-modality image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 8082–8093.

30. Edstedt, J.; Sun, Q.; Bökman, G.; Wadenbäck, M.; Felsberg, M. RoMa: Robust Dense Feature Matching. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 19790–19800.

31. He, X.; Zhao, K.; Chu, X. AutoML: A survey of the state-of-the-art. Knowl.-Based Syst. 2021, 212, 106622.

32. Bezdek, J.C.; Pal, N.R. Cluster Validation with Generalized Dunn’s Indices. In Proceedings of the 1995 Second New Zealand International Two-Stream Conference on Artificial Neural Networks and Expert Systems, Dunedin, New Zealand, 20–23 November 1995.

33. Davies, D.L.; Bouldin, D.W. A Cluster Separation Measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, PAMI-1, 224–227.

34. Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware Dual Adversarial Learning and a Multi-scenario Multi-Modality Benchmark to Fuse Infrared and Visible for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811.

35. Xu, H.; Ma, J.; Le, Z.; Jiang, J.; Guo, X. FusionDN: A Unified Densely Connected Network for Image Fusion. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

36. Pengyu, Z.; Zhao, J.; Wang, D.; Lu, H.; Ruan, X. Visible-Thermal UAV Tracking: A Large-Scale Benchmark and New Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

37. Zhong, Y.; Hu, X.; Luo, C.; Wang, X.; Zhao, J.; Zhang, L. WHU-Hi: UAV-borne hyperspectral with high spatial resolution (H2) benchmark datasets and classifier for precise crop identification based on deep convolutional neural network with CRF. Remote Sens. Environ. 2020, 250, 112012.

38. Ikotun, A.M.; Ezugwu, A.E.; Abualigah, L.; Abuhaija, B.; Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 2023, 622, 178–210.

39. Rasmussen, C. The infinite Gaussian mixture model. Adv. Neural Inf. Process. Syst. 1999, 12.

40. Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

41. Liu, J.; Wu, G.; Liu, Z.; Wang, D.; Jiang, Z.; Ma, L.; Zhong, W.; Fan, X. Infrared and visible image fusion: From data compatibility to task adaption. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2349–2369.

42. Wang, X.; Zhang, X.; Cao, Y.; Wang, W.; Shen, C.; Huang, T. SegGPT: Towards Segmenting Everything in Context. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 1130–1140.

43. Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding DINO: Marrying DINO with Grounded Pre-training for Open-Set Object Detection. In European Conference on Computer Vision, Proceedings of the Computer Vision–ECCV 2024, 18th European Conference, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 38–55.

| Sensors | Parameters | Specifications |
|---|---|---|
| RGB Camera | Specifications of Sensor | 1/2.3″ CMOS |
| RGB Camera | Effective Pixels of Sensor | 12 million |
| RGB Camera | DFOV of Lens | 82.9° |
| RGB Camera | Focal Length of Lens | 4.5 mm (Equivalent: 24 mm) |
| RGB Camera | Aperture of Lens | f/2.8 |
| RGB Camera | Focus Distance | 1 m to infinity |
| RGB Camera | Exposure Mode | Program Auto Exposure |
| RGB Camera | Exposure Compensation | ±3.0 (in 1/3-step increments) |
| RGB Camera | Metering Mode | Spot Metering, Center-Weighted Metering |
| RGB Camera | Shutter Speed | 1 s to 1/8000 s |
| RGB Camera | ISO Range | 100 to 25,600 |
| Thermal Camera | Type | Uncooled Vanadium Oxide (VOx) Microbolometer |
| Thermal Camera | DFOV of Lens | 40.6° |
| Thermal Camera | Focal Length of Lens | 13.5 mm (Equivalent: 58 mm) |
| Thermal Camera | Aperture of Lens | f/1.0 |
| Thermal Camera | Focus Distance of Lens | 5 m to infinity |
| Thermal Camera | Photo Resolution | 640 × 512 |
| Thermal Camera | Pixel Pitch | 12 μm |
| Thermal Camera | Spectral Range | 8–14 μm |
| Thermal Camera | NETD | ≤50 mK @ f/1.0 |
| Thermal Camera | Temperature Measurement Modes | Point Measurement, Area Measurement |
| Thermal Camera | Temperature Range | −40 °C to 150 °C (High Gain Mode); −40 °C to 550 °C (Low Gain Mode) |

Evaluation metrics of abnormal targets for the comparative experiments:

| ID | Experiments | EB or IB | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|---|---|
| E 2-1 | (SM) GMM | IB | 0.254 | 0.565 | 0.315 | 0.231 |
| E 2-2 | (SM) KM | IB | 0.297 | 0.332 | 0.305 | 0.253 |
| E 2-3 | (FM) DDFM | IB | 0.162 | 0.520 | 0.219 | 0.148 |
| E 2-4 | (OM) MS+DB | IB | 0.054 | 0.047 | 0.050 | 0.043 |
| E 2-5 | Auto-AD+RGB | EB | 0.344 | 0.260 | 0.235 | 0.157 |
| E 2-6 | Auto-AD+Thermal | EB | 0.338 | 0.164 | 0.186 | 0.129 |
| E 2-7 | Auto-AD+Fused | EB | 0.349 | 0.256 | 0.235 | 0.158 |
| E 0-0 | ours | - | 0.489 | 0.605 | 0.479 | 0.378 |
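For reference, the four metrics reported in the table follow the standard confusion-count definitions. The sketch below computes them for a single pair of binary masks. How the paper aggregates them (per pixel or per matched instance, per image or over the whole dataset) is not specified in this section, so the pixel-level, single-image treatment and the function name `mask_metrics` are assumptions.

```python
import numpy as np


def mask_metrics(gt, pred):
    """Precision, recall, F1 and IoU for two binary masks of equal shape."""
    gt = np.asarray(gt, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    tp = np.sum(gt & pred)    # abnormal pixels correctly predicted
    fp = np.sum(~gt & pred)   # normal pixels predicted as abnormal
    fn = np.sum(gt & ~pred)   # abnormal pixels that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return float(precision), float(recall), float(f1), float(iou)


# Toy masks: 2 true positives, 1 false positive, 1 false negative.
gt = [[1, 1, 0], [0, 1, 0]]
pred = [[1, 0, 0], [0, 1, 1]]
precision, recall, f1, iou = mask_metrics(gt, pred)
```

When such metrics are averaged over images, the mean F1-score generally differs from the harmonic mean of the mean precision and mean recall, so the columns of a results table need not satisfy the single-image identity.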

Evaluation metrics of abnormal targets for the ablation studies:

| ID | Experiments | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|---|
| E 3-1 | (FM) Only ir | 0.290 | 0.428 | 0.319 | 0.244 |
| E 3-2 | (FM) Only vi | 0.166 | 0.516 | 0.207 | 0.144 |
| E 3-3 | (LM) Greyscale | 0.218 | 0.326 | 0.252 | 0.188 |
| E 3-4 | (OM) Without BO | 0.347 | 0.431 | 0.367 | 0.300 |
| E 0-0 | ours | 0.489 | 0.605 | 0.479 | 0.378 |

| ID | Model | Module | Processing Time per Image (s) |
|---|---|---|---|
| E 4-1 | - | RoMa Registration | 10.439 |
| E 4-2 | - | FGM Fusion | 0.093 |
| E 4-3 | - | KM Abnormal Segmentation | 1.012 |
| E 4-4 | - | GMM Abnormal Segmentation | 21.555 |
| E 4-5 | - | MS Abnormal Segmentation (without optimization) | 1.703 |
| E 4-6 | - | MS Abnormal Segmentation ( iterations of optimization) | |
| E 0-0 | ours | Total ( iterations of optimization) | 80.232 |
| E 4-7 | Auto-AD | - | 119.202 |

| Model | EN ↑ | SF ↑ | MI ↑ | PSNR ↑ | VIF ↑ | SSIM ↑ |
|---|---|---|---|---|---|---|
| FGAN | 7.024 | 2.477 | 0.980 | 10.074 | 0.404 | 0.553 |
| DDFM | 6.520 | 18.505 | 1.516 | 13.838 | 1.220 | 1.397 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Wang, Z.; Chen, J.; Wang, F.; Gao, L. An Automated Framework for Abnormal Target Segmentation in Levee Scenarios Using Fusion of UAV-Based Infrared and Visible Imagery. Remote Sens. 2025, 17, 3398. https://doi.org/10.3390/rs17203398
