FLDSensing: Remote Sensing Flood Inundation Mapping with FLDPLN

Highlights

- The FLDSensing method was developed for remote sensing flood mapping using satellite imagery and the FLDPLN flood inundation model.

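- The FLDSensing method improves remote sensing flood extent mapping (CSI of 0.89 versus 0.55 for Sentinel-2 imagery alone) and performs favorably against existing hybrid approaches (CSI of 0.81 for FLEXTH and 0.38 for FwDET 2.1).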

Abstract

1. Introduction

2. Study Area and Data

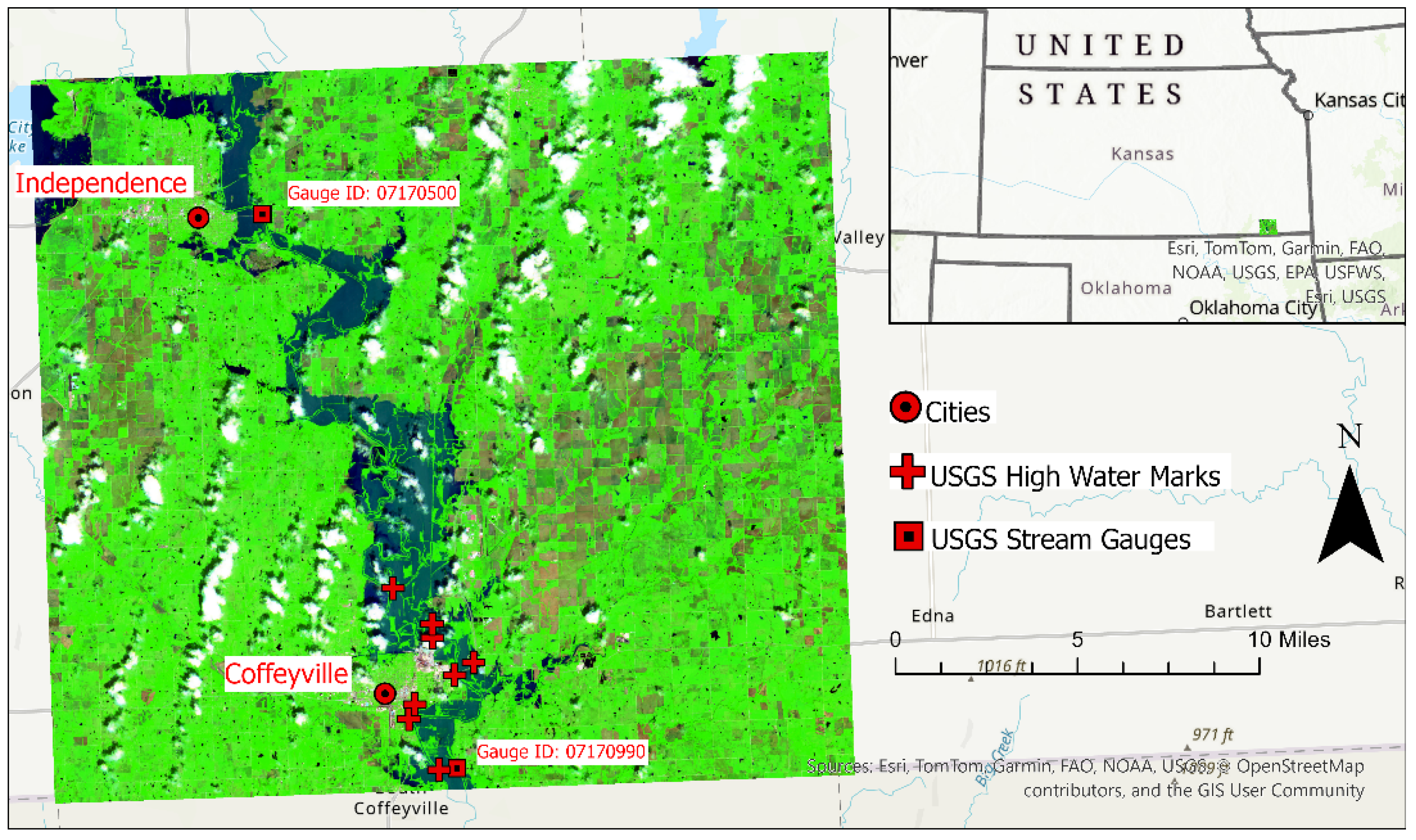

2.1. Study Area

2.2. Data

2.2.1. Remote Sensing Imagery and Cloud Mask

2.2.2. Land Cover Dataset and DEMs

2.2.3. Global Flood Monitoring Products

3. Methodology

3.1. The FLDPLN Model and Library

3.2. Identifying Clean Water-Land Flood Edge Pixels

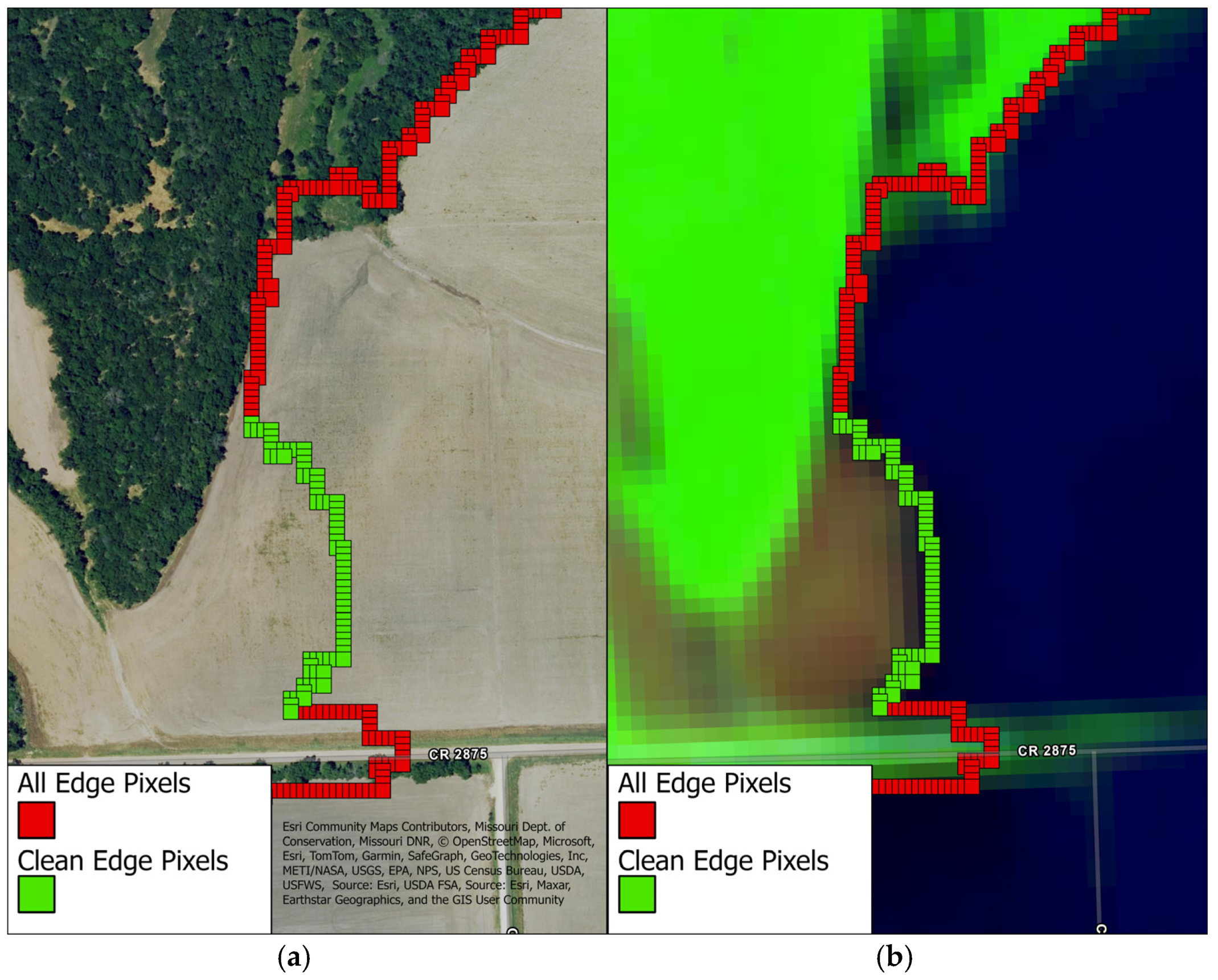

3.2.1. Identify Flood Edge Pixels

3.2.2. Identify Clean Flood Edge Pixels

3.3. Estimate FSP DOF Using Clean Flood Edge Pixels

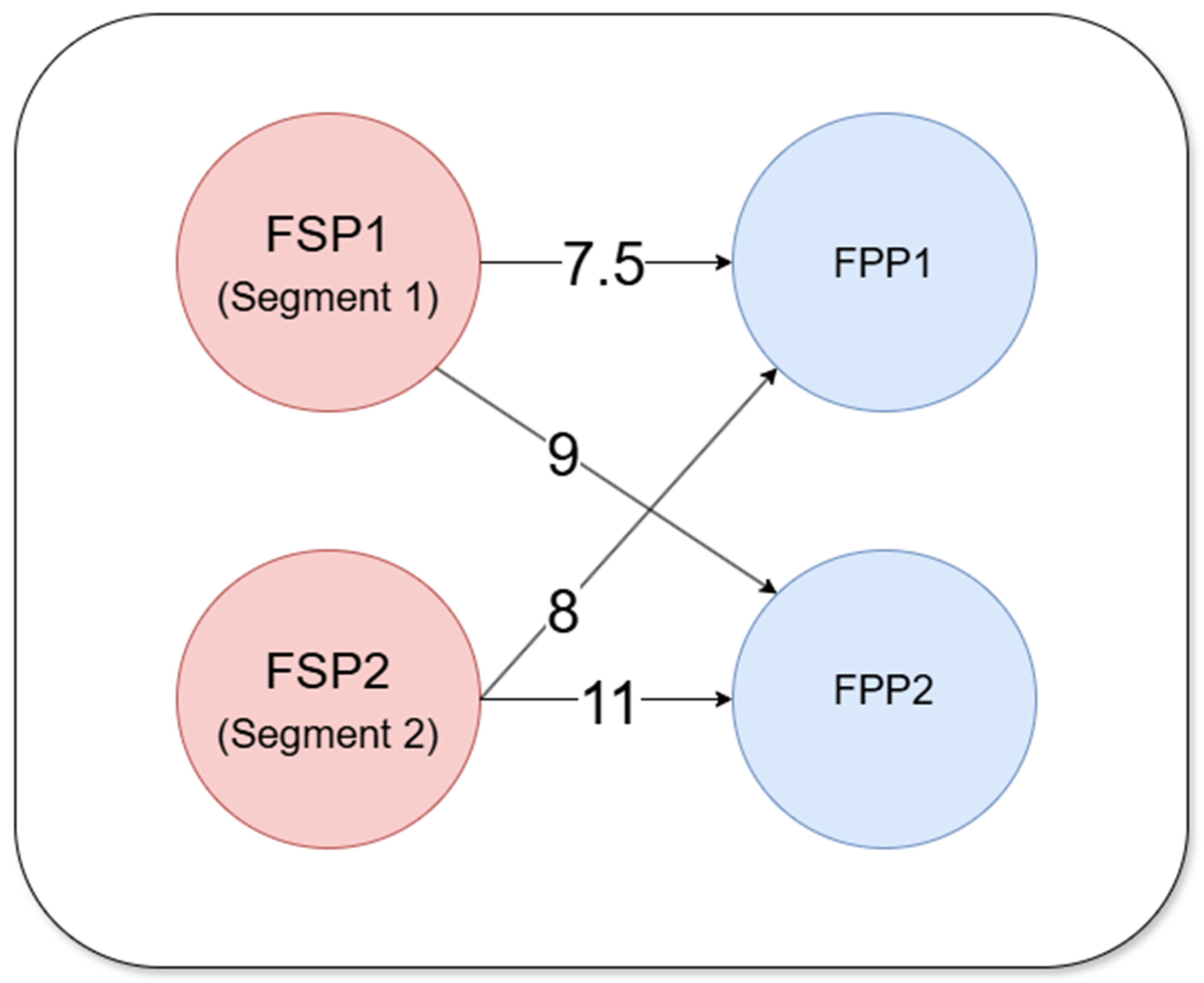

3.3.1. Identify FSPs and Estimate Their DOFs

3.3.2. Filter FSPs by Stream Order

3.4. Smooth DOFs and Generate Flood Inundation Map

3.5. Ground Truth and Accuracy Metrics

4. Results

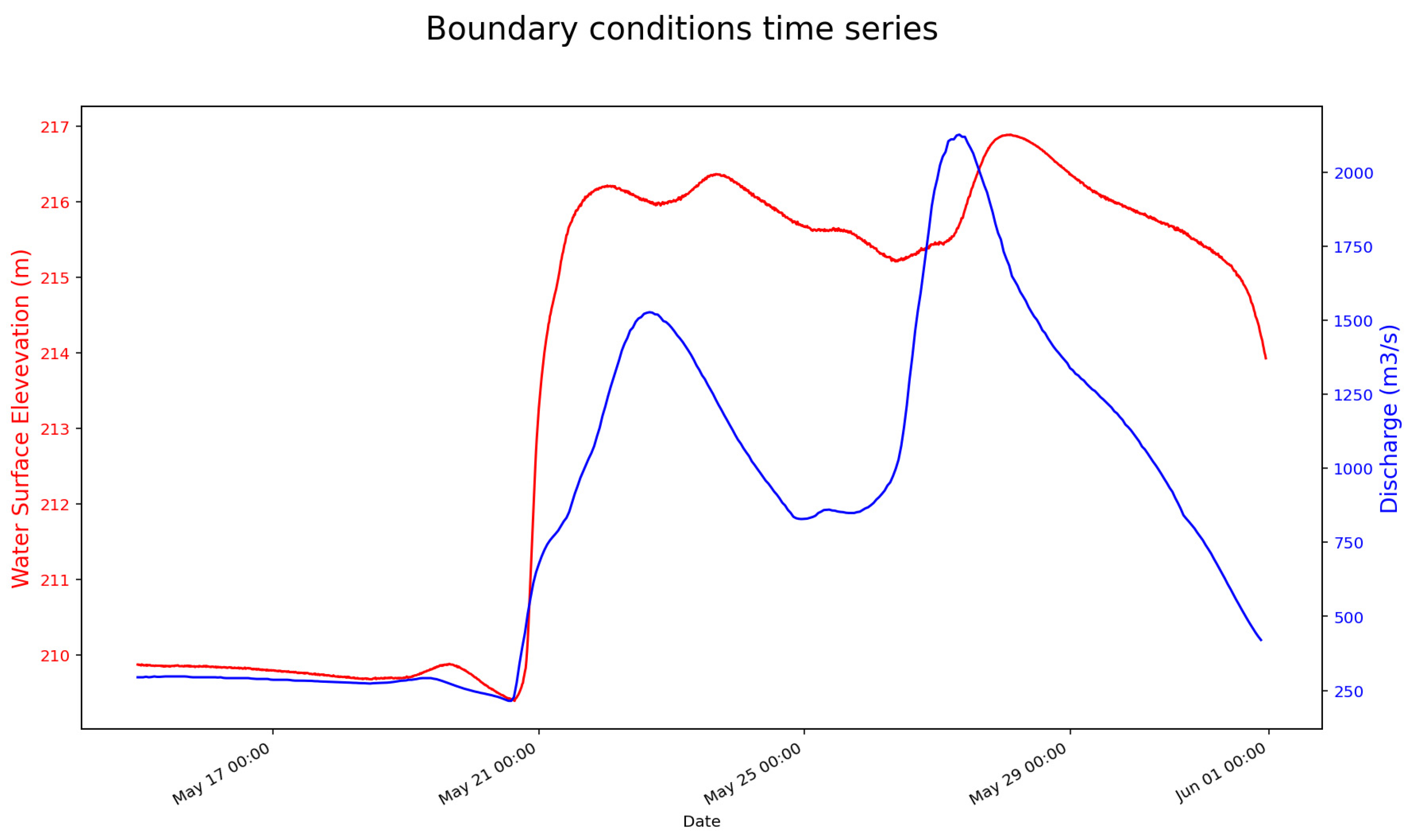

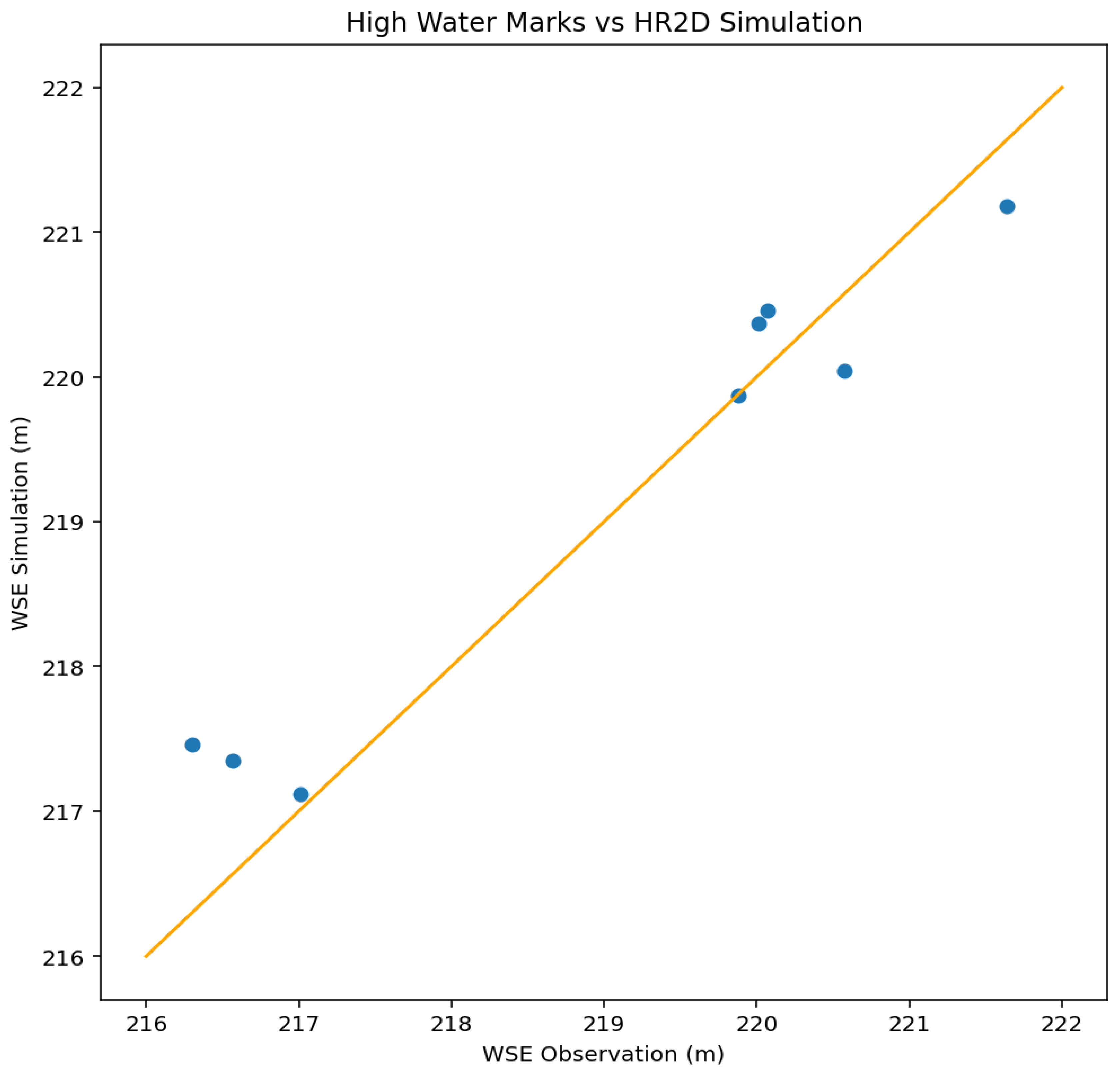

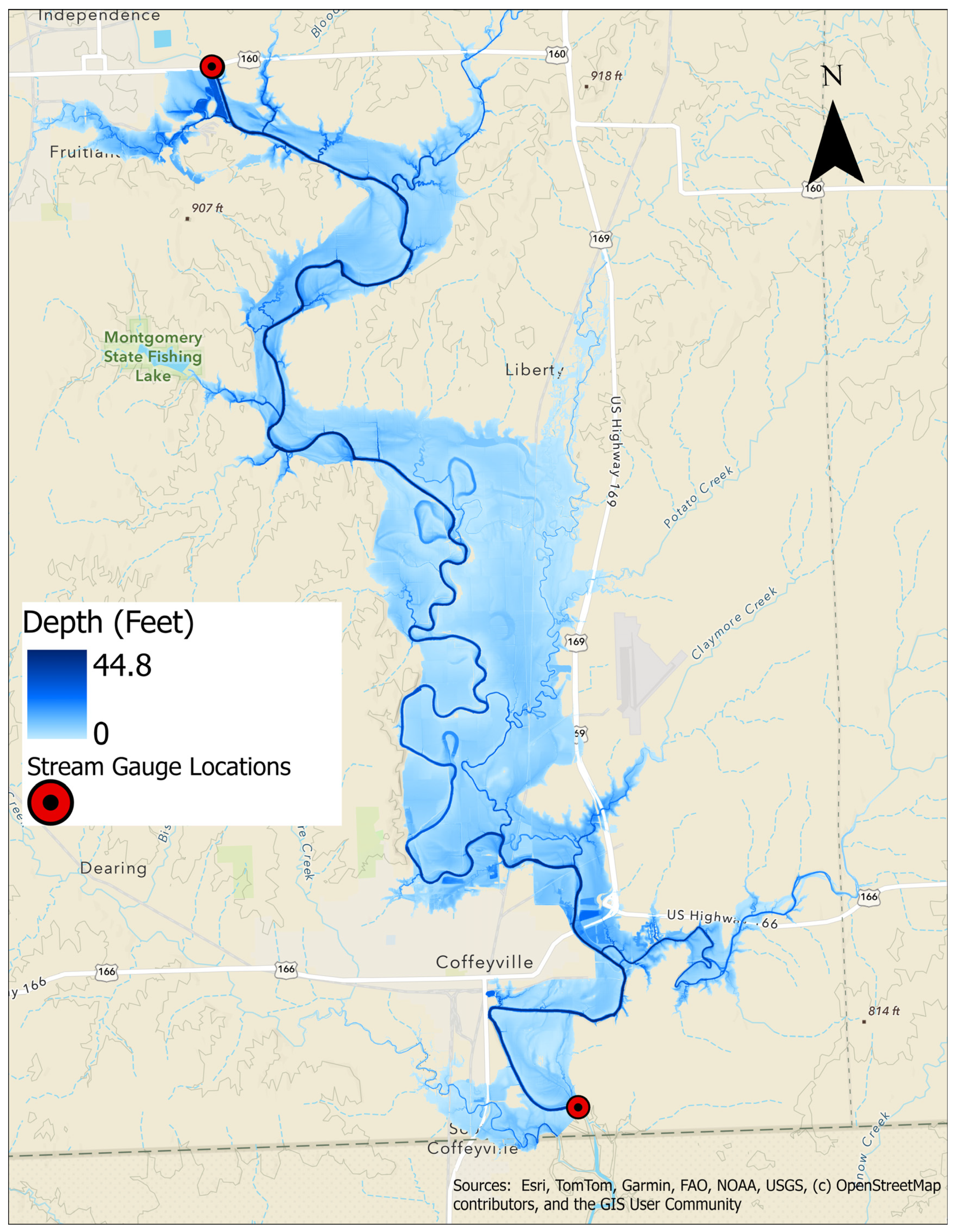

4.1. HEC-RAS 2D Inundation Map

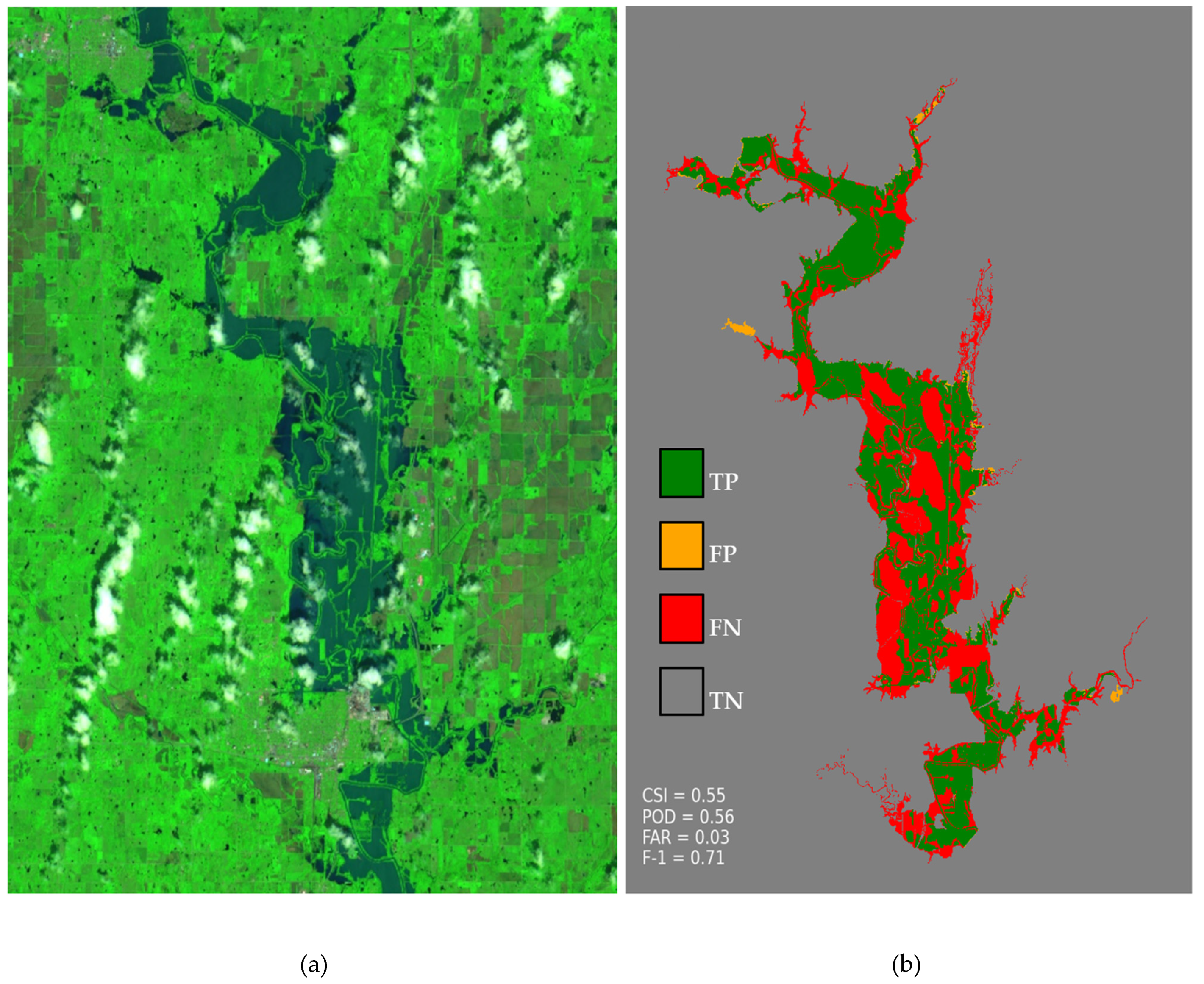

4.2. Remote Sensing-Based FIM

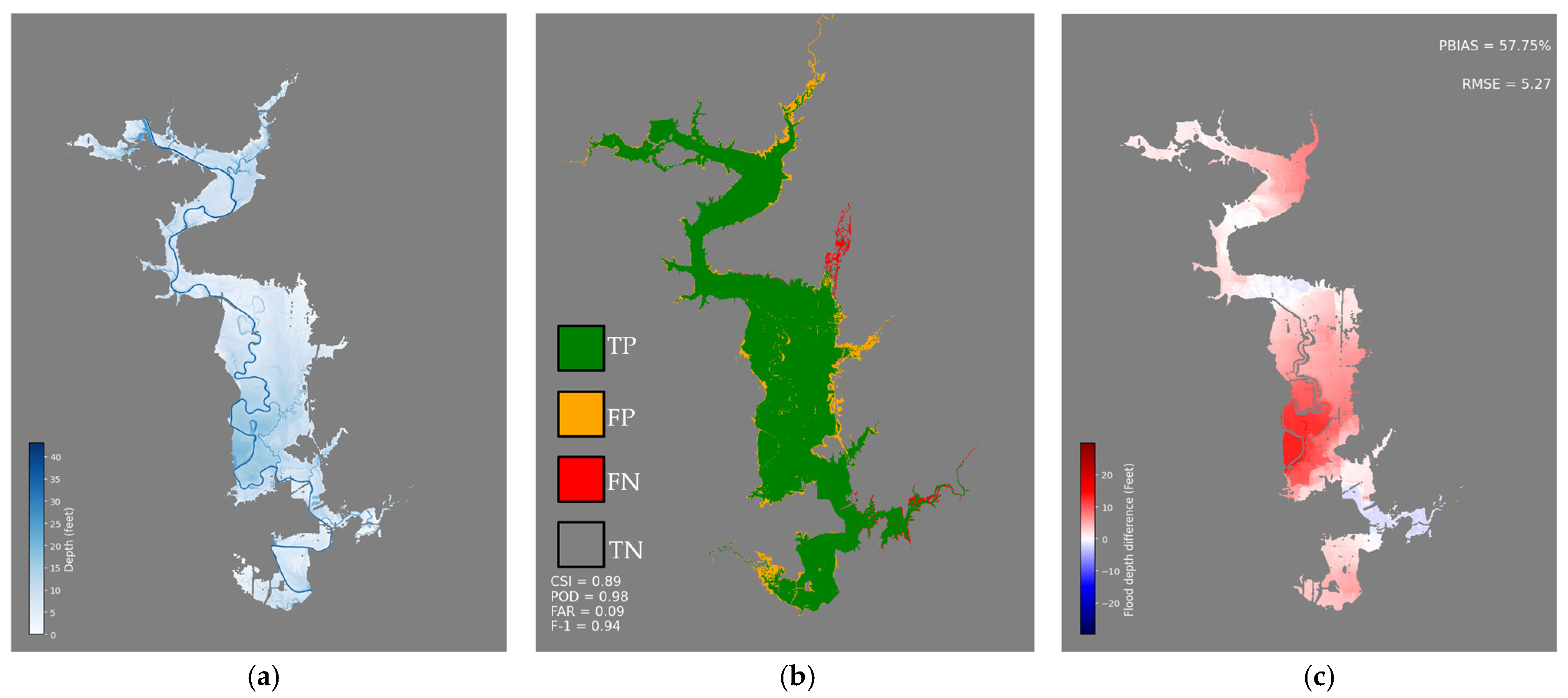

4.3. FLDSensing FIM

4.4. Comparison with the FLEXTH and FwDET Methods

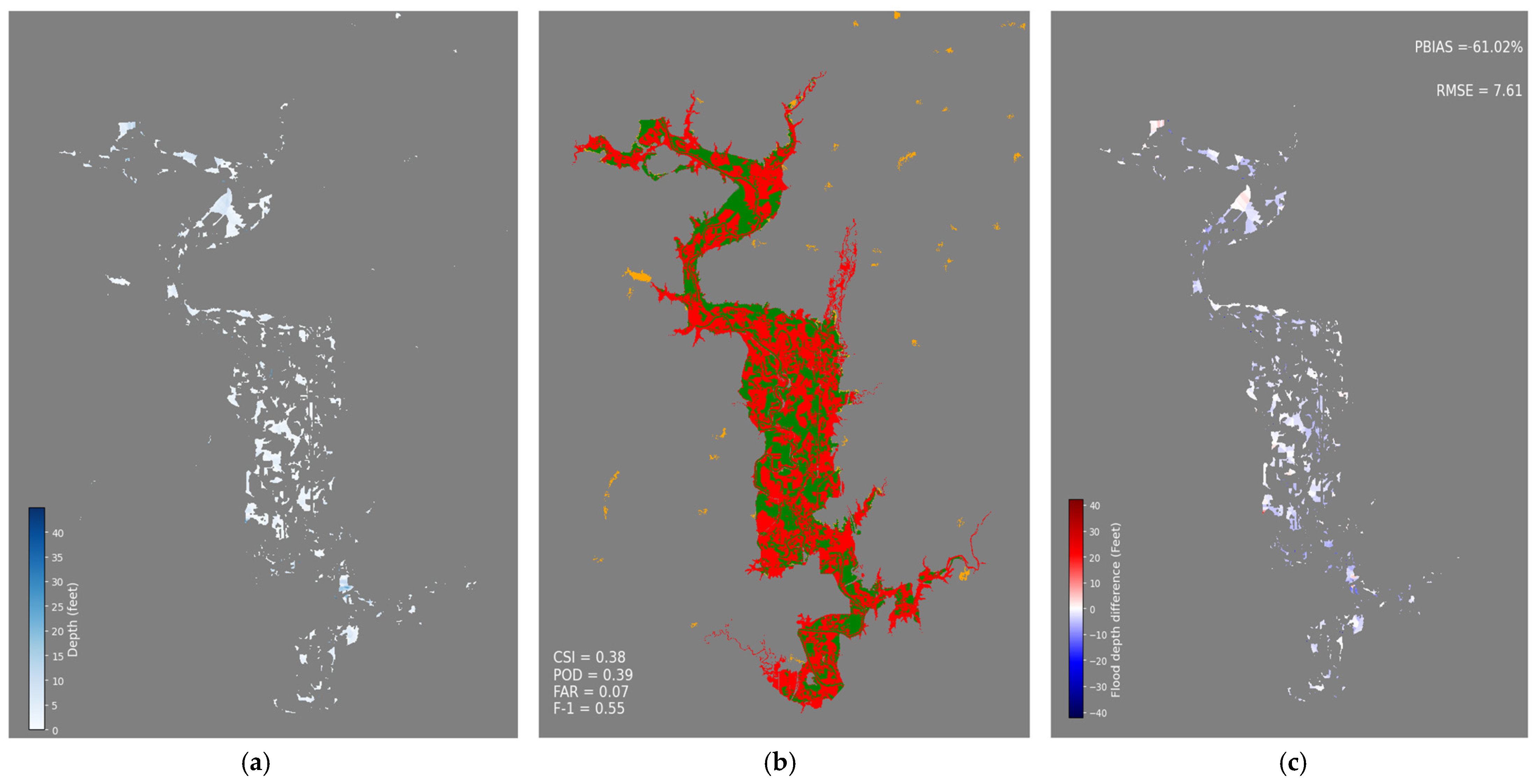

4.4.1. FwDET

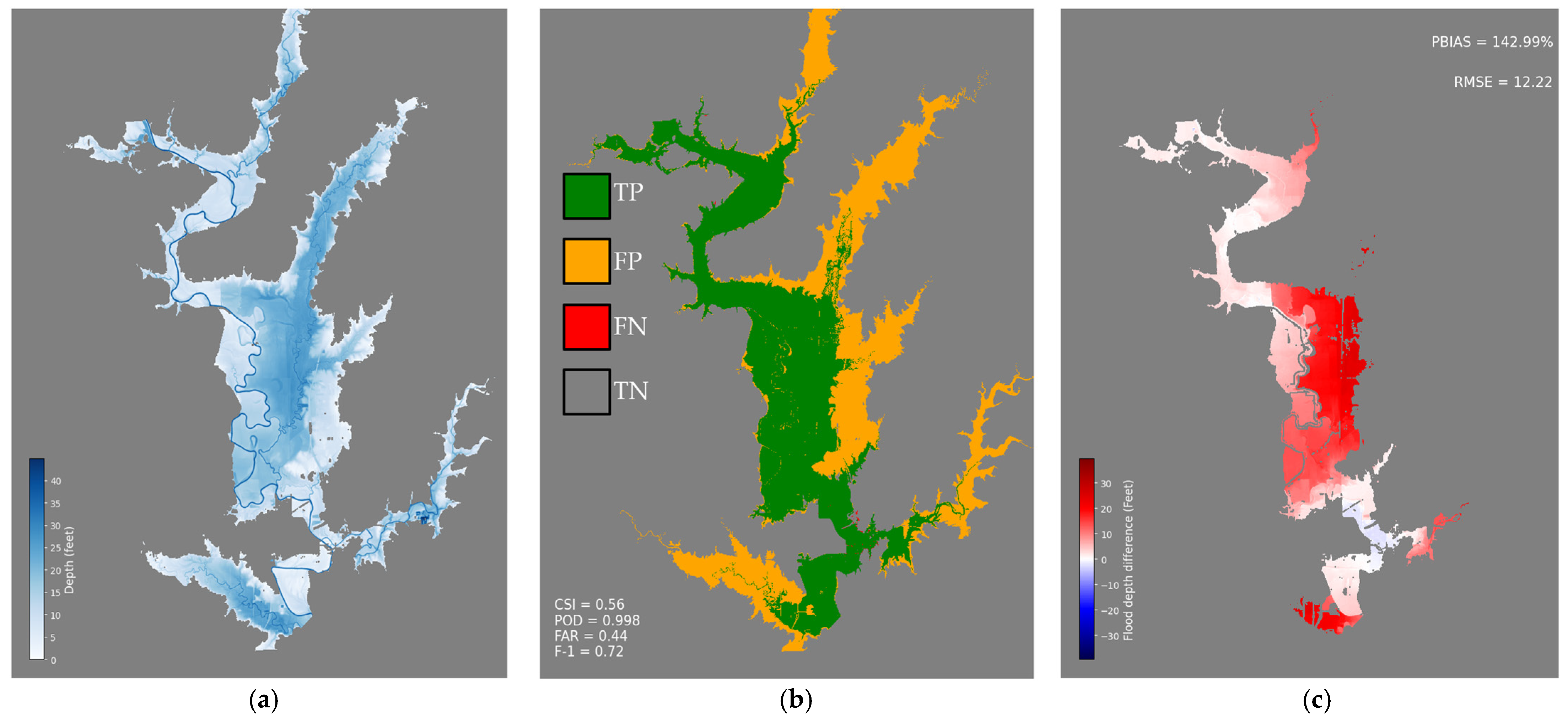

4.4.2. FLEXTH

5. Discussion

5.1. The Tributary Problem

5.2. Impacts of the Savitzky–Golay Filter

5.3. Model Parameters, Uncertainty, and Best Practices

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FSP | Flood Source Pixel |
| FPP | Floodplain Pixel |
| DTF | Depth to Flood |
| DOF | Depth of Flow |
| DEM | Digital Elevation Model |
| FIM | Flood Inundation Mapping |
| POD | Probability of Detection |
| FAR | False Alarm Ratio |
| CSI | Critical Success Index |
| HAND | Height Above Nearest Drainage |
| GFM | Global Flood Monitoring |
| AWEI | Automated Water Extraction Index |
| NDWI | Normalized Difference Water Index |
| MNDWI | Modified Normalized Difference Water Index |
| GEE | Google Earth Engine |
| PBIAS | Percent Bias |
| RMSE | Root Mean Square Error |
| HR2D | HEC-RAS 2D |
| WSE | Water Surface Elevation |
| SAR | Synthetic Aperture Radar |
| GloFAS | Global Flood Awareness System |


| Case | DOF (FSP1) | DOF (FSP2) | Flood Depth at FPP1 | Flood Depth at FPP2 | Valid? |
|---|---|---|---|---|---|
| 1 | 7.5 | 8 | max(7.5 − 7.5, 8 − 8) = 0 | max(7.5 − 9, 8 − 11) = −1.5 | No (0, −1.5) |
| 2 | 7.5 | 11 | max(7.5 − 7.5, 11 − 8) = 3 | max(7.5 − 9, 11 − 11) = 0 | Yes (3, 0) |
| 3 | 9 | 8 | max(9 − 7.5, 8 − 8) = 1.5 | max(9 − 9, 8 − 11) = 0 | Yes (1.5, 0) |
| 4 | 9 | 11 | max(9 − 7.5, 11 − 8) = 3 | max(9 − 9, 11 − 11) = 0 | Yes (3, 0) |
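
The worked cases above can be reproduced with a few lines of Python. This is a minimal sketch, not the FLDPLN library's API: the function names and the dictionary layout are hypothetical, the DTF values are read off the expressions in the table, and the validity rule (every flood edge pixel must end up with a non-negative depth) is an assumption inferred from the "Valid?" column.

```python
from typing import Dict

# DTF (depth to flood) from each flood source pixel (FSP) to each floodplain
# pixel (FPP), read off the expressions in the table above.
DTF: Dict[str, Dict[str, float]] = {
    "FPP1": {"FSP1": 7.5, "FSP2": 8.0},
    "FPP2": {"FSP1": 9.0, "FSP2": 11.0},
}

# Candidate DOF (depth of flow) values at the two FSPs for the four cases.
CASES: Dict[int, Dict[str, float]] = {
    1: {"FSP1": 7.5, "FSP2": 8.0},
    2: {"FSP1": 7.5, "FSP2": 11.0},
    3: {"FSP1": 9.0, "FSP2": 8.0},
    4: {"FSP1": 9.0, "FSP2": 11.0},
}


def flood_depths(dof: Dict[str, float]) -> Dict[str, float]:
    """Depth at each FPP = max over FSPs of (DOF at the FSP - DTF to the FPP)."""
    return {
        fpp: max(dof[fsp] - dtf for fsp, dtf in sources.items())
        for fpp, sources in DTF.items()
    }


def is_valid(depths: Dict[str, float]) -> bool:
    """Assumed rule: a DOF combination is valid if no FPP has a negative depth."""
    return all(depth >= 0 for depth in depths.values())


for case, dof in CASES.items():
    depths = flood_depths(dof)
    print(case, depths, "valid" if is_valid(depths) else "not valid")
```

Only case 1 fails the check, because neither FSP carries enough depth of flow to reach FPP2.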

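| Metric | Formula | Target Score |
|---|---|---|
| Critical Success Index (CSI) | $\mathrm{CSI} = \frac{TP}{TP + FP + FN}$ | 1 |
| Probability of Detection (POD) | $\mathrm{POD} = \frac{TP}{TP + FN}$ | 1 |
| False Alarm Ratio (FAR) | $\mathrm{FAR} = \frac{FP}{TP + FP}$ | 0 |
| F1 Score | $\mathrm{Recall} = \frac{TP}{TP + FN}$, $\mathrm{Precision} = \frac{TP}{TP + FP}$, $\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | 1 |

Here TP, FP, and FN are the numbers of true positive, false positive, and false negative pixels, respectively.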
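
A minimal sketch of how the four extent metrics in the table above can be computed from a predicted and a reference inundation grid. It assumes the standard contingency-table definitions, with TP, FP, and FN counting true positive, false positive, and false negative pixels. The function name and the toy grids are hypothetical and are not taken from the study.

```python
from typing import Dict, Sequence


def extent_metrics(predicted: Sequence[int], reference: Sequence[int]) -> Dict[str, float]:
    """Compare two flattened wet (1) / dry (0) grids of equal length.

    Assumes each grid contains at least one wet pixel, so no denominator is zero.
    """
    if len(predicted) != len(reference):
        raise ValueError("grids must have the same number of pixels")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p == 1 and r == 1)  # wet in both
    fp = sum(1 for p, r in pairs if p == 1 and r == 0)  # predicted wet only
    fn = sum(1 for p, r in pairs if p == 0 and r == 1)  # reference wet only
    pod = tp / (tp + fn)        # probability of detection (recall)
    precision = tp / (tp + fp)
    return {
        "CSI": tp / (tp + fp + fn),
        "POD": pod,
        "FAR": fp / (tp + fp),
        "F1": 2 * precision * pod / (precision + pod),
    }


# Toy grids for illustration only: 3 hits, 1 false alarm, 1 miss.
reference = [1, 1, 1, 1, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0]
print(extent_metrics(predicted, reference))
# CSI = 0.6, POD = 0.75, FAR = 0.25, F1 = 0.75
```

Because FAR equals 1 − Precision, F1 can also be recovered from the POD and FAR columns of a results table.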

| Land Cover | Range Evaluated for Roughness Coefficient | Final Roughness Coefficient |
|---|---|---|
| Open water | 0.02–0.045 | 0.035 |
| Developed areas | 0.075–0.15 | 0.08 |
| Barren land | 0.03–0.05 | 0.04 |
| Forests/Wetlands | 0.08–0.2 | 0.1 |
| Cultivated crops | 0.04–0.065 | 0.05 |

| Results | CSI | POD | FAR | F1 | PBIAS (%) | RMSE (ft) |
|---|---|---|---|---|---|---|
| Sentinel-2 Only | 0.55 | 0.56 | 0.03 | 0.71 | N/A | N/A |
| FLDSensing | 0.89 | 0.98 | 0.09 | 0.94 | 57.75 | 5.27 |
| FwDET 2.1 | 0.38 | 0.39 | 0.07 | 0.55 | −61.02 | 7.61 |
| FLEXTH | 0.81 | 0.90 | 0.11 | 0.89 | −29.98 | 3.38 |
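
The two depth metrics in the table above can be sketched as follows. The sign convention for PBIAS (simulated minus reference, so that positive values mean overestimation) is an assumption inferred from the table, where methods with low POD have negative PBIAS; the function names and the sample depths are hypothetical and are not study data.

```python
import math
from typing import Sequence


def pbias(simulated: Sequence[float], reference: Sequence[float]) -> float:
    """Percent bias; positive means the simulated depths are too deep overall.

    Assumed convention: 100 * sum(simulated - reference) / sum(reference).
    """
    total_error = sum(s - r for s, r in zip(simulated, reference))
    return 100.0 * total_error / sum(reference)


def rmse(simulated: Sequence[float], reference: Sequence[float]) -> float:
    """Root mean square error, in the same units as the input depths."""
    squared = [(s - r) ** 2 for s, r in zip(simulated, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Illustrative depths in feet at three pixels; each is overestimated by 1 ft.
reference_depths = [2.0, 4.0, 6.0]
simulated_depths = [3.0, 5.0, 7.0]
print(pbias(simulated_depths, reference_depths))  # 25.0
print(rmse(simulated_depths, reference_depths))   # 1.0
```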

| Results | CSI | POD | FAR | F1 | PBIAS (%) | RMSE (ft) |
|---|---|---|---|---|---|---|
| FLDSensing (with only mainstem) | 0.89 | 0.98 | 0.09 | 0.94 | 57.75 | 5.27 |
| FLDSensing (all tributaries) | 0.56 | 0.998 | 0.44 | 0.72 | 142.99 | 12.22 |
| FLDSensing (without filtering) | 0.75 | 0.98 | 0.24 | 0.86 | 135.69 | 10.06 |

| Window Size | F1 | FAR | PBIAS (%) |
|---|---|---|---|
| 101 | 0.88 | 0.21 | 113.53 |
| 1001 | 0.88 | 0.19 | 108.80 |
| 7001 | 0.93 | 0.11 | 55.56 |
| 14,001 | 0.94 | 0.09 | 57.75 |
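
To make the window-size parameter in the table above concrete, the following is a minimal pure-Python sketch of Savitzky–Golay smoothing along a sequence of DOF values. It assumes a quadratic local fit and shrinks the window near the ends of the sequence; both choices, the function name, and the sample profile are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def savgol_quadratic(values: Sequence[float], window: int) -> List[float]:
    """Smooth with a local quadratic least-squares fit over an odd window.

    For symmetric offsets k = -m..m the fitted value at the centre is a
    weighted sum with weights (S4 - S2*k^2) / (n*S4 - S2^2), where
    n = 2m + 1, S2 = sum(k^2) and S4 = sum(k^4).
    """
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd integer >= 3")
    half = window // 2
    count = len(values)
    smoothed: List[float] = []
    for i in range(count):
        # Assumed edge rule: use the largest symmetric window that fits.
        m = min(half, i, count - 1 - i)
        if m == 0:
            smoothed.append(float(values[i]))
            continue
        offsets = range(-m, m + 1)
        s2 = sum(k * k for k in offsets)
        s4 = sum(k ** 4 for k in offsets)
        denom = (2 * m + 1) * s4 - s2 * s2
        smoothed.append(
            sum((s4 - s2 * k * k) / denom * values[i + k] for k in offsets)
        )
    return smoothed


# Hypothetical DOF profile with one outlier at index 5.
dof_profile = [0.0] * 5 + [10.0] + [0.0] * 5
print(savgol_quadratic(dof_profile, window=5)[5])  # 10 * 17/35, about 4.857
```

A wider window averages over more neighboring values and so damps isolated outliers more strongly. For windows as large as those in the table, a vectorized routine such as `scipy.signal.savgol_filter` would be far faster than this loop.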

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Edwards, J.; Gomez, F.J.; Do, S.K.; Weiss, D.A.; Kastens, J.; Cohen, S.; Moradkhani, H.; Lakshmi, V.; Li, X. FLDSensing: Remote Sensing Flood Inundation Mapping with FLDPLN. Remote Sens. 2025, 17, 3362. https://doi.org/10.3390/rs17193362
