Research on Rice Field Identification Methods in Mountainous Regions

Abstract

Highlights

- A customized GCN was constructed that uses rice plots as graph nodes and integrates multidimensional rice features, achieving an overall accuracy of 98.3% for rice identification in complex mountainous terrain while requiring fewer parameters and less prediction time than U-Net.

- The integration of multidimensional features provided an ecologically consistent representation of rice, significantly enhancing classification accuracy under complex terrain.

- The method showed strong transferability and scalability, supporting large-area rice mapping and monitoring across diverse regions and under cloudy conditions.


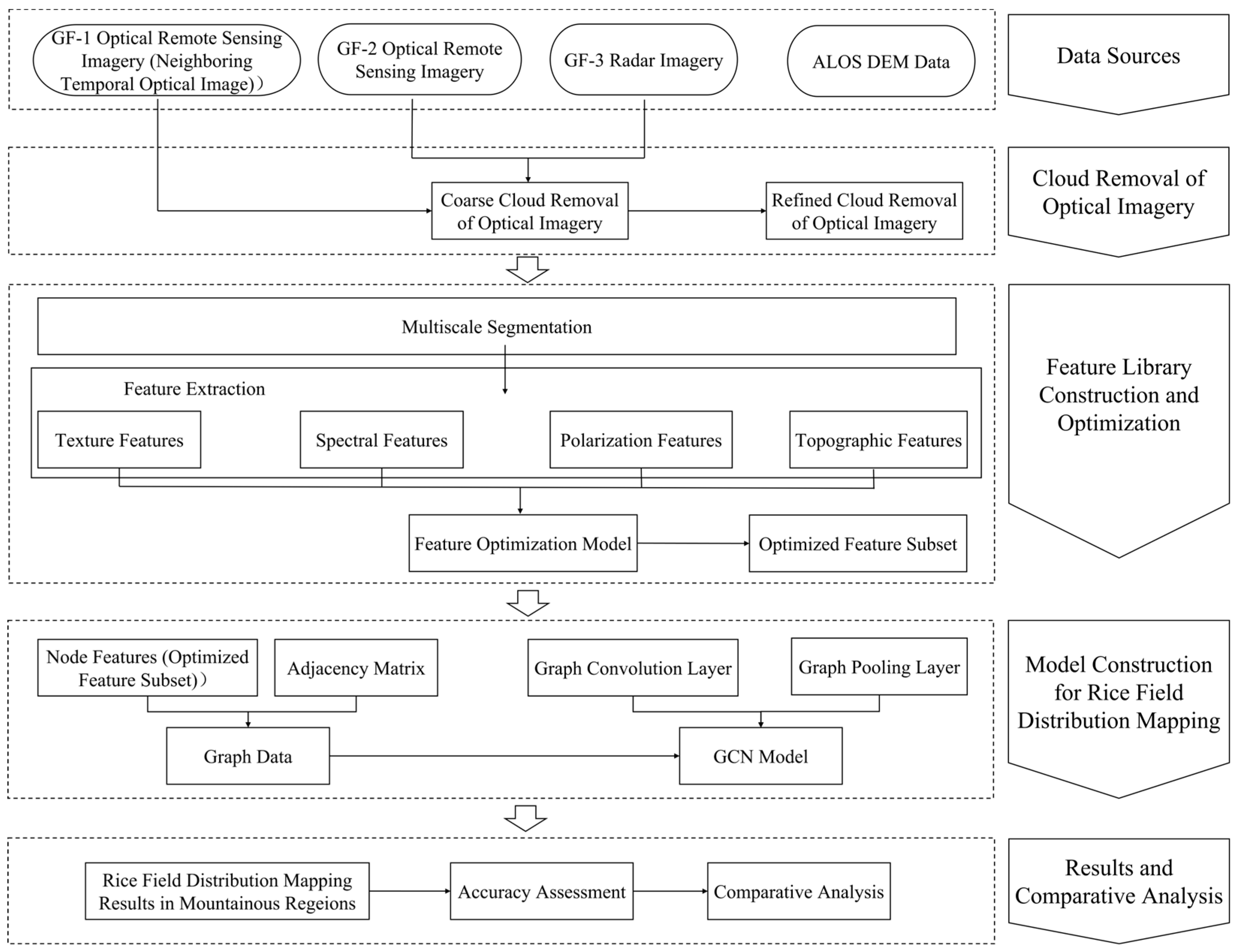

1. Introduction

- (1) It demonstrates that integrating terrain features with spectral, texture, and polarization characteristics can effectively enhance rice identification accuracy in mountainous areas.

- (2) It introduces a graph convolutional neural network that accounts for spatial correlation features between plots, and validates its effectiveness and applicability for rice identification tasks in mountainous regions.

2. Materials and Methods

2.1. Optical Remote Sensing Imagery Cloud Removal

2.2. Construction and Optimization of Feature Library

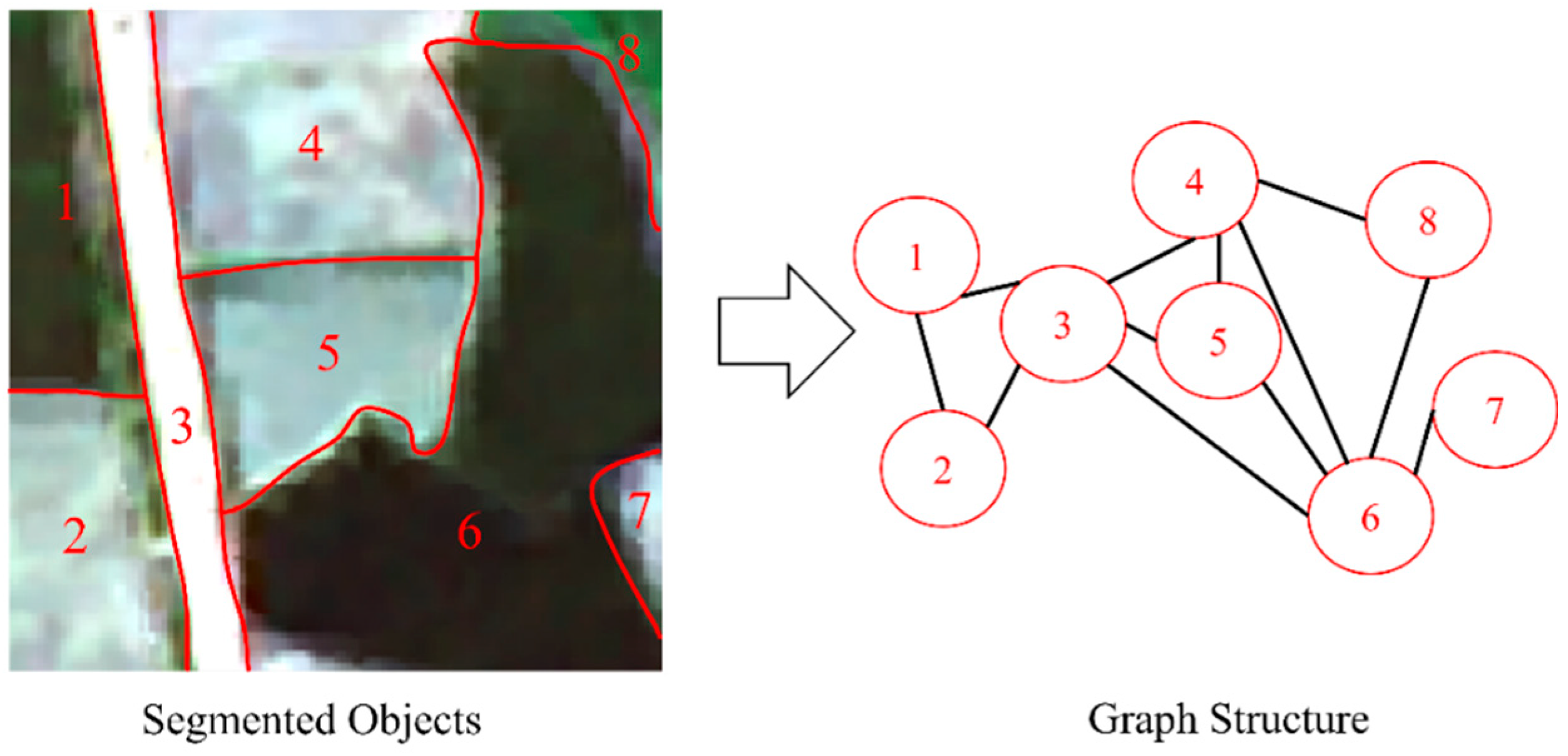

2.2.1. Imagery Segmentation

2.2.2. Feature Extraction
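The spectral part of the feature library combines the four Gaofen-2 multispectral bands with standard vegetation and water indices (NDVI, RVI, DVI, EVI, SAVI, NDWI, VARI). The sketch below computes these indices for one pixel or one object mean. It assumes B1 to B4 are the blue, green, red, and near-infrared bands and that inputs are surface reflectances in [0, 1]; the formulas are the common textbook forms (NDWI in the McFeeters green/NIR form), not necessarily the exact variants used by the authors, and the function name is hypothetical. CARI, PCA, and HSV features are omitted.

```python
from typing import Dict


def spectral_indices(blue: float, green: float, red: float, nir: float) -> Dict[str, float]:
    """Common vegetation/water indices from four Gaofen-2 style bands.

    Assumption: B1 = blue, B2 = green, B3 = red, B4 = NIR, as surface
    reflectance in [0, 1]. Formulas are the standard textbook definitions.
    """
    return {
        "NDVI": (nir - red) / (nir + red),
        "RVI": nir / red,                      # ratio vegetation index
        "DVI": nir - red,                      # difference vegetation index
        # EVI with the usual coefficients G = 2.5, C1 = 6, C2 = 7.5, L = 1
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
        # SAVI with the common soil-adjustment factor L = 0.5
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),
        "NDWI": (green - nir) / (green + nir),  # McFeeters green/NIR form
        "VARI": (green - red) / (green + red - blue),
    }
```

For a vegetated pixel such as `spectral_indices(blue=0.05, green=0.08, red=0.04, nir=0.40)`, NDVI is about 0.82 and NDWI is negative, which is the contrast these indices are meant to capture between vegetation and open water.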

2.2.3. Feature Selection
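Of the three selection criteria listed in this subsection, mutual information is the simplest to illustrate: it scores a feature by how much knowing its value reduces uncertainty about the rice/non-rice label, $I(X;Y)=\sum_{x,y} p(x,y)\log\frac{p(x,y)}{p(x)\,p(y)}$. The sketch below is a minimal plug-in estimator in nats, assuming continuous features are first discretized into equal-width bins; the bin count and the helper names are illustrative assumptions, not values from the paper.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence


def equal_width_bins(values: Sequence[float], n_bins: int = 10) -> List[int]:
    """Discretize a continuous feature into n_bins equal-width bins."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: everything falls into a single bin
        return [0] * len(values)
    width = (hi - lo) / n_bins
    # the maximum value would land in bin n_bins, so clamp it to the last bin
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X;Y) in nats for two discrete sequences."""
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    mi = 0.0
    for (xv, yv), c in joint.items():
        # p(x,y) * log(p(x,y) / (p(x) p(y))), written with raw counts
        mi += (c / n) * math.log(c * n / (px[xv] * py[yv]))
    return mi
```

Features would then be ranked by `mutual_information(equal_width_bins(values), labels)`; scikit-learn's `mutual_info_classif` offers a nearest-neighbor estimator that avoids the binning choice.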

- (A) Mutual Information Algorithm

- (B) Relief Algorithm

- (C) GWO_RF Algorithm

- ① Initialization of the population: A fixed number of gray wolves were randomly generated, with each wolf’s position representing a feature subset. Each element of the position vector corresponded to one feature and took a value of 0 or 1, indicating whether that feature was selected. The population size, the maximum number of iterations, and related parameters (e.g., a, A, C) were also set.

- ② Fitness evaluation: The classification accuracy of the feature subset corresponding to each gray wolf was assessed using the random forest algorithm. This accuracy served as the wolf’s fitness value, with higher fitness indicating a stronger classification capability of the feature subset.

- ③ Population ranking: The population was ranked by fitness, and the three wolves with the highest fitness were designated the alpha (α) wolf (best solution), the beta (β) wolf (second best), and the delta (δ) wolf (third best).

- ④ Position update: The positions of the ordinary gray wolves were updated based on the positions of the α, β, and δ wolves and the dynamically adjusted coefficient vectors $\vec{A}$ and $\vec{C}$, according to the following formulas:

  $$\vec{D}_\alpha = \lvert \vec{C}_1 \cdot \vec{X}_\alpha - \vec{X} \rvert,\quad \vec{D}_\beta = \lvert \vec{C}_2 \cdot \vec{X}_\beta - \vec{X} \rvert,\quad \vec{D}_\delta = \lvert \vec{C}_3 \cdot \vec{X}_\delta - \vec{X} \rvert$$

  $$\vec{X}_1 = \vec{X}_\alpha - \vec{A}_1 \cdot \vec{D}_\alpha,\quad \vec{X}_2 = \vec{X}_\beta - \vec{A}_2 \cdot \vec{D}_\beta,\quad \vec{X}_3 = \vec{X}_\delta - \vec{A}_3 \cdot \vec{D}_\delta$$

  $$\vec{X}(t+1) = \frac{\vec{X}_1 + \vec{X}_2 + \vec{X}_3}{3}$$

  where $\vec{X}_\alpha$, $\vec{X}_\beta$, and $\vec{X}_\delta$ represent the positions of the α, β, and δ wolves, respectively; $\vec{X}$ denotes the current position of a gray wolf; $\vec{C}_1$, $\vec{C}_2$, and $\vec{C}_3$ are random weighting coefficients; and $\vec{D}_\alpha$, $\vec{D}_\beta$, and $\vec{D}_\delta$ represent the distances between the gray wolf and the α, β, and δ wolves, respectively.

- ⑤ Iterative update: Steps ② through ④ were repeated. In each iteration, the positions and fitness values of the α, β, and δ wolves were updated, and the positions of the gray wolf population were continuously refined to progressively search for the globally optimal feature subset.

- ⑥ Termination criteria: The algorithm terminated when the maximum number of iterations was reached or when the wolves’ fitness values showed no significant improvement.

- ⑦ Output: The feature subset corresponding to the α wolf was returned as the optimal feature subset.
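Steps ① to ⑦ can be summarized in a short sketch. This is a minimal illustration, not the authors' implementation: it assumes continuous wolf positions mapped to a 0/1 feature mask by a simple threshold (many binary GWO variants use a stochastic sigmoid transfer instead), a linear decrease of a from 2 to 0, clipping of positions to [-1, 1], and a caller-supplied fitness function standing in for the random-forest accuracy. The name `binary_gwo` and its parameters are hypothetical.

```python
import random
from typing import Callable, List, Tuple


def binary_gwo(
    fitness: Callable[[List[int]], float],
    n_features: int,
    n_wolves: int = 10,
    max_iter: int = 50,
    patience: int = 10,
    seed: int = 0,
) -> Tuple[List[int], float]:
    """Binary grey wolf optimizer for feature-subset selection (illustrative sketch)."""
    if n_wolves < 3:
        raise ValueError("GWO needs at least three wolves (alpha, beta, delta)")
    rng = random.Random(seed)

    def to_mask(pos: List[float]) -> List[int]:
        # Assumed threshold transfer: feature j is selected when pos[j] > 0
        return [1 if v > 0 else 0 for v in pos]

    # Step 1: random initial population of continuous positions in [-1, 1]
    wolves = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)] for _ in range(n_wolves)]
    best_mask, best_fit, stall = to_mask(wolves[0]), float("-inf"), 0

    for t in range(max_iter):  # the loop bound is the maximum-iteration criterion
        # Steps 2-3: score every feature subset and rank the pack
        scores = [fitness(to_mask(w)) for w in wolves]
        order = sorted(range(n_wolves), key=lambda k: scores[k], reverse=True)
        alpha, beta, delta = (wolves[k] for k in order[:3])

        # Step 6: stop early once the alpha fitness has stalled for `patience` iterations
        if scores[order[0]] > best_fit:
            best_mask, best_fit, stall = to_mask(alpha), scores[order[0]], 0
        else:
            stall += 1
            if stall >= patience:
                break

        # Step 4: a decreases linearly from 2 to 0; A and C are redrawn per leader and dimension
        a = 2.0 - 2.0 * t / max_iter
        new_wolves = []
        for w in wolves:
            new_pos = []
            for j in range(n_features):
                total = 0.0
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[j] - w[j])
                    total += leader[j] - A * D
                # average of the three leader-guided moves, clipped to keep positions bounded
                new_pos.append(max(-1.0, min(1.0, total / 3.0)))
            new_wolves.append(new_pos)
        wolves = new_wolves  # Step 5: repeat with the updated pack

    # Step 7: best subset found (the alpha wolf over all iterations)
    return best_mask, best_fit
```

In the setting described above, the fitness would be random-forest accuracy on the selected columns, for example `cross_val_score(RandomForestClassifier(), X[:, selected], y).mean()` from scikit-learn, where `selected` holds the indices whose mask value is 1; the fitness function must also decide how to score an empty subset.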

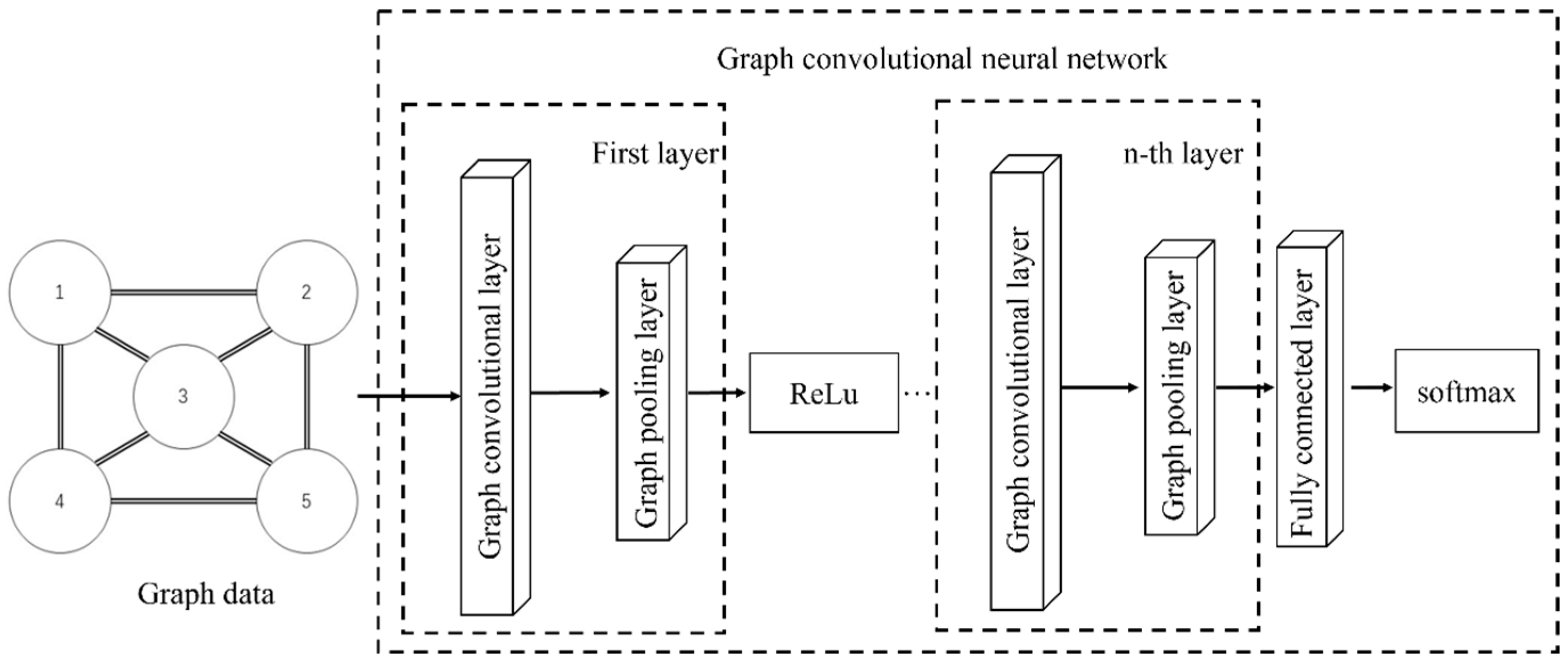
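For criterion (B), the Relief algorithm raises a feature's weight when it differs between a sample and that sample's nearest neighbor of the other class (the nearest miss) and lowers it when it differs from the nearest neighbor of the same class (the nearest hit). The sketch below follows the original two-class Kira and Rendell formulation under stated assumptions: every sample is visited once instead of being randomly sampled, features are range-normalized, and distance is Manhattan. ReliefF extends this with k neighbors and multi-class handling; the function name is hypothetical.

```python
from typing import List, Sequence


def relief_weights(X: Sequence[Sequence[float]], y: Sequence[int]) -> List[float]:
    """Basic two-class Relief feature weights (illustrative sketch).

    Requires two classes with at least two samples each.
    """
    n, m = len(X), len(X[0])
    lo = [min(row[f] for row in X) for f in range(m)]
    span = [(max(row[f] for row in X) - lo[f]) or 1.0 for f in range(m)]
    # scale every feature to [0, 1] so no feature dominates the distance
    Z = [[(row[f] - lo[f]) / span[f] for f in range(m)] for row in X]

    def dist(a: List[float], b: List[float]) -> float:
        return sum(abs(p - q) for p, q in zip(a, b))

    w = [0.0] * m
    for i in range(n):
        hits = [k for k in range(n) if k != i and y[k] == y[i]]
        misses = [k for k in range(n) if y[k] != y[i]]
        near_hit = min(hits, key=lambda k: dist(Z[i], Z[k]))
        near_miss = min(misses, key=lambda k: dist(Z[i], Z[k]))
        for f in range(m):
            # reward separation from the nearest miss, penalize spread within the class
            w[f] += (abs(Z[i][f] - Z[near_miss][f]) - abs(Z[i][f] - Z[near_hit][f])) / n
    return w
```

A feature that cleanly separates rice from non-rice objects ends up with a clearly positive weight, while a feature that varies as much within a class as between classes ends up near zero or negative.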

2.3. Model Construction for Rice Field Distribution Mapping

2.3.1. Graph Data Construction

2.3.2. GCN Model Design

2.4. Accuracy Evaluation Methods

- (1) Confusion Matrix-Based Accuracy Assessment

- (2) Comparative Analysis with Statistical Yearbook Data

- (3) Visual Interpretation of Sample Regions

3. Experiments and Results

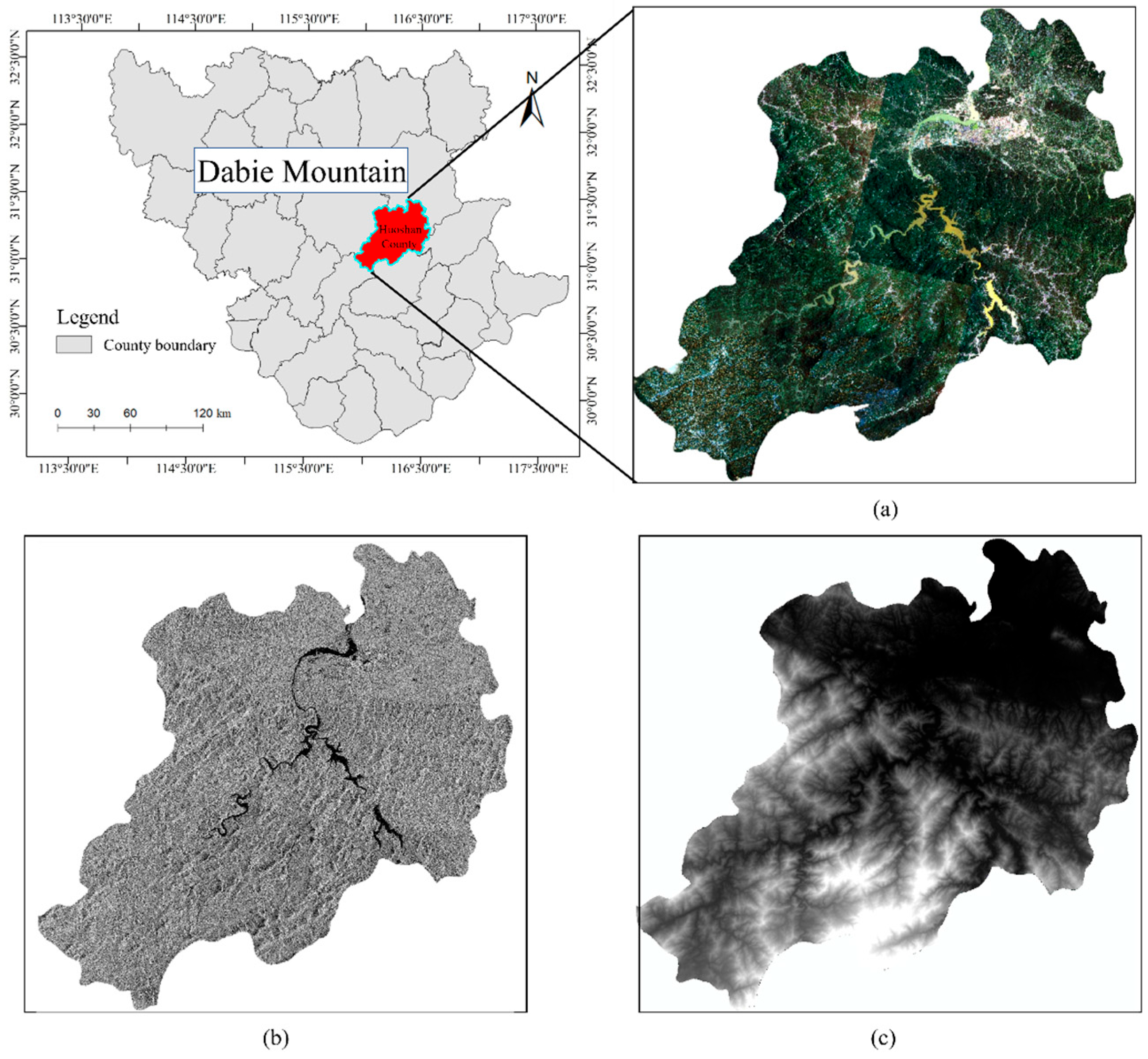

3.1. Study Area and Data

3.1.1. Study Area

3.1.2. Data

- (1) Remote Sensing Data for Rice Field Identification

- (2) Auxiliary Data

3.2. Experimental Setup

3.2.1. Sample Dataset

3.2.2. Experimental Environment and Parameter Settings

3.3. Results

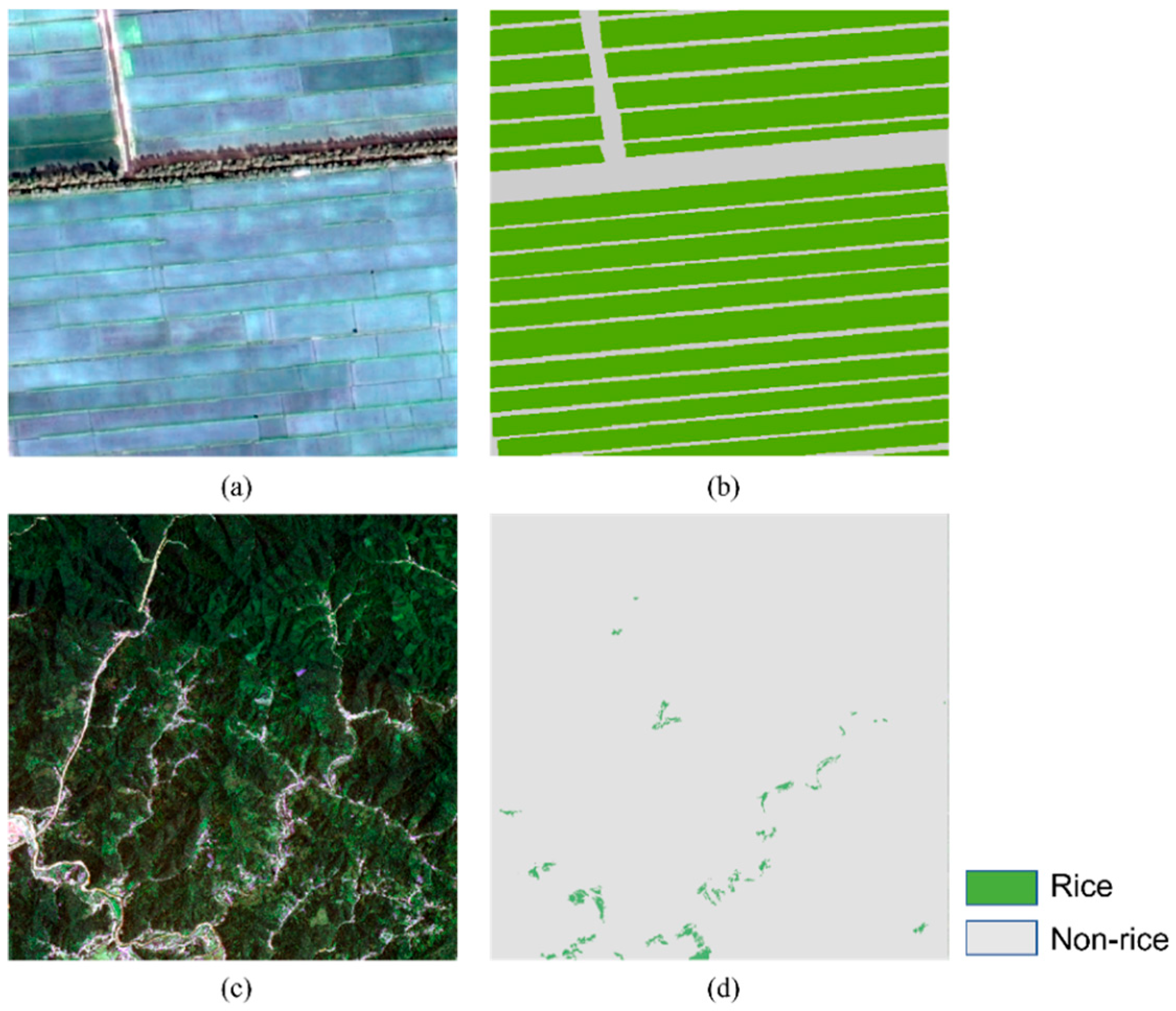

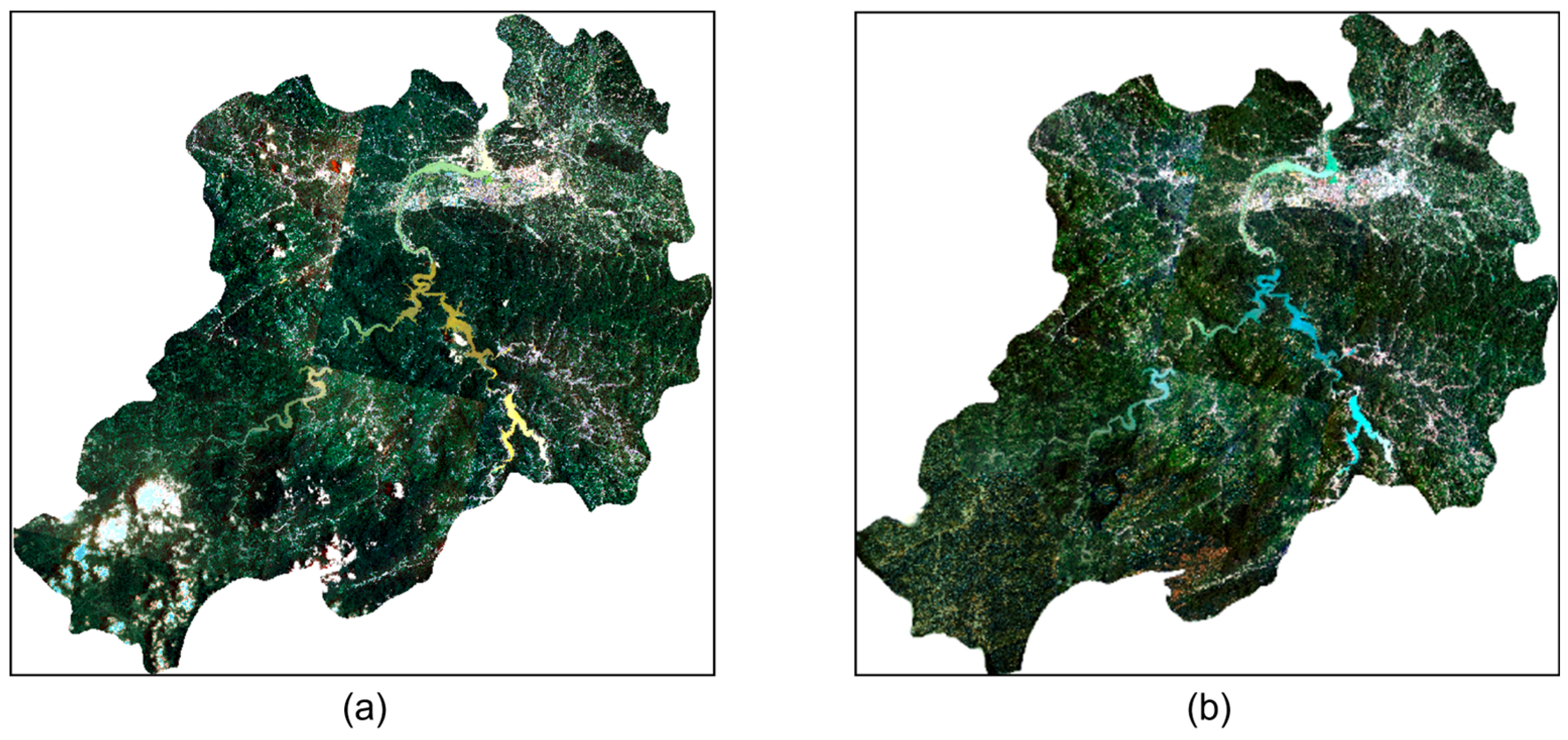

3.3.1. Cloud Removal Results of the Optical Remote Sensing Imagery

3.3.2. Feature Selection Results

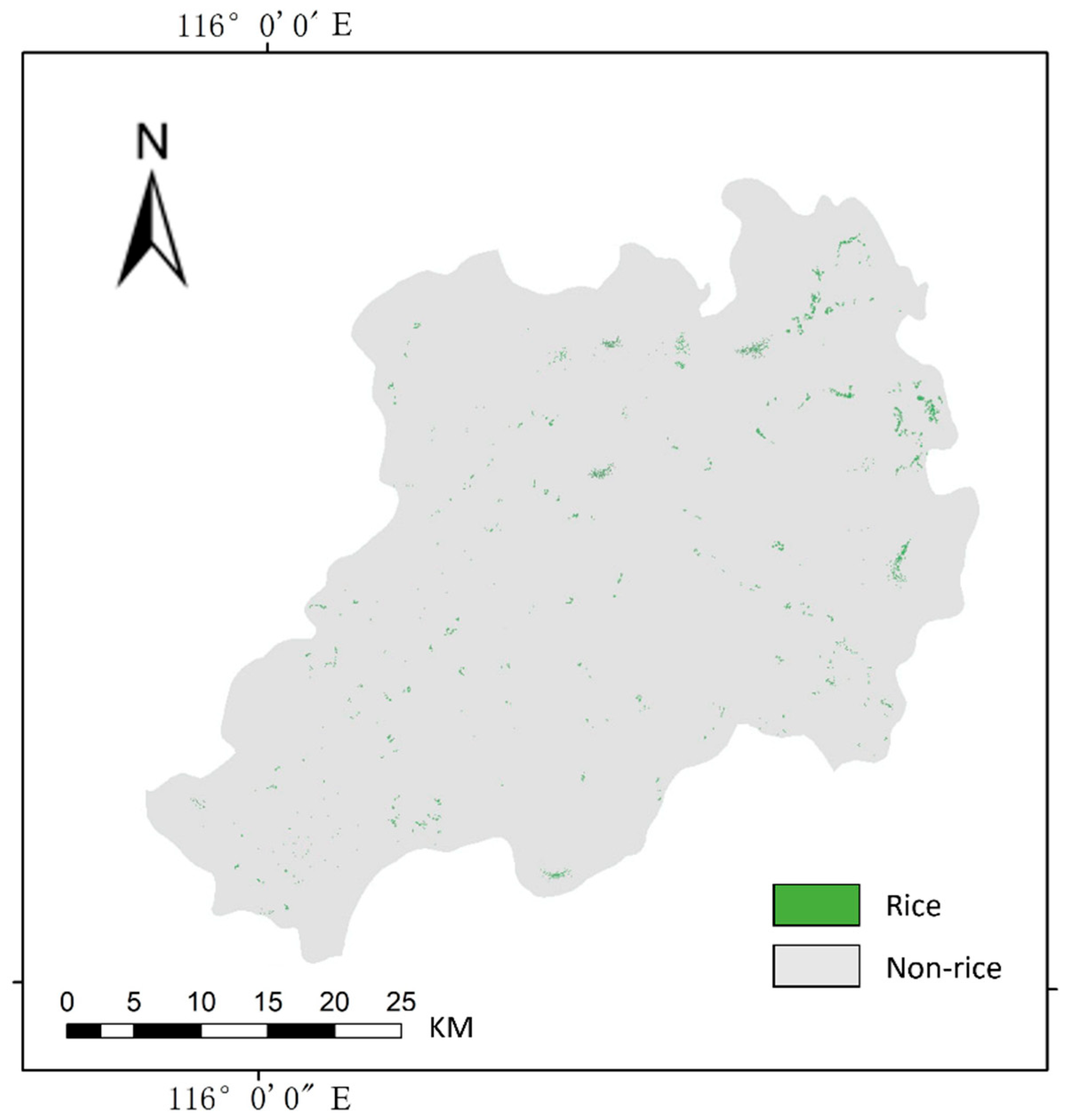

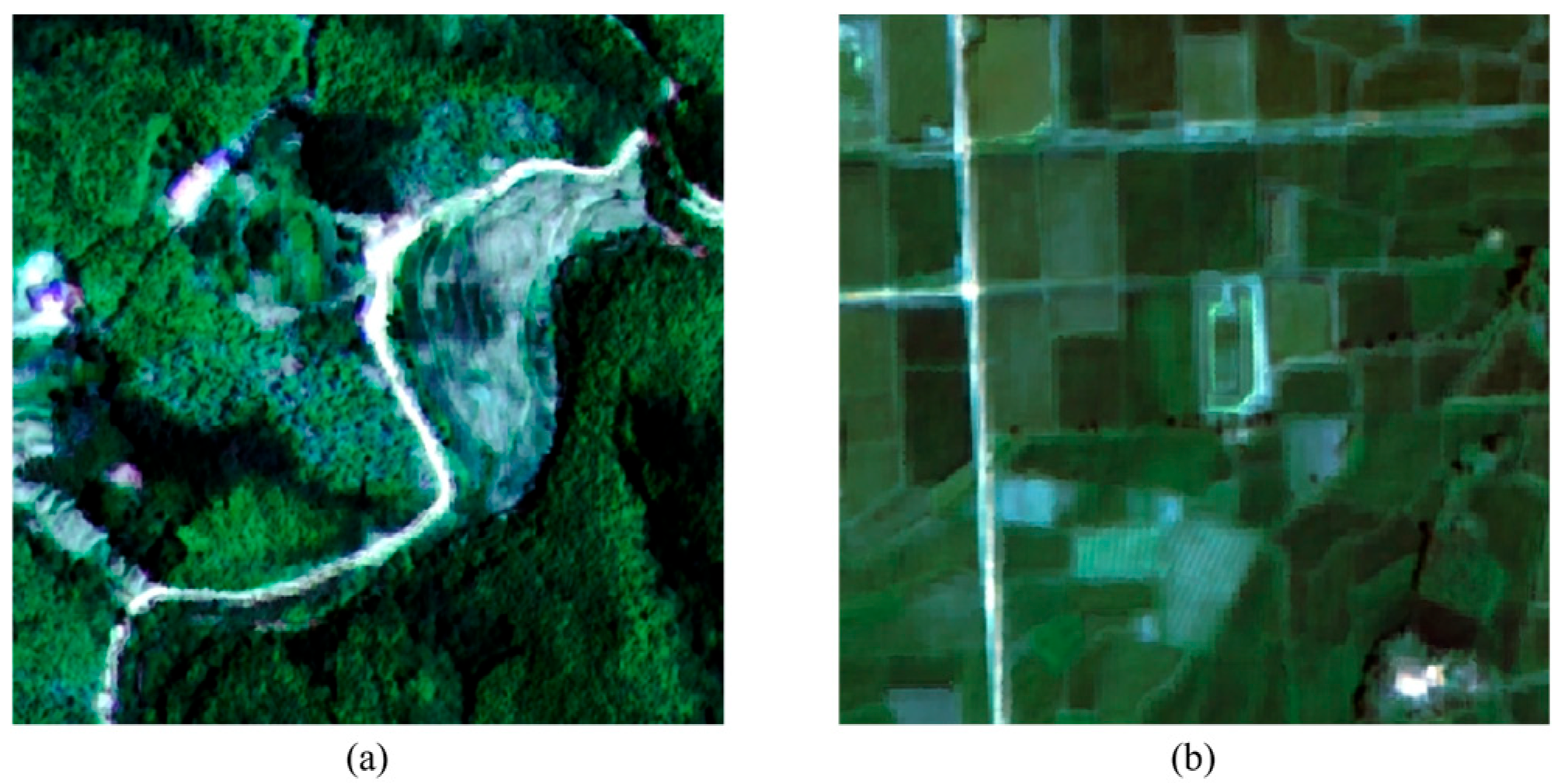

3.3.3. Rice Field Identification Results

4. Discussion

4.1. Ablation Study on Terrain Features

4.2. Comparison with Other Methods

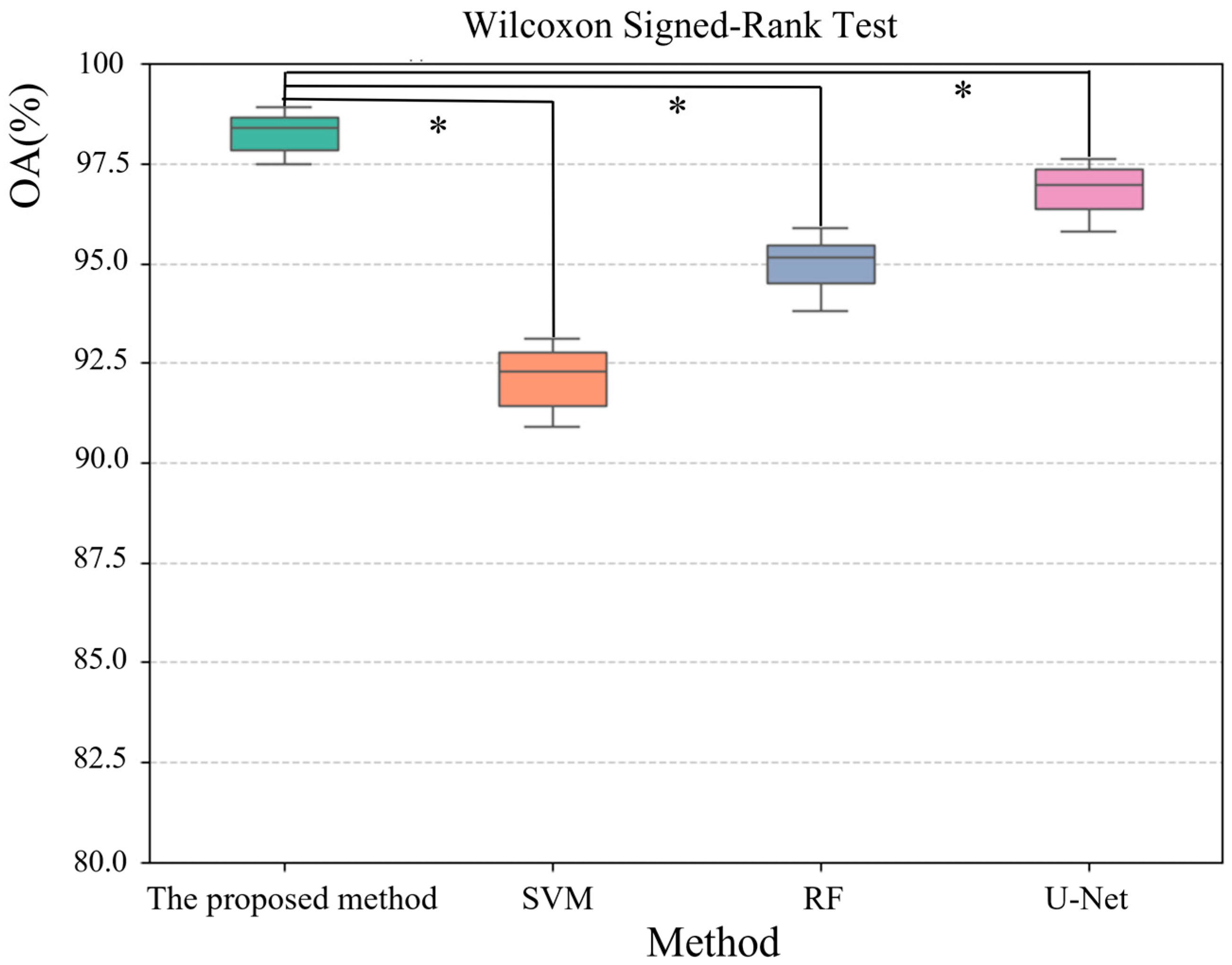

4.2.1. Overall Accuracy Comparison

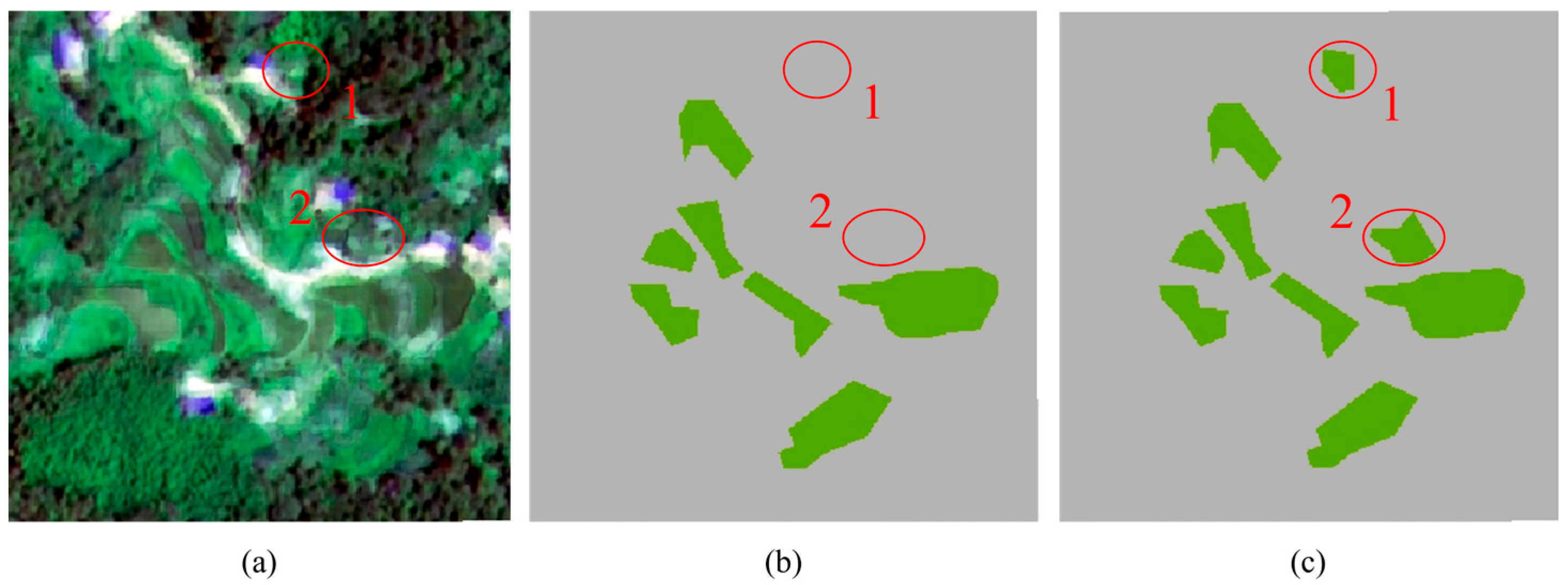

4.2.2. Local Performance Comparison

4.2.3. Terrain Adaptability Comparison

4.2.4. Comparison of Training Sample Requirements

4.2.5. Comparison of Computational Costs

4.3. Key Advances and Highlights

4.4. Limitations and Future Research

5. Conclusion

- A coarse-to-fine cloud removal strategy was implemented that integrated features from SAR imagery and temporally adjacent optical remote sensing images to remove clouds from the optical data. The method achieved high performance and outperformed the alternative methods in both accuracy and applicability.

- By fully accounting for the growth environment and spatial distribution characteristics of rice in mountainous terrain, and by integrating multi-source features through graph structure modeling, the proposed approach successfully addressed the limitations of existing methods in handling complex terrain. This led to significant improvements in identification accuracy and model robustness.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Feature Type | Feature Description |
|---|---|
| Spectral Features | B1, B2, B3, B4, NDVI, CARI, RVI, DVI, EVI, SAVI, NDWI, VARI, PCA1, PCA2, HSV spectral-spatial features (12 types) |
| Texture Features | Entropy, Energy, Autocorrelation, Contrast, Dissimilarity, Variance, Small Gradient Dominance, Large Gradient Dominance, Uneven Distribution of Gray Levels, Uneven Distribution of Gradients, Gradient Energy, Gradient Variance, Correlation, Gray-level Entropy, Gradient Entropy, Mixed Entropy |
| Polarization Features | HH, VV, HV, VH |
| Terrain Features | DEM, Aspect, Slope, Curvature |
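Several of the texture features listed above (Entropy, Energy, Contrast, Dissimilarity, Correlation) are statistics of a gray-level co-occurrence matrix (GLCM). The sketch below is a minimal version, assuming an image patch already quantized to integer gray levels in `[0, levels)` and a single horizontal offset; the gray-gradient features (gradient dominance, gradient entropy, mixed entropy) require a gray-gradient co-occurrence matrix and are not shown. Energy is given here as the angular second moment (some tools report its square root), and the helper names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def glcm(patch: Sequence[Sequence[int]], levels: int, dx: int = 1, dy: int = 0) -> List[List[float]]:
    """Normalized, symmetric gray-level co-occurrence matrix for offset (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(patch), len(patch[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = patch[r][c], patch[r2][c2]
                counts[i][j] += 1  # count each pair in both directions so P is symmetric
                counts[j][i] += 1
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def glcm_features(p: List[List[float]]) -> Dict[str, float]:
    """Haralick-style statistics of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum((i - mu_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mu_j) ** 2 * v for _, j, v in cells)
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),  # angular second moment
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        # correlation is undefined for a constant patch; 1.0 is used here by convention
        "correlation": cov / math.sqrt(var_i * var_j) if var_i > 0 and var_j > 0 else 1.0,
    }
```

In an object-based workflow these statistics would be computed per segmented plot (or averaged over several offsets) and written into that object's row of the feature matrix.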

| Object ID | Features: Feature 1, Feature 2, …, Feature M | | | |
|---|---|---|---|---|
| | Spectral Features | Texture Features | Polarization Features | Topographic Features |
| 1 | | | | |
| 2 | | | | |
| N | | | | |

| Object | ① | ② | ③ | ④ | ⑤ | ⑥ | ⑦ | ⑧ |
|---|---|---|---|---|---|---|---|---|
| ① | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 0 |
| ② | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| ③ | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 0 |
| ④ | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 |
| ⑤ | 0 | 0 | 1 | 1 | 0 | 1 | 0 | 0 |
| ⑥ | 0 | 0 | 1 | 1 | 1 | 0 | 1 | 1 |
| ⑦ | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| ⑧ | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 |
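In the graph representation, each segmented plot is a node whose attributes form one row of the N × M feature matrix, and the adjacency matrix records which plots are neighbors. The customized GCN is not specified in detail here, so the sketch below shows only the standard propagation rule of Kipf and Welling, $H' = \mathrm{ReLU}\big(\tilde{D}^{-1/2}(A+I)\tilde{D}^{-1/2} H W\big)$ with $\tilde{D}$ the degree matrix of $A+I$, applied to the 8-object adjacency matrix above. Reading a 1 as "the two plots share a boundary" is an assumption, and the helper names, input features, and weights are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalize_adjacency(adj: Matrix) -> Matrix:
    """Symmetric normalization D^-1/2 (A + I) D^-1/2, with self-loops added."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_tilde]
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(a_norm: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: aggregate neighbor features, project with W, apply ReLU."""
    z = matmul(matmul(a_norm, h), w)
    return [[max(0.0, v) for v in row] for row in z]


# Adjacency of the eight example objects (1 = assumed shared boundary)
ADJ = [
    [0, 1, 1, 0, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 0, 0, 0],
    [1, 1, 0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 1, 1, 0, 1],
    [0, 0, 1, 1, 0, 1, 0, 0],
    [0, 0, 1, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0, 1, 0, 0],
]
```

Each layer mixes a plot's features with those of its neighbors, weighted by degree, which is how spatial context between plots enters the classifier. A full model would stack two or more such layers with learned weights and end with a per-node softmax over rice and non-rice; PyTorch Geometric's `GCNConv` implements the same normalized propagation.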

| Data Type | Source | Acquisition Time | Spatial Resolution | Coverage | Remarks |
|---|---|---|---|---|---|
| Remote Sensing Data for Rice Identification | Gaofen-2 Multispectral Imagery | June 2022 | 1 m | Full Huoshan County | Some clouds; mountainous terrain, varying altitude |
| Auxiliary Data | ALOS Topographic Data (DEM) | July 2010 | 12.5 m | Full Huoshan County | Stable weather |
| | Gaofen-3 SAR Imagery | June 2022 | 5 m | Full Huoshan County | Microwave, weather-independent |
| | Gaofen-1 Multispectral Imagery | July 2022 | 2 m | Partial, low clouds | Clear-sky conditions |

| Component | Specification | Frequency/Memory |
|---|---|---|
| CPU | Intel Core i5-14600KF | 3.5 GHz |
| Memory | 32 GB × 2 | 3600 MHz |
| GPU | NVIDIA GeForce RTX 4060 Ti | 16 GB |

| Feature Type | Feature Content | Number of Features |
|---|---|---|
| Spectral Features | B3, B4, NDVI, EVI, SAVI, NDWI, VARI, PCA1, Maximum V, Standard Deviation of S | 10 |
| Texture Features | Entropy, Contrast, Correlation, Gray Level Variance, Gradient Entropy | 5 |
| Terrain Features | Slope, Aspect | 2 |
| Polarization Features | HV, HH | 2 |
| Total | | 19 |
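Slope and aspect, the two terrain features retained after selection, are derived from the 12.5 m ALOS DEM. The sketch below uses Horn's 3 × 3 finite-difference method, which is a common choice but an assumption here, since the derivation method is not stated. It assumes row 0 of the window is the northern edge and column 0 the western edge, and it reports aspect as the downslope direction measured clockwise from north; the function name is hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def slope_aspect(window: Sequence[Sequence[float]], cell_size: float) -> Tuple[float, Optional[float]]:
    """Slope and aspect in degrees at the center of a 3x3 DEM window (Horn's method).

    Elevations and cell_size must share a unit. Aspect is None on perfectly flat cells.
    """
    (a, b, c), (d, _, f), (g, h, i) = window
    dz_dx = ((c + 2 * f + i) - (a + 2 * d + g)) / (8 * cell_size)  # rise toward east
    dz_dy = ((a + 2 * b + c) - (g + 2 * h + i)) / (8 * cell_size)  # rise toward north
    slope = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    if dz_dx == 0 and dz_dy == 0:
        return slope, None
    # the downslope vector is (-dz_dx, -dz_dy) in (east, north); take its bearing from north
    aspect = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect
```

Applied to every DEM cell with `cell_size=12.5` and then averaged per segmented plot, this yields object-level slope and aspect values; aspect is circular, so a per-object mean is better taken over its sine and cosine than over raw degrees.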

| Classification (Objects) | Truth: Rice (Objects) | Truth: Non-Rice (Objects) | PA | UA | F1-Score | OA |
|---|---|---|---|---|---|---|
| Rice | 5916 | 951 | 0.841 | 0.861 | 0.877 | 0.983 |
| Non-Rice | 1125 | 114182 | 0.992 | 0.990 | 0.985 | |
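The accuracy metrics in the confusion matrix follow directly from the object counts: producer's accuracy (PA) is the fraction of reference objects of a class that were classified correctly, user's accuracy (UA) the fraction of objects assigned to a class that truly belong to it, F1 their harmonic mean, and overall accuracy (OA) the share of all objects on the diagonal. The sketch below recomputes them; the function name is hypothetical, and the convention of rows as classification and columns as truth is read from the table.

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]]) -> Dict[str, object]:
    """OA plus per-class PA, UA, and F1 from a square confusion matrix.

    Convention: rows = classified class, columns = reference (truth) class.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    oa = sum(cm[i][i] for i in range(k)) / total
    classes = []
    for c in range(k):
        col_total = sum(cm[r][c] for r in range(k))  # reference objects of class c
        row_total = sum(cm[c])                        # objects classified as class c
        pa = cm[c][c] / col_total                     # producer's accuracy (recall)
        ua = cm[c][c] / row_total                     # user's accuracy (precision)
        f1 = 2 * pa * ua / (pa + ua)                  # harmonic mean of PA and UA
        classes.append({"PA": pa, "UA": ua, "F1": f1})
    return {"OA": oa, "classes": classes}


m = per_class_metrics([[5916, 951], [1125, 114182]])  # rows/columns: rice, non-rice
```

With these counts, OA evaluates to 0.983 and PA and UA agree with the tabulated values to within 0.001, whereas the standard harmonic-mean F1 evaluates to about 0.851 for rice and 0.991 for non-rice rather than the tabulated 0.877 and 0.985.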

| Rice Recognition Area (km²) | Huoshan County Rice Area in 2022 (km²) | Recognition Accuracy (%) |
|---|---|---|
| 173.3 | 179.1 | 96.8 |
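The area-based recognition accuracy is consistent with one minus the relative area error between the remotely sensed rice area $A_{\text{rs}}$ and the statistical rice area $A_{\text{stat}}$ (equivalently, their ratio, since the mapped area is the smaller of the two). This formula is inferred from the tabulated values rather than stated explicitly:

$$
\text{Accuracy} = \left(1 - \frac{\lvert A_{\text{rs}} - A_{\text{stat}} \rvert}{A_{\text{stat}}}\right) \times 100\% = \left(1 - \frac{\lvert 173.3 - 179.1 \rvert}{179.1}\right) \times 100\% \approx 96.8\%
$$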

| Region | OA Before Incorporating Topographic Features (%) | OA After Incorporating Topographic Features (%) | Accuracy Improvement (percentage points) |
|---|---|---|---|
| Mountainous Area | 89.4 | 93.2 | 3.8 |
| Plain Region | 94.6 | 94.8 | 0.2 |

| Model | Overall Accuracy (OA) (%) |
|---|---|
| Proposed Method | 98.3 |
| SVM | 92.1 |
| RF | 95.1 |
| U-Net | 96.7 |

| Method Comparison | Wilcoxon Signed-Rank Test (p-Value) |
|---|---|
| Proposed Method vs. SVM | 0.015 |
| Proposed Method vs. RF | 0.027 |
| Proposed Method vs. U-Net | 0.041 |

| Method | Low Slope (<10°) | Medium Slope (10–30°) | High Slope (>30°) | Average |
|---|---|---|---|---|
| Proposed Method | 98.1% | 95.6% | 93.8% | 95.8% |
| SVM | 91.5% | 87.6% | 80.3% | 87.1% |
| RF | 91.8% | 89.1% | 82.9% | 88.0% |
| U-Net | 96.7% | 93.3% | 86.4% | 92.1% |

| Method | Accuracy with 100% Samples (%) | Accuracy with 75% Samples (%) | Accuracy with 50% Samples (%) | Accuracy Drop, 100% to 50% Samples (percentage points) |
|---|---|---|---|---|
| Proposed Method | 98.3 | 95.1 | 88.0 | −10.3 |
| SVM | 92.1 | 83.5 | 66.3 | −25.8 |
| RF | 93.5 | 85.9 | 69.1 | −24.4 |
| U-Net | 96.7 | 91.3 | 80.4 | −16.3 |

| Method | Prediction Time (s), 256 × 256 | Prediction Time (s), 512 × 512 | Model Parameters (M) |
|---|---|---|---|
| GCN | 1.1 | 2.2 | 8 |
| SVM | 0.3 | 0.6 | 10 |
| RF | 0.4 | 0.8 | 15 |
| U-Net | 2.5 | 5.2 | 30 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Cheng, J.; Yuan, Z.; Zang, W. Research on Rice Field Identification Methods in Mountainous Regions. Remote Sens. 2025, 17, 3356. https://doi.org/10.3390/rs17193356

Wang Y, Cheng J, Yuan Z, Zang W. Research on Rice Field Identification Methods in Mountainous Regions. Remote Sensing. 2025; 17(19):3356. https://doi.org/10.3390/rs17193356

Chicago/Turabian Style: Wang, Yuyao, Jiehai Cheng, Zhanliang Yuan, and Wenqian Zang. 2025. "Research on Rice Field Identification Methods in Mountainous Regions" Remote Sensing 17, no. 19: 3356. https://doi.org/10.3390/rs17193356

APA Style: Wang, Y., Cheng, J., Yuan, Z., & Zang, W. (2025). Research on Rice Field Identification Methods in Mountainous Regions. Remote Sensing, 17(19), 3356. https://doi.org/10.3390/rs17193356