ILF-BDSNet: A Compressed Network for SAR-to-Optical Image Translation Based on Intermediate-Layer Features and Bio-Inspired Dynamic Search

Abstract

Highlights

- This paper proposes a specialized compressed network, ILF-BDSNet, for SAR-to-optical remote sensing image translation, incorporating key modules such as a dual-resolution collaborative discriminator, knowledge distillation based on intermediate-layer features, and a bio-inspired dynamic search of channel configuration (BDSCC) algorithm.

- While significantly reducing the number of parameters and the computational complexity, the network still generates high-quality optical remote sensing images, providing an efficient solution for SAR image translation in resource-constrained environments.

1. Introduction

- (1)

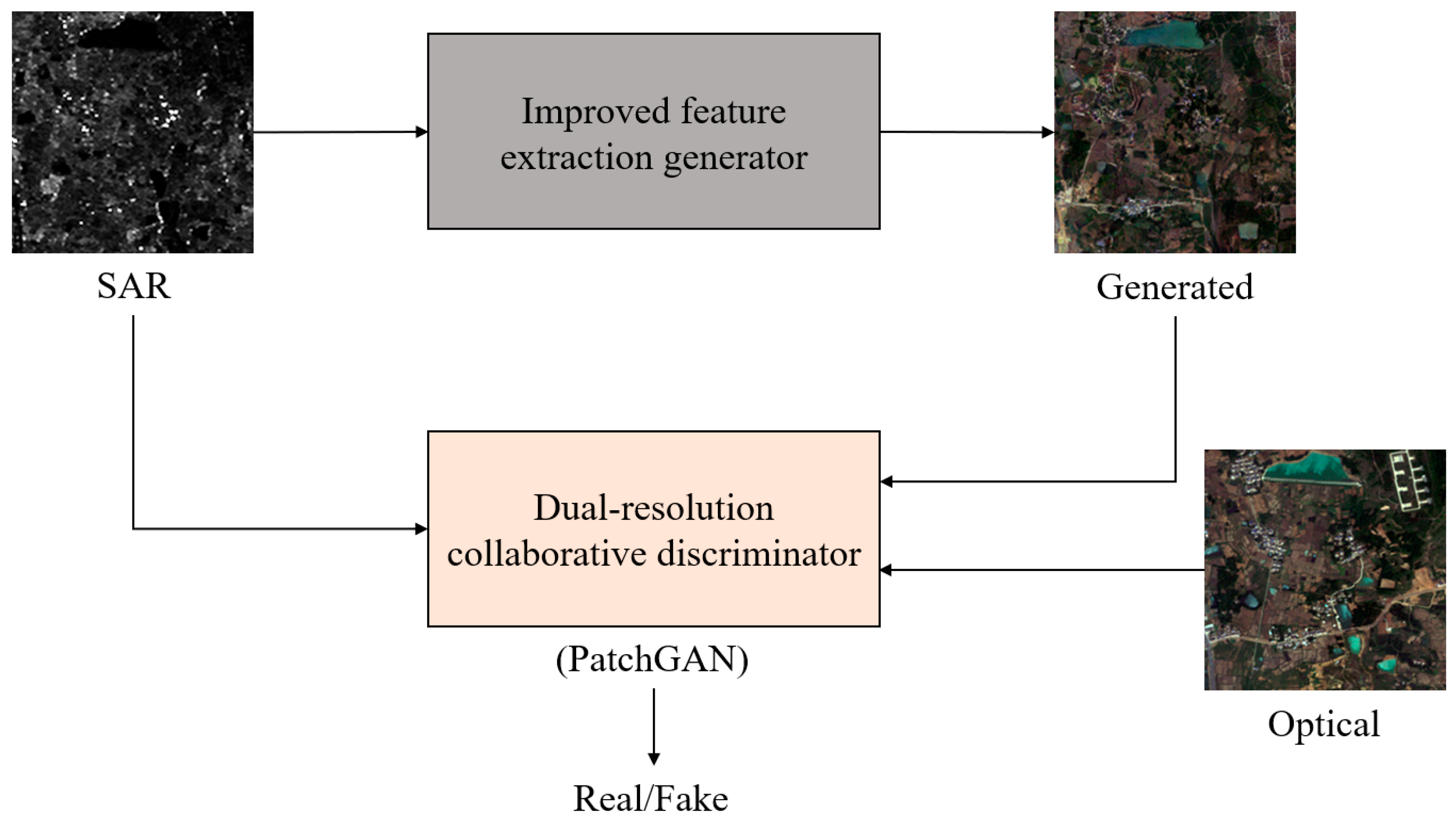

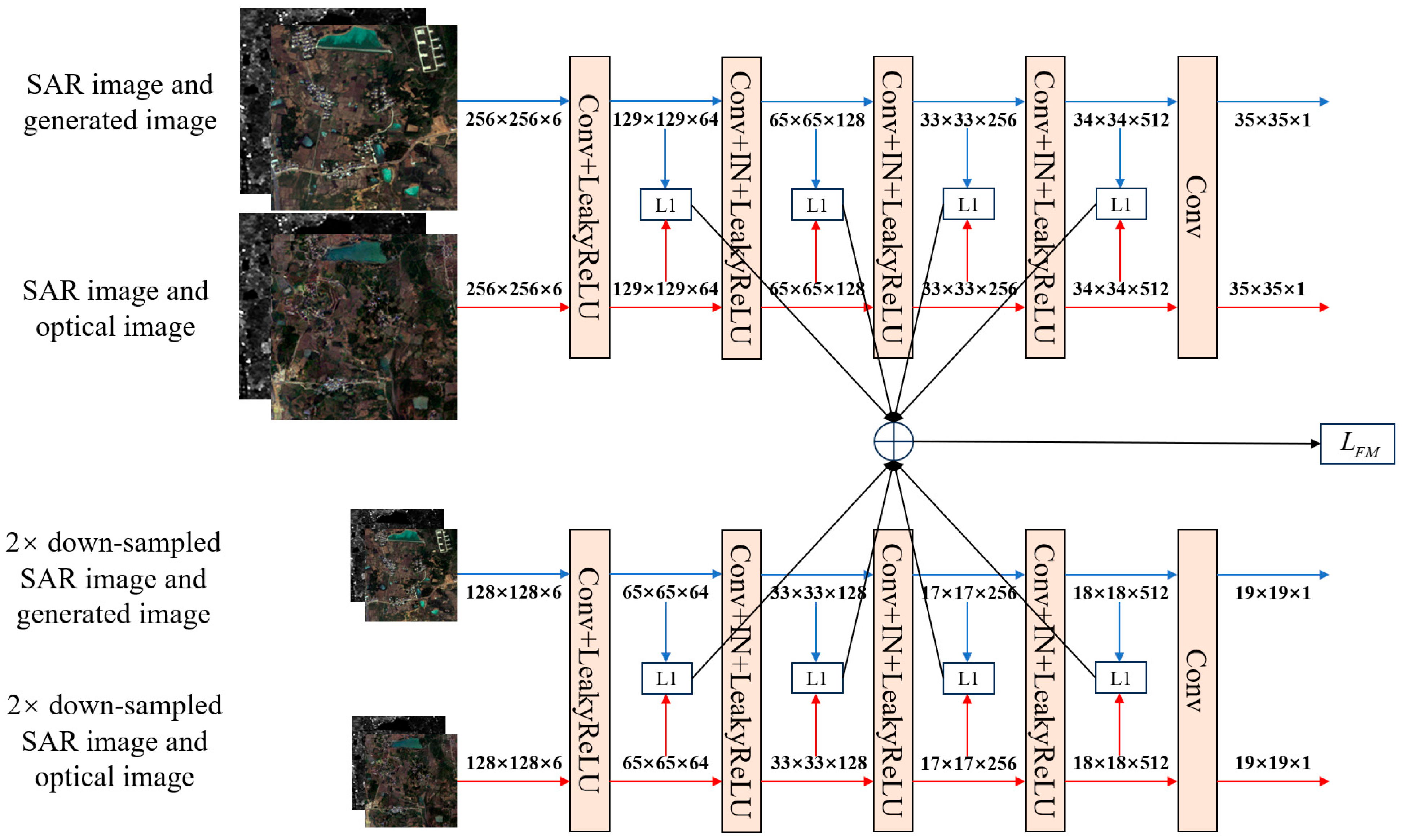

- To address the common issue in existing CGANs where it is difficult to balance global structural consistency and local detail authenticity in SAR image translation tasks, this paper proposes a dual-resolution collaborative discriminator. This structure uses PatchGAN based on local receptive fields as the basic framework of the discriminator. By constructing a high- and low-resolution collaborative analysis network, it focuses on pixel-level processing and scene-level semantic features, significantly improving the consistency between macro coherence and micro fidelity of generated images.

- (2)

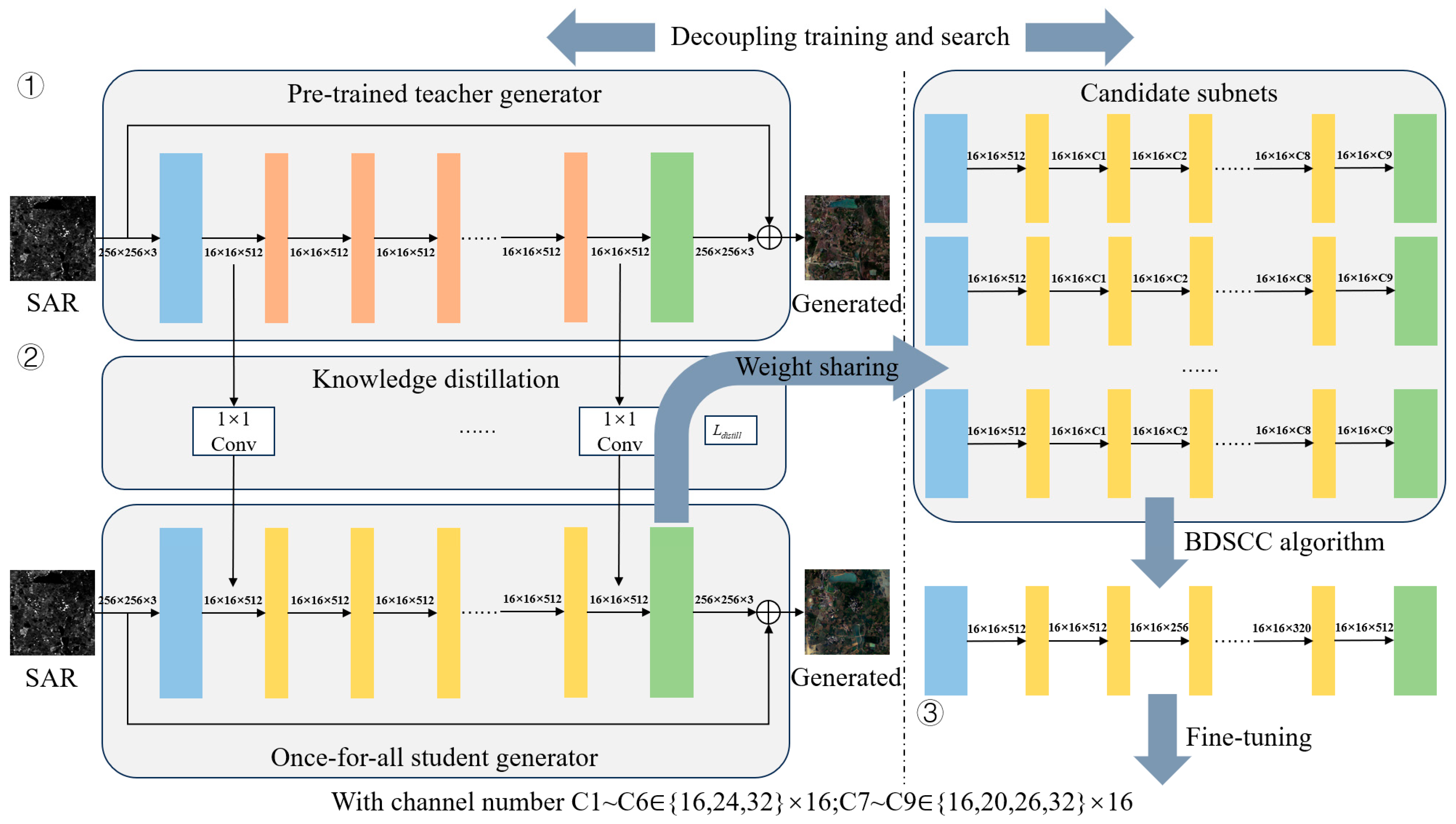

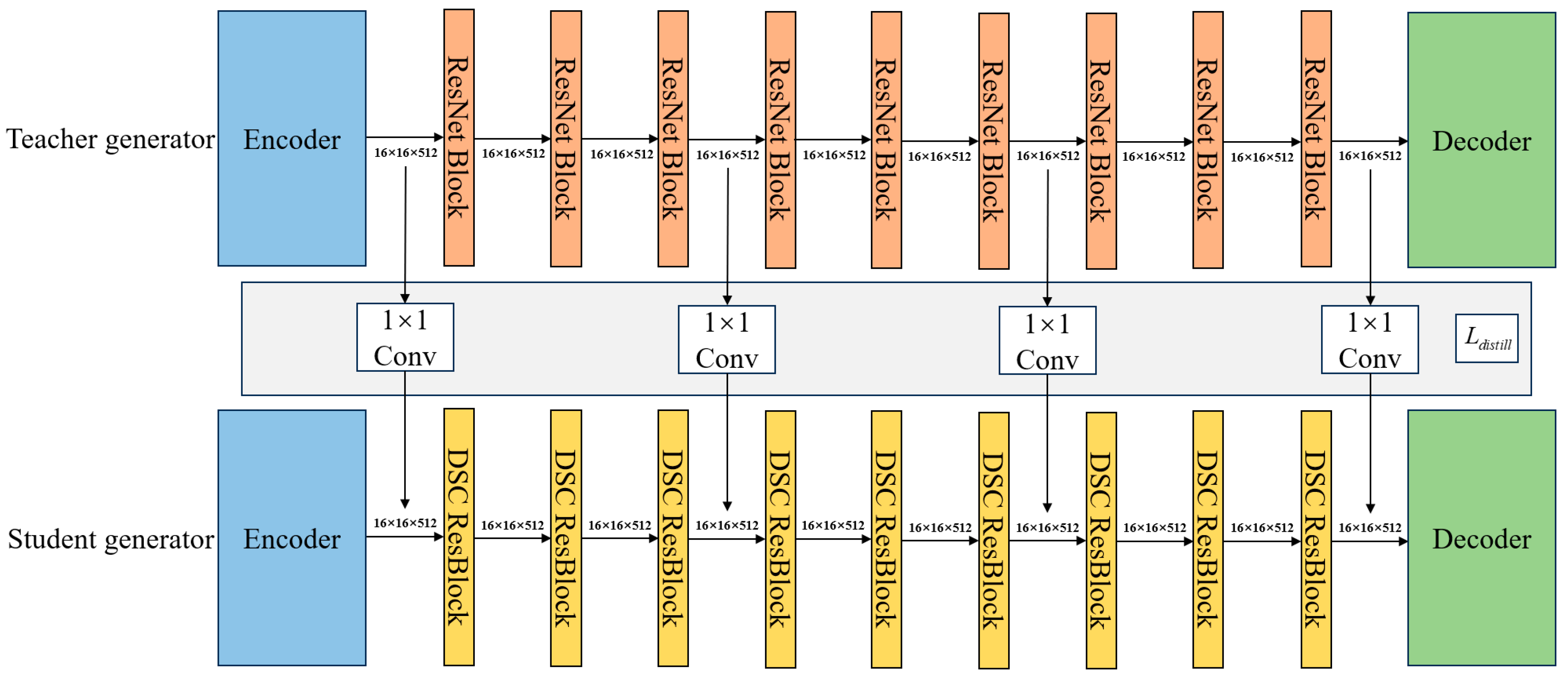

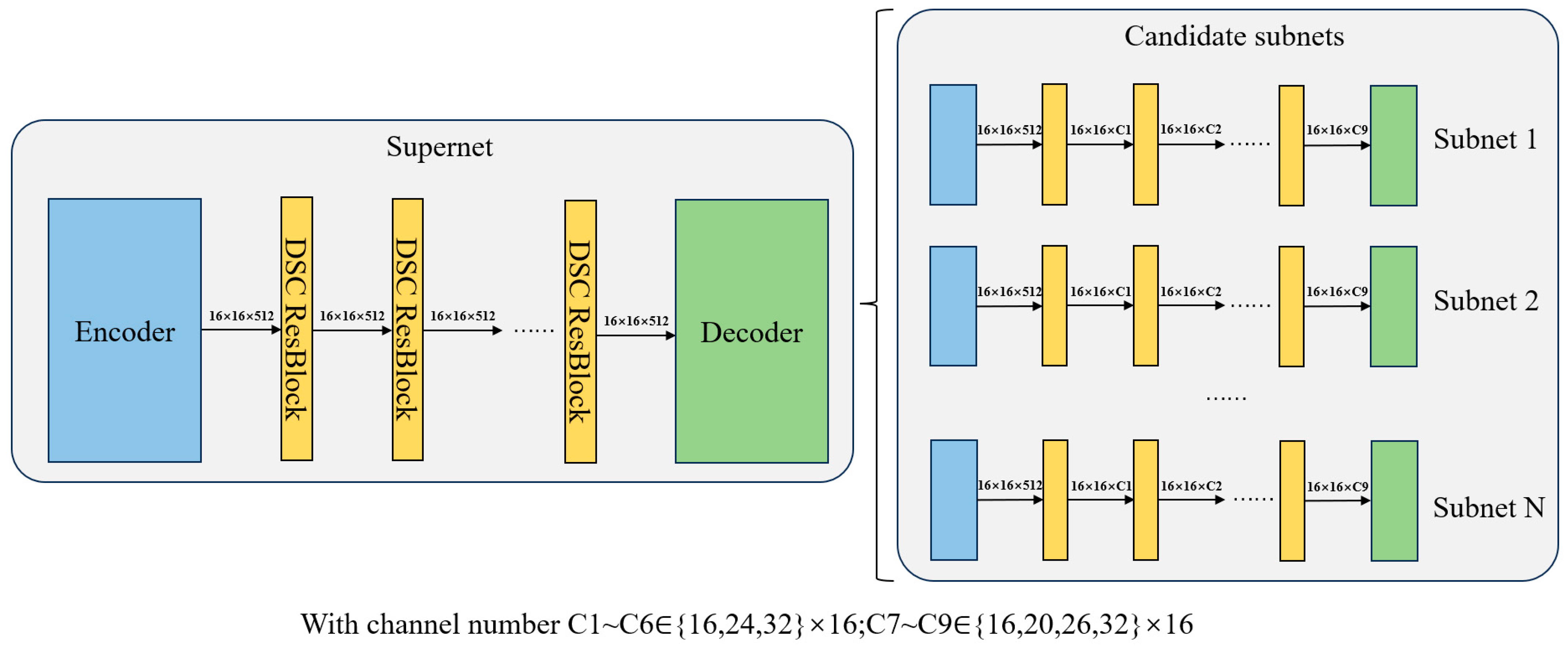

- In response to the limited guidance provided by traditional knowledge distillation strategies to the student network, which is not suitable for SAR-to-optical remote sensing image translation tasks, this paper designs knowledge distillation based on intermediate-layer features of the teacher network. Furthermore, to solve the potential issue of a large number of channel configurations, a channel-pruning strategy based on weight sharing is proposed.

- (3) To overcome the high time cost of traditional brute-force search algorithms, this paper proposes a bio-inspired dynamic search of channel configuration (BDSCC) algorithm. The algorithm constructs a dynamic biological population and simulates biological processes such as fitness evaluation, natural selection, gene recombination, and gene mutation, significantly improving search efficiency.

- (4) To address speckle noise and other issues in SAR images, this paper designs a pixel-semantic dual-domain alignment loss function that jointly optimizes adversarial loss, perceptual loss, and feature-matching loss. The feature-matching loss, combined with the dual-resolution collaborative discriminator, constrains the intermediate-layer features of generated images and target images in the discriminator to follow consistent statistical distributions, achieving cross-layer alignment from pixel-level details to semantic-level structures.
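As a rough illustration of the feature-matching term in contribution (4), the sketch below averages the L1 distance between discriminator feature maps of a generated image and a target image over every layer of every discriminator (for example, a high-resolution and a low-resolution one), then adds it to adversarial and perceptual terms. Feature maps are plain flattened lists here. The function names and the weights `lambda_vgg` and `lambda_fm` are illustrative assumptions, not the paper's formulation or settings.

```python
from typing import List, Sequence

Feature = Sequence[float]   # one flattened intermediate feature map
Features = List[Feature]    # feature maps from every layer of one discriminator


def l1_mean(a: Feature, b: Feature) -> float:
    """Mean absolute difference between two equally sized feature maps."""
    if len(a) != len(b):
        raise ValueError("feature maps must have the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def feature_matching_loss(real: List[Features], fake: List[Features]) -> float:
    """Average the per-layer L1 distance over all discriminators."""
    per_discriminator = []
    for real_feats, fake_feats in zip(real, fake):
        layer_losses = [l1_mean(r, f) for r, f in zip(real_feats, fake_feats)]
        per_discriminator.append(sum(layer_losses) / len(layer_losses))
    return sum(per_discriminator) / len(per_discriminator)


def total_loss(adv: float, vgg: float, fm: float,
               lambda_vgg: float = 10.0, lambda_fm: float = 10.0) -> float:
    """Weighted sum of the three terms; the weights are hypothetical."""
    return adv + lambda_vgg * vgg + lambda_fm * fm


# Toy features: two discriminators, with two layers and one layer respectively.
real_features = [[[1.0, 2.0], [0.0, 4.0]], [[1.0, 1.0]]]
fake_features = [[[1.0, 1.0], [2.0, 4.0]], [[0.0, 1.0]]]

fm_value = feature_matching_loss(real_features, fake_features)
loss_value = total_loss(adv=0.5, vgg=0.2, fm=fm_value)
print(fm_value, loss_value)
```

In a real training loop the feature lists would come from the discriminators' intermediate activations, and the target-image features would be treated as constants when updating the generator.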

2. Related Work

2.1. SAR-to-Optical Image Translation

2.2. Model Compression

- (1)

- (2) Knowledge distillation, which trains a student network to learn from a teacher network so that the performance of the student approximates that of the teacher as closely as possible. The concept of distillation in neural networks was first proposed by Hinton et al. [20]. It is a relatively general and simple neural network compression technique and has been widely adopted in CNN model compression.

- (3) Pruning, which is generally divided into structured pruning and unstructured pruning according to what is pruned. Structured pruning is currently more widely used: it removes whole layers or channels and is suitable for traditional network architectures. Common approaches include pruning convolutional kernels [21] and pruning channels [22]. Unstructured pruning removes individual weights. However, because the pruned weight matrix is sparse, it is difficult to achieve acceleration without specialized hardware.

- (4) Quantization, which converts high-precision computations into low-precision operations. This significantly reduces both the storage required for the parameters and the computational complexity.
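The three techniques described in this list can each be shown in a few lines. The sketch below is a toy, standard-library illustration and not the method used in this paper: a soft-target distillation loss (KL divergence between temperature-softened outputs, a common formulation), structured pruning that keeps the filters with the largest L1 norm, and symmetric 8-bit quantization. All function names and the toy values are assumptions made for the example.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a larger temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return temperature ** 2 * kl


def prune_channels(filters: List[List[float]], keep: int) -> List[int]:
    """Structured pruning: indices of the `keep` filters with the largest L1 norm."""
    norms = [sum(abs(w) for w in f) for f in filters]
    ranked = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:keep])


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric uniform quantization to signed 8-bit integers plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate floating-point weights."""
    return [v * scale for v in quantized]


same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
different = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])

toy_filters = [[0.1, -0.2], [1.0, -1.0], [0.0, 0.05], [0.5, 0.4]]
kept = prune_channels(toy_filters, keep=2)

toy_weights = [1.27, -0.64, 0.0, 0.3]
q_weights, q_scale = quantize_int8(toy_weights)
restored = dequantize(q_weights, q_scale)
print(same, different, kept, q_weights)
```

Storing each weight as an 8-bit integer instead of a 32-bit float shrinks the stored weights by a factor of four, at the cost of a rounding error of at most half the scale per weight.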

3. Methodology

3.1. Holistic Compression Method

3.2. Network Architecture

3.2.1. Overall Architecture

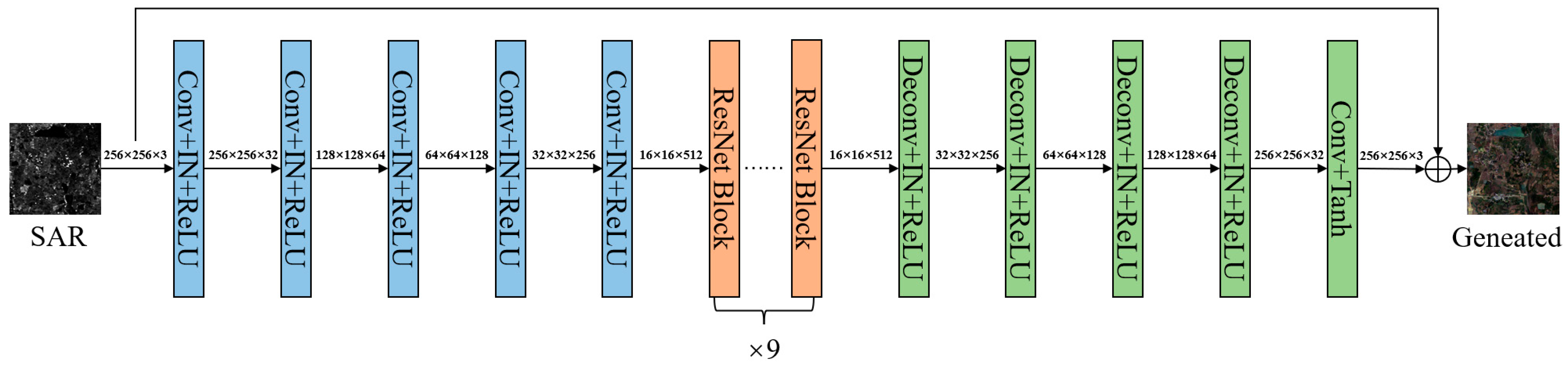

3.2.2. Teacher Generator

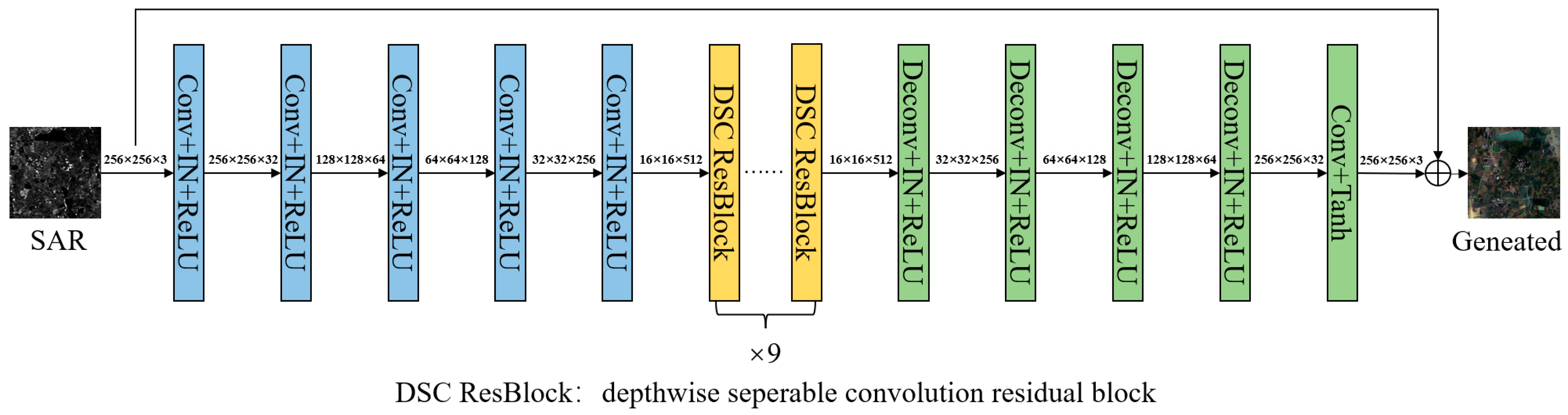

3.2.3. Student Generator

3.2.4. Dual-Resolution Collaborative Discriminator

3.3. Training and Search Strategies of the Student Network

3.3.1. Knowledge Distillation Based on Intermediate-Layer Features

3.3.2. Channel Pruning via Weight Sharing

3.3.3. BDSCC: Bio-Inspired Dynamic Search of Channel Configuration Algorithm

- (1) Fitness evaluation mechanism

- (2) Natural selection mechanism

- (3) Gene recombination mechanism

- (4) Gene mutation mechanism
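The four mechanisms above can be sketched as a small evolutionary search over channel configurations. This is a minimal sketch under stated assumptions, not the authors' BDSCC implementation. The default values follow the parameter table in this article (population size 100, gene mutation probability 0.2, mutation ratio 0.5, recombination ratio 0.25, 200 iterations). Keeping the remaining 25% of each generation as surviving parents, the candidate widths in `CHOICES`, and the `toy_fitness` function with its `TARGET` are all hypothetical stand-ins; in practice the fitness would be a quality score such as FID measured on a sub-network.

```python
import random
from typing import Callable, Tuple

Config = Tuple[int, ...]

CHOICES = (16, 24, 32)  # hypothetical candidate channel widths per layer
NUM_LAYERS = 9          # the searched configurations in this article have nine entries


def random_config(rng: random.Random) -> Config:
    return tuple(rng.choice(CHOICES) for _ in range(NUM_LAYERS))


def mutate(cfg: Config, rng: random.Random, gene_prob: float) -> Config:
    """Gene mutation: each channel width is resampled with probability gene_prob."""
    return tuple(rng.choice(CHOICES) if rng.random() < gene_prob else c for c in cfg)


def recombine(a: Config, b: Config, rng: random.Random) -> Config:
    """Gene recombination: each gene is inherited from one of the two parents."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def search(fitness: Callable[[Config], float], population_size: int = 100,
           iterations: int = 200, parent_ratio: float = 0.25,
           mutation_ratio: float = 0.5, recombination_ratio: float = 0.25,
           gene_prob: float = 0.2, seed: int = 0) -> Tuple[Config, float]:
    """Return the configuration with the lowest fitness found (lower is better)."""
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(population_size)]
    n_parents = max(2, int(population_size * parent_ratio))
    n_mutants = int(population_size * mutation_ratio)
    n_children = int(population_size * recombination_ratio)
    best = min(population, key=fitness)
    for _ in range(iterations):
        # Fitness evaluation and natural selection: the fittest individuals survive.
        parents = sorted(population, key=fitness)[:n_parents]
        if fitness(parents[0]) < fitness(best):
            best = parents[0]
        mutants = [mutate(rng.choice(parents), rng, gene_prob) for _ in range(n_mutants)]
        children = [recombine(*rng.sample(parents, 2), rng) for _ in range(n_children)]
        population = parents + mutants + children
    best = min(population + [best], key=fitness)
    return best, fitness(best)


TARGET = (32, 16, 32, 16, 16, 24, 32, 24, 32)  # hypothetical optimum for the toy fitness


def toy_fitness(cfg: Config) -> float:
    """Stand-in for an expensive evaluation: distance to a made-up target."""
    return float(sum(abs(c - t) for c, t in zip(cfg, TARGET)))


best_cfg, best_score = search(toy_fitness, iterations=50)
print("_".join(map(str, best_cfg)), best_score)
```

Because the surviving parents are carried into the next generation, the best score never gets worse from one iteration to the next. With an expensive fitness such as FID, caching the score of each evaluated configuration would avoid repeated evaluations.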

3.4. Loss Function

3.4.1. Adversarial Loss

3.4.2. Perceptual Loss

3.4.3. Feature-Matching Loss

3.4.4. Total Loss

4. Results

4.1. Experimental Procedures

4.2. Datasets and Parameter Settings

4.3. Evaluation Metrics

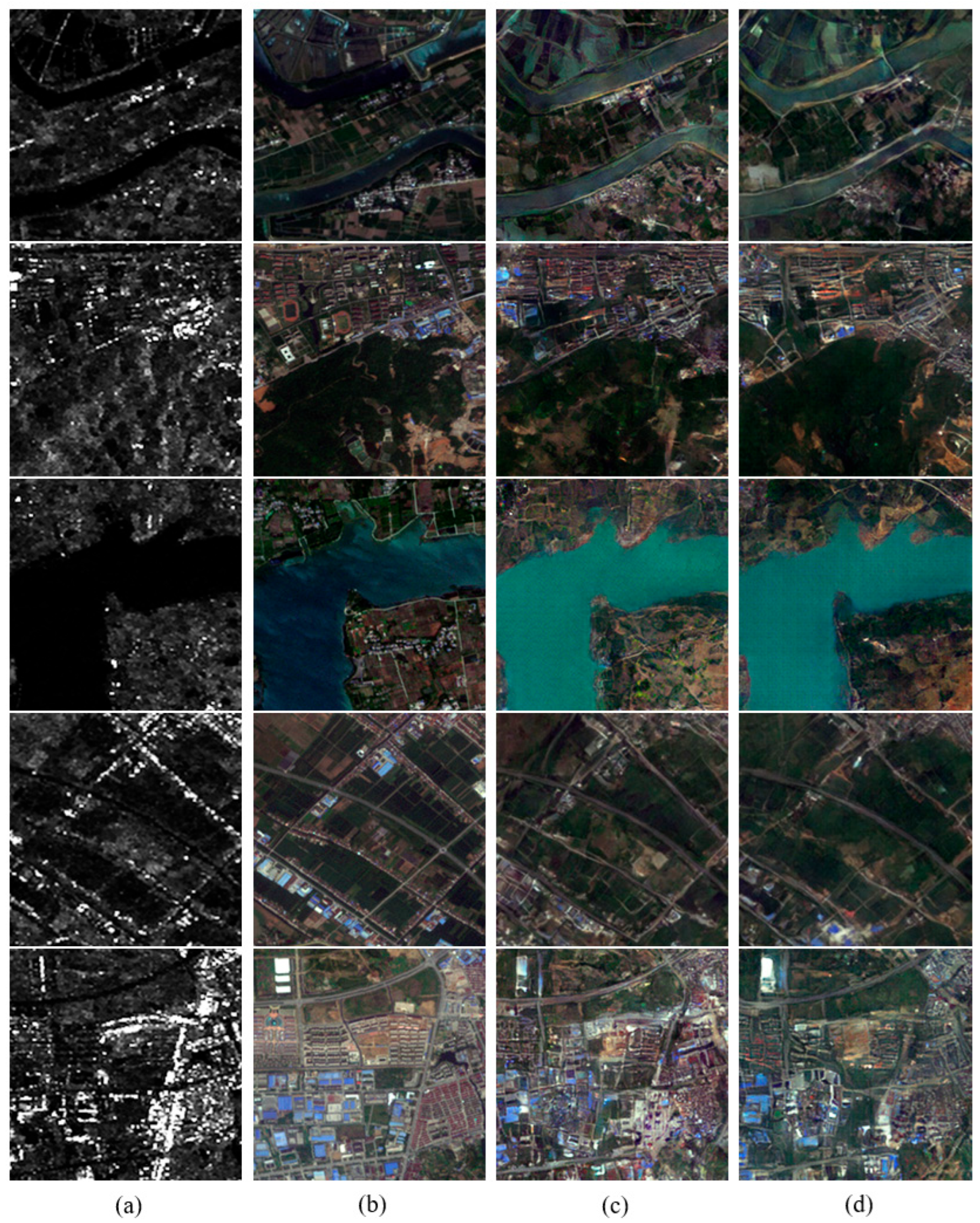

4.4. Results and Analysis

4.4.1. Results of Searching the Supernet

4.4.2. Comparison with the Teacher Network

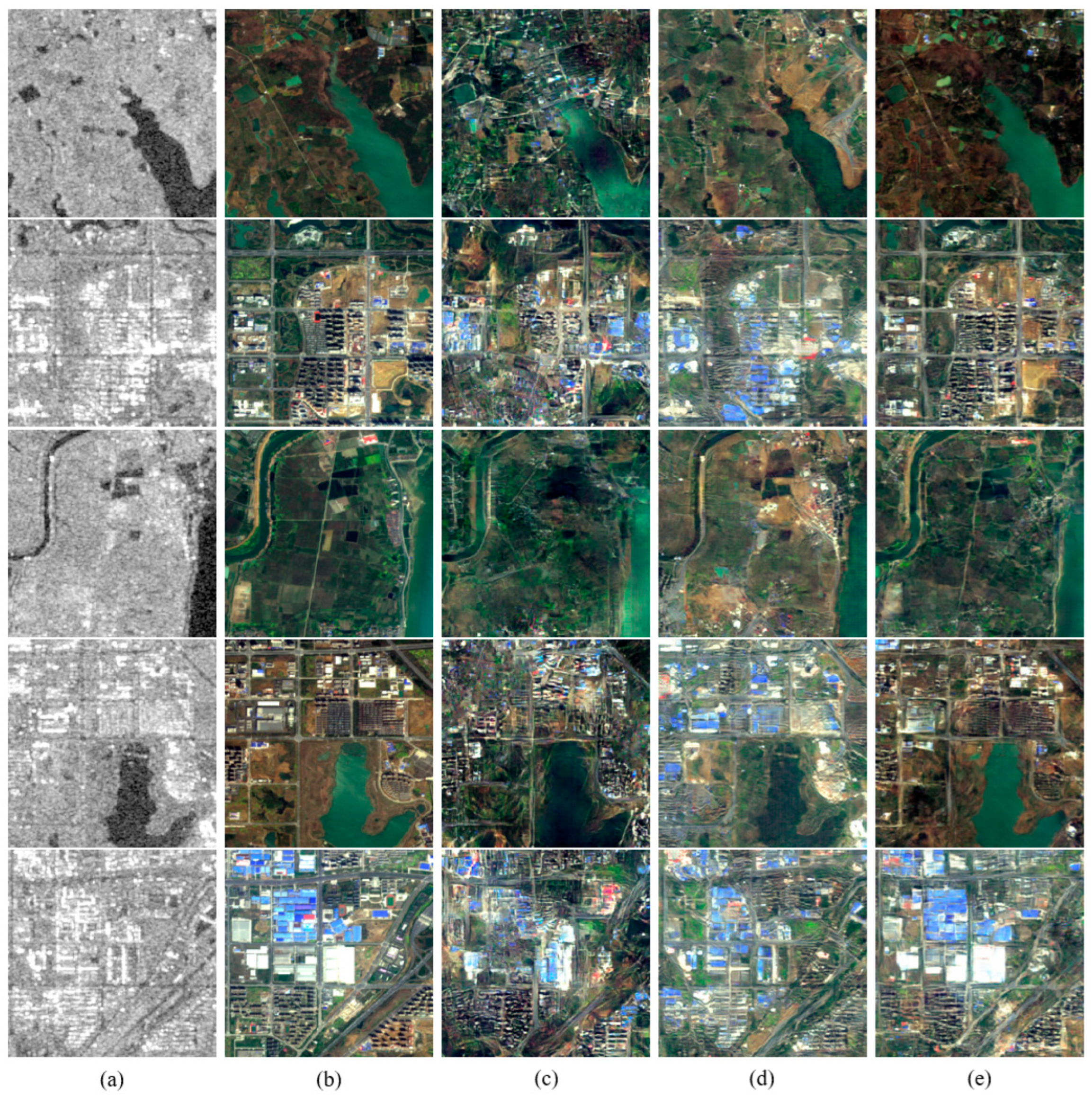

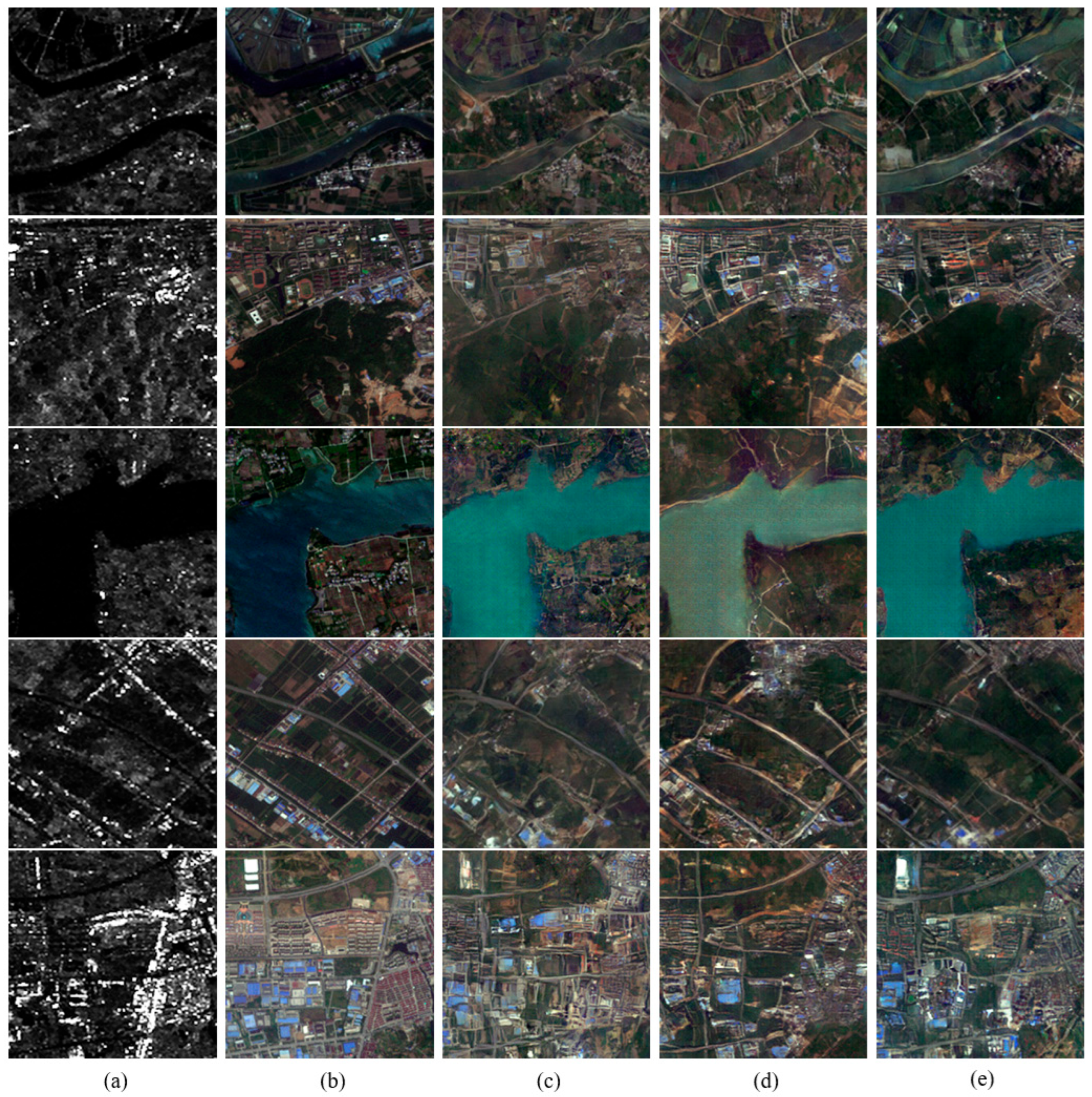

4.4.3. Analysis of Different Networks

4.4.4. Ablation Experiments

4.4.5. Loss Function Analysis

5. Conclusions

- (1) Although image translation networks that combine Transformer and CNN can significantly improve the quality of generated images, the Transformer still suffers from a large number of parameters and high computational complexity. Moreover, research on compressing and accelerating the Transformer is not yet mature. Therefore, a potential future research direction is Transformer compression, aiming to achieve effective network compression while maintaining the quality of the generated images.

- (2) The compressed network proposed in this paper is supervised and relies on strictly paired SAR-optical remote sensing image datasets. However, obtaining precisely paired datasets is very challenging in practical applications. Thus, future research could focus on unsupervised compressed networks for SAR-to-optical remote sensing image translation.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xue, W.; Ai, J.; Zhu, Y.; Chen, J.; Zhuang, S. AIS-FCANet: Long-Term AIS Data Assisted Frequency-Spatial Contextual Awareness Network for Salient Ship Detection in SAR Imagery. IEEE Trans. Aerosp. Electron. Syst. 2025, 1–6.

- Zhou, H.; Yang, J.; Zhang, T.; Dai, A.; Wu, C. EAS-CNN: Automatic Design of Convolutional Neural Network for Remote Sensing Images Semantic Segmentation. Int. J. Remote Sens. 2023, 44, 3911–3938.

- Manoharan, T.; Basha, S.H.; Murugan, J.S.; Suja, G.P.; Rajkumar, R.; Srimathi, S. A Novel Framework for Classifying Remote Sensing Images Using Convolutional Neural Networks. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; Volume 1, pp. 1–6.

- Vasileiou, C.; Smith, J.; Thiagarajan, S.; Nigh, M.; Makris, Y.; Torlak, M. Efficient CNN-Based Super Resolution Algorithms for mmWave Mobile Radar Imaging. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3803–3807.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Neural Information Processing Systems (NIPS): San Diego, CA, USA, 2014; Volume 27, pp. 2672–2680.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

- Wang, T.-C.; Liu, M.-Y.; Zhu, J.-Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8798–8807.

- Fu, S.; Xu, F.; Jin, Y.-Q. Reciprocal Translation between SAR and Optical Remote Sensing Images with Cascaded-Residual Adversarial Networks. Sci. China Inf. Sci. 2021, 64, 122301.

- Tan, D.; Liu, Y.; Li, G.; Yao, L.; Sun, S.; He, Y. Serial GANs: A Feature-Preserving Heterogeneous Remote Sensing Image Transformation Model. Remote Sens. 2021, 13, 3968.

- Zhang, Q.; Liu, X.; Liu, M.; Zou, X.; Zhu, L.; Ruan, X. Comparative Analysis of Edge Information and Polarization on SAR-to-Optical Translation Based on Conditional Generative Adversarial Networks. Remote Sens. 2021, 13, 128.

- Turnes, J.N.; Bermudez Castro, J.D.; Torres, D.L.; Soto Vega, P.J.; Feitosa, R.Q.; Happ, P.N. Atrous cGAN for SAR to Optical Image Translation. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4003905.

- Zhan, T.; Bian, J.; Yang, J.; Dang, Q.; Zhang, E. Improved Conditional Generative Adversarial Networks for SAR-to-Optical Image Translation. In Proceedings of the Pattern Recognition and Computer Vision, PRCV 2023, Part IV, Xiamen, China, 13–15 October 2023; Liu, Q., Wang, H., Ma, Z., Zheng, W., Zha, H., Chen, X., Wang, L., Ji, R., Eds.; Springer-Verlag Singapore Pte Ltd.: Singapore, 2024; Volume 14428, pp. 279–291.

- Shi, H.; Cui, Z.; Chen, L.; He, J.; Yang, J. A Brain-Inspired Approach for SAR-to-Optical Image Translation Based on Diffusion Models. Front. Neurosci. 2024, 18, 1352841.

- Ji, G.; Wang, Z.; Zhou, L.; Xia, Y.; Zhong, S.; Gong, S. SAR Image Colorization Using Multidomain Cycle-Consistency Generative Adversarial Network. IEEE Geosci. Remote Sens. Lett. 2021, 18, 296–300.

- Hwang, J.; Shin, Y. SAR-to-Optical Image Translation Using SSIM Loss Based Unpaired GAN. In Proceedings of the 2022 13th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 19–21 October 2022; pp. 917–920.

- Yang, X.; Wang, Z.; Zhao, J.; Yang, D. FG-GAN: A Fine-Grained Generative Adversarial Network for Unsupervised SAR-to-Optical Image Translation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5621211.

- Wang, J.; Yang, H.; He, Y.; Zheng, F.; Liu, Z.; Chen, H. An Unpaired SAR-to-Optical Image Translation Method Based on Schrodinger Bridge Network and Multi-Scale Feature Fusion. Sci. Rep. 2024, 14, 27047.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 4510–4520.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 5987–5995.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Luo, J.-H.; Wu, J.; Lin, W. ThiNet: A Filter Level Pruning Method for Deep Neural Network Compression. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 5068–5076.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning Filters for Efficient ConvNets. arXiv 2017, arXiv:1608.08710.

- Li, S.; Lin, M.; Wang, Y.; Chao, F.; Shao, L.; Ji, R. Learning Efficient GANs for Image Translation via Differentiable Masks and Co-Attention Distillation. IEEE Trans. Multimed. 2023, 25, 3180–3189.

- You, L.; Hu, T.; Chao, F. Enhancing GAN Compression by Image Probability Distribution Distillation. In Pattern Recognition and Computer Vision; Liu, Q., Wang, H., Ma, Z., Zheng, W., Zha, H., Chen, X., Wang, L., Ji, R., Eds.; Lecture Notes in Computer Science; Springer Nature Singapore: Singapore, 2024; Volume 14435, pp. 76–88. ISBN 978-981-99-8551-7.

- Lin, Y.-J.; Yang, S.-H. Compressing Generative Adversarial Networks Using Improved Early Pruning. In Proceedings of the 2024 11th International Conference on Consumer Electronics-Taiwan, ICCE-Taiwan 2024, Taichung, Taiwan, 9–11 July 2024; IEEE: New York, NY, USA, 2024; pp. 39–40.

- Yeo, S.; Jang, Y.; Yoo, J. Nickel and Diming Your GAN: A Dual-Method Approach to Enhancing GAN Efficiency via Knowledge Distillation. In Proceedings of the Computer Vision – ECCV 2024, Part LXXXVIII, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer International Publishing AG: Cham, Switzerland, 2025; Volume 15146, pp. 104–121.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Neural Information Processing Systems (NIPS): San Diego, CA, USA, 2017; Volume 30.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Cai, H.; Gan, C.; Wang, T.; Zhang, Z.; Han, S. Once-for-All: Train One Network and Specialize It for Efficient Deployment. Available online: https://arxiv.org/abs/1908.09791v5 (accessed on 19 June 2025).

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Neural Information Processing Systems (NIPS): San Diego, CA, USA, 2017; Volume 30.

- Kong, Y.; Liu, S.; Peng, X. Multi-Scale Translation Method from SAR to Optical Remote Sensing Images Based on Conditional Generative Adversarial Network. Int. J. Remote Sens. 2022, 43, 2837–2860.

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.K.; Wang, Z.; Smolley, S.P. Least Squares Generative Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 2813–2821.

- Johnson, J.; Alahi, A.; Li, F.-F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Proceedings of the Computer Vision – ECCV 2016, Part II, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing AG: Cham, Switzerland, 2016; Volume 9906, pp. 694–711.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 586–595.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. In Proceedings of the Advances in Neural Information Processing Systems 29 (NIPS 2016), Barcelona, Spain, 5–10 December 2016; Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R., Eds.; Neural Information Processing Systems (NIPS): San Diego, CA, USA, 2016; Volume 29.

- Schmitt, M.; Hughes, L.H.; Zhu, X.X. The Sen1-2 Dataset for Deep Learning in SAR-Optical Data Fusion. In Proceedings of the ISPRS TC I Mid-Term Symposium Innovative Sensing – From Sensors to Methods and Applications, Changsha, China, 10–12 October 2018; Jutzi, B., Weinmann, M., Hinz, S., Eds.; Copernicus Gesellschaft mbH: Göttingen, Germany, 2018; Volume 4–1, pp. 141–146.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 2242–2251.

| Parameter | Value |
|---|---|
| Population size | 100 |
| Probability of individual gene mutation | 0.2 |
| Ratio of population gene mutation | 0.5 |
| Ratio of population gene recombination | 0.25 |
| Number of iterations | 200 |

| Number of Iterations | Channel Configuration | FID ↓ | MACs ↓ |
|---|---|---|---|
| 1 | 16_32_16_16_16_32_26_26_32 | 86.71 | 7.58 G |
| 2–4 | 24_32_32_32_24_32_26_32_32 | 86.30 | 7.81 G |
| 5–83 | 32_16_16_16_24_16_26_26_20 | 85.60 | 7.49 G |
| 84–87 | 32_24_24_16_24_24_26_32_20 | 85.59 | 7.62 G |
| 88–168 | 32_32_16_32_32_24_32_32_32 | 85.21 | 7.80 G |
| 169–200 | 32_16_32_16_16_24_32_20_32 | 85.02 | 7.61 G |

| Network | FID ↓ | LPIPS ↓ | MSE ↓ | PSNR ↑ | SSIM ↑ | Number of Parameters (G) ↓ | Number of Parameters (D) ↓ |
|---|---|---|---|---|---|---|---|
| Teacher network | 79.08 | 0.5801 | 0.5867 | 17.02 | 0.1987 | 45.621 M | 5.534 M |
| ILF-BDSNet | 78.50 | 0.5852 | 0.5882 | 16.93 | 0.2124 | 6.838 M | 5.534 M |

| Network | Number of Parameters ↓ | MACs ↓ | Image-Processing Time ↓ | GPU Memory Usage ↓ |
|---|---|---|---|---|
| Teacher network | 45.621 M | 17.54 G | 0.0199 s/img | 6965 MB |
| ILF-BDSNet | 6.838 M | 7.61 G | 0.0158 s/img | 5175 MB |
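To make the efficiency comparison in the table above easier to read, the short calculation below derives the relative reduction of ILF-BDSNet against the teacher network from the reported values. The helper name `reduction_percent` and the dictionary keys are illustrative only.

```python
def reduction_percent(teacher: float, student: float) -> float:
    """Relative reduction of the student versus the teacher, in percent."""
    return (1.0 - student / teacher) * 100.0


# (teacher, ILF-BDSNet) pairs copied from the efficiency table.
metrics = {
    "parameters (M)": (45.621, 6.838),
    "MACs (G)": (17.54, 7.61),
    "time (s/img)": (0.0199, 0.0158),
    "GPU memory (MB)": (6965, 5175),
}

reductions = {name: reduction_percent(t, s) for name, (t, s) in metrics.items()}
for name, value in reductions.items():
    print(f"{name}: {value:.1f}% lower than the teacher")
```

On these figures the compressed generator has about 85.0% fewer parameters and 56.6% fewer MACs than the teacher, while per-image processing time and GPU memory usage fall by about 20.6% and 25.7%.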

| Network | FID ↓ | LPIPS ↓ | MSE ↓ | PSNR ↑ | SSIM ↑ | Number of Parameters (G) ↓ | Number of Parameters (D) ↓ |
|---|---|---|---|---|---|---|---|
| Pix2pix | 142.10 | 0.6145 | 0.7427 | 15.03 | 0.1237 | 54.414 M | 2.769 M |
| CycleGAN | 99.14 | 0.5919 | 0.6995 | 15.59 | 0.1645 | 22.756 M | 5.530 M |
| ILF-BDSNet | 78.50 | 0.5852 | 0.5882 | 16.93 | 0.2124 | 6.838 M | 5.534 M |

| Network | FID ↓ | LPIPS ↓ | MSE ↓ | PSNR ↑ | SSIM ↑ | Number of Parameters (G) ↓ | Number of Parameters (D) ↓ |
|---|---|---|---|---|---|---|---|
| Pix2pix | 109.84 | 0.5640 | 0.5942 | 14.73 | 0.1780 | 54.414 M | 2.769 M |
| CycleGAN | 122.27 | 0.6024 | 0.7183 | 13.08 | 0.1157 | 22.756 M | 5.530 M |
| ILF-BDSNet | 67.56 | 0.4590 | 0.4347 | 17.47 | 0.3072 | 6.838 M | 5.534 M |

| Configuration | FID ↓ | LPIPS ↓ | MSE ↓ | PSNR ↑ | SSIM ↑ | Number of Parameters (G) ↓ | Number of Parameters (D) ↓ |
|---|---|---|---|---|---|---|---|
| Single discriminator | 84.39 | 0.5850 | 0.5961 | 16.85 | 0.2066 | 6.838 M | 2.767 M |
| Traditional distillation | 85.63 | 0.5846 | 0.5986 | 16.78 | 0.1997 | 6.838 M | 5.534 M |
| Without pruning | 82.32 | 0.5825 | 0.5808 | 17.04 | 0.2123 | 7.964 M | 5.534 M |
| Lightweight network | 107.45 | 0.6021 | 0.6093 | 16.69 | 0.1981 | 6.420 M | 5.534 M |
| ILF-BDSNet | 78.50 | 0.5852 | 0.5882 | 16.93 | 0.2124 | 6.838 M | 5.534 M |

| Configuration | FID ↓ | LPIPS ↓ | MSE ↓ | PSNR ↑ | SSIM ↑ |
|---|---|---|---|---|---|
| w/o vgg | 92.83 | 0.5912 | 0.6061 | 16.80 | 0.1881 |
| w/o feat | 84.24 | 0.5938 | 0.6541 | 16.23 | 0.1810 |
| w/ vgg & feat | 78.50 | 0.5852 | 0.5882 | 16.93 | 0.2124 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kong, Y.; Xu, C. ILF-BDSNet: A Compressed Network for SAR-to-Optical Image Translation Based on Intermediate-Layer Features and Bio-Inspired Dynamic Search. Remote Sens. 2025, 17, 3351. https://doi.org/10.3390/rs17193351
