CGAQ-DETR: DETR with Corner Guided and Adaptive Query for SAR Object Detection

Abstract

Highlights

- CGAQ-DETR, a DETR variant with corner guidance and adaptive queries for SAR object detection, achieves state-of-the-art mAP@50 scores of 69.8% on SARDet-100K and 92.9% on FAIR-CSAR.

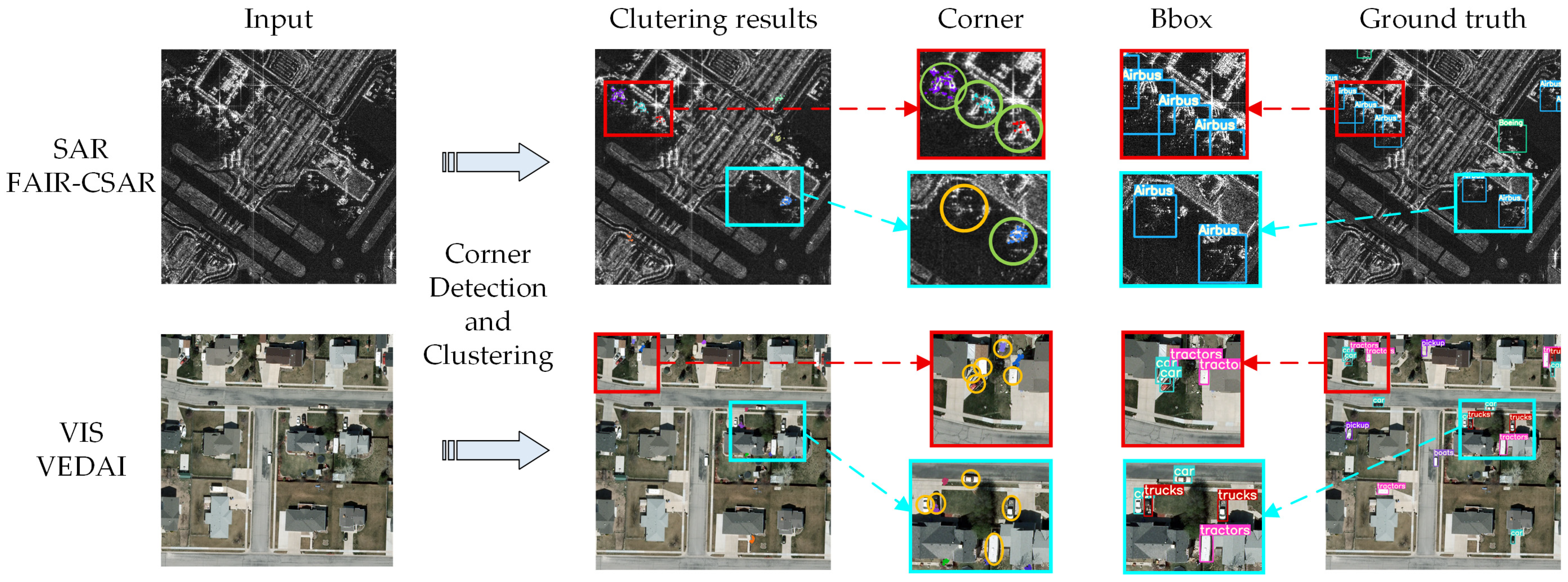

- A Corner-Guided Multi-Scale Feature Enhancement Module (CMFE) and an Adaptive Query Regression Module (AQR) are introduced, enabling the model to perform adaptive, high-precision detection despite fluctuations in the scale and number of SAR objects.

- This method effectively addresses challenges in SAR object detection, such as fluctuations in object quantity, scale variations, and discrete characteristics, while exploring new applications of DETR’s adaptive query mechanism.

- This method provides an efficient, input data-driven solution that is applicable to standard SAR detection tasks, without the need for architectural modifications or extensive retraining.


1. Introduction

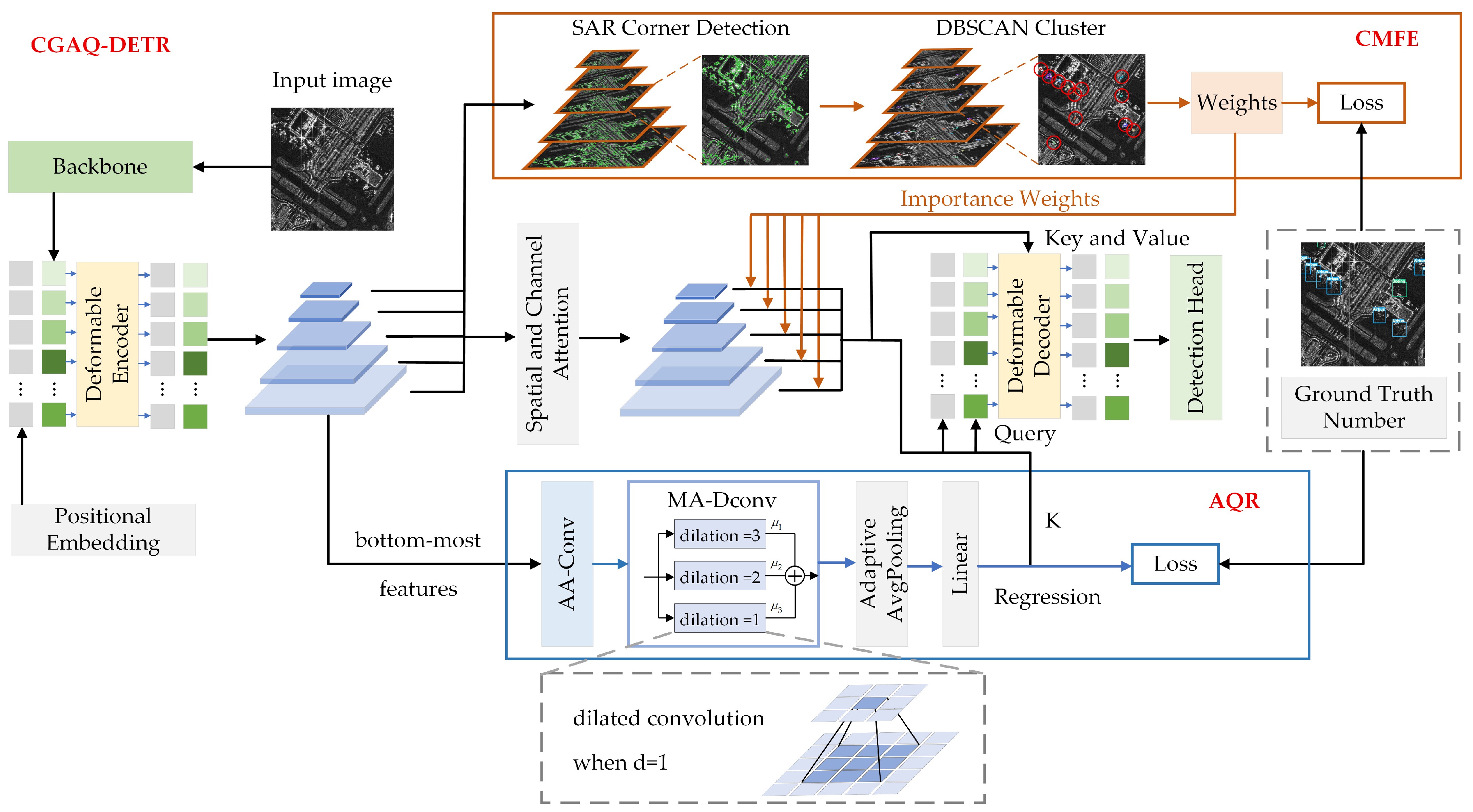

- We propose a novel SAR object detection method named CGAQ-DETR, which is the first detector designed to simultaneously leverage the discrete characteristics of SAR objects and address the frequent fluctuations in object scale and quantity within this task.

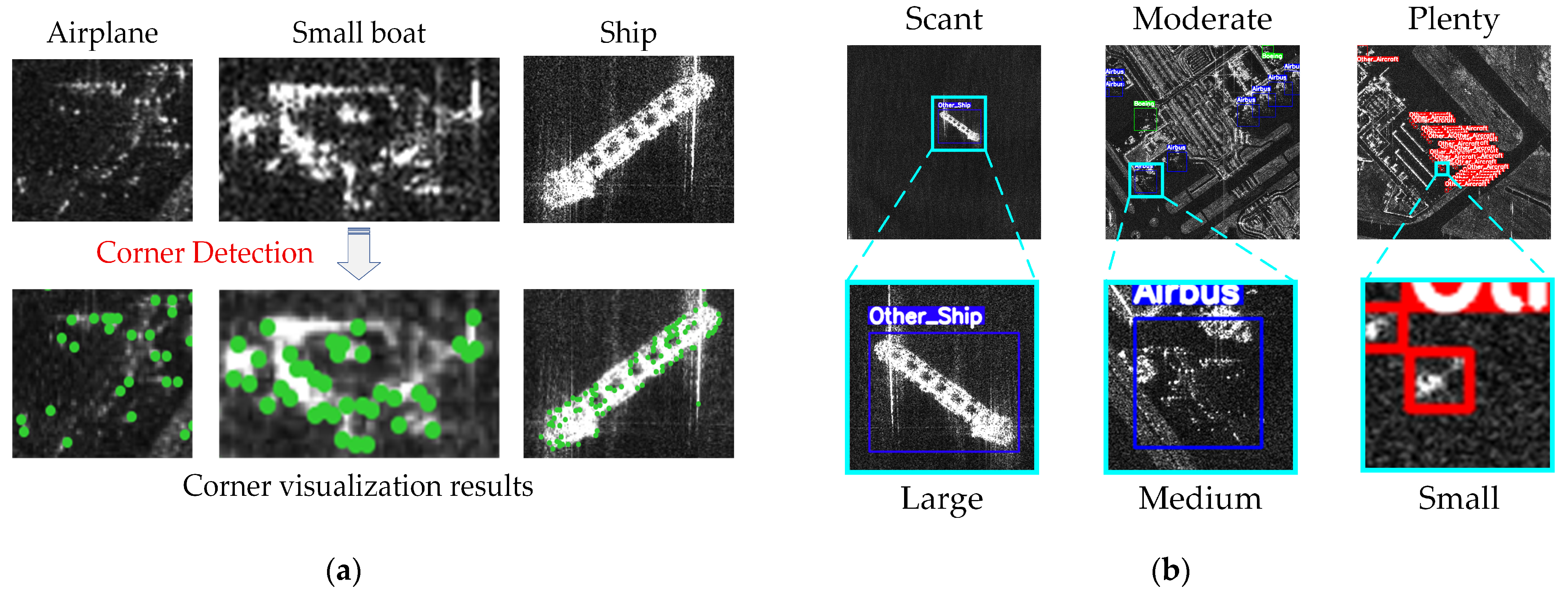

- To address the issue of object scale variation, we designed a Corner-Guided Multi-Scale Feature Enhancement Module (CMFE), which evaluates the object scale and quantity based on the discrete characteristics of SAR objects. This module, in conjunction with ground truth, enhances multi-scale features during training to enable the model to dynamically focus on important feature layers.

- To address the issue of object quantity fluctuations, we designed an Adaptive Query Regression Module (AQR), which leverages the most informative low-level features to perform lightweight object quantity estimation, enabling fine-grained dynamic adjustment of the number of object queries K.

- Our method is evaluated through detailed dataset statistics and extensive experiments on the SARDet-100K and FAIR-CSAR datasets, where it reaches mAP@50 scores of 69.8% and 92.9%, respectively. The results indicate that it performs well across datasets with different scale and quantity distributions.
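The two adaptive ideas in the contribution list can be illustrated with a minimal sketch. This is not the CMFE or AQR implementation: the level assignment below swaps in the well-known FPN heuristic as a stand-in for scale-aware weighting of feature layers, and the query rule is a hypothetical clamp-and-round policy. The function names and the parameters `smoothing`, `margin`, `k_min`, `k_max`, and `step` are assumptions made for illustration only.

```python
import math
from collections import Counter


def fpn_level(width, height, k0=4, canonical=224, level_min=2, level_max=5):
    """Assign a box to a pyramid level with the FPN heuristic (stand-in, not CMFE)."""
    k = math.floor(k0 + math.log2(math.sqrt(width * height) / canonical))
    return max(level_min, min(level_max, k))


def level_weights(boxes, level_min=2, level_max=5, smoothing=1.0):
    """Turn (width, height) boxes into normalized per-level weights (hypothetical helper)."""
    counts = Counter(
        fpn_level(w, h, level_min=level_min, level_max=level_max) for w, h in boxes
    )
    raw = {lvl: counts.get(lvl, 0) + smoothing for lvl in range(level_min, level_max + 1)}
    total = sum(raw.values())
    return {lvl: value / total for lvl, value in raw.items()}


def adaptive_num_queries(estimated_count, margin=2.0, k_min=100, k_max=900, step=50):
    """Hypothetical rule for K: scale the estimated object count by a safety
    margin, round up to a multiple of `step`, then clamp to [k_min, k_max]."""
    if estimated_count < 0:
        raise ValueError("estimated_count must be non-negative")
    k = math.ceil(margin * estimated_count / step) * step
    return max(k_min, min(k_max, k))


# Three small objects and one large object (sizes in pixels, made up for the example).
boxes = [(12, 10), (20, 16), (30, 28), (400, 380)]
weights = level_weights(boxes)
num_queries = adaptive_num_queries(estimated_count=120)
print(weights, num_queries)
```

In this toy input the three small boxes fall on the finest level, so that level receives the largest weight, while an estimated count of 120 objects yields K = 250 under the assumed margin and step.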

2. Related Work

2.1. Detection Transformer

2.2. SAR Object Detection

2.3. Physical Characteristics of SAR

3. Materials and Methods

3.1. Corner-Guided Multi-Scale Feature Enhancement Module

3.2. Adaptive Query Regression Module

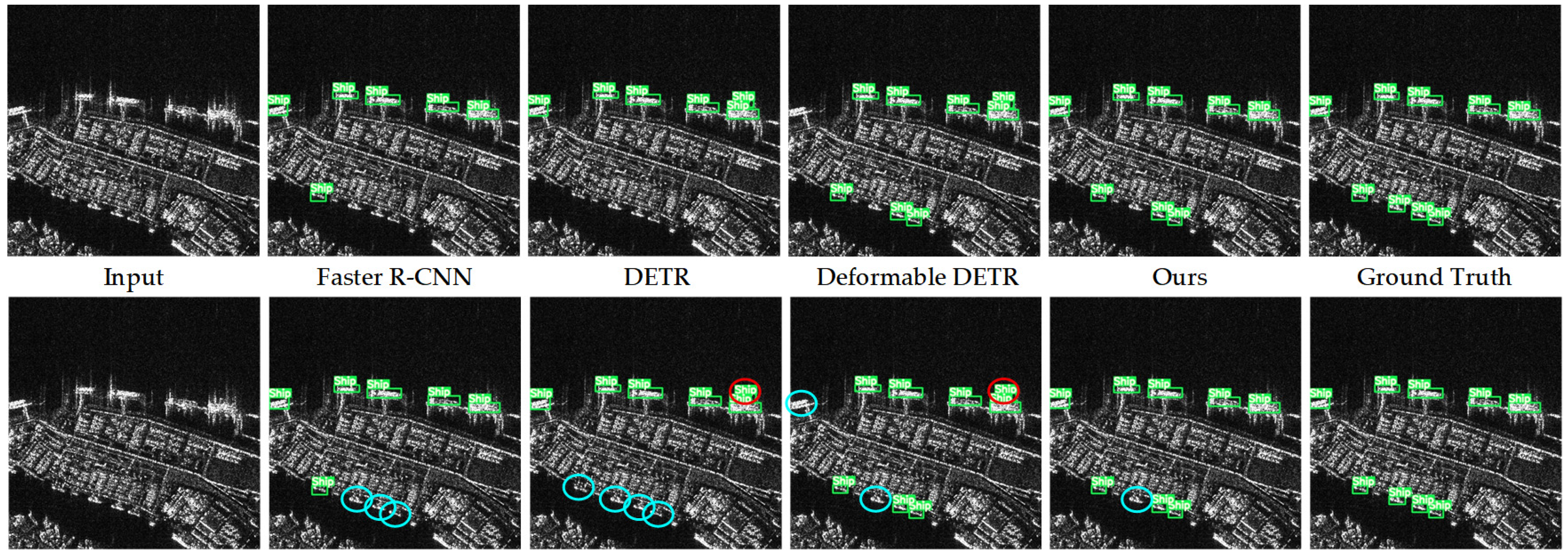

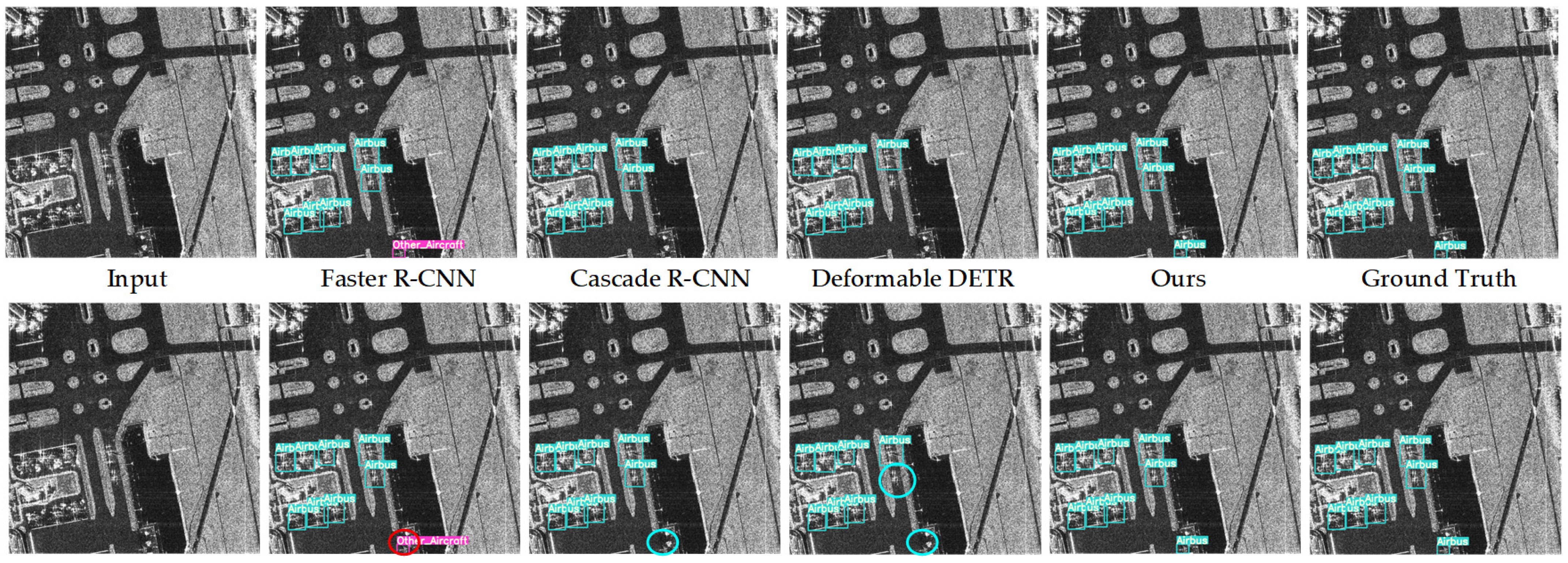

4. Results

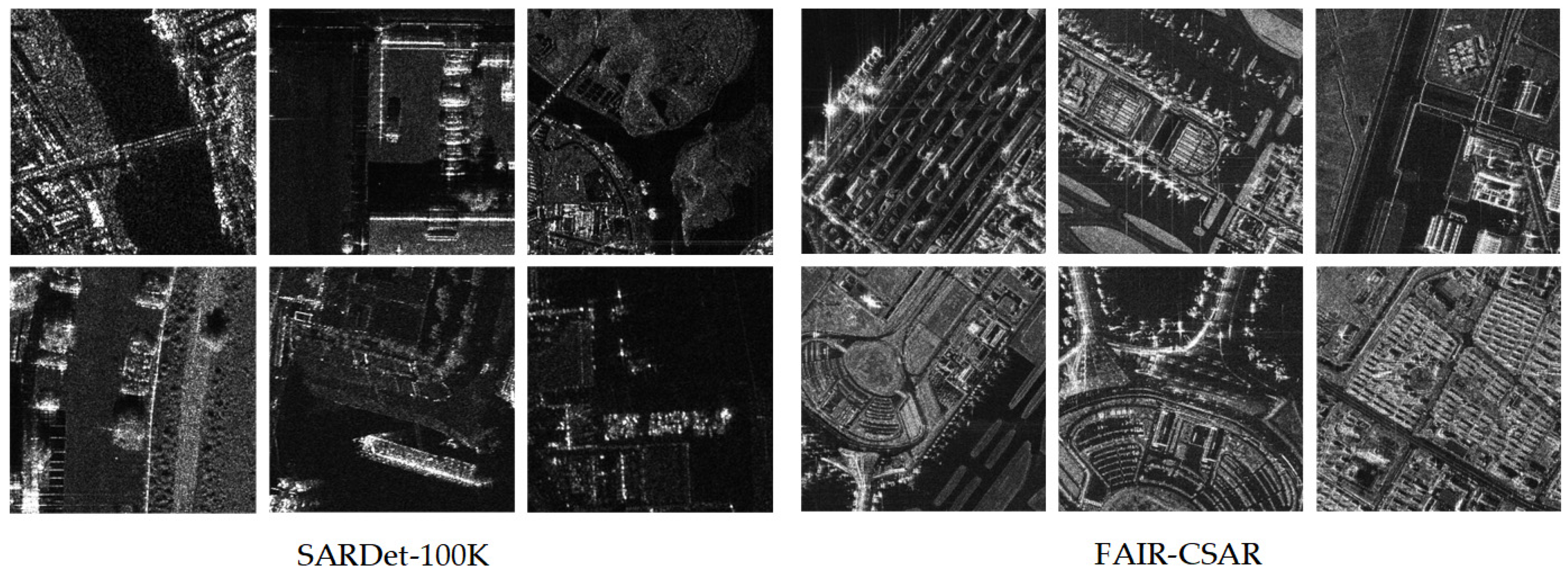

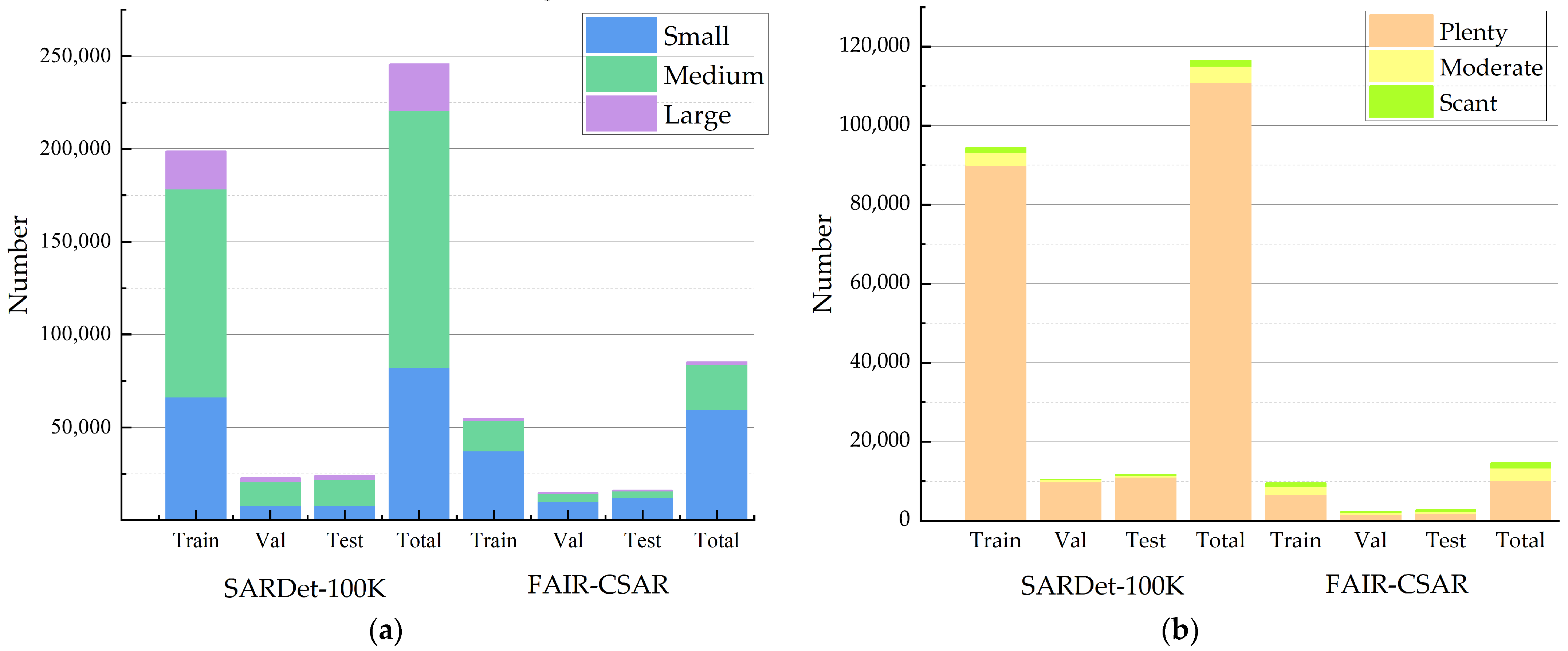

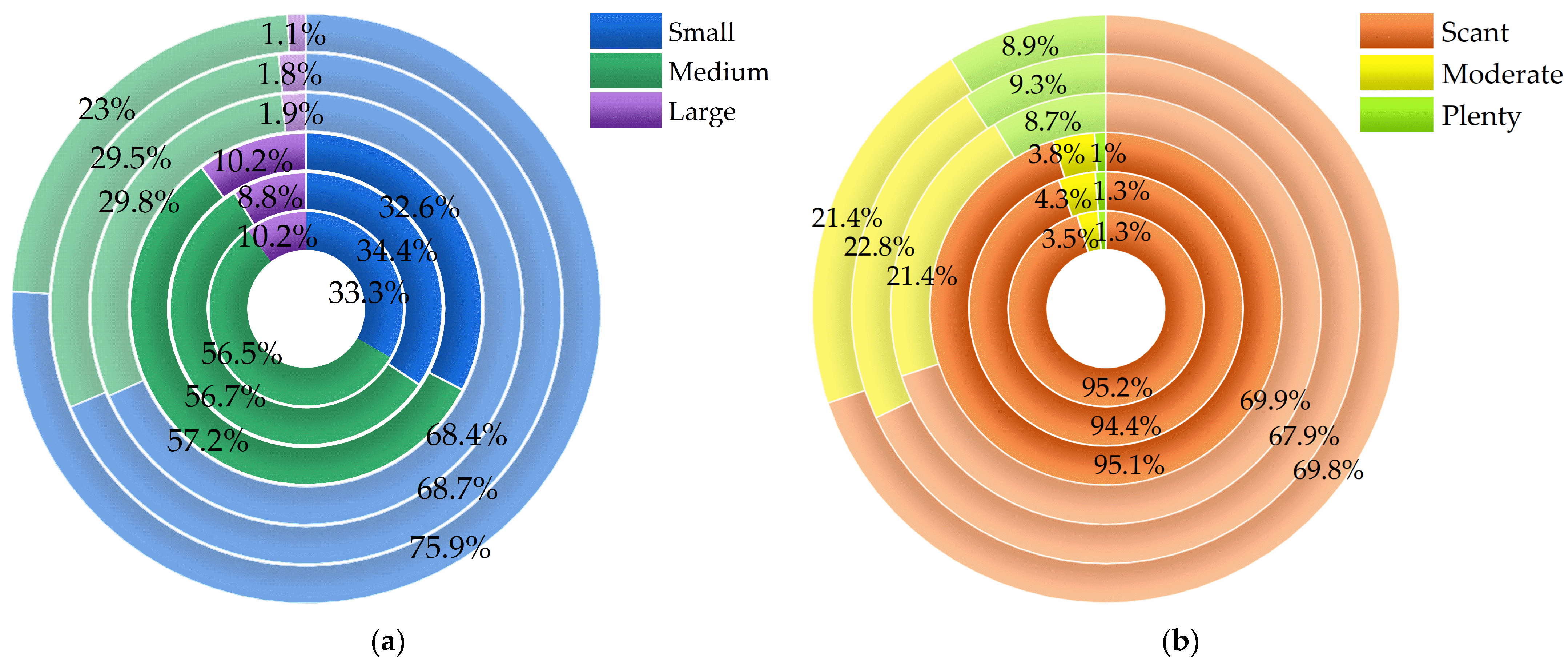

4.1. Datasets

4.2. Implementation Details

4.3. Experiment Results

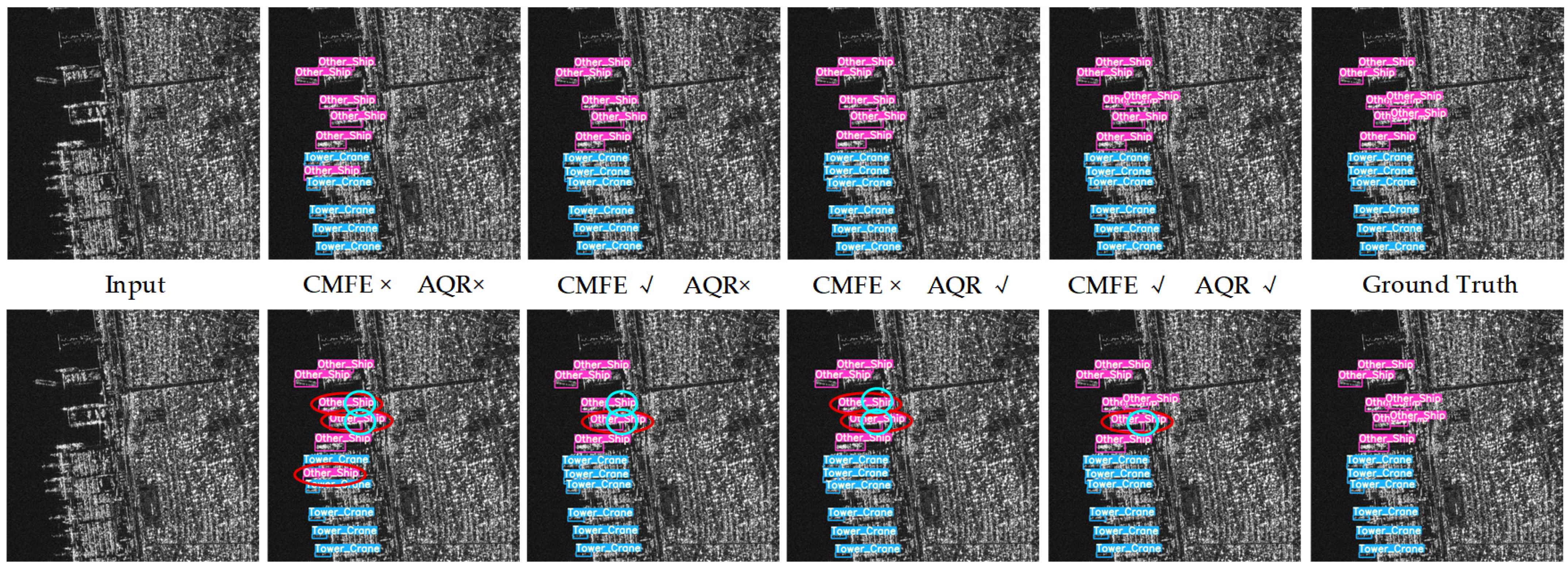

4.4. Ablation Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Moreira, A.; Prats-Iraola, P.; Younis, M.; Krieger, G.; Hajnsek, I.; Papathanassiou, K.P. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–43.

2. Xu, G.; Gao, Y.; Li, J.; Xing, M. InSAR phase denoising: A review of current technologies and future directions. IEEE Geosci. Remote Sens. Mag. 2020, 8, 64–82.

3. Chen, J.; Xing, M.; Yu, H.; Liang, B.; Peng, J.; Sun, G.-C. Motion compensation/autofocus in airborne synthetic aperture radar: A review. IEEE Geosci. Remote Sens. Mag. 2022, 10, 185–206.

4. Li, C.; Yue, C.; Li, H.; Wang, Z. Context-aware SAR image ship detection and recognition network. Front. Neurorobotics 2024, 18, 1293992.

5. Guo, H.; Yang, X.; Wang, N.; Gao, X. A Centernet++ model for ship detection in SAR images. Pattern Recognit. 2021, 112, 107787.

6. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

7. Zhou, G.; Xu, Z.; Fan, Y.; Zhang, Z.; Qiu, X.; Zhang, B.; Fu, K.; Wu, Y. HPHR-SAR-Net: Hyperpixel High-Resolution SAR Imaging Network Based on Nonlocal Total Variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8595–8608.

8. Zhao, G.; Li, P.; Zhang, Z.; Guo, F.; Huang, X.; Xu, W.; Chen, J. Towards SAR Automatic Target Recognition: Multi-Category SAR Image Classification Based on Light Weight Vision Transformer. In Proceedings of the 2024 21st Annual International Conference on Privacy, Security and Trust (PST), Sydney, Australia, 28–30 August 2024; pp. 1–6.

9. Gai, J.; Li, C. Semi-Supervised Multiscale Matching for SAR-Optical Image. arXiv 2025, arXiv:2508.07812.

10. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Proc. Adv. Neural Inf. Process. Syst. (NIPS) 2020, 33, 6840–6851.

11. Xiong, X.; Zhang, X.; Jiang, W.; Liu, L.; Liu, Y.; Liu, T. SAR-GTR: Attributed Scattering Information Guided SAR Graph Transformer Recognition Algorithm. arXiv 2025, arXiv:2505.08547.

12. Cheng, X.; He, Y.; Zhu, J.; Qiu, C.; Wang, J.; Huang, Q.; Yang, K. SAR-TEXT: A Large-Scale SAR Image-Text Dataset Built with SAR-Narrator and Progressive Transfer Learning. arXiv 2025, arXiv:2507.18743.

13. Luo, B.; Cao, H.; Cui, J.; Lv, X.; He, J.; Li, H.; Peng, C. SAR-PATT: A Physical Adversarial Attack for SAR Image Automatic Target Recognition. Remote Sens. 2025, 17, 21.

14. Ma, Y.; Guan, D.; Deng, Y.; Yuan, W.; Wei, M. 3SD-Net: SAR small ship detection neural network. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5221613.

15. Huang, H.; Guo, J.; Lin, H.; Huang, Y.; Ding, X. Domain Adaptive Oriented Object Detection from Optical to SAR Images. IEEE Trans. Geosci. Remote Sens. 2024, 63, 5200314.

16. Wu, F.; Zhou, Z.; Wang, B.; Ma, J. Inshore Ship Detection Based on Convolutional Neural Network in Optical Satellite Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4005–4015.

17. Zhang, P.; Xu, H.; Tian, T.; Gao, P.; Tian, J. SFRE-Net: Scattering Feature Relation Enhancement Network for Aircraft Detection in SAR Images. Remote Sens. 2022, 14, 2076.

18. Liu, Q.; Ye, Z.; Zhu, C.; Ouyang, D.; Gu, D.; Wang, H. Intelligent Target Detection in Synthetic Aperture Radar Images Based on Multi-Level Fusion. Remote Sens. 2025, 17, 112.

19. Zhao, Z.; Tong, Y.; Jia, M.; Qiu, Y.; Wang, X.; Hei, X. Few-Shot SAR Image Classification via Multiple Prototypes Ensemble. Neurocomputing 2025, 635, 129989.

20. Zhou, L.; Zhang, G.; Yang, J.; Xie, Y.; Liu, C.; Liu, Y. CSS-YOLO: A SAR Image Ship Detection Method for Complex Scenes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, early access.

21. Wang, T.; Zeng, Z. Adaptive multiscale reversible column network for SAR ship detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6894–6909.

22. Ning, T.; Pan, S.; Zhou, J. YOLOv7-SIMAM: An Effective Method for SAR Ship Detection. In Proceedings of the 2024 4th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 19–21 January 2024; pp. 754–758.

23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

24. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector; UNC Chapel Hill: Chapel Hill, NC, USA; Zoox Inc.: Palo Alto, CA, USA; Google Inc.: Mountain View, CA, USA; University of Michigan: Ann-Arbor, MI, USA; RWTH Aachen: Aachen, Germany; Czech Technical University: Prague, Czech Republic; University of Trento: Povo-Trento, Italy; University of Amsterdam: Amsterdam, The Netherlands, 2016.

25. Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual region-based convolutional neural network with multilayer fusion for SAR ship detection. Remote Sens. 2017, 9, 860.

26. Cui, Z.; Li, Q.; Cao, Z.; Liu, N. Dense attention pyramid networks for multi-scale ship detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8983–8997.

27. Sun, Z.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. BiFA-YOLO: A novel YOLO-based method for arbitrary-oriented ship detection in high-resolution SAR images. Remote Sens. 2021, 13, 4209.

28. Zhang, C.; Yu, R.; Wang, S.; Zhang, F.; Ge, S.; Li, S.; Zhao, X. Edge-Optimized Lightweight YOLO for Real-Time SAR Object Detection. Remote Sens. 2025, 17, 2168.

29. Zhao, D.; Chen, Z.; Gao, Y.; Shi, Z. Classification Matters More: Global Instance Contrast for Fine-Grained SAR Aircraft Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5203815.

30. Wang, Z.; Xu, N.; Guo, J.; Zhang, C.; Wang, B. SCFNet: Semantic Condition Constraint Guided Feature Aware Network for Aircraft Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5239420.

31. Chen, L.; Luo, R.; Xing, J.; Li, Z.; Yuan, Z.; Cai, X. Geospatial transformer is what you need for aircraft detection in SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5225715.

32. Zhao, Y.; Zhao, L.; Li, C.; Kuang, G. Pyramid attention dilated network for aircraft detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 662–666.

33. Guo, Q.; Wang, H.; Xu, F. Scattering enhanced attention pyramid network for aircraft detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7570–7587.

34. Zhao, Y.; Zhao, L.; Liu, Z.; Hu, D.; Kuang, G.; Liu, L. Attentional feature refinement and alignment network for aircraft detection in SAR imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5220616.

35. Yang, R.; Pan, Z.; Jia, X.; Zhang, L.; Deng, Y. A novel CNN-based detector for ship detection based on rotatable bounding box in SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 1938–1958.

36. Zeng, L.; Zhu, Q.; Lu, D.; Zhang, T.; Wang, H.; Yin, J.; Yang, J. Dual-polarized SAR ship grained classification based on CNN with hybrid channel feature loss. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4011905.

37. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

38. Zhou, Y.; Jiang, X.; Xu, G.; Yang, X.; Liu, X.; Li, Z. PVT-SAR: An Arbitrarily Oriented SAR Ship Detector With Pyramid Vision Transformer. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 291–305.

39. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

40. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. NeurIPS 2017, 30, 600–610.

41. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.

42. Chen, P.; Zhou, H.; Li, Y.; Liu, B.; Liu, P. A Deformable and Multi-Scale Network with Self-Attentive Feature Fusion for SAR Ship Classification. J. Mar. Sci. Eng. 2024, 12, 1524.

43. Meng, L.; Li, D.; He, J.; Ma, L.; Li, Z. Convolutional Feature Enhancement and Attention Fusion BiFPN for Ship Detection in SAR Images. arXiv 2025, arXiv:2506.15231.

44. Huang, Y.X.; Liu, H.I.; Shuai, H.H.; Cheng, W.H. DQ-DETR: DETR with Dynamic Query for Tiny Object Detection. In Computer Vision—ECCV 2024, Proceedings of the 18th European Conference, Milan, Italy, September 29–October 4 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2024; pp. 290–305.

45. Liu, C.; He, Y.; Zhang, X.; Wang, Y.; Dong, Z.; Hong, H. CS-FSDet: A Few-Shot SAR Target Detection Method for Cross-Sensor Scenarios. Remote Sens. 2025, 17, 2841.

46. Xu, Y.; Pan, H.; Wang, L.; Zou, R. MC-ASFF-ShipYOLO: Improved Algorithm for Small-Target and Multi-Scale Ship Detection for Synthetic Aperture Radar (SAR) Images. Sensors 2025, 25, 2940.

47. Li, Y.; Li, X.; Li, W.; Hou, Q.; Liu, L.; Cheng, M.M.; Yang, J. SARDet-100K: Towards Open-Source Benchmark and ToolKit for Large-Scale SAR Object Detection. arXiv 2024, arXiv:2403.06534.

48. Wu, Y.; Suo, Y.; Meng, Q.; Dai, W.; Miao, T.; Zhao, W.; Yan, Z.; Diao, W.; Xie, G.; Ke, Q.; et al. FAIR-CSAR: A Benchmark Dataset for Fine-Grained Object Detection and Recognition Based on Single-Look Complex SAR Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5201022.

49. Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Sun, L.; Wang, J. Conditional DETR for Fast Training Convergence. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3651–3660.

50. Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhu, J.; Zhang, L. DAB-DETR: Dynamic Anchor Boxes Are Better Queries for DETR. arXiv 2022, arXiv:2201.12329.

51. Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision—ECCV 2024, Proceedings of the European Conference on Computer Vision, London, UK, 15–16 January 2025; Springer: Cham, Switzerland; pp. 1–21.

52. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

53. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

54. Li, C.; Hei, Y.; Xi, L.; Li, W.; Xiao, Z. GL-DETR: Global-to-Local Transformers for Small Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4016805.

55. Feng, Y.; You, Y.; Tian, J.; Meng, G. OEGR-DETR: A Novel Detection Transformer Based on Orientation Enhancement and Group Relations for SAR Object Detection. Remote Sens. 2024, 16, 106.

56. Ren, P.; Han, Z.; Yu, Z.; Zhang, B. Confucius tri-learning: A paradigm of learning from both good examples and bad examples. Pattern Recognit. 2025, 163, 111481.

57. Huang, Z.; Liu, L.; Yang, S.; Wang, Z.; Cheng, G.; Han, J. Physics-Guided Detector for SAR Airplanes. IEEE Trans. Circuits Syst. Video Technol. 2025, early access.

58. Zhao, W.; Huang, L.; Liu, H.; Yan, C. Scattering-Point-Guided Oriented RepPoints for Ship Detection. Remote Sens. 2024, 16, 933.

59. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Computer Vision—ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

60. Li, X.; Lv, C.; Wang, W. Generalized Focal Loss: Towards Efficient Representation Learning for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3139–3153.

61. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019.

62. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

63. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

64. Lu, X.; Li, B.; Yue, Y.; Li, Q.; Yan, J. Grid R-CNN. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7363–7372.

65. Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

66. Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. RepPoints: Point Set Representation for Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9656–9665.

67. Razakarivony, S.; Jurie, F. Vehicle detection in aerial imagery: A small target detection benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

68. Golubović, D. The future of maritime target detection using HFSWRs: High-resolution approach. In Proceedings of the 2024 32nd Telecommunications Forum (TELFOR), Belgrade, Serbia, 26–27 November 2024; pp. 1–8.

69. Ji, Y.; Zhang, J.; Meng, J.; Wang, Y. Point association analysis of vessel target detection with SAR, HFSWR and AIS. Acta Oceanol. Sin. 2014, 33, 73–81.

Number of objects per class in each split of SARDet-100K.

| Class | Train | Val | Test | All |
|---|---|---|---|---|
| Aircraft | 40,705 | 5194 | 6779 | 52,678 |
| Bridge | 27,615 | 3318 | 3281 | 34,214 |
| Car | 9561 | 1222 | 1230 | 12,013 |
| Harbor | 3306 | 404 | 399 | 4109 |
| Ship | 93,373 | 10,530 | 10,741 | 114,644 |
| Tank | 24,187 | 2035 | 1773 | 27,995 |
| All | 198,747 | 22,703 | 24,203 | 245,653 |

Number of objects per class in each split of FAIR-CSAR.

| Class | Train | Val | Test | All |
|---|---|---|---|---|
| Airbus | 5191 | 1375 | 1282 | 7848 |
| Boeing | 4431 | 1347 | 622 | 6400 |
| Other_Aircraft | 6472 | 1601 | 1158 | 9231 |
| Other_Ship | 20,689 | 5569 | 6894 | 33,152 |
| Oil_Tanker | 1173 | 305 | 326 | 1804 |
| Warship | 1668 | 309 | 280 | 2257 |
| Bridge | 1697 | 417 | 693 | 2807 |
| Tank | 12,090 | 3274 | 4062 | 19,426 |
| Tower_Crane | 1250 | 440 | 670 | 2360 |
| All | 54,661 | 14,637 | 15,987 | 85,285 |

Distribution of object scale and per-image object quantity in both datasets.

| Statistic | Category | SARDet-100K Train | SARDet-100K Val | SARDet-100K Test | FAIR-CSAR Train | FAIR-CSAR Val | FAIR-CSAR Test |
|---|---|---|---|---|---|---|---|
| Scale (number of objects) | Small | 66,250 (33.33%) | 7813 (34.41%) | 7894 (32.62%) | 37,374 (68.37%) | 10,057 (68.71%) | 12,139 (75.93%) |
| | Medium | 112,232 (56.47%) | 12,882 (56.74%) | 13,851 (57.23%) | 16,275 (29.77%) | 4324 (29.54%) | 3680 (23.02%) |
| | Large | 20,265 (10.20%) | 2008 (8.84%) | 2458 (10.16%) | 1012 (1.85%) | 256 (1.75%) | 168 (1.05%) |
| Quantity (number of images) | Scant | 89,983 (95.23%) | 9903 (94.39%) | 11,046 (95.13%) | 6689 (69.92%) | 1603 (67.02%) | 1887 (69.73%) |
| | Moderate | 3266 (3.46%) | 456 (4.35%) | 446 (3.84%) | 2048 (21.41%) | 561 (23.45%) | 579 (21.40%) |
| | Plenty | 1244 (1.32%) | 133 (1.27%) | 120 (1.03%) | 830 (8.68%) | 228 (9.53%) | 240 (8.87%) |
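Each percentage in the statistics table is a count divided by the total of its group within one split. As a small check, the sketch below recomputes the shares for the SARDet-100K test images from the Scant, Moderate, and Plenty counts in the table; the helper name `shares` is an assumption made for this example.

```python
def shares(counts):
    """Return each category's share of the group total, in percent (2 decimals)."""
    total = sum(counts.values())
    return {name: round(100.0 * n / total, 2) for name, n in counts.items()}


# Image counts for the SARDet-100K test split, copied from the table.
sardet_test_images = {"Scant": 11046, "Moderate": 446, "Plenty": 120}
sardet_test_shares = shares(sardet_test_images)
print(sardet_test_shares)
```

The recomputed values are 95.13, 3.84, and 1.03, so all three cells of that column are percentages.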

Comparison with other detectors on SARDet-100K.

| Method | mAP@50 | mAP@75 | mAP@50-95 | mAP_l | mAP_m | mAP_s |
|---|---|---|---|---|---|---|
| GFL [60] | 63.7% | 33.0% | 34.1% | 30.5% | 41.4% | 28.2% |
| FCOS [61] | 60.9% | 26.9% | 30.3% | 26.4% | 38.2% | 24.0% |
| RetinaNet [62] | 56.2% | 34.9% | 33.5% | 41.7% | 37.2% | 17.7% |
| Cascade R-CNN [63] | 65.6% | 34.0% | 35.9% | 38.5% | 42.7% | 27.4% |
| Grid R-CNN [64] | 63.2% | 32.3% | 33.5% | 35.4% | 40.7% | 25.4% |
| Faster R-CNN [37] | 63.4% | 32.1% | 33.6% | 35.9% | 40.5% | 27.1% |
| DETR [39] | 22.0% | 2.3% | 7.1% | 10.9% | 10.5% | 3.6% |
| Deformable DETR [41] | 66.6% | 30.0% | 33.3% | 32.6% | 44.7% | 27.7% |
| DAB-DETR [50] | 57.1% | 24.8% | 28.1% | 26.0% | 37.7% | 21.9% |
| Ours | 69.8% | 39.7% | 38.6% | 40.6% | 49.1% | 31.8% |
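To read the comparison table metric by metric, it can help to compute the margin between the proposed method and the strongest baseline in each column. The sketch below does this with the values copied from the table; the function name `margins_over_best_baseline` is an assumption made for this example.

```python
METRICS = ("mAP@50", "mAP@75", "mAP@50-95", "mAP_l", "mAP_m", "mAP_s")

# Baseline rows copied from the table (values in percent).
BASELINES = {
    "GFL": (63.7, 33.0, 34.1, 30.5, 41.4, 28.2),
    "FCOS": (60.9, 26.9, 30.3, 26.4, 38.2, 24.0),
    "RetinaNet": (56.2, 34.9, 33.5, 41.7, 37.2, 17.7),
    "Cascade R-CNN": (65.6, 34.0, 35.9, 38.5, 42.7, 27.4),
    "Grid R-CNN": (63.2, 32.3, 33.5, 35.4, 40.7, 25.4),
    "Faster R-CNN": (63.4, 32.1, 33.6, 35.9, 40.5, 27.1),
    "DETR": (22.0, 2.3, 7.1, 10.9, 10.5, 3.6),
    "Deformable DETR": (66.6, 30.0, 33.3, 32.6, 44.7, 27.7),
    "DAB-DETR": (57.1, 24.8, 28.1, 26.0, 37.7, 21.9),
}
OURS = (69.8, 39.7, 38.6, 40.6, 49.1, 31.8)


def margins_over_best_baseline(ours, baselines, metrics):
    """For each metric, find the best baseline and the gap to the proposed method."""
    result = {}
    for i, metric in enumerate(metrics):
        best_name, best_row = max(baselines.items(), key=lambda item: item[1][i])
        result[metric] = (best_name, round(ours[i] - best_row[i], 1))
    return result


margins = margins_over_best_baseline(OURS, BASELINES, METRICS)
for metric, (name, gap) in margins.items():
    print(f"{metric}: best baseline {name}, margin {gap:+.1f}")
```

With these numbers the proposed method leads on five of the six metrics; on mAP_l, RetinaNet is 1.1 points higher.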

Comparison with other detectors on FAIR-CSAR.

| Method | mAP@50 | mAP@50-95 | Params (M) | FPS |
|---|---|---|---|---|
| Faster R-CNN [37] | 87.8% | 56.9% | 41.39 | 144.0 |
| Cascade R-CNN [63] | 89.2% | 64.3% | 77.05 | 22.4 |
| FoveaBox [65] | 87.0% | 58.1% | 36.26 | 135.2 |
| FCOS [61] | 86.2% | 55.0% | 32.13 | 151.3 |
| RetinaNet [62] | 85.5% | 55.6% | 36.50 | 142.4 |
| RepPoints [66] | 89.4% | 56.8% | 36.82 | 145.8 |
| Deformable DETR [41] | 81.1% | 44.5% | 40.10 | 139.1 |
| Ours | 92.9% | 62.5% | 59.95 | 137.9 |

| CMFE | AQR | mAP@50 | mAP@75 | mAP@50-95 | mAP_l | mAP_m | mAP_s |
|---|---|---|---|---|---|---|---|
| × | × | 72.7% | 35.7% | 38.6% | 53.1% | 35.6% | 26.0% |
| √ | × | 74.5% (+1.8%) | 37.3% (+1.6%) | 39.8% (+1.2%) | 55.8% (+2.7%) | 36.7% (+1.1%) | 29.4% (+3.4%) |
| × | √ | 73.8% (+1.1%) | 37.4% (+1.7%) | 39.5% (+0.9%) | 54.5% (+1.4%) | 36.4% (+0.8%) | 28.4% (+2.4%) |
| √ | √ | 76.4% (+3.7%) | 41.6% (+5.9%) | 42.1% (+3.5%) | 55.6% (+2.5%) | 39.5% (+3.9%) | 29.7% (+3.7%) |
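The gains in parentheses are differences from the baseline row with neither module enabled. The sketch below recomputes them from the table and, as a naive additive reading that is our own assumption rather than an analysis from the paper, compares the combined gain with the sum of the two single-module gains. The names `ROWS` and `gains` are hypothetical.

```python
METRICS = ("mAP@50", "mAP@75", "mAP@50-95", "mAP_l", "mAP_m", "mAP_s")

# Rows copied from the ablation table (values in percent).
ROWS = {
    "baseline": (72.7, 35.7, 38.6, 53.1, 35.6, 26.0),
    "CMFE only": (74.5, 37.3, 39.8, 55.8, 36.7, 29.4),
    "AQR only": (73.8, 37.4, 39.5, 54.5, 36.4, 28.4),
    "CMFE + AQR": (76.4, 41.6, 42.1, 55.6, 39.5, 29.7),
}


def gains(row, baseline):
    """Per-metric improvement of one configuration over the baseline."""
    return tuple(round(a - b, 1) for a, b in zip(row, baseline))


cmfe = gains(ROWS["CMFE only"], ROWS["baseline"])
aqr = gains(ROWS["AQR only"], ROWS["baseline"])
both = gains(ROWS["CMFE + AQR"], ROWS["baseline"])

for metric, g_cmfe, g_aqr, g_both in zip(METRICS, cmfe, aqr, both):
    additive = round(g_cmfe + g_aqr, 1)
    print(f"{metric}: CMFE {g_cmfe:+.1f}, AQR {g_aqr:+.1f}, "
          f"sum {additive:+.1f}, combined {g_both:+.1f}")
```

Under this reading, the combined gain exceeds the sum of the individual gains for mAP@50, mAP@75, mAP@50-95, and mAP_m, while for mAP_l and mAP_s it is smaller than the sum.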


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zuo, Z.; Cheng, Z.; Huang, S.; Wei, J.; Wu, Z. CGAQ-DETR: DETR with Corner Guided and Adaptive Query for SAR Object Detection. Remote Sens. 2025, 17, 3254. https://doi.org/10.3390/rs17183254
