Semantic Segmentation Method of Residential Areas in Remote Sensing Images Based on Cross-Attention Mechanism

Abstract

Highlights

- A novel CrossAtt-UNet architecture is proposed, integrating a cross-attention module to capture cross-level dependencies and enhance feature interactions in remote sensing semantic segmentation.

- Experimental results on the Urban Residential Semantic Segmentation Dataset (URSSD) demonstrate superior accuracy (95.47%), mIoU (89.80%), F1-score (94.63%) and robustness compared with mainstream segmentation networks.

- The proposed method significantly improves structural coherence, boundary recognition, and generalization ability, enabling reliable extraction of complex urban features in high-resolution remote sensing images.

- CrossAtt-UNet shows strong adaptability across tasks, as validated by its performance in concrete damage detection, highlighting its potential for urban planning, disaster monitoring, and infrastructure assessment.

1. Introduction

2. Materials and Methods

2.1. Extraction of Residential Area Information

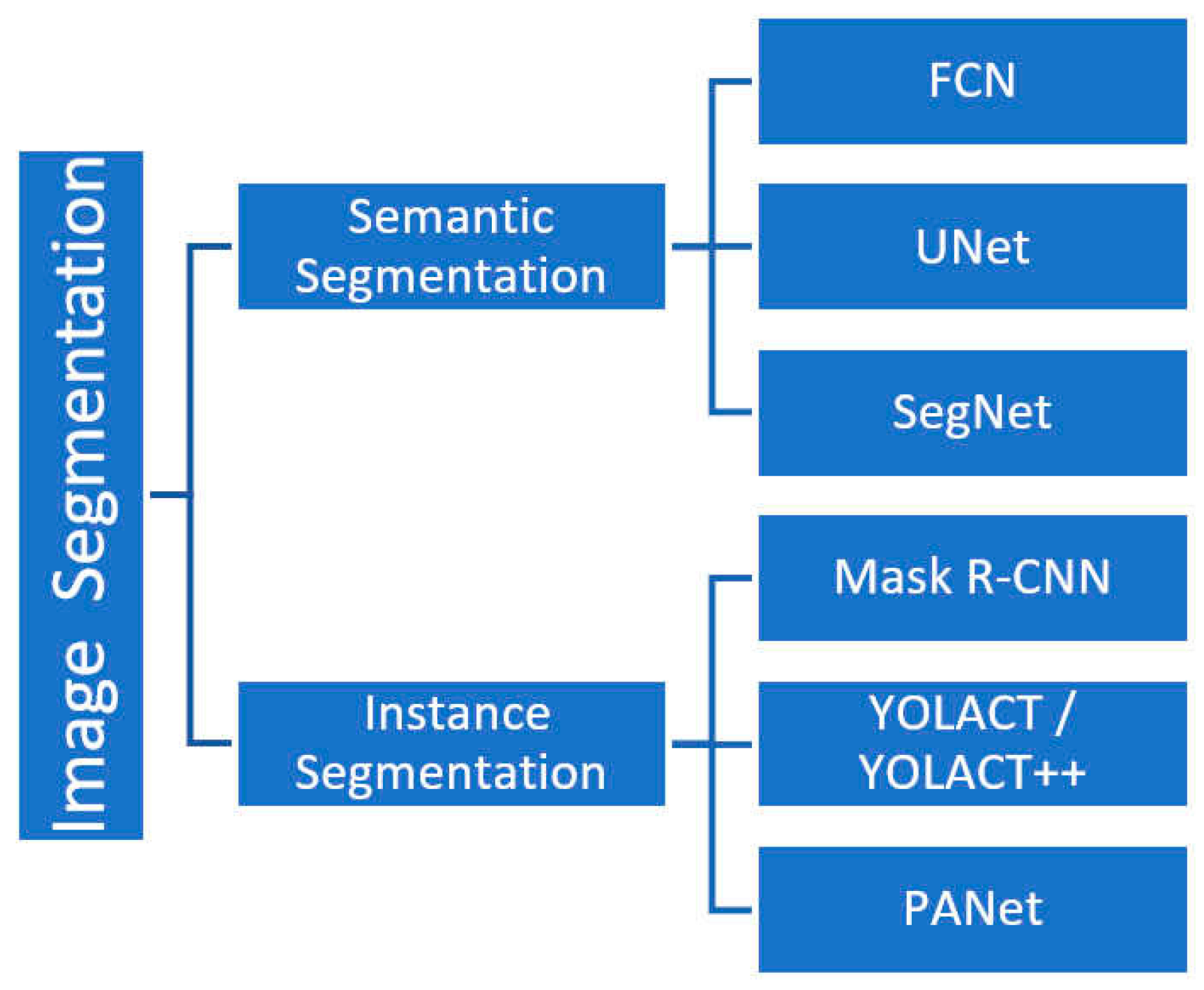

2.1.1. Image Segmentation Method for Residential Areas

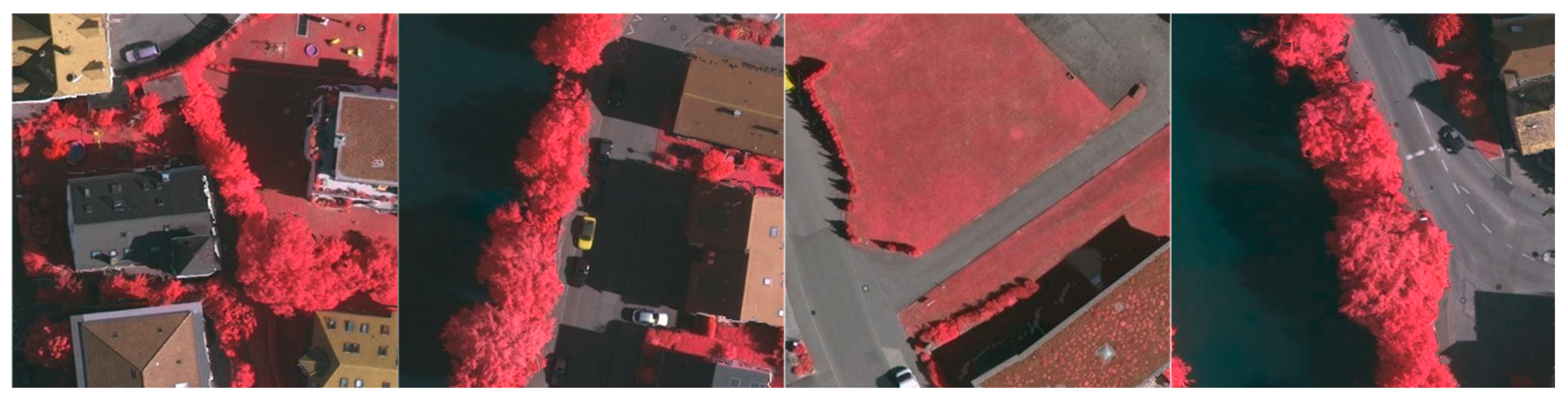

2.1.2. Semantic Segmentation Dataset of Residential Areas

2.2. Semantic Segmentation of Remote Sensing Residential Areas Based on CrossAtt-UNet

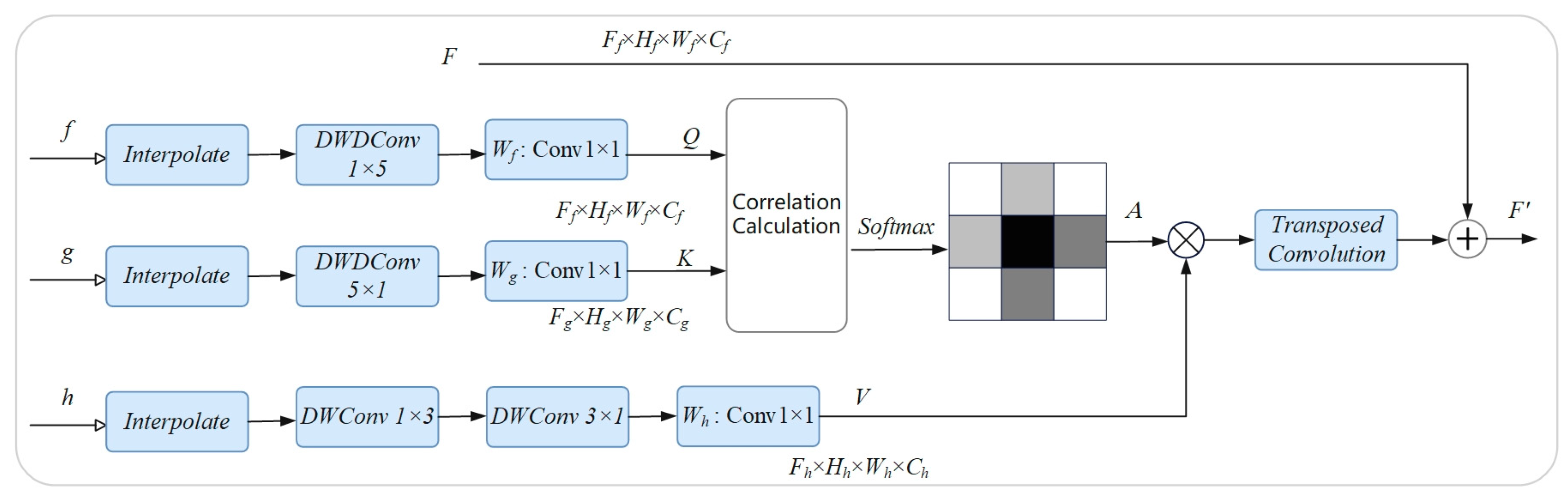

2.2.1. Cross Attention

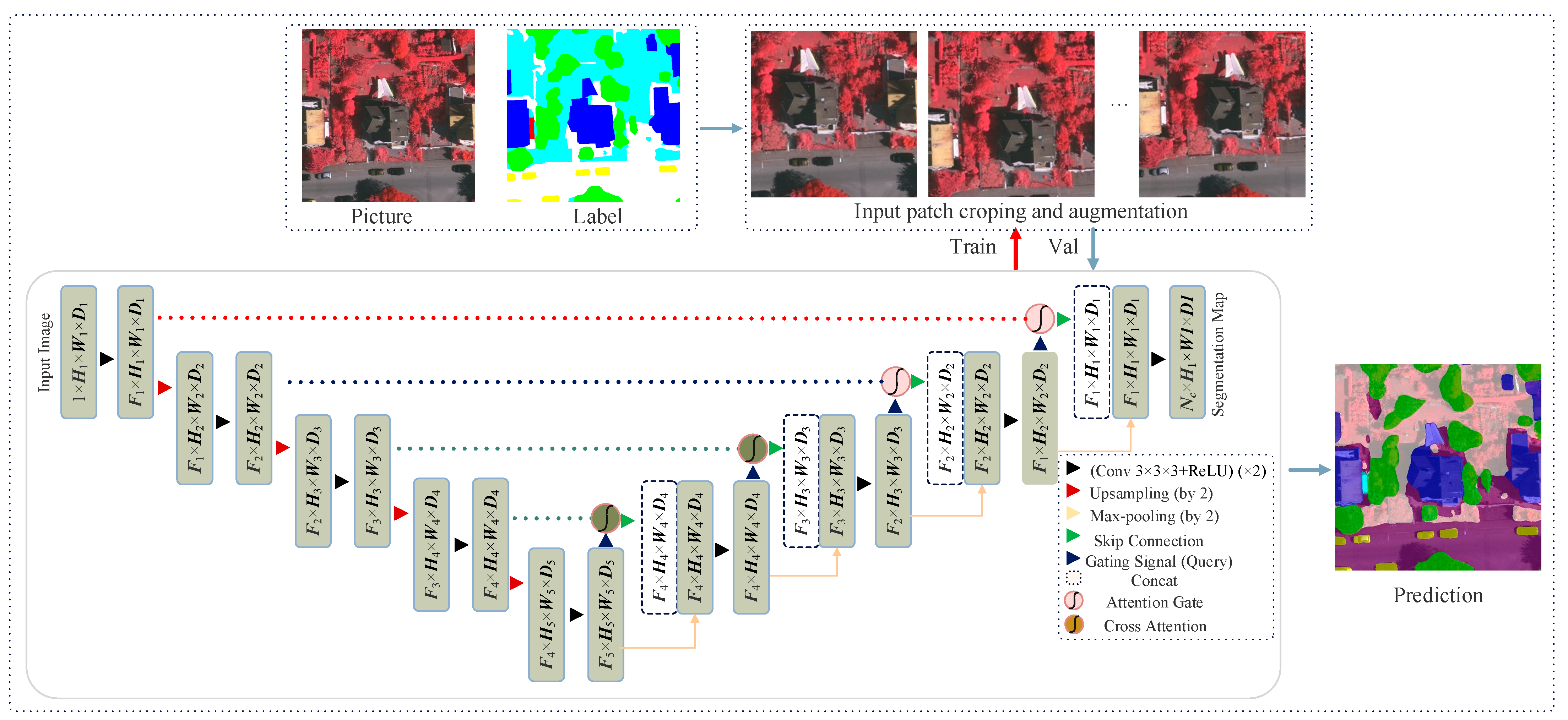
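In a U-Net, cross-attention usually lets the features of one level act as queries over the features of another level, so that each output position can aggregate information from the whole other level. The NumPy sketch below shows generic scaled dot-product cross-attention, softmax(QKᵀ/√d)V. Queries come from a coarse, decoder-like map and keys and values come from a finer, encoder-like map. The helper names (`cross_attention`, `flatten_hw`), the random projection matrices, and the choice of which level supplies the queries are assumptions for illustration. This is not the exact CrossAtt-UNet module.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def flatten_hw(fmap):
    # (C, H, W) feature map -> (H*W, C) matrix with one row (token) per pixel.
    c, h, w = fmap.shape
    return fmap.reshape(c, h * w).T


def cross_attention(query_tokens, context_tokens, w_q, w_k, w_v):
    """Scaled dot-product cross-attention (hypothetical helper).

    query_tokens:   (N, C_q) tokens from the level that issues the queries
    context_tokens: (M, C_c) tokens from the level being attended to
    w_q: (C_q, d), w_k: (C_c, d), w_v: (C_c, d_v) projection matrices
    Returns the (N, d_v) attended features and the (N, M) attention weights.
    """
    q = query_tokens @ w_q
    k = context_tokens @ w_k
    v = context_tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N, M) query-to-context similarity
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
decoder = rng.standard_normal((48, 8, 8))    # coarse level: 48 channels, 8x8
encoder = rng.standard_normal((32, 16, 16))  # fine level: 32 channels, 16x16
d = 16
w_q = 0.1 * rng.standard_normal((48, d))
w_k = 0.1 * rng.standard_normal((32, d))
w_v = 0.1 * rng.standard_normal((32, d))

out, attn = cross_attention(flatten_hw(decoder), flatten_hw(encoder), w_q, w_k, w_v)
# Fold the 64 query tokens back into a (d, 8, 8) feature map.
out_map = out.T.reshape(d, 8, 8)
print(out.shape, attn.shape, out_map.shape)
```

The attention matrix has one row per query position and one column per context position, so its memory grows with the product of the two spatial sizes. For this reason, such modules are often applied at reduced resolution.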

2.2.2. CrossAtt-UNet Architecture

2.2.3. Loss Function and Evaluation Metrics
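The result tables report pixel accuracy, mIoU, and F1-score. A common way to compute these is from a per-pixel confusion matrix, as in the pure-Python sketch below. The sketch assumes macro averaging over classes, so each class carries equal weight. It also scores a class that is absent from both labels and predictions as 0. The exact averaging behind the reported numbers is not specified. The mAP column is not covered, because its definition for segmentation is not given.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    # cm[t][p] counts pixels whose true class is t and predicted class is p.
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    """Pixel accuracy, macro mIoU and macro F1 from a square confusion matrix."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    ious, f1s = [], []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, truly another class
        fn = sum(cm[c]) - tp                       # truly c, predicted another class
        # Classes absent from both labels and predictions score 0 here;
        # some implementations skip them instead.
        ious.append(tp / (tp + fp + fn) if tp + fp + fn else 0.0)
        f1s.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
    return {
        "accuracy": correct / total,
        "mIoU": sum(ious) / n,
        "F1": sum(f1s) / n,
        "IoU_per_class": ious,
    }


# Toy example: 8 pixels, 3 classes, flattened label and prediction maps.
y_true = [0, 0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 0]
m = segmentation_metrics(confusion_matrix(y_true, y_pred, num_classes=3))
print(round(m["accuracy"], 3), round(m["mIoU"], 3), round(m["F1"], 3))  # 0.75 0.611 0.756
```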

2.2.4. Comparative Innovation Analysis

3. Results and Analysis

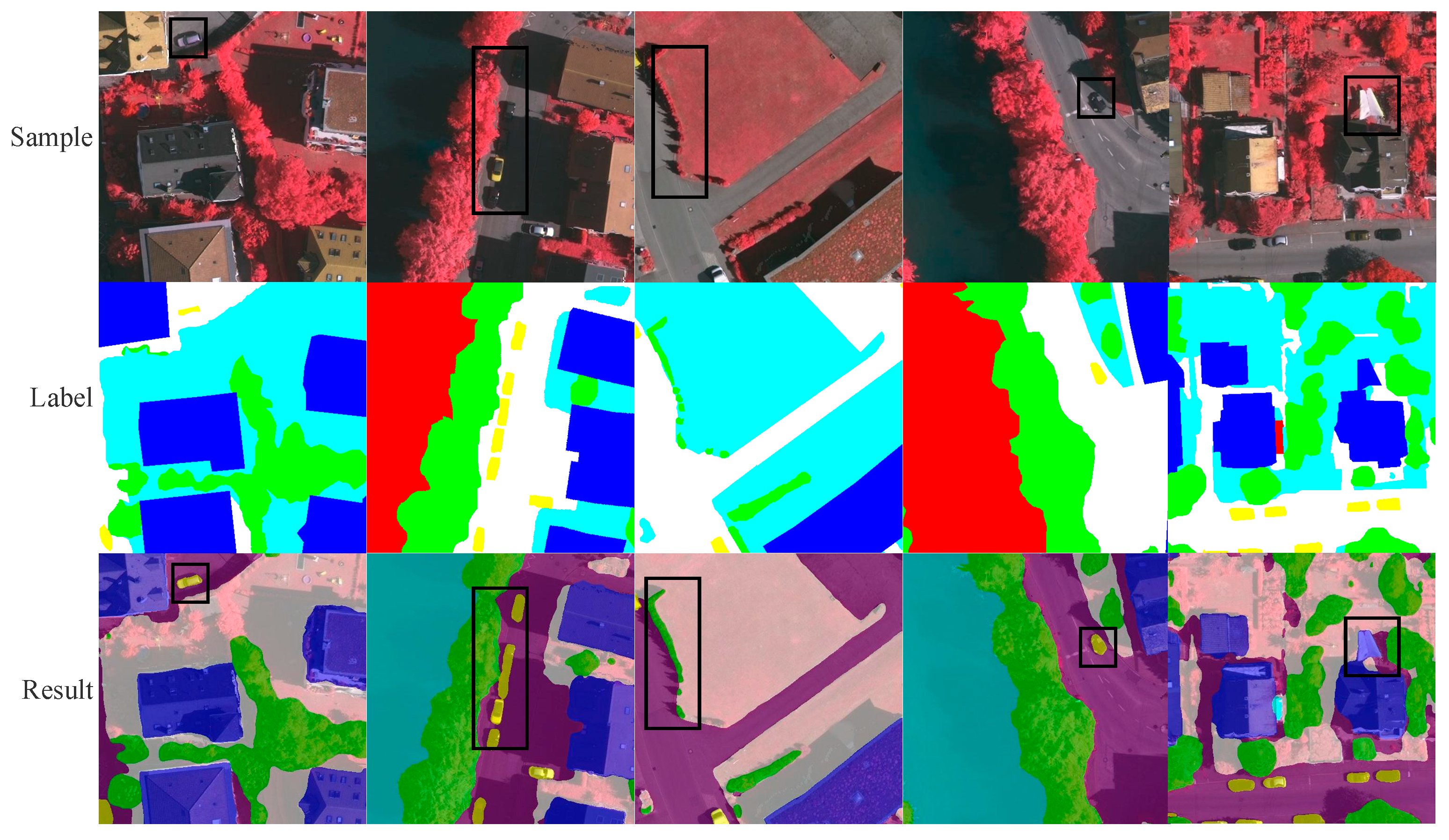

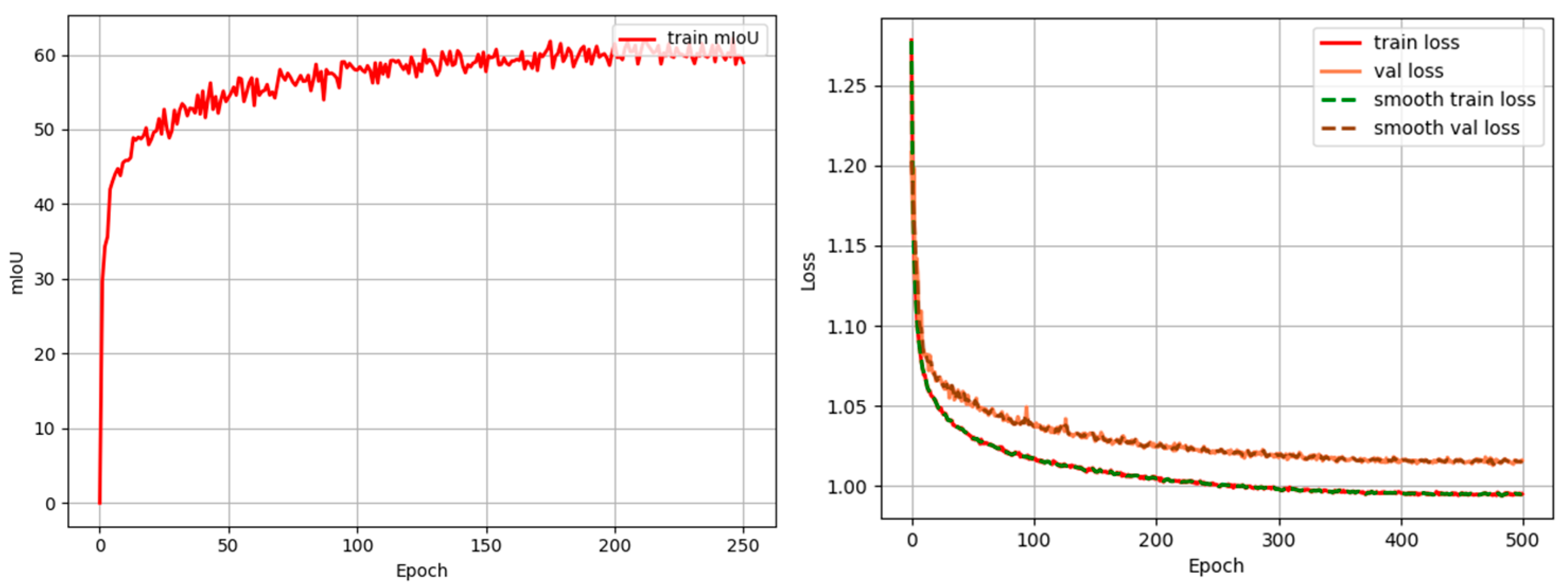

3.1. Experiment on Semantic Segmentation of Residential Areas

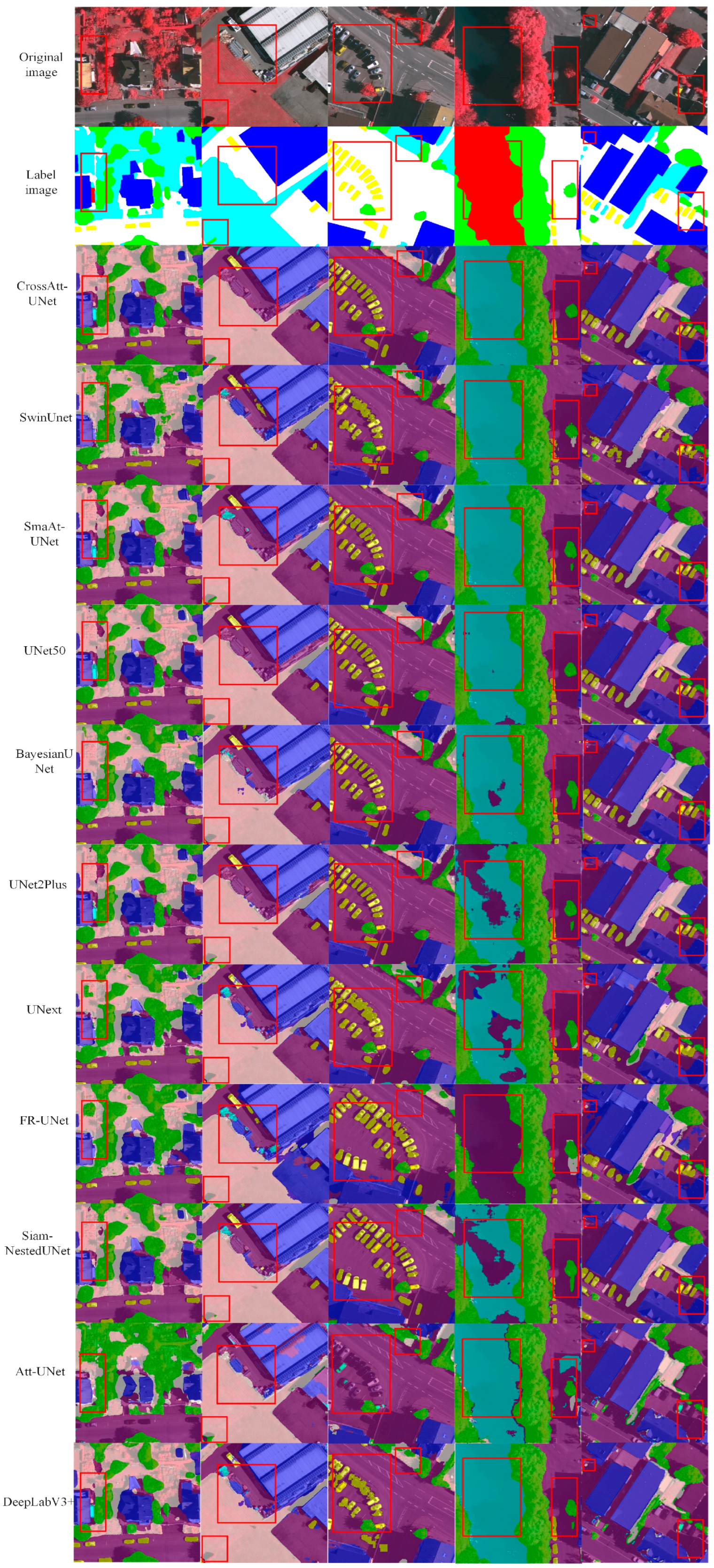

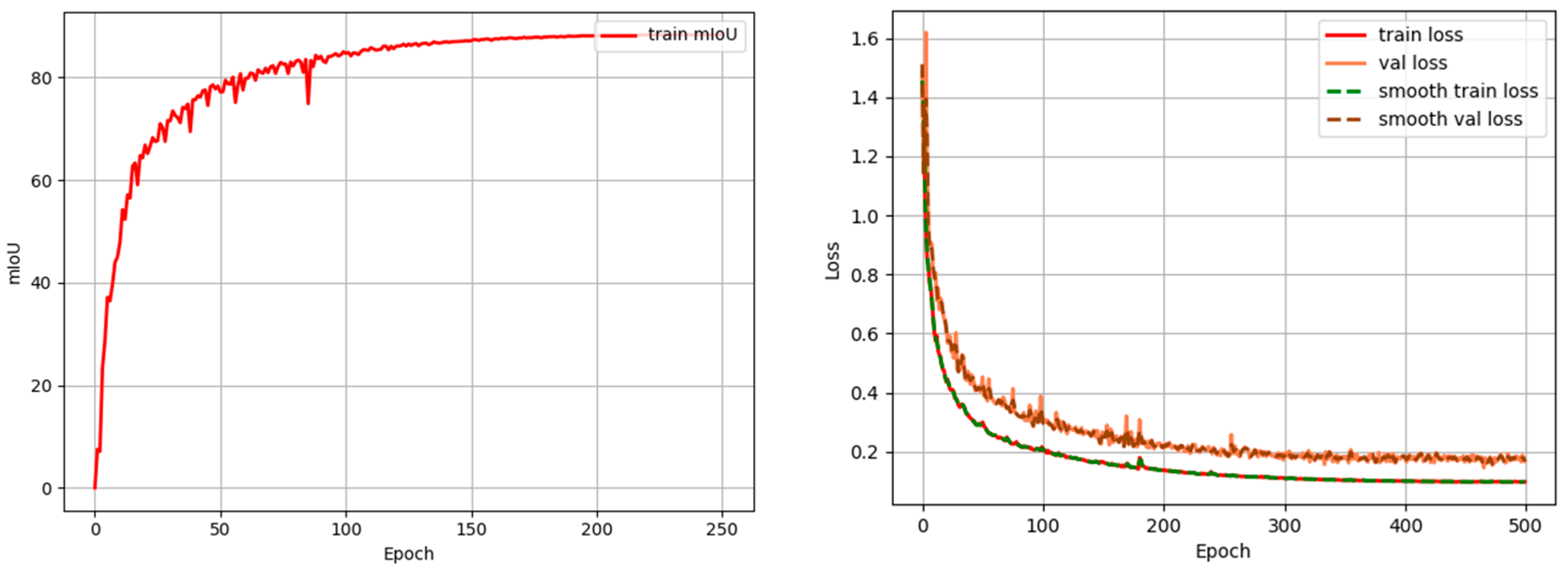

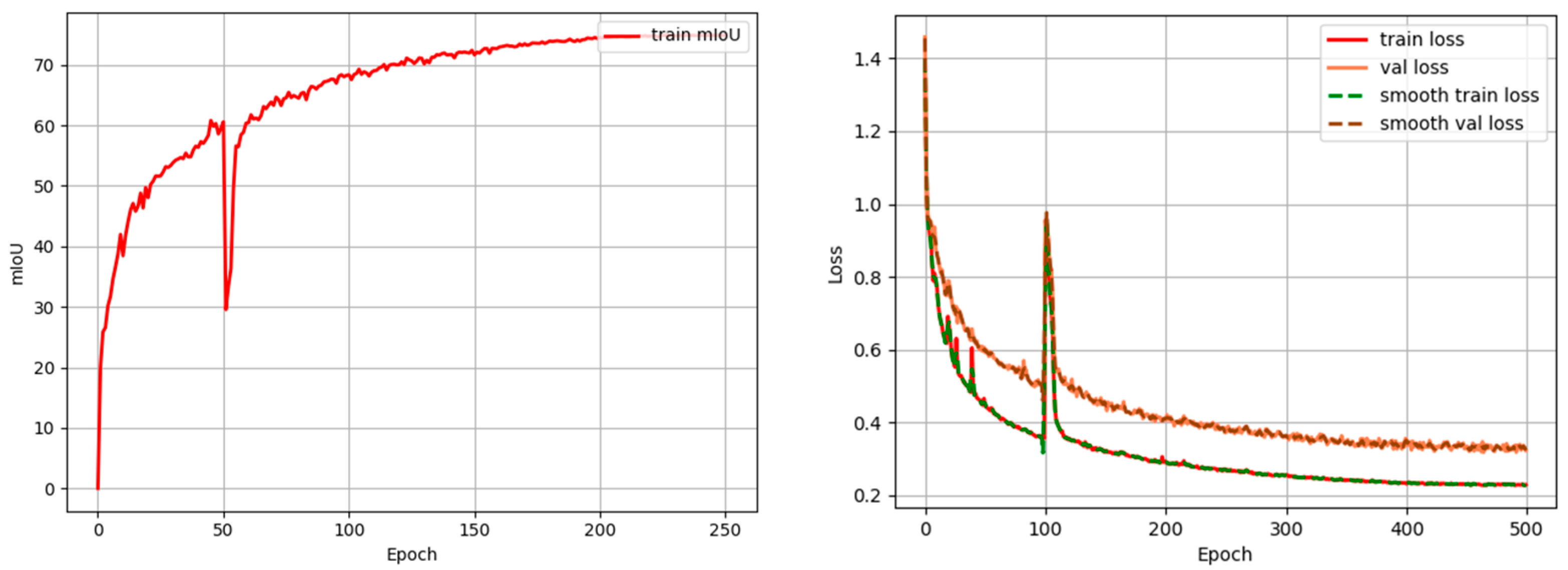

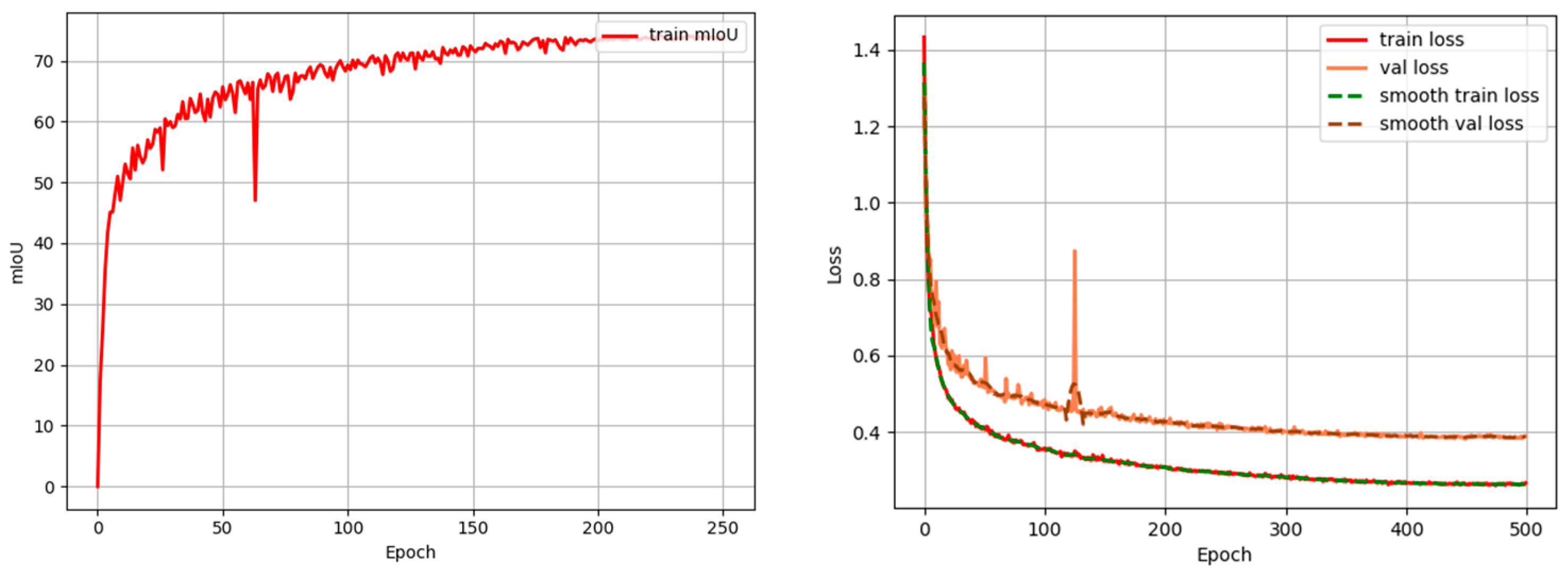

3.2. Performance Comparison of Different Networks

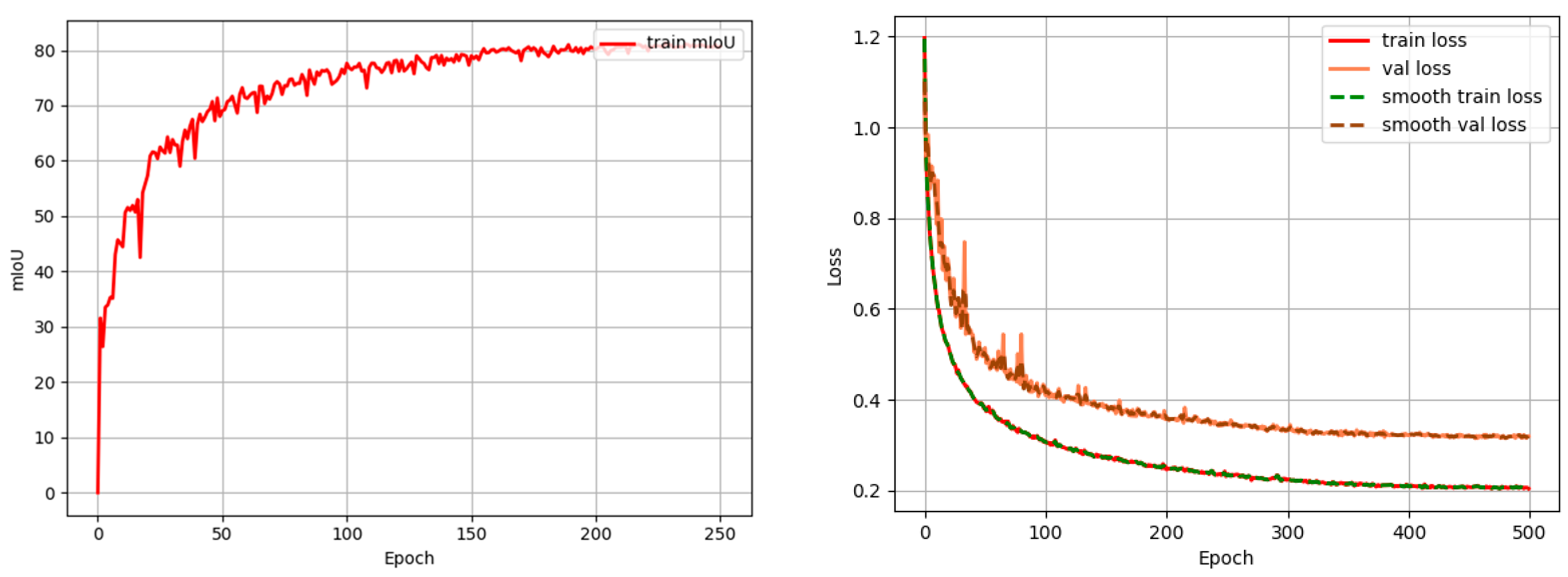

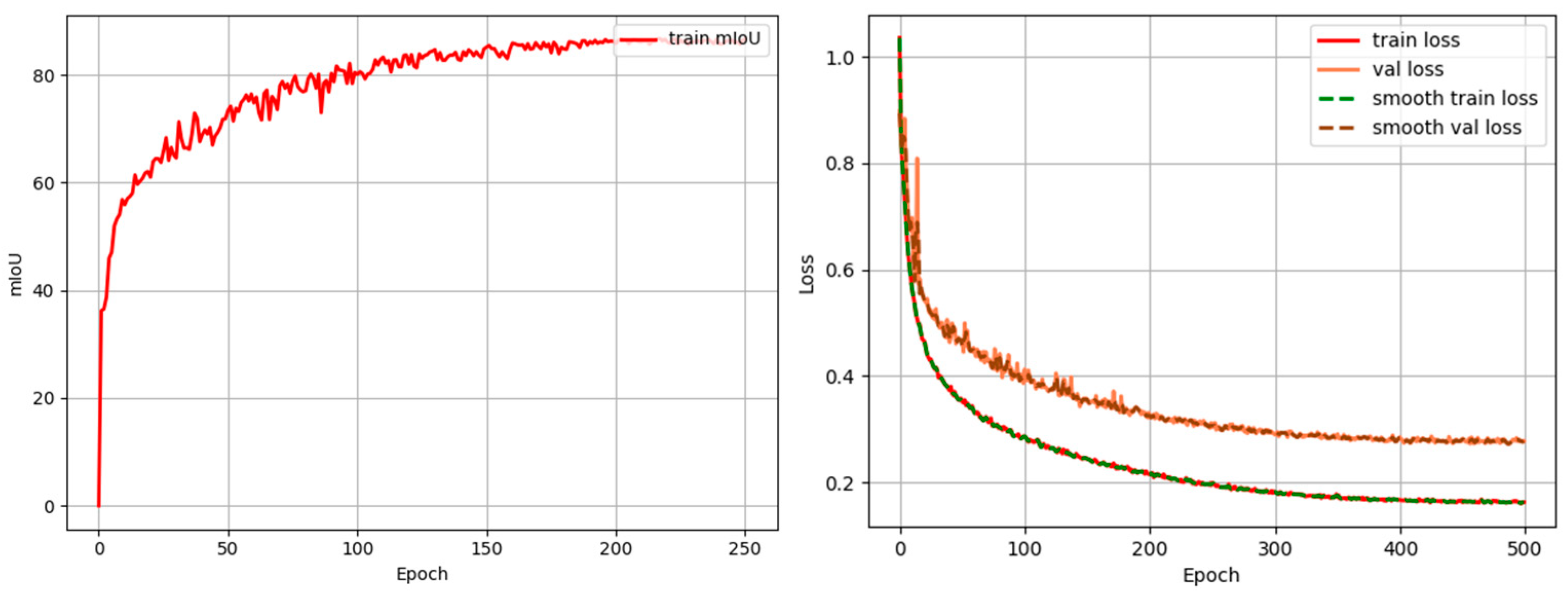

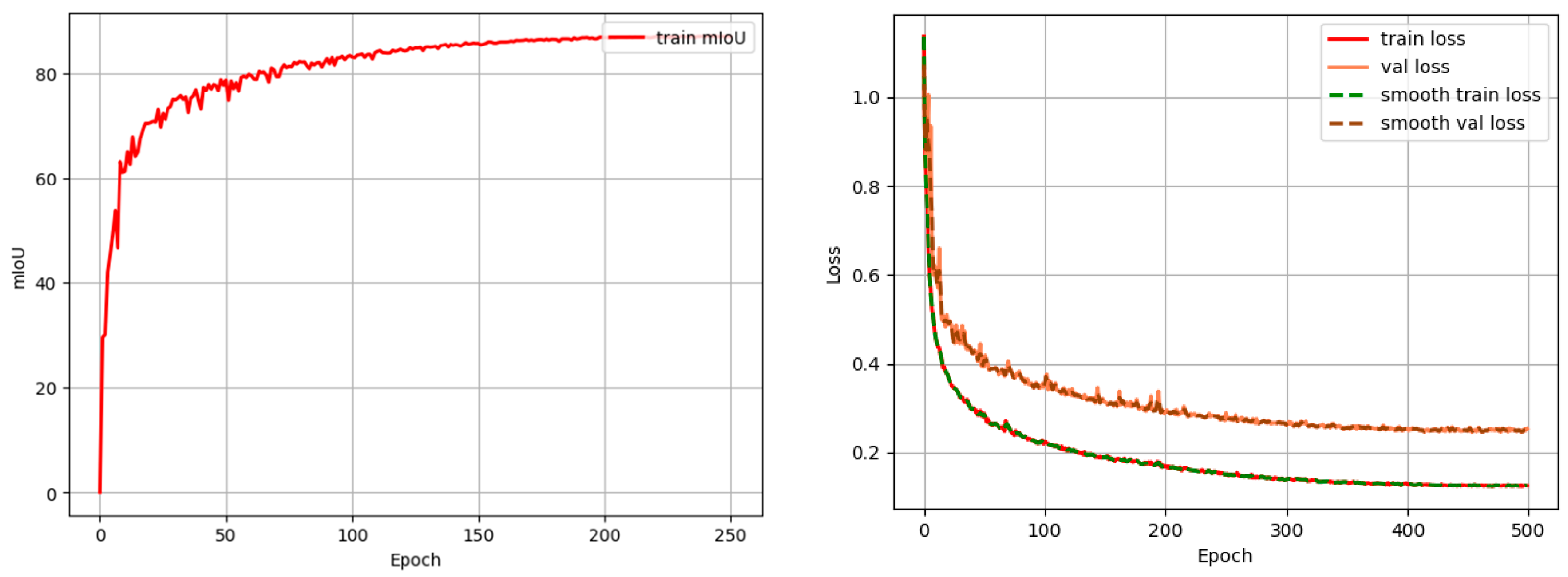

3.3. Ablation Experiment

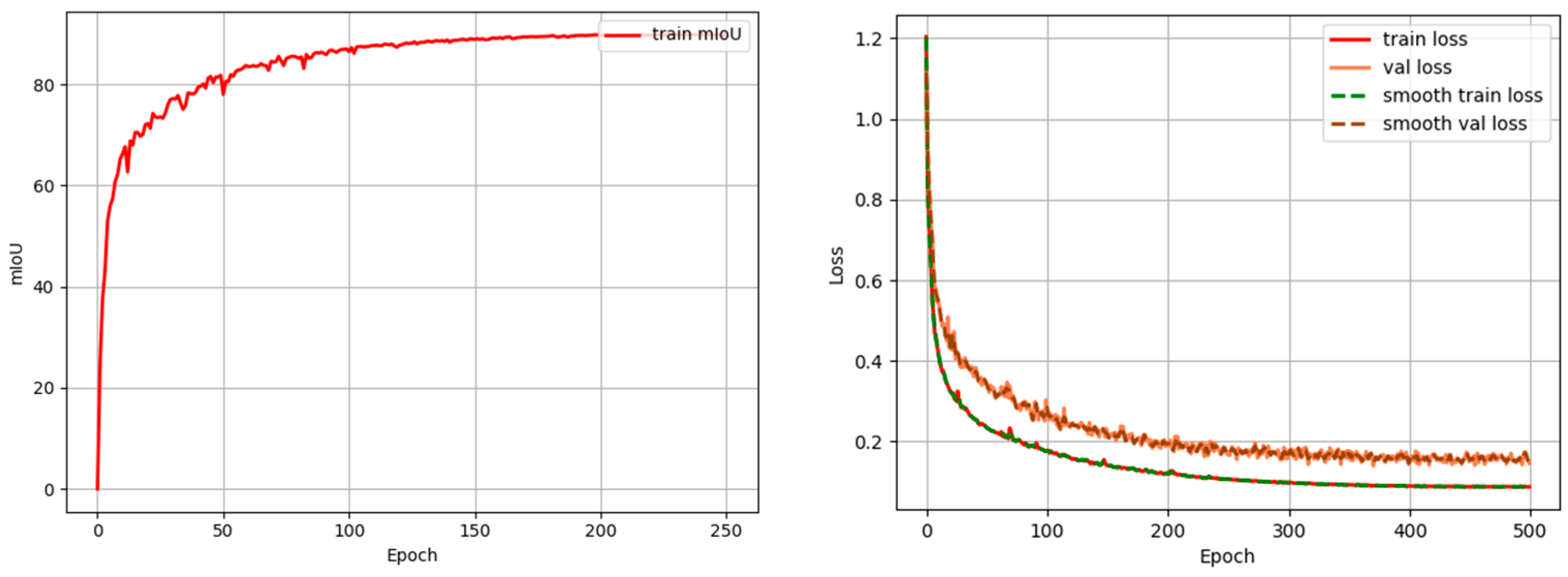

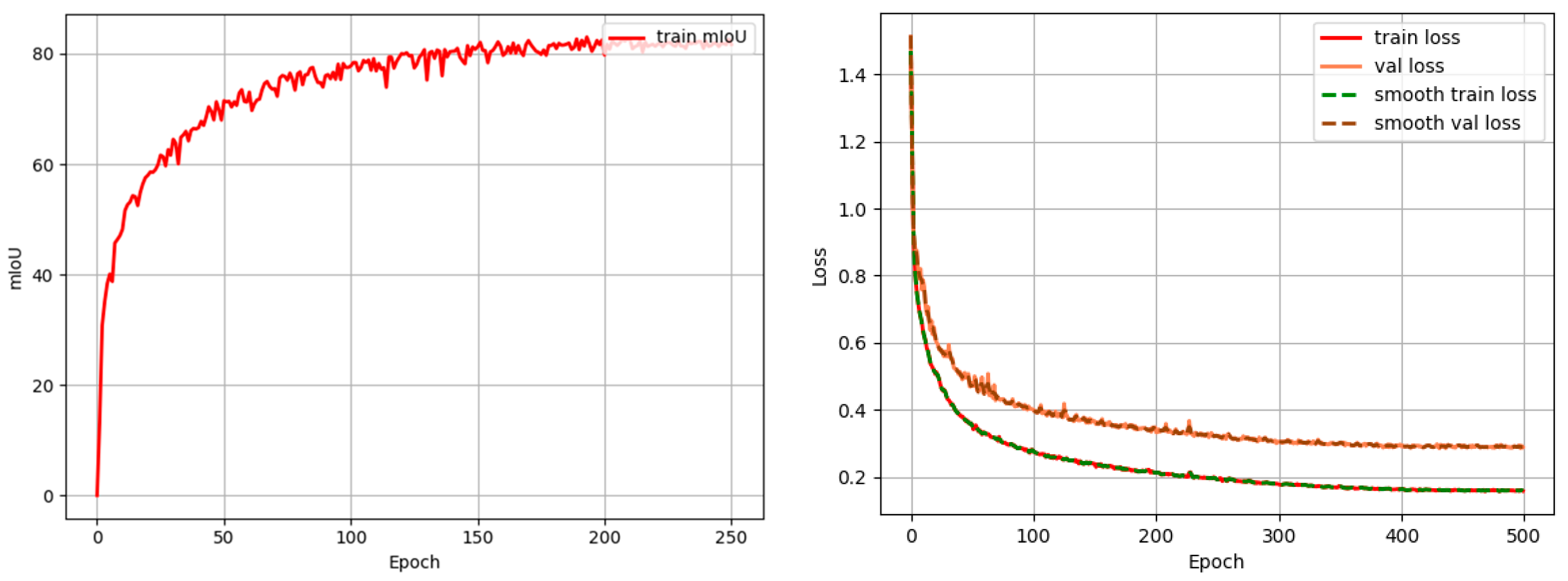

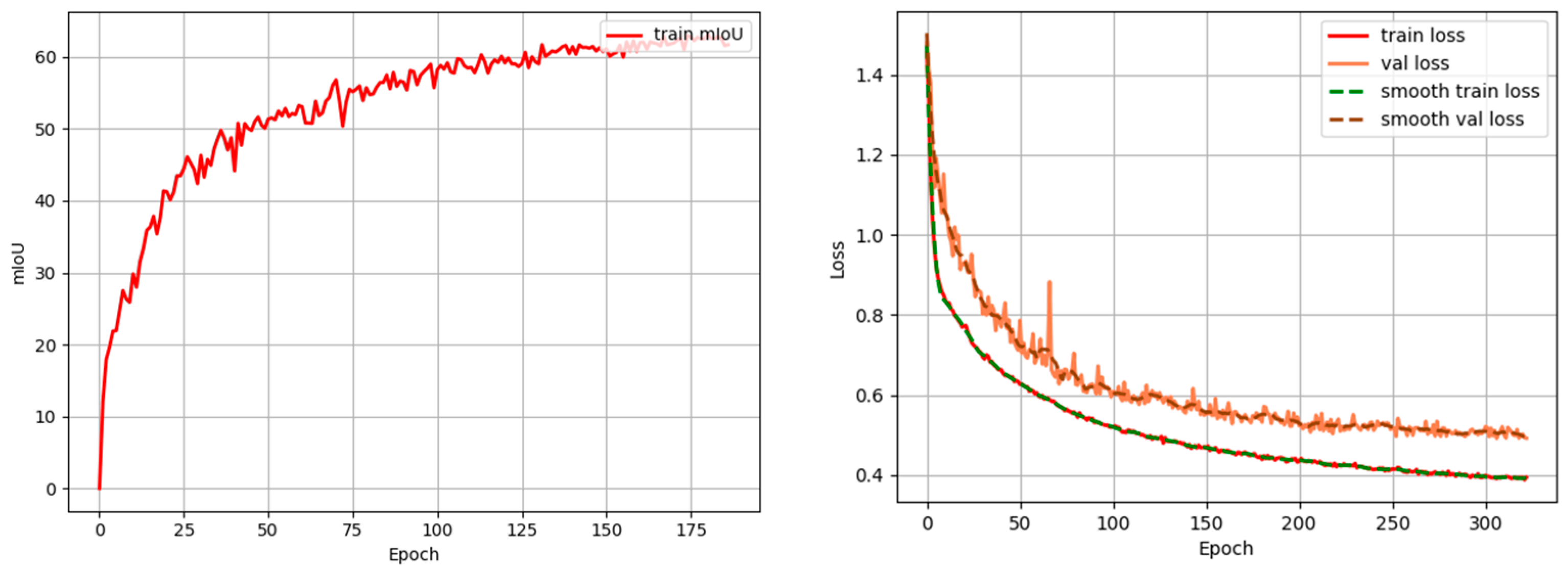

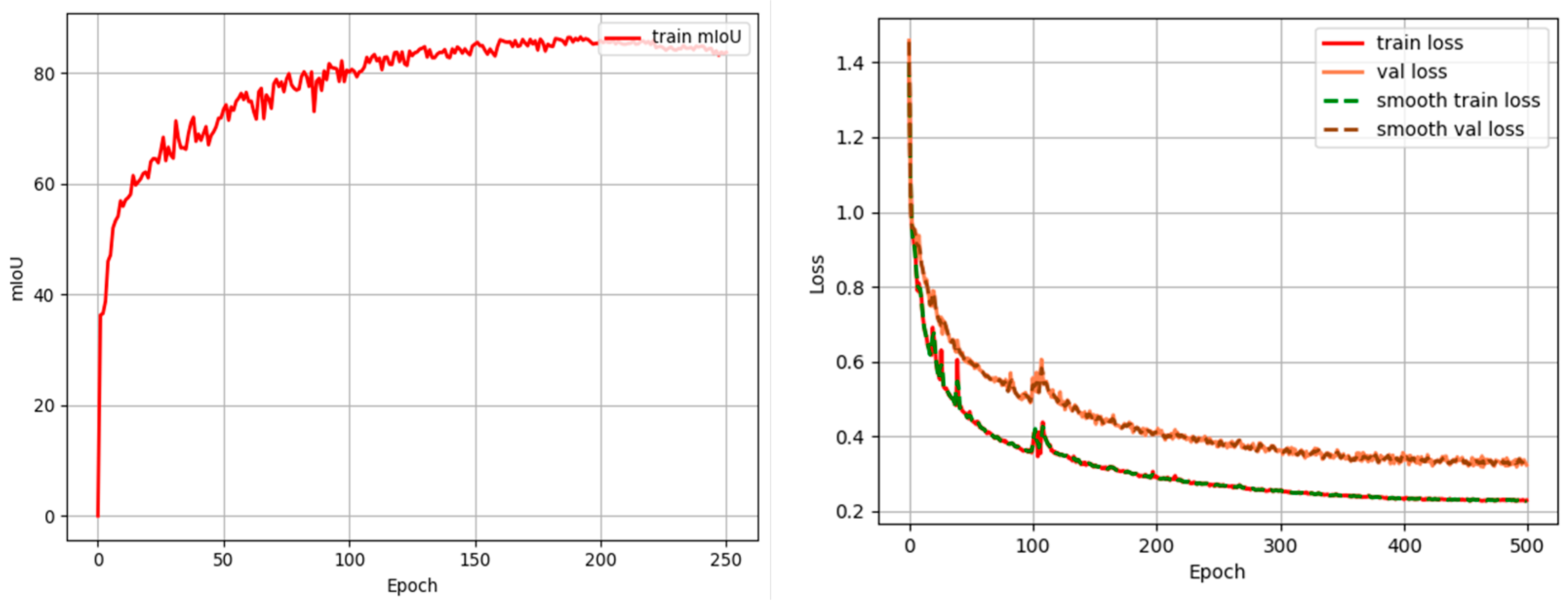

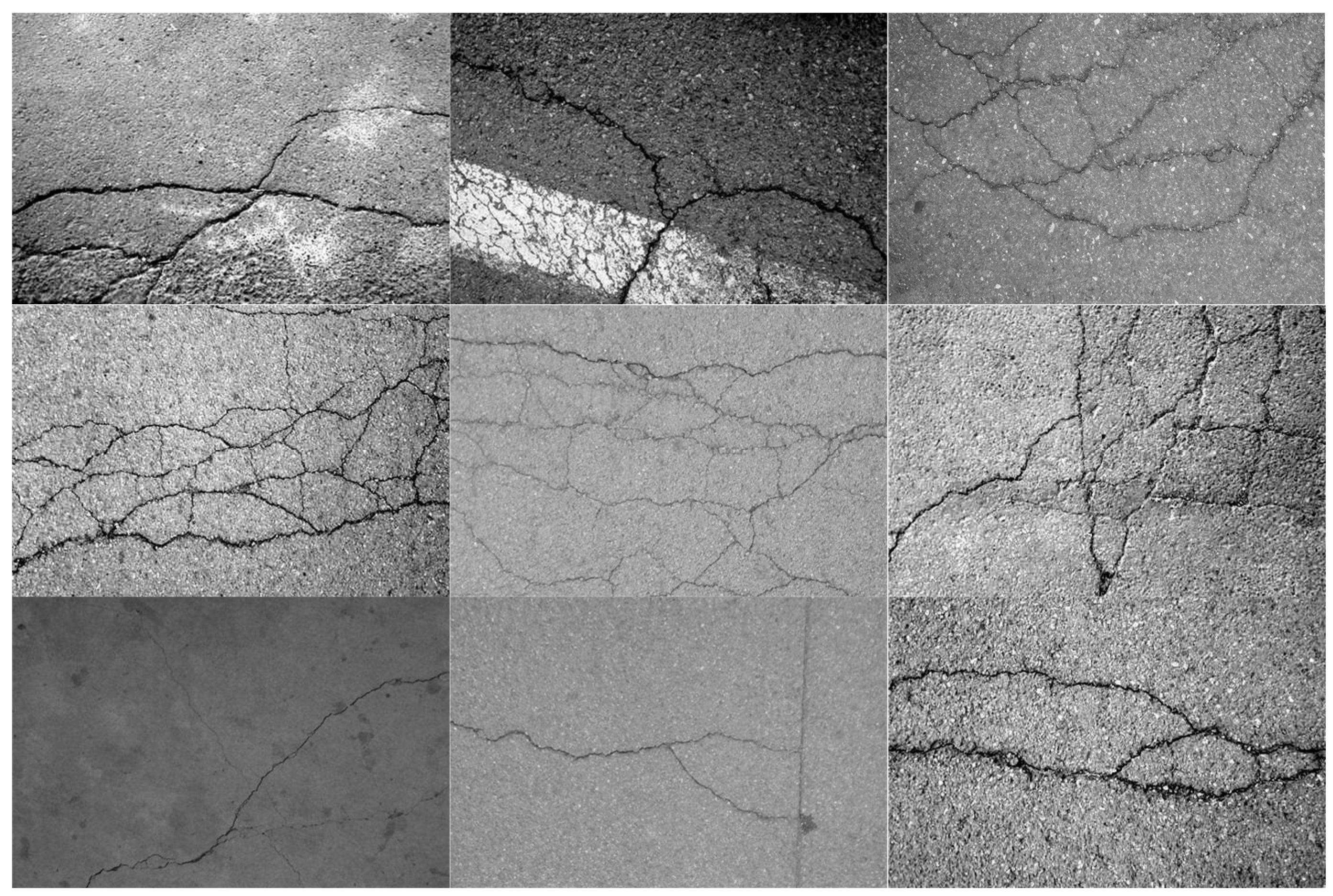

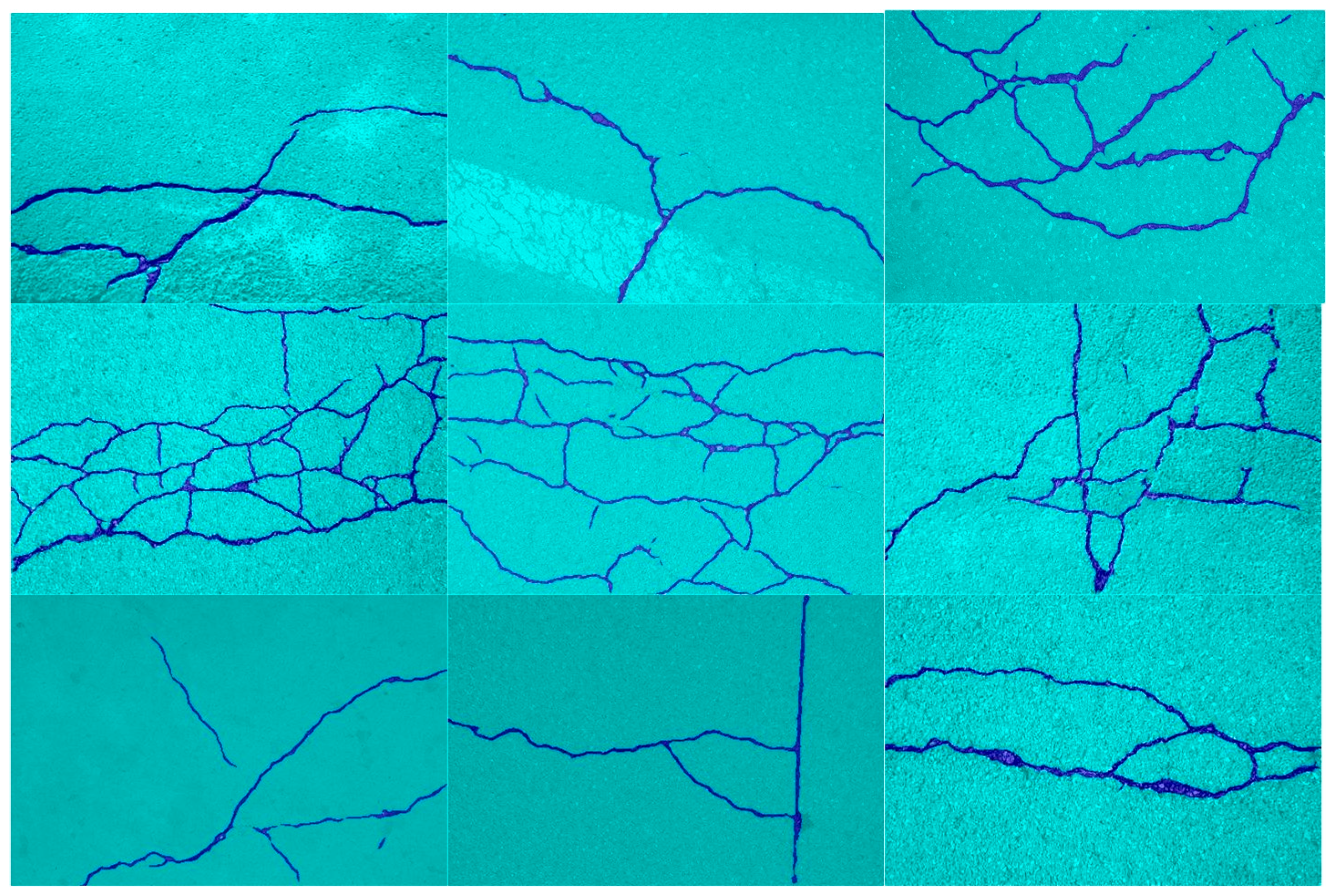

3.4. Network Generalization Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715.

2. Fan, L.; Zhou, Y.; Liu, H.; Li, Y.; Cao, D. Combining Swin Transformer with UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5530111.

3. Zhao, X.; Wu, Z.; Chen, Y.; Zhou, W.; Wei, M. Fine-Grained High-Resolution Remote Sensing Image Change Detection by SAM-UNet Change Detection Model. Remote Sens. 2024, 16, 3620.

4. Zhu, Z.; Zhang, S.; Qiu, L.; Wang, H.; Luo, G. Axis-Based Transformer UNet for RGB Remote Sensing Image Denoising. IEEE Signal Process. Lett. 2024, 31, 2515–2519.

5. Jonnala, N.; Bheemana, R.; Prakash, K.; Bansal, S.; Jain, A.; Pandey, V. DSIA U-Net: Deep shallow interaction with attention mechanism UNet for remote sensing satellite images. Sci. Rep. 2025, 15, 549.

6. Wang, X.; Hu, Z.; Shi, S.; Hou, M.; Xu, L.; Zhang, X. A deep learning method for optimizing semantic segmentation accuracy of remote sensing images based on improved UNet. Sci. Rep. 2023, 13, 7600.

7. Lv, Z.; Huang, H.; Gao, L.; Benediktsson, J.; Zhao, M.; Shi, C. Simple Multiscale UNet for Change Detection with Heterogeneous Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 2504905.

8. Wang, X.; Fan, Z.; Jiang, Z.; Yan, Y.; Yang, H. EDFF-Unet: An Improved Unet-Based Method for Cloud and Cloud Shadow Segmentation in Remote Sensing Images. Remote Sens. 2025, 17, 1432.

9. Lu, Y.; Li, H.; Zhang, C.; Zhang, S. Object-Based Semi-Supervised Spatial Attention Residual UNet for Urban High-Resolution Remote Sensing Image Classification. Remote Sens. 2024, 16, 1444.

10. Li, X.; Yang, X.; Li, X.; Lu, S.; Ye, Y.; Ban, Y. GCDB-UNet: A novel robust cloud detection approach for remote sensing images. Knowl.-Based Syst. 2022, 238, 107890.

11. Zhang, Y.; Lu, H.; Ma, G.; Zhao, H.; Xie, D.; Geng, S.; Tian, W.; Sian, K. MU-Net: Embedding MixFormer into Unet to Extract Water Bodies from Remote Sensing Images. Remote Sens. 2023, 15, 3559.

12. Ye, F.; Zhang, R.; Xu, X.; Wu, K.; Zheng, P.; Li, D. Water Body Segmentation of SAR Images Based on SAR Image Reconstruction and an Improved UNet. IEEE Geosci. Remote Sens. Lett. 2024, 21, 4010005.

13. Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet. Remote Sens. 2019, 11, 1382.

14. Chen, G.; Tan, X.; Guo, B.; Zhu, K.; Liao, P.; Wang, T.; Wang, Q.; Zhang, X. SDFCNv2: An Improved FCN Framework for Remote Sensing Images Semantic Segmentation. Remote Sens. 2021, 13, 4902.

15. Rajamani, K.T.; Rani, P.; Siebert, H.; ElagiriRamalingam, R.; Heinrich, M.P. Attention-augmented U-Net (AA-U-Net) for semantic segmentation. Signal Image Video Process. 2023, 17, 981–989.

16. Amo-Boateng, M.; Sey, N.E.N.; Amproche, A.A.; Domfeh, M.K. Instance segmentation scheme for roofs in rural areas based on Mask R-CNN. Egypt. J. Remote Sens. Space Sci. 2022, 25, 569–577.

17. Zeng, J.; Ouyang, H.; Liu, M.; Leng, L.; Fu, X. Multi-scale YOLACT for instance segmentation. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 9419–9427.

18. Sun, Y.; Zhao, Y.; Han, X.; Gao, W.; Hu, Y.; Zhang, Y. A feature enhancement network combining UNet and vision transformer for building change detection in high-resolution remote sensing images. Neural Comput. Appl. 2025, 37, 1429–1456.

19. Tang, Y.; Cao, Z.; Guo, N.; Jiang, M. A Siamese Swin-Unet for image change detection. Sci. Rep. 2024, 14, 4577.

20. Wang, X.; Wang, X.; Zhao, K.; Zhao, X.; Song, C. FSL-Unet: Full-Scale Linked Unet with Spatial-Spectral Joint Perceptual Attention for Hyperspectral and Multispectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5539114.

21. Yang, M.; Yuan, Y.; Liu, G. SDUNet: Road extraction via spatial enhanced and densely connected UNet. Pattern Recognit. 2022, 126, 108549.

22. Liang, F.; Wang, Z.; Ma, W.; Liu, B.; En, Q.; Wang, D.; Duan, L. HDFA-Net: A high-dimensional decoupled frequency attention network for steel surface defect detection. Measurement 2025, 242, 116255.

23. Thai, D.; Fei, X.; Le, M.; Züfle, A.; Wessels, K. Riesz-Quincunx-UNet Variational Autoencoder for Unsupervised Satellite Image Denoising. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5404519.

24. Xie, H.; Pan, Y.; Luan, J.; Yang, X.; Xi, Y. Open-pit Mining Area Segmentation of Remote Sensing Images Based on DUSegNet. J. Indian Soc. Remote Sens. 2021, 49, 1257–1270.

25. Yang, Y.; Zheng, S.; Wang, X.; Ao, W.; Liu, Z. AMMUNet: Multiscale Attention Map Merging for Remote Sensing Image Segmentation. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6000705.

26. Jing, Y.; Zhang, T.; Liu, Z.; Hou, Y.; Sun, C. Swin-ResUNet+: An edge enhancement module for road extraction from remote sensing images. Comput. Vis. Image Underst. 2023, 237, 103807.

27. Sun, Y.; Bi, F.; Gao, Y.; Chen, L.; Feng, S. A Multi-Attention UNet for Semantic Segmentation in Remote Sensing Images. Symmetry 2022, 14, 906.

28. Liu, Y.; Gao, K.; Wang, H.; Yang, Z.; Wang, P.; Ji, S.; Huang, Y.; Zhu, Z.; Zhao, X. A Transformer-based multi-modal fusion network for semantic segmentation of high-resolution remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104083.

29. Ma, X.; Zhang, X.; Pun, M.O.; Liu, M. A multilevel multimodal fusion transformer for remote sensing semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5403215.

30. Chowdary, G.J.; Yin, Z. Diffusion transformer U-Net for medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; pp. 622–631.

31. Chen, B.; Liu, Y.; Zhang, Z.; Lu, G.; Kong, A.W.K. TransAttUnet: Multi-level attention-guided U-Net with transformer for medical image segmentation. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 55–68.

32. Saidu, I.C.; Csató, L. Active learning with Bayesian UNet for efficient semantic image segmentation. J. Imaging 2021, 7, 37.

33. Valanarasu, J.M.J.; Patel, V.M. UNeXt: MLP-based rapid medical image segmentation network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Singapore, 18–22 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 23–33.

34. Tian, Y.; Fu, L.; Fang, W.; Li, T. FR-UNet: A Feature Restoration-Based UNet for Seismic Data Consecutively Missing Trace Interpolation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5904310.

35. Fang, S.; Li, K.; Shao, J.; Li, Z. SNUNet-CD: A densely connected Siamese network for change detection of VHR images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8007805.

36. Chang, Z.; Li, H.; Chen, D.; Liu, Y.; Zou, C.; Chen, J.; Han, W.; Liu, S.; Zhang, N. Crop type identification using high-resolution remote sensing images based on an improved DeepLabV3+ network. Remote Sens. 2023, 15, 5088.

37. Trebing, K.; Stańczyk, T.; Mehrkanoon, S. SmaAt-UNet: Precipitation nowcasting using a small attention-UNet architecture. Pattern Recognit. Lett. 2021, 145, 178–186.

38. Xue, W.; Ai, J.; Zhu, Y.; Chen, J.; Zhuang, S. AIS-FCANet: Long-term AIS Data assisted Frequency-Spatial Contextual Awareness Network for Salient Ship Detection in SAR Imagery. IEEE Trans. Aerosp. Electron. Syst. 2025, 1–6.

39. Ai, J.; Xue, W.; Zhu, Y.; Zhuang, S.; Xu, C.; Yan, H.; Chen, L.; Wang, Z. AIS-PVT: Long-Time AIS Data Assisted Pyramid Vision Transformer for Sea-Land Segmentation in Dual-Polarization SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5220712.

| Label Category | Label Number |
|---|---|
| background | 1328 |
| surfaces | 575 |
| building | 965 |
| vegetation | 834 |
| tree | 765 |
| car | 586 |
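Converting the label counts above into class shares gives a sense of the class imbalance. The table does not say whether "Label Number" counts images, instances, or tiles, so the sketch below uses the numbers only as relative frequencies. The inverse-frequency weights it prints are a hypothetical illustration of how such counts are often used to weight a loss. They are not a setting reported for CrossAtt-UNet.

```python
label_counts = {
    "background": 1328,
    "surfaces": 575,
    "building": 965,
    "vegetation": 834,
    "tree": 765,
    "car": 586,
}

total = sum(label_counts.values())
shares = {name: n / total for name, n in label_counts.items()}

# Hypothetical inverse-frequency weights: rarer categories get larger weights,
# normalized so that a perfectly balanced dataset would give every class 1.0.
k = len(label_counts)
weights = {name: total / (k * n) for name, n in label_counts.items()}

for name in sorted(label_counts, key=label_counts.get, reverse=True):
    print(f"{name:<11}{shares[name]:7.1%}{weights[name]:7.2f}")

ratio = max(label_counts.values()) / min(label_counts.values())
print(f"total = {total}, most/least frequent = {ratio:.2f}")
```

The most and least frequent categories (background and surfaces) differ by a factor of about 2.3.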

| Environment | Specification | Parameter | Value |
|---|---|---|---|
| OS | Ubuntu MATE 16.04 | epochs | 500 |
| CPU | Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10 GHz | batch size | 16 |
| RAM | 128 GB | μ | 0.9 |
| GPU | GeForce RTX 3090 × 2 | workers | 8 |
| Driver | 455.23.05 | dropout | 0 |
| CUDA | 11.1 | scale | 0.5 |
| Python | 3.7.13 | SGD | 1 × 10⁻² |
| torch | 1.10.1+cu111 | LR | 0.2 |
| torchvision | 0.11.2+cu111 | optimizer | Adam |

| Algorithms | Accuracy | mAP | mIoU | F1-Score | train_loss | val_loss | fps |
|---|---|---|---|---|---|---|---|
| UNet50 | 94.78% | 93.98% | 88.32% | 93.80% | 0.0976 | 0.1675 | 22.47 |
| BayesianUNet [32] | 90.73% | 89.34% | 80.71% | 89.33% | 0.2043 | 0.3197 | 20.12 |
| Unet2Plus | 94.26% | 91.94% | 86.67% | 92.86% | 0.1630 | 0.2775 | 5.71 |
| UNext [33] | 88.15% | 83.12% | 73.55% | 84.76% | 0.2672 | 0.3897 | 33.67 |
| FR-UNet [34] | 83.41% | 73.02% | 62.04% | 76.57% | 0.3942 | 0.4921 | 15.35 |
| CrossAtt-UNet | 95.47% | 94.57% | 89.80% | 94.63% | 0.0878 | 0.1459 | 15.72 |
| Att-UNet | 85.16% | 73.95% | 58.95% | 74.17% | 0.9951 | 1.0160 | 16.12 |
| Siam-NestedUNet [35] | 92.80% | 87.64% | 81.67% | 89.91% | 0.1578 | 0.2882 | 17.74 |
| SwinUnet | 88.62% | 85.24% | 74.89% | 85.64% | 0.2282 | 0.3227 | 14.52 |
| DeepLabV3+ [36] | 94.54% | 91.79% | 83.45% | 90.98% | 0.2295 | 0.3148 | 27.45 |
| SmaAt-UNet [37] | 94.08% | 93.36% | 86.91% | 93.00% | 0.1253 | 0.2533 | 19.89 |
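The table above shows a trade-off between accuracy and speed. The sketch below converts fps to milliseconds per frame. It also lists the networks that are Pareto-optimal in (mIoU, fps), meaning that no other network is at least as good on both and strictly better on one. The values are copied from the table. The fps figures depend on the authors' hardware and test setup, so only relative comparisons are meaningful.

```python
# (mIoU %, fps) copied from the comparison table above.
results = {
    "UNet50": (88.32, 22.47),
    "BayesianUNet": (80.71, 20.12),
    "Unet2Plus": (86.67, 5.71),
    "UNext": (73.55, 33.67),
    "FR-UNet": (62.04, 15.35),
    "CrossAtt-UNet": (89.80, 15.72),
    "Att-UNet": (58.95, 16.12),
    "Siam-NestedUNet": (81.67, 17.74),
    "SwinUnet": (74.89, 14.52),
    "DeepLabV3+": (83.45, 27.45),
    "SmaAt-UNet": (86.91, 19.89),
}


def dominates(b, a):
    # b dominates a if it is at least as good on both axes and better on one.
    return b[0] >= a[0] and b[1] >= a[1] and (b[0] > a[0] or b[1] > a[1])


pareto = [
    name for name, r in results.items()
    if not any(dominates(other, r) for other in results.values())
]

for name in sorted(pareto, key=lambda n: results[n][0], reverse=True):
    miou, fps = results[name]
    print(f"{name:<14} mIoU {miou:5.2f}%  {fps:5.2f} fps  {1000 / fps:6.1f} ms/frame")
```

By these numbers, CrossAtt-UNet has the highest mIoU, 1.48 points above UNet50. It runs at about 63.6 ms per frame, compared with about 44.5 ms for UNet50. UNet50, DeepLabV3+, and UNext make up the faster end of the trade-off.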

| Algorithms | Accuracy | mAP | mIoU | F1-Score | train_loss | val_loss | fps |
|---|---|---|---|---|---|---|---|
| CrossAtt-UNet | 95.47% | 94.57% | 89.80% | 94.64% | 0.0878 | 0.1459 | 15.72 |
| CrossAtt-UNet without Attention Gate | 91.94% | 80.53% | 79.83% | 88.83% | 0.2136 | 0.4554 | 15.93 |
| CrossAtt-UNet without Attention Gate and Cross Attention | 85.16% | 73.95% | 58.95% | 74.15% | 0.9951 | 1.0160 | 16.12 |
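In the ablation, removing the attention gate lowers mIoU from 89.80% to 79.83%, a drop of 9.97 points. Removing both the attention gate and cross-attention lowers it to 58.95%, a drop of 30.85 points. The table does not define the gate. The NumPy sketch below assumes the additive attention gate of Attention U-Net (Oktay et al., 2018). In that design, a gating signal from a coarser decoder level produces a per-pixel weight α ∈ (0, 1) that rescales the skip-connection features. For brevity, the sketch assumes the gating signal has already been resized to the skip resolution, and it uses scalar biases. `attention_gate` and `conv1x1` are hypothetical helper names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1x1(weight, fmap):
    # A 1x1 convolution is a per-pixel linear map over channels:
    # weight (C_out, C_in) applied to fmap (C_in, H, W) gives (C_out, H, W).
    return np.einsum("oc,chw->ohw", weight, fmap)


def attention_gate(x, g, w_x, w_g, psi, b_g=0.0, b_psi=0.0):
    """Additive attention gate in the style of Attention U-Net (hypothetical helper).

    x:   skip-connection features, (C_x, H, W)
    g:   gating signal from a coarser decoder level, already resized to (C_g, H, W)
    w_x: (F, C_x), w_g: (F, C_g), psi: (1, F) 1x1-convolution weights
    Returns the gated skip features and the (H, W) attention map alpha.
    """
    inter = np.maximum(conv1x1(w_x, x) + conv1x1(w_g, g) + b_g, 0.0)  # ReLU
    alpha = sigmoid(conv1x1(psi, inter) + b_psi)[0]                    # (H, W), values in (0, 1)
    return x * alpha, alpha                                            # broadcast over channels


rng = np.random.default_rng(1)
x = rng.standard_normal((32, 16, 16))
g = rng.standard_normal((64, 16, 16))
f = 16
gated, alpha = attention_gate(
    x, g,
    w_x=0.1 * rng.standard_normal((f, 32)),
    w_g=0.1 * rng.standard_normal((f, 64)),
    psi=0.1 * rng.standard_normal((1, f)),
)
print(gated.shape, alpha.shape)
```

In the original Attention U-Net, the skip features are downsampled to the gating resolution and α is upsampled again afterwards. The sketch omits that resampling.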

| Algorithms | Accuracy | mAP | mIoU | F1-Score | train_loss | val_loss | fps |
|---|---|---|---|---|---|---|---|
| UNet50 | 98.04% | 74.48% | 74.71% | 85.50% | 0.1542 | 0.1604 | 22.65 |
| BayesianUNet | 98.09% | 79.47% | 73.90% | 84.96% | 0.1655 | 0.1607 | 21.72 |
| Unet2Plus | 97.74% | 68.57% | 63.93% | 77.97% | 0.1420 | 0.2640 | 5.82 |
| UNext | 98.06% | 76.82% | 72.54% | 84.05% | 0.17458 | 0.1699 | 39.56 |
| FR-UNet | 98.21% | 77.60% | 73.92% | 84.97% | 0.1286 | 0.1445 | 16.48 |
| CrossAtt-UNet | 98.22% | 79.14% | 75.20% | 85.83% | 0.1410 | 0.1651 | 14.66 |
| Att-UNet | 88.05% | 68.35% | 52.48% | 68.84% | 0.9857 | 1.1554 | 12.32 |
| Siam-NestedUNet | 91.34% | 77.45% | 73.54% | 84.72% | 0.1450 | 0.2541 | 18.12 |
| SwinUnet | 97.84% | 75.50% | 70.49% | 82.67% | 0.2155 | 0.2050 | 15.63 |
| DeepLabV3+ | 97.54% | 72.51% | 71.45% | 83.39% | 0.2055 | 0.2414 | 32.34 |
| SmaAt-UNet | 98.09% | 79.47% | 73.90% | 84.96% | 0.1939 | 0.1712 | 20.47 |
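In the table above, pixel accuracy for nine of the eleven networks lies between 97.54% and 98.22%, a spread of under 0.7 points. Over the same networks, mIoU ranges from 63.93% to 75.20%. One plausible explanation is that the target class covers only a small fraction of pixels, for example damage in the concrete damage detection task mentioned in the Highlights. In that case, accuracy is dominated by the background class. The toy two-class example below uses made-up pixel counts to show how this happens.

```python
def binary_scores(tp, fp, fn, tn):
    """Pixel accuracy and two-class mIoU for a foreground (damage) vs background mask.

    tp, fp, fn are counted for the foreground class; tn counts background
    pixels that were correctly labeled as background.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    iou_fg = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    # For the background class the roles swap: its true positives are tn,
    # its false positives are the foreground fn, and its false negatives
    # are the foreground fp.
    iou_bg = tn / (tn + fn + fp) if tn + fn + fp else 0.0
    return accuracy, (iou_fg + iou_bg) / 2


# Made-up image: 10,000 pixels, of which 300 are damage.
# Predictor A labels everything as background.
acc_a, miou_a = binary_scores(tp=0, fp=0, fn=300, tn=9700)
# Predictor B finds 200 damage pixels but also mislabels 100 background pixels.
acc_b, miou_b = binary_scores(tp=200, fp=100, fn=100, tn=9600)

print(f"A: accuracy {acc_a:.1%}, mIoU {miou_a:.3f}")
print(f"B: accuracy {acc_b:.1%}, mIoU {miou_b:.3f}")
```

In this example, a one-point gain in accuracy (97% to 98%) corresponds to mIoU rising from 0.485 to about 0.740. For this reason, mIoU and F1-score are the more informative columns when one class dominates.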

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, B.; Mi, Y.; Sun, R.; Wu, C. Semantic Segmentation Method of Residential Areas in Remote Sensing Images Based on Cross-Attention Mechanism. Remote Sens. 2025, 17, 3253. https://doi.org/10.3390/rs17183253
