Instance Segmentation of LiDAR Point Clouds with Local Perception and Channel Similarity

Abstract

Highlights

- The Local Perception Module (LPM) fuses multi-scale, multi-neighborhood point cloud features on a uniform BEV/polar coordinate grid.

- The Inter-Channel Correlation Module (ICCM) computes an attention weight matrix between feature channels, adaptively enhancing informative channel features and suppressing redundant ones.

- The LPM is more robust to distance-induced sparsity and occlusion in LiDAR data than conventional FPN or CBAM designs, which perform only channel weighting or in-scale fusion.

- The ICCM enables representative point cloud features to be extracted more effectively.

1. Introduction

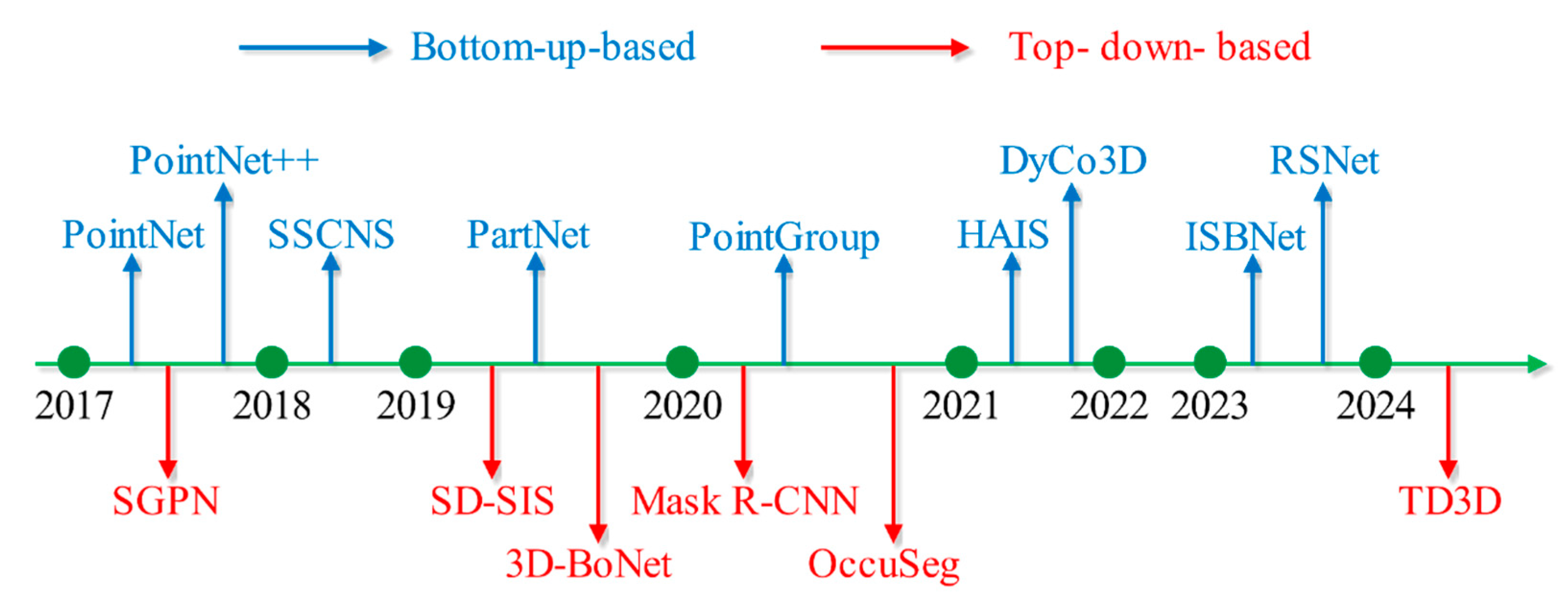

2. Related Work

2.1. Top-Down-Based Methods

2.2. Bottom-Up-Based Methods

3. Methods

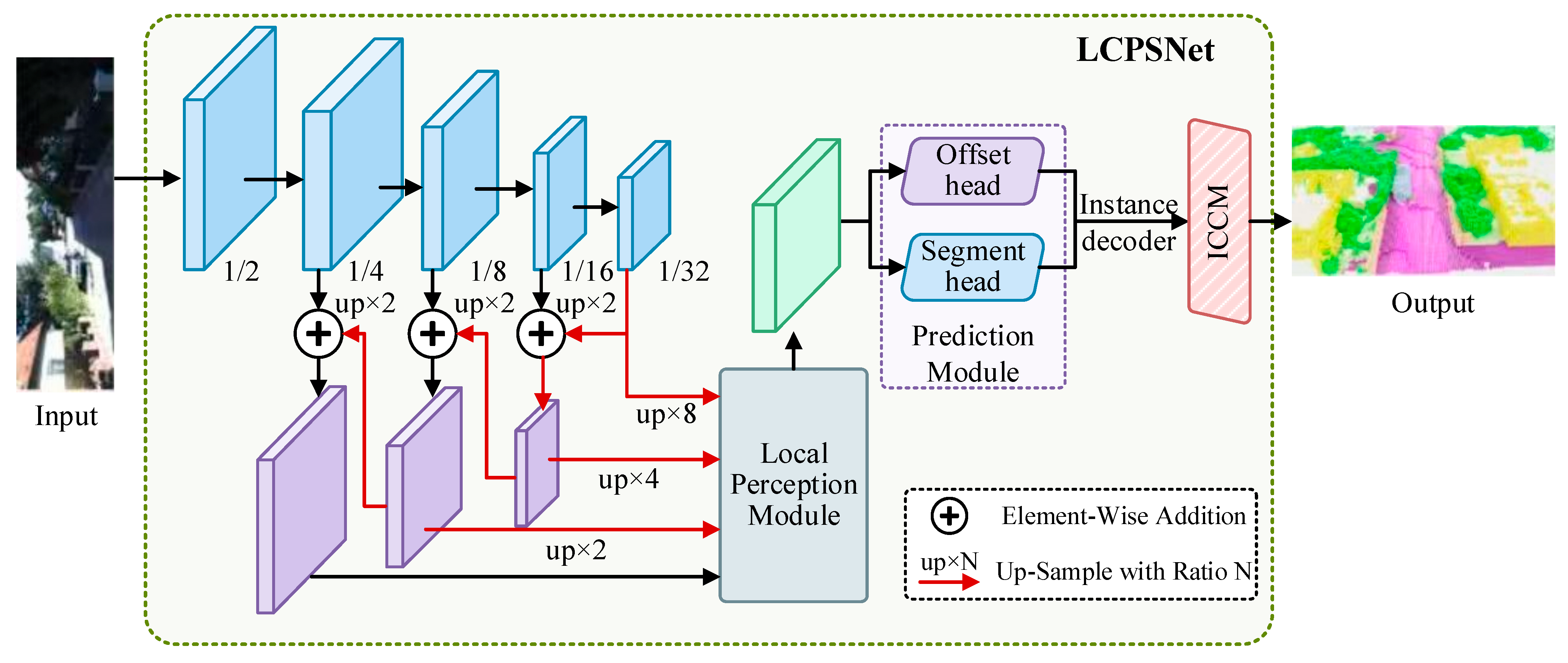

3.1. The Overall Structure of the LiDAR Channel-Aware Point Segmentation Network

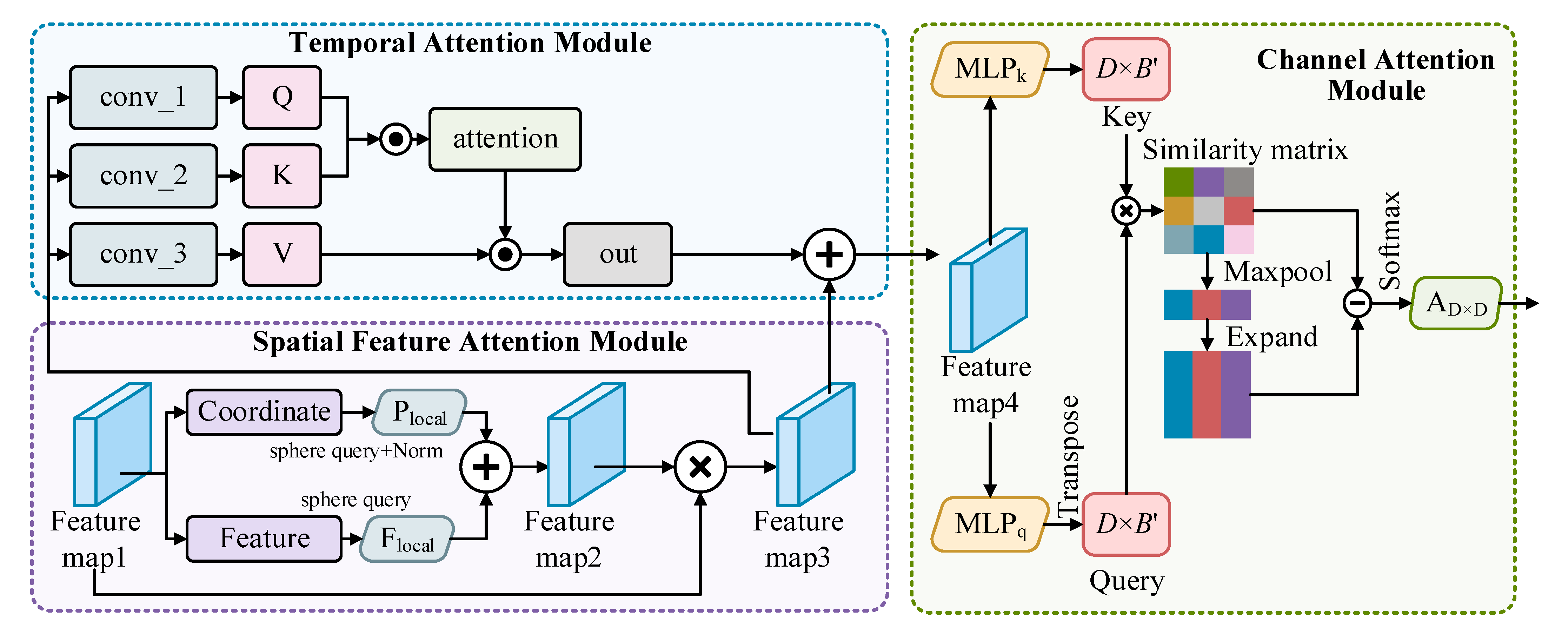

3.2. Local Perception Module

| Algorithm 1. Local Perception Module |
|---|
| 1: Input F |
| 2: F′ = Concat(F) |
| 3: F1 = Conv(F′) |
| 4: Global Spatial Attention: |
| 5: F2 = MaxPooling(F1) |
| 6: F2′ = ConvTanh(F2) |
| 7: F2″ = ConvTanh(F2′) |
| 8: F3 = Expand(F2″) + F2 |
| 9: output = ConvTanh(ConvTanh(F3)) |
| 10: Return output |
| 11: expanded_weights = Expand(output) |
| 12: fused = WiseProduct(F′, expanded_weights) |
| 13: Output fused |
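
The steps of Algorithm 1 can be traced in a minimal NumPy sketch. Several choices here are assumptions that the algorithm listing does not state: every Conv is taken to be a 1×1 (pointwise) convolution, MaxPooling a 2×2 spatial max pool, the first Expand a broadcast across channels, and the second Expand a nearest-neighbor upsampling followed by a channel broadcast. The helper names (`conv1x1`, `conv_tanh`, `max_pool_2x2`, `init_params`, `local_perception`) and the random weights are hypothetical and serve only to check tensor shapes.

```python
import numpy as np

def conv1x1(x, w, b):
    """Pointwise convolution on a (C_in, H, W) map; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def conv_tanh(x, w, b):
    """1x1 convolution followed by tanh (assumed reading of ConvTanh)."""
    return np.tanh(conv1x1(x, w, b))

def max_pool_2x2(x):
    """2x2 max pooling with stride 2; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def init_params(c, rng):
    """Random weights for illustration only; a trained network would learn them."""
    def layer(c_out, c_in):
        return rng.standard_normal((c_out, c_in)) * 0.1, np.zeros(c_out)
    hidden = max(c // 2, 1)
    return {
        "conv": layer(c, c),
        "att1": layer(hidden, c),
        "att2": layer(1, hidden),
        "out1": layer(hidden, c),
        "out2": layer(1, hidden),
    }

def local_perception(features, params):
    f_cat = np.concatenate(features, axis=0)                 # step 2: F' = Concat(F)
    f1 = conv1x1(f_cat, *params["conv"])                     # step 3: F1 = Conv(F')
    f2 = max_pool_2x2(f1)                                    # step 5: F2 = MaxPooling(F1)
    f2a = conv_tanh(f2, *params["att1"])                     # step 6: F2' = ConvTanh(F2)
    f2b = conv_tanh(f2a, *params["att2"])                    # step 7: F2'' (single channel)
    f3 = np.broadcast_to(f2b, f2.shape) + f2                 # step 8: Expand(F2'') + F2
    out = conv_tanh(conv_tanh(f3, *params["out1"]),
                    *params["out2"])                         # step 9: spatial weight map
    up = np.repeat(np.repeat(out, 2, axis=1), 2, axis=2)     # step 11: undo the 2x2 pooling
    weights = np.broadcast_to(up, f_cat.shape)               # step 11: broadcast over channels
    fused = f_cat * weights                                  # step 12: element-wise product
    return fused, weights

rng = np.random.default_rng(0)
features = [rng.standard_normal((4, 8, 8)), rng.standard_normal((4, 8, 8))]
params = init_params(c=8, rng=rng)
fused, weights = local_perception(features, params)
print(fused.shape, weights.shape)  # (8, 8, 8) (8, 8, 8)
```

Because the last activation is tanh, the weights in this sketch lie in [-1, 1], so the module rescales the concatenated features rather than amplifying them.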

3.3. Inter-Channel Correlation Module Based on Channel Similarity
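
The Highlights describe the ICCM as computing an attention weight matrix between feature channels. One common way to realize that idea is sketched below, under stated assumptions: channel similarity is measured by cosine similarity, the weights are obtained with a row-wise softmax, and the reweighted features are added back through a residual connection scaled by `gamma`. The function name `channel_similarity_attention` and the parameter `gamma` are hypothetical, and the authors' actual formulation may differ.

```python
import numpy as np

def channel_similarity_attention(feat, gamma=1.0):
    """Reweight channels of a (C, H, W) feature map by inter-channel similarity."""
    c = feat.shape[0]
    x = feat.reshape(c, -1)                                  # flatten spatial dims: (C, H*W)
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    x_unit = x / np.maximum(norms, 1e-12)                    # unit-length channel vectors
    sim = x_unit @ x_unit.T                                  # (C, C) cosine similarity
    sim = sim - sim.max(axis=1, keepdims=True)               # stabilize the softmax
    attn = np.exp(sim)
    attn /= attn.sum(axis=1, keepdims=True)                  # each row sums to 1
    out = gamma * (attn @ x) + x                             # residual channel mixing
    return out.reshape(feat.shape), attn

rng = np.random.default_rng(0)
feat = rng.standard_normal((6, 4, 4))
out, attn = channel_similarity_attention(feat)
print(out.shape, attn.shape)  # (6, 4, 4) (6, 6)
```

In this sketch, a softmax only down-weights dissimilar channels; it never sets a weight exactly to zero, so redundant channels are attenuated rather than removed outright.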

3.4. Cross Entropy Loss Function
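
As a point of reference, the standard multi-class cross-entropy over per-point class scores can be sketched as follows. This assumes the plain, unweighted form averaged over points; any class weighting or auxiliary terms the authors may use are not represented, and the function name `cross_entropy` is hypothetical.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy for (N, K) logits and N integer labels in [0, K)."""
    shifted = logits - logits.max(axis=1, keepdims=True)     # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

labels = np.array([0, 1, 2, 1])
uniform_loss = cross_entropy(np.zeros((4, 3)), labels)       # equals log(3) for 3 classes
confident_logits = 10.0 * np.eye(3)[labels]                  # large score on the true class
confident_loss = cross_entropy(confident_logits, labels)     # close to zero
print(uniform_loss > confident_loss)  # True
```

The uniform case gives a loss of log K for K classes, which is a convenient sanity check when a network has not yet learned anything.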

4. Experiments

4.1. Datasets and Metrics
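
The result tables report mIoU and PQ. A minimal sketch of how these two metrics are commonly computed is given below, assuming the usual definitions: mIoU averages per-class intersection-over-union from a confusion matrix, and PQ divides the summed IoU of true-positive segment matches (IoU > 0.5) by TP + 0.5·FP + 0.5·FN. The function names and the toy inputs are hypothetical and are not taken from the paper's evaluation code.

```python
import numpy as np

def mean_iou(pred, gt, num_classes):
    """Mean IoU over classes that appear in the prediction or the ground truth."""
    conf = np.bincount(gt * num_classes + pred,
                       minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(conf)                                       # correctly labeled points
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp         # predicted + actual - overlap
    valid = union > 0                                        # skip classes that never occur
    return (tp[valid] / union[valid]).mean()

def panoptic_quality(tp_ious, num_fp, num_fn):
    """PQ from the IoUs of true-positive matches and the unmatched segment counts."""
    tp_ious = np.asarray(tp_ious, dtype=float)
    denom = len(tp_ious) + 0.5 * num_fp + 0.5 * num_fn
    return tp_ious.sum() / denom if denom > 0 else 0.0

gt = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
miou = mean_iou(pred, gt, num_classes=3)                     # per-class IoU: 1/3, 2/3, 1/2
pq = panoptic_quality([0.9, 0.8, 0.6], num_fp=1, num_fn=1)   # 2.3 / (3 + 0.5 + 0.5)
print(round(miou, 3), round(pq, 3))  # 0.5 0.575
```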

4.2. Comparison Experiments

4.3. Ablation Experiments

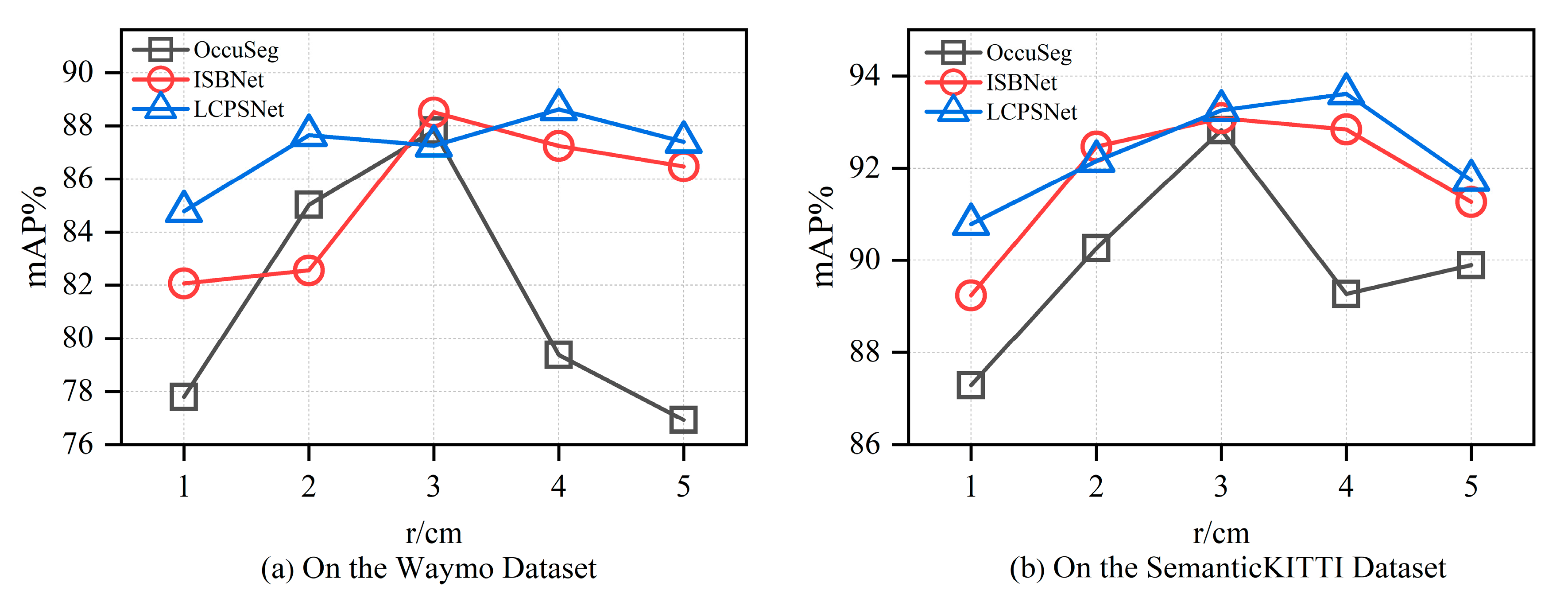

4.4. Hyperparameter Sensitivity Analysis

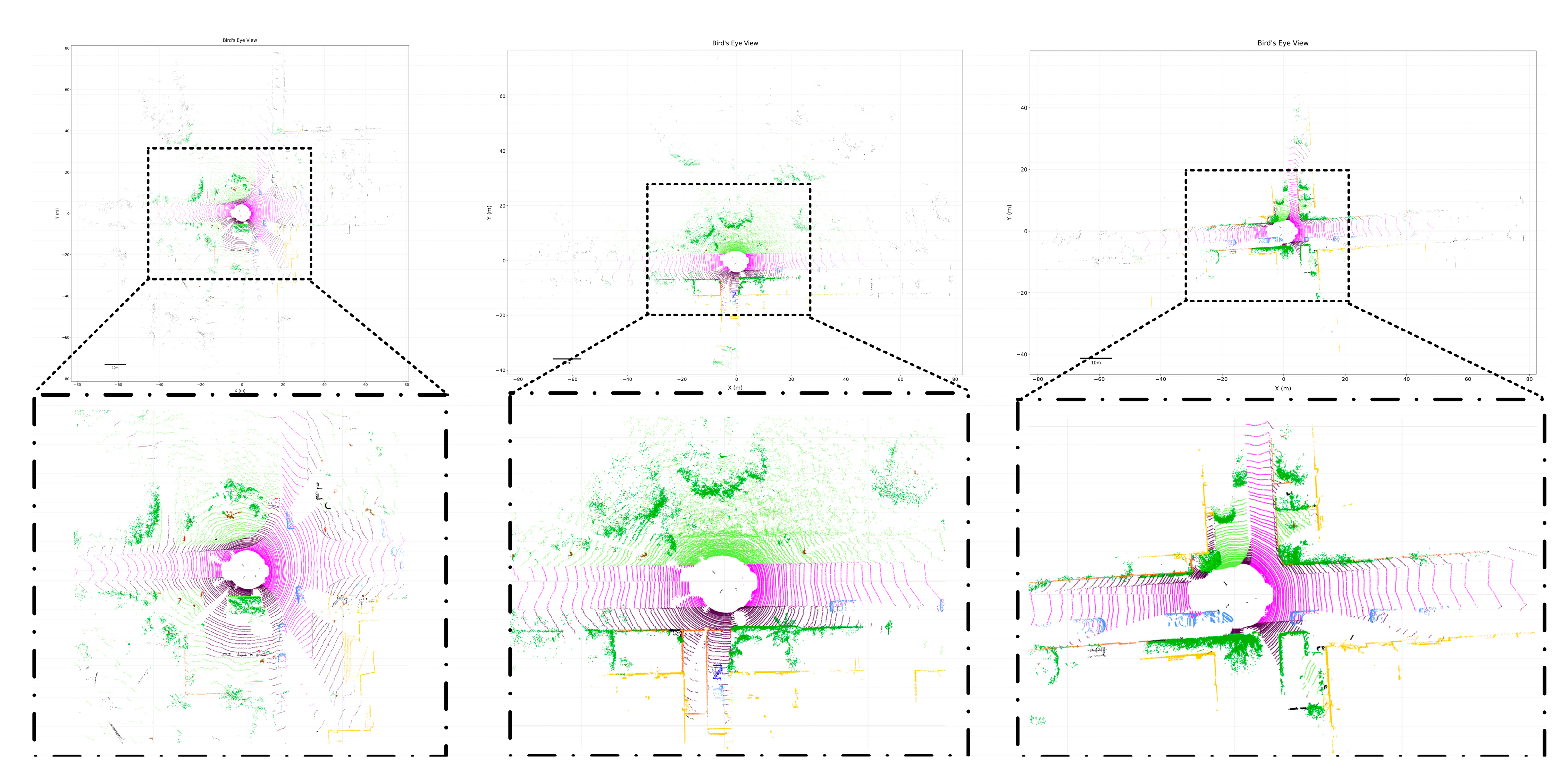

4.5. Visualization Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Huang, X.S.; Mei, G.F.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690. Available online: https://arxiv.org/abs/2103.02690v2 (accessed on 12 December 2024).

- Zeng, Y.H.; Jiang, C.H.; Mao, J.G.; Han, J.H.; Ye, C.Q.; Huang, Q.Q. CLIP2: Contrastive language-image-point pretraining from real world point cloud data. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; IEEE Press: New York, NY, USA, 2023; pp. 15244–15253.

- Marinos, V.; Farmakis, I.; Chatzitheodosiou, T.; Papouli, D.; Theodoropoulos, T.; Athanasoulis, D.; Kalavria, E. Engineering Geological Mapping for the Preservation of Ancient Underground Quarries via a VR Application. Remote Sens. 2025, 17, 544.

- Qian, R.; Lai, X.; Li, X.R. 3D object detection for autonomous driving: A survey. Pattern Recognit. 2022, 130, 108796.

- Lee, S.; Lim, H.; Myung, H. Patchwork: Fast and robust ground segmentation solving partial under-segmentation using 3D point cloud. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE Press: New York, NY, USA, 2022; pp. 13276–13283.

- Xiao, A.R.; Yang, X.F.; Lu, S.J.; Guan, D.; Huang, J. FPS-Net: A convolutional fusion network for large-scale LiDAR point cloud segmentation. ISPRS J. Photogramm. Remote Sens. 2021, 176, 237–249.

- Hafiz, A.M.; Bhat, G.M. A survey on instance segmentation: State of the art. Int. J. Multimed. Inf. Retr. 2020, 9, 171–189.

- Guo, Y.L.; Wang, H.Y.; Hu, Q.Y.; Liu, H.; Bennamoun, M. Deep learning for 3D point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

- Lu, B.; Liu, Y.W.; Zhang, Y.H.; Yang, Z.Y. Point cloud segmentation algorithm based on density awareness and self-attention mechanism. Laser Optoelectron. Prog. 2024, 61, 0811004.

- Ai, D.; Zhang, X.Y.; Xu, C.; Qin, S.Y.; Yuan, H. Advancements in semantic segmentation methods for large-scale point clouds based on deep learning. Laser Optoelectron. Prog. 2024, 61, 1200003.

- Zhang, K.; Zhu, Y.W.; Wang, X.H.; Zhang, L.T.; Zhong, R.F. Three-dimensional point cloud semantic segmentation network based on spatial graph convolution network. Laser Optoelectron. Prog. 2023, 60, 0228007.

- Xu, X. Research on 3D Instance Segmentation Method for Indoor Scene; Northeast Petroleum University: Daqing, China, 2023; pp. 12–13.

- Cui, L.Q.; Hao, S.Y.; Luan, W.Y. Lightweight 3D point cloud instance segmentation algorithm based on Mamba. Comput. Eng. Appl. 2025, 61, 194–203.

- Lei, T.; Guan, B.; Liang, M.; Li, X.Y.; Liu, J.B.; Tao, J.; Shang, Y.; Yu, Q.F. Event-based multi-view photogrammetry for high-dynamic, high-velocity target measurement. arXiv 2025, arXiv:2506.00578.

- Liu, Z.; Liang, S.; Guan, B.; Tan, D.; Shang, Y.; Yu, Q.F. Collimator-assisted high-precision calibration method for event cameras. Opt. Lett. 2025, 50, 4254–4257.

- Wang, W.Y.; Yu, R.; Huang, Q.G. SGPN: Similarity group proposal network for 3D point cloud instance segmentation. arXiv 2017, arXiv:1711.08588.

- Hou, J.; Dai, A.; Nießner, M. 3D-SIS: 3D semantic instance segmentation of RGB-D scans. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE Press: New York, NY, USA, 2019; pp. 4416–4425.

- Lin, K.H.; Zhao, H.M.; Lv, J.J.; Li, C.Y.; Liu, X.Y.; Chen, R.J.; Zhao, R.Y. Face detection and segmentation based on improved mask R-CNN. Discret. Dyn. Nat. Soc. 2020, 2020, 9242917.

- Yang, B.; Wang, J.; Clark, R.; Hu, Q.; Wang, S.; Markham, A.; Trigoni, N. Learning object bounding boxes for 3D instance segmentation on point clouds. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 1–10.

- Han, L.; Zheng, T.; Lan, X.; Lu, F. OccuSeg: Occupancy aware 3D instance segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2937–2946.

- Kolodiazhnyi, M.; Vorontsova, A.; Konushin, A.; Rukhovich, D. Top-down beats bottom-up in 3D instance segmentation. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; IEEE Press: New York, NY, USA, 2024; pp. 3554–3562.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413. Available online: https://arxiv.org/abs/1706.02413 (accessed on 12 December 2024).

- Qi, C.R.; Su, H.; Mo, K.C.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE Press: New York, NY, USA, 2017; pp. 77–85.

- Mo, K.C.; Zhu, S.L.; Chang, A.X.; Yi, L.; Tripathi, S.; Guibas, L.J. PartNet: A large-scale benchmark for fine-grained and hierarchical part level 3D object understanding. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE Press: New York, NY, USA, 2019; pp. 909–918.

- Graham, B.; Engelcke, M.; van der Maaten, L. 3D semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9224–9232.

- Jiang, L.; Zhao, H.; Shi, S.; Liu, S.; Fu, C.W.; Jia, J. PointGroup: Dual-set point grouping for 3D instance segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4866–4875.

- Chen, S.Y.; Fang, J.M.; Zhang, Q.; Liu, W.Y.; Wang, X.G. Hierarchical aggregation for 3D instance segmentation. arXiv 2021, arXiv:2108.02350.

- He, T.; Shen, C.; van den Hengel, A. DyCO3D: Robust instance segmentation of 3D point clouds through dynamic convolution. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 354–363.

- Ngo, T.D.; Hua, B.S.; Nguyen, K. ISBNet: A 3D point cloud instance segmentation network with instance-aware sampling and box-aware dynamic convolution. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; IEEE Press: New York, NY, USA, 2023; pp. 13550–13559.

- Kolodiazhnyi, M.; Vorontsova, A.; Konushin, A.; Rukhovich, D. OneFormer3D: One transformer for unified point cloud segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 20943–20953.

- Zou, T.; Qu, S.; Li, Z.; Knoll, A.; He, L.H.; Chen, G.; Jiang, C.J. HGL: Hierarchical geometry learning for test-time adaptation in 3D point cloud segmentation. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 19–36.

- Zhao, L.; Hu, Y.; Yang, X.; Dou, Z.L.; Kang, L.S. Robust multi-task learning network for complex LiDAR point cloud data preprocessing. Expert Syst. Appl. 2024, 237, 121552.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C. SemanticKITTI: A dataset for semantic scene understanding of LiDAR sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P. Scalability in perception for autonomous driving: Waymo open dataset. In IEEE/CVF Conference on Computer Vision and Pattern Recognition; IEEE Press: New York, NY, USA, 2020; pp. 2446–2454.

- Zhang, Y.; Zhou, Z.; David, P.; Yue, X.; Xi, Z.; Gong, B. PolarNet: An improved grid representation for online LiDAR point clouds semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9601–9610.

- Zhou, H.; Zhu, X.; Song, X.; Ma, Y.; Wang, Z.; Li, H.; Lin, D. Cylinder3D: An effective 3D framework for driving-scene LiDAR semantic segmentation. arXiv 2020, arXiv:2008.01550.

- Cheng, R.; Razani, R.; Taghavi, E.; Li, E.; Liu, B. (AF)2-S3Net: Attentive feature fusion with adaptive feature selection for sparse semantic segmentation network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12547–12556.

- Kong, L.; Liu, Y.; Chen, R.; Ma, Y.; Zhu, X.; Li, Y. Rethinking range view representation for LiDAR segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 228–240.

- Cardace, A.; Spezialetti, R.; Zama Ramirez, P.; Salti, S.; Di Stefano, L. Self-distillation for unsupervised 3D domain adaptation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 4166–4177.

- Wei, Y.; Liu, H.; Xie, T.; Ke, Q.; Guo, Y. Spatial-temporal transformer for 3D point cloud sequences. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1171–1180.

| Method | PQ (%) | mIoU (%) | FPS | Params (M) |
|---|---|---|---|---|
| PointNet [21] | 17.5 | 18.2 | 120 | 3.5 |
| PointNet++ [20] | 20.8 | 23.4 | 84.8 | 1.7 |
| SSCNS [25] | 35.2 | 37.9 | 25.2 | 16 |
| PolarNet [35] | 54.3 | 55.7 | 16.9 | 13.6 |
| PointGroup [24] | 41.7 | 42.5 | 12.4 | 22.8 |
| Cylinder3D [36] | 66.8 | 68.9 | 11.5 | 25.1 |
| AF2S3Net [37] | 64.9 | 69.7 | 9 | 31.4 |
| RangeFormer [38] | 64.1 | 73.6 | 10.2 | 28.2 |
| SDSeg3D [39] | 62.6 | 70.4 | 8.5 | 25 |
| SpAtten [40] | 70.5 | 76.8 | 6 | 40 |
| LCPSNet (Ours) | 70.9 | 77.1 | 13.5 | 18.2 |

| Combination | LPM | ICCM | mIoU (%) | PQ (%) |
|---|---|---|---|---|
| Baseline | × | × | 69.9 | 61.4 |
| LPM | √ | × | 72.4 | 64.8 |
| ICCM | × | √ | 72.9 | 65.2 |
| LCPSNet (Ours) | √ | √ | 77.1 | 70.9 |

| Category | Baseline | LPM | ICCM | LCPSNet (Ours) |
|---|---|---|---|---|
| car | 94.3 | 94.7 | 95.0 | 98.2 |
| bicy | 68.3 | 69.3 | 68.5 | 72.4 |
| moto | 70.8 | 72.3 | 72.8 | 75.7 |
| truc | 59.1 | 60.8 | 60.2 | 63.9 |
| o.veh | 69.4 | 71.0 | 71.8 | 74.5 |
| ped | 73.7 | 75.8 | 76.1 | 79.3 |
| b.list | 70.5 | 71.3 | 71.5 | 75.2 |
| m.list | 56.1 | 58.1 | 58.1 | 60.9 |
| road | 88.2 | 89.2 | 89.2 | 92.9 |
| park | 69.9 | 72.0 | 71.9 | 74.4 |
| walk | 75.6 | 76.4 | 76.8 | 79.7 |
| o.gro | 42.5 | 44.0 | 43.8 | 46.5 |
| build | 89.9 | 90.1 | 91.0 | 93.8 |
| fenc | 67.4 | 69.7 | 69.5 | 72.9 |
| veg | 83.0 | 84.8 | 85.3 | 87.8 |
| trun | 72.4 | 73.0 | 73.6 | 76.6 |
| terr | 68.1 | 70.3 | 69.6 | 73.4 |
| pole | 63.9 | 65.1 | 66.1 | 68.7 |
| sign | 64.9 | 65.6 | 65.9 | 68.9 |
| mIoU | 69.9 | 72.4 | 72.9 | 77.1 |

| Loss Function | PQ (%) | mIoU (%) |
|---|---|---|
| Cross-Entropy (CE) | 70.9 | 77.1 |
| IoU | 69.8 | 76.2 |
| mAcc | 68.9 | 75.6 |

| Combination | LPM | ICCM | mIoU (%) |
|---|---|---|---|
| Baseline | × | × | 62.7 |
| LPM | √ | × | 66.9 |
| ICCM | × | √ | 67.5 |
| LCPSNet (Ours) | √ | √ | 70.4 |

| Dataset | Method | r = 1 cm | r = 2 cm | r = 3 cm | r = 4 cm | r = 5 cm |
|---|---|---|---|---|---|---|
| Waymo | OccuSeg | 77.79 | 85.02 | 87.81 | 79.37 | 76.93 |
| Waymo | ISBNet | 82.07 | 82.55 | 88.5 | 87.24 | 86.47 |
| Waymo | LCPSNet | 84.78 | 87.64 | 87.25 | 88.61 | 87.39 |
| SemanticKITTI | OccuSeg | 87.29 | 90.26 | 92.82 | 89.26 | 89.89 |
| SemanticKITTI | ISBNet | 89.24 | 92.47 | 93.08 | 92.84 | 91.26 |
| SemanticKITTI | LCPSNet | 90.78 | 92.16 | 93.25 | 93.61 | 91.74 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, X.; Wu, X. Instance Segmentation of LiDAR Point Clouds with Local Perception and Channel Similarity. Remote Sens. 2025, 17, 3239. https://doi.org/10.3390/rs17183239
