1. Introduction

Different sensors have been used for Earth observation, such as infrared and synthetic aperture radar (SAR) [1,2,3]. Within this context, hyperspectral imaging (HSI), a cornerstone of modern remote sensing, can capture a wealth of information across hundreds of contiguous, narrow spectral bands, enabling fine-grained material discrimination and revolutionizing applications such as precision agriculture [4], environmental monitoring [5], urban planning [6], and mineral exploration [7,8]. The unique spectral signature contained in each pixel offers unparalleled potential for detailed land-cover mapping. However, the richness of HSI data also poses a major challenge. The so-called curse of dimensionality (Hughes phenomenon) [9] arises from the high number of spectral bands relative to the limited number of labeled training samples, which often leads to overfitting and poor generalization. In addition, HSI data commonly exhibit high intra-class spectral variability, inter-class similarity, the presence of mixed pixels due to low spatial resolution, and atmospheric or sensor-induced noise, making accurate pixel-wise classification challenging [10,11]. At the same time, accurately capturing the microscopic spectral–spatial details that are crucial for classification while effectively modeling the macroscopic spatial context and suppressing noise remains a core bottleneck for current advanced methods.

Traditional machine-learning algorithms, including Support Vector Machines (SVMs) [12], Random Forests (RFs) [13], k-Nearest Neighbors (KNNs) [14], and Multinomial Logistic Regression (MLR) [15], have been widely used for HSI classification. These approaches typically rely on spectral information, sometimes augmented with handcrafted spatial features, such as Gabor filters or Local Binary Patterns. While they can perform reasonably well on a small amount of data, these methods often struggle to capture the complex nonlinear relationships inherent in hyperspectral data and require extensive feature engineering [16].

The advent of deep learning, particularly convolutional neural networks (CNNs), has significantly advanced HSI classification by enabling hierarchical and automatic learning of spatial–spectral features [17,18]. Multiple CNN variants have been explored: 1D CNNs focus on deep spectral features [19], 2D CNNs utilize the spatial context of selected or reduced bands [20,21], and 3D CNNs jointly extract spatial and spectral features by convolving over the full data cube [22,23]. Hybrid architectures, such as HybridSN [24], which combine 3D CNNs for local feature extraction with 2D CNNs for broader spatial modeling, have shown promising performance in HSI classification. Building on this concept, several studies have further explored 2D-3D fusion strategies. For instance, Zhong et al. [25] developed the Spectral–Spatial Residual Network (SSRN), employing a hierarchical design that utilizes 3D convolutions for the initial feature extraction followed by 2D residual blocks to capture multi-level representations. Feng et al. [26] developed a hybrid convolutional neural network (OCT-MCNN) that integrates 3D octave convolutions with 2D vanilla convolutions for HSI classification. Additionally, the MDGCN framework [27] synergistically combines 3D CNNs and graph-based contextual modeling. To further enhance CNN performance, techniques like attention mechanisms [28], residual connections [29], and dilated convolutions [30] have been incorporated. However, a fundamental limitation of CNNs lies in their local receptive fields. While stacking many layers can enlarge the effective receptive field, efficiently capturing truly long-range dependencies and global contextual relationships, which is crucial for distinguishing spectrally ambiguous classes in complex scenes, remains a challenge [31].

To overcome the global contextual modeling limitations of CNNs, transformer architectures, which have revolutionized Natural Language Processing (NLP) through their self-attention mechanism, have been successfully adapted for computer vision. The Vision Transformer (ViT) [32] has shown that pure transformer architectures can achieve state-of-the-art performance in image classification by treating image patches as token sequences. This has spurred significant interest in applying transformers to HSI classification [33,34]. The Swin transformer [35] has taken this further by introducing a hierarchical architecture and a shifted window-based self-attention mechanism, enabling more efficient modeling of multi-scale features and reducing computational complexity, making it particularly suitable for dense prediction tasks in remote sensing [36].

Building upon these foundation models, several studies have explored combining CNNs with transformers to leverage the local feature extraction strengths of CNNs and the global modeling capabilities of transformers for HSI classification. For example, Hong et al. [37] presented SpectralFormer, one of the first works to apply the transformer architecture to HSI classification by modeling spectral sequences. Wang et al. [38] introduced a transformer architecture with CNN-enhanced cross-attention to fuse local and global features for improved HSI classification. Guerri et al. [39] introduced a gate-shift fuse block to a CNN–transformer model to improve the extraction of local and global features by attention-driven fusion. These hybrid CNN–transformer approaches have demonstrated superior performance in HSI classification by effectively capturing both local spatial–spectral patterns and long-range dependencies through sophisticated attention mechanisms. However, because they rely primarily on CNNs for the perception of local features, these methods inherit the limitations of CNNs in capturing non-local, frequency-based features. Fundamentally, the patch-based paradigm of the transformer backbone itself, while powerful for context, remains the primary bottleneck for preserving the fidelity of intra-patch details.

This patch-based limitation manifests in several critical shortcomings for current transformer-based HSI classification methods. First, the tokenization process, which flattens 2D or 3D patches to 1D vectors, inherently risks the loss of fine-grained local information. Subtle but diagnostically crucial details, such as the precise shape of a narrow spectral absorption feature or a delicate spatial texture, can be disrupted or averaged out before the self-attention mechanism even processes them [40]. The model operates on coarse, patch-level representations, potentially smoothing over the very high-frequency cues needed to distinguish spectrally similar classes. Second, transformers lack the strong inductive biases that are innate to CNNs [32]. This makes them more vulnerable to the noise and high spectral redundancy common in HSI data. The self-attention mechanism, without these constraints, can sometimes learn spurious correlations from noisy pixels or over-attend to redundant bands, degrading the quality of the learned features. Finally, it is well established that transformers are data-hungry models that typically require large-scale pretraining or extensive labeled data to achieve optimal performance. This presents a significant practical challenge in remote sensing, where acquiring a large number of high-quality labeled samples for HSI is often difficult and expensive [41]. These limitations collectively motivate the exploration of alternative domains to complement the transformer’s powerful contextual modeling with enhanced fine-grained feature extraction and improved robustness.

To address these limitations and enhance fine-grained feature extraction, frequency-domain analysis, in particular the wavelet transform, offers a powerful complementary approach for signal and image processing. Wavelet transforms decompose signals into frequency sub-bands at multiple scales and are excellent for capturing local transient features (e.g., edges and subtle peaks in spectra) and representing texture, while exhibiting inherent noise reduction capabilities through multi-resolution decomposition [42,43]. In the HSI domain, wavelet transforms have traditionally been used for tasks such as noise reduction [44], feature extraction for conventional classifiers [45], and band selection [46]. Some recent work has also begun to integrate wavelet transforms with CNNs for HSI classification, often using wavelet coefficients as input features or designing wavelet-inspired network layers [47,48]. While these applications demonstrate the utility of wavelets, the deep and synergistic integration with advanced transformer architectures remains a challenge. A critical, yet often overlooked, issue is the semantic gap between the hierarchical, context-rich features from a transformer and the fine-grained, location-specific details from a wavelet decomposition. Simply concatenating these features is unlikely to fully exploit their complementary strengths, potentially leading to suboptimal performance due to feature redundancy and semantic misalignment.

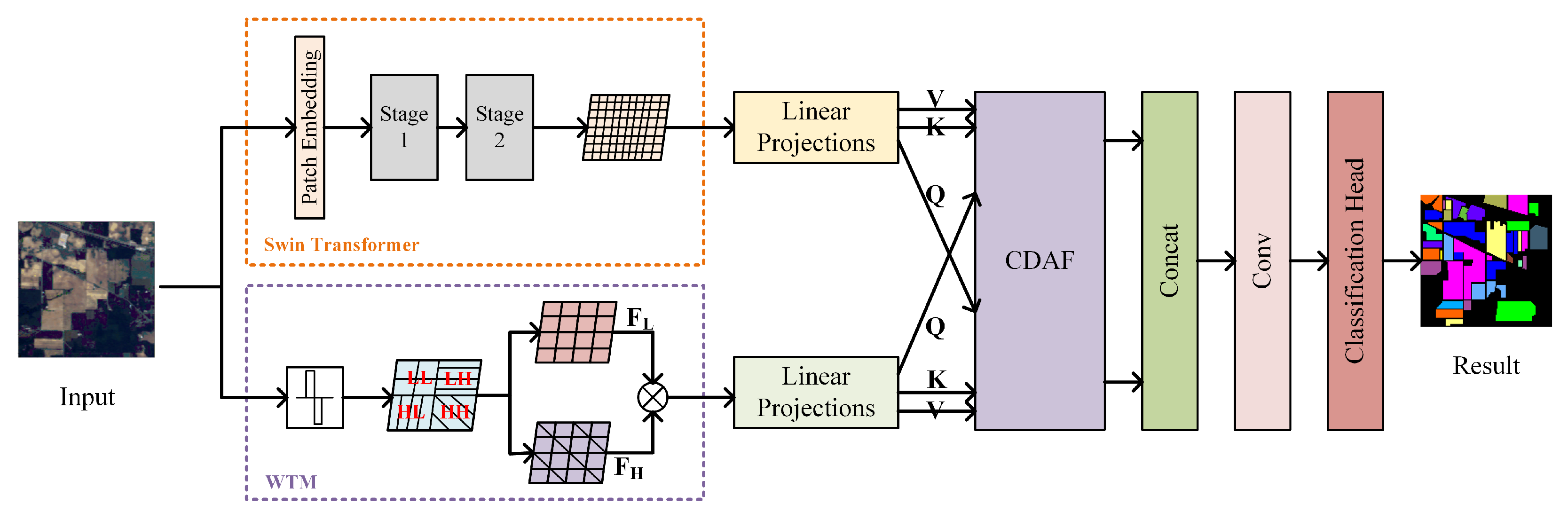

This work directly tackles the above-mentioned challenges. We propose WSC-Net (Wavelet-Enhanced Swin Transformer with Cross-Domain Attention), a novel framework that synergistically merges the features of these two distinct but complementary domains. Its core idea is to introduce a dedicated wavelet analysis branch to extract multi-scale detailed features that might be overlooked by the Swin-transformer-based backbone. Crucially, and as the primary contribution of this work, we develop a Cross-Domain Attention Fusion (CDAF) module to enable adaptive interaction between the wavelet-derived features and the Swin transformer’s contextual representations. This CDAF module is not a generic attention block but a purpose-built mechanism that allows features from one domain to selectively attend to and enhance features from the other, fostering a more discriminative and robust feature space for HSI classification. The main contributions of this work are summarized as follows:

This paper proposes WSC-Net, a novel dual-branch architecture that effectively integrates wavelet-transform-based feature enhancement with a two-stage Swin transformer backbone, specifically adapted for HSI patch analysis, aiming to capture both global contextual and fine-grained local details;

We introduce a Cross-Domain Attention Fusion (CDAF) module, the core innovation of WSC-Net. It facilitates the synergistic, bi-directional interaction and adaptive fusion of features from the wavelet domain and the spatial–spectral domain, overcoming the semantic gap that hinders simple fusion strategies and leading to richer and more robust feature representations;

Extensive experiments on benchmark HSI datasets demonstrate that the proposed WSC-Net achieves superior classification performance compared to those of several state-of-the-art HSI classification methods.

The subsequent sections of this paper are organized as follows: Section 2 details the methodology. Section 3 reports on the experiments and delivers an analysis of the results. Section 4 provides an in-depth discussion of our findings and the model’s implications. Finally, Section 5 concludes the paper and suggests directions for future research.

3. Experiments and Results

3.1. Experimental Datasets and Evaluation Indicators

To comprehensively evaluate the performance of the proposed WSC-Net, we conducted extensive experiments on four widely used HSI datasets: Indian Pines (IP), Pavia University (PU), Salinas (SA), and Longkou (LK). These datasets were selected to cover a diverse range of characteristics, including varying spatial resolutions, spectral properties, scene complexities, and class distributions. This diversity provides a robust testbed for assessing the model’s effectiveness, generalization capability, and robustness. An overview of these datasets, including their false-color images and ground-truth maps, is presented in Figure 5. Detailed information on the land-cover classes and sample counts for each dataset is provided in Table 1.

Indian Pines (IP): The Indian Pines dataset was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over a mixed agricultural and forest area in northwestern Indiana, USA. The scene comprises a spatial dimension of 145 × 145 pixels. It originally consists of 224 spectral bands spanning wavelengths from 0.4 to 2.5 μm, from which 24 water absorption and noisy bands were removed, resulting in 200 bands retained for experimentation. This dataset has 16 distinct land-cover classes. The classification task is particularly challenging due to the high spectral similarity among certain vegetation types and the severe class imbalance, where minority classes contain as few as 20 samples [49].

Pavia University (PU): The Pavia University dataset was collected by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over an urban environment at the University of Pavia, Italy. The image has a spatial dimension of 610 × 340 pixels and a high spatial resolution of 1.3 m. After discarding 12 noisy bands, 103 spectral bands ranging from 0.43 to 0.86 μm were used for the analysis. This dataset encompasses nine urban structure classes. The complex urban scene, with its intricate spatial layouts and spectrally similar human-made materials, makes it a standard benchmark for testing a model’s fine-grained discrimination ability [50].

Salinas (SA): The Salinas dataset was also captured by the AVIRIS sensor but over the Salinas Valley, California, an area with high agricultural uniformity. The image covers 512 × 217 pixels, with a high spatial resolution of 3.7 m. From the original 224 bands, 20 noisy and water absorption bands were removed, leaving 204 bands for the experiments. The dataset contains 16 agriculture-related classes, including various vegetables, fallow fields, and vineyard vines.

Longkou (LK): The Longkou dataset was acquired by an airborne Headwall A-series hyperspectral imaging sensor over a coastal farming area in Longkou, Shandong Province, China [51]. This large-scale dataset has a spatial dimension of 550 × 400 pixels and a spatial resolution of about 0.46 m. It contains 270 spectral bands covering the 0.4 to 1.0 μm range. The dataset comprises 19 distinct classes, featuring a diverse mix of crops, trees, water, and human-made structures.

We evaluate WSC-Net and baseline methods using three widely adopted metrics for hyperspectral image classification: the overall accuracy (OA), average accuracy (AA), and kappa coefficient ($\kappa$). These metrics provide complementary insights into different aspects of the classification performance. Let $N$ be the total number of test samples, $C$ be the number of land-cover classes, and $M \in \mathbb{R}^{C \times C}$ be the confusion matrix, where the element $M_{ij}$ represents the number of samples from class $i$ that were classified as class $j$.

Overall Accuracy (OA): This metric represents the proportion of correctly classified pixels relative to the total number of test pixels. OA is defined as follows:

$$\mathrm{OA} = \frac{1}{N} \sum_{i=1}^{C} M_{ii}$$

However, OA can be biased toward majority classes in imbalanced datasets.

Average Accuracy (AA): AA calculates the mean classification accuracy for each class, giving equal weight to all the classes regardless of the sample size. This provides a more balanced assessment across all the categories. AA is defined as follows:

$$\mathrm{AA} = \frac{1}{C} \sum_{i=1}^{C} \frac{M_{ii}}{\sum_{j=1}^{C} M_{ij}}$$

Kappa Coefficient ($\kappa$): The kappa coefficient measures the agreement between the classification results and ground truth while correcting for chance agreement. It is computed as follows:

$$\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad p_o = \mathrm{OA}, \qquad p_e = \frac{1}{N^2} \sum_{i=1}^{C} \left( \sum_{j=1}^{C} M_{ij} \right) \left( \sum_{j=1}^{C} M_{ji} \right)$$

where $p_o$ is the observed agreement and $p_e$ is the agreement expected by chance. Higher kappa values (closer to 1) indicate better classification performance.

In addition to these three metrics, individual class accuracies are reported to enable a fine-grained analysis of the model performance on specific land-cover types. All the results are averaged over multiple independent runs to ensure statistical reliability.
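As an illustration of how the three metrics relate to one another, the following minimal sketch computes OA, AA, and the kappa coefficient from a confusion matrix using their standard definitions. The function name `classification_metrics` and the toy matrix are hypothetical and are not taken from the paper's code; rows are assumed to index the true class and columns the predicted class.

```python
import numpy as np

def classification_metrics(conf):
    """Return (OA, AA, kappa) from a C x C confusion matrix.

    conf[i, j] counts test samples of true class i predicted as class j.
    """
    conf = np.asarray(conf, dtype=np.float64)
    n = conf.sum()
    row_totals = conf.sum(axis=1)   # true samples per class
    col_totals = conf.sum(axis=0)   # predictions per class
    diag = np.diag(conf)

    oa = diag.sum() / n
    # Per-class accuracy; classes without test samples are skipped (assumption).
    present = row_totals > 0
    aa = np.mean(diag[present] / row_totals[present])
    # Agreement expected by chance, then chance-corrected agreement.
    p_e = np.sum(row_totals * col_totals) / n ** 2
    kappa = (oa - p_e) / (1.0 - p_e)
    return oa, aa, kappa

# Toy, imbalanced three-class example (synthetic numbers).
conf = np.array([[50, 0, 0],
                 [5, 40, 5],
                 [0, 2, 8]])
oa, aa, kappa = classification_metrics(conf)
print(f"OA={oa:.4f}  AA={aa:.4f}  kappa={kappa:.4f}")
```

In this toy case, OA exceeds AA because the largest class is classified perfectly while the smallest class is not, which is the majority-class bias that AA is meant to expose.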

3.2. Implementation Details

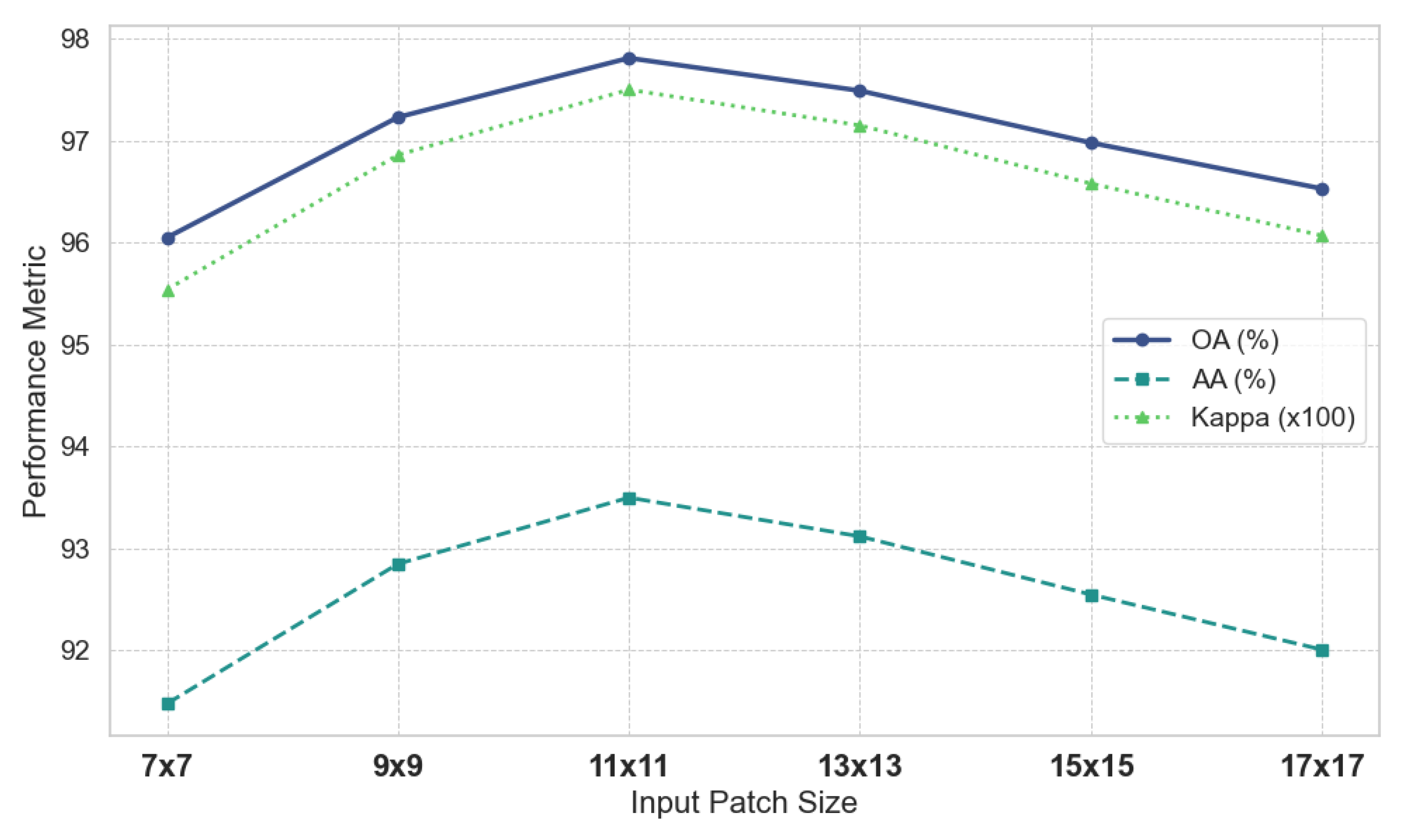

To ensure a fair comparison and reproducibility, all the experiments were conducted within a unified framework on a single NVIDIA Tesla V100 GPU using the PyTorch 1.12.1 framework. For data preparation, we first applied PCA to each HSI cube to reduce its spectral dimensionality, setting the number of principal components at 30 for the IP, SA, and LK datasets and at 15 for the PU dataset. These values were chosen based on common practice to retain over 99% of the spectral variance while reducing the computational overhead. From these reduced data cubes, spatial patches of size 11 × 11 were extracted around each labeled pixel to form the input for the networks. This patch size was determined to be optimal through a dedicated ablation study (Section 3.6), which balances the need for sufficient spatial context with the risk of introducing noise from irrelevant neighboring pixels. The training set for the IP dataset consisted of 5% of the samples from each class. For the SA, PU, and LK datasets, the training sets were composed of 0.5%, 1%, and 5% of the samples per class, respectively. These challenging, small-sample ratios were selected to rigorously test the model’s generalization capability. The remaining samples in each dataset were used for testing.
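The data preparation described above (PCA, patch extraction around labeled pixels, and a per-class training split) can be sketched as follows. This is a minimal illustration on synthetic data, not the authors' pipeline: the function names, the reflect padding at image borders, the convention that label 0 means unlabeled, and the rule of keeping at least one training sample per class are all assumptions.

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Project an (H, W, B) cube onto its top principal components."""
    h, w, b = cube.shape
    flat = cube.reshape(-1, b).astype(np.float64)
    flat -= flat.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(flat, full_matrices=False)
    return (flat @ vt[:n_components].T).reshape(h, w, n_components)

def extract_patches(cube, labels, patch_size=11):
    """Return patches centred on labelled pixels (label 0 = unlabelled)."""
    r = patch_size // 2
    # Border handling is an assumption; the text does not specify it.
    padded = np.pad(cube, ((r, r), (r, r), (0, 0)), mode="reflect")
    rows, cols = np.nonzero(labels)
    patches = np.stack([padded[i:i + patch_size, j:j + patch_size]
                        for i, j in zip(rows, cols)])
    return patches, labels[rows, cols]

def stratified_split(y, train_fraction, rng):
    """Pick train_fraction of each class for training (at least one sample)."""
    train_idx = []
    for c in np.unique(y):
        idx = rng.permutation(np.nonzero(y == c)[0])
        n_train = max(1, int(round(train_fraction * idx.size)))
        train_idx.append(idx[:n_train])
    train_idx = np.concatenate(train_idx)
    test_mask = np.ones(y.size, dtype=bool)
    test_mask[train_idx] = False
    return train_idx, np.nonzero(test_mask)[0]

rng = np.random.default_rng(0)
cube = rng.normal(size=(20, 20, 50))         # synthetic stand-in for an HSI cube
labels = rng.integers(0, 4, size=(20, 20))   # 0 = unlabelled, 1..3 = classes
reduced = pca_reduce(cube, n_components=30)
patches, y = extract_patches(reduced, labels, patch_size=11)
train_idx, test_idx = stratified_split(y, train_fraction=0.05, rng=rng)
print(patches.shape, train_idx.size, test_idx.size)
```

Each patch has shape 11 × 11 × 30, and its central pixel is the labeled pixel whose class the network is asked to predict.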

The architecture of our proposed WSC-Net is configured based on the Swin-Tiny variant, which has been adapted to effectively process the low spatial dimensions of HSI patches. The two-stage Swin transformer backbone is configured with depths of [2, 2] and numbers of attention heads of [3, 6] for the two stages, respectively. This two-stage design is a specific adaptation for the small 11 × 11 HSI patches to prevent the excessive spatial downsampling that would occur in a standard four-stage architecture. The initial embedding dimension is set to 96, and the attention window size is 7. The WTM employs a single-level 2D Haar wavelet, chosen for its computational efficiency and effectiveness in capturing sharp local features, as validated in our ablation study (Section 3.6). The attention dimension within the CDAF module was set to 64, a standard value for such mechanisms.
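To make the single-level 2D Haar decomposition concrete, the sketch below implements the orthonormal Haar transform directly in NumPy. It is an illustrative stand-in and not the WTM itself: the function name `haar_dwt2`, the sub-band naming (conventions differ between libraries), and the edge padding of an 11 × 11 patch to 12 × 12 are assumptions, since the text does not state how odd-sized patches are handled.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D orthonormal Haar transform of an (H, W) array.

    H and W must be even. Returns (LL, LH, HL, HH), each of shape (H/2, W/2).
    """
    a = x[0::2, 0::2]   # top-left pixel of every 2 x 2 block
    b = x[0::2, 1::2]   # top-right
    c = x[1::2, 0::2]   # bottom-left
    d = x[1::2, 1::2]   # bottom-right
    ll = (a + b + c + d) / 2.0   # local average (approximation)
    lh = (a + b - c - d) / 2.0   # row differences: responds to horizontal edges
    hl = (a - b + c - d) / 2.0   # column differences: responds to vertical edges
    hh = (a - b - c + d) / 2.0   # diagonal detail
    return ll, lh, hl, hh

patch = np.zeros((11, 11))
patch[:, 5:] = 1.0                                      # vertical step edge
padded = np.pad(patch, ((0, 1), (0, 1)), mode="edge")   # 11x11 -> 12x12 (assumed)
ll, lh, hl, hh = haar_dwt2(padded)
print(np.round(hl[0], 2))
```

For this step edge, only one column of the `hl` sub-band is non-zero, which shows how the Haar basis localizes a sharp transition in a single detail coefficient per block.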

During training, all the models were optimized for 100 epochs using the AdamW optimizer with a learning rate of and a weight decay of 0.05. A cosine-annealing scheduler was used to adjust the learning rate, and the batch size was set at 64. These training hyperparameters were determined through preliminary experiments to ensure stable and efficient convergence across all the datasets. To ensure statistical significance, the final results reported are the average and standard deviation of 10 independent runs, each with a different random seed for data splitting and model initialization.
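For reference, a cosine-annealing schedule over the 100 training epochs can be written in closed form, as in the sketch below. It uses the same formula as PyTorch's `CosineAnnealingLR` without restarts. The base learning rate `BASE_LR` is a hypothetical placeholder because its value is not given in this text, and the minimum learning rate of zero is likewise an assumption.

```python
import math

def cosine_annealing_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Learning rate at a given epoch under cosine annealing (no restarts)."""
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

BASE_LR = 1e-3   # hypothetical placeholder; not the value used in the paper
TOTAL_EPOCHS = 100

schedule = [cosine_annealing_lr(e, TOTAL_EPOCHS, BASE_LR) for e in range(TOTAL_EPOCHS)]
print(schedule[0], schedule[50], schedule[-1])
```

The rate starts at the base value, passes through half of it at the midpoint, and decays smoothly toward the minimum, so later epochs make smaller updates.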

3.3. Comparison Methods

To thoroughly validate the effectiveness of the proposed method, we conducted a comprehensive comparative analysis against eight representative methods. These baselines were carefully selected to cover the main technological paradigms in HSI classification, including Support Vector Machines (SVMs) [12], 3D CNNs [22], the Hybrid Spectral–Spatial Network (HybridSN) [24], Vision Transformer (ViT) [32], the Hyperspectral Image Transformer (HiT) [52], Discrete Wavelet Transform and Dense CNN (DWTDense) [53], the Spectral–Spatial Feature Tokenization Transformer (SSFTT) [54], and the Spectral–Spatial Morphological Attention Transformer (MorphFormer) [55]. All the methods were implemented under the same experimental conditions for a fair and rigorous comparison.

3.4. Quantitative Results and Analysis

The comprehensive quantitative results of the proposed WSC-Net and the eight comparative methods across the four benchmark datasets are presented in Table 2, Table 3, Table 4 and Table 5. These tables provide per-class accuracy, OA, AA, and $\kappa$ values. A thorough analysis of these results reveals the clear superiority of our proposed approach.

WSC-Net achieves the highest or near-highest scores in the three primary metrics on all four datasets, which supports both its performance and its generalization capability. Specifically, on the IP, PU, SA, and LK datasets, WSC-Net achieves leading OA scores of 97.81%, 97.92%, 96.90%, and 98.84%, respectively. This performance spans datasets with vastly different characteristics, from the low-resolution, noisy environment of IP to the complex, high-resolution agricultural scenes of LK, confirming the effectiveness and adaptability of our architecture. The most compelling evidence emerges from a detailed examination of the challenging IP dataset. Here, WSC-Net achieves an AA of 93.50%, outperforming competing methods, including recent powerful models such as MorphFormer (92.14%) and SSFTT (86.67%). This superior AA is particularly meaningful given the dataset’s severe class imbalance, as it reflects more balanced performance across all the classes. The model’s strength becomes especially apparent in notoriously difficult, low-sample classes. For instance, WSC-Net achieves perfect (100.00%) accuracy in Class 7 (Grass-pasture-mowed) and a highly competitive accuracy of 72.22% in Class 9 (Oats), significantly surpassing those of the other advanced transformer models. Similar strength in challenging classes appears throughout the datasets, including in Class 8 (Grapes_untrained) in SA and Class 7 (Bitumen) in PU.

These results illuminate the fundamental value of our architectural innovations. While advanced transformer models, like HiT and MorphFormer, excel at capturing spatial context, they often struggle with fine-grained details and noise resilience. Notably, even when compared to DWTDense, a strong baseline that also leverages wavelet transforms with a powerful DenseNet backbone, our WSC-Net consistently maintains a performance advantage across all the datasets. This is a critical finding, as it suggests that the superiority of our model stems not merely from the inclusion of wavelet features but also fundamentally from the intelligent, adaptive fusion mechanism of the CDAF module. WSC-Net addresses these limitations through two key components: WTM extracts subtle frequency-domain features, while the CDAF module intelligently integrates this information with the spatial context. The consistently high AA scores further validate this approach, demonstrating that our model delivers more balanced and reliable classification across diverse land-cover types. Rather than offering merely incremental improvements, WSC-Net provides a principled solution to the fundamental challenges inherent in HSI classification.

3.5. Visual Analysis and Classification Maps

To complement the quantitative metrics, a qualitative assessment of the classification maps provides a more intuitive understanding of our model’s practical advantages. Figure 6, Figure 7, Figure 8 and Figure 9 display the classification results generated by several representative methods for selected sub-regions of the four datasets, alongside the ground-truth maps. Visual inspection immediately reveals a clear performance hierarchy among the different approaches.

The maps from classical SVMs are characterized by significant “salt-and-pepper” noise, indicating a lack of spatial contextual awareness. While CNN-based architectures, like HybridSN, produce more homogeneous regions, they often struggle with accurately delineating class boundaries, resulting in overly smoothed or blurred edges. Even advanced transformer-based models, such as SSFTT, despite their strong performances, can occasionally produce blocky artifacts or exhibit confusion in areas with complex textural patterns. These limitations become particularly apparent when examining fine-grained spatial details or transitional zones between different land-cover types.

In striking contrast, the classification maps produced by WSC-Net demonstrate remarkable fidelity to the ground truth across all the tested scenarios. This visual excellence manifests through several distinctive characteristics. First, the intra-class regions exhibit exceptional smoothness and uniformity, with drastically reduced misclassified pixels. This is particularly evident in the large agricultural fields of the SA dataset. Second, WSC-Net maintains sharp and precise boundaries between different land-cover types, as observed in the intricate urban layout of the PU map, where building edges remain clearly defined. Third, our model demonstrates outstanding capability in correctly identifying small or irregularly shaped objects, a task where competing methods frequently fail. A clear example is the accurate classification of the small “Oats” class in the IP dataset.

This enhanced spatial coherence and detail preservation stem directly from our architectural innovations. The wavelet features from the WTM provide crucial textural and edge information, which is then intelligently integrated by the CDAF module with the global context from the Swin transformer. This synergistic fusion enables WSC-Net to not only generate accurate pixel-wise predictions but also reconstruct the underlying spatial structure of scenes with high fidelity. The result is more reliable and interpretable classification outcomes that better reflect the true complexity of real-world landscapes.

3.6. Ablation Studies

Ablation study of different model configurations: To validate the effectiveness of the proposed architectural components, we conducted a comprehensive ablation study on the IP dataset. This analysis systematically deconstructs WSC-Net to isolate and quantify the contributions of both the WTM and the CDAF module. The results, presented in Table 6, demonstrate a clear and synergistic relationship between the components, where each part plays a vital role in achieving the final performance.

The baseline model consists solely of our customized two-stage Swin transformer backbone (w/o WTM and CDAF), which achieves a strong OA of 94.89%. When the CDAF module is introduced without the wavelet branch (w/o WTM), it functions as a self-attention enhancement and increases the OA to 95.82%, confirming its independent effectiveness in refining spatial–spectral features. In contrast, adding the WTM with simple concatenation (w/o CDAF) leads to a more substantial improvement, achieving an OA of 97.15%, thereby providing direct evidence that wavelet-derived features supply crucial complementary information. Finally, the full WSC-Net model integrates both components, where the CDAF module intelligently fuses the spatial–spectral and frequency-domain streams, boosting performance to the highest OA of 97.81%. This step-by-step progression demonstrates that both WTM and CDAF contribute distinct and complementary benefits: wavelet features provide significant enhancement, while CDAF unlocks their full potential through adaptive cross-domain fusion.
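The difference between the two fusion strategies compared in this ablation, simple concatenation and attention-based cross-domain fusion, can be illustrated with a generic single-head cross-attention sketch. This is not the CDAF module: the projection weights are random, the token count and feature width are illustrative, and all function and variable names are hypothetical. Only the attention dimension of 64 follows the text.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, context, w_q, w_k, w_v):
    """Tokens in `queries` attend to tokens in `context` (single head)."""
    q = queries @ w_q
    k = context @ w_k
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])   # scaled dot-product similarity
    return softmax(scores) @ v

rng = np.random.default_rng(0)
n_tokens, d_model, d_attn = 9, 96, 64   # d_attn = 64 follows the text; rest illustrative
spatial = rng.normal(size=(n_tokens, d_model))   # stand-in for Swin features
wavelet = rng.normal(size=(n_tokens, d_model))   # stand-in for wavelet features

def rand_proj():
    """Random projection standing in for a learned weight matrix."""
    return rng.normal(scale=d_model ** -0.5, size=(d_model, d_attn))

# Simple concatenation (the "w/o CDAF" setting in the ablation).
concat_fused = np.concatenate([spatial, wavelet], axis=-1)

# Bidirectional cross-attention: each domain queries the other.
s_from_w = cross_attention(spatial, wavelet, rand_proj(), rand_proj(), rand_proj())
w_from_s = cross_attention(wavelet, spatial, rand_proj(), rand_proj(), rand_proj())
cross_fused = np.concatenate([s_from_w, w_from_s], axis=-1)
print(concat_fused.shape, cross_fused.shape)
```

Concatenation combines the two streams with fixed, position-wise pairing, whereas cross-attention lets every token weight the other domain's tokens by similarity, which is the general kind of adaptive interaction that the ablation attributes to the CDAF module.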

Ablation study of the per-class performance: To further investigate the classification behavior of the proposed WSC-Net, we present the normalized confusion matrices for four datasets in Figure 10. These matrices visualize the per-class recall rates, offering a granular view of the model’s performance. A consistent pattern of strongly weighted diagonals is evident across all four datasets, confirming that WSC-Net achieves high and balanced recall rates for the vast majority of the classes. This demonstrates the model’s robustness and adaptability to diverse spectral and spatial characteristics, ranging from the complex agricultural landscapes of the IP and SA datasets to the urban–rural environments in the PU and LK datasets.
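A normalized confusion matrix of this kind is typically obtained by dividing each row by the number of true samples of that class, so that the diagonal holds the per-class recall. The sketch below assumes this row-wise normalization (the text does not spell it out) and uses a hypothetical function name and toy counts.

```python
import numpy as np

def normalize_confusion(conf):
    """Row-normalise a confusion matrix.

    Entry (i, j) becomes the fraction of true class-i samples predicted as j.
    """
    conf = np.asarray(conf, dtype=np.float64)
    row_totals = conf.sum(axis=1, keepdims=True)
    # Rows with no samples are left as zeros instead of dividing by zero.
    return np.divide(conf, row_totals, out=np.zeros_like(conf), where=row_totals > 0)

# Toy counts; the last class has no test samples.
conf = np.array([[50, 0, 0],
                 [5, 40, 5],
                 [0, 0, 0]])
norm = normalize_confusion(conf)
recall = np.diag(norm)   # per-class recall sits on the diagonal
print(recall)
```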

Ablation study of the wavelet basis selection: To validate our choice of the Haar wavelet, an additional ablation study was conducted on the IP dataset to compare its performance against those of other commonly used wavelet bases, namely, Daubechies 4 (db4) and Symlets 4 (sym4). The results, presented in Table 7, provide valuable insights into the model’s sensitivity to the choice of the wavelet.

The experimental results indicate that while all the tested wavelet bases enable the model to achieve high-quality classification, the Haar wavelet yields the best performance across all three metrics. The db4 and sym4 wavelets, which are smoother and have longer filters, result in a slight but consistent decrease in performance. A plausible explanation is that the simple, discontinuous nature of the Haar wavelet is particularly well suited for capturing the sharp, step-like changes often present in hyperspectral signatures at material boundaries and in specific absorption features. Smoother wavelets, like db4 and sym4, might slightly blur these critical high-frequency details during decomposition. Therefore, considering its marginally superior accuracy and well-known computational efficiency, the Haar wavelet is confirmed as the most effective choice for the WSC-Net architecture.

Ablation study of the input patch size: To determine the optimal spatial input, we evaluated WSC-Net’s performance on the IP dataset over a range of patch sizes. As illustrated in Figure 11, the results reveal a distinct “peaking” behavior. Performance improves with increasing patch size up to its maximum at 11 × 11, demonstrating the benefit of a moderate spatial context. Beyond this peak, however, the accuracy consistently declines, indicating that larger patches introduce noise from irrelevant neighboring pixels, a phenomenon known as the “curse of the context.” This finding supports our model’s design rationale. WSC-Net achieves its best performance with a relatively compact 11 × 11 patch, plausibly because its WTM already captures the fine-grained details that other models seek from larger, potentially noisy receptive fields. The 11 × 11 size strikes a balance between sufficient context for the Swin transformer backbone and minimal noise introduction. Therefore, this configuration is used for all the experiments in this paper.

4. Discussion

The experimental results presented in the previous sections confirm the quantitative and qualitative effectiveness of WSC-Net. This section provides a broader context for these findings by discussing the rationale behind our patch-based methodology, analyzing the architectural principles that contribute to the model’s performance and acknowledging its current limitations.

A pertinent consideration in HSI classification is the role of patch-based deep learning models in relation to object-based approaches, which have demonstrated high accuracies on certain benchmarks. These two paradigms, however, address distinct scientific questions and application needs. Object-based methods excel in scenarios requiring cartographically clean outputs for large, homogeneous regions. In contrast, our patch-based methodology is aligned with the objective of advancing fine-grained analysis at the highest possible spatial resolution. This approach is fundamental for applications such as mineral exploration or precision agriculture, where the classification of individual pixels or small pixel groups is critical. It provides an end-to-end framework that learns features directly from the data, offering greater generalizability to scenes with complex or fragmented landscapes, where pre-segmentation is often challenging. Therefore, research into advanced patch-based models remains a vital frontier for developing foundational feature extractors with broad applicability in remote sensing.

Furthermore, a key inquiry into any new model is to understand why it is effective, beyond simply achieving higher metrics. The advantages of WSC-Net are rooted in its dual-branch architecture and the intelligent fusion enabled by the CDAF module. A logical inference of this mechanism’s behavior can be drawn directly from the experimental evidence. For instance, the model’s high AA in the imbalanced IP dataset points to its enhanced ability to classify small or minority classes, which often depend on subtle, high-frequency textural or spectral cues that a standard transformer might average out. WSC-Net’s success in these cases suggests that the CDAF module is effectively leveraging the detail-rich features from the wavelet branch. Concurrently, the high spatial coherence and low noise in the classification maps for large, uniform areas (e.g., in the SA dataset) indicate that the model is not simply overfitting to high-frequency information. This observed balance between sensitivity to fine details and stability in homogeneous regions provides strong evidence that the CDAF module functions as an adaptive arbitrator. It likely learns to dynamically prioritize high-frequency information from the WTM when classifying complex, boundary-like regions, while relying on the robust, context-rich features from the Swin transformer for simpler, uniform areas. This capacity for adaptive, data-driven feature synthesis is the primary reason for WSC-Net’s robust and well-balanced performance.

Finally, it is important to acknowledge the limitations of the proposed architecture. The dual-branch design, while effective, results in a higher parameter count and increased computational cost compared to those of single-stream models. This tradeoff between accuracy and efficiency is a key consideration for practical deployment on resource-constrained platforms. Future work could focus on model compression or the development of more computationally efficient cross-domain interaction mechanisms. The principles of fusing spatial and frequency domains validated herein could also be extended to other challenging remote sensing tasks, presenting a clear path for continued research.