Target Detection in Sea Clutter Background via Deep Multi-Domain Feature Fusion

Abstract

Highlights

- Integrating sea clutter radar echo features from the time, frequency, fractal, and polarization domains enhances the separability of sea clutter and target samples, which helps overcome the limitations of single-domain detection methods across different scenarios.

- Deep learning networks are applied to sea surface low-altitude small target detection, together with false alarm control methods tailored to specific application scenarios.

- This method provides a reliable approach for detecting sea surface targets in complex scenarios.

- This method offers an effective framework and ideas for the application of deep learning in sea surface remote sensing and intelligent detection.

1. Introduction

- The characteristics of sea clutter vary significantly across detection scenarios, so detection methods based on single-domain sea clutter features have considerable limitations. This paper fuses sea surface echo features from four domains to mitigate the issues caused by changes in detection scenarios.

- Unlike traditional approaches that depend on single-polarization data and are sensitive to polarization differences, this work utilizes fully polarized data. By analyzing echo characteristics from multiple feature domains, it captures more complete radar information, which can lead to more robust detection performance.

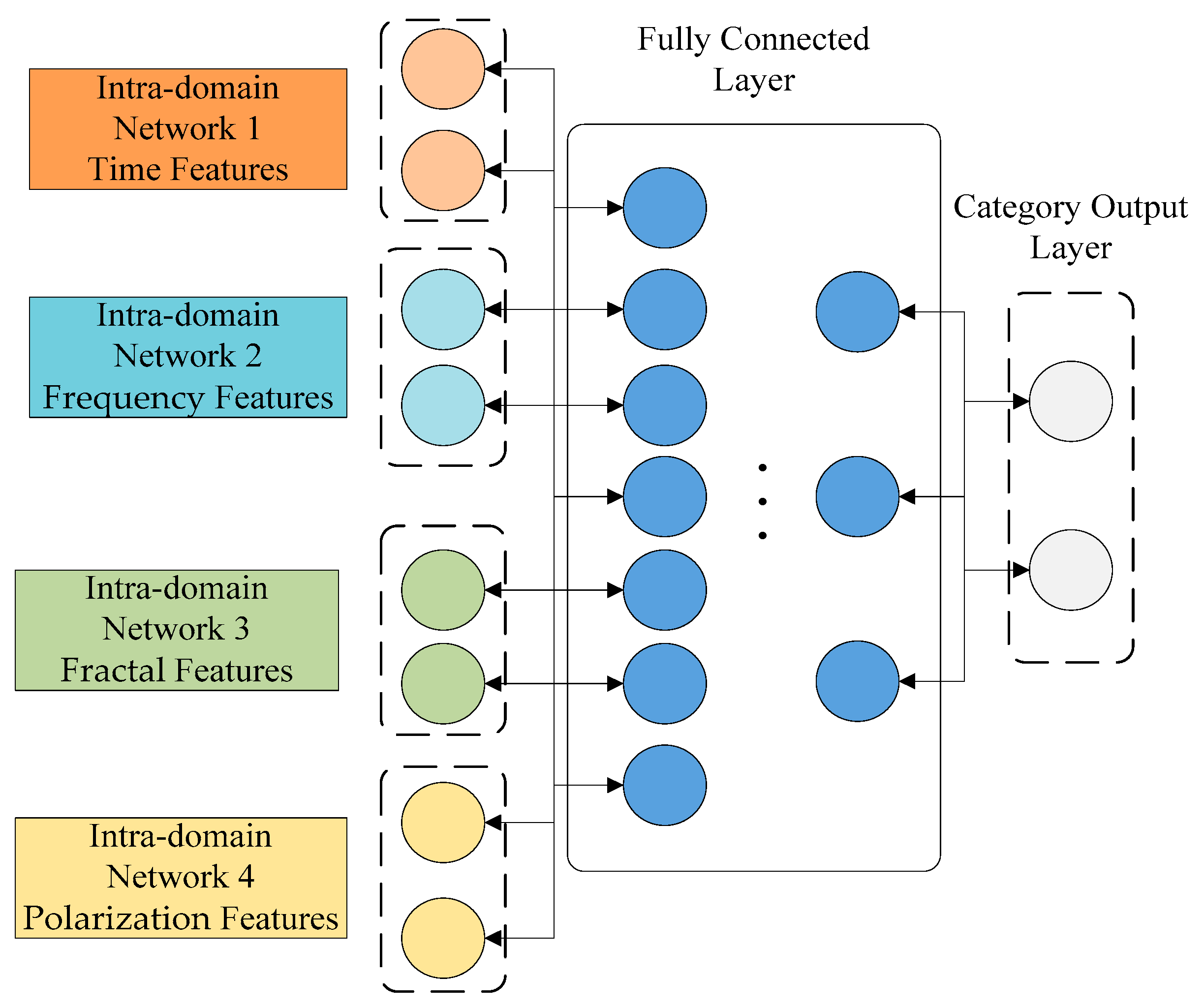

- An intelligent method for weak target detection amid sea clutter is presented. It employs intra-domain networks to reduce feature redundancy and inter-domain networks to effectively integrate diverse domain features. Controllable false alarm rates are achieved by optimizing loss functions. Validation on the IPIX radar dataset confirms the algorithm’s effectiveness and superiority over compared methods.

2. Review of Target Detection in Sea Clutter Background

3. Multi-Domain Feature Fusion Network

3.1. Multi-Domain Feature Extraction

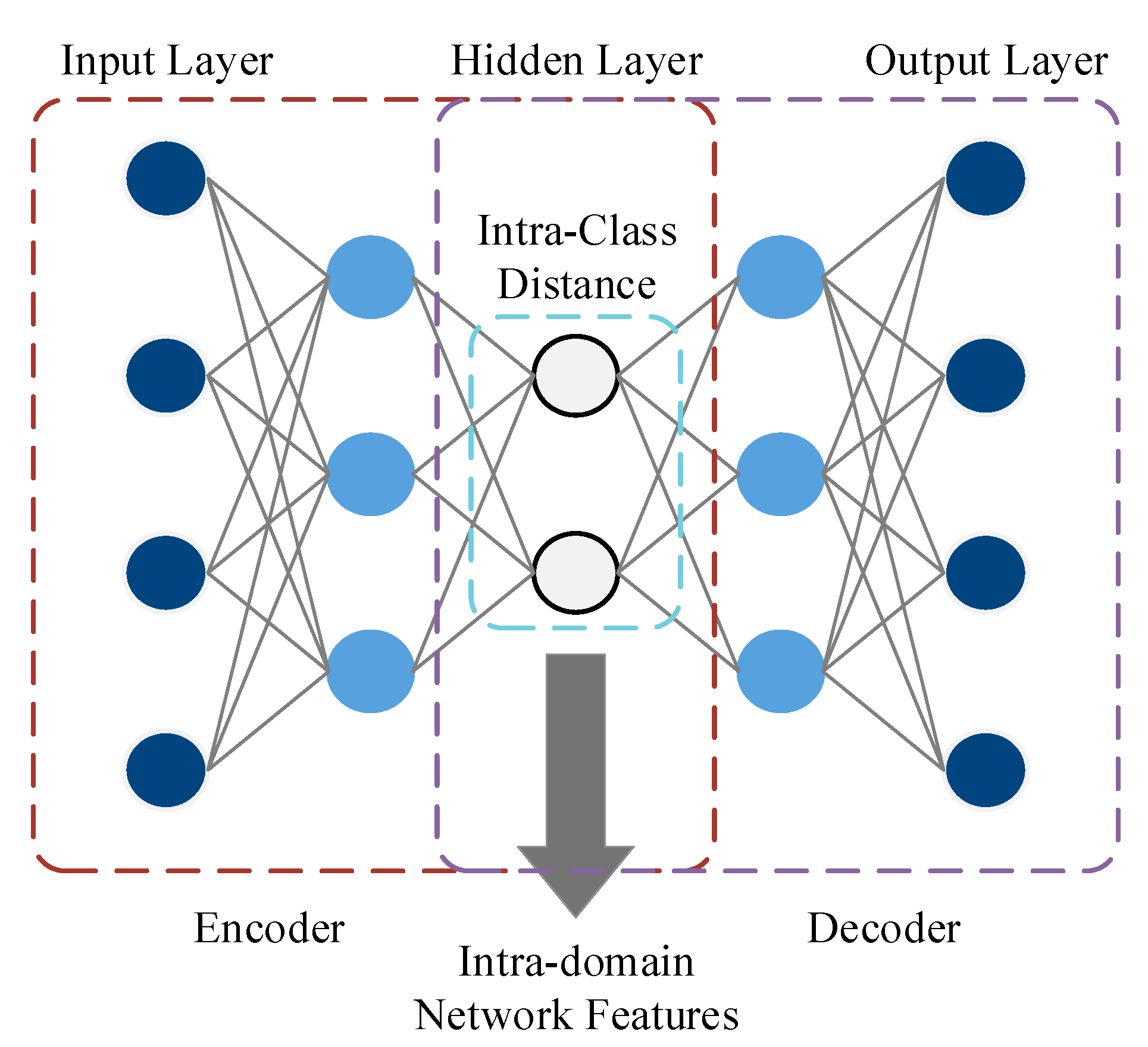

3.2. Intra-Domain Network

3.3. Inter-Domain Network

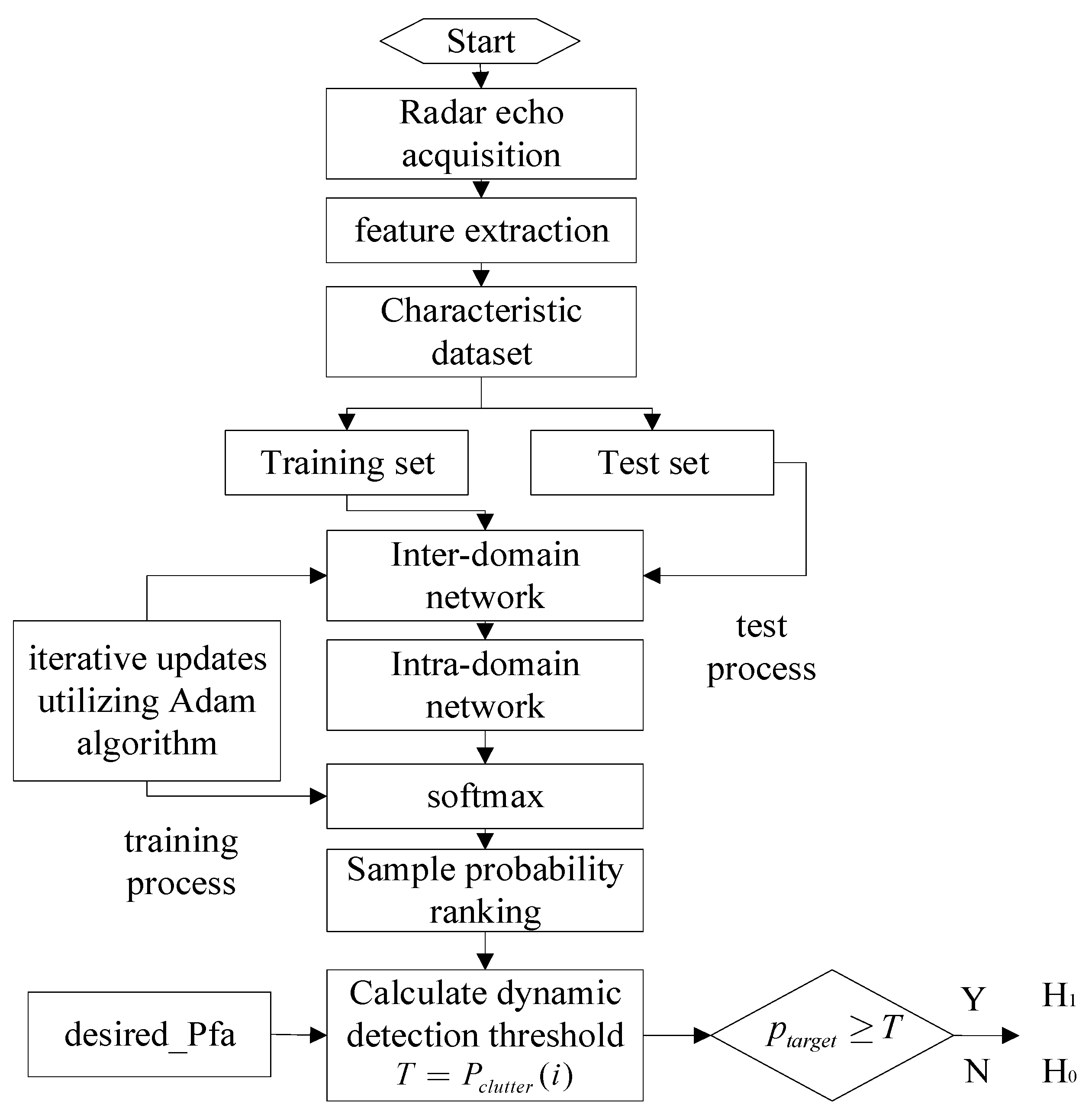

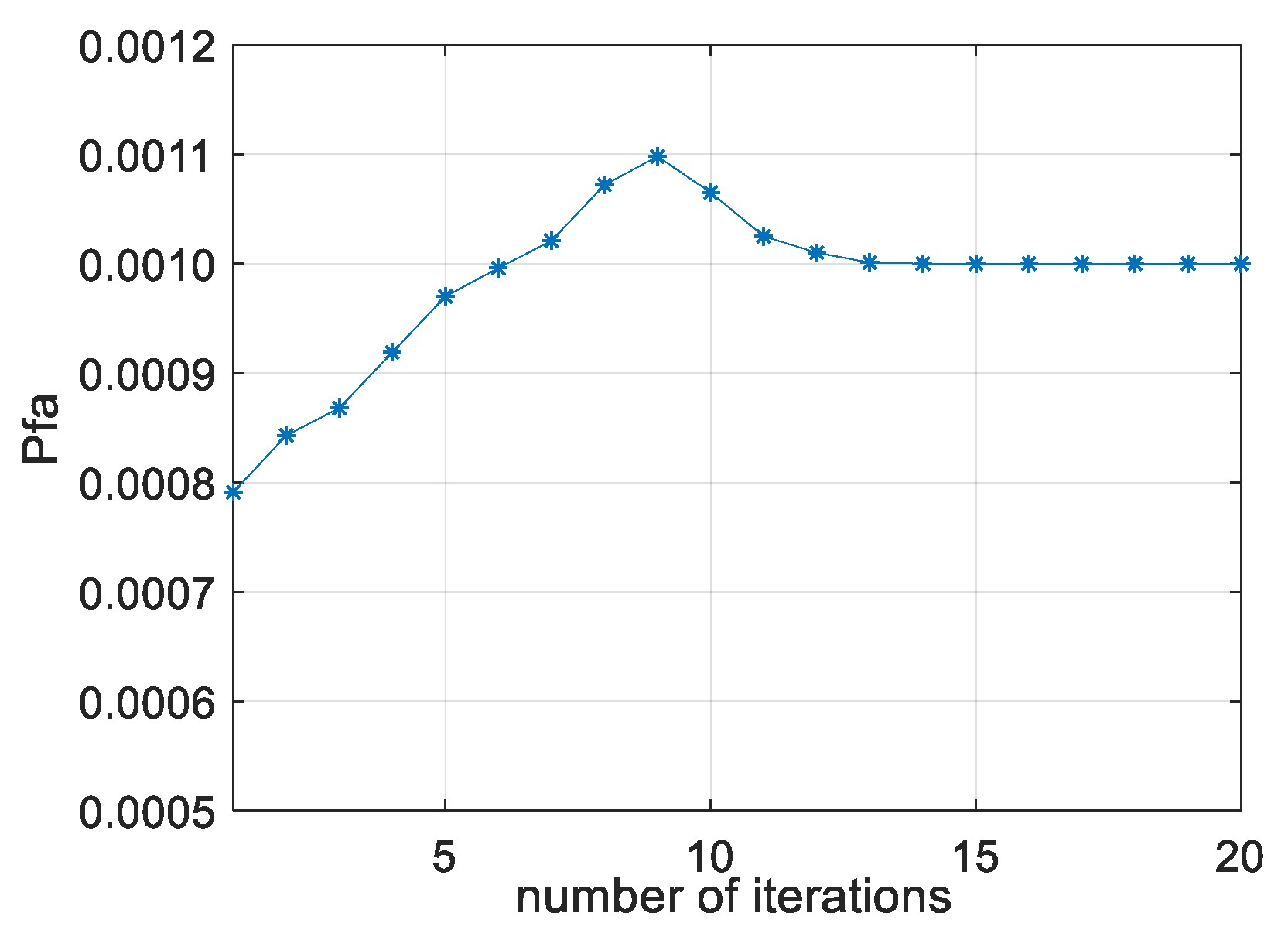

3.4. Control of False Alarms

- (1) Softmax output and sample probability representation

- (2) Output sample probability ranking

- (3) Detection threshold setting

- (4) Algorithm update and false alarm control process

- Step 1: Obtain radar echo data and perform feature extraction to generate a feature dataset.

- Step 2: Divide the feature dataset into a training set and a testing set.

- Step 3: Input the features from the training set into both the intra-domain and inter-domain networks and iteratively update the networks using the Adam algorithm.

- Step 4: Obtain the output sample probability based on the Softmax function of the inter-domain network and rank the sample probabilities.

- Step 5: Calculate the detection threshold based on the expected false alarm rate and the sample probability values.

- Step 6: Input the test set samples into the trained intra-domain and inter-domain networks, and complete the detection based on the obtained detection threshold.
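Steps 4 to 6 can be illustrated with a minimal sketch of threshold selection by probability ranking. It is not the authors' implementation: the function names, the choice to rank only clutter-labelled samples, and the example probabilities are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over one sample's class scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def threshold_from_ranking(clutter_target_probs, expected_pfa):
    """Return a threshold that at most a fraction `expected_pfa` of the
    given clutter samples exceed (strictly).

    Assumption: the ranking is done on the target-class probabilities of
    clutter-labelled samples; the section does not spell this out.
    """
    ranked = sorted(clutter_target_probs, reverse=True)  # largest first
    # Index of the first ranked sample that must not raise an alarm.
    # The small epsilon guards against float round-off in the product.
    index = int(expected_pfa * len(ranked) + 1e-9)
    index = min(index, len(ranked) - 1)
    return ranked[index]


def detect(target_prob, threshold):
    """Declare a target only when the probability exceeds the threshold."""
    return target_prob > threshold


# Hypothetical target-class probabilities for ten clutter-only samples.
clutter_probs = [0.05, 0.10, 0.20, 0.90, 0.30, 0.15, 0.60, 0.02, 0.08, 0.40]
threshold = threshold_from_ranking(clutter_probs, expected_pfa=0.2)
false_alarms = sum(detect(p, threshold) for p in clutter_probs)
print(threshold, false_alarms / len(clutter_probs))
```

Because the comparison is strict, ties at the threshold can only lower the realised false alarm rate, so the expected rate acts as an upper bound on the samples used to set it.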

4. Results

4.1. Description of the Measured Data

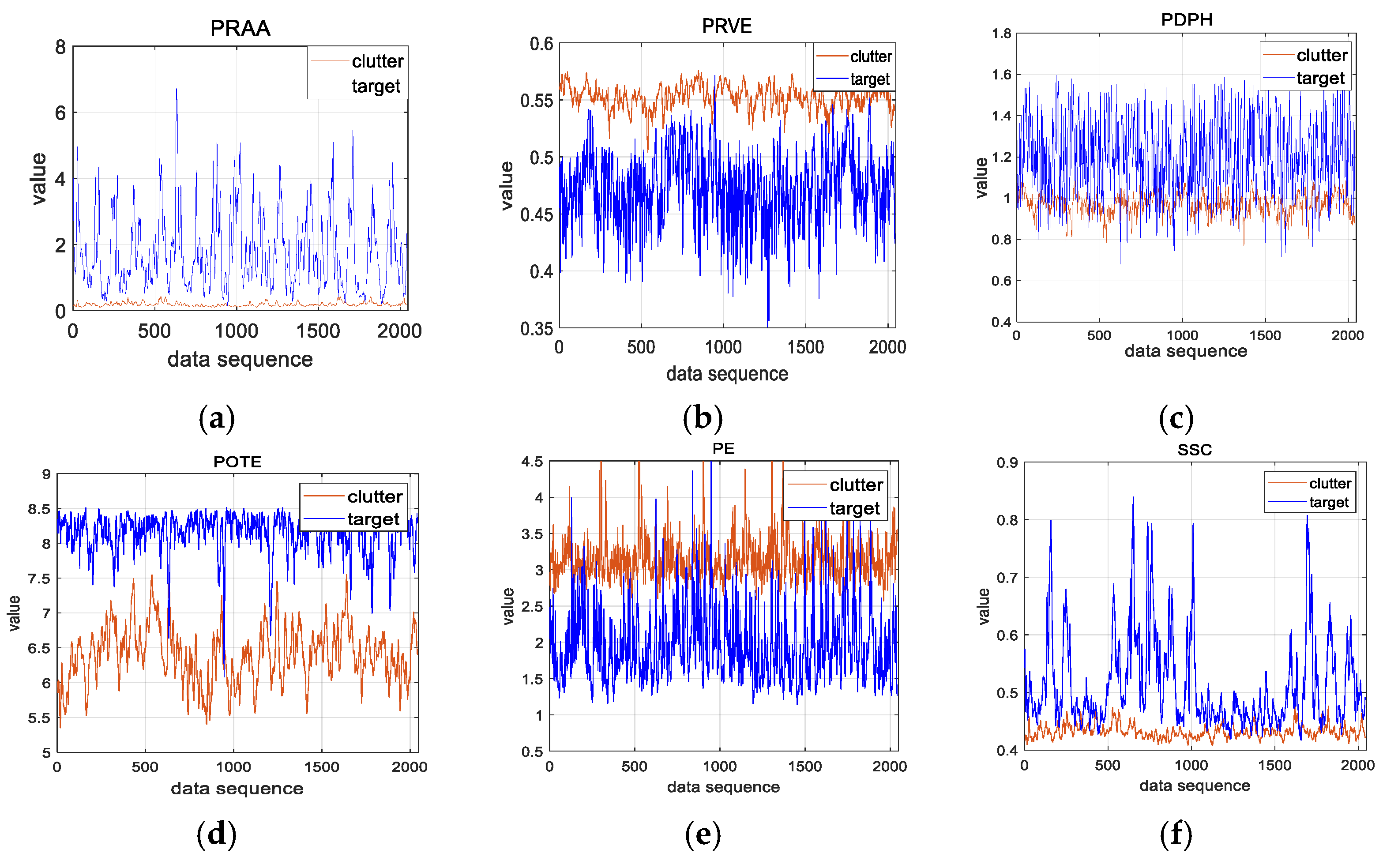

4.2. Separability Analysis of Features

4.3. Performance of the Proposed Method

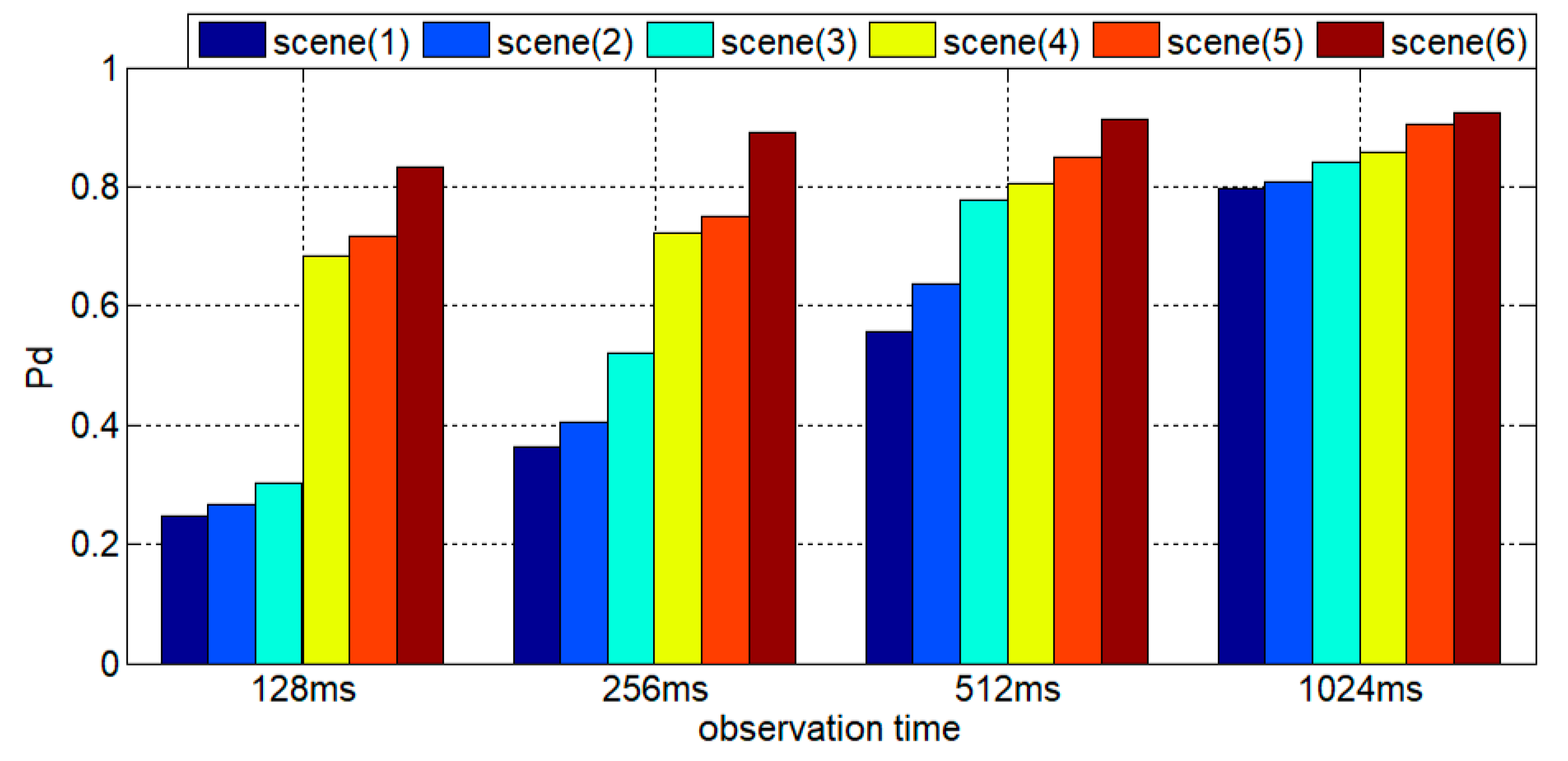

- (1) Impact of network constraints on algorithm performance

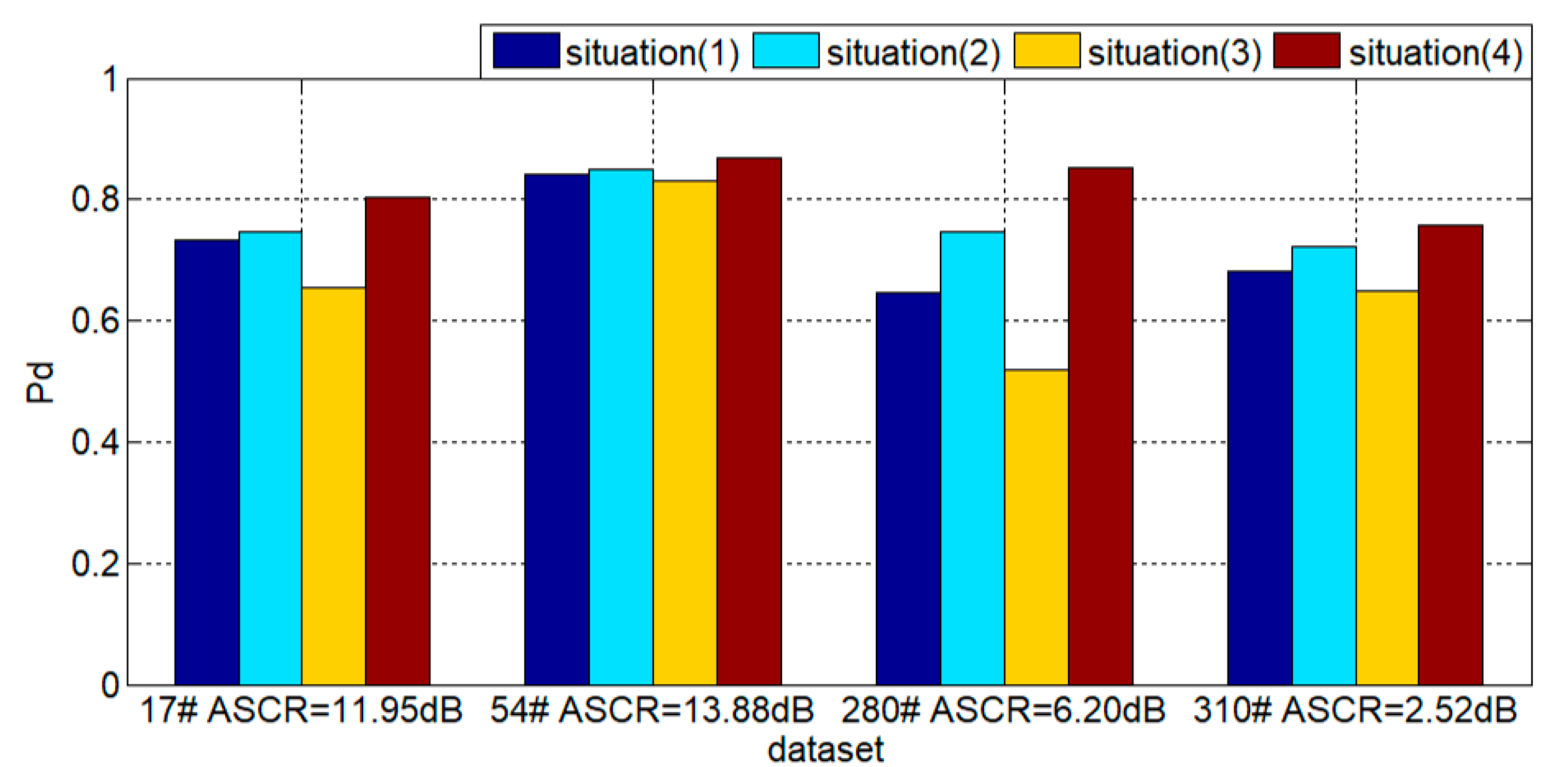

- (2) Impact of network parameters on algorithm performance

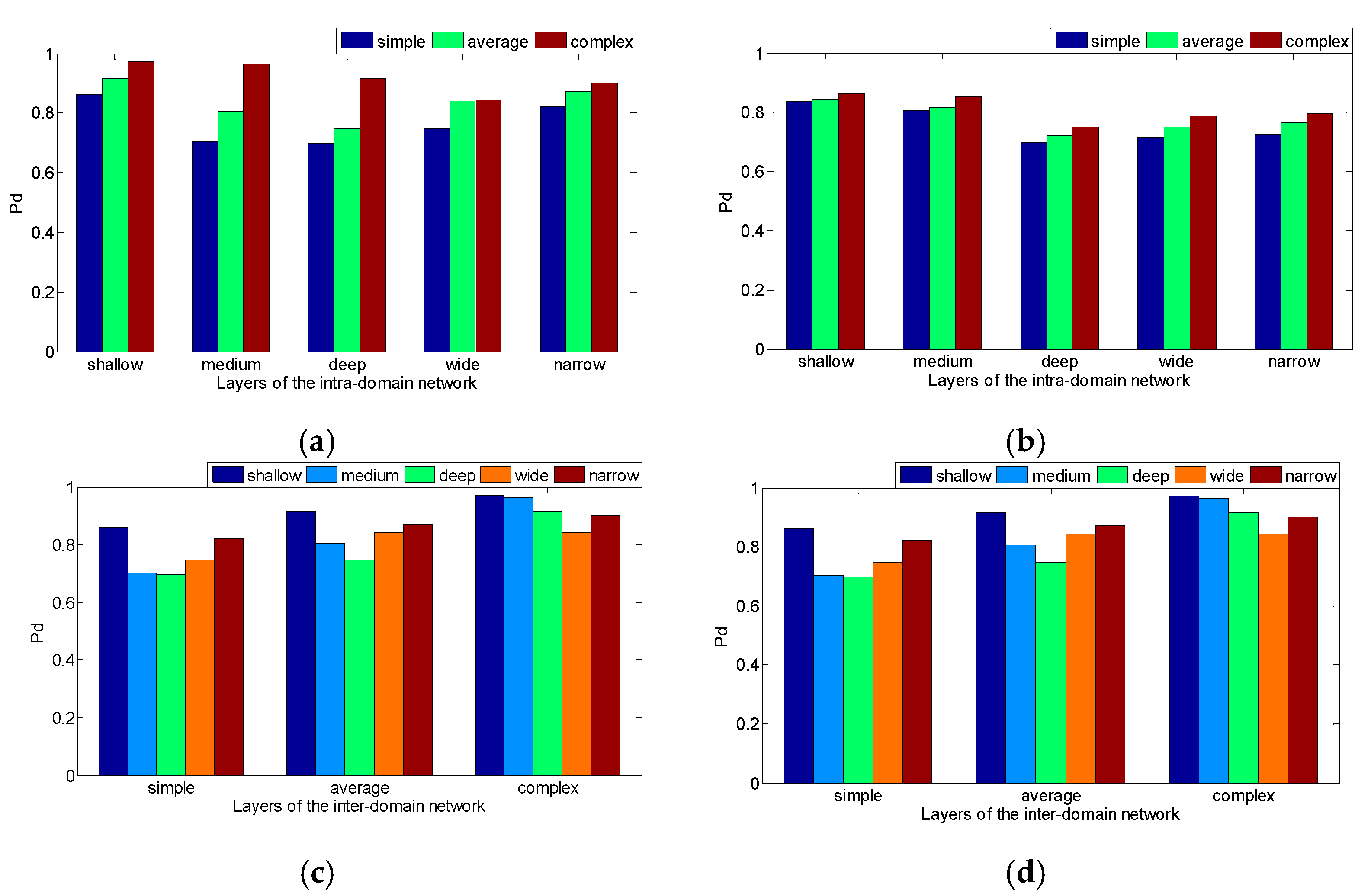

- (1) Discussion on the number of network layers

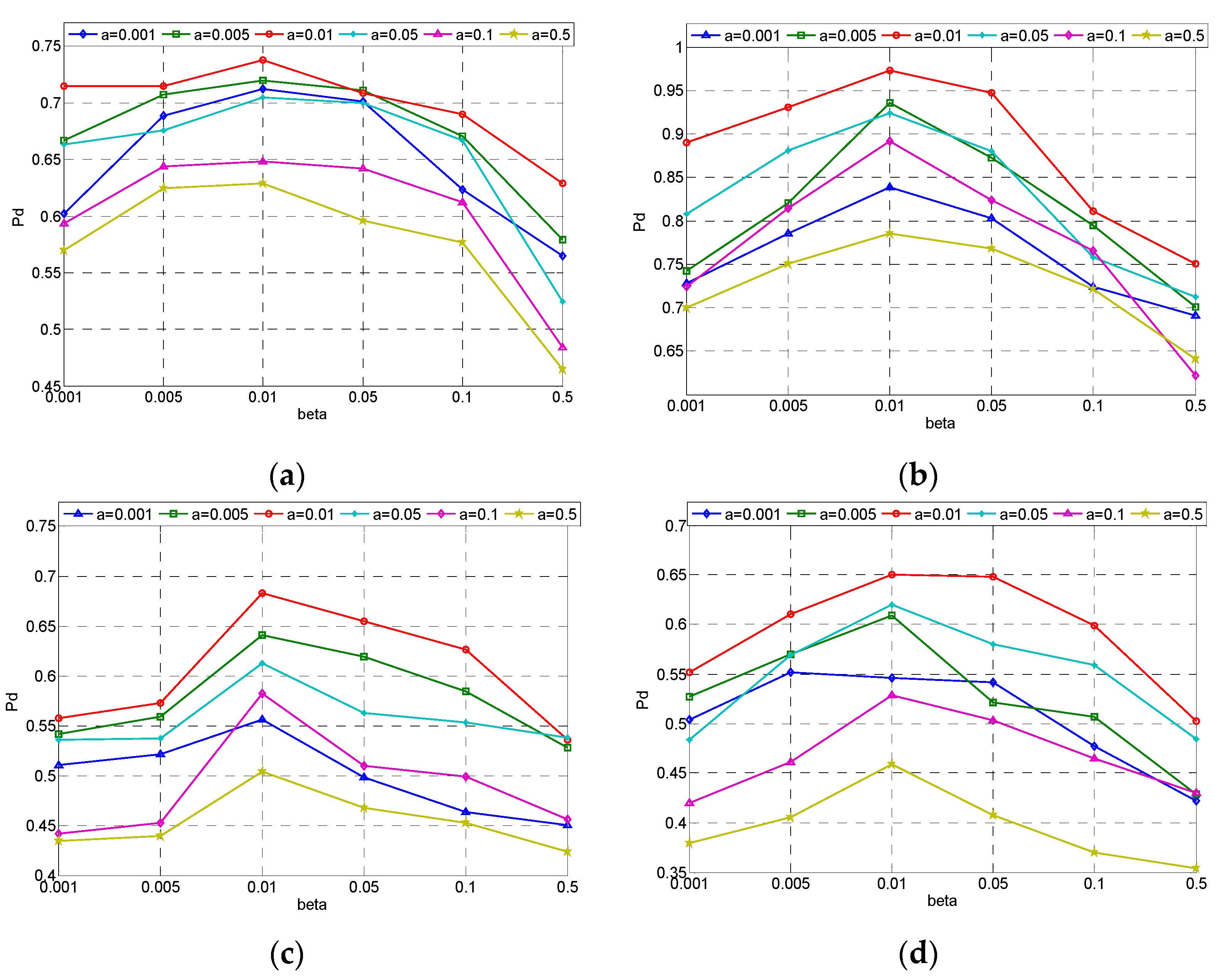

- (2) Discussion on α and β

5. Discussion

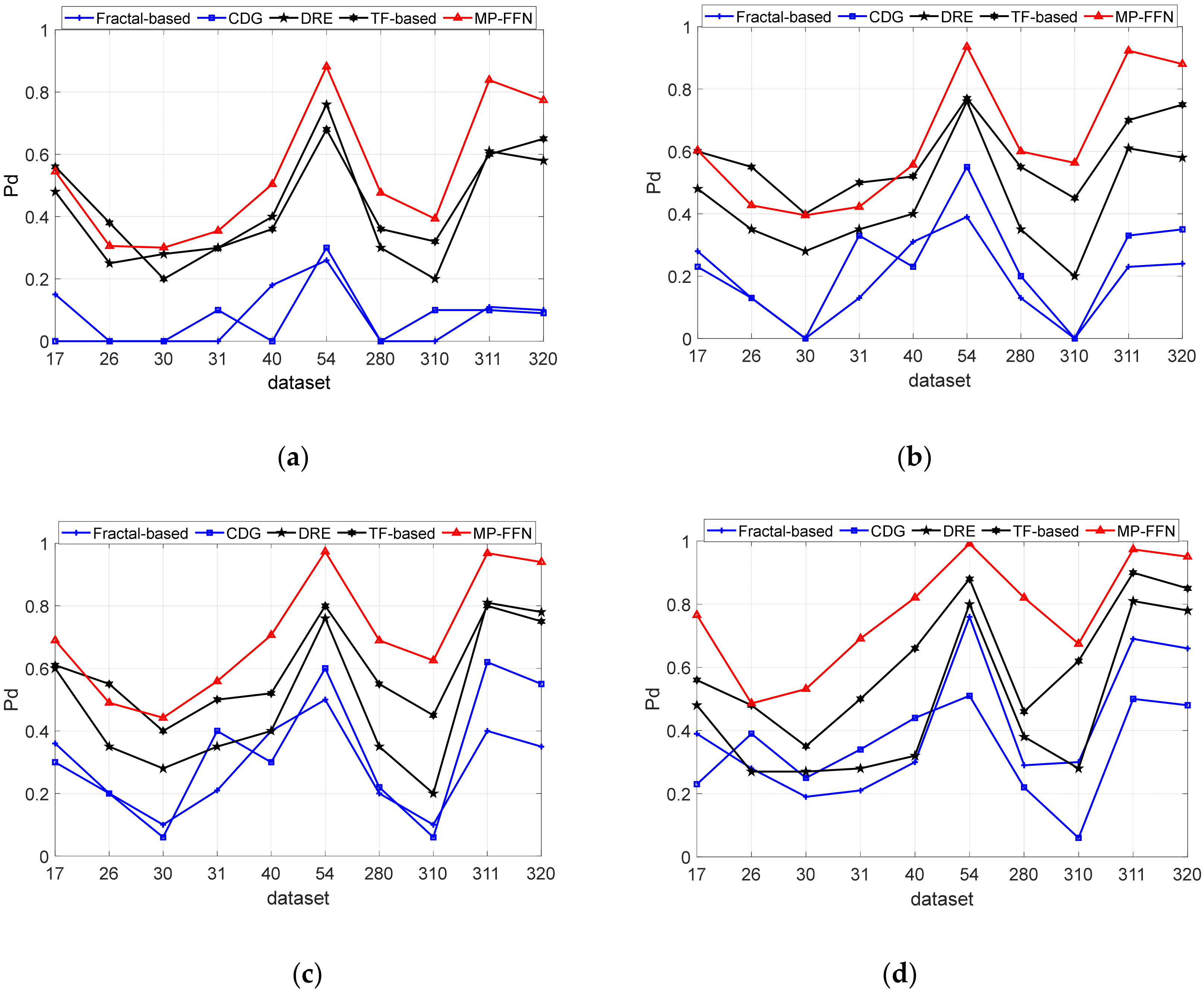

5.1. Comparison of Different Detection Methods

- (1) Comparison with classical methods for sea surface target detection

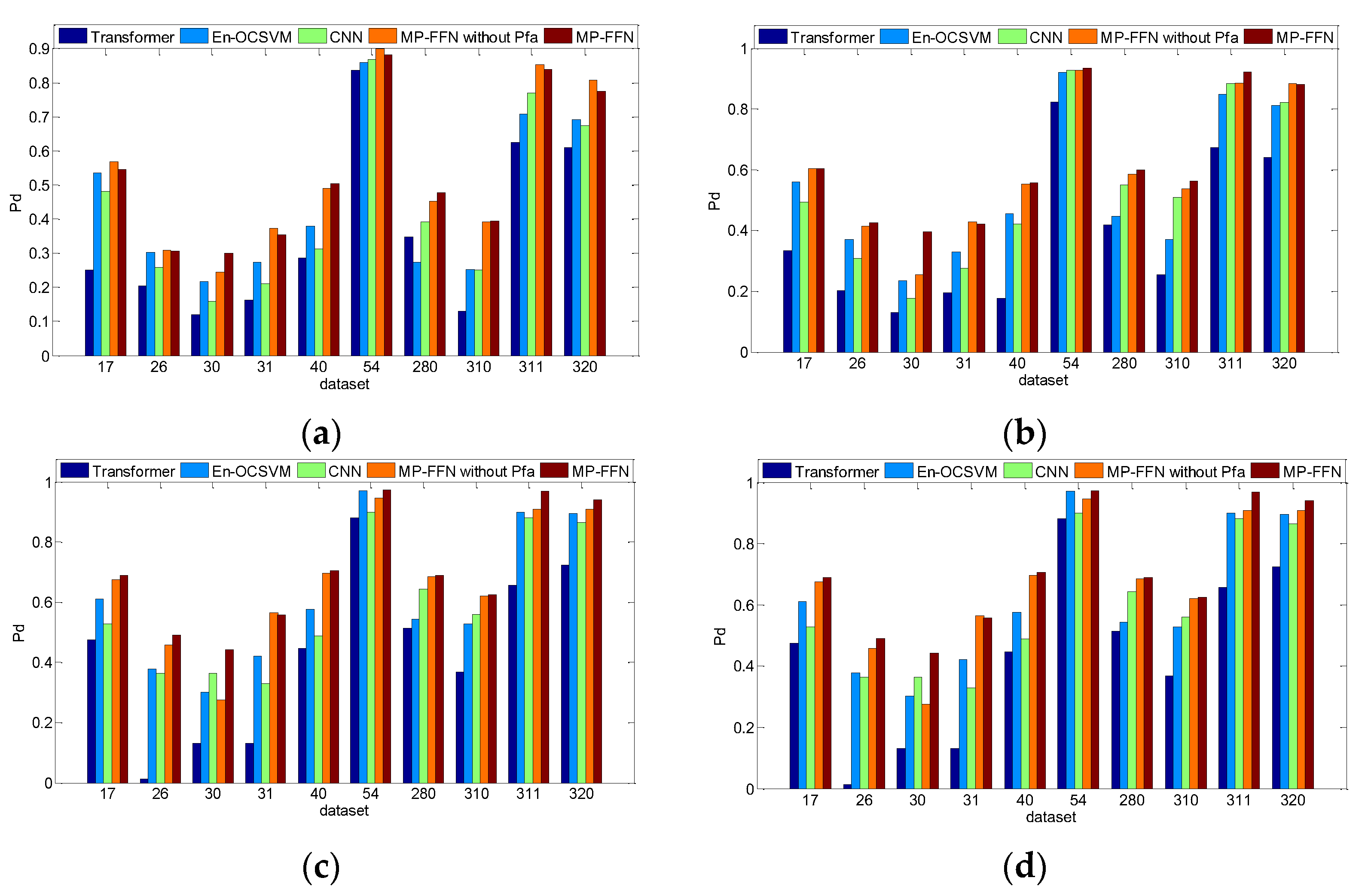

5.2. Comparison of Different Detection Methods Based on Feature Fusion

5.3. Stability of the Proposed Method

6. Conclusions

- The MP-FFN extracts six features from four distinct feature domains, adopting a full polarization perspective. Because individual features and feature domains behave very differently across datasets, fusing them improves detection performance by at least 10% under different SCRs compared with existing three-feature methods, methods based on time–frequency-domain features, and methods based on polarization-domain features;

- In MP-FFN, the intra-domain network minimizes feature redundancy and bolsters feature separability by computing intra-class and inter-class distances. Experimental results indicate a notable enhancement in feature separability after SAE fusion;

- The inter-domain network in the proposed method incorporates a dynamic threshold adjustment mechanism based on output probability ranking. By sorting the output results of the inter-domain network and setting an adaptive decision threshold, the detection performance can be improved by 10% compared to existing fusion algorithms, while satisfying the specified false alarm rate.
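The idea of judging feature separability from intra-class and inter-class distances can be sketched with a simple Fisher-style ratio. This is an assumed stand-in, not the separability measurement function used in MP-FFN; the function names and the toy feature vectors are hypothetical.

```python
def class_mean(samples):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def intra_class_scatter(samples):
    """Mean squared distance of the samples to their own class mean."""
    center = class_mean(samples)
    return sum(squared_distance(s, center) for s in samples) / len(samples)


def separability(clutter, target):
    """Inter-class distance divided by the summed intra-class scatter.

    Assumption: a Fisher-style ratio chosen for illustration only.
    Larger values mean the two classes are easier to separate.
    """
    inter = squared_distance(class_mean(clutter), class_mean(target))
    intra = intra_class_scatter(clutter) + intra_class_scatter(target)
    return inter / intra


# Hypothetical two-dimensional fused features.
clutter_features = [[0.0, 0.0], [0.0, 2.0]]
target_features = [[4.0, 1.0], [6.0, 1.0]]
score = separability(clutter_features, target_features)
print(score)
```

A fusion stage that pulls each class closer to its own mean while pushing the class means apart raises this ratio, which is the qualitative behaviour the conclusions attribute to the intra-domain network.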

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- He, Y.; Huang, Y.; Guan, J.; Liu, N. Research progress in radar maritime target detection technology. J. Signal Process. 2025, 41, 969–992.

- Guan, J. Summary of marine radar target characteristics. J. Radars 2020, 9, 674–683.

- Xu, S.; Bai, X.; Guo, Z.; Shui, P. Status and prospects of feature-based detection methods for floating targets on the sea surface. J. Radars 2020, 9, 684–714.

- Shi, Y.; Xie, X.; Li, D. Range distributed floating target detection in sea clutter via feature-based detector. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1847–1850.

- Wang, Z.; Xin, Z.; Liao, G.; Huang, P.; Xuan, J.; Sun, Y.; Tai, Y. Land-sea target detection and recognition in SAR image based on non-local channel attention network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Fan, Y.; Wang, X.; Chen, S.; Guo, Z.; Su, J.; Tao, M.; Wang, L. Sea Surface Weak Target Detection Based on Weighted Difference Visibility Graph. IEEE Geosci. Remote Sens. Lett. 2025, 22, 3503605.

- Fan, Y.; Luo, F.; Li, M.; Hu, C.; Chen, S. Fractal properties of autoregressive spectrum and its application on weak target detection. IET Radar Sonar Navig. 2015, 9, 1070–1077.

- Shui, P.; Li, D.; Xu, S. Tri-Feature-Based Detection of Floating Small Targets in Sea Clutter. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 1416–1430.

- Yan, K.; Wu, H.; Xiao, H.; Zhang, X. Novel robust band-limited signal detection approach using graphs. IEEE Commun. Lett. 2017, 21, 20–23.

- Shi, S.; Jiang, L.; Cao, D.; Zhang, Y. Sea-surface small target detection using entropy features with dual-domain clutter suppression. Remote Sens. Lett. 2022, 13, 1142–1152.

- Shi, S.; Zhang, R.; Wang, J.; Li, T. Dual-Channel Detection of Sea-Surface Small Targets in Recurrence Plot. IEEE Geosci. Remote Sens. Lett. 2025, 22, 3503005.

- Gu, T. Detection of Small Floating Targets on the Sea Surface Based on Multi-Features and Principal Component Analysis. IEEE Geosci. Remote Sens. Lett. 2020, 17, 809–813.

- Ruff, L.; Vandermeulen, R.; Goernitz, N.; Deecke, L.; Siddiqui, S.A.; Binder, A.; Müller, E.; Kloft, M. Deep One-Class Classification. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

- Zhang, X.; Li, P.; Cai, C. Regional Urban Extent Extraction Using Multi-Sensor Data and One-Class Classification. Remote Sens. 2015, 7, 7671–7694.

- Maaten, L.; Hinton, G. Visualizing Data Using T-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Villeval, S.; Bilik, I.; Gürbuz, S.Z. Application of a 24 GHz FMCW Automotive Radar for Urban Target Classification. In Proceedings of the 2014 IEEE Radar Conference, Cincinnati, OH, USA, 19–23 May 2014.

- Chen, S.; Luo, F.; Luo, X. Multi-view Feature-based Sea Surface Small Target Detection in Short Observation Time. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1189–1193.

- Chen, S.; Ouyang, X.; Luo, F. Ensemble One-class Support Vector Machine for Sea Surface Target Detection Based on K-means Clustering. Remote Sens. 2024, 16, 2401.

- Wang, Z.; Hou, G.; Xin, Z.; Liao, G.; Huang, P.; Tai, Y. Detection of SAR image multiscale ship targets in complex inshore scenes based on improved YOLOv5. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5804–5823.

- Shi, Y.; Tao, P.; Xu, S. Small float target detection in sea clutter based on WGAN-GP-CNN. Signal Process. 2024, 40, 1082–1097.

- Chen, K.; Liu, X.; Shen, C. Transformer-based Radar/Lidar/Camera Fusion Solution for 3D Object Detection. Flight Control Detect. 2025, 8, 82–92.

- Shi, S.; Shui, P. Sea-surface Floating small target detection by one-class classifier in time-frequency feature space. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6395–6411.

- Anderson, S.J.; Morris, J.T. Aspect Dependence of the Polarimetric Characteristics of Sea Clutter: II. Variation with Azimuth Angle. In Proceedings of the 2008 International Conference on Radar, Adelaide, SA, Australia, 2–5 September 2008.

- An, W.; Cui, Y.; Zhang, W.; Yang, J. Data Compression for Multilook Polarimetric SAR Data. IEEE Geosci. Remote Sens. Lett. 2009, 6, 476–480.

- An, W.; Cui, Y.; Yang, J. Three-Component Model-Based Decomposition for Polarimetric SAR Data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2732–2739.

- Gao, Y.; Zhou, Y.; Wang, Y.; Zhuo, Z. Narrowband Radar Automatic Target Recognition Based on a Hierarchical Fusing Network with Multidomain Features. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1039–1043.

- Zhang, W.; Du, L.; Li, L.; Zhang, X.; Liu, H. Infinite Bayesian One-Class Support Vector Machine Based on Dirichlet Process Mixture Clustering. Pattern Recognit. 2018, 78, 56–78.

| : Polarization mode, . : The serial number of the sample to be tested. : The -th radar echo sample. : Probability of . : Non-extensive parameter. : Calculation of extracted features, . : Loss function. : Distance between samples. : The weight of the reconstruction loss in the intra-domain network. : The weight of the proportional loss in the intra-domain network. : The serial number of sample categories. : The serial number of neurons. : The serial number of hidden-layer features. : The number of samples labeled as . : The hidden layer feature labeled as k at the c-th position. : The empirical parameter of activation function. : Activation function of the Leaky ReLU. : The probability of the i-th sample being classified as belonging to class c. : The total number of samples in the test set. : Detection threshold. : Index value corresponding to the detection threshold under a given false alarm rate. : The penalty term for all parameters in the network. : The separability measurement function. |

| No | Dataset | TC | PC | WS (km/h) | SWH (m) | ASCR (dB) |
|---|---|---|---|---|---|---|
| 1 | 1993_17 | 9 | 8:11 | 9 | 2.2 | 11.95 |
| 2 | 1993_26 | 7 | 6:8 | 9 | 1.1 | 6.43 |
| 3 | 1993_30 | 7 | 6:8 | 19 | 0.9 | 2.96 |
| 4 | 1993_31 | 7 | 6:9 | 19 | 0.9 | 8.03 |
| 5 | 1993_40 | 7 | 5:8 | 9 | 1.0 | 11.39 |
| 6 | 1993_54 | 8 | 7:10 | 20 | 0.7 | 13.88 |
| 7 | 1993_280 | 8 | 7:10 | 10 | 1.6 | 6.20 |
| 8 | 1993_310 | 7 | 6:9 | 33 | 0.9 | 2.52 |
| 9 | 1993_311 | 7 | 6:9 | 33 | 0.9 | 11.38 |
| 10 | 1993_320 | 7 | 6:9 | 28 | 0.9 | 10.64 |

| Observation Time | Pure Clutter Sample | Target Sample | Training Sample | Test Sample |
|---|---|---|---|---|
| 128 ms | 10,240 | 1024 | 67,584 | 45,056 |
| 256 ms | 5120 | 512 | 33,792 | 22,528 |
| 512 ms | 2560 | 256 | 16,896 | 11,264 |
| 1024 ms | 1280 | 128 | 8448 | 5632 |
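The sample counts in this table are internally consistent, which a short arithmetic check makes visible. The sketch below assumes that the clutter and target columns are per-dataset counts and that all ten listed datasets are pooled before splitting; neither is stated here. The 131,072 pulses per range cell and 1 kHz pulse repetition frequency used in the last line are also assumptions, included only because they reproduce the target column with non-overlapping windows.

```python
# (observation time in ms, pure clutter, target, training, test), from the table.
ROWS = [
    (128, 10240, 1024, 67584, 45056),
    (256, 5120, 512, 33792, 22528),
    (512, 2560, 256, 16896, 11264),
    (1024, 1280, 128, 8448, 5632),
]
N_DATASETS = 10  # number of datasets listed in the measured-data table


def check_row(clutter, target, train, test):
    """Compare pooled per-dataset counts with the train/test totals."""
    pooled = N_DATASETS * (clutter + target)
    return {
        "pooled": pooled,
        "matches_split": pooled == train + test,
        "train_fraction": train / (train + test),
    }


def windows_per_cell(n_pulses, prf_hz, obs_ms):
    """Number of non-overlapping observation windows in one range cell."""
    pulses_per_window = prf_hz * obs_ms // 1000
    return n_pulses // pulses_per_window


checks = {obs: check_row(c, t, tr, te) for obs, c, t, tr, te in ROWS}
for obs, result in checks.items():
    print(obs, result)

# Assumed record length and PRF (not given in this section).
print(windows_per_cell(n_pulses=131072, prf_hz=1000, obs_ms=128))
```

Under these assumptions every row pools to the stated training plus test total with a 60/40 split, and each doubling of the observation time halves the number of samples.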

| Feature | 17 | 54 | 280 | 310 |
|---|---|---|---|---|
| PRAA | 0.1446 | 0.8015 | 0.2717 | 0.1737 |
| PRVE | 0.2711 | 1.7218 | 0.3128 | 0.2208 |
| PDPH | 0.6513 | 1.8952 | 0.3975 | 0.6177 |
| POTE | 0.5815 | 4.5309 | 0.5457 | 0.1891 |
| Direct splicing | 1.3085 | 6.5120 | 0.9781 | 0.8557 |

| Settings | Number | Feature Selection | Feature Domain |
|---|---|---|---|
| Scene (1) | 1 | PRAA | Time |
| Scene (2) | 1 | PRVE | Frequency |
| Scene (3) | 2 | PRVE, PDPH | Frequency |
| Scene (4) | 3 | PRAA, PRVE, PDPH | Time and frequency |
| Scene (5) | 4 | PRAA, PRVE, PDPH, POTE | Time, frequency, and fractal |
| Scene (6) | 6 | PRAA, PRVE, PDPH, POTE, PE, SSC | Time, frequency, fractal, and polarization |

| Observation Time | 128 ms | 256 ms | 512 ms | 1024 ms |
|---|---|---|---|---|
| Scene (1) | 0.2466 | 0.3631 | 0.5557 | 0.7983 |
| Scene (2) | 0.2662 | 0.4059 | 0.6365 | 0.8081 |
| Scene (3) | 0.3028 | 0.5215 | 0.7772 | 0.8401 |
| Scene (4) | 0.6838 | 0.7237 | 0.8066 | 0.8573 |
| Scene (5) | 0.7155 | 0.7506 | 0.8494 | 0.9041 |
| Scene (6) | 0.8339 | 0.8900 | 0.9130 | 0.9250 |

| Settings | 17 | 54 | 280 | 310 |
|---|---|---|---|---|
| Situation (1) | 0.7332 | 0.8421 | 0.6450 | 0.6818 |
| Situation (2) | 0.7479 | 0.8507 | 0.7479 | 0.7222 |
| Situation (3) | 0.6536 | 0.8311 | 0.5177 | 0.6499 |
| Situation (4) | 0.8042 | 0.8690 | 0.8519 | 0.7576 |

| Type | Number of Layers | Number of Neurons per Layer |
|---|---|---|
| Shallow | 1 | 4 |
| Medium | 2 | 8, 4 |
| Deep | 3 | 16, 8, 4 |
| Wide | 1 | 16 |
| Narrow | 1 | 8 |

| Type | Number of Layers | Number of Neurons per Layer |
|---|---|---|
| Simple | 1 | 8 |
| Average | 2 | 16, 8 |
| Complex | 3 | 32, 16, 8 |

| Methods | Pd | Pfa | Cr |
|---|---|---|---|
| En-OCSVM [18] | 0.4493 | 0.0544 | 0.8231 |
| CNN [20] | 0.4376 | 0.0053 | 0.9439 |
| Transformer [21] | 0.3576 | 0.0063 | 0.9372 |
| MP-FFN without Pfa | 0.5388 | 0.0026 | 0.9621 |
| MP-FFN | 0.5375 | 0.0010 | 0.9060 |

| Methods | Pd | Pfa | Cr |
|---|---|---|---|
| En-OCSVM [18] | 0.5352 | 0.0567 | 0.8433 |
| CNN [20] | 0.5710 | 0.0062 | 0.9536 |
| Transformer [21] | 0.3850 | 0.0043 | 0.9416 |
| MP-FFN without Pfa | 0.6076 | 0.0025 | 0.9671 |
| MP-FFN | 0.6317 | 0.0010 | 0.9289 |
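For readers unfamiliar with the Pd and Pfa columns, the sketch below shows how a detection probability and a false alarm rate are conventionally computed from per-sample decisions. The function name and the toy labels are hypothetical. The overall accuracy is included only as one plausible reading of the Cr column, which this section does not define, so it should not be taken as the authors' formula.

```python
def detection_metrics(labels, decisions):
    """Compute detection probability, false alarm rate, and accuracy.

    labels:    1 for a target sample, 0 for a pure clutter sample.
    decisions: True when the detector declared a target.
    """
    n_target = sum(1 for y in labels if y == 1)
    n_clutter = len(labels) - n_target
    hits = sum(1 for y, d in zip(labels, decisions) if y == 1 and d)
    false_alarms = sum(1 for y, d in zip(labels, decisions) if y == 0 and d)
    correct = hits + (n_clutter - false_alarms)
    return {
        "pd": hits / n_target,           # detected targets / all targets
        "pfa": false_alarms / n_clutter, # alarms on clutter / all clutter
        "accuracy": correct / len(labels),
    }


# Hypothetical test set: 4 target samples followed by 10 clutter samples.
labels = [1, 1, 1, 1] + [0] * 10
decisions = [True, True, False, False] + [True] + [False] * 9
metrics = detection_metrics(labels, decisions)
print(metrics)
```

Because clutter samples heavily outnumber target samples in this kind of data, accuracy is dominated by the clutter class, which is why Pd at a fixed Pfa is usually the more informative comparison.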

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, S.; Wu, Y.; Sun, W.; Yu, H.; Luo, F. Target Detection in Sea Clutter Background via Deep Multi-Domain Feature Fusion. Remote Sens. 2025, 17, 3213. https://doi.org/10.3390/rs17183213
