Deep Learning Applications for Crop Mapping Using Multi-Temporal Sentinel-2 Data and Red-Edge Vegetation Indices: Integrating Convolutional and Recurrent Neural Networks

Highlights

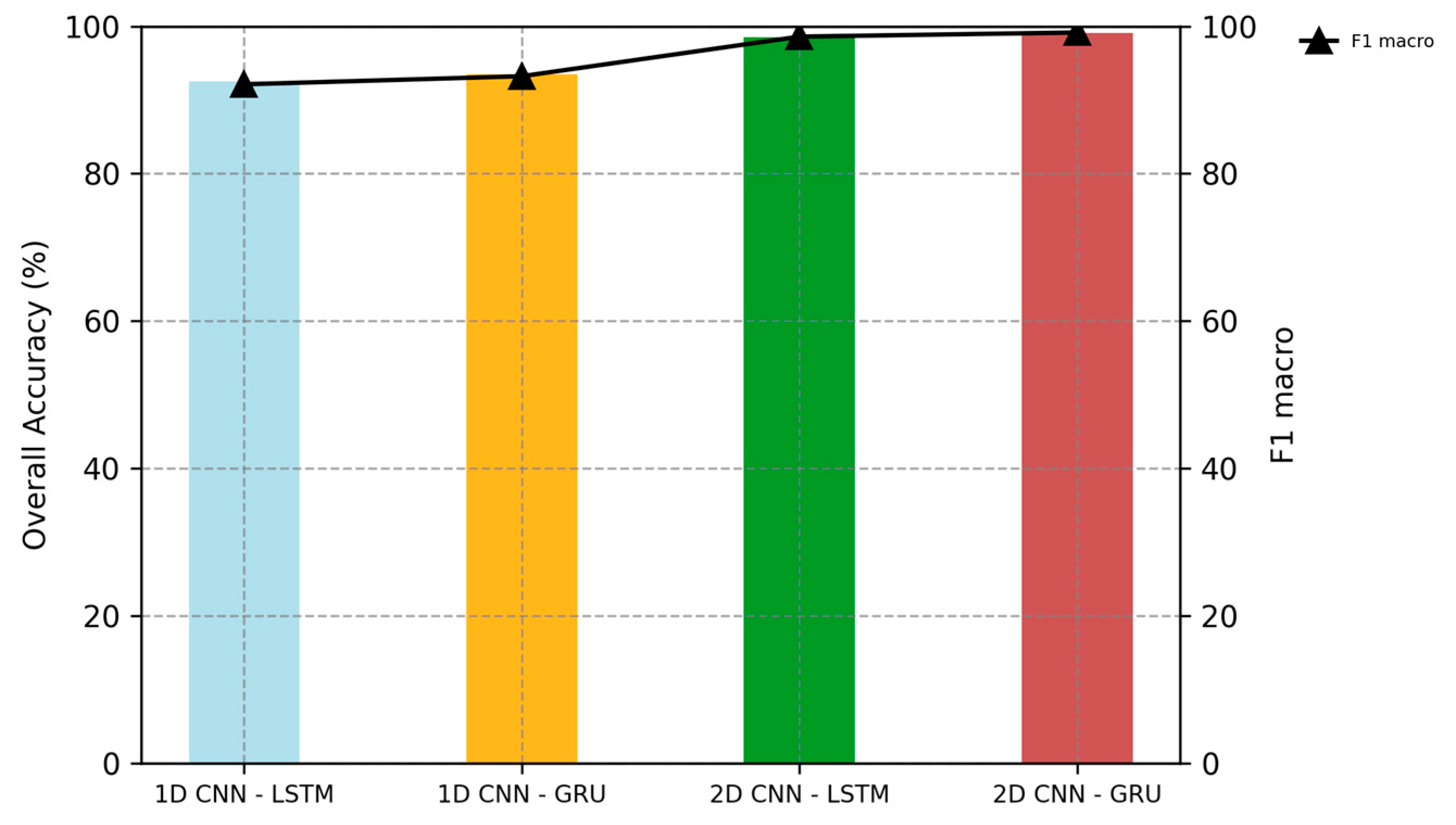

- Benchmark of four hybrid CNN-RNNs (1D/2D × LSTM/GRU) on Sentinel-2; 2D CNN-GRU leads (OA 99.12%, macro-F1 99.14%).

- Combining spatial context (2D CNN) with temporal phenology (RNN) outperforms 1D and stand-alone models.

- Hybrid spatiotemporal modeling with NDVI/red-edge indices enables accurate, operational crop mapping.

- Reporting accuracy and efficiency supports practical deployment and reproducibility.

Abstract

1. Introduction

- Test advanced deep learning methods on large-scale Sentinel-2 datasets, addressing the challenges of scalability and data complexity.

- Evaluate the potential of combining CNNs and RNNs to handle the complexity of multi-temporal remote sensing data for crop classification.

- Assess how the temporal richness and spectral diversity of Sentinel-2 imagery, including red-edge indices, affect classification accuracy.

- Develop a workflow in which 1D CNNs extract spectral and temporal features, 2D CNNs capture spatial patterns, and RNNs (LSTM/GRU) model temporal dependencies.

- Compare the performance of the hybrid 1D and 2D CNN-LSTM and CNN-GRU models for crop type classification.
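
The difference between the 1D and 2D branches in this workflow is mainly a difference in input shape. The following minimal sketch illustrates it on a synthetic image stack. The layout `stack[t][row][col][feature]`, the function names, and the toy dimensions are all assumptions made for illustration and are not taken from the study.

```python
from typing import List

# Hypothetical layout: stack[t][row][col][feature]
Stack = List[List[List[List[float]]]]


def make_stack(n_dates: int, height: int, width: int, n_features: int) -> Stack:
    """Build a synthetic stack whose values encode their own position."""
    return [
        [
            [
                [float(t * 1000 + r * 100 + c * 10 + f) for f in range(n_features)]
                for c in range(width)
            ]
            for r in range(height)
        ]
        for t in range(n_dates)
    ]


def pixel_series(stack: Stack, row: int, col: int) -> List[List[float]]:
    """1D CNN input: one feature vector per date for a single pixel, shape (T, F)."""
    return [date[row][col] for date in stack]


def patch_series(stack: Stack, row: int, col: int, half: int):
    """2D CNN input: a square neighborhood per date, shape (T, P, P, F).

    P = 2 * half + 1. The patch is assumed to lie fully inside the image;
    no border padding is attempted here.
    """
    return [
        [line[col - half: col + half + 1] for line in date[row - half: row + half + 1]]
        for date in stack
    ]


stack = make_stack(n_dates=4, height=5, width=5, n_features=3)
series = pixel_series(stack, 2, 2)        # 4 dates x 3 features
patches = patch_series(stack, 2, 2, 1)    # 4 dates x 3 x 3 pixels x 3 features
```

In both cases the time axis comes first, so the recurrent layers can iterate over dates whether the per-date features come from a single pixel or from a spatial patch.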

2. Materials and Methods

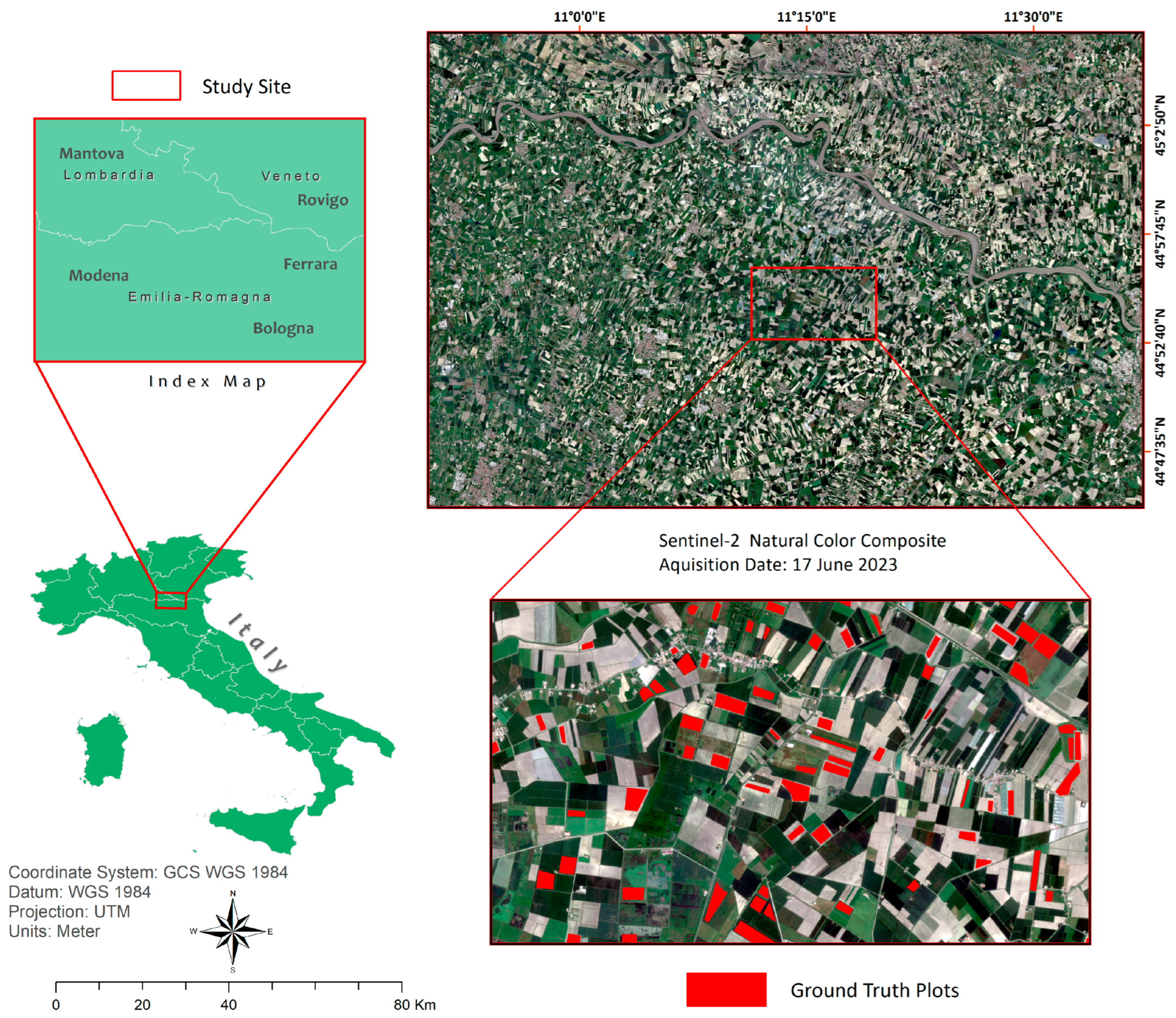

2.1. Study Area

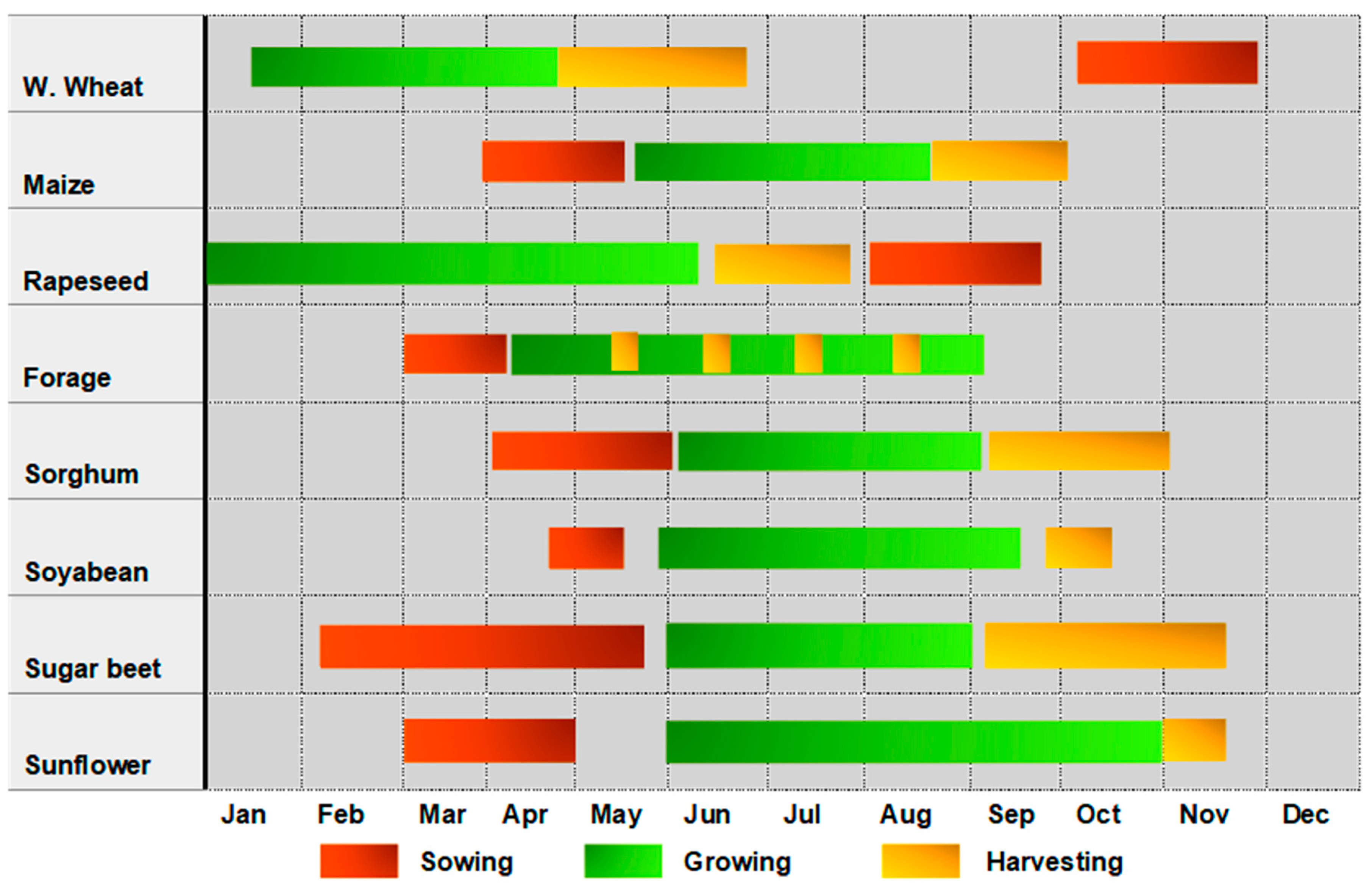

2.2. Data Resources

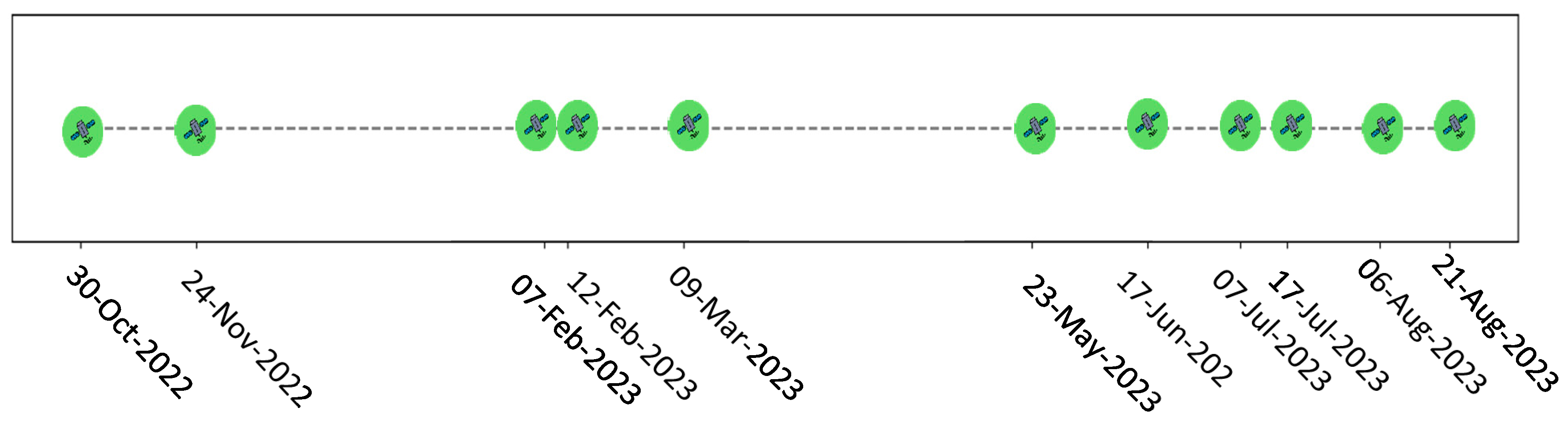

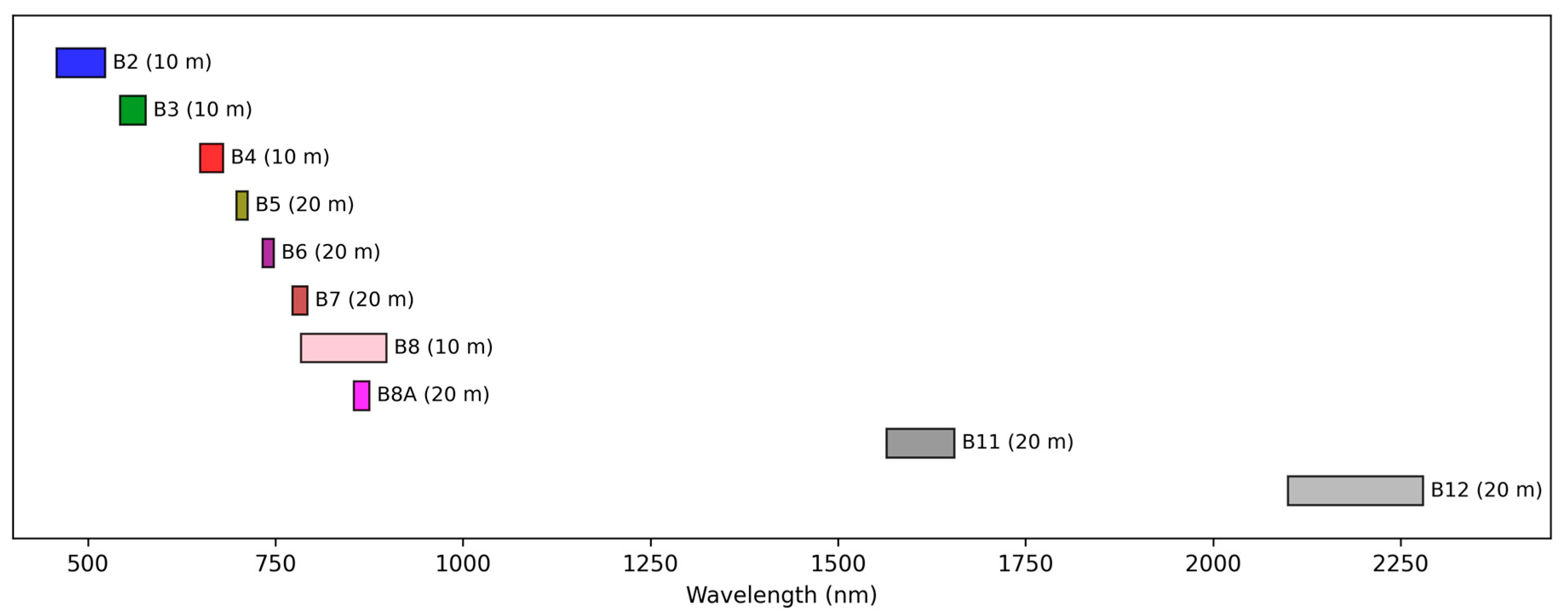

2.2.1. Satellite Data Acquisition

2.2.2. Ground Truth Data for Training, Validation, and Testing

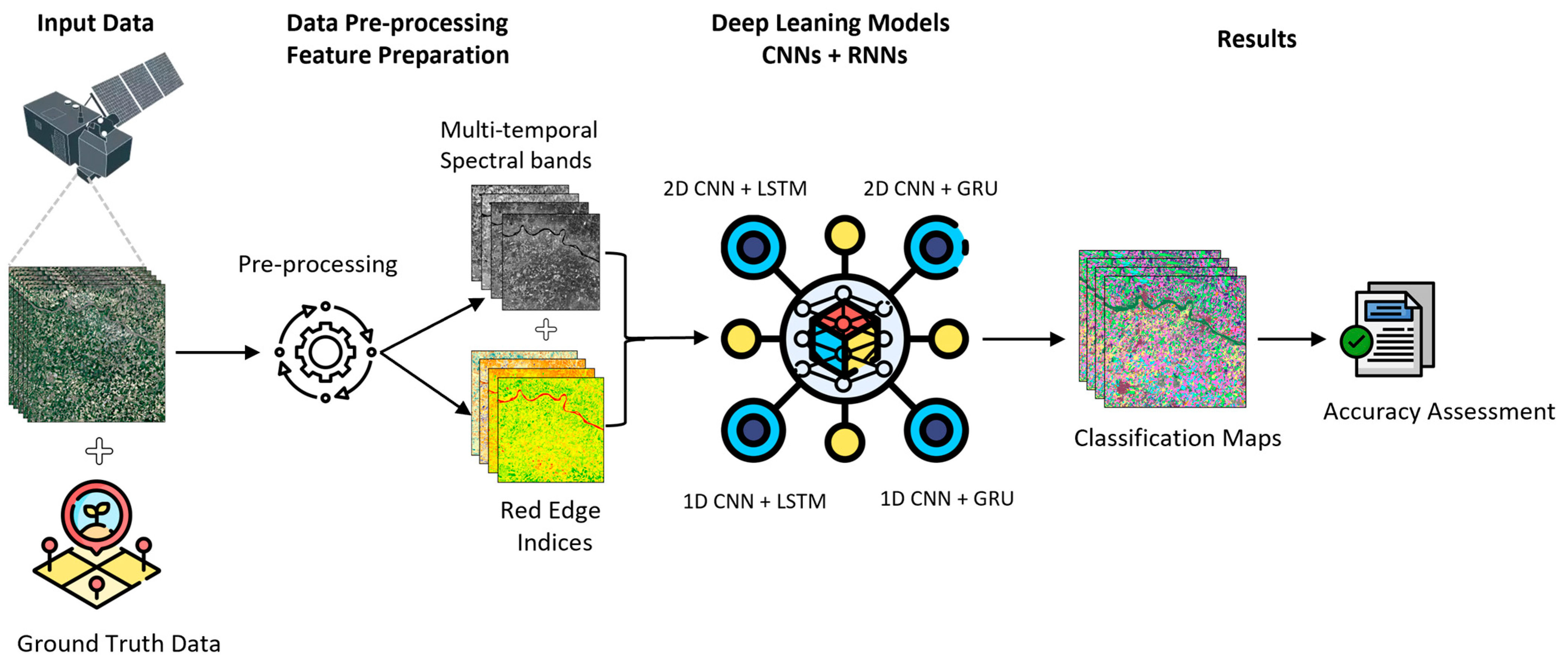

2.3. Methodological Overview

2.4. Data Preprocessing

2.5. Feature Preparation for Classification

2.6. Deep Learning Models

2.6.1. Convolutional Neural Networks (1D & 2D)

2.6.2. Recurrent Neural Networks (LSTM & GRU)

2.6.3. Proposed Hybrid CNN-RNN Architectures

2.6.4. Sample Design and Classifier Training

2.7. Evaluation Model

3. Results

3.1. Performance Analysis of Classification Models

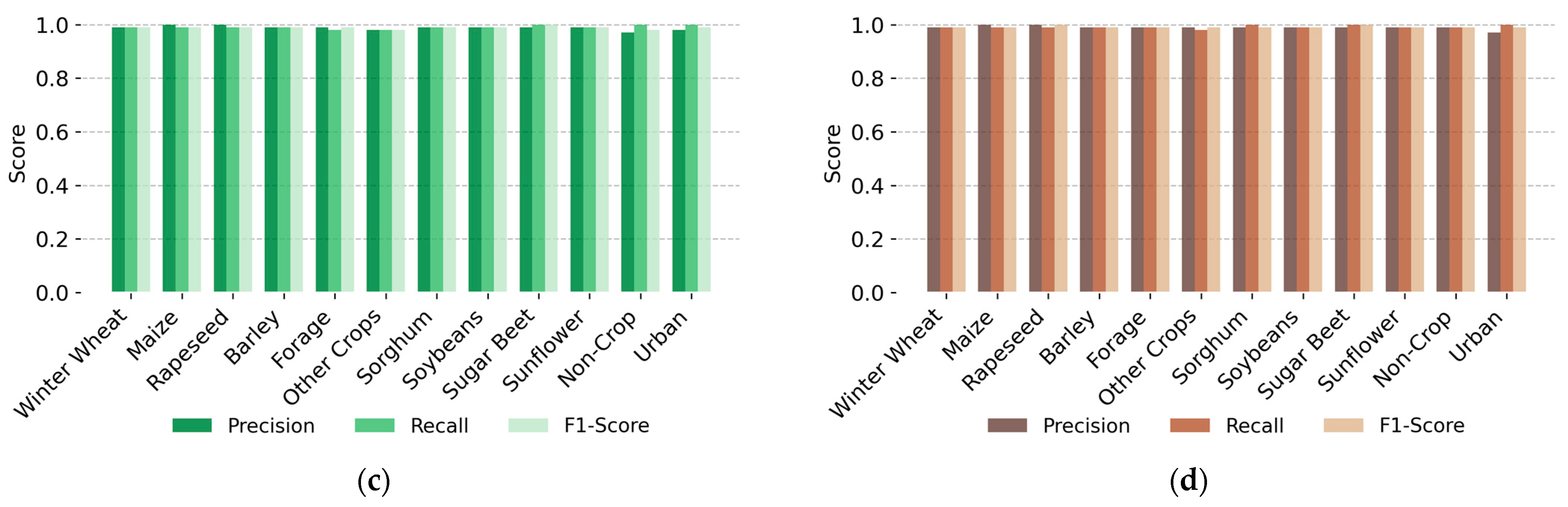

3.2. Evaluation Metrics and Per-Class Analysis

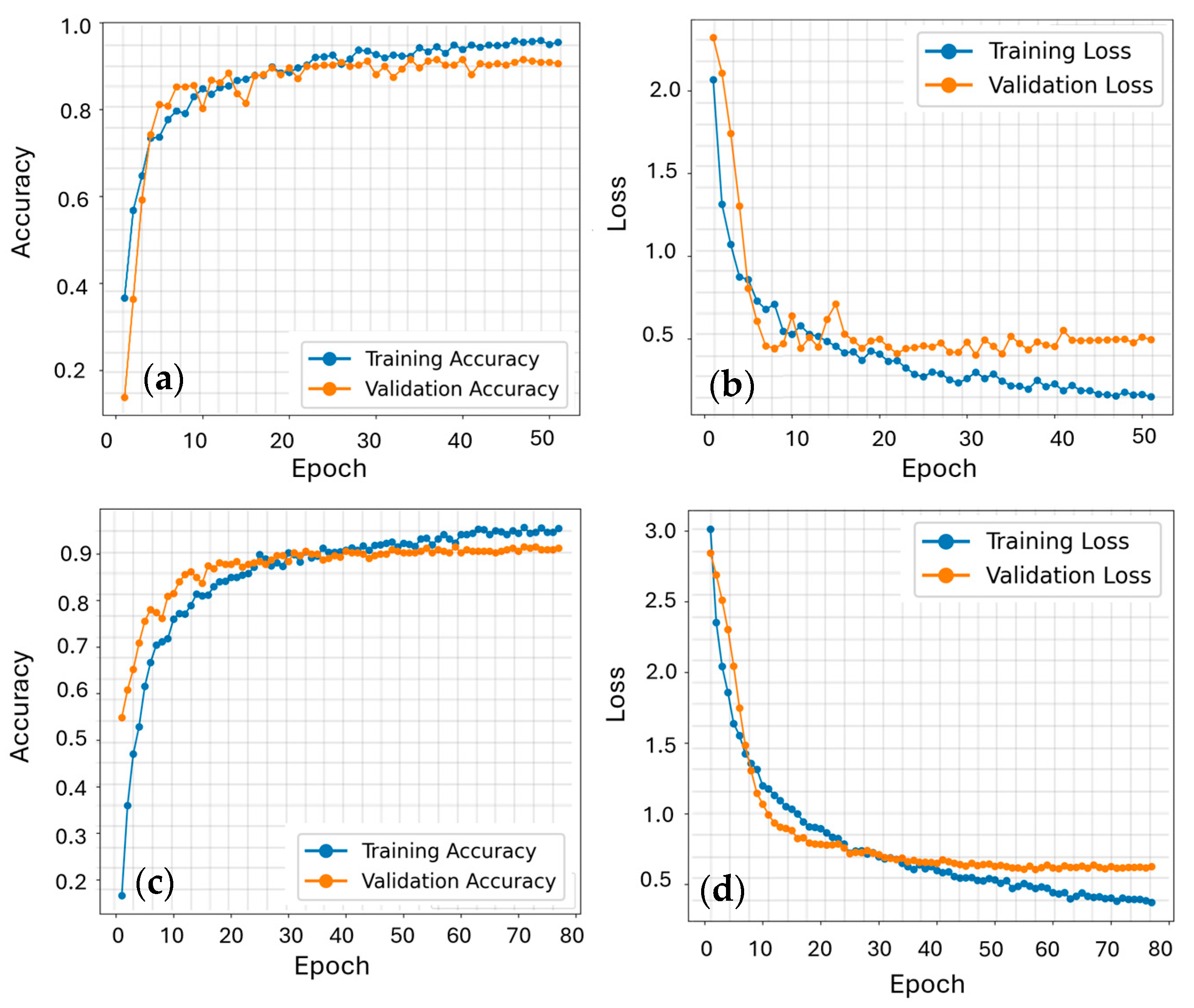

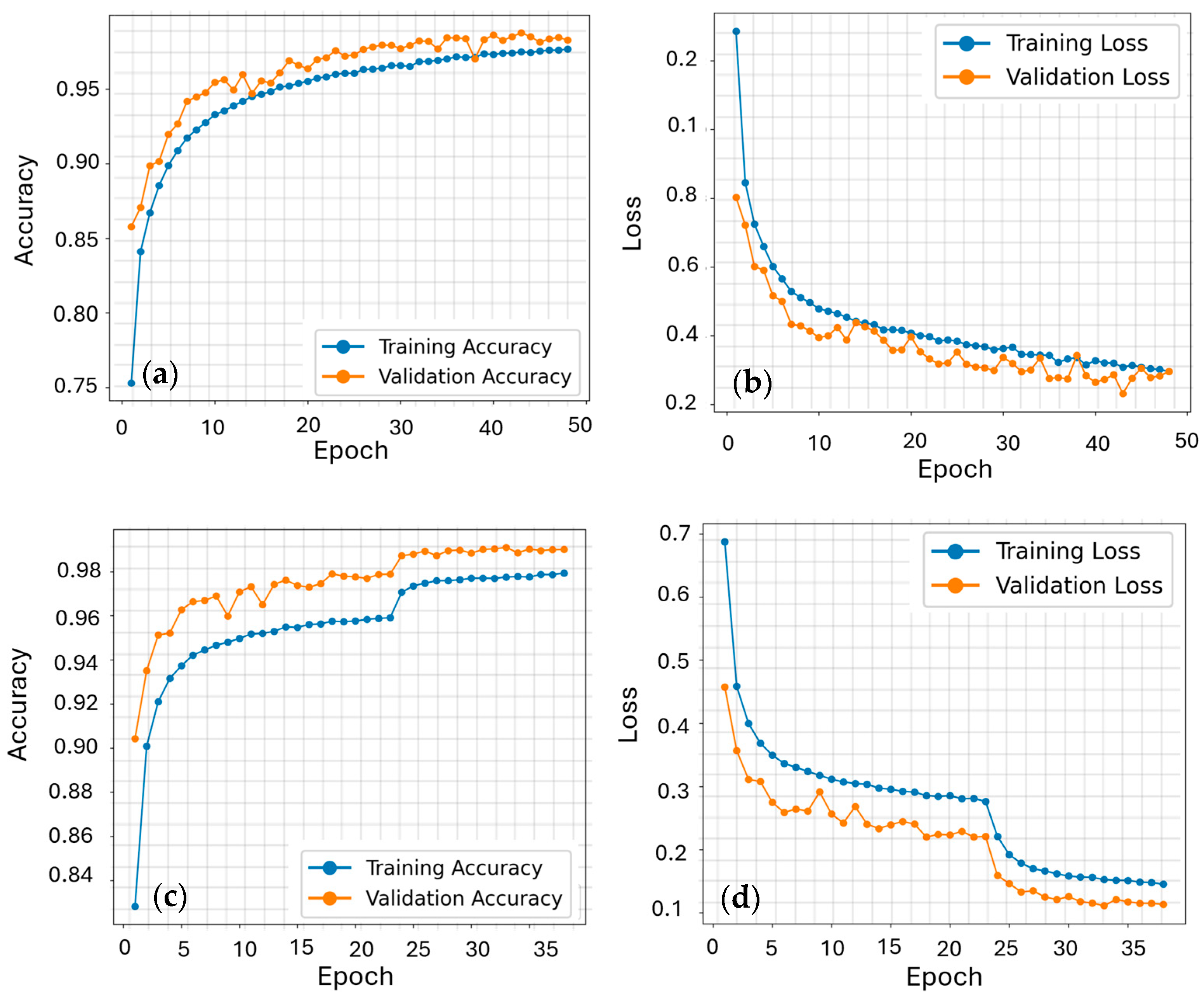

3.3. Learning Curves and Convergence Behavior

4. Discussion

4.1. Performance Comparison of Classification Models

4.2. Performance Comparison of Per-Class Analysis

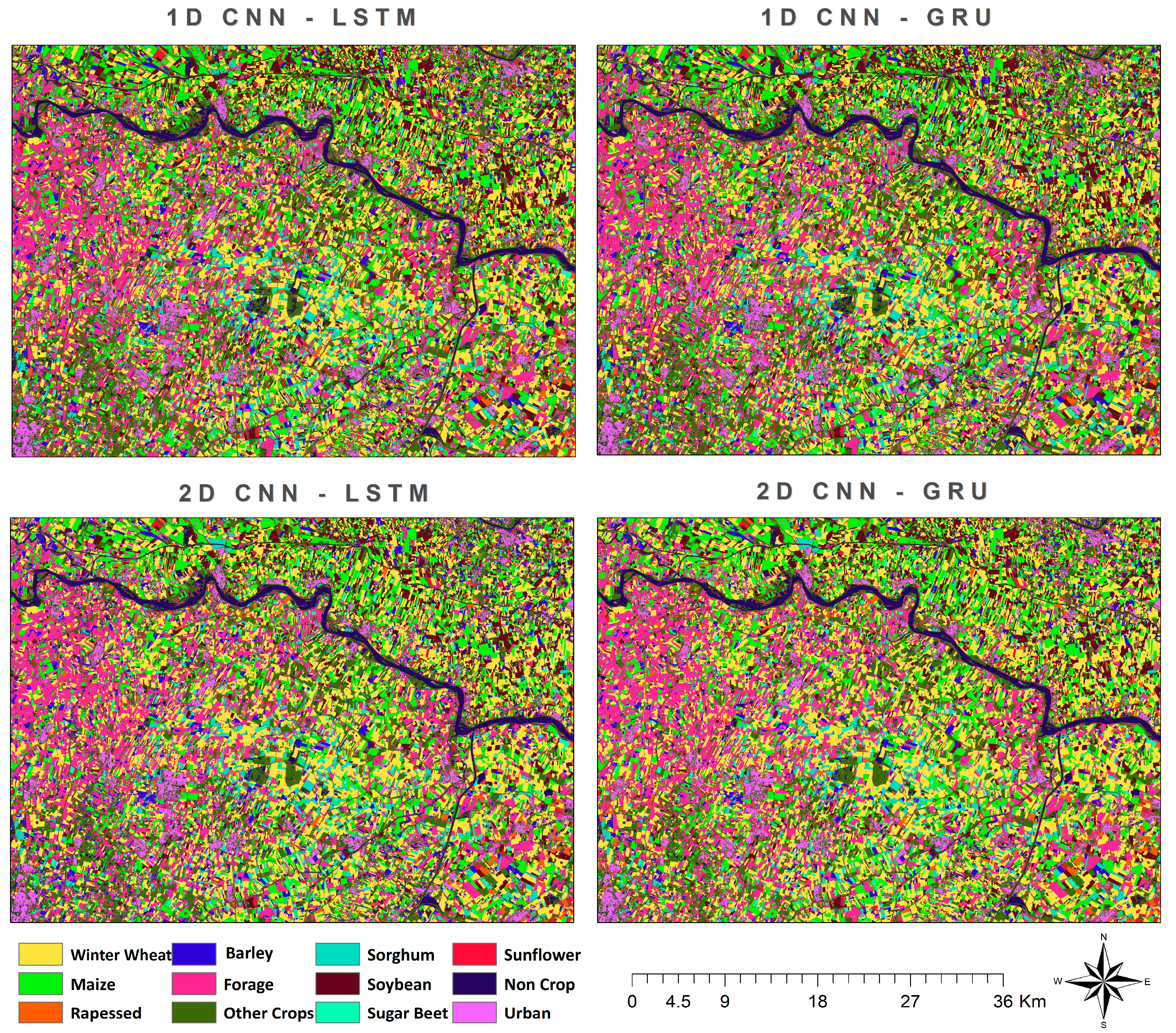

4.3. Visual Comparison of Crop Classification Results

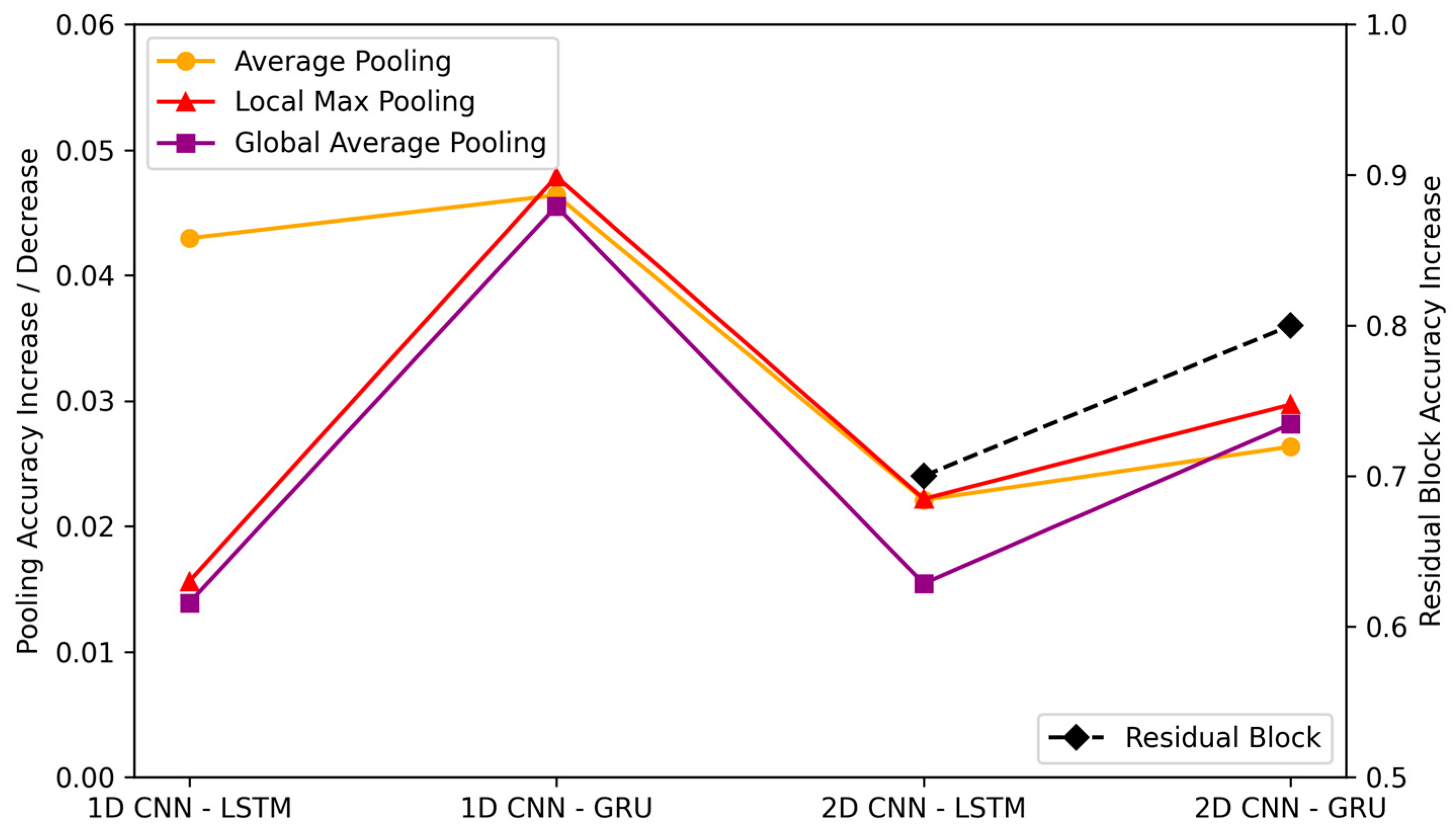

4.4. Impact of Pooling Types and Residual Block Architectures

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Meaning |
|---|---|
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Networks |
| 1D CNN | One-Dimensional Convolutional Neural Networks |
| 2D CNN | Two-Dimensional Convolutional Neural Networks |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |
| DL | Deep Learning |
| ML | Machine Learning |
| RF | Random Forest |
| SVM | Support Vector Machine |
| VI | Vegetation Indices |
| BOA | Bottom of the Atmosphere |
| RE | Red Edge |
| KNN | K-Nearest Neighbors |

| Crop | Ground Truth Polygons | Avg Area (ha) |
|---|---|---|
| Winter Wheat | 231 | 4.8 |
| Maize | 257 | 5.6 |
| Rapeseed | 120 | 3.6 |
| Barley | 120 | 3.1 |
| Forage | 275 | 3.0 |
| Other Crops | 254 | 2.9 |
| Sorghum | 188 | 2.5 |
| Soyabean | 282 | 2.8 |
| Sugar beet | 71 | 3.0 |
| Sunflower | 82 | 2.0 |
| Non-Crop | 131 | 4.3 |
| Urban | 160 | 3.0 |
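
The table gives enough information to gauge class imbalance. As a rough worked example, the sketch below sums the polygon counts and approximates the mapped area per class as polygon count times average area. This product is only an approximation from the rounded averages in the table, not a figure reported by the authors, and the helper name `summarize` is hypothetical.

```python
# Polygon counts and average areas (ha) copied from the ground truth table.
GROUND_TRUTH = {
    "Winter Wheat": (231, 4.8),
    "Maize": (257, 5.6),
    "Rapeseed": (120, 3.6),
    "Barley": (120, 3.1),
    "Forage": (275, 3.0),
    "Other Crops": (254, 2.9),
    "Sorghum": (188, 2.5),
    "Soyabean": (282, 2.8),
    "Sugar beet": (71, 3.0),
    "Sunflower": (82, 2.0),
    "Non-Crop": (131, 4.3),
    "Urban": (160, 3.0),
}


def summarize(table):
    """Return total polygon count and per-class share / approximate area."""
    total = sum(count for count, _ in table.values())
    rows = {}
    for crop, (count, avg_ha) in table.items():
        rows[crop] = {
            "share": count / total,             # fraction of all polygons
            "approx_area_ha": count * avg_ha,   # approximation, see lead-in
        }
    return total, rows


total, rows = summarize(GROUND_TRUTH)
for crop, info in sorted(rows.items(), key=lambda kv: kv[1]["share"]):
    print(f"{crop:13s} {info['share']:6.1%} {info['approx_area_ha']:8.1f} ha")
```

The smallest class (Sugar beet, 71 polygons) has roughly a quarter of the polygons of the largest (Soyabean, 282), which is worth keeping in mind when reading per-class scores.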

| Abbreviation | Index | Formula |
|---|---|---|
| NDVI | Normalized Difference Vegetation Index | |
| IRECI | Inverted Red-Edge Chlorophyll Index | |
| MTCI | MERIS Terrestrial Chlorophyll Index | (B6 − B5)/(B5 − B4) |
| S2REP | Sentinel-2 Red-Edge Position Index | 705 + 35 × ((B4 + B7)/2 − B5)/(B6 − B5) |
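
The four indices can be computed per pixel from Sentinel-2 band reflectances, as in the minimal sketch below. The S2REP expression follows the table. MTCI is taken as (B6 − B5)/(B5 − B4), the usual Sentinel-2 form. The table does not give formulas for NDVI and IRECI, so the commonly cited forms NDVI = (B8 − B4)/(B8 + B4) and IRECI = (B7 − B4)/(B5/B6) are used here as an assumption; the study may have used a different band choice. The reflectance values are invented for illustration.

```python
def ndvi(b4: float, b8: float) -> float:
    """Assumed form: (NIR - Red) / (NIR + Red) with B8 as NIR and B4 as Red."""
    return (b8 - b4) / (b8 + b4)


def ireci(b4: float, b5: float, b6: float, b7: float) -> float:
    """Assumed form: (B7 - B4) / (B5 / B6)."""
    return (b7 - b4) / (b5 / b6)


def mtci(b4: float, b5: float, b6: float) -> float:
    """(B6 - B5) / (B5 - B4)."""
    return (b6 - b5) / (b5 - b4)


def s2rep(b4: float, b5: float, b6: float, b7: float) -> float:
    """705 + 35 * ((B4 + B7) / 2 - B5) / (B6 - B5), as listed in the table."""
    return 705 + 35 * ((b4 + b7) / 2 - b5) / (b6 - b5)


# Hypothetical surface reflectances for a dense green canopy.
bands = {"b4": 0.05, "b5": 0.10, "b6": 0.30, "b7": 0.40, "b8": 0.45}

features = {
    "NDVI": ndvi(bands["b4"], bands["b8"]),
    "IRECI": ireci(bands["b4"], bands["b5"], bands["b6"], bands["b7"]),
    "MTCI": mtci(bands["b4"], bands["b5"], bands["b6"]),
    "S2REP": s2rep(bands["b4"], bands["b5"], bands["b6"], bands["b7"]),
}
print(features)
```

The ratio-based indices are undefined when a denominator is zero (for example B5 = B4 in MTCI), so a production pipeline would need to mask or guard such pixels.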

| Hyperparameters | 2D CNN-LSTM or GRU | 1D CNN-LSTM or GRU |
|---|---|---|
| Optimizer | Adam | Adam |
| Learning Rate | 0.001 | 0.001 |
| Loss Function | Sparse Categorical Crossentropy | Sparse Categorical Crossentropy |
| Batch Size | 32, 64, 128, 256 | 32, 64, 128, 256 |
| Epochs | 100 | 100 |
| Convolution Filters | 32, then 64 | 128, then 64 |
| Kernel Size | 3 × 3 | 7 × 7 |
| Padding | same | same |
| Pooling | Local Max Pooling 2D (2,2) | Local Max Pooling 1D size 2 |
| Residual Blocks | Residual blocks, 32 & 64 filters | |
| Dropout Rates | 0.25 → 0.3 in CNN layers | 0.3 in first block, 0.2 in second |
| L2 Regularization | 1 × 10⁻⁴ in Conv layers | 1 × 10⁻³ in Conv layers |
| LSTM/GRU Units | 128, then 64 | 128, then 64 |
| Bidirectional | True | True |
| Dropout | 0.3 → 0.2 in LSTM/GRU layers | 0.3, 0.2 after LSTM/GRU layers |
| Batch Normalization | Applied after LSTM/GRU layers | Applied after LSTM/GRU layers |
| Attention | Dot-product self-attention (Keras Attention) | Dot-product self-attention (Keras Attention) |
| Dense Layers | 128 → 64 → Output | 128 → 64 → Output |
| Activations | ReLU in hidden layers, Softmax in output | ReLU in hidden layers, Softmax in output |
| Dropout | ~0.3 in dense layers | 0.3, 0.2 in dense layers |
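
To see what the 2D settings imply for tensor sizes, the sketch below traces the shape of one date's patch through two convolution blocks (32, then 64 filters, 3 × 3 kernels, "same" padding, 2 × 2 max pooling) and then flattens it into a per-date feature vector for the recurrent layers. Several things here are assumptions rather than details from the table: the patch size, the number of dates, the number of input channels, that each convolution block ends with one pooling step, and that the CNN is applied to each date separately before the LSTM/GRU.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Conv2DBlock:
    filters: int
    kernel: Tuple[int, int] = (3, 3)  # from the table
    pool: Tuple[int, int] = (2, 2)    # from the table


def block_output_shape(shape: Tuple[int, int, int], block: Conv2DBlock):
    """Shape (height, width, channels) after one conv + max-pool block."""
    height, width, _ = shape
    # "same" padding with stride 1 leaves height and width unchanged,
    # so only the pooling step shrinks the spatial size (floor division).
    return (height // block.pool[0], width // block.pool[1], block.filters)


def trace(patch_shape: Tuple[int, int, int], n_dates: int, blocks: List[Conv2DBlock]):
    """Return the final per-date CNN shape and the (T, features) RNN input shape."""
    shape = patch_shape
    for block in blocks:
        shape = block_output_shape(shape, block)
    features = shape[0] * shape[1] * shape[2]
    return shape, (n_dates, features)


# Hypothetical input: 8 x 8 pixel patches, 4 index channels, 12 dates.
cnn_shape, rnn_input = trace((8, 8, 4), 12, [Conv2DBlock(32), Conv2DBlock(64)])
print(cnn_shape, rnn_input)
```

Under these assumptions the recurrent layers would receive a sequence of 12 vectors with 256 features each. Smaller patches shrink quickly under repeated 2 × 2 pooling, which is one reason patch size and pooling depth have to be chosen together.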

| Model | OA (%) | F1 (%) |
|---|---|---|
| 1D CNN-LSTM | 92.54 ± 1.21 | 92.11 ± 1.27 |
| 1D CNN-GRU | 93.46 ± 1.13 | 93.21 ± 1.16 |
| 2D CNN-LSTM | 98.51 ± 0.42 | 98.58 ± 0.50 |
| 2D CNN-GRU | 99.12 ± 0.29 | 99.14 ± 0.29 |
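
Overall accuracy (OA) and macro-averaged F1 can both be derived from a confusion matrix. The following sketch shows the standard definitions on a small made-up matrix. The matrix values are hypothetical and unrelated to the results above, and rows are assumed to hold reference labels with columns holding predictions.

```python
from typing import List

Matrix = List[List[int]]


def overall_accuracy(cm: Matrix) -> float:
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def macro_f1(cm: Matrix) -> float:
    """Unweighted mean of per-class F1 scores (rows = reference, cols = predicted)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # reference k, predicted otherwise
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, reference otherwise
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# Hypothetical 3-class confusion matrix.
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
print(f"OA = {overall_accuracy(cm):.4f}, macro-F1 = {macro_f1(cm):.4f}")
```

Because macro-F1 weights every class equally, it is more sensitive than OA to errors on small classes, which is why the two are usually reported together.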

| Comparison | t | p-Value | Significance |
|---|---|---|---|
| 1D CNN-LSTM vs. 1D CNN-GRU | −15.38 | <0.001 | ** |
| 1D CNN-LSTM vs. 2D CNN-LSTM | −15.56 | <0.001 | ** |
| 1D CNN-GRU vs. 2D CNN-LSTM | −11.34 | <0.001 | ** |
| 1D CNN-GRU vs. 2D CNN-LSTM | −15.37 | <0.001 | ** |
| 1D CNN-GRU vs. 2D CNN-GRU | −10.69 | <0.001 | ** |
| 2D CNN-LSTM vs. 2D CNN-GRU | −2.86 | 0.046 | * |
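
The table does not state which form of t-test produced these values. As one plausible reading, the sketch below computes a paired t statistic over matched runs of two models. The paired design, the five runs, and the accuracy values are all assumptions for illustration and do not reproduce the table.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)           # sample standard deviation
    t = mean_diff / (sd_diff / math.sqrt(n))    # undefined if all diffs are equal
    return t, n - 1


# Hypothetical overall accuracies (%) from five matched runs.
model_a = [98.1, 98.6, 98.9, 98.4, 98.5]
model_b = [98.9, 99.2, 99.4, 99.1, 99.0]

t_stat, dof = paired_t(model_a, model_b)
print(f"t = {t_stat:.2f}, df = {dof}")
```

A negative t here means the first model scored lower than the second, matching the sign convention in the table. Turning t into a p-value requires the Student t distribution, which the Python standard library does not provide; `scipy.stats.ttest_rel` returns both values if SciPy is available.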

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tufail, R.; Tassinari, P.; Torreggiani, D. Deep Learning Applications for Crop Mapping Using Multi-Temporal Sentinel-2 Data and Red-Edge Vegetation Indices: Integrating Convolutional and Recurrent Neural Networks. Remote Sens. 2025, 17, 3207. https://doi.org/10.3390/rs17183207
