Highlights

What are the main findings?

- A lightweight encoder–decoder specifically designed for low-light remote sensing scenarios and an end-to-end trained single-step residual diffusion model.

- Illumination-invariant physical priors derived from a light transfer theory guide the inference process.

What is the implication of the main finding?

- The encoding–decoding speed is improved by 203× at the same compression ratio.

- Compared with a diffusion-based method, the inference speed is increased by 20×.

Abstract

Low-light image enhancement, especially for remote sensing images, remains a challenging task due to issues like low brightness, high noise, color distortion, and the unique complexities of remote sensing scenes, such as uneven illumination and large coverage. Existing methods often struggle to balance efficiency, accuracy, and robustness. Diffusion models have shown potential in image restoration, but they often rely on multi-step noise estimation, leading to inefficiency. To address these issues, this study proposes an enhancement framework based on a lightweight encoder–decoder and a physical-prior-guided end-to-end single-step residual diffusion model. The lightweight encoder–decoder, tailored for low-light scenarios, reduces computational redundancy while preserving key features, ensuring efficient mapping between pixel and latent spaces. Guided by physical priors, the single-step residual diffusion model eliminates multi-step noise estimation through end-to-end training, accelerating inference without sacrificing quality. Illumination-invariant priors guide the inference process, alleviating blurriness from missing details and ensuring structural consistency. Experimental results show that the proposed method not only outperforms mainstream methods in quantitative metrics and visual effects but also achieves a 20× speedup compared with an advanced diffusion-based method.

1. Introduction

In the field of remote sensing, obtaining high-quality images is crucial for the analysis and understanding of Earth’s surface features. However, in low-light environments, such as dawn, dusk, or nighttime, remote sensing images often suffer from low contrast, noise interference, and loss of detailed information, which impair subsequent image interpretation and analysis tasks. Therefore, low-light remote sensing enhancement technology has become one of the key issues in the field of remote sensing image processing, aiming to improve the image quality and information utility under low-light conditions.

Traditional methods mainly rely on fixed mathematical or physical models, such as histogram equalization [1,2,3] and Retinex theory [4,5,6]. These traditional methods [7,8] typically depend on manually designed features and parameter adjustments, lacking the ability to adapt to complex image content. As a result, they exhibit poor generalization performance when dealing with remote sensing images from different scenes and imaging conditions.

Deep learning methods are mainly based on Convolutional Neural Networks (CNNs) [9,10] and Transformers [11,12,13]. These methods construct deep neural network models to automatically learn the mapping from low-light images to normal-light images, which can effectively improve the image contrast and brightness and enhance the visual quality. However, CNN-based methods still have certain limitations. Due to the limited receptive field of CNNs, their ability to capture long-range dependencies and global structural information in images is weak, which may lead to the loss of some key detailed information during the enhancement process. Transformer-based methods can effectively model global dependencies through the self-attention mechanism and perform well in capturing long-range feature correlations, but they suffer from high computational complexity and insufficiently refined modeling of local details.

Diffusion models [14], as an emerging type of generative model, have demonstrated strong capabilities and potential in fields such as image generation [15,16] and image editing [17]. Diffusion models work by modeling the diffusion process of data, where they gradually add noise to the data, then learn the distribution of the noise, and recover the original data from the noise through reverse sampling. Applying diffusion models to low-light remote sensing image enhancement can not only make full use of their strong generative capabilities and ability to model complex distributions but also bring new ideas and methods to the field of remote sensing image enhancement. However, diffusion models face limitations in this task: First, the sampling process relies on multi-step iterative denoising, resulting in a low efficiency and limited performance. Second, they lack effective utilization of the structural information of the input image, making the generated results prone to edge blurring or structural distortion. Third, current encoder–decoders for the latent space have enormous computational complexity, resulting in low computational efficiency.

To address these issues, this study proposes an enhancement framework based on a lightweight encoder–decoder and a physical-prior-guided end-to-end single-step residual diffusion model. The lightweight encoder–decoder, specifically tailored for low-light remote sensing scenarios, reduces computational redundancy while preserving key image features, ensuring efficient mapping between the pixel space and the latent space. Under the guidance of physical priors, the end-to-end single-step residual diffusion model simplifies the enhancement process by eliminating the multi-step noise estimation step through end-to-end training, thereby accelerating inference without sacrificing quality. Meanwhile, the illumination-invariant priors impose necessary guidance on the generation process, alleviating the blurriness in generation caused by missing details under low-light conditions and ensuring the structural consistency of the enhanced results. Our main contributions are summarized as follows:

- We designed a compressed lightweight encoder–decoder dedicated to low-light remote sensing image enhancement, which connects the pixel space and latent space.

- We propose an end-to-end single-step residual diffusion model, which breaks the inherent paradigm of diffusion models relying on multi-step noise estimation. It reformulates the training objective so that it directly serves the image reconstruction task and combines it with an end-to-end training strategy.

- We introduced illumination-invariant priors derived from a light transfer theory, which provide stable structural guidance for the inference process.

2. Related Work

2.1. Diffusion Model in Image Restoration

Diffusion models have received extensive attention in the field of image restoration due to their strong generative capabilities and ability to handle complex noise distributions. Saharia et al. [18] proposed a method to achieve image super-resolution through iterative refinement, which is a pioneering work applying diffusion models to image super-resolution and demonstrates the great potential of diffusion models in the field of image restoration. Guo et al. [19] proposed a new diffusion-based unsupervised shadow removal solution to model shadow, non-shadow, and their boundary regions separately. It uses a pre-trained unconditional diffusion model fused with unimpaired information to generate natural shadow-free images. Liu et al. [20] proposed the Residual Denoising Diffusion Model (RDDM). Compared with existing diffusion models that only focus on noise estimation, the residual diffusion model predicts residuals to represent directional diffusion from the target domain to the input domain while estimating noise to account for random perturbations in the diffusion process, unifying the image generation and restoration. Zheng et al. [21] proposed a selective hourglass mapping method based on conditional diffusion models (DiffUIR), which can simultaneously achieve shared distribution mapping and strong conditional guidance for better general image restoration learning.

2.2. Low-Light Remote Sensing Image Enhancement

Low-light remote sensing image enhancement is a specialized task that faces unique challenges due to the complex imaging conditions of remote sensing scenes, such as uneven illumination, large scene coverage, and diverse ground objects. Wu et al. [22] proposed a Retinex-based deep unfolding network, which unfolds an optimization problem into a learnable network to decompose low-light images into reflection and illumination layers. Fu et al. [23] used two low-light images for training to fully extract information from low-light images. Yang et al. [24] applied implicit neural representation to low-light image enhancement. Liu et al. [25] implemented low-light enhancement using three basic components: Visual State Space Module (VSSM), Local Feature Module (LFM), and Dual-Gate Dconv Feed-Forward Network (DGDFFN). Xing et al. [26] proposed a GAN framework that maximizes the mutual information between low-light images and restored images through Self-Similarity Contrast Learning (SSCL) in a fully unsupervised manner. Yao et al. [27] divided the low-light enhancement task into two subtasks: the first stage learns amplitude information to restore image brightness, and the second stage learns phase information to refine details. Zhao et al. [28] proposed an enhancement method based on the atmospheric scattering model, specifically tailored for uneven low-light conditions.

3. Preliminaries

Diffusion Models

Diffusion models [14] are a type of generative model based on Markov chains. Their core idea is to realize generative modeling from noise to target data by simulating two symmetric stochastic processes: “forward diffusion” and “reverse denoising”.

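Forward process: The forward diffusion process gradually introduces Gaussian noise into the original data. Its purpose is to gradually transform the original data distribution into a known simple noise distribution (usually a standard Gaussian distribution) through a series of tiny noise perturbations. This process can be described as a T-step Markov chain, where each step only depends on the result of the previous step, as follows:

$$q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}), \tag{1}$$

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right), \tag{2}$$

where $q(x_{1:T} \mid x_0)$ represents the probability distribution of obtaining the noisy images $x_{1:T}$ after a T-step noise-adding process starting from the original image $x_0$. Each factor $q(x_t \mid x_{t-1})$ represents, at the t-th step, the conditional probability distribution of obtaining the current image $x_t$ given the image $x_{t-1}$ from the previous step. $\mathcal{N}$ represents the Gaussian distribution, and $\beta_t$ is the noise coefficient of the t-th step, which satisfies $0 < \beta_t < 1$. By gradually increasing $\beta_t$, the original data will be completely submerged by noise after T steps, and at this time, $x_T$ approximately follows the standard Gaussian distribution $\mathcal{N}(\mathbf{0}, \mathbf{I})$.

Equation (2) can also be expressed as $x_t = \sqrt{1-\beta_t}\,x_{t-1} + \sqrt{\beta_t}\,\epsilon_{t-1}$, where $\epsilon_{t-1} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ is standard Gaussian noise. To simplify the calculation, the forward diffusion process can be represented by cumulative parameters. Define $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{i=1}^{t} \alpha_i$; then, $x_t$ at any step can be directly expressed by the initial data $x_0$ and cumulative noise:

$$x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon, \tag{3}$$

where $\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. This property allows the forward diffusion process to sample $x_t$ at any step directly from $x_0$ without step-by-step calculation, which brings convenience to model training.

Reverse process: The reverse denoising process is the inverse process of forward diffusion. Its goal is to start from the standard Gaussian distribution and gradually denoise through T steps to finally recover a sample that conforms to the original data distribution $q(x_0)$:

$$p_\theta(x_{0:T}) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t), \tag{4}$$

$$p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\left(x_{t-1};\ \mu_\theta(x_t, t),\ \sigma_t^2 \mathbf{I}\right), \tag{5}$$

where $\sigma_t$ is the noise standard deviation, whose value is fixed and related to $\beta_t$. $\mu_\theta(x_t, t)$ is an unknown term. Equation (5) can also be expressed as

$$x_{t-1} = \mu_\theta(x_t, t) + \sigma_t z, \tag{6}$$

where $z \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ is the sampling noise. The training objective of the diffusion model is to fit a noise prediction model $\epsilon_\theta(x_t, t)$ by using a neural network (usually a U-Net [29]) and optimizing the network parameters $\theta$ so as to indirectly obtain $\mu_\theta(x_t, t)$, and then calculate $x_{t-1}$ so as to realize the gradual recovery of the original data. The objective function is formulated as

$$L(\theta) = \mathbb{E}_{x_0,\,\epsilon,\,t}\left[\left\| \epsilon - \epsilon_\theta(x_t, t) \right\|_2^2\right]. \tag{7}$$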
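The closed-form shortcut of the forward process can be checked numerically. The sketch below is illustrative only: it assumes a linearly increasing noise schedule between 1e-4 and 0.02 (a common choice, not one stated in this paper) and tracks the signal coefficient and noise variance of the step-by-step chain, which should match $\sqrt{\bar{\alpha}_t}$ and $1-\bar{\alpha}_t$. The function names are hypothetical.

```python
import math


def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    """Linearly increasing noise coefficients beta_1..beta_T (assumed schedule)."""
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over i <= t of (1 - beta_i)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def stepwise_coefficients(betas):
    """Follow x_t = mean_coef * x_0 + sqrt(var) * noise through the Markov chain."""
    mean_coef, var = 1.0, 0.0
    trace = []
    for b in betas:
        mean_coef *= math.sqrt(1.0 - b)  # the signal is scaled by sqrt(1 - beta_t)
        var = (1.0 - b) * var + b        # old noise is scaled, fresh noise is added
        trace.append((mean_coef, var))
    return trace


betas = linear_betas(1000)
alpha_bars = cumulative_alphas(betas)
trace = stepwise_coefficients(betas)

for t in (0, 499, 999):
    coef, var = trace[t]
    print(t + 1, round(coef, 6), round(math.sqrt(alpha_bars[t]), 6), round(var, 6))
```

Under this assumed schedule, the signal coefficient at the last step is close to zero and the variance is close to one, which is why $x_T$ is treated as approximately standard Gaussian.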

Diffusion models are built on Markov chains consisting of a forward process and a reverse process, which require step-by-step generation. Generally, T is set to 1000, leading to a relatively slow sampling speed. The Denoising Diffusion Implicit Model (DDIM) proposed by Song et al. [30] removes the Markov constraint, enabling intermediate steps to be skipped and earlier states to be predicted directly. In this case, the number of steps actually executed in the reverse process is referred to as the number of implicit sampling steps.

4. Methodology

4.1. Overall Framework

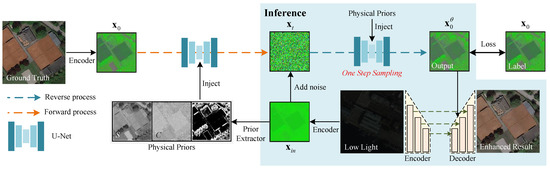

Figure 1 illustrates the overall architecture of the proposed method, which consists of three collaborative components: a lightweight encoder–decoder, an end-to-end single-step residual diffusion model, and a prior extractor. The overall workflow can be summarized as follows: first, the input low-light image is mapped to a low-dimensional latent space via the lightweight encoder, and then physical prior features are extracted from the latent code of the input image. Guided by the physical priors, the end-to-end single-step residual diffusion model directly performs one-step reconstruction on the features in the latent space and outputs the enhanced latent code. Finally, the latent code is mapped back to the pixel space through the lightweight decoder by integrating multi-scale features of the input image, resulting in the final enhanced image.

Figure 1. The architecture of our proposed method.

The collaborative mechanism of the three components is reflected in the following aspects: the lightweight encoder–decoder provides an efficient latent space adapted to low-light enhancement tasks for the diffusion model, addressing the redundancy issue of the Latent Diffusion Model (LDM) [31] encoder–decoder; the end-to-end single-step diffusion model breaks the inherent paradigm of noise estimation, significantly improving efficiency; and the extraction and guidance of physical priors compensate for the ambiguity in generation caused by missing details in low-light images by introducing structural information into the generation process. Together, they form a closed loop of “encoding-guided reconstruction-decoding,” enabling fast and high-quality enhancement of low-light images.

4.2. Lightweight Encoder–Decoder

As the core carrier for diffusion models to efficiently process images, the latent space directly influences the performance and efficiency of the model. In Latent Diffusion Models (LDMs) [31], the latent space is constructed through an encoder–decoder, which maps high-dimensional pixel space images to a low-dimensional latent space, thereby reducing the computational complexity of the training and inference process. An LDM employs a pre-trained VQ-VAE [32] or VQ-GAN [33] architecture as its encoder–decoder. The encoder compresses input images into latent codes, while the decoder reconstructs latent codes back into pixel-space images. Through joint training, they achieve accurate mapping between the latent space and pixel space.

However, in low-light remote sensing scenarios, the general-purpose encoder–decoder of an LDM has significant limitations. Low-light remote sensing images not only exhibit characteristics such as low illumination, high noise, and blurred details but also present issues specific to remote sensing, including weak spectral information of ground objects and imbalanced contrast between large dark areas and local bright targets (e.g., night lights and reflective buildings).

Designed to adapt to various natural images, the general-purpose encoder–decoders lack targeted optimization for low-light remote sensing scenarios. The encoder tends to lose scarce critical ground object details during compression, such as road textures in dark areas and building edges, so the latent codes fail to retain the core features required for enhancement. The decoder, in turn, struggles to accurately restore the unique illumination distributions of low-light remote sensing images during reconstruction (e.g., light intensity differences between urban and suburban areas or uneven illumination caused by topographic shadows), which undermines the authenticity of the enhanced results and further interferes with subsequent remote sensing interpretation tasks.

In addition, general-purpose encoder–decoders adopt complex structures with large parameters to pursue versatility. However, low-light remote sensing images often have large sizes and high resolutions, leading to a large amount of redundant computation in enhancement tasks and making it difficult to meet fast processing requirements.

To address the above issues, we constructed and trained a lightweight encoder–decoder, similar in structure to the U-Net, specifically for low-light remote sensing enhancement. Its core design revolves around the feature requirements of low-light remote sensing scenarios, making the model lightweight while ensuring the integrity of latent code information.

Compared with the U-Net, our improvements mainly include separating the encoding and decoding structures, introducing Depth-wise Separable Convolutions (DSConvs) [34], adding residual blocks in feature layers of different scales, and adopting a flexible feature fusion mechanism that combines multi-scale features of low-light images during the latent code decoding process. These designs make the model more suitable for the characteristic requirements of low-light remote sensing image enhancement tasks. Following [23], we trained the encoder–decoder using approximately 10,000 pairs of images with different exposures.
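To illustrate why depth-wise separable convolutions reduce model size, the following sketch compares parameter counts for a standard convolution and its separable counterpart. The channel sizes (64 to 128) and the 3 × 3 kernel are hypothetical values chosen for illustration, not the configuration used in this work.

```python
def conv_params(c_in, c_out, k=3):
    """Weights of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def dsconv_params(c_in, c_out, k=3):
    """Weights of a depth-wise k x k convolution followed by a 1 x 1 point-wise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


standard = conv_params(64, 128)
separable = dsconv_params(64, 128)
ratio = standard / separable

print(standard, separable, round(ratio, 2))
```

For these illustrative sizes, the separable layer needs roughly one-eighth of the weights, and the saving grows with the number of output channels.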

To verify the advantages of the proposed lightweight encoder–decoder in low-light remote sensing enhancement tasks, Section 5.2 presents a systematic comparison between it and the general-purpose VQ-VAE-based encoder–decoder commonly used in an LDM, covering two dimensions: encoding–decoding quality and model efficiency. Because a general-purpose encoder–decoder significantly decreases efficiency (processing a single high-resolution remote sensing image can take several seconds or even dozens of seconds) and the performance degradation demonstrated in the experiments is obvious, we did not conduct ablation studies on this component.

4.3. End-to-End Trained Single-Step Residual Diffusion Model

The core design of the standard diffusion model in Section 3 follows the paradigm of “noise prediction-iterative denoising”. Its target task in the training phase is defined as “noise estimation”: the model learns to predict the added noise from noisy images, thereby achieving the modeling of data distribution. In the sampling phase, based on the pre-trained noise estimation network, the model starts from pure noise and gradually removes noise through multi-step iterations, ultimately generating images that conform to the target distribution. However, in image reconstruction tasks, such as low-light image enhancement, the actual target task is “reconstructing normal-light images from input low-light images”. This mismatch between “noise estimation” and “image reconstruction” leads to significant limitations in standard diffusion models.

To address this core contradiction, we propose an end-to-end trained single-step diffusion reconstruction mechanism. By reconstructing the training objectives and training process, the diffusion model is made to directly serve the image reconstruction task. Its core design idea is the following: during the training phase, the reverse sampling process of the diffusion model is executed simultaneously, and only the reconstruction loss is used as the optimization objective to achieve task consistency between “training and sampling”.

Diffusion models generally require 50–100 sampling steps [35]. With this many steps, end-to-end training would greatly prolong the training time. To accelerate the model training, reduce the number of sampling steps, and achieve efficient single-step reconstruction, we introduce the residual diffusion model [20] to replace the standard diffusion model as the core of the model. The residual diffusion model decouples the traditional single denoising diffusion process into a dual diffusion process of residual diffusion and noise diffusion, which makes it possible to reduce the number of sampling steps and achieve efficient single-step reconstruction.

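Forward process: The forward diffusion process of the residual diffusion model involves gradually introducing Gaussian noise and residual terms into the original data, aiming to transform the original data distribution into a noise-added degraded image through a series of minor noise perturbations and residuals. This process can be described as a T-step Markov chain, where each step depends only on the result of the previous step, specifically as follows:

$$q(x_{1:T} \mid x_0, x_{res}) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}, x_{res}), \tag{8}$$

$$q(x_t \mid x_{t-1}, x_{res}) = \mathcal{N}\left(x_t;\ x_{t-1} + \alpha_t x_{res},\ \beta_t^2 \mathbf{I}\right), \tag{9}$$

where $x_{res} = x_{in} - x_0$, $x_{in}$ is the degraded image, and $x_0$ is the target image. The residual diffusion and noise diffusion are controlled by independent coefficient schedules $\alpha_t$ and $\beta_t^2$, respectively, to regulate their diffusion rates. Equation (9) can also be expressed as

$$x_t = x_{t-1} + \alpha_t x_{res} + \beta_t \epsilon_{t-1}, \tag{10}$$

where $\epsilon_{t-1} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. Similar to standard diffusion models, $x_t$ at any step can be directly represented by the initial data $x_0$, residuals, and accumulated noise:

$$x_t = x_0 + \bar{\alpha}_t x_{res} + \bar{\beta}_t \epsilon, \tag{11}$$

where $\bar{\alpha}_t = \sum_{i=1}^{t} \alpha_i$, $\bar{\beta}_t = \sqrt{\sum_{i=1}^{t} \beta_i^2}$, and $\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. By default, we set $\alpha_t$ as a uniformly increasing sequence, and $\bar{\alpha}_T = 1$; when $t = T$, the endpoint of the forward diffusion process is $x_T = x_{in} + \bar{\beta}_T \epsilon$, which represents the degraded image with random perturbations added.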
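The forward process of the residual diffusion model can be sketched in a few lines. This is a minimal illustration under stated assumptions: it follows the residual diffusion formulation of [20] as we read it, $x_t = x_0 + \bar{\alpha}_t (x_{in} - x_0) + \bar{\beta}_t \epsilon$ with $\bar{\alpha}_t$ a cumulative sum and $\bar{\beta}_t$ the root of a cumulative sum of squares. The concrete schedules, the total noise variance of 0.01, and the pixel values are hypothetical.

```python
import math


def residual_schedules(T, noise_var_total=0.01):
    """Hypothetical schedules: alpha_t rises linearly and sums to 1;
    beta_t^2 falls linearly and sums to noise_var_total."""
    total = T * (T + 1) / 2
    alphas = [(t + 1) / total for t in range(T)]
    betas_sq = [noise_var_total * (T - t) / total for t in range(T)]
    return alphas, betas_sq


def cumulative(alphas, betas_sq):
    """alpha_bar_t = sum of alpha_i; beta_bar_t = sqrt(sum of beta_i^2)."""
    a_bar, b_bar = [], []
    a_sum, b_sum = 0.0, 0.0
    for a, b2 in zip(alphas, betas_sq):
        a_sum += a
        b_sum += b2
        a_bar.append(a_sum)
        b_bar.append(math.sqrt(b_sum))
    return a_bar, b_bar


def forward_sample(x0, x_in, a_bar_t, b_bar_t, noise):
    """Closed-form x_t = x_0 + a_bar_t * (x_in - x_0) + b_bar_t * noise, per pixel."""
    return [p0 + a_bar_t * (pin - p0) + b_bar_t * n
            for p0, pin, n in zip(x0, x_in, noise)]


T = 1000
alphas, betas_sq = residual_schedules(T)
a_bar, b_bar = cumulative(alphas, betas_sq)

x0 = [0.80, 0.60, 0.40]       # target (normal-light) pixels, illustrative
x_in = [0.10, 0.05, 0.02]     # degraded (low-light) pixels, illustrative
noise = [0.5, -1.0, 0.25]     # fixed noise draw so the result is reproducible

x_T = forward_sample(x0, x_in, a_bar[-1], b_bar[-1], noise)
print([round(v, 4) for v in x_T])
```

Because the residual coefficients sum to one, the endpoint depends only on the degraded image and the accumulated noise, which is what allows the reverse process to start from the known input rather than from pure noise.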

In the dual diffusion process, the residual diffusion part represents the directional diffusion from the target image to the degraded input image, explicitly providing guidance for the reverse generation process of image restoration. This directionality enables the model to focus more on key information transmission in image restoration tasks and reduces the number of implicit sampling steps: because the degraded image is known, there is no need for a lengthy reverse process starting from noise, as in standard diffusion models. The noise diffusion, on the other hand, represents random perturbations in the diffusion process. It ensures the diversity of model outputs, and because its coefficient schedule is independent of that of the residual diffusion, the model can more flexibly balance the needs for determinism and diversity.

Reverse process: The reverse process of the residual diffusion model starts from the degraded image with added random perturbations, gradually reducing the noise and residuals, and ultimately generating a clean target data distribution. If are given, where , the reverse process is defined as

where , and for a deterministic generation process. When , we can obtain any one-step reverse process:

The training objective of the residual diffusion model is to fit a residual prediction model to predict by using a U-Net and optimizing the network parameters so as to indirectly obtain from Equation (11), and then calculate so as to realize the gradual recovery of the original data.

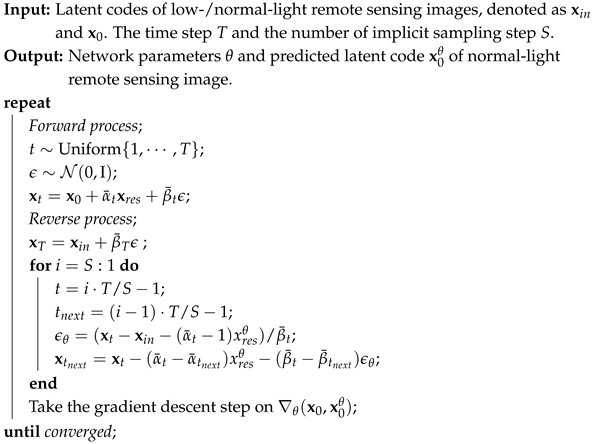

For our end-to-end one-step training, following [30], we implement one-step implicit sampling, modify the training objective to indirectly predict $x_0$ through the reverse process, and calculate only the reconstruction loss between the prediction $\hat{x}_0$ and the ground truth $x_0$. The training and inference processes are given in Algorithms 1 and 2. Following [36], we use the L1 loss and the MS-SSIM loss as the reconstruction loss.

Algorithm 1: End-to-end residual diffusion model training.

Algorithm 2: End-to-end residual diffusion model inference.
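The single-step reverse pass shared by training and inference can be sketched as follows. This is an illustrative reading rather than the authors' algorithm: it assumes the residual diffusion formulation of [20] with $\bar{\alpha}_T = 1$, so the noise implied at the endpoint is $(x_T - x_{in}) / \bar{\beta}_T$ and removing the predicted residual and that noise yields the estimate of $x_0$. The `predict_residual` callable stands in for the prior-conditioned U-Net and is hypothetical; only the L1 part of the reconstruction loss is shown.

```python
import random


def single_step_reverse(x_in, predict_residual, b_bar_T, rng):
    """One implicit sampling step from x_T back to an estimate of x_0."""
    # Starting point: the degraded image plus a random perturbation.
    eps = [rng.gauss(0.0, 1.0) for _ in x_in]
    x_T = [p + b_bar_T * e for p, e in zip(x_in, eps)]
    # The network predicts the residual x_in - x_0 (hypothetical interface).
    res_hat = predict_residual(x_T, x_in)
    # With alpha_bar_T = 1, the noise is recovered from x_T and x_in.
    eps_hat = [(xt - p) / b_bar_T for xt, p in zip(x_T, x_in)]
    # Remove the residual and the noise in a single deterministic step.
    return [xt - r - b_bar_T * e for xt, r, e in zip(x_T, res_hat, eps_hat)]


def l1_loss(pred, target):
    """Mean absolute error, used here as the reconstruction loss."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


target = [0.80, 0.60, 0.40]   # normal-light latent values, illustrative
x_in = [0.10, 0.05, 0.02]     # low-light latent values, illustrative


def oracle(x_T, x_in_):
    """Stand-in predictor that returns the true residual."""
    return [p - t for p, t in zip(x_in_, target)]


x0_hat = single_step_reverse(x_in, oracle, b_bar_T=0.1, rng=random.Random(0))
loss = l1_loss(x0_hat, target)
print(loss)
```

In training, the same reverse step would be executed with the real network, and the reconstruction loss on the estimate of $x_0$ would be backpropagated; at inference, the step is run once without computing the loss.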

4.4. Physical Prior Extractor

Although the end-to-end trained single-step diffusion mechanism has significantly improved the efficiency of low-light image enhancement, in practical applications, it is found that the significant reduction in the number of sampling steps will lead to a certain degree of degradation in the model performance. This is because standard multi-step diffusion models gradually correct the generated results through an iterative process. However, in the absence of multi-step iterative correction, single-step diffusion models are prone to structural inconsistencies or illumination anomalies in the generated results due to local-feature-fitting deviations. Especially in low-light images, since the structural information of the original image is inherently blurred, it is more difficult for single-step diffusion models to accurately capture the structural mapping relationship from low light to normal light, which further exacerbates the performance loss.

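To address the performance degradation issue in single-step diffusion, we propose an innovative strategy that derives physical priors based on the Kubelka–Munk theory to guide the generation process of the residual diffusion model. The Kubelka–Munk theory describes the spectral energy of light reflected from the surface of an object, and its basic formula is

$$E(\lambda, x) = e(\lambda, x)\left[\left(1 - i(x)\right)^2 R_\infty(\lambda, x) + i(x)\right],$$

where $\lambda$ denotes the wavelength; x denotes the spatial location; $E(\lambda, x)$ is the spectral energy of the reflected light; $e(\lambda, x)$ is the light source spectrum; $i(x)$ is the specular reflection coefficient; and $R_\infty(\lambda, x)$ is the intrinsic reflectivity of the material, which is only related to material properties and is independent of the illumination.

Through reasonable simplifying assumptions on the above model, illumination-related factors such as the light source spectrum e and specular reflection i can be eliminated, leaving only the intrinsic material property $R_\infty$, thereby obtaining the illumination-invariant physical priors.

Assuming that the light source energy is uniform, which means independent of wavelength, $e(\lambda, x)$ degenerates into $e(x)$; thus, the model is simplified to

$$E(\lambda, x) = e(x)\left[\left(1 - i(x)\right)^2 R_\infty(\lambda, x) + i(x)\right].$$

By taking the ratio of the first derivative to the second derivative of the spectral energy, i.e., $E_\lambda / E_{\lambda\lambda}$, $e$ and $i$ can be eliminated, resulting in an illumination invariant S related to $R_\infty$, that is,

$$S = \frac{E_\lambda}{E_{\lambda\lambda}} = \frac{\partial R_\infty / \partial \lambda}{\partial^2 R_\infty / \partial \lambda^2}.$$

Assuming that the object has a diffuse reflection surface, specular reflection is negligible, i.e., $i(x) \approx 0$; thus, the model is simplified to

$$E(\lambda, x) = e(x) R_\infty(\lambda, x).$$

By taking the ratio between the spectral energy E and its first derivative $E_\lambda$, i.e., $E_\lambda / E$, $e$ can be eliminated, resulting in an illumination-invariant C related to $R_\infty$, that is,

$$C = \frac{E_\lambda}{E} = \frac{1}{R_\infty}\frac{\partial R_\infty}{\partial \lambda}.$$

The last illumination-invariant prior is from CIConv [37]. Specifically, under further simplifying assumptions, considering the spectral energy E; its spatial derivatives $E_x$ and $E_y$; and the spatial derivatives $E_{\lambda x}$, $E_{\lambda y}$, $E_{\lambda\lambda x}$, and $E_{\lambda\lambda y}$ of the first-/second-order spectral derivatives $E_\lambda$ and $E_{\lambda\lambda}$, the first derived quantities are defined as follows:

$$W_x = \frac{E_x}{E}, \quad W_{\lambda x} = \frac{E_{\lambda x}}{E}, \quad W_{\lambda\lambda x} = \frac{E_{\lambda\lambda x}}{E}.$$

Similarly, $W_y$, $W_{\lambda y}$, and $W_{\lambda\lambda y}$ can be obtained. On this basis, the illumination invariant W is defined as the gradient magnitude of these derived quantities:

$$W = \sqrt{W_x^2 + W_{\lambda x}^2 + W_{\lambda\lambda x}^2 + W_y^2 + W_{\lambda y}^2 + W_{\lambda\lambda y}^2}.$$

W can stably reflect the intrinsic edge characteristics of materials without being disturbed by the light intensity, color changes, or specular reflection, thereby achieving robustness against illumination variations:

$$\hat{W} = \frac{\log\left(W^2 + \epsilon\right) - \mu}{\sigma},$$

where $\epsilon$ represents a small perturbation, and $\mu$ and $\sigma$ refer to the sample mean and standard deviation of $\log(W^2 + \epsilon)$, respectively.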

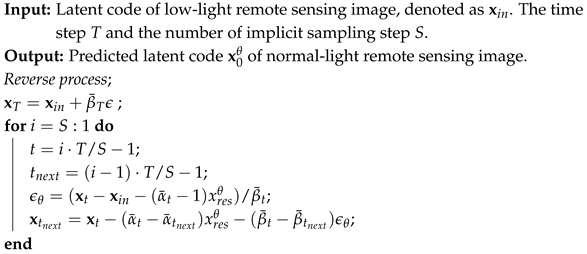

Our prior extractor is shown in Figure 2. After extracting the prior, we concatenate the prior and inject it as a condition into the U-Net used by the residual diffusion model to guide the model training.

Figure 2. The architecture of the physical prior extractor.

The Gaussian color model is used to estimate the spectral energy and its derivatives from RGB images, providing a basis for the calculation of illumination-invariant physical priors. As a bridge connecting RGB images and spectral energy features, it maps RGB channel values to variables related to spectral energy, such as the spectral energy $\hat{E}$, first-order spectral derivative $\hat{E}_\lambda$, and second-order spectral derivative $\hat{E}_{\lambda\lambda}$, through linear transformation, thereby supporting the derivation of subsequent illumination-invariant priors: S, C, and W. For the input RGB image, the Gaussian color model performs a linear transformation through a 3 × 3 matrix with the formula

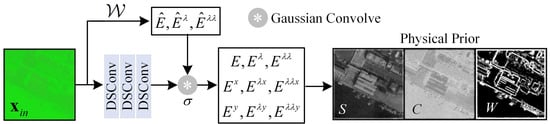

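where $\hat{E}(x, y)$ represents the estimated spectral energy; $\hat{E}_\lambda(x, y)$ and $\hat{E}_{\lambda\lambda}(x, y)$ represent the first-order and second-order spectral derivatives, respectively; and $(x, y)$ is the pixel position. After obtaining $\hat{E}$, $\hat{E}_\lambda$, and $\hat{E}_{\lambda\lambda}$, we calculate E, $E_\lambda$, and $E_{\lambda\lambda}$ by convolving with Gaussian color smoothing and derivative filters of scale $\sigma$. $\sigma$ is predicted from the input image using three DSConv layers [34]. $E_x$, $E_{\lambda x}$, and $E_{\lambda\lambda x}$ are calculated from the derivative filter along $x$, and $E_y$, $E_{\lambda y}$, and $E_{\lambda\lambda y}$ are calculated from the derivative filter along $y$. The latent codes of normal-light images, along with the priors extracted from their corresponding input images, are shown in Figure 3.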

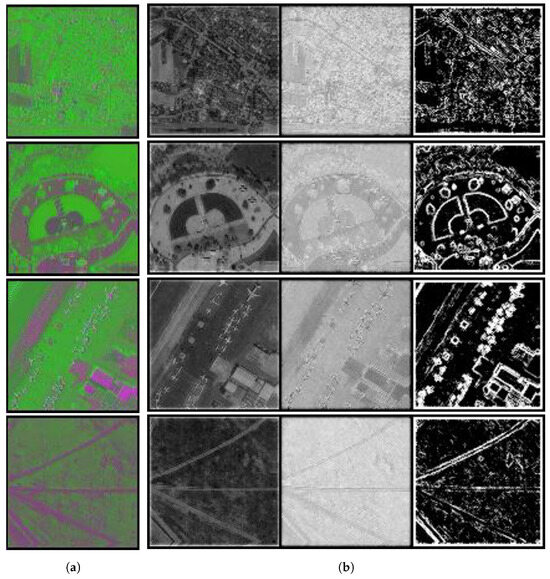

Figure 3. The latent codes of normal-light images and the physical priors: (a) latent codes; (b) physical priors.
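The intensity invariance of the ratio-based priors can be illustrated per pixel. The sketch below is a simplified illustration under stated assumptions: the 3 × 3 matrix values are the ones commonly quoted for the Gaussian color model and are an assumption here (the matrix used in this work is given only in the figure above), the Gaussian smoothing and spatial derivative filters are omitted, and the orientation of the C ratio (first spectral derivative over spectral energy) is assumed. Because the mapping is linear, any global intensity scaling cancels in the ratios regardless of the exact matrix entries.

```python
# Rows map (R, G, B) to (E, E_lambda, E_lambdalambda); values are an assumption.
GAUSSIAN_COLOR_MATRIX = [
    (0.06, 0.63, 0.27),    # spectral energy E
    (0.30, 0.04, -0.35),   # first-order spectral derivative
    (0.34, -0.60, 0.17),   # second-order spectral derivative
]


def spectral_terms(rgb):
    """Linear transform of one RGB pixel into (E, E_l, E_ll)."""
    return tuple(sum(w * c for w, c in zip(row, rgb))
                 for row in GAUSSIAN_COLOR_MATRIX)


def ratio_priors(rgb):
    """Per-pixel S = E_l / E_ll and C = E_l / E (no spatial filtering)."""
    e, e_l, e_ll = spectral_terms(rgb)
    return e_l / e_ll, e_l / e


bright = (0.60, 0.45, 0.30)               # illustrative normal-light pixel
dark = tuple(0.1 * v for v in bright)     # same material at one-tenth intensity

s_bright, c_bright = ratio_priors(bright)
s_dark, c_dark = ratio_priors(dark)
print(round(s_bright, 4), round(s_dark, 4), round(c_bright, 4), round(c_dark, 4))
```

The two pixels differ tenfold in brightness yet yield the same ratios, which is the property that lets these priors provide stable structural guidance for low-light inputs.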

5. Experiments

5.1. Experimental Settings

5.1.1. Datasets and Metrics

Due to the extreme difficulty in obtaining paired data in low-light remote sensing scenarios, following [26,28], we constructed a low-light paired dataset, i.e., dark-iSAID, based on the remote sensing dataset iSAID [38]. This dataset was generated by simulating light variations in low-illumination environments and adding appropriate noise to simulate dark-region noise and sensor noise. Meanwhile, we validated the effectiveness of the proposed method on the real-scene low-light remote sensing dataset Darkrs. These datasets have been widely used in research related to remote sensing image enhancement [27,38].

To further evaluate the generalization ability of the model for low-light images in different real scenarios, we additionally selected several well-recognized datasets for testing, including DICM [39], NPE [40], LIME [41], and LSRW [42]. The datasets used in the experiments cover both remote sensing and non-remote sensing fields, including natural outdoor scenes and typical indoor environments. Among them, non-remote sensing datasets (such as aerial sky images and low-light indoor scenes) can further verify the generalization performance of the algorithm.

In addition, the datasets can be divided into two categories: paired and unpaired, and a comprehensive comparison was conducted using multi-dimensional evaluation metrics. For the paired datasets, full-reference metrics were adopted: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) [43], Learned Perceptual Image Patch Similarity (LPIPS) [44], and Mean Absolute Error (MAE). For the unpaired datasets, no-reference metrics were adopted: Natural Image Quality Evaluator (NIQE) [45], Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [46], Perceptual Image Quality Evaluator (PIQE) [47], and Noise Level (NL) [48].
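As a brief reference for the two simplest full-reference metrics, the sketch below computes MAE and PSNR for images flattened to lists of values in [0, 1]. The pixel values are hypothetical, and the peak value of 1.0 is an assumption about the normalization; SSIM, LPIPS, and the no-reference metrics require dedicated implementations and are not shown.

```python
import math


def mae(pred, target):
    """Mean absolute error between two equally sized pixel lists."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0.2, 0.4, 0.6, 0.8]   # illustrative ground-truth pixels
enhanced = [0.3, 0.5, 0.7, 0.9]    # illustrative enhanced pixels

print(round(mae(enhanced, reference), 4), round(psnr(enhanced, reference), 2))
```

Higher PSNR and lower MAE indicate a reconstruction closer to the reference.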

5.1.2. Implementation Details

The proposed method was implemented based on PyTorch [49], and all comparative experiments were conducted on a system equipped with an NVIDIA RTX 4090 graphics card.

During the training process, the lightweight encoder–decoder and the end-to-end single-step latent residual diffusion model were trained separately, with independent Adam optimizers [50] configured for each. Both optimizers had an initial learning rate of , and the momentum parameter was uniformly set to (0.9, 0.999). During training, image patches were randomly cropped and input into the model; in the inference phase, the length and width of the image were first reflectively padded to multiples of 64, and after the enhancement, the results were cropped back to the original size.
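The pad-then-crop step used at inference can be sketched as follows. This is a minimal pure-Python illustration on a nested list; padding only the bottom and right borders is an assumption (the text does not state where the padding is applied), and the helper names are hypothetical. The mirroring rule assumes the padding is smaller than the image side.

```python
def padded_size(n, multiple=64):
    """Smallest multiple of `multiple` that is >= n."""
    return ((n + multiple - 1) // multiple) * multiple


def reflect_index(i, n):
    """Mirror an out-of-range index back inside without repeating the border."""
    return i if i < n else 2 * (n - 1) - i


def reflect_pad(image, multiple=64):
    """Reflectively pad the bottom and right borders to multiples of `multiple`."""
    h, w = len(image), len(image[0])
    new_h, new_w = padded_size(h, multiple), padded_size(w, multiple)
    return [[image[reflect_index(r, h)][reflect_index(c, w)]
             for c in range(new_w)]
            for r in range(new_h)]


def crop(image, h, w):
    """Crop back to the original height and width."""
    return [row[:w] for row in image[:h]]


# A 100 x 150 test image whose values encode their own coordinates.
image = [[r * 1000 + c for c in range(150)] for r in range(100)]
padded = reflect_pad(image)
restored = crop(padded, 100, 150)
print(len(padded), len(padded[0]), restored == image)
```

In a PyTorch pipeline, the same effect is typically obtained with a reflect-mode padding call before the network and tensor slicing afterwards.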

The time step T in the training phase was set to 1000, while the implicit sampling step S was set to 1 in both the training and inference phases. We set the noise coefficients in the residual diffusion model as a uniformly decreasing sequence, and . We set different hyper-parameters for the U-Net used in the end-to-end residual diffusion model, dividing it into four versions:

- Ours-T: C = 32, channel multiplier = 1, 1, 1, 1;

- Ours-S: C = 32, channel multiplier = 1, 2, 2, 4;

- Ours-M: C = 64, channel multiplier = 1, 2, 2, 4;

- Ours-L: C = 64, channel multiplier = 1, 2, 4, 8.

where C is the number of channels in the first hidden layer. The four versions have 0.89 M, 3.27 M, 12.41 M, and 36.26 M parameters, respectively (excluding the encoder–decoder).
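The four configurations can be written down as a small lookup table. The sketch below assumes the common U-Net convention that each resolution stage has C times its multiplier channels; the text gives only C and the multipliers, so the per-stage widths are an inference, and the dictionary layout and the function name `stage_channels` are illustrative.

```python
# Base width C and channel multipliers as listed in the text.
UNET_VARIANTS = {
    "Ours-T": {"base_channels": 32, "channel_multipliers": (1, 1, 1, 1)},
    "Ours-S": {"base_channels": 32, "channel_multipliers": (1, 2, 2, 4)},
    "Ours-M": {"base_channels": 64, "channel_multipliers": (1, 2, 2, 4)},
    "Ours-L": {"base_channels": 64, "channel_multipliers": (1, 2, 4, 8)},
}


def stage_channels(base_channels, channel_multipliers):
    """Per-stage channel widths, assuming width = C * multiplier at each stage."""
    return [base_channels * m for m in channel_multipliers]


for name, cfg in UNET_VARIANTS.items():
    print(name, stage_channels(**cfg))
```

Under this assumption the widest stage grows from 32 channels in Ours-T to 512 channels in Ours-L, which is consistent with the increase in parameter count across the four versions.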

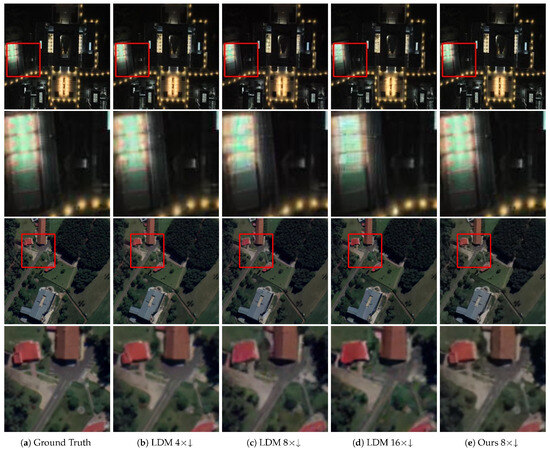

5.2. Comparison of the Encoder–Decoder

To verify the role of our lightweight encoder–decoder dedicated to low-light remote sensing, we compared its reconstruction efficiency and quality against the three encoder–decoders of the LDM (4×, 8×, and 16× compression) on the dark-iSAID and darkrs datasets. The encoder–decoders in the LDM infer by default at a size of pixels, so we also conducted experiments at this size. We report the model parameters, average reconstruction time, and PSNR and SSIM metrics on the normal-light test set of dark-iSAID in Table 1, and show a visual comparison in Figure 4. As shown in the table, our encoder–decoder has far fewer parameters (5.914 M), whereas the smallest LDM encoder–decoder has 72.793 M. Its reconstruction time is also very short, only 0.0035 s, a 203× speedup at the same compression ratio. In terms of reconstruction quality, its PSNR (30.854) and SSIM (0.891) are much higher than those of LDM 8×↓ and even exceed those of LDM 4×↓, indicating that our method has advantages in both efficiency and reconstruction quality.
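The efficiency figures quoted above can be cross-checked with a few lines of arithmetic. The sketch uses only numbers stated in the text; the implied LDM reconstruction time is a back-of-envelope value derived from the rounded 0.0035 s and the reported 203× speedup, not a measurement read from Table 1.

```python
# Values quoted in the text.
ours_params_m = 5.914          # parameters of the lightweight encoder-decoder (M)
ldm_min_params_m = 72.793      # smallest LDM encoder-decoder (M)
ours_time_s = 0.0035           # average reconstruction time (s)
reported_speedup = 203         # speedup at the same compression ratio

# How many times fewer parameters the lightweight model has.
param_ratio = ldm_min_params_m / ours_params_m

# Implied (not reported) LDM time at the same compression ratio.
implied_ldm_time_s = ours_time_s * reported_speedup

print(f"parameter reduction: {param_ratio:.2f}x")
print(f"implied LDM reconstruction time: {implied_ldm_time_s:.4f} s")
```

In other words, the lightweight encoder–decoder is roughly an order of magnitude smaller than the smallest LDM counterpart, while the speed gap is about two orders of magnitude.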

Table 1.

Quantitative comparison of the encoder–decoder. The best results are highlighted in bold.

Figure 4.

Visual comparison of reconstruction between encoder–decoder with different compression levels in LDM and ours on darkrs and dark-iSAID datasets. Image details are in magnified views.

In terms of visual comparison, the LDM with 4× compression shows slight deviations from the ground truth yet still retains a certain level of structural similarity. As the compression level of the LDM increases to 8× and 16×, the visual degradation becomes more apparent: details in the highlighted regions become blurred, edges become less distinct, and fine textures are noticeably lost, especially in complex structures such as buildings and vegetation boundaries. In contrast, our method shows a clear improvement in visual fidelity. The details, colors, and brightness are much better preserved, giving a more natural and accurate reconstruction, which further validates the superiority of our lightweight encoder–decoder in low-light remote sensing image processing.

5.3. Comparison with the State-of-the-Art Methods

In this section, we conducted both quantitative and qualitative comparative evaluations to assess the effectiveness of our proposed method against several state-of-the-art (SOTA) methods in the field of low-light remote sensing image enhancement. The comparative methods were Zero-DCE [51], RUAS [52], SCI [53], URetinexNet [22], Uformer [12], CLIP-LIT [54], PairLIE [23], NeRCo [24], GPP-LLIE [55], and LIEDNet [25].

For the dark-iSAID dataset, the quantitative results in Table 2 demonstrate the superiority of our proposed methods across four metrics (PSNR, SSIM, LPIPS, and MAE). Among our methods, Ours-L achieves the best performance: its PSNR reaches 26.838, outperforming the second-best method Uformer (26.252) by 0.586; its SSIM reaches 0.776, leading Uformer (0.692) by a large margin (0.084); its LPIPS is 0.318, the lowest across all methods, indicating minimal perceptual difference; and its MAE is 9.044, better than that of LIEDNet (9.450). The other variants (Ours-T, Ours-S, and Ours-M) also show competitive results, with PSNR values ranging from 26.354 to 26.402, SSIM from 0.755 to 0.771, LPIPS from 0.326 to 0.353, and MAE from 9.453 to 9.689. Overall, our methods, led by Ours-L, surpass state-of-the-art methods such as Uformer and LIEDNet in signal fidelity, structural integrity, perceptual quality, and pixel-level accuracy, providing a more accurate solution for low-light remote sensing image enhancement.

Table 2.

Quantitative results on the dark-iSAID test set. The best results are highlighted in bold.

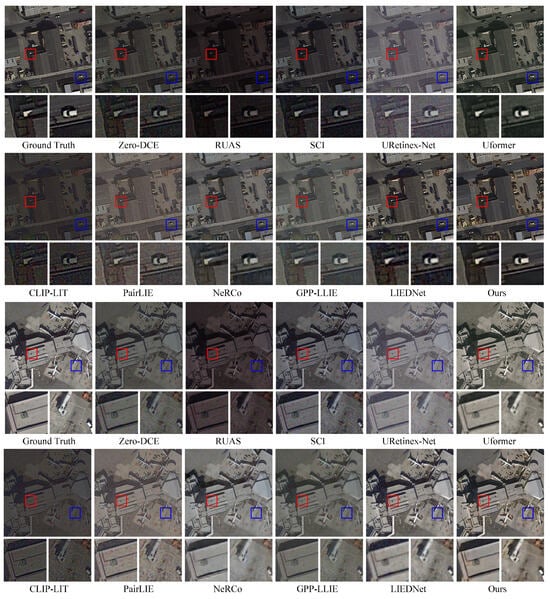

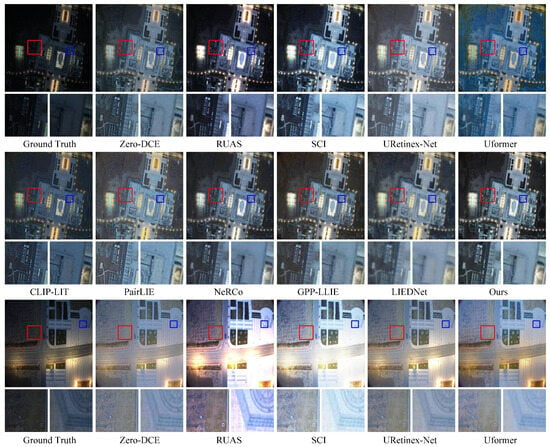

Visual comparisons shown in Figure 5 further highlight the advantages of our method. Methods such as Uformer, CLIP-LIT, PairLIE, NeRCo, GPP-LLIE, and LIEDNet have limitations. Uformer suffers from color distortion and an overall dark frame; CLIP-LIT and PairLIE fail to completely eliminate noise, with a large amount of residual noise in the zoomed-in sections; NeRCo and GPP-LLIE exhibit uneven brightness and an overall whitish tone in the frame; and LIEDNet has the issue of detail loss, where building textures and vehicle shapes appear blurred or distorted. In contrast, our method effectively suppresses noise, accurately restores colors and details, balances noise removal and detail enhancement, clearly presents targets such as buildings and vehicles, and achieves a uniform brightness distribution—thus providing excellent visual quality for low-light image enhancement on the dark-iSAID dataset.

Figure 5.

Visual comparison on dark-iSAID dataset. Image details are shown in magnified views.

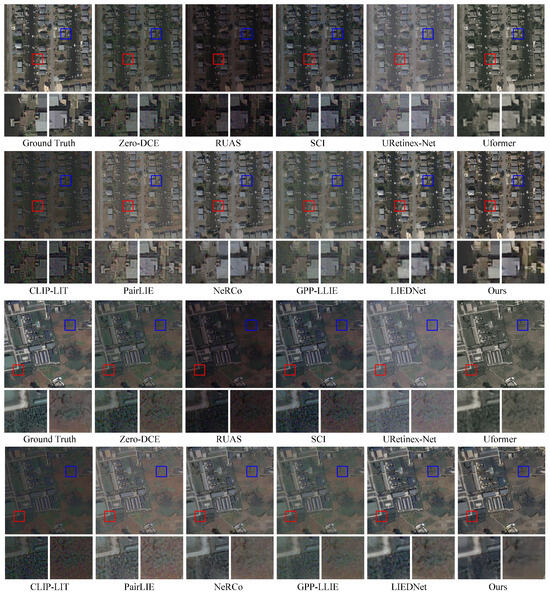

We further evaluated the methods on the darkrs dataset across the NIQE, BRISQUE, PIQE, and NL metrics. As shown in Table 3, our method achieves an NIQE score of 2.706, substantially lower than the second-best competitor NeRCo (3.313), and a BRISQUE score of 13.976, outpacing the second-best method LIEDNet (17.799), which showcases superior perceptual quality. The PIQE score reaches 6.748, standing out against LIEDNet (6.884), and the NL score reaches the second-best result of 0.315.

Table 3.

Quantitative results from Figure 6. The best results are highlighted in bold.

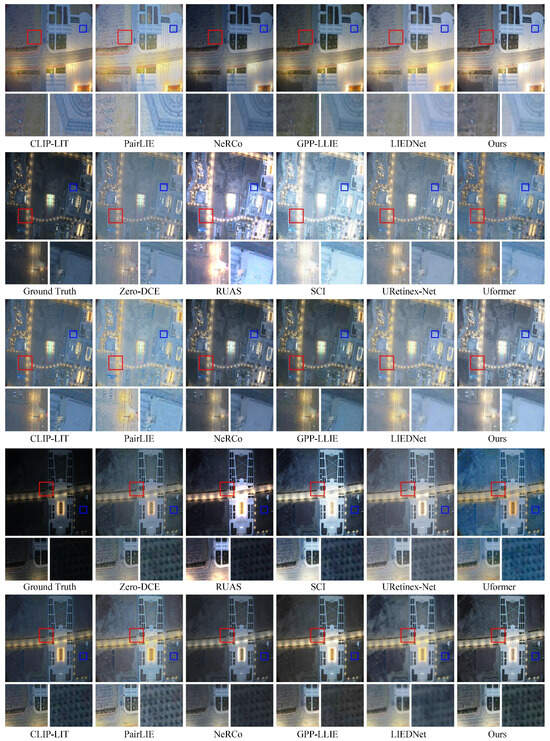

For the visual results shown in Figure 6, the brightness enhanced by NeRCo and GPP-LLIE is insufficient, resulting in an overall dark frame. LIEDNet loses details and distorts colors during the enhancement process. In contrast, our method effectively suppresses the noise, uniformly enhances the brightness, and accurately restores the scene details and colors. It clearly highlights building textures and road details, achieving a visual effect that far surpasses other methods.

Figure 6.

Visual comparison on darkrs dataset. Image details are shown in magnified views.

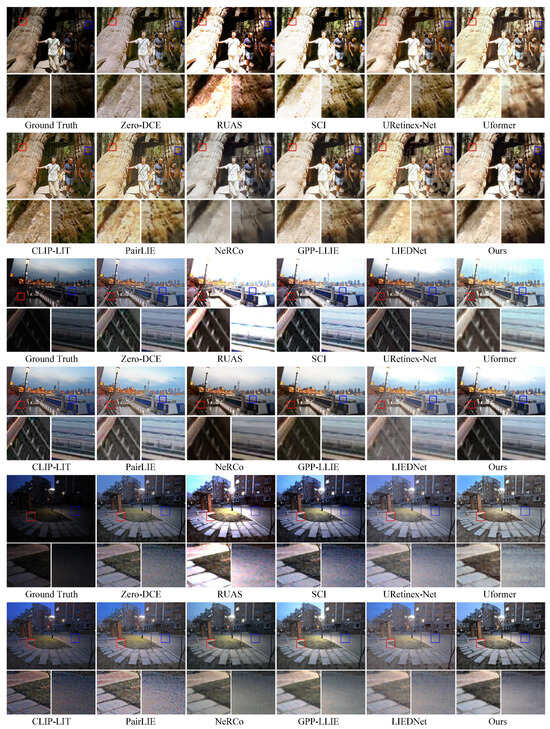

We further evaluated the performance in non-remote sensing scenarios to assess the robustness of our method. For unpaired datasets (DICM, NPE, and LIME), as shown in Table 4, our method achieves scores of 2.479, 16.363, and 0.386 in the NIQE, BRISQUE, and NL, respectively, outperforming the second-best methods Zero-DCE (2.730) in the NIQE, URetinexNet (16.433) in the BRISQUE, and GPP-LLIE (0.402) in the NL. Visual comparisons in Figure 7 indicate that Zero-DCE and URetinexNet leave a large amount of residual noise; while GPP-LLIE can effectively remove noise, it may introduce color distortion. In contrast, our method performs excellently across all scenarios, and it clearly presents elements such as tree textures, building details, and facilities in outdoor scenes.

Table 4.

Quantitative results from Figure 7. The best results are highlighted in bold.

Figure 7.

Visual comparison on DICM, NPE and LIME datasets. Image details are shown in magnified views.

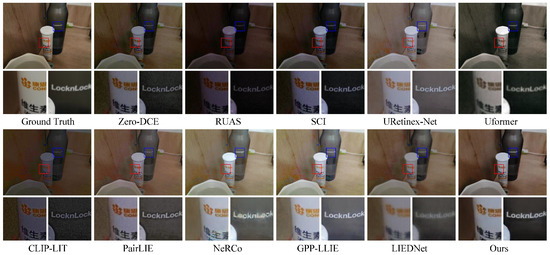

For the paired dataset (LSRW), the results are presented in Table 5 and Figure 8. Quantitatively, Ours-L achieves the highest values in the PSNR and SSIM, which are 19.420 and 0.576, respectively, surpassing the second-best methods by 0.543 and 0.031, respectively. It also attains the lowest MAE score of 22.187, which is superior to the second-best method LIEDNet (24.284). Qualitatively, NeRCo poorly integrates brightness and color, and LIEDNet fails to restore fine details. In contrast, our method effectively suppresses noise, accurately restores colors and fine details (such as wood color and text details), and ensures a uniform and natural brightness distribution, leading to visual effects that comprehensively outperform comparative methods.

Table 5.

Quantitative results on LSRW test set. The best results are highlighted in bold.

Figure 8.

Visual comparison on LSRW dataset. Image details are shown in magnified views.

5.4. Computational Complexity

In addition to the enhancement performance, computational efficiency is also important in the field of low-light remote sensing image enhancement. We evaluate the computational costs of the competing methods and of our four variants (Ours-T, Ours-S, Ours-M, and Ours-L) using the inference times (s) under different input resolutions (, , , and ); the results are summarized in Table 6. A shorter inference time indicates a lower computational cost. It should be noted that some inference times are unavailable due to out-of-memory (OOM) issues.

Table 6.

Inference times (s) under different resolutions. The best results are highlighted in bold.

Specifically, at the resolution, the CNN-based URetinexNet achieves an inference time of 0.0155, which is the lowest among the compared methods. Among our proposed methods, Ours-T, with an inference time of 0.0335, is competitive when compared with some of the other methods (such as NeRCo with 0.1620 and LIEDNet with 0.0535). As the resolution increases to , , and , our methods consistently achieve the lowest inference times, which are 0.0466, 0.0584, and 0.1098, respectively. These results outperform those of the CNN-based methods (URetinexNet and PairLIE), Transformer-based method (Uformer), implicit-neural-representation-based method (NeRCo), Mamba-based method (LIEDNet), and diffusion-based method (GPP-LLIE). As a diffusion-model-based method, our approach (0.0335) achieves approximately 20× faster inference speed than GPP-LLIE (0.6901). This indicates that our methods have great application potential for large-sized remote sensing images.
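A minimal sketch of how an average inference time and the resulting speedup could be computed is given below. The warm-up and repeat counts are assumptions, since the text does not describe the timing protocol, and the function names `average_inference_time` and `speedup` are illustrative. The final lines reproduce the approximately 20× figure from the two times quoted above.

```python
import time


def average_inference_time(fn, inputs, warmup=2, repeats=10):
    """Average wall-clock seconds per call of `fn(inputs)` after a short warm-up.

    Note: for GPU models, the device should be synchronized before reading the
    clock (e.g., torch.cuda.synchronize() in PyTorch); this is omitted here so
    that the sketch runs with the standard library only.
    """
    for _ in range(warmup):
        fn(inputs)
    start = time.perf_counter()
    for _ in range(repeats):
        fn(inputs)
    return (time.perf_counter() - start) / repeats


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds


# Stand-in workload to show the timing call; a real model would go here.
dummy_time = average_inference_time(lambda xs: sum(x * x for x in xs), list(range(1000)))
print(f"dummy workload: {dummy_time:.6f} s per call")

# Times quoted in the text: GPP-LLIE (0.6901 s) versus our approach (0.0335 s).
print(f"speedup over GPP-LLIE: {speedup(0.6901, 0.0335):.1f}x")
```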

5.5. Ablation Studies

To assess the effectiveness of several key components in our proposed method, we conducted a series of ablation studies. All ablation experiments were performed using the dark-iSAID dataset. As shown in Table 7, our default setting is highlighted in bold.

Table 7.

Ablation studies on the design effectiveness of our method. “w/o” indicates without.

5.5.1. End-to-End Training

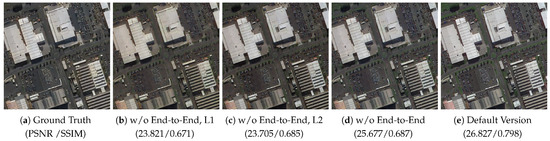

To verify the effectiveness of our end-to-end trained residual diffusion model, we conducted an ablation experiment using the standard diffusion model training method (w/o end-to-end). Specifically, we no longer synchronously execute the sampling process of the diffusion model during the training phase, so that the residual diffusion model no longer directly serves the reconstruction task. Instead, it focuses on using the U-Net to predict the residuals and noise that need to be removed at each step. Our training objective is updated to , furthermore, we also tested variants with only L1 loss (w/o end-to-end, L1 loss) and only L2 loss (w/o end-to-end, L2 loss).

From Table 7, the PSNR of “w/o end-to-end” (26.003) is lower than that of the default version (26.838), and its SSIM (0.659) and LPIPS (0.406) are also less optimal. The L1 loss variant has a PSNR of 25.110, and the L2 loss variant has a PSNR of 24.868, both significantly lower than the end-to-end training. The visual and metric comparisons on a single image are presented in Figure 9. All of these show that the end-to-end training mechanism helps the model better fit the task, enabling the residual diffusion model to more effectively contribute to image reconstruction, and the combined L1 + MS-SSIM loss is more beneficial for performance improvement compared with using a single loss.

Figure 9.

Visual comparison of ablation studies on end-to-end training. “w/o” indicates without.

5.5.2. Sampling Steps and Noise Coefficient

In the experimental settings of Section 5.1.2, we set the number of implicit sampling steps S to 1, the coefficient sequence of the noise diffusion process to decrease uniformly, and . We conducted ablation experiments to evaluate the impact of different S and . Specifically, we further tried combinations of implicit sampling steps S of 2 and 5, and of 0.1.

The results in Table 7 show that when S increases from 1 to 2 and then to 5, the model’s performance fluctuates only slightly across the metrics: the PSNR varies by no more than 0.349 dB, the SSIM by no more than 0.005, and the LPIPS by less than 0.012. This stability benefits from the end-to-end training mechanism and the physical prior guidance we adopted. End-to-end training enables the model to directly serve the reconstruction task, while the physical priors provide a stable guidance framework, thus ensuring the robustness of the model’s performance. Meanwhile, it can be found that the differences under the two settings (, ) are also small. This indicates that under the guidance of physical priors, the model adapts well to changes in the noise coefficients, whose specific values do not significantly affect the quality of low-light image enhancement.

5.5.3. Physical Priors

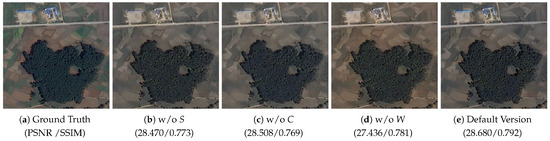

Finally, we analyzed the role of each illumination-invariant physical prior. In the experiment, we separately removed each of the three priors () introduced in the model and observed the changes in the various metrics one by one.

As shown in Table 7, the PSNR values of “w/o S” (26.293), “w/o C” (26.181), and “w/o W” (25.805) are all lower than that of the default version (26.838). Their SSIM and LPIPS values are also worse than those of the default. This demonstrates that each component of the physical priors plays a positive role in the model. The visual and metric comparisons on a single image are presented in Figure 10. The integration of these priors helps the model better conform to the physical characteristics of images during the enhancement process, thereby improving the overall quality of low-light remote sensing image enhancement.
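The size of each prior's contribution can be read off by subtracting the ablated PSNR from the default PSNR. The short sketch below does this with the values quoted in the text; the variable names are illustrative, and ranking the priors by PSNR drop alone is a simplification that ignores SSIM and LPIPS.

```python
default_psnr = 26.838
ablated_psnr = {"w/o S": 26.293, "w/o C": 26.181, "w/o W": 25.805}

# PSNR lost (in dB) when each prior is removed from the default model.
psnr_drop = {name: round(default_psnr - value, 3) for name, value in ablated_psnr.items()}

# The prior whose removal hurts PSNR the most.
largest_drop = max(psnr_drop, key=psnr_drop.get)

for name, drop in psnr_drop.items():
    print(f"{name}: -{drop:.3f} dB")
print("largest drop:", largest_drop)
```

By this measure, removing W costs about 1.03 dB, roughly twice the loss from removing S (about 0.55 dB) or C (about 0.66 dB).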

Figure 10.

Visual comparison of ablation studies on physical priors. “w/o” indicates without.

6. Discussion

The proposed framework integrates a lightweight encoder–decoder, an end-to-end trained single-step residual diffusion model, and illumination-invariant physical priors. Its design addresses critical challenges in existing methods, particularly in balancing efficiency and performance.

The dedicated lightweight encoder–decoder stands out by targeting the unique characteristics of low-light remote sensing scenes. Unlike general-purpose encoders–decoders that often carry redundant computations and struggle to preserve subtle features in dim environments, this tailored component efficiently compresses and reconstructs information. This adaptability to low-light feature distributions lays a solid foundation for subsequent diffusion-based enhancement.

The end-to-end trained single-step residual diffusion model redefines the efficiency of diffusion models in this domain. By abandoning the multi-step noise estimation paradigm, it streamlines the enhancement pipeline without compromising quality. This is crucial for remote sensing tasks, where rapid processing of large-volume imagery is essential. The mechanism’s success highlights that diffusion models can be optimized for practical speed requirements when aligned with task-specific goals.

The integration of illumination-invariant priors addresses a long-standing issue in low-light processing: ambiguity caused by missing details. By imposing structural guidance rooted in physical properties, the framework reduces generation blurriness and enhances robustness across varying low-light conditions. This physics-informed guidance bridges the gap between data-driven learning and domain knowledge, demonstrating that incorporating prior knowledge can significantly stabilize and improve model performance.

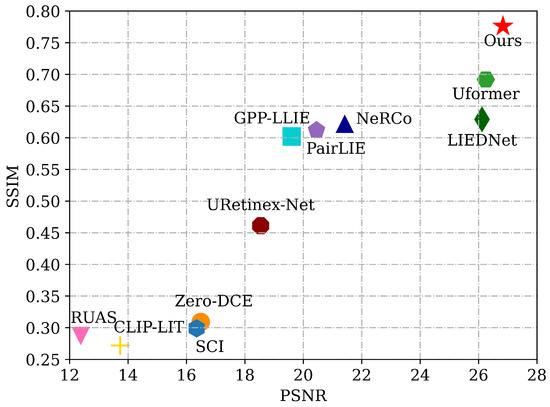

Comparisons with mainstream methods underscore the framework’s superiority in both quantitative metrics (e.g., PSNR and SSIM shown in Figure 11) and visual quality. However, it is important to note that the framework’s performance may vary in extreme low-light conditions, as the priors rely on at least minimal residual information to guide the enhancement. In addition, in some scenarios where there is a large gap between bright and dark areas, the model may experience partial overexposure. Future work could explore fixing these issues and extending this method to low-light enhancement of multispectral remote sensing images.

Figure 11.

The metrics of different methods on dark-iSAID.

7. Conclusions

In conclusion, this study presents a robust and efficient framework for low-light remote sensing image enhancement, with four key contributions. First, the lightweight encoder–decoder, tailored to low-light remote sensing scenes, reduces computational redundancy while preserving critical features. Second, the end-to-end trained single-step residual diffusion model accelerates inference significantly, enabling fast processing without sacrificing quality. Third, illumination-invariant priors provide structural guidance, mitigating blurriness from missing details and enhancing robustness in complex environments. Finally, extensive experiments on multiple datasets validate the framework’s superiority over mainstream methods, confirming its practical value.

This work balances speed, accuracy, and perceptual quality in low-light remote sensing image enhancement, and the framework meets the demands of real-world remote sensing applications, where both efficiency and reliability are paramount. Future research could focus on extending the framework to handle ultra-low-light scenarios and general image restoration tasks, further broadening its impact in remote sensing and related fields.

Author Contributions

Conceptualization, B.D.; methodology, B.D. and B.S.; software, B.D.; validation, B.D.; formal analysis, B.D. and B.S.; investigation, B.D. and X.S.; resources, B.D.; data curation, B.D.; writing—original draft preparation, B.D.; writing—review and editing, B.D.; visualization, B.D.; supervision, B.S.; project administration, X.S.; funding acquisition, B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 593–600.

- Singh, K.; Kapoor, R.; Sinha, S.K. Enhancement of low exposure images via recursive histogram equalization algorithms. Optik 2015, 126, 2619–2625.

- Wang, Q.; Ward, R.K. Fast image/video contrast enhancement based on weighted thresholded histogram equalization. IEEE Trans. Consum. Electron. 2007, 53, 757–764.

- Zhang, Y.; Guo, X.; Ma, J.; Liu, W.; Zhang, J. Beyond brightening low-light images. Int. J. Comput. Vis. 2021, 129, 1013–1037.

- Rahman, Z.U.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 16–19 September 1996; IEEE: New York, NY, USA, 1996; Volume 3, pp. 1003–1006.

- Cai, R.; Chen, Z. Brain-like retinex: A biologically plausible retinex algorithm for low light image enhancement. Pattern Recognit. 2023, 136, 109195.

- Hao, S.; Han, X.; Guo, Y.; Xu, X.; Wang, M. Low-light image enhancement with semi-decoupled decomposition. IEEE Trans. Multimed. 2020, 22, 3025–3038.

- Ying, Z.; Li, G.; Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv 2017, arXiv:1711.00591.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Wang, H.; Xu, K.; Lau, R.W. Local color distributions prior for image enhancement. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 343–359.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17683–17693.

- Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12504–12513.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Peebles, W.; Xie, S. Scalable diffusion models with transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4195–4205.

- Dhariwal, P.; Nichol, A. Diffusion models beat gans on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

- Saharia, C.; Chan, W.; Chang, H.; Lee, C.; Ho, J.; Salimans, T.; Fleet, D.; Norouzi, M. Palette: Image-to-image diffusion models. In Proceedings of the ACM SIGGRAPH 2022 Conference Proceedings, Vancouver, BC, Canada, 7–11 August 2022; pp. 1–10.

- Saharia, C.; Ho, J.; Chan, W.; Salimans, T.; Fleet, D.J.; Norouzi, M. Image super-resolution via iterative refinement. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4713–4726.

- Guo, L.; Wang, C.; Yang, W.; Wang, Y.; Wen, B. Boundary-Aware Divide and Conquer: A Diffusion-based Solution for Unsupervised Shadow Removal. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 12999–13008.

- Liu, J.; Wang, Q.; Fan, H.; Wang, Y.; Tang, Y.; Qu, L. Residual denoising diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 2773–2783.

- Zheng, D.; Wu, X.M.; Yang, S.; Zhang, J.; Hu, J.F.; Zheng, W.S. Selective hourglass mapping for universal image restoration based on diffusion model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 25445–25455.

- Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

- Fu, Z.; Yang, Y.; Tu, X.; Huang, Y.; Ding, X.; Ma, K.K. Learning a simple low-light image enhancer from paired low-light instances. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Paris, France, 2–6 October 2023; pp. 22252–22261.

- Yang, S.; Ding, M.; Wu, Y.; Li, Z.; Zhang, J. Implicit neural representation for cooperative low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12918–12927.

- Liu, M.; Cui, Y.; Ren, W.; Zhou, J.; Knoll, A.C. LIEDNet: A Lightweight Network for Low-Light Enhancement and Deblurring. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 6602–6615.

- Xing, L.; Qu, H.; Xu, S.; Tian, Y. CLEGAN: Toward Low-Light Image Enhancement for UAVs via Self-Similarity Exploitation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Yao, Z.; Fan, G.; Fan, J.; Gan, M.; Philip Chen, C.L. Spatial–Frequency Dual-Domain Feature Fusion Network for Low-Light Remote Sensing Image Enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Zhao, X.; Huang, L.; Li, M.; Han, C.; Nie, T. Atmospheric Scattering Model and Non-Uniform Illumination Compensation for Low-Light Remote Sensing Image Enhancement. Remote Sens. 2025, 17, 2069.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention--MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. arXiv 2020, arXiv:2010.02502.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

- Van Den Oord, A.; Vinyals, O.; Kavukcuoglu, K. Neural discrete representation learning. arXiv 2017, arXiv:1711.00937.

- Esser, P.; Rombach, R.; Ommer, B. Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12873–12883.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Jiang, H.; Luo, A.; Liu, X.; Han, S.; Liu, S. Lightendiffusion: Unsupervised low-light image enhancement with latent-retinex diffusion models. In Proceedings of the European Conference on Computer Vision, Seattle, WA, USA, 17–18 June 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 161–179.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss functions for image restoration with neural networks. IEEE Trans. Comput. Imaging 2016, 3, 47–57.

- Lengyel, A.; Garg, S.; Milford, M.; van Gemert, J.C. Zero-shot day-night domain adaptation with a physics prior. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 4399–4409.

- Waqas Zamir, S.; Arora, A.; Gupta, A.; Khan, S.; Sun, G.; Shahbaz Khan, F.; Zhu, F.; Shao, L.; Xia, G.S.; Bai, X. isaid: A large-scale dataset for instance segmentation in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019; pp. 28–37.

- Lee, C.; Lee, C.; Kim, C.S. Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans. Image Process. 2013, 22, 5372–5384.

- Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

- Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2rnet: Low-light image enhancement via real-low to real-normal network. J. Vis. Commun. Image Represent. 2023, 90, 103712.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015; pp. 1–6.

- Chen, G.; Zhu, F.; Ann Heng, P. An efficient statistical method for image noise level estimation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 477–485.

- Paszke, A. Pytorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1780–1789.

- Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10561–10570.

- Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

- Liang, Z.; Li, C.; Zhou, S.; Feng, R.; Loy, C.C. Iterative prompt learning for unsupervised backlit image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 8094–8103.

- Zhou, H.; Dong, W.; Liu, X.; Zhang, Y.; Zhai, G.; Chen, J. Low-light image enhancement via generative perceptual priors. Proc. AAAI Conf. Artif. Intell. 2025, 39, 10752–10760.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).