1. Introduction

During nighttime driving, complex environmental conditions such as fog, rain, and blind spots hinder the recognition of critical scene features. These challenges primarily arise from insufficient illumination. To overcome these limitations, it is necessary to utilize complementary spectral bands to capture additional scene information. By operating in a specific band of the electromagnetic spectrum, infrared imaging can detect the thermal radiation emitted by objects in the environment. Infrared imaging offers strong penetration capabilities and a high sensitivity to thermal sources. As a result, infrared images can effectively capture precise feature information. The fusion of visible and infrared images significantly enhances perceptual capabilities under such challenging conditions. Research indicates that this fusion technology holds substantial theoretical significance and practical potential in applications such as nighttime autonomous driving [1] and military reconnaissance [2]. In particular, enhanced low-illumination and infrared image fusion techniques have significant applications in remote sensing, including nighttime urban monitoring, disaster response, and resource surveying.

In terms of application domain, image fusion primarily comprises three categories: remote sensing image fusion [3,4], visible–infrared image fusion [5,6,7,8], and multi-focus image fusion [9,10]. Methodologically, it may be broadly categorized into traditional multi-scale transform methods and deep learning-based algorithms [11,12,13,14,15]. Among these, deep learning fusion methods constitute a current research focus due to their powerful feature extraction capabilities. These approaches use deep neural networks to extract hierarchical image features and then reconstruct fused images using specific integration strategies.

Under nighttime vision conditions, visible images often fail to capture background details due to insufficient illumination. Traditional visible and infrared image fusion methods, such as the Discrete Wavelet Transform [11] and the Nonsubsampled Contourlet Transform [12], typically only superimpose thermal source information. However, they have limited capacity to represent latent features within nighttime blind spots, including low-illumination regions and areas saturated by intense lighting. As a result, the completeness of fused image information is restricted. The fundamental reason lies in the inability of traditional methods to fully exploit the differences in deep image features, making them ineffective at simultaneously mitigating the degradation caused by low illumination and the distortion induced by overexposure in visible images. This limitation, in turn, constrains the reliability of visual perception in nighttime driving scenarios. Although deep neural networks offer significant advantages in feature extraction and nonlinear modeling, providing new opportunities to overcome the performance bottlenecks of traditional fusion approaches, the inherent characteristics of visible images, such as low illumination and high dynamic range, still lead to substantial performance variation across different deep learning-based fusion algorithms [16].

Overall, the primary challenges confronting infrared–visible image fusion in nocturnal environments can be summarized as follows:

Image Information Deficiency: Imaging detectors inherently acquire incomplete information in nighttime scenes. Background and target features are constrained by grayscale dynamic range limitations and noise interference, causing feature loss that complicates fusion processes and leads to suboptimal fused image quality.

Insufficient Target Feature Saliency: When targets reside in visual blind zones within natural scenes, low-illumination conditions obscure their features in visible images. Meanwhile, infrared imagery provides only thermal signatures, substantially impairing subsequent target detection performance.

Local Contrast Saturation: In dark night environments, light illumination and reflections frequently induce localized oversaturation in detector-captured images. This phenomenon severely compromises image quality and substantially amplifies the difficulty of image enhancement.

Current mainstream visible–infrared image fusion algorithms employ diverse integration strategies to acquire enriched detailed information. However, due to extensive information-deficient regions (such as blind zones) in nocturnal visible images, fused results remain limited in achieving comprehensive scene representation. Jia et al. [17] proposed fusing enhanced visible images with infrared counterparts to improve fusion image contrast. This approach, however, introduces two significant limitations. First, visible images are highly susceptible to oversaturation; second, the capacity for critical feature representation remains insufficient. To address these challenges, this work proposes a deep adaptive enhancement fusion algorithm for visible and infrared images, specifically optimized for night vision environments.

This study addresses critical challenges in infrared and visible image fusion for night vision systems, including texture loss, target degradation, and artifacts. First, to overcome adverse imaging conditions such as low illumination, rain, and fog, we develop a local color-adaptive enhancement model based on color mapping and adaptive enhancement principles. This model suppresses oversaturation in night vision imagery while effectively enhancing degraded content.

Next, a pretrained ResNet152 network extracts five layers of deep features separately from both the enhanced visible and the original infrared images. For each feature map, we execute max pooling and average pooling operations in parallel. Max pooling captures salient feature differences, while average pooling preserves global background information. Concatenating both pooling outputs generates comprehensive feature representations that retain gradient and edge information from both source images.

Finally, we apply Linear Discriminant Analysis to project feature data into a more discriminative space. Simultaneously, quadtree decomposition extracts salient infrared features to construct adaptive weighting factors for reconstructing the final fused image. Validation combines objective metrics with subjective visual assessments. Experiments demonstrate the proposed framework’s superior fusion performance over current mainstream algorithms on public benchmark datasets.

2. Related Work

Recent advances in deep learning, particularly within domains such as pattern recognition, have significantly advanced the field of image fusion, leading to the development of numerous improved fusion network models. However, low-illumination visible images inherently suffer from insufficient brightness and local saturation artifacts. When relying solely on neural networks, the fusion of visible and infrared images often produces suboptimal results. Accordingly, this section provides an overview of the current research status of low-illumination visible image enhancement algorithms, followed by a summary of the latest progress in visible and infrared image fusion algorithms.

2.1. Visible Image Enhancement Algorithms

Both non-uniformly illuminated images and globally low-illumination images require enhancement to restore visual quality. Specifically, non-uniform illumination is characterized by a spatially uneven light distribution, whereas low-illumination conditions manifest as globally insufficient brightness across the entire scene. The objective for enhancing both types is to restore images to a perceptually favorable state with clearly discernible details. In non-uniformly illuminated images, indiscriminate global brightness enhancement often causes severe oversaturation in relatively normal or moderately bright regions. Similarly, in low-illumination images, substantial luminance amplification used to reveal obscured details in dark areas can cause oversaturation in inherently bright regions such as license plates, headlights, or reflective surfaces. This resultant oversaturation precipitates irreversible information loss and noise amplification, thereby compromising visual quality.

For non-uniformly illuminated image enhancement, Pu et al. [18] proposed treating images as products of luminance mapping and contrast measure transfer functions through a perceptually inspired method. This approach frequently causes over-enhancement. Li et al. [19] applied a multi-scale top-hat transformation to estimate background illumination and correct light inhomogeneity. Their method suffers from unevenness and threshold drift. Pu et al. [20] introduced a contrast/residual decomposition framework that separates images into contrast and residual components, with the contrast component containing the scene details. However, this technique is computationally intensive. Lin et al. [21] presented two CNN-based systems using convolutional layers with exponential and logarithmic activation functions for unsupervised enhancement. These models suffer from high complexity and slow convergence.

For low-illumination image enhancement, the Retinex theory models this process as a color mapping transformation [22]. Images processed by this method exhibit a visually smoother appearance, and the texture details and features present in the original image are significantly enhanced. Zhang et al. [23] proposed a single convolutional layer model (SCLM). The algorithm introduces a local adaptation module that learns a set of shared parameters to accomplish local illumination correction and address the issue of varied exposure levels in different image regions. Although this method reduces oversaturation, it produces only marginal improvements in image enhancement.

Additionally, Luo et al. [24] proposed a pseudo-supervised image enhancement method. Their approach first employs quadratic curves to generate pseudo-clear images. These pseudo-paired images are then fed into two parallel isomorphic branches, ultimately producing enhanced results through knowledge learning. However, the improvement in image details was marginal. Fan et al. [25] introduced an end-to-end illumination image enhancement model called the multi-scale low-illumination image enhancement network with illumination constraints to achieve enhanced generalization capability and stable performance. Despite these advantages, the model was largely ineffective in mitigating overexposure. Jiang et al. [26] developed an efficient unsupervised generative adversarial network. This algorithm demonstrates broad applicability for enhancing real-world images across diverse domains, though it exhibits a suboptimal denoising performance. Consequently, adaptive enhancement methods capable of processing bright regions and low-contrast regions in low-illumination images without inducing oversaturation remain an unmet research need.

2.2. Infrared and Visible Image Fusion Algorithms

Research on image fusion algorithms for night vision systems continues to advance, yet significant challenges persist. Gao et al. [27] proposed a new method for infrared and visible image fusion based on a densely connected disentanglement representation generative adversarial network. However, their fused images exhibited incomplete enhancement of salient features from visible images, inadequate enhancement of target information from infrared images, and weak visual perception. Zhao et al. [28] introduced a model-based convolutional neural network to preserve both the thermal radiation information of infrared images and the texture details of visible images. However, these features remained inadequately enhanced in the fusion results. In parallel, Wang et al. [29] proposed a cross-scale iterative attention adversarial fusion network, incorporating a cross-modal attention integration module to merge content from different modal images. However, their approach amplified noise together with details, resulting in over-enhancement and distortion. Ma et al. [30] applied a state-of-the-art fusion algorithm to combine nighttime visible and infrared images. While the algorithm effectively enhanced target information in the fused image, the overall scene information remained less prominent. Park et al. [15] developed a fusion algorithm based on cross-modal transformers, which captures global interactions by faithfully extracting complementary information from source images. However, the enhancement of night vision images was not significant. Finally, Tang et al. [8] proposed a darkness-free infrared and visible image fusion method that effectively illuminates dark areas and facilitates complementary information integration. Yet, the fused image exhibited slight color distortion.

Beyond these fundamental approaches, Transformer- and GAN-based frameworks have gained prominence. Vibashan et al. [31] introduced a Transformer-based image fusion method that models long-range dependencies between image patches through a self-attention mechanism, with its core mechanism residing in learning cross-modal global feature interactions and representations. This method, however, lacks model-specific preprocessing capabilities and fails to effectively extract salient structures and fundamental texture information from feature maps. Wang et al. [32] developed a GAN-based fusion approach that primarily leverages the adversarial learning framework between a generator and a discriminator, aiming to generate fused results preserving salient features from source images. This methodology is susceptible to challenges during adversarial training, including mode collapse, training instability, and convergence difficulties.

When applied to downstream visual tasks, fusion methods face additional limitations. Wang et al. [33] presented a multi-scale gated fusion network to enhance change detection accuracy, utilizing EfficientNetB4 for bitemporal feature extraction. However, this approach demonstrates a limited generalization capability and unstable performance. Separately, Ma et al. [34] proposed a novel cross-domain image fusion framework based on the Swin Transformer for long-range learning. Nevertheless, their method lacks specialized optimization for nighttime infrared and visible image fusion, which is our target domain.

3. The Proposed Fusion Framework

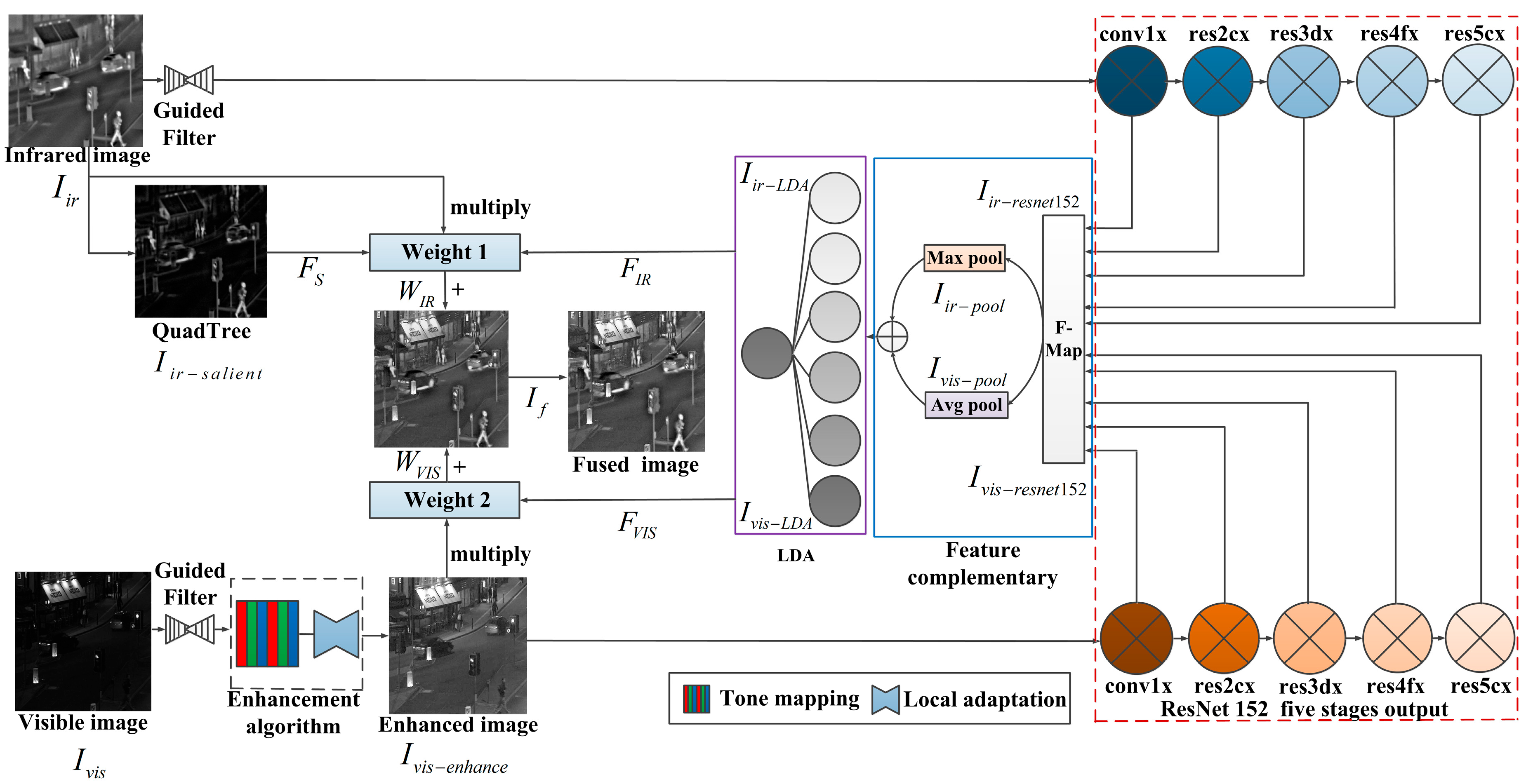

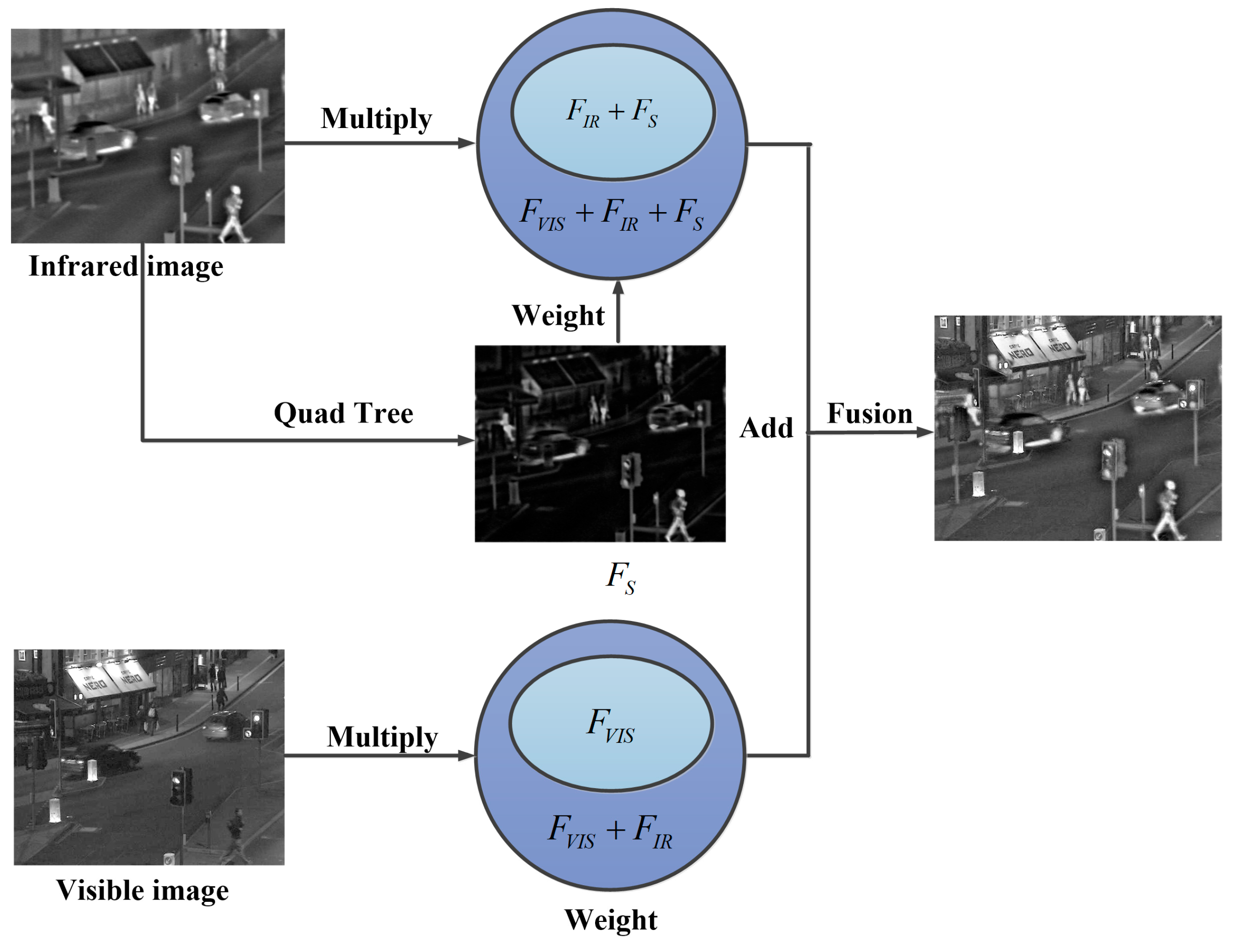

As shown in Figure 1, the proposed visible and infrared image fusion pipeline operates in two stages: enhancing nighttime visible images, then fusing them with infrared data to deliver high-quality fused results.

Figure 2 details the proposed night vision image fusion framework. The system takes visible and infrared images as inputs. For the visible image, we apply color mapping and adaptive enhancement to suppress oversaturation. A pretrained ResNet152 model then extracts hierarchical feature maps separately from both the enhanced visible image and the original infrared input. At each feature level, we execute max pooling and average pooling operations in parallel: max pooling captures salient local feature variations, while average pooling preserves global contextual information. We subsequently project the fused pooling features into a more discriminative subspace using Linear Discriminant Analysis (LDA). Concurrently, we implement quadtree decomposition to derive salient infrared features, constructing adaptive weighting factors from these features for high-quality fused image reconstruction.

This section details the proposed fusion network. Section 3.1 presents the enhancement algorithm. Section 3.2 introduces the infrared feature extraction method. Section 3.3 describes the deep learning-based fusion strategy.

3.1. Image Enhancement Algorithm

In night vision systems, visible images often suffer from low illumination and noise. Preprocessing is essential for noise removal, a crucial step for enhancing image visibility. Image filtering aims to reduce noise, often at the cost of slight blurring. Night vision visible images typically exhibit fuzzy edges and dark backgrounds. The neighborhood average method [35] leverages spatial proximity and pixel similarity to suppress edge blurring. For improved edge preservation, the bilateral filter [36] offers a nonlinear approach that incorporates both spatial information and gray level similarity.

Bilateral filtering combines a spatial Gaussian kernel with a range kernel based on gray level similarity. The spatial Gaussian ensures that only pixels within the neighborhood of the center pixel influence it, with weights that decay with spatial distance. Meanwhile, the range Gaussian ensures that only pixels whose gray values are similar to that of the center pixel contribute to the blur operation. The method therefore smooths images while preserving edges [37]. This edge-preserving smoothing property provides a more accurate and smoother illumination estimate for subsequent adaptive brightness adjustment, thereby effectively preventing halo artifacts or oversaturation during tone mapping.
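The interplay of the two kernels can be made concrete with a brute-force sketch. The function name `bilateral_filter`, the window radius, and the two standard deviations `sigma_s` and `sigma_r` are illustrative assumptions and are not taken from the paper; the input is assumed to be a single-channel image scaled to [0, 1].

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Brute-force bilateral filter for a 2-D float image (assumed range [0, 1])."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    num = np.zeros_like(img)
    den = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbor image shifted by (dy, dx) relative to the center pixel.
            shifted = padded[radius + dy:radius + dy + h,
                             radius + dx:radius + dx + w]
            # Spatial kernel: weight decays with distance from the center.
            spatial = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))
            # Range kernel: weight decays with gray-level difference.
            gray = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = spatial * gray
            num += weight * shifted
            den += weight
    return num / den
```

On an ideal step edge the range kernel gives almost no weight to pixels on the other side of the edge, so the edge passes through essentially unchanged, while small fluctuations inside a flat region are averaged out.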

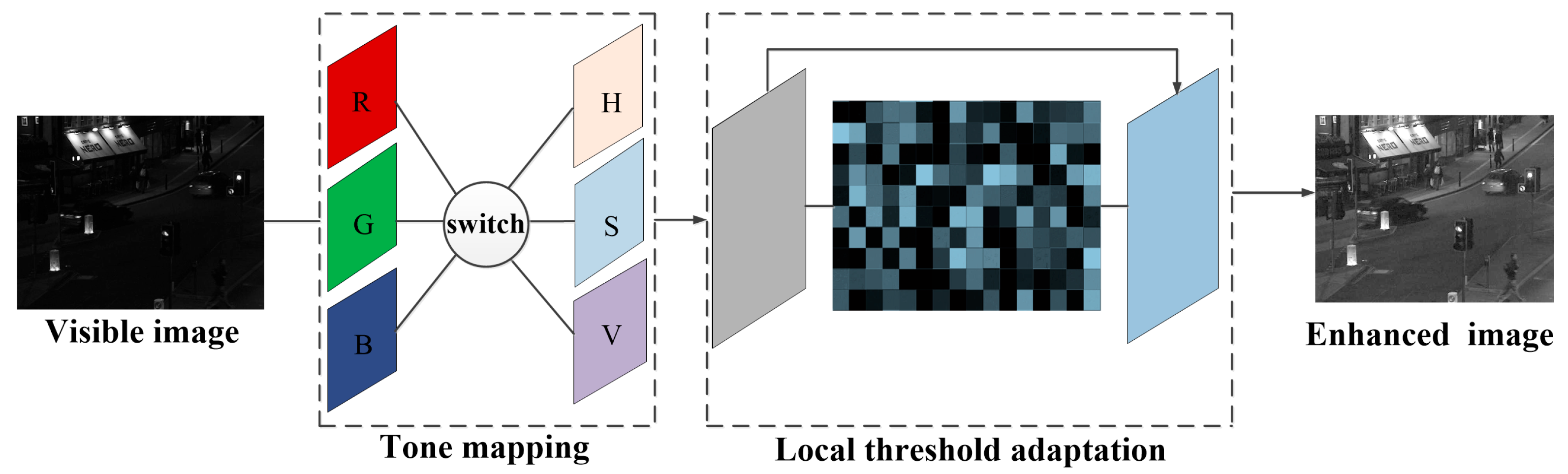

Figure 3 shows the image enhancement framework. This process first applies tone mapping, followed by adaptive local thresholding.

The enhancement process is described as follows. Given an input image $p$ and a guidance image $I$ (typically $I = p$), we obtain the filtered output $q$. The approach relies on a core assumption of guided filtering: within a local window $\omega_k$, the output relates linearly to the guidance image. This is illustrated as follows:

$$q_i = a_k I_i + b_k, \quad \forall i \in \omega_k \tag{1}$$

where $a_k$ represents the linear coefficient, $b_k$ denotes the bias term, $k$ represents the window index, $\omega_k$ indicates a local window, $I_i$ represents the guidance image's pixel value at location $i$, and $q_i$ indicates the filtered output.

The coefficient $a_k$ is derived as follows:

$$a_k = \frac{\sigma_k^2}{\sigma_k^2 + \epsilon} \tag{2}$$

where $\sigma_k^2$ represents the variance of the guidance image $I$ within window $\omega_k$ and $\epsilon$ denotes the smoothing regularization parameter.

The bias term $b_k$ is as follows:

$$b_k = (1 - a_k)\,\bar{p}_k \tag{3}$$

where $\bar{p}_k$ represents the mean of the original image $p$ within window $\omega_k$.
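The local linear model described above can be sketched in a few lines for the self-guided case, in which the guidance image equals the input. This is a minimal illustration under that assumption, not the authors' implementation; the helper `box_mean`, the window radius, and the value of `eps` are hypothetical choices.

```python
import numpy as np

def box_mean(img, radius):
    """Mean over a (2*radius+1)^2 window, with edge replication at the borders."""
    h, w = img.shape
    padded = np.pad(img, radius, mode="edge")
    total = np.zeros((h, w), dtype=np.float64)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            total += padded[dy:dy + h, dx:dx + w]
    return total / (2 * radius + 1) ** 2

def self_guided_filter(p, radius=2, eps=1e-2):
    """Guided filter with the input used as its own guidance image."""
    p = np.asarray(p, dtype=np.float64)
    mean_p = box_mean(p, radius)
    var_p = box_mean(p * p, radius) - mean_p ** 2
    a = var_p / (var_p + eps)      # linear coefficient per window
    b = (1.0 - a) * mean_p         # bias term per window
    # Average the coefficients of all windows that cover each pixel.
    return box_mean(a, radius) * p + box_mean(b, radius)
```

In flat regions the local variance is far below `eps`, so the coefficient tends to zero and the output approaches the local mean; near strong edges the variance dominates, the coefficient tends to one, and the edge is preserved.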

When processing nighttime visible images in foggy backlit scenes, the initial enhancement remains inadequate. To address localized oversaturation, we integrate color mapping and adaptive saturation suppression.

Because the visual system responds most strongly to green, followed by red and then blue, using different weights will yield a more reasonable grayscale image. The following values are derived based on experiment and theory.

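We compute the luminance $L$ as a weighted sum of the color channels:

$$L = w_R R + w_G G + w_B B, \quad w_G > w_R > w_B \tag{4}$$

where $R$, $G$, and $B$ represent the red, green, and blue components in the image, respectively.

The logarithmic mean brightness is defined by Equation (5) as follows:

$$\bar{L} = \exp\!\left(\operatorname{mean}\big(\ln(\delta + L(x, y))\big)\right) \tag{5}$$

where $\bar{L}$ represents the average logarithm of the input luminance and $\delta$ denotes a small constant, whose primary purpose is to avoid numerical overflow when performing log calculations on pure black pixels. $\operatorname{mean}(\cdot)$ is the arithmetic mean operator and $\ln$ is the natural logarithm.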
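As a concrete illustration of the luminance and log-average computations, the sketch below assumes the widely used ITU-R BT.601 channel weights (0.299, 0.587, 0.114), which respect the green > red > blue ordering described in the text but are an assumption here, since the exact values used by the authors are not reproduced in this section. The default `delta` is likewise a hypothetical choice.

```python
import numpy as np

# Assumed BT.601 weights for (R, G, B); green is weighted most, blue least.
REC601 = np.array([0.299, 0.587, 0.114])

def luminance(rgb):
    """Weighted sum of the color channels; rgb has shape (..., 3)."""
    return np.asarray(rgb, dtype=np.float64) @ REC601

def log_average(lum, delta=1e-6):
    """Exponential of the mean log-luminance; delta guards against log(0)."""
    lum = np.asarray(lum, dtype=np.float64)
    return float(np.exp(np.mean(np.log(delta + lum))))
```

Because the average is taken in the log domain, a few very bright pixels (for example headlights) pull this statistic up far less than they would pull up an arithmetic mean, which is why it is a common anchor for global tone mapping.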

The fully adapted output is

where

represents the luminance at

and

denotes its global maximum.

Since enhanced images exhibit elevated luminance in saturated regions, adaptive processing is essential to prevent oversaturation artifacts. Accordingly, we employ adaptive saturation suppression.

where

represents the minimum luminance value of the input image and

denotes the maximum luminance value of the input image. The output

constitutes the final enhanced result.

The process optimally elevates global brightness before fusion with the infrared image.

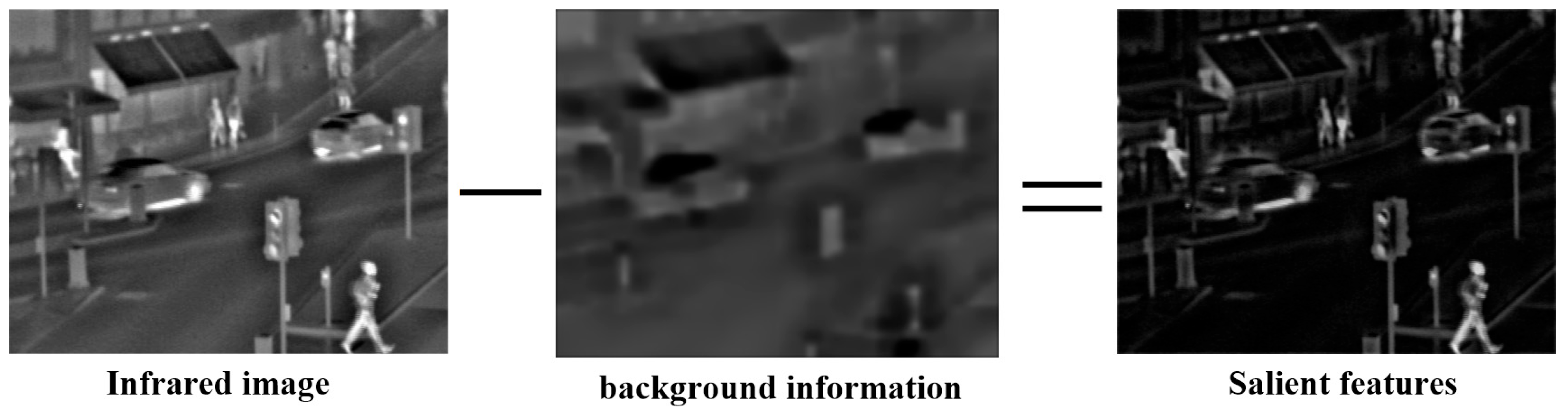

3.2. Infrared Feature Extraction

The accurate reconstruction of the background is crucial for extracting target features from infrared images. This process requires maximized sampling of control points from background regions while excluding interfering points from target areas. Existing pixel-wise search strategies exhibit significant limitations in computational efficiency and noise sensitivity. Since the quadtree decomposition method simultaneously excludes target points and efficiently samples background control points, it significantly enhances both accuracy and speed in infrared target segmentation [38]. Therefore, this study adopts quadtree decomposition for infrared image processing through the following steps, as shown in Figure 4.

The infrared image serves as the root node of a quadtree structure. When the intensity range (defined as the difference between the maximum and minimum intensity values) of any node exceeds a preset threshold, the node undergoes recursive subdivision into four child nodes. This decomposition terminates upon reaching the minimum block size or meeting non-subdividable conditions. The threshold governs the splitting decisions, while the minimum block size constrains the spatial resolution. Smaller values are typically employed to suppress noise perturbations, yielding smooth image blocks.
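The splitting rule can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `quadtree_blocks` and the parameter names `threshold` and `min_size` are assumptions, and the stopping test (a block is split only if both halves would still be at least `min_size` pixels) is one reasonable reading of the "minimum size" condition.

```python
import numpy as np

def quadtree_blocks(img, threshold, min_size):
    """Return the leaf blocks (row, col, height, width) of a range-based quadtree."""
    img = np.asarray(img, dtype=np.float64)
    leaves = []
    stack = [(0, 0, img.shape[0], img.shape[1])]  # the whole image is the root
    while stack:
        r, c, h, w = stack.pop()
        block = img[r:r + h, c:c + w]
        intensity_range = block.max() - block.min()
        can_split = h // 2 >= min_size and w // 2 >= min_size
        if intensity_range > threshold and can_split:
            h2, w2 = h // 2, w // 2
            # Subdivide into four children that tile the parent exactly.
            stack.extend([
                (r, c, h2, w2), (r, c + w2, h2, w - w2),
                (r + h2, c, h - h2, w2), (r + h2, c + w2, h - h2, w - w2),
            ])
        else:
            leaves.append((r, c, h, w))
    return leaves
```

Homogeneous background regions end up as large leaves, while blocks that contain a hot target keep splitting until the minimum size is reached, which is what allows background control points to be sampled away from targets.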

Subsequently, we uniformly sample 16 control points per terminal block. The algorithm assigns each control point’s intensity based on the local minimum within its spatial neighborhood. Following Equations (8)–(10), we reconstruct the Bézier surface for each block by interpolating the three-dimensional coordinates of the control points, in which the third coordinate denotes intensity. The complete procedure comprises three stages.

1. Per-block background reconstruction via Bézier surfaces:

$$B_k(x, y) = \mathbf{b}(u)\, P_k\, \mathbf{b}^{T}(v) \tag{8}$$

where $(x, y)$ represents image pixel coordinates, $P_k$ denotes the control point matrix of the $k$-th quadtree block, $u$ and $v$ indicate the normalized local coordinates, $\mathbf{b}(\cdot)$ denotes the cubic Bézier basis function, $\mathbf{b}^{T}(\cdot)$ indicates the transposed basis function, and $B_k(x, y)$ represents the block background grayscale value.

2. Block combination and smoothing:

$$B = G_{\sigma}\!\left(\mathcal{M}\big(\{B_k\}_{k}\big)\right) \tag{9}$$

where $k$ represents the quadtree block index, $\mathcal{M}$ denotes the block stitching operator, $G_{\sigma}$ indicates the Gaussian smoothing operator, $\sigma$ represents the standard deviation, and $B$ represents the image background information.

3. Salient feature extraction:

$$S_{IR} = I_{IR} - B \tag{10}$$

where $I_{IR}$ represents the original infrared image and $S_{IR}$ denotes the infrared salient feature information.
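The first and third stages can be illustrated with a short sketch that evaluates a bicubic Bézier patch from a 4 × 4 grid of control intensities (the 16 control points per block) and then subtracts the reconstructed background from the infrared image. The function names are hypothetical, the stitching and Gaussian smoothing stage is omitted, and clipping negative differences to zero is an assumption made here for illustration.

```python
import numpy as np

def bernstein3(t):
    """Cubic Bernstein basis evaluated at t; returns an array of shape (len(t), 4)."""
    t = np.asarray(t, dtype=np.float64)
    return np.stack([(1 - t) ** 3,
                     3 * t * (1 - t) ** 2,
                     3 * t ** 2 * (1 - t),
                     t ** 3], axis=-1)

def bezier_patch(control, height, width):
    """Evaluate a bicubic Bezier surface from a 4x4 control grid on a block."""
    u = np.linspace(0.0, 1.0, height)   # normalized row coordinate
    v = np.linspace(0.0, 1.0, width)    # normalized column coordinate
    control = np.asarray(control, dtype=np.float64)
    return bernstein3(u) @ control @ bernstein3(v).T

def salient_features(infrared, background):
    """Infrared image minus reconstructed background, clipped at zero (assumed)."""
    diff = np.asarray(infrared, dtype=np.float64) - background
    return np.clip(diff, 0.0, None)
```

Because the Bernstein basis sums to one, a block whose control points all share one intensity reproduces a flat background, and the surface passes exactly through the four corner control points.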

3.3. Deep Learning Fusion Algorithm

At CVPR 2016, He et al. [39] proposed a network architecture utilizing shortcut connections and residual representations to address the degradation problem. Compared with prior networks, this architecture is easier to optimize and achieves a higher accuracy with increased depth. Figure 5 illustrates a residual block.

In Figure 5, $x$ denotes the input to the network block, $F(x)$ represents a network operation, and relu indicates the rectified linear unit activation function. The output of the residual block equals $F(x) + x$. This structure enables the construction of multi-level feature representations. Consequently, image reconstruction tasks using residual blocks demonstrate enhanced performance [40]. We incorporate this architecture into our fusion algorithm.
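A toy version of the shortcut connection may help fix ideas. The sketch below uses two dense layers in place of the convolutions of a real ResNet block, and it applies the ReLU after the addition, as in the original ResNet design; both choices are simplifying assumptions, and the weight matrices `w1` and `w2` are hypothetical.

```python
import numpy as np

def relu(x):
    """Rectified linear unit."""
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F(x) = w2 @ relu(w1 @ x)."""
    fx = w2 @ relu(w1 @ x)   # residual branch F(x)
    return relu(fx + x)      # identity shortcut added before the activation
```

If the residual branch outputs zero, the block reduces to the identity for non-negative inputs, which is the property that makes very deep stacks of such blocks easier to optimize.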

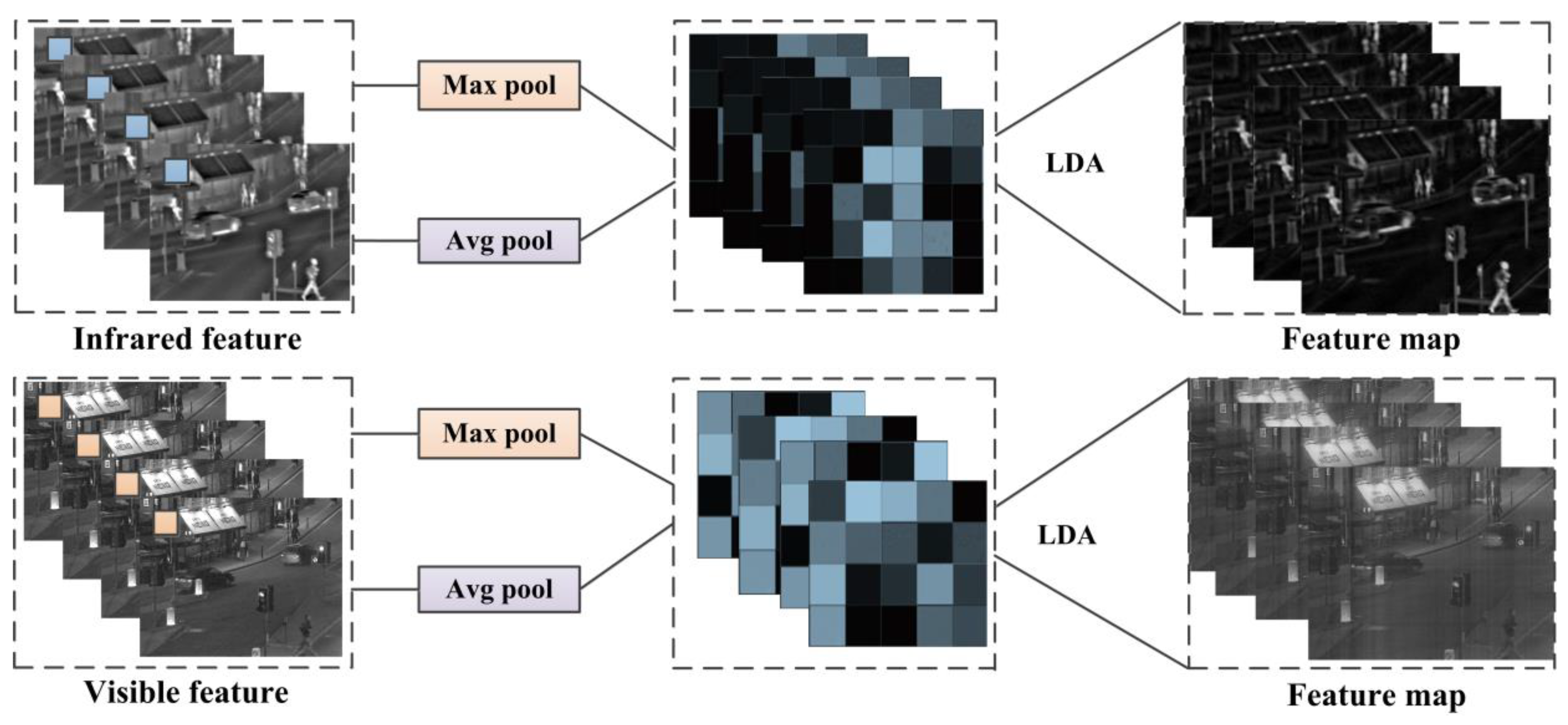

First, ResNet extracts hierarchical features from infrared and visible images through its convolutional architecture. The network generates five multi-scale feature maps at distinct processing stages (conv1x, res2cx, res3dx, res4fx, res5cx), with each layer capturing modality-specific characteristics. Second, feature maps undergo max pooling and average pooling operations. While max pooling preserves texture details and average pooling maintains background structures, an over-reliance on averaging can lead to global blurring. We therefore fuse both operations via weighted averaging to retain discriminative details and structural contours. Finally, to eliminate high-dimensional redundancies in the multi-layer features, we apply Linear Discriminant Analysis (LDA) for joint decorrelation and dimensionality reduction.
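The pooling step can be sketched as below. The 2 × 2 non-overlapping window and the mixing weight `alpha` are assumptions made for illustration; the section does not state the window size or how the weights of the weighted average are chosen.

```python
import numpy as np

def pool2x2(fmap, mode):
    """Non-overlapping 2x2 pooling over a feature map of shape (C, H, W)."""
    c, h, w = fmap.shape
    # Drop a trailing odd row/column, then expose each 2x2 window as two axes.
    blocks = fmap[:, :h - h % 2, :w - w % 2].reshape(c, h // 2, 2, w // 2, 2)
    if mode == "max":
        return blocks.max(axis=(2, 4))
    return blocks.mean(axis=(2, 4))

def fuse_pooled(fmap, alpha=0.5):
    """Weighted average of max-pooled and average-pooled features."""
    return alpha * pool2x2(fmap, "max") + (1.0 - alpha) * pool2x2(fmap, "avg")
```

A larger `alpha` emphasizes the strongest local responses (edges, hot targets), whereas a smaller value leans toward the smoother background structure.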

ResNet generates five hierarchical feature maps from input images. Each feature map encodes distinct characteristics of infrared and enhanced visible modalities. Figure 2 illustrates this feature extraction process, where weight 1 and weight 2 each operate across the five feature layers.

3.3.1. Dimension Reduction and Decorrelation of Linear Discriminant Analysis (LDA)

Initially formulated for binary classification, Linear Discriminant Analysis (LDA) leverages discriminant functions to maximize class separability. Its computational process inherently reduces data dimensionality, enabling supervised linear dimensionality reduction. The algorithm maximizes inter-class separation while minimizing intra-class variance across all classes, producing compact class embeddings with maximal between-class distance in the projection subspace.

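The process of LDA contains the following five steps:

1. Standardize the data by normalizing each feature to zero mean and unit variance:

$$z_{ij} = \frac{x_{ij} - \mu_j}{\sigma_j}, \quad j = 1, \dots, d$$

where $\mu_j$ and $\sigma_j$ represent the mean and standard deviation of the $j$-th feature across all samples, $d$ is the original feature dimension, and $X = (x_{ij})$ is the high-dimensional data.

2. Compute the class and global mean vectors of the standardized samples $z_i$:

$$\mu_c = \frac{1}{N_c} \sum_{z \in D_c} z, \qquad \mu = \frac{1}{N} \sum_{i=1}^{N} z_i$$

where $N$ represents the total number of samples, $N_c$ denotes the number of samples in class $c$, $D_c$ indicates the set of samples in class $c$, $\mu_c$ is the mean vector of class $c$, and $\mu$ is the global mean vector.

3. Compute the between-class scatter matrix $S_B$ and within-class scatter matrix $S_W$:

$$S_W = \sum_{c=1}^{C} \sum_{z \in D_c} (z - \mu_c)(z - \mu_c)^{T}, \qquad S_B = \sum_{c=1}^{C} N_c\, (\mu_c - \mu)(\mu_c - \mu)^{T}$$

where $C$ represents the number of classes, $S_W$ denotes the within-class scatter matrix, and $S_B$ indicates the between-class scatter matrix.

4. Calculate the eigenvectors and corresponding eigenvalues of the matrix $S_W^{-1} S_B$:

$$S_W^{-1} S_B\, w = \lambda w$$

where $w$ represents a projection direction (a column of the projection matrix) and the eigenvalue $\lambda$ denotes the discriminant ability index.

5. Construct the projection matrix $W$ from the eigenvectors with the largest eigenvalues and transform the data:

$$W = [w_1, w_2, \dots, w_k], \qquad Y = ZW$$

where $W$ represents the projection matrix and $Y$ denotes the projected data matrix.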

The image feature pooling and LDA conversion are shown in Figure 6.
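The five LDA steps listed above can be sketched with plain NumPy. This is a textbook formulation under stated assumptions rather than the authors' implementation: the function name `lda_project` is hypothetical, a pseudo-inverse is used in case the within-class scatter matrix is singular, and how class labels are assigned to pooled features is not specified in this section, so the labels `y` are simply taken as given.

```python
import numpy as np

def lda_project(X, y, n_components):
    """Project samples X (N x d) with labels y onto the top LDA directions."""
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y)
    # Step 1: standardize each feature (constant features are left unscaled).
    std = X.std(axis=0)
    Z = (X - X.mean(axis=0)) / np.where(std > 0, std, 1.0)
    # Steps 2 and 3: class/global means and the two scatter matrices.
    mu = Z.mean(axis=0)
    d = Z.shape[1]
    Sw = np.zeros((d, d))
    Sb = np.zeros((d, d))
    for c in np.unique(y):
        Zc = Z[y == c]
        mu_c = Zc.mean(axis=0)
        Sw += (Zc - mu_c).T @ (Zc - mu_c)
        diff = (mu_c - mu)[:, None]
        Sb += len(Zc) * (diff @ diff.T)
    # Step 4: eigen-decomposition of pinv(Sw) @ Sb.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(Sw) @ Sb)
    order = np.argsort(-eigvals.real)[:n_components]
    # Step 5: projection matrix and projected data.
    W = eigvecs[:, order].real
    return Z @ W, W
```

For two well-separated classes the single retained direction aligns with the axis along which the class means differ, so the projected samples of the two classes do not overlap.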

3.3.2. Image Reconstruction

To enrich the infrared thermal target information in the fused image, we incorporate significant features into infrared regions when selecting weight factors, thereby enhancing the night vision image.

Figure 7 displays the reconstructed fusion result.

After the dimensionality reduction extracts feature values from each image layer, we fuse the enhanced visible and infrared images using a weighted sum. Equations (20) and (21) define the corresponding weights.

where

represents the infrared image feature,

denotes the visible image feature,

corresponds to the infrared image’s salient feature,

designates the infrared feature’s weight in the fused image, and

indicates the visible feature’s weight in the fused image.

The weight factor and the source image are fused and reconstructed as follows:

where

represents the infrared image,

denotes the enhanced visible image, and

indicates the fused image.
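The weighted-sum reconstruction can be illustrated with the sketch below. The exact weight definitions are those of the paper's Equations (20) and (21); the normalized ratio used here, in which the infrared salient feature simply boosts the infrared share, is a stand-in assumed purely for illustration, as are the function names and the stabilizing constant `eps`.

```python
import numpy as np

def fusion_weights(feat_ir, feat_vis, salient_ir, eps=1e-8):
    """Illustrative weights: infrared share boosted by its salient feature."""
    boosted_ir = feat_ir + salient_ir
    w_ir = boosted_ir / (boosted_ir + feat_vis + eps)
    return w_ir, 1.0 - w_ir          # the two weights sum to one per pixel

def fuse(ir, vis, w_ir, w_vis):
    """Pixel-wise weighted sum of the infrared and enhanced visible images."""
    return w_ir * ir + w_vis * vis
```

Because the two weights sum to one at every pixel, the fused intensity always lies between the infrared and visible intensities, and regions with strong infrared saliency are drawn toward the infrared source.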

The process carried out in this study is shown in Algorithm 1.

Algorithm 1. AEFusion: Adaptive Enhanced Fusion of Visible and Infrared Images for Night Vision

4. Experiments and Analysis

In this section, we first establish the infrared and visible image datasets. Second, we describe the experimental setup. Finally, we evaluate our fusion method against existing techniques both subjectively and objectively, supplemented by ablation studies that objectively compare the results of individual techniques with those of our full model.

4.1. Infrared and Visible Image Datasets

To address the problem of fusing infrared and visible images in night vision systems, we have established an infrared and visible dataset, which includes TNO [41], VOT2020 [42], and a multi-spectral dataset [43], as illustrated in Table 1 and Figure 8.

The TNO dataset features nighttime scenes containing objects such as houses, vehicles, and people. The VOT2020 dataset provides video sequences depicting vehicles, buildings, and trees. The multi-spectral dataset comprises focused imagery, including night vision scenes with buildings, vehicles, and human subjects. All datasets encompass diverse environmental conditions (such as indoor, outdoor, nighttime, partial exposure), enabling a rigorous investigation of image fusion algorithm generalization.

The rationale for selecting the TNO, VOT2020, and multi-spectral datasets in this study rests upon three pivotal considerations:

Field Recognition: All three constitute benchmark datasets widely acknowledged in the image fusion domain.

Comprehensive Night Scenario Coverage: They encompass diverse indoor/outdoor night vision environments, addressing extreme illumination validation needs.

Task-Specific Utility:

TNO: Provides infrared–visible image pairs under extreme nocturnal conditions (smoke occlusion/low illumination), enabling the quantitative assessment of fusion algorithms in mutual information, target contour integrity, and low-illumination texture recovery.

VOT2020: Constructs dynamic nighttime tracking scenarios, featuring glare interference, motion blur, and scale variations, specifically designed to test fusion algorithms’ low-illumination robustness and real-time performance.

Multi-spectral: Integrates spectral, spatial, and temporal dimensions through multi-band data (such as 390–950 nm) and dynamic disturbances (such as rapid motion, occlusion, and cluttered backgrounds), supporting generalization validation for cross-modal downstream tasks.

4.2. Experimental Settings

This study utilized source images from the TNO, VOT2020, and multi-spectral datasets. We propose a two-stage algorithmic architecture: stage 1 performs night vision visible image enhancement, and stage 2 fuses the enhanced visible images with infrared images. To validate the efficacy of this two-stage architecture, we designed comparative experiments for quantitative evaluation.

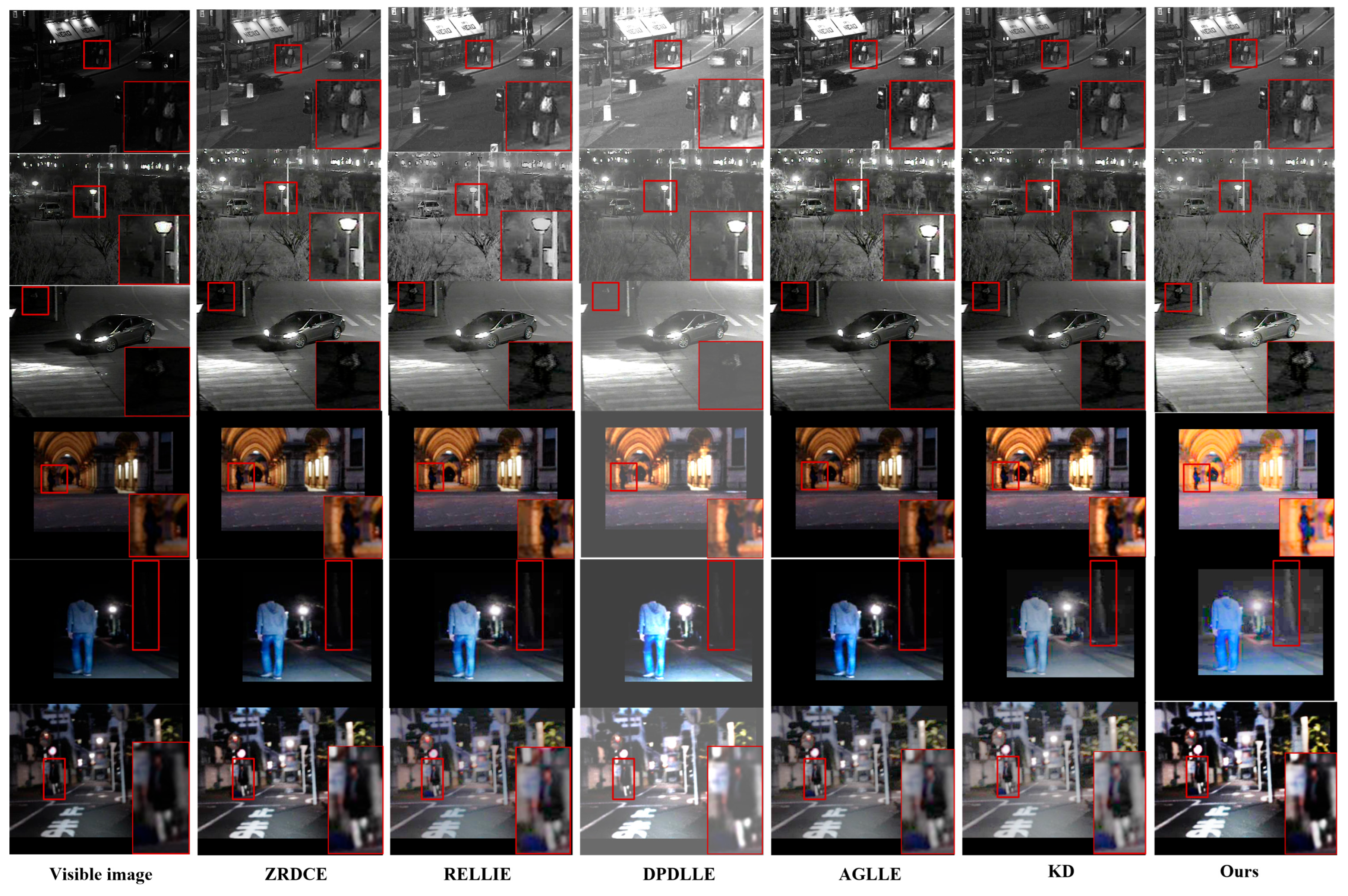

Figure 9 illustrates enhanced visible images from night vision scenarios.

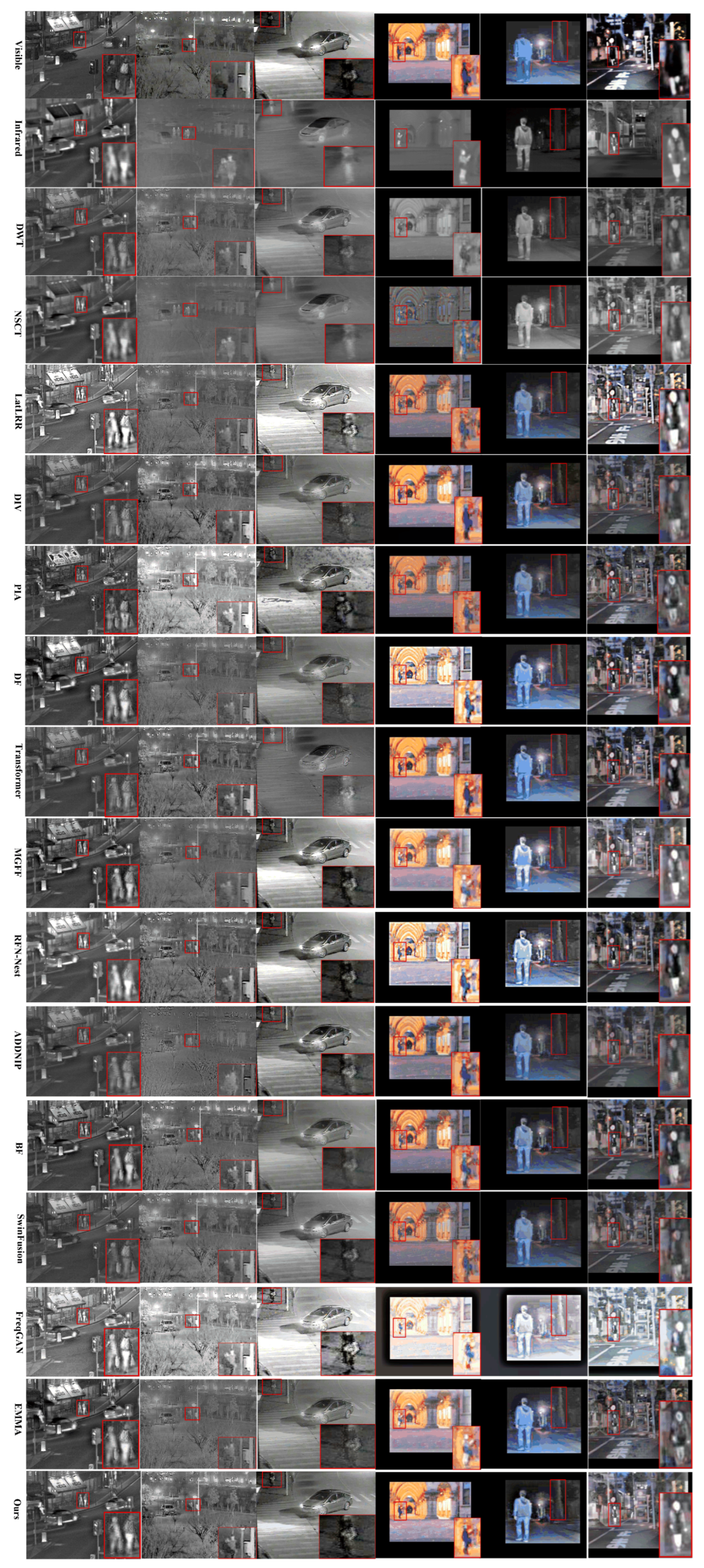

Figure 10 presents the fusion results of these enhanced visible images with corresponding infrared images.

4.2.1. Experimental Comparison of Night Vision Visible Image Enhancement

We selected five state-of-the-art image enhancement algorithms for comparison with our proposed method: ZRDCE [44], RELLIE [45], DPDLLE [46], AGLLE [47], and KD [48].

We implemented ZRDCE and RELLIE in PyTorch 2.7.1 with Python 3.7.0 and ran DPDLLE, AGLLE, and KD on TensorFlow 2.0 using an NVIDIA GeForce GTX 1080 Ti GPU (16 GB RAM). We developed our algorithm on a Windows 10 workstation equipped with an Intel Core i7-10750H CPU (2.60 GHz) and 16 GB of RAM. We conducted all experiments in MATLAB R2022a.

We selected six representative images for comparative analysis, as shown in Figure 9.

4.2.2. Image Fusion Experiment Comparison

For the comparison of image fusion algorithms, we selected fourteen classic methods, including DWT [11], NSCT [12], LatLRR [49], DIV [8], PIA [5], DF [50], Transformer [31], MGFF [51], RFN-Nest [52], ADDNIP [53], BF [54], SwinFusion [34], FreqGAN [32], and EMMA [55].

We implemented the following fusion algorithms for comparison: DWT, NSCT, LatLRR, MGFF, and BF in MATLAB R2022a; DIV, PIA, and DF in TensorFlow 2.0 on an NVIDIA TITAN RTX GPU; and Transformer, RFN-Nest, ADDNIP, SwinFusion, FreqGAN, and EMMA in PyTorch 2.7.1. Our algorithm was deployed on a workstation (Intel® Core™ i7-10750H @ 2.60 GHz, 16 GB RAM, Windows 10) using MATLAB R2022a. Feature extraction utilized ResNet152 weights that were pretrained on ImageNet. Training ran for 110 epochs with a batch size of 64 and the RMSprop optimizer (lr = 0.005). To ensure a fair comparison, we carefully tuned each method to its best performance using the configurations reported in its original publication.

The TNO dataset comprises 63 pairs of night vision images, allocated as follows: 43 pairs to the training set, 10 pairs to the validation set, and 10 pairs to the testing set. From the 14 night vision video sequences in the VOT2020 dataset, we extracted 167 image pairs, allocating 107 pairs to the training set, 30 pairs to the validation set, and 30 pairs to the testing set. The multi-spectral dataset consists of 137 pairs of night vision images, distributed as follows: 97 pairs to the training set, 20 pairs to the validation set, and 20 pairs to the testing set.
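The split sizes quoted above can be cross-checked with a few lines of arithmetic. The dictionary layout and the helper name `totals` are illustrative; the numbers are those stated in the text.

```python
# Train / validation / test pair counts per dataset, as stated in the text.
SPLITS = {
    "TNO": {"train": 43, "val": 10, "test": 10},
    "VOT2020": {"train": 107, "val": 30, "test": 30},
    "multi-spectral": {"train": 97, "val": 20, "test": 20},
}

def totals(splits):
    """Total number of image pairs per dataset."""
    return {name: sum(parts.values()) for name, parts in splits.items()}
```

The per-dataset totals come to 63, 167, and 137 pairs, matching the dataset sizes reported in the same paragraph, for 367 pairs overall.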

In this experiment, we implement the proposed algorithm to fuse enhanced visible and infrared images across all datasets. We conduct comprehensive evaluations against fourteen benchmark fusion algorithms.

Figure 10 visually compares six representative fusion results.

4.3. Subjective Evaluation

Subjective evaluation assesses how clearly scene information, and in particular its contrast, can be distinguished in an image. Existing subjective assessment methods primarily derive quality judgments from normalized observer ratings. The drawback of this approach is its limited mathematical tractability, whereas its strength is that it faithfully reflects perceived visual quality. In this study, we compared the proposed method against fourteen algorithms. Some are classic traditional fusion methods, and others are recent fusion algorithms. We use the Mean Opinion Score (MOS) for the quantitative subjective evaluation of fusion results [56], as shown in Table 2.

This study uses three benchmark datasets (TNO, VOT2020, and multi-spectral), selecting five representative night vision infrared and visible image pairs per dataset for a total of 15 pairs. Each set comprises the original infrared image, the original visible image, the fusion results from the 14 classical and deep learning-based algorithms, and the output generated by the proposed method. We recruited 20 observers (age 25–40, normal or corrected-to-normal vision, 10 image processing experts and 10 non-experts) for the subjective evaluation. Each observer rated the images independently under a controlled protocol featuring 20 s image sequence presentations followed by 5 s blank intervals to eliminate persistence-of-vision effects. For each fused image, we discarded the extreme scores (highest and lowest) and retained the middle 80% of valid ratings. We then computed global Mean Opinion Scores (MOSs) for all 15 fusion algorithms from the evaluations across the 15 image sets.
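The score aggregation can be sketched as a trimmed mean. The following Python example is one possible reading of the protocol, not the exact procedure used in the study. It assumes that "the middle 80%" means dropping an equal share of ratings from each tail, and the names `trimmed_mos` and `global_mos` are hypothetical.

```python
def trimmed_mos(ratings, keep_fraction=0.8):
    """Mean of the central `keep_fraction` of the ratings for one fused image.

    Assumption: the discarded ratings are split evenly between the two tails.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    ordered = sorted(ratings)
    n = len(ordered)
    drop = round(n * (1.0 - keep_fraction) / 2.0)  # ratings removed per tail
    kept = ordered[drop:n - drop]
    return sum(kept) / len(kept)

def global_mos(per_image_ratings, keep_fraction=0.8):
    """Average the trimmed per-image scores of one algorithm over all image sets."""
    scores = [trimmed_mos(r, keep_fraction) for r in per_image_ratings]
    return sum(scores) / len(scores)

# Twenty hypothetical observer ratings for one fused image on a 1-5 scale.
example = [1, 1, 2] + [3] * 14 + [4, 5, 5]
print(trimmed_mos(example))  # the two lowest and two highest ratings are dropped
```

With 20 observers, this reading removes two ratings from each tail and averages the remaining 16, which limits the influence of outlying observers on the final score.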

Figure 9 presents the enhancement results of six night vision visible image sets processed by six algorithms, while Figure 10 displays the fusion results of six infrared and visible image sets generated by fifteen algorithms.

Table 3 summarizes the Mean Opinion Scores (MOSs) given by the 20 observers across all 15 image sets for each algorithm. A comparative analysis shows that the proposed algorithm achieves the best visual performance, with superior target saliency enhancement, detail preservation, and brightness–contrast improvement. The DIV, Transformer, ADDNIP, RFN-Nest, BF, SwinFusion, and EMMA methods perform relatively well, each excelling in specific dimensions such as target saliency, detail retention, and visual naturalness. Conversely, the FreqGAN method performs poorly across these three dimensions due to its inherent instability.

4.4. Objective Evaluation

4.4.1. Image Enhancement Evaluation

For the evaluation of night vision visible image enhancement results, no full-reference image is available for comparison because enhancement changes the color and structure of the image. Therefore, we evaluated the enhanced images using two no-reference metrics, the natural image quality evaluator (NIQE) and the lightness order error (LOE) [57,58]. It is noteworthy that these indicators reflect only certain aspects of image quality and may not be entirely consistent with human visual perception.

Table 4 presents a comparison of the average enhancement metric scores for each algorithm. The proposed algorithm achieved lower LOE and NIQE values than the other five methods; for both metrics, lower values indicate better quality. This suggests that the local adaptive method applied after tone mapping is highly effective for enhancing low-illumination images.

4.4.2. Image Fusion Evaluation

For infrared and visible images, the following problems occur during the fusion process:

The long-distance contour of the infrared image collected is unclear.

The visible image in the night vision system contains many blind areas. Its background information is unclear, resulting in difficulty in recognizing the target.

The fused image fails to highlight target information while also lacking comprehensive background details.

To address these challenges, we adopt six evaluation indicators:

Information entropy (EN) quantifies information richness in inherently unclear infrared images [59].

Peak signal-to-noise ratio (PSNR) evaluates fusion quality to enhance low-contrast visible images in night vision systems [60].

Structural similarity (SSIM) assesses distortion probability in fusion-prone outputs [61].

The edge preservation index measures edge integrity retention to counter frequent blurring in fused results [62].

Visual Information Fidelity (VIF) quantifies the fidelity level of multi-source visual information preservation in fused images, thereby reflecting robustness against detail loss [63].

Average Gradient (AG) assesses spatial clarity in fused outputs to counteract edge blurring artifacts [64].
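Three of the indicators listed above have compact closed forms, which the following Python sketch illustrates for 8-bit grayscale images stored as nested lists. It uses the common textbook definitions of EN, PSNR, and AG and is not necessarily the exact formulation of the cited references. In particular, computing PSNR against a single reference image and using forward differences for AG are assumptions.

```python
import math
from collections import Counter

def entropy(img):
    """Shannon entropy (bits) of the gray-level histogram."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())

def psnr(ref, fused, peak=255.0):
    """Peak signal-to-noise ratio (dB) between a reference and the fused image."""
    n = len(ref) * len(ref[0])
    mse = sum((a - b) ** 2
              for row_r, row_f in zip(ref, fused)
              for a, b in zip(row_r, row_f)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)

def average_gradient(img):
    """Mean magnitude of forward-difference gradients over the image interior."""
    h, w = len(img), len(img[0])
    total = 0.0
    for i in range(h - 1):
        for j in range(w - 1):
            gx = img[i][j + 1] - img[i][j]  # horizontal difference
            gy = img[i + 1][j] - img[i][j]  # vertical difference
            total += math.sqrt((gx * gx + gy * gy) / 2.0)
    return total / ((h - 1) * (w - 1))

# Tiny hypothetical 2 x 2 images, used only to exercise the functions.
fused = [[0, 255], [0, 255]]
print(entropy(fused), psnr(fused, fused), average_gradient(fused))
```

Higher EN and AG indicate richer information and sharper detail, while higher PSNR indicates less distortion relative to the chosen reference.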

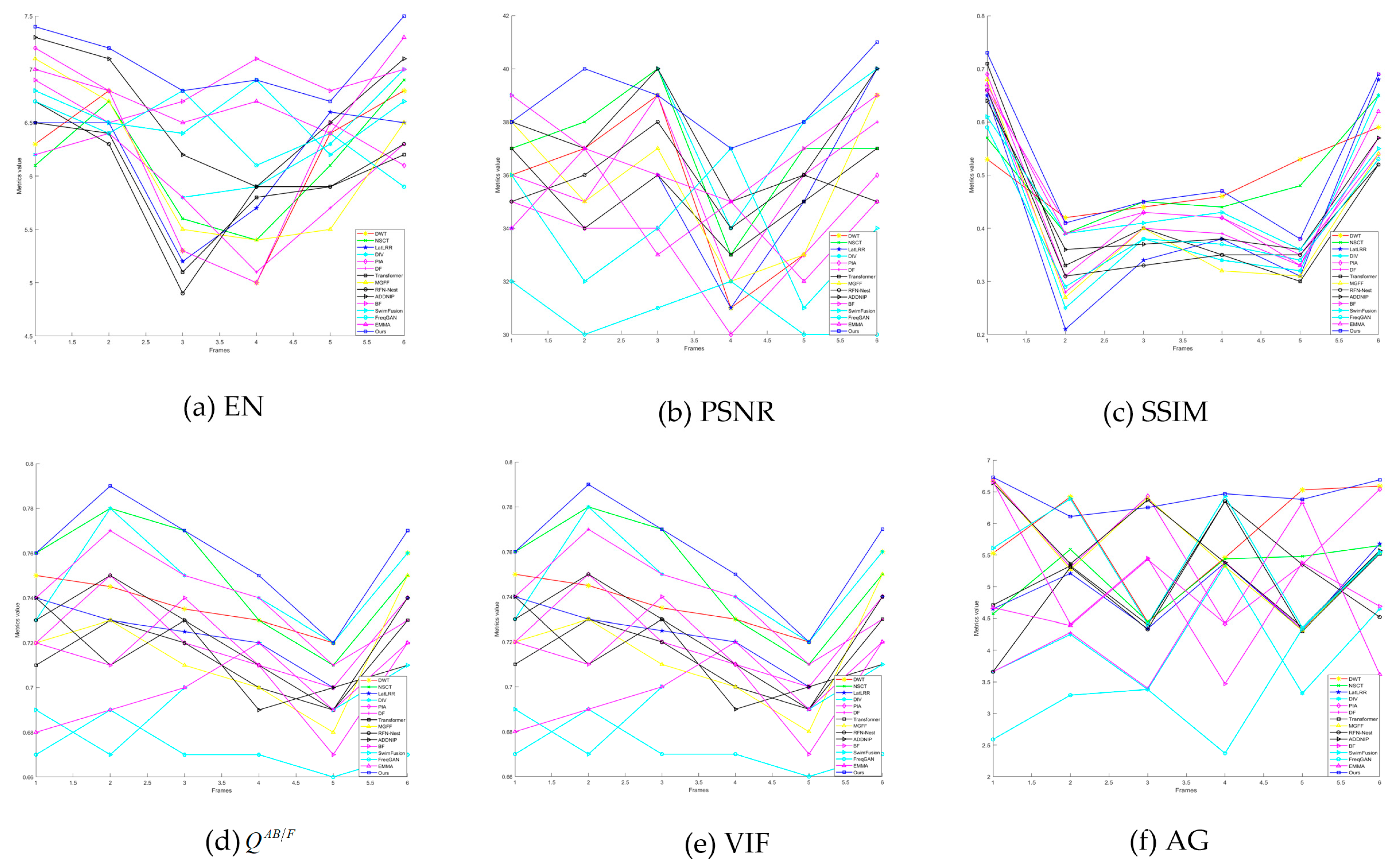

We compared fifteen fusion algorithms in total: the fourteen baseline methods and the proposed algorithm. Figure 11 presents the six evaluation indicators for the fusion results of each algorithm across six benchmark image sets. The proposed algorithm demonstrates consistent superiority on all three datasets. A quantitative evaluation using the six metrics (EN, SSIM, PSNR, the edge preservation index, VIF, and AG) confirms that our method outperforms the fourteen baseline algorithms on every metric. The following paragraphs examine the distinctive characteristics of each fusion approach.

The DWT [11] approach employs multi-scale decomposition to extract multi-resolution image features, and its fusion results demonstrate strong performance on the EN and PSNR metrics. The NSCT [12] method uses its non-subsampled strategy to capture image edge and texture features effectively, and its fusion results exhibit significant advantages across multiple metrics, including EN, PSNR, VIF, and AG. The LatLRR [49] method extracts detailed image features. Its fused images demonstrate favorable values for the PSNR, SSIM, EN, and VIF metrics. The DIV [8] method decomposes images into contour and detail components, using neural networks to extract multi-layer feature maps from the detail part. DIV’s fused images show improved values for EN, PSNR, the edge preservation index, and AG. The PIA [5] method establishes an adversarial network between a discriminator and a generator, aiming to retain edge and gradient features in fused images. PIA’s fused images show better values for SSIM, VIF, AG, and the edge preservation index.

The DF [50] method constructs a coding network to extract richer texture information from source images, with its fused images demonstrating superior EN, PSNR, SSIM, and AG metrics. The Patch Pyramid Transformer [31] extracts non-local features for accurate input reconstruction, yielding fused images with enhanced EN, SSIM, and edge preservation index values. The MGFF [51] approach employs guided image filtering to isolate significant regions in multi-view scenes, producing fused results with higher PSNR, SSIM, and AG. The RFN-Nest [52] preserves feature enhancement via a two-stage training strategy, and its fused images achieve improved SSIM, VIF, AG, and edge preservation index values. The ADDNIP [53] framework decomposes images into tripartite frequency components to capture comprehensive features, leading to fused outputs with elevated EN, PSNR, SSIM, VIF, AG, and edge preservation index values. The BF [54] method uses hierarchical Bayesian inference to align fusion results with human visual perception, producing fused images with superior EN, SSIM, VIF, and AG.

The SwinFusion [34] method employs an attention-guided cross-domain interaction module to achieve domain-specific feature extraction and complementary cross-domain integration, yielding fused images with exceptional performance for EN, PSNR, and the edge preservation index. The FreqGAN [32] constructs a frequency-compensating generator to enhance contour and detail features. Its fused images suffer from suboptimal results across multiple metrics due to training instability. The EMMA [55] framework adopts an end-to-end self-supervised learning paradigm for direct fused image generation, demonstrating significant advantages in EN, PSNR, the edge preservation index, and VIF.

Each of the fourteen baseline algorithms shows an advantage only on a subset of the evaluation metrics, whereas our proposed algorithm surpasses all comparative methods across all assessment metrics. Overall, the approach successfully extracts detailed features from source images, prominently highlights salient target regions, fully preserves fine structural details, and achieves natural contrast enhancement.

4.5. Ablation Study

To comprehensively and objectively evaluate the advantages of the proposed algorithm, we designed two sets of controlled experiments. Keeping the base model architecture fixed, we sequentially compared the baseline ResNet152 model, the baseline ResNet152 model integrated with dual pooling and LDA modules, and the model proposed in this study to assess how performance evolves. Testing was conducted on the three public datasets using the same objective evaluation metrics as in the original literature.

Table 5 delineates the quantitative results of ablation models versus the complete model across all metrics, thereby precisely quantifying the contribution of each module to the performance enhancement of the final integrated model.

As shown in Table 5, the ablation results demonstrate that when the adaptive visible image enhancement module is excluded, the ResNet152 baseline and its dual pooling+LDA variant achieve performance on key metrics comparable to that of the fourteen baseline algorithms across the three datasets. Critically, the ResNet152 model integrated with dual pooling and LDA significantly outperforms the standalone ResNet152 model, validating the efficacy of the dual pooling strategy (preserving salient features and gradient information) and LDA (optimizing feature discriminability). Furthermore, ResNet152’s deep feature extraction captures richer information than shallow or handcrafted features, while the adaptive enhancement module substantially improves visual quality in low-illumination and oversaturated regions. The quantitative analysis confirms that our key components (adaptive visible image enhancement, ResNet152 feature extraction, the dual pooling strategy, and LDA optimization) collectively provide indispensable contributions to the overall fusion performance.
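To make the dual pooling idea concrete, the following Python sketch pools a single feature map with both max and average pooling and blends the two results. The 2 × 2 window, the linear blend, and the weight `alpha` are hypothetical choices made for illustration. They are not the combination rule used by the proposed method.

```python
def pool2x2(fmap, reduce_fn):
    """Apply `reduce_fn` to each non-overlapping 2 x 2 window of a 2-D feature map."""
    h, w = len(fmap), len(fmap[0])
    return [
        [reduce_fn([fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1]])
         for j in range(0, w - 1, 2)]
        for i in range(0, h - 1, 2)
    ]

def dual_pool(fmap, alpha=0.5):
    """Blend max pooling (salient peaks) with average pooling (smooth structure).

    `alpha` weights the max-pooled map; it is a hypothetical parameter.
    """
    pooled_max = pool2x2(fmap, max)
    pooled_avg = pool2x2(fmap, lambda v: sum(v) / len(v))
    return [
        [alpha * m + (1.0 - alpha) * a for m, a in zip(row_m, row_a)]
        for row_m, row_a in zip(pooled_max, pooled_avg)
    ]

# A hypothetical 4 x 4 feature map.
feature_map = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(dual_pool(feature_map))
```

Max pooling keeps the strongest response in each window, which suits bright thermal targets, while average pooling retains the overall local structure. Blending the two is one simple way to preserve both kinds of information.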

5. Discussion

Our proposed locally adaptive enhancement algorithm specifically targets low-illumination scenarios and localized luminance saturation. Under severely adverse weather conditions characterized by critically limited visibility (such as dense fog, heavy rain, or snow), visible imagery undergoes significant quality degradation, exhibiting substantial scattering noise and extremely low-contrast information. In such operational environments, our enhancement method may amplify inherent noise or fog-induced artifacts in the visible inputs. This could potentially introduce unintended pseudo-features or over-enhancement in the fused outputs, manifesting as excessive brightness in foggy regions or textural degradation. While infrared imaging can penetrate atmospheric obscurants to reveal thermal signatures, its scene details become progressively obscured in dense fog. Therefore, future research will focus on integrating weather-aware modules to develop jointly optimized fusion strategies for adverse weather conditions.

The current fusion algorithm, which relies on a deep neural network (ResNet152) for multi-layer feature extraction, pooling operations, LDA-based dimensionality reduction, and subsequent reconstruction steps, exhibits relatively high computational complexity. Processing a single standard-resolution image (640 × 512 pixels) on the current experimental platform takes approximately 50 ms per frame. This performance is inadequate for the stringent requirements of real-time applications. Consequently, future optimization efforts will prioritize model compression, lightweight backbones, and inference engine optimization. The planned steps are as follows:

Model Compression: We will employ structured pruning to remove redundant channels or filters in the feature extraction backbone (ResNet152). This approach will significantly reduce the model’s parameter count and computational load while maintaining its representational capacity.

Lightweight Backbone Replacement: To achieve the best fusion performance, this study employed the parameter-heavy ResNet152 as its feature extractor. In future work, we will replace the backbone network with lightweight architectures specifically designed for mobile and embedded devices, such as GhostNet, EfficientNet-Lite, or SqueezeNet.

Inference Engine Optimization: During actual deployment, we will employ an efficient inference engine to accelerate the optimized model. Such engines provide operator fusion, inter-layer computation optimization, and hardware-specific optimizations, further enhancing inference speed.
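The gap that these optimizations must close can be estimated with simple arithmetic. The following Python sketch converts the reported latency of approximately 50 ms per frame into a frame rate and computes the speedup needed to reach a target rate. The target frame rates of 30 and 60 frames per second are assumptions chosen for illustration and are not requirements stated in this study.

```python
def frames_per_second(latency_ms):
    """Throughput implied by a per-frame latency, assuming sequential processing."""
    return 1000.0 / latency_ms

def required_speedup(latency_ms, target_fps):
    """Factor by which per-frame latency must shrink to reach `target_fps`."""
    return latency_ms * target_fps / 1000.0

latency_ms = 50.0  # approximate per-frame latency reported in the text
print(frames_per_second(latency_ms))  # 20.0

for target_fps in (30, 60):  # hypothetical real-time targets
    print(target_fps, required_speedup(latency_ms, target_fps))
```

Under these assumptions, the current pipeline would need to run 1.5 times faster to reach 30 frames per second and 3 times faster to reach 60, which gives a rough target for the combined effect of pruning, backbone replacement, and inference engine optimization.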

These experiments primarily relied on existing benchmark datasets, whose limited scale may restrict a comprehensive evaluation of the model’s performance under extreme or highly diverse nighttime conditions. Although the model achieves strong results on the available data, its generalization ability still needs to be validated on larger, more realistic datasets. To thoroughly assess the model’s robustness and adaptability in practical applications, future work will include extensive testing on newly emerging large-scale urban nighttime driving datasets (such as KAIST) that contain richer scenarios.

6. Conclusions

In this study, we propose an adaptive enhanced fusion algorithm for visible and infrared images in night vision scenarios. Our central contribution is a coordinated processing framework that integrates adaptive enhancement with deep feature extraction. This framework effectively addresses the shortcomings of existing methods under extreme lighting conditions. First, we designed a local adaptive enhancement algorithm that addresses the simultaneous presence of low-illumination and overexposed areas in visible images. This algorithm employs a dual-branch correction mechanism to suppress halo artifacts while enhancing textural details, thereby providing better-balanced source images for subsequent fusion. Compared with existing end-to-end fusion methods, such as those based on GANs or Transformers, this preprocessing step substantially improves performance in complex nighttime environments. Second, we fully utilize the hierarchical representation capability of ResNet152 to extract multi-scale features from both infrared and visible images. Our approach combines max and average pooling to preserve prominent thermal targets from infrared images while enhancing structural details from visible ones, achieving more complementary feature representations. Furthermore, we introduce a feature significance-based adaptive weighting mechanism for fusion. This method uses infrared characteristics to guide weight generation, ensuring that the fused image emphasizes thermal targets while preserving visible light details to the greatest extent possible. The experimental results demonstrate that the proposed method outperforms current state-of-the-art techniques in both subjective visual quality and objective evaluation metrics. It significantly improves target recognition rates and scene perception in nighttime environments, effectively reducing risks associated with blind zones.