1. Introduction

The use of spatial and semantic data for digitizing the built environment has rapidly increased in recent years [1]. These data are fundamental for a wide range of applications, including asset management, facility monitoring, urban planning, construction management, and digital documentation [2]. Point clouds and RGB images serve as common sources, providing both geometric and visual information about physical assets. However, generating complete and dense point clouds for a scene remains a costly and time-consuming process [3]. Laser scanning and photogrammetry often require specialized equipment, multiple viewpoints, and complex post-processing steps. Moreover, raw point clouds and RGB images lack semantic information, such as labels for structural elements and objects, which limits their usefulness for analysis and decision-making [4]. Adding these labels typically involves manual annotation or advanced machine learning, often constrained by limited training data and a narrow range of object classes.

Traditional approaches such as stereo matching and Structure-from-Motion (SfM) can generate dense 3D reconstructions from multiple images, but these methods are computationally intensive and frequently struggle with incomplete or noisy data, particularly in cluttered indoor environments [4]. Artificial Intelligence (AI)-based methods for 2D and 3D segmentation, classification, and object detection have improved semantic labeling capabilities; however, most existing approaches rely on datasets covering only a limited range of object classes, reducing their effectiveness for scenes with rare or complex structures and restricting generalization across diverse environments.

To address these challenges, this study introduces a fully automated framework designed to replace traditional scan-to-BIM workflows by generating semantically enriched 3D data directly from a single RGB image. The proposed method unifies domain adaptation and multi-task deep learning to eliminate manual annotation, reduce data acquisition costs, and extend the range of recognizable object classes. The framework achieves high semantic segmentation accuracy and precise 3D reconstruction, with a per-point semantic accuracy of 81.89% and a reconstruction RMSE as low as 0.02. By integrating high-capacity 2D neural networks for joint semantic segmentation and depth estimation, the system enables direct export of BIM-compatible 3D models, facilitating efficient and scalable digitalization of complex built environments.

Specifically, the method employs 2D neural networks to perform semantic segmentation and depth estimation from a single input image. The predicted depth and semantic information are fused to reconstruct a detailed 3D mesh and to transfer semantic labels into the 3D domain, enabling the direct creation of BIM-compatible objects. The resulting 3D reconstructions and annotation masks can be exported as segmented point cloud meshes to CAD/BIM software such as Revit 2025. Importantly, the framework enables the segmentation of previously unrepresented objects such as stairs, balustrades, railings, and people, object categories that are new to 3D segmentation networks. This end-to-end pipeline advances the automation of scan-to-BIM processes and significantly reduces the need for extensive data capture and manual processing. By enabling fast, scalable generation of semantically rich 3D data from minimal input, it addresses key limitations of current workflows and provides semantic information valuable for Building Information Modeling (BIM), automated modeling, digital twins, facility management, asset management, construction monitoring, and the digital documentation of complex indoor scenes.

The key contributions of this work include:

Introduction of a domain adaptation framework that transfers semantic labels from 2D images to 3D reconstructions;

Implementation of a multi-task learning approach that simultaneously predicts segmentation and depth from a single image;

Provision of a 41-class 3D point cloud dataset, including 37 shared classes and 5 new classes relevant to building structures and indoor environments;

Development of new 3D segmentation models, evaluated on both the generated dataset and standard benchmarks;

Achievement of high reconstruction and segmentation accuracy, with a reconstruction RMSE of 0.02 and a per-point semantic accuracy of 81.89%;

Proposal of an automated workflow enabling the direct transformation of a single RGB image into semantically enriched 3D BIM objects, streamlining the process of generating detailed, class-aware digital models and facilitating advanced semantic enrichment without manual intervention or specialized sensor data.

In summary, this research introduces the first domain adaptation pipeline that transfers detailed semantic information from 2D image annotations to 3D BIM-compatible objects, enabling recognition and export of object classes such as stairs, balustrades, railings, people, and books, which have not previously been addressed in 3D segmentation datasets or scan-to-BIM pipelines. The structure of this paper is as follows:

Section 2 reviews applications and benefits of generating 3D point clouds, followed by a discussion of AI-based domain adaptation for semantic enrichment of point clouds using RGB images and 3D reconstruction methods.

Section 3 outlines the proposed methodology, applied techniques, and models.

Section 4 presents the implementation and results on real-world cases, demonstrating practical feasibility.

Section 5 summarizes the key findings, limitations, and potential directions for future work.

Section 6 provides the concluding remarks.

2. Related Works

The digitization and semantic enrichment of built environments has attracted considerable attention in recent years, giving rise to diverse approaches in scan-to-BIM, point cloud processing, and domain adaptation. This section first reviews existing methods for generating 3D point clouds and subsequently discusses advances in AI-driven domain adaptation for 3D indoor datasets, with particular emphasis on key trends in cross-modal semantic mapping.

2.1. Applications and Benefits of Generating 3D Point Clouds

With the growing interest in the digital transformation of the Architecture, Engineering, Construction, and Operations (AECO) sectors, the demand for digital models and accurate documentation has increased significantly. Technologies such as Building Information Modeling (BIM) and Digital Twin (DT) have demonstrated their potential for facilitating the digitalization, monitoring, and management of buildings and related assets [5]. However, other built environment elements and infrastructure still require further attention to achieve seamless digitization.

A key foundation for the digitalization of built assets and their subsequent application is the ability to capture their as-is state within the environment [5]. Laser scanning and photogrammetry have emerged as state-of-the-art techniques for capturing 3D information about built environments. The resulting images and point clouds are highly valuable, as they contain rich spatial and visual information about a scene. Nonetheless, these data often require extensive post-processing before they support scene understanding and become usable for downstream applications. Despite their benefits, laser scanning and photogrammetry present several challenges, including high cost, long data acquisition times, site accessibility constraints, and intensive processing requirements [6]. Moreover, extracting semantic information and meaningful insights from these raw data is a challenging endeavor that demands advanced methods in Artificial Intelligence (AI) and Machine Learning (ML).

In this context, generating semantically rich 3D data from a single RGB image and transferring 2D semantic labels into a 3D space presents a significant opportunity for advancement. Such approaches can streamline the digitalization process, reduce cost and complexity, and pave the way for a range of practical applications across the AECO sectors, with benefits in various research fields:

Digital Documentation: Generating semantically rich 3D data from a single RGB image, combined with the transfer of 2D semantic labels into a 3D space, provides an effective approach for digital documentation in the AECO sector [7]. By embedding semantic information within a 3D context, this approach enables the structured representation of building elements and environments. Such semantically enriched digital records can reduce the need for extensive manual annotation, streamline information capture, and enable more efficient downstream analyses, including design review, quality assessment, and archival recording of as-built conditions [8].

Scan-to-BIM and Digital Twinning of the Built Environment: The ability to generate semantically segmented 3D data from a single RGB image has significant implications for Scan-to-BIM and digital twinning workflows [9]. Compared to traditional multi-sensor scanning methods, this approach can offer a more cost-effective and accessible means of capturing the state of buildings and infrastructure [6]. By incorporating semantic labels transferred from 2D imagery, it enables the automated generation of semantically structured 3D reconstructions that can be aligned with existing BIM models or used to support DT platforms. This provides a foundation for streamlining data acquisition and integration across the life cycle of built environments.

Facility Management: In the context of facility management, extracting semantically rich 3D data from a single RGB image and embedding this information within a spatial model can enable improved spatial awareness and asset tracking. By converting 2D labels, such as equipment type or material classification, into 3D semantics, this approach supports more accurate information retrieval, spatial queries, and maintenance planning [7]. The resulting data structure allows facilities managers to better assess spatial relationships, evaluate the status of equipment and spaces, and integrate information from visual observations with other operational datasets.

Monitoring of Assets and Infrastructure: For the monitoring of assets and infrastructure, generating semantically segmented 3D data from RGB images and transferring 2D labels to a 3D context can enable more precise inspection and assessment of built environments [7]. This approach allows for the identification and localization of critical elements within a spatial framework, supporting systematic monitoring and facilitating the detection of changes over time. Ding et al. [10], for instance, use mobile laser scanners for the detailed 3D mapping of intricate tunnel geometries, showing how such techniques adapt to challenging and varied structural environments. By combining semantic information with 3D geometry, this approach provides a robust foundation for automated anomaly detection, condition assessment, and long-term monitoring strategies, with potential benefits for maintenance planning, risk mitigation, and resource optimization.

Energy and Sustainability Assessments: By extracting and classifying elements such as walls, roofs, windows, and openings, this approach allows the rapid generation of a spatially accurate digital model that incorporates semantic information about the surface and material characteristics of built assets [7]. Such data can be leveraged to assess thermal performance and estimate energy losses across the building envelope. In retrofit and renovation, this semantic enrichment enables automated identification of priority areas for thermal insulation or glazing replacement. Moreover, by facilitating the integration of image-derived information with building performance simulations, this approach enables more robust sustainability assessments across the design and operational phases of a building’s life cycle [5].

Real-Time Robotic Task Execution and Navigation: The ability to generate semantically rich 3D data from a single RGB image and to transfer 2D labels into a 3D space has significant potential for robot perception and navigation in built environments. By providing a spatially and semantically accurate scene representation, this approach allows mobile robots or automated inspection platforms to recognize and localize relevant elements, such as walls, doors, equipment, or hazards, in real time [11]. This semantic awareness can enable robots to adapt their path planning, optimize inspection routes, or carry out targeted maintenance and assembly tasks. Moreover, the ability to integrate visual semantics into a 3D map facilitates higher-level reasoning about the environment, supporting improved decision-making, safer navigation, and more effective interaction between robots and their surroundings. In this way, semantically enriched 3D data can serve as a foundation for advances in intelligent, context-aware robotics within the built environment.

Built Heritage and Cultural Asset Documentation: Recent advances in 3D point cloud processing have also had a transformative impact on the documentation, analysis, and preservation of built heritage and cultural assets. As explored by Barsanti et al. [12], the adoption of 3D reality-based survey techniques and retopology has enabled unprecedented detail in the structural analysis of cultural heritage, supporting accurate digital archiving and restorative interventions. Complementing these approaches, the rise of digital twin technology has extended the use of point cloud data far beyond mere visualization, supporting continuous monitoring, simulation, and predictive maintenance within the built environment. As detailed by Mousavi et al. [13], digital twins use high-fidelity 3D models, often derived from reality-based surveys, as dynamic, data-rich assets for managing building lifecycles, assessing structural health, and enabling integrated decision-making.

Together, these developments underscore the critical role of advanced 3D data acquisition and processing in digital twinning, heritage conservation, and the realization of smart, adaptive built environments, as well as their application in real-time systems.

2.2. Artificial Intelligence-Driven Domain Adaptation in Generating and Processing 3D Point Clouds

In the burgeoning field of AI-driven 3D surface reconstruction, the integration of domain adaptation techniques has brought significant advances in processing and interpreting complex datasets. Tanveer et al. [14] provided a seminal review in this area, extensively evaluating the methodologies applied in different domains and emphasizing the challenges of, and solutions for, adapting models to achieve robust performance. The review is particularly relevant for its exploration of domain adaptation techniques involving point cloud data derived from laser scanners. It provides a comprehensive overview of how domain-adapted AI models can accurately interpret and reconstruct 3D environments from raw sensor inputs, thereby enhancing both the fidelity and utility of the reconstructed models in various real-world conditions.

Domain adaptation in Artificial Intelligence addresses the challenge of transferring knowledge from a source to a target domain, particularly when data is limited, unlabeled, or significantly different. This approach is crucial when models trained on a specific dataset experience a performance drop when applied to new, often more complex, environments. When it comes to 3D point clouds, domain adaptation presents unique challenges. Point clouds, which capture spatial and geometric information from sensors such as LiDAR, are unstructured and can vary significantly due to differences in sensor specifications, environmental conditions, and scene intricacies. This variability can result in significant domain shifts, for example, between synthetic training data and real-world environments in fields such as autonomous driving or robotics. Therefore, effective domain adaptation methods are necessary to bridge these gaps, ensuring robust and reliable performance in downstream tasks like classification, segmentation, and detection.

Scene Segmentation and Uncertainty Handling: Recent advances in domain adaptation have introduced several innovative techniques. For example, PaSCo [15] performs panoptic scene completion with uncertainty awareness, enhancing the model’s reliability by providing confidence estimates at the voxel and instance levels.

Multimodal and Masking-Based Domain Adaptation: MICDrop [16] employs a complementary masking strategy to integrate image and depth modalities, thereby enhancing semantic segmentation across various domains. The literature also includes frameworks that employ multiscale feature alignment and self-training pipelines, highlighting the range of strategies developed to address domain discrepancies in 3D point cloud data. Extending 3D reconstruction further, Liu et al. [17] proposed innovative approaches to thermal imaging and dynamic environment mapping, demonstrating the application of Gaussian splatting techniques to achieve high-resolution thermal models.

Vision–Language Models and Contrastive Learning for 3D Adaptation: CLIP2Point [18] introduces an image-depth pretraining method based on contrastive learning that adapts CLIP for point cloud classification in the 3D domain. Its main innovation is a depth rendering strategy that uses 52,460 paired images and depth maps generated from ShapeNet to minimize the domain gap between 2D images and 3D point clouds. Additionally, a Gated Dual-Path Adapter (GDPA) fine-tunes CLIP for 3D tasks by incorporating global view aggregation and gated fusion mechanisms. CLIP2Point surpasses existing 3D transfer learning methods, setting a new benchmark for performance in zero-shot, few-shot, and fully supervised classification scenarios. PointCLIP V2 [19] advances zero-shot 3D understanding by combining CLIP with GPT, enabling classification, segmentation, and detection on point clouds without training on 3D datasets. It features a shape projection module that improves the alignment of projected 3D depth maps with natural image features, thereby increasing the effectiveness of CLIP for 3D tasks. Furthermore, GPT-generated text prompts refine the textual embeddings produced by CLIP’s text encoder.
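The contrastive image-depth pretraining described for CLIP2Point rests on a CLIP-style objective. The NumPy sketch below shows a generic symmetric InfoNCE loss over a batch of paired image and depth-map embeddings; it is not CLIP2Point's code, and the function name and the temperature of 0.07 (a common default) are assumptions. Matching pairs sit on the diagonal of the similarity matrix, and every other pair in the batch serves as a negative.

```python
import numpy as np


def clip_contrastive_loss(img_emb: np.ndarray, depth_emb: np.ndarray,
                          temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss between paired image and depth-map embeddings.

    Row i of img_emb and row i of depth_emb are assumed to be a positive pair;
    all other rows in the batch act as negatives.
    """
    # L2-normalise so that dot products become cosine similarities
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    dep = depth_emb / np.linalg.norm(depth_emb, axis=1, keepdims=True)
    logits = img @ dep.T / temperature      # (B, B) similarity matrix
    labels = np.arange(len(logits))         # positives lie on the diagonal

    def cross_entropy(l: np.ndarray) -> float:
        l = l - l.max(axis=1, keepdims=True)  # numerical stability
        log_probs = l - np.log(np.exp(l).sum(axis=1, keepdims=True))
        return float(-log_probs[labels, labels].mean())

    # Average the image->depth and depth->image directions
    return 0.5 * (cross_entropy(logits) + cross_entropy(logits.T))
```

Correctly paired embeddings should yield a much lower loss than the same embeddings with their pairing scrambled, which is what drives the two modalities into a shared space.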

Adapting to Real-World Data: 3D reconstruction likewise requires an in-depth understanding of domain adaptation techniques. In adversarial masking for synthetic-to-real adaptation [20], a learnable masking module in the network processes the source features and their associated 3D coordinates. This module employs the Gumbel-Softmax operation to generate binary masks within an end-to-end differentiable framework, enabling it to adaptively select and mask specific sections of the synthetic point clouds. By integrating this masking mechanism with adversarial training, the module learns to replicate the noise patterns commonly found in real-world data. Triplane Meets Gaussian Splatting [21] presents a hybrid Triplane-Gaussian representation designed to achieve rapid, high-quality single-view 3D reconstruction by leveraging transformer-based point and triplane decoders. Rather than relying on traditional methods such as Score Distillation Sampling (SDS) or diffusion models, this feedforward network allows for real-time 3D object generation. The method integrates explicit point cloud structures with Gaussian feature queries, which significantly enhances both the rendering speed and the overall visual quality of the generated objects.
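The Gumbel-Softmax step mentioned above can be illustrated with a forward-pass sketch. Assuming per-point logits for the two outcomes [drop, keep] (in [20] these would come from the learnable module over features and coordinates), adding Gumbel noise and applying a temperature-scaled softmax yields a relaxed keep probability, while the argmax of the same noisy sample yields the binary mask. The function name and temperature are hypothetical; in an autodiff framework the hard mask would typically be paired with the soft one through a straight-through estimator so that gradients can flow, which plain NumPy cannot show, and the adversarial training loop is omitted.

```python
import numpy as np


def gumbel_softmax_mask(logits: np.ndarray, tau: float = 0.5, rng=None):
    """Sample a per-point keep/drop mask with the Gumbel-Softmax relaxation.

    logits: (N, 2) unnormalised scores for [drop, keep] per point.
    Returns (soft_keep, hard_mask): the relaxed probability of 'keep' and the
    binary mask (1.0 = keep, 0.0 = drop) from the argmax of the same sample.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Gumbel(0, 1) noise: -log(-log(U)) with U ~ Uniform(0, 1)
    u = rng.uniform(low=1e-10, high=1.0, size=logits.shape)
    g = -np.log(-np.log(u))
    y = (logits + g) / tau
    y = y - y.max(axis=1, keepdims=True)          # numerical stability
    soft = np.exp(y) / np.exp(y).sum(axis=1, keepdims=True)
    hard = soft.argmax(axis=1).astype(np.float32)
    return soft[:, 1], hard
```

Lower values of `tau` push the relaxed probabilities toward the hard 0/1 mask at the cost of noisier gradients, which is why the temperature is often annealed during training.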

These innovations improve the generalizability of AI models across diverse domains and contribute to the development of more adaptable systems for critical applications, including semantic enrichment of digital models, autonomous navigation, and robotic perception. The related literature emphasizes the importance of robust feature alignment, uncertainty estimation, and multimodal learning strategies for overcoming the challenges posed by domain shifts in 3D environments.

2.3. Research Gap

Despite significant advances in scan-to-BIM, point cloud processing, and AI-based 3D segmentation, existing methods are constrained by limited class coverage, manual or sensor-dependent annotation processes, and difficulties in generalizing across diverse built environments. Notably, key building elements and movable objects remain largely unaddressed in 3D segmentation benchmarks. Current domain adaptation techniques also fall short in transferring detailed semantic labels from 2D images to 3D models in a fully automated and scalable manner. Furthermore, state-of-the-art single-image 3D reconstruction approaches, such as those by Liu et al. [22] and Ranftl et al. [23], typically focus on geometric prediction within a single dataset and do not address semantic enrichment, domain adaptation, or BIM-ready output generation. To bridge these gaps, this research presents a novel pipeline capable of generating annotations and semantically enriched, BIM-compatible 3D reconstructions from a single RGB image, introducing new classes and automated workflows for comprehensive scene understanding.

3. Materials and Methods

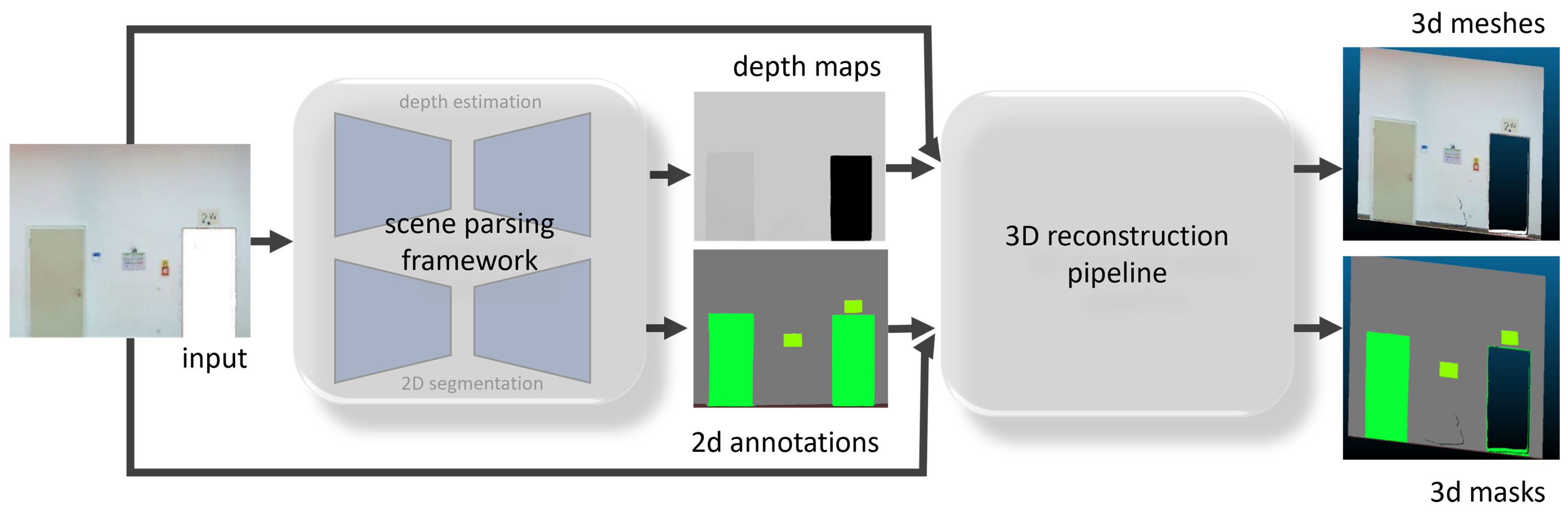

The methodology developed in this study establishes an end-to-end domain adaptation framework that transforms a single RGB image into BIM-ready 3D models. The proposed pipeline requires only a single input image and automatically produces fully segmented, metrically scaled, BIM-compatible 3D models without manual intervention or external annotation. Initially, input preprocessing is performed to facilitate AI-based domain adaptation. As depicted in Figure 1, the pipeline then proceeds to the 2D scene parsing stage, in which semantic segmentation and depth estimation are jointly executed using multi-task learning. The predicted semantic labels and depth information are subsequently fused in the 3D reconstruction step, resulting in spatially accurate, semantically enriched models. These outputs serve both as training data for advanced computer vision networks and as a basis for the direct export of segmented BIM objects. Thus, the developed approach seamlessly integrates domain adaptation and 3D reconstruction, enabling highly automated and scalable digitization of built environments from minimal input data.

3.1. Main Framework for Scene Parsing and 3D Reconstruction

The research introduces an innovative multi-task framework that processes 2D image inputs for 3D spatial reconstruction by generating point cloud meshes and 3D masks with object-based annotations, thereby enhancing the understanding of indoor environments (Figure 2). The approach employs 2D scene parsing methods, including depth estimation and semantic segmentation, to extract meaningful information for 3D understanding from a single 2D image. Within the scene parsing pipeline, Vision Transformer-based encoder-decoder networks are used; they are pretrained for 160,000 iterations on the ADE20K dataset [24] for 2D semantic segmentation, and for 80,000 iterations on the NYU-D_v2 [25] and SUN-RGB-D [26] datasets for depth estimation. These pretrained neural networks enhance the capacity to generate 3D spatial meshes and semantic segmentation masks by leveraging the rich semantic context inherent in 2D images [27].

To address the inherent scale ambiguity of monocular depth estimation, the 3D reconstruction pipeline accepts arguments for signed distance algorithms that calibrate predicted depth values to real-world metric measurements. This allows depth maps generated from monocular images to be scaled so that the resulting 3D point clouds reflect true metric dimensions across scenes and devices. By introducing metric references, such as known scene measurements or device-specific intrinsic parameters, into the reconstruction process, the system can consistently convert relative depth predictions into metric reconstructions. As a result, the generated point clouds are not only scalable but also directly usable for downstream applications requiring precise geometric information. This multi-task learning configuration enables the generated outputs, 3D meshes and masks, to serve as input for subsequent training stages, thereby streamlining the reconstruction pipeline and optimizing resource allocation [28]. The dual focus on 2D and 3D capabilities further facilitates domain adaptation by mapping features from 2D inputs to 3D outputs.
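The text does not spell out how metric references enter the signed-distance step, so the sketch below shows only one simple, assumed way to apply them: fitting a single least-squares scale factor between predicted relative depth and sparse metric references, or deriving the scale from one known length between two reconstructed points. Both helpers are hypothetical, and the scale-only model assumes the network predicts depth up to an unknown factor; models that output affine-invariant or inverse depth would also need a shift term.

```python
import numpy as np


def fit_metric_scale(pred_depth: np.ndarray, ref_depth: np.ndarray,
                     valid: np.ndarray) -> float:
    """Least-squares scale s minimising sum((s * pred - ref) ** 2) over valid pixels.

    Closed form: s = <pred, ref> / <pred, pred>.
    """
    p = pred_depth[valid].astype(np.float64)
    r = ref_depth[valid].astype(np.float64)
    return float((p * r).sum() / (p * p).sum())


def scale_from_known_length(p_a, p_b, true_length_m: float) -> float:
    """Scale making the distance between two reconstructed points equal a measured length."""
    d = float(np.linalg.norm(np.asarray(p_a, dtype=float) - np.asarray(p_b, dtype=float)))
    return true_length_m / d
```

Multiplying the relative depth map (or the reconstructed point coordinates) by the returned factor yields metric values; using many reference pixels in the least-squares fit makes the estimate less sensitive to noise in any single measurement.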

Central to this research is the integration of 2D semantic segmentation results and depth estimations to create 3D spatial meshes. This process provides a foundational understanding of the 3D scene, allowing the model to retain valuable contextual information while adapting from 2D to 3D spaces. The generated 3D segmentation masks, derived from 2D outputs, enable a more coherent representation of the scene’s geometrical and material properties. Domain adaptation is further enhanced through the creation of masks based on object annotations, which provide labels for different classes within the spatial representation. The research pipeline enables the learning, recognition, and categorization of these objects, effectively reducing the performance gap between different domains by facilitating a more cohesive integration of 2D semantic information into 3D reconstructions.
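The fusion of depth and semantics can be sketched as a pinhole back-projection that lifts every pixel with valid depth into 3D and carries its 2D class label along. This sketch assumes metric z-depth (distance along the optical axis, not along the ray) and known or estimated intrinsics `fx`, `fy`, `cx`, `cy`; the function name is hypothetical, and the subsequent meshing step is not shown.

```python
import numpy as np


def backproject_labeled_points(depth: np.ndarray, labels: np.ndarray,
                               fx: float, fy: float, cx: float, cy: float):
    """Lift a metric depth map and a per-pixel label map into a labelled point cloud.

    depth  : (H, W) z-depth per pixel, 0 where invalid
    labels : (H, W) integer class index per pixel from 2D segmentation
    fx, fy, cx, cy : pinhole intrinsics in pixels
    Returns (points (N, 3), point_labels (N,)) in camera coordinates.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]          # v = row index, u = column index
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx       # inverse of u = fx * x / z + cx
    y = (v[valid] - cy) * z / fy       # inverse of v = fy * y / z + cy
    points = np.stack([x, y, z], axis=1)
    return points, labels[valid]
```

Because each 3D point inherits the label of the pixel it came from, the 2D segmentation is transferred to 3D without any 3D annotation; label quality in 3D is therefore bounded by the 2D prediction and the depth accuracy at object boundaries.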

To evaluate the effectiveness of the proposed framework, it was benchmarked against the classified outputs from several established datasets, including S3DIS [29], ScanNet [30], TUM CMS Indoor Point Clouds [31], and Structured3D [32]. The experimental analysis involved testing the performance of the framework’s 3D reconstruction pipeline by comparing the reconstructed surfaces with the corresponding original ground-truth meshes. This comparison was designed to assess the robustness and generalizability of the applied method across diverse indoor environments.
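For the geometric comparison, a minimal point-to-point ICP with a closed-form (Kabsch/SVD) rigid update is sketched below, reporting the nearest-neighbour RMSE after alignment. It uses brute-force nearest neighbours, so it only suits small, subsampled clouds; the function names and stopping parameters are assumptions, and production code would more likely rely on a library routine such as Open3D's `registration_icp`. The RMSE is expressed in the units of the input coordinates, so it is metric only if the reconstruction has been metrically scaled.

```python
import numpy as np


def nearest_neighbors(src: np.ndarray, dst: np.ndarray):
    """Brute-force nearest neighbour in dst for each point in src (O(N*M) memory)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(src)), idx])


def best_rigid_transform(a: np.ndarray, b: np.ndarray):
    """Kabsch: rotation r and translation t minimising ||r a_i + t - b_i|| over pairs."""
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    h = (a - ca).T @ (b - cb)                  # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))     # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, cb - r @ ca


def icp_rmse(src: np.ndarray, dst: np.ndarray, iters: int = 30, tol: float = 1e-8):
    """Align src to dst with point-to-point ICP; return (aligned src, final RMSE)."""
    cur = src.copy()
    prev = np.inf
    for _ in range(iters):
        idx, dist = nearest_neighbors(cur, dst)
        r, t = best_rigid_transform(cur, dst[idx])
        cur = cur @ r.T + t                     # apply update to row-vector points
        rmse = float(np.sqrt(np.mean(dist ** 2)))  # RMSE of pre-update correspondences
        if abs(prev - rmse) < tol:
            break
        prev = rmse
    _, dist = nearest_neighbors(cur, dst)
    return cur, float(np.sqrt(np.mean(dist ** 2)))
```

Point-to-point ICP converges only locally, so in practice the reconstructed and ground-truth meshes are usually sampled to points and roughly pre-aligned before refinement.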

For quantitative evaluation, the metrics were chosen to assess both geometric and semantic reconstruction quality. Geometric accuracy is quantified using ICP registration error and RMSE, which measure the spatial alignment and deviation between predicted and ground-truth 3D models. Semantic segmentation performance is evaluated using pixel-wise accuracy for 2D outputs and per-point accuracy for 3D masks, as well as Intersection over Union (IoU) and mean IoU (mIoU) to capture class-level segmentation quality. Together, these indicators provide a robust assessment of reconstruction fidelity and semantic enrichment across all datasets. Specifically, the ICP algorithm was used to measure the geometric alignment between the reconstructed and original meshes in the TUM CMS dataset. Additionally, point-wise or pixel-wise accuracy was calculated to assess the fidelity of the reconstructions, particularly in relation to the RMSE values obtained from the optimized outputs.
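The semantic metrics can all be derived from one confusion matrix. The sketch below computes per-point (or per-pixel) accuracy, per-class IoU as TP / (TP + FP + FN), and mIoU as the mean over classes that occur in either the prediction or the ground truth; the function name and the `ignore_index` convention for unlabeled points are assumptions.

```python
import numpy as np


def segmentation_metrics(pred, gt, num_classes: int, ignore_index: int = -1):
    """Return (accuracy, per-class IoU array, mIoU) for integer label arrays."""
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    keep = gt != ignore_index                  # drop unlabeled ground-truth points
    pred, gt = pred[keep], gt[keep]
    # conf[i, j] = number of points with ground truth i predicted as j
    conf = np.bincount(gt * num_classes + pred,
                       minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(conf).astype(np.float64)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp   # TP + FP + FN
    iou = np.full(num_classes, np.nan)
    present = union > 0                        # classes absent everywhere stay NaN
    iou[present] = tp[present] / union[present]
    accuracy = tp.sum() / conf.sum()
    return float(accuracy), iou, float(np.nanmean(iou))
```

Per-point accuracy is dominated by large classes such as walls and floors, whereas mIoU weights every class equally, which is why rare categories like stairs or railings are better reflected in the latter.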

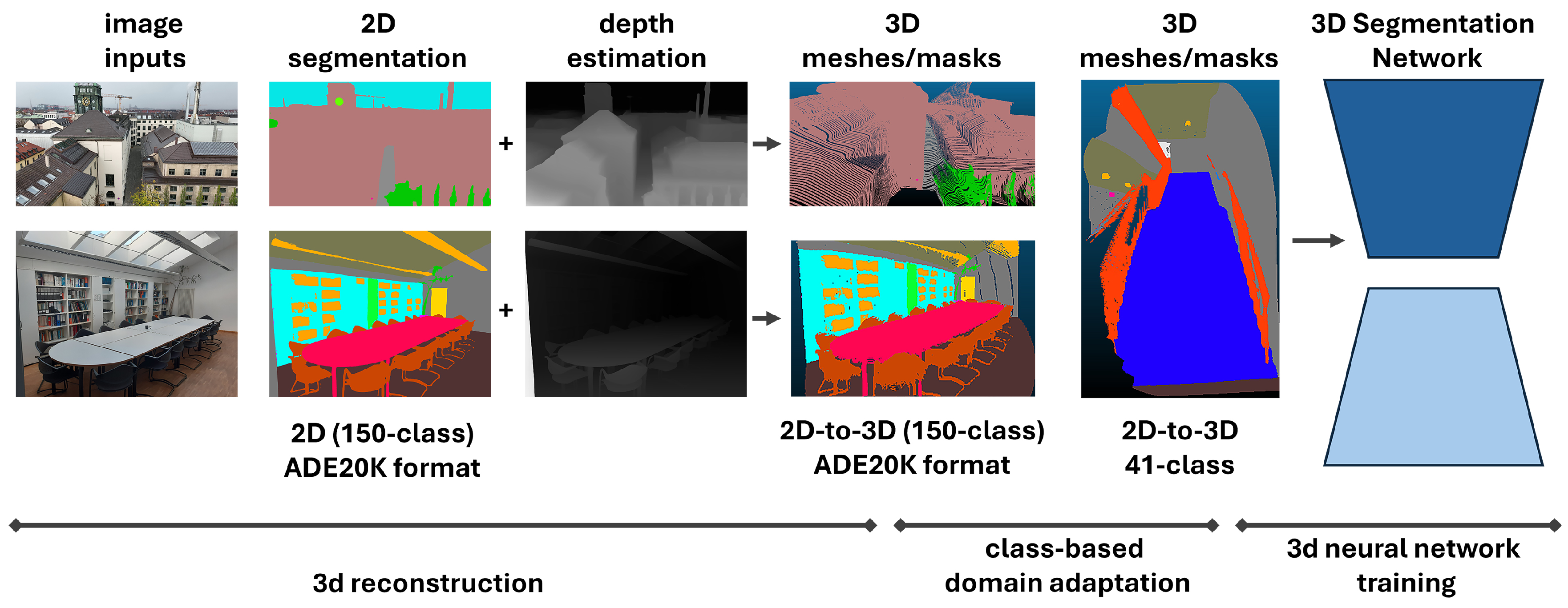

3.2. Domain Adaptation Framework from 2D to 3D

In practice, objects can be annotated in 3D using pretrained neural networks, even when those networks were trained on different datasets, such as S3DIS and ScanNet. Nevertheless, several significant objects and building components, such as stairs, balustrades, railings, books, and people, are not annotated in these benchmark datasets; although many of them are crucial for safety and circulation, they are not distinguished as classes in well-known benchmark 3D point clouds. Stairs, for instance, are extremely important building components and need to be recognized in 3D to advance automation research that deploys vision-based recognition models with annotations. Domain adaptation between 2D and 3D datasets enables surfaces and their annotations from the selected 2D source domain to be reconstructed and recognized in the 3D target domain (Figure 3).

Figure 3 illustrates the developed domain adaptation framework, highlighting how semantic information derived from a single RGB image, together with depth estimation, is leveraged to bridge the gap between the 2D source domain, such as ADE20K annotations, and the 3D target domain, such as BIM-compatible indoor point clouds. By integrating multi-task learning and pretrained neural networks, the framework transfers semantic labels from 2D to 3D without external manual annotation or domain-specific training data. The generated data and their unique classes can also be used to train 3D segmentation networks, enabling accurate segmentation and classification of both common and previously unrepresented object categories within BIM-compatible datasets.

More specifically, the use of paired depth predictions and 2D semantic segmentation annotations derived from the ADE20K dataset, which encompasses over 150 annotated object classes, enabled the identification and reconstruction of object categories that are typically absent in standard indoor 3D scene parsing datasets, such as S3DIS, ScanNet, and Structured3D (Figure 3). This includes objects like stairs, balustrades, railings, people, and books. By leveraging these annotations, the proposed method facilitates the documentation and generation of 3D surface meshes and corresponding segmentation masks for these underrepresented classes within the selected research contexts. Consequently, the integration of depth estimation, 2D semantic segmentation, and optimized 3D surface reconstruction enables the transfer of recognition capabilities to previously unrepresented object categories. This approach effectively extends the semantic richness of indoor scene representations to include human figures and structural or functional elements such as staircases, handrails, and various everyday objects (Table 1).

In the domain adaptation between 2D and 3D, networks trained on ADE20K also generate 3D segmentation masks that are introduced to 3D environments alongside S3DIS, ScanNet, and Structured3D during the training of 3D segmentation neural networks (Figure 3). Some common classes are indexed according to combined-dataset training configurations that are widely applied in the literature (Table 1). Classes missing from ADE20K, such as beams, are left to be recognized through class-based domain adaptation on the combined datasets. To enhance the effectiveness of this cross-domain learning, attention-based filtering is applied to selectively emphasize the features from 2D projections that are most informative for 3D reconstruction and semantic segmentation. This mechanism allows the model to prioritize spatial and contextual information across modalities, reducing noise from less relevant features and improving the precision of class recognition in 3D space. Consequently, five additional semantic classes have been introduced to the generated 3D reconstruction data as a benchmark.

These new classes are indexed consistently with the labeling schemes of other widely used datasets, ensuring compatibility and facilitating cross-dataset comparison (Table 1). Surfaces and annotations that do not exist in other datasets are derived from 2D information and applied in 3D, allowing networks to be trained to annotate objects that are absent from other benchmark datasets. Accordingly, the generated meshes are introduced into training together with the other datasets, so that neural networks learn 41 distinct classes in 3D. The result is a framework that produces its own dataset and annotations by selecting images and projecting their information into 3D.
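Indexing the new classes consistently with existing schemes can be pictured as keeping every shared class at its benchmark index, appending the new classes after them, and then mapping the 2D class names predicted by the segmentation network onto that vocabulary. The shared class list below is a placeholder for the actual scheme in Table 1, the order of the five new classes is assumed, and the helper names are hypothetical.

```python
# Hypothetical placeholder for the shared benchmark classes listed in Table 1.
SHARED_CLASSES = ["wall", "floor", "ceiling", "door", "window", "chair", "table"]
# The five new classes named in the text; their order here is an assumption.
NEW_CLASSES = ["stairs", "balustrade", "railing", "person", "book"]


def build_vocabulary(shared, new):
    """Keep shared class indices unchanged and append unseen new classes after them."""
    vocab = {name: i for i, name in enumerate(shared)}
    for name in new:
        if name not in vocab:
            vocab[name] = len(vocab)
    return vocab


def remap_2d_labels(label_names, vocab, unlabeled=-1):
    """Map predicted 2D class names to unified 3D indices; unknown names become 'unlabeled'."""
    return [vocab.get(name, unlabeled) for name in label_names]
```

Appending rather than reordering means that models and annotations built on the existing benchmark indices stay valid, while 2D classes with no 3D counterpart (for example, sky) fall back to an unlabeled index instead of corrupting a real class.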

3.3. Use of 3D Reconstruction Data for Training Neural Networks

This study uses 3D reconstruction data as the foundation for training transformer-based neural networks for indoor semantic segmentation and scene understanding. A Vision Transformer (ViT) architecture serves as the baseline, with a hierarchical encoder-decoder structure of four stages whose channel capacities increase from 32 to 384, using a stride configuration of [2, 2, 2, 2]. Each encoder block consists of input pre-processing layers with positional encoding, followed by the multi-head attention mechanisms of the vision transformer architecture (Figure 4). Multi-head self-attention at each stage captures spatial dependencies within the 3D structure, while the encoder and decoder process high-resolution patches of 1024 × 1024 pixels to handle detailed spatial information efficiently.
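As a generic illustration of the multi-head self-attention step named above, the NumPy sketch below computes scaled dot-product attention over a set of token features. It is not the authors’ network: the head count, the 32-channel width (borrowed from the first stage), and the random weights in the usage check are assumptions, and positional encoding is assumed to be already added to `x`.

```python
import numpy as np

def multi_head_self_attention(x, w_qkv, w_out, num_heads):
    """Scaled dot-product multi-head self-attention over N tokens.

    x:     (N, C) token features (positional encoding assumed already added)
    w_qkv: (C, 3C) joint projection to queries, keys, and values
    w_out: (C, C) output projection applied after concatenating heads
    """
    n, c = x.shape
    assert c % num_heads == 0, "channels must split evenly across heads"
    d = c // num_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)          # each (N, C)
    # Reshape to (heads, N, d) so that each head attends independently.
    q = q.reshape(n, num_heads, d).transpose(1, 0, 2)
    k = k.reshape(n, num_heads, d).transpose(1, 0, 2)
    v = v.reshape(n, num_heads, d).transpose(1, 0, 2)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)      # (heads, N, N)
    scores -= scores.max(axis=-1, keepdims=True)        # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)            # softmax over keys
    out = (attn @ v).transpose(1, 0, 2).reshape(n, c)   # concatenate heads
    return out @ w_out, attn
```

Each row of `attn` is a probability distribution over all tokens, which is what lets every position draw on context from the whole input.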

To facilitate robust domain adaptation across multiple datasets, class definitions derived from the high-capacity 2D segmentation networks are carefully harmonized with those found in leading 3D indoor benchmarks such as S3DIS and ScanNet. Initially, the proposed annotation scheme identifies 41 semantic categories, including rare or previously underrepresented objects such as stairs, railings, balustrades, bookshelves, plants, and people, providing rich semantic coverage of the indoor environment. For practical model training and improved interoperability, semantically similar or infrequently occurring classes are consolidated, resulting in a refined set of 37 distinct training labels. This merging strategy enables the network to focus on well-represented and meaningful categories, enhancing both computational efficiency and model performance.

To further support semantic consistency and cross-domain generalization, vision–language models (VLMs) are employed to align and adapt our class definitions to those used in external benchmark datasets such as S3DIS and ScanNet (Figure 4). By leveraging the cross-modal understanding of VLMs, the framework ensures that the semantic categories recognized through 2D segmentation are consistently mapped to and merged with the corresponding labels in 3D datasets, supporting robust domain adaptation across diverse annotation schemes. This approach draws on recent advances in vision–language integration for segmentation and classification, such as CLIP-ViT [33] and Point Transformer V3 [34], and bridges the domain gap between our dataset and established benchmarks. To address potential mis-segmentation, the pipeline incorporates an automated, VLM-facilitated mapping mechanism with a tolerance threshold of 10 pixels, which corrects class assignments for regions whose colors deviate slightly from the expected RGB values.
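The VLM-based alignment can be sketched as nearest-neighbour matching of class-name embeddings. In this sketch the embeddings are assumed to come from a vision–language text encoder (for example a CLIP-style model) and are passed in as arrays; the function name `align_classes` and the similarity cutoff `min_sim` are hypothetical, and the 10-pixel tolerance from the text is not modeled here.

```python
import numpy as np

def align_classes(src_names, src_emb, tgt_names, tgt_emb, min_sim=0.8):
    """Map each source class to its most similar target class (cosine similarity).

    src_emb: (S, D) and tgt_emb: (T, D) are embeddings of class names or prompts,
    assumed to be produced by a vision-language text encoder (not computed here).
    Source classes whose best similarity is below min_sim map to None.
    """
    a = src_emb / np.linalg.norm(src_emb, axis=1, keepdims=True)
    b = tgt_emb / np.linalg.norm(tgt_emb, axis=1, keepdims=True)
    sim = a @ b.T                         # (S, T) cosine similarities
    best = sim.argmax(axis=1)             # best target index per source class
    mapping = {}
    for i, name in enumerate(src_names):
        j = best[i]
        mapping[name] = tgt_names[j] if sim[i, j] >= min_sim else None
    return mapping
```

Unmatched classes returned as `None` correspond to categories that would be kept as new classes or excluded, depending on the label policy.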

For geometric data processing, voxelization is employed to discretize and structure raw point clouds, providing the network with regularized spatial inputs. A rotation-aware preprocessing pipeline further enhances model robustness by addressing orientation variability common in indoor scenes. Additional data augmentation techniques, including random rotations along all three axes, scaling, jittering, and flipping, are applied to introduce spatial diversity and mitigate overfitting. Finally, normalization and centering ensure standardized inputs across scenes, supporting stable and generalizable network training.
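A minimal NumPy sketch of the preprocessing steps listed above follows: grid-based voxel sampling, random rotation about all three axes, scaling, jittering, flipping, and centering with normalization. The parameter values (`voxel_size`, scale range, jitter sigma, flip probability) are assumptions rather than the settings used in this study, and the rotation-aware preprocessing is represented only by random rotations.

```python
import numpy as np

def rotation_matrix(rx, ry, rz):
    """Rotation about x, then y, then z (angles in radians)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return rot_z @ rot_y @ rot_x

def voxel_sample(points, labels, voxel_size):
    """Keep the first point that falls into each occupied voxel."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, first = np.unique(keys, axis=0, return_index=True)
    first = np.sort(first)                  # preserve the original point order
    return points[first], labels[first]

def augment(points, rng, scale_range=(0.9, 1.1), jitter_sigma=0.01, flip_prob=0.5):
    angles = rng.uniform(-np.pi, np.pi, size=3)             # rotation about all three axes
    pts = points @ rotation_matrix(*angles).T
    pts = pts * rng.uniform(*scale_range)                    # isotropic scaling
    pts = pts + rng.normal(0.0, jitter_sigma, size=pts.shape)  # per-point jitter
    if rng.random() < flip_prob:
        pts[:, 0] = -pts[:, 0]                               # mirror across the y-z plane
    return pts

def center_and_normalize(points):
    """Center on the centroid and scale so the farthest point lies on the unit sphere."""
    centered = points - points.mean(axis=0)
    return centered / np.linalg.norm(centered, axis=1).max()
```

Sampling before augmentation keeps the point count bounded, while the random transforms are redrawn per sample so the network sees a different orientation and scale each epoch.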

3.4. Domain Adaptation from Image-to-3DMesh, Image-to-3DMask, and Image-to-BIM

Recent developments in building information modeling (BIM) workflows have highlighted the critical role of 3D data acquisition, object segmentation, and semantic annotation in digitalizing the built environment. Traditional scan-to-BIM approaches typically rely on terrestrial laser scanning (TLS) or photogrammetry to generate dense point clouds, which are then processed with manual annotation, rule-based algorithms, or supervised learning to segment, classify, and model as-built geometries as BIM-compatible objects [35,36,37]. While effective, these pipelines are often labor-intensive, requiring expert intervention for annotation and validation, or they depend on costly, specialized sensor data.

In contrast, the proposed method uses deep neural networks for joint monocular depth estimation and 2D semantic segmentation directly from standard RGB images, enabling a fully automated pipeline for 3D reconstruction and semantic enrichment. Building on recent advances in depth estimation [23,38,39] and 2D scene parsing [24], the framework simultaneously generates 3D surface meshes and 3D semantic masks by integrating surface reconstruction methods, without requiring ground-truth 3D annotations or manual labeling. By fusing the predicted 2D segmentation maps with the reconstructed 3D geometry, each segmented object can be exported directly as an individual BIM entity or CAD object for downstream applications in design, simulation, and facility management. This automated, annotation-free workflow offers significant advantages over classical scan-to-BIM and semantic enrichment methods in scalability and practicality, especially where direct 3D sensing or expert labeling is unavailable.

Unlike classical scan-to-BIM pipelines, which are limited by the classes available in point cloud datasets and often require laborious manual annotation, the proposed method introduces a domain adaptation framework that uses a high-capacity, 150-class 2D segmentation network trained on the ADE20K dataset [24] as a bridge for semantic enrichment (Figure 1). Through this strategy, richly annotated 2D semantic information is transferred onto reconstructed 3D geometry, enabling the segmentation and export of a much wider variety of object classes than traditional 3D data sources alone allow.

As a result, the pipeline can automatically identify and reconstruct not only standard building elements such as walls, columns, doors, and windows, but also previously underrepresented or absent classes, including stairs, balustrades, railings, and people, directly from monocular images. These segmented classes are projected into 3D space and exported as distinct BIM or CAD objects with precise spatial coordinates, yielding richly enriched digital twins of built environments. This automated, annotation-free workflow both extends and distinguishes the proposed method from existing semantic enrichment and scan-to-BIM solutions [35,36,37], since it introduces new semantic categories into 3D BIM models without any manual 3D labeling or specialized sensor data. The resulting models support advanced applications in facility management, safety analysis, and digital heritage, and pave the way for comprehensive, multi-class, highly automated 3D scene understanding.

4. Experiments and Results

This section presents the experimental implementation and validation of the proposed multi-task framework, designed to transform single RGB images into semantically enriched 3D reconstructions for indoor environments. The following subsections systematically demonstrate the effectiveness of the applied methods, beginning with the detailed implementation of the 3D reconstruction pipeline, followed by the setup and performance evaluation of neural networks trained through domain adaptation. Finally, practical outcomes illustrating the applicability and exportability of the generated data to BIM-compatible formats are presented.

4.1. Implementation of Multi-Task Framework for Scene Parsing and 3D Reconstruction

The proposed multi-task framework integrates semantic scene parsing and 3D reconstruction by jointly using depth estimation and segmentation predictions. Given still images or frame sequences of indoor scenes, where dynamic movement and temporal actions are not the focus, each frame is processed to produce paired outputs: a depth map and the corresponding 2D semantic segmentation (Figure 5). Scene parsing is implemented with the ViT-based encoder-decoder network, pretrained on the ADE20K dataset for 160K iterations (Table 2). The model was trained on the ADE20K dataset using

image crops. The AdamW optimizer was employed with a base learning rate of

, a weight decay of

, and a batch size of 4. Training was conducted for 160K iterations with standard data augmentation, including random scaling, flipping, and photometric distortion. A polynomial learning rate schedule with warm-up was applied, and stochastic depth was set to

. Both the backbone and the segmentation head were fine-tuned during training. All experiments ran on a single NVIDIA A100-SXM4-40GB GPU with 83.5 GB of RAM on a cloud-based training platform (Table 2).

The trained 2D segmentation network achieves an inference speed of 0.75 images per second in scene parsing experiments. The paired predictions of depth estimation and semantic segmentation serve as the input for the 3D reconstruction pipeline. Using these pairs, the system reconstructs 3D surface meshes and semantic masks. The reconstruction process is followed by optimization procedures designed to improve the geometric and semantic accuracy of the resulting meshes. These procedures include refinement of depth predictions, surface smoothing, and label consistency checks across spatial neighborhoods.
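Lifting paired depth and segmentation predictions into 3D can be sketched as pinhole back-projection followed by a neighbourhood label-consistency check. This sketch assumes known camera intrinsics (`fx`, `fy`, `cx`, `cy`) and metric depth along the optical axis; the k-nearest-neighbour majority vote is one plausible form of the label consistency check described above, not necessarily the authors’ procedure, and the brute-force neighbour search suits only small clouds.

```python
import numpy as np

def backproject(depth, labels, fx, fy, cx, cy):
    """Lift a depth map and an aligned 2D label map into a labeled 3D point cloud.

    Assumes a pinhole camera; pixels with non-positive depth are dropped.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]              # pixel row (v) and column (u) indices
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1), labels[valid]

def smooth_labels(points, labels, k=8):
    """Replace each label by the majority label among its k nearest points.

    Brute-force O(N^2) distances; the neighbourhood includes the point itself.
    """
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    nn = np.argsort(d2, axis=1)[:, :k]
    out = np.empty_like(labels)
    for i, nbrs in enumerate(nn):
        out[i] = np.bincount(labels[nbrs]).argmax()
    return out
```

Because every 3D point inherits the label of the pixel it came from, the 2D class vocabulary (including classes absent from 3D benchmarks) carries over to the point cloud directly.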

To evaluate the framework’s ability to generalize to complex indoor scenes, the experiments focus on circulation zones and common-use spaces where conventional networks often perform poorly. These include architectural elements such as staircases, railings, balustrades, columns, walls, and floors, as well as functional objects such as bookcases, books, chairs, and tables. These categories are often underrepresented or inconsistently labeled in standard 3D indoor datasets, which makes them suitable for evaluating the added value of the proposed method (Figure 6).

Using the 3D reconstruction techniques of the multi-task framework, 32 distinct indoor scenes were generated: 17 from the TUM campus and 15 from private environments. The scenes were generated from images captured with a Samsung A30 camera, together with 2D semantic annotations based on ADE20K classes, effectively transferring 2D class labels into 3D point cloud and semantic mesh representations (Figure 5, Figure 6, Figure 7, Figure 8 and Figure 9). These outputs were then used to train and evaluate neural networks on 37 effective classes, derived from 41 original semantic categories after class merging for computational efficiency.

The geometric accuracy of the generated meshes is assessed with the Iterative Closest Point (ICP) algorithm, using a distance tolerance of 1.0 and a maximum of 2000 iterations. Evaluations on the TUM CMS Indoor Point Clouds show highly accurate alignment between reconstructed and ground-truth meshes: the optimized examples achieve an RMSE as low as 0.02, with nearly complete spatial overlap between the generated and original point clouds (Figure 10). In addition, the per-point segmentation accuracy reaches approximately 81.89%, indicating the framework’s effectiveness in both geometric and semantic tasks (Figure 10 and Table 3). Table 3 also compares the RMSE-based precision with that of other state-of-the-art 3D reconstruction methods.
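The ICP evaluation can be illustrated with a self-contained point-to-point ICP in NumPy that reports the inlier RMSE. Here `max_dist` plays the role of the distance tolerance (1.0) and `max_iter` the iteration cap (2000); the brute-force nearest-neighbour search and the convergence tolerance `tol` are simplifications assumed for this sketch. Libraries such as Open3D provide an equivalent `registration_icp` routine for production use.

```python
import numpy as np

def kabsch(a, b):
    """Least-squares rotation r and translation t such that r @ a_i + t ≈ b_i."""
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    h = (a - ca).T @ (b - cb)
    u, _, vt = np.linalg.svd(h)
    r = vt.T @ u.T
    if np.linalg.det(r) < 0:            # avoid an improper rotation (reflection)
        vt[-1, :] *= -1
        r = vt.T @ u.T
    return r, cb - r @ ca

def nearest(src, target):
    """Index of and distance to the closest target point for every source point."""
    d2 = ((src[:, None, :] - target[None, :, :]) ** 2).sum(axis=-1)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(src)), idx])

def inlier_rmse(src, target, max_dist):
    _, dist = nearest(src, target)
    inl = dist < max_dist
    return float(np.sqrt(np.mean(dist[inl] ** 2))) if inl.any() else float("inf")

def icp(source, target, max_dist=1.0, max_iter=2000, tol=1e-12):
    """Point-to-point ICP; returns the aligned source and its inlier RMSE."""
    src = source.copy()
    prev = np.inf
    for _ in range(max_iter):
        idx, dist = nearest(src, target)
        inl = dist < max_dist
        if inl.sum() < 3:
            break                        # too few correspondences for a rigid fit
        r, t = kabsch(src[inl], target[idx[inl]])
        src = src @ r.T + t
        err = inlier_rmse(src, target, max_dist)
        if prev - err < tol:             # stop once the error stops improving
            break
        prev = err
    return src, inlier_rmse(src, target, max_dist)
```

The RMSE is expressed in the units of the input clouds, so the reported value of 0.02 should be read in whatever unit the TUM CMS point clouds use.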

4.2. Implementing 3D Learning Networks Based on Domain Adaptation Methods from 2D to 3D

Based on the proposed framework and annotation methodology, a new set of annotated indoor scenes was constructed by indexing and labeling classified objects using depth and segmentation data. These annotated scenes were derived from a combination of sources, including the TUM Campus Indoor Dataset and residences from various countries. A total of 32 scenes were used, of which 25 scenes were allocated for training, 5 scenes were reserved for validation, and 2 scenes were used for testing the neural network models. To further strengthen the model’s generalization capabilities and to align class labels with established semantic hierarchies, the training process was supplemented with additional benchmark datasets, including S3DIS, ScanNet, and Structured3D. Each of these datasets was used solely for training purposes, with careful integration to ensure class consistency and semantic alignment:

Stanford Large-Scale 3D Indoor Spaces Dataset (S3DIS) [29] provides detailed 3D scans of six large-scale indoor areas, annotated with object-level semantic labels across various room types such as offices and conference rooms. Its structured segmentation supports robust learning of spatial context.

ScanNet_v2 [30] contains over 1500 RGB-D scans of indoor scenes with dense surface reconstructions and semantic annotations, which are useful for training networks to handle noisy or incomplete data in cluttered environments.

Structured3D [32] offers a large-scale dataset of synthetic indoor scenes with rich semantic, geometric, and photometric annotations. It is particularly useful for pretraining models on highly diverse, photorealistic examples.

To harmonize semantic labels across these datasets and ensure a consistent target prediction space, a vision–language alignment strategy was employed. Using language-based models, the class definitions across datasets were mapped and reduced to a consolidated set of 37 indexed classes, including merged categories designed to improve training efficiency while maintaining semantic richness. A detailed mapping of class definitions and their correspondence across datasets is provided in Table 4.
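Once the correspondences are fixed, reducing dataset-specific labels to the shared label space amounts to a lookup-table remap. In the sketch below the class IDs are hypothetical placeholders (the actual correspondence is given in Table 4), and `255` is assumed as the ignore index for excluded classes.

```python
import numpy as np

IGNORE_INDEX = 255  # assumed ignore label for classes excluded from training

def build_lut(source_to_unified, num_source_classes, ignore=IGNORE_INDEX):
    """Lookup table: source class ID -> unified training ID (unmapped IDs -> ignore).

    Several source IDs may point to the same unified ID, which merges those classes.
    """
    lut = np.full(num_source_classes, ignore, dtype=np.int64)
    for src_id, dst_id in source_to_unified.items():
        lut[src_id] = dst_id
    return lut

def remap_labels(labels, lut):
    """Vectorized remap of per-point labels through the lookup table."""
    return lut[labels]
```

A table lookup keeps the remap a single vectorized indexing operation per dataset, so merged and excluded classes are handled uniformly for millions of points.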

The proposed network was trained on the S3DIS and ScanNet datasets and optimized with the AdamW optimizer, using a learning rate of 0.006, a weight decay of 0.05, and a mini-batch size of 6. The model was trained for 100 epochs on each dataset and evaluated on a separate test set of annotated indoor scenes. These hyperparameters were chosen to keep training consistent across both benchmarks. The performance of the model trained with this compound dataset configuration is reported in Table 2, including evaluations on held-out scenes from both S3DIS and ScanNet, and is competitive across standard benchmarks.

Indoor scenes featuring complex architectural and structural elements such as stairs, railings, and balustrades (Figure 6) were introduced to a ViT-based 3D neural network framework. To support training from 2D image annotations and ensure semantic consistency, vision–language models assisted in class definition and alignment. Class indices (training IDs) were defined according to labels derived from ADE20K annotations, in which each semantic category is associated with a distinct color code. These color labels were programmatically converted into segmentation IDs, forming the class indices presented to the network. For practical and computational reasons, some rarely represented or ambiguous classes were merged, while others, such as the beam class, which is present in S3DIS and the TUM CMS Indoor Point Clouds but absent in ADE20K, were excluded from training. The result is a unified label space of 37 training classes, a novel and structured contribution to 3D indoor scene understanding. This harmonized label scheme enables cross-dataset training and evaluation; a complete mapping of class definitions is provided in Table 4.
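Converting ADE20K-style color labels into training IDs can be sketched as nearest-palette-color assignment with a tolerance. Here the tolerance of 10 mentioned earlier is interpreted, as an assumption, as a Euclidean distance in 0-255 RGB units, the ignore value `255` is assumed, and the palette colors in the usage check are placeholders rather than the actual ADE20K palette.

```python
import numpy as np

def colors_to_ids(rgb, palette, tol=10.0, ignore=255):
    """Assign each pixel or point the ID of the nearest palette color.

    rgb:     (..., 3) colors in 0-255
    palette: (K, 3) reference color for class IDs 0..K-1
    Colors farther than `tol` from every palette entry are marked `ignore`.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    pal = np.asarray(palette, dtype=np.float64)
    d = np.linalg.norm(rgb[..., None, :] - pal, axis=-1)   # (..., K) color distances
    ids = d.argmin(axis=-1)
    ids[d.min(axis=-1) > tol] = ignore                      # no palette color close enough
    return ids
```

Matching with a tolerance rather than exact equality absorbs small color shifts introduced by resampling or compression, which would otherwise leave labeled regions unassigned.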

The 3D segmentation model was trained from scratch on the generated 3D datasets to evaluate its ability to learn rich semantic representations from diverse and often underrepresented scene elements. The network was trained on 25 scenes from multiple indoor environments, and its performance was evaluated with mean Intersection over Union (mIoU) and overall point-wise accuracy, as reported below. For the generated dataset, the 3D segmentation network used the same implementation as for S3DIS and ScanNet: the AdamW optimizer with a learning rate of 0.006, a weight decay of 0.05, and a mini-batch size of 6. The model was trained for 100 epochs and evaluated on a separate test set of annotated indoor scenes, ensuring methodological consistency across datasets. The primary loss function was cross-entropy loss, with the Lovász-Softmax loss additionally incorporated during multi-class training phases to improve segmentation accuracy, particularly in boundary regions and under class imbalance. All computational experiments were run on an NVIDIA A100 GPU.
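The combined objective can be sketched in NumPy as cross-entropy plus the Lovász-Softmax loss (a convex surrogate of the per-class Jaccard index), computed over flattened points and averaged over the classes present in a batch. The equal weighting (`lovasz_weight=1.0`) is an assumption; the text does not state how the two terms are balanced.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(logits, labels):
    p = softmax(logits)[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(np.clip(p, 1e-12, 1.0))))

def lovasz_grad(gt_sorted):
    """Gradient of the Lovász extension of the Jaccard loss w.r.t. sorted errors."""
    gts = gt_sorted.sum()
    intersection = gts - np.cumsum(gt_sorted)
    union = gts + np.cumsum(1.0 - gt_sorted)
    jaccard = 1.0 - intersection / union
    jaccard[1:] = jaccard[1:] - jaccard[:-1]
    return jaccard

def lovasz_softmax(probs, labels):
    """Lovász-Softmax over (N, C) class probabilities, averaged over present classes."""
    losses = []
    for c in range(probs.shape[1]):
        fg = (labels == c).astype(np.float64)
        if fg.sum() == 0:
            continue                                  # skip classes absent from the batch
        errors = np.abs(fg - probs[:, c])
        order = np.argsort(-errors, kind="stable")    # sort errors in decreasing order
        losses.append(np.dot(errors[order], lovasz_grad(fg[order])))
    return float(np.mean(losses)) if losses else 0.0

def combined_loss(logits, labels, lovasz_weight=1.0):
    return cross_entropy(logits, labels) + lovasz_weight * lovasz_softmax(softmax(logits), labels)
```

Cross-entropy supplies a well-behaved per-point gradient, while the Lovász term optimizes an IoU surrogate directly, which is why it helps on small and imbalanced classes.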

The learning performance of the proposed network was evaluated on the test examples of the generated 3D Domain Adaptation dataset using intersection-over-union (IoU) and point-wise accuracy across all semantic categories. The quantitative results, including per-class IoU and overall accuracy, are presented in Table 4.
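The metrics used above can be computed from a confusion matrix; the sketch below shows one standard formulation, with rows as ground truth and columns as predictions. The ignore index `255` is an assumption, and classes absent from both prediction and ground truth are excluded from the mean via NaN.

```python
import numpy as np

def confusion_matrix(pred, gt, num_classes, ignore=255):
    """Rows: ground-truth class, columns: predicted class; ignored points are skipped."""
    mask = gt != ignore
    idx = num_classes * gt[mask] + pred[mask]
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def segmentation_metrics(conf):
    tp = np.diag(conf).astype(np.float64)
    fp = conf.sum(axis=0) - tp     # predicted as class c but labeled otherwise
    fn = conf.sum(axis=1) - tp     # labeled class c but predicted otherwise
    denom = tp + fp + fn
    iou = np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)
    miou = float(np.nanmean(iou))  # mean over classes that occur at all
    oa = float(tp.sum() / conf.sum())
    return iou, miou, oa
```

Per-class IoU penalizes both false positives and false negatives, so it exposes weak minority classes that overall accuracy, dominated by frequent classes, can hide.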

The distribution of semantic annotations for each class in the dataset is also reported in Table 4. The results show that the generated dataset and the network trained from scratch yield robust outcomes on well-represented classes such as wall, floor, and window, which are significant building components, and on classes such as table, sofa, bed, and people, which automated systems need to recognize in indoor environments. Test results of the 3D segmentation network on the newly introduced classes (stairs, balustrades, railings, people, and books) are a further novelty of this research within 3D indoor scene understanding.

4.3. Semantic Domain Transfer from Image to BIM Objects

This multi-task framework enables the automated extraction and reconstruction of a diverse set of building elements and interior objects from a single RGB image. The pipeline identifies, segments, and reconstructs not only primary architectural components such as walls, doors, stairs, columns, and baseboards, but also secondary and movable objects, including tables, chairs, bookshelves, books, and indoor plants (Figure 11). This semantic diversity is achieved through the proposed pipeline’s domain adaptation from 2D RGB images, which generates precise 3D segmentation masks for object classes often absent from conventional 3D datasets.

Each segmented object is exported as a discrete geometric entity in standard formats: IFC for BIM, STL for CAD, and OBJ for mesh-based design environments. The 3D output can be imported into BIM authoring and point cloud tools such as Revit 2025, CloudCompare (v2.12.4 and v2.13.2), and ReCap; Figure 12 illustrates the import of the output into Revit and CloudCompare. In addition to the automated pipeline, Autodesk Revit and CloudCompare were used for visualization, 3D mesh processing, and exporting point clouds to BIM software. Based on the RGB color of the semantic labels, the segmented classes are exported and labeled as BIM objects (Figure 12). The results show that complex interior scenes can be decomposed into multiple semantically meaningful 3D BIM objects, including both fixed structures and loose furnishings, from a single image. This exportability enables integration with downstream digital workflows, supporting applications in building management, digital twins, and automated facility documentation. The inclusion of previously underrepresented object classes, such as stairs, tables, chairs, bookshelves, and plants, further underscores the versatility and practical impact of the proposed domain adaptation framework.
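Per-class export can be sketched as writing one named object per semantic class into a Wavefront OBJ file. The helper below is hypothetical and covers only OBJ text output; IFC export would require a dedicated library and is not shown.

```python
def export_obj_by_class(vertices, faces, face_labels, class_names):
    """Return Wavefront OBJ text with one named object ("o" record) per semantic class.

    vertices:    sequence of (x, y, z)
    faces:       sequence of vertex-index tuples (0-based)
    face_labels: class ID per face
    class_names: mapping from class ID to object name
    """
    lines = ["# per-class mesh export (sketch)"]
    for x, y, z in vertices:                    # shared vertex list for all objects
        lines.append(f"v {x:.6f} {y:.6f} {z:.6f}")
    for cls in sorted(set(face_labels)):
        lines.append(f"o {class_names[cls]}")
        for face, label in zip(faces, face_labels):
            if label == cls:
                # OBJ vertex indices are 1-based.
                lines.append("f " + " ".join(str(i + 1) for i in face))
    return "\n".join(lines) + "\n"
```

Grouping faces under named objects lets downstream tools select or convert each semantic class separately while keeping a single shared vertex list.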

5. Discussion

This research employs a multi-task learning framework that generates and learns from 3D data derived from 2D inputs, thereby improving the understanding and recognition of indoor environments (Figure 1 and Figure 2). The framework uses 2D scene parsing methods, including depth estimation and semantic segmentation, to convert 2D images into informative 3D representations (Figure 5, Figure 7 and Figure 8). Combining 2D semantic segmentation and depth estimation into spatial meshes and 3D segmentation masks yields a coherent representation of the geometric and material attributes of each indoor scene. Segmentation masks support the recognition of objects within the point clouds, enabling more accurate depth prediction and finer mesh correction. These point clouds, generated from 2D images, are then used to train 3D networks.

Creating 3D masks further improves domain adaptation by providing RGB values and annotation labels for distinct object classes in the 3D representation. The network trained on ADE20K generates 3D segmentation masks that include both classes common to S3DIS or ScanNet and new classes not present in other 3D indoor datasets. Thus, generating surfaces and annotations from pairs of depth estimates and 2D segmentation maps enables 3D recognition of objects such as garbage bins, stairs, balustrades, railings, people, and books solely from 2D image information (Figure 6, Figure 7 and Figure 9). Accordingly, a comprehensive 3D spatial dataset can be generated from 2D inputs using the multi-task framework.

A total of thirty-two distinct scenes were generated via 3D surface reconstruction from 2D segmentation annotations: twenty-five were used for training, five for validation, and two for testing. Compound training strategies, including vision–language models (VLMs), enabled domain adaptation between ADE20K, S3DIS, ScanNet, and the TUM CMS Indoor Point Clouds. In total, forty-one unique class annotations were produced as 3D segmentation masks and consolidated into thirty-seven training classes, including five newly introduced categories: stairs, balustrades, railings, people, and books (Table 1 and Table 4).

In addition to expanding the scope of recognizable object classes, this framework introduces a domain adaptation approach that directly uses high-capacity 2D semantic segmentation networks (trained on up to 150 classes) to project richly detailed annotations into the 3D domain. This enables not only the automated segmentation of previously underrepresented or absent categories, such as stairs, balustrades, railings, people, and fine architectural elements like baseboards, but also their export as discrete BIM, OBJ, or CAD-compatible objects with spatially precise coordinates. The method thus bridges a fundamental gap in traditional scan-to-BIM pipelines, which are typically limited by the annotation scope of existing 3D datasets or require costly manual labeling. In this pipeline, 3D segmentation masks are derived without any manual or sensor-based annotation, enabling the rapid generation of semantically rich, highly detailed 3D digital twins directly from standard imagery. This substantially enhances automation, digital documentation, and facility management capabilities for both heritage and contemporary built environments (Figure 11).

In this regard, selected exemplar scenes from the generated 3D point clouds are introduced as the training dataset to the designated 3D segmentation networks, which are also trained in conjunction with other indoor 3D datasets. As the proposed model learns to identify and categorize these objects, it effectively diminishes the performance discrepancies between different domains, fostering a smoother integration of 2D semantic information into 3D reconstructions.

For instance, a major challenge before the development of these 3D networks was the frequent misclassification or under-recognition of large tables in office and meeting hall scenes within the TUM campus environment. This research addresses that limitation by refining semantic labeling and improving class-level clarity during training (Figure 9). To reduce ambiguity and computational overhead, confusing or inconsistently labeled categories, such as generic clutter, miscellaneous furniture, or ambiguous structural elements, were consolidated into broader, semantically coherent classes. This merging improves model efficiency and accuracy by minimizing noise from overlapping or redundant categories across datasets.

The 3D reconstruction method achieves an RMSE as low as 0.02 and a per-point accuracy of 81.89% in comparisons between the generated meshes and the TUM CMS Indoor Point Cloud targets, which is among the most precise results reported in the literature to date (Table 3). The reconstructed meshes are also considerably denser than the output of traditional documentation methods, with more neighboring points per region, which makes them useful for complementing existing point clouds and filling in missing data (Figure 10). Consequently, 3D information lost to noise or occlusion can be recovered by capturing photographs or video of the objects from different angles.

Results from training and testing the network with a ViT baseline suggest that, compared with other benchmark datasets and given its overall class distribution, the generated dataset is both challenging and beneficial for learning minority classes (Table 2 and Table 4). The network returns adequate performance on minority classes and performs considerably better on the major classes, such as wall, floor, cabinet, bed, sofa, table, window, pillow, toilet, people, and other objects, which together constitute more than 66% of the dataset; on these classes it reaches 77.7% mIoU, with an IoU above 70% for each class (Table 4).

Moreover, this work makes it possible to learn and recognize objects such as stairs, balustrades, railings, and books that had not previously been introduced to other state-of-the-art 3D segmentation networks for indoor scenes (Table 4). The network performs considerably well in recognizing and segmenting people in 3D indoor scenes, reaching an IoU of 79.5% for this class, and can be applied to other indoor environments to provide predefined class-based predictions. Future work will increase the number of scenes and underrepresented classes to improve network performance and will explore more advanced neural network architectures.

The experimental results in Figure 11 confirm that each segmented layer, whether wall, door, signage, or baseboard, can be isolated and exported in standard BIM, OBJ, or STL formats. This exportability demonstrates the practical integration of the proposed approach into existing digital workflows, allowing downstream use in architectural design, construction, simulation, and building management. The ability to produce class-aware, geometrically explicit digital models from 2D inputs alone is a substantial advance over existing methods and demonstrates the feasibility of end-to-end automated 3D reconstruction and semantic enrichment.

In brief, the research demonstrates not only the practicality of domain adaptation techniques, but also the potential for future developments in modeling, intelligent environments, automation, and robotic construction that require precise 3D spatial representations and data. The validation efforts on 3D reconstruction techniques reinforce the model’s ability to seamlessly transition from 2D to 3D environments, ensuring high fidelity in the resulting digital models. Continuous refinement and testing of the framework aim to overcome the limitations of traditional scanning methods by enabling a more dynamic, adaptable approach to 3D reconstruction with higher point-wise resolution from high-quality images.

Ultimately, these results demonstrate that harnessing multi-task learning and domain adaptation enables the rapid and automatic generation of multi-class, semantically rich 3D models from standard imagery, extending the frontiers of scan-to-BIM, semantic enrichment, and digital twin creation for the built environment.

Limitations and Future Work

A key limitation of the current approach is reduced segmentation accuracy for underrepresented object classes, such as picture (0.17% IoU), refrigerator (2%), nightstand (3.12%), shower curtain (11.85%), and dresser (0.49%), as well as for challenging surfaces such as mirrors (8% IoU), in the generated 3D reconstruction scenes (Table 4). Although the framework incorporates a vision–language model (VLM) to support semantic enrichment and cross-domain generalization, the low frequency of these classes in the training data limits the model’s ability to recognize and segment them reliably. Scenes with heavy object occlusion also remain challenging for both 3D reconstruction and semantic segmentation (Figure 13). These limitations are expected to be mitigated by expanding the dataset with a greater diversity of scenes featuring rare objects and by training on multiple views of the same environment to improve robustness to occlusion.

While the current system generates geometric BIM objects with semantic annotations, automatic population of full IFC property sets is not yet implemented. The pipeline focuses on geometry generation and semantic segmentation, producing 3D point clouds from single images. The semantic labels correspond to architectural and structural components such as walls, windows, beams, and doors, providing a strong foundation for subsequent BIM modeling. Populating IFC property sets goes beyond basic semantic enrichment and requires additional data processing and metadata enhancement with tools such as IfcOpenShell. Nevertheless, the pipeline can already extract metadata such as object coordinates, class, and material-related cues from RGB and depth information. Future work will map these extracted features to standard IFC attributes, enabling richer, more automated metadata population in exported BIM files and comprehensive BIM interoperability.

Extending the framework to outdoor urban scenes, challenging construction sites, and facade analyses is planned as future work, since these contexts require additional object classes and adjustments to the training pipeline beyond the labels specific to this research (Figure 13). Further improvements will focus on optimizing the VLM-supported 3D segmentation pipeline, specifically on learning from limited examples and recognizing minority classes. Future work will also enable user-driven parametrization of image-to-BIM object conversion, allowing customized BIM properties and user prompts to be incorporated directly into the semantic enrichment process.

6. Conclusions

This study presents a significant advance in the digitization and semantic enrichment of built environments, overcoming key limitations in both data acquisition and annotation for 3D modeling. By introducing a unified domain adaptation and multi-task learning framework, it is demonstrated that detailed, semantically segmented 3D reconstructions can now be generated directly from a single RGB image. Unlike traditional scan-to-BIM and AI-based methods, which are hampered by laborious data collection, limited class diversity, and dependence on specialized sensors or extensive manual labeling, the proposed approach enables rapid and automated creation of semantically rich 3D models.

The pipeline’s ability to simultaneously perform 2D segmentation and depth estimation allows for the reconstruction and annotation of complex indoor scenes, including the detection of previously unrepresented classes such as stairs, balustrades, railings, people, and fine architectural details like baseboards and other structures. This expands the applicability of 3D semantic segmentation far beyond the scope of existing benchmarks and point cloud datasets, introducing new levels of detail and utility for Building Information Modeling (BIM), facility management, digital twins, and automated construction monitoring.

The experimental results validate that the proposed framework achieves high accuracy in both geometric and semantic reconstruction, as evidenced by an RMSE as low as 0.02 and a per-point accuracy of 81.89%. Importantly, the segmented 3D masks can be exported in the standard OBJ format, streamlining the integration of results into real-world digital workflows and asset management systems. The newly generated 3D dataset, featuring 41 annotated classes including five novel object categories, sets a new benchmark for 3D indoor scene understanding and supports further research on rare or complex structures.

In summary, this work bridges the gap between limited traditional datasets and the increasing demand for intelligent, automated, and versatile 3D digitization solutions. By enabling the generation of semantically enriched 3D data from minimal input, the presented method paves the way for more dynamic, adaptive, and accessible approaches to the digital transformation of the built environment. Future developments will focus on expanding the diversity of parametric information and feature transfer for mesh and mask objects with annotated classes to BIM interfaces, improving recognition of minority objects, and broadening the range of use cases for robotic construction, automation, digital twinning, and digital heritage preservation.