DCEDet: Tiny Object Detection in Remote Sensing Images Based on Dual-Contrast Feature Enhancement and Dynamic Distance Measurement

Abstract

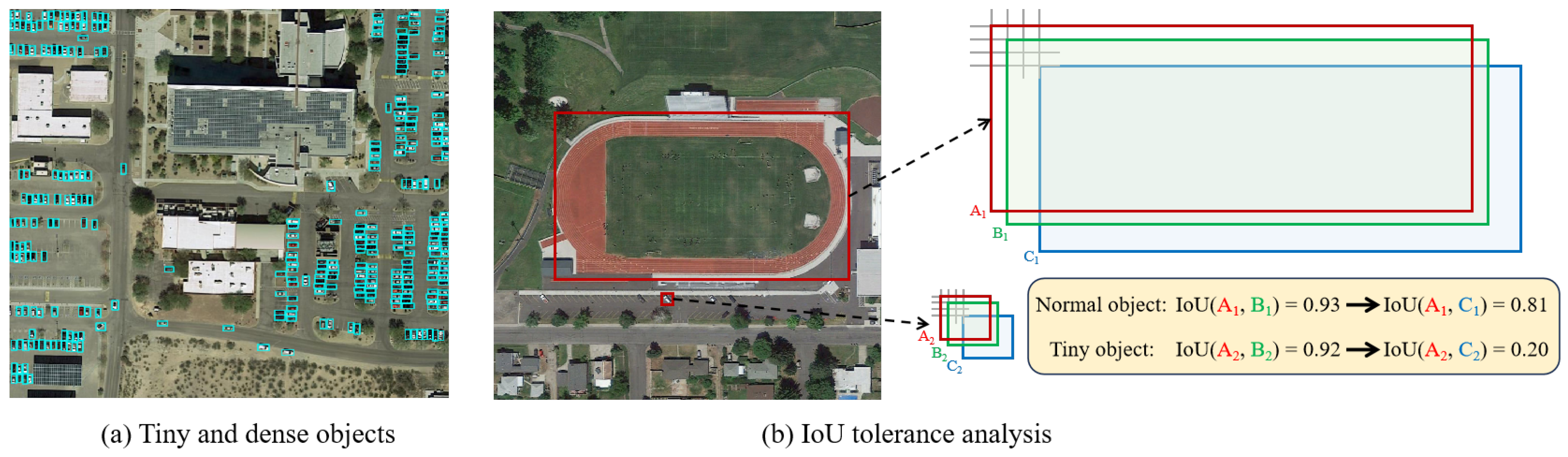

1. Introduction

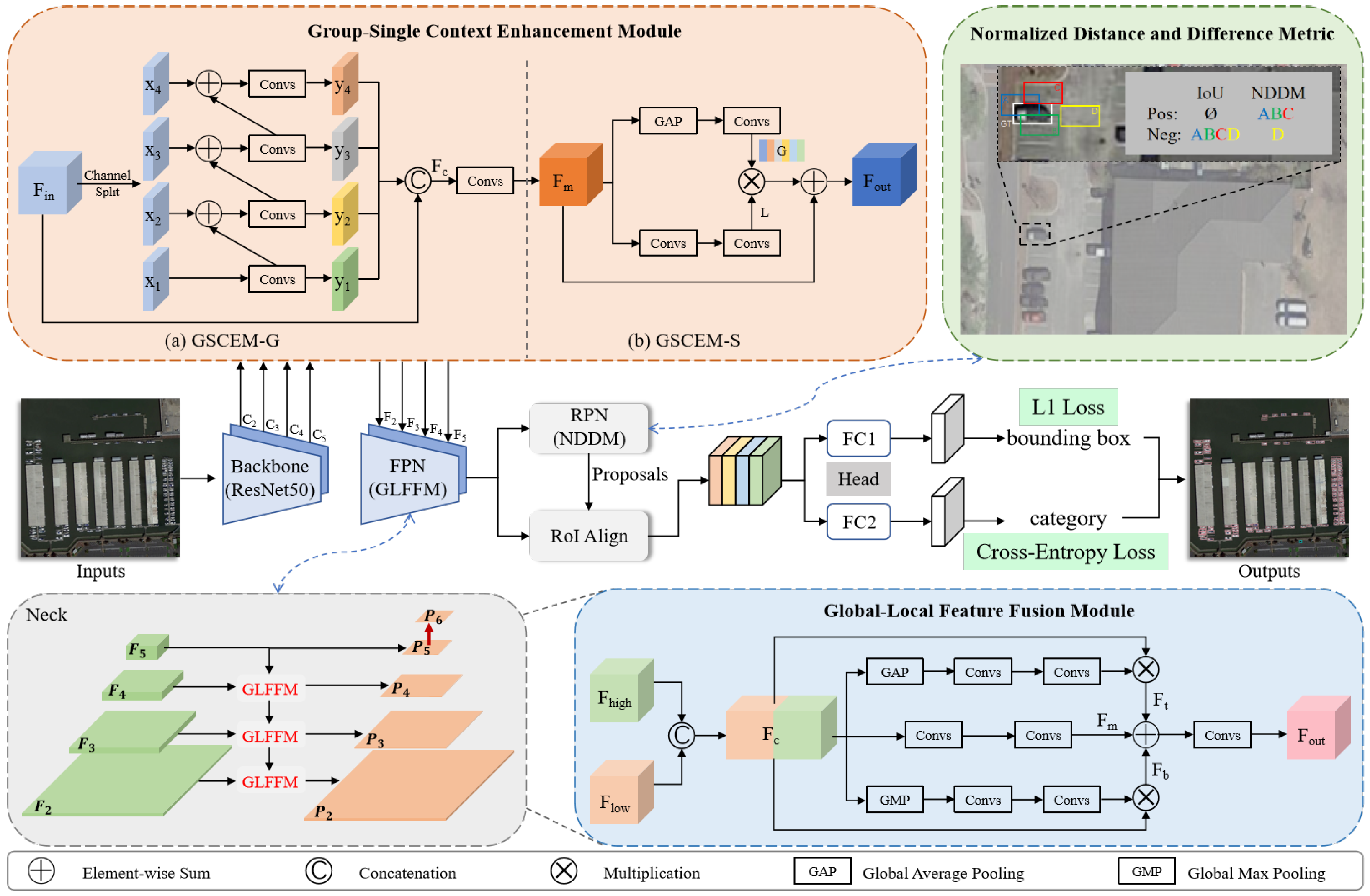

- We propose a new tiny object detector for remote sensing images named DCEDet, which improves detection performance by enhancing feature representation and aligning with a suitable label assignment strategy.

- We present the GSCEM and the GLFFM, which respectively extract context information and fuse multi-view features, to improve the feature representation of tiny objects.

- We devise the NDDM to replace the IoU-based label assignment in the Region Proposal Network (RPN), thereby facilitating the assignment of positive samples for tiny objects.

- To demonstrate the effectiveness of our method, we conduct extensive experiments on two tiny object detection datasets, on which it achieves the best performance among the compared methods.
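To illustrate why IoU-based assignment is hard on tiny objects, the following minimal sketch compares the IoU of a box with a copy of itself shifted by a few pixels, for a tiny box and for a larger one. It is an illustration only and is not the NDDM; the helper names `iou` and `shifted_iou` are hypothetical, and the 0.7 positive threshold mentioned in the comments is the value commonly used for RPN training, assumed here rather than taken from this paper.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shifted_iou(size, shift):
    """IoU between a size x size box and the same box moved diagonally by `shift` pixels."""
    gt = (0.0, 0.0, float(size), float(size))
    anchor = (float(shift), float(shift), float(size + shift), float(size + shift))
    return iou(gt, anchor)


if __name__ == "__main__":
    # The same 4-pixel offset is harmless for a 64-pixel box but drops an
    # 8-pixel box far below an assumed positive threshold of 0.7.
    for size in (8, 64):
        print(size, round(shifted_iou(size, 4), 3))
```

Under these assumptions, the 8-pixel box would not be assigned as a positive sample even though the anchor is only 4 pixels off, whereas the 64-pixel box would.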

2. Related Work

2.1. Generic Object Detection

2.2. Object Detection in Remote Sensing Images

2.3. Tiny Object Detection in Remote Sensing Images

3. Methodology

3.1. Overview

3.2. Group–Single Context Enhancement Module

3.3. Global–Local Feature Fusion Module

3.4. Normalized Distance and Difference Metric

| Algorithm 1 Normalized Distance and Difference Metric |

| Require: a set of ground truth boxes on the image; a set of all anchor boxes; the predefined hyperparameters; the thresholds of positive and negative samples; the total number of training epochs T and the current training epoch t. Ensure: a set of positive samples; a set of negative samples; a set of ignore samples. |
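The inputs and outputs listed for Algorithm 1 follow the usual shape of threshold-based label assignment. The sketch below shows only that generic partition step, assuming a precomputed similarity score between each anchor and each ground truth box. It does not implement the NDDM itself, and it omits how the hyperparameters and the epochs T and t enter the metric, since those details are not recoverable from the text above. The function name `assign_labels` and its parameters are hypothetical.

```python
def assign_labels(similarity, pos_thr, neg_thr):
    """Split anchors into positive, negative and ignore sets.

    similarity[i][j] is an assumed, precomputed score between anchor i and
    ground truth box j, where a higher score means a better match.
    """
    positives, negatives, ignored = [], [], []
    for anchor_idx, row in enumerate(similarity):
        # Use the best-matching ground truth box; no boxes means no match.
        best = max(row) if row else 0.0
        if best >= pos_thr:
            positives.append(anchor_idx)
        elif best < neg_thr:
            negatives.append(anchor_idx)
        else:
            # Scores between the two thresholds are left out of training.
            ignored.append(anchor_idx)
    return positives, negatives, ignored


if __name__ == "__main__":
    scores = [[0.9, 0.1], [0.2, 0.1], [0.5, 0.4]]
    print(assign_labels(scores, pos_thr=0.7, neg_thr=0.3))
```

Any metric can be plugged in as the score, which is what makes it possible to swap IoU for a different measure without changing the partition logic.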

3.5. Loss Function

4. Experiments

4.1. Datasets

- AI-TODv2 [21]: This dataset is an enhanced version of the AI-TOD [19] dataset, designed for TOD in remote sensing images. It contains 28,036 images, each with a resolution of 800 × 800 pixels, along with 752,754 object instances annotated with horizontal bounding boxes (HBBs). These instances are divided into eight categories: airplane (AI), bridge (BR), storage-tank (ST), ship (SH), swimming-pool (SP), vehicle (VE), person (PE), and wind-mill (WM). The average absolute size of these instances is only 12.7 pixels. Based on their sizes, they can be further classified into four categories: very tiny (2∼8 pixels), tiny (8∼16 pixels), small (16∼32 pixels), and medium (32∼64 pixels). The proportions of these categories are 12.4%, 73.4%, 12.4%, and 1.8%, respectively. In addition, the numbers of images in the training set, validation set, and test set are 11,214, 2804, and 14,018, respectively. In this paper, we combine the training and validation sets to train models, while the test set is used to evaluate performance.

- LEVIR-SHIP [70]: This is a tiny ship detection dataset comprising 3896 remote sensing images, each with a resolution of 512 × 512 pixels. The images are captured by the GaoFen-1 and GaoFen-6 satellites and have a spatial resolution of 16 m. The dataset includes 3219 ship instances, annotated with HBBs. Most instances have sizes below 20 × 20 pixels, with a concentration around 10 × 10 pixels. Additionally, the distribution of images across the training, validation, and test sets corresponds to 3/5, 1/5, and 1/5 of the total dataset, respectively. In our experiments, we utilize the training set for model training and the test set for performance evaluation.
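The AI-TODv2 size ranges and split sizes quoted above can be expressed as a small lookup. This is a minimal sketch, assuming that absolute size means the square root of the bounding-box area and that each range is half-open (lower bound included, upper bound excluded); neither choice is stated in the text, and all names are hypothetical.

```python
import math

# Size ranges in pixels, as quoted in the AI-TODv2 description.
SIZE_BINS = [
    ("very tiny", 2, 8),
    ("tiny", 8, 16),
    ("small", 16, 32),
    ("medium", 32, 64),
]

# Image counts per split, as quoted in the AI-TODv2 description.
AITODV2_SPLITS = {"train": 11214, "val": 2804, "test": 14018}


def absolute_size(width, height):
    """Assumed definition: square root of the box area, in pixels."""
    return math.sqrt(width * height)


def size_category(width, height):
    """Return the size category of a box, or None if it falls outside all ranges."""
    size = absolute_size(width, height)
    for name, low, high in SIZE_BINS:
        if low <= size < high:
            return name
    return None


if __name__ == "__main__":
    print(size_category(12, 12))
    print(sum(AITODV2_SPLITS.values()))
```

The split counts sum to the 28,036 images reported for the dataset, which serves as a quick consistency check on the quoted figures.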

4.2. Evaluation Metrics

4.3. Implementation Details

4.4. Ablation Studies

4.4.1. Effectiveness of GSCEM

4.4.2. Effectiveness of GLFFM

4.4.3. Effectiveness of NDDM

4.5. Comparison Experiments

4.5.1. Results on the AI-TODv2 Dataset

4.5.2. Results on the LEVIR-SHIP Dataset

4.6. Analytical Experiments

4.6.1. Analysis of GSCEM-G

4.6.2. Analysis of GSCEM-S

4.6.3. Analytical Experiments of GSCEM Internal Components

4.6.4. Analytical Experiments on Determining the Training Scheduler in NDDM

4.6.5. Analysis of Hyperparameters in NDDM

4.6.6. Analysis of Curriculum Learning Strategy

4.6.7. Analysis of Positive Samples Obtained by NDDM

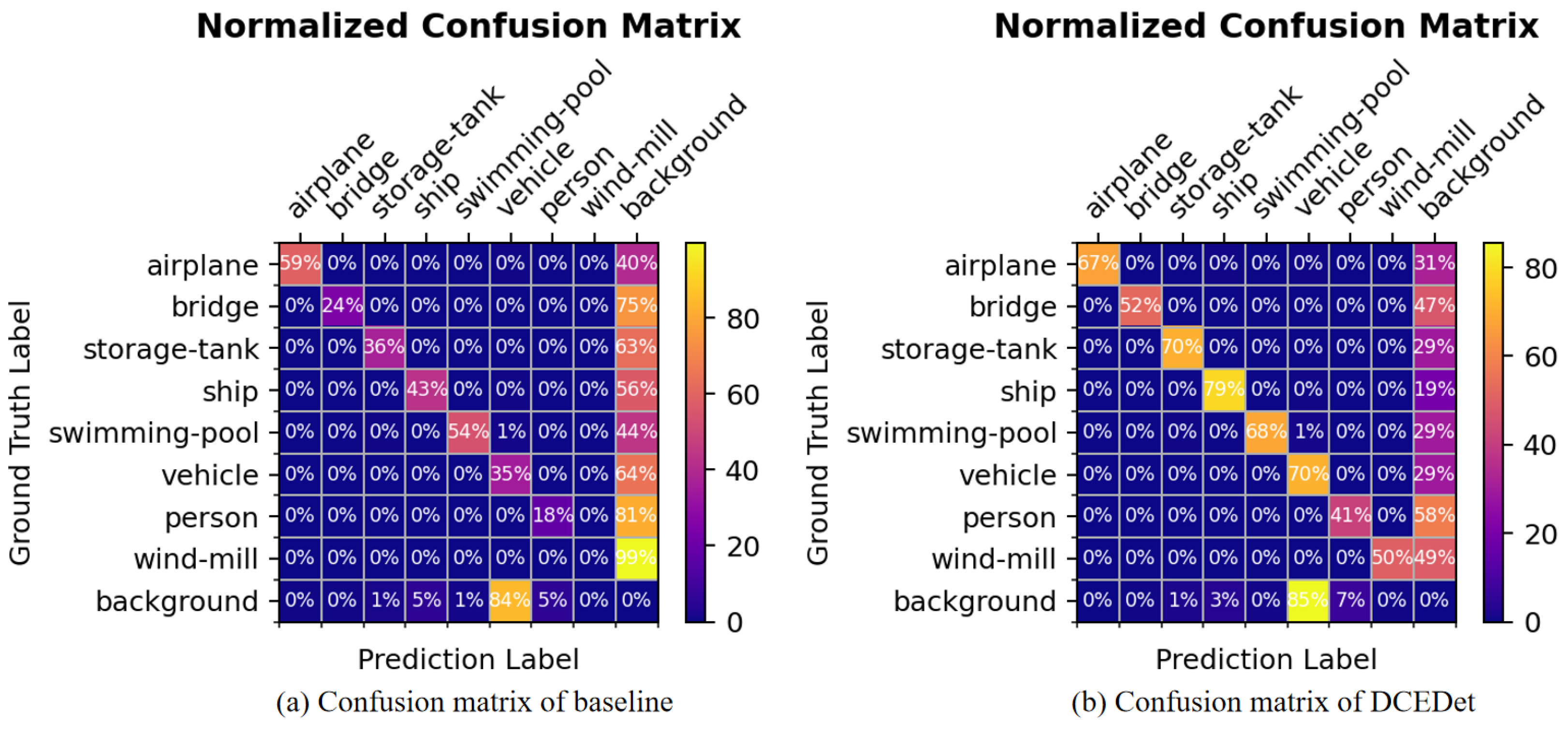

4.6.8. Analysis of Confusion Matrix

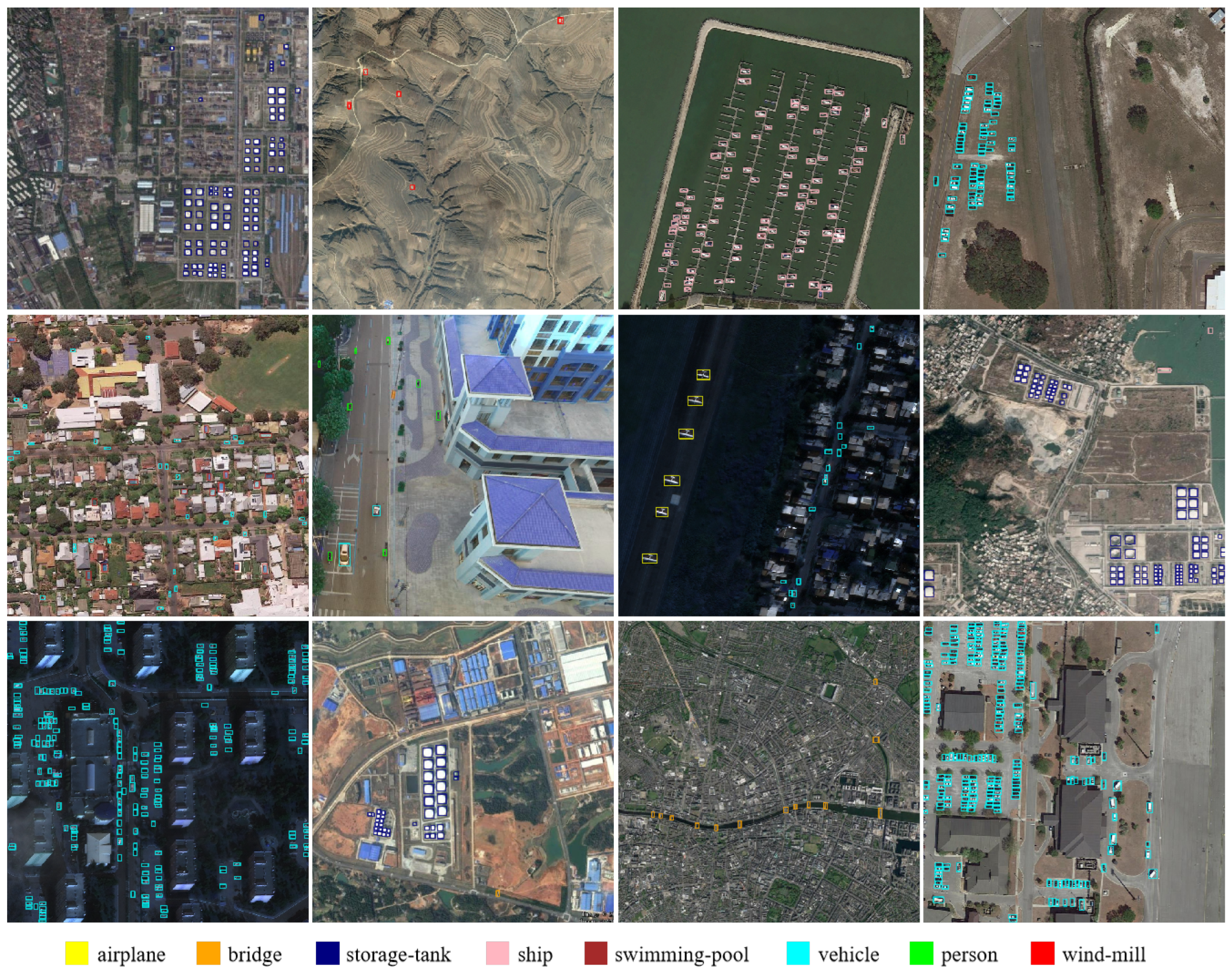

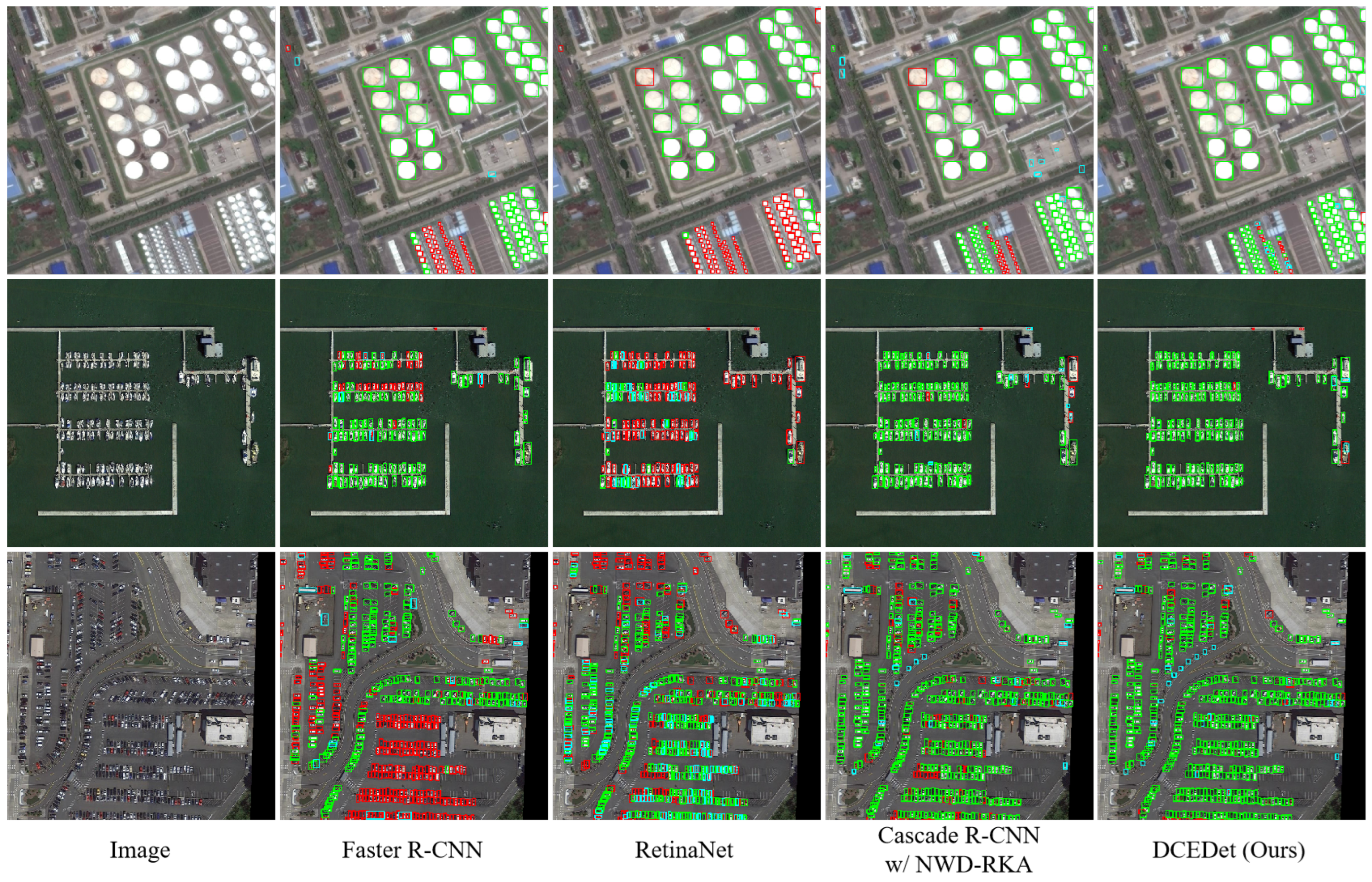

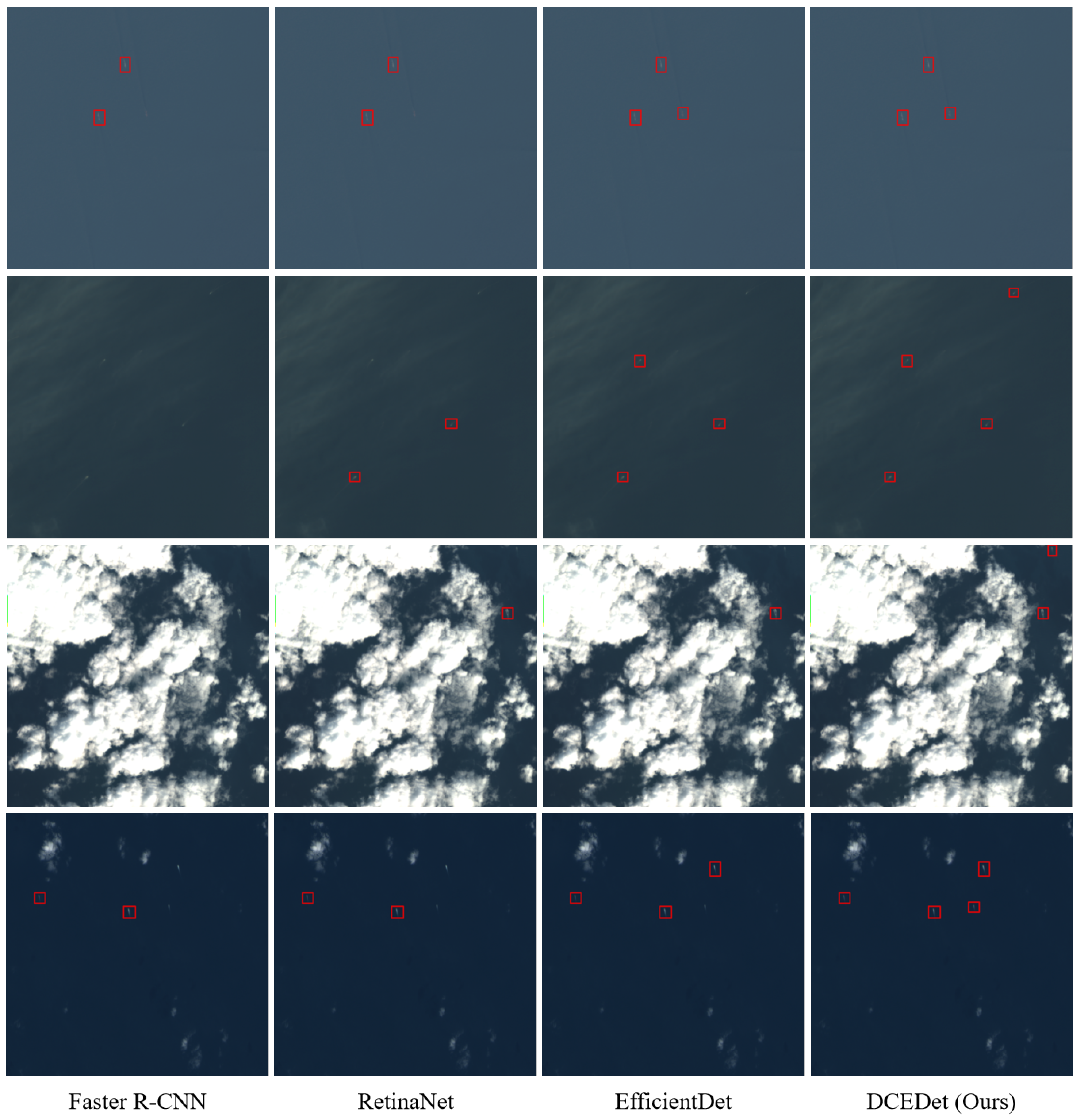

4.7. Visual Results

4.7.1. Detection Results on the AI-TODv2 Dataset

4.7.2. Detection Results on the LEVIR-SHIP Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, B.; Sui, H.; Ma, G.; Zhou, Y.; Zhou, M. Gmodet: A real-time detector for ground-moving objects in optical remote sensing images with regional awareness and semantic–spatial progressive interaction. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–23.

- Ren, Z.; Tang, Y.; Yang, Y.; Zhang, W. Sasod: Saliency-aware ship object detection in high-resolution optical images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Wu, X.; Li, W.; Hong, D.; Tian, J.; Tao, R.; Du, Q. Vehicle detection of multi-source remote sensing data using active fine-tuning network. ISPRS J. Photogramm. Remote Sens. 2020, 167, 39–53.

- Zhang, Q.; Zheng, Y.; Yuan, Q.; Song, M.; Yu, H.; Xiao, Y. Hyperspectral image denoising: From model-driven, data-driven, to model-data-driven. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 13143–13163.

- Zhang, Q.; Yuan, Q.; Song, M.; Yu, H.; Zhang, L. Cooperated spectral low-rankness prior and deep spatial prior for HSI unsupervised denoising. IEEE Trans. Image Process. 2022, 31, 6356–6368.

- Li, J.; Chen, J.; Cheng, P.; Yu, Z.; Yu, L.; Chi, C. A survey on deep-learning-based real-time SAR ship detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3218–3247.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

- Ming, Q.; Miao, L.; Zhou, Z.; Song, J.; Pizurica, A. Gradient calibration loss for fast and accurate oriented bounding box regression. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I. Springer: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

- Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

- Zhang, X.; Zhang, T.; Wang, G.; Zhu, P.; Tang, X.; Jia, X.; Jiao, L. Remote sensing object detection meets deep learning: A metareview of challenges and advances. IEEE Geosci. Remote Sens. Mag. 2023, 11, 8–44.

- Muzammul, M.; Li, X. A survey on deep domain adaptation and tiny object detection challenges, techniques and datasets. arXiv 2021, arXiv:2107.07927.

- Tong, K.; Wu, Y. Deep learning-based detection from the perspective of small or tiny objects: A survey. Image Vis. Comput. 2022, 123, 104471.

- Zhu, Y.; Li, C.; Liu, Y.; Wang, X.; Tang, J.; Luo, B.; Huang, Z. Tiny object tracking: A large-scale dataset and a baseline. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 10273–10287.

- Wang, J.; Yang, W.; Guo, H.; Zhang, R.; Xia, G.S. Tiny object detection in aerial images. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3791–3798.

- Zhang, T.; Zhang, X.; Zhu, X.; Wang, G.; Han, X.; Tang, X.; Jiao, L. Multistage enhancement network for tiny object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Detecting tiny objects in aerial images: A normalized wasserstein distance and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2022, 190, 79–93.

- Ge, L.; Wang, G.; Zhang, T.; Zhuang, Y.; Chen, H.; Dong, H.; Chen, L. Adaptive dynamic label assignment for tiny object detection in aerial images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 6201–6214.

- Fu, R.; Chen, C.; Yan, S.; Heidari, A.A.; Wang, X.; Mansour, R.F.; Chen, H. Gaussian similarity-based adaptive dynamic label assignment for tiny object detection. Neurocomputing 2023, 543, 126285.

- Liu, D.; Zhang, J.; Qi, Y.; Wu, Y.; Zhang, Y. Tiny object detection in remote sensing images based on object reconstruction and multiple receptive field adaptive feature enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5616213.

- Wu, J.; Pan, Z.; Lei, B.; Hu, Y. Fsanet: Feature-and-spatial-aligned network for tiny object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Lu, X.; Zhang, Y.; Yuan, Y.; Feng, Y. Gated and axis-concentrated localization network for remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2019, 58, 179–192.

- Xu, C.; Wang, J.; Yang, W.; Yu, L. Dot distance for tiny object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1192–1201.

- Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Rfla: Gaussian receptive field based label assignment for tiny object detection. In Proceedings of the European Conference on Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part IX. Springer: Cham, Switzerland, 2022; pp. 526–543.

- Zhou, Z.; Zhu, Y. Kldet: Detecting tiny objects in remote sensing images via kullback-leibler divergence. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4703316.

- Li, Z.; Dong, Y.; Shen, L.; Liu, Y.; Pei, Y.; Yang, H.; Zheng, L.; Ma, J. Development and challenges of object detection: A survey. Neurocomputing 2024, 598, 128102.

- Ming, Q.; Miao, L.; Zhou, Z.; Vercheval, N.; Pižurica, A. Not all boxes are equal: Learning to optimize bounding boxes with discriminative distributions in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5622514.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 6154–6162.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2980–2988.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9627–9636.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 734–750.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6569–6578.

- Zhang, R.; Lei, Y. Afgn: Attention feature guided network for object detection in optical remote sensing image. Neurocomputing 2024, 610, 128527.

- Zhang, Y.; Liu, T.; Yu, P.; Wang, S.; Tao, R. Sfsanet: Multiscale object detection in remote sensing image based on semantic fusion and scale adaptability. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–10.

- Ma, W.; Li, N.; Zhu, H.; Jiao, L.; Tang, X.; Guo, Y.; Hou, B. Feature split–merge–enhancement network for remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17.

- Liu, Y.; Li, Q.; Yuan, Y.; Du, Q.; Wang, Q. Abnet: Adaptive balanced network for multiscale object detection in remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

- Han, J.; Ding, J.; Li, J.; Xia, G.S. Align deep features for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–11.

- Cheng, G.; Yao, Y.; Li, S.; Li, K.; Xie, X.; Wang, J.; Yao, X.; Han, J. Dual-aligned oriented detector. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

- Yao, Y.; Cheng, G.; Wang, G.; Li, S.; Zhou, P.; Xie, X.; Han, J. On improving bounding box representations for oriented object detection. IEEE Trans. Geosci. Remote Sens. 2022, 61, 1–11.

- Yu, L.; Zhi, X.; Hu, J.; Zhang, S.; Niu, R.; Zhang, W.; Jiang, S. Improved deformable convolution method for aircraft object detection in flight based on feature separation in remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 8313–8323.

- Huang, Z.; Li, W.; Xia, X.G.; Wu, X.; Cai, Z.; Tao, R. A novel nonlocal-aware pyramid and multiscale multitask refinement detector for object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–20.

- Guo, H.; Yang, X.; Wang, N.; Gao, X. A centerNet++ model for ship detection in sar images. Pattern Recognit. 2021, 112, 107787.

- Li, M.; Gao, Y.; Cai, W.; Yang, W.; Huang, Z.; Hu, X.; Leung, V.C. Enhanced attention guided teacher–student network for weakly supervised object detection. Neurocomputing 2024, 597, 127910.

- Zhang, J.; Ye, B.; Zhang, Q.; Gong, Y.; Lu, J.; Zeng, D. A visual knowledge oriented approach for weakly supervised remote sensing object detection. Neurocomputing 2024, 597, 128114.

- Cheng, G.; Yan, B.; Shi, P.; Li, K.; Yao, X.; Guo, L.; Han, J. Prototype-cnn for few-shot object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.

- Lu, X.; Sun, X.; Diao, W.; Mao, Y.; Li, J.; Zhang, Y.; Wang, P.; Fu, K. Few-shot object detection in aerial imagery guided by text-modal knowledge. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–19.

- Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 966–970.

- Kisantal, M. Augmentation for small object detection. arXiv 2019, arXiv:1902.07296.

- Yu, X.; Gong, Y.; Jiang, N.; Ye, Q.; Han, Z. Scale match for tiny person detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1257–1265.

- Liu, H.I.; Tseng, Y.W.; Chang, K.C.; Wang, P.J.; Shuai, H.H.; Cheng, W.H. A denoising fpn with transformer r-cnn for tiny object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4704415.

- Tong, X.; Su, S.; Wu, P.; Guo, R.; Wei, J.; Zuo, Z.; Sun, B. Msaffnet: A multiscale label-supervised attention feature fusion network for infrared small target detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Zhao, Z.; Du, J.; Li, C.; Fang, X.; Xiao, Y.; Tang, J. Dense tiny object detection: A scene context guided approach and a unified benchmark. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5606913.

- Li, S.; Tong, Q.; Liu, X.; Cui, Z.; Liu, X. Ma2-fpn for tiny object detection from remote sensing images. In Proceedings of the 2022 15th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Beijing, China, 5–7 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8.

- Shi, T.; Gong, J.; Hu, J.; Zhi, X.; Zhu, G.; Yuan, B.; Sun, Y.; Zhang, W. Adaptive feature fusion with attention-guided small target detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5623116.

- Liu, D.; Zhang, J.; Qi, Y.; Wu, Y.; Zhang, Y. A tiny object detection method based on explicit semantic guidance for remote sensing images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Gong, Y.; Yu, X.; Ding, Y.; Peng, X.; Zhao, J.; Han, Z. Effective fusion factor in fpn for tiny object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1160–1168.

- Zhang, X.; Zhang, X.; Cao, S.Y.; Yu, B.; Zhang, C.; Shen, H.L. Mrf3net: An infrared small target detection network using multireceptive field perception and effective feature fusion. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5629414.

- Chen, P.; Wang, J.; Zhang, Z.; He, C. Frli-net: Feature reconstruction and learning interaction network for tiny object detection in remote sensing images. IEEE Signal Process. Lett. 2025, 32, 2159–2163.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2117–2125.

- Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual generative adversarial networks for small object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1222–1230.

- Lin, C.; Mao, X.; Qiu, C.; Zou, L. Dtcnet: Transformer-cnn distillation for super-resolution of remote sensing image. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11117–11133.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2961–2969.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27 June–1 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.

- Zhang, Q.; Dong, Y.; Zheng, Y.; Yu, H.; Song, M.; Zhang, L.; Yuan, Q. Three-dimension spatial-spectral attention transformer for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5531213.

- Wang, X.; Chen, Y.; Zhu, W. A survey on curriculum learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4555–4576.

- Chen, J.; Chen, K.; Chen, H.; Zou, Z.; Shi, Z. A degraded reconstruction enhancement-based method for tiny ship detection in remote sensing images with a new large-scale dataset. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Oksuz, K.; Cam, B.C.; Akbas, E.; Kalkan, S. Localization recall precision (LRP): A new performance metric for object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 504–519.

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. One metric to measure them all: Localisation recall precision (lrp) for evaluating visual detection tasks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9446–9463.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. Mmdetection: Open mmlab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; IEEE: Piscataway, NJ, USA, 2006; Volume 3, pp. 850–855.

- Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6054–6063.

- Qiao, S.; Chen, L.C.; Yuille, A. Detectors: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 10213–10224.

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 9759–9768.

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. Reppoints: Point set representation for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9657–9666.

- Lu, X.; Li, B.; Yue, Y.; Li, Q.; Yan, J. Grid r-cnn. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7363–7372.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Li, Q.; Mou, L.; Liu, Q.; Wang, Y.; Zhu, X.X. Hsf-net: Multiscale deep feature embedding for ship detection in optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 7147–7161.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 10781–10790.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 21–26 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 7132–7141.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. Eca-net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 11534–11542.

- Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3560–3569.

| GSCEM | GLFFM | NDDM | AP | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | 12.4 | 28.7 | 8.6 | 0.0 | 8.8 | 24.2 | 36.8 | 88.3 | 30.1 | 48.2 | 68.3 |
| ✔ | | | 14.1 | 31.8 | 10.5 | 0.1 | 10.8 | 27.1 | 38.0 | 86.6 | 28.4 | 42.8 | 65.4 |
| | ✔ | | 13.2 | 30.3 | 9.5 | 0.0 | 9.7 | 25.3 | 37.5 | 87.5 | 29.2 | 33.9 | 67.2 |
| | | ✔ | 20.3 | 50.3 | 12.5 | 5.8 | 19.5 | 26.3 | 35.1 | 82.1 | 29.3 | 36.0 | 49.2 |
| ✔ | ✔ | | 14.4 | 32.1 | 10.9 | 0.1 | 10.9 | 27.1 | 38.6 | 86.4 | 28.3 | 43.8 | 65.2 |
| ✔ | ✔ | ✔ | 23.5 | 53.9 | 16.8 | 8.5 | 24.1 | 28.1 | 37.1 | 78.8 | 27.7 | 32.4 | 44.7 |

| GSCEM | GLFFM | NDDM | AI | BR | ST | SH | SP | VE | PE | WM |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | / | / | / | / | / | / | / | / |
| | | | 25.6/77.0 | 3.4/96.3 | 19.1/81.8 | 20.2/82.1 | 12.7/86.8 | 13.8/87.2 | 4.2/95.3 | 0.1/99.9 |
| ✔ | | | 27.7/75.2 | 7.9/91.8 | 21.1/80.3 | 23.3/79.1 | 11.9/86.5 | 14.9/86.2 | 5.4/94.5 | 0.4/98.9 |
| | ✔ | | 26.9/75.4 | 6.4/93.7 | 19.7/81.0 | 21.1/81.3 | 12.5/87.0 | 14.2/86.8 | 4.7/95.0 | 0.2/99.9 |
| | | ✔ | 28.5/75.8 | 14.1/87.5 | 32.6/71.3 | 34.1/69.9 | 14.2/86.4 | 24.7/78.6 | 8.8/92.1 | 4.9/95.4 |
| ✔ | ✔ | | 27.3/75.1 | 9.9/90.1 | 20.5/81.1 | 23.7/78.7 | 12.8/86.4 | 15.3/85.7 | 5.7/94.3 | 0.1/99.5 |
| ✔ | ✔ | ✔ | 32.4/71.5 | 17.5/83.5 | 34.2/69.5 | 45.9/58.3 | 15.0/86.0 | 26.9/76.1 | 10.9/90.4 | 5.3/94.7 |

| Method | Publication | Backbone | AP | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Anchor-based two-stage | |||||||||

| Faster R-CNN [11] | TPAMI2016 | ResNet-50 | 12.8 | 29.9 | 9.4 | 0.0 | 9.2 | 24.6 | 37.0 |

| Faster R-CNN [11] | TPAMI2016 | ResNet-101 | 13.1 | 30.7 | 9.2 | 0.0 | 9.7 | 24.6 | 35.5 |

| Faster R-CNN [11] | TPAMI2016 | HRNet-w32 | 14.5 | 32.8 | 10.6 | 0.1 | 11.1 | 27.4 | 37.8 |

| Cascade R-CNN [32] | CVPR2018 | ResNet-50 | 15.1 | 34.2 | 11.2 | 0.1 | 11.5 | 26.7 | 38.5 |

| TridentNet [77] | ICCV2019 | ResNet-50 | 10.1 | 24.5 | 6.7 | 0.1 | 6.3 | 19.8 | 31.9 |

| DetectoRS [78] | CVPR2021 | ResNet-50 | 16.1 | 35.5 | 12.5 | 0.1 | 12.6 | 28.3 | 40.0 |

| DotD [27] | CVPR2021 | ResNet-50 | 20.4 | 51.4 | 12.3 | 8.5 | 21.1 | 24.6 | 30.4 |

| Cascade R-CNN w/NWD-RKA [21] | ISPRS2022 | ResNet-50 | 22.2 | 52.5 | 15.1 | 7.8 | 21.8 | 28.0 | 37.2 |

| Anchor-based one-stage | |||||||||

| SSD [12] | ECCV2016 | VGG-16 | 10.7 | 32.5 | 4.0 | 2.0 | 8.7 | 16.8 | 28.0 |

| RetinaNet [33] | ICCV2017 | ResNet-50 | 8.9 | 24.2 | 4.6 | 2.7 | 8.4 | 13.1 | 20.4 |

| ATSS [79] | CVPR2020 | ResNet-50 | 13.0 | 31.0 | 8.7 | 2.3 | 11.2 | 18.0 | 29.9 |

| Anchor-free | |||||||||

| FCOS [34] | ICCV2019 | ResNet-50 | 12.0 | 30.2 | 7.3 | 2.2 | 11.1 | 16.6 | 26.9 |

| RepPoints [80] | ICCV2019 | ResNet-50 | 9.3 | 23.6 | 5.4 | 2.8 | 10.0 | 12.3 | 18.9 |

| Grid R-CNN [81] | CVPR2019 | ResNet-50 | 14.3 | 31.1 | 11.0 | 0.1 | 11.0 | 25.7 | 36.7 |

| FoveaBox [82] | TIP2020 | ResNet-50 | 11.3 | 28.1 | 7.4 | 1.4 | 8.6 | 17.8 | 32.2 |

| FSANet [25] | TGRS2022 | ResNet-50 | 17.6 | 45.0 | 10.5 | 5.4 | 15.8 | 22.9 | 33.8 |

| ORFENet [24] | TGRS2024 | ResNet-50 | 18.9 | 44.4 | 12.7 | 6.9 | 18.4 | 23.4 | 30.3 |

| ESG_TODNet [59] | GRSL2024 | ResNet-50 | 19.9 | 47.7 | 13.6 | 6.1 | 19.3 | 24.7 | 30.4 |

| FRLI-Net [62] | SPL2025 | ResNet-50 | 20.1 | 48.5 | 13.5 | 6.1 | 20.8 | 25.9 | 31.8 |

| DCEDet (Ours) | – | ResNet-50 | 23.5 | 53.9 | 16.8 | 8.5 | 24.1 | 28.1 | 37.1 |

| Cascade R-CNN w/NWD- [21] | ISPRS2022 | ResNet-50 | 25.1 | 55.4 | 18.9 | 10.1 | 25.0 | 29.2 | 38.8 |

| [24] | TGRS2024 | ResNet-50 | 24.8 | 55.4 | 18.2 | 9.7 | 24.4 | 28.7 | 35.1 |

| ESG_ [59] | GRSL2024 | ResNet-50 | 24.6 | 55.1 | 18.1 | 9.5 | 24.0 | 29.4 | 35.6 |

| (Ours) | – | ResNet-50 | 26.8 | 55.8 | 21.9 | 11.2 | 27.8 | 31.2 | 40.2 |

| Method | Publication | Backbone | AI | BR | ST | SH | SP | VE | PE | WM |

|---|---|---|---|---|---|---|---|---|---|---|

| Anchor-based two-stage | ||||||||||

| Faster R-CNN [11] | TPAMI2016 | ResNet-50 | 19.7 | 4.8 | 19.0 | 19.9 | 3.7 | 14.4 | 4.8 | 0.0 |

| Faster R-CNN [11] | TPAMI2016 | ResNet-101 | 25.3 | 8.5 | 19.4 | 19.9 | 12.5 | 14.6 | 4.5 | 0.0 |

| Faster R-CNN [11] | TPAMI2016 | HRNet-w32 | 27.9 | 9.5 | 21.5 | 21.4 | 13.0 | 16.7 | 5.9 | 0.0 |

| Cascade R-CNN [32] | CVPR2018 | ResNet-50 | 26.2 | 9.6 | 24.0 | 24.3 | 13.2 | 17.5 | 5.8 | 0.1 |

| TridentNet [77] | ICCV2019 | ResNet-50 | 19.3 | 0.1 | 17.2 | 16.2 | 12.4 | 12.5 | 3.4 | 0.0 |

| DetectoRS [78] | CVPR2021 | ResNet-50 | 28.5 | 11.7 | 23.2 | 26.4 | 14.9 | 17.6 | 6.5 | 0.2 |

| DotD [27] | CVPR2021 | ResNet-50 | 18.7 | 17.5 | 34.7 | 37.0 | 12.4 | 25.4 | 10.3 | 7.4 |

| Cascade R-CNN w/NWD-RKA [21] | ISPRS2022 | ResNet-50 | 28.5 | 17.5 | 36.9 | 38.3 | 13.7 | 26.6 | 10.4 | 5.7 |

| Anchor-based one-stage | ||||||||||

| SSD [12] | ECCV2016 | VGG-16 | 14.9 | 9.6 | 13.2 | 18.2 | 10.6 | 12.7 | 2.9 | 3.1 |

| RetinaNet [33] | ICCV2017 | ResNet-50 | 1.3 | 11.8 | 14.3 | 23.6 | 5.8 | 11.4 | 2.3 | 0.5 |

| ATSS [79] | CVPR2020 | ResNet-50 | 15.4 | 11.7 | 20.0 | 27.6 | 9.4 | 14.8 | 4.7 | 0.0 |

| Anchor-free | ||||||||||

| FCOS [34] | ICCV2019 | ResNet-50 | 7.2 | 13.4 | 20.2 | 26.7 | 8.4 | 16.3 | 3.5 | 0.0 |

| RepPoints [80] | ICCV2019 | ResNet-50 | 0.0 | 0.1 | 22.5 | 28.8 | 0.2 | 18.3 | 4.1 | 0.0 |

| Grid R-CNN [81] | CVPR2019 | ResNet-50 | 24.5 | 11.7 | 20.9 | 23.5 | 12.1 | 16.1 | 5.1 | 0.4 |

| FoveaBox [82] | TIP2020 | ResNet-50 | 15.6 | 3.3 | 21.1 | 20.8 | 9.7 | 16.3 | 4.0 | 0.0 |

| FSANet [25] | TGRS2022 | ResNet-50 | 19.2 | 16.0 | 28.3 | 33.0 | 12.9 | 20.4 | 6.0 | 5.3 |

| ORFENet [24] | TGRS2024 | ResNet-50 | 14.6 | 18.8 | 32.2 | 38.2 | 13.1 | 25.5 | 8.4 | 0.0 |

| ESG_TODNet [59] | GRSL2024 | ResNet-50 | 17.5 | 18.2 | 34.1 | 37.8 | 13.0 | 25.1 | 8.0 | 5.2 |

| FRLI-Net [62] | SPL2025 | ResNet-50 | 17.3 | 19.3 | 33.7 | 37.9 | 14.2 | 25.5 | 8.9 | 6.1 |

| DCEDet (Ours) | – | ResNet-50 | 32.4 | 17.5 | 34.2 | 45.9 | 15.0 | 26.9 | 10.9 | 5.3 |

| Cascade R-CNN w/NWD- [21] | ISPRS2022 | ResNet-50 | 32.3 | 16.8 | 36.2 | 53.1 | 16.6 | 27.0 | 12.0 | 6.8 |

| [24] | TGRS2024 | ResNet-50 | 26.0 | 21.1 | 35.8 | 50.6 | 17.1 | 27.8 | 11.0 | 8.7 |

| ESG_ [59] | GRSL2024 | ResNet-50 | 26.6 | 20.5 | 35.5 | 50.7 | 16.5 | 27.8 | 11.2 | 8.0 |

| (Ours) | – | ResNet-50 | 34.7 | 19.8 | 37.0 | 54.0 | 18.4 | 29.1 | 14.3 | 7.1 |

| Method | | |
|---|---|---|
| Faster R-CNN [11] | 69.9 | 7.0 |
| FCOS [34] | 75.1 | 10.8 |
| SSD [12] | 51.1 | 3.4 |
| RetinaNet [33] | 73.7 | 10.6 |
| HSF-Net [83] | 73.4 | 8.7 |
| CenterNet [36] | 77.9 | 10.1 |
| EfficientDet [84] | 79.4 | 13.1 |
| DCEDet (Ours) | 81.2 | 13.4 |

| Kernel Sizes | AP | AP50 | AP75 | APvt | APt | APs | APm |
|---|---|---|---|---|---|---|---|
| 1-3-5-7 | 13.6 | 30.9 | 10.0 | 0.0 | 9.7 | 26.3 | 38.1 |
| 3-3-3-3 | 13.7 | 31.4 | 10.0 | 0.0 | 9.6 | 26.4 | 38.1 |
| 3-5-7-9 | 13.9 | 31.7 | 10.3 | 0.1 | 10.4 | 26.6 | 38.0 |
| 5-5-5-5 | 13.6 | 31.1 | 9.8 | 0.1 | 10.2 | 26.2 | 37.4 |
| 5-7-9-11 | 13.5 | 30.6 | 10.3 | 0.0 | 9.6 | 26.2 | 38.0 |
| 7-7-7-7 | 13.5 | 30.5 | 10.0 | 0.0 | 9.8 | 25.9 | 38.5 |

| Attention | AP | AP50 | AP75 | APvt | APt | APs | APm |
|---|---|---|---|---|---|---|---|
| – | 13.9 | 31.7 | 10.3 | 0.1 | 10.4 | 26.6 | 38.0 |
| SE | 13.1 | 29.7 | 9.7 | 0.0 | 9.3 | 25.8 | 37.7 |
| ECA | 13.3 | 30.3 | 9.8 | 0.1 | 9.5 | 25.8 | 37.8 |
| MS-CAM | 13.4 | 30.6 | 9.6 | 0.0 | 9.9 | 26.2 | 38.0 |
| GSCEM-S | 14.1 | 31.8 | 10.5 | 0.1 | 10.8 | 27.1 | 38.0 |

| Model | AP | AP50 | AP75 | APvt | APt | APs | APm |
|---|---|---|---|---|---|---|---|
| Baseline | 12.4 | 28.7 | 8.6 | 0.0 | 8.8 | 24.2 | 36.8 |
| Baseline_GSCEM-G | 13.9 | 31.7 | 10.3 | 0.1 | 10.4 | 26.6 | 38.0 |
| Baseline_GSCEM-S | 13.8 | 31.2 | 10.3 | 0.0 | 10.0 | 26.7 | 38.8 |
| Baseline_GSCEM-G_GSCEM-S | 14.1 | 31.8 | 10.5 | 0.1 | 10.8 | 27.1 | 38.0 |

| | AP | AP50 | AP75 | APvt | APt | APs | APm |
|---|---|---|---|---|---|---|---|
| Baseline_NDDM_linear | 18.8 | 47.0 | 11.5 | 3.6 | 18.3 | 25.2 | 34.3 |
| Baseline_NDDM_root | 19.6 | 48.5 | 12.2 | 4.3 | 19.0 | 25.7 | 35.2 |
| Baseline_NDDM_exponential | 15.9 | 41.4 | 8.8 | 2.8 | 14.4 | 22.1 | 33.5 |
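The three variants compared above are named after the shape of a training-time ramp. As a rough illustration of how linear, root, and exponential ramps differ, the following Python sketch interpolates a value between two endpoints as a function of the current epoch t and the total number of epochs T. The function names, the endpoints 0.30 and 0.85, the steepness constant `k`, and the functional forms themselves are assumptions made for this example; they are not taken from the paper's definition of NDDM.

```python
import math


def ramp(progress: float, kind: str, k: float = 5.0) -> float:
    """Map training progress in [0, 1] to a ramp value in [0, 1].

    Hypothetical forms, chosen only to show the qualitative shapes.
    """
    if not 0.0 <= progress <= 1.0:
        raise ValueError("progress must lie in [0, 1]")
    if kind == "linear":
        return progress
    if kind == "root":
        return math.sqrt(progress)
    if kind == "exponential":
        # Normalised so the ramp is 0 at progress=0 and 1 at progress=1.
        return math.expm1(k * progress) / math.expm1(k)
    raise ValueError(f"unknown schedule: {kind}")


def scheduled_value(t: int, T: int, start: float, end: float, kind: str) -> float:
    """Interpolate from `start` (epoch 0) to `end` (epoch T)."""
    return start + (end - start) * ramp(t / T, kind)


if __name__ == "__main__":
    T = 12  # assumed number of epochs, for illustration only
    for kind in ("linear", "root", "exponential"):
        values = [round(scheduled_value(t, T, 0.30, 0.85, kind), 3) for t in (0, 6, 12)]
        print(kind, values)
```

Under these assumed forms, all three ramps share the same endpoints; the root ramp moves fastest early in training and the exponential ramp moves fastest late.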

| | 0.00 | 0.10 | 0.15 | 0.20 | 0.25 | 0.30 |
|---|---|---|---|---|---|---|
| 0.80 | 19.8/4.0/19.2 | 20.3/4.8/19.8 | 20.3/3.5/19.8 | 20.2/3.8/19.8 | 20.3/4.2/20.0 | 19.9/3.9/20.0 |
| 0.85 | 20.0/3.3/19.8 | 20.3/4.2/20.0 | 20.0/4.6/20.1 | 20.1/5.0/20.0 | 20.3/4.7/20.2 | 20.6/5.2/20.3 |
| 0.90 | 19.5/3.3/19.0 | 19.9/3.2/20.0 | 20.0/3.9/19.9 | 20.4/3.5/19.7 | 20.0/4.8/19.7 | 20.1/3.4/19.8 |
| 0.95 | 19.7/3.6/19.3 | 20.1/4.2/20.2 | 20.2/3.5/19.9 | 20.2/3.3/20.1 | 20.1/3.0/20.1 | 20.3/3.1/20.0 |
| 1.00 | 19.7/2.6/19.4 | 20.1/2.7/20.0 | 20.2/3.3/20.2 | 20.2/3.3/19.6 | 20.3/2.6/19.7 | 20.2/2.4/19.8 |

| | AP | AP50 | AP75 | APvt | APt | APs | APm |
|---|---|---|---|---|---|---|---|
| 0 | 13.6 | 34.5 | 8.2 | 2.0 | 11.0 | 20.5 | 34.4 |
| 1 | 18.8 | 47.2 | 11.1 | 2.7 | 18.2 | 26.1 | 34.9 |
| 0.85–0.30 | 19.2 | 48.2 | 11.4 | 5.0 | 18.5 | 26.0 | 35.1 |
| 0.30–0.85 | 20.3 | 50.3 | 12.5 | 5.8 | 19.5 | 26.3 | 35.1 |

| Model | Pos_num | AP | APvt | APt |
|---|---|---|---|---|
| Baseline | 244,177 (∼244K) | 12.2 | 0.0 | 8.6 |
| Baseline + NDDM | 669,011 (∼669K) | 20.0 | 5.4 | 19.4 |
| Baseline + GSCEM + GLFFM | 244,229 (∼244K) | 14.4 | 0.0 | 10.8 |
| Baseline + GSCEM + GLFFM + NDDM | 669,030 (∼669K) | 23.3 | 7.2 | 23.4 |
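To make the change in positive-sample counts concrete, the short Python sketch below computes the ratio of Pos_num (read here as the number of positive samples) and the AP difference when NDDM is added. The numbers are copied from the table above; the dictionary layout and the helper name `compare` are only illustrative.

```python
# (Pos_num, AP) copied from the table above.
rows = {
    "Baseline": (244_177, 12.2),
    "Baseline + NDDM": (669_011, 20.0),
    "Baseline + GSCEM + GLFFM": (244_229, 14.4),
    "Baseline + GSCEM + GLFFM + NDDM": (669_030, 23.3),
}


def compare(without: str, with_nddm: str) -> tuple[float, float]:
    """Return (positive-sample ratio, AP gain) when NDDM is added."""
    pos_a, ap_a = rows[without]
    pos_b, ap_b = rows[with_nddm]
    return round(pos_b / pos_a, 2), round(ap_b - ap_a, 1)


print(compare("Baseline", "Baseline + NDDM"))
print(compare("Baseline + GSCEM + GLFFM", "Baseline + GSCEM + GLFFM + NDDM"))
```

In both settings the count of positive samples grows by a factor of about 2.74, alongside AP gains of 7.8 and 8.9 points respectively.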

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, X.; Ren, Z.; Bhatti, U.A.; Huang, M.; Wu, Y. DCEDet: Tiny Object Detection in Remote Sensing Images Based on Dual-Contrast Feature Enhancement and Dynamic Distance Measurement. Remote Sens. 2025, 17, 2876. https://doi.org/10.3390/rs17162876
