LSTMConvSR: Joint Long–Short-Range Modeling via LSTM-First–CNN-Next Architecture for Remote Sensing Image Super-Resolution

Abstract

1. Introduction

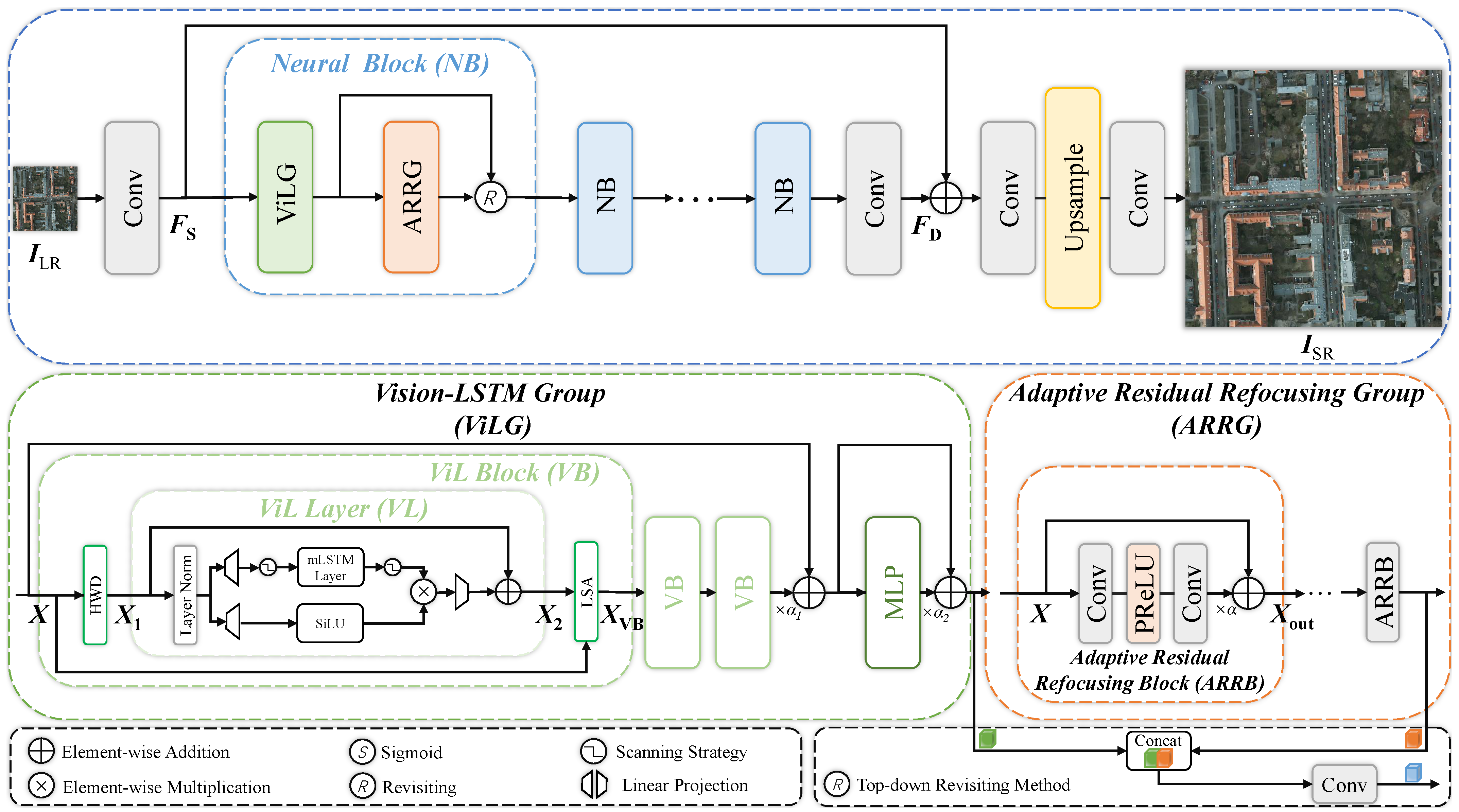

- We propose LSTMConvSR, an LSTM-first–CNN-next framework inspired by top-down neural attention, for remote sensing image super-resolution.

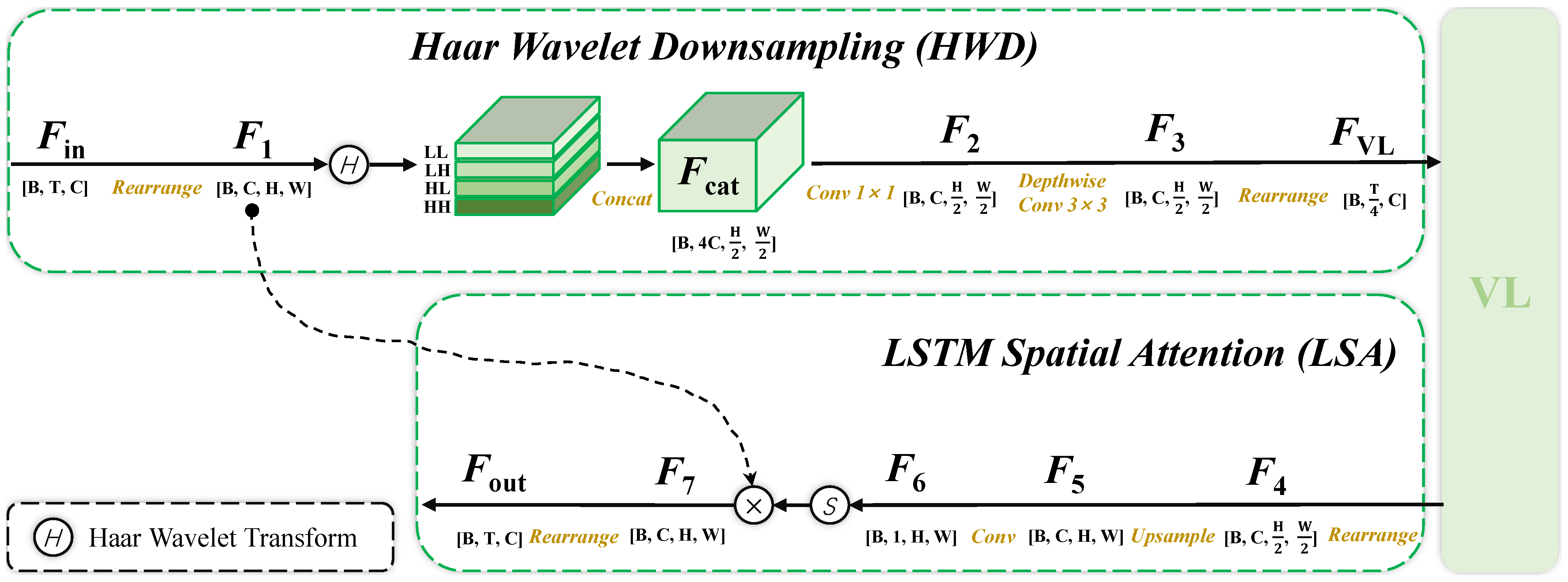

- We introduce Haar Wavelet Downsampling (HWD) and LSTM Spatial Attention (LSA) into Vision-LSTM (ViL), reducing its computational complexity while maintaining reconstruction performance.

- We propose a Top-down Revisiting Method that cascades LSTM-derived features with CNN-refocused representations through concatenation–projection, establishing an LSTM-first–CNN-next framework with cross-stage feature retrospection.

2. Related Works

2.1. Remote Sensing Single-Image Super-Resolution

2.2. Biomimetic Top-Down Neural Attention-Based Vision Models

3. Methodology

3.1. Overview of LSTMConvSR

3.2. Vision-LSTM Group
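The contribution list states that Haar Wavelet Downsampling (HWD) is introduced into ViL to cut its cost. In the HWD design of Xu et al., strided downsampling is replaced by a one-level 2-D Haar wavelet transform: each channel is split into four half-resolution subbands (one low-frequency, three high-frequency) that are stacked along the channel axis, so spatial detail is moved into channels rather than discarded. The pure-Python sketch below shows only that lossless transform step. The 1/2 orthonormal scaling, the subband naming (conventions vary between libraries), and the helper names `haar_dwt2` and `haar_downsample` are assumptions; the learned 1×1 convolution block that follows the transform in HWD, and how LSTMConvSR places HWD inside the ViL Group, are not modelled.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # one feature channel, H x W


def haar_dwt2(x: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """One-level orthonormal 2-D Haar transform of a single channel.

    Every non-overlapping 2x2 block [[a, b], [c, d]] yields one coefficient
    per subband, so each subband is H/2 x W/2. The 1/2 factor keeps the
    transform orthonormal: total energy is preserved and it is invertible.
    """
    h, w = len(x), len(x[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must both be even")
    ll: Matrix = []
    lh: Matrix = []
    hl: Matrix = []
    hh: Matrix = []
    for i in range(0, h, 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]
            c, d = x[i + 1][j], x[i + 1][j + 1]
            r_ll.append((a + b + c + d) / 2)  # low-frequency approximation
            r_lh.append((a + b - c - d) / 2)  # top minus bottom: horizontal edges
            r_hl.append((a - b + c - d) / 2)  # left minus right: vertical edges
            r_hh.append((a - b - c + d) / 2)  # diagonal detail
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def haar_downsample(channels: List[Matrix]) -> List[Matrix]:
    """Map C x H x W to 4C x H/2 x W/2 by stacking each channel's subbands.

    The learned 1x1 convolution that HWD applies afterwards is omitted here.
    """
    out: List[Matrix] = []
    for ch in channels:
        out.extend(haar_dwt2(ch))
    return out
```

Because each 2×2 block collapses to one spatial position, a feature map of 64×64 = 4096 positions becomes 32×32 = 1024 positions with four times the channels. If the ViL layers scan the downsampled map (an assumption about placement), they process a sequence four times shorter, which is one plausible contributor to the FLOP and memory savings reported for HWD and LSA.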

3.3. Adaptive Residual Refocusing Group

3.4. Top-Down Revisiting Method
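The contribution list describes the Top-down Revisiting Method as cascading LSTM-derived features with CNN-refocused representations through concatenation–projection. Read literally, that operation is a channel-wise concatenation followed by a pointwise linear projection, which is exactly what a 1×1 convolution computes at each pixel. The plain-Python sketch below illustrates only that fusion step under this reading. The names `concat_project` and `revisit`, the nested-list feature layout, and the absence of any normalization, activation, or residual path are illustrative assumptions, not details taken from LSTMConvSR.

```python
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # H x W grid of per-pixel channel vectors


def concat_project(lstm_feat: Vector, cnn_feat: Vector,
                   weight: List[Vector], bias: Vector) -> Vector:
    """Fuse two per-pixel feature vectors: y = W @ [f_lstm; f_cnn] + b.

    weight has shape C_out x (C1 + C2); this equals a 1x1 convolution
    evaluated at a single spatial position.
    """
    z = lstm_feat + cnn_feat  # channel-wise concatenation
    if any(len(row) != len(z) for row in weight):
        raise ValueError("each weight row must match the concatenated width")
    if len(weight) != len(bias):
        raise ValueError("need exactly one bias per output channel")
    return [sum(w * v for w, v in zip(row, z)) + b
            for row, b in zip(weight, bias)]


def revisit(lstm_map: FeatureMap, cnn_map: FeatureMap,
            weight: List[Vector], bias: Vector) -> FeatureMap:
    """Apply the same concatenation-projection at every pixel of two aligned maps."""
    return [[concat_project(f, g, weight, bias) for f, g in zip(row_f, row_g)]
            for row_f, row_g in zip(lstm_map, cnn_map)]
```

With a weight matrix that averages matching channels, `[[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]`, fusing an LSTM vector `[2.0, 4.0]` with a CNN vector `[6.0, 8.0]` gives `[4.0, 6.0]`. In a trained network the weights are learned, so the projection decides per output channel how much long-range (LSTM) and local (CNN) evidence to keep.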

4. Experiments

4.1. Datasets and Evaluation
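The quantitative tables report PSNR, SSIM, LPIPS, and CLIPIQA. Of these, PSNR is a closed-form function of the mean squared error, PSNR = 10·log10(MAX²/MSE), so a short sketch clarifies what the reported decibel values measure. The function name, the flat-sequence image representation, and the default 8-bit peak value of 255 are assumptions; the evaluation protocol behind the tables (color channel, border cropping, data range) is not specified in this section.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are passed as flat sequences of pixel values. Identical inputs
    have zero mean squared error, for which +inf is returned.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR is logarithmic in MSE, small decibel gaps correspond to small error changes: a 0.03 dB difference, such as 33.91 versus 33.94 dB on Potsdam, corresponds to roughly 0.7% lower MSE, since 10^(0.03/10) ≈ 1.007.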

4.2. Implementation Details

4.3. Ablation Study

4.3.1. Effect of Key Modules

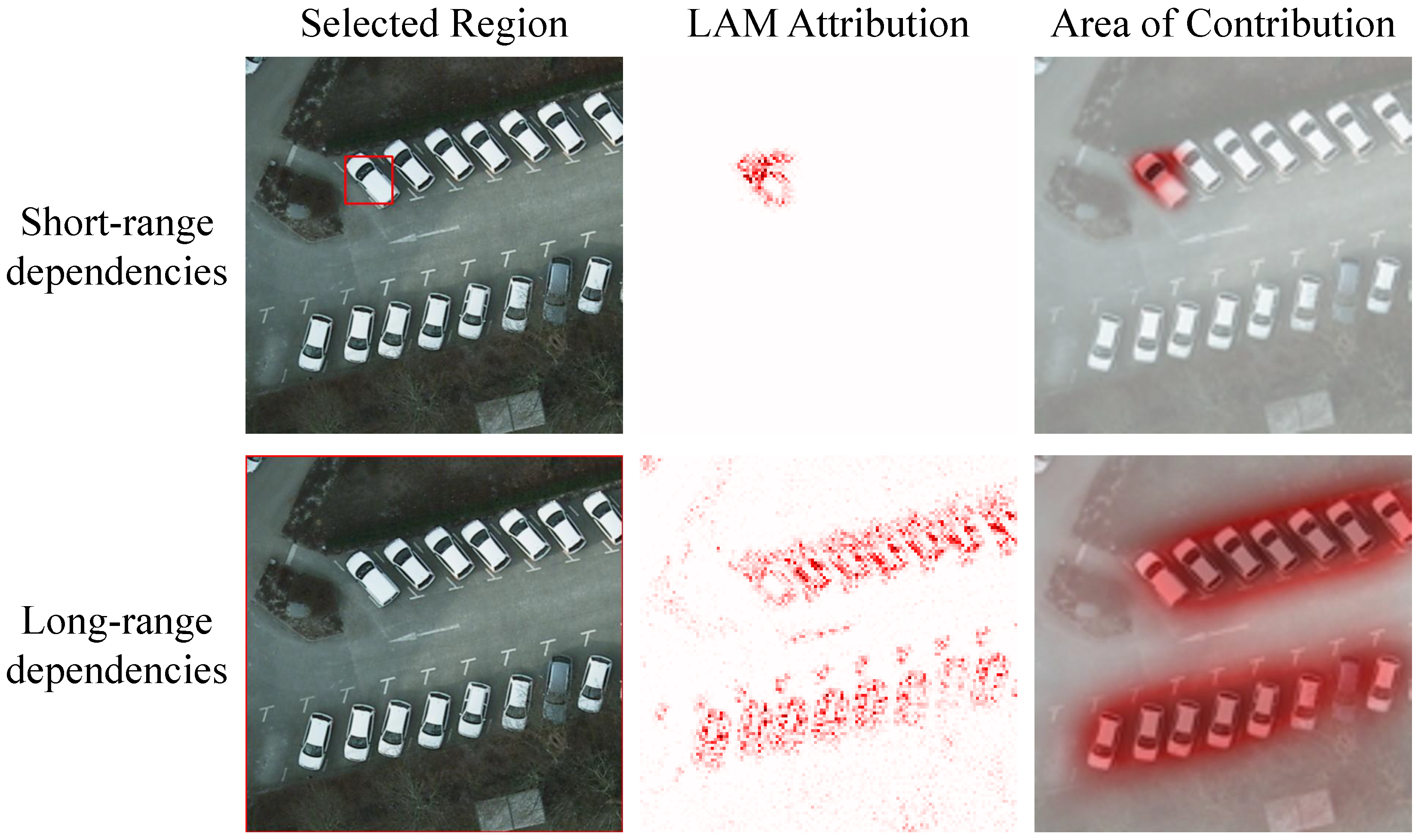

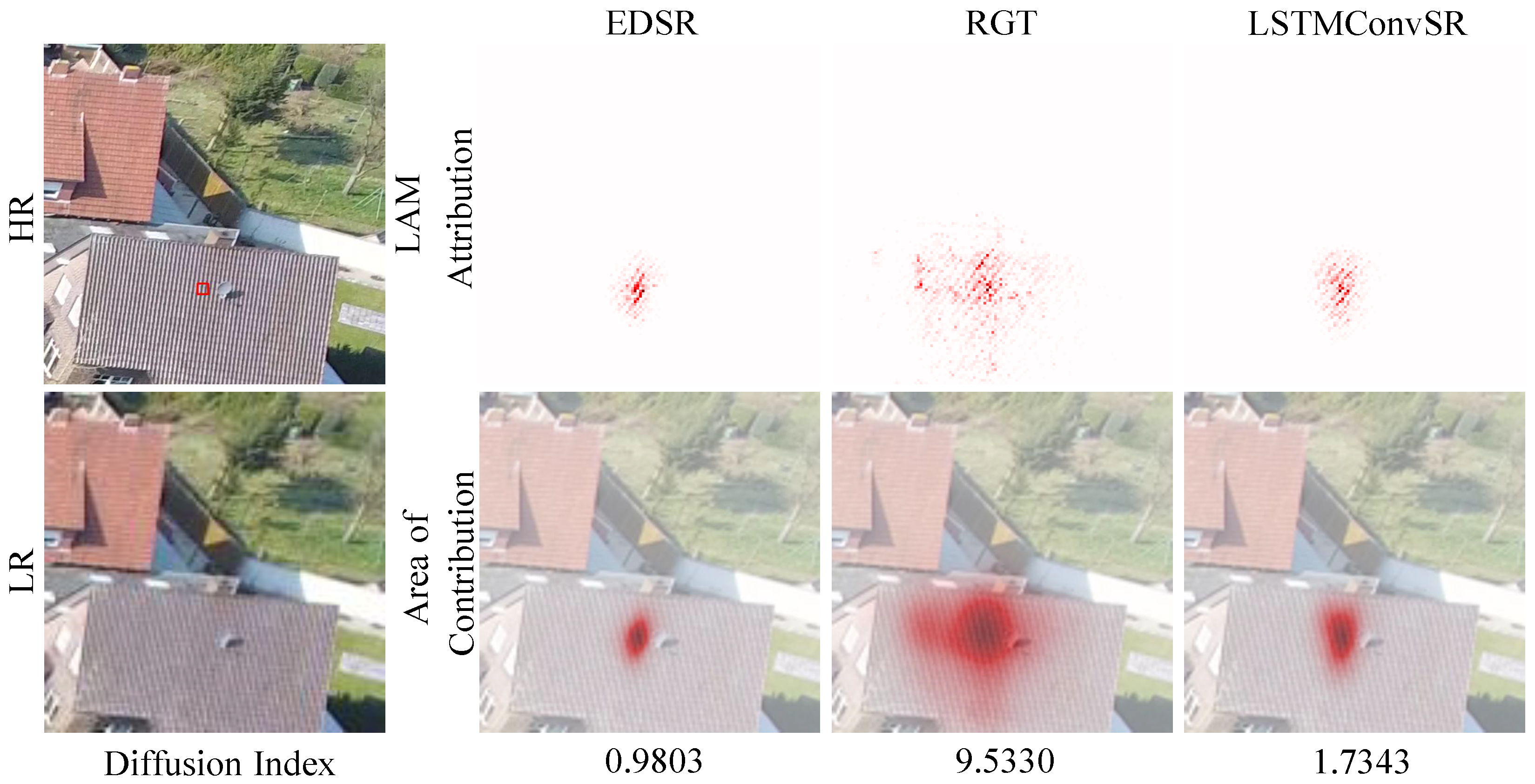

4.3.2. Visualization of LSTM-First–CNN-Next

4.4. Comparisons with State of the Art

4.4.1. Comparative Methods

4.4.2. Quantitative Evaluations

4.4.3. Complexity and Efficiency Evaluation

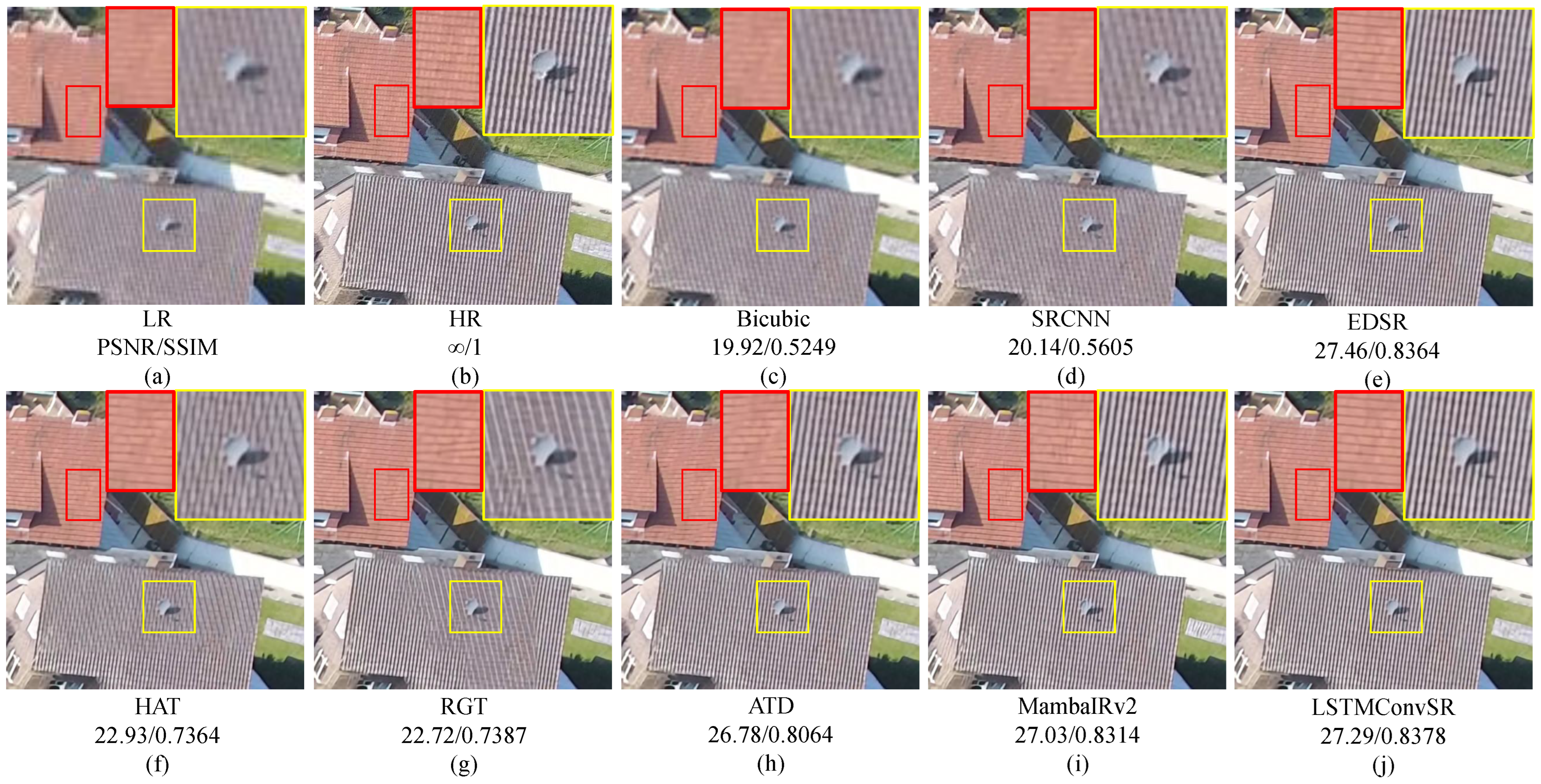

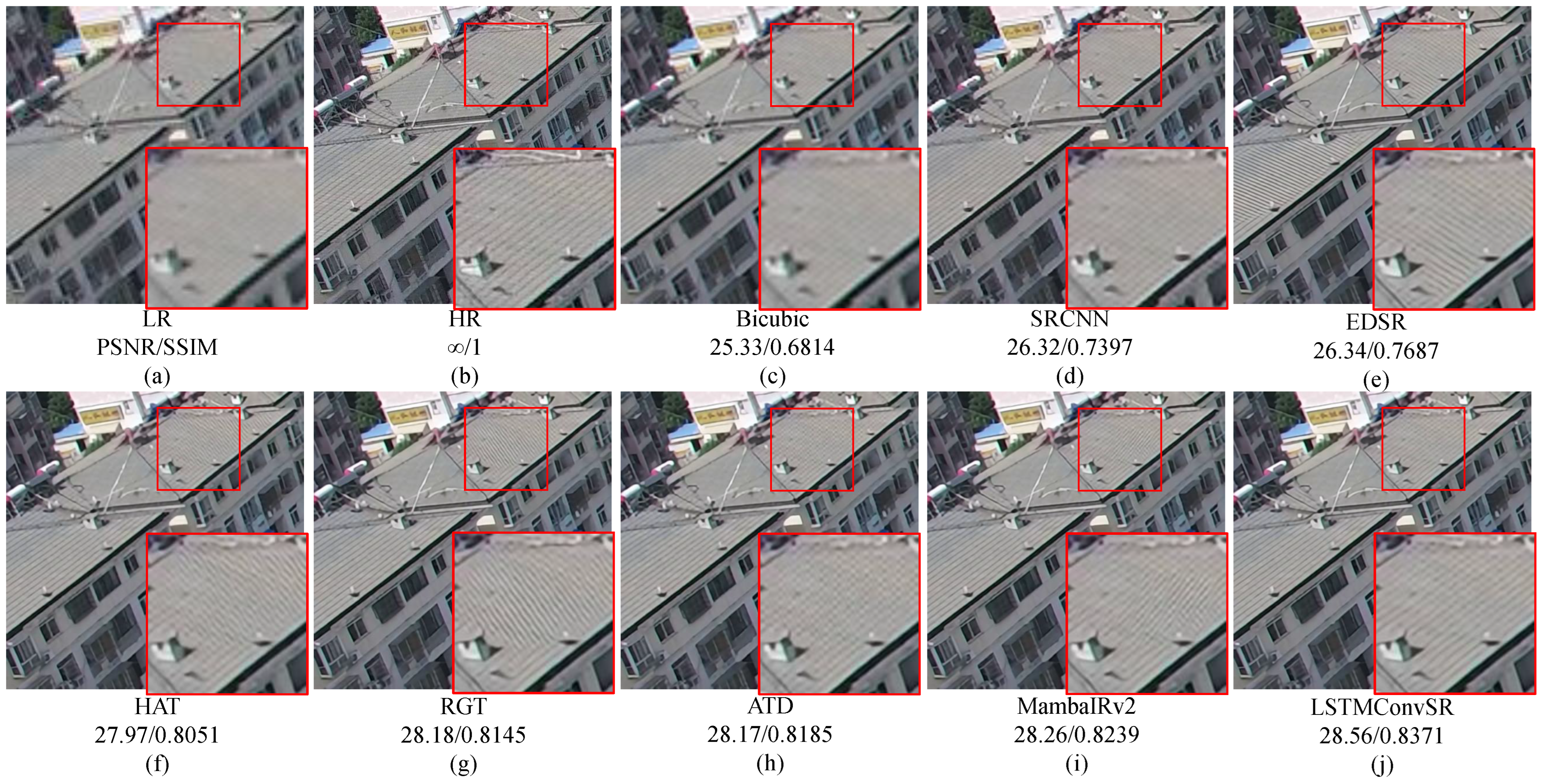

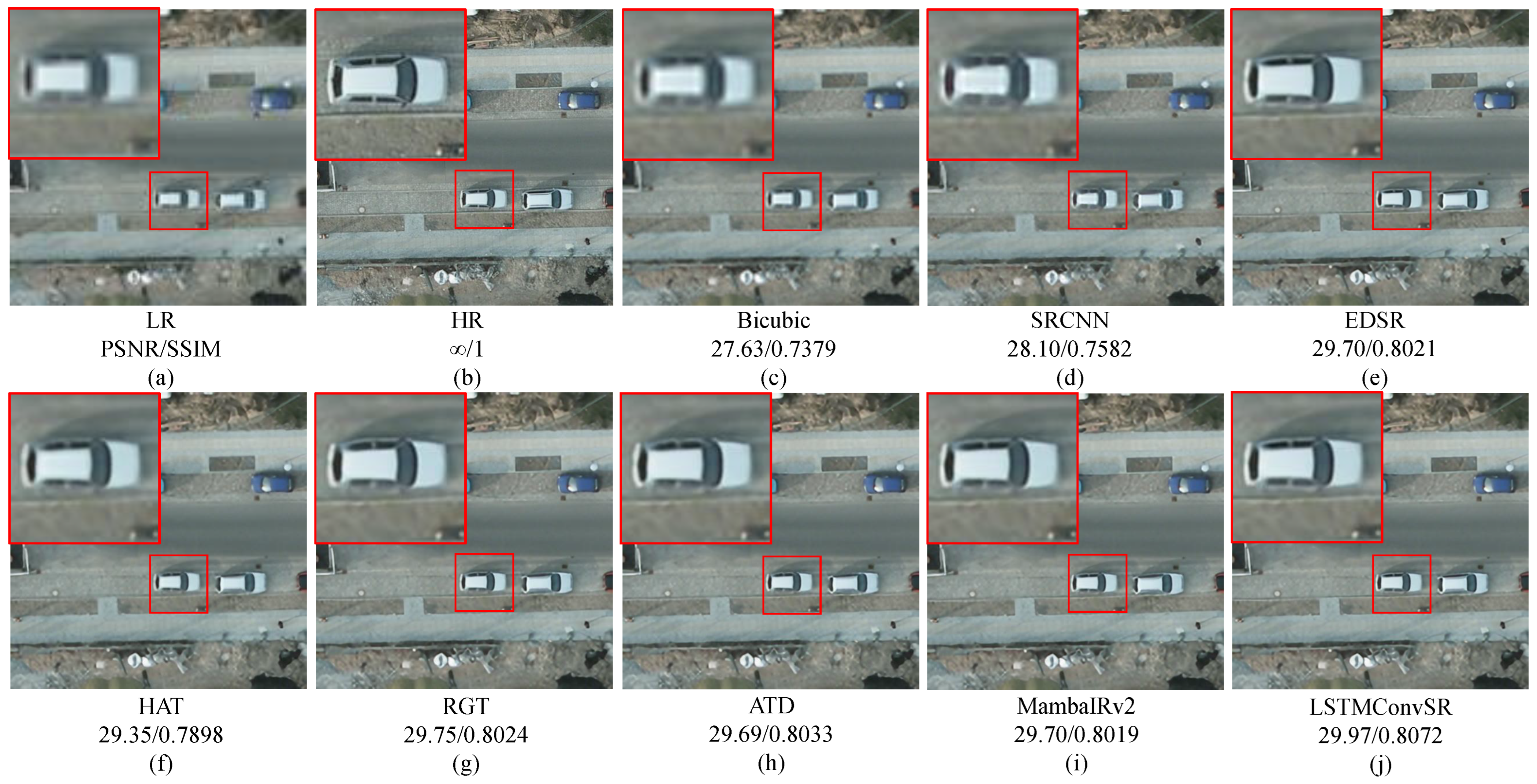

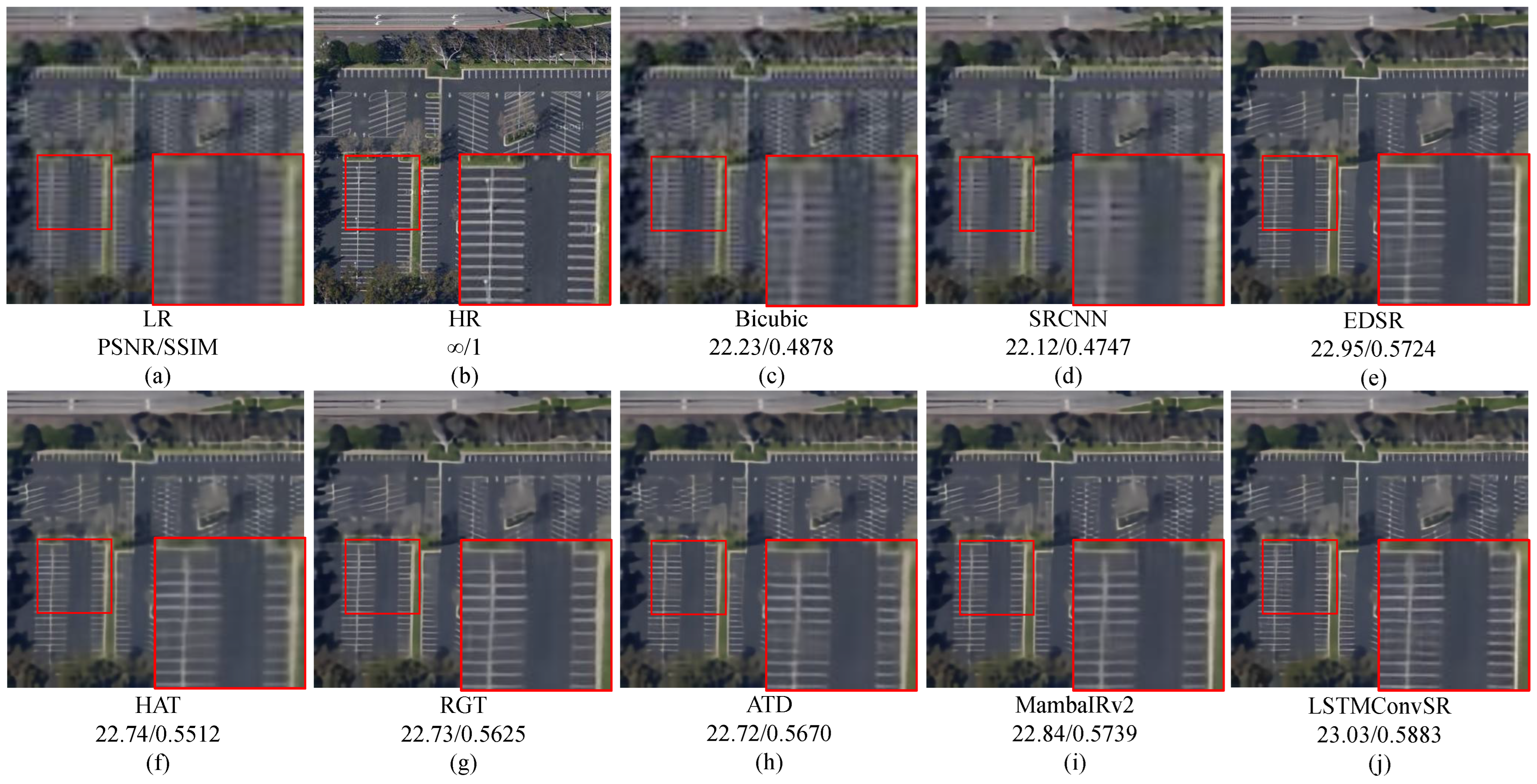

4.4.4. Qualitative Results

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhang, J.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Du, Q. SuperYOLO: Super Resolution Assisted Object Detection in Multimodal Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605415.

2. Feng, H.; Zhang, L.; Yang, X.; Liu, Z. Enhancing class-incremental object detection in remote sensing through instance-aware distillation. Neurocomputing 2024, 583, 127552.

3. Bashir, S.M.A.; Wang, Y. Small object detection in remote sensing images with residual feature aggregation-based super-resolution and object detector network. Remote Sens. 2021, 13, 1854.

4. Zhang, L.; Dong, R.; Yuan, S.; Li, W.; Zheng, J.; Fu, H. Making low-resolution satellite images reborn: A deep learning approach for super-resolution building extraction. Remote Sens. 2021, 13, 2872.

5. Li, J.; He, W.; Cao, W.; Zhang, L.; Zhang, H. UANet: An Uncertainty-Aware Network for Building Extraction From Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5608513.

6. Zhao, J.; Zhang, D.; Shi, B.; Zhou, Y.; Chen, J.; Yao, R.; Xue, Y. Multi-source collaborative enhanced for remote sensing images semantic segmentation. Neurocomputing 2022, 493, 76–90.

7. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

8. Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and Multiscale Transformer Fusion Network for Remote-Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 2004612.

9. Cui, B.; Zhang, H.; Jing, W.; Liu, H.; Cui, J. SRSe-net: Super-resolution-based semantic segmentation network for green tide extraction. Remote Sens. 2022, 14, 710.

10. Deng, Y.; Tang, S.; Chang, S.; Zhang, H.; Liu, D.; Wang, W. A Novel Scheme for Range Ambiguity Suppression of Spaceborne SAR Based on Underdetermined Blind Source Separation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5207915.

11. Guo, D.; Xia, Y.; Xu, L.; Li, W.; Luo, X. Remote sensing image super-resolution using cascade generative adversarial nets. Neurocomputing 2021, 443, 117–130.

12. Salvetti, F.; Mazzia, V.; Khaliq, A.; Chiaberge, M. Multi-image super resolution of remotely sensed images using residual attention deep neural networks. Remote Sens. 2020, 12, 2207.

13. Gu, J.; Sun, X.; Zhang, Y.; Fu, K.; Wang, L. Deep Residual Squeeze and Excitation Network for Remote Sensing Image Super-Resolution. Remote Sens. 2019, 11, 1817.

14. Huang, B.; He, B.; Wu, L.; Guo, Z. Deep residual dual-attention network for super-resolution reconstruction of remote sensing images. Remote Sens. 2021, 13, 2784.

15. Wang, X.; Yi, J.; Guo, J.; Song, Y.; Lyu, J.; Xu, J.; Yan, W.; Zhao, J.; Cai, Q.; Min, H. A Review of Image Super-Resolution Approaches Based on Deep Learning and Applications in Remote Sensing. Remote Sens. 2022, 14, 5423.

16. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 184–199.

17. Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

18. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

19. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844.

20. Chen, X.; Wang, X.; Zhou, J.; Qiao, Y.; Dong, C. Activating more pixels in image super-resolution transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22367–22377.

21. Chen, Z.; Zhang, Y.; Gu, J.; Kong, L.; Yang, X. Recursive Generalization Transformer for Image Super-Resolution. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

22. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

23. Yu, W.; Wang, X. MambaOut: Do We Really Need Mamba for Vision? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2025.

24. Guo, H.; Li, J.; Dai, T.; Ouyang, Z.; Ren, X.; Xia, S.T. MambaIR: A simple baseline for image restoration with state-space model. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 222–241.

25. Zhu, Q.; Zhang, G.; Zou, X.; Wang, X.; Huang, J.; Li, X. ConvMambaSR: Leveraging State-Space Models and CNNs in a Dual-Branch Architecture for Remote Sensing Imagery Super-Resolution. Remote Sens. 2024, 16, 3254.

26. Guo, H.; Guo, Y.; Zha, Y.; Zhang, Y.; Li, W.; Dai, T.; Xia, S.T.; Li, Y. MambaIRv2: Attentive State Space Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2025.

27. Alkin, B.; Beck, M.; Pöppel, K.; Hochreiter, S.; Brandstetter, J. Vision-LSTM: xLSTM as Generic Vision Backbone. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.

28. Pöppel, K.; Beck, M.; Spanring, M.; Auer, A.; Prudnikova, O.; Kopp, M.K.; Klambauer, G.; Brandstetter, J.; Hochreiter, S. xLSTM: Extended Long Short-Term Memory. In Proceedings of the First Workshop on Long-Context Foundation Models @ ICML 2024, Vienna, Austria, 27 July 2024.

29. Xie, F.; Zhang, W.; Wang, Z.; Ma, C. QuadMamba: Learning quadtree-based selective scan for visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 117682–117707.

30. Lei, S.; Shi, Z.; Zou, Z. Super-Resolution for Remote Sensing Images via Local-Global Combined Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1243–1247.

31. Haut, J.M.; Paoletti, M.E.; Fernández-Beltran, R.; Plaza, J.; Plaza, A.; Li, J. Remote sensing single-image superresolution based on a deep compendium model. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1432–1436.

32. Dong, X.; Sun, X.; Jia, X.; Xi, Z.; Gao, L.; Zhang, B. Remote sensing image super-resolution using novel dense-sampling networks. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1618–1633.

33. Lei, S.; Shi, Z. Hybrid-Scale Self-Similarity Exploitation for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5401410.

34. Wang, J.; Wang, B.; Wang, X.; Zhao, Y.; Long, T. Hybrid Attention-Based U-Shaped Network for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5612515.

35. Huo, Y.; Gang, S.; Guan, C. FCIHMRT: Feature Cross-Layer Interaction Hybrid Method Based on Res2Net and Transformer for Remote Sensing Scene Classification. Electronics 2023, 12, 4362.

36. Lei, S.; Shi, Z.; Mo, W. Transformer-Based Multistage Enhancement for Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5615611.

37. Kang, X.; Duan, P.; Li, J.; Li, S. Efficient Swin Transformer for Remote Sensing Image Super-Resolution. IEEE Trans. Image Process. 2024, 33, 6367–6379.

38. Kang, Y.; Zhang, X.; Wang, S.; Jin, G. SCAT: Shift Channel Attention Transformer for Remote Sensing Image Super-Resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 10337–10347.

39. Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Zhang, Q.; Lin, C.W. Frequency-Assisted Mamba for Remote Sensing Image Super-Resolution. IEEE Trans. Multimed. 2025, 27, 1783–1796.

40. Xu, Y.; Wang, H.; Zhou, F.; Luo, C.; Sun, X.; Rahardja, S.; Ren, P. MambaHSISR: Mamba Hyperspectral Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–16.

41. Gu, J.; Dong, C. Interpreting super-resolution networks with local attribution maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9199–9208.

42. Gilbert, C.D.; Sigman, M. Brain states: Top-down influences in sensory processing. Neuron 2007, 54, 677–696.

43. Saalmann, Y.B.; Pigarev, I.N.; Vidyasagar, T.R. Neural mechanisms of visual attention: How top-down feedback highlights relevant locations. Science 2007, 316, 1612–1615.

44. Li, Z. Understanding Vision: Theory, Models, and Data; Oxford University Press: Oxford, UK, 2014.

45. Hu, P.; Ramanan, D. Bottom-up and top-down reasoning with hierarchical rectified Gaussians. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5600–5609.

46. Zhang, J.; Bargal, S.A.; Lin, Z.; Brandt, J.; Shen, X.; Sclaroff, S. Top-down neural attention by excitation backprop. Int. J. Comput. Vis. 2018, 126, 1084–1102.

47. Pang, B.; Li, Y.; Li, J.; Li, M.; Cao, H.; Lu, C. TDAF: Top-down attention framework for vision tasks. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 2384–2392.

48. Shi, B.; Darrell, T.; Wang, X. Top-down visual attention from analysis by synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2102–2112.

49. Lou, M.; Yu, Y. OverLoCK: An Overview-first-Look-Closely-next ConvNet with Context-Mixing Dynamic Kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2025.

50. Xiao, M.; Zheng, S.; Liu, C.; Wang, Y.; He, D.; Ke, G.; Bian, J.; Lin, Z.; Liu, T.Y. Invertible image rescaling. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 126–144.

51. Xu, G.; Liao, W.; Zhang, X.; Li, C.; He, X.; Wu, X. Haar wavelet downsampling: A simple but effective downsampling module for semantic segmentation. Pattern Recognit. 2023, 143, 109819.

52. Wu, H.; Yang, Y.; Xu, H.; Wang, W.; Zhou, J.; Zhu, L. RainMamba: Enhanced Locality Learning with State Space Models for Video Deraining. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 7881–7890.

53. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

54. Lyu, Y.; Vosselman, G.; Xia, G.S.; Yilmaz, A.; Yang, M.Y. UAVid: A semantic segmentation dataset for UAV imagery. ISPRS J. Photogramm. Remote Sens. 2020, 165, 108–119.

55. Zou, Q.; Ni, L.; Zhang, T.; Wang, Q. Deep Learning Based Feature Selection for Remote Sensing Scene Classification. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2321–2325.

56. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

57. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

58. Wang, J.; Chan, K.C.; Loy, C.C. Exploring CLIP for assessing the look and feel of images. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2555–2563.

59. Zhang, L.; Li, Y.; Zhou, X.; Zhao, X.; Gu, S. Transcending the Limit of Local Window: Advanced Super-Resolution Transformer with Adaptive Token Dictionary. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 2856–2865.

60. Hao, J.; Li, W.; Lu, Y.; Jin, Y.; Zhao, Y.; Wang, S.; Wang, B. Scale-Aware Backprojection Transformer for Single Remote Sensing Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5649013.

| Dataset | ViLG | ARRBs | PSNR ↑ | SSIM ↑ | LPIPS ↓ | CLIPIQA ↑ |
|---|---|---|---|---|---|---|
| Potsdam | 0 | 1 | 33.55 | 0.8631 | 0.2858 | 0.2157 |
| Potsdam | 1 | 1 | 33.77 | 0.8661 | 0.2793 | 0.2169 |
| Potsdam | 0 | 12 | 33.90 | 0.8679 | 0.2738 | 0.2211 |
| Potsdam | 1 | 12 | 33.94 | 0.8684 | 0.2727 | 0.2203 |
| UAVid | 0 | 1 | 27.99 | 0.7433 | 0.3392 | 0.2501 |
| UAVid | 1 | 1 | 28.11 | 0.7475 | 0.3344 | 0.2515 |
| UAVid | 0 | 12 | 28.23 | 0.7517 | 0.3286 | 0.2558 |
| UAVid | 1 | 12 | 28.24 | 0.7521 | 0.3287 | 0.2570 |
| RSSCN7 | 0 | 1 | 26.31 | 0.6288 | 0.5341 | 0.2038 |
| RSSCN7 | 1 | 1 | 26.34 | 0.6311 | 0.5272 | 0.2121 |
| RSSCN7 | 0 | 12 | 26.35 | 0.6332 | 0.5177 | 0.2237 |
| RSSCN7 | 1 | 12 | 26.36 | 0.6336 | 0.5186 | 0.2242 |

| Dataset | ARRBs | PSNR ↑ | SSIM ↑ | LPIPS ↓ | CLIPIQA ↑ |
|---|---|---|---|---|---|
| Potsdam | 0 | 33.60 | 0.8637 | 0.2843 | 0.2174 |
| Potsdam | 1 | 33.77 | 0.8661 | 0.2793 | 0.2169 |
| Potsdam | 4 | 33.86 | 0.8672 | 0.2762 | 0.2191 |
| Potsdam | 8 | 33.91 | 0.8680 | 0.2742 | 0.2193 |
| Potsdam | 12 | 33.94 | 0.8684 | 0.2727 | 0.2203 |
| Potsdam | 16 | 33.94 | 0.8685 | 0.2723 | 0.2222 |
| UAVid | 0 | 28.01 | 0.7438 | 0.3391 | 0.2529 |
| UAVid | 1 | 28.11 | 0.7475 | 0.3344 | 0.2515 |
| UAVid | 4 | 28.18 | 0.7498 | 0.3315 | 0.2522 |
| UAVid | 8 | 28.22 | 0.7517 | 0.3294 | 0.2540 |
| UAVid | 12 | 28.24 | 0.7521 | 0.3287 | 0.2570 |
| UAVid | 16 | 28.26 | 0.7530 | 0.3276 | 0.2567 |
| RSSCN7 | 0 | 26.31 | 0.6286 | 0.5342 | 0.2057 |
| RSSCN7 | 1 | 26.34 | 0.6311 | 0.5272 | 0.2121 |
| RSSCN7 | 4 | 26.36 | 0.6327 | 0.5239 | 0.2178 |
| RSSCN7 | 8 | 26.36 | 0.6334 | 0.5199 | 0.2220 |
| RSSCN7 | 12 | 26.36 | 0.6336 | 0.5186 | 0.2242 |
| RSSCN7 | 16 | 26.35 | 0.6338 | 0.5165 | 0.2254 |

| Dataset | Revisiting | PSNR ↑ | SSIM ↑ | LPIPS ↓ | CLIPIQA ↑ |
|---|---|---|---|---|---|
| Potsdam | w/o | 33.91 | 0.8679 | 0.2734 | 0.2205 |
| Potsdam | w | 33.94 | 0.8684 | 0.2727 | 0.2203 |
| UAVid | w/o | 28.23 | 0.7518 | 0.3287 | 0.2590 |
| UAVid | w | 28.24 | 0.7521 | 0.3287 | 0.2570 |
| RSSCN7 | w/o | 26.34 | 0.6330 | 0.5169 | 0.2243 |
| RSSCN7 | w | 26.36 | 0.6336 | 0.5186 | 0.2242 |

| Dataset | HWD and LSA | PSNR ↑ | SSIM ↑ | LPIPS ↓ | CLIPIQA ↑ |
|---|---|---|---|---|---|
| Potsdam | w/o | 33.94 | 0.8685 | 0.2721 | 0.2204 |
| Potsdam | w | 33.94 | 0.8684 | 0.2727 | 0.2203 |
| UAVid | w/o | 28.26 | 0.7527 | 0.3279 | 0.2552 |
| UAVid | w | 28.24 | 0.7521 | 0.3287 | 0.2570 |
| RSSCN7 | w/o | 26.36 | 0.6337 | 0.5162 | 0.2247 |
| RSSCN7 | w | 26.36 | 0.6336 | 0.5186 | 0.2242 |

| HWD and LSA | #Param. | FLOPs | Memory | FPS |
|---|---|---|---|---|
| w/o | 18.39 M | 2061.13 G | 41,788 MB | 0.9 |
| w | 19.20 M | 405.66 G | 2,750 MB | 9.9 |
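The HWD/LSA complexity figures are easier to compare as relative factors. The snippet below simply recomputes those ratios from the numbers in the complexity table; the dictionary keys and the helper name `relative_cost` are illustrative, and no measurement setting (input size, hardware) is implied beyond what the table itself states.

```python
# Figures transcribed from the HWD/LSA complexity table ("w/o" vs "w").
WITHOUT = {"params_m": 18.39, "flops_g": 2061.13, "memory_mb": 41788, "fps": 0.9}
WITH = {"params_m": 19.20, "flops_g": 405.66, "memory_mb": 2750, "fps": 9.9}


def relative_cost(base: dict, new: dict) -> dict:
    """Express the 'w' configuration relative to the 'w/o' baseline."""
    return {
        "flops_reduction_x": base["flops_g"] / new["flops_g"],
        "memory_reduction_x": base["memory_mb"] / new["memory_mb"],
        "speedup_x": new["fps"] / base["fps"],
        "param_increase_pct": 100.0 * (new["params_m"] / base["params_m"] - 1.0),
    }


if __name__ == "__main__":
    for name, value in relative_cost(WITHOUT, WITH).items():
        print(f"{name}: {value:.2f}")
```

On these numbers, adding HWD and LSA cuts FLOPs by about 5.1× and memory by about 15.2× and raises throughput 11×, at the cost of roughly 4.4% more parameters, while the accuracy ablation keeps PSNR within 0.02 dB of the variant without them.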

| Dataset | Model | PSNR ↑ | SSIM ↑ | LPIPS ↓ | CLIPIQA ↑ |
|---|---|---|---|---|---|
| Potsdam | Bicubic | 31.26 | 0.8212 | 0.4253 | 0.2034 |
| Potsdam | SRCNN [16] | 31.97 | 0.8373 | 0.3578 | 0.2049 |
| Potsdam | EDSR [17] | 33.83 | 0.8667 | 0.2797 | 0.2189 |
| Potsdam | HAT [20] | 33.47 | 0.8603 | 0.2980 | 0.2004 |
| Potsdam | RGT [21] | 33.86 | 0.8675 | 0.2758 | 0.2153 |
| Potsdam | ATD [59] | 33.80 | 0.8668 | 0.2772 | 0.2089 |
| Potsdam | SPT [60] | 33.68 | 0.8651 | 0.2813 | 0.2131 |
| Potsdam | MambaIRv2 [26] | 33.81 | 0.8669 | 0.2765 | 0.2147 |
| Potsdam | LSTMConvSR_s (ours) | 33.79 | 0.8664 | 0.2787 | 0.2192 |
| Potsdam | LSTMConvSR (ours) | 33.94 | 0.8684 | 0.2727 | 0.2203 |
| UAVid | Bicubic | 26.48 | 0.6699 | 0.4803 | 0.3015 |
| UAVid | SRCNN [16] | 27.00 | 0.7050 | 0.3947 | 0.2501 |
| UAVid | EDSR [17] | 28.14 | 0.7489 | 0.3307 | 0.2522 |
| UAVid | HAT [20] | 28.08 | 0.7443 | 0.3443 | 0.2665 |
| UAVid | RGT [21] | 28.26 | 0.7529 | 0.3290 | 0.2615 |
| UAVid | ATD [59] | 28.19 | 0.7496 | 0.3313 | 0.2511 |
| UAVid | SPT [60] | 28.08 | 0.7464 | 0.3358 | 0.2557 |
| UAVid | MambaIRv2 [26] | 28.23 | 0.7520 | 0.3287 | 0.2545 |
| UAVid | LSTMConvSR_s (ours) | 28.16 | 0.7491 | 0.3337 | 0.2536 |
| UAVid | LSTMConvSR (ours) | 28.24 | 0.7521 | 0.3287 | 0.2570 |
| RSSCN7 | Bicubic | 25.85 | 0.6024 | 0.6405 | 0.1521 |
| RSSCN7 | SRCNN [16] | 26.01 | 0.6125 | 0.5717 | 0.1499 |
| RSSCN7 | EDSR [17] | 26.35 | 0.6313 | 0.5216 | 0.2221 |
| RSSCN7 | HAT [20] | 26.34 | 0.6289 | 0.5452 | 0.1977 |
| RSSCN7 | RGT [21] | 26.35 | 0.6326 | 0.5255 | 0.2319 |
| RSSCN7 | ATD [59] | 26.39 | 0.6333 | 0.5242 | 0.2187 |
| RSSCN7 | SPT [60] | 26.35 | 0.6307 | 0.5318 | 0.2060 |
| RSSCN7 | MambaIRv2 [26] | 26.37 | 0.6336 | 0.5213 | 0.2187 |
| RSSCN7 | LSTMConvSR_s (ours) | 26.35 | 0.6319 | 0.5255 | 0.2145 |
| RSSCN7 | LSTMConvSR (ours) | 26.36 | 0.6336 | 0.5186 | 0.2242 |

| Model | #Param. | FLOPs | FPS |
|---|---|---|---|
| Bicubic | - | - | 27,505.6 |
| SRCNN [16] | 0.02 M | 5.29 G | 1001.3 |
| EDSR [17] | 43.09 M | 823.88 G | 45.3 |
| HAT [20] | 20.77 M | 424.23 G | 7.7 |
| RGT [21] | 13.37 M | 255.78 G | 3.6 |
| ATD [59] | 20.26 M | 474.04 G | 4.8 |
| SPT [60] | 3.21 M | 299.10 G | 5.0 |
| MambaIRv2 [26] | 23.05 M | 432.37 G | 4.1 |
| LSTMConvSR_s (ours) | 5.09 M | 142.06 G | 11.6 |
| LSTMConvSR (ours) | 19.20 M | 405.66 G | 9.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Q.; Zhang, G.; Wang, X.; Huang, J. LSTMConvSR: Joint Long–Short-Range Modeling via LSTM-First–CNN-Next Architecture for Remote Sensing Image Super-Resolution. Remote Sens. 2025, 17, 2745. https://doi.org/10.3390/rs17152745

Zhu Q, Zhang G, Wang X, Huang J. LSTMConvSR: Joint Long–Short-Range Modeling via LSTM-First–CNN-Next Architecture for Remote Sensing Image Super-Resolution. Remote Sensing. 2025; 17(15):2745. https://doi.org/10.3390/rs17152745

Chicago/Turabian Style: Zhu, Qiwei, Guojing Zhang, Xiaoying Wang, and Jianqiang Huang. 2025. "LSTMConvSR: Joint Long–Short-Range Modeling via LSTM-First–CNN-Next Architecture for Remote Sensing Image Super-Resolution" Remote Sensing 17, no. 15: 2745. https://doi.org/10.3390/rs17152745

APA Style: Zhu, Q., Zhang, G., Wang, X., & Huang, J. (2025). LSTMConvSR: Joint Long–Short-Range Modeling via LSTM-First–CNN-Next Architecture for Remote Sensing Image Super-Resolution. Remote Sensing, 17(15), 2745. https://doi.org/10.3390/rs17152745