Standard Classes for Urban Topographic Mapping with ALS: Classification Scheme and a First Implementation

Abstract

1. Introduction

1.1. Related Work

1.1.1. Deep Learning-Based Point Cloud Semantic Segmentation Methods

1.1.2. Semantic Segmentation of ALS Data

2. Materials and Methods

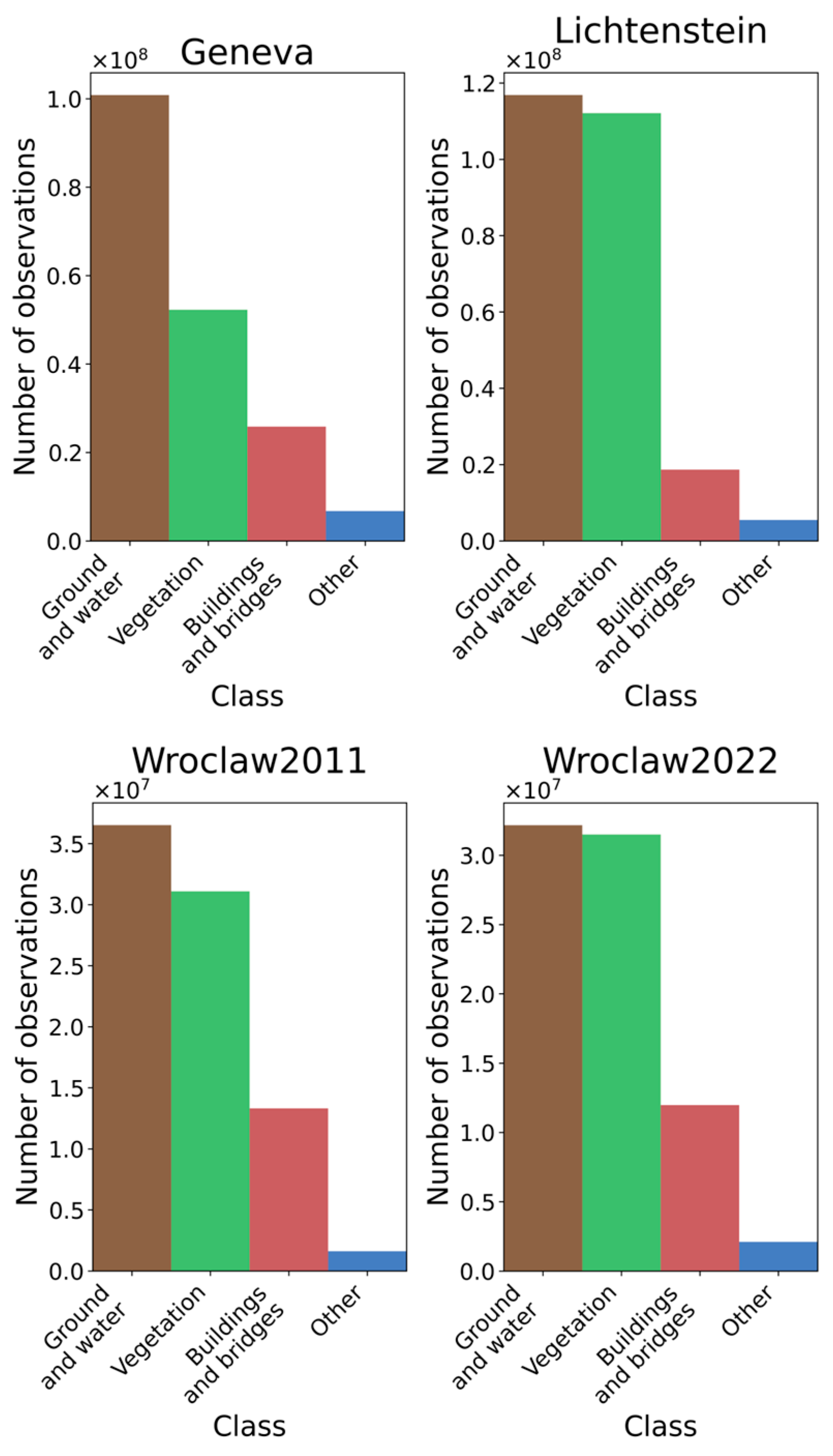

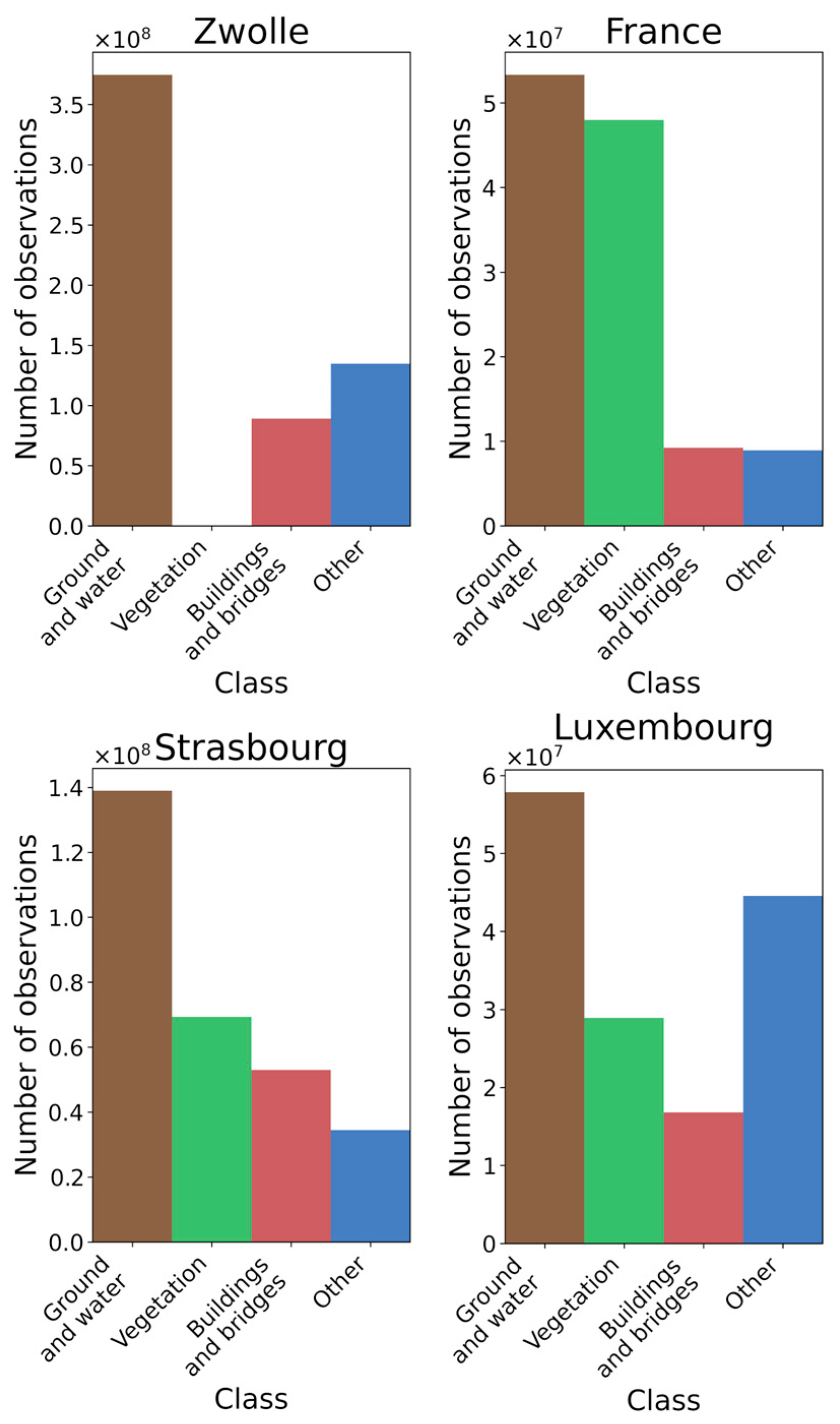

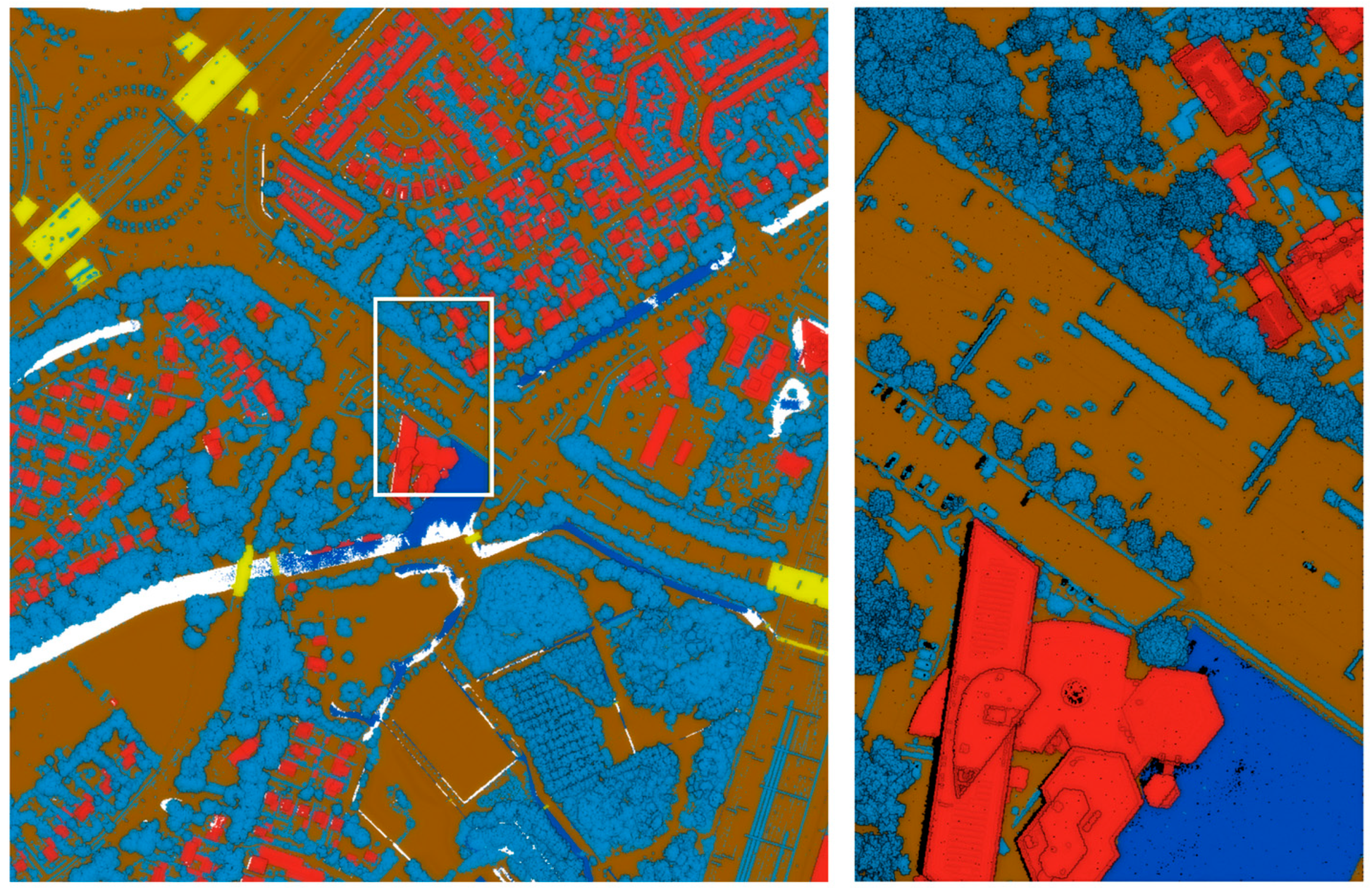

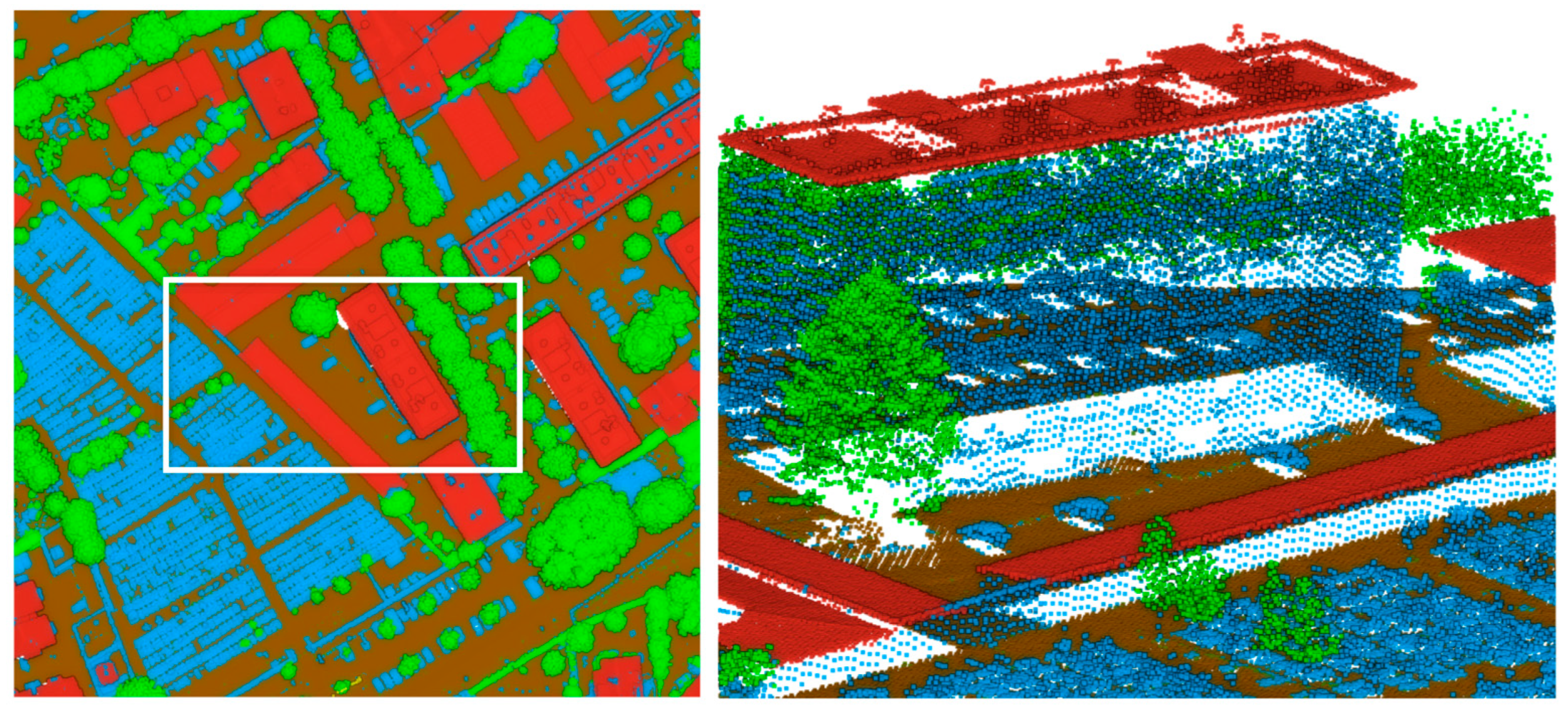

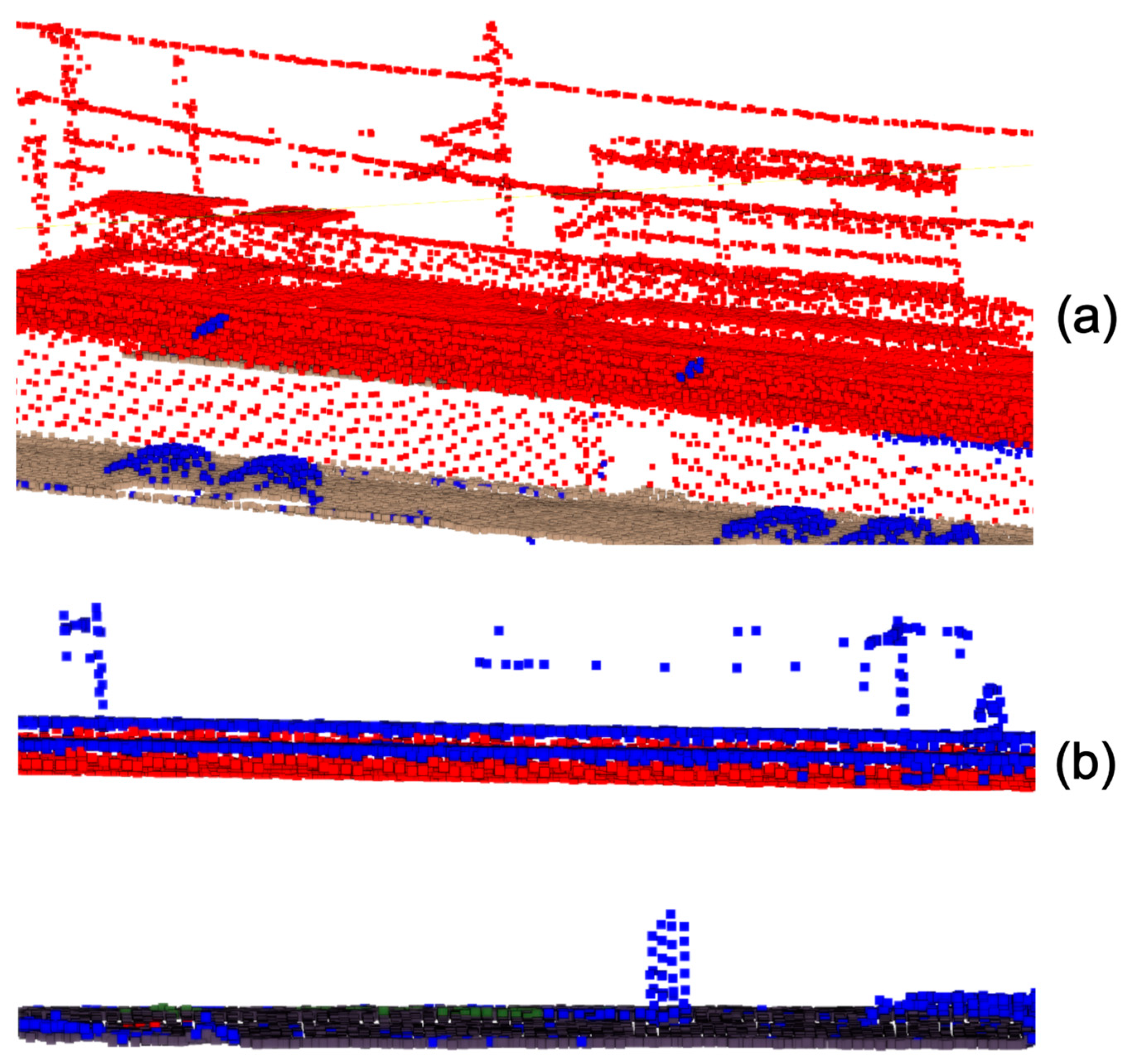

2.1. Data

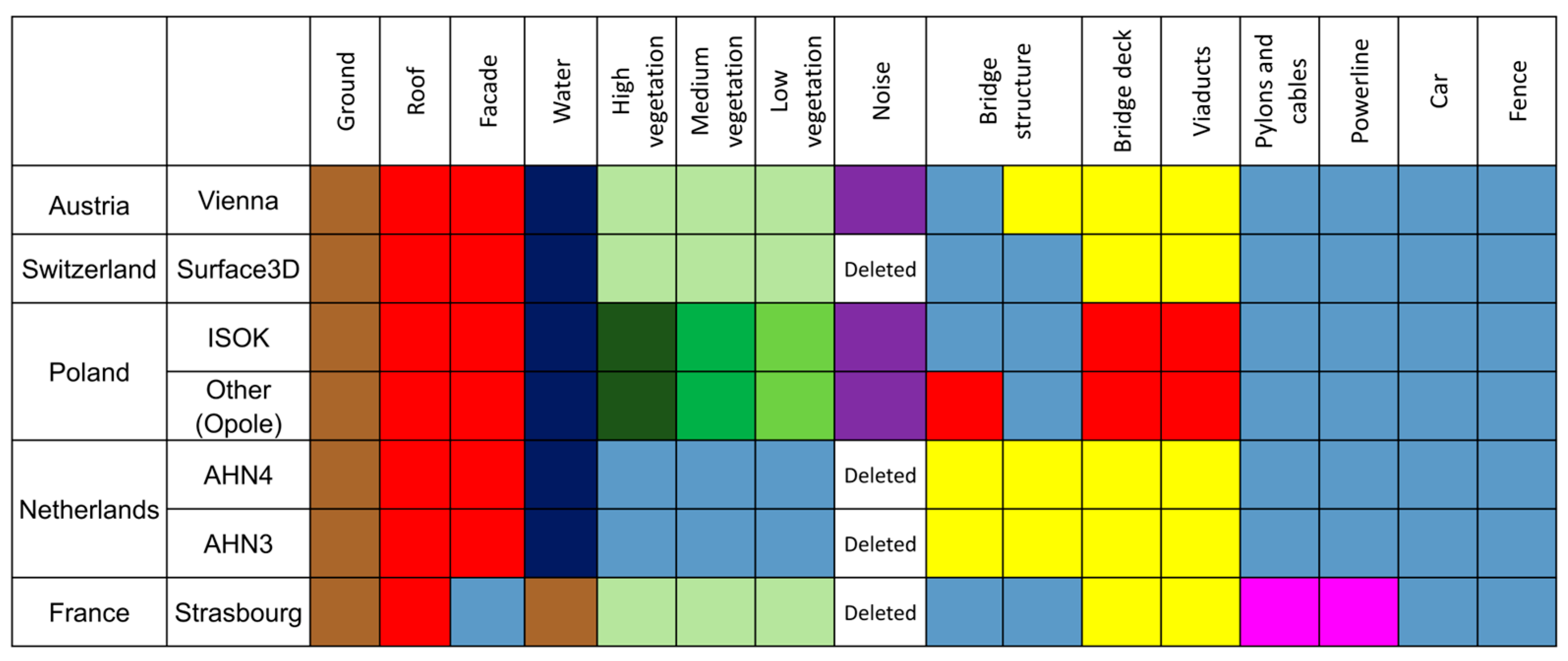

2.1.1. Differences in Classification Schemes

- (1)

- The Netherlands: The newest datasets of the Actueel Hoogtebestand Nederland (AHN) project (AHN3 and AHN4) are analyzed as they contain the classification of the point clouds. Previous editions of the point clouds (AHN1 and AHN2 data collection campaigns) were filtered but not classified. Both analyzed datasets cover the whole country.

- (2)

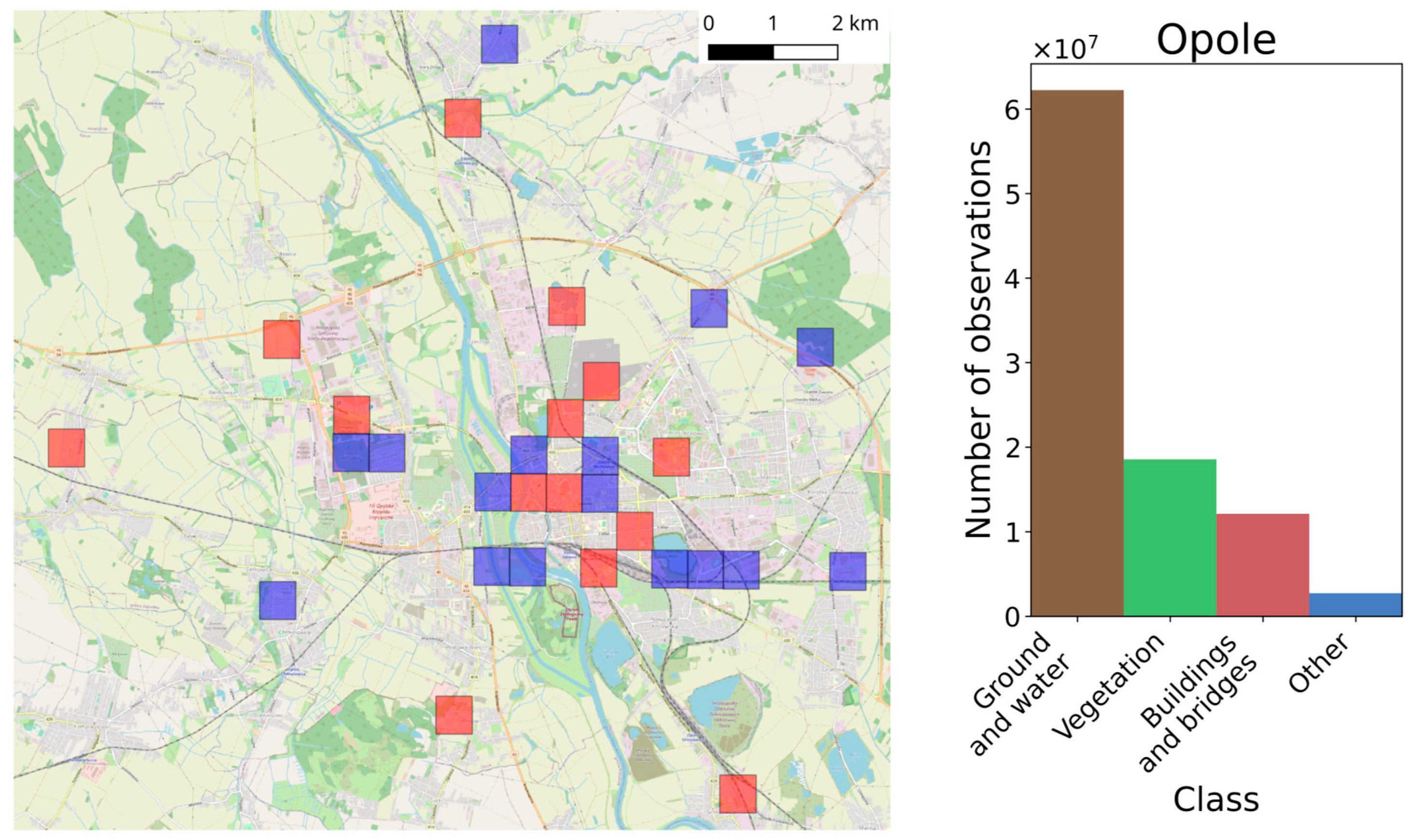

- Poland: Two types of datasets are analyzed: the data collected during project “Informatyczny System Osłony Kraju przed nadzwyczajnymi zagrożeniami” (ISOK) and other available data. In the latter case, the data are usually ordered independently by individual territorial division units. However, the data are validated and accepted by the mapping agency. The ISOK data cover the whole country, whereas other datasets are available locally.

- (3)

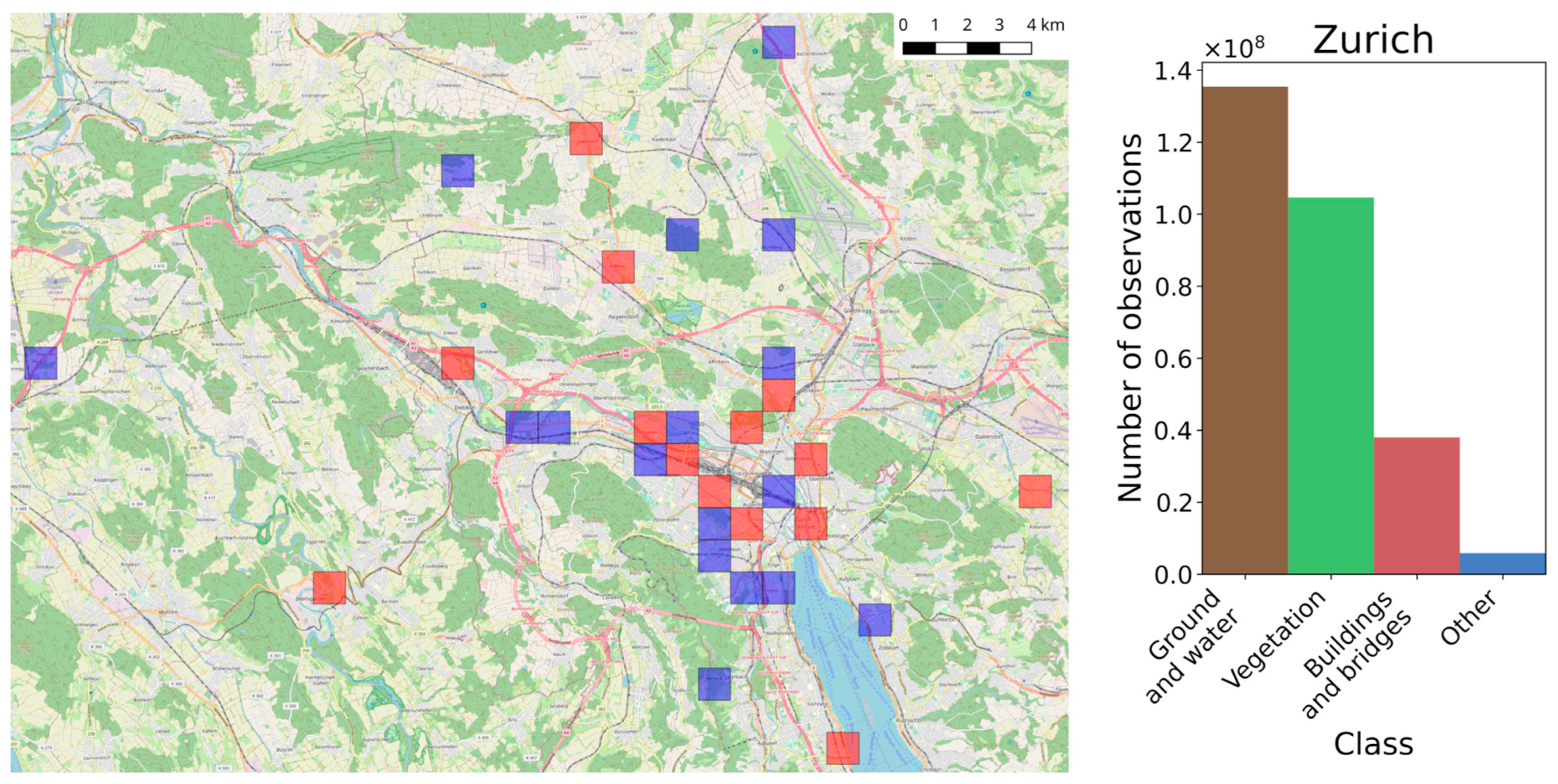

- Switzerland: The data are collected and distributed by a mapping agency in the framework of the swissSURFACE3D (©swisstopo) project. The data do not cover the area of the whole country yet but the remaining data are in the collection and processing stage.

- (4)

- France: The analyzed dataset was collected only for the city of Strasbourg and is not a part of the larger country-wise dataset. This dataset is separate from the French national point cloud mentioned in Table 1.

- (5)

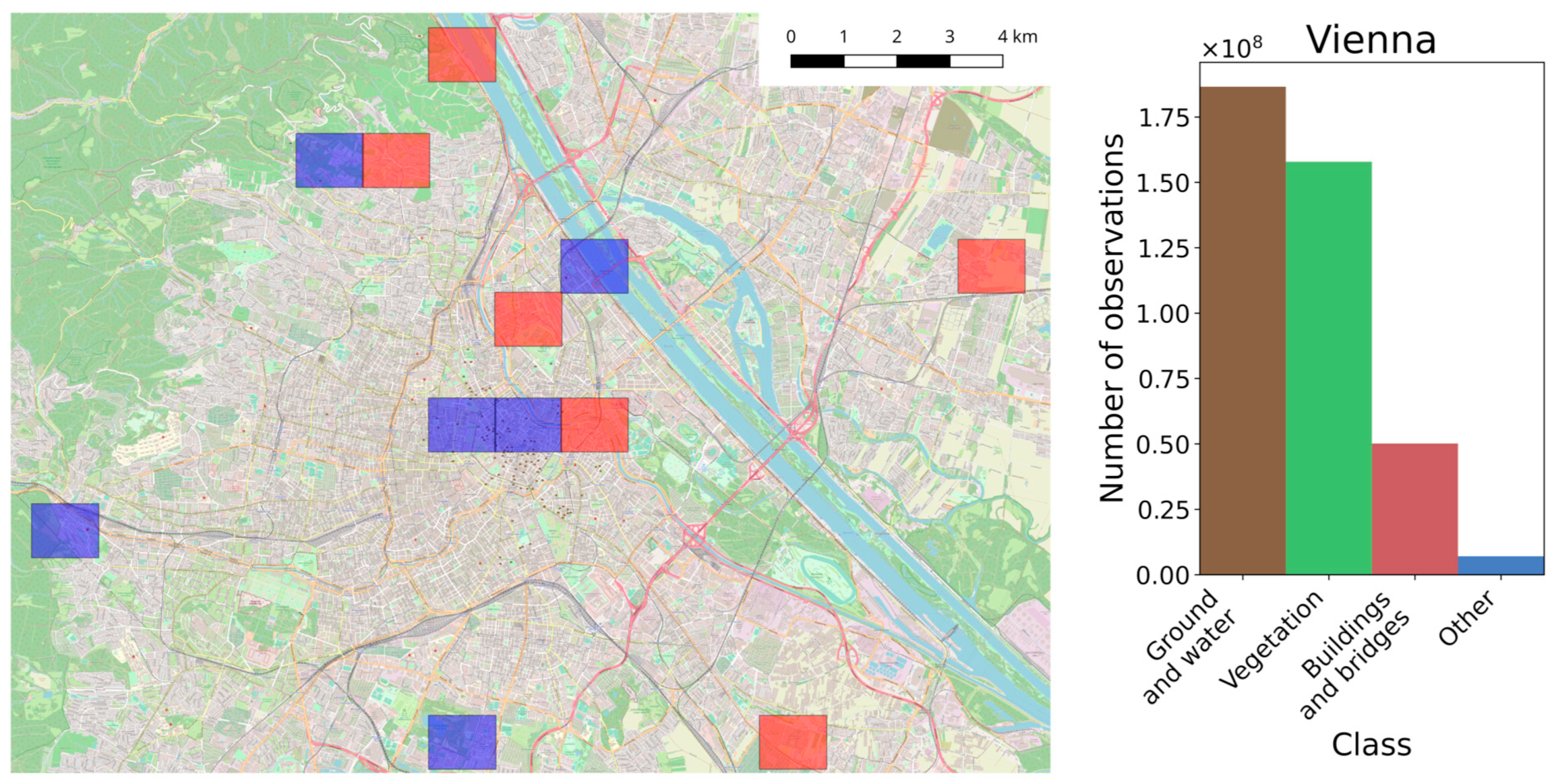

- Austria: The analyzed data were collected only for the city of Vienna.

2.1.2. Selected Training and Testing Datasets

2.1.3. Selected Validation Datasets

- (1) The data collected for Poland and Switzerland but representing different cities than the training data. Additionally, the data from Liechtenstein were included in this group as they were collected jointly with the Swiss data.

- (2) Data from other European countries, which for various reasons were not suitable for network training. These reasons include substantial differences in classification schemes that were impossible to unify without a potential loss of accuracy, the very small size of the dataset, or a large classification error in one of the classes despite high classification accuracy in the other classes.

- (3) Benchmarking datasets.

2.2. Methodology

2.2.1. Classification Scheme

2.2.2. Evaluation Strategy

- Experiment 1. The evaluation of the accuracy of semantic segmentation using testing datasets of Vienna, Opole, and Zurich included the following experiments:

- Experiment 2. The evaluation of the accuracy of semantic segmentation using datasets A, B, and C.

2.2.3. Network Type and Architecture

- Voxel size: 8 cm and 25 cm;

- Number of epochs: 10, 18, and 26;

- Number of layers: 5, 7, and 9;

- Number of features generated by each convolutional layer: 8, 16, and 32;

- Loss function: weighted and non-weighted.
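The parameter values listed above can be combined in different ways. The following is a minimal sketch, not the authors' code, that enumerates one-at-a-time variations around a baseline and contrasts them with the exhaustive grid. The class `NetworkConfig`, its field names, and both helper functions are hypothetical; the baseline values are assumed to be those of the row labelled "Baseline" in the parameter table of this article.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class NetworkConfig:
    """Hypothetical container for the tested parameters (names are illustrative)."""
    voxel_size_cm: int
    n_epochs: int
    n_layers: int
    n_features: int
    weighted_loss: bool


# Candidate values taken from the list above.
CANDIDATES = {
    "voxel_size_cm": [8, 25],
    "n_epochs": [10, 18, 26],
    "n_layers": [5, 7, 9],
    "n_features": [8, 16, 32],
    "weighted_loss": [True, False],
}

# Assumed baseline: the "Baseline" row of the parameter table.
BASELINE = NetworkConfig(voxel_size_cm=8, n_epochs=18, n_layers=7,
                         n_features=16, weighted_loss=True)


def one_at_a_time(baseline, candidates):
    """Return the baseline plus every configuration that differs from it in one parameter."""
    configs = [baseline]
    for name, values in candidates.items():
        for value in values:
            if value != getattr(baseline, name):
                configs.append(replace(baseline, **{name: value}))
    return configs


def full_grid(candidates):
    """Return every combination of the candidate values (exhaustive search)."""
    names = list(candidates)
    return [NetworkConfig(**dict(zip(names, combo)))
            for combo in product(*candidates.values())]


configs = one_at_a_time(BASELINE, CANDIDATES)
print(len(configs), "one-at-a-time runs vs", len(full_grid(CANDIDATES)), "for the full grid")
```

With these candidate values the one-at-a-time scheme yields 9 configurations, while the full grid contains 108. The parameter table reports a baseline and seven variants, so the set of runs actually performed may differ from this sketch.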

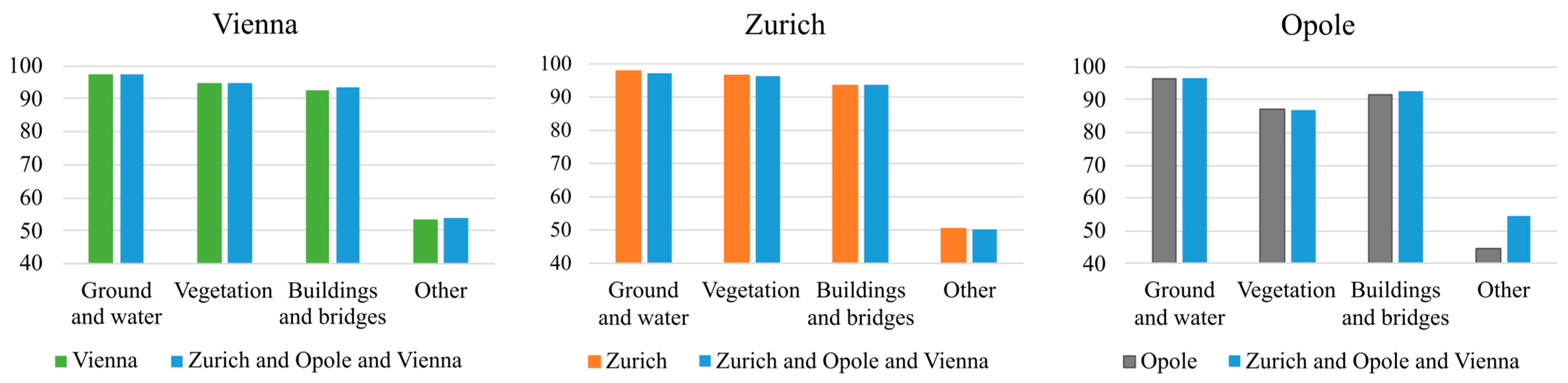

3. Results

3.1. Adjustment of Network Parameters

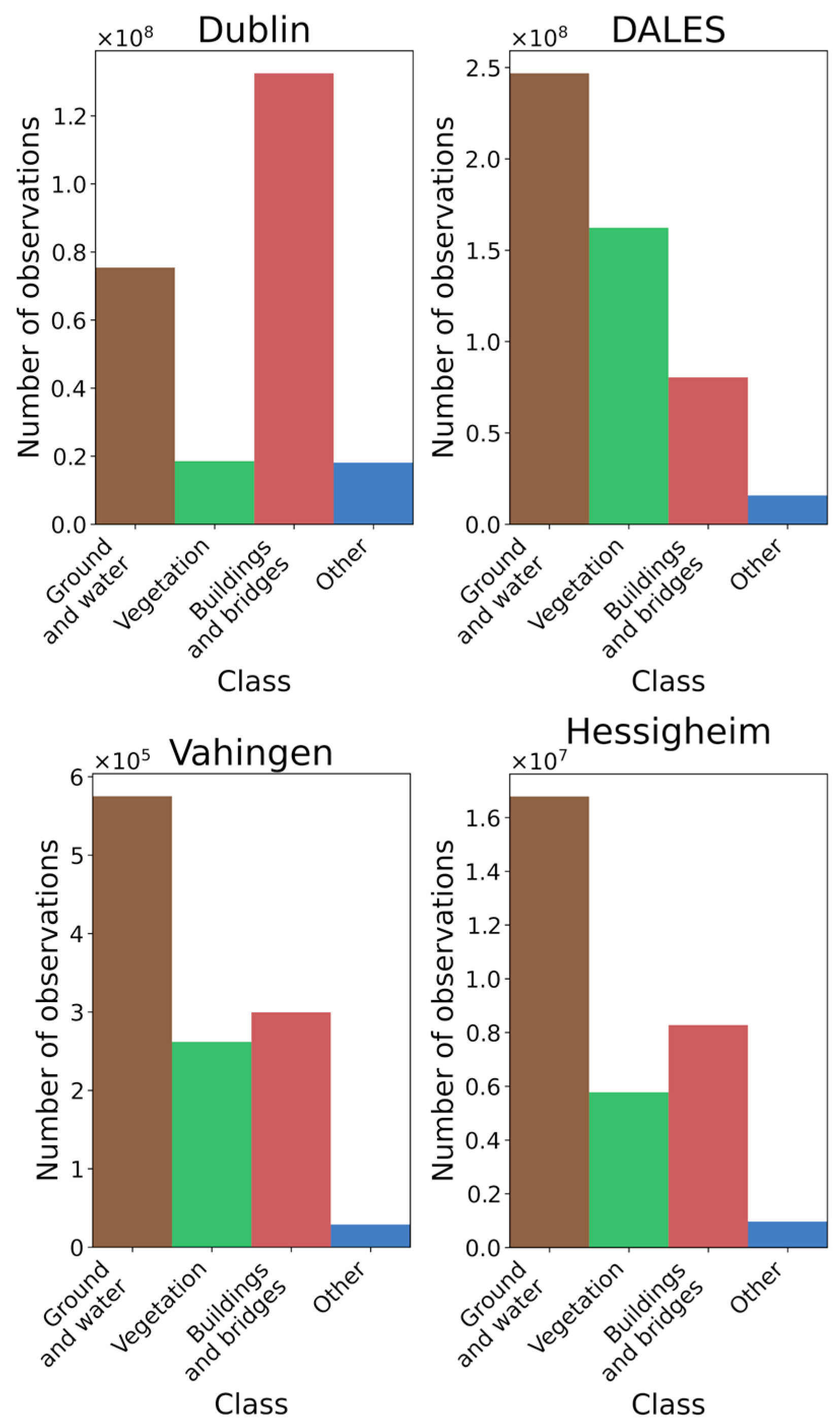

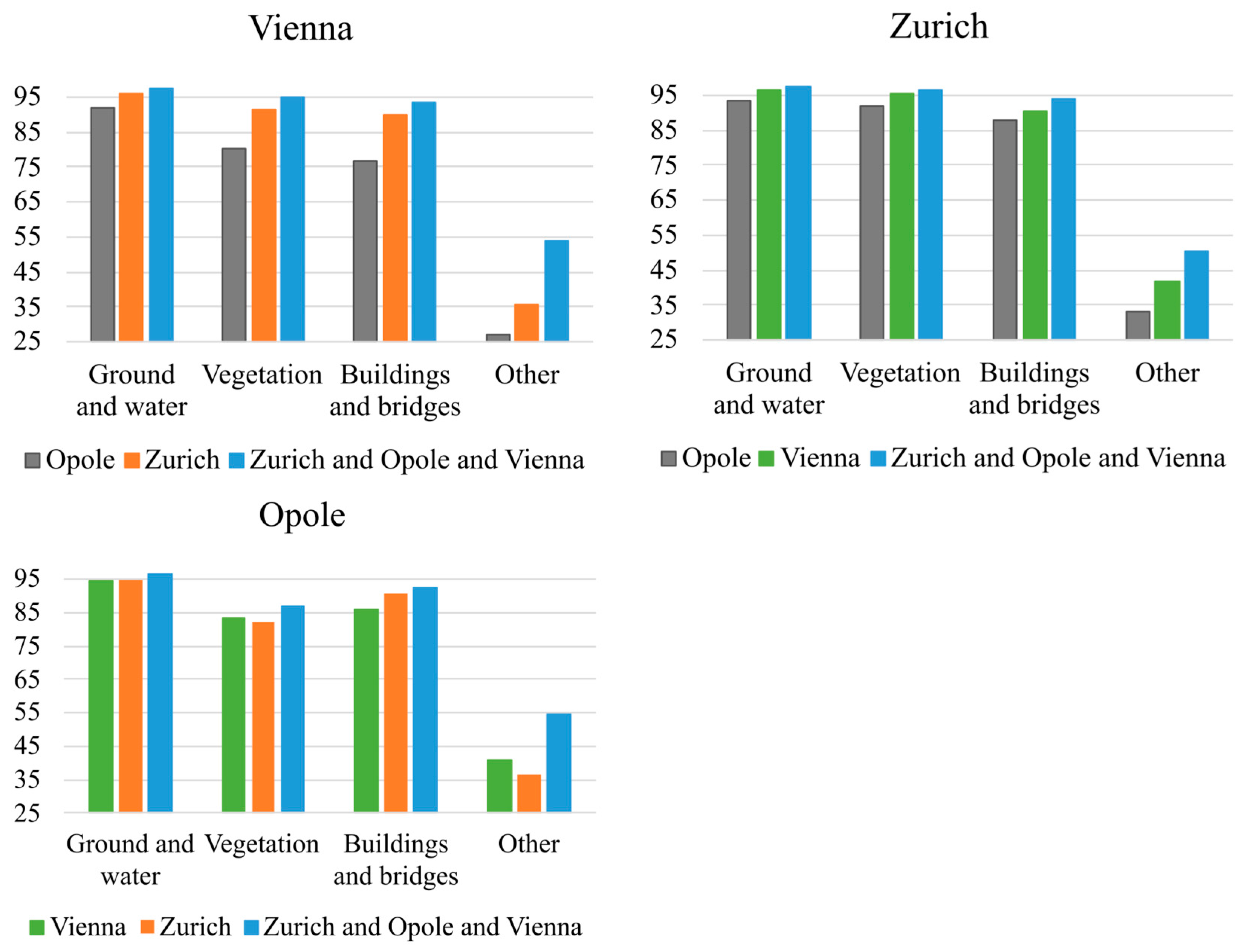

3.2. Experiment 1

3.3. Experiment 2

4. Discussion

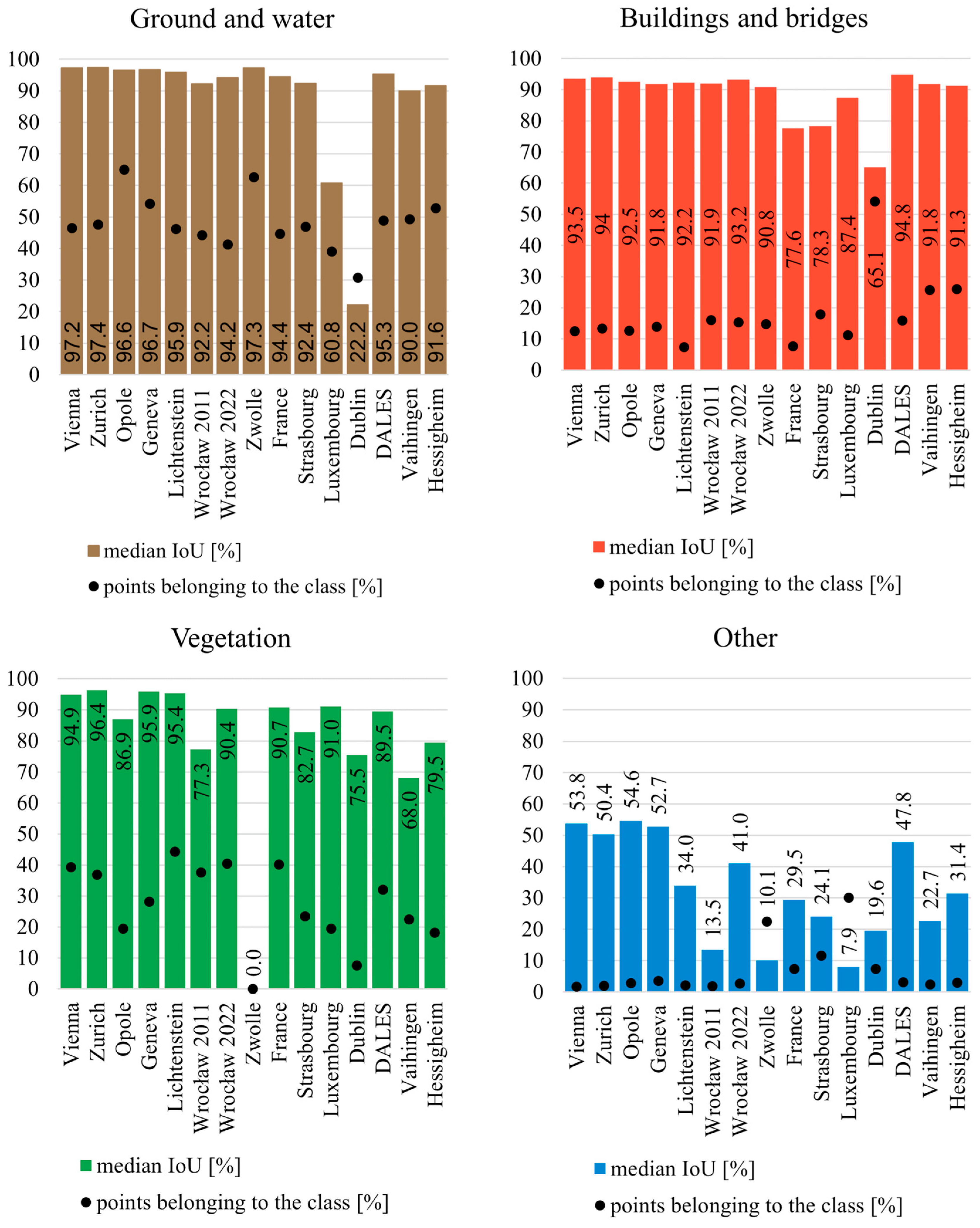

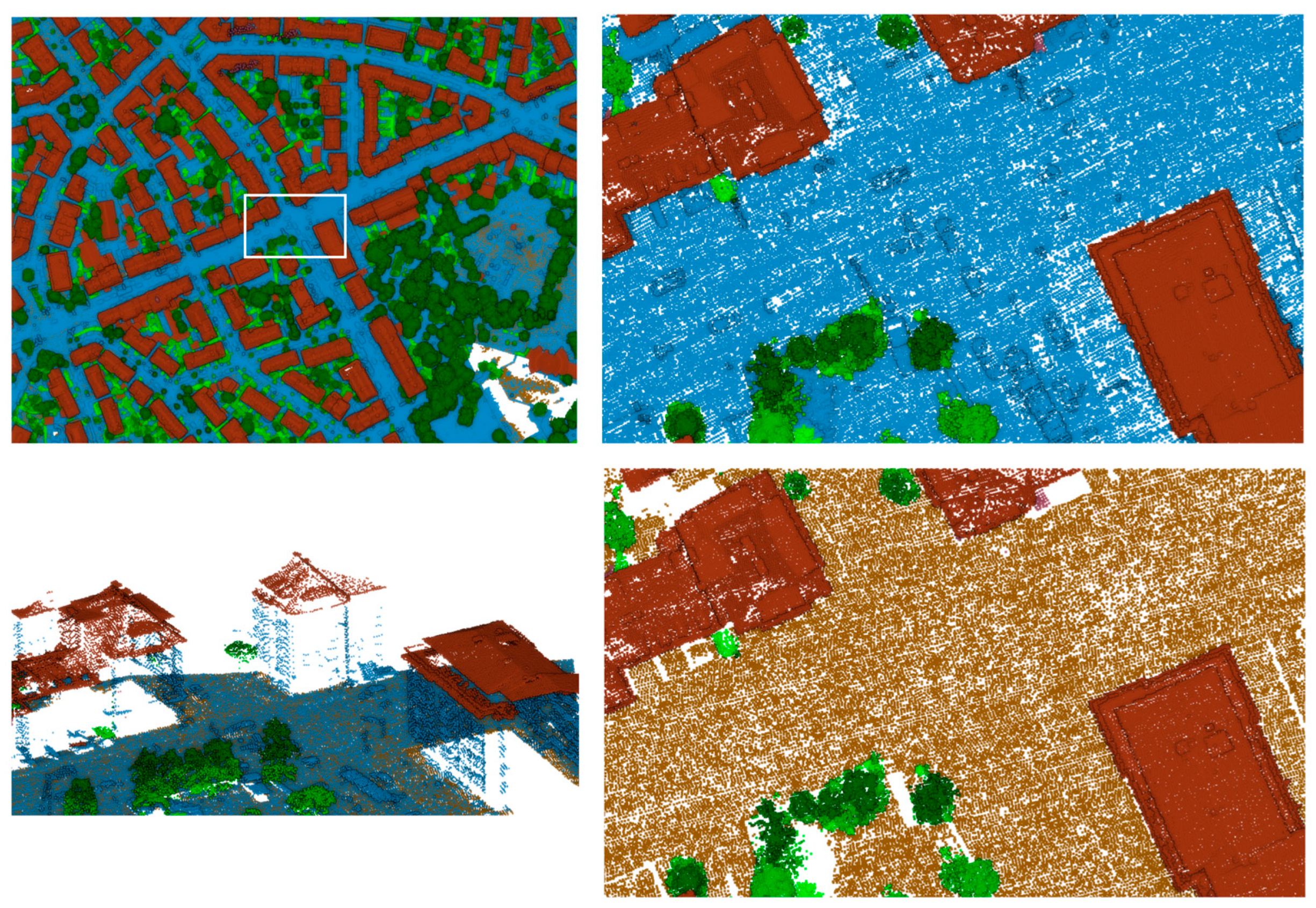

4.1. Choice of Classes and Data

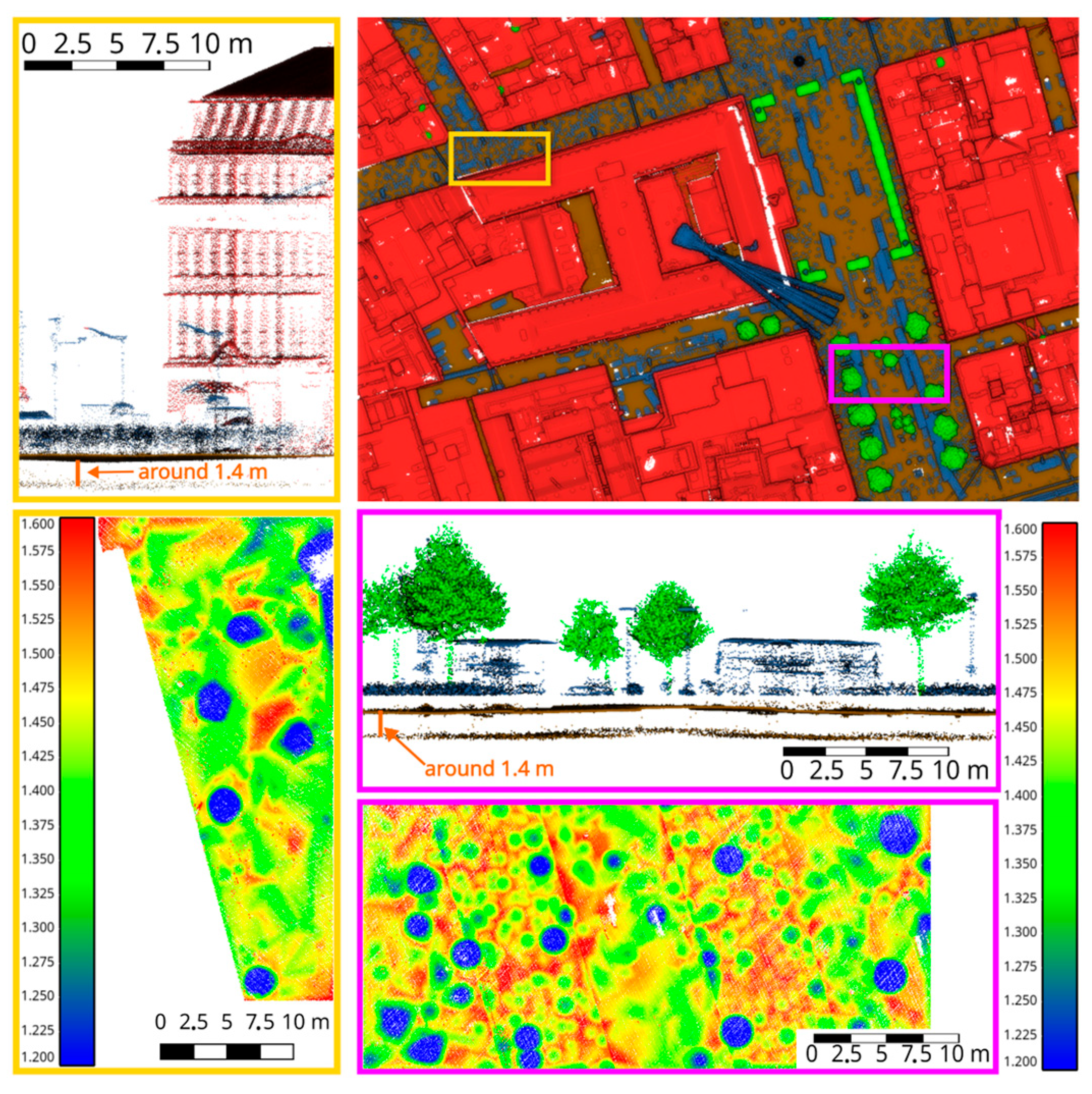

4.2. Model Performance

4.3. Generalization

4.4. Quality of Data and Labels

4.5. Comparison to the Literature

- Experiments in the literature use various national ALS datasets, but most rely on AHN point clouds, whereas in the investigations presented in this paper this dataset could not be fully used due to significant differences in classification schemes.

- There is a lack of standardization in the classes into which the data are classified. Different investigations propose different sets of classes, which usually depend on the classification scheme of the reference data. Since the number of classes influences the values of the metrics describing the accuracy of semantic segmentation, the results cannot be reliably compared.

- There is a lack of standardization in the metrics used for accuracy evaluation. The most commonly used metric seems to be the F1 score; however, sensitivity, precision, and IoU are also often used.

- The test sets of benchmarking datasets are often not available for direct comparison; evaluation requires sending a classifier to the organizers.

- Even when publications use the same national dataset, they report results for different parts of the dataset (e.g., different geographical locations).
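Because the metrics named in the list above (precision, sensitivity or recall, F1, and IoU) are all derived from the same per-class counts, it can help to see them side by side. The sketch below computes them from a confusion matrix using their standard definitions. The function name, the matrix orientation (rows are reference classes, columns are predictions), and the toy counts are assumptions for illustration and are not data from this study.

```python
def per_class_metrics(confusion):
    """Compute precision, recall, F1, and IoU for each class.

    confusion[i][j] is the number of points with reference class i
    that were predicted as class j (an assumed convention).
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]                                # correctly labelled points
        fn = sum(confusion[k]) - tp                         # class k points labelled otherwise
        fp = sum(confusion[i][k] for i in range(n)) - tp    # other points labelled as k
        union = tp + fp + fn
        precision = tp / (tp + fp) if tp + fp else float("nan")
        recall = tp / (tp + fn) if tp + fn else float("nan")
        f1 = 2 * tp / (2 * tp + fp + fn) if union else float("nan")
        iou = tp / union if union else float("nan")
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "iou": iou})
    return results


# Toy two-class confusion matrix (made-up counts, not data from this study).
toy = [[90, 10],
       [5, 95]]
metrics = per_class_metrics(toy)
for k, m in enumerate(metrics):
    print(k, {name: round(value, 3) for name, value in m.items()})
```

When both are computed from the same counts, IoU and F1 of a class are linked by IoU = F1 / (2 − F1). They therefore rank results identically, but the numerical values differ, so they should not be mixed when comparing publications.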

- (1) 94.2% and 97.5% for ground;

- (2) 40.3% and 95.3% for water;

- (3) 87.7% and 94.5% for buildings;

- (4) 31.5% to 49.1% for civil structures;

- (5) and 86.9% to 94.9% for other.

4.6. Future Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Dataset | Original Classification | Reassigned Classification |
|---|---|---|
| Vienna | Ground | Ground and water |
| | Vegetation | Vegetation |
| | Buildings | Buildings and bridges |
| | Water | Ground and water |
| | Bridge | Buildings and bridges |
| | Noise | – |
| | Other | Other |
| Zurich, Geneva, Liechtenstein | Ground | Ground and water |
| | Vegetation | Vegetation |
| | Buildings | Buildings and bridges |
| | Bridges and viaducts | Buildings and bridges |
| | Water | Ground and water |
| | Other | Other |
| Opole, Wrocław 2011, Wrocław 2022 | Ground | Ground and water |
| | Low vegetation | Vegetation |
| | Medium vegetation | Vegetation |
| | High vegetation | Vegetation |
| | Buildings and bridges | Buildings and bridges |
| | High points | – |
| | Low points | – |
| | Water | Ground and water |
| | Other | Other |
| Zwolle | Ground | Ground and water |
| | Buildings | Buildings and bridges |
| | Civil structures | Buildings and bridges |
| | Water | Ground and water |
| | Other | Other |
| France | Ground | Ground and water |
| | Low vegetation | Vegetation |
| | Medium vegetation | Vegetation |
| | High vegetation | Vegetation |
| | Buildings | Buildings and bridges |
| | Water | Ground and water |
| | Bridge | Buildings and bridges |
| | Perennial soil | Ground and water |
| | Noise | – |
| | Virtual points | – |
| | Other | Other |
| Strasbourg | Ground and water | Ground and water |
| | Building roofs | Buildings and bridges |
| | Vegetation | Vegetation |
| | Bridges | Buildings and bridges |
| | Pylons and cables | Other |
| | Other | Other |
| Luxembourg | Ground | Ground and water |
| | Low vegetation | Vegetation |
| | Medium vegetation | Vegetation |
| | High vegetation | Vegetation |
| | Buildings | Buildings and bridges |
| | Noise | – |
| | Water | Ground and water |
| | Bridges | Buildings and bridges |
| | Power lines | Other |
| | Other | Other |
| Dublin | Building | Buildings and bridges |
| | Vegetation | Vegetation |
| | Ground | Ground and water |
| | Undefined | Other |
| DALES | Other | Other |
| | Ground | Ground and water |
| | Vegetation | Vegetation |
| | Cars | Other |
| | Trucks | Other |
| | Power lines | Other |
| | Fences | Other |
| | Poles | Other |
| | Buildings | Buildings and bridges |
| Vaihingen | Powerline | Other |
| | Low vegetation | Ground and water |
| | Impervious surfaces | Ground and water |
| | Car | Other |
| | Fence/hedge | Other |
| | Roof | Buildings and bridges |
| | Façade | Buildings and bridges |
| | Shrub | Vegetation |
| | Tree | Vegetation |
| Hessigheim | Low vegetation | Ground and water |
| | Impervious surfaces | Ground and water |
| | Vehicle | Other |
| | Urban furniture | Other |
| | Roof | Buildings and bridges |
| | Façade | Buildings and bridges |
| | Shrub | Vegetation |
| | Tree | Vegetation |
| | Soil/gravel | Ground and water |
| | Vertical surfaces | Other |
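The reassignment in the table above amounts to a lookup from each dataset's original class names to the four unified classes. The following sketch shows this for the Vienna rows only. The dictionary and function names are hypothetical, and treating classes marked with "–" as excluded from further processing is an assumption rather than something the table states.

```python
# Mapping for the Vienna dataset, transcribed from the table above.
# None marks classes shown with "–"; treating them as excluded is an assumption.
VIENNA_TO_UNIFIED = {
    "Ground": "Ground and water",
    "Vegetation": "Vegetation",
    "Buildings": "Buildings and bridges",
    "Water": "Ground and water",
    "Bridge": "Buildings and bridges",
    "Noise": None,
    "Other": "Other",
}


def remap_labels(labels, mapping):
    """Return (kept_indices, unified_labels), dropping points whose class maps to None."""
    kept, unified = [], []
    for index, label in enumerate(labels):
        target = mapping[label]  # a KeyError exposes classes missing from the mapping
        if target is not None:
            kept.append(index)
            unified.append(target)
    return kept, unified


# Per-point original labels of a tiny, made-up point cloud.
points = ["Ground", "Water", "Noise", "Bridge", "Vegetation", "Other"]
kept, unified = remap_labels(points, VIENNA_TO_UNIFIED)
print(kept)
print(unified)
```

Returning the kept indices alongside the new labels lets the caller filter coordinates and other per-point attributes consistently. The mappings for the other datasets would follow the same pattern with their own rows.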

Appendix B

| Name | Country | Ground and Water: Precision | Ground and Water: Recall | Ground and Water: F1 | Vegetation: Precision | Vegetation: Recall | Vegetation: F1 | Buildings and Bridges: Precision | Buildings and Bridges: Recall | Buildings and Bridges: F1 | Other: Precision | Other: Recall | Other: F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Vienna | AT | 98.5 | 99.2 | 98.8 | 97.5 | 97.5 | 97.5 | 97.3 | 96.3 | 96.8 | 68.1 | 74.8 | 71.3 |
| Zurich | CH | 99.1 | 98.5 | 98.8 | 98.3 | 98.1 | 98.2 | 96.4 | 97.7 | 97 | 65.3 | 71.6 | 68.3 |
| Opole | PL | 98.0 | 99.1 | 98.5 | 95.9 | 90.0 | 92.9 | 97.4 | 95.5 | 96.4 | 67.9 | 72.6 | 70.2 |
| Geneva | CH | 98.3 | 98.4 | 98.3 | 98.0 | 97.7 | 97.8 | 95.5 | 96.0 | 95.7 | 67.3 | 74.0 | 70.5 |
| Liechtenstein | LI | 97.5 | 98.3 | 97.9 | 97.7 | 98.0 | 97.8 | 95.7 | 97.0 | 96.3 | 57.8 | 47.8 | 52.3 |
| Wrocław 2011 | PL | 94.6 | 98.6 | 96.6 | 98.2 | 78.5 | 87.3 | 95.7 | 95.5 | 95.6 | 15.4 | 61.3 | 24.6 |
| Wrocław 2022 | PL | 96.9 | 96.6 | 96.7 | 96.8 | 93.8 | 95.3 | 95.6 | 95.8 | 95.7 | 46.8 | 77.6 | 58.4 |
| Zwolle | NL | 97.7 | 99.5 | 98.6 | – | – | – | 94.2 | 95.9 | 95 | – | – | – |
| France | FR | 95.9 | 98.9 | 97.4 | 95.7 | 96.5 | 96.1 | 83.9 | 97.8 | 90.3 | 63.3 | 34.1 | 44.3 |
| Strasbourg | FR | 92.9 | 99.6 | 96.1 | 88.6 | 92.3 | 90.4 | – | – | – | – | – | – |
| Luxembourg | LU | – | – | – | 92.6 | 98.3 | 95.4 | 87.8 | 99.4 | 93.2 | – | – | – |
| Dublin | IE | – | – | – | 79.2 | 92.4 | 85.3 | – | – | – | 48.0 | 27.0 | 34.6 |
| DALES | USA | 96.5 | 98.8 | 97.6 | 96.1 | 93.2 | 94.6 | 96.9 | 97.9 | 97.4 | 72.8 | 59.0 | 65.2 |
| Vaihingen | DE | 98.0 | 91.3 | 94.5 | 88.2 | 77.2 | 82.3 | 94.8 | 96.5 | 95.6 | 25.0 | 83.4 | 38.5 |
| Hessigheim | DE | 96.7 | 94.5 | 95.6 | 89.2 | 88.1 | 88.6 | 93.8 | 97.1 | 95.4 | 44.0 | 52.3 | 47.8 |

Appendix C

| Training Dataset | Testing Dataset | Ground and Water IoU: Median | Ground and Water IoU: Mean | Ground and Water IoU: Std | Vegetation IoU: Median | Vegetation IoU: Mean | Vegetation IoU: Std | Buildings and Bridges IoU: Median | Buildings and Bridges IoU: Mean | Buildings and Bridges IoU: Std | Other IoU: Median | Other IoU: Mean | Other IoU: Std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Opole | Vienna | 91.8 | 88.7 | 12.1 | 80.0 | 75.4 | 19.7 | 76.9 | 65.5 | 31.6 | 27.3 | 29.3 | 21.4 |
| Zurich | Vienna | 95.9 | 94.8 | 5.0 | 91.6 | 90.4 | 5.6 | 89.7 | 83.1 | 17.4 | 35.7 | 37.1 | 19.4 |
| Zurich and Opole | Vienna | 91.7 | 90.1 | 7.9 | 89.4 | 87.0 | 8.2 | 85.6 | 76.4 | 22.3 | 17.4 | 23.3 | 16.9 |
| Opole | Zurich | 93.5 | 93.2 | 2.7 | 92.1 | 91.7 | 3.5 | 87.6 | 81.1 | 17.8 | 33.1 | 33.0 | 11.1 |
| Vienna | Zurich | 96.6 | 96.6 | 1.4 | 95.2 | 95.2 | 3.0 | 90.5 | 88.1 | 6.6 | 41.6 | 43.9 | 10.4 |
| Vienna and Opole | Zurich | 95.8 | 95.7 | 1.7 | 95.1 | 94.5 | 3.1 | 89.4 | 88.3 | 6.2 | 38.3 | 41.5 | 11.8 |
| Vienna | Opole | 94.6 | 94.3 | 1.8 | 83.4 | 82.0 | 7.3 | 85.8 | 83.8 | 6.7 | 40.9 | 39.2 | 11.6 |
| Zurich | Opole | 95.1 | 94.3 | 2.3 | 82.1 | 81.4 | 8.0 | 90.9 | 87.7 | 7.8 | 36.4 | 36.6 | 13.0 |
| Zurich and Vienna | Opole | 95.1 | 94.7 | 1.7 | 84.0 | 82.6 | 6.8 | 91.2 | 87.7 | 6.9 | 42.0 | 41.3 | 12.6 |

References

- Kippers, R.G.; Moth, L.; Oude Elberink, S.J. Automatic Modelling Of 3D Trees Using Aerial Lidar Point Cloud Data And Deep Learning. Int. Arch. Photogramm. Remote Sens. 2021, XLIII-B2-2021, 179–184.

- Li, M.; Rottensteiner, F.; Heipke, C. Modelling of Buildings from Aerial LiDAR Point Clouds Using TINs and Label Maps. ISPRS J. Photogramm. Remote Sens. 2019, 154, 127–138.

- Pađen, I.; Peters, R.; García-Sánchez, C.; Ledoux, H. Automatic High-Detailed Building Reconstruction Workflow for Urban Microscale Simulations. Build. Environ. 2024, 265, 111978.

- Park, Y.; Guldmann, J.-M. Creating 3D City Models with Building Footprints and LIDAR Point Cloud Classification: A Machine Learning Approach. Comput. Environ. Urban Syst. 2019, 75, 76–89.

- Soilán, M.; Riveiro, B.; Liñares, P.; Padín-Beltrán, M. Automatic Parametrization and Shadow Analysis of Roofs in Urban Areas from ALS Point Clouds with Solar Energy Purposes. ISPRS Int. J. Geo-Inf. 2018, 7, 301.

- Zhou, Z.; Gong, J.; Hu, X. Community-Scale Multi-Level Post-Hurricane Damage Assessment of Residential Buildings Using Multi-Temporal Airborne LiDAR Data. Autom. Constr. 2019, 98, 30–45.

- Korzeniowska, K.; Pfeifer, N.; Mandlburger, G.; Lugmayr, A. Experimental Evaluation of ALS Point Cloud Ground Extraction Tools over Different Terrain Slope and Land-Cover Types. Int. J. Remote Sens. 2014, 35, 4673–4697.

- Chen, C.; Guo, J.; Wu, H.; Li, Y.; Shi, B. Performance Comparison of Filtering Algorithms for High-Density Airborne LiDAR Point Clouds over Complex LandScapes. Remote Sens. 2021, 13, 2663.

- Li, N.; Kähler, O.; Pfeifer, N. A Comparison of Deep Learning Methods for Airborne Lidar Point Clouds Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6467–6486.

- Griffiths, D.; Boehm, J. A Review on Deep Learning Techniques for 3D Sensed Data Classification. Remote Sens. 2019, 11, 1499.

- Li, W.; Wang, F.-D.; Xia, G.-S. A Geometry-Attentional Network for ALS Point Cloud Classification. ISPRS J. Photogramm. Remote Sens. 2020, 164, 26–40.

- Yousefhussien, M.; Kelbe, D.J.; Ientilucci, E.J.; Salvaggio, C. A Multi-Scale Fully Convolutional Network for Semantic Labeling of 3D Point Clouds. ISPRS J. Photogramm. Remote Sens. 2018, 143, 191–204.

- Nurunnabi, A.; Teferle, F.; Li, J.; Lindenbergh, R.; Hunegnaw, A. An Efficient Deep Learning Approach for Ground Point Filtering in Aerial Laser Scanning Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B1-2021, 31–38.

- Rizaldy, A.; Persello, C.; Gevaert, C.; Oude Elberink, S.; Vosselman, G. Ground and Multi-Class Classification of Airborne Laser Scanner Point Clouds Using Fully Convolutional Networks. Remote Sens. 2018, 10, 1723.

- Soilán Rodríguez, M.; Lindenbergh, R.; Riveiro Rodríguez, B.; Sánchez Rodríguez, A. Pointnet for the Automatic Classification of Aerial Point Clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W5, 445–452.

- Widyaningrum, E.; Bai, Q.; Fajari, M.K.; Lindenbergh, R.C. Airborne Laser Scanning Point Cloud Classification Using the DGCNN Deep Learning Method. Remote Sens. 2021, 13, 859.

- Wen, C.; Yang, L.; Li, X.; Peng, L.; Chi, T. Directionally Constrained Fully Convolutional Neural Network for Airborne LiDAR Point Cloud Classification. ISPRS J. Photogramm. Remote Sens. 2020, 162, 50–62.

- Xie, Y.; Schindler, K.; Tian, J.; Zhu, X.X. Exploring Cross-City Semantic Segmentation of ALS Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B2-2021, 247–254.

- Qin, N.; Tan, W.; Ma, L.; Zhang, D.; Guan, H.; Li, J. Deep Learning for Filtering the Ground from ALS Point Clouds: A Dataset, Evaluations and Issues. ISPRS J. Photogramm. Remote Sens. 2023, 202, 246–261.

- Usmani, A.U.; Jadidi, M.; Sohn, G. Towards the Automatic Ontology Generation and Alignment of BIM and GIS Data Formats. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, VIII-4/W2-2021, 183–188.

- LAS Specification 1.4-R15; The American Society for Photogrammetry & Remote Sensing: Baton Rouge, LA, USA, 2019.

- Walicka, A.; Pfeifer, N. Semantic Segmentation of Buildings Using Multisource ALS Data. In Recent Advances in 3D Geoinformation Science; Springer: Cham, Switzerland, 2024.

- Bello, S.A.; Yu, S.; Wang, C.; Adam, J.M.; Li, J. Review: Deep Learning on 3D Point Clouds. Remote Sens. 2020, 12, 1729.

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-View Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 945–953.

- Boulch, A.; Le Saux, B.; Audebert, N. Unstructured Point Cloud Semantic Labeling Using Deep Segmentation Networks. In Proceedings of the 3DOR Eurographics, the Workshop on 3D Object Retrieval, Lyon, France, 23–24 April 2017; Volume 3, pp. 17–24.

- Zhang, J.; Zhao, X.; Chen, Z.; Lu, Z. A Review of Deep Learning-Based Semantic Segmentation for Point Cloud. IEEE Access 2019, 7, 179118–179133.

- Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 922–928.

- Graham, B.; Engelcke, M.; Van Der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

- Su, H.; Jampani, V.; Sun, D.; Maji, S.; Kalogerakis, E.; Yang, M.-H.; Kautz, J. SPLATNet: Sparse Lattice Networks for Point Cloud Processing. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2530–2539.

- Rao, Y.; Lu, J.; Zhou, J. Spherical Fractal Convolutional Neural Networks for Point Cloud Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 452–460.

- Charles, R.Q.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution On X-Transformed Points. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

- Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep Convolutional Networks on 3D Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9621–9630.

- Groh, F.; Wieschollek, P.; Lensch, H.P.A. Flex-Convolution. In Computer Vision—ACCV 2018; Jawahar, C.V., Li, H., Mori, G., Schindler, K., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 105–122.

- Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep Learning on Point Sets with Parameterized Convolutional Filters. In Computer Vision—ACCV 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 87–102.

- Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6411–6420.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 146.

- Camuffo, E.; Mari, D.; Milani, S. Recent Advancements in Learning Algorithms for Point Clouds: An Updated Overview. Sensors 2022, 22, 1357.

- Diab, A.; Kashef, R.; Shaker, A. Deep Learning for LiDAR Point Cloud Classification in Remote Sensing. Sensors 2022, 22, 7868.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

- Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. A Survey on Deep Learning Based Segmentation, Detection and Classification for 3D Point Clouds. Entropy 2023, 25, 635.

- Chen, Y.; Liu, G.; Xu, Y.; Pan, P.; Xing, Y. PointNet++ Network Architecture with Individual Point Level and Global Features on Centroid for ALS Point Cloud Classification. Remote Sens. 2021, 13, 472.

- Grilli, E.; Daniele, A.; Bassier, M.; Remondino, F.; Serafini, L. Knowledge Enhanced Neural Networks for Point Cloud Semantic Segmentation. Remote Sens. 2023, 15, 2590.

- Mao, Y.; Chen, K.; Diao, W.; Sun, X.; Lu, X.; Fu, K.; Weinmann, M. Beyond Single Receptive Field: A Receptive Field Fusion-and-Stratification Network for Airborne Laser Scanning Point Cloud Classification. ISPRS J. Photogramm. Remote Sens. 2022, 188, 45–61.

- Huang, R.; Xu, Y.; Hong, D.; Yao, W.; Ghamisi, P.; Stilla, U. Deep Point Embedding for Urban Classification Using ALS Point Clouds: A New Perspective from Local to Global. ISPRS J. Photogramm. Remote Sens. 2020, 163, 62–81.

- Schmohl, S.; Sörgel, U. Submanifold Sparse Convolutional Networks For Semantic Segmentation Of Large-Scale Als Point Clouds. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W5, 77–84.

- Soilán, M.; Riveiro, B.; Balado, J.; Arias, P. Comparison of Heuristic and Deep Learning-Based Methods for Ground Classification from Aerial Point Clouds. Int. J. Digit. Earth 2020, 13, 1115–1134.

- Arief, H.A.; Indahl, U.G.; Strand, G.-H.; Tveite, H. Addressing Overfitting on Point Cloud Classification Using Atrous XCRF. ISPRS J. Photogramm. Remote Sens. 2019, 155, 90–101.

- Hsu, P.-H.; Zhuang, Z.-Y. Incorporating Handcrafted Features into Deep Learning for Point Cloud Classification. Remote Sens. 2020, 12, 3713.

- Winiwarter, L.; Mandlburger, G.; Schmohl, S.; Pfeifer, N. Classification of ALS Point Clouds Using End-to-End Deep Learning. PFG—J. Photogramm. Remote Sens. Geoinf. Sci. 2019, 87, 75–90.

- Li, N.; Liu, C.; Pfeifer, N. Improving LiDAR Classification Accuracy by Contextual Label Smoothing in Post-Processing. ISPRS J. Photogramm. Remote Sens. 2019, 148, 13–31.

- Laefer, D.F.; Abuwarda, S.; Vo, A.-V.; Truong-Hong, L.; Gharibi, H. 2015 Aerial Laser and Photogrammetry Survey of Dublin City Collection Record; NYU Libraries: New York, NY, USA, 2015.

- Zolanvari, S.; Ruano, S.; Rana, A.; Cummins, A.; da Silva, R.E.; Rahbar, M.; Smolic, A. DublinCity: Annotated LiDAR Point Cloud and Its Applications. arXiv 2019, arXiv:1909.03613.

- Varney, N.; Asari, V.K.; Graehling, Q. DALES: A Large-Scale Aerial LiDAR Data Set for Semantic Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 717–726.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual Classification of Lidar Data and Building Object Detection in Urban Areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Kölle, M.; Laupheimer, D.; Schmohl, S.; Haala, N.; Rottensteiner, F.; Wegner, J.D.; Ledoux, H. The Hessigheim 3D (H3D) Benchmark on Semantic Segmentation of High-Resolution 3D Point Clouds and Textured Meshes from UAV LiDAR and Multi-View-Stereo. ISPRS Open J. Photogramm. Remote Sens. 2021, 1, 100001.

- Graham, B.; van der Maaten, L. Submanifold Sparse Convolutional Networks. arXiv 2017, arXiv:1706.01307.

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3d.Net: A New Large-Scale Point Cloud Classification Benchmark. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-1-W1, 91–98.

- Roscher, R.; Russwurm, M.; Gevaert, C.; Kampffmeyer, M.; Dos Santos, J.A.; Vakalopoulou, M.; Hänsch, R.; Hansen, S.; Nogueira, K.; Prexl, J.; et al. Better, Not Just More: Data-Centric Machine Learning for Earth Observation. IEEE Geosci. Remote Sens. Mag. 2024, 12, 335–355.

- U.S. Geological Survey. Lidar Base Specification: Tables. Available online: https://www.usgs.gov/ngp-standards-and-specifications/lidar-base-specification-tables (accessed on 3 July 2025).

- Copernicus Global Land Cover and Tropical Forest Mapping and Monitoring Service (LCFM). Available online: https://land.copernicus.eu/en/technical-library/product-user-manual-global-land-cover-10-m/@@download/file (accessed on 3 July 2025).

- Brzank, A.; Heipke, C. Classification of Lidar Data into Water and Land Points in Coastal Areas. ISPRS Arch. 2006, XXXVI/3, 197–202.

- Li, H.; Zech, J.; Ludwig, C.; Fendrich, S.; Shapiro, A.; Schultz, M.; Zipf, A. Automatic Mapping of National Surface Water with OpenStreetMap and Sentinel-2 MSI Data Using Deep Learning. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102571.

- Tschirschwitz, D.; Rodehorst, V. Label Convergence: Defining an Upper Performance Bound in Object Recognition Through Contradictory Annotations. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 28 February–4 March 2025; pp. 6848–6857.

| Dataset | # Tiles | Test/Train Site Area [km²] | Tile Size [m] | Tile Overlap [m] | Acquisition Date | Acquisition Season | Density [pts/m²] | Classification | Type of Dataset | Purpose |
|---|---|---|---|---|---|---|---|---|---|---|
| Vienna | 6/6 | 7.8/7.8 | 1270 × 1020 | 20 | 10–11.2015 | Leaf-off | 23–139 | Ground, vegetation, buildings, water, bridge, noise, and other (5) | City-wise | Train/Test |
| Zurich | 17/14 | 17/14 | 1000 × 1000 | 0 | 2017–2020 | Leaf-off (1) | 8–25 | Ground, vegetation, buildings, bridges and viaducts, water, and other (5) | National | Train/Test |
| Geneva | 18 | 18 | | | 2018–2019 | | 31–57 | | | Validation |
| Liechtenstein | 24 | 24 | | | 2017–2018 | | 15–33 | | | Validation |
| Opole | 16/14 | 4/3.5 | 500 × 500 | 0 | 03.2022 | Leaf-off | 20–35 | Ground, high vegetation, medium vegetation, low vegetation, buildings and bridges, high points, low points, water, and other (5) | City-wise | Train/Test |
| Wrocław 2011 | 12 | 3 | | | 07.2011 | Leaf-on | 22–30 | | National | Validation |
| Wrocław 2022 | 12 | 3 | | | 05.2022 | Leaf-on | 22–53 | | City-wise | Validation |
| Zwolle | 18 | 22.5 | 1000 × 1250 (2) | 25 (2) | 2020–2022 | Leaf-off | 20–34 | Ground, buildings, civil structures, water, and other (5) | National | Validation |
| France (national point cloud) | 6 | 6 | 1000 × 1000 | 0 | - | - | 9–34 | Ground, low vegetation, medium vegetation, high vegetation, buildings, water, bridge, perennial soil, noise, virtual points, and other | National | Validation |
| Strasbourg (point cloud acquired by city of Strasbourg) | 51 | 12.75 | 500 × 500 | 0 | 2015–2016 | - | 17–35 | Ground and water, building roofs, vegetation, bridges, pylons and cables, and other (5) | City-wise | Validation |
| Luxembourg | 23 | 5.75 | 500 × 500 | 0 | 02.2019 | Leaf-off | 21–40 | Ground, low vegetation, medium vegetation, high vegetation, buildings, noise, water, bridges, power lines, and other | City-wise | Validation |
| DALES | 40 | 10 | 500 × 500 | 0 | - | - | 48 | Ground, vegetation, cars, trucks, poles, power lines, fences, and buildings | Benchmark | Validation |
| Dublin | 13 | 2 | Irregular | 0 | 03.2015 | Leaf-off | 250–348 | Hierarchical | Benchmark | Validation |
| Vaihingen | 3 | 0.13 (3) | Irregular | 0 | - | - | 4 | Powerline, low vegetation, impervious surface, car, fence/hedge, roof, façade, shrub, and tree | Benchmark | Validation |
| Hessigheim | 2 | 0.16 (3) (4) | Irregular | 0 | 03.2016 | Leaf-off | 20 | Low vegetation, impervious surfaces, vehicle, urban furniture, roof, façade, shrub, tree, soil/gravel, vertical surface, and chimney | Benchmark | Validation |
| Summary | 2–51 | 0.13–24 | - | 0–25 | 2011–2022 | - | 4–348 | - | - | - |

| Test Number | Voxel Size [cm] | # Layers | # Features for Each Layer | # Epochs | Weighted Loss Function | Mean IoU [%] | Min. IoU [%] |
|---|---|---|---|---|---|---|---|
| Baseline | 8 | 7 | 16 | 18 | Yes | 84.0 | 52.9 |
| 1 | 25 | 7 | 16 | 18 | Yes | 82.8 | 49.0 |
| 2 | 8 | 5 | 16 | 18 | Yes | 79.5 | 46.0 |
| 3 | 8 | 9 | 16 | 18 | Yes | 83.3 | 50.8 |
| 4 | 8 | 7 | 16 | 18 | No | 82.8 | 47.8 |
| 5 | 8 | 7 | 8 | 18 | Yes | 82.8 | 49.1 |
| 6 | 8 | 7 | 16 | 10 | Yes | 82.9 | 49.6 |
| 7 | 8 | 7 | 16 | 26 | Yes | 84.1 | 52.7 |

| Training Dataset | Testing Dataset | Average Median IoU [%] | Ground and Water IoU [%]: Median | Ground and Water IoU [%]: Mean | Ground and Water IoU [%]: Std | Vegetation IoU [%]: Median | Vegetation IoU [%]: Mean | Vegetation IoU [%]: Std | Buildings and Bridges IoU [%]: Median | Buildings and Bridges IoU [%]: Mean | Buildings and Bridges IoU [%]: Std | Other IoU [%]: Median | Other IoU [%]: Mean | Other IoU [%]: Std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Vienna | Vienna | 84.5 | 97.3 | 97.0 | 1.8 | 94.8 | 94.2 | 3.5 | 92.4 | 88.0 | 15.7 | 53.5 | 52.2 | 13.3 |
| Opole | Vienna | 69.0 | 91.8 | 88.7 | 12.1 | 80.0 | 75.4 | 19.7 | 76.9 | 65.5 | 31.6 | 27.3 | 29.3 | 21.4 |
| Zurich | Vienna | 78.2 | 95.9 | 94.8 | 5.0 | 91.6 | 90.4 | 5.6 | 89.7 | 83.1 | 17.4 | 35.7 | 37.1 | 19.4 |
| Zurich and Opole and Vienna | Vienna | 84.9 | 97.2 | 96.9 | 2.0 | 94.9 | 94.3 | 3.3 | 93.5 | 89.8 | 10.5 | 53.8 | 50.8 | 17.2 |
| Zurich | Zurich | 84.7 | 97.8 | 97.5 | 1.1 | 96.6 | 96.6 | 2.2 | 93.6 | 92.0 | 5.1 | 50.8 | 53.4 | 11.4 |
| Opole | Zurich | 76.6 | 93.5 | 93.2 | 2.7 | 92.1 | 91.7 | 3.5 | 87.6 | 81.1 | 17.8 | 33.1 | 33.0 | 11.1 |
| Vienna | Zurich | 81.0 | 96.6 | 96.6 | 1.4 | 95.2 | 95.2 | 3.0 | 90.5 | 88.1 | 6.6 | 41.6 | 43.9 | 10.4 |
| Zurich and Opole and Vienna | Zurich | 84.6 | 97.4 | 97.4 | 1.1 | 96.4 | 96.4 | 2.3 | 94.0 | 92.4 | 4.4 | 50.4 | 53.4 | 11.5 |
| Opole | Opole | 79.9 | 96.3 | 95.9 | 1.7 | 86.9 | 86.5 | 5.1 | 91.5 | 89.6 | 5.9 | 44.7 | 48.2 | 11.7 |
| Vienna | Opole | 76.2 | 94.6 | 94.3 | 1.8 | 83.4 | 82.0 | 7.3 | 85.8 | 83.8 | 6.7 | 40.9 | 39.2 | 11.6 |
| Zurich | Opole | 76.1 | 95.1 | 94.3 | 2.3 | 82.1 | 81.4 | 8.0 | 90.9 | 87.7 | 7.8 | 36.4 | 36.6 | 13.0 |
| Zurich and Opole and Vienna | Opole | 82.7 | 96.6 | 96.1 | 1.9 | 86.9 | 86.6 | 5.4 | 92.5 | 90.0 | 6.5 | 54.6 | 52.4 | 10.8 |
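The "Average Median IoU" values in the table above are numerically consistent with the unweighted mean of the four per-class median IoU values. The short sketch below checks this for three rows. The function name and the dictionary layout are hypothetical, and the interpretation is inferred from the numbers rather than taken from a stated definition.

```python
def average_median_iou(class_medians):
    """Unweighted mean of the per-class median IoU values (in percent)."""
    return sum(class_medians) / len(class_medians)


# Per-class medians (ground and water, vegetation, buildings and bridges, other)
# copied from three rows of the table, keyed by (training, testing) dataset.
rows = {
    ("Vienna", "Vienna"): [97.3, 94.8, 92.4, 53.5],
    ("Opole", "Vienna"): [91.8, 80.0, 76.9, 27.3],
    ("Zurich", "Zurich"): [97.8, 96.6, 93.6, 50.8],
}

for (train, test), medians in rows.items():
    print(train, "->", test, round(average_median_iou(medians), 1))
```

Because the mean is unweighted, the weakest class ("Other") pulls the average down as strongly as the large classes raise it, which is worth keeping in mind when reading this column.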

| Name | Country | Ground and Water IoU [%]: Median | Ground and Water IoU [%]: Mean | Ground and Water IoU [%]: Std | Vegetation IoU [%]: Median | Vegetation IoU [%]: Mean | Vegetation IoU [%]: Std | Buildings and Bridges IoU [%]: Median | Buildings and Bridges IoU [%]: Mean | Buildings and Bridges IoU [%]: Std | Other IoU [%]: Median | Other IoU [%]: Mean | Other IoU [%]: Std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Vienna | AT | 97.2 | 96.9 | 2.0 | 94.9 | 94.3 | 3.3 | 93.5 | 89.8 | 10.5 | 53.8 | 50.8 | 17.2 |
| Zurich | CH | 97.4 | 97.4 | 1.1 | 96.4 | 96.4 | 2.3 | 94.0 | 92.4 | 4.4 | 50.4 | 53.4 | 11.5 |
| Opole | PL | 96.6 | 96.1 | 1.9 | 86.9 | 86.6 | 5.4 | 92.5 | 90.0 | 6.5 | 54.6 | 52.4 | 10.8 |
| Geneva | CH | 96.7 | 96.8 | 1.0 | 95.9 | 95.4 | 1.6 | 91.8 | 87.1 | 10.3 | 52.7 | 51.9 | 9.8 |
| Liechtenstein | LI | 95.9 | 95.7 | 1.6 | 95.4 | 94.4 | 3.3 | 92.2 | 91.6 | 3.0 | 34.0 | 36.7 | 7.9 |
| Wrocław 2011 | PL | 92.2 | 93.4 | 2.7 | 77.3 | 78.6 | 8.4 | 91.9 | 87.1 | 12.0 | 13.5 | 17.8 | 11.9 |
| Wrocław 2022 | PL | 94.2 | 93.1 | 3.2 | 90.4 | 90.2 | 4.0 | 93.2 | 89.9 | 7.0 | 41.0 | 39.3 | 13.8 |
| Zwolle | NL | 97.3 | 97.1 | 2.1 | – | – | – | 90.8 | 86.7 | 10.4 | – | – | – |
| France | FR | 94.4 | 90.7 | 7.9 | 90.7 | 85.0 | 17.0 | 77.6 | 66.4 | 32.0 | 29.5 | 23.0 | 18.4 |
| Strasbourg | FR | 92.4 | 91.5 | 3.8 | 82.7 | 79.0 | 15.6 | – | – | – | – | – | – |
| Luxembourg | LU | – | – | – | 91.0 | 90.5 | 3.7 | – | – | – | – | – | – |
| Dublin | IE | – | – | – | 75.5 | 72.6 | 19.1 | – | – | – | 19.6 | 20.0 | 11.9 |
| DALES | USA | 95.3 | 94.7 | 2.3 | 89.5 | 89.2 | 3.5 | 94.8 | 91.3 | 14.3 | 47.8 | 46.3 | 9.2 |
| Vaihingen | DE | 90.0 | 89.6 | 2.7 | 68.0 | 70.4 | 4.3 | 91.8 | 91.2 | 1.4 | 22.7 | 23.4 | 3.2 |
| Hessigheim | DE | 91.6 | 91.6 | 3.0 | 79.5 | 79.5 | 3.9 | 91.3 | 91.3 | 2.7 | 31.4 | 31.4 | 1.1 |

| Reference | Imperv. Surf | Low Veg. | Shrub | Tree | Roof | Facade | Car | Fence/Hedge | Powerline |
|---|---|---|---|---|---|---|---|---|---|
| [18] | 90.2 | 81.7 | 83.8 | 95.0 | 47.4 | - | | | |
| [12] | 91.5 | 77.9 | 45.9 | 82.5 | 94.0 | 49.3 | 73.4 | 18.0 | 37.5 |
| [11] | 91.6 | 82.0 | 49.6 | 82.6 | 94.4 | 61.5 | 77.8 | 44.2 | 75.4 |
| [44] | 91.4 | 82.7 | 44.2 | 79.8 | 92.4 | 56.8 | 76.9 | 39.6 | 77.0 |
| [45] | 91.2 | 80.8 | 43.8 | 83.6 | 93.1 | 58.6 | 62.1 | 53.7 | 55.7 |
| [46] | 90.5 | 80.0 | 48.3 | 75.7 | 92.7 | 57.9 | 78.5 | 45.5 | 75.5 |
| [47] | 99.3 | 86.5 | 39.4 | 72.6 | 91.1 | 44.2 | 75.2 | 19.5 | 68.1 |
| [48] | 91.1 | 82.0 | 46.8 | 83.1 | 93.6 | 60.8 | 76.5 | 40.5 | 56.1 |
| [50] | 91.9 | 82.6 | 50.7 | 82.7 | 94.5 | 59.3 | 74.9 | 39.9 | 63.0 |
| [52] | 90.2 | 80.5 | 34.7 | 74.5 | 93.1 | 47.3 | 45.7 | 7.6 | 70.1 |

| Method | Gravel | Imperv. Surfaces | Low Veg. | Shrub | Tree | Roof | Facade | Chimney | Vehicle | Urban fur. | Vert. Surf. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Zhan | 58.4 | 87.5 | 89.4 | 65.2 | 93.8 | 96.8 | 69.3 | 75.4 | 67.5 | 45.7 | 53.8 |
| X | 51.7 | 87.5 | 89.3 | 66.4 | 93.6 | 96.0 | 68.3 | 76.2 | 66.7 | 40.5 | 54.7 |
| Ifp-RF | 55.5 | 85.9 | 87.5 | 59.8 | 92.4 | 96.0 | 64.1 | 56.5 | 57.0 | 39.1 | 34.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Walicka, A.; Pfeifer, N. Standard Classes for Urban Topographic Mapping with ALS: Classification Scheme and a First Implementation. Remote Sens. 2025, 17, 2731. https://doi.org/10.3390/rs17152731
