Abstract

Floods are among the most damaging natural disasters, and climate change is intensifying their impact on urban areas and agricultural land. This research presents a comprehensive flood mapping approach that combines multi-sensor satellite data with a machine learning method to evaluate the July 2021 flood in the Netherlands. Twenty-five feature scenarios were developed by combining Sentinel-1, Landsat-8, and Radarsat-2 imagery, using backscattering coefficients together with the optical Normalized Difference Water Index (NDWI), Hue, Saturation, and Value (HSV) images, and Synthetic Aperture Radar (SAR)-derived Grey Level Co-occurrence Matrix (GLCM) texture features. The Random Forest (RF) classifier was optimized and then applied to two flood-prone regions: the urban area of Zutphen and the agricultural land of Heijen. Results demonstrated that the multi-sensor fusion scenarios (S18, S20, and S25) achieved the highest classification performance, with overall accuracy reaching 96.4% (Kappa = 0.906–0.949) in Zutphen and 87.5% (Kappa = 0.754–0.833) in Heijen. Across all scenarios, the F1 scores of the flood class ranged from 0.742 to 0.969 in Zutphen and from 0.626 to 0.969 in Heijen. The addition of SAR texture metrics enhanced flood boundary identification in both urban and agricultural settings. Radarsat-2 provided limited benefits to the overall results, whereas the freely available Sentinel-1 and Landsat-8 data proved more effective. This study demonstrates that using SAR and optical features together with texture information creates a powerful and expandable flood mapping system, and that RF classification performs well in diverse landscape settings.

1. Introduction

Natural disasters such as floods occur frequently and cause extensive damage, and climate change exacerbates their effects, endangering human lives, infrastructure, and ecosystems worldwide. The past five years have witnessed major flood disasters in Spain (2024), Pakistan (2022), Türkiye (2021), Western Europe (2021), and China (2021), which caused extensive economic damage, loss of life, and humanitarian crises. The 2021 Limburg floods, which led to almost €600 million in damages throughout the Netherlands, Belgium, and Germany, demonstrated the critical need for better flood monitoring and risk mitigation approaches [1,2]. The Netherlands faces extreme flooding risks because about a quarter of its territory lies below sea level and the country is densely populated and highly developed by European standards [3]. The country experiences severe economic and social impacts from flooding despite having globally recognized flood control systems. Precise and timely flood extent information is therefore essential, since it supports both emergency response operations and long-term climate adaptation planning.

Remote sensing (RS) technologies overcome the constraints of ground-based monitoring systems because they allow wide-area data acquisition at repeatable intervals and in near real time. RS is therefore an efficient and adaptable instrument for flood surveillance, providing broad spatial coverage and frequent temporal monitoring. RS platforms include satellites, aircraft, and Unmanned Aerial Vehicles (UAVs), which deliver the key data required to map and monitor flood extents. The two fundamental technologies used on these platforms are Synthetic Aperture Radar (SAR) and optical sensors. SAR sensors remain essential for flood detection because they can penetrate cloud cover and acquire images at any time of day while remaining reliable under various weather conditions [4,5,6]. Integrating multiple SAR sources, such as Sentinel-1, the Advanced Land Observing Satellite (ALOS), and Radarsat, can enhance the temporal resolution of flood surveillance. However, commercial SAR data can be expensive, and effective ways are still needed to integrate SAR sensors that differ in configuration. A further drawback is that SAR-based flood detection has difficulty separating water from other dark surfaces such as snow and ice, deserts, and dry croplands, because these surfaces produce similar backscatter intensities [7]. In contrast, optical imaging systems offer strong land cover discrimination in the visible and infrared regions, high signal-to-noise ratios, and multiple spectral bands, which makes them suitable for flood and water body monitoring [8]. Nevertheless, cloud cover remains a major limitation for optical sensors because it obstructs visibility during crucial flood events. Moreover, water reflectance can vary considerably with turbidity, vegetation presence, and sun angle, which makes accurate delineation of flooded areas difficult, especially in high-resolution images [7,9]. Given these challenges, single-sensor approaches based on either SAR or optical systems can produce incomplete or unreliable flood detection outcomes in complex and dynamic landscapes.

To overcome the limitations of single-sensor methods, the fusion or combined use of SAR and optical data has become an increasingly effective approach in flood mapping [10,11]. As highlighted above, SAR provides robust geometric and surface roughness information in all weather conditions, while optical imagery enhances land–water discrimination through spectral indices. The Normalized Difference Water Index (NDWI) and the Modified NDWI (MNDWI) are leading optical indices for detecting open water bodies across both natural and urban areas; they use the Green and Near Infrared (NIR) bands and the Green and Shortwave Infrared (SWIR) bands, respectively [12,13]. Flood-affected vegetation can be detected with the Normalized Difference Vegetation Index (NDVI) because it reveals anomalies in plant health and canopy greenness [14]. The Normalized Difference Moisture Index (NDMI) and Land Surface Water Index (LSWI) provide improved sensitivity to both surface moisture and shallow water in agricultural areas, according to Stoyanova [15] and Mohammadi et al. [16]. The Hue-Saturation-Value (HSV) color space transformation enables better separation of water from spectrally similar shadows or dark rooftops in urban areas and has proved effective for flood monitoring [17]. Compared to traditional water indices (e.g., NDWI and the Automated Water Extraction Index, AWEI), the better performance of HSV is likely due to its more effective spectral alignment with water-related features. Ref. [18] recommended the HSV color space over Red-Green-Blue (RGB) because the bands in RGB space are correlated and a specific color is difficult to select in threshold-based approaches. In a study on the detection of shadow areas in high-resolution images, the HSV space achieved better results than RGB [19]. Together, these optical features enable a detailed analysis of flood extent across different land cover types under various illumination conditions.

In addition to these optical spectral data, SAR systems provide essential backscatter and structural data to improve flood detection when cloudy or nighttime conditions prevent optical observations. Open floodwater appears dark in radar imagery because its smooth surface reflects the signal away from the sensor and produces minimal backscatter, which motivates the use of backscattering coefficients from different polarizations. SAR polarization variations enable the detection of flooded vegetation and its separation from other low-backscatter features. Additionally, temporal coherence between SAR acquisitions can reveal abrupt surface changes linked to inundation, while texture metrics derived from the Gray-Level Co-occurrence Matrix (GLCM), such as contrast, entropy, and homogeneity, capture spatial heterogeneity, which is useful for mapping floodwaters amidst buildings or vegetation. The GLCM texture information, as defined by [20], describes the co-occurrence of pixel values under different spatial relationships. Many texture layers can be generated with different window sizes and angles, which increases the number of input features. However, the dimensionality can be reduced with principal component analysis (PCA), so that a smaller number of features is retained. The impact on the classification can be considerable, even if the textural information is sparse [21]. Ref. [22] proposed using PCA to reduce the number of features produced by GLCM and reported improved accuracies. Overall, the combination of SAR and optical features creates a multi-dimensional view of flooded areas, which enhances detection accuracy and reliability in complex environments beyond what one sensor can achieve.

The translation of the RS observations into actionable flood hazard information requires various modeling techniques due to the complex nature of flood dynamics and the multiple affected landscapes. The flood hazard mapping methodologies include physical modelling, physically based modelling, and empirical modelling, according to Mudashiru et al. [23] and Teng et al. [24]. Physical modeling conducts laboratory tests that duplicate flood processes to study hydrodynamic behaviors, yet these approaches face limitations from scale constraints and high costs [25,26]. On the other hand, physically based models apply mathematical expressions for hydrologic and hydraulic processes such as rainfall-runoff transformation, channel flow, and surface inundation that integrate high-resolution spatial data including Digital Elevation Models (DEMs), land use maps, and meteorological inputs [27]. These models present more realistic representations, yet need complex calibration, consume significant computational resources, and face data availability challenges across various regions worldwide [26]. In contrast, empirical models utilize statistical or machine learning techniques to build connections between observed flood areas and predictor variables that originate from remote sensing data as well as topography, soil type, and land cover [24]. The empirical approaches have gained attention in recent years because they base their assessments on data-driven approaches, which provide fast and flexible hazard evaluations without requiring detailed hydrological inputs or boundary conditions. In particular, empirical models utilizing Random Forest (RF), Support Vector Machines (SVMs), and Deep Learning (DL) architectures achieve robust classification when combined with multi-sensor RS data to perform well across different geographic and climatic regions [28,29,30,31]. 
Ultimately, the choice of modeling approach depends on the specific objectives, data availability, and computational resources of a given study.

This research aims to develop an integrated framework for flood hazard mapping by using multi-temporal SAR and optical RS data with an RF classifier in heterogeneous landscapes. The research uses freely available Sentinel-1 and Landsat-8 imagery as well as commercial Radarsat-2 data to extract a wide range of flood-sensitive features in the Netherlands, which faces high flood risks despite its well-developed water management systems. The feature set includes SAR backscattering coefficients (σ0 in VV, VH, and HH polarizations), Landsat-derived NDWI, the HSV color space transformation of RGB images, and textural components from the GLCM (textPC1). We created 25 scenarios that range from single-source data to complex multi-sensor configurations combining raw and textural information from all three satellite platforms. This study has two main objectives: (1) advancing the scientific foundation for selecting the sensor combinations and features best suited for flood detection in delta regions and (2) creating functional and extendable approaches for operational flood monitoring systems. Additionally, we address the following questions: (1) Which feature combinations from Sentinel-1, Radarsat-2, and Landsat-8 (e.g., backscattering coefficients, spectral indices, and texture features) yield the highest accuracy in flood extent mapping? (2) What is the contribution of commercial Radarsat-2 data to flood mapping performance when integrated with Sentinel-1 and Landsat-8? (3) How does the SAR-derived texture metric (GLCM-based textPC1) improve the delineation of flooded areas in heterogeneous and complex landscapes? (4) How effective is the RF classifier in handling multi-source input features and maintaining robustness across diverse flood scenarios and terrain conditions?

2. Materials and Methods

2.1. Study Area

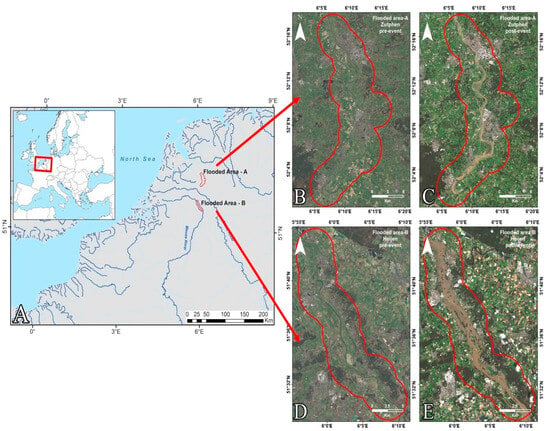

On 13–14 July 2021, large parts of Belgium, Germany, and the Netherlands experienced heavy rainfall and flooding (Figure 1). The rainfall accumulated in the basins of the Meuse (Maas) and Rhine Rivers, causing rivers to overflow in many regions and water to reach residential areas. Hundreds of lives were lost, especially in Belgium and Germany, and significant physical and economic losses occurred in the Netherlands.

Figure 1.

Illustration of the study areas. (A) General overview, (B) Pre-event image of Flooded area-A (Zutphen), (C) Post-event image of Flooded area-A (Zutphen), (D) Pre-event image of Flooded area-B (Heijen), (E) Post-event image of Flooded area-B (Heijen).

While rainfall exceeding 50 mm per day is normally considered a “heavy rain day” in the Netherlands, during the 2021 flood, 160 to 180 mm of rain was recorded in two days. This level of rainfall is extremely unusual for this region, especially in the summer months, and is at levels not previously measured. The peak flow values measured near Eijsden in the Meuse Basin and in regional streams represent the highest water levels on record [32].

These extraordinary meteorological conditions also caused flood risks in residential areas such as Heijen (Flooded area-B in Figure 1), located close to the Meuse River in the province of Limburg in the southeastern Netherlands, and Zutphen (Flooded area-A in Figure 1), located in the eastern region along the IJssel River. In Heijen, the overflowing river water flooded agricultural land and roads, causing temporary evacuations. In Zutphen, the increased flow of the IJssel River caused local flooding in low-lying areas close to the river. The July 2021 flood showed that flood risk in Europe is not limited to the winter months but can also reach unprecedented levels in summer. The event is therefore an important case for disaster risk management and resilient infrastructure planning in the context of climate change.

2.2. Multi-Sensor Data, Scenarios, and Workflow of the Methodology

This study used various combinations of radar and optical satellite datasets to analyze the surface features resulting from the flood that occurred in July 2021. The datasets included Sentinel-1 and Radarsat-2 SAR data along with optical data from Landsat-8.

Sentinel-1 data are provided free of charge by the European Space Agency (ESA) and were obtained in Interferometric Wide (IW) mode in Single Look Complex (SLC) format. The image was acquired on 18 July 2021 in an ascending orbit and contains Vertical-Vertical (VV) and Vertical-Horizontal (VH) polarizations, both of which were used in the analyses. The Radarsat-2 image was provided in SLC format in Extra Fine Beam mode and was also acquired on 18 July 2021, in a descending orbit. Because the Radarsat-2 image contains only the Horizontal-Horizontal (HH) polarization, the analyses were carried out in this polarization alone. The open-source Sentinel Application Platform (SNAP) [33] software, developed by ESA, was used to extract the backscattering coefficients (σ0) from the Sentinel-1 image at a spatial resolution of 5 m in range × 20 m in azimuth and from the Radarsat-2 image at 3 m in range × 3 m in azimuth. For Sentinel-1, the preprocessing steps consisted of (i) Terrain Observation by Progressive Scans (TOPS) split, (ii) precise orbit integration, (iii) radiometric calibration, (iv) TOPS deburst, (v) speckle filtering with a 3 × 3 Lee Sigma filter, (vi) geometric correction by Range Doppler Terrain Correction [34] with the Shuttle Radar Topography Mission (SRTM) 1 Arc-Second DEM (USGS 2014), and (vii) conversion of the linear backscattering coefficient (σ0) to dB values using Equation (1) [35]. Similar preprocessing steps were applied to Radarsat-2, except for the precise orbit integration and TOPS steps.

$$\sigma^0_{\mathrm{dB}} = 10 \log_{10}\left(\sigma^0\right) \tag{1}$$
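
The dB conversion in step (vii) is conventionally ten times the base-10 logarithm of the linear backscattering coefficient. The following is a minimal Python sketch of that step, not the SNAP implementation; the function name `linear_to_db`, the `floor` guard against non-positive values, and the sample values are assumptions introduced here for illustration.

```python
import math


def linear_to_db(sigma0_linear, floor=1e-6):
    """Convert a linear backscattering coefficient to decibels.

    `floor` is an illustrative assumption: it clamps zero or negative
    values (e.g. no-data pixels) so that the logarithm stays defined.
    """
    return 10.0 * math.log10(max(sigma0_linear, floor))


# Smooth open water returns less energy to the sensor than rough land,
# so it maps to lower dB values (the sample values below are made up).
samples = {"open water": 0.005, "cropland": 0.08, "urban": 0.5}
for name, value in samples.items():
    print(f"{name}: {linear_to_db(value):.1f} dB")
```

Working in dB compresses the large dynamic range of SAR backscatter, which tends to make dark water surfaces easier to separate from brighter land covers.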

SAR texture features can be extremely useful for image classification, especially in complex areas [22,36]. When the spatial information inherent in SAR data is used effectively, new texture images can be derived that contribute to higher classification accuracy. The GLCM is one of the most widely used methods for capturing such spatial patterns and surface heterogeneity. Each element of the GLCM is calculated as follows [20,37]:

where P (i, j, d, θ) is the probability that the positional direction is θ and the distance is d for two pixels with gray levels of i and j.
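
As a hedged illustration of this definition, the sketch below builds a normalized GLCM in plain Python: it counts pixel pairs with gray levels i and j that are separated by a given offset (which encodes d and θ) and divides each count by the total number of pairs, so that p(i, j) = count(i, j) / Σ count. It then computes four of the texture measures used in this study. The function names, the symmetric counting, and the specific formulas (common Haralick-style definitions) are assumptions made for illustration and may differ from the SNAP implementation.

```python
import math


def glcm(window, levels, dx=1, dy=0, symmetric=True):
    """Normalized gray-level co-occurrence matrix for one pixel offset.

    window: 2D list of integer gray levels in the range 0..levels-1.
    (dx, dy): offset between paired pixels; (1, 0) means d = 1, theta = 0.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(window), len(window[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r][c], window[r2][c2]
                counts[i][j] += 1
                if symmetric:  # also count the pair in reverse order
                    counts[j][i] += 1
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("window too small for the requested offset")
    return [[value / total for value in row] for row in counts]


def texture_features(p):
    """A few common texture measures from a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "asm": sum(v * v for _, _, v in cells),
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
    }


# Small 4 x 4 test image with four gray levels (values are illustrative).
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = texture_features(glcm(IMAGE, levels=4))
print({name: round(value, 3) for name, value in features.items()})
```

In a moving-window setting such as the 9 × 9 window used in this study, the same computation would be repeated for the window centered on each pixel, after quantizing the backscatter values to a fixed number of gray levels.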

In this study, GLCM textural features—Angular Second Moment (ASM), Contrast, Dissimilarity, Energy, Entropy, GLCM Correlation, GLCM Mean, GLCM Variance, Homogeneity, and MAX—were calculated for both Sentinel-1 and Radarsat-2 SAR data from the post-event images of 18 July 2021. These features were calculated using a 9 × 9 pixel window at the relevant spatial resolutions. The use of a 9 × 9 pixel moving window to extract GLCM-based SAR texture features is based on both visual assessments and its prevalence in previous SAR-based flood mapping studies. This size offers a balanced compromise between preserving spatial detail and representing surface texture, minimizing the risk of over-smoothing or under-capturing textural diversity [38,39]. After this process, Principal Component Analysis (PCA) was applied to reduce the dimensionality of the GLCM feature set and to perform classification with the best texture information that could be obtained [40]. PCA is a statistical dimensionality reduction method that creates new components representing the highest variance by eliminating the correlation in multidimensional data [41,42]. In this study, the first principal component (textPC1) was used to highlight the most significant texture variations.
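
The reduction of the ten GLCM layers to a single textPC1 band can be sketched as follows. This is an illustrative plain-Python version that standardizes the features and finds the first principal component by power iteration; the function name, the standardization step, and the sample values (three features instead of ten) are assumptions, since the exact PCA settings are not stated here.

```python
import math


def first_principal_component(rows, n_iter=100):
    """Return (loadings, scores) of the first principal component.

    rows: list of per-pixel feature vectors (e.g. GLCM measures).
    """
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    # Sample standard deviation; constant features fall back to 1.0.
    stds = [
        math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1)) or 1.0
        for j in range(k)
    ]
    # Standardize so that features with large ranges do not dominate.
    z = [[(r[j] - means[j]) / stds[j] for j in range(k)] for r in rows]
    cov = [
        [sum(zr[a] * zr[b] for zr in z) / (n - 1) for b in range(k)]
        for a in range(k)
    ]
    # Power iteration: repeated multiplication by the covariance matrix
    # converges to the eigenvector with the largest eigenvalue.
    start = [1.0 / (j + 1) for j in range(k)]
    length = math.sqrt(sum(x * x for x in start))
    v = [x / length for x in start]
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(k)) for a in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    scores = [sum(zr[j] * v[j] for j in range(k)) for zr in z]
    return v, scores


# Made-up texture values for six pixels and three correlated features.
ROWS = [
    [0.10, 1.2, 0.90],
    [0.20, 1.9, 0.80],
    [0.35, 3.1, 0.70],
    [0.50, 4.0, 0.45],
    [0.65, 5.2, 0.40],
    [0.80, 6.1, 0.15],
]
loadings, text_pc1 = first_principal_component(ROWS)
print([round(x, 3) for x in loadings])
print([round(x, 3) for x in text_pc1])
```

Because GLCM measures are often strongly correlated, the first component usually carries most of their joint variance, which is the motivation for keeping only textPC1 as the texture input.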

Optical imagery from the Landsat-8 Operational Land Imager (OLI) was used to derive NDWI (Equation (3)) and HSV color space transformations [12,43]. The Landsat-8 satellite provides imagery with 16-day temporal resolution and 12-bit radiometric resolution. The sensor records geometrically corrected images in nine spectral bands across the visible, NIR, and SWIR regions at 30 m spatial resolution, with the panchromatic band at 15 m resolution, and the data are available to users free of charge (https://earthexplorer.usgs.gov/: accessed on 2 June 2025). As with the SAR images, the cloud-free Landsat-8 OLI image was acquired on 18 July 2021. Landsat-8 Collection 2 Tier 1 top-of-atmosphere (TOA) reflectance images were used on the Google Earth Engine (GEE) platform, where NDWI and HSV were also computed. To ensure temporal consistency, all satellite imagery was acquired on 18 July 2021 (Table 1); to ensure spatial consistency, every image was subsequently resampled to the 30 m resolution native to Landsat-8.

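$$\mathrm{NDWI} = \frac{\mathrm{Green} - \mathrm{NIR}}{\mathrm{Green} + \mathrm{NIR}} \tag{3}$$

where Green and NIR refer to the reflectance in the green and NIR bands, respectively.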
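
The two optical features can be sketched per pixel as follows. The example uses Python's standard `colorsys` module as a stand-in for the HSV transformation applied on GEE; the function names and reflectance values are invented for illustration, and the zero-denominator guard is an assumption.

```python
import colorsys


def ndwi(green, nir):
    """NDWI = (Green - NIR) / (Green + NIR) for one pixel of reflectance."""
    total = green + nir
    if total == 0:  # assumed guard for empty or no-data pixels
        return 0.0
    return (green - nir) / total


def hsv_from_rgb(red, green, blue):
    """Hue, saturation, value (each in 0..1) from RGB values in 0..1."""
    return colorsys.rgb_to_hsv(red, green, blue)


# Invented TOA reflectance values (red, green, blue, nir) for two pixels.
PIXELS = {
    "water": (0.04, 0.08, 0.10, 0.02),
    "cropland": (0.08, 0.10, 0.06, 0.40),
}
for name, (red, green, blue, nir) in PIXELS.items():
    hue, sat, val = hsv_from_rgb(red, green, blue)
    print(name, round(ndwi(green, nir), 2),
          round(hue, 2), round(sat, 2), round(val, 2))
```

Water absorbs strongly in the NIR, so open water tends toward positive NDWI values, while vegetated surfaces with high NIR reflectance tend toward negative values.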

Table 1.

Overview of multi-sensor satellite datasets.

The integration of SAR backscattering coefficients (σ0 in VV, VH, and HH polarizations), Landsat-derived NDWI, the HSV color space transformation of RGB imagery, and textural components from the GLCM provided a robust multi-sensor approach to assess flood-related surface changes. Within the scope of this study, 25 scenarios with different feature sets were created from 8 variables derived from the three satellite platforms (Table 2). This integration facilitated better classification and analysis of land cover and surface water, i.e., flood effects in the study area.

Table 2.

Feature stack scenarios. S1, L8, and R2 refer to Sentinel-1, Landsat-8, and Radarsat-2, respectively.

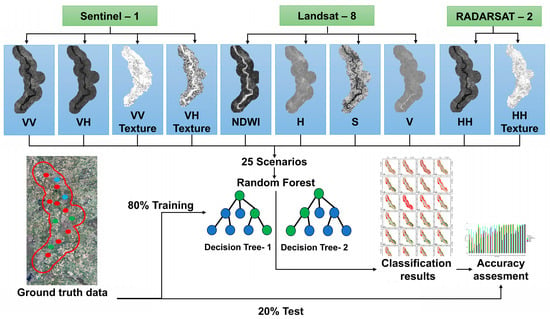

The overall workflow of this study is illustrated in Figure 2. Multi-sensor remote sensing data from Sentinel-1 (VV, VH backscatter and VV, VH texture layers), Landsat-8 (NDWI and HSV color space components), and Radarsat-2 (HH backscatter and texture) were processed and used to construct 25 different feature stack scenarios. Ground truth data were collected from high-resolution aerial imagery obtained from GeoTiles (https://geotiles.citg.tudelft.nl/: accessed on 1 January 2025), and used to train and test an RF classifier, with 80% of labeled samples allocated for training and the remaining 20% reserved for testing. Each scenario was classified independently using the RF model, and the results were compiled into classified flood maps. Accuracy assessment was conducted using standard metrics, including Overall Accuracy (OA), Kappa coefficient, and class-specific F1 scores, to evaluate the performance of each scenario in detecting flooded areas and other land cover types.

Figure 2.

Workflow of the methodology. Red, green, and blue dots are only used as an illustration of training samples for different land cover types.
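
The three accuracy measures named in the workflow can be computed from a confusion matrix as sketched below. The matrix values are invented for illustration and do not correspond to any scenario in this study; rows are assumed to hold reference labels and columns predicted labels, and the function names are hypothetical.

```python
def overall_accuracy(cm):
    """Share of correctly classified samples (diagonal over total)."""
    total = sum(map(sum, cm))
    return sum(cm[k][k] for k in range(len(cm))) / total


def kappa(cm):
    """Cohen's Kappa: agreement corrected for chance agreement."""
    n = len(cm)
    total = sum(map(sum, cm))
    observed = sum(cm[k][k] for k in range(n)) / total
    row_sums = [sum(cm[k]) for k in range(n)]
    col_sums = [sum(cm[r][k] for r in range(n)) for k in range(n)]
    expected = sum(row_sums[k] * col_sums[k] for k in range(n)) / total ** 2
    return (observed - expected) / (1 - expected)


def f1_scores(cm):
    """Per-class F1 = 2TP / (2TP + FP + FN)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # reference k, missed
        fp = sum(cm[r][k] for r in range(n)) - tp   # predicted k, wrong
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


# Invented 3-class confusion matrix (rows: reference, columns: predicted).
CM = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]
print(round(overall_accuracy(CM), 3), round(kappa(CM), 3))
print([round(score, 3) for score in f1_scores(CM)])
```

OA and Kappa summarize the whole map, whereas the per-class F1 score isolates how well a single class, such as the flooded area, is detected; this is why a scenario can score well overall yet poorly on the flood class.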

2.3. Random Forest Classification

An RF classifier, a supervised machine learning method commonly used in pixel-based classification studies, was used in this study. RF is an ensemble algorithm that combines the predictions of multiple decision trees. RF classifiers offer several advantages for flood mapping, notably their ability to handle multiple data sources [44,45]. They can integrate optical, SAR, terrain, and hydro-climatic variables without distributional assumptions and have achieved overall accuracies above 90% [46,47]. Furthermore, their ensemble structure provides better resistance to noise and overfitting, which reduces the need for extensive parameter tuning compared to other models and enables uncertainty reduction methods [47]. Multi-source assessments demonstrate that RF performs better than traditional and other machine learning models, and SAR-based studies confirm its high cross-validation reliability in reducing false positives [46,48,49]. These strengths enable real-time flood mapping at various scales across regions through the Google Earth Engine (GEE) platform.

In this study, the GEE platform was used to classify the satellite images. Grid search was used to select the RF hyperparameters: for each scenario, classification was tested with 36 hyperparameter combinations (4 × 3 × 3) drawn from numberOfTrees = [50, 100, 150, 200], minLeafPopulation = [1, 5, 10], and bagFraction = [0.5, 0.7, 1.0]. Five classes were considered in the classification: Cropland, Water (permanent water bodies), Flooded Area, Trees, and Urban. The training and test pixels of the Urban, Trees, and Water classes were obtained from a 25 cm resolution aerial photograph (https://geotiles.citg.tudelft.nl/: accessed on 1 January 2025). Because croplands were affected by the flooding, the pixels for the Cropland and Flooded Area classes were selected from the satellite images used in the classification. The number of pixels used for training and testing in each class in Zutphen (Flooded area-A) is given in Table 3. Overall accuracy (OA), the Kappa coefficient, and the F1 score were calculated to compare the performance of each scenario and the RF algorithm.

Table 3.

Pixel counts for each class.
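
The hyperparameter grid described in this section can be enumerated as in the following sketch. The parameter names mirror those listed above, which also correspond to arguments of Earth Engine's `ee.Classifier.smileRandomForest`; the helper functions and `toy_score` are hypothetical stand-ins for training and validating a classifier and are not the procedure used in this study.

```python
from itertools import product

PARAM_GRID = {
    "numberOfTrees": [50, 100, 150, 200],
    "minLeafPopulation": [1, 5, 10],
    "bagFraction": [0.5, 0.7, 1.0],
}


def iter_param_sets(grid):
    """Yield every combination of the grid as a dict (4 x 3 x 3 = 36)."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


def grid_search(grid, score_fn):
    """Return the parameter set with the highest score and that score."""
    best_params, best_score = None, float("-inf")
    for params in iter_param_sets(grid):
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(params):
    """Hypothetical placeholder for a validation accuracy.

    In practice this would train an RF model with `params` on the
    training pixels and return, for example, the OA on held-out pixels.
    """
    return (
        -abs(params["numberOfTrees"] - 150) / 100
        - abs(params["bagFraction"] - 0.7)
        - params["minLeafPopulation"] / 100
    )


print(len(list(iter_param_sets(PARAM_GRID))))
print(grid_search(PARAM_GRID, toy_score))
```

Repeating such a search for each of the 25 scenarios keeps the comparison between feature stacks fair, because every scenario is evaluated with its own best-performing settings.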

3. Results

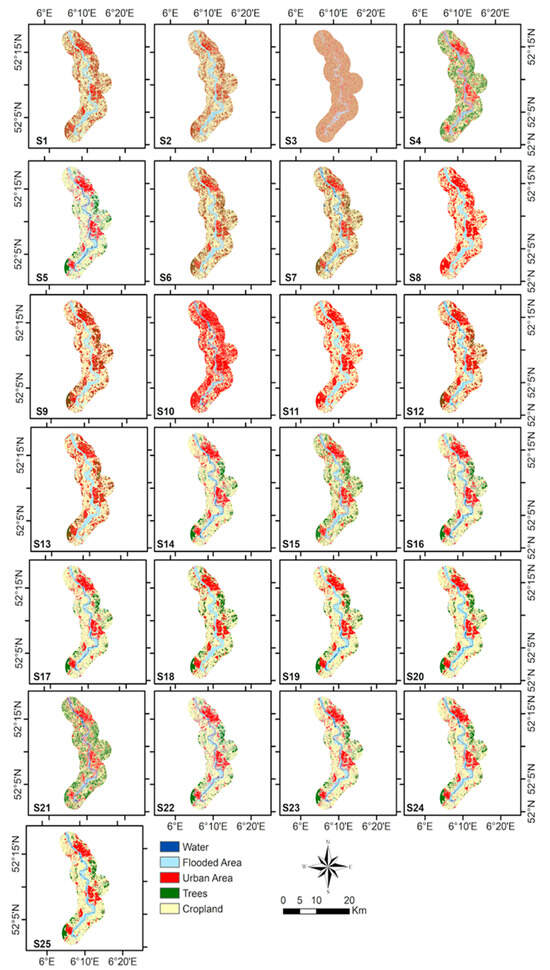

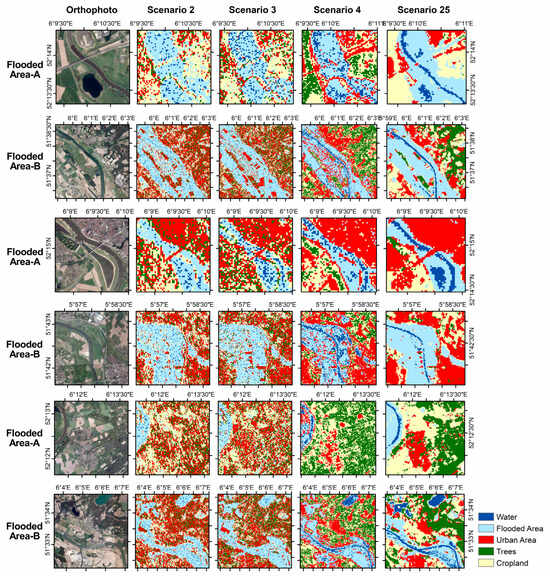

The classified maps in Figure 3 provide a visual comparison of how different feature combinations affect flood mapping performance for the Zutphen region (Flooded Area-A) across all 25 scenarios. The first three single-feature scenarios (S1–S3), based on Sentinel-1 or Radarsat-2 backscatter alone, produced noisy results with inconsistent classification of flooded areas throughout urban and cropland zones. The Radarsat-2 HH backscatter scenario (S3) produced the worst results, with a complete loss of flood detection capability. The optical-only scenarios (S4–S5) showed better spatial patterns: NDWI (S4) improved flood extent detection but confused flooded cropland with water bodies, whereas HSV (S5) captured urban areas better but represented flooding near infrastructure poorly. The addition of SAR textures in S6–S13 enhanced spatial coherence and lowered classification noise. Notably, S10 performed poorly because the Radarsat-2 HH texture features failed to separate land cover types correctly in complex environments, particularly in croplands and vegetated zones. In contrast, S12, which used all SAR backscatter and texture features, presented the most refined and structured flood map among the SAR-only scenarios, with precise alignment of flood boundaries along the riverbanks. The addition of optical indices in S14–S20 further improved map quality. In particular, the combinations of SAR and optical data in S17, S18, and S20 achieved accurate and balanced mapping results. Among the Radarsat-2 and optical combinations (S21–S23), S23 gave the most stable classification outcome, with clear flood delineation. Finally, S25, which integrated all SAR and optical features along with texture metrics, delivered the highest visual quality. This visual performance matched the quantitative results: full multi-sensor fusion with texture information produced the most reliable flood mapping results in complex urban–rural landscapes.

Figure 3.

Classified images of each scenario for the Zutphen region (Flooded area-A).

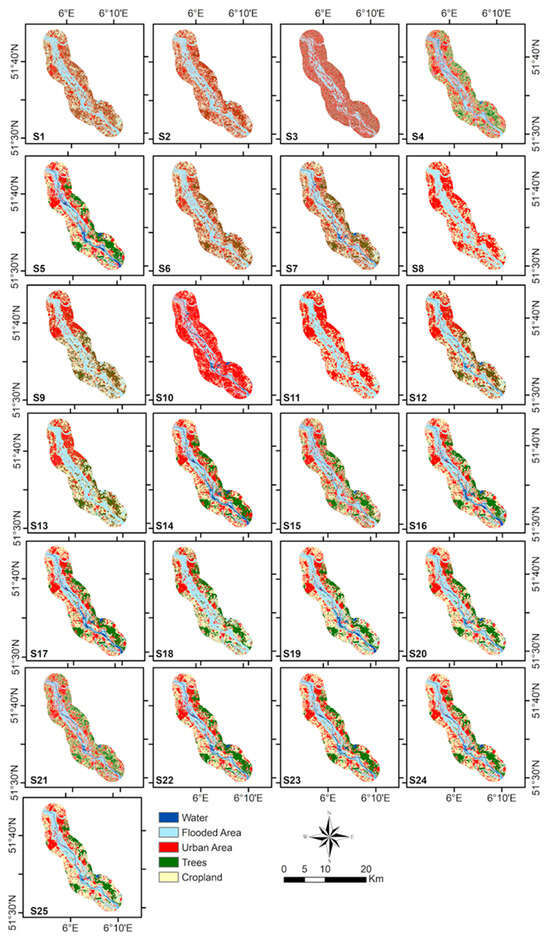

The 25 scenarios applied to the Heijen region (Flooded Area-B) are presented in Figure 4 to visually evaluate classification quality in a primarily agricultural landscape. The three single-feature SAR scenarios (S1–S3) showed major misclassification problems. The Sentinel-1 backscatter inputs (S1, S2) generated maps with numerous false flood detections throughout cropland and vegetated areas, and Radarsat-2 HH backscatter (S3) again failed to detect flood zones, showing an excessively homogeneous cropland class. The optical-only scenarios produced better visual results. NDWI (S4) yielded accurate flood boundary detection near river channels but incorrectly labeled some agricultural land as water bodies. HSV (S5) provided better identification of urban and tree-covered regions yet failed to detect flooding, particularly in vegetated areas. The visual improvement from the SAR texture-based scenarios (S6–S13) was smaller than that observed in Zutphen. The poor performance of S10 carried over from Area-A: it incorrectly expanded the urban class while hiding flooded cropland. On the other hand, the addition of texture in S11 and S12 led to better detection of flooded zones, although some noise remained. The introduction of optical data in scenarios S14–S20 contributed to much better flood delineation. For instance, the maps produced by S18 and S20 show balanced visual results because they correctly depict hydrological patterns and minimize misclassifications. Among the Radarsat-2 and optical combinations (S21–S23), S23 produced the best results by precisely mapping flood areas and clearly separating tree-covered areas. Finally, the full-feature scenario S25 delivered the most accurate visual classification results. The visual coherence and flood delineation quality of S25 were consistent with its leading performance metrics, which demonstrates that multi-sensor, texture-augmented approaches also work well in agricultural landscapes.

Figure 4.

Classified images of each scenario for the Heijen region (Flooded area-B).

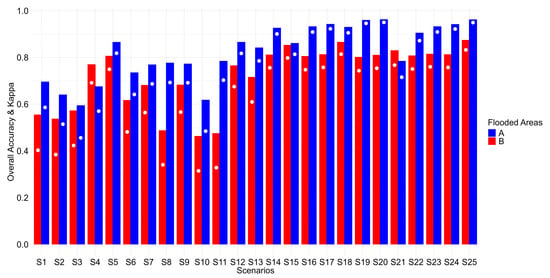

For the general classification accuracy assessment, the effect of the band combinations obtained from different sensors and indices was analysed in the two areas and evaluated with OA and Kappa (Figure 5, Table 4). Among the single-feature scenarios (S1–S5), S5 (Landsat HSV) gave the best result, with an OA of 86.6% in Zutphen and 80.7% in Heijen. Among the radar-only combinations (S6–S13), S12 was the best scenario for both areas (Figure 5, Table 4). In S14, where Landsat HSV and Landsat NDWI were used together, the OA in Flooded Area-A increased from 67.6% to 92.7% compared with the NDWI-only scenario (S4), whereas in Flooded Area-B it increased from 77.1% to 81.2%. For the Sentinel-1 and optical combinations (S15–S20), OA ranged from 86.2% to 96.4% in Flooded Area-A and from 80.2% to 86.7% in Flooded Area-B. Among the scenarios combining Radarsat-2 and Landsat-8 (S21–S23), S23 achieved the highest accuracy for Flooded Area-A (OA of 93.3%), whereas S21 achieved the highest OA for Flooded Area-B (83.1%). In Flooded Area-A, the use of Landsat-8 HSV increased the accuracy by about 2%. Of the two scenarios that combine all data sources (S24 and S25), S25 provided higher accuracies. The presence of texture information in S25 improved the overall accuracy in Zutphen and Heijen by 2% and 6%, respectively. S25 Landsat HSV was better than Landsat NDWI for both areas (Figure 5, Table 4). The best band combinations, S20 and S25, provided OA values of 96.4% and 96.3% and Kappa values of 0.950 and 0.949, respectively, for Flooded Area-A (Zutphen). For Flooded Area-B (Heijen), S25 presented the best results, with 87.5% OA and 0.833 Kappa.

Figure 5.

Overall accuracy and Kappa values of the classified images for each scenario. White dots represent kappa values.

Table 4.

Overall accuracy and Kappa values of the classified images for each scenario. The colors in the cells range from red to green, indicating low to high accuracy, respectively.

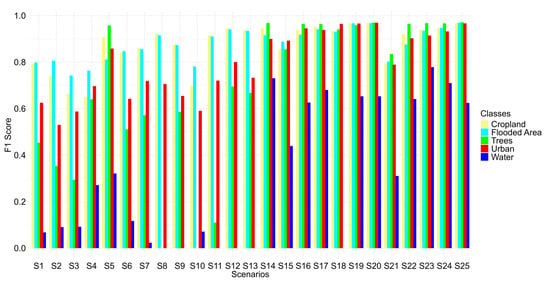

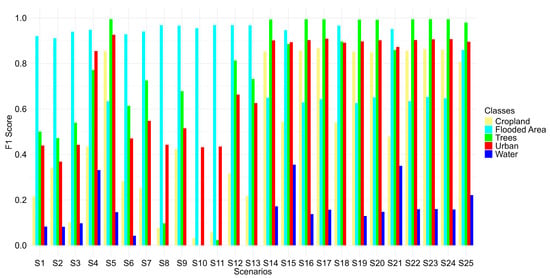

F1 scores were calculated to assess class-level accuracy in both areas (Figure 6, Figure 7 and Table 5, Table 6). In Area-A (Zutphen), across all scenarios, the F1 score was higher than 0.742 for the Flooded Area class, higher than 0.529 for the Urban class, and higher than 0.651 for the Cropland class, while the Trees class exceeded 0.85 in S5 and S14–S25 (Figure 6, Table 5). Among the single-feature scenarios (S1–S5), optical data provided satisfactory accuracies for the flooded area class at both test sites, with HSV performing best for Area-A and NDWI for Area-B.

Figure 6.

F1 scores of each scenario for the Zutphen region (Flooded area-A).

Figure 7.

F1 scores of each scenario for the Heijen region (Flooded area-B).

Table 5.

F1 scores of each scenario for the Zutphen region (Flooded area-A).

Table 6.

F1 scores of each scenario for the Heijen region (Flooded area-B).

When data combinations are compared, both the SAR data among themselves and the SAR and optical combinations increased the accuracy on a class basis. For Area A, the highest F1 values for flooded areas and trees were obtained with S25 as 0.969 and 0.971, respectively. For urban and cropland, F1 values were found as 0.969 and 0.968 in the S20 combination, respectively. For these classes, the best values after S20 were again obtained with S25. Only the water class was lower than the others, yet, with the F1 score of 0.778, the S23 combination provided the highest accuracy in this class.

In Area-B, the SAR-only scenarios S11 and S12 provided the best results for the flooded area class (both F1 = 0.969), even though their overall classification accuracies remained lower (Figure 7, Table 6). However, NDWI (S4) also yielded an F1 score of 0.949, which was very close to the best value. For the Urban class, Landsat HSV (S5) provided high accuracy. In the Trees class, S5, S14, S16, S17, S19, S20, and S22–S25 provided F1 values higher than 0.990. For the Cropland class, several scenarios, such as S14, S16, S17, S19, S20, and S22–S25, presented high accuracies. For the Water class, which represents permanent water bodies, only a few scenarios, such as S4, S15, and S21, achieved an F1 score above 0.330.

To visually assess flood classification performance under different multi-source data configurations, the classification outputs of Scenarios 2, 3, 4, and 25 were compared with high-resolution aerial orthophotos obtained from https://geotiles.citg.tudelft.nl/ (accessed on 1 January 2025) (Figure 8). The comparison includes detailed insets of flooded areas to show how multi-source data fusion enhances the detection of riverbanks, small ponds, and floodplain boundaries. Boundary accuracy and small water body detection improved as the number and variety of input features increased. The floodwater extents in Scenario 25 achieved high spatial fidelity because this scenario combined optical and SAR data with texture features, which helped distinguish permanent water bodies from temporarily flooded zones. The reduced number of mixed pixels at class boundaries showed how data fusion sharpened the classification. Overall, the multi-source approaches detected hydrological features that single-source methods usually overlook.

Figure 8. A visual comparison between sample scenarios in zoomed locations.

4. Discussion

This research evaluates a multi-sensor flood mapping approach that integrates Sentinel-1, Radarsat-2, and Landsat-8 data with RF classification in two different Dutch landscapes. The best feature combinations differ between the urban Zutphen (Flooded Area-A) and the agricultural Heijen (Flooded Area-B) test sites. Flood class detection is assessed through F1 scores, and overall classification performance through the OA and Kappa coefficients.

4.1. The Best Feature Combinations from Sentinel-1, Radarsat-2, and Landsat-8 for the Highest Accuracy in Flood Extent Mapping

The multi-sensor fusion approach (S25) proved to be the most balanced solution because it combined strong flood detection (F1 = 0.969 in Zutphen, 0.859 in Heijen) with high general classification performance (96.3% OA and 0.949 Kappa in Zutphen; 87.5% OA and 0.833 Kappa in Heijen). In some scenarios, the overall and flood-specific results differed markedly. For example, S16 (Sentinel-1 VV/VH + Landsat HSV) achieved excellent overall metrics (93.3% OA and 0.909 Kappa) in Zutphen but a relatively lower flood F1 score (0.918), which indicates that the general classification was strong while some flood pixels were misclassified as urban features because of spectral similarities. In Heijen, S14 (Landsat NDWI + HSV) reached 81.2% OA and 0.756 Kappa but had the poorest flood F1 score (0.649) among the multi-feature scenarios. The spectral indices therefore performed well for general land cover classification yet failed to detect floods in agricultural areas, possibly because of mixed pixel effects in croplands.
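The overall metrics quoted above can be reproduced from a confusion matrix. The following sketch computes OA and Cohen's Kappa under their standard definitions; the function name and the three-class counts are hypothetical and serve only to illustrate the calculation.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (OA, Kappa) for a square confusion matrix of counts."""
    size = len(confusion)
    n = sum(sum(row) for row in confusion)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[k][k] for k in range(size)) / n
    # Chance agreement: product of row and column marginals, summed over classes.
    expected = sum(
        sum(confusion[k]) * sum(row[k] for row in confusion)
        for k in range(size)
    ) / (n * n)
    if expected == 1:
        return observed, 0.0  # degenerate case: all samples in a single class
    return observed, (observed - expected) / (1 - expected)


# Hypothetical counts for illustration only (NOT the study's validation data).
confusion = [
    [50, 10, 0],
    [5, 40, 5],
    [0, 10, 80],
]
oa, kappa = overall_accuracy_and_kappa(confusion)
```

Because OA and Kappa pool all classes, a class with few validation samples changes them only slightly. This is one way a scenario can show high overall metrics together with a weaker flood F1 score.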

The urban environment of Zutphen achieved some of its best results through the combination of SAR texture features with Landsat’s HSV transformation (S19: F1 = 0.967, OA = 96.1%, Kappa = 0.946), where HSV proved efficient for differentiating floodwaters from urban features. A tradeoff became apparent in S5 (Landsat HSV alone), which achieved 86.6% OA and 0.818 Kappa in Zutphen while its flood F1 score reached only 0.811. This shows that optical data can work well for general classification but needs SAR enhancement to reach its best performance in flood mapping. In the agricultural area of Heijen, multiple scenarios achieved high results for both flood identification and total classification accuracy. S15 (Sentinel-1 VV/VH + Landsat NDWI) combined excellent flood detection (F1 = 0.947) with strong overall metrics (85.4% OA/0.798 Kappa), and S18 (Sentinel-1 VV/VH + textures + Landsat NDWI) achieved even better results (F1 = 0.967, 86.7% OA/0.814 Kappa). The flood detection capability of Radarsat-2’s HH polarization with NDWI (Scenario S21: F1 = 0.951, OA = 83.1%, Kappa = 0.767) is comparable to that of S15 and S18, but its overall classification performance is slightly lower. The high F1 score of Radarsat-2’s HH polarization for agricultural flood detection indicates its effectiveness; nevertheless, it provides limited discrimination between different land cover classes [50]. The results indicate that the best sensor combinations depend on whether the goal is to achieve maximum flood detection precision or to maintain balanced performance across all land cover types.

The combination of NDWI with SAR backscatter and textures produced the best results because NDWI improved water detection while SAR backscatter and textures preserved good discrimination of other land cover classes [12,51]. In contrast, NDWI alone (S4) in Heijen yielded only 77.1% OA and a 0.691 Kappa value but an outstanding flood F1 score of 0.949, which indicates that NDWI performs well for flood feature detection but struggles to identify other land cover types in complex agricultural areas [13,52]. These differences demonstrate why it is crucial to choose features according to the main goal of the application, whether complete land cover classification or exact flood boundary mapping.
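The behavior of NDWI can be illustrated with a minimal per-pixel sketch. It assumes the green/near-infrared formulation of McFeeters [12], which for Landsat-8 OLI corresponds to bands 3 and 5; the function name and the reflectance values are hypothetical.

```python
def ndwi(green, nir):
    """NDWI = (green - nir) / (green + nir); returns None when undefined."""
    total = green + nir
    if total == 0:
        return None  # no signal in either band, index is undefined
    return (green - nir) / total


# Hypothetical surface reflectances for illustration only.
water_like = ndwi(green=0.08, nir=0.02)        # water absorbs NIR strongly
vegetation_like = ndwi(green=0.06, nir=0.30)   # vegetation reflects NIR strongly
```

Open water tends toward positive values and vegetated land toward negative values, which is consistent with the index separating flooded pixels well while carrying little information for telling the remaining land cover classes apart.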

4.2. The Contribution of Commercial Radarsat-2 Data to Flood Mapping Performance

The analysis of Radarsat-2’s contribution revealed real but limited benefits that do not justify its routine inclusion. The data showed site-specific advantages; however, the overall impact was not substantial enough to recommend its use across all scenarios. It is also important to note that the findings on Radarsat-2 in this study are based on pixel values resampled to 30 m, not on the native resolution. The urban Zutphen area showed only small improvements in flood detection through Radarsat-2’s HH polarization (S7: F1 = 0.856 vs. S6: F1 = 0.847) with no significant impact on overall classification metrics (77.0% OA/0.687 Kappa vs. 73.6% OA/0.642 Kappa, respectively). On the other hand, the agricultural environment of Heijen showed more noticeable benefits, especially for flooded vegetation detection (S7: F1 = 0.955 vs. S6: F1 = 0.928), although these came with only modest gains in overall accuracy (68.2% vs. 61.7% OA, respectively).

The evaluation of these enhancements requires consideration of three factors: (1) the commercial price and restricted temporal availability of Radarsat-2 data compared to the freely available Sentinel-1 data; (2) the small size of the performance gains, which could also be obtained through feature combinations that exclude Radarsat-2 (e.g., S18 in Heijen reached F1 = 0.967 without Radarsat-2); and (3) the limited effect on general land cover classification when focusing on flood detection tasks. This research indicates that Radarsat-2 delivers additional value in particular agricultural flood mapping applications, yet it is not necessary for achieving high-quality results. Operational systems could select Sentinel-1 and optical data combinations as their primary choice and use Radarsat-2 only in specific cases where its HH polarization can resolve particular ambiguities in vegetated flood detection [53].

4.3. The Contribution of SAR-Derived Texture Metric (GLCM-Based textPC1) for the Delineation of Flooded Areas

The SAR-derived texture metric (GLCM-based textPC1) enhanced flood delineation in both urban and agricultural landscapes when combined with various SAR backscatter features, but the degree of enhancement depended on polarization and land cover type. In urban Zutphen, adding texture features to Sentinel-1 VV backscatter (S8) raised the flood F1 score to 0.916 from 0.798 for VV backscatter alone (S1), a relative increase of 14.8%, and improved overall accuracy from 69.6% to 77.7%. Adding textures to VH polarization (S9) produced a smaller improvement (S9: F1 = 0.873 vs. S2: F1 = 0.806), indicating VV’s superior urban flood discrimination ability [54]. In the agricultural landscape of Heijen, the clearest texture benefit appeared for Radarsat-2’s HH polarization (S10: F1 = 0.955 vs. S3: F1 = 0.940), a slight improvement in flood detection that came with substantially lower overall accuracy (46.4% vs. 57.3%). The results show that texture features enhance flood detection across all tested SAR bands and polarizations, but their effectiveness depends on sensor settings and environmental conditions [55]. The flood-specific metrics (F1 scores) improved consistently despite variable overall classification accuracy, since texture features excel at water detection rather than land cover separation, which makes them essential for operational flood mapping [56].
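The size of these texture gains can be checked with simple arithmetic. The sketch below computes the relative change in flood F1 score from the values reported in this section; the helper name `relative_gain` is an illustrative assumption.

```python
def relative_gain(baseline, improved):
    """Relative change of `improved` with respect to `baseline` (as a fraction)."""
    return (improved - baseline) / baseline


# Flood F1 scores reported in this section (without texture -> with texture).
vv_gain = relative_gain(0.798, 0.916)   # Zutphen, Sentinel-1 VV: S1 -> S8
vh_gain = relative_gain(0.806, 0.873)   # Zutphen, Sentinel-1 VH: S2 -> S9
hh_gain = relative_gain(0.940, 0.955)   # Heijen, Radarsat-2 HH: S3 -> S10

for name, gain in [("VV", vv_gain), ("VH", vh_gain), ("HH", hh_gain)]:
    print(f"{name}: {gain * 100:.1f}% relative increase in flood F1")
```

Expressed this way, the VV gain is roughly 14.8%, the VH gain about 8.3%, and the HH gain in Heijen about 1.6%, which matches the ordering described in the text.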

4.4. The Effectiveness of the RF Classifier in Handling Multi-Source Input Features and Maintaining Robustness Across Diverse Flood Scenarios and Terrain Conditions

The RF classifier achieved strong flood detection results at both test sites, with F1 scores for the flooded class exceeding 0.90 in most multi-sensor scenarios. In Zutphen, the classifier combined outstanding flood detection (F1 = 0.969 in S25) with high overall classification accuracy (96.3% OA). In Heijen, the classifier likewise yielded excellent flood detection (best F1 = 0.969 in S11 and S12), although the corresponding overall accuracy reached only 76.6%, which indicates that flood pixels were classified precisely even where the other classes were not.

4.5. Hyperparameter Optimization: Importance of the Tuning Process

Our RF classifier underwent systematic hyperparameter optimization, which provided valuable information about model performance across different sensor combinations and landscape types. We evaluated 36 parameter configurations by testing bagging fractions (0.5, 0.7, 1.0), minimum leaf samples (1, 5, 10), and tree counts (50, 100, 150, 200). The optimal settings varied substantially between scenarios and test sites (Table 7). These differences arise because model behavior depends on the interplay between input features and landscape characteristics.
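The 36 configurations follow from the full combination of the three parameter lists (3 × 3 × 4). A minimal sketch of such a grid is given below; the keys `bag`, `minLeafs`, and `trees` follow the parameter names used in this section, while `build_grid`, `best_configuration`, and `toy_score` are hypothetical. In particular, `toy_score` is only a placeholder for training the RF classifier and returning a validation metric.

```python
from itertools import product

BAG_FRACTIONS = (0.5, 0.7, 1.0)
MIN_LEAFS = (1, 5, 10)
TREE_COUNTS = (50, 100, 150, 200)


def build_grid():
    """Enumerate every combination of the three hyperparameters."""
    return [
        {"bag": bag, "minLeafs": leafs, "trees": trees}
        for bag, leafs, trees in product(BAG_FRACTIONS, MIN_LEAFS, TREE_COUNTS)
    ]


def best_configuration(grid, score):
    """Return the configuration with the highest value of `score(config)`."""
    return max(grid, key=score)


def toy_score(config):
    # Placeholder only: a real run would train the classifier with `config`
    # and return a validation metric such as OA or the flood F1 score.
    return -abs(config["trees"] - 150) - abs(config["minLeafs"] - 5) + config["bag"]


grid = build_grid()
best = best_configuration(grid, toy_score)
```

Running the search separately for each scenario and test site, as described above, would yield one best configuration per scenario and site.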

Table 7. Variables used for hyperparameter optimization.

Our analysis revealed several distinct patterns. The most successful configurations in urban Zutphen used moderate parameter values: minLeafs = 5 appeared in 40% of the top scenarios and trees = 150 in 35% (Table 8). This indicates that urban flood mapping benefits from slightly constrained model complexity, which allows diverse features to be processed without excessive overfitting. The agricultural Heijen area favored aggressive splitting (minLeafs = 1 in 45% of scenarios) and smaller ensembles (trees = 50 in 40% of cases), suggesting that simpler models work well for rural flooding patterns. The best-performing scenarios in both areas selected similar parameters (bag = 0.7–1.0, minLeafs = 1–5, and trees = 150–200), although agricultural areas reached good performance with fewer trees, which indicates that less computationally intensive models could suffice for simpler landscapes.
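Percentages such as those above can be obtained by tallying how often each parameter value appears among the best configurations. The sketch below shows one way to do this; the function name and the five example configurations are hypothetical and do not reproduce Table 8.

```python
from collections import Counter


def parameter_shares(best_configs, name):
    """Percentage of best configurations that use each value of parameter `name`."""
    counts = Counter(config[name] for config in best_configs)
    total = len(best_configs)
    return {value: 100.0 * count / total for value, count in counts.items()}


# Hypothetical best configurations for five scenarios (NOT the Table 8 values).
best_configs = [
    {"bag": 0.7, "minLeafs": 5, "trees": 150},
    {"bag": 1.0, "minLeafs": 5, "trees": 200},
    {"bag": 0.7, "minLeafs": 1, "trees": 150},
    {"bag": 0.5, "minLeafs": 10, "trees": 100},
    {"bag": 1.0, "minLeafs": 1, "trees": 50},
]
shares = parameter_shares(best_configs, "minLeafs")
```

With these made-up entries, minLeafs = 5 and minLeafs = 1 each account for 40% of the configurations and minLeafs = 10 for 20%.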

Table 8. Parameters that provided the best results in scenarios.

The analysis of sensor inputs in relation to optimal parameters delivered further findings. Scenarios that used optical indices (NDWI/HSV) needed more conservative parameter settings in urban areas because these features share similar spectral characteristics. In agricultural areas, the best results came from SAR-only scenarios with texture features, which used aggressive splitting to detect backscatter variations in flooded vegetation. The optimal settings for texture-enhanced scenarios depended on landscape type: urban applications required 100 trees with a minimum leaf size of 5, while agricultural applications needed 200 trees with a minimum leaf size of 1. The optically heavy scenarios showed a consistent preference for minLeafs = 5 in urban areas, yet displayed greater variability in agricultural settings.

These findings have operational value for flood mapping systems. Parameter strategies need to be adapted to the landscape: urban applications require constrained tree growth and larger ensembles, whereas agricultural monitoring may need only simpler models. The large differences in optimal parameters between scenarios demonstrate that a change in sensor combination requires its own parameter adjustment. RF optimization therefore depends strongly on both the input data features and the characteristics of the target landscape. The results confirm the necessity of systematic hyperparameter testing for multi-sensor flood mapping systems and point to the potential of automated optimization methods that adjust parameters through real-time feature and landscape analysis. Future research should investigate adaptive methods that streamline operational implementation without compromising classification accuracy.

5. Conclusions

This study showed that combining Sentinel-1, Radarsat-2, and Landsat-8 multi-sensor satellite data with RF classification produces accurate and reliable flood extent maps in diverse environments. We evaluated 25 different feature scenarios to analyze the impact of radar backscattering coefficients, optical indices (NDWI and HSV), and SAR-derived texture metrics (GLCM-based textPC1) on flood extent detection. The study examined two different flood-prone areas in the Netherlands: Zutphen’s urban district and Heijen’s agricultural region.

The findings highlighted that S25, which combined all SAR and optical features with texture components, produced the most balanced overall classification accuracy and flood-specific detection outcomes. S20 and S25 achieved the highest OAs (96.4% and 96.3%, respectively) and Kappa coefficients (0.950 and 0.949) along with the highest F1 scores (0.968 and 0.969) for flooded areas within the complex urban-rural interfaces of Zutphen. In Heijen, the overall classification accuracy was lower than in Zutphen, at 87.5% with a Kappa coefficient of 0.833 for S25, but this was still the best result among all scenarios for that site. The maximum F1 score of 0.969 for flooded regions in Heijen was obtained through the addition of texture features in scenarios S11 and S12. An important observation is that high-precision flood detection becomes possible in agricultural areas through the reduction of spectral ambiguity, even when land cover classification remains challenging.

SAR texture features proved essential for improving flood delineation within both study areas. The texture metrics improved the classification outputs by better separating flooded vegetation and built-up surfaces from spectrally similar background classes. Commercial Radarsat-2 imagery delivered inconsistent performance benefits. Radarsat-2 HH-polarized data improved the detection of flooded croplands to some extent; however, the total accuracy gain remained relatively minor compared to the freely accessible Sentinel-1 and Landsat-8 data. Radarsat-2 should therefore be treated as an optional data source, with its operational costs and data availability taken into account. In addition, other polarizations of the satellite should be tested in future studies.

The RF classifier performed well across different scenarios and landscapes because of its strong adaptability and the comprehensive hyperparameter optimization process. Its ability to process multi-source inputs led to successful classification results, while landscape-specific adjustments to model parameters proved essential for achieving optimal detection accuracy. Landscapes with diverse characteristics need adaptive modeling approaches that address both sensor deployment and landscape variability.

This research established an operational method for flood mapping using both free and commercial satellite imagery, supported by data analysis. The proposed approach determined the best sensor configurations for different land cover environments and demonstrated the significance of texture elements and classifier optimization for better flood identification. Future development should include integrating new high-resolution and real-time datasets, creating automatic hyperparameter selection systems, and developing deep learning models for active flood surveillance. The framework requires additional testing across diverse geographic regions and climate zones to establish its universal application in disaster management planning and climate resilience development.

Author Contributions

Conceptualization, A.S., C.B., F.B.B., M.A., F.B.S. and S.A.; Methodology, O.G.N., A.S., C.B., F.B.B., M.A., F.B.S. and S.A.; Software, O.G.N. and C.B.; Validation, O.G.N. and C.B.; Formal analysis, O.G.N., A.S., C.B. and M.A.; Investigation, A.S., C.B., F.B.B. and F.B.S.; Resources, A.S., M.A. and S.A.; Writing—original draft, O.G.N., A.S., C.B. and S.A.; Writing—review and editing, O.G.N., A.S., C.B., F.B.B., M.A., F.B.S. and S.A.; Visualization, O.G.N. and C.B.; Supervision, A.S., C.B., F.B.B., F.B.S. and S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets used in the current study are available from the corresponding author upon a reasonable request.

Acknowledgments

We thank the Netherlands Space Office for providing Radarsat-2 data for the study. We also thank the U.S. Geological Survey (USGS) and the European Space Agency (ESA) for providing Landsat and Sentinel-1 satellite imagery, respectively.

Conflicts of Interest

Author Mahmut Arıkan is affiliated with BeeSense. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RS | Remote Sensing |
| UAVs | Unmanned Aerial Vehicles |
| SAR | Synthetic Aperture Radar |
| ALOS | Advanced Land Observing Satellite |
| NDWI | Normalized Difference Water Index |
| MNDWI | Modified NDWI |
| NDVI | Normalized Difference Vegetation Index |
| NDMI | Normalized Difference Moisture Index |
| LSWI | Land Surface Water Index |
| HSV | Hue-Saturation-Value |
| GLCM | Gray-Level Co-occurrence Matrix |
| RF | Random Forest |
| SVM | Support Vector Machines |
| ESA | European Space Agency |
| IW | Interferometric Wide |
| SLC | Single Look Complex |
| VV | vertical-vertical polarization |
| VH | vertical-horizontal polarization |
| HH | horizontal-horizontal polarization |
| SNAP | Sentinel Application Platform |
| TOPS | Terrain Observation by Progressive Scans |
| DEM | Digital Elevation Model |
| ASM | Angular Second Moment |
| PCA | Principal Component Analysis |
| OLI | Operational Land Imager |
| TOA | top-of-atmosphere |
| OA | Overall Accuracy |

References

- Kok, M.; Slager, K.; de Moel, H.; Botzen, W.; de Bruijn, K.; Wagenaar, D.; Rikkert, S.; Koks, E.; van Ginkel, K. Rapid damage assessment caused by the flooding event 2021 in Limburg, Netherlands. J. Coast. Riverine Flood Risk 2023, 2, 10.

- Strijker, B.; Asselman, N.; de Jong, J.; Barneveld, H. The 2021 floods in the Netherlands from a river engineering perspective. J. Coast. Riverine Flood Risk 2023, 2, 6.

- Meyer, H.; Nijhuis, S.; Bobbink, I. Delta Urbanism: The Netherlands; Routledge: New York, NY, USA, 2017.

- Amitrano, D.; Di Martino, G.; Di Simone, A.; Imperatore, P. Flood Detection with SAR: A Review of Techniques and Datasets. Remote Sens. 2024, 16, 656.

- Lang, F.; Zhu, Y.; Zhao, J.; Hu, X.; Shi, H.; Zheng, N.; Zha, J. Flood Mapping of Synthetic Aperture Radar (SAR) Imagery Based on Semi-Automatic Thresholding and Change Detection. Remote Sens. 2024, 16, 2763.

- Lahsaini, M.; Albano, F.; Albano, R.; Mazzariello, A.; Lacava, T. A Synthetic Aperture Radar-Based Robust Satellite Technique (RST) for Timely Mapping of Floods. Remote Sens. 2024, 16, 2193.

- Yang, Q.; Shen, X.; Zhang, Q.; Helfrich, S.; Kellndorfer, J.M.; Straka, W.; Hao, W.; Steiner, N.C.; Villa, M.; Ruff, T. Advanced Operational Flood Monitoring in the New Era: Harnessing High-Resolution, Event Based, and Multi-Source Remote Sensing Data for Flood Extent Detection and Depth Estimation. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 1366–1369.

- Farhadi, H.; Ebadi, H.; Kiani, A.; Asgary, A. Near Real-Time Flood Monitoring Using Multi-Sensor Optical Imagery and Machine Learning by GEE: An Automatic Feature-Based Multi-Class Classification Approach. Remote Sens. 2024, 16, 4454.

- Anusha, N.; Bharathi, B. Flood detection and flood mapping using multi-temporal synthetic aperture radar and optical data. Egypt. J. Remote Sens. Space Sci. 2020, 23, 207–219.

- D’Addabbo, A.; Refice, A.; Pasquariello, G.; Lovergine, F. SAR/optical data fusion for flood detection. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 7631–7634.

- Tavus, B.; Kocaman, S.; Nefeslioglu, H.A.; Gokceoglu, C. A Fusion Approach for Flood Mapping Using Sentinel-1 And Sentinel-2 Datasets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 641–648.

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432.

- Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

- Atefi, M.R.; Miura, H. Detection of Flash Flood Inundated Areas Using Relative Difference in NDVI from Sentinel-2 Images: A Case Study of the August 2020 Event in Charikar, Afghanistan. Remote Sens. 2022, 14, 3647.

- Stoyanova, E. Remote Sensing for Flood Inundation Mapping Using Various Processing Methods with Sentinel-1 and Sentinel-2. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, XLVIII-M-1-2023, 339–346.

- Mohammadi, A.; Costelloe, J.F.; Ryu, D. Application of time series of remotely sensed normalized difference water, vegetation and moisture indices in characterizing flood dynamics of large-scale arid zone floodplains. Remote Sens. Environ. 2017, 190, 70–82.

- Konapala, G.; Kumar, S.V.; Khalique Ahmad, S. Exploring Sentinel-1 and Sentinel-2 diversity for flood inundation mapping using deep learning. ISPRS J. Photogramm. Remote Sens. 2021, 180, 163–173.

- Pekel, J.F.; Vancutsem, C.; Bastin, L.; Clerici, M.; Vanbogaert, E.; Bartholomé, E.; Defourny, P. A near real-time water surface detection method based on HSV transformation of MODIS multi-spectral time series data. Remote Sens. Environ. 2014, 140, 704–716.

- Bhaskaran, S.; Devi, S.; Bhatia, S.; Samal, A.; Brown, L. Mapping shadows in very high-resolution satellite data using HSV and edge detection techniques. Appl. Geomat. 2013, 5, 299–310.

- Haralick, R.M.; Shanmugam, K. Computer Classification of Reservoir Sandstones. IEEE Trans. Geosci. Electron. 1973, 11, 171–177.

- Tassi, A.; Gigante, D.; Modica, G.; Di Martino, L.; Vizzari, M. Pixel- vs. Object-Based Landsat 8 Data Classification in Google Earth Engine Using Random Forest: The Case Study of Maiella National Park. Remote Sens. 2021, 13, 2299.

- Tavus, B.; Kocaman, S. Flood mapping in mountainous areas using sentinel-1 & 2 data and GLCM features. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, XLVIII-1/W2-2023, 1575–1580.

- Mudashiru, R.B.; Sabtu, N.; Abustan, I.; Balogun, W. Flood hazard mapping methods: A review. J. Hydrol. 2021, 603, 126846.

- Teng, J.; Jakeman, A.J.; Vaze, J.; Croke, B.F.W.; Dutta, D.; Kim, S. Flood inundation modelling: A review of methods, recent advances and uncertainty analysis. Environ. Model. Softw. 2017, 90, 201–216.

- Bellos, V. Ways for flood hazard mapping in urbanised environments: A short. Water Util. J 2012, 4, 25–31.

- Darlington, C.; Raikes, J.; Henstra, D.; Thistlethwaite, J.; Raven, E.K. Mapping current and future flood exposure using a 5 m flood model and climate change projections. Nat. Hazards Earth Syst. Sci. 2024, 24, 699–714.

- Ji, J.; Choi, C.; Yu, M.; Yi, J. Comparison of a data-driven model and a physical model for flood forecasting. WIT Trans. Ecol. Environ. 2012, 159, 133–142.

- Mojaddadi, H.; Biswajeet, P.; Haleh, N.; Noordin, A.; Ghazali, A.H.b. Ensemble machine-learning-based geospatial approach for flood risk assessment using multi-sensor remote-sensing data and GIS. Geomat. Nat. Hazards Risk 2017, 8, 1080–1102.

- Farhadi, H.; Najafzadeh, M. Flood Risk Mapping by Remote Sensing Data and Random Forest Technique. Water 2021, 13, 3115.

- Jenifer, A.E.; Natarajan, S. DeepFlood: A deep learning based flood detection framework using feature-level fusion of multi-sensor remote sensing images. J. Univers. Comput. Sci. 2022, 28, 329–343.

- Negri, R.G.; da Costa, F.D.; da Silva Andrade Ferreira, B.; Rodrigues, M.W.; Bankole, A.; Casaca, W. Assessing Machine Learning Models on Temporal and Multi-Sensor Data for Mapping Flooded Areas. Trans. GIS 2025, 29, e70028.

- Pot, W.; de Ridder, Y.; Dewulf, A. Avoiding future surprises after acute shocks: Long-term flood risk lessons catalysed by the 2021 summer flood in the Netherlands. Environ. Sci. Eur. 2024, 36, 138.

- European Space Agency. Sentinel Application Platform (SNAP); European Space Agency (ESA) ER: Paris, France, 2014.

- Small, D.; Schubert, A. Guide to ASAR Geocoding; ESA-ESRIN Tech. Note RSL-ASAR-GC-AD; University of Zurich: Zurich, Switzerland, 2008; Volume 1, p. 36.

- Navacchi, C.; Cao, S.; Bauer-Marschallinger, B.; Snoeij, P.; Small, D.; Wagner, W. Utilising Sentinel-1’s orbital stability for efficient pre-processing of sigma nought backscatter. ISPRS J. Photogramm. Remote Sens. 2022, 192, 130–141.

- Dasgupta, A.; Grimaldi, S.; Ramsankaran, R.; Walker, J.P. Optimized glcm-based texture features for improved SAR-based flood mapping. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3258–3261.

- Eichkitz, C.G.; Amtmann, J.; Schreilechner, M.G. Calculation of grey level co-occurrence matrix-based seismic attributes in three dimensions. Comput. Geosci. 2013, 60, 176–183.

- Morell-Monzó, S.; Sebastiá-Frasquet, M.-T.; Estornell, J. Land Use Classification of VHR Images for Mapping Small-Sized Abandoned Citrus Plots by Using Spectral and Textural Information. Remote Sens. 2021, 13, 681.

- Nguyen, T.T.H.; Chau, T.N.Q.; Pham, T.A.; Tran, T.X.P.; Phan, T.H.; Pham, T.M.T. Mapping Land use/land cover using a combination of Radar Sentinel-1A and Sentinel-2A optical images. IOP Conf. Ser. Earth Environ. Sci. 2021, 652, 012021.

- Mustafa, M.; Taib, M.N.; Murat, Z.H.; Lias, S. GLCM texture feature reduction for EEG spectrogram image using PCA. In Proceedings of the 2010 IEEE Student Conference on Research and Development (SCOReD), Putrajaya, Malaysia, 13–14 December 2010; pp. 426–429.

- Jolliffe, I.T. Principal Component Analysis for Special Types of Data; Springer: New York, NY, USA, 2002.

- Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

- Smith, A.R. Color gamut transform pairs. SIGGRAPH Comput. Graph. 1978, 12, 12–19.

- Feng, Q.; Liu, J.; Gong, J. Urban Flood Mapping Based on Unmanned Aerial Vehicle Remote Sensing and Random Forest Classifier—A Case of Yuyao, China. Water 2015, 7, 1437–1455.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Albertini, C.; Gioia, A.; Iacobellis, V.; Petropoulos, G.P.; Manfreda, S. Assessing multi-source random forest classification and robustness of predictor variables in flooded areas mapping. Remote Sens. Appl. Soc. Environ. 2024, 35, 101239.

- Al-Aizari, A.R.; Alzahrani, H.; AlThuwaynee, O.F.; Al-Masnay, Y.A.; Ullah, K.; Park, H.-J.; Al-Areeq, N.M.; Rahman, M.; Hazaea, B.Y.; Liu, X. Uncertainty Reduction in Flood Susceptibility Mapping Using Random Forest and eXtreme Gradient Boosting Algorithms in Two Tropical Desert Cities, Shibam and Marib, Yemen. Remote Sens. 2024, 16, 336.

- Schratz, P.; Muenchow, J.; Iturritxa, E.; Richter, J.; Brenning, A. Hyperparameter tuning and performance assessment of statistical and machine-learning algorithms using spatial data. Ecol. Model. 2019, 406, 109–120.

- Peng, G.; Tang, Y.; Cowan, T.; Enns, G.; Zhao, H.; Scharfe, C. Reducing False-Positive Results in Newborn Screening Using Machine Learning. Int. J. Neonatal Screen. 2020, 6, 16.

- Veljanovski, T.; Lamovec, P.; Pehani, P.; Oštir, K. Comparison of three techniques for detection of flooded areas on ENVISAT and RADARSAT-2 satellite images. In Proceedings of the Gi4DM 2011: Geo-Information for Disaster Management, Antalya, Turkey, 3–8 May 2011; pp. 1–6.

- Clement, M.A.; Kilsby, C.G.; Moore, P. Multi-temporal synthetic aperture radar flood mapping using change detection. J. Flood Risk Manag. 2018, 11, 152–168.

- Ji, L.; Zhang, L.; Wylie, B. Analysis of Dynamic Thresholds for the Normalized Difference Water Index. Photogramm. Eng. Remote Sens. 2009, 75, 1307–1317.

- Tanguy, M.; Chokmani, K.; Bernier, M.; Poulin, J.; Raymond, S. River flood mapping in urban areas combining Radarsat-2 data and flood return period data. Remote Sens. Environ. 2017, 198, 442–459.

- Abbasi, M.; Shah-Hosseini, R.; Aghdami-Nia, M. Sentinel-1 Polarization Comparison for Flood Segmentation Using Deep Learning. Proceedings 2023, 87, 14.

- Tsyganskaya, V.; Sandro, M.; Philip, M.; Ludwig, R. SAR-based detection of flooded vegetation—A review of characteristics and approaches. Int. J. Remote Sens. 2018, 39, 2255–2293.

- Foroughnia, F.; Alfieri, S.M.; Menenti, M.; Lindenbergh, R. Evaluation of SAR and Optical Data for Flood Delineation Using Supervised and Unsupervised Classification. Remote Sens. 2022, 14, 3718.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).