Abstract

The identification and detection of planting holes, combined with UAV technology, provides an effective solution to the challenges posed by manual counting, high labor costs, and low efficiency in large-scale planting operations. However, existing target detection algorithms face difficulties in identifying planting holes based on their edge features, particularly in complex environments. To address this issue, a target detection network named YOLO-PH was designed to efficiently and rapidly detect planting holes in complex environments. Compared to the YOLOv8 network, the proposed YOLO-PH network incorporates the C2f_DyGhostConv module as a replacement for the original C2f module in both the backbone network and neck network. Furthermore, the ATSS label allocation method is employed to optimize sample allocation and enhance detection effectiveness. Lastly, our proposed Siblings Detection Head reduces computational burden while significantly improving detection performance. Ablation experiments demonstrate that compared to baseline models, YOLO-PH exhibits notable improvements of 1.3% in mAP50 and 1.1% in mAP50:95 while simultaneously achieving a reduction of 48.8% in FLOPs and an impressive increase of 26.8 FPS (frames per second) in detection speed. In practical applications for detecting indistinct boundary planting holes within complex scenarios, our algorithm consistently outperforms other detection networks with exceptional precision (F1-score = 0.95), low computational cost, rapid detection speed, and robustness, thus laying a solid foundation for advancing precision agriculture.

1. Introduction

Afforestation plays a pivotal role in enhancing forest coverage and carbon sequestration. Before implementation, the excavation of numerous planting holes is imperative to ensure successful plantation establishment [1]. However, accurately and efficiently detecting the number of planting holes in a large area remains a challenge. Conventional methods employed for hole detection are labor-intensive and time-consuming, often resulting in inaccurate counts and suboptimal outcomes. Therefore, there is an urgent need to develop rapid and precise methodologies to effectively address these challenges associated with hole detection. Smart agriculture and forestry have revolutionized productivity through advanced technology [2,3], leading to significant transformations in production methods where unmanned aerial vehicles (UAVs) have become increasingly indispensable [4,5]. With the rapid advancements in UAV technology, its stability and safety have been significantly enhanced, making it easier to operate while providing efficient solutions for tackling agricultural and forestry issues [6,7,8]. The integration of deep learning technology with UAVs holds immense potential in agriculture and forestry [9,10,11].

UAV target detection algorithms can be categorized into two groups: traditional machine learning detection algorithms and the current mainstream deep learning target detection algorithms [12]. Traditional machine learning detection algorithms rely primarily on morphological features [13]. A sliding window is first used to select candidate image regions, relevant features are then extracted and screened, and finally an appropriate classifier performs the classification and regression tasks [12]. In recent years, some researchers have integrated machine learning algorithms with UAV images for target detection [14,15]. Nevertheless, the sliding-window region selection strategy used in these methods incurs high computational complexity and produces many redundant windows. Furthermore, the diversity of target morphology, complex background variations, and illumination changes make it difficult to design robust features manually [16]. In contrast, deep learning algorithms can automatically learn and extract features without intricate manual feature engineering [17,18]. Consequently, compared to traditional machine learning approaches, deep-learning-based target detection offers significant advantages in accuracy, robustness, and automation.

Deep learning object detection algorithms can be broadly categorized into two main approaches: two-stage detectors, exemplified by R-CNN [19] and Faster R-CNN [20], and one-stage detectors, represented by YOLO [21] and DETR [22]. The two-stage approach first generates candidate target regions and then classifies and localizes these candidates. The one-stage approach, by contrast, eliminates the region selection step and directly predicts both the category and location of targets through dense networks and anchor boxes, thereby achieving faster processing. While the two-stage approach offers higher accuracy, its computational complexity and slower detection speed pose challenges for real-time applications on mobile devices with stringent timing requirements [23].

Among one-stage detection methods, the YOLO series is particularly widely employed for drone target detection in various domains, including transportation [24], construction engineering [25], agriculture [26], and others [27,28]. Recent advancements in deep learning techniques have facilitated rapid evolution within the YOLO series algorithms, such as YOLOX [29,30], YOLOv7 [30], and YOLOv8, progressively optimizing their network structures. However, complex backgrounds encountered during drone imaging, such as those with occlusion and shadows, often present challenges that can impact detection accuracy. Moreover, due to variations in detected objects and their identification requirements, specific network features may be insufficient to fully describe the object during the feature extraction process which can also affect the accuracy of detection results.

To enhance the adaptability of the detection network to tasks with similar features or specific detection objects, and to improve its performance in complex scenes, it is necessary to refine the original model. Because the backbone network is the crucial component for feature extraction, strengthening it can effectively enhance the model’s ability to perceive intricate details in complex environments, thereby improving object detection accuracy and robustness. To address challenges posed by complex scenes, Zhou [31] integrates the shortest–longest gradient strategy and a self-attention mechanism into the CSPNet and PANet architectures, resulting in an improved Rep-CSPNet network. These enhancements significantly augment the model’s capability to detect objects in complex scenes. The detection head, which generates the final detection results from feature maps, can be enhanced through multi-scale feature fusion [32], adaptive mechanisms [33], and dynamic confidence adjustment [34]. These improvements elevate model performance in complex scenes by enabling better handling of multiple targets, occlusion, feature ambiguity, and scale variations. Zhang [35] proposes a Parallel Dual-Branch Detection Heads design that incorporates parallel classification and regression branches, based on neck features of different scales, that do not interfere with each other. This design substantially enhances both the detection accuracy and the real-time performance of drones operating in complex scenarios.

Several YOLO-based models have also been proposed for agricultural applications. Ag-YOLO [26] improves detection for densely packed fruit targets through enhanced feature fusion, but it lacks adaptability to field-scale scenarios. YOLOD [8] introduces decoupled heads and local dynamic convolution to enhance precision, yet the model complexity limits real-time deployment. LDHD-Net [35] addresses long-distance and high-density crop detection using deformable convolutions, but the network is relatively heavy and focuses primarily on structured plantation layouts. In contrast, our proposed YOLO-PH is specifically designed for detecting forest planting holes in complex UAV-acquired imagery, where factors such as vegetation occlusion, soil erosion, and low contrast often degrade detection performance. YOLO-PH integrates a lightweight architecture with adaptive positive sample selection (ATSS) to achieve improved detection accuracy, robustness, and real-time performance under such challenging conditions.

Although existing algorithms perform well in simple scenarios, they face challenges in complex environments, particularly for planting hole detection. Therefore, there is an urgent need to develop an efficient detection network specifically designed around the characteristics of planting holes.

In this study, a target detection network named YOLO-PH was designed to efficiently and rapidly detect planting holes in complex environments. To address the difficulty of capturing the edges of planting holes, the C2f_DyGhostConv module was integrated into the detection network. This module is lighter and has stronger feature expression ability in both the backbone and neck networks, thereby enriching the effective features available for capturing and fusing image details. Additionally, the ATSS label allocation method was adopted to make the detection network more compatible with the consistent shape and scale of planting holes. To further improve performance in complex environments, the Siblings Detection Head was introduced as a multi-scale detection head that is smaller and faster while delivering superior results. Finally, the proposed detection network was comprehensively evaluated to assess its contribution towards enhancing overall performance and providing robust technical support for future advancements in large-scale planting and precision agriculture.

2. Study Area and Data

2.1. Study Area

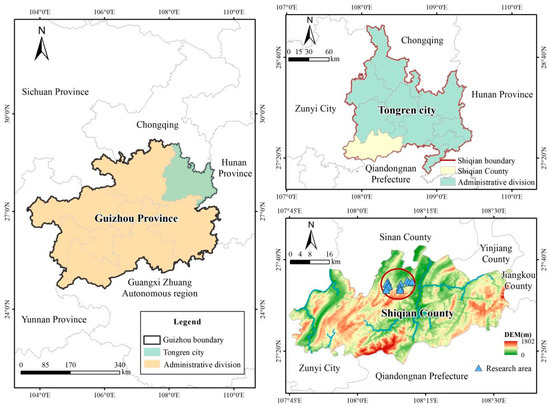

The images of planting holes in this study were obtained from planting areas in Longjing Gelao Dong Township, situated northwest of Shiqian County, Tongren City, Guizhou Province. This region exhibits a complex terrain characterized by typical karst landforms and an average elevation of approximately 818 m. The geographical coordinates for the study area are 27°37′N latitude and 108°10′E longitude. Figure 1 illustrates the location of the study area.

Figure 1.

The location map of study area.

2.2. Datasets

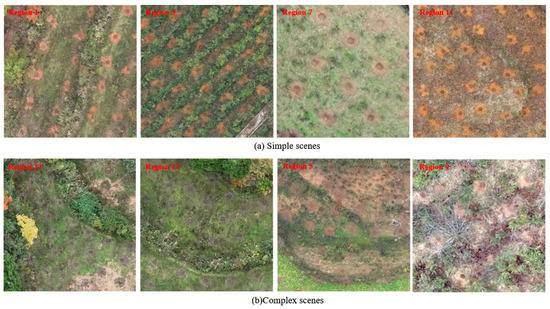

In this study, 14 digital orthophoto maps with a spatial resolution of 0.05 m were generated from 14 images acquired by unmanned aerial vehicle (UAV) aerial photography, followed by the necessary processing steps. To assess the model’s detection capability in complex scenes, these images were categorized into two parts: simple scenes and complex scenes (Table 1 and Figure 2). In simple scenes, the planting holes contrast conspicuously with the surrounding environment. Over time, however, the influence of the surroundings gradually blends the planting holes into the background, which makes accurate identification in complex scenes considerably more difficult.

Table 1.

The division of complex and simple scenes.

Figure 2.

The images of simple and complex scenes.

To improve the efficiency of model training, the original images were divided into sub-images with a size of 512 × 512 pixels and saved in TIF format. To minimize the risk of splitting individual planting holes across patch boundaries, a 20% overlap was introduced between adjacent sub-images during the cropping process. This overlap ensures that each planting hole is fully included in at least one patch. After object detection, Non-Maximum Suppression (NMS) was applied at the full-image level to eliminate duplicate predictions. After removing invalid or target-less sub-images, a total of 1113 valid sub-images were selected for further analysis. These sub-images were annotated and split into training, test, and validation sets at a ratio of 7:2:1, respectively (Table 2).

Table 2.

The number of training, test, and validation sets.
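The cropping and merging procedure described in this section can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names (`tile_origins`, `to_global`, `nms`), the box layout `(x1, y1, x2, y2, score)`, and the IoU threshold of 0.5 are assumptions, and only the 512-pixel tile size and the 20% overlap come from the text.

```python
def tile_origins(length, tile=512, overlap=0.2):
    """Start offsets of tiles along one image axis with fractional overlap."""
    if length <= tile:
        return [0]
    stride = int(tile * (1 - overlap))  # 409 px for 512-px tiles, 20% overlap
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] != length - tile:    # add a final tile flush with the edge
        origins.append(length - tile)
    return origins


def to_global(box, x0, y0):
    """Shift a tile-local box (x1, y1, x2, y2, score) into full-image coordinates."""
    x1, y1, x2, y2, score = box
    return (x1 + x0, y1 + y0, x2 + x0, y2 + y0, score)


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2, ...)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_thr=0.5):
    """Greedy non-maximum suppression over full-image detections."""
    kept = []
    for det in sorted(detections, key=lambda d: d[4], reverse=True):
        if all(iou(det, k) < iou_thr for k in kept):
            kept.append(det)
    return kept


# The same hole seen in two overlapping tiles collapses to one detection.
dets = [
    to_global((10, 10, 50, 50, 0.9), 0, 0),
    to_global((12 - 409, 12, 52 - 409, 52, 0.8), 409, 0),
    to_global((200, 200, 240, 240, 0.7), 0, 0),
]
merged = nms(dets)
```

Because suppression runs on full-image coordinates, a hole that appears in the overlap zone of two adjacent sub-images is counted once.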

3. Methods

3.1. YOLOv8 Detection Algorithm

YOLOv8 (You Only Look Once version 8) is an advanced real-time target detection algorithm that has been further optimized and enhanced based on the YOLO series. It employs an end-to-end object detection network architecture, enabling direct prediction of object categories and positions from image pixels. The main structure of the YOLOv8 network consists of three components: a backbone network, a neck network, and an output layer. Specifically, CSPDarknet53 serves as the backbone network in YOLOv8, comprising convolution modules and C2f modules that effectively fuse multi-scale features. To enhance small object detection capability by capturing rich semantic information at different levels, the Feature Pyramid Network (FPN) constructs a feature pyramid within the neck network. The output layer of YOLOv8 consists of multiple detection heads responsible for predicting targets within specific image areas. These heads utilize distinct convolution kernels and activation functions to achieve precise target detection. Consequently, the YOLOv8 algorithm demonstrates strong overall performance and serves as a suitable foundational network for detecting planting holes.

Although the YOLOv8 algorithm enhances adaptability to complex tasks through the C2f module, the bottleneck module within the C2f module lacks sufficient feature extraction capabilities. This deficiency may impede the algorithm’s ability to accurately discriminate small planting holes from their background, particularly in dense environments. Moreover, while exhibiting robust target recognition capabilities, the original detection head is excessively large and occupies a significant portion of the overall algorithm, resulting in substantial computational resource wastage. Additionally, the original label assignment method is ill-suited for small and relatively uniform planting holes. Consequently, these limitations adversely affect label accuracy and increase computational load, thereby restricting real-time performance in resource-constrained environments.

3.2. Improved YOLO-PH Detection Algorithm

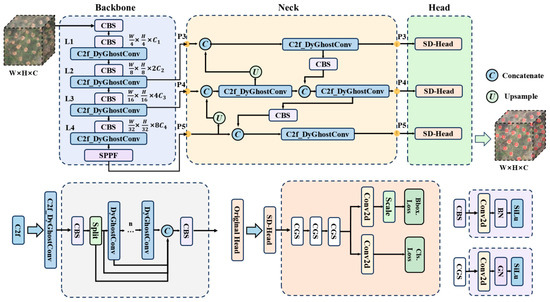

To overcome the limitations of the YOLOv8 algorithm, an enhanced YOLO-PH detection algorithm was proposed to precisely identify planting holes in complex scenes (Figure 3). The major improvements in the YOLO-PH detection algorithm concern the bottleneck structure, the label allocation strategy, and the detection head.

Figure 3.

Model structure of YOLO-PH.

Firstly, the proposed YOLO-PH model replaces the bottleneck structure in the C2f module with DyGhostConv, resulting in a novel C2f_DyGhostConv module that is integrated into both the backbone and neck networks. This module takes the place of the original C2f module, which streamlines the network structure while enhancing feature representation and the capability for precise target recognition. Furthermore, the Task-Aligned Assigner used in the original model struggles to allocate labels effectively because target scale and shape vary minimally within the planting holes dataset. In contrast, Adaptive Training Sample Selection (ATSS) is better suited for targets with consistent scale and fixed shapes. Therefore, the Task-Aligned Assigner was replaced with ATSS in the YOLO-PH model to precisely select positive and negative samples, optimize training efficiency, and enhance the detection ability of the model. Lastly, due to the limited field of view and computational burden of the YOLOv8 model’s detection head, as well as its insufficient real-time performance, a novel lightweight design called the Siblings Detection Head (SD-Head) was proposed. This design enhances feature perception while reducing deployment costs and is specifically tailored for resource-constrained environments. The subsequent sections describe in detail the improvements that the YOLO-PH network makes to the baseline model.

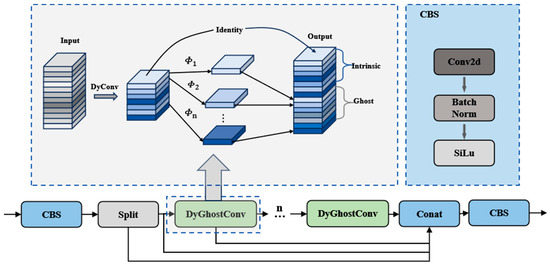

3.2.1. C2f_DyGhostConv Module

In YOLOv8, the C2f module plays a crucial role in enhancing model performance and accuracy. By incorporating the bottleneck module, the C2f module partitions the feature map to improve its nonlinear representation and ability to handle complex image features. While typically used for multi-scale feature fusion and extraction, the bottleneck module may fall short in extracting features for extremely small or highly variable target objects, which can affect detection accuracy. To address this limitation, the Ghost module [36] creates more features with fewer parameters while maintaining the same feature map size. This mechanism can be represented as follows:

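$$Y' = X * f', \qquad Y = \mathrm{Concat}\big(Y', \Phi(Y')\big)$$

where $X$ is the input feature map, $f'$ denotes the real convolution kernel, and $\Phi$ represents cheap linear operations used to generate ghost features.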

This significantly reduces both the number of parameters and computational complexity compared to traditional convolutional neural networks. Additionally, the Dynamic Convolution module [37] adaptively fuses multiple convolution kernels based on input data without expanding network depth or width, increasing model complexity and performance within computational constraints. The operation of Dynamic Convolution can be expressed as follows:

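$$Y = \Big(\sum_{i=1}^{K} \pi_i(X)\, W_i\Big) * X$$

where $\pi_i(X)$ represents the attention-based weight coefficients satisfying $\sum_{i=1}^{K} \pi_i(X) = 1$, $W_i$ is the i-th of $K$ convolution kernels, and $*$ denotes the convolution operation.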
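The kernel aggregation behind dynamic convolution can be illustrated with a small pure-Python sketch. It is an illustration under stated assumptions rather than the module used in YOLO-PH: the attention logits are passed in directly (in practice a small attention branch computes them from the input), the signal is one-dimensional, and the helper names are hypothetical.

```python
from math import exp


def softmax(logits):
    """Turn raw attention logits into weights that are positive and sum to 1."""
    peak = max(logits)
    exps = [exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_kernels(kernels, logits):
    """Blend K same-sized kernels into one kernel using softmax attention."""
    weights = softmax(logits)
    size = len(kernels[0])
    blended = [sum(w * k[i] for w, k in zip(weights, kernels)) for i in range(size)]
    return blended, weights


def conv1d_valid(signal, kernel):
    """Sliding dot product (cross-correlation, as in deep learning), no padding."""
    n = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(n))
        for i in range(len(signal) - n + 1)
    ]


kernels = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]       # two candidate kernels
blended, weights = aggregate_kernels(kernels, [0.0, 0.0])
response = conv1d_valid([1.0, 2.0, 3.0, 4.0], blended)
```

Only one convolution is executed per input, regardless of the number of candidate kernels, which is why the extra capacity comes at a small computational cost.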

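Planting holes gradually lose contrast with the background after excavation, which makes accurate detection difficult. To address this, YOLO-PH introduces DyGhostConv modules, which replace the standard convolution in the Ghost module with dynamic convolution. The combined operation of DyGhostConv is given by the following:

$$Y' = \Big(\sum_{i=1}^{K} \pi_i(X)\, W_i\Big) * X, \qquad Y = \mathrm{Concat}\big(Y', \Phi(Y')\big)$$

where $\pi_i(X)$ are the attention-based weights of the $K$ convolution kernels $W_i$, and $\Phi$ represents the cheap linear operations that generate the ghost features.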

This modification effectively combines the advantages of both methods by reducing computation and parameter count while enhancing feature extraction capabilities (Figure 4). Additionally, DyGhostConv replaces the bottleneck modules of the C2f module to form the C2f_DyGhostConv module, which replaces all original C2f modules in the YOLOv8 backbone and neck networks. The overall structure of the new C2f_DyGhostConv module can be abstracted as follows:

Figure 4.

The structure of the C2f_DyGhostConv module.

As a result, it becomes lightweight enough for deployment on mobile devices while significantly improving the network’s ability to extract features.
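To make the source of the savings concrete, the following sketch compares the parameter count of a standard convolution with that of a Ghost-style layer. It assumes the usual Ghost design, in which a primary convolution produces `c_out / s` intrinsic channels and depthwise `d × d` operations produce the rest; the channel sizes, the ratio `s = 2`, and the function names are illustrative assumptions, and biases and the dynamic-convolution attention branch are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a plain k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in, c_out, k, s=2, d=3):
    """Weights of a Ghost-style layer (bias ignored).

    A primary k x k convolution produces c_out // s intrinsic channels, and
    depthwise d x d cheap operations produce the remaining ghost channels.
    """
    intrinsic = c_out // s
    primary = c_in * intrinsic * k * k
    cheap = intrinsic * (s - 1) * d * d
    return primary + cheap


standard = standard_conv_params(64, 128, 3)
ghost = ghost_conv_params(64, 128, 3)
ratio = standard / ghost
```

For these illustrative sizes the Ghost-style layer needs roughly half the weights of the standard convolution, which is consistent with the compression ratio approaching `s` when the cheap operations are small.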

3.2.2. Adaptive Training Sample Selection

The YOLOv8 baseline model employs Task-aligned Sample Assignment, which combines classification scores and localization IoU scores to determine label assignments based on their targets [38]. This method adjusts the two indicators using exponential factors, ensuring accurate label assignment and enhancing target detection performance. However, this label assignment method is not well-suited for small and relatively uniform planting holes.

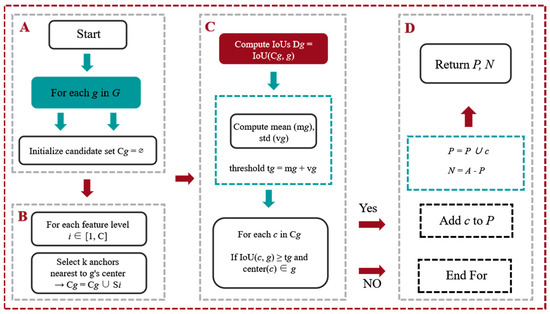

The YOLO-PH model employs the Adaptive Training Sample Selection (ATSS) label allocation strategy proposed by [39] instead. ATSS is an adaptive sample selection strategy that automatically chooses positive and negative samples based on target statistics. This method reduces the number of hyperparameters to just one, making ATSS less sensitive to hyperparameter settings. It avoids fixed threshold issues and significantly improves detection capability without adding extra computational costs or parameters. Experiments show that ATSS performs better than the Task-aligned strategy in datasets with minimal shape variation and uniform target distribution. ATSS can adaptively adjust the distribution of positive and negative samples, enhancing model performance and making it ideal for planting hole detection tasks (Figure 5).

Figure 5.

The principle of Adaptive Training Sample Selection. Note: A–D represent the order of ATSS execution.
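The selection rule of ATSS can be sketched for a single ground-truth box as follows. This is a simplified illustration, not the training code of YOLO-PH: boxes are `(x1, y1, x2, y2)` tuples, the anchors are toy values, the population standard deviation is used (implementations differ on this detail), and `k` is the single hyperparameter mentioned in the text.

```python
from statistics import mean, pstdev


def center(box):
    return ((box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0)


def iou(a, b):
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def atss_assign(gt, anchors_per_level, k=9):
    """Return (positive anchors, IoU threshold) for one ground-truth box."""
    gx, gy = center(gt)

    def dist2(anchor):
        ax, ay = center(anchor)
        return (ax - gx) ** 2 + (ay - gy) ** 2

    # Step 1: on every pyramid level keep the k anchors closest to the GT center.
    candidates = []
    for anchors in anchors_per_level:
        candidates.extend(sorted(anchors, key=dist2)[:k])

    # Step 2: derive the threshold from the candidates' own IoU statistics.
    ious = [iou(a, gt) for a in candidates]
    threshold = mean(ious) + pstdev(ious)

    # Step 3: positives exceed the threshold and have their center inside the GT.
    positives = []
    for anchor, value in zip(candidates, ious):
        ax, ay = center(anchor)
        inside = gt[0] < ax < gt[2] and gt[1] < ay < gt[3]
        if value >= threshold and inside:
            positives.append(anchor)
    return positives, threshold


gt_box = (10, 10, 30, 30)
levels = [
    [(10, 10, 30, 30), (12, 12, 32, 32), (40, 40, 60, 60)],
    [(0, 0, 40, 40), (50, 50, 90, 90)],
]
positives, threshold = atss_assign(gt_box, levels, k=2)
```

Because the threshold is computed per object from the mean and spread of candidate IoUs, no fixed IoU cut-off has to be tuned by hand.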

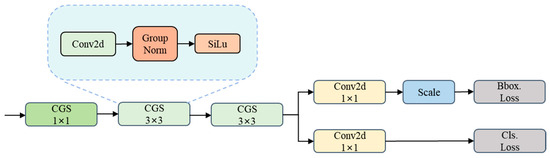

3.2.3. Siblings Detection Head

Despite the efficiency and accuracy of YOLOv8, its original detection head exhibits certain limitations. Firstly, the original detection head employs three separate detection heads to process feature maps P3, P4, and P5 of varying scales. Each of these detection heads utilizes two 3 × 3 CBS convolution modules followed by one 1 × 1 convolution for extracting target bounding box and category information. The CBS module integrates the Conv2d layer, Batch Normalization, and SiLU activation into a single convolutional unit to facilitate comprehensive feature extraction. However, this structure requires significant computational resources, accounting for approximately 35% of the network’s total workload. Consequently, it can impede real-time target detection on resource-constrained devices. Secondly, the multiple prediction feature maps with different scales in YOLOv8’s detection head are intended to detect targets of various sizes; however, inherent structural limitations, such as the large receptive field and stride values within the detection head architecture, compromise its accuracy in detecting small targets. This limitation can blur or lose the characteristics specific to small targets during planting hole identification.

To overcome these challenges, group normalization (GN) [40] is adopted, a normalization technique that partitions the channels into groups and computes the mean and variance within each group. The core formula of Group Normalization is defined as follows:

$$\hat{x}_i = \frac{x_i - \mu_g}{\sqrt{\sigma_g^2 + \epsilon}}$$

where $x_i$ is a feature value belonging to group $g$, $\mu_g$ and $\sigma_g^2$ are the mean and variance computed within each group of channels, and $\epsilon$ is a small constant for numerical stability.
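The normalization step can be written out for a single sample as a short sketch. It assumes each channel is a flat list of values, omits the learnable per-channel scale and shift that usually follow, and uses an illustrative `eps` of 1e-5; none of these details are taken from the paper.

```python
from math import sqrt


def group_norm(channels, num_groups, eps=1e-5):
    """Normalize one sample; `channels` is a list of per-channel value lists."""
    if len(channels) % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    size = len(channels) // num_groups
    normalized = []
    for g in range(num_groups):
        group = channels[g * size:(g + 1) * size]
        values = [v for channel in group for v in channel]
        mu = sum(values) / len(values)                       # group mean
        var = sum((v - mu) ** 2 for v in values) / len(values)  # group variance
        inv_std = 1.0 / sqrt(var + eps)
        normalized.extend([[(v - mu) * inv_std for v in channel] for channel in group])
    return normalized


sample = [[1.0, 2.0], [3.0, 4.0], [10.0, 20.0], [30.0, 40.0]]
result = group_norm(sample, num_groups=2)
```

The statistics depend only on the channels of one sample, never on the other images in the batch, which is why the behavior does not change with batch size.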

Irrespective of the batch size, GN effectively substitutes batch normalization (BN) while significantly enhancing the performance and accuracy of the detection model. Therefore, a new detection head, named the Siblings Detection Head (SD-Head), is proposed (Figure 6). The proposed detection head no longer employs multiple convolutions for each scale. Instead, feature maps P3, P4, and P5 share a common convolution module, reflecting their sibling-like characteristics. The shared convolution module in SD-Head operates as follows:

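$$F_i' = \sigma\big(\mathrm{GN}(\mathrm{Conv}(F_i))\big), \quad i \in \{P3, P4, P5\}$$

where $F_i$ is the feature map at scale $i$, $\mathrm{Conv}$ is the shared convolution, σ(·) is the SiLU activation, and GN denotes Group Normalization.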

Figure 6.

The structure of Siblings Detection Head.

GN was then employed in the convolution module in place of BN. The shared convolutions reduce the network’s computational load and parameters, while GN greatly enhances its perception capability.

To tackle the issue of inconsistent target scales detected by different heads, the Scale layer is introduced to normalize the features and ensure consistent scale detection. The Scale layer adjusts feature magnitudes using the following:

$$\tilde{F}_i = \alpha_i \cdot F_i$$

where $F_i$ is the feature at scale $i$ and $\alpha_i$ is a learnable scaling factor.
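The interplay between a shared branch and per-level Scale layers can be shown with a toy sketch. Everything here is a stand-in chosen for illustration: `shared_branch` replaces the shared convolution with a single multiplication, the feature values are invented, and the scale values are set by hand, whereas in training they would be learned.

```python
class Scale:
    """Learnable scalar multiplier; `value` would be updated by the optimizer."""

    def __init__(self, init=1.0):
        self.value = init

    def __call__(self, features):
        return [self.value * f for f in features]


def shared_branch(features, weight=0.5):
    """Stand-in for the shared convolution: the same weight for every level."""
    return [weight * f for f in features]


# Toy features whose magnitude doubles from one pyramid level to the next.
levels = {"P3": [1.0, 2.0], "P4": [2.0, 4.0], "P5": [4.0, 8.0]}
scales = {"P3": Scale(1.0), "P4": Scale(0.5), "P5": Scale(0.25)}

outputs = {name: scales[name](shared_branch(feats)) for name, feats in levels.items()}
```

With one scalar per level, the shared weights can stay identical across P3, P4, and P5 while the output magnitudes are brought into a common range.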

This approach effectively handles variations in target scale, enhancing both the model’s robustness and accuracy. The novel design elements within the SD-Head efficiently reduce network parameters and computation, making it more suitable for resource-constrained devices. Simultaneously, the SD-Head enhances the model’s ability to detect different scales, resulting in improved accuracy and performance. The final classification and regression outputs are computed as follows:

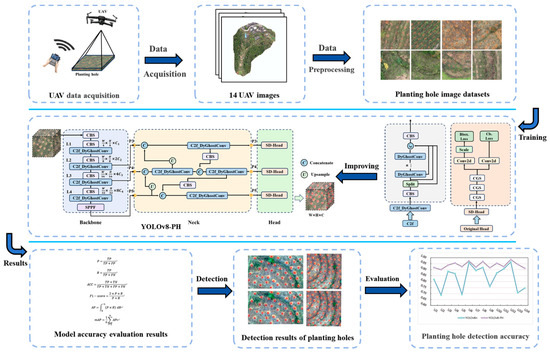

3.3. Detection Process of Planting Holes

The planting hole detection process utilizes the enhanced YOLO-PH network, as depicted in Figure 7. The technical roadmap encompasses several crucial steps: Firstly, UAV data is gathered from the designated planting hole area. Subsequently, the images are processed and segmented into training, validation, and test sets to establish a comprehensive dataset for planting holes. Next, the enhanced YOLO-PH network undergoes training on the designated set for 200 epochs to attain an optimal model. This model is then employed to accurately identify planting holes within UAV images. Finally, a thorough evaluation and analysis of recognition accuracy is conducted.

Figure 7.

Planting holes detection technology route with YOLO-PH network.

4. Results

4.1. Experimental Environment Configuration

To ensure reproducibility and alignment with established best practices, most training hyperparameters were set according to the default configuration recommended by the YOLOv8 framework. Specifically, parameters such as momentum (0.937), initial learning rate (set to 0.1) with a cosine annealing scheduler gradually decaying it to 0.01, weight decay (0.005), and batch size (16) were retained as defaults, which have been empirically validated for stability and performance in various detection tasks. This approach minimizes manual tuning while ensuring a fair and robust baseline for evaluating the model’s effectiveness in planting hole detection. The experimental hardware and software environment are shown in Table 3.

Table 3.

The hardware and software environment of the experiment.
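The learning-rate decay described above can be illustrated with the textbook cosine annealing formula. This sketch uses the start value (0.1), end value (0.01), and epoch count (200) stated in the text; the function name is hypothetical, and the exact schedule implemented inside the training framework may differ in detail.

```python
import math


def cosine_lr(epoch, total_epochs=200, lr_start=0.1, lr_end=0.01):
    """Cosine-annealed learning rate, decaying from lr_start to lr_end."""
    progress = epoch / total_epochs
    return lr_end + 0.5 * (lr_start - lr_end) * (1.0 + math.cos(math.pi * progress))


schedule = [cosine_lr(e) for e in (0, 50, 100, 150, 200)]
```

The rate falls slowly at first, fastest around the midpoint, and flattens again near the end of training.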

4.2. Model Performance Evaluation Indicators

The prediction results are classified into four categories based on the model’s predictions and the actual sample categories: true positive (TP), false positive (FP), true negative (TN), and false negative (FN). To comprehensively evaluate the performance of the model, multiple evaluation metrics were utilized, including Precision (P), Recall (R), Accuracy (ACC), F1-score, Average Precision (AP), mean average precision (mAP), mAP50, mAP75, and mAP50:95.
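The count-based metrics can be computed as in the sketch below. The counts are illustrative and not taken from the experiments, and accuracy is assumed here to be TP / (TP + FP + FN), since true negatives are not naturally defined for object detection; the paper may define it differently.

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall, F1-score, and accuracy from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    # Assumption: true negatives are treated as zero for detection.
    accuracy = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Illustrative counts: 90 correct detections, 5 false alarms, 10 misses.
metrics = detection_metrics(tp=90, fp=5, fn=10)
```

Under this definition accuracy is always lower than or equal to the F1-score, because every error counts fully in the denominator.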

4.3. Results of Ablation Experiments

The improved YOLO-PH detection algorithm introduces three pivotal enhancements to the original YOLOv8 model. To validate these advancements, ablation experiments were conducted under identical environmental conditions and model hyperparameters. The detailed results of these experiments are presented in Table 4. The results demonstrated improvements in performance metrics for models incorporating the three enhancements. Compared to the YOLOv8n model, Models A, B, and C increased mAP50 by 0.2%, 0.1%, and 0.2% and mAP75 by 0.2%, 1.1%, and 2.0%, respectively. Although Model A experienced a slight decrease of 0.2% in mAP50:95, Models B and C demonstrated improvements of 0.3% and 0.1%, respectively.

Table 4.

The results of ablation experiments.

In terms of computational load and parameter count, Model C retained the same parameters and FLOPs as the baseline model because it changes only the label assignment strategy. Models A and B, however, reduced parameters by 27.4% and 21.4% and FLOPs by 29.2% and 19.5%, respectively. Regarding detection speed, Models A and B were 14.6 FPS and 10.6 FPS faster than the baseline model, respectively. Furthermore, the enhanced detection accuracy of Model C confirms that the ATSS label assignment strategy is more suitable for detecting planting holes than the original YOLOv8 label strategy.

Furthermore, by incorporating the enhancements in the model, the combined models AB, AC, and BC exhibited significant improvements in performance compared to both the baseline and individual models (A, B, and C). Specifically, relative to the baseline model, AB, AC, and BC achieved gains of 1.1%, 0.8%, and 0.6% in mAP50; 0.7%, 0.8%, and 1.7% in mAP75; and 0.6%, 0.9%, and 1.3% in mAP50:95, respectively. In terms of parameter count and detection speed, Model C’s improvements based on the label assignment strategy did not impact either detection speed or model size significantly. Furthermore, models AC and BC maintained consistent FLOPs and parameter count with models A and B, respectively. Compared to the baseline model, the AB model reduced parameters by a substantial margin of 48.8%. Additionally, it decreased FLOPs by an equivalent percentage while achieving a remarkable increase of 26.8 FPS in detection speed. The integration of efficient features, such as the C2f_DyGhostConv module and SD-Head detector technology, effectively reduces model parameters and enhances detection performance.

4.4. Detection Results of Planting Holes

4.4.1. The Performance of YOLO-PH Detection Algorithm

Based on the ablation results, a comparison was made between the performance and speed of the baseline YOLOv8n and the improved YOLO-PH for detecting planting holes. A comprehensive evaluation was further conducted to ascertain the superiority of YOLO-PH over various existing improved YOLO models. The corresponding results are presented in Table 5.

Table 5.

Performance comparison of various existing improved YOLO models.

Compared to the baseline YOLOv8n, the improved YOLO-PH demonstrates a significant enhancement in performance and speed for planting hole detection. Specifically, it achieves a 1.3% increase in mAP50, a 2.1% increase in mAP75, and a 1.1% increase in mAP50:95. Moreover, it reduces both parameter count and FLOPs by 48.8% while improving detection speed by 26.8 FPS. Compared to YOLOv5n, YOLO-PH achieves higher mAP50 (by 1.2%), mAP75 (by 0.9%), and mAP50:95 (by 0.6%) and is 5.8 FPS faster, without increasing parameter count or computational load. Compared with YOLOv11n and YOLOv7-tiny, YOLO-PH has significantly fewer parameters (0.86 M and 4.44 M fewer, respectively) and lower FLOPs (1.9 G and 9.0 G lower, respectively). Furthermore, compared with mainstream one-stage and two-stage detectors such as RetinaNet-R18 and Faster R-CNN-R18, YOLO-PH achieves considerably higher detection speeds (220.7 FPS vs. 93.3 FPS and 82.7 FPS, respectively) while maintaining competitive or better accuracy. Notably, RetinaNet-R18 and Faster R-CNN-R18 incur substantially larger computational costs and parameter counts, with over 20 M and 28 M parameters and FLOPs exceeding 96 G and 101 G, respectively, which limits their real-time applicability in planting hole detection. These results demonstrate that the improved YOLO-PH model excels over other YOLO series models not only in accuracy but also in detection speed for planting hole detection.
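The speed figures quoted above can be translated into per-frame latency and relative speedup with simple arithmetic. The FPS values are those stated in the text; the baseline speed is derived here by subtracting the reported 26.8 FPS gain from 220.7 FPS, which is an inference rather than a value quoted from Table 5, and the helper names are hypothetical.

```python
YOLO_PH_FPS = 220.7         # reported for YOLO-PH
GAIN_OVER_BASELINE = 26.8   # reported FPS gain over YOLOv8n
RETINANET_FPS = 93.3        # reported for RetinaNet-R18
FASTER_RCNN_FPS = 82.7      # reported for Faster R-CNN-R18


def latency_ms(fps):
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def speedup(fast_fps, slow_fps):
    """How many times faster the first detector is than the second."""
    return fast_fps / slow_fps


baseline_fps = round(YOLO_PH_FPS - GAIN_OVER_BASELINE, 1)   # derived, not quoted
yolo_ph_latency = latency_ms(YOLO_PH_FPS)                   # about 4.5 ms
vs_retinanet = speedup(YOLO_PH_FPS, RETINANET_FPS)          # about 2.4x
vs_faster_rcnn = speedup(YOLO_PH_FPS, FASTER_RCNN_FPS)      # about 2.7x
```

Expressed this way, YOLO-PH processes a frame in roughly 4.5 ms, compared with more than 10 ms for either ResNet-18-based detector.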

4.4.2. Detection Results of Planting Holes

To assess the accuracy of the YOLO-PH model in detecting planting holes, a comparative analysis with various models was conducted using the 14 images (Table 6). The results revealed that the YOLOv8n model exhibited limited performance, missing 1496 planting holes and erroneously detecting 253 others, resulting in an accuracy of 0.79 and an F1-score of 0.88. Similarly, YOLOv5n underperformed relative to YOLO-PH with an F1-score of 0.90. YOLOv11n and YOLOv7-tiny also demonstrated inferior performance, with F1-scores of 0.92 and 0.87, respectively. In contrast, the YOLO-PH model outperformed these counterparts, with fewer missed detections of planting holes and accuracy metrics consistently exceeding 0.9. This suggests that the improved YOLO-PH detection algorithm is highly capable of capturing the key features of planting holes. Additionally, the mainstream detectors RetinaNet-R18 and Faster R-CNN-R18 exhibited lower accuracy and F1-scores (around 0.79–0.81 accuracy and 0.88–0.89 F1-score), further highlighting the superior detection capability and robustness of YOLO-PH in this task.

Table 6.

Accuracy indices of planting hole detection results.

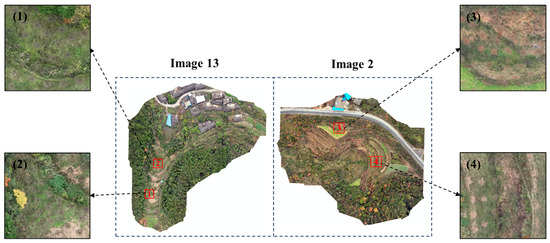

5. Discussion

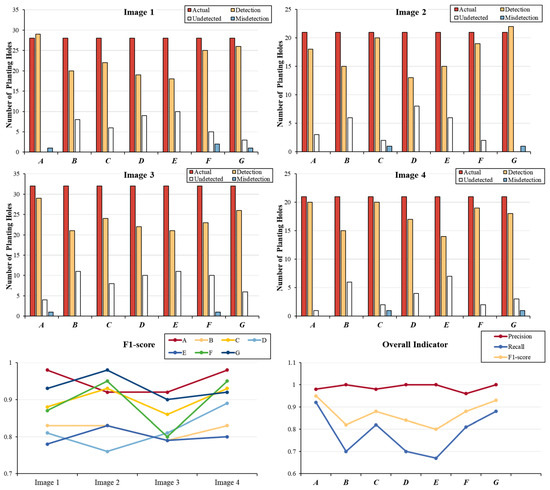

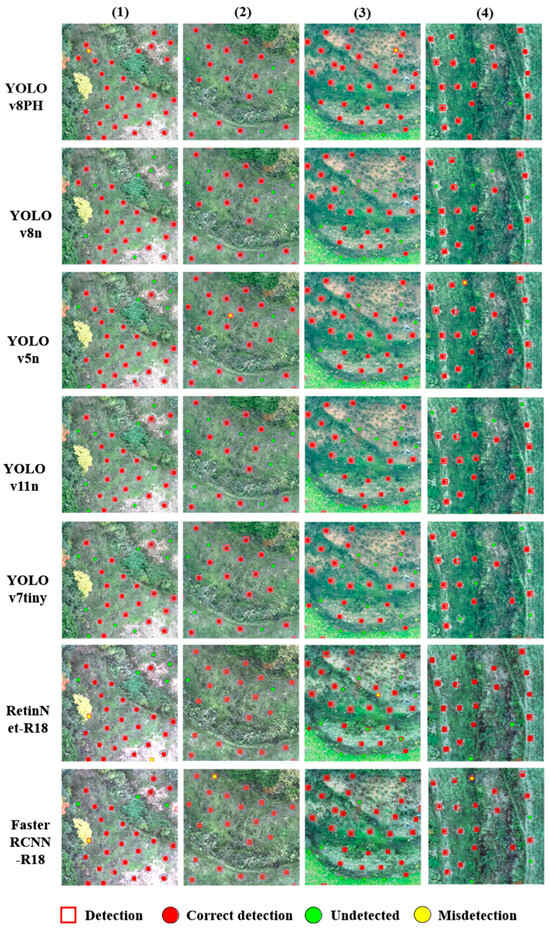

The results of the comparative detection experiments indicate that the performance of the YOLO-PH model is significantly influenced by scene complexity. To further assess its robustness and adaptability under challenging environmental conditions, four representative 20 m × 20 m subregions were selected for evaluation. Two of these subregions were extracted from Image 13, which featured the most complex environmental background, while the remaining two were taken from Image 2, characterized by the lowest detection performance across all test scenes. Detailed spatial configurations and characteristics of these subregions are illustrated in Figure 8.

Figure 8.

Validation regions in complex scenes.

The detection results across various models within these complex subregions, as shown in Figure 9, reveal substantial disparities in performance. YOLOv8n exhibited relatively weak results, with 31 missed detections and an overall F1-score of only 0.82, performing especially poorly in subregion 3, where it failed to detect 11 out of 32 targets. YOLOv5n showed moderate results, with 18 undetected and 2 misdetected targets, achieving an F1-score of 0.89. YOLOv11n suffered from similar limitations, missing 31 targets in total and obtaining a relatively low F1-score of 0.82, with its worst performance seen in subregion 2. YOLOv7-tiny demonstrated the lowest accuracy among the tested models, with 34 missed detections and an F1-score of 0.80, reflecting difficulties in both subregions 1 and 4. RetinaNet, although achieving higher precision in some scenes, still missed 19 targets and misdetected 3, resulting in an F1-score of 0.88. Faster R-CNN achieved a better balance, with 12 undetected and 3 misdetected targets, reaching an F1-score of 0.93, but still showed performance degradation in cluttered scenes such as subregion 4.

Figure 9.

Detection results for the four subregions in complex scenes. Note: A denotes YOLO-PH, B denotes YOLOv8n, C denotes YOLOv5n, D denotes YOLOv11n, E denotes YOLOv7-tiny, F denotes RetinaNet-R18, and G denotes Faster R-CNN-R18.
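The counts of undetected and misdetected targets discussed above are typically obtained by matching predicted boxes to annotated boxes. The following is a minimal sketch of one common way to do this, greedy matching in descending confidence order with an IoU threshold. The threshold of 0.5, the box format (x1, y1, x2, y2), and the function names are assumptions for illustration, not the evaluation procedure confirmed by this study.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_detections(preds, truths, iou_thr=0.5):
    """Return (tp, fn, fp) for one image or subregion.

    preds: list of (score, box); truths: list of boxes.
    Each annotated box can be matched by at most one prediction.
    """
    matched = set()
    tp = 0
    # Visit predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fn = len(truths) - tp  # annotated holes with no matching prediction
    fp = len(preds) - tp   # predictions with no matching annotated hole
    return tp, fn, fp


# Hypothetical boxes: two correct detections, one miss, one false alarm.
truths = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
preds = [(0.9, (1, 1, 10, 10)), (0.8, (21, 20, 30, 30)), (0.7, (80, 80, 90, 90))]
print(count_detections(preds, truths))
```

As a reading aid for the figures above, 11 missed targets out of 32 corresponds to a recall of 21/32, or roughly 0.66, for that subregion.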

In contrast, the YOLO-PH model exhibited superior performance across all subregions, highlighting its robustness in complex environments. In subregion 1, it achieved an F1-score of 0.98, with only a single missed target, demonstrating near-perfect detection accuracy. Similarly high performance was observed in subregions 2, 3, and 4, with minimal instances of false negatives. While occasional false positives were noted—attributable to the model’s enhanced feature extraction capabilities and sensitivity to fine-grained texture—the overall detection accuracy remained exceptionally high.

Representative detection outcomes are shown in Figure 10. Some missed detections may be attributed to subtle visual characteristics, such as low contrast with the background or partial occlusion, which limited the model’s ability to extract sufficient features. As for false positives, several cases occurred in regions with soil depressions or unnatural textures resembling planting holes. In some instances, the predicted bounding box may have enclosed a true pit-like structure that was not a standard planting hole, particularly when two predicted holes appeared unusually close together, which contradicts common forestry planting practices. These observations suggest that while the model exhibits strong semantic awareness, its contextual understanding of planting patterns could be further refined in future iterations.

Figure 10.

The visualization of detection results in complex scenes. Note: (1), (2), (3), (4) represent Image 1, Image 2, Image 3, Image 4.
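The observation that two predicted holes lying unusually close together are likely to include a false positive suggests a simple plausibility check. The sketch below is a hypothetical post-processing step, not part of YOLO-PH: it flags pairs of detections whose centers are closer than a minimum spacing. The function name, the use of ground coordinates in metres, and the 1.0 m spacing are all assumptions chosen for illustration; the appropriate spacing depends on the planting design.

```python
import math


def flag_close_pairs(centers, min_spacing_m):
    """Return index pairs (i, j) of detections closer than min_spacing_m.

    centers: list of (x, y) detection centers in metres (assumed ground
    coordinates, e.g. pixel coordinates scaled by the ground sampling distance).
    """
    flagged = []
    for i in range(len(centers)):
        for j in range(i + 1, len(centers)):
            if math.dist(centers[i], centers[j]) < min_spacing_m:
                flagged.append((i, j))
    return flagged


# Hypothetical centers: detections 1 and 2 are about 0.3 m apart.
centers = [(0.0, 0.0), (2.0, 0.0), (2.3, 0.1), (4.0, 0.0)]
print(flag_close_pairs(centers, min_spacing_m=1.0))
```

Flagged pairs would still need review or a confidence-based rule to decide which detection to keep, since the check alone cannot tell which of the two is the genuine planting hole.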

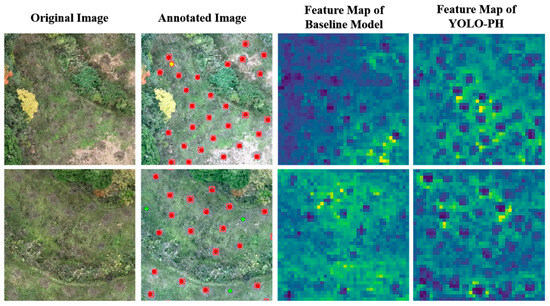

To further validate the enhanced edge feature extraction capability of the proposed YOLO-PH model, intermediate feature maps were visualized and compared between the baseline model (YOLOv8n) and YOLO-PH. As illustrated in Figure 11, two representative subregions with differing levels of scene complexity were selected for analysis. The feature responses generated by the baseline model appear relatively diffuse and lack well-defined structural patterns, particularly around object boundaries. This insufficient edge activation may hinder the accurate detection of planting holes with low contrast or ambiguous outlines. In contrast, the feature maps produced by YOLO-PH exhibit stronger, more coherent activations along the edges of planting holes, indicating its superior ability to capture fine-grained boundary details. These visualizations provide qualitative evidence that the proposed model achieves improved spatial localization and edge sensitivity, thereby contributing to its robust detection performance under complex environmental conditions.

Figure 11.

Comparative visualization of feature maps between the baseline and proposed YOLO-PH models.

Nonetheless, we acknowledge that the current model has not yet been systematically evaluated under low-resolution, oblique-view, or highly heterogeneous planting hole conditions. In addition, generalization across diverse soil colors, vegetation types, or seasonal variations remains a potential challenge, which we plan to explore in future work.

6. Conclusions

In this paper, the target detection network, YOLO-PH, was designed to efficiently and rapidly detect planting holes in complex environments. Verification experiments were also conducted to evaluate its performance. The main contributions of this study can be summarized as follows:

- (1) By introducing lightweight feature modules such as the Ghost module and dynamic convolution, along with a new detection head called the Siblings Detection Head, the proposed YOLO-PH model is made suitable for detecting planting holes in complex scenes.

- (2) The experimental results demonstrate that the YOLO-PH model achieves a mAP50 of 96%, a mAP50:95 of 51.6%, FLOPs of 4.2 G, and a detection speed of 220.7 FPS. Compared to the baseline model, mAP50 improves by 1.3% and mAP50:95 by 1.1%. Additionally, the detection speed increases by 13.8%, while FLOPs are reduced by 48.8%.

- (3) Further evaluation of the model’s detection performance in complex scenes demonstrated its exceptional accuracy and robustness in detecting planting holes. The model exhibits remarkable precision and rapid detection capabilities, and is well-suited for deployment on resource-constrained devices.
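The relative figures in contribution (2) can be cross-checked against the absolute values reported in the paper. The short calculation below uses only the reported numbers (220.7 FPS, the 26.8 FPS gain stated in the abstract, 4.2 G FLOPs, and the 48.8% reduction); the baseline values it derives are implied estimates that inherit the rounding of the reported figures, not values quoted from the paper.

```python
fps_yolo_ph = 220.7      # reported detection speed of YOLO-PH (FPS)
fps_gain = 26.8          # reported absolute gain over the baseline (FPS)
flops_yolo_ph = 4.2      # reported FLOPs of YOLO-PH (G)
flops_reduction = 0.488  # reported relative FLOPs reduction

# Implied baseline speed and the gain expressed relative to it.
fps_baseline = fps_yolo_ph - fps_gain
relative_speedup = fps_gain / fps_baseline

# Implied baseline FLOPs: 4.2 G is what remains after a 48.8% reduction.
flops_baseline = flops_yolo_ph / (1 - flops_reduction)

print(f"implied baseline speed: {fps_baseline:.1f} FPS")
print(f"relative speed gain:    {relative_speedup * 100:.1f}%")
print(f"implied baseline FLOPs: {flops_baseline:.1f} G")
```

The 26.8 FPS gain and the 13.8% increase therefore describe the same improvement, expressed in absolute and relative terms.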

Although the proposed model effectively detects planting holes in complex scenes, it possesses certain limitations. Firstly, the range of complex scene categories is relatively limited, and additional datasets representing a wider spectrum of scene complexities are required to enhance the model’s generalization capability. Moreover, occlusion caused by objects such as tree crowns still results in missed detections. Future research can focus on further investigating the characteristics of planting holes in multi-level complex scenes to optimize the detection model.

Author Contributions

Conceptualization, J.L. and H.L.; methodology, K.L.; software, K.L.; validation, J.L., K.L. and Y.Y.; formal analysis, J.L.; investigation, S.L.; resources, S.L.; data curation, S.L.; writing—original draft preparation, K.L.; writing—review and editing, J.L.; visualization, K.L. and Y.Y.; supervision, H.L.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Project number: 32171784).

Data Availability Statement

The UAV imagery data used in this study are not publicly available due to institutional data sharing policies, but they can be made available by the corresponding author upon reasonable request for academic and non-commercial use, subject to approval by the authors’ affiliated institution.

Conflicts of Interest

The authors declare no conflicts of interest.



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).