Abstract

In remote sensing building detection tasks, data acquisition remains a critical bottleneck that limits both model performance and large-scale deployment. Due to the high cost of manual annotation, limited geographic coverage, and constraints of image acquisition conditions, obtaining large-scale, high-quality labeled datasets remains a significant challenge. To address this issue, this study proposes an automatic semantic labeling framework for remote sensing imagery. The framework leverages geospatial vector data provided by OpenStreetMap, precisely aligns it with high-resolution satellite imagery from Bing Maps through projection transformation, and incorporates a quality-aware sample filtering strategy to automatically generate accurate annotations for building detection. The resulting dataset comprises 36,647 samples, covering buildings in both urban and suburban areas across multiple cities. To evaluate its effectiveness, we selected three publicly available datasets—WHU, INRIA, and DZU—and conducted three types of experiments using the following four representative object detection models: SSD, Faster R-CNN, DETR, and YOLOv11s. The experiments include benchmark performance evaluation, input perturbation robustness testing, and cross-dataset generalization analysis. Results show that our dataset achieved a mAP at 0.5 intersection over union of up to 93.2%, with a precision of 89.4% and a recall of 90.6%, outperforming the open-source benchmarks across all four models. Furthermore, when simulating real-world noise in satellite image acquisition—such as motion blur and brightness variation—our dataset maintained a mean average precision of 90.4% under the most severe perturbation, indicating strong robustness. In addition, it demonstrated superior cross-dataset stability compared to the benchmarks. Finally, comparative experiments conducted on public test areas further validated the effectiveness and reliability of the proposed annotation framework.

1. Introduction

With the rapid advancement of remote sensing technology, abundant spatial information has become available to support urban planning, land resource monitoring, environmental assessment, and disaster response. Among various surface features, buildings are among the most critical artificial structures [1,2]. Accurate and automated extraction of building footprints is essential for fine-grained urban management, dynamic land use monitoring, and effective disaster prevention and mitigation [3]. As the spatial resolution of remote sensing imagery continues to improve, traditional object detection methods based on handcrafted features and classical machine learning algorithms have proven inadequate to meet the increasing demands for accuracy and efficiency. In recent years, the emergence of deep learning—particularly convolutional neural networks (CNNs)—has introduced a new research paradigm for object detection in remote sensing, significantly enhancing the accuracy, efficiency, and robustness of automated building extraction [4]. Recent studies have addressed these challenges through innovative architectural designs. For instance, CSTFNet [5] integrates a CNN and Dual Swin-Transformer to handle the multi-source characteristics of hyperspectral data, achieving accurate fusion and classification of coastal areas by leveraging the CNN’s local feature extraction and the Transformer’s global context modeling. GLR-CNN [6] introduces a global latent relationship embedding module to capture hierarchical dependencies across scales, enhancing the adaptability of building detection in high-resolution scenes. Meanwhile, Zaid et al. [7] demonstrate that transfer learning-based CNN frameworks significantly improve generalization performance by adapting pre-trained models to diverse remote sensing scenarios, which is particularly beneficial for complex urban environments with mixed building types and textures. 
However, practical applications still face bottlenecks such as missed detections, false positives, and limited precision in complex scenes. Improving the performance of deep learning-based building detection models under diverse and heterogeneous conditions remains a key challenge in current remote sensing research [8].

Recent breakthroughs in deep learning have led to a proliferation of convolutional neural network (CNN)–based object detection frameworks for identifying buildings in remote sensing imagery. Early models—most notably Single Shot MultiBox Detector (SSD) and Faster Region-based Convolutional Neural Network (Faster R-CNN)—established high-accuracy baselines through region-proposal mechanisms and multi-scale feature fusion and are still valued for their robustness in densely built-up areas. More advanced architectures, including the You Only Look Once (YOLO) family and the DEtection TRansformer (DETR), strike a superior balance between accuracy and real-time performance [9,10,11,12,13,14]. YOLO offers exceptional inference speed and precision, making it a preferred choice for large-scale remote sensing tasks such as land use mapping, post-disaster assessment, and infrastructure monitoring [15,16]. DETR replaces hand-crafted components (for example, non-maximum suppression) with an end-to-end transformer mechanism, thereby streamlining the detection pipeline. Despite their architectural differences, both model families depend critically on large, accurately annotated, and geographically diverse training datasets. High-quality building datasets are essential for achieving robust and transferable performance across heterogeneous urban and rural environments. They enable models to learn intricate spatial patterns under challenging imaging conditions—such as variable illumination, shadowing, and diverse rooftop geometries—while mitigating overfitting to specific sensors or regions [17]. Conversely, a shortage of diverse annotations markedly reduces a model’s ability to generalize, degrading accuracy when confronted with unseen areas.

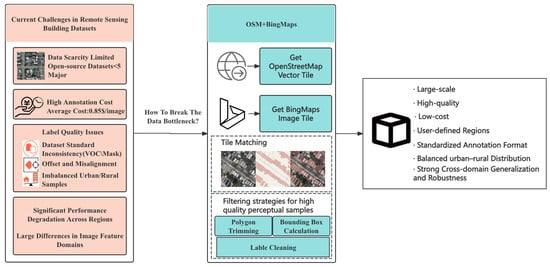

Although high-resolution satellite imagery is increasingly accessible, publicly available datasets specifically curated for building detection remain scarce and fragmented. Many suffer from limited geographic coverage, inadequate scene diversity, or modest sample sizes. Manual footprint annotation is also slow, costly, and error-prone, posing serious obstacles to scalable, repeatable deep learning workflows [18]. Consequently, automated, scalable, and accurate dataset-generation strategies have become a priority in remote sensing research. Leveraging open geospatial resources such as OpenStreetMap (OSM) and pairing them with high-resolution imagery plus quality-aware filtering offers a promising route to alleviate the annotation bottleneck. We therefore propose an automatic semantic labeling framework that aligns OSM vector data with Bing Maps imagery, filters low-quality samples, and automatically produces precise building annotations. The major limitations in current building datasets and the proposed solution strategy are summarized in Figure 1.

Figure 1.

Solving data bottlenecks in remote sensing building datasets using open geospatial resources.

To address these challenges, this study proposes an automated dataset generation framework that integrates OpenStreetMap—an open-source geospatial vector data platform—with high-resolution satellite imagery from Bing Maps [19]. OSM offers globally accessible, high-precision vector annotations of buildings, while Bing Maps provides wide spatial coverage, fine spatial resolution, and frequent data updates [20]. In this study, five cities—Washington, DC, Wellington, Sydney, New York, and Los Angeles—were selected as target areas for dataset generation. Although this study limits the geographic scope of the dataset and selects regions with relatively high data quality to control variability and ensure the consistency of generated samples, it is still important to acknowledge the inherent biases associated with the underlying data sources. OpenStreetMap (OSM), as the primary source of vector information, offers advantages such as openness and wide coverage. However, since OSM is contributed by volunteers, it exhibits notable disparities in completeness and semantic consistency across different regions. Urban areas in developed countries are typically well-annotated and frequently updated, whereas rural or less economically developed regions may suffer from incomplete building annotations, missing attributes, or outdated data. This uneven geographic coverage can introduce representational bias in the dataset’s spatial distribution, potentially limiting the generalizability of models trained on it for underrepresented regions. Similarly, Bing Maps imagery, which serves as the visual foundation of the dataset, is subject to quality fluctuations. These include differences in spatial resolution between regions, inconsistencies in acquisition time that may lead to seasonal or lighting variations, and atmospheric conditions such as cloud cover or haze that affect image clarity. 
Although these issues have been mitigated through region selection and quality control during data collection, they may still introduce a degree of noise and inconsistency in the generated samples. While the impact of these factors is relatively limited within the scope of this study, they should not be overlooked in future research aiming for globally scalable models. By integrating these two data sources, we developed an automated pipeline for large-scale building dataset generation. This approach substantially reduces the cost of manual annotation while maintaining consistency and precision. The resulting datasets support the training and evaluation of building detection models, enabling improvements in accuracy, robustness, and cross-scene generalization in complex remote sensing environments [21,22,23,24,25,26]. Overall, the proposed framework offers a technically feasible and cost-effective alternative to traditional dataset construction methods and holds substantial theoretical and practical value for deep learning applications in remote sensing.

In this paper, we present the following key contributions:

- We propose a robust and scalable automatic building annotation algorithm named MapAnnoFactory, which leverages geographic vector data from OpenStreetMap. Through projection transformation, it achieves precise alignment with high-resolution satellite imagery from Bing Maps. A quality-aware sample filtering strategy is incorporated to automatically generate high-precision building detection annotations.

- Using MapAnnoFactory, we constructed a large-scale building dataset named AutoBuildRS, which contains 36,647 fully annotated samples covering multiple metropolitan areas in Australia, New Zealand, and North America.

- Extensive experiments were conducted using four representative object detection models—SSD, Faster R-CNN, DETR, and YOLOv11s—and compared against the following three widely used open-source benchmark datasets: Wuhan University dataset (WHU), Institut National de Recherche en Informatique et en Automatique dataset (INRIA), and Dizhi University dataset (DZU). Evaluation metrics included mean average precision (mAP), precision, recall, and F1-score. Additional tests on input perturbation robustness and cross-dataset generalization further demonstrated the advantages of the proposed dataset in terms of detection accuracy, robustness, and generalization capability. Finally, experiments on a publicly available test region confirmed the outstanding performance and strong cross-regional transferability of the dataset generated by this framework.

2. Materials and Methods

This section introduces all the datasets used in this study, including the three publicly available datasets—WHU, DZU, and INRIA—as well as the proposed AutoBuildRS dataset and the final public test region dataset.

2.1. Materials

2.1.1. Open-Source Datasets

The WHU dataset is based on aerial imagery sourced from the Land Information New Zealand platform. The original images were downsampled to a spatial resolution of 0.3 m. The selected study area is located in the city of Christchurch and contains approximately 196,016 individual buildings. For model training and evaluation, the remote sensing imagery was segmented into 8188 image tiles of 512 × 512 pixels. The dataset was divided into the following three subsets: 6550 images for training, 819 for validation, and 819 for testing.

The INRIA dataset comprises aerial images covering a total area of 810 km² at a spatial resolution of 0.3 m. It spans a diverse range of urban environments, from densely populated areas like San Francisco’s financial district to complex mountainous regions such as Lienz in Tyrol, Austria, and includes 177,809 annotated buildings. Unlike conventional datasets, INRIA does not provide predefined training and testing splits at the tile level. Instead, the data is distributed in large GeoTIFF files (5000 × 5000 pixels). In this study, the high-resolution images were cropped into 8820 image patches of 640 × 640 pixels with slight overlaps to avoid truncating buildings near tile boundaries. The cropped dataset was split into the following three parts: 7056 images for training, 882 for validation, and 882 for testing.

The DZU dataset consists of typical urban building instances from the following four major Chinese cities: Beijing, Shanghai, Shenzhen, and Wuhan. It contains 7260 image samples (with an original size of 500 × 500 pixels as provided by the open-source dataset, without additional cropping or scaling) and a total of 47,081 annotated buildings, with a spatial resolution of 0.3 m. The dataset was divided into 5808 images for training, 726 for validation, and 726 for testing [27].

2.1.2. AutoBuildRS Dataset

The proposed AutoBuildRS dataset is generated by fusing OSM vector data with Bing Maps imagery to automatically annotate building footprints. It consists of 36,647 samples (256 × 256 pixels, 0.3 m resolution), covering 154,703 buildings across the following five cities: Washington, DC, Wellington, Sydney, New York, and Los Angeles. The dataset includes annotations in Pascal VOC format and reflects both high-density urban centers and low-density suburban areas. It is split into 29,317 training, 3664 validation, and 3666 testing images. This dataset supports large-scale, low-cost model training without manual labeling or reliance on expensive aerial data sources. Refer to Table 1 for specific parameters of the four datasets.

Table 1.

Comparison of benchmark remote sensing building datasets.

2.1.3. Public Test Area Dataset

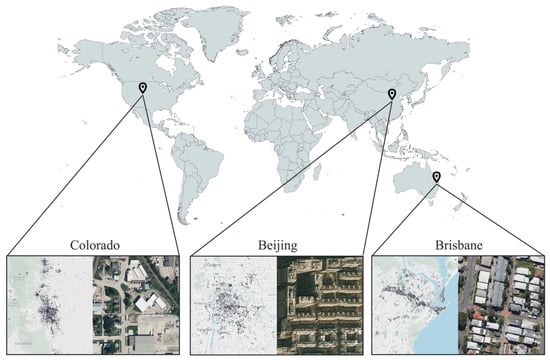

This study selected urban and suburban areas in Colorado, Beijing, and Brisbane as public test regions to evaluate the generalization performance of detection models across diverse geographic environments. A high-quality test dataset comprising 1267 samples was manually annotated and carefully validated to ensure high precision and consistency in building boundary delineation. The dataset captures a wide variety of architectural styles across different countries and urban settings, and exhibits strong representativeness in terms of sample distribution, image quality, and scene complexity. It serves as a reliable benchmark for assessing model stability and robustness in real-world cross-regional applications. The example diagram is shown in Figure 2.

Figure 2.

Spatial distribution of public test areas used for generalization evaluation.

2.2. Methods

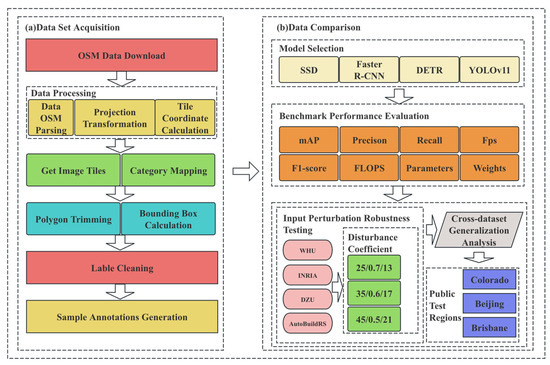

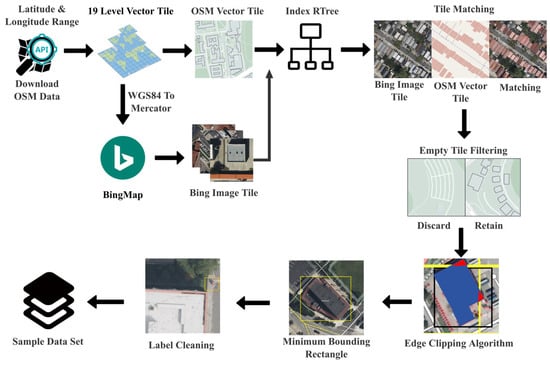

To construct a high-quality dataset for building detection research, we developed a systematic pipeline that integrates OpenStreetMap (Version SVN R11427) vector annotations with high-resolution Bing Maps (API version 13) imagery. This section outlines the methodology employed in this study, including the dataset construction process, model selection, and evaluation metrics. Figure 3 presents the overall framework of the study. This study adopts zoom level 19 tiles as Bing Maps at this level provides a ground sampling distance (GSD) of approximately 0.3 m per pixel. In urban areas, typical building footprints range from 25 to several hundred square meters. At this resolution, even small residential buildings can occupy between 50 and 300 pixels in an image, which is conducive to the extraction of meaningful spatial and structural features by convolutional neural networks. In contrast, zoom level 18 corresponds to a lower spatial resolution of approximately 0.6 m per pixel, where small buildings cover significantly fewer pixels, making them more likely to be missed by detection models and thereby reducing overall detection accuracy. Moreover, OpenStreetMap (OSM) provides vector-level building footprint annotations with spatial precision that often exceeds that of the imagery itself. The use of zoom level 19 facilitates more accurate alignment between OSM annotations and Bing Maps imagery due to the higher visual resolution, effectively reducing spatial misalignment errors. This helps to minimize annotation noise at the source and enhances the overall quality of the training dataset. The dataset generation workflow consists of the following four main stages: (1) parsing OSM data and retrieving corresponding tile images; (2) automatically generating initial bounding box annotations; (3) filtering and refining the annotations; (4) aligning the final annotations and exporting them in a standardized format. 
The entire pipeline is automated using custom Python (Version 3.9.21) scripts. The resulting dataset serves as the training and evaluation source for the following four representative object detection models: SSD, Faster R-CNN, DETR, and YOLOv11s. The complete workflow is illustrated in Figure 4.
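The zoom-level argument above can be checked numerically. The following is a minimal sketch, assuming the standard Web Mercator tile pyramid (256-pixel tiles, Earth radius 6,378,137 m); the function names `ground_resolution` and `footprint_pixels` are illustrative and not part of the authors' scripts.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis used by Web Mercator
TILE_SIZE_PX = 256          # standard web-map tile edge length


def ground_resolution(lat_deg: float, zoom: int) -> float:
    """Metres per pixel of the Web Mercator tile pyramid at a given latitude."""
    circumference = 2.0 * math.pi * EARTH_RADIUS_M
    map_width_px = TILE_SIZE_PX * 2 ** zoom
    return math.cos(math.radians(lat_deg)) * circumference / map_width_px


def footprint_pixels(area_m2: float, gsd_m: float) -> float:
    """Approximate pixel count covered by a footprint of the given area."""
    return area_m2 / (gsd_m ** 2)
```

At the equator this gives roughly 0.3 m per pixel at zoom level 19 and twice that at zoom level 18; the value shrinks with the cosine of the latitude, so the 0.3 m figure is an upper bound for the cities considered here.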

Figure 3.

Overview of the dataset construction and model evaluation pipeline. (a) Shows the automated acquisition and refinement process of building datasets based on OSM and Bing imagery. (b) Illustrates the evaluation framework including model benchmarking, robustness validation, and cross-domain generalization. Final performance is verified on a unified public test area to assess the effectiveness of the proposed dataset.

Figure 4.

Workflow of tile-based remote sensing sample generation from OSM vector data and Bing imagery, including tile filtering, spatial indexing, and bounding box refinement. In the edge clipping panel, blue marks the retained area and red marks the deleted area.

2.2.1. OSM Vector Data Definition

The OSM data model is based on XML, and its core elements contain the following three major types of abstraction: Node, Way, and Relation [1]. The fundamental objective of the parsing algorithm is to transform these geographic elements into a computer-processable structured data model while preserving the integrity of the spatial topological relationships [28].

The OSM dataset can be formalized as a quadruple $D = (N, W, R, B)$, where $N$ is the node set, $W$ is the way (path) set, $R$ is the relation set, and $B$ is the bounding box. A node $n_i \in N$ is a fundamental point-like entity: $\mathit{id}_i$ is its globally unique identifier, $\mathit{lat}_i$ represents the latitude (in degrees, ranging from −90° to 90°), and $\mathit{lon}_i$ represents the longitude (in degrees, ranging from −180° to 180°). Mathematically, $N$ can be represented as follows:

$$N = \{\, n_i = (\mathit{id}_i, \mathit{lat}_i, \mathit{lon}_i) \mid \mathit{lat}_i \in [-90, 90],\ \mathit{lon}_i \in [-180, 180] \,\}$$

The path set $W$ delineates linear or areal geographic elements, including roads, building contours, lakes, and analogous features, with the following algebraic structure:

$$W = \{\, w_j = (\mathit{wid}_j, \mathit{refs}_j, \mathit{tags}_j) \,\}$$

where $\mathit{wid}_j$ denotes the globally unique identifier of the way $w_j$ in the OSM dataset, analogous to the node identifier. $\mathit{refs}_j = [r_1, r_2, \dots, r_m]$ represents an ordered sequence of node identifiers, where each $r_k$ corresponds to the unique identifier of a node in $N$; the order of $\mathit{refs}_j$ defines the geometric shape and direction of the way. $\mathit{tags}_j$ encapsulates the attribute set of $w_j$, including semantic tags (e.g., “building = residential” for building type) and topological relations. The bounding box $B$ defines the geographic coverage of the dataset.

2.2.2. Parsing Algorithm and Implementation Principles

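The parser first processes node parsing and coordinate mapping by iterating through the <node> elements in the XML structure. For each node, the identifier and coordinates are read from the element attributes:

$$\mathit{id} = \mathrm{int}(\texttt{node.id}), \qquad \mathit{lat} = \mathrm{float}(\texttt{node.lat}), \qquad \mathit{lon} = \mathrm{float}(\texttt{node.lon})$$

Subsequently, each node is stored in a hash table as $H[\mathit{id}] = (\mathit{lat}, \mathit{lon})$. The use of a hash table enables constant-time access to node coordinates, which is critical for the efficiency of subsequent path-related computations.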

Each <way> element undergoes two essential parsing steps. The first extracts the sequence of node references by collecting the ref attributes from all child <nd> elements. This produces an ordered list that defines the spatial structure of the path, such as a road centerline or a building footprint. The extraction process can be formally described as follows:

$$\mathit{refs}(w) = [\, \mathit{nd}.\mathit{ref} \mid \mathit{nd} \in \mathrm{children}_{\texttt{nd}}(w) \,]$$

The second step builds the tag map from the child <tag> elements. In Equation (7), $k$ denotes the key of an OSM tag (e.g., the semantic identifier “building” in the tag “building = residential”), and $v$ represents the associated value (e.g., the building-type descriptor “residential” in the same tag). This key–value pairing encodes the semantic properties of the way $w$, such as its functional type, topological relationships, or metadata. The construction of the tag map can be formally expressed as follows:

$$\mathit{tags}(w) = \{\, k \mapsto v \mid \langle \texttt{tag}\ k, v \rangle \in w \,\} \qquad (7)$$

There are two modes for obtaining the bounding box $B$. The first is explicit declaration: if a <bounds> tag is present, the bounding box is read directly from its attributes. The extraction process is defined as follows:

$$B = (\texttt{minlat}, \texttt{minlon}, \texttt{maxlat}, \texttt{maxlon})$$

The second mode is implicit derivation: if no explicit <bounds> tag is provided, the bounding box is computed by traversing all nodes and determining the coordinate extrema. The computation is performed as follows:

$$B = \left( \min_i \mathit{lat}_i,\ \min_i \mathit{lon}_i,\ \max_i \mathit{lat}_i,\ \max_i \mathit{lon}_i \right)$$
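The parsing steps in this subsection can be sketched with the Python standard library. This is an illustrative outline under stated assumptions rather than the authors' implementation: the function name `parse_osm`, the returned dictionary layout, and the small `SAMPLE` document are hypothetical, while the element and attribute names (`node`, `way`, `nd`, `tag`, `bounds`, `minlat`, and so on) follow the OSM XML format.

```python
import xml.etree.ElementTree as ET

# Tiny hypothetical OSM extract used only for illustration.
SAMPLE = """<osm version="0.6">
  <node id="1" lat="38.90" lon="-77.04"/>
  <node id="2" lat="38.91" lon="-77.04"/>
  <node id="3" lat="38.91" lon="-77.03"/>
  <way id="10">
    <nd ref="1"/><nd ref="2"/><nd ref="3"/><nd ref="1"/>
    <tag k="building" v="residential"/>
  </way>
</osm>"""


def parse_osm(xml_text: str):
    """Return (nodes, ways, bbox) parsed from an OSM XML string."""
    root = ET.fromstring(xml_text)

    # Node pass: hash table id -> (lat, lon) for constant-time lookup.
    nodes = {}
    for node in root.iter("node"):
        nodes[int(node.get("id"))] = (float(node.get("lat")), float(node.get("lon")))

    # Way pass: ordered node references plus the key -> value tag map.
    ways = {}
    for way in root.iter("way"):
        refs = [int(nd.get("ref")) for nd in way.findall("nd")]
        tags = {tag.get("k"): tag.get("v") for tag in way.findall("tag")}
        ways[int(way.get("id"))] = {"refs": refs, "tags": tags}

    # Bounding box: explicit <bounds> if present, else node extrema.
    bounds = root.find("bounds")
    if bounds is not None:
        bbox = tuple(float(bounds.get(k)) for k in ("minlat", "minlon", "maxlat", "maxlon"))
    elif nodes:
        lats = [lat for lat, _ in nodes.values()]
        lons = [lon for _, lon in nodes.values()]
        bbox = (min(lats), min(lons), max(lats), max(lons))
    else:
        bbox = None
    return nodes, ways, bbox
```

Calling `parse_osm(SAMPLE)` exercises the implicit bounding-box mode, because the sample contains no `<bounds>` element.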

2.2.3. Projection Transformation

1. Web Mercator Projection Transformation

The OSM file adopts the WGS84 coordinate reference system (EPSG:4326) by default [29,30], in which geographic coordinates are represented as longitude and latitude on the Earth’s ellipsoidal surface. However, WGS84 is not suitable for direct mapping to pixel or tile coordinates. To address this, Web Mercator, a planar projection designed for web-based map visualization, is employed; it enables direct conversion between tile coordinates and pixel coordinates. In this study, we utilize the Web Mercator projection to convert WGS84 geographic coordinates into projected planar coordinates $(x, y)$ [30]. The projection first maps the Earth’s surface onto a cylindrical surface, which is then unfolded into a flat map. Assuming the Earth’s radius is $R$ (6,378,137 m, the WGS84 semi-major axis used by Web Mercator) and given the longitude–latitude pair $(\lambda, \varphi)$ in degrees, the projected coordinates are calculated as follows:

$$x = R \cdot \frac{\pi \lambda}{180}, \qquad y = R \cdot \ln \tan\!\left( \frac{\pi}{4} + \frac{\pi \varphi}{360} \right)$$
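The forward projection can be written in a few lines. This is a minimal sketch of the standard spherical Web Mercator formula; the function name `wgs84_to_web_mercator` is illustrative, and inputs are assumed to be in degrees with latitude inside the projection's valid range (about ±85.05°).

```python
import math

EARTH_RADIUS_M = 6378137.0  # sphere radius used by Web Mercator (EPSG:3857)


def wgs84_to_web_mercator(lon_deg: float, lat_deg: float) -> tuple[float, float]:
    """Project longitude/latitude in degrees to Web Mercator metres."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4.0 + math.radians(lat_deg) / 2.0))
    return x, y
```

The projected plane is square: the x extent at ±180° longitude equals the y extent at roughly ±85.05° latitude, which is why the tile pyramid can be built on a unit square.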

2. Tile Coordinate Calculation

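In practical web mapping systems, the map is partitioned into image tiles to facilitate distributed rendering. At each zoom level $z$, the tiling scheme divides the global map into $2^z \times 2^z$ tiles [31]. To convert geographic coordinates (longitude $\lambda$ and latitude $\varphi$, in degrees) into tile indices, the coordinates must first be normalized to the unit square. The normalization is computed as follows:

$$x_n = \frac{\lambda + 180}{360}, \qquad y_n = \frac{1}{2} - \frac{1}{2\pi} \ln \tan\!\left( \frac{\pi}{4} + \frac{\pi \varphi}{360} \right)$$

According to the zoom level $z$, the normalized geographic coordinates are then converted into tile-level floating-point indices for further processing, which is shown as follows:

$$x_t = x_n \cdot 2^z, \qquad y_t = y_n \cdot 2^z$$

Finally, pixel coordinates are calculated. In the standard tile system, each tile is divided into 256 × 256 pixels. Given that the integer tile indices of the current tile are $(X, Y) = (\lfloor x_t \rfloor, \lfloor y_t \rfloor)$, the corresponding pixel coordinates are computed as follows:

$$p_x = \lfloor (x_t - X) \cdot 256 \rfloor, \qquad p_y = \lfloor (y_t - Y) \cdot 256 \rfloor$$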
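The three steps (normalization, tile index, in-tile pixel) chain together as follows. This is a minimal sketch of the standard web-map tile arithmetic; the function name `lonlat_to_tile_pixel` is illustrative, and inputs are assumed to be in degrees and inside the valid Web Mercator latitude range.

```python
import math

TILE_SIZE_PX = 256  # pixels per tile edge in the standard tile system


def lonlat_to_tile_pixel(lon_deg: float, lat_deg: float, zoom: int):
    """Return ((tile_x, tile_y), (pixel_x, pixel_y)) for a WGS84 point."""
    # Step 1: normalize to the unit square (origin at the top-left corner).
    x_n = (lon_deg + 180.0) / 360.0
    lat_rad = math.radians(lat_deg)
    y_n = 0.5 - math.log(math.tan(math.pi / 4.0 + lat_rad / 2.0)) / (2.0 * math.pi)

    # Step 2: scale to floating-point tile indices at this zoom level.
    n_tiles = 2 ** zoom
    x_t, y_t = x_n * n_tiles, y_n * n_tiles

    # Step 3: integer tile index plus pixel offset inside that tile.
    tile_x, tile_y = math.floor(x_t), math.floor(y_t)
    pixel_x = math.floor((x_t - tile_x) * TILE_SIZE_PX)
    pixel_y = math.floor((y_t - tile_y) * TILE_SIZE_PX)
    return (tile_x, tile_y), (pixel_x, pixel_y)
```

Because the vertical axis points downward in tile space, higher latitudes map to smaller `tile_y` values.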

3. Bing Map Image Tile Index Generation and Download

In web-based mapping systems, hierarchical spatial partitioning is commonly used to support multi-level zooming. Bing Maps employs a quadtree structure, in which the map is recursively divided into 2 × 2 subregions. Each zoom level $z$ corresponds to a grid of size $2^z \times 2^z$, and each tile is uniquely identified by a QuadKey string. The floating-point tile coordinates $(x_t, y_t)$ are derived from projected geographic coordinates using precise transformation formulas and are then floored to obtain integer tile indices $(X, Y) = (\lfloor x_t \rfloor, \lfloor y_t \rfloor)$. The corresponding imagery is retrieved through a URL constructed by combining the subdomain, primary domain, path, imagery type prefix, QuadKey, file extension, and query parameters.
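The QuadKey itself is obtained by interleaving the bits of the integer tile indices, one base-4 digit per zoom level. The sketch below follows the publicly documented Bing Maps tile system; the function name `tile_to_quadkey` is illustrative, and URL construction is omitted because the exact endpoint components are not given here.

```python
def tile_to_quadkey(tile_x: int, tile_y: int, zoom: int) -> str:
    """Interleave tile index bits into a base-4 QuadKey string."""
    digits = []
    for level in range(zoom, 0, -1):
        mask = 1 << (level - 1)  # bit for this quadtree level
        digit = 0
        if tile_x & mask:
            digit += 1           # east half of the parent tile
        if tile_y & mask:
            digit += 2           # south half of the parent tile
        digits.append(str(digit))
    return "".join(digits)
```

The key length equals the zoom level, and a tile's key is always a prefix of its children's keys, which is what makes the quadtree indexable by string.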

2.2.4. Category Mapping and Geometric Processing

1. Category Mapping

This part of the algorithm selects a primary category tag from the tag set of each way object in the OSM data; this tag is then used to generate the annotation files. Each tag is denoted as a key–value pair $(k, v)$, such as (building, residential). The composition of the tag set for each way $w$ is specifically defined as follows:

$$T(w) = \{ (k_1, v_1), (k_2, v_2), \dots, (k_m, v_m) \}$$

The study defines a set of keywords of interest, such as the following:

Finally, a category label selection function is defined to determine the label name used for generating annotation files in subsequent steps. The specific function is formulated as follows:
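One plausible reading of such a selection function is sketched below. It is an assumption-laden illustration, not the authors' definition: it assumes the label is the first key of interest that appears in the way's tag map, that ways without any such key are skipped, and that the keyword tuple `("building",)` is only a placeholder for the actual keyword set.

```python
from typing import Optional


def select_label(tags: dict[str, str],
                 keys_of_interest: tuple[str, ...] = ("building",),  # placeholder set
                 ) -> Optional[str]:
    """Return the first key of interest present in the tag map, else None."""
    for key in keys_of_interest:  # tuple order encodes priority
        if key in tags:
            return key
    return None  # way carries no category of interest and is skipped
```

Returning the key rather than the value keeps a single detection class ("building") regardless of whether the footprint is tagged residential, commercial, or simply "yes".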

2. Polygon Trimming and Bounding Box Calculation

When a building or any target object is divided across multiple image tiles, its annotation boxes may be fragmented into several partial segments. Including these small fragments in training is undesirable as they often correspond to ambiguous regions such as eaves, shadows, or unidentifiable object remnants. However, discarding too many of these fragments may result in the complete loss of valid targets. To address this issue, this study introduces the Adaptive Contribution-based Retention (ACR) algorithm, a rule-based mechanism designed to automatically retain meaningful object segments while filtering out uninformative ones [32,33,34]. The core decision formula of ACR is defined as follows:

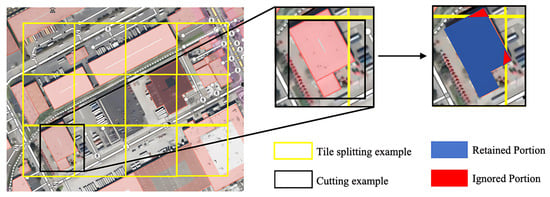

where in the area-contribution-based adaptive retention algorithm, let denote the area of the -th sub-block, and let represent the total area of the original bounding box. The following two threshold parameters are defined: the cumulative contribution threshold and the minimum individual contribution threshold . For each sub-block, the retention indicator is set to 1 if and only if or , where denotes the accumulated area of the previous sub-blocks. This rule implies that the largest sub-blocks are retained first until their cumulative area reaches at least the proportion of the original bounding box. Subsequently, only sub-blocks with area ratios no less than are retained, effectively filtering out trivial fragments. This approach ensures that the main structure is preserved while suppressing noise introduced by segmentation. The example of Polygon Trimming is shown in Figure 5.

Figure 5.

Workflow of the Adaptive Contribution-based Retention (ACR) algorithm for filtering partial object annotations across tile boundaries.
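The ACR retention rule can be sketched directly from its description. This is an illustrative reading, not the authors' code: the function name `acr_retain` is hypothetical, fragments are assumed to be processed in order of decreasing area, and the accumulated area is assumed to include every previously visited fragment.

```python
def acr_retain(fragment_areas: list[float], total_area: float,
               alpha: float, beta: float) -> list[bool]:
    """Return one keep/drop flag per fragment, in the input order."""
    if total_area <= 0:
        raise ValueError("total_area must be positive")

    # Visit fragments from largest to smallest, remembering original positions.
    order = sorted(range(len(fragment_areas)),
                   key=lambda i: fragment_areas[i], reverse=True)
    keep = [False] * len(fragment_areas)
    accumulated = 0.0  # area of all fragments visited so far
    for i in order:
        area = fragment_areas[i]
        below_cumulative = accumulated < beta * total_area
        large_enough = area / total_area >= alpha
        keep[i] = below_cumulative or large_enough
        accumulated += area
    return keep
```

With `alpha = 0.1` and `beta = 0.8`, a footprint split into fragments of 700, 200, 60, and 40 area units (total 1000) keeps the first two and drops the last two: the cumulative threshold is met after 900 units, and neither remaining fragment reaches 10% of the total.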

To verify the robustness of the ACR algorithm, we conducted a sensitivity analysis to evaluate its impact on the retention of valid targets and the filtering of fragmented annotations under the YOLOv11s network. We assessed multiple combinations of α ∈ {0.05, 0.1, 0.15} and β ∈ {0.7, 0.8, 0.9}, along with a baseline scenario where ACR was not applied, using a fragmented building annotation dataset containing 5000 images. Performance was measured using three metrics: mAP@0.5, precision, and recall. As shown in Table 2, the combination of α = 0.10 and β = 0.8 achieved the best balance, with a recall of 91.2% and a precision of 89.5%. When α exceeded 0.15, the recall dropped sharply due to the exclusion of small but critical fragments. Conversely, lowering β below 0.7 introduced substantial noise and reduced precision as too many trivial fragments were retained. These findings confirm that the selected thresholds are robust for our dataset and provide practical guidance for adapting the ACR algorithm to other domains.

Table 2.

Performance comparison under different ACR threshold combinations (α, β) on the fragmented annotation dataset. Metrics include mAP@0.5, precision, and recall.

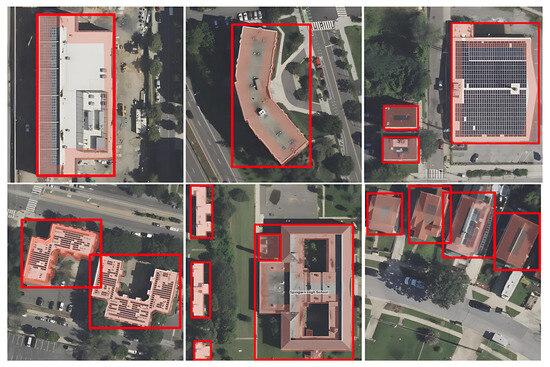

This step clips building polygons to tile boundaries and extracts the minimum bounding rectangle (MBR) of each cropped sub-polygon for training annotation. Figure 6 shows an example of the generated MBR annotation. The tile boundary is defined by the unit image size, usually 256 × 256 pixels. Shapely polygon objects are constructed from the vertex coordinates of each footprint.

Figure 6.

The generated optimal minimum bounding rectangle (MBR) annotation. The red thick rectangular box in the figure represents the generated bounding box.

The polygon clipping operation is then performed using geometric intersection, defined as $P' = P \cap T$, where $P$ is the building polygon and $T$ is the tile rectangle. The Sutherland–Hodgman polygon clipping algorithm is adopted, which clips the polygon against one boundary edge at a time. It sequentially intersects the set of polygon vertices with the half-planes defined by the clipping boundaries in the following manner:

- For each clipping edge;

- Sequentially process each edge of the original polygon and compute its intersections with the clipping boundary;

- Retain all vertices and intersection points that lie within the clipping region to form a new set of polygon vertices.
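The steps above can be sketched for the axis-aligned tile case in pure Python. This is an illustrative sketch of Sutherland–Hodgman clipping, not the authors' implementation; the function name `clip_polygon_to_tile` is hypothetical, and the tile is assumed to span `[0, tile_size]` on both axes in pixel coordinates.

```python
def clip_polygon_to_tile(vertices, tile_size=256.0):
    """Clip a polygon (list of (x, y)) to the square [0, tile_size]^2."""

    def clip_against(points, inside, intersect):
        # One Sutherland-Hodgman pass against a single half-plane.
        out = []
        for i, cur in enumerate(points):
            prev = points[i - 1]  # wraps to the last vertex when i == 0
            if inside(cur):
                if not inside(prev):
                    out.append(intersect(prev, cur))  # entering the region
                out.append(cur)
            elif inside(prev):
                out.append(intersect(prev, cur))      # leaving the region
        return out

    def cross_x(x0):
        # Intersection of segment p-q with the vertical line x = x0.
        return lambda p, q: (x0, p[1] + (q[1] - p[1]) * (x0 - p[0]) / (q[0] - p[0]))

    def cross_y(y0):
        # Intersection of segment p-q with the horizontal line y = y0.
        return lambda p, q: (p[0] + (q[0] - p[0]) * (y0 - p[1]) / (q[1] - p[1]), y0)

    half_planes = [
        (lambda p: p[0] >= 0.0, cross_x(0.0)),
        (lambda p: p[0] <= tile_size, cross_x(tile_size)),
        (lambda p: p[1] >= 0.0, cross_y(0.0)),
        (lambda p: p[1] <= tile_size, cross_y(tile_size)),
    ]
    points = list(vertices)
    for inside, intersect in half_planes:
        if not points:
            break  # polygon lies entirely outside the tile
        points = clip_against(points, inside, intersect)
    return points
```

An intersection is only computed when one endpoint is inside and the other outside the current half-plane, so the divisions never see a zero denominator. For concave footprints the algorithm can return a single polygon with overlapping edges where the true result has several parts, which is one reason a general-purpose geometry library may be preferred in practice.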

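Let $\mathcal{P} = \{P_1, \dots, P_m\}$ be a set of polygons. For each polygon $P_k$, let its vertex set be denoted as $V_k = \{(x_j, y_j)\}$. The corresponding bounding box is computed as follows:

$$B_k = \left( \min_j x_j,\ \min_j y_j,\ \max_j x_j,\ \max_j y_j \right)$$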

Obtain the final bounding box as follows:
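A minimal sketch of the bounding-box step is given below. The extrema computation follows the description above; the final snapping to integer pixels and clamping to the tile extent in `to_pixel_box` is an assumption made for illustration, and both function names are hypothetical.

```python
import math


def minimum_bounding_rectangle(vertices):
    """Axis-aligned box (x_min, y_min, x_max, y_max) enclosing the vertices."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


def to_pixel_box(bbox, tile_size=256):
    """Assumed final step: round outward to whole pixels and clamp to the tile."""
    x_min, y_min, x_max, y_max = bbox
    return (max(0, math.floor(x_min)), max(0, math.floor(y_min)),
            min(tile_size, math.ceil(x_max)), min(tile_size, math.ceil(y_max)))
```

Rounding outward (floor on the minimum corner, ceil on the maximum corner) keeps the whole clipped footprint inside the box, at the cost of at most one extra pixel per side.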

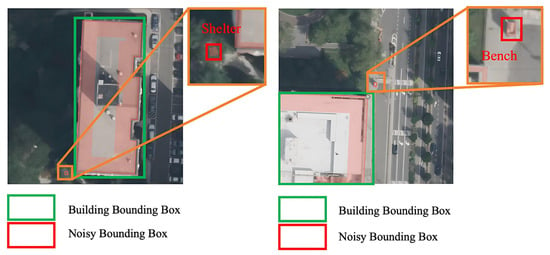

2.2.5. Label Cleaning

Automatically generated annotation files for urban building recognition often contain extremely small bounding boxes. As can be seen from Figure 7, these bounding boxes might correspond to separate toilets, guard booths, pavilions, or even false labels introduced due to errors in manual annotation. Because such objects occupy only a few pixels, they convey limited visual information and readily trigger false positives or model overfitting. At zoom level 19 in Bing Maps, the ground sampling distance is approximately 0.3 m per pixel. At this resolution, even small residential buildings typically occupy several hundred pixels in area. During sample generation and statistical analysis, we observed that bounding boxes smaller than 280 pixels² predominantly correspond to marginal structures such as public restrooms, guard booths, and pavilions, or result from annotation noise caused by OSM data inaccuracies or misalignment errors. To enhance dataset quality and improve training robustness, we apply the following area-based filtering strategy: any bounding box whose area (computed from its width and height) is smaller than 280 pixels² is discarded prior to training. Its formula is as follows:

$$\mathrm{keep}(b) = \begin{cases} 1, & w_b \cdot h_b \ge 280 \\ 0, & \text{otherwise} \end{cases}$$

where $w_b$ and $h_b$ are the width and height of bounding box $b$ in pixels.
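The area filter is straightforward to express in code. The sketch below assumes boxes are stored as `(x_min, y_min, x_max, y_max)` tuples in pixel coordinates; the function and constant names are illustrative.

```python
MIN_BOX_AREA_PX2 = 280  # area threshold in square pixels, as stated in the text


def filter_small_boxes(boxes, min_area=MIN_BOX_AREA_PX2):
    """Keep only boxes whose width x height is at least min_area."""
    kept = []
    for x_min, y_min, x_max, y_max in boxes:
        area = (x_max - x_min) * (y_max - y_min)
        if area >= min_area:  # boxes strictly smaller than the threshold are dropped
            kept.append((x_min, y_min, x_max, y_max))
    return kept
```

At roughly 0.3 m per pixel, 280 pixels² corresponds to about 25 m² on the ground, which matches the lower end of the typical footprint range quoted earlier.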

Figure 7.

Noisy bounding box annotations that must be filtered using an area threshold.

2.2.6. OSM Tag Quality Filtering Logic Based on Editing History

To enhance the quality of samples generated from OSM data, the MapAnnoFactory framework implements an edit-history-based filtering strategy. This method systematically filters out low-quality labels by quantitatively evaluating the community-verified level of the data. The core of edit history analysis lies in transforming ‘traces of community collaboration’ into quantifiable quality indicators. Editor credibility constitutes the primary evaluation dimension, quantified through the following formula:

The score combines three components. The normalized contribution value is based on the total number of building elements an editor has processed, with the maximum value corresponding to 100 points, reflecting the accumulation of editing experience. The revision retention rate, i.e., the proportion of an editor’s contributions retained in subsequent versions, is mapped directly to a 0–100 scale and indicates the community’s recognition of their work. Domain focus is defined as the proportion of building-related elements in an editor’s total contributions: a share of at least 70% is assigned 100 points, and lower shares are scaled proportionally. This ensures the editor’s professionalism in annotating building tags. Under this scoring system, editors whose credibility score reaches the high-credibility threshold are defined as “highly credible editors”, and their contributed tags are assigned higher weights.

The editing stability of individual elements constitutes another critical dimension. For a single building element, quality is taken to correlate positively with editing frequency. Let N be the total number of edits for an element. If N reaches the required minimum and at least 2 of these edits are made by editors whose credibility score meets the required level, the element is classified as a “community-deeply-verified element”, as multiple iterative revisions reduce initial drawing errors. Conversely, if N = 1 and the editor’s credibility score falls below the required level, the element is deemed a “low-verification element”, posing a high risk of semantic bias (e.g., bounding box offset, mislabeling) and requiring secondary verification.

Temporal consistency must also be evaluated. Let T denote the time difference between the last edit of an element and the acquisition time of the corresponding Bing Maps image. When T exceeds the upper threshold (specified in years), the probability of misalignment between the element’s tag and the real-world scene increases significantly (e.g., buildings demolished or newly constructed but not updated in OSM). In such cases, supplementary verification against visual features of the image (e.g., presence of continuous building contours, shadow matching) is required, and mismatched elements are excluded directly. When T falls within the lower threshold (also specified in years), the risk of temporal inconsistency is low, and elements can be prioritized for retention.

This editing-history-based filtering logic converts subjective assessments of tag reliability into objective quantitative decisions by mining “quality signals implicit in community collaboration”. Elements that were contributed by highly credible editors, verified multiple times, and temporally matched are included in the high-quality sample library. Isolated contributions from low-credibility editors, elements with few edits, and elements with temporal lag are filtered out or flagged for verification, which improves the consistency between dataset tags and real-world geographic scenes. Using the YOLOv11s model, we compared mAP@0.5 before and after applying the edit-history-based OSM tag quality filtering on samples from three established regions: New Zealand, Sydney, and Washington D.C. After filtering, mAP@0.5 increased by 2.37%, 1.74%, and 3.77%, respectively, which supports the usability of this method.
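The three filtering dimensions (editor credibility, editing stability, and temporal consistency) can be sketched as follows. This is only an illustration under stated assumptions: the weights `W_CONTRIB`, `W_RETAIN`, `W_FOCUS` and the thresholds `HIGH_CRED`, `LOW_CRED`, `MIN_EDITS`, `T_STALE_YEARS` are hypothetical placeholders, since their actual values are not given in the text; the order in which the checks are applied is also an assumption. Only the 70% domain-focus target, the "at least 2 credible edits" condition, and the N = 1 case come from the description above.

```python
# Hypothetical placeholders: the actual weights and thresholds are not given in the text.
W_CONTRIB, W_RETAIN, W_FOCUS = 0.4, 0.3, 0.3
HIGH_CRED = 80.0      # assumed score for a "highly credible editor"
LOW_CRED = 50.0       # assumed score below which a single editor is distrusted
MIN_EDITS = 3         # assumed minimum edit count for deep verification
T_STALE_YEARS = 2.0   # assumed age beyond which image verification is required

FOCUS_TARGET = 0.70   # stated in the text: 70% building-related share earns 100 points


def focus_score(building_share):
    """Domain focus on a 0-100 scale; proportional below the 70% target (assumed reading)."""
    if building_share >= FOCUS_TARGET:
        return 100.0
    return 100.0 * building_share / FOCUS_TARGET


def credibility(contrib_norm, retention_rate, building_share):
    """Weighted editor score.

    contrib_norm: normalized contribution value, 0-100.
    retention_rate: share of contributions retained, 0-1 (mapped to 0-100).
    building_share: share of building-related edits, 0-1.
    """
    return (W_CONTRIB * contrib_norm
            + W_RETAIN * 100.0 * retention_rate
            + W_FOCUS * focus_score(building_share))


def classify_element(editor_scores, years_since_edit):
    """Label one OSM element from its editors' scores and the edit-to-image time gap."""
    if years_since_edit > T_STALE_YEARS:
        return "verify_against_image"
    trusted = sum(score >= HIGH_CRED for score in editor_scores)
    if len(editor_scores) >= MIN_EDITS and trusted >= 2:
        return "deeply_verified"
    if len(editor_scores) == 1 and editor_scores[0] < LOW_CRED:
        return "low_verification"
    return "retain"


label = classify_element([85.0, 90.0, 40.0], years_since_edit=0.5)
```

Keeping the thresholds as named constants makes it easy to substitute the values actually used by the authors once they are known.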

2.2.7. Sample Annotations Generation

The Pascal Visual Object Classes (VOC) annotation specification defines a tree-structured logical format for annotation files, which can be represented as a labeled directed tree. Formally, the XML annotation tree is defined by a node set, an edge set that encodes parent–child relationships, and a labeling function that assigns textual values to each node. The structure satisfies the following recursive definition:
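As a concrete illustration of this tree structure (not the formal recursive definition itself), the sketch below builds a minimal VOC-style annotation with Python's standard `xml.etree.ElementTree`. The function name `build_voc_annotation`, the file name, and the 256 × 256 tile size are assumptions made for the example; the pipeline itself lists `lxml` among its dependencies, and the standard library is used here only to keep the sketch self-contained.

```python
import xml.etree.ElementTree as ET


def build_voc_annotation(filename, width, height, boxes, depth=3):
    """Build a Pascal VOC-style annotation tree.

    boxes: iterable of (class_name, xmin, ymin, xmax, ymax) in pixel coordinates.
    """
    root = ET.Element("annotation")               # root node of the tree
    ET.SubElement(root, "filename").text = filename

    size = ET.SubElement(root, "size")            # child node holding image dimensions
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)

    for name, xmin, ymin, xmax, ymax in boxes:    # one <object> subtree per building
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in (("xmin", xmin), ("ymin", ymin),
                           ("xmax", xmax), ("ymax", ymax)):
            ET.SubElement(bndbox, tag).text = str(value)
    return root


# Illustrative values only.
root = build_voc_annotation("tile_0001.jpg", 256, 256,
                            [("building", 12, 30, 96, 110)])
xml_text = ET.tostring(root, encoding="unicode")
```

Each `SubElement` call adds one parent–child edge, and each `.text` assignment plays the role of the labeling function on a leaf node.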

2.2.8. Experimental Platform and Hyper-Parameter Settings

The sample generation of the MapAnnoFactory algorithm was conducted on the following consumer-grade hardware: an AMD Ryzen 7 7700X CPU (8 cores, 3.8 GHz base frequency; Advanced Micro Devices, Inc., manufactured by TSMC in Hsinchu City, Taiwan Province of China) paired with 32 GB of DDR4 RAM (Shenzhen Xinkexing Stark Technology Co., Ltd., Shenzhen, China) and a 512 GB NVMe SSD (KIOXIA Corporation, headquartered in Japan, with production operations in the Taiwan region of China). The process relied on 8-thread CPU parallelism without GPU acceleration. Memory usage varied with OSM tag complexity and geographic density, stabilizing below 6 GB for sparse regions (e.g., New Zealand, Sydney) and peaking at 8 GB for dense urban areas (e.g., New York, Los Angeles), well within the 32 GB capacity. Processing time correlated strongly with feature density, ranging from 15–20 min per cycle for sparse regions to 80–100 min for dense urban clusters. The 8-core CPU efficiently handled sequential tasks, with multithreading further reducing latency. The “Boxes/sec” metric fluctuated between 4 and 48, with sparse regions achieving higher throughput due to simpler spatial relationships among OSM elements. Even with hardware downgrades (e.g., 6-core CPU + 16 GB RAM), the algorithm remained functional with a 30–50% increase in processing time, demonstrating MapAnnoFactory’s compatibility with consumer-grade environments. The software libraries used in the sample generation algorithm and their version requirements are as follows: osmium ≥ 4.0.1, osmnx ≥ 2.0.3, matplotlib ≥ 3.9.2, requests ≥ 2.32.2, shapely ≥ 2.0.6, tqdm ≥ 4.67.0, lxml > 5.3.0, and imageio > 2.36.9. The specific resource consumption and performance metrics can be found in Table 3 below.

Table 3.

Resource consumption and performance metrics of MapAnnoFactory for sample generation across diverse geographic regions.

All model training and evaluation experiments were executed on a dedicated workstation running Ubuntu 18.04.6 LTS (Canonical Ltd., London, UK). The system is powered by an AMD Ryzen Threadripper 3970X processor (32 cores/64 threads; Advanced Micro Devices, Inc., manufactured in Xinyu City, Taiwan Province of China), which handles data loading and preprocessing in parallel with model computation. Three NVIDIA GeForce RTX 3090 graphics cards (NVIDIA Corporation, produced in Hsinchu City, Taiwan Province of China), each equipped with 24 GB of VRAM for a combined GPU memory of 72 GB, perform the forward and backward passes. The server is further provisioned with 125.7 GB of DDR4 system RAM, ensuring that large mini-batches and high-resolution imagery can be processed without I/O bottlenecks. All experiments were run under CUDA 11.5 and PyTorch 1.12.1, providing deterministic GPU kernels and mixed-precision support. A concise summary of the hardware–software stack is presented in Table 4 to facilitate full transparency and reproducibility.

Table 4.

Experimental platform hardware and software configuration.

During training, SSD300 received 300 × 300-pixel inputs. The random seed was fixed at 11, and the backbone was a Visual Geometry Group (VGG) network. Optimization relied on stochastic gradient descent (SGD) combined with a cosine learning-rate decay schedule. The data loader used four worker threads, non-maximum suppression (NMS) used an intersection-over-union threshold of 0.50, the initial learning rate was 0.002, and the model was trained for 50 frozen epochs followed by 200 unfrozen epochs.

For Faster R-CNN, a ResNet-50 backbone was used without mixed-precision training. The input size was 600 × 600 pixels. The initial learning rate was 1 × 10⁻⁴, and the minimum learning rate was set to 1 × 10⁻⁶. Training comprised 50 frozen epochs followed by 200 unfrozen epochs.

In the DETR experiment, a ResNet-50 backbone and the AdamW optimizer were adopted. The overall learning rate was 1 × 10⁻⁴, while the backbone learning rate was fixed at 1 × 10⁻⁵. A total of 300 epochs were executed.

For the YOLOv11s model, images were resized to 640 × 640 pixels. The network was trained for 300 epochs with a batch size of 48 and 4 data-loading workers. Stochastic gradient descent served as the optimizer, and the initial learning rate was 0.01.
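To make the cosine learning-rate decay mentioned for SSD concrete, the sketch below evaluates a standard cosine schedule. The initial rate of 0.002 and the 200 unfrozen epochs are taken from the text; the minimum rate (1% of the initial rate) and the function name `cosine_lr` are assumptions for illustration, and the exact schedule used in the experiments may differ (for example, it may include warm-up).

```python
import math


def cosine_lr(epoch, total_epochs, lr_init, lr_min):
    """Cosine decay from lr_init (epoch 0) to lr_min (last epoch)."""
    if total_epochs <= 1:
        return lr_init
    # Clamp the epoch index and map it to [0, 1].
    progress = min(max(epoch, 0), total_epochs - 1) / (total_epochs - 1)
    return lr_min + 0.5 * (lr_init - lr_min) * (1.0 + math.cos(math.pi * progress))


LR_INIT = 0.002            # stated for SSD
LR_MIN = LR_INIT * 0.01    # assumed floor, not given in the text
UNFROZEN_EPOCHS = 200      # stated for SSD

schedule = [cosine_lr(e, UNFROZEN_EPOCHS, LR_INIT, LR_MIN)
            for e in range(UNFROZEN_EPOCHS)]
```

The schedule starts at the initial rate, decreases monotonically, and reaches the assumed floor at the final epoch.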

2.2.9. Evaluation of Experiments and Indicators

This study conducts the following four evaluation experiments: benchmark performance evaluation, input perturbation robustness testing, cross-dataset generalization analysis, and comparative experiments on public test regions. Among them, the benchmark performance evaluation is designed to systematically assess detection accuracy and computational efficiency of various mainstream object detection models across different remote sensing datasets. The evaluation metrics include mAP, precision, recall, F1-score, frames per second (FPS), and floating point operations (FLOPs) [35,36]. The corresponding formulas are defined as follows:
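For reference, the standard forms of these metrics are given below; they are assumed to match the definitions intended here. $TP$, $FP$, and $FN$ denote true positives, false positives, and false negatives at the chosen IoU threshold, $P(R)$ is precision as a function of recall, $C$ is the number of classes, and $N_{\text{img}}$ and $t_{\text{total}}$ (symbols introduced for this illustration) are the number of processed images and the total inference time in seconds.

$$
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Recall} &= \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}, &
AP &= \int_0^1 P(R)\, dR, \\
\text{mAP} &= \frac{1}{C} \sum_{i=1}^{C} AP_i, &
\text{FPS} &= \frac{N_{\text{img}}}{t_{\text{total}}}
\end{aligned}
$$

With a single building class ($C = 1$), mAP reduces to the AP of that class. mAP@0.5 evaluates AP at an IoU threshold of 0.5, whereas mAP@0.5:0.95 averages AP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.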

In operational settings, object detection models are exposed to complex and rapidly changing conditions, including image degradation, illumination variation, and sensor noise. Such perturbations can markedly reduce detection accuracy, so performance measured exclusively on pristine test images rarely reflects a model’s real-world adaptability and reliability [37,38,39,40,41]. To provide a more comprehensive assessment, this study introduces three robustness metrics—RAR, AAD, and SRS. These indicators quantify performance degradation under common corruptions, namely Gaussian noise, brightness shifts, and motion blur.

Let $A_{\text{clean}}$ denote the detection accuracy achieved under clean, unperturbed conditions and $A_{\text{pert}}$ the accuracy obtained after input disturbances are introduced. Three robustness indices are employed. RAR quantifies the relative decline in accuracy: a lower value indicates weaker sensitivity to perturbations and therefore higher robustness. AAD measures the absolute loss in accuracy, directly reflecting performance degradation in adverse environments. SRS represents the proportion of accuracy retained under perturbation: a higher value signifies stronger robustness. To evaluate robustness consistently across models and datasets, three representative perturbations were imposed. (1) Low-illumination simulation: original pixel intensities were down-scaled to 60%, 50%, and 40% of their initial values. (2) Gaussian-noise injection: zero-mean Gaussian noise with standard deviations of 15, 35, and 45 was added. (3) Motion blur synthesis: horizontal convolution kernels of sizes 13, 17, and 21 were applied, with larger kernels inducing more severe blurring. The formal definitions of RAR, AAD, and SRS that follow from these descriptions are:

$$\text{RAR} = \frac{A_{\text{clean}} - A_{\text{pert}}}{A_{\text{clean}}} \times 100\%, \qquad \text{AAD} = A_{\text{clean}} - A_{\text{pert}}, \qquad \text{SRS} = \frac{A_{\text{pert}}}{A_{\text{clean}}}$$
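The three perturbations can be sketched in pure Python on a grayscale image stored as nested lists of 0–255 intensities. This is a simplified illustration, not the authors' implementation: the function names are hypothetical, the motion blur kernel is assumed to be a uniform 1 × k averaging kernel with edge replication, and real experiments would normally operate on RGB arrays with an image library.

```python
import random


def _clip(value):
    """Round and clamp an intensity to the valid 0-255 range."""
    return min(255, max(0, round(value)))


def scale_brightness(img, factor):
    """Low-illumination simulation: multiply every pixel by factor (e.g., 0.6, 0.5, 0.4)."""
    return [[_clip(p * factor) for p in row] for row in img]


def add_gaussian_noise(img, sigma, seed=0):
    """Add zero-mean Gaussian noise with standard deviation sigma (e.g., 15, 35, 45)."""
    rng = random.Random(seed)  # fixed seed keeps the perturbation reproducible
    return [[_clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def horizontal_motion_blur(img, kernel_size):
    """Average each pixel with its horizontal neighbours (odd kernel_size, e.g., 13, 17, 21)."""
    half = kernel_size // 2
    blurred = []
    for row in img:
        width = len(row)
        new_row = []
        for x in range(width):
            # Replicate edge pixels where the window extends past the image border.
            window = [row[min(width - 1, max(0, x + dx))]
                      for dx in range(-half, half + 1)]
            new_row.append(_clip(sum(window) / len(window)))
        blurred.append(new_row)
    return blurred


tile = [[100] * 32 for _ in range(4)]      # small constant test image
dark = scale_brightness(tile, 0.4)         # most severe brightness setting
noisy = add_gaussian_noise(tile, 45)       # most severe noise setting
smeared = horizontal_motion_blur(tile, 21) # most severe blur setting
```

Applying each function at the three stated severity levels yields the nine perturbed variants of a test image.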

In remote sensing image analysis—as in many computer-vision tasks—deep learning models often achieve outstanding accuracy on the datasets used for training, yet their performance degrades markedly when applied to previously unseen domains [42,43,44]. To quantify this cross-domain generalization gap, we conducted a systematic set of cross-domain experiments. In each run a model was trained from scratch on a single source dataset with identical hyper-parameters, augmentation policies, and learning schedules; the resulting weights were then evaluated on the other three target datasets without any fine-tuning or domain adaptation. Repeating this procedure for all four datasets produced a complete evaluation matrix whose diagonal entries represent in-domain accuracy and whose off-diagonal entries measure out-of-domain performance. Cross-dataset validation reveals a model’s robustness and adaptability in the presence of domain shift, making it a critical means of assessing its real-world applicability. This experiment helps identify overfitting risks, evaluate model universality, and provide a more reliable basis for field deployment in remote sensing object detection tasks.
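The evaluation matrix described above can be sketched as follows. The functions `cross_dataset_matrix` and `summarize` are hypothetical helpers, and `fake_train` and `fake_eval` are stand-ins that return placeholder scores; they do not reproduce any reported result and would be replaced by real training and mAP evaluation routines.

```python
DATASETS = ["WHU", "DZU", "INRIA", "AutoBuildRS"]


def cross_dataset_matrix(train_fn, eval_fn, datasets=DATASETS):
    """Train once per source dataset, then score the frozen model on every target."""
    matrix = {}
    for source in datasets:
        model = train_fn(source)  # trained from scratch with identical settings
        matrix[source] = {target: eval_fn(model, target) for target in datasets}
    return matrix


def summarize(matrix):
    """Compare the diagonal (in-domain) score with the mean off-diagonal score per source."""
    summary = {}
    for source, row in matrix.items():
        off_diag = [score for target, score in row.items() if target != source]
        mean_out = sum(off_diag) / len(off_diag)
        summary[source] = {
            "in_domain": row[source],
            "out_of_domain_mean": mean_out,
            "gap": row[source] - mean_out,
        }
    return summary


# Stand-ins with placeholder scores, used only to exercise the code path.
def fake_train(source):
    return source


def fake_eval(model, target):
    return 0.9 if model == target else 0.5


matrix = cross_dataset_matrix(fake_train, fake_eval)
summary = summarize(matrix)
```

A small gap between the in-domain score and the mean out-of-domain score indicates that a source dataset transfers well to the other domains.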

Finally, a validation experiment was conducted on a publicly available test region to evaluate the dataset’s adaptability and generalization capability in real-world scenarios beyond the training domain. Unlike controlled datasets, public test regions typically exhibit diverse geographic backgrounds and complex image characteristics, making them more representative of practical application environments. This experiment is intended to assess the dataset’s stability when applied to unseen regions and to verify its robustness against out-of-domain samples. Evaluation metrics were consistent with those used in the benchmark performance evaluation. The test set consists of 1267 manually annotated samples collected from both urban and suburban areas of the following two cities and one state: Colorado, Beijing, and Brisbane. This independent test set was excluded from all training procedures and serves as a standardized benchmark to measure the real-world detection performance of 16 model variants under consistent conditions.

3. Results

This section presents a comprehensive evaluation of three public datasets and one newly constructed dataset on the following four object detection models: SSD, Faster R-CNN, DETR, and YOLOv11s. The following nine evaluation metrics are used to compare the datasets: mAP@0.50, mAP@0.50:0.95, precision, recall, FPS, F1-score, parameter count, GFLOPS, and model size [11,35,36,45,46,47]. Subsequently, cross-domain validation is conducted by training the models on the proposed dataset and testing them on each public dataset to quantify performance degradation across different domains. To further assess robustness, the proposed dataset is subjected to Gaussian noise, brightness reduction, and motion blur perturbations, and the resulting accuracy loss is measured. Finally, an independent public test region is used for additional verification. The aggregated results demonstrate that the dataset generated by the proposed automated pipeline exhibits significantly higher quality and effectiveness compared to existing benchmark datasets.

3.1. Benchmark Performance Evaluation

The comparative performance of the WHU, DZU, INRIA, and AutoBuildRS datasets was evaluated with four object detectors: SSD, Faster R-CNN, DETR, and YOLOv11s. AutoBuildRS consistently attained superior results on most metrics for three of the four models, whereas YOLOv11s delivered the highest overall accuracy among all detectors. Detailed numerical results are given in Table 5 and Table 6 below.

Table 5.

Benchmark performance evaluation of the AutoBuildRS dataset across four models.

Table 6.

Benchmark performance evaluation of WHU across four models.

YOLOv11s delivers the strongest overall performance on both AutoBuildRS and WHU, achieving mAP@0.5 scores of 93.2% and 93.8% and mAP@0.5:0.95 scores of 73.7% and 76.6%, respectively. In each case it also attains the highest inference speed, exceeding 128 FPS, and thus offers an excellent balance between accuracy and efficiency. DETR attains the highest mAP@0.5:0.95 value (77.8%) on AutoBuildRS, indicating particular effectiveness in scenes with regular structural patterns. Faster R-CNN reaches 77.9% mAP@0.5 on WHU, yet its large parameter count and low inference speed (≈20 FPS) limit practical deployment. SSD records the lowest accuracy, with 74.8% mAP@0.5 on WHU; although its throughput is comparatively high, it falls short of high-precision requirements. Notably, the default implementation of DETR does not report precision or recall because it lacks an integrated evaluation module; these metrics must be computed separately using the COCO API or custom evaluation scripts. Table entries for precision, recall, F1-score, and FPS that are unavailable for this reason are shown as “N/A”. Overall, YOLOv11s provides the most favorable trade-off among accuracy, speed, and computational cost, making it the preferred model for remote sensing object detection applications. Detailed results for DZU and INRIA can be found in Table 7 and Table 8 below.

Table 7.

Benchmark performance evaluation of DZU across four models.

Table 8.

Benchmark performance evaluation of INRIA across four models.

YOLOv11s exhibits a marked performance advantage on DZU and INRIA, both of which contain complex remote sensing scenes. On DZU, YOLOv11s attains a mAP@0.5 of 75.6%, a mAP@0.5:0.95 of 55.2%, and an F1-score of 70.72%, while sustaining the highest inference speed at 131.58 FPS. Its results on INRIA are even stronger, reaching a mAP@0.5 of 83.3%, a mAP@0.5:0.95 of 59.5%, and an F1-score of 82.02%, thus surpassing all competing models. By contrast, Faster R-CNN delivers moderately lower accuracy and never exceeds 21 FPS, limiting its deployment potential. SSD records the poorest accuracy, only 50.4% mAP@0.5 on INRIA, despite a tolerable inference speed, rendering it unsuitable for complex applications. DETR shows promise in accuracy. However, because it emits a fixed number of predictions and uses the Hungarian matching algorithm for one-to-one assignment between predicted and ground-truth boxes, DETR does not rely on confidence-based ranking or non-maximum suppression (NMS) as traditional detectors do. As a result, in the absence of explicit confidence thresholds or filtering mechanisms, it is difficult to distinguish valid from invalid predictions and therefore to construct an accurate precision–recall curve. Overall, YOLOv11s achieves the most favorable trade-off between detection accuracy and computational efficiency across diverse remote sensing environments, making it the most practically valuable framework among the four models evaluated.

3.2. Input Perturbation Robustness Testing

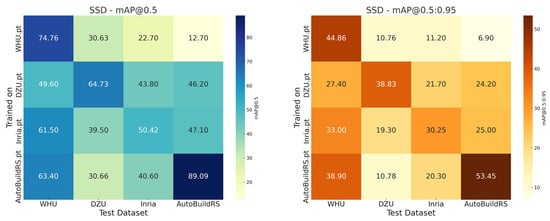

The YOLOv11s model consistently achieved the highest accuracy across all datasets and was therefore selected for the input perturbation robustness experiments. Figure 8 presents a comparison of the detection results before and after perturbation. Table 9 presents the results of the input perturbation robustness evaluation.

Figure 8.

The images from left to right illustrate the datasets WHU, DZU, INRIA, and AutoBuildRS before and after perturbation.

Table 9.

The results of the input disturbance robustness evaluations are presented. From left to right, the disturbance conditions correspond to Gaussian noise, brightness factor, and motion blur index.

Experimental results show that the AutoBuildRS dataset achieves the greatest robustness under all perturbation scenarios. Its RAR and AAD remain consistently the lowest, whereas its SRS is consistently the highest. Under the most severe perturbation (Gaussian noise with σ = 45, illumination scaled to 40%, and a 21-pixel motion blur kernel), AutoBuildRS attains an mAP@0.5 of 90.4%, an RAR of only 3.0%, and an SRS of 0.97, markedly outperforming INRIA, which records an SRS of 0.85 and an RAR of 14.89%. Although WHU remains generally stable, the robustness of DZU and INRIA declines sharply under high-intensity noise or severe motion blur, leading to pronounced fluctuations in detection accuracy. These findings indicate that models trained on data produced by the MapAnnoFactory automatic annotation framework tolerate image quality degradation and other complex input conditions well. Consequently, the resulting dataset exhibits superior robustness and generalization, making it well suited for reliable object detection in real-world remote sensing applications.
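The reported AutoBuildRS figures can be cross-checked with a few lines of arithmetic. The sketch assumes that RAR is the relative accuracy drop in percent, AAD the absolute drop, and SRS the ratio of perturbed to clean accuracy, and that the clean YOLOv11s baseline is the 93.2% mAP@0.5 from the benchmark evaluation; the function name `robustness_metrics` is hypothetical.

```python
def robustness_metrics(acc_clean, acc_perturbed):
    """Return RAR (percent), AAD (points), and SRS (ratio) under the assumed definitions."""
    aad = acc_clean - acc_perturbed   # absolute accuracy drop
    rar = 100.0 * aad / acc_clean     # relative accuracy drop in percent
    srs = acc_perturbed / acc_clean   # share of accuracy retained
    return {"RAR_percent": rar, "AAD": aad, "SRS": srs}


# AutoBuildRS with YOLOv11s: clean mAP@0.5 of 93.2, most severe perturbation 90.4.
autobuildrs = robustness_metrics(93.2, 90.4)
rounded = {
    "RAR_percent": round(autobuildrs["RAR_percent"], 1),
    "AAD": round(autobuildrs["AAD"], 1),
    "SRS": round(autobuildrs["SRS"], 2),
}
```

Under these assumptions the computed values round to an RAR of 3.0% and an SRS of 0.97, which agrees with the figures reported above.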

3.3. Cross-Dataset Generalization Analysis

The contrast between the in-domain (diagonal) and out-of-domain (off-diagonal) scores of the evaluation matrix directly quantifies the accuracy loss practitioners can expect when deploying a model in an unseen region. Moreover, the complete matrix enables pairwise comparisons of domain difficulty and transferability, thereby identifying source domains whose annotations yield the greatest return on future data collection efforts. This protocol thus provides a rigorous means of assessing how well features learned in one domain transfer to heterogeneous datasets under a fixed network architecture and training regimen.

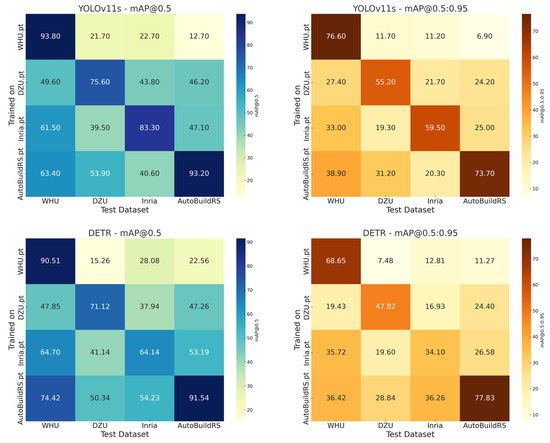

Figure 9 shows that in all cross-domain evaluations, models trained on AutoBuildRS delivered the most stable out-of-domain performance, whereas those trained on WHU and DZU deteriorated sharply when tested on external datasets. For instance, a WHU-trained YOLOv11s model dropped to 21.7 mAP on DZU, and its DETR counterpart fell to 15.3 mAP, highlighting a pronounced distribution shift. Models trained on INRIA showed intermediate transferability—high accuracy on their native split but inconsistent results on DZU and AutoBuildRS. These results demonstrate that AutoBuildRS, owing to its greater scene diversity and annotation consistency, provides markedly superior cross-domain robustness.

Figure 9.

A comparative cross-dataset generalization evaluation was conducted using the mAP@0.5 and mAP@0.5:0.95 metrics across four datasets under the YOLOv11s and DETR models.

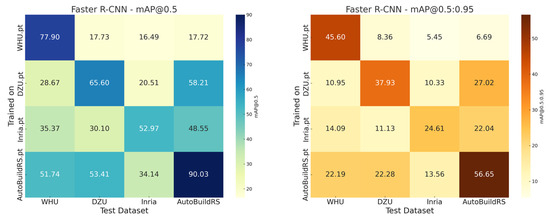

From Figure 10, we can conclude that AutoBuildRS.pt demonstrates the highest cross-domain robustness in both the Faster R-CNN and SSD frameworks. Within Faster R-CNN, AutoBuildRS.pt attains a mAP of 90.03 on its native test set and preserves competitive accuracy on the external WHU, DZU, and INRIA datasets. A similar trend is observed under SSD, where AutoBuildRS.pt again delivers the highest accuracy on the AutoBuildRS test set. By contrast, WHU.pt and DZU.pt perform consistently worse on non-native datasets, with their mAP on AutoBuildRS falling below 20 in nearly all cases.

Figure 10.

A comparative cross-dataset generalization evaluation was conducted using the mAP@0.5 and mAP@0.5:0.95 metrics across four datasets under the Faster R-CNN and SSD models.

The behavior of INRIA.pt is more structure dependent. In Faster R-CNN it records a mAP of 52.9 on its native test set and shows moderate generalization to other datasets. Under SSD, however, its accuracy declines sharply on DZU and AutoBuildRS, underscoring SSD’s limited adaptability to the multi-scale targets prevalent in INRIA. Notably, although DZU.pt attains high accuracy on DZU with SSD, its performance on WHU and AutoBuildRS remains inconsistent.

3.4. Comparative Experiments on Public Test Regions

Table 10 summarizes the comparative experiments conducted on public test regions. The proposed AutoBuildRS dataset consistently achieves the highest detection accuracy, with an mAP@0.50 of 85.7%, while WHU performs the worst, with only 14.08%. DZU and INRIA exhibit relatively stable performance around 60% mAP@0.50, but still show a substantial gap compared to AutoBuildRS.

Table 10.

Comparative results of generalization performance on the public test region.

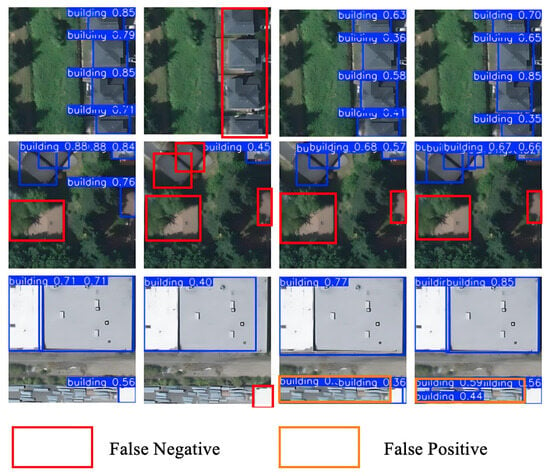

As illustrated in Figure 11, the comparative experiments on the public test regions for YOLOv11s reveal that the AutoBuildRS dataset outperforms the other three datasets in terms of mAP while also exhibiting the lowest false negative (FN) and false positive (FP) rates. By contrast, INRIA and DZU show notably higher FP rates, whereas WHU records the highest FN rate.

Figure 11.

Comparative experiments on the public test regions for the YOLOv11s model across four datasets—shown from left to right as AutoBuildRS, WHU, INRIA, and DZU.

4. Discussion

Based on our experimental results, we found that generating building samples by combining OSM vector tiles with Bing Maps image tiles is highly applicable to remote sensing scene detection tasks. Existing studies mainly focus on coordinate processing using the Mercator projection and latitude–longitude-to-pixel coordinate conversion, ultimately generating samples through R-Tree spatial indexing, coordinate transformation, and manual visual correction [19]. This approach has achieved significant results in improving the efficiency of large-scale data collection and reducing labor costs. Although it is broadly applicable to constructing large-scale building detection datasets, several key issues remain in practical detection tasks: redundant interference from tiny objects, where extremely small buildings occupy very few pixels in images; cross-tile building objects, where a single building is split across multiple tiles; the low credibility of annotations in Volunteered Geographic Information (VGI); reliance on manual correction in post-processing; and pixel-level offsets due to image resolution, projection distortion, elevation differences (in regions outside North America and Australia), or temporal mismatches.

To address these issues, this paper further explores the following methods to improve the model’s detection performance. First, the Polygon Trimming Algorithm dynamically calculates the area of the sub-boxes produced by cutting and determines whether to retain or discard the target. This solves the problem of unclear target semantics caused by cross-tile buildings. Second, Label Cleaning deletes bounding boxes smaller than 280 pixels², removing redundant interference from tiny building boxes or mislabeled targets. Finally, the MapAnnoFactory framework implements an edit-history-based filtering strategy that systematically eliminates low-quality labels by quantitatively assessing the degree of community validation of the data; it also helps address inconsistent semantic labels in OSM. Therefore, we believe that developing building sample generation methods that address these three types of issues is necessary.

Future research will focus on combining high-resolution Digital Surface Models (DSMs) with high-precision Digital Elevation Models (DEMs) to resolve pixel-level data deviations caused by projection distortion and elevation differences (in regions outside North America and Australia). To address the issue of missing OSM tags in regions such as Asia, the plan is to adopt multi-source open data fusion and active learning for enhanced annotation, integrating government open maps, localized crowdsourcing platforms, and commercial POI data, using spatial entity matching algorithms to supplement the missing tags, combining weakly supervised pre-training models to generate candidate building masks, and applying active learning strategies to prioritize the annotation of low-confidence areas. To address the issue of geographical coverage bias, the plan is to deploy domain adaptation and synthetic data generation technologies, using Fourier domain adaptation (FDA) and style transfer (such as CyCADA) to align the image feature distributions of high/low resource areas, combining the meta-learning framework (MAML) to train the domain adaptation model, and generating synthetic samples with the texture of the target area through GAN to fill in the data gaps. To address the issues of image occlusion and inconsistent resolution, a cloud detection–repair pipeline and a multi-scale fusion model are planned to be constructed in the following manner: develop a cloud detection module based on time-series analysis to automatically identify occluded areas, and use adjacent time-phase images or the super-resolution model (ESRGAN) to repair the damaged areas; design a multi-scale pyramid network to uniformly process inputs of different resolutions, and introduce a resolution-invariant loss function to enhance the model’s robustness; and for the threshold setting in Label Cleaning, conduct statistical analyses on tiles of other levels to derive thresholds suitable for their specific scales. 
In the future, addressing these issues will enhance the global applicability of building sample generation algorithms—particularly in developing regions with uneven OSM data quality and topographically complex areas—enabling the generation of more accurate and consistent building samples. This will provide a standardized data foundation for global-scale research, such as urban dynamic monitoring and disaster risk assessment.

5. Conclusions

This study proposes an automatic building sample generation algorithm. The algorithm parses OSM vector tile data, performs projection conversion and tile coordinate calculation, and then conducts accurate indexing and downloading of Bing Maps image tiles. Subsequently, it implements category mapping and geometric processing, utilizing the Polygon Trimming Algorithm to dynamically calculate the area of cut sub-regions for determining target retention or discarding. Finally, Label Cleaning is performed to eliminate redundant interference caused by tiny building boxes or mislabeled targets, generating annotation files in VOC format and corresponding images.

The resulting large-scale dataset demonstrates high geometric fidelity. Evaluations using four mainstream detectors (SSD, DETR, Faster R-CNN, and YOLOv11s) show that it generally outperforms three widely used benchmarks (WHU, DZU, and INRIA) in precision, recall, and mean average precision. Specifically, with YOLOv11s, AutoBuildRS achieves an mAP@0.5 of 93.2%, which is 17.6 and 9.9 percentage points higher than that of DZU and INRIA, respectively. Although its mAP@0.5 is 0.6 percentage points lower than that of WHU, the method exhibits significant advantages in generation efficiency and sample quantity.

In input perturbation robustness testing, the RAR and AAD values of the AutoBuildRS dataset consistently remain at the lowest levels, while its SRS remains the highest. Even under the most severe interference, it maintains a high mAP@0.5 of 90.4%. In contrast, the robustness of WHU, DZU, and INRIA declines sharply when exposed to high-intensity noise and motion blur.

Through cross-dataset generalization analysis, we found that the YOLOv11s model trained on WHU achieves an mAP@0.5 of only 21.7% on DZU, and the corresponding DETR model drops to 15.3% mAP on DZU. Comprehensive comparisons indicate that the AutoBuildRS dataset maintains stronger robustness and generalization ability.

Finally, we conducted comparative experiments on public test regions to evaluate the performance of the four datasets across four models when processing images with diverse and complex geographical backgrounds beyond the training regions. DZU and INRIA showed relative stability, with mAP@0.5 reaching approximately 60%, while WHU performed the worst at only 14.08%. In contrast, AutoBuildRS consistently achieved the highest detection accuracy.

In summary, this method provides an efficient solution for establishing large-scale building samples, significantly advancing the large-scale application of building information extraction in remote sensing scenarios. It leverages OSM’s semantic labels to reduce annotation costs and provides data support for global urban modeling, change detection, and other research. Future work will focus on utilizing elliptical bounding boxes to handle displacement [48], combining high-resolution Digital Surface Models (DSMs) with high-precision Digital Elevation Models (DEMs) to address pixel-level deviations caused by elevation differences, developing intelligent filtering mechanisms for OSM data based on user contribution behaviors to enhance sample reliability in low-quality regions, and introducing temporal change detection algorithms to realize dynamic sample updates—ultimately promoting the global application of this algorithm.

Author Contributions

Conceptualization, J.G.; methodology, J.G.; algorithm development, J.G.; validation, J.G. and C.J.; formal analysis, J.G.; resources, C.J., L.J., L.C. and X.Z.; data curation, C.J.; writing—original draft preparation, J.G.; writing—review and editing, C.J., J.G. and H.C.; visualization, J.G. and H.C.; supervision, C.J., L.J., L.C. and X.Z.; project administration, C.J.; funding acquisition, C.J., L.J., L.C. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 42401548) and the Natural Science Foundation of Jiangsu Province Higher Education Basic Science (24KJB170010). The research is also partially supported under the Jiangsu Natural Science Funds (BK20241069) and Jiangsu Province College Student Innovation Training Program (2023112760372, 202411276111Y, 202411276140Y). The project was supported by the Open Research Fund of the Jiangsu Collaborative Innovation Center for Smart Distribution Network, Nanjing Institute of Technology (No. XTCX202508).

Data Availability Statement

The algorithm implementation and code used in this study will be made publicly available at https://github.com/gujiawei123/MapAnnoFactory.git (can be accessed on 1 October 2025) upon publication of the paper to ensure reproducibility and facilitate academic exchange. Data associated with this research are available online. WHU is available at https://gpcv.whu.edu.cn/data/ (accessed on 3 March 2025 and 7 March 2025). INRIA is available at https://project.inria.fr/aerialimagelabeling/ (accessed on 7 March 2025). DZU is available at https://www.scidb.cn/en/detail?dataSetId=806674532768153600&dataSetType=journal (accessed on 7 March 2025). The YOLOv11s model can be accessed through the following link: https://github.com/ultralytics/ultralytics (accessed on 6 March 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Atwal, K.S.; Anderson, T.; Pfoser, D.; Züfle, A. Predicting building types using OpenStreetMap. Sci. Rep. 2022, 12, 19976.

- Bagheri, H.; Schmitt, M.; Zhu, X. Fusion of multi-sensor-derived heights and OSM-derived building footprints for urban 3D reconstruction. ISPRS Int. J. Geo-Inf. 2019, 8, 193.

- Zhang, W.; Tang, P.; Zhao, L. Remote sensing image scene classification using CNN-CapsNet. Remote Sens. 2019, 11, 494.

- Sun, X.; Liu, L.; Li, C.; Yin, J.; Zhao, J.; Si, W. Classification for remote sensing data with improved CNN-SVM method. IEEE Access 2019, 7, 164507–164516.

- Li, D.; Neira-Molina, H.; Huang, M.; Zhang, Y.; Junfeng, Z.; Bhatti, U.A.; Asif, M.; Sarhan, N.; Awwad, E.M. CSTFNet: A CNN and dual Swin-transformer fusion network for remote sensing hyperspectral data fusion and classification of coastal areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 5853–5865.

- Liu, L.; Wang, Y.; Peng, J.; Zhang, L. GLR-CNN: CNN-based framework with global latent relationship embedding for high-resolution remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5633913.

- Zaid, M.M.A.; Mohammed, A.A.; Sumari, P. Remote sensing image classification using convolutional neural network (CNN) and transfer learning techniques. arXiv 2025, arXiv:2503.02510.

- Feng, H.; Wang, Y.; Li, Z.; Zhang, N.; Zhang, Y.; Gao, Y. Information leakage in deep learning-based hyperspectral image classification: A survey. Remote Sens. 2023, 15, 3793.

- Cheng, L.; Li, J.; Duan, P.; Wang, M. A small attentional YOLO model for landslide detection from satellite remote sensing images. Landslides 2021, 18, 2751–2765.

- He, X.; Liang, K.; Zhang, W.; Li, F.; Jiang, Z.; Zuo, Z.; Tan, X. DETR-ORD: An Improved DETR Detector for Oriented Remote Sensing Object Detection with Feature Reconstruction and Dynamic Query. Remote Sens. 2024, 16, 3516.

- Lu, X.; Ji, J.; Xing, Z.; Miao, Q. Attention and feature fusion SSD for remote sensing object detection. IEEE Trans. Instrum. Meas. 2021, 70, 5501309.

- Wang, Q.; Zhang, X.; Chen, G.; Dai, F.; Gong, Y.; Zhu, K. Change detection based on Faster R-CNN for high-resolution remote sensing images. Remote Sens. Lett. 2018, 9, 923–932.

- Wu, T.; Dong, Y. YOLO-SE: Improved YOLOv8 for remote sensing object detection and recognition. Appl. Sci. 2023, 13, 12977.

- Yin, R.; Zhao, W.; Fan, X.; Yin, Y. AF-SSD: An accurate and fast single shot detector for high spatial remote sensing imagery. Sensors 2020, 20, 6530.

- Ding, W.; Zhang, L. Building detection in remote sensing image based on improved YOLOv5. In Proceedings of the 2021 17th International Conference on Computational Intelligence and Security (CIS), Chengdu, China, 19–22 November 2021; pp. 133–136.

- Lin, J.; Zhao, Y.; Wang, S.; Tang, Y. YOLO-DA: An efficient YOLO-based detector for remote sensing object detection. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6008705.

- Sun, X.; Wang, P.; Yan, Z.; Xu, F.; Wang, R.; Diao, W.; Chen, J.; Li, J.; Feng, Y.; Xu, T. FAIR1M: A benchmark dataset for fine-grained object recognition in high-resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2022, 184, 116–130.

- Van Etten, A.; Lindenbaum, D.; Bacastow, T.M. SpaceNet: A remote sensing dataset and challenge series. arXiv 2018, arXiv:1807.01232.

- Zhao, M.; Zhang, C.; Gu, Z.; Cao, Z.; Cai, C.; Wu, Z.; He, L. Automatic generation of high-quality building samples using OpenStreetMap and deep learning. Int. J. Appl. Earth Obs. Geoinf. 2025, 139, 104564.

- Vargas-Munoz, J.E.; Srivastava, S.; Tuia, D.; Falcao, A.X. OpenStreetMap: Challenges and opportunities in machine learning and remote sensing. IEEE Geosci. Remote Sens. Mag. 2020, 9, 184–199.

- Chen, K.; Fu, K.; Gao, X.; Yan, M.; Sun, X.; Zhang, H. Building extraction from remote sensing images with deep learning in a supervised manner. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 1672–1675.

- Huang, F.; Yu, Y.; Feng, T. Automatic building change image quality assessment in high resolution remote sensing based on deep learning. J. Vis. Commun. Image Represent. 2019, 63, 102585.

- Hui, J.; Du, M.; Ye, X.; Qin, Q.; Sui, J. Effective building extraction from high-resolution remote sensing images with multitask driven deep neural network. IEEE Geosci. Remote Sens. Lett. 2018, 16, 786–790.

- Luo, L.; Li, P.; Yan, X. Deep learning-based building extraction from remote sensing images: A comprehensive review. Energies 2021, 14, 7982.

- Schuegraf, P.; Zorzi, S.; Fraundorfer, F.; Bittner, K. Deep learning for the automatic division of building constructions into sections on remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 7186–7200.

- Zeng, Y.; Guo, Y.; Li, J. Recognition and extraction of high-resolution satellite remote sensing image buildings based on deep learning. Neural Comput. Appl. 2022, 34, 2691–2706.

- Fang, F.; Wu, K.; Liu, Y.; Li, S.; Wan, B.; Chen, Y.; Zheng, D. A Coarse-to-Fine Contour Optimization Network for Extracting Building Instances from High-Resolution Remote Sensing Imagery. Remote Sens. 2021, 13, 3814.

- Fan, H.; Zipf, A.; Fu, Q. Estimation of building types on OpenStreetMap based on urban morphology analysis. In Connecting a Digital Europe Through Location and Place; Springer: Berlin/Heidelberg, Germany, 2014; pp. 19–35.

- Battersby, S. Web Mercator: Past, present, and future. Int. J. Cartogr. 2025, 11, 271–276.

- Wada, T. On some information geometric structures concerning Mercator projections. Phys. A Stat. Mech. Its Appl. 2019, 531, 121591.

- Ye, W.; Zhang, F.; He, X.; Bai, Y.; Liu, R.; Du, Z. A tile-based framework with a spatial-aware feature for easy access and efficient analysis of marine remote sensing data. Remote Sens. 2020, 12, 1932.

- Ozge Unel, F.; Ozkalayci, B.O.; Cigla, C. The power of tiling for small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 0–0.

- Tulabandhula, T.; Nguyen, D. Dynamic Tiling: A Model-Agnostic, Adaptive, Scalable, and Inference-Data-Centric Approach for Efficient and Accurate Small Object Detection. arXiv 2023, arXiv:2309.11069.

- Varga, L.A.; Zell, A. Tackling the background bias in sparse object detection via cropped windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2768–2777.

- Gao, J.; Chen, Y.; Wei, Y.; Li, J. Detection of specific building in remote sensing images using a novel YOLO-S-CIOU model. Case: Gas station identification. Sensors 2021, 21, 1375.

- Zhang, H.; Ma, Z.; Li, X. RS-DETR: An improved remote sensing object detection model based on RT-DETR. Appl. Sci. 2024, 14, 10331.

- Chen, Y.; Huang, T.-Z.; Zhao, X.-L.; Deng, L.-J.; Huang, J. Stripe noise removal of remote sensing images by total variation regularization and group sparsity constraint. Remote Sens. 2017, 9, 559.

- Cheng, G.; Huang, Y.; Li, X.; Lyu, S.; Xu, Z.; Zhao, H.; Zhao, Q.; Xiang, S. Change detection methods for remote sensing in the last decade: A comprehensive review. Remote Sens. 2024, 16, 2355.

- Liu, J.; Wang, X.; Chen, M.; Liu, S.; Shao, Z.; Zhou, X.; Liu, P. Illumination and contrast balancing for remote sensing images. Remote Sens. 2014, 6, 1102–1123.

- Ma, X.; Wang, Q.; Tong, X. Nighttime light remote sensing image haze removal based on a deep learning model. Remote Sens. Environ. 2025, 318, 114575.

- Tsagkatakis, G.; Aidini, A.; Fotiadou, K.; Giannopoulos, M.; Pentari, A.; Tsakalides, P. Survey of deep-learning approaches for remote sensing observation enhancement. Sensors 2019, 19, 3929.

- Gominski, D.; Gouet-Brunet, V.; Chen, L. Cross-dataset learning for generalizable land use scene classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1382–1391.

- Rolf, E. Evaluation challenges for geospatial ML. arXiv 2023, arXiv:2303.18087.

- Zhang, X.; Huang, H.; Zhang, D.; Zhuang, S.; Han, S.; Lai, P.; Liu, H. Cross-Dataset Generalization in Deep Learning. arXiv 2024, arXiv:2410.11207.

- Bai, T.; Pang, Y.; Wang, J.; Han, K.; Luo, J.; Wang, H.; Lin, J.; Wu, J.; Zhang, H. An optimized faster R-CNN method based on DRNet and RoI align for building detection in remote sensing images. Remote Sens. 2020, 12, 762.

- Liu, Y.; Zhang, Z.; Zhong, R.; Chen, D.; Ke, Y.; Peethambaran, J.; Chen, C.; Sun, L. Multilevel building detection framework in remote sensing images based on convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3688–3700.

- Zhang, L.; Wu, J.; Fan, Y.; Gao, H.; Shao, Y. An efficient building extraction method from high spatial resolution remote sensing images based on improved mask R-CNN. Sensors 2020, 20, 1465.

- Jung, S.; Song, A.; Lee, K.; Lee, W.H. Advanced Building Detection with Faster R-CNN Using Elliptical Bounding Boxes for Displacement Handling. Remote Sens. 2025, 17, 1247.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).