1. Introduction

Scanning radar is widely used in applications such as autonomous aircraft landing, material airdrop, and ground attack [1,2,3]. However, its angular resolution in the forward-looking direction is limited by the size of the radar aperture. Airborne platforms in particular can rarely provide the space that a large aperture requires [4,5], which leads to low angular resolution. Conventional synthetic aperture radar (SAR) struggles with forward-looking imaging because the equal-range and equal-Doppler lines coincide within the forward-looking zone [6,7,8]. Doppler beam sharpening (DBS) also resolves forward-looking scenes poorly because of symmetry issues and the reduced Doppler centroid gradient [9,10]. Based on the scanning mode of the radar beam, angular super-resolution methods have been studied; they improve the angular resolution by exploiting the convolution signal model between the antenna pattern and the target scattering.

Super-resolution imaging methods have been proposed from several perspectives, including spectral estimation-based methods [11,12,13], Bayesian methods [4,14,15], and regularization methods [16,17,18,19,20]. In [11], a spectral estimation approach based on a fast conjugate gradient iterative adaptive algorithm was developed for forward-looking super-resolution imaging. This approach can accurately model velocity migration across different directions, decouple range and azimuth-elevation dependencies, and enhance target resolution efficiency. However, it constructs the echo covariance matrix from a single range bin only, which makes it difficult to apply under low signal-to-noise ratio (SNR) and limited-snapshot conditions. Alternatively, an efficient Bayesian forward-looking super-resolution imaging algorithm [4] was proposed by reformulating the imaging task as a convex optimization problem. However, the method suffers from high computational complexity and sensitivity to the prior distribution. To avoid the reliance of spectral estimation methods on idealized models and the high computational cost of Bayesian approaches, regularization methods based on sparse norm constraints were proposed [20]. However, their penalty parameters must be adjusted manually according to the actual environment.

To leverage sparsity, reference [21] introduces Sparse Iterative Covariance-based Estimation (SPICE). Experimental results demonstrate that this method offers higher angular resolution than the Iterative Adaptive Approach (IAA). However, when the target exhibits distinct edge characteristics, the SPICE method fails to reconstruct its detailed contours. To reconstruct the contour information of the target, a total variation (TV) regularization method [22] has been introduced for scanning radar super-resolution imaging. This method uses the TV norm as a penalty term to preserve edge details and achieve high-quality imaging. It preserves the edge contours of the target, but it does not significantly enhance the angular resolution.
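The complementary behavior of sparsity and TV penalties described above can be seen on two toy azimuth profiles. In the sketch below, a plain ℓ1 norm stands in for a generic sparsity-promoting penalty (it is not the SPICE criterion itself), and the profiles and function names are assumptions made for illustration.

```python
import numpy as np

def tv_norm(x) -> float:
    """Anisotropic 1-D total variation: sum of absolute neighbor differences."""
    return float(np.sum(np.abs(np.diff(x))))

def l1_norm(x) -> float:
    """Plain l1 norm, used here as a stand-in for a sparsity penalty."""
    return float(np.sum(np.abs(x)))

block = np.zeros(100)
block[40:60] = 1.0               # extended target with sharp edges
points = np.zeros(100)
points[[30, 70]] = 1.0           # two isolated point targets

for name, x in [("block", block), ("points", points)]:
    print(f"{name}: TV = {tv_norm(x):.1f}, L1 = {l1_norm(x):.1f}")
# block: TV = 2.0, L1 = 20.0
# points: TV = 4.0, L1 = 2.0
```

The extended target is cheap under TV but expensive under the sparsity penalty, and the reverse holds for the point targets, which is one way to read the motivation for combining the two constraints.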

To address the aforementioned challenge of simultaneously enhancing angular resolution and preserving target contours, researchers have developed multimodal fusion techniques [23,24,25]. For instance, reference [23] achieved significant improvement in emotion recognition accuracy by integrating local convolutional features with global transformer attention through cross-modal transformers. Similarly, in the radar domain, such multimodal fusion approaches [26,27,28,29] have demonstrated potential for enhancing detection capabilities.

To enhance angular resolution and preserve target contours simultaneously, a super-resolution imaging method for scanning radar called SPICE-TV was proposed in [30]. However, this method relies on the CVX toolbox for MATLAB to solve the joint SPICE-TV optimization problem and does not offer a closed-form solution, which leads to significant computational cost. Subsequently, a split SPICE-TV method [31] was proposed, which derives a closed-form solution of the SPICE-TV problem using the split Bregman method. Nevertheless, that method must invert a high-dimensional matrix many times, and it can only process the complete set of scanned data as a batch.

To reduce computational complexity and enable real-time processing, several online angular super-resolution methods have been put forward, primarily based on beam recursion. In [32], an online Tikhonov method is proposed to achieve real-time super-resolution, but it delivers limited resolution improvement. The subsequent online q-SPICE method [33] significantly enhances angular resolution, but at the expense of target contour reconstruction. The total variation method [34] addresses this limitation by accurately recovering target contours, though its angular resolution remains limited.

To address these issues, this paper introduces a grid-updating split SPICE-TV super-resolution method. The method efficiently updates reconstruction results that retain both target contours and high resolution, and its recursive grid-updating implementation of split SPICE-TV reduces the computational complexity. First, the scanning radar angular super-resolution problem is transformed into a constrained optimization problem by simultaneously employing the sparse covariance fitting criterion and a TV regularization constraint. Then, the split Bregman method is employed to derive an efficient closed-form solution to the problem. Finally, the matrix inversion is transformed into an online iterative equation to reduce the computational complexity and memory consumption. The proposed method offers two advantages. First, its space complexity remains constant throughout the beam recursion process and does not grow with time. Second, the online grid-updating procedure reduces the number of iterations, improving the processing efficiency and imaging quality without additional computational burden.
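The general idea of replacing repeated matrix inversions with an online recursion can be illustrated with the Sherman-Morrison identity, which updates a known inverse after a rank-one change in O(n²) operations instead of re-inverting in O(n³). This is a generic sketch, not the recursion derived in this paper; the function name `rank_one_inverse_update` and the test matrices are assumptions.

```python
import numpy as np

def rank_one_inverse_update(A_inv, u, v):
    """Return inv(A + u v^T) given inv(A), using the Sherman-Morrison identity."""
    Au = A_inv @ u
    vA = v @ A_inv
    denom = 1.0 + v @ Au
    if abs(denom) < 1e-12:
        raise ValueError("the update would make the matrix singular")
    return A_inv - np.outer(Au, vA) / denom

rng = np.random.default_rng(0)
n = 6
A = 2.0 * np.eye(n)              # start from a matrix with a known inverse
A_inv = np.eye(n) / 2.0
for _ in range(4):               # each new vector triggers one cheap update
    a = rng.normal(size=n)
    A = A + np.outer(a, a)
    A_inv = rank_one_inverse_update(A_inv, a, a)

max_err = np.max(np.abs(A_inv - np.linalg.inv(A)))
print("max deviation from direct inverse:", max_err)
```

Only the current inverse is stored between updates, so the memory footprint of such a recursion does not grow with the number of updates.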

The remainder of this paper is organized as follows. Section 2 introduces the echo model of the real aperture scanning radar. Section 3 presents the proposed method. Section 4 and Section 5 provide the simulation and measured data results. Finally, Section 6 concludes the paper.

4. Simulation

This section presents point target and surface target simulations using eight methods: the IAA method, the OMP method, the traditional TV method, the online TV method, the split SPICE method, the online q-SPICE method, the split SPICE-TV method, and the grid-updating split SPICE-TV method. Comparing their results demonstrates the effectiveness of the grid-updating split SPICE-TV method. All simulations were run in MATLAB 2019 on a Windows computer with 64 GB of RAM and a 12th Gen Intel(R) Core(TM) i9-12900H processor (the equipment was purchased from Hubei Hanlian Technology Co., Ltd., Wuhan, China).

4.1. Point Target Simulation

This section presents point target simulations to evaluate the effectiveness of the proposed method. The simulation parameters comprise the azimuth scanning range, azimuth beamwidth, scanning velocity, and pulse repetition frequency.

4.1.1. Profile Results

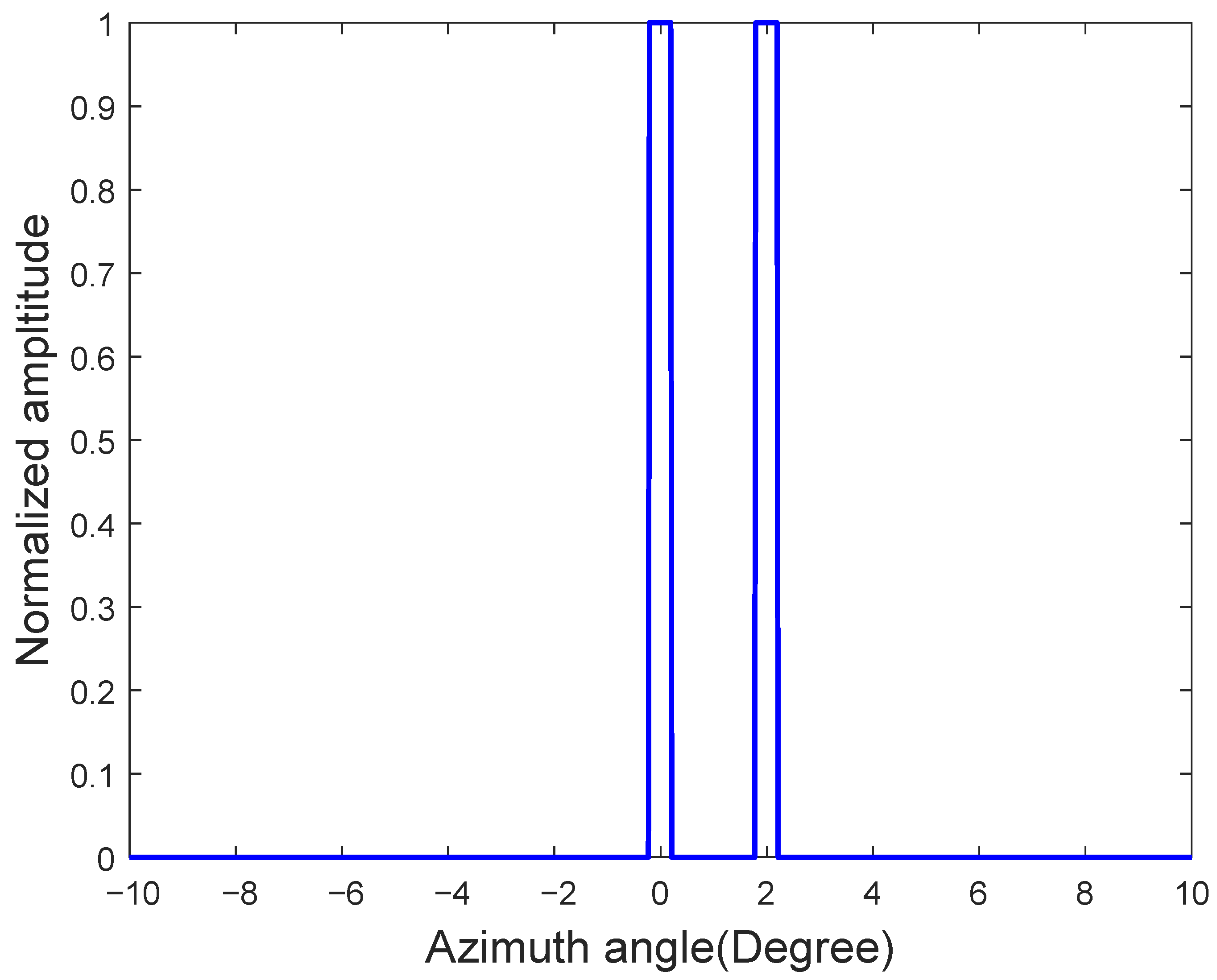

The initial point target configuration is shown in Figure 2, featuring two targets of equal amplitude and edge properties at two azimuth positions.

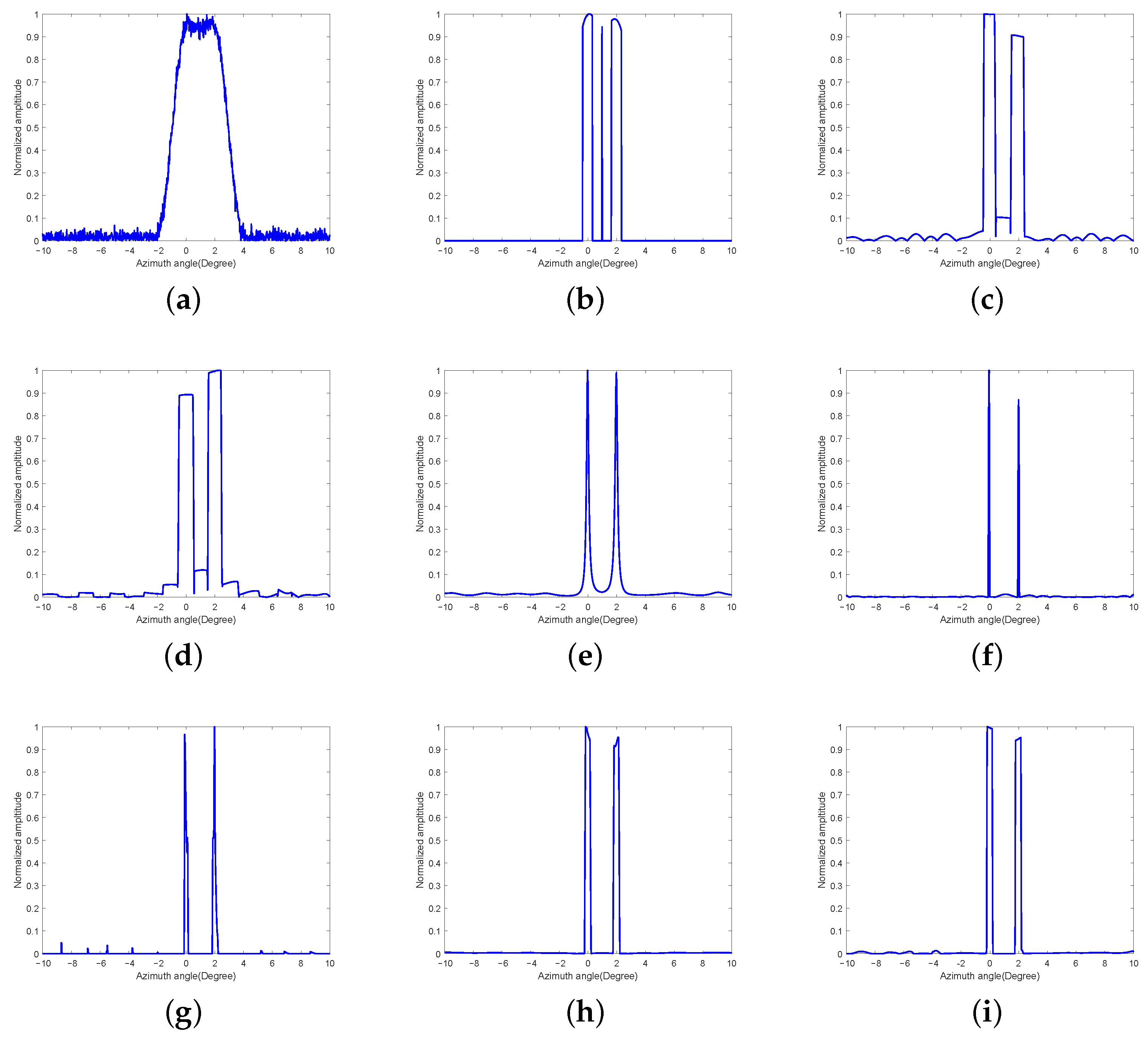

Figure 3a displays the received echoes at 25 dB SNR. Beamwidth-induced aliasing occurs due to the target angular separation being smaller than the antenna beamwidth. We conducted 100 Monte Carlo simulation trials, with representative super-resolution processing outcomes demonstrated in

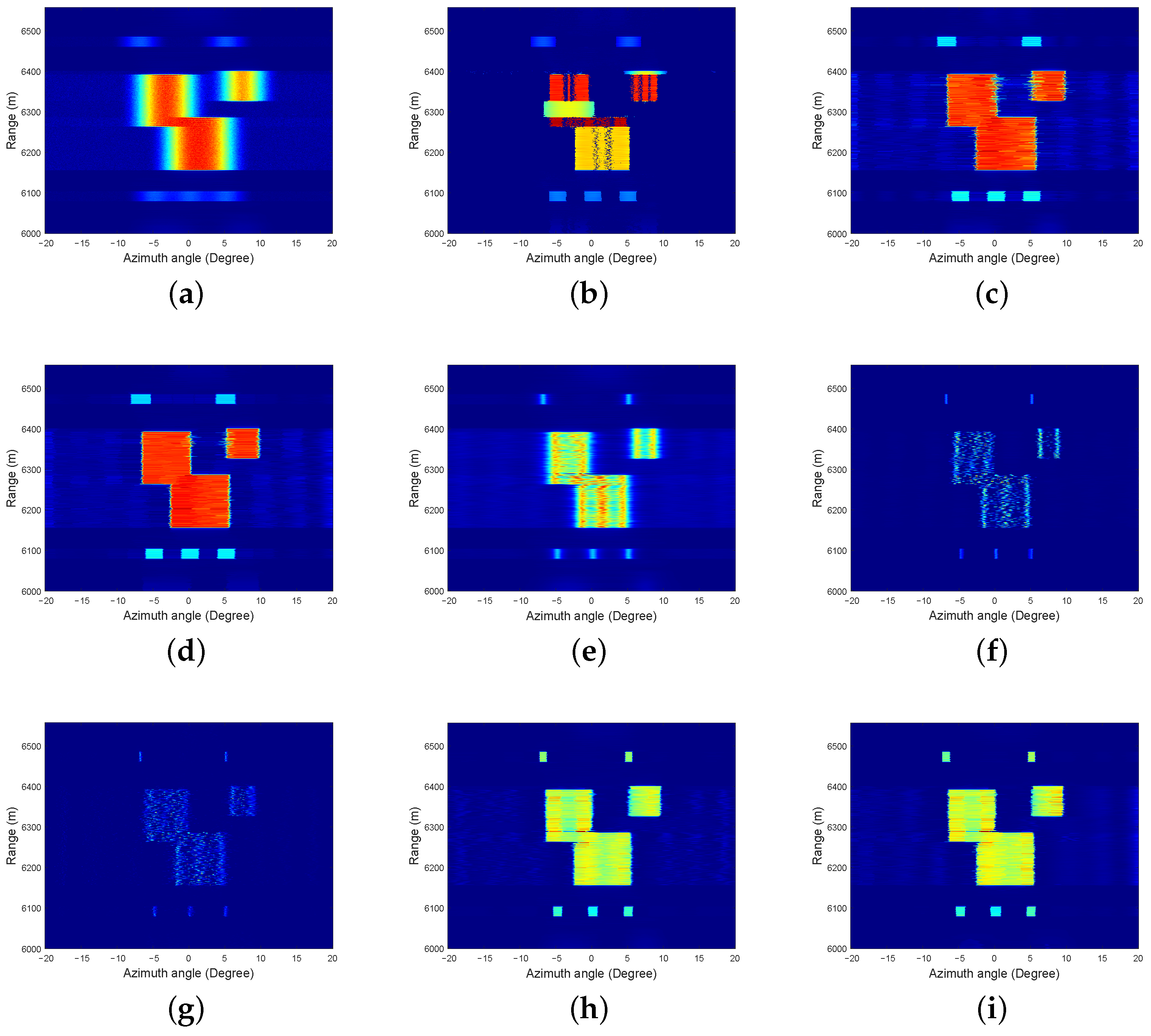

Figure 3b–i.

Figure 3b displays the result of the OMP approach. Although this method can effectively delineate target contours, its resolution remains limited, and it produces a noticeable false target. Figure 3c presents the reconstruction obtained with conventional TV regularization, which shows significant sidelobe artifacts and limited resolution capability. Figure 3d displays the online TV reconstruction, which, like conventional TV regularization, exhibits pronounced sidelobe artifacts and degraded resolution. In the simulation environment, the OMP method suppresses noise satisfactorily but generates distinct reconstruction artifacts, whereas both TV-based methods suppress noise inadequately and also leave noticeable artifacts in their imaging results.

Figure 3e presents the reconstruction obtained with the IAA approach. This method distinguishes the targets well and achieves relatively high resolution, but it cannot display the edge information of the targets. Figure 3f presents the split SPICE reconstruction, which outperforms both the traditional and online TV methods, with markedly suppressed sidelobes and enhanced resolution. However, the technique shows limited capability in preserving target edges. Figure 3g shows the online q-SPICE reconstruction, which performs comparably to the split SPICE approach, with substantial sidelobe suppression and resolution enhancement, but it is similarly limited in representing target edge geometries. In the simulation environment, both SPICE methods and the IAA method suppress noise competently and produce artifact-free reconstructions, and both SPICE methods achieve higher resolution than the IAA approach.

The output of the split SPICE-TV method in Figure 3h shows a clear advance over the previous techniques: while maintaining the sidelobe and resolution advantages of SPICE, it also preserves the edge information that those techniques obscure. Finally, the imaging result of the grid-updating split SPICE-TV method is presented in Figure 3i. The proposed method suppresses sidelobes significantly better than the two TV methods and also enhances resolution. In comparison to the split SPICE method and the online q-SPICE method, it clearly displays the edge contours of the targets. Furthermore, its sidelobe suppression and resolution improvement are essentially comparable to those of the split SPICE-TV method. In the simulation environment, the imaging results of the two split SPICE-TV methods demonstrate satisfactory noise suppression without observable artifacts.

4.1.2. Quantitative Analysis

For comprehensive super-resolution performance assessment, the Relative Error (ReErr) metric is employed to quantify the deviation of the reconstructed image from the original scene. The ReErr is computed from the super-resolved reconstruction and the ground truth. The metric is inversely related to reconstruction fidelity: lower ReErr values indicate superior imaging quality through closer approximation to the original scene.
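One common way to define a relative error of this kind is the squared ℓ2 distance between the reconstruction and the ground truth, normalized by the energy of the ground truth. The sketch below uses that form as an assumption; the exact expression used in this paper (for example, whether the norms are squared) is not reproduced here, and `relative_error` is a hypothetical helper name.

```python
import numpy as np

def relative_error(x_hat, x_true) -> float:
    """Assumed ReErr form: ||x_hat - x_true||^2 / ||x_true||^2."""
    x_hat = np.asarray(x_hat, dtype=float)
    x_true = np.asarray(x_true, dtype=float)
    energy = np.sum(x_true ** 2)
    if energy == 0:
        raise ValueError("ground truth has zero energy")
    return float(np.sum((x_hat - x_true) ** 2) / energy)

truth = np.array([0.0, 1.0, 0.0, 1.0, 0.0])
print(relative_error(truth, truth))                 # 0.0 for a perfect reconstruction
print(relative_error(np.zeros_like(truth), truth))  # 1.0 for an all-zero estimate
```

Under this form, a value of zero corresponds to perfect recovery and values near or above one indicate that the estimate is no better than an empty scene.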

We conducted 100 Monte Carlo trials at 25 dB SNR, recording the maximum, minimum, and mean values of ReErr along with the average runtime for each method; the results are summarized in Table 1. Notably, the ReErr value of the grid-updating split SPICE-TV method is significantly lower than that of the IAA method, the OMP method, the traditional TV method, the online TV method, the split SPICE method, and the online q-SPICE method, while remaining comparable to that of the split SPICE-TV method.
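A Monte Carlo evaluation of this kind can be organized as in the sketch below, which records the maximum, minimum, and mean error together with the average runtime over repeated noisy trials. The scene, the Hann-shaped beam, the SNR convention, the assumed relative-error form, and the pass-through `method` are placeholders for illustration only; none of them reproduces the configuration behind Table 1.

```python
import time
import numpy as np

def monte_carlo_summary(method, scene, pattern, snr_db, trials=100, seed=0):
    """Run `trials` noisy realizations and summarize error and runtime."""
    rng = np.random.default_rng(seed)
    clean = np.convolve(scene, pattern, mode="same")   # noise-free echo
    # Assumed SNR convention: mean echo power over noise power.
    noise_std = np.sqrt(np.mean(clean ** 2) / 10 ** (snr_db / 10))
    errors, runtimes = [], []
    for _ in range(trials):
        echo = clean + rng.normal(0.0, noise_std, clean.shape)
        start = time.perf_counter()
        estimate = method(echo)                        # method under test
        runtimes.append(time.perf_counter() - start)
        # Assumed relative-error form: squared l2 error over scene energy.
        errors.append(np.sum((estimate - scene) ** 2) / np.sum(scene ** 2))
    return {
        "max": float(np.max(errors)),
        "min": float(np.min(errors)),
        "mean": float(np.mean(errors)),
        "avg_runtime_s": float(np.mean(runtimes)),
    }

scene = np.zeros(200)
scene[[95, 105]] = 1.0
pattern = np.hanning(41)
pattern /= pattern.sum()
# The placeholder "method" returns the echo unchanged (no super-resolution).
summary = monte_carlo_summary(lambda echo: echo, scene, pattern, snr_db=25)
print(summary)
```

Timing only the call to `method` keeps noise generation out of the runtime figure, so methods are compared on processing cost alone.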

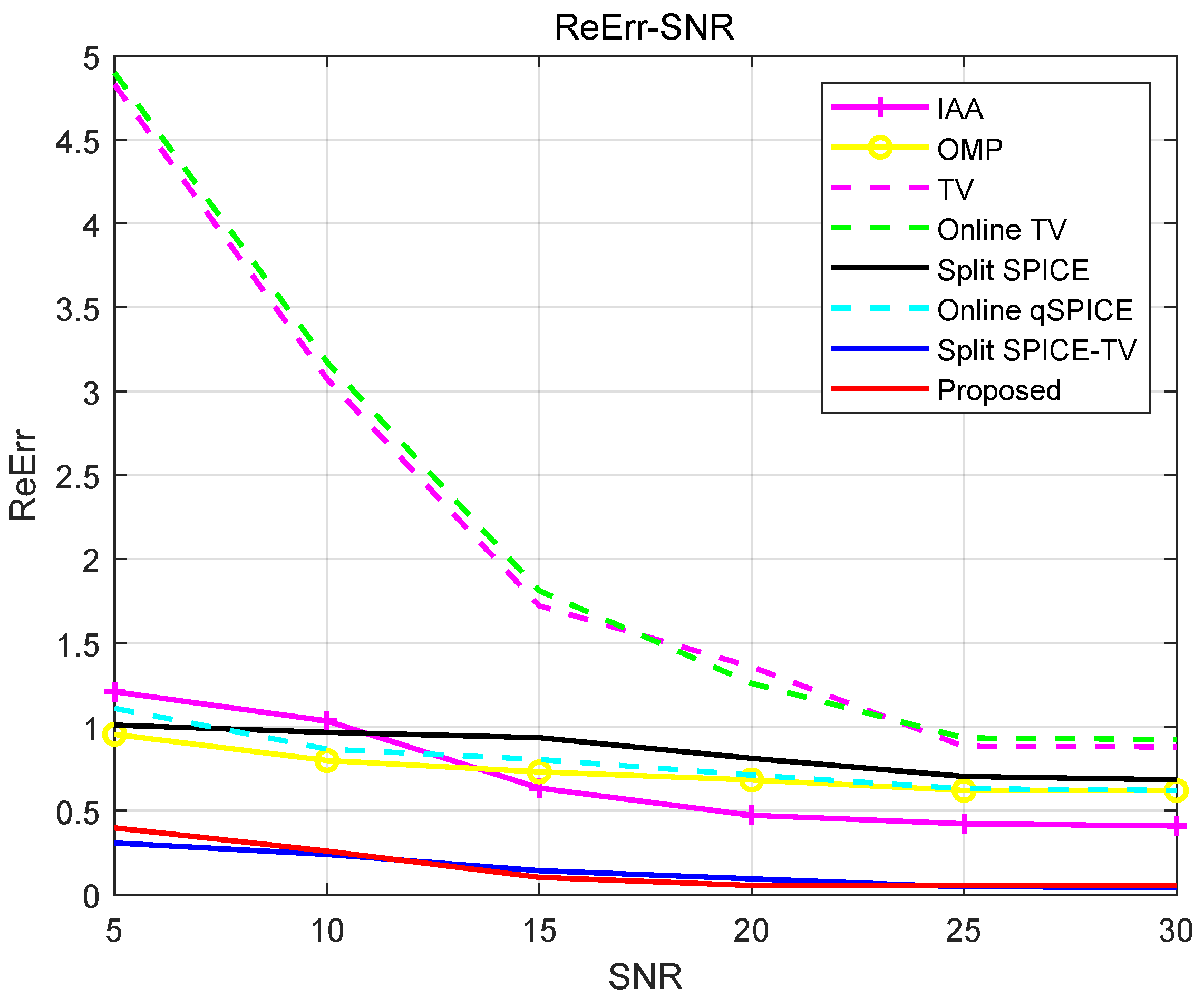

To further analyze the noise suppression performance of each method, Figure 4 illustrates the ReErr variation across SNR levels for all eight approaches, using the parameter configurations specified in Table 1. The proposed approach maintains superior performance (lower ReErr) compared to the IAA, OMP, traditional TV, online TV, split SPICE, and online q-SPICE methods across all tested SNR conditions, while achieving accuracy comparable to the split SPICE-TV baseline.

The runtime of the eight methods is detailed in Table 1. Because the proposed method does not require matrix inversions during its iterative process, its computational complexity is significantly lower than that of the batch processing methods. Its runtime is considerably shorter than that of the IAA method, the OMP method, the traditional TV method, the split SPICE method, and the split SPICE-TV method, and nearly identical to that of the online TV method and the online q-SPICE method, consistent with the earlier theoretical analysis.

4.2. Surface Target Simulation

This section presents a surface target simulation to further evaluate the efficacy of the grid-updating split SPICE-TV method. The complete simulation configuration is specified in Table 2.

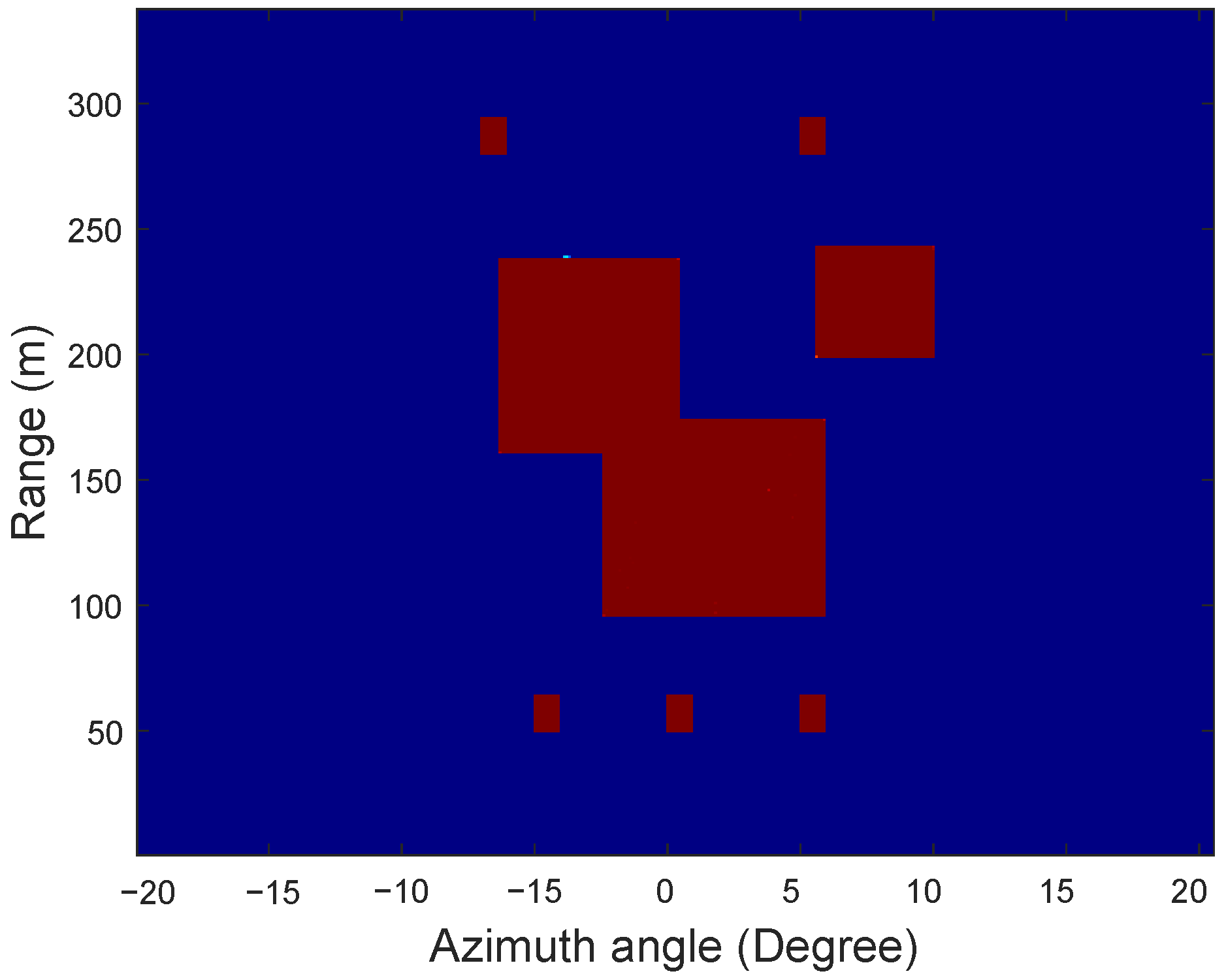

4.2.1. Surface Target Results

Figure 5 displays the reference surface target scenario, consisting of three rectangular landmasses. Two connected islands contain five sparse targets in total, two positioned above and three below. The echo at 20 dB SNR in Figure 6a shows beamwidth-induced overlap among the lower three targets due to insufficient angular separation. Across 100 Monte Carlo simulation trials, representative super-resolution results are shown in Figure 6b–i, with all parameters consistent with those of the point target simulation.

The simulation result of the OMP method is presented in Figure 6b. The resolution of this method is relatively low, and it cannot restore the outline of the extended island target well. The simulation result of the traditional TV method is shown in Figure 6c. As in the point target simulation, this method exhibits numerous sidelobes and lower resolution for the five sparse targets above and below. The simulation result of the online TV method is presented in Figure 6d. The five sparse targets exhibit very low resolution, and noticeable sidelobes appear in the center of the island target. The OMP method suppresses noise satisfactorily but generates distinct reconstruction artifacts, whereas both TV-based methods suppress noise inadequately and also leave noticeable artifacts in their imaging results.

The simulation result of the IAA method is shown in Figure 6e. Although this method achieves very high resolution, peaks and troughs are clearly visible within the intermediate extended island targets. The simulation result of the split SPICE method is shown in Figure 6f. While this method achieves high resolution for the five sparse targets, it fails to capture the edge characteristics of the targets and does not identify the intermediate island targets. The simulation result of the online q-SPICE method is displayed in Figure 6g. Although the resolution of the five sparse targets is high, the method does not accurately represent the outline of the middle island target. Both SPICE methods and the IAA method suppress noise competently and produce artifact-free reconstructions, and both SPICE methods achieve higher resolution than the IAA approach.

The simulation result of the split SPICE-TV method is shown in Figure 6h. Compared to the traditional TV method and the online TV method, the split SPICE-TV method reduces the sidelobes of the island group targets while enhancing the resolution of the five sparse targets. In contrast to the split SPICE method and the online q-SPICE method, this approach effectively captures the edge contours of the targets. The imaging result of the grid-updating split SPICE-TV method is illustrated in Figure 6i. The proposed method outperforms the traditional TV method and the online TV method in terms of resolution and sidelobe suppression. Furthermore, in comparison to the split SPICE method and the online q-SPICE method, the proposed approach accurately identifies the island targets and reflects the target contours, with imaging results largely consistent with those of the split SPICE-TV method. The imaging results of the two split SPICE-TV methods demonstrate satisfactory noise suppression without observable artifacts.

4.2.2. Quantitative Analysis

For quantitative super-resolution assessment of the Figure 6 results, we use the Structural Similarity Index (SSIM) to measure reconstruction fidelity. SSIM evaluates perceptual image quality through luminance, contrast, and structural comparisons, with values approaching one indicating optimal reconstruction. The metric is computed from the matrix of results of each super-resolution method and the echo matrix, using the mean and the variance of each matrix and the covariance between the two.
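The quantities listed above (means, variances, and covariance of two matrices) are the ingredients of the standard SSIM expression. The sketch below evaluates a single-window, global SSIM with the customary stabilizing constants C1 = (0.01 L)² and C2 = (0.03 L)², where L is the dynamic range. Whether this paper uses these constants or a windowed variant is not stated in this section, so this form and the name `global_ssim` should be read as assumptions.

```python
import numpy as np

def global_ssim(x, y, data_range: float = 1.0) -> float:
    """Single-window SSIM computed from global means, variances, and covariance."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2    # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2    # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)

image = np.linspace(0.0, 1.0, 64).reshape(8, 8)
print(global_ssim(image, image))        # identical inputs give a value of one
print(global_ssim(image, 1.0 - image))  # an inverted copy scores far lower
```

Library implementations typically average this expression over local windows rather than applying it once to the whole image, which makes the score sensitive to where the differences occur.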

One hundred Monte Carlo experiments were performed to evaluate each method’s SSIM (maximum, minimum, mean) and average runtime, with the results compiled in Table 3.

Although the IAA method achieves the highest SSIM value, it exhibits inferior edge recovery performance for extended targets compared to the proposed method, along with significantly higher computational time. The SSIM value of the proposed method is higher than those of the OMP method, traditional TV method, the online TV method, the split SPICE method, and the online q-SPICE method, while remaining nearly identical to that of the split SPICE-TV method.

The runtime of the eight methods is presented in Table 3. The computational complexity of the proposed method is significantly lower than that of the batch processing methods, as its iterative process does not require matrix inversions. The runtime of the grid-updating split SPICE-TV method is considerably shorter than that of the IAA method, the OMP method, the traditional TV method, the split SPICE method, and the split SPICE-TV method. Furthermore, it is nearly equivalent to the runtimes of the online TV method and the online q-SPICE method, supporting the earlier theoretical analysis.

The data presented above demonstrate that the proposed method effectively reduces computational complexity while maintaining the high image quality characteristic of the split SPICE-TV method.

4.3. Statistical Significance Analysis

To validate statistically whether the observed differences between the ReErr and SSIM metrics in the quantitative analysis are significant rather than coincidental, we conducted a t-test. Since ReErr and SSIM are derived from two distinct datasets, we employed an independent samples t-test. The test statistic is formed from the mean and variance of ReErr, the mean and variance of SSIM, and the numbers of Monte Carlo experiments conducted to collect ReErr and SSIM, respectively. We conducted 100 Monte Carlo simulations to evaluate the ReErr and SSIM of the proposed method and then applied the t-test. The analysis indicated no significant difference at the tested significance level.
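An independent samples t statistic built from two means, two variances, and two sample sizes can be computed as sketched below. The unpooled (Welch) form with Welch-Satterthwaite degrees of freedom is an assumption, since the section does not say whether the variances are pooled; `welch_t` is a hypothetical helper name and the sample values are illustrative.

```python
import numpy as np

def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Squared standard error of each sample mean (unbiased variance / n).
    se2_a = a.var(ddof=1) / a.size
    se2_b = b.var(ddof=1) / b.size
    t = (a.mean() - b.mean()) / np.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (a.size - 1) + se2_b ** 2 / (b.size - 1)
    )
    return float(t), float(df)

t_stat, dof = welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(t_stat, dof)
```

If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic and also returns the corresponding p-value.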

5. Measured Data Results

In the previous section, the feasibility of the proposed method was demonstrated through point target and surface target simulations. To further evaluate the imaging performance of the grid-updating split SPICE-TV method, this section processes two sets of measured data with the same eight methods (IAA, OMP, traditional TV, online TV, split SPICE, online q-SPICE, split SPICE-TV, and grid-updating split SPICE-TV) to validate the ability of the grid-updating split SPICE-TV method to restore sparse targets and target contours. Additionally, a prominent point was selected in the scene, and the profiles of the echo and of the eight methods’ results at that point were compared.

5.1. Imaging for Urban Monitoring Applications

In this section, the effectiveness of the proposed method is validated using measured data collected from an urban monitoring application. The experimental parameters are presented in Table 4.

5.1.1. Imaging Results

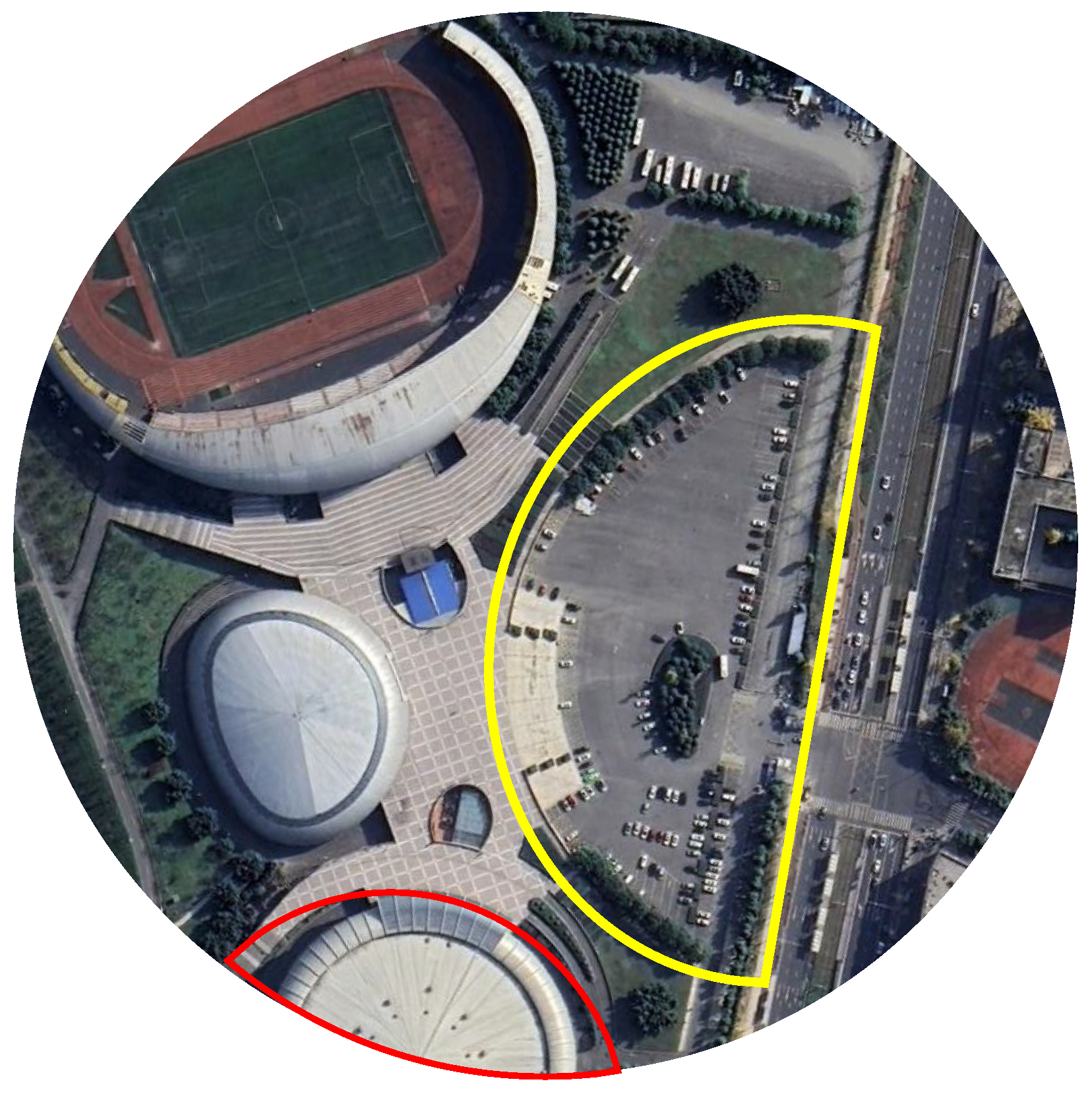

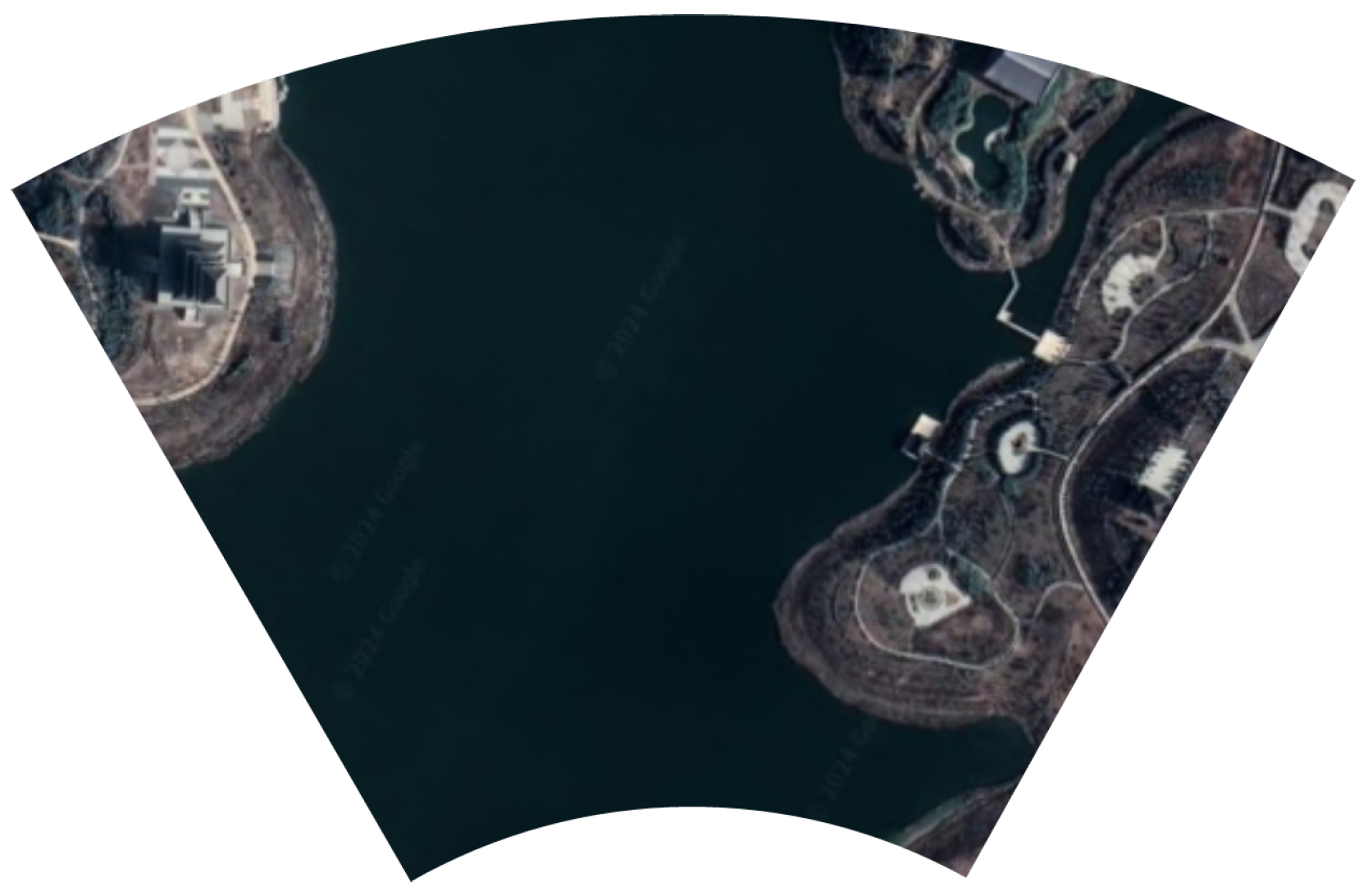

The real scene is depicted in

Figure 7, where the building outlined in red and the parking lot marked in yellow represent the key areas of interest for this set of measured data. The echo pattern of the original scene, shown in

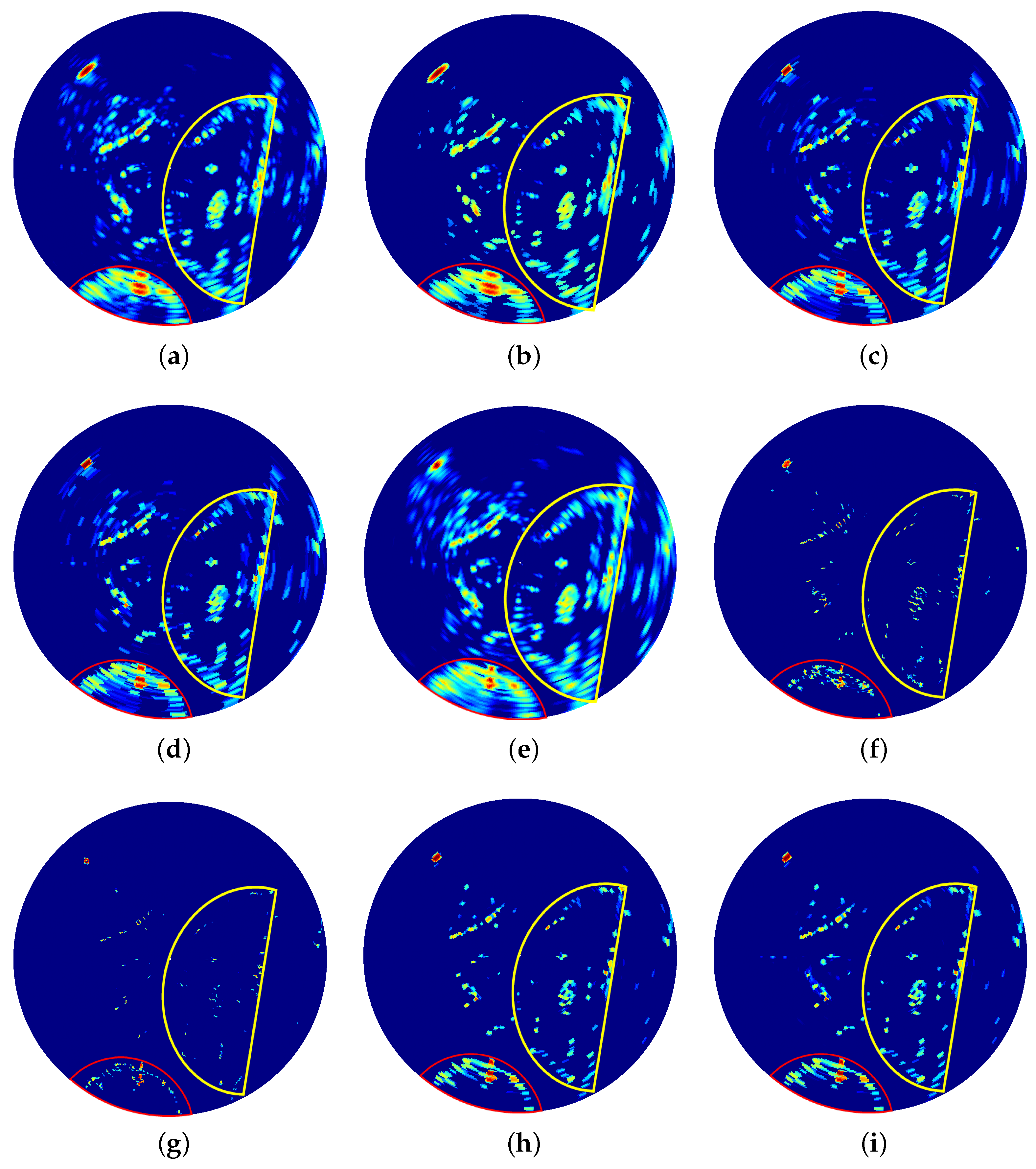

Figure 8a, demonstrates clear contours in both the red and yellow regions. The super-resolution imaging results are presented in

Figure 8b–i. All methodological parameters maintain identical configurations to those implemented in the previous section.

The imaging result of the OMP method is shown in Figure 8b. It shows no significant resolution improvement over the original echo data. The imaging result of the traditional TV method is displayed in Figure 8c. Compared to the echo of the original scene, the contours in the red and yellow regions are sharper, but the improvement in resolution is limited. The imaging result of the online TV method is shown in Figure 8d. Like the traditional TV method, the online TV method yields sharper contours in the red and yellow regions, but the enhancement in azimuth resolution remains minimal. Consistent with the simulation results obtained previously, the OMP method and the two TV methods demonstrate comparably effective noise suppression, accompanied by artifacts, under high-SNR experimental conditions.

The imaging result of the IAA method is displayed in Figure 8e. The IAA method suffers from severe energy dispersion and sidelobe interference in complex scenes, which significantly degrades its resolution. The imaging result of the split SPICE method is presented in Figure 8f. Although the split SPICE method significantly improves azimuth resolution compared to both the traditional TV method and the online TV method, it fails to reconstruct the contours of the building in the red region and the parking lot in the yellow region. The imaging result of the online q-SPICE method is shown in Figure 8g. Like the split SPICE method, it achieves high resolution but fails to reveal the contours of the targets in the red and yellow regions. In contrast to the favorable simulation outcomes, the high-SNR measurements reveal marked performance differences: the IAA method shows substantially degraded noise suppression with conspicuous artifacts, whereas both SPICE variants maintain excellent noise rejection and produce artifact-free reconstructions.

The imaging result of the split SPICE-TV method is displayed in Figure 8h. Compared to the traditional TV method and the online TV method, the split SPICE-TV method significantly enhances the resolution of targets in both the red and yellow regions. Furthermore, in contrast to the split SPICE method and the online q-SPICE method, it successfully reconstructs the contours of the targets in these regions. The imaging result of the grid-updating split SPICE-TV method is shown in Figure 8i. The proposed method likewise significantly improves the resolution of targets in the red and yellow regions compared to the traditional TV method and the online TV method. It also reconstructs the contours of the targets in these regions, outperforming both the split SPICE method and the online q-SPICE method, and its imaging result is nearly identical to that of the split SPICE-TV method. Under high-SNR measured data conditions, the imaging results of the two split SPICE-TV methods exhibit superior noise suppression while remaining free of discernible artifacts.

5.1.2. Quantitative Analysis

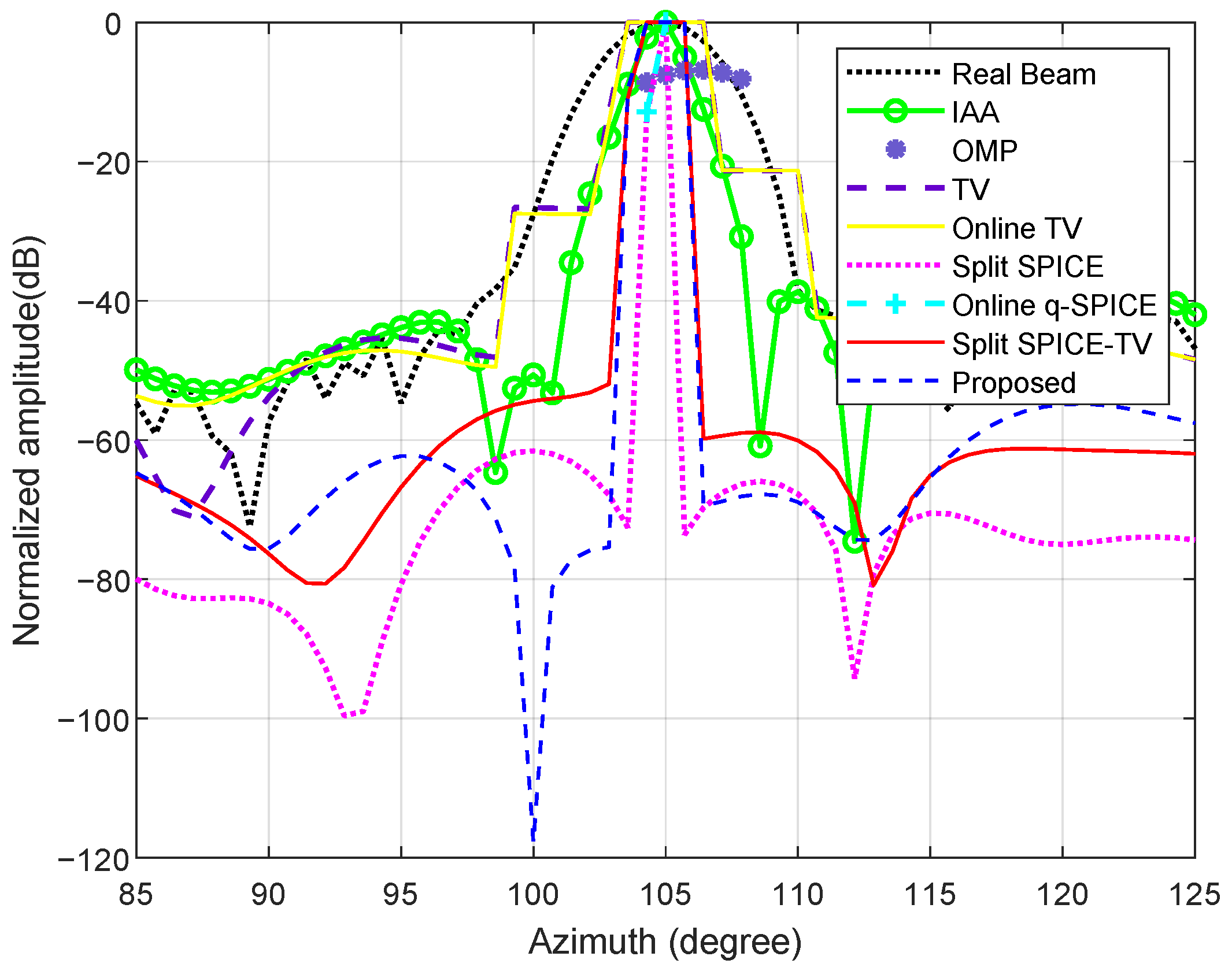

The profiles of the eight methods at the 50 m range bin are extracted from Figure 8 and depicted in Figure 9. When processing complex real-world scenes, both the IAA and OMP methods exhibit significantly lower resolution than the proposed approach. In comparison to the traditional TV method and the online TV method, the proposed method exhibits reduced sidelobes and enhanced resolution. Additionally, when compared to the online q-SPICE method, it successfully recovers the target contours, yielding imaging results similar to those of the split SPICE-TV method.

Table 5 compares the −3 dB bandwidth across methods. The proposed method achieves a narrower −3 dB bandwidth, and hence better resolution, than the IAA method, the OMP method, the traditional TV method, and the online TV method, while matching that of the split SPICE-TV method.
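A −3 dB width of the kind compared in Table 5 can be measured from an azimuth profile as sketched below: locate the peak, walk outward to the samples where the amplitude first drops below the −3 dB level, and interpolate the crossing points linearly. The amplitude convention (−3 dB taken as a factor of 10^(−3/20) on amplitude), the Gaussian test profile, and the name `width_3db` are assumptions made for illustration.

```python
import numpy as np

def width_3db(angles, amplitude) -> float:
    """Width of the main lobe at -3 dB relative to its peak (amplitude profile)."""
    angles = np.asarray(angles, dtype=float)
    amplitude = np.abs(np.asarray(amplitude, dtype=float))
    peak = int(np.argmax(amplitude))
    level = amplitude[peak] * 10 ** (-3 / 20)

    left = peak                      # last sample at or above the level, left side
    while left > 0 and amplitude[left - 1] >= level:
        left -= 1
    right = peak                     # last sample at or above the level, right side
    while right < amplitude.size - 1 and amplitude[right + 1] >= level:
        right += 1

    def crossing(inside: int, outside: int) -> float:
        """Linearly interpolate where the profile crosses the -3 dB level."""
        a_in, a_out = amplitude[inside], amplitude[outside]
        frac = (a_in - level) / (a_in - a_out)
        return angles[inside] + frac * (angles[outside] - angles[inside])

    lo = crossing(left, left - 1) if left > 0 else angles[0]
    hi = crossing(right, right + 1) if right < amplitude.size - 1 else angles[-1]
    return float(hi - lo)

angles = np.linspace(-5.0, 5.0, 1001)
profile = np.exp(-angles ** 2 / 2)   # unit-sigma Gaussian lobe as a test profile
width = width_3db(angles, profile)
print(f"-3 dB width: {width:.4f}")
```

For the Gaussian test lobe the analytic width is 2·sqrt(0.3·ln 10) in units of its standard deviation, which gives a quick check on the interpolation.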

The runtime of the eight methods is presented in Table 5. The runtime of the grid-updating split SPICE-TV method is significantly shorter than that of the IAA method, the OMP method, the traditional TV method, the split SPICE method, and the split SPICE-TV method, while remaining nearly the same as that of the online TV method and the online q-SPICE method, which aligns with the earlier theoretical analysis.

5.2. Imaging for Coastal Monitoring Applications

In the previous section, the effectiveness of the proposed method was demonstrated using measured data recorded from an urban monitoring application. In this section, measured data captured from a coastal monitoring application are used to further validate the efficacy of the proposed method. The experimental parameters are detailed in Table 6.

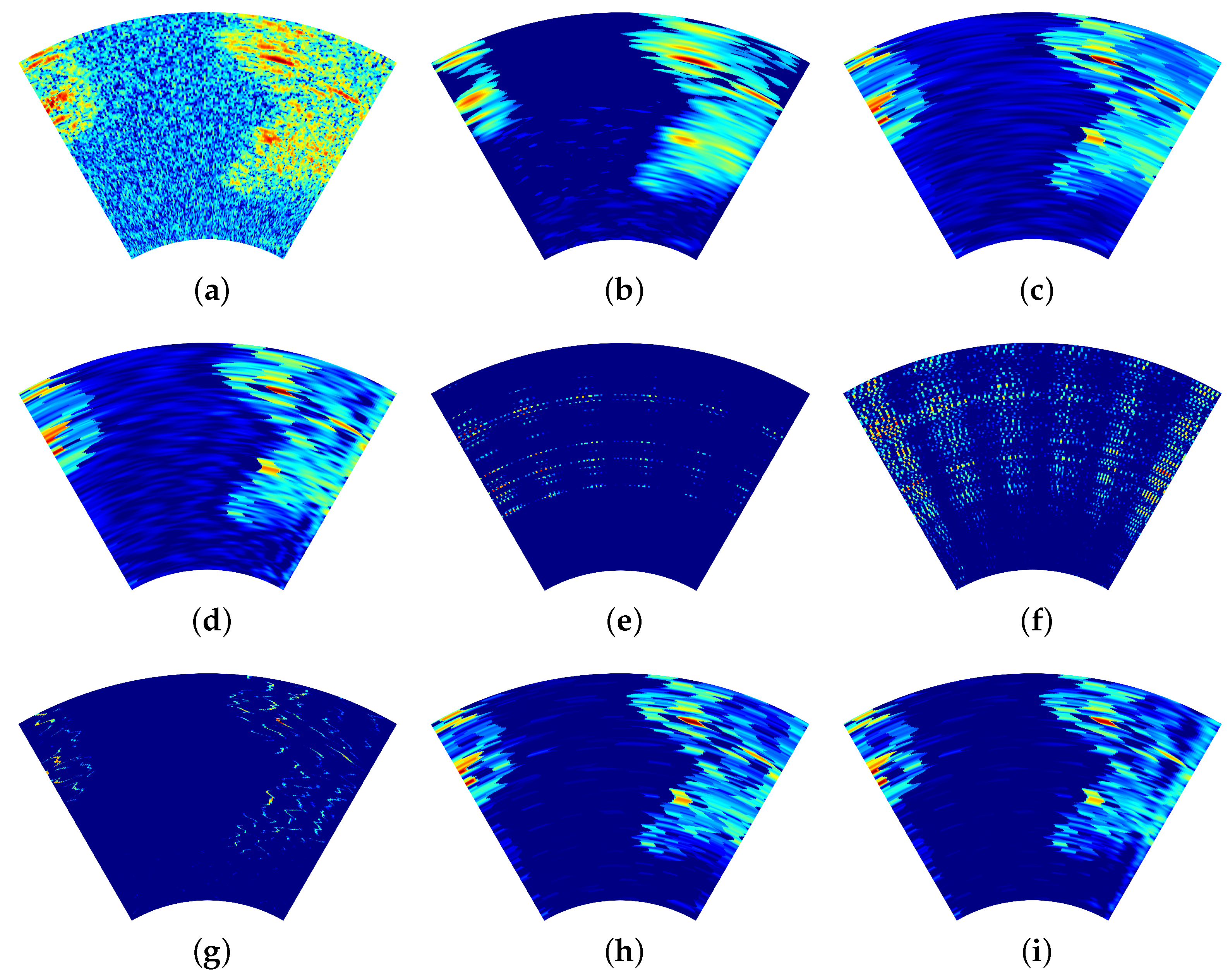

The real scene is depicted in Figure 10, where two outlined targets are visible in the bottom-left and top-right corners. The echo of the original scene is shown in Figure 11a. The super-resolution imaging results are illustrated in Figure 11b–i. Because of the high level of noise in the measured data, the parameters used in the preceding sections are not directly applicable here. Consequently, the parameters of each method have been re-adjusted in this section to suit the specific characteristics of the measured data.

The imaging result of the OMP method (re-adjusted parameters, one of which is set to 10,000) is shown in Figure 11b. As with the previous set of measured data, the OMP method yields no significant improvement in imaging resolution. The imaging result of the traditional TV method (one parameter set to 20,000) is displayed in Figure 11c; it contains numerous sidelobes. The imaging result of the online TV method (one parameter set to 20,000) is shown in Figure 11d. Like the traditional TV method, the online TV method also exhibits sidelobes. Under low-SNR experimental conditions, the imaging results of these three methods demonstrate adequate noise suppression while exhibiting moderate artifact levels.

The imaging result of the IAA method is displayed in Figure 11e. The IAA method shows significantly degraded imaging performance when processing complex scenes with severe background noise. The imaging result of the split SPICE method (one parameter set to 10,000) is presented in Figure 11f. Because of the significant noise in the echoes, the split SPICE method is unable to identify the edges of the targets. The imaging result of the online q-SPICE method is shown in Figure 11g. Despite its high resolution, this method fails to reveal the contours of the targets. Under low-SNR experimental conditions, both the IAA and split SPICE methods exhibit substantially compromised noise suppression along with clearly visible artifacts. In contrast, the online q-SPICE method maintains robust noise rejection while producing artifact-free reconstructions.

The imaging result of the split SPICE-TV method (one parameter set to 10,000) is displayed in Figure 11h, where fewer sidelobes and higher resolution can be observed. The imaging result of the grid-updating split SPICE-TV method (one parameter set to 10,000) is shown in Figure 11i. Its sidelobes are likewise smaller than those of the traditional TV method and the online TV method, and it exhibits higher resolution than both. The imaging result is nearly identical to that of the split SPICE-TV method. Under low-SNR measured data conditions, the imaging results of the two split SPICE-TV methods maintain competent noise suppression while exhibiting no discernible artifacts.

Table 7 presents the runtime of all evaluated methods. The runtime of the grid-updating split SPICE-TV method is significantly shorter than that of the IAA method, the OMP method, the traditional TV method, the split SPICE method, and the split SPICE-TV method, while remaining nearly identical to that of the online TV method and the online q-SPICE method, consistent with the earlier theoretical analysis.

The above analysis demonstrates that the proposed method effectively reduces computational complexity while preserving the high image quality of the split SPICE-TV method.

6. Conclusions

In this paper, we introduce a grid-updating split SPICE-TV method tailored for scanning radars. This method has several advantages. Firstly, compared to the traditional SPICE approach, it integrates TV norm regularization, which better preserves azimuthal edge contours, and in contrast to conventional TV regularization methods, it yields higher imaging resolution and lower sidelobes. Secondly, unlike traditional online methods with a single constraint term, the proposed method incorporates two constraint terms, facilitating the simultaneous recovery of sparse targets and edge contours. Lastly, in comparison to existing methods based on Bregman iteration, the proposed approach offers an online closed-form solution, significantly reducing computational cost without compromising imaging quality. Simulations and experiments on measured data substantiate the effectiveness of the proposed method.

However, the practical deployment of the proposed method is constrained by its requirement for the manual adjustment of three critical parameters. The performance degrades when these parameters are mismatched to dynamic operational environments (e.g., varying clutter or SNR conditions), and the manual tuning process reduces reproducibility across different radar systems. To address these limitations, our future work will focus on developing a neural network architecture capable of predicting optimal parameters directly from raw radar data. This adaptive parameter selection approach would preserve the method’s theoretical advantages while eliminating its manual tuning bottleneck, thereby facilitating real-world field applications.