A False-Positive-Centric Framework for Object Detection Disambiguation

Abstract

1. Introduction

1.1. Motivation

1.2. Past Object Detection Interpretability Frameworks

1.3. New Framework for Assessing Imagery for Object Detection

2. Materials and Methods

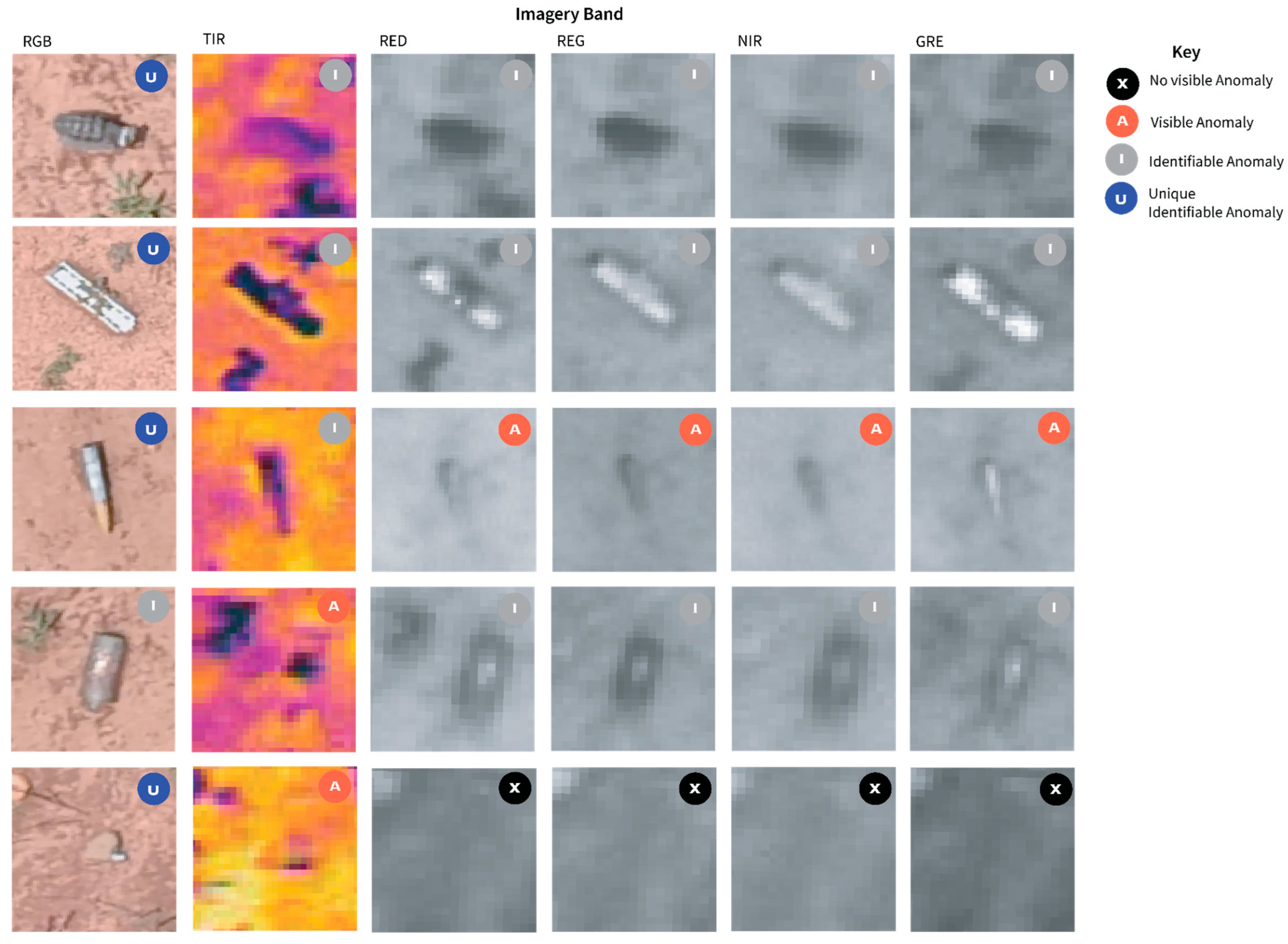

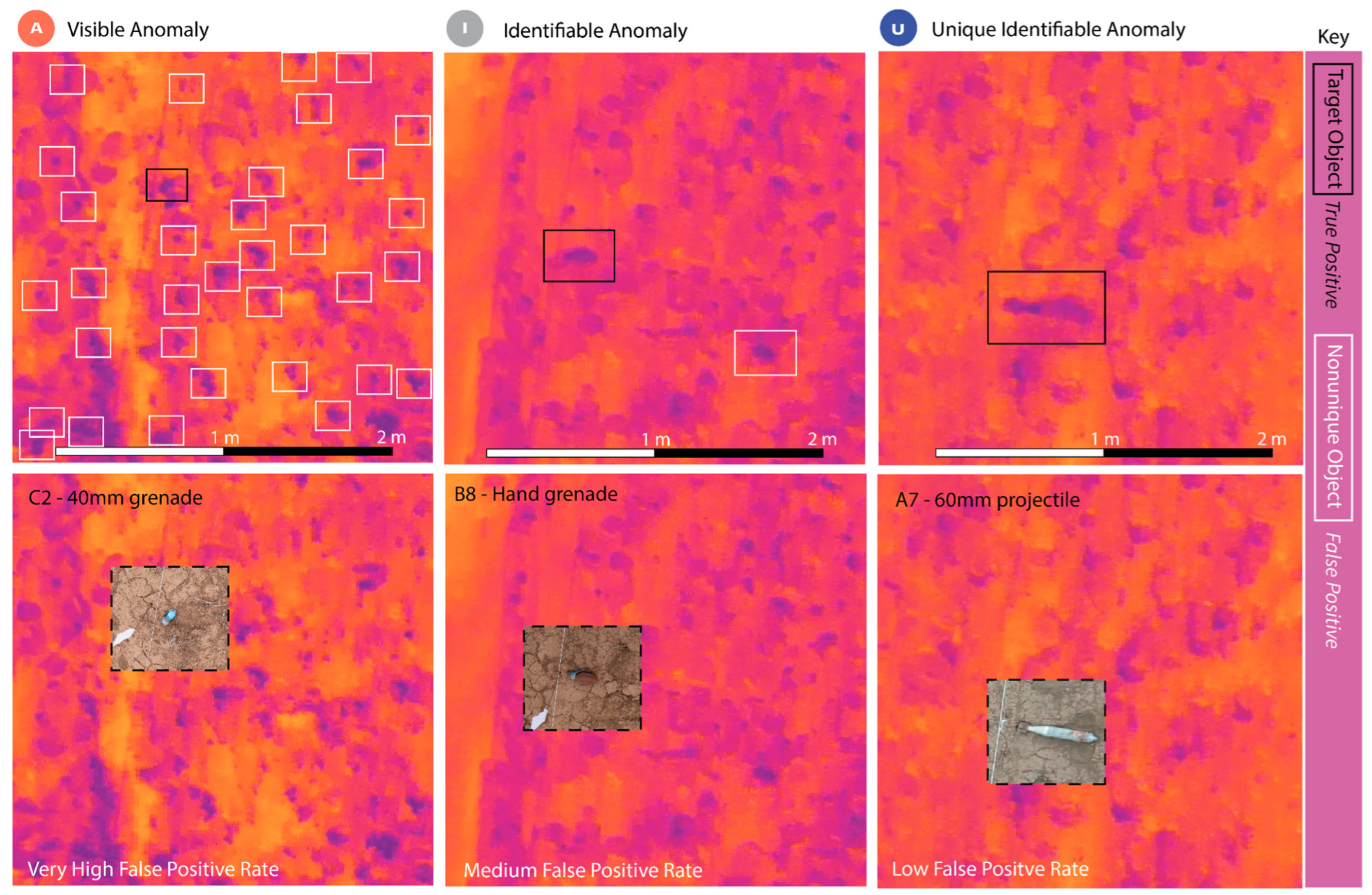

2.1. The AIU Index

2.1.1. Visual Anomaly—Level 1

2.1.2. Identifiable Anomaly—Level 2

2.1.3. Unique Identifiable Anomaly—Level 3

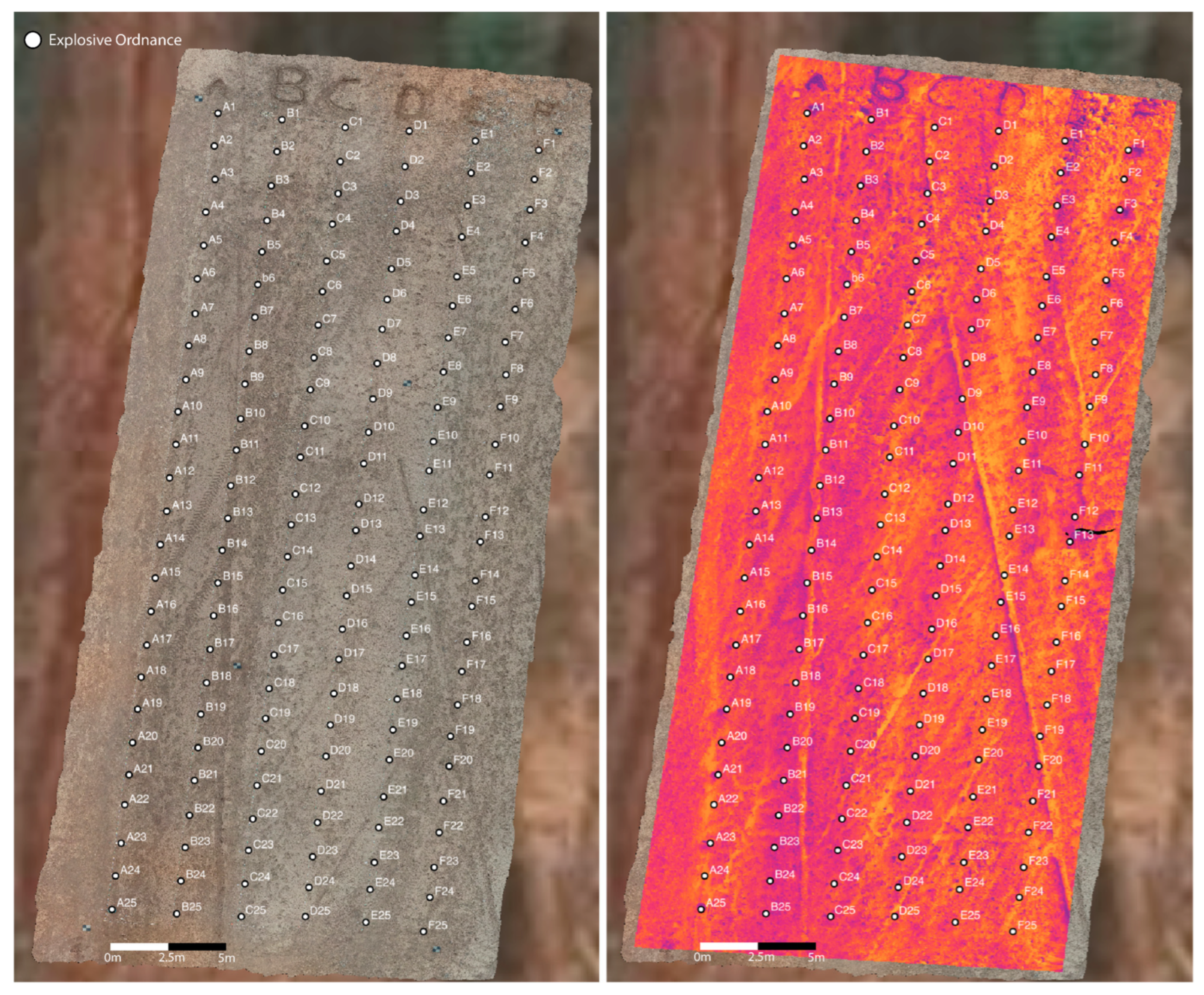

2.2. Test Site

2.3. Data Collection

2.4. Data Processing

2.5. AIU Analysis Across Sensor Modalities

2.6. Flight Height Relationship to Identification Rate

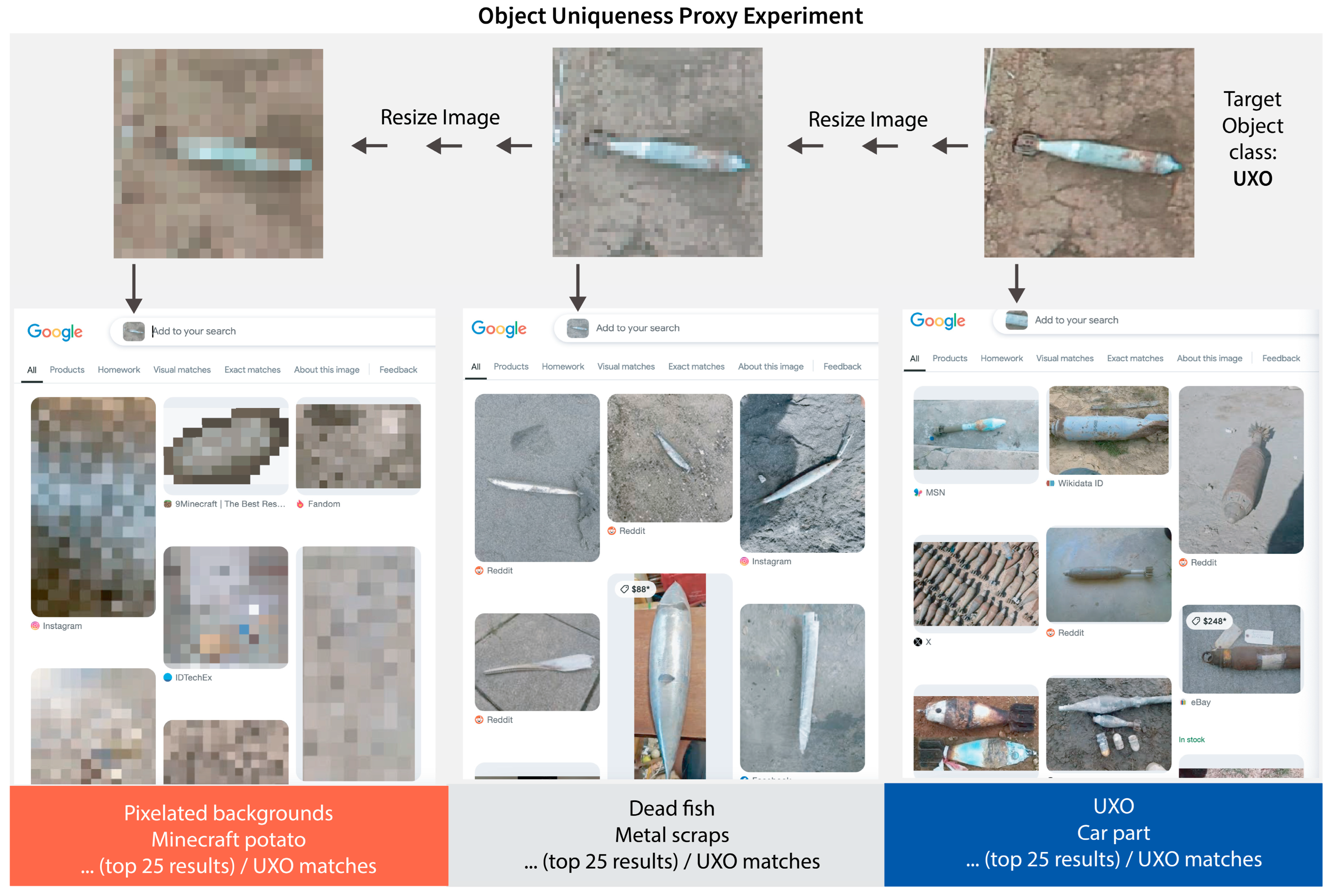

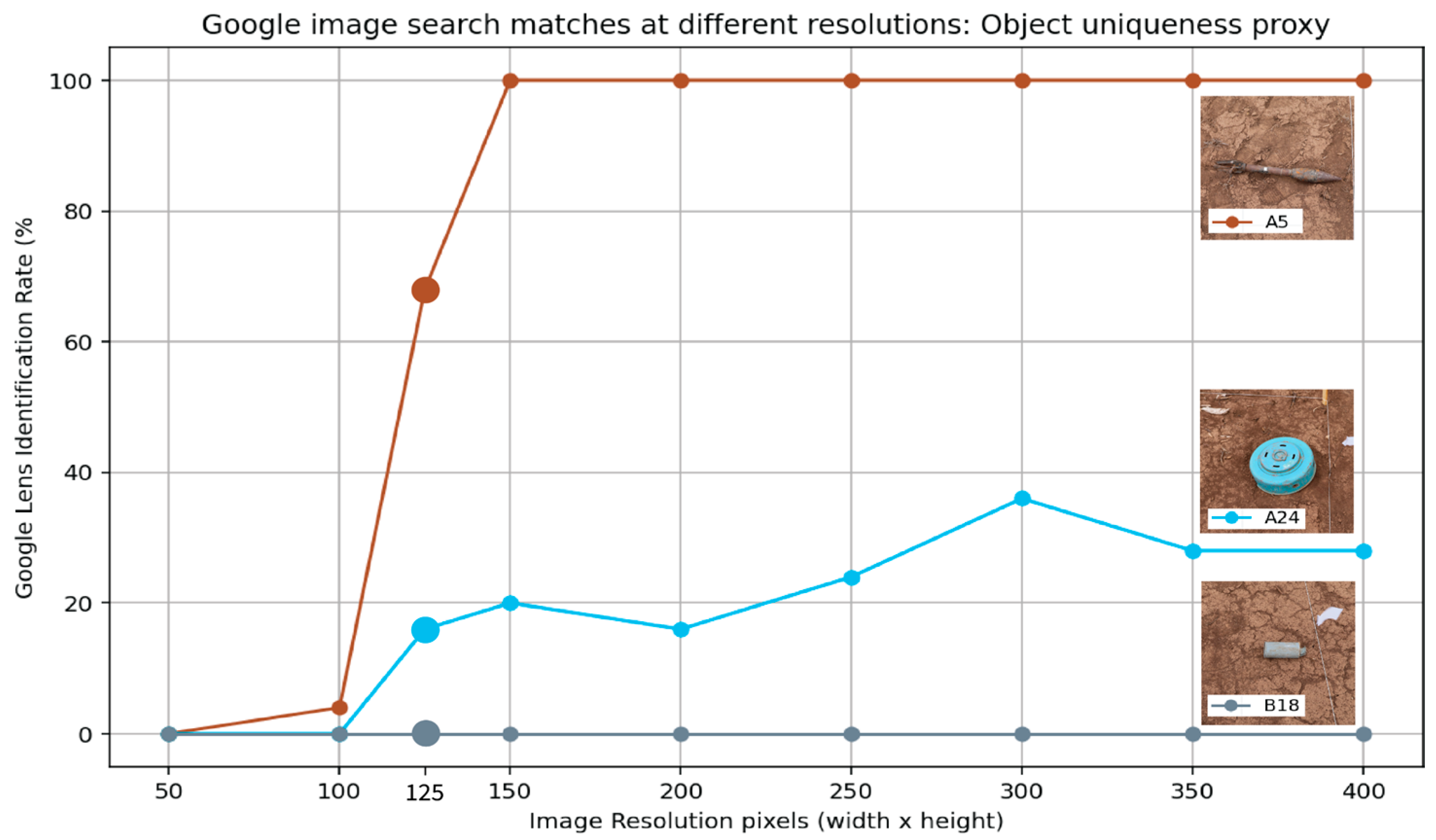

2.7. Object Uniqueness Investigation

3. Results

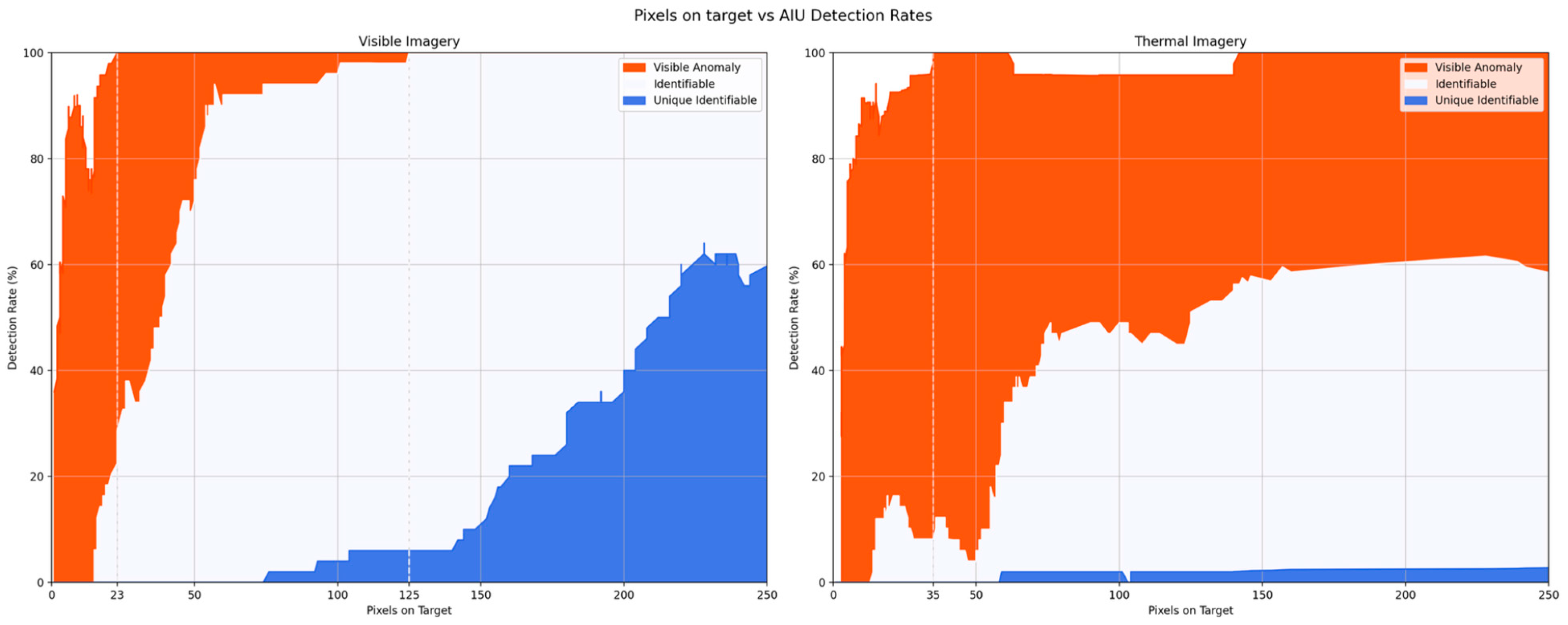

3.1. Comparing RGB, Thermal, and Multispectral Imagery for Landmine Detection

3.2. Relationship Between Flight Height and Detection Rate

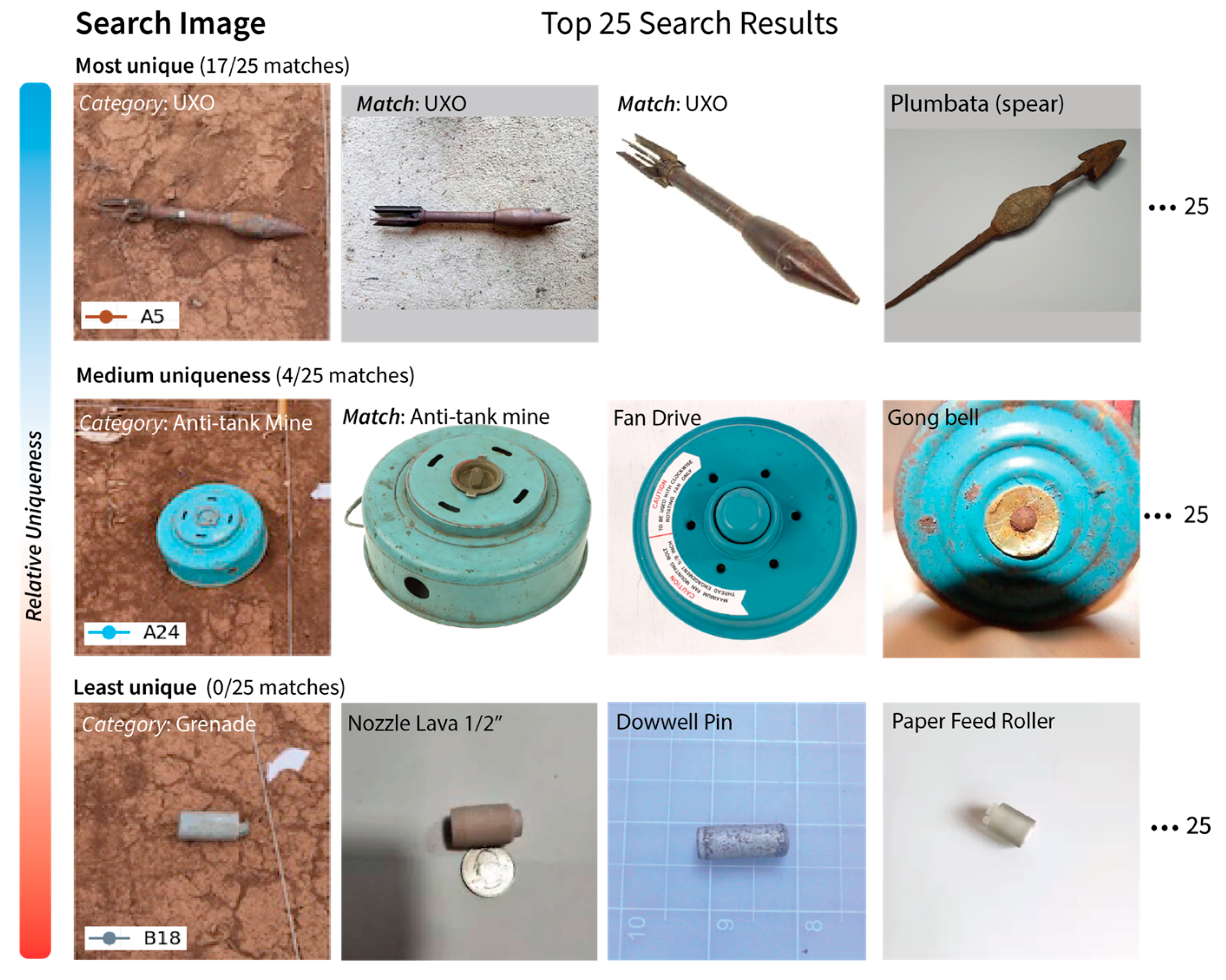

3.3. Proxy for Object Uniqueness

4. Discussion

4.1. Limitations and Discussion on Object Uniqueness

4.2. Evaluating Object Detection Across Sensor Modalities Using the AIU Index

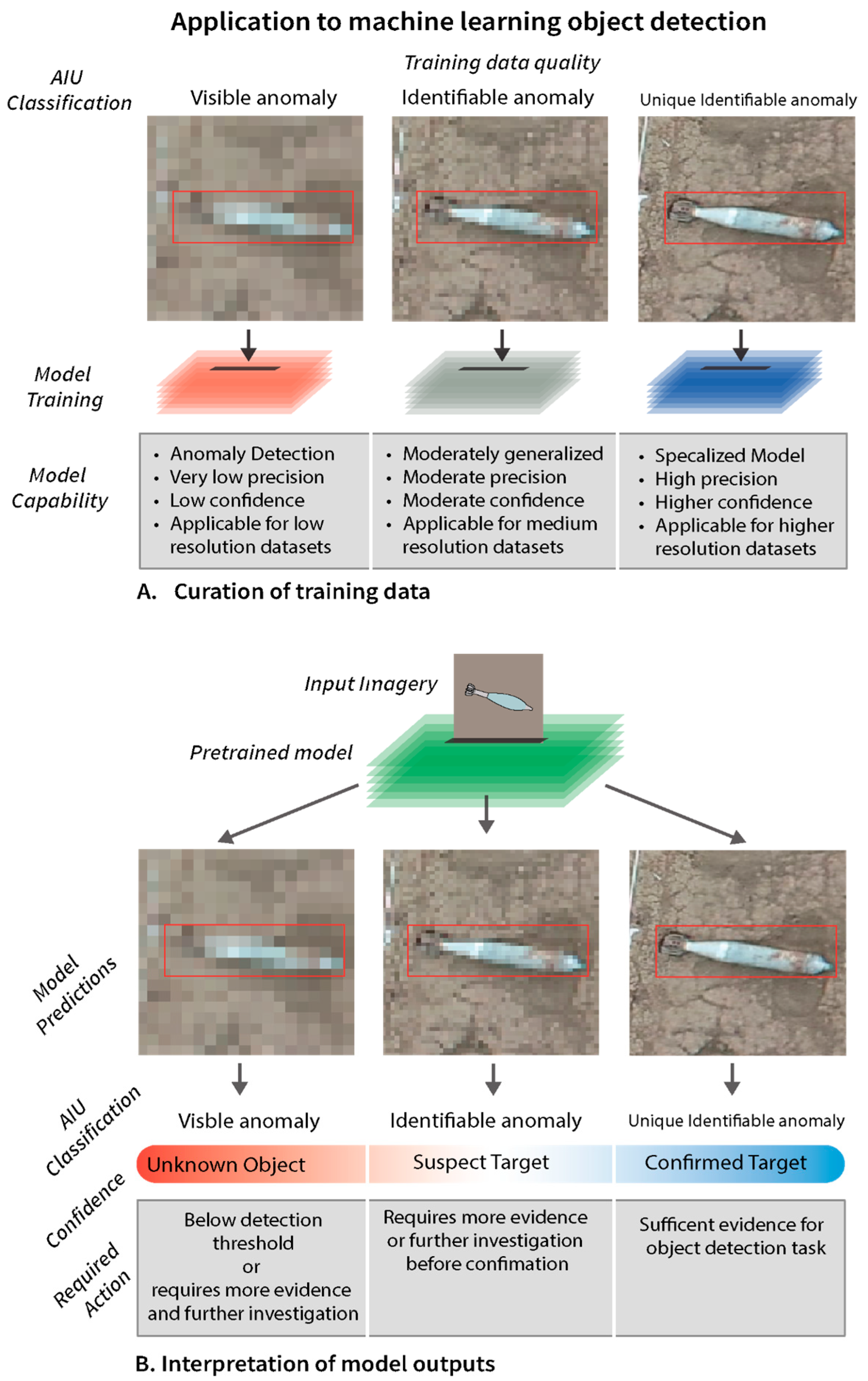

4.3. Application to Machine Learning

4.3.1. Comparing AIU to Precision and Other Object Detection Metrics

4.3.2. Training Data

4.3.3. Interpretation of Bounding Boxes

4.4. Using the AIU Framework for Data Collection for UAVs

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UXO | Unexploded Ordnance |
| DRI | Detection, Recognition, Identification |

References

- Abdelfattah, R.; Wang, X.; Wang, S. TTPLA: An aerial-image dataset for detection and segmentation of transmission towers and power lines. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022.

- Osco, L.P.; de Arruda, M.D.S.; Gonçalves, D.N.; Dias, A.; Batistoti, J.; de Souza, M.; Gomes, F.D.G.; Ramos, A.P.M.; de Castro Jorge, L.A.; Liesenberg, V.; et al. A CNN approach to simultaneously count plants and detect plantation-rows from UAV imagery. ISPRS J. Photogramm. Remote Sens. 2021, 174, 1–17.

- Leng, J.; Ye, Y.; Mo, M.; Gao, C.; Gan, J.; Xiao, B.; Gao, X. Recent Advances for Aerial Object Detection: A Survey. ACM Comput. Surv. 2024, 56, 296.

- Xu, J.; Fan, X.; Jian, H.; Xu, C.; Bei, W.; Ge, Q.; Zhao, T. YoloOW: A spatial scale adaptive real-time object detection neural network for open water search and rescue from UAV aerial imagery. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5623115.

- Bejiga, M.B.; Zeggada, A.; Melgani, F. Convolutional neural networks for near real-time object detection from UAV imagery in avalanche search and rescue operations. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 693–696.

- Baur, J.; Steinberg, G.; Nikulin, A.; Chiu, K.; de Smet, T.S. Applying deep learning to automate UAV-based detection of scatterable landmines. Remote Sens. 2020, 12, 859.

- Lee, M.; Choi, M.; Yang, T.; Kim, J.; Kim, J.; Kwon, O.; Cho, N. A study on the advancement of intelligent military drones: Focusing on reconnaissance operations. IEEE Access 2024, 12, 55964–55975.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I 14; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Johnson, J. Analysis of image forming systems. Sel. Pap. Infrared Des. Part I II 1985, 513, 761.

- Driggers, R.G.; Cox, P.G.; Kelley, M. National imagery interpretation rating system and the probabilities of detection, recognition, and identification. Opt. Eng. 1997, 36, 1952–1959.

- Çetin, A.E.; Dimitropoulos, K.; Gouverneur, B.; Grammalidis, N.; Günay, O.; Habiboğlu, Y.H.; Toreyin, B.U.; Verstockt, S. Video fire detection–review. Digit. Signal Process. 2013, 23, 1827–1843.

- Havens, K.J.; Sharp, E.J. Thermal Imaging Techniques to Survey and Monitor Animals in the Wild: A Methodology; Academic Press: Cambridge, MA, USA, 2015.

- Sjaardema, T.A.; Smith, C.S.; Birch, G.C. History and Evolution of the Johnson Criteria (No. SAND2015-6368); Sandia National Lab. (SNL-NM): Albuquerque, NM, USA, 2015.

- Weldon, W.T.; Hupy, J. Investigating methods for integrating unmanned aerial systems in search and rescue operations. Drones 2020, 4, 38.

- Biederman, I. Recognition-by-components: A theory of human image understanding. Psychol. Rev. 1987, 94, 115.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Baur, J.; Steinberg, G.; Frucci, J.; Brinkley, A. An accessible seeded field for humanitarian mine action research. J. Conv. Weapons Destr. 2023, 27, 2.

- Baur, J.; Steinberg, G. Demining Research Community Seeded Field: High-Resolution RGB, Thermal IR, and Multispectral Orthomosaics [Data Set]. Zenodo. Available online: https://zenodo.org/records/15324498 (accessed on 2 May 2025).

- Pix4D. What Are the Output Results of Pix4dmapper? 2025. Available online: https://support.pix4d.com/hc/en-us/articles/205327435 (accessed on 2 May 2025).

- Jacobs, P.A. Thermal Infrared Characterization of Ground Targets and Backgrounds; SPIE Press: Bellingham, WA, USA, 2006; Volume 70.

- Nikulin, A.; De Smet, T.S.; Baur, J.; Frazer, W.D.; Abramowitz, J.C. Detection and identification of remnant PFM-1 ‘Butterfly Mines’ with a UAV-based thermal-imaging protocol. Remote Sens. 2018, 10, 1672.

- Sabol, D.E.; Gillespie, A.R.; McDonald, E.; Danillina, I. Differential thermal inertia of geological surfaces. In Proceedings of the 2nd Annual International Symposium of Recent Advances in Quantitative Remote Sensing, Torrent, Spain, 25–29 September 2006; pp. 25–29.

- Zhao, H.; Ji, Z.; Li, N.; Gu, J.; Li, Y. Target detection over the diurnal cycle using a multispectral infrared sensor. Sensors 2016, 17, 56.

- de Smet, T.S.; Nikulin, A. Catching “butterflies” in the morning: A new methodology for rapid detection of aerially deployed plastic land mines from UAVs. Lead. Edge 2018, 37, 367–371.

- Hall, D.; Dayoub, F.; Skinner, J.; Zhang, H.; Miller, D.; Corke, P.; Carneiro, G.; Angelova, A.; Sünderhauf, N. Probabilistic object detection: Definition and evaluation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1031–1040.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Pham, H.N.A.; Triantaphyllou, E. The Impact of Overfitting and Overgeneralization on the Classification Accuracy in Data Mining; Springer: Berlin/Heidelberg, Germany, 2008; pp. 391–431.

- Cheon, J.; Baek, S.; Paik, S.B. Invariance of object detection in untrained deep neural networks. Front. Comput. Neurosci. 2022, 16, 1030707.

- Li, H.; Li, J.; Guan, X.; Liang, B.; Lai, Y.; Luo, X. Research on overfitting of deep learning. In Proceedings of the 2019 15th International Conference on Computational Intelligence and Security (CIS), Macao, China, 13–16 December 2019; pp. 78–81.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Elakkiya, R.; Teja, K.S.S.; Jegatha Deborah, L.; Bisogni, C.; Medaglia, C. Imaging based cervical cancer diagnostics using small object detection-generative adversarial networks. Multimed. Tools Appl. 2022, 81, 191–207.

- Haq, I.; Mazhar, T.; Asif, R.N.; Ghadi, Y.Y.; Ullah, N.; Khan, M.A.; Al-Rasheed, A. YOLO and residual network for colorectal cancer cell detection and counting. Heliyon 2024, 10, e24403.

- Hu, H.; Guan, Q.; Chen, S.; Ji, Z.; Lin, Y. Detection and recognition for life state of cell cancer using two-stage cascade CNNs. IEEE/ACM Trans. Comput. Biol. Bioinform. 2017, 17, 887–898.

- Kang, Z.; Catal, C.; Tekinerdogan, B. Machine learning applications in production lines: A systematic literature review. Comput. Ind. Eng. 2020, 149, 106773.

- Kumar, K.; Kumar, P.; Kshirsagar, V.; Bhalerao, R.H.; Shah, K.; Vaidhya, P.K.; Panda, S.K. A real-time object counting and collecting device for industrial automation process using machine vision. IEEE Sens. J. 2023, 23, 13052–13059.

- Baur, J.; Dewey, K.; Steinberg, G.; Nitsche, F.O. Modeling the Effect of Vegetation Coverage on Unmanned Aerial Vehicles-Based Object Detection: A Study in the Minefield Environment. Remote Sens. 2024, 16, 2046.

- Pal, M.; Palevičius, P.; Landauskas, M.; Orinaitė, U.; Timofejeva, I.; Ragulskis, M. An overview of challenges associated with automatic detection of concrete cracks in the presence of shadows. Appl. Sci. 2021, 11, 11396.

| Classification Metric | Description | False Positives | Example PMN-2 Mine |
|---|---|---|---|
| Visible Anomaly (Discrimination Level 1) | A shape, pattern, or grouping of values that is statistically different from the local or global background. Falls below the detection threshold for most imagery-based tasks. | High |  |
| Identifiable Anomaly (Discrimination Level 2) | A visible anomaly that is identifiable with domain knowledge. The object must have either an indicative shape and size or a visible characteristic feature. Above the detection threshold. | Medium |  |
| Unique Identifiable Anomaly (Discrimination Level 3) | An object with clear, unique identifiable features, shape, or size that can be discerned with a high degree of certainty. Above the detection threshold. | Low |  |
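Because the three discrimination levels in the table form an ordered scale, they can be sketched as a small rule-based classifier. This is a minimal illustration under stated assumptions, not the authors' procedure: it assumes the levels are nested (an object at Level 3 also satisfies Level 2), and the `Observation` fields, `classify`, and `above_detection_threshold` are hypothetical names standing in for an analyst's visual judgments.

```python
from dataclasses import dataclass
from enum import IntEnum


class AIULevel(IntEnum):
    """AIU discrimination levels; 0 means no anomaly was seen."""
    NONE = 0
    VISIBLE_ANOMALY = 1
    IDENTIFIABLE_ANOMALY = 2
    UNIQUE_IDENTIFIABLE_ANOMALY = 3


# Expected false-positive burden per level, as listed in the table.
EXPECTED_FALSE_POSITIVES = {
    AIULevel.VISIBLE_ANOMALY: "High",
    AIULevel.IDENTIFIABLE_ANOMALY: "Medium",
    AIULevel.UNIQUE_IDENTIFIABLE_ANOMALY: "Low",
}


@dataclass(frozen=True)
class Observation:
    """Hypothetical analyst judgments about one candidate object."""
    differs_from_background: bool      # statistically distinct from local/global background
    indicative_shape_and_size: bool    # shape and size consistent with the target
    characteristic_feature: bool       # a visible characteristic feature of the target
    unique_with_high_certainty: bool   # unique features discernible with high certainty


def classify(obs: Observation) -> AIULevel:
    """Assign the highest AIU level the observation satisfies."""
    if not obs.differs_from_background:
        return AIULevel.NONE
    # Level 2 needs an indicative shape and size OR a visible characteristic feature.
    if not (obs.indicative_shape_and_size or obs.characteristic_feature):
        return AIULevel.VISIBLE_ANOMALY
    # Assumption: Level 3 is reachable only from Level 2 (nested levels).
    if obs.unique_with_high_certainty:
        return AIULevel.UNIQUE_IDENTIFIABLE_ANOMALY
    return AIULevel.IDENTIFIABLE_ANOMALY


def above_detection_threshold(level: AIULevel) -> bool:
    """Levels 2 and 3 are above the detection threshold; Level 1 is not."""
    return level >= AIULevel.IDENTIFIABLE_ANOMALY
```

For example, `classify(Observation(True, True, False, False))` returns `AIULevel.IDENTIFIABLE_ANOMALY`, which `above_detection_threshold` accepts. Using an `IntEnum` keeps the levels ordered, so threshold checks are simple comparisons.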

| Orthophoto (Dataset ID) | Modality | GSD (cm/pixel) | Detected Anomaly | Identifiable Anomaly | Unique Identifiable Anomaly | Reason Not Classified as an Anomaly or an Identifiable Anomaly |
|---|---|---|---|---|---|---|
| Field 1 (3-1): 0 days post-emplacement (pre-burial) | RGB | 0.21 | 131/131 | 131/131 | 87/131 | All objects are identifiable anomalies |
| Field 1 (3-2): 3 months post-emplacement | RGB | 0.27 | 27/27 | 25/27 | 12/27 | 1/2 partial vegetation coverage; 1/2 bright reflectance |
| Field 2 (19-1): 0 days post-emplacement | RGB | 0.33 | 31/31 | 31/31 | 18/31 | All objects are identifiable anomalies |
| Field 2 (19-2): 1 year post-emplacement | RGB | 0.31 | 33/33 | 24/33 | 8/33 | 8/9 partial vegetation and dirt coverage; 1/9 blends into background |
| Field 3 (34-1): 0 days post-emplacement (pre-burial) | RGB | 0.31 | 130/130 | 128/130 | 47/130 | 2/2: PFM-1 blends into background without identifiable features, shape, or size |
| Field 3 (34-2): 0 days post-emplacement | RGB | 0.31 | 30/30 | 30/30 | 10/30 | All objects are identifiable anomalies |
| Field 1 (4-1): pre-burial, all objects on the surface, excluding control holes | TIR | 1.00 | 141/143 | 37/143 | 2/143 | No identifiable features or clear shapes in TIR for most objects; temperature similar to other soil disturbances |
| Field 1 (4-2): 1 h post-emplacement | TIR | 0.37 | 24/24 | 6/24 | 0/24 | Majority of objects did not have an identifiable shape, size, or features |
| Field 1 (4-3): 3 months post-emplacement | TIR | 0.99 | 18/22 | 4/22 | 0/22 | 4/4 thermally indistinguishable from background; no visible anomaly |
| Field 2 (18-1): 1 year since burial | TIR | 0.83 | 27/29 | 9/29 | 1/29 | 2/2 thermally indistinguishable from background; no visible anomaly |
| Field 2 (18-2): 1 year since burial | TIR | 0.86 | 27/29 | 9/29 | 1/29 | 2/2 thermally indistinguishable from background; no visible anomaly |
| Field 2 (30-1): 1 year since burial | Multispectral (Red) | 1.17 | 24/30 | 12/30 | 0/30 | 4/4 indistinguishable from background |
| Field 2 (30-2): 1 year since burial | Multispectral (RedEdge) | 1.17 | 25/30 | 12/30 | 0/30 | 5/5 indistinguishable from background |
| Field 2 (30-3): 1 year since burial | Multispectral (NIR) | 1.17 | 25/30 | 12/30 | 0/30 | 5/5 indistinguishable from background |
| Field 2 (30-4): 1 year since burial | Multispectral (Green) | 1.17 | 26/30 | 15/30 | 0/30 | 4/4 indistinguishable from background |
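Each cell of the results table is a count over the objects present in one orthophoto, so per-level identification rates are simple ratios. The sketch below transcribes the table's counts and pools them by sensor family. The `Orthophoto` record, `level_rates`, `pooled_counts`, and the pooling itself are illustrative assumptions, not part of the published analysis. Pooled sums repeatedly count the same physical objects (for example, the four multispectral bands of dataset 30 appear to image one field), so they summarize the table rather than independent trials.

```python
from collections import defaultdict
from typing import NamedTuple


class Orthophoto(NamedTuple):
    """One row of the AIU results table (hypothetical record type)."""
    dataset_id: str
    modality: str
    gsd_cm_per_px: float
    detected: int       # Level 1: visible (detected) anomalies
    identifiable: int   # Level 2: identifiable anomalies
    unique: int         # Level 3: unique identifiable anomalies
    total: int          # objects present in the orthophoto


# Counts transcribed from the results table.
ROWS = [
    Orthophoto("3-1", "RGB", 0.21, 131, 131, 87, 131),
    Orthophoto("3-2", "RGB", 0.27, 27, 25, 12, 27),
    Orthophoto("19-1", "RGB", 0.33, 31, 31, 18, 31),
    Orthophoto("19-2", "RGB", 0.31, 33, 24, 8, 33),
    Orthophoto("34-1", "RGB", 0.31, 130, 128, 47, 130),
    Orthophoto("34-2", "RGB", 0.31, 30, 30, 10, 30),
    Orthophoto("4-1", "TIR", 1.00, 141, 37, 2, 143),
    Orthophoto("4-2", "TIR", 0.37, 24, 6, 0, 24),
    Orthophoto("4-3", "TIR", 0.99, 18, 4, 0, 22),
    Orthophoto("18-1", "TIR", 0.83, 27, 9, 1, 29),
    Orthophoto("18-2", "TIR", 0.86, 27, 9, 1, 29),
    Orthophoto("30-1", "Multispectral Red", 1.17, 24, 12, 0, 30),
    Orthophoto("30-2", "Multispectral RedEdge", 1.17, 25, 12, 0, 30),
    Orthophoto("30-3", "Multispectral NIR", 1.17, 25, 12, 0, 30),
    Orthophoto("30-4", "Multispectral Green", 1.17, 26, 15, 0, 30),
]


def level_rates(row: Orthophoto) -> tuple[float, float, float]:
    """Fraction of objects reaching Level 1, 2, and 3 in one orthophoto."""
    return (row.detected / row.total,
            row.identifiable / row.total,
            row.unique / row.total)


def pooled_counts(rows: list[Orthophoto]) -> dict[str, tuple[int, ...]]:
    """Sum (detected, identifiable, unique, total) per sensor family."""
    acc: dict[str, list[int]] = defaultdict(lambda: [0, 0, 0, 0])
    for r in rows:
        # Collapse the individual multispectral bands into one family.
        family = "Multispectral" if r.modality.startswith("Multispectral") else r.modality
        for i, n in enumerate((r.detected, r.identifiable, r.unique, r.total)):
            acc[family][i] += n
    return {family: tuple(v) for family, v in acc.items()}


if __name__ == "__main__":
    for family, (d, i, u, t) in pooled_counts(ROWS).items():
        print(f"{family}: L1 {d / t:.1%}, L2 {i / t:.1%}, L3 {u / t:.1%} (n={t})")
```

Keeping the raw counts rather than precomputed percentages makes it easy to check the nesting the AIU index implies (Level 1 count at least Level 2 count at least Level 3 count) for every row.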

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Baur, J.; Nitsche, F.O. A False-Positive-Centric Framework for Object Detection Disambiguation. Remote Sens. 2025, 17, 2429. https://doi.org/10.3390/rs17142429

Baur J, Nitsche FO. A False-Positive-Centric Framework for Object Detection Disambiguation. Remote Sensing. 2025; 17(14):2429. https://doi.org/10.3390/rs17142429

Chicago/Turabian Style: Baur, Jasper, and Frank O. Nitsche. 2025. "A False-Positive-Centric Framework for Object Detection Disambiguation" Remote Sensing 17, no. 14: 2429. https://doi.org/10.3390/rs17142429

APA Style: Baur, J., & Nitsche, F. O. (2025). A False-Positive-Centric Framework for Object Detection Disambiguation. Remote Sensing, 17(14), 2429. https://doi.org/10.3390/rs17142429