Phenology-Aware Transformer for Semantic Segmentation of Non-Food Crops from Multi-Source Remote Sensing Time Series

Abstract

1. Introduction

2. Study Area and Data

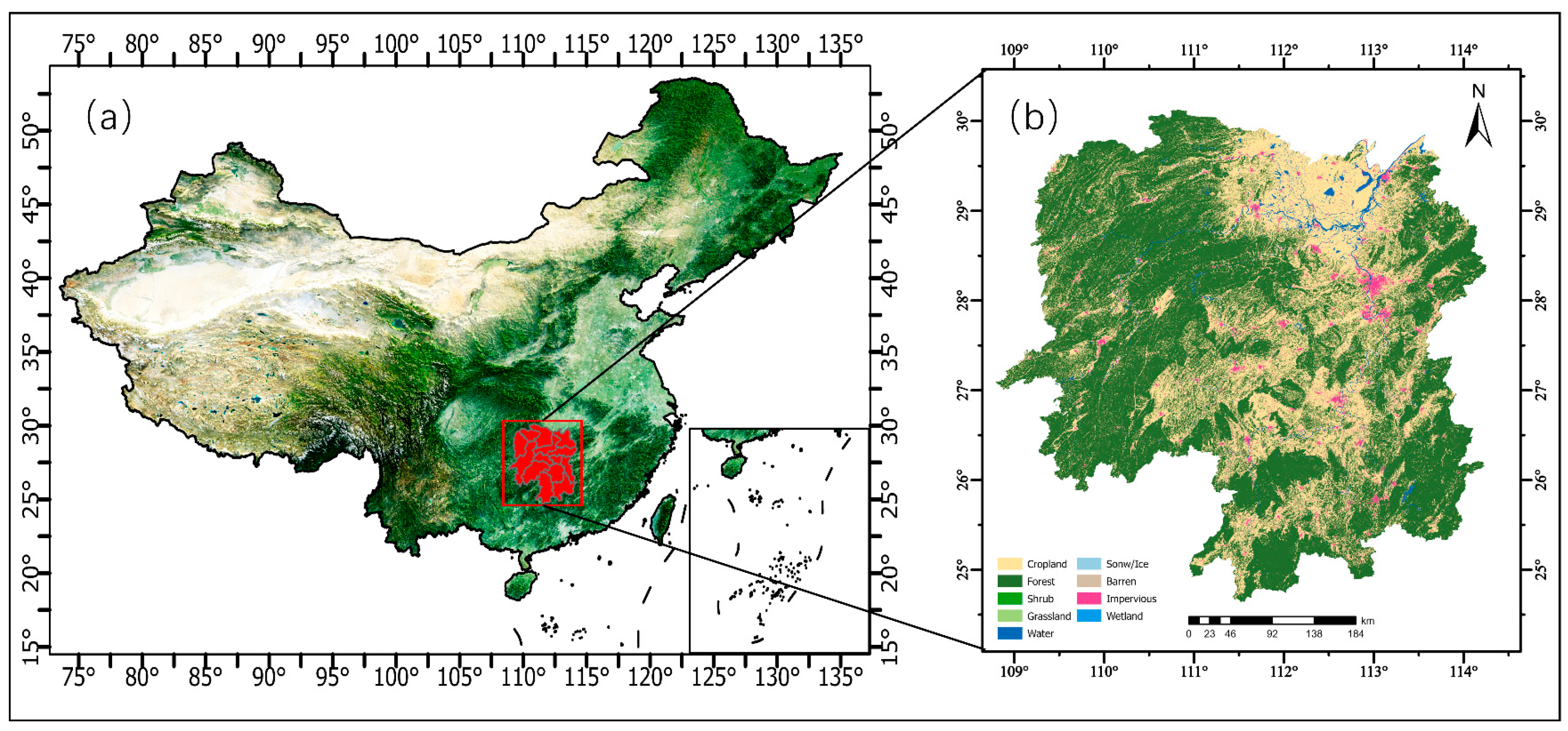

2.1. Study Area

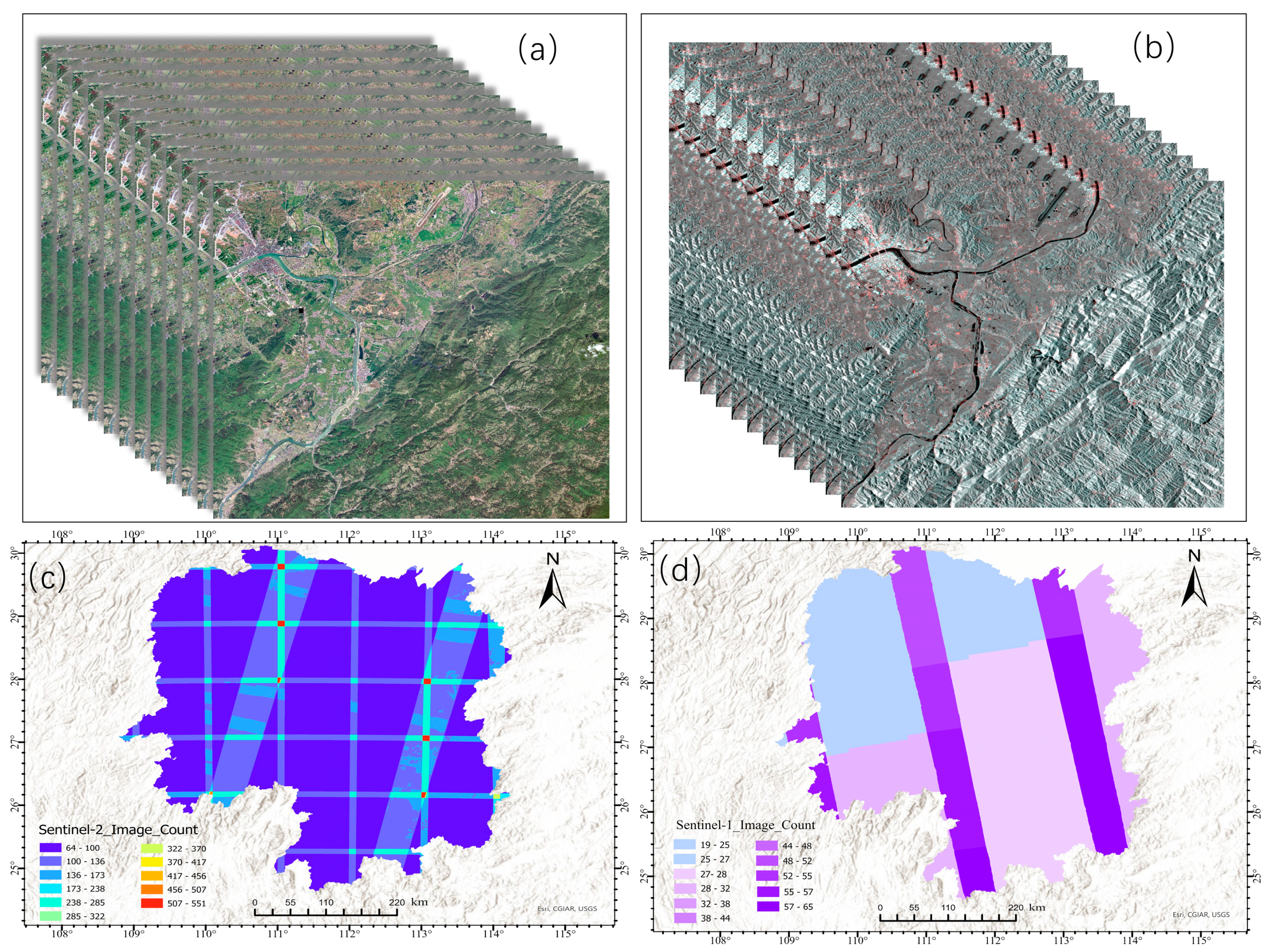

2.2. Data

2.2.1. Remote-Sensing Data

2.2.2. Auxiliary Data

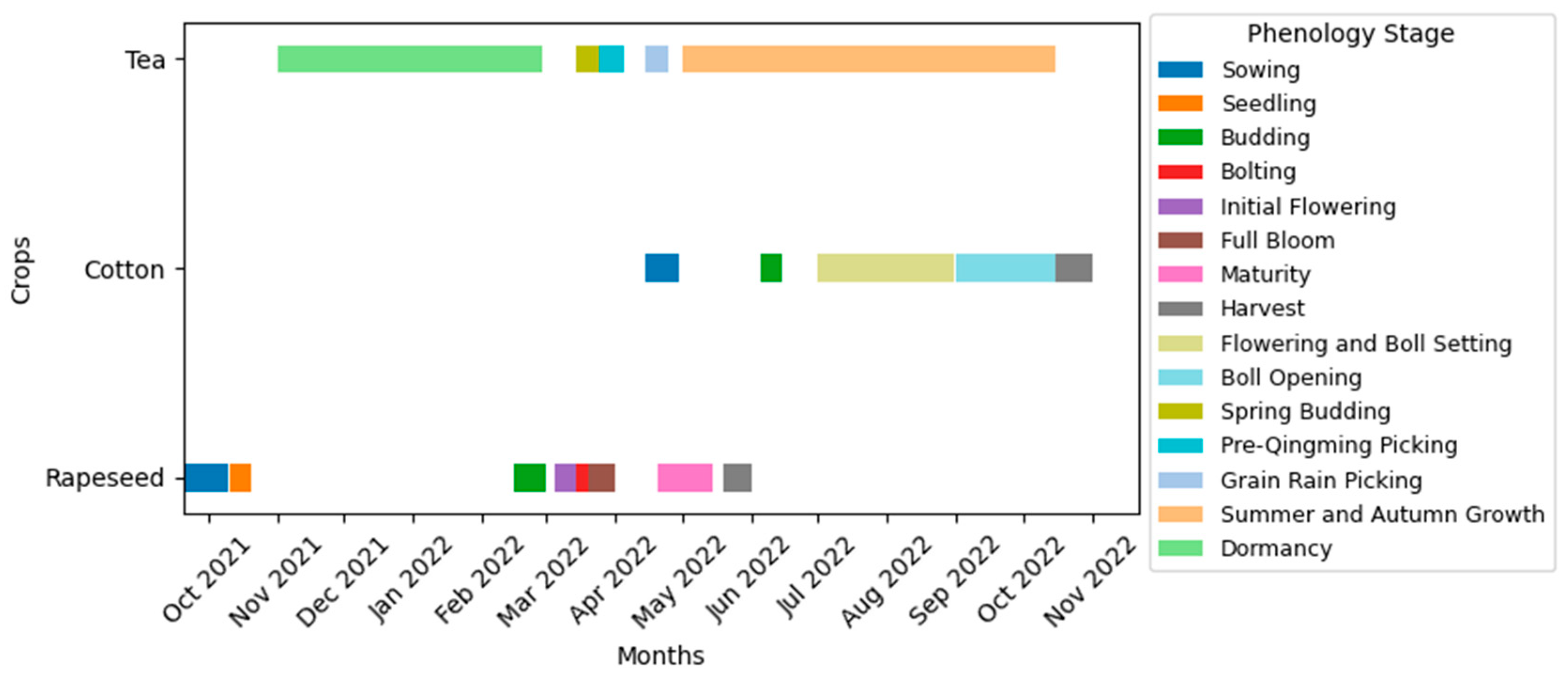

2.2.3. Sample Data

2.3. Experimental Datasets

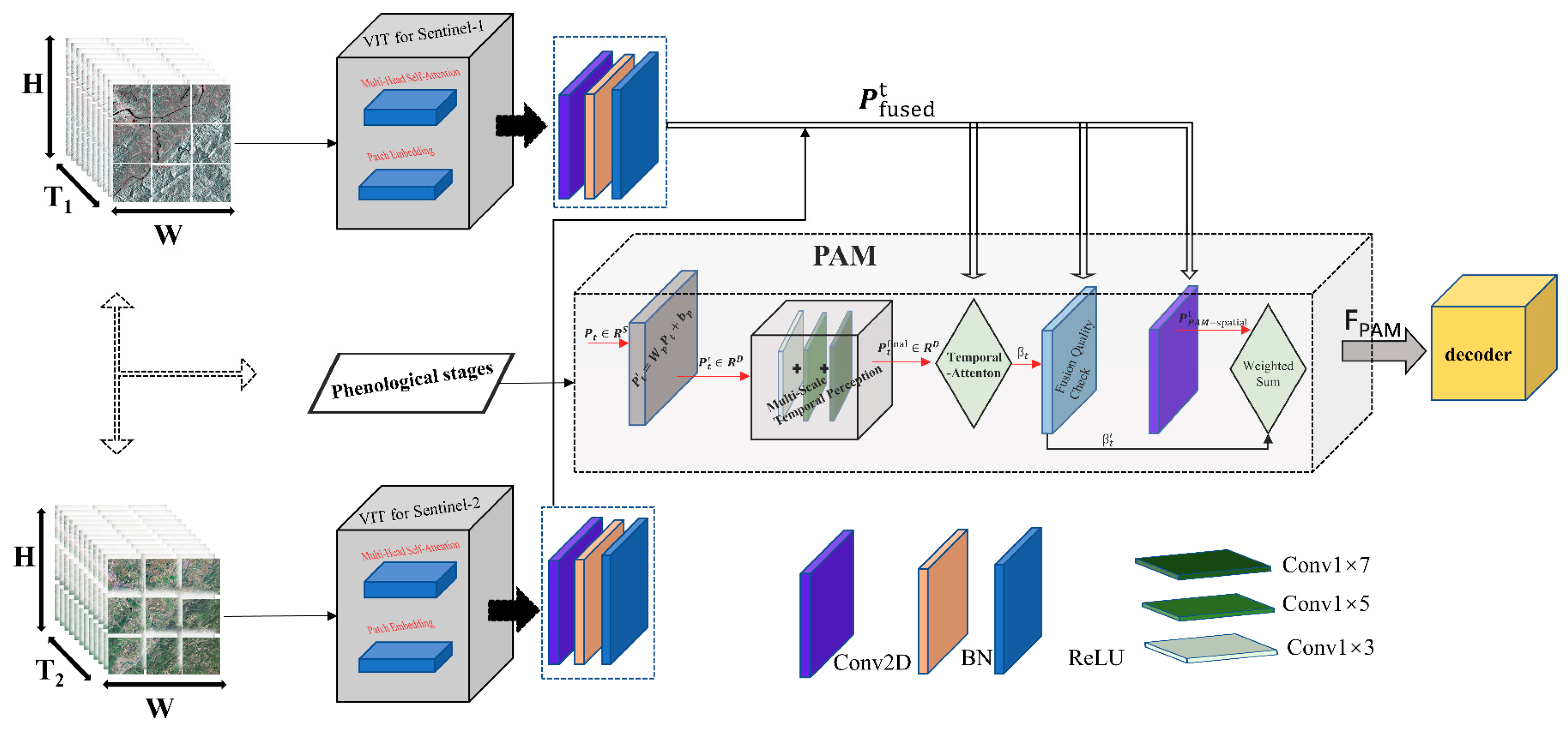

3. Materials and Methods

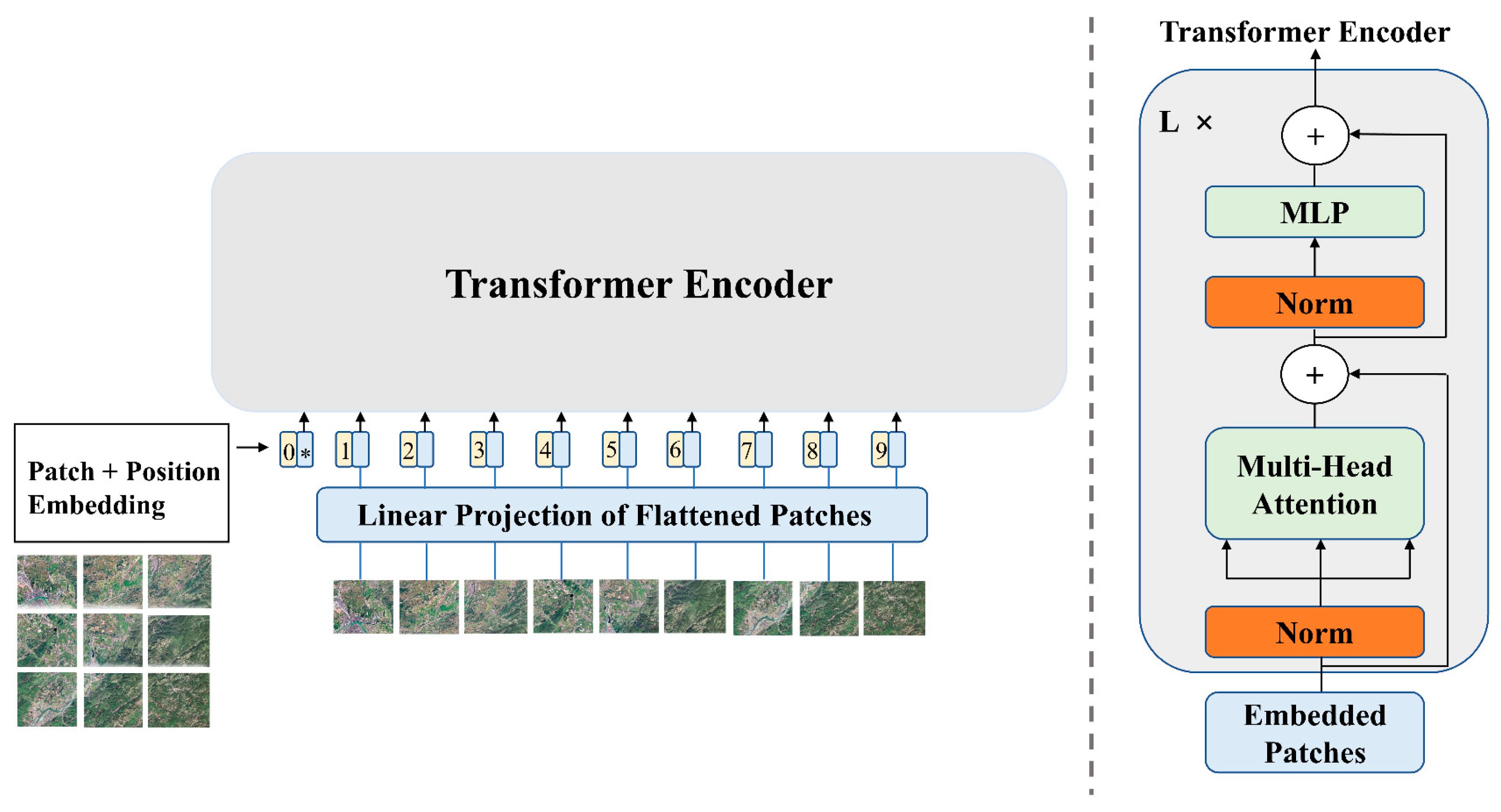

3.1. VIT Module

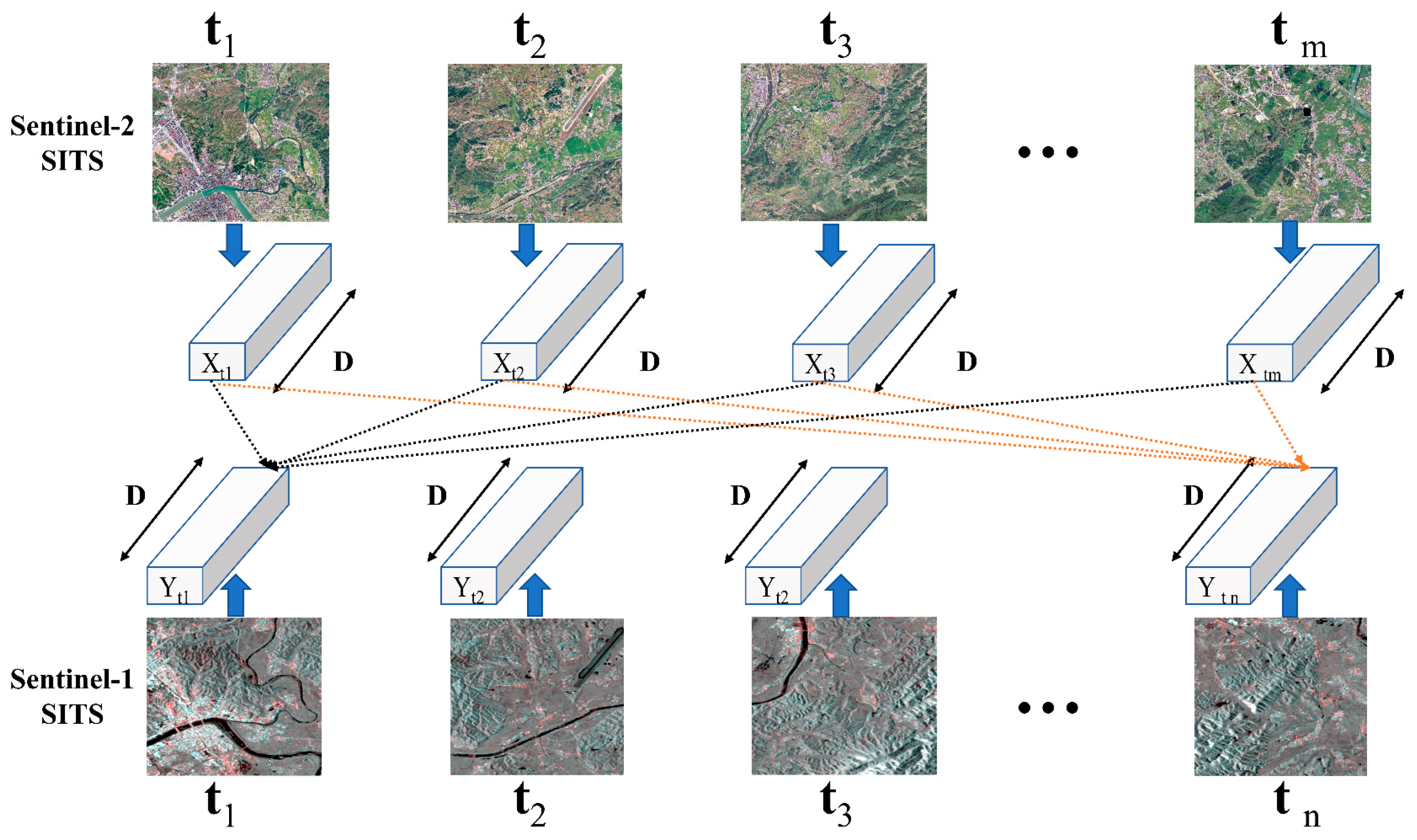

3.2. Multi-Task Attention Fusion (MTAF) Module

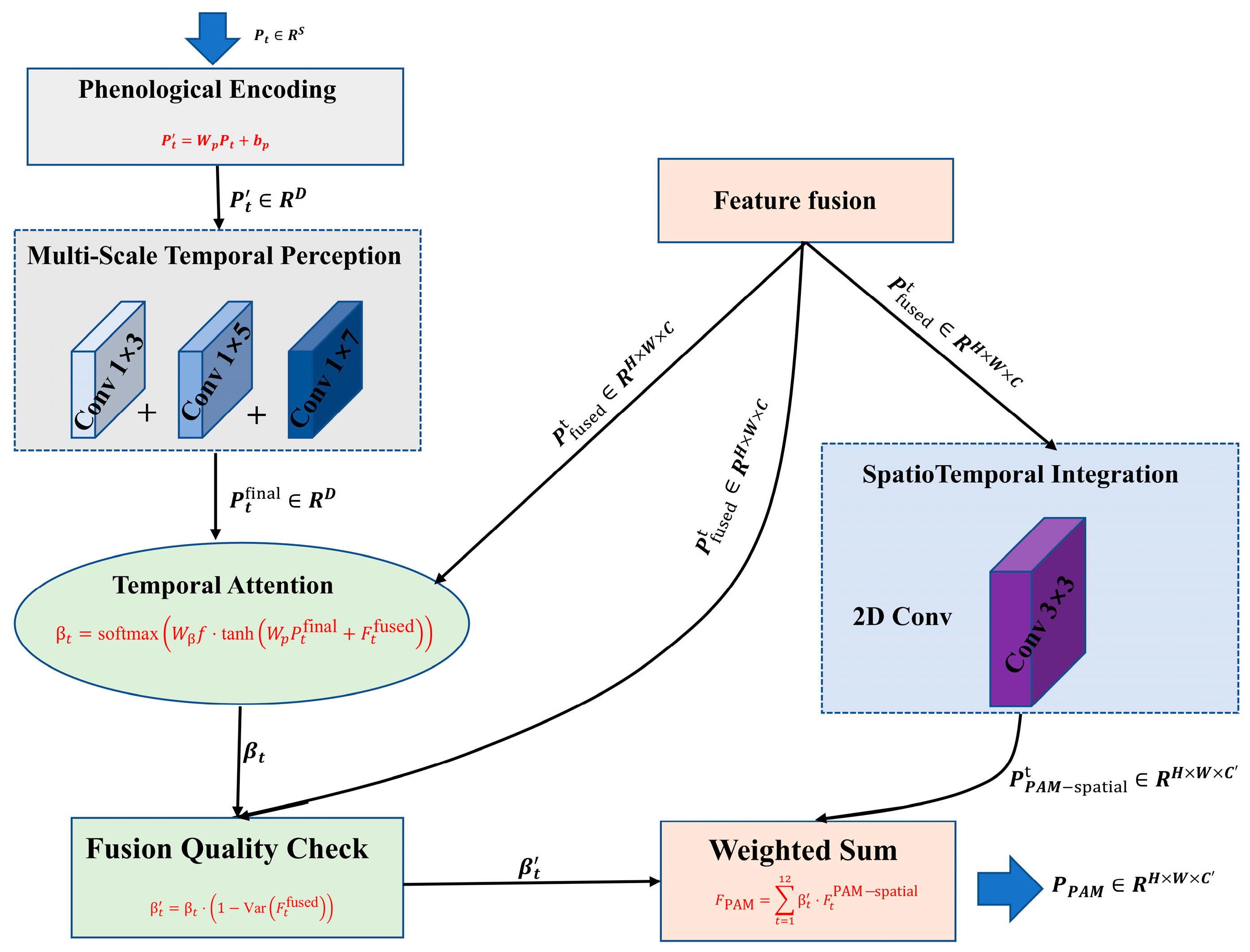

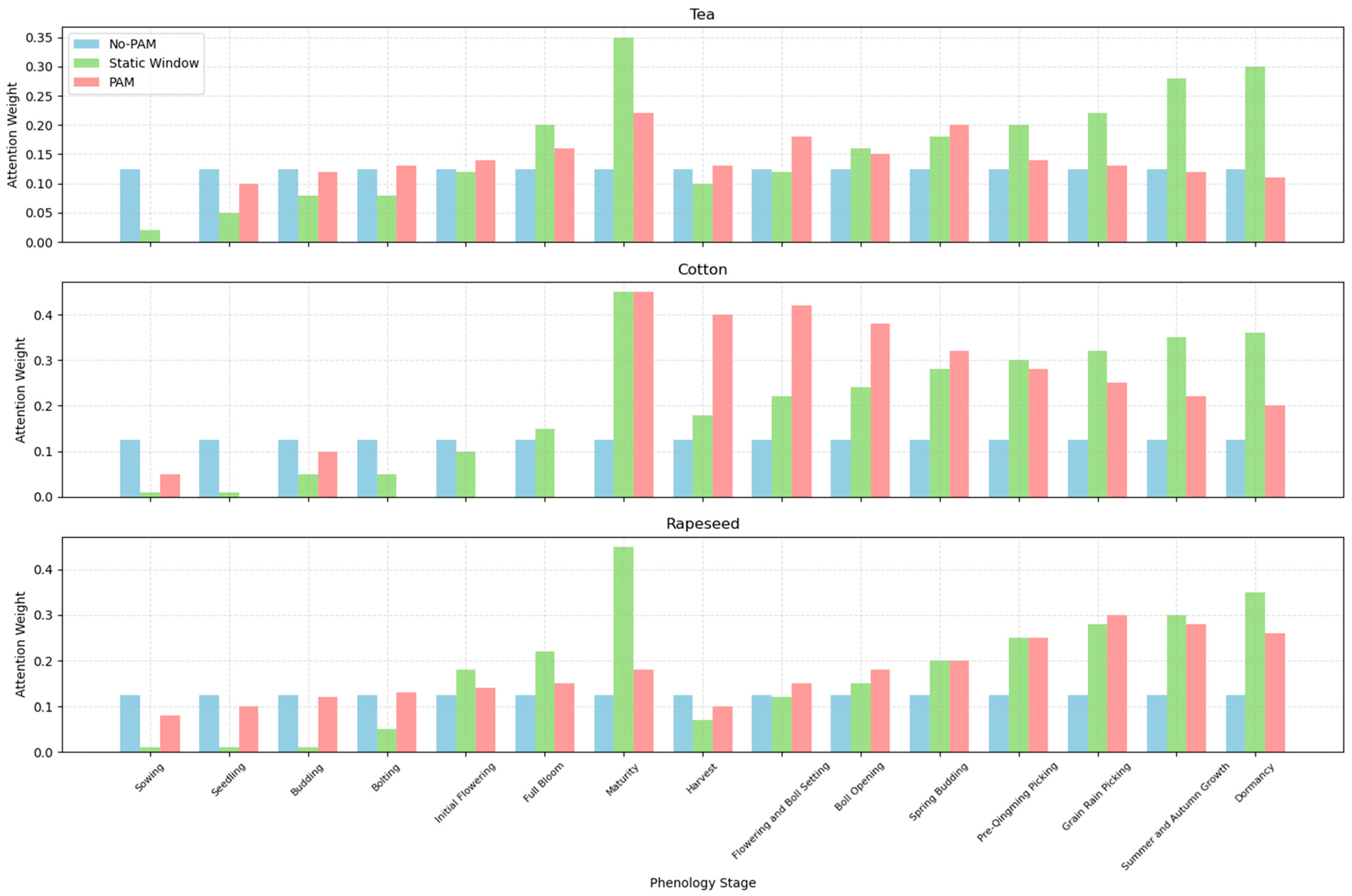

3.3. Phenology-Aware Module (PAM)

3.3.1. Stage Encoding

3.3.2. Multi-Scale Phenology Perception

3.3.3. Time Attention Generation

3.3.4. Spatiotemporal Integration

3.3.5. Fusion Quality Check and Stage Feature Weighting

3.4. Decoder

3.5. Loss Function

3.6. Evaluation Metrics
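The result tables report Precision, Recall, F1 score, IoU and mPA. As a minimal sketch, assuming standard per-class definitions averaged over classes (macro averaging), these metrics can be computed from a pixel confusion matrix. The function name and the rule for skipping empty classes are illustrative assumptions. Under these standard definitions mPA equals macro recall, while the reported tables give different values for the two, so the paper's exact averaging scheme may differ from this sketch.

```python
from typing import Dict, List


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Macro-averaged segmentation metrics from a square confusion matrix.

    cm[i][j] is the number of pixels whose true class is i and whose
    predicted class is j. Classes that never occur in either the labels
    or the predictions are skipped (an assumption of this sketch).
    """
    n = len(cm)
    precisions, recalls, f1s, ious = [], [], [], []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[row][c] for row in range(n)) - tp  # predicted c, truly another class
        fn = sum(cm[c]) - tp                           # truly c, predicted another class
        if tp + fp + fn == 0:
            continue
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
        ious.append(tp / (tp + fp + fn))
    k = len(ious)
    if k == 0:
        raise ValueError("confusion matrix contains no pixels")
    return {
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
        "iou": sum(ious) / k,
        # Per-class pixel accuracy TP / (TP + FN) is the per-class recall,
        # so under this definition mPA equals macro recall.
        "mpa": sum(recalls) / k,
    }


if __name__ == "__main__":
    # Toy two-class example: rows are true classes, columns are predictions.
    print(segmentation_metrics([[50, 10], [5, 35]]))
```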

4. Model Validation and Analysis

4.1. Experimental Setup

4.2. Ablation Experiments

- (1) Full model: the complete PVM model was evaluated, and its results serve as the benchmark for assessing the ablated variants that follow.

- (2) Effect of the PAM module: the PAM module was removed and the remaining MTAF + VIT + Decoder pipeline was evaluated, to measure the impact of PAM on non-food crop pixel segmentation.

- (3) Effect of the MTAF module: the MTAF module was removed, the model was fine-tuned to adapt to its absence, and a complete experiment was run. The results were analyzed to assess how time-series data fusion affects the segmentation task.
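The ablation variants can be summarized as on/off switches for PAM and MTAF around an always-present VIT backbone and decoder. The sketch assumes the table row names are built from module initials (P = PAM, V = VIT, M = MTAF), so that experiment (2) corresponds to VM, experiment (3) to PV, and the VIT-only row V to removing both modules; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class Variant:
    """One ablation variant; the VIT backbone and decoder are always kept."""
    use_pam: bool   # Phenology-Aware Module
    use_mtaf: bool  # MTAF fusion module

    @property
    def name(self) -> str:
        # Assumed naming scheme: P = PAM, V = VIT, M = MTAF.
        return ("P" if self.use_pam else "") + "V" + ("M" if self.use_mtaf else "")

    @property
    def modules(self) -> FrozenSet[str]:
        mods = {"VIT", "Decoder"}
        if self.use_pam:
            mods.add("PAM")
        if self.use_mtaf:
            mods.add("MTAF")
        return frozenset(mods)


def all_variants() -> Dict[str, FrozenSet[str]]:
    """Enumerate every on/off combination of PAM and MTAF."""
    variants = (Variant(pam, mtaf) for pam, mtaf in product((True, False), repeat=2))
    return {v.name: v.modules for v in variants}


if __name__ == "__main__":
    for name, mods in all_variants().items():
        print(name, sorted(mods))
```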

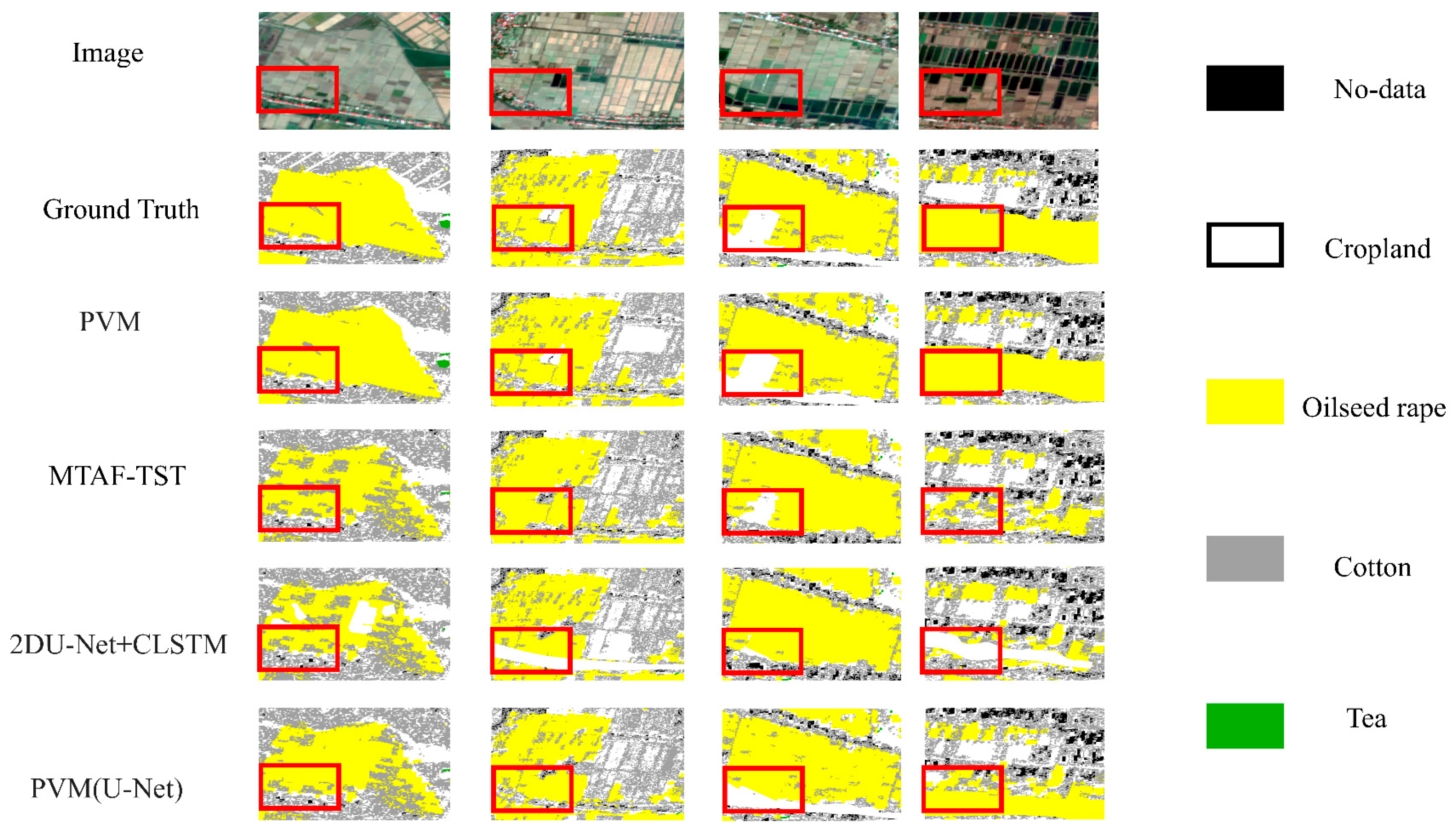

4.3. Comparative Study

- (1) MTAF-TST: a spatiotemporal Transformer semantic segmentation framework based on multi-source temporal attention fusion (MTAF-TST) that uses optical and SAR time-series data for land-cover semantic segmentation. Its Transformer-based spatiotemporal feature extraction module mines long-range temporal dependencies and high-level spatial semantic information, which makes it well suited to multi-class semantic segmentation of large-area time-series data.

- (2) 2DU-Net+CLSTM: this model combines a 2D U-Net for image segmentation with a convolutional LSTM (CLSTM) for time-series processing to perform pixel-level crop-type classification from remote-sensing images. By capturing both spatial features and temporal dependencies, it provides timely information on crop status.

- (3) U-Net Decoder: the U-Net decoder upsamples with transposed convolutions (deconvolution) and uses skip connections, recovering spatial resolution by concatenating feature maps from the encoder with the upsampled feature maps of the decoder. It is suited to multi-class segmentation tasks, producing a class prediction for every pixel.
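MTAF-TST's internal layers are not detailed here. As a generic, hypothetical illustration of temporal attention fusion of optical and SAR series, the sketch scores each acquisition date with a scaled dot product against a query vector, softmax-normalizes the scores over time, pools each source separately and concatenates the results. The query vectors, the pool-then-concatenate order and all function names are assumptions, not the MTAF-TST design.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention_pool(series: Sequence[Vector], query: Vector) -> Vector:
    """Collapse a (T, D) time series into one D-vector.

    Each date is scored by a scaled dot product with `query` (a learned
    parameter in a real model, a fixed input here); the scores are
    softmax-normalized over time and used to average the dates.
    """
    d = len(query)
    scores = [sum(q * x for q, x in zip(query, step)) / math.sqrt(d) for step in series]
    weights = softmax(scores)
    return [sum(w * step[i] for w, step in zip(weights, series)) for i in range(d)]


def fuse_optical_sar(optical: Sequence[Vector], sar: Sequence[Vector],
                     q_optical: Vector, q_sar: Vector) -> Vector:
    """Hypothetical fusion: pool each source over time, then concatenate."""
    return temporal_attention_pool(optical, q_optical) + temporal_attention_pool(sar, q_sar)


if __name__ == "__main__":
    optical = [[0.2, 0.1], [0.6, 0.3], [0.4, 0.5]]  # e.g. two optical bands per date
    sar = [[-12.0], [-9.5]]                         # e.g. one backscatter value per date
    print(fuse_optical_sar(optical, sar, [1.0, 0.0], [0.1]))
```

Pooling each source on its own lets the optical and SAR series have different numbers of dates, as in the usage example, which is common when cloud cover removes optical acquisitions.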
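The CLSTM in 2DU-Net+CLSTM is a convolutional LSTM: the LSTM gates are computed with convolutions, so a hidden-state map is carried across acquisition dates. The sketch is a deliberately reduced, hypothetical version with one channel, 1×1 kernels and no peephole connections, in which case every pixel runs the same LSTM update independently. The baseline's actual kernel sizes and channel counts are not given here; with larger kernels, neighboring pixels would also interact.

```python
import math
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]
# Per-gate parameters: (input weight, hidden weight, bias), keys "i", "f", "o", "g".
GateParams = Dict[str, Tuple[float, float, float]]


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def _pre(w: GateParams, gate: str, x: float, h: float) -> float:
    wx, wh, b = w[gate]
    return wx * x + wh * h + b


def convlstm1x1_step(x: Grid, h: Grid, c: Grid, w: GateParams) -> Tuple[Grid, Grid]:
    """One single-channel ConvLSTM step with 1x1 kernels and no peepholes.

    With 1x1 kernels every pixel runs the same LSTM update independently,
    sharing the weights in `w` across the whole image.
    """
    rows, cols = len(x), len(x[0])
    h_new = [[0.0] * cols for _ in range(rows)]
    c_new = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for k in range(cols):
            xv, hv = x[r][k], h[r][k]
            i = _sigmoid(_pre(w, "i", xv, hv))   # input gate
            f = _sigmoid(_pre(w, "f", xv, hv))   # forget gate
            o = _sigmoid(_pre(w, "o", xv, hv))   # output gate
            g = math.tanh(_pre(w, "g", xv, hv))  # candidate cell value
            c_new[r][k] = f * c[r][k] + i * g
            h_new[r][k] = o * math.tanh(c_new[r][k])
    return h_new, c_new


def run_convlstm1x1(frames: Sequence[Grid], w: GateParams) -> Grid:
    """Feed a time series of single-band images through the cell; return the last hidden state."""
    rows, cols = len(frames[0]), len(frames[0][0])
    h = [[0.0] * cols for _ in range(rows)]
    c = [[0.0] * cols for _ in range(rows)]
    for x in frames:
        h, c = convlstm1x1_step(x, h, c, w)
    return h
```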
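The upsampling and skip connection of the U-Net decoder can be sketched for single-channel maps as a 2×2, stride-2 transposed convolution followed by channel-wise concatenation with encoder features of matching size. The function names and the shared-kernel simplification are assumptions of this sketch, not the configuration used in the experiments.

```python
from typing import List

Grid = List[List[float]]


def transposed_conv2x2_s2(x: Grid, kernel: Grid) -> Grid:
    """Single-channel transposed convolution, 2x2 kernel, stride 2, no padding.

    Because the kernel size equals the stride, the output blocks do not
    overlap: input value x[i][j] is spread over the 2x2 block starting at
    (2i, 2j), scaled by the kernel, doubling height and width.
    """
    rows, cols = len(x), len(x[0])
    out = [[0.0] * (2 * cols) for _ in range(2 * rows)]
    for i in range(rows):
        for j in range(cols):
            for a in range(2):
                for b in range(2):
                    out[2 * i + a][2 * j + b] += x[i][j] * kernel[a][b]
    return out


def unet_decoder_step(decoder: List[Grid], skip: List[Grid], kernel: Grid) -> List[Grid]:
    """Upsample decoder channels, then concatenate the encoder skip channels.

    Simplification: every channel is upsampled with the same kernel (no
    cross-channel mixing); a real layer learns one kernel per input/output
    channel pair and is followed by further convolutions.
    """
    up = [transposed_conv2x2_s2(ch, kernel) for ch in decoder]
    h, w = len(up[0]), len(up[0][0])
    if any(len(ch) != h or len(ch[0]) != w for ch in skip):
        raise ValueError("skip features must match the upsampled spatial size")
    return up + skip  # channel-wise concatenation
```

With an all-ones kernel the transposed convolution reduces to nearest-neighbor upsampling; a learned kernel lets the network choose how each coarse value is redistributed over its 2×2 block.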

5. Results

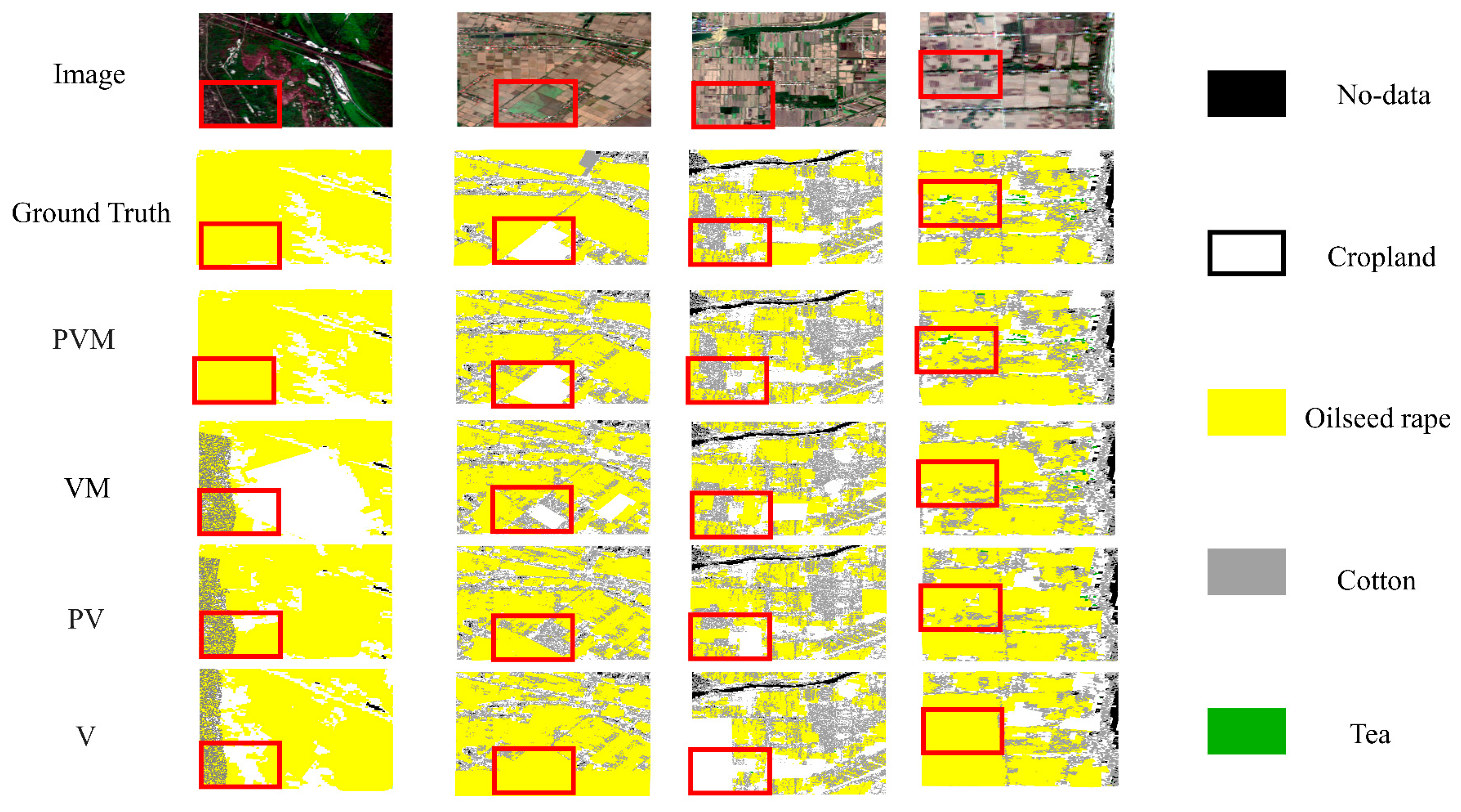

5.1. Ablation Experiment Results

5.2. Comparison Experiment Results

6. Discussion

6.1. Rapid Changes in Spectral Features and Missing Temporal Data in Non-Food Crop Growth Stages

6.2. Environmental Heterogeneity and Non-Food Crop Phenology Changes: Challenges and Prospects for Adaptive Models

6.3. Model Deployment Cost Challenges and Optimization Paths: Applications of Cross-Modal Learning and Lightweight Strategies

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lin, X.; Fu, H. Spatial-Temporal Evolution and Driving Forces of Cultivated Land Based on the PLUS Model: A Case Study of Haikou City, 1980–2020. Sustainability 2022, 14, 14284.

- Long, H.; Zhang, Y.; Ma, L.; Tu, S. Land Use Transitions: Progress, Challenges and Prospects. Land 2021, 10, 903.

- Wang, X.; Song, X.; Wang, Y.; Xu, H.; Ma, Z. Understanding the Distribution Patterns and Underlying Mechanisms of Non-Grain Use of Cultivated Land in Rural China. J. Rural Stud. 2024, 106, 103223.

- Nduati, E.; Sofue, Y.; Matniyaz, A.; Park, J.G.; Yang, W.; Kondoh, A. Cropland Mapping Using Fusion of Multi-Sensor Data in a Complex Urban/Peri-Urban Area. Remote Sens. 2019, 11, 207.

- Li, Q.; Wang, C.; Zhang, B.; Lu, L. Object-Based Crop Classification with Landsat-MODIS Enhanced Time-Series Data. Remote Sens. 2015, 7, 16091–16107.

- Zhang, H.; Yuan, H.; Du, W.; Lyu, X. Crop Identification Based on Multi-Temporal Active and Passive Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2022, 11, 388.

- Song, X.-P.; Huang, W.; Hansen, M.C.; Potapov, P. An Evaluation of Landsat, Sentinel-2, Sentinel-1 and MODIS Data for Crop Type Mapping. Sci. Remote Sens. 2021, 3, 100018.

- Yi, Z.; Jia, L.; Chen, Q. Crop Classification Using Multi-Temporal Sentinel-2 Data in the Shiyang River Basin of China. Remote Sens. 2020, 12, 4052.

- Huang, X.; Liu, J.; Zhu, W.; Atzberger, C.; Liu, Q. The Optimal Threshold and Vegetation Index Time Series for Retrieving Crop Phenology Based on a Modified Dynamic Threshold Method. Remote Sens. 2019, 11, 2725.

- Valero, S.; Arnaud, L.; Planells, M.; Ceschia, E. Synergy of Sentinel-1 and Sentinel-2 Imagery for Early Seasonal Agricultural Crop Mapping. Remote Sens. 2021, 13, 4891.

- Orynbaikyzy, A.; Gessner, U.; Mack, B.; Conrad, C. Crop Type Classification Using Fusion of Sentinel-1 and Sentinel-2 Data: Assessing the Impact of Feature Selection, Optical Data Availability, and Parcel Sizes on the Accuracies. Remote Sens. 2020, 12, 2779.

- Dobrinić, D.; Gašparović, M.; Medak, D. Sentinel-1 and 2 Time-Series for Vegetation Mapping Using Random Forest Classification: A Case Study of Northern Croatia. Remote Sens. 2021, 13, 2321.

- Li, Q.; Tian, J.; Tian, Q. Deep Learning Application for Crop Classification via Multi-Temporal Remote Sensing Images. Agriculture 2023, 13, 906.

- Gadiraju, K.K.; Vatsavai, R.R. Remote Sensing Based Crop Type Classification via Deep Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4699–4712.

- Feng, F.; Gao, M.; Liu, R.; Yao, S.; Yang, G. A Deep Learning Framework for Crop Mapping with Reconstructed Sentinel-2 Time Series Images. Comput. Electron. Agric. 2023, 213, 108227.

- Li, H.; Wang, G.; Dong, Z.; Wei, X.; Wu, M.; Song, H.; Amankwah, S.O.Y. Identifying Cotton Fields from Remote Sensing Images Using Multiple Deep Learning Networks. Agronomy 2021, 11, 174.

- Xu, J.; Zhu, Y.; Zhong, R.; Lin, Z.; Xu, J.; Jiang, H.; Huang, J.; Li, H.; Lin, T. DeepCropMapping: A Multi-Temporal Deep Learning Approach with Improved Spatial Generalizability for Dynamic Corn and Soybean Mapping. Remote Sens. Environ. 2020, 247, 111946.

- Yu, J.; Zhao, L.; Liu, Y.; Chang, Q.; Wang, N. Automatic Crop Type Mapping Based on Crop-Wise Indicative Features. Int. J. Appl. Earth Obs. Geoinf. 2025, 139, 104554.

- Qiu, B.; Wu, F.; Hu, X.; Yang, P.; Wu, W.; Chen, J.; Chen, X.; He, L.; Joe, B.; Tubiello, F.N.; et al. A Robust Framework for Mapping Complex Cropping Patterns: The First National-Scale 10 m Map with 10 Crops in China Using Sentinel 1/2 Images. ISPRS J. Photogramm. Remote Sens. 2025, 224, 361–381.

- Nie, H.; Lin, Y.; Luo, W.; Liu, G. Rice Cropping Sequence Mapping in the Tropical Monsoon Zone via Agronomic Knowledge Graphs Integrating Phenology and Remote Sensing. Ecol. Inform. 2025, 87, 103075.

- Jiang, J.; Zhang, J.; Wang, X.; Zhang, S.; Kong, D.; Wang, X.; Ali, S.; Ullah, H. Fine Extraction of Multi-Crop Planting Area Based on Deep Learning with Sentinel-2 Time-Series Data. Environ. Sci. Pollut. Res. 2025, 32, 11931–11949.

- Tian, Q.; Jiang, H.; Zhong, R.; Xiong, X.; Wang, X.; Huang, J.; Du, Z.; Lin, T. PSeqNet: A Crop Phenology Monitoring Model Accounting for Phenological Associations. ISPRS J. Photogramm. Remote Sens. 2025, 225, 257–274.

- Belgiu, M.; Csillik, O. Sentinel-2 Cropland Mapping Using Pixel-Based and Object-Based Time-Weighted Dynamic Time Warping Analysis. Remote Sens. Environ. 2018, 204, 509–523.

- Available online: www.moa.gov.cn (accessed on 6 July 2025).

- Jia, D.; Gao, P.; Cheng, C.; Ye, S. Multiple-Feature-Driven Co-Training Method for Crop Mapping Based on Remote Sensing Time Series Imagery. Int. J. Remote Sens. 2020, 41, 8096–8120.

- Lee, J.S.; Jurkevich, L.; Dewaele, P.; Wambacq, P.; Oosterlinck, A. Speckle Filtering of Synthetic Aperture Radar Images: A Review. Remote Sens. Rev. 1994, 8, 313–340.

- Huang, S.; Liu, D.; Gao, G.; Guo, X. A Novel Method for Speckle Noise Reduction and Ship Target Detection in SAR Images. Pattern Recognit. 2009, 42, 1533–1542.

- Li, X.; Zhu, W.; Xie, Z.; Zhan, P.; Huang, X.; Sun, L.; Duan, Z. Assessing the Effects of Time Interpolation of NDVI Composites on Phenology Trend Estimation. Remote Sens. 2021, 13, 5018.

- Cai, Z.; Jönsson, P.; Jin, H.; Eklundh, L. Performance of Smoothing Methods for Reconstructing NDVI Time-Series and Estimating Vegetation Phenology from MODIS Data. Remote Sens. 2017, 9, 1271.

- Yang, J.; Huang, X. 30 m Annual Land Cover and Its Dynamics in China from 1990 to 2019. Earth Syst. Sci. Data Discuss. 2021, 13, 3907–3925.

- Yan, J.; Liu, J.; Liang, D.; Wang, Y.; Li, J.; Wang, L. Semantic Segmentation of Land Cover in Urban Areas by Fusing Multisource Satellite Image Time Series. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4410315.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Zhou, S.; Nie, D.; Adeli, E.; Yin, J.; Lian, J.; Shen, D. High-Resolution Encoder–Decoder Networks for Low-Contrast Medical Image Segmentation. IEEE Trans. Image Process. 2019, 29, 461–475.

- Xu, Z.; Zhang, W.; Zhang, T.; Yang, Z.; Li, J. Efficient Transformer for Remote Sensing Image Segmentation. Remote Sens. 2021, 13, 3585.

- Jadon, S. A Survey of Loss Functions for Semantic Segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Viña del Mar, Chile, 27–29 October 2020; pp. 1–7.

- Jacques, A.A.B.; Diallo, A.B.; Lord, E. The Canadian Cropland Dataset: A New Land Cover Dataset for Multitemporal Deep Learning Classification in Agriculture. arXiv 2023, arXiv:2306.00114.

- Rustowicz, R.M.; Cheong, R.; Wang, L.; Ermon, S.; Burke, M.; Lobell, D. Semantic Segmentation of Crop Type in Africa: A Novel Dataset and Analysis of Deep Learning Methods. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 75–82.

- Gao, F.; Zhang, X. Mapping Crop Phenology in Near Real-Time Using Satellite Remote Sensing: Challenges and Opportunities. J. Remote Sens. 2021, 2021, 8379391.

- Kasampalis, D.A.; Alexandridis, T.K.; Deva, C.; Challinor, A.; Moshou, D.; Zalidis, G. Contribution of Remote Sensing on Crop Models: A Review. J. Imaging 2018, 4, 52.

- Zhao, Y.; Potgieter, A.B.; Zhang, M.; Wu, B.; Hammer, G.L. Predicting Wheat Yield at the Field Scale by Combining High-Resolution Sentinel-2 Satellite Imagery and Crop Modelling. Remote Sens. 2020, 12, 1024.

- Roßberg, T.; Schmitt, M. Dense NDVI Time Series by Fusion of Optical and SAR-Derived Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7748–7758.

- Belgiu, M.; Stein, A. Spatiotemporal Image Fusion in Remote Sensing. Remote Sens. 2019, 11, 818.

- Bai, B.; Tan, Y.; Donchyts, G.; Haag, A.; Weerts, A. A Simple Spatio–Temporal Data Fusion Method Based on Linear Regression Coefficient Compensation. Remote Sens. 2020, 12, 3900.

- Bellvert, J.; Jofre-Čekalović, C.; Pelechá, A.; Mata, M.; Nieto, H. Feasibility of Using the Two-Source Energy Balance Model (TSEB) with Sentinel-2 and Sentinel-3 Images to Analyze the Spatio-Temporal Variability of Vine Water Status in a Vineyard. Remote Sens. 2020, 12, 2299.

- Bregaglio, S.; Ginaldi, F.; Raparelli, E.; Fila, G.; Bajocco, S. Improving Crop Yield Prediction Accuracy by Embedding Phenological Heterogeneity into Model Parameter Sets. Agric. Syst. 2023, 209, 103666.

- Ceglar, A.; van der Wijngaart, R.; de Wit, A.; Lecerf, R.; Boogaard, H.; Seguini, L.; van den Berg, M.; Toreti, A.; Zampieri, M.; Fumagalli, D.; et al. Improving WOFOST Model to Simulate Winter Wheat Phenology in Europe: Evaluation and Effects on Yield. Agric. Syst. 2019, 168, 168–180.

- Arias, M.; Campo-Bescós, M.Á.; Álvarez-Mozos, J. Crop Classification Based on Temporal Signatures of Sentinel-1 Observations over Navarre Province, Spain. Remote Sens. 2020, 12, 278.

- Chen, Y.; Hu, J.; Cai, Z.; Yang, J.; Zhou, W.; Hu, Q.; Wang, C.; You, L.; Xu, B. A Phenology-Based Vegetation Index for Improving Ratoon Rice Mapping Using Harmonized Landsat and Sentinel-2 Data. J. Integr. Agric. 2024, 23, 1164–1178.

- Liu, J.; Zhu, W.; Atzberger, C.; Zhao, A.; Pan, Y.; Huang, X. A Phenology-Based Method to Map Cropping Patterns under a Wheat-Maize Rotation Using Remotely Sensed Time-Series Data. Remote Sens. 2018, 10, 1203.

| Label | Type | Pixels |
|---|---|---|
| 1 | Cropland | 564,117,840 |
| 2 | Oilseed rape | 44,745,893 |
| 3 | Tea | 30,280,657 |
| 4 | Cotton | 2,537,294 |

Object Pixels Ratio

| Dataset | HN-NG Set Object Pixels (Proportion) | CCD Set Object Pixels (Proportion) |
|---|---|---|
| Training | 46,355,337 (8.21%) | 5,571,000 (4.78%) |
| Validation | 15,451,779 (2.74%) | 1,194,480 (1.02%) |
| Test | 15,451,779 (2.74%) | 1,202,040 (1.07%) |
| Total | 77,258,895 (13.69%) | 7,967,520 (6.83%) |
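The object-pixel counts in the table above can be checked directly. This small consistency check (not part of the paper's pipeline; the names are illustrative) confirms that each dataset's splits sum to the reported total, that HN-NG is divided exactly 60/20/20 by object pixels, and that CCD is divided roughly 70/15/15.

```python
from typing import Dict

# Object-pixel counts copied from the table above.
SPLITS: Dict[str, Dict[str, int]] = {
    "HN-NG": {"Training": 46_355_337, "Validation": 15_451_779, "Test": 15_451_779},
    "CCD": {"Training": 5_571_000, "Validation": 1_194_480, "Test": 1_202_040},
}
TOTALS: Dict[str, int] = {"HN-NG": 77_258_895, "CCD": 7_967_520}


def split_shares(dataset: str) -> Dict[str, float]:
    """Fraction of a dataset's object pixels in each split, after checking the sum."""
    counts = SPLITS[dataset]
    total = TOTALS[dataset]
    if sum(counts.values()) != total:
        raise ValueError(f"{dataset}: splits do not sum to the reported total")
    return {split: n / total for split, n in counts.items()}


if __name__ == "__main__":
    for name in SPLITS:
        print(name, {k: round(v, 4) for k, v in split_shares(name).items()})
```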

| Methods (HN-NG Set) | Precision (%) | Recall (%) | F1 Score (%) | IoU (%) | mPA (%) |
|---|---|---|---|---|---|
| PVM | 82.16 | 80.04 | 74.84 | 61.38 | 70.46 |
| VM | 80.15 | 76.84 | 70.19 | 55.22 | 67.73 |
| PV | 76.47 | 74.18 | 66.27 | 52.52 | 63.33 |
| V | 72.36 | 65.69 | 60.71 | 45.28 | 58.04 |

| Methods (CCD Set) | Precision (%) | Recall (%) | F1 Score (%) | IoU (%) | mPA (%) |
|---|---|---|---|---|---|
| PVM | 79.16 | 75.65 | 71.93 | 55.94 | 66.14 |
| VM | 72.38 | 70.13 | 66.99 | 52.37 | 61.29 |
| PV | 69.71 | 66.74 | 62.54 | 47.95 | 60.11 |
| V | 63.34 | 60.58 | 56.82 | 41.28 | 54.78 |
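Reading the ablation tables as module contributions requires mapping row names to the experiments in Section 4.2. Assuming P = PAM, V = VIT and M = MTAF (so VM lacks PAM, PV lacks MTAF and V keeps only VIT), the IoU lost by each removal can be computed from the reported values; the dictionary and function names are illustrative.

```python
from typing import Dict

# IoU (%) copied from the ablation tables above.
ABLATION_IOU: Dict[str, Dict[str, float]] = {
    "HN-NG": {"PVM": 61.38, "VM": 55.22, "PV": 52.52, "V": 45.28},
    "CCD": {"PVM": 55.94, "VM": 52.37, "PV": 47.95, "V": 41.28},
}
# Assumed reading of the variant names (P = PAM, V = VIT, M = MTAF).
REMOVED: Dict[str, str] = {"VM": "PAM", "PV": "MTAF", "V": "PAM and MTAF"}


def iou_drops(dataset: str) -> Dict[str, float]:
    """IoU lost, in percentage points, relative to the full PVM model."""
    scores = ABLATION_IOU[dataset]
    return {REMOVED[v]: round(scores["PVM"] - scores[v], 2) for v in REMOVED}


if __name__ == "__main__":
    for ds in ABLATION_IOU:
        print(ds, iou_drops(ds))
```

Under this reading, removing MTAF costs more IoU than removing PAM on both datasets: 8.86 versus 6.16 points on HN-NG, and 7.99 versus 3.57 points on CCD.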

| Methods (HN-NG Set) | Precision (%) | Recall (%) | F1 Score (%) | IoU (%) | mPA (%) |
|---|---|---|---|---|---|
| PVM | 82.16 | 80.04 | 74.84 | 61.38 | 70.46 |
| MTAF-TST | 80.25 | 77.94 | 72.68 | 58.44 | 67.82 |
| 2DU-Net+CLSTM | 77.83 | 74.88 | 69.36 | 54.79 | 65.40 |
| PVM (U-Net) | 79.66 | 77.12 | 71.25 | 56.87 | 68.23 |

| Methods (CCD Set) | Precision (%) | Recall (%) | F1 Score (%) | IoU (%) | mPA (%) |
|---|---|---|---|---|---|
| PVM | 79.16 | 75.65 | 71.93 | 55.94 | 66.14 |
| MTAF-TST | 77.06 | 75.28 | 69.14 | 56.15 | 65.04 |
| 2DU-Net+CLSTM | 74.42 | 70.76 | 66.55 | 51.83 | 62.87 |
| PVM (U-Net) | 76.38 | 74.19 | 68.24 | 54.26 | 64.78 |
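A quick way to read the comparison tables is to find the best method for each metric. The values below are copied from the two comparison tables; the helper function is illustrative.

```python
from typing import Dict, Tuple

METRICS = ("Precision", "Recall", "F1 Score", "IoU", "mPA")

# Comparison results (%) in METRICS order, copied from the tables above.
RESULTS: Dict[str, Dict[str, Tuple[float, ...]]] = {
    "HN-NG": {
        "PVM": (82.16, 80.04, 74.84, 61.38, 70.46),
        "MTAF-TST": (80.25, 77.94, 72.68, 58.44, 67.82),
        "2DU-Net+CLSTM": (77.83, 74.88, 69.36, 54.79, 65.40),
        "PVM (U-Net)": (79.66, 77.12, 71.25, 56.87, 68.23),
    },
    "CCD": {
        "PVM": (79.16, 75.65, 71.93, 55.94, 66.14),
        "MTAF-TST": (77.06, 75.28, 69.14, 56.15, 65.04),
        "2DU-Net+CLSTM": (74.42, 70.76, 66.55, 51.83, 62.87),
        "PVM (U-Net)": (76.38, 74.19, 68.24, 54.26, 64.78),
    },
}


def best_per_metric(dataset: str) -> Dict[str, str]:
    """Name of the highest-scoring method for each metric."""
    table = RESULTS[dataset]
    return {
        metric: max(table, key=lambda method, i=i: table[method][i])
        for i, metric in enumerate(METRICS)
    }


if __name__ == "__main__":
    for ds in RESULTS:
        print(ds, best_per_metric(ds))
```

PVM leads every metric on HN-NG and every CCD metric except IoU, where MTAF-TST scores 56.15% against 55.94% for PVM.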

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guan, X.; Liu, M.; Cao, S.; Jiang, J. Phenology-Aware Transformer for Semantic Segmentation of Non-Food Crops from Multi-Source Remote Sensing Time Series. Remote Sens. 2025, 17, 2346. https://doi.org/10.3390/rs17142346
