TBFH: A Total-Building-Focused Hybrid Dataset for Remote Sensing Image Building Detection

Abstract

1. Introduction

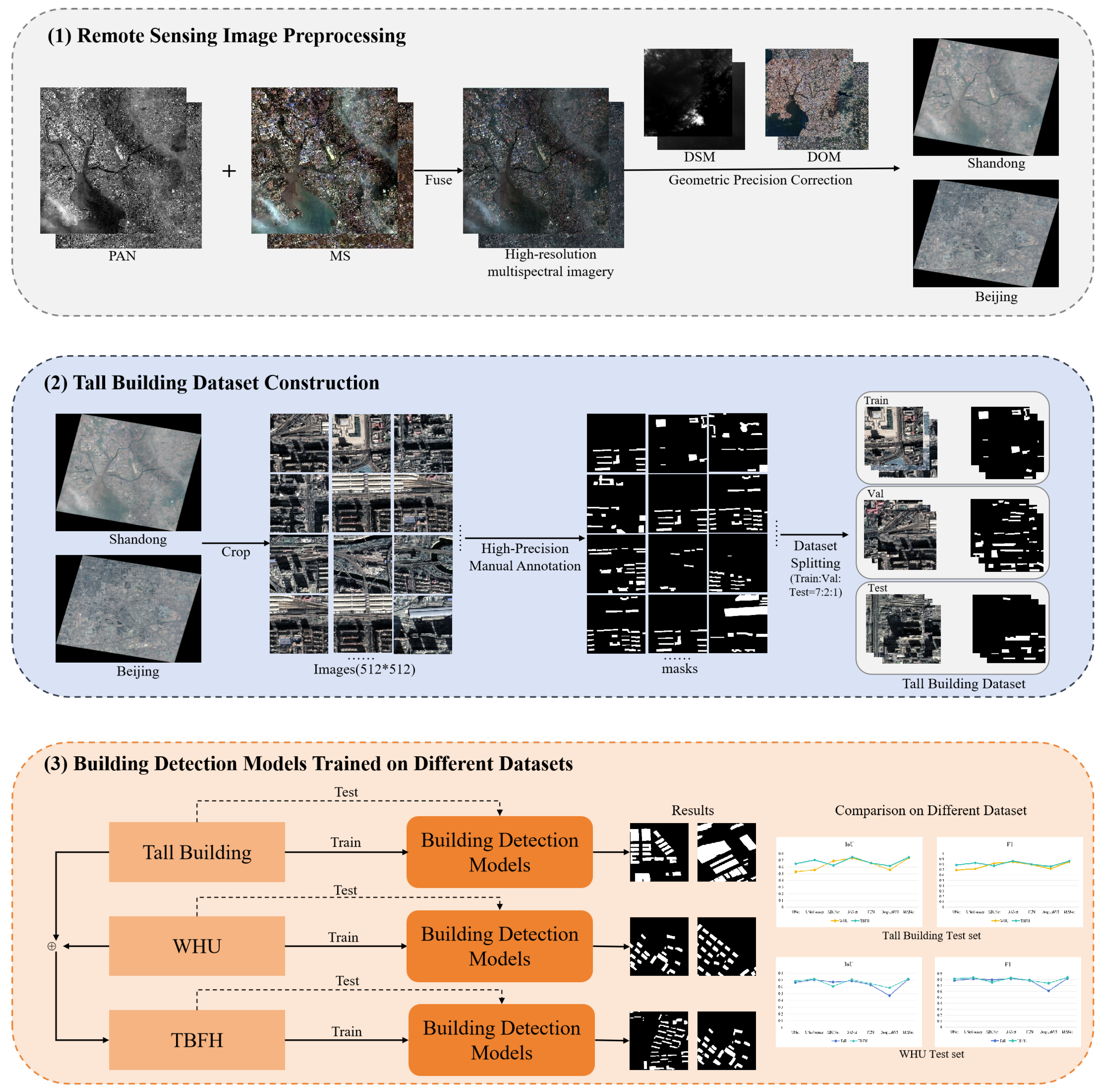

1.1. Background and Motivation

1.2. Research Objectives

- Construction of the TBFH dataset: We construct the TBFH dataset by combining our tall-building annotations with the WHU Building dataset. This increases data diversity and enables more robust training across varied urban environments.

- Keypoint Topological Consistency (KTC) metric: We propose the KTC metric to quantitatively evaluate the structural integrity and shape fidelity of building segmentation results, offering a complementary assessment to conventional pixel-wise metrics.

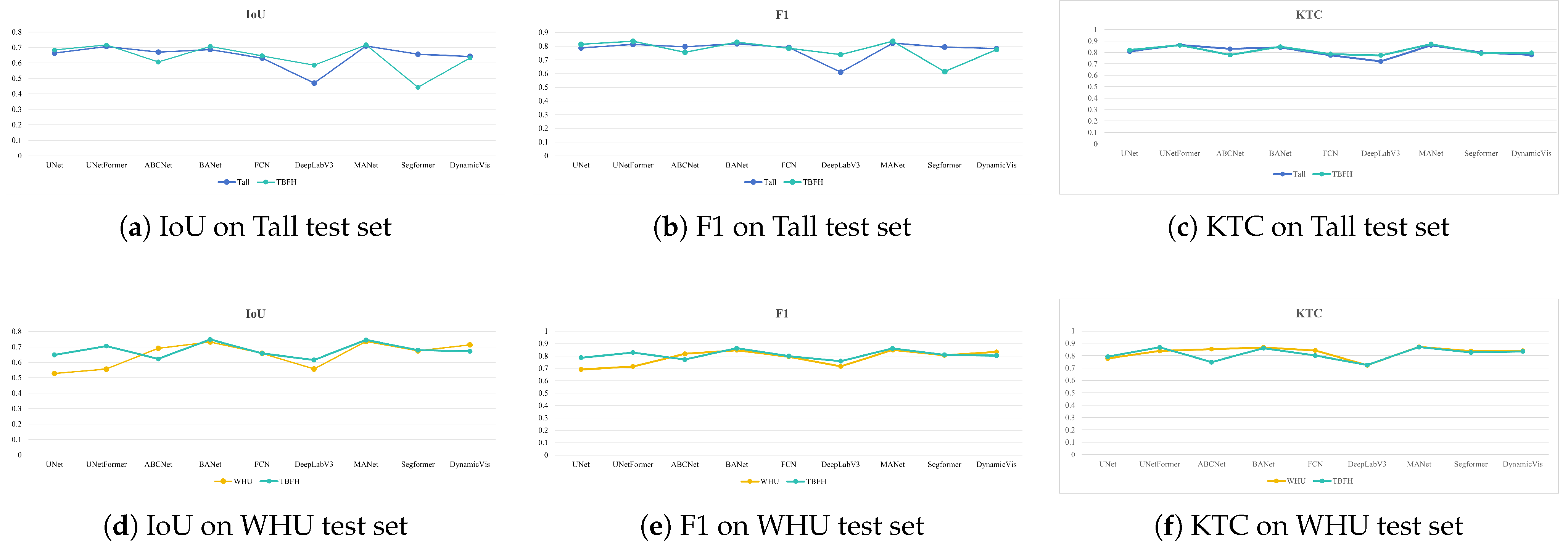

- Empirical demonstration of performance gains: Our experiments show that models trained on TBFH achieve significant improvements in IoU, F1 score, Recall, and KTC. TBFH better captures the geometry of tall buildings and reveals valuable insights for urban building extraction.

2. Related Work

2.1. Building-Extraction Methods

2.2. Dataset Limitations

2.3. Hybrid Training Strategies

2.4. Tall-Building-Extraction Challenges

3. Methodology

3.1. Dataset

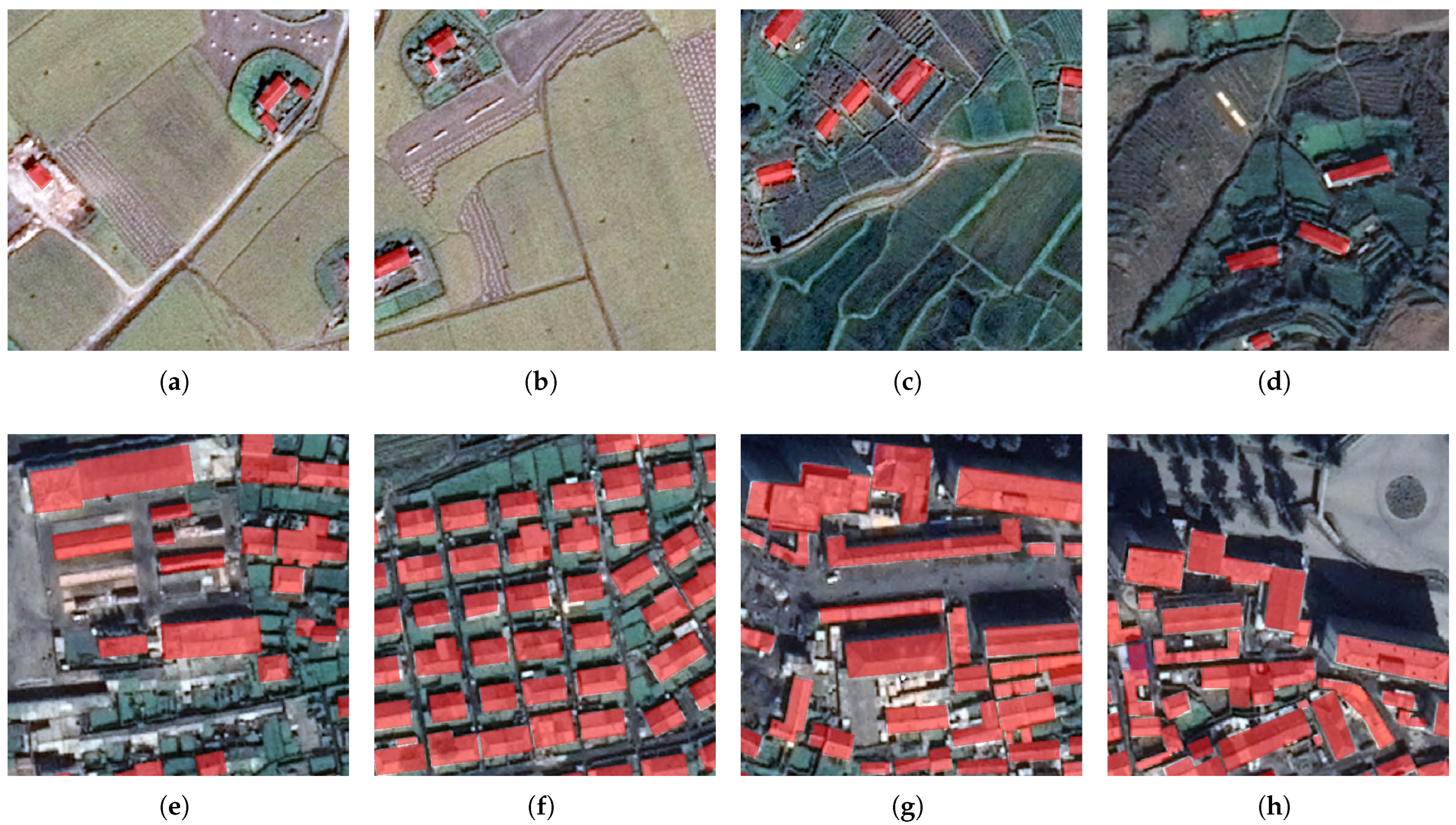

3.1.1. WHU Building Dataset

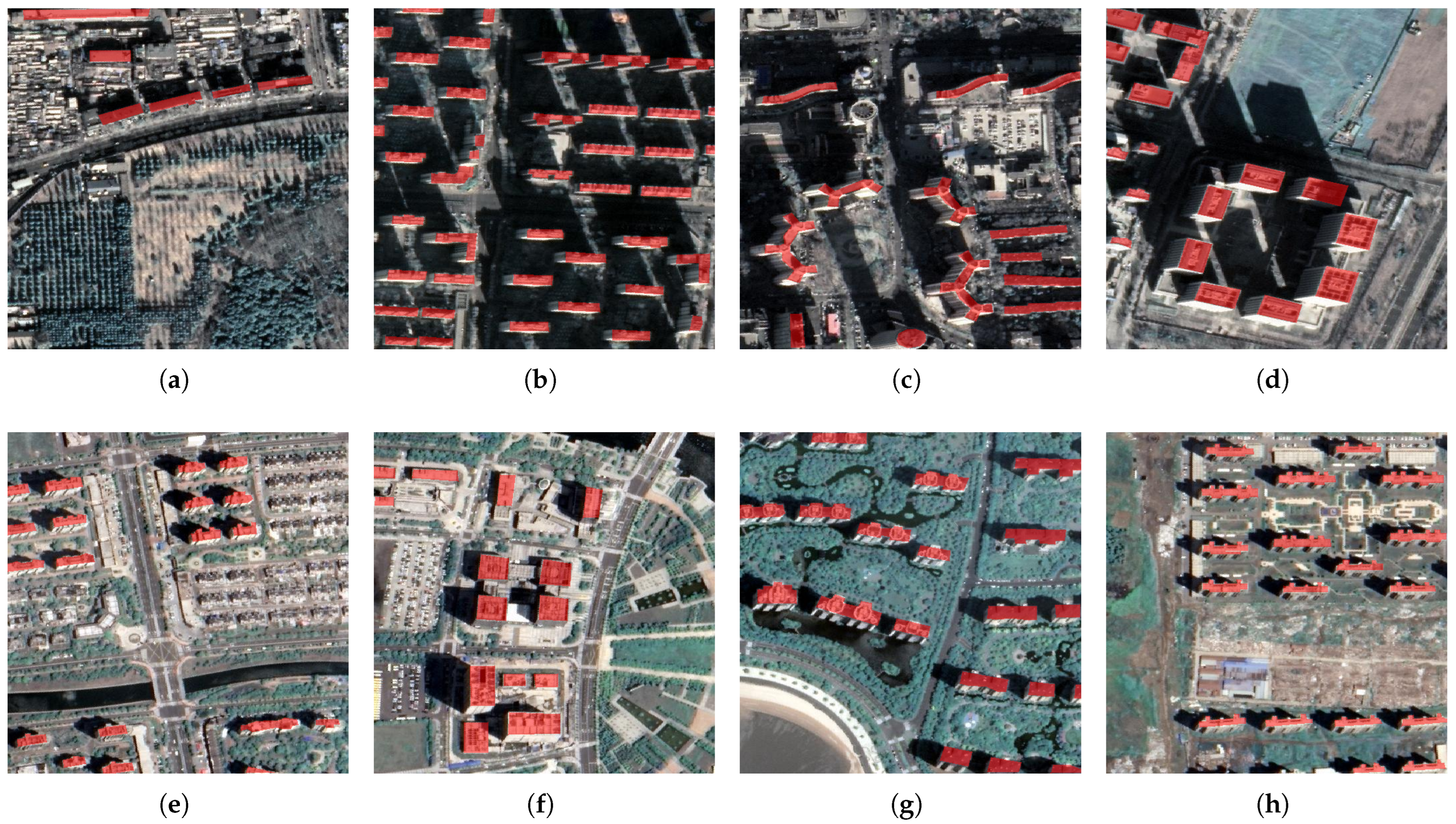

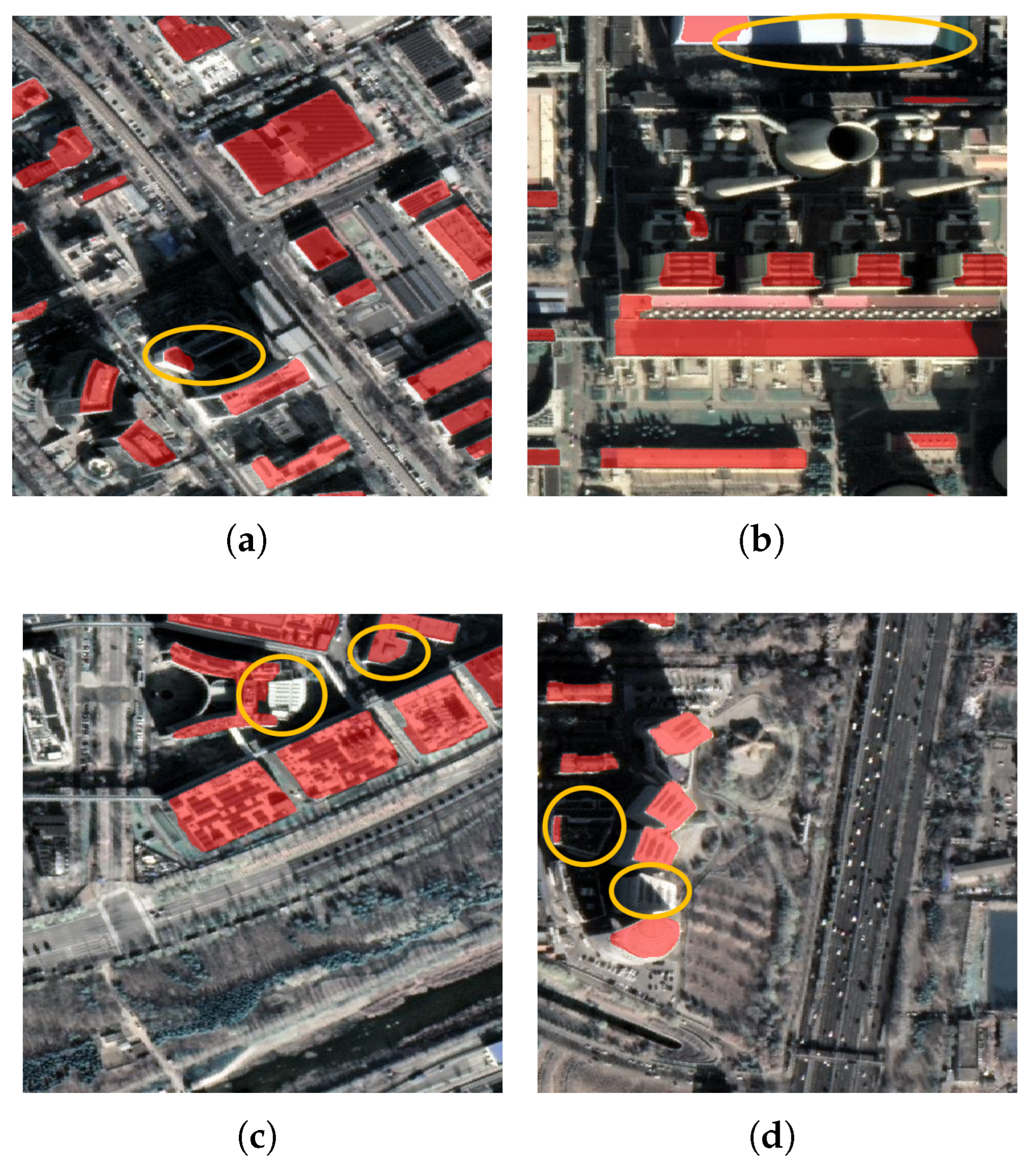

3.1.2. Tall Building Dataset

- Height exceeding 50 m;

- More than 15 floors;

- A building footprint area greater than 300 m² combined with noticeable vertical structures visible in the imagery (e.g., clearly visible façades, cast shadows, rooftop details).
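As a rough illustration, the criteria listed above can be written as a small screening predicate. How the three criteria combine is not stated in the list itself, so this sketch assumes that meeting any one of them is sufficient. The `Building` record, its field names, and the `has_visible_vertical_structure` flag are hypothetical stand-ins for whatever attributes an annotator has available.

```python
from dataclasses import dataclass


@dataclass
class Building:
    # Hypothetical record; field names are illustrative, not taken from the dataset.
    height_m: float
    floors: int
    footprint_m2: float
    has_visible_vertical_structure: bool  # facades, cast shadows, rooftop details


def tall_building_criteria(b: Building) -> dict:
    """Evaluate each listed criterion separately."""
    return {
        "height": b.height_m > 50,
        "floors": b.floors > 15,
        "footprint_and_vertical": (
            b.footprint_m2 > 300 and b.has_visible_vertical_structure
        ),
    }


def is_tall_building(b: Building) -> bool:
    # Assumption: satisfying any single criterion qualifies the building.
    return any(tall_building_criteria(b).values())


print(is_tall_building(Building(60.0, 10, 200.0, False)))
print(is_tall_building(Building(30.0, 8, 500.0, False)))
```

Returning the per-criterion dictionary keeps the individual checks inspectable, so the combination rule can be changed without touching the thresholds.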

3.2. Dataset Fusion Strategy

3.3. Building-Detection Models

3.3.1. U-Net

3.3.2. UNetFormer

3.3.3. ABCNet

3.3.4. BANet

3.3.5. FCN

3.3.6. DeepLabV3

3.3.7. MANet

3.3.8. SegFormer

3.3.9. DynamicVis

3.4. Performance Evaluation

- The first component measures the similarity in keypoint quantity between the predicted and ground-truth building outlines.

- The second component compares the structural adjacency of keypoints by constructing undirected cyclic graphs and computing the difference between their adjacency matrices. The two adjacency matrices are brought to the same size by truncation or padding, and $\|\cdot\|_1$ denotes the matrix 1-norm.

- The third component evaluates the spatial consistency of matched keypoint pairs using a nearest-neighbor strategy: each predicted keypoint is paired with its closest ground-truth keypoint, K is the number of matched pairs, and D is the diagonal length of the union bounding box, used for scale normalization. A small constant (e.g., $1 \times 10^{-6}$) is added to prevent division by zero.
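The three components described above can be sketched in a few lines of plain Python. This is one plausible instantiation under stated assumptions, not the paper's exact formulation: the count score is taken as the ratio of the smaller to the larger keypoint count, the topology score normalizes the 1-norm of the adjacency difference by the sum of the two individual norms, matrices are zero-padded (not truncated), and every predicted keypoint counts as one matched pair. The function names are hypothetical, and how the three scores are combined into a single KTC value is left open.

```python
import math

EPS = 1e-6  # small constant to avoid division by zero


def cycle_adjacency(n, size):
    """Adjacency matrix of an undirected cycle on n keypoints, zero-padded to size x size."""
    adj = [[0.0] * size for _ in range(size)]
    if n >= 2:
        for i in range(n):
            j = (i + 1) % n
            adj[i][j] = adj[j][i] = 1.0
    return adj


def matrix_one_norm(m):
    """Induced matrix 1-norm: the largest absolute column sum."""
    if not m:
        return 0.0
    return max(sum(abs(row[c]) for row in m) for c in range(len(m[0])))


def ktc_components(pred, gt):
    """Return (count, topology, position) scores for two ordered lists of (x, y) keypoints."""
    n_p, n_g = len(pred), len(gt)
    if n_p == 0 or n_g == 0:
        return 0.0, 0.0, 0.0

    # 1) Keypoint quantity (assumed form: smaller count over larger count).
    count = min(n_p, n_g) / max(n_p, n_g)

    # 2) Topology: 1-norm of the adjacency difference after padding,
    #    normalized by the sum of the individual norms (assumed normalization).
    size = max(n_p, n_g)
    a_p, a_g = cycle_adjacency(n_p, size), cycle_adjacency(n_g, size)
    diff = [[a_p[r][c] - a_g[r][c] for c in range(size)] for r in range(size)]
    scale = matrix_one_norm(a_p) + matrix_one_norm(a_g) + EPS
    topology = 1.0 - matrix_one_norm(diff) / scale

    # 3) Position: mean nearest-neighbor distance from each predicted keypoint
    #    to the ground truth, divided by the union bounding-box diagonal D.
    nearest = [min(math.dist(p, q) for q in gt) for p in pred]  # K = n_p pairs
    points = list(pred) + list(gt)
    xs = [pt[0] for pt in points]
    ys = [pt[1] for pt in points]
    diag = math.hypot(max(xs) - min(xs), max(ys) - min(ys)) + EPS
    position = 1.0 - (sum(nearest) / len(nearest)) / diag

    return count, topology, position


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
shifted = [(x + 0.1, y) for x, y in square]
print(ktc_components(square, square))
print(ktc_components(shifted, square))
```

Because the nearest-neighbor distance between two points inside the union bounding box can never exceed its diagonal, the position score stays within [0, 1] without clipping.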

4. Experiment

4.1. Experiment Setup

4.2. Dataset Preparation

4.3. Implementation Details

4.4. Comparison Methods

- Training on the Tall Building dataset: In this experiment, all the models were trained using only the proposed Tall Building dataset. This setup was intended to assess the performance of each model on a dataset specifically designed for tall-building extraction.

- Training on the WHU dataset: The second experiment involved training the UNetFormer model exclusively on the WHU dataset, which is a widely recognized benchmark in remote sensing tasks. This setup allowed for an evaluation of the model’s performance on a more general dataset that contains a variety of building types.

- Training on a mixed dataset: The final experiment combined both the Tall Building and WHU datasets into a mixed training set. The UNetFormer model was trained using this combined dataset to investigate the impact of using a more diverse dataset on the model’s performance and its generalization ability across different scenarios.
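The three configurations above differ only in which tiles enter the training pool. The following is a minimal sketch, assuming each dataset is available as a list of (image, mask) path pairs. The function name, the mode labels, and the file names are hypothetical, and any sampling or balancing between the two sources in the actual experiments is not specified here, so plain concatenation is assumed.

```python
import random


def build_training_pool(tall_tiles, whu_tiles, mode, seed=0):
    """Return a shuffled list of training tiles for one experimental setup."""
    if mode == "tall":
        pool = list(tall_tiles)
    elif mode == "whu":
        pool = list(whu_tiles)
    elif mode == "mixed":
        # Assumption: plain concatenation, with no re-weighting between sources.
        pool = list(tall_tiles) + list(whu_tiles)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    random.Random(seed).shuffle(pool)  # seeded for reproducible ordering
    return pool


# Illustrative placeholder paths only.
tall = [(f"tall/img_{i}.png", f"tall/mask_{i}.png") for i in range(3)]
whu = [(f"whu/img_{i}.png", f"whu/mask_{i}.png") for i in range(5)]

for mode in ("tall", "whu", "mixed"):
    print(mode, len(build_training_pool(tall, whu, mode)))
```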

5. Results

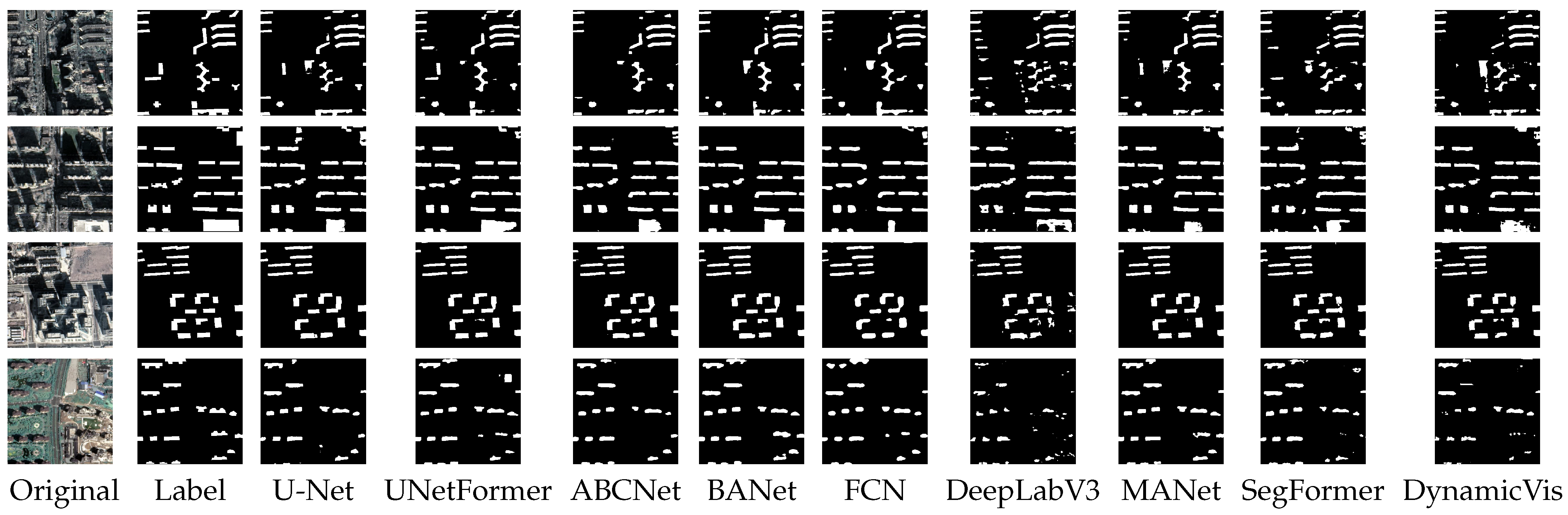

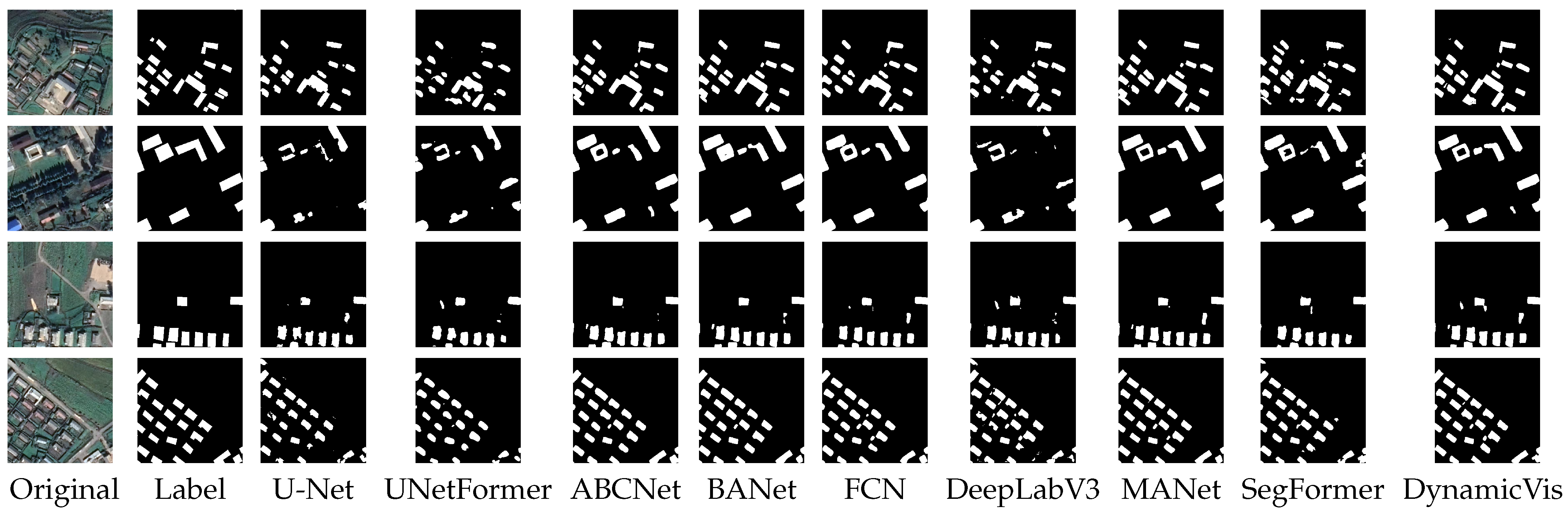

5.1. Performance Evaluation on Tall Building Dataset

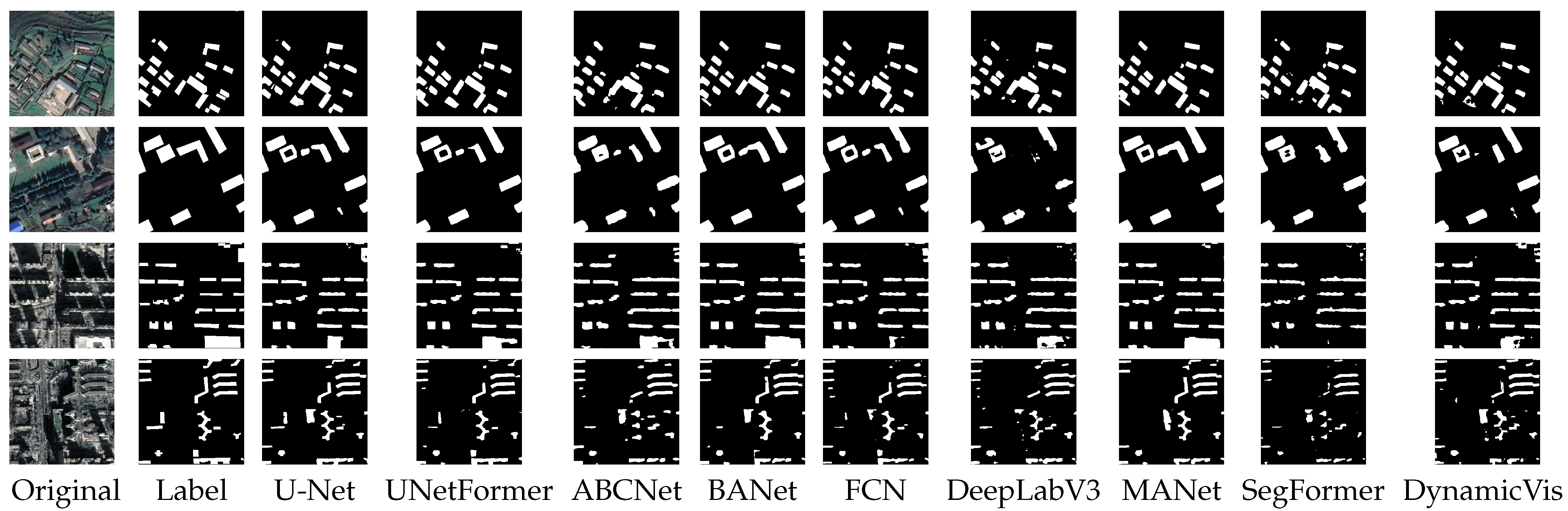

5.2. Performance Evaluation on WHU Dataset

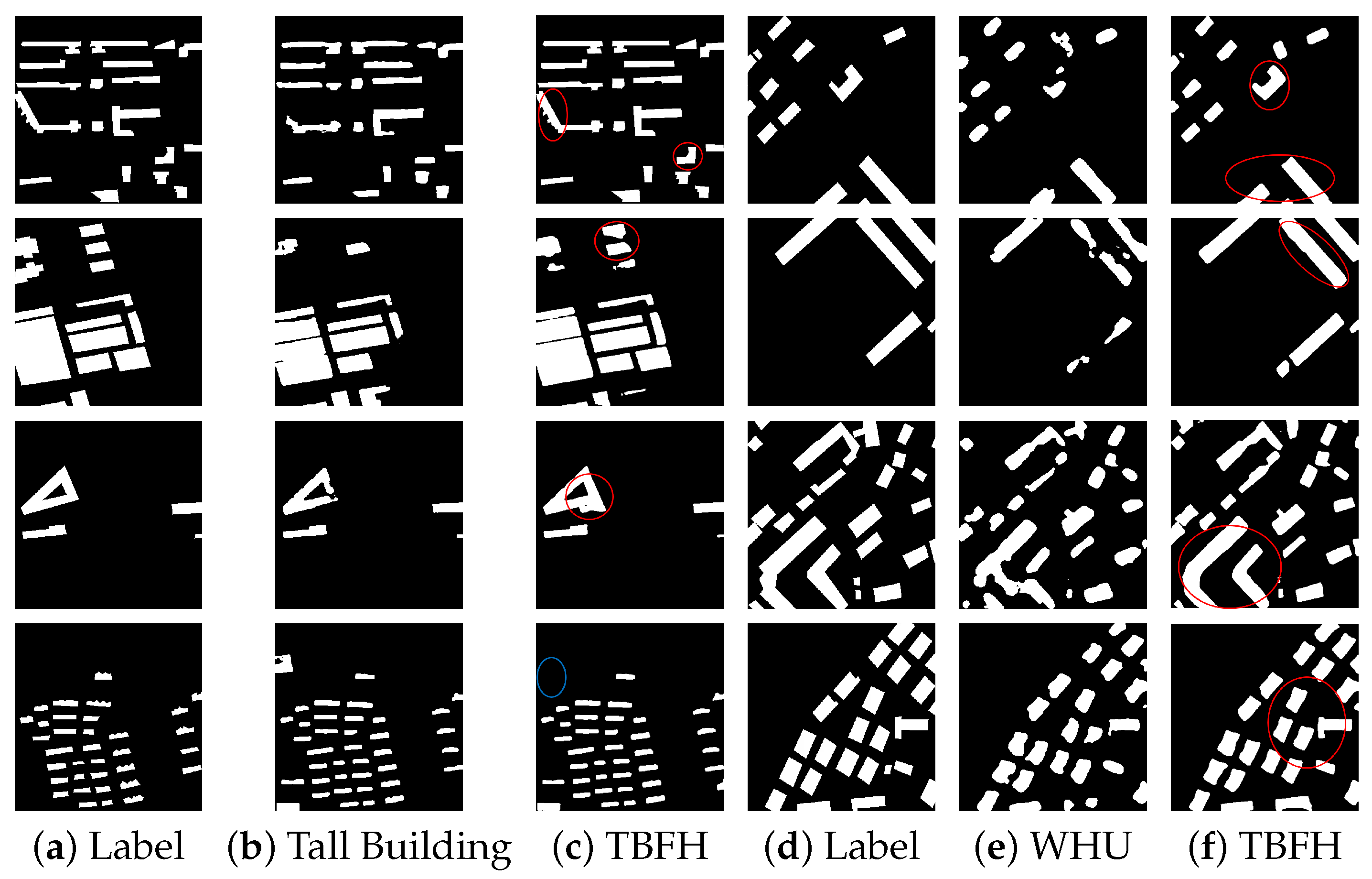

5.3. Performance Evaluation on TBFH Dataset

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Datasets | GSD (m) | Area (km²) | Sources | Tiles | Pixels | Label Format |
|---|---|---|---|---|---|---|
| Tall Building (ours) | 0.6 | 242 | satellite | 2520 | 512 × 512 | raster |
| WHU | 0.075/2.7 | 450/550 | aerial/satellite | 8189/17,388 | 512 × 512 | vector/raster |
| ISPRS | 0.05/0.09 | 2/11 | aerial | 24/16 | 6000 × 6000/11,500 × 7500 | raster |
| Massachusetts | 1.00 | 340 | aerial | 151 | 1500 × 1500 | raster |
| Inria | 0.3 | 405 * | aerial | 180 | 5000 × 5000 | raster |

| Model | IoU | Precision | Recall | F1 | KTC |
|---|---|---|---|---|---|
| U-Net | 0.6635 | 0.8413 | 0.7584 | 0.7977 | 0.8090 |
| UNetFormer | 0.7063 | 0.8759 | 0.7849 | 0.8279 | 0.8648 |
| ABCNet | 0.6695 | 0.8481 | 0.7608 | 0.8021 | 0.8302 |
| BANet | 0.6862 | 0.8683 | 0.7660 | 0.8139 | 0.8431 |
| FCN | 0.6307 | 0.8384 | 0.7180 | 0.7735 | 0.7749 |
| DeepLabV3 | 0.4692 | 0.6493 | 0.6284 | 0.6387 | 0.7214 |
| MANet | 0.7098 | 0.8759 | 0.7892 | 0.8303 | 0.8608 |
| SegFormer | 0.6565 | 0.8280 | 0.7602 | 0.7926 | 0.7992 |
| DynamicVis | 0.6426 | 0.8298 | 0.7402 | 0.7824 | 0.7784 |
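The pixel-wise columns in the table above are tied together by standard identities: F1 is the harmonic mean of precision and recall, and for a single foreground class IoU = F1 / (2 - F1). The short sketch below, with hypothetical function names, computes the four scores from true-positive, false-positive, and false-negative pixel counts and uses the identities to cross-check the first model row. It assumes the scores were accumulated over all test pixels and not averaged per image, which the table itself does not state.

```python
def pixel_metrics(tp, fp, fn):
    """Binary segmentation scores from pixel counts (building = positive class)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1):
    """IoU implied by F1 when both come from the same pixel counts."""
    return f1 / (2 - f1)


# Cross-check the first model row: Precision 0.8413, Recall 0.7584.
f1 = f1_from_pr(0.8413, 0.7584)
print(round(f1, 4), round(iou_from_f1(f1), 4))  # 0.7977 0.6635
```

Both derived values agree with the reported F1 and IoU for that row to four decimal places, which makes this a convenient consistency check when transcribing results.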

| Model | IoU | Precision | Recall | F1 | KTC |
|---|---|---|---|---|---|
| U-Net | 0.5286 | 0.8078 | 0.6047 | 0.6916 | 0.7773 |
| UNetFormer | 0.5562 | 0.8558 | 0.6137 | 0.7148 | 0.8385 |
| ABCNet | 0.6907 | 0.8441 | 0.7917 | 0.8171 | 0.8535 |
| BANet | 0.7329 | 0.8518 | 0.8400 | 0.8458 | 0.8675 |
| FCN | 0.6587 | 0.8845 | 0.7207 | 0.7942 | 0.8426 |
| DeepLabV3 | 0.5572 | 0.8454 | 0.6205 | 0.7157 | 0.7236 |
| MANet | 0.7365 | 0.8704 | 0.8272 | 0.8482 | 0.8714 |
| SegFormer | 0.6741 | 0.8089 | 0.8019 | 0.8054 | 0.8367 |
| DynamicVis | 0.7137 | 0.8594 | 0.8081 | 0.8330 | 0.8401 |

| Test Data | Tall Building | | | | | WHU | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Model | IoU | Precision | Recall | F1 | KTC | IoU | Precision | Recall | F1 | KTC |
| U-Net | 0.6841 | 0.8515 | 0.7767 | 0.8124 | 0.8202 | 0.6481 | 0.8648 | 0.7212 | 0.7865 | 0.7924 |
| UNetFormer | 0.7162 | 0.8787 | 0.7948 | 0.8346 | 0.8624 | 0.7056 | 0.8680 | 0.7904 | 0.8274 | 0.8684 |
| ABCNet | 0.6062 | 0.8824 | 0.6976 | 0.7549 | 0.7788 | 0.6227 | 0.8633 | 0.7087 | 0.7721 | 0.7479 |
| BANet | 0.7067 | 0.8657 | 0.7937 | 0.8281 | 0.8489 | 0.7481 | 0.8782 | 0.8477 | 0.8627 | 0.8614 |
| FCN | 0.6455 | 0.8619 | 0.7200 | 0.7846 | 0.7862 | 0.6580 | 0.9068 | 0.7146 | 0.7990 | 0.8016 |
| DeepLabV3 | 0.5851 | 0.8120 | 0.6768 | 0.7383 | 0.7744 | 0.6154 | 0.8592 | 0.6894 | 0.7585 | 0.7251 |
| MANet | 0.7170 | 0.8725 | 0.8010 | 0.8352 | 0.8727 | 0.7456 | 0.8870 | 0.8365 | 0.8610 | 0.8704 |
| SegFormer | 0.4427 | 0.9157 | 0.4615 | 0.6137 | 0.7920 | 0.6786 | 0.8122 | 0.8048 | 0.8085 | 0.8259 |
| DynamicVis | 0.6319 | 0.8464 | 0.7138 | 0.7745 | 0.7948 | 0.6713 | 0.8690 | 0.7469 | 0.8034 | 0.8350 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yi, L.; Wang, F.; Zhou, G.; Jiao, N.; He, M.; Zhu, J.; You, H. TBFH: A Total-Building-Focused Hybrid Dataset for Remote Sensing Image Building Detection. Remote Sens. 2025, 17, 2316. https://doi.org/10.3390/rs17132316
