Automated Detection of Center-Pivot Irrigation Systems from Remote Sensing Imagery Using Deep Learning

Abstract

1. Introduction

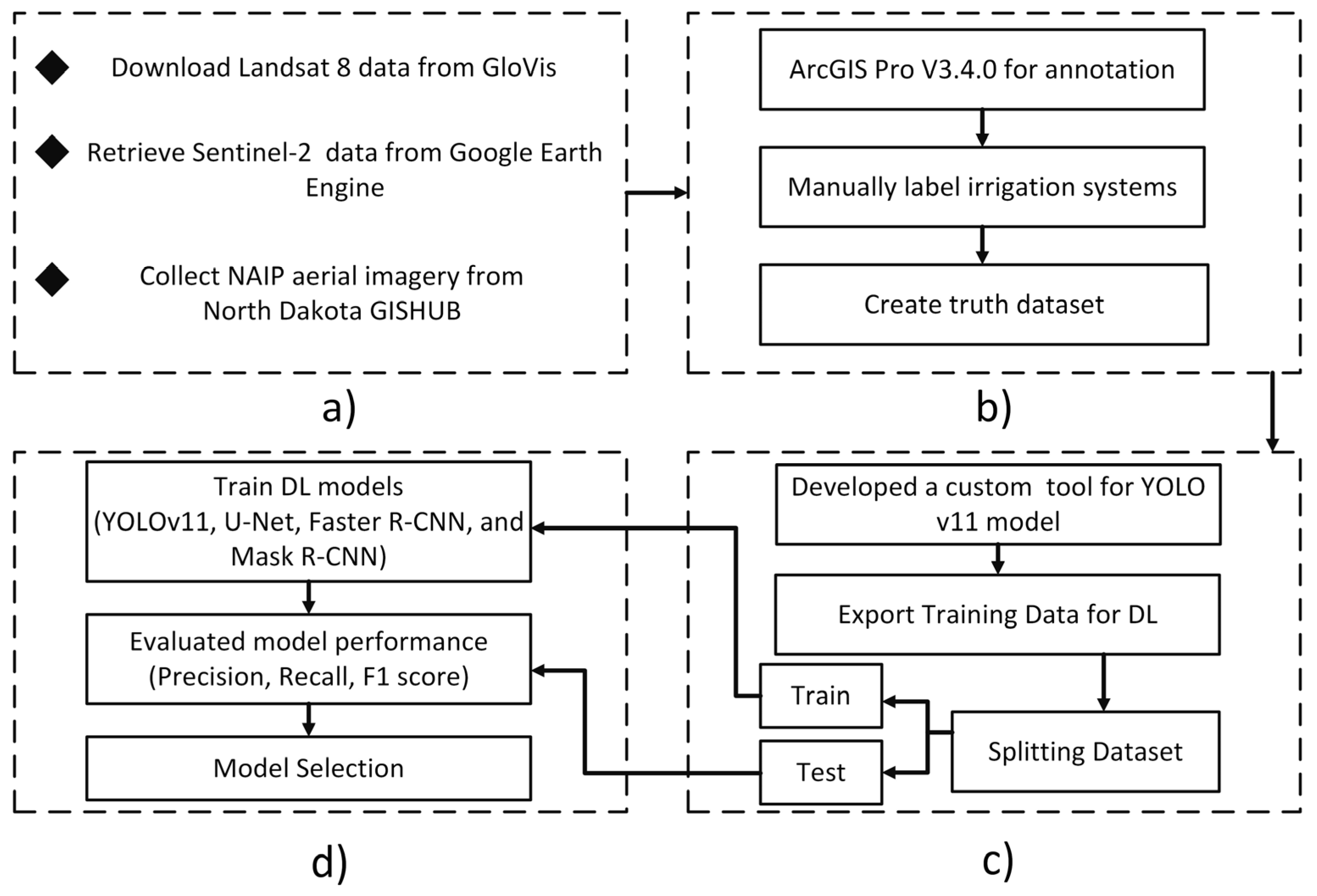

2. Materials and Methods

2.1. Study Area

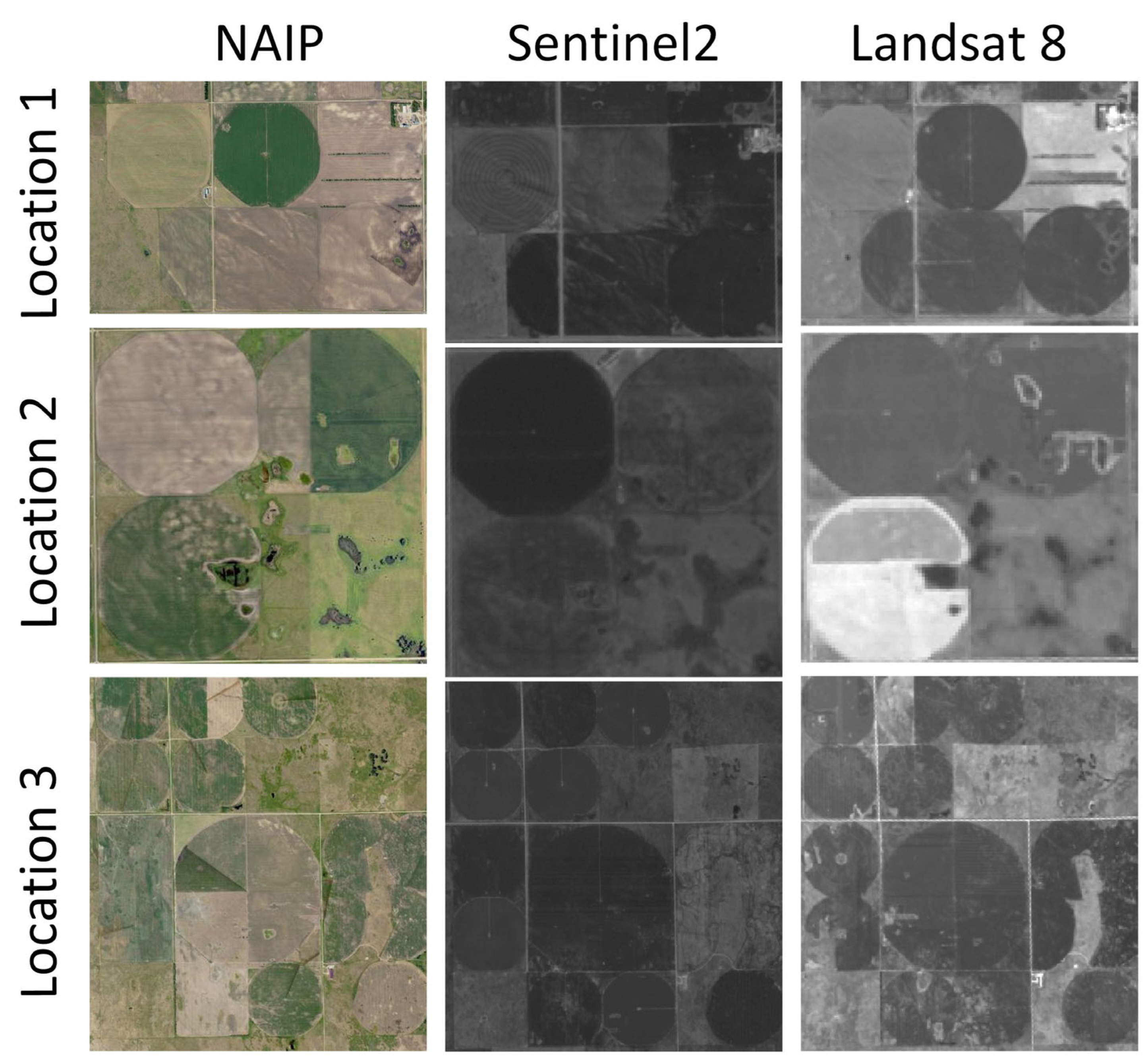

2.2. Dataset Creation

2.3. Model Selection

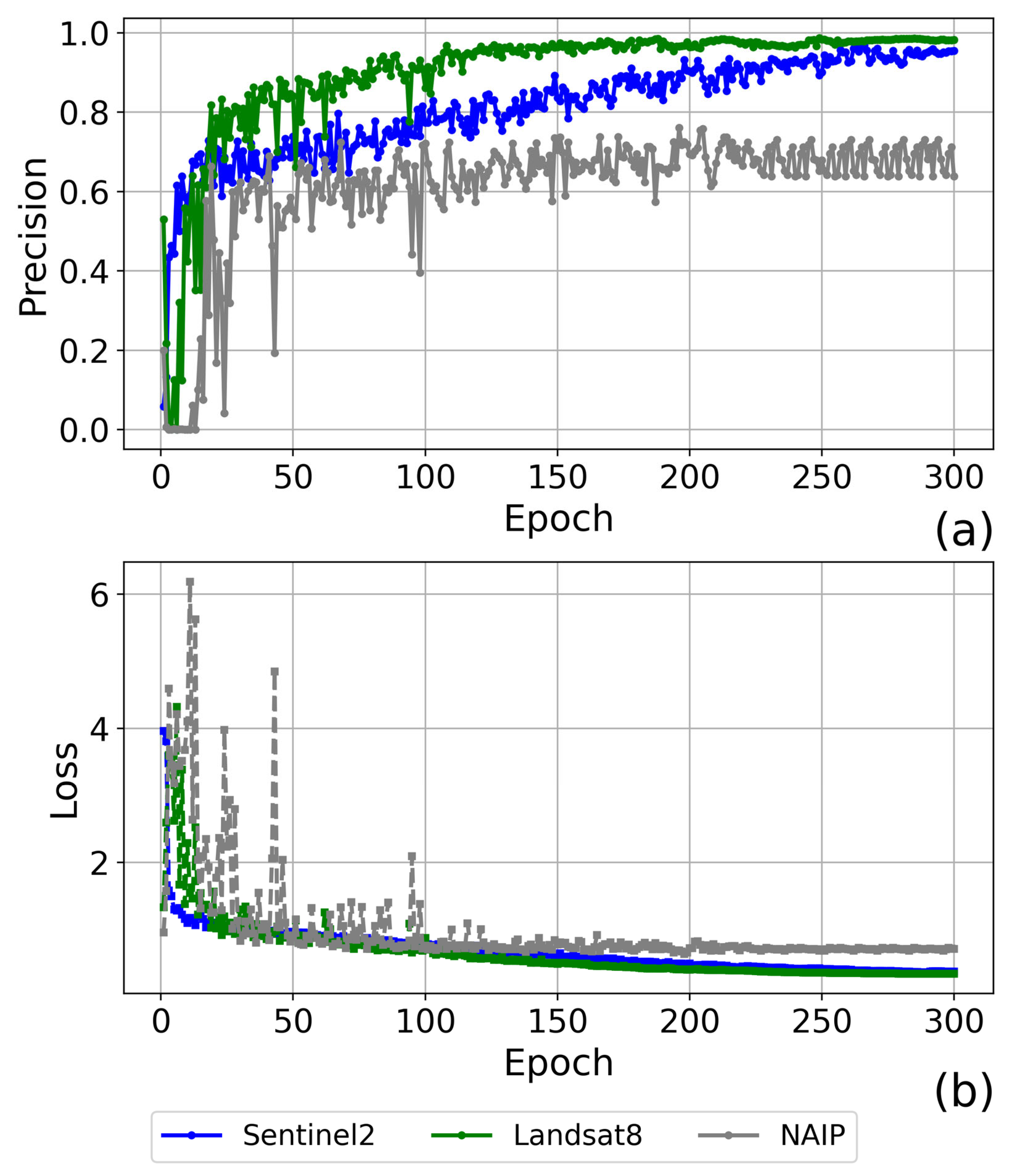

2.4. Model Training

2.5. Model Evaluation

2.5.1. Spatial Autocorrelation Analysis (Moran’s I)
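
Only the heading of this subsection is available here, so the following is a minimal sketch of the standard global Moran's I statistic, not the authors' implementation. It assumes one numeric value per spatial unit (for example, a detection count per grid cell) and a square spatial weight matrix. The function name `morans_i`, the four-cell example, and the binary adjacency weights are all hypothetical choices made for illustration.

```python
from typing import Sequence


def morans_i(values: Sequence[float], weights: Sequence[Sequence[float]]) -> float:
    """Global Moran's I for n observations and an n x n spatial weight matrix.

    I = (n / S0) * sum_ij w_ij (x_i - mean)(x_j - mean) / sum_i (x_i - mean)^2
    where S0 is the sum of all weights. Undefined if all values are equal.
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    s0 = sum(sum(row) for row in weights)  # total of all spatial weights
    cross = sum(
        weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n)
    )
    variance_sum = sum(d * d for d in dev)
    return (n / s0) * (cross / variance_sum)


# Hypothetical example: four cells in a row, each adjacent to its neighbors.
line_weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
smooth_gradient = [1.0, 2.0, 3.0, 4.0]  # similar values sit next to each other
checkerboard = [1.0, 0.0, 1.0, 0.0]     # dissimilar values sit next to each other

print(morans_i(smooth_gradient, line_weights))  # positive (about 0.33)
print(morans_i(checkerboard, line_weights))     # negative (about -1.0)
```

Positive values indicate that similar values cluster in space, values near zero suggest a random arrangement, and negative values indicate dispersion. A significance test (for example, by permutation) would normally accompany the statistic and is omitted from this sketch.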

2.5.2. Hotspot Analysis (Getis-Ord Gi*)
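
Only the heading of this subsection is available here, so the following is a minimal sketch of the standard Getis-Ord Gi* z-score, not the authors' workflow. It assumes one numeric value per spatial unit and a weight matrix whose diagonal is nonzero, since the "star" variant includes each unit in its own neighborhood. The function name `getis_ord_gi_star` and the five-cell example are hypothetical.

```python
import math
from typing import List, Sequence


def getis_ord_gi_star(
    values: Sequence[float], weights: Sequence[Sequence[float]]
) -> List[float]:
    """Gi* z-score for every unit i.

    Gi* = (sum_j w_ij x_j - mean * W_i)
          / (S * sqrt((n * sum_j w_ij^2 - W_i^2) / (n - 1)))
    with W_i = sum_j w_ij and S = sqrt(sum_j x_j^2 / n - mean^2).
    The denominator is zero if all values are equal or if a unit is
    connected to every unit with identical weights.
    """
    n = len(values)
    mean = sum(values) / n
    s = math.sqrt(sum(v * v for v in values) / n - mean * mean)
    scores = []
    for row in weights:
        w_sum = sum(row)
        w_sq_sum = sum(w * w for w in row)
        local_sum = sum(w * x for w, x in zip(row, values))
        numerator = local_sum - mean * w_sum
        denominator = s * math.sqrt((n * w_sq_sum - w_sum ** 2) / (n - 1))
        scores.append(numerator / denominator)
    return scores


# Hypothetical example: five cells in a row; each cell neighbors itself
# and the cells directly beside it (diagonal set to 1 for the Gi* variant).
star_weights = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1],
]
counts = [5.0, 5.0, 1.0, 1.0, 1.0]  # high values concentrated at one end

for cell, z in enumerate(getis_ord_gi_star(counts, star_weights)):
    print(cell, round(z, 2))  # cell 0 scores about 2.0, cell 4 is negative
```

Large positive z-scores mark candidate hot spots (clusters of high values) and large negative z-scores mark cold spots. In practice the scores are compared against a chosen significance threshold, which this sketch does not apply.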

3. Results

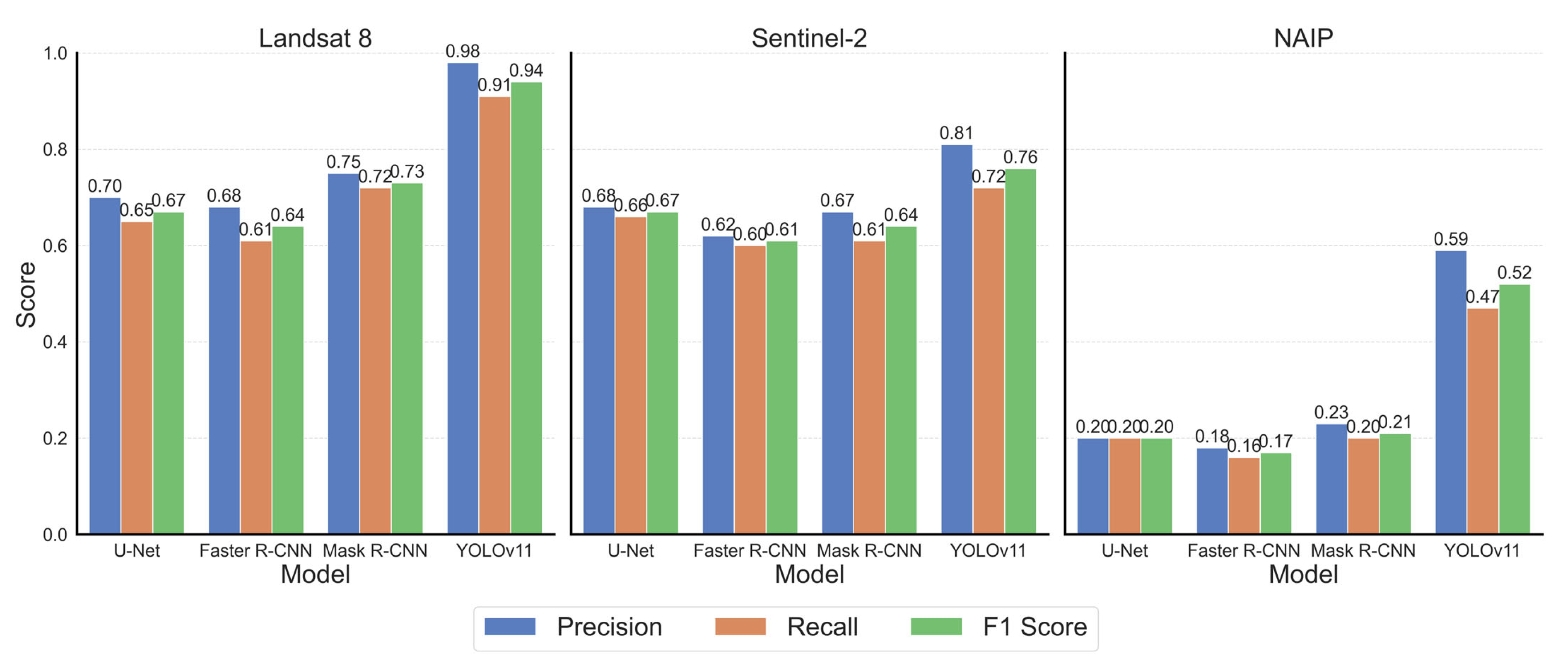

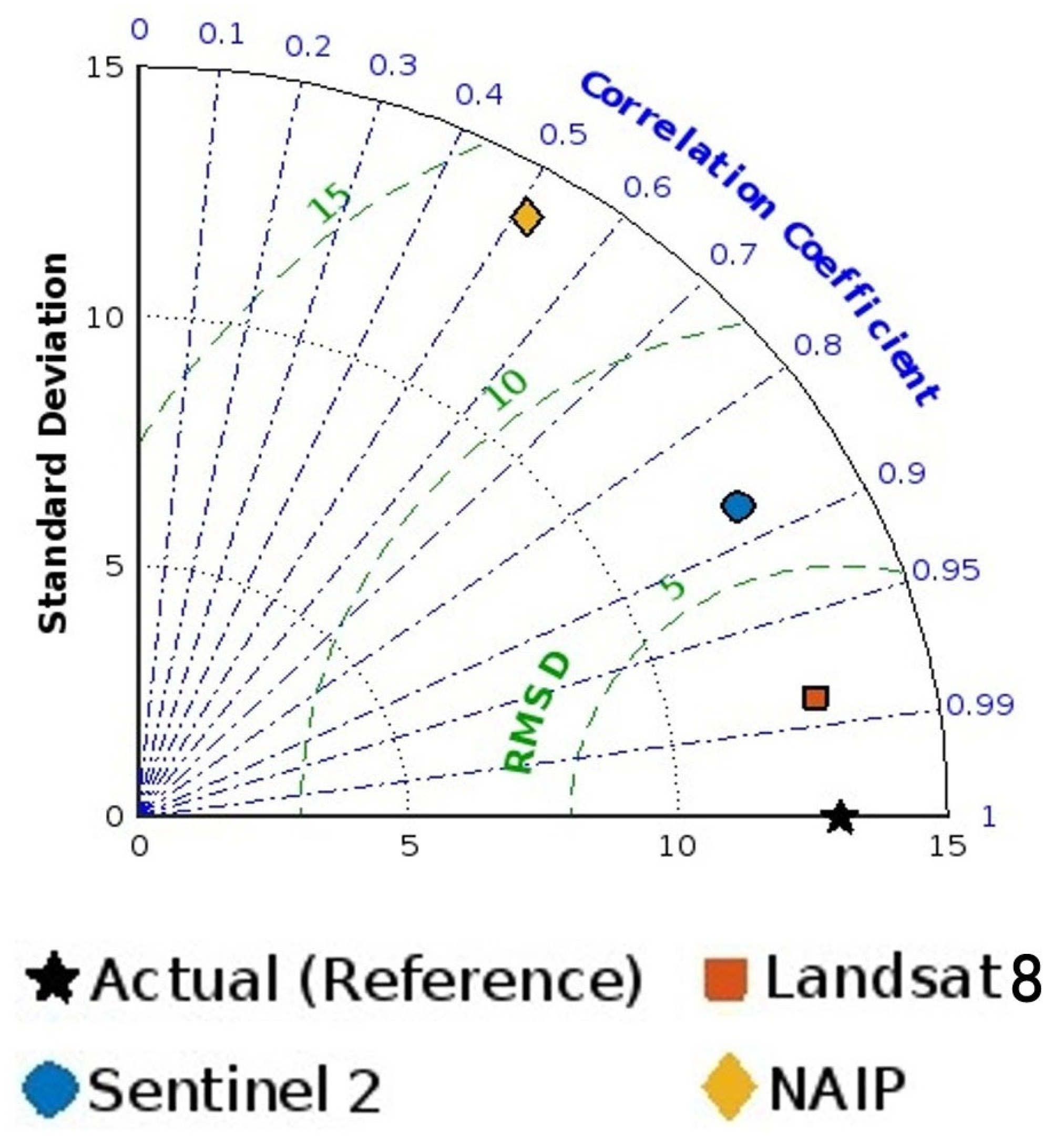

3.1. Detection Performance

3.2. Moran’s I (Spatial Autocorrelation)

3.3. Hotspot Analysis (Getis-Ord Gi*)

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ogunba, A. Threats to Groundwater: Lessons from Canada and Selected Jurisdictions. J. Energy Nat. Resour. Law 2012, 30, 159–184. [Google Scholar] [CrossRef]

- Proulx, R.A.; Knudson, M.D.; Kirilenko, A.; VanLooy, J.A.; Zhang, X. Significance of surface water in the terrestrial water budget: A case study in the Prairie Coteau using GRACE, GLDAS, Landsat, and groundwater well data. Water Resour. Res. 2013, 49, 5756–5764. [Google Scholar] [CrossRef]

- McShane, R.R.; Barnhart, T.B.; Valder, J.F.; Haines, S.S.; Macek-Rowland, K.M.; Carter, J.M.; Delzer, G.C.; Thamke, J.N. Estimates of Water Use Associated with Continuous Oil and Gas Development in the Williston Basin, North Dakota and Montana, 2007–17; US Geological Survey: Reston, VA, USA, 2020.

- Lin, Z.; Lin, T.; Lim, S.H.; Hove, M.H.; Schuh, W.M. Impacts of Bakken Shale Oil Development on Regional Water Uses and Supply. J. Am. Water Resour. Assoc. 2018, 54, 225–239. [Google Scholar] [CrossRef]

- Lin, Z.; Lim, S.H.; Lin, T.; Borders, M. Using Agent-Based Modeling for Water Resources Management in the Bakken Region. J. Water Resour. Plan. Manag. 2020, 146, 05019020. [Google Scholar] [CrossRef]

- Lin, T.; Lin, Z.; Lim, S.H.; Jia, X.; Chu, X. A Spatial Agent-Based Model for Hydraulic Fracturing Water Distribution. Front. Environ. Sci. 2022, 10, 1025559. [Google Scholar] [CrossRef]

- Li, R.; Merchant, J.W. Modeling Vulnerability of Groundwater to Pollution under Future Scenarios of Climate Change and Biofuels-Related Land Use Change: A Case Study in North Dakota, USA. Sci. Total Environ. 2013, 447, 32–45. [Google Scholar] [CrossRef]

- Nustad, R.A.; Damschen, W.C.; Vecchia, A.V. Interactive Tool to Estimate Groundwater Elevations in Central and Eastern North Dakota; US Geological Survey: Reston, VA, USA, 2018.

- Hill, R.; Keller, J. Irrigation System Selection for Maximum Crop Profit. Trans. ASAE 1980, 23, 366–372. [Google Scholar] [CrossRef]

- Hassani, K.; Taghvaeian, S.; Gholizadeh, H. A Geographical Survey of Center Pivot Irrigation Systems in the Central and Southern High Plains Aquifer Region of the United States. Appl. Eng. Agric. 2021, 37, 1139–1145. [Google Scholar] [CrossRef]

- Wenger, K.; Vadjunec, J.M.; Fagin, T. Groundwater Governance and the Growth of Center Pivot Irrigation in Cimarron County, OK and Union County, NM: Implications for Community Vulnerability to Drought. Water 2017, 9, 39. [Google Scholar] [CrossRef]

- Lian, J.; Li, Y.; Li, Y.; Zhao, X.; Zhang, T.; Wang, X.; Wang, X.; Wang, L.; Zhang, R. Effect of Center-Pivot Irrigation Intensity on Groundwater Level Dynamics in the Agro-Pastoral Ecotone of Northern China. Front. Environ. Sci. 2022, 10, 892577. [Google Scholar] [CrossRef]

- Sabir, R.M.; Sarwar, A.; Shoaib, M.; Saleem, A.; Alhousain, M.H.; Wajid, S.A.; Rasul, F.; Adnan Shahid, M.; Anjum, L.; Safdar, M.; et al. Managing Water Resources for Sustainable Agricultural Production. In Transforming Agricultural Management for a Sustainable Future: Climate Change and Machine Learning Perspectives; Springer: Berlin/Heidelberg, Germany, 2024; pp. 47–74. [Google Scholar]

- de Albuquerque, A.O.; de Carvalho, O.L.F.; e Silva, C.R.; Luiz, A.S.; de Bem, P.P.; Gomes, R.A.T.; Guimarães, R.F.; de Carvalho Júnior, O.A. Dealing with Clouds and Seasonal Changes for Center Pivot Irrigation Systems Detection Using Instance Segmentation in Sentinel-2 Time Series. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8447–8457. [Google Scholar] [CrossRef]

- Tang, J.; Arvor, D.; Corpetti, T.; Tang, P. Pvanet-Hough: Detection and Location of Center Pivot Irrigation Systems from Sentinel-2 Images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 3, 559–564. [Google Scholar] [CrossRef]

- de Albuquerque, A.O.; de Carvalho, O.L.F.; e Silva, C.R.; de Bem, P.P.; Gomes, R.A.T.; Borges, D.L.; Guimarães, R.F.; Pimentel, C.M.M.; de Carvalho Júnior, O.A. Instance Segmentation of Center Pivot Irrigation Systems Using Multi-Temporal SENTINEL-1 SAR Images. Remote Sens. Appl. Soc. Environ. 2021, 23, 100537. [Google Scholar] [CrossRef]

- Pang, S.; Sun, L.; Tian, Y.; Ma, Y.; Wei, J. Convolutional Neural Network-Driven Improvements in Global Cloud Detection for Landsat 8 and Transfer Learning on Sentinel-2 Imagery. Remote Sens. 2023, 15, 1706. [Google Scholar] [CrossRef]

- Kazemi Garajeh, M.; Blaschke, T.; Hossein Haghi, V.; Weng, Q.; Valizadeh Kamran, K.; Li, Z. A Comparison between Sentinel-2 and Landsat 8 OLI Satellite Images for Soil Salinity Distribution Mapping Using a Deep Learning Convolutional Neural Network. Can. J. Remote Sens. 2022, 48, 452–468. [Google Scholar] [CrossRef]

- Saraiva, M.; Protas, É.; Salgado, M.; Souza Jr, C. Automatic Mapping of Center Pivot Irrigation Systems from Satellite Images Using Deep Learning. Remote Sens. 2020, 12, 558. [Google Scholar] [CrossRef]

- Cooley, D.; Maxwell, R.M.; Smith, S.M. Center Pivot Irrigation Systems and Where to Find Them: A Deep Learning Approach to Provide Inputs to Hydrologic and Economic Models. Front. Water 2021, 3, 786016. [Google Scholar] [CrossRef]

- Badh, A.; Akyuz, A.; Vocke, G.; Mullins, B. Impact of Climate Change on the Growing Seasons in Select Cities of North Dakota, United States of America. Int. J. Clim. Chang. Impacts Responses 2009, 1, 105. [Google Scholar] [CrossRef]

- Ustin, S.L.; Middleton, E.M. Current and Near-Term Earth-Observing Environmental Satellites, Their Missions, Characteristics, Instruments, and Applications. Sensors 2024, 24, 3488. [Google Scholar] [CrossRef]

- Razzak, M.T.; Mateo-García, G.; Lecuyer, G.; Gómez-Chova, L.; Gal, Y.; Kalaitzis, F. Multi-Spectral Multi-Image Super-Resolution of Sentinel-2 with Radiometric Consistency Losses and Its Effect on Building Delineation. ISPRS J. Photogramm. Remote Sens. 2023, 195, 1–13. [Google Scholar] [CrossRef]

- USDA-FSA-APFO Aerial Photography Field Office. National Agriculture Imagery Program (NAIP) Orthoimagery for Zone 12 Arizona State Quarter Quadrangle Pedregosa Mountains East, Ne and ID# M_3110930_ne_12_1_20070609. Tif; USDA-FSA-APFO Aerial Photography Field Office: Salt Lake City, UT, USA, 2008.

- Johansen, K.; Lopez, O.; Tu, Y.-H.; Li, T.; McCabe, M.F. Center Pivot Field Delineation and Mapping: A Satellite-Driven Object-Based Image Analysis Approach for National Scale Accounting. ISPRS J. Photogramm. Remote Sens. 2021, 175, 1–19. [Google Scholar] [CrossRef]

- Lee, Y.-H.; Kim, H.-J. Comparative Analysis of YOLO Series (from V1 to V11) and Their Application in Computer Vision. J. Semicond. Disp. Technol. 2024, 23, 190–198. [Google Scholar]

- Bazrafkan, A.; Das, A.K.; Miranda, A.; Shah, R.; Green, A.; Flores, P. The Efficacy of UAS RGB Imagery and Deep Learning for Cereal Crop Lodging Detection. In Proceedings of the 2024 ASABE Annual International Meeting, Anaheim, CA, USA, 28–31 July 2024; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2024; p. 1. [Google Scholar]

- Girshick, R. Fast R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-Cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Bazrafkan, A.; Kim, J.; Navasca, H.; Bandillo, N.; Flores, P. Assessing Dry Pea Stands Using Deep Learning Models in ArcGIS Pro. In Proceedings of the 2024 ASABE Annual International Meeting, Anaheim, CA, USA, 28–31 July 2024; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2024; p. 1. [Google Scholar]

- Sapkota, R.; Qureshi, R.; Calero, M.; Badjugar, C.; Nepal, U.; Poulose, A.; Zeno, P.; Vaddevolu, U.B.P.; Khan, S.; Shoman, M.; et al. Yolo11 to Its Genesis: A Decadal and Comprehensive Review of the You Only Look Once (Yolo) Series. arXiv 2025, arXiv:2406.19407. [Google Scholar]

- de Albuquerque, A.O.; de Carvalho Júnior, O.A.; Carvalho, O.L.F.d.; de Bem, P.P.; Ferreira, P.H.G.; de Moura, R.; dos, S.; Silva, C.R.; Trancoso Gomes, R.A.; Fontes Guimarães, R. Deep Semantic Segmentation of Center Pivot Irrigation Systems from Remotely Sensed Data. Remote Sens. 2020, 12, 2159. [Google Scholar] [CrossRef]

- Liang, L.; Huang, W.; Awan, M.; Parveen, A.; Li, R.; Bi, F.; Shao, J.; Liang, X.; Wu, C.; Liu, Z. Study and application of image water level recognition calculation method based on mask rcnn and faster r-cnn. Appl. Ecol. Environ. Res. 2023, 21, 5039–5053. [Google Scholar] [CrossRef]

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep Learning in Remote Sensing Applications: A Meta-Analysis and Review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177. [Google Scholar] [CrossRef]

- Bazrafkan, A.; Navasca, H.; Worral, H.; Oduor, P.; Delavarpour, N.; Morales, M.; Bandillo, N.; Flores, P. Predicting Lodging Severity in Dry Peas Using UAS-Mounted RGB, LIDAR, and Multispectral Sensors. Remote Sens. Appl. Soc. Environ. 2024, 34, 101157. [Google Scholar] [CrossRef]

- Uppalapati, S.; Paramasivam, P.; Kilari, N.; Chohan, J.S.; Kanti, P.K.; Vemanaboina, H.; Dabelo, L.H.; Gupta, R. Precision Biochar Yield Forecasting Employing Random Forest and XGBoost with Taylor Diagram Visualization. Sci. Rep. 2025, 15, 7105. [Google Scholar] [CrossRef]

- Cuevas, A.; Febrero, M.; Fraiman, R. An Anova Test for Functional Data. Comput. Stat. Data Anal. 2004, 47, 111–122. [Google Scholar] [CrossRef]

- Anselin, L. The Moran Scatterplot as an ESDA Tool to Assess Local Instability in Spatial Association. In Spatial Analytical Perspectives on GIS; Routledge: London, UK, 2019; pp. 111–126. [Google Scholar]

- Kumar, S.D.P.; Angadi, D.P. GIS-Based Analysis and Assessment of Spatial Correlation of Road Accidental Hotspots: A Case Study of Mangaluru City, Karnataka. In Humanities and Sustainability from Glocal Perspectives Towards Future Earth, Proceedings of the IGU Thematic Conference 2022, Mahendragarh, India, 24–25 November 2022; Springer Nature: Berlin/Heidelberg, Germany, 2025; p. 161. [Google Scholar]

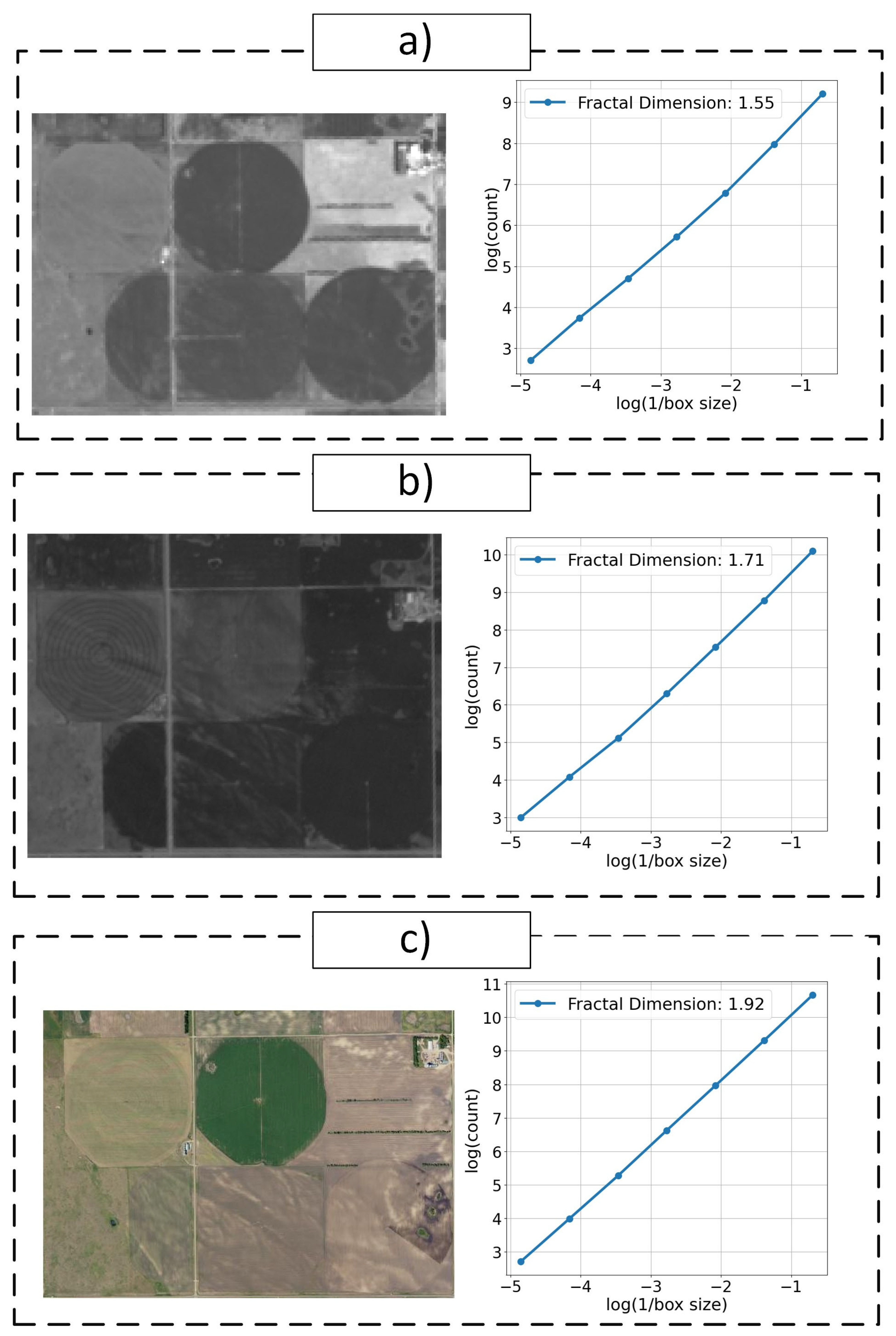

- Theiler, J. Estimating Fractal Dimension. J. Opt. Soc. Am. A 1990, 7, 1055–1073. [Google Scholar] [CrossRef]

- Xie, X.; Tian, Y.; Zhu, Z. Application of Deep Learning in High-Resolution Remote Sensing Image Classification. In Proceedings of the International Conference on Electronic Information Engineering and Computer Communication (EIECC 2021), Online, 18 December 2021; SPIE: Bellingham, WA, USA, 2022; Volume 12172, pp. 536–541. [Google Scholar]

- Huang, X. High Resolution Remote Sensing Image Classification Based on Deep Transfer Learning and Multi Feature Network. IEEE Access 2023, 11, 110075–110085. [Google Scholar] [CrossRef]

- Sustika, R.; Suksmono, A.B. Evaluation of Deep Convolutional Neural Network with Residual Learning for Remote Sensing Image Super Resolution. Comput. Eng. Appl. J. 2021, 10, 1–8. [Google Scholar] [CrossRef]

- Rajeshwari, P.; Priya, P.L.; Pooja, M.; Abhishek, G. Remote Sensing Image Super-Resolution Using Deep Learning. In Proceedings of the 2024 IEEE Space, Aerospace and Defence Conference (SPACE), Bangalore, India, 22–23 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 665–668. [Google Scholar]

- Karasiak, N.; Dejoux, J.-F.; Monteil, C.; Sheeren, D. Spatial Dependence between Training and Test Sets: Another Pitfall of Classification Accuracy Assessment in Remote Sensing. Mach. Learn. 2022, 111, 2715–2740. [Google Scholar] [CrossRef]

- Gazis, I.-Z.; Greinert, J. Importance of Spatial Autocorrelation in Machine Learning Modeling of Polymetallic Nodules, Model Uncertainty and Transferability at Local Scale. Minerals 2021, 11, 1172. [Google Scholar] [CrossRef]

- Bai, Y.; Sun, X.; Ji, Y.; Huang, J.; Fu, W.; Shi, H. Bibliometric and Visualized Analysis of Deep Learning in Remote Sensing. Int. J. Remote Sens. 2022, 43, 5534–5571. [Google Scholar] [CrossRef]

- Zhang, L.; Li, Y.; Hou, Z.; Li, X.; Geng, H.; Wang, Y.; Li, J.; Zhu, P.; Mei, J.; Jiang, Y.; et al. Deep Learning and Remote Sensing Data Analysis. Geomat. Inf. Sci. Wuhan Univ. 2020, 45, 1857–1864. [Google Scholar]

- Chen, Y.; Li, C.; Ghamisi, P.; Jia, X.; Gu, Y. Deep Fusion of Remote Sensing Data for Accurate Classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1253–1257. [Google Scholar] [CrossRef]

- Tang, J.; Arvor, D.; Corpetti, T.; Tang, P. Mapping Center Pivot Irrigation Systems in the Southern Amazon from Sentinel-2 Images. Water 2021, 13, 298. [Google Scholar] [CrossRef]

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36. [Google Scholar] [CrossRef]

- Huang, C.; Shibuya, A. High Accuracy Geochemical Map Generation Method by a Spatial Autocorrelation-Based Mixture Interpolation Using Remote Sensing Data. Remote Sens. 2020, 12, 1991. [Google Scholar] [CrossRef]

- Parelius, E.J. A Review of Deep-Learning Methods for Change Detection in Multispectral Remote Sensing Images. Remote Sens. 2023, 15, 2092. [Google Scholar] [CrossRef]

- Audebert, N.; Boulch, A.; Randrianarivo, H.; Le Saux, B.; Ferecatu, M.; Lefevre, S.; Marlet, R. Deep Learning for Urban Remote Sensing. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–4. [Google Scholar]

| Dataset | Best Spatial Resolution (m) | Spectral Resolution | Revisit Time | Number of Frames Covering North Dakota | Tile Size | Total Data Volume (Approx.) |
|---|---|---|---|---|---|---|
| Landsat 8 | 15 | 1 band | 16 days | ~14 | 185 × 185 km | ~5 GB |
| Sentinel-2 | 10 | 13 bands | 5 days | ~18 | 100 × 100 km | ~120 GB |
| NAIP | 0.6 | 4 bands (RGB + NIR) | Every 2–3 years | ~120 | Varies | ~500 GB |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bazrafkan, A.; Kim, J.; Proulx, R.; Lin, Z. Automated Detection of Center-Pivot Irrigation Systems from Remote Sensing Imagery Using Deep Learning. Remote Sens. 2025, 17, 2276. https://doi.org/10.3390/rs17132276
