Abstract

To address the challenge of insufficient spatial resolution in remote sensing precipitation data, this paper proposes a novel Multi-Scale Residual Generative Adversarial Network (MSRGAN) for reconstructing high-resolution precipitation images. The model integrates multi-source meteorological information and topographic priors, and it employs a Deep Multi-Scale Perception Module (DeepInception), a Multi-Scale Feature Modulation Module (MSFM), and a Spatial-Channel Attention Network (SCAN) to achieve high-fidelity restoration of complex precipitation structures. Experiments conducted using Weather Research and Forecasting (WRF) simulation data over the continental United States demonstrate that MSRGAN outperforms traditional interpolation methods and state-of-the-art deep learning models across various metrics, including Critical Success Index (CSI), Heidke Skill Score (HSS), False Alarm Rate (FAR), and Jensen–Shannon divergence. Notably, it exhibits significant advantages in detecting heavy precipitation events. Ablation studies further validate the effectiveness of each module. The results indicate that MSRGAN not only improves the accuracy of precipitation downscaling but also preserves spatial structural consistency and physical plausibility, offering a novel technological approach for urban flood warning, weather forecasting, and regional hydrological modeling.

1. Introduction

As a crucial component of the global water cycle, precipitation profoundly influences meteorology, hydrology, agriculture, ecology, and climate science. It is also one of the most fundamental data elements for natural disaster monitoring, early warning systems, and resource management decision-making [1,2,3,4]. In recent years, global climate change has significantly altered the spatiotemporal characteristics of precipitation, with a noticeable increase in the frequency of localized extreme rainfall events. High-resolution precipitation information, particularly fine-scale estimates for mesoscale and urban areas, has become a core requirement in modern weather forecasting, hydrological modeling, and urban management [5,6].

Traditional precipitation observation methods, such as ground-based rain gauges and weather radar, offer high accuracy but limited spatial coverage. This limitation is particularly pronounced over oceans, mountainous regions, and underdeveloped areas, where station representativeness and data accessibility are constrained [7,8]. The rapid advancement of satellite remote sensing, especially the widespread deployment of passive microwave, active radar, and infrared sensors, has opened new avenues for global precipitation data acquisition. Satellite missions such as the Tropical Rainfall Measuring Mission (TRMM) and the Global Precipitation Measurement (GPM) mission provide precipitation products with global coverage and easy access, making them important data sources in meteorological, hydrological, and ecological research. However, because their spatial resolution is generally coarse (typically 0.1° to 0.25°), these products remain insufficient for fine-scale applications such as regional climate modeling, urban flood forecasting, and surface water resource assessment [9].

To address these limitations, the downscaling of remote sensing precipitation data has become a prominent research focus. The goal is to transform coarse-resolution precipitation products into high-resolution data suitable for local-scale applications by integrating high-resolution auxiliary information such as topography, vegetation indices, land surface temperature, and meteorological variables [10,11,12,13]. Downscaling methods can be broadly divided into two categories: dynamical downscaling based on numerical models and statistical downscaling based on empirical relationships [14]. Dynamical downscaling is computationally expensive, which makes it difficult to balance accuracy against operational feasibility. Statistical downscaling, in contrast, has gained widespread attention owing to its flexibility, computational efficiency, and ability to incorporate multi-source information.

In recent years, the rapid advancement of artificial intelligence, particularly machine learning, has introduced a variety of novel methods for remote sensing precipitation downscaling. Techniques such as Random Forests (RF), Support Vector Machines (SVM), and gradient boosting trees (GBDT/XGBoost) [15,16,17,18,19] have demonstrated strong capabilities in handling complex nonlinear relationships and improving prediction accuracy [20]. However, most of these methods rely on relatively shallow model architectures (e.g., tree-based models or shallow neural networks), which limits their ability to extract deep spatial features and multi-scale patterns from precipitation imagery. As a result, their performance remains constrained in scenarios involving intense precipitation or complex terrain.

With the emergence of deep learning, Convolutional Neural Networks (CNNs) and their variants have been widely adopted in downscaling applications and have shown superior performance. For instance, the Super-Resolution Convolutional Neural Network (SRCNN) [21], one of the earliest deep learning models for image super-resolution, significantly improved reconstruction quality compared with traditional interpolation methods. Deep Learning-based Statistical Downscaling (DeepSD) [10] introduced computer vision techniques into statistical downscaling, focusing on enhancing the spatial resolution of meteorological variables, especially precipitation. The Residual Super-Resolution Network for Deep Downscaling (ResDeepD) [22] leveraged residual structures to increase network depth, improving the spatial prediction accuracy of Indian summer monsoon precipitation. Tu et al. [23] proposed a hybrid downscaling framework combining WRF and a CNN, which preserved physical consistency while delivering higher-quality precipitation fields than WRF alone. Jiang et al. [24] developed a CNN-based downscaling model for ERA5 precipitation that combines reanalysis data with short-term high-resolution simulations, offering a viable route to long-term high-resolution precipitation data over complex terrain. These approaches learn the mapping between low- and high-resolution data end to end, increasing modeling capacity while retaining local features. Nevertheless, CNN-based models still face structural limitations, such as restricted receptive fields and limited feature representation capacity, which hinder their ability to capture long-range dependencies and global spatial relationships.

To further improve precipitation reconstruction quality, Generative Adversarial Networks (GANs) have recently emerged as a promising architecture for precipitation image super-resolution, owing to their adversarial training mechanism. Through the adversarial interplay between the generator and the discriminator, GANs not only improve the detail accuracy of predicted images but also strengthen the model's ability to approximate the distribution of real precipitation patterns, which is especially valuable in complex precipitation scenarios. One of the earliest and most representative applications of GANs to single-image super-resolution is SRGAN (Super-Resolution Generative Adversarial Network), proposed by Ledig et al. [25], which was widely adopted for its ability to generate high-quality images. Building on SRGAN, the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) [26] adopted Residual-in-Residual Dense Blocks (RRDBs) without batch normalization as its core building block and further improved the network structure, adversarial loss, and perceptual loss, leading to better visual quality of the generated images. Cheng et al. [12] proposed the DeepDT model, which adopted the RRDB architecture to enhance feature extraction and used CNNs to build a trainable framework for climate image generation, enabling the fusion of multiple climatic variables and the construction of high-quality climate datasets. Price et al. [27] introduced CorrectorGAN, a two-stage generative network designed to improve precipitation forecasts from global numerical weather models; by incorporating a correction mechanism conditioned on weather state information, it maps coarse forecasts to more accurate high-resolution prediction distributions. Jiang et al. [28] developed the Edge-Enhanced GAN (EEGAN) for super-resolution reconstruction of satellite video imagery, which enhances edge clarity while suppressing image noise. Furthermore, Yang et al. [29] combined the Transformer mechanism with GANs in the ST-Transformer encoder module, which dynamically focuses on key spatial regions to produce high-fidelity precipitation simulation outputs.

Although recent studies have explored GANs for remote sensing precipitation downscaling, several challenges remain: (1) precipitation data are inherently sparse, making it particularly difficult to capture key spatial features under moderate to heavy rainfall; (2) existing models often struggle to fuse features effectively across multiple scales, which leads to the loss of high-frequency detail; and (3) conditional information such as topography and atmospheric variables is seldom integrated effectively, which undermines both the physical consistency and the generalization capability of the models. Designing a deep adversarial network that fully leverages multi-source meteorological information, strengthens spatial structure modeling, and generalizes well has therefore become a critical issue in precipitation downscaling research. Against this background, this paper proposes MSRGAN (Multi-Scale Residual Generative Adversarial Network), a multi-scale generative adversarial network for the spatial super-resolution reconstruction of low-resolution precipitation data. The model integrates a Deep Multi-Scale Perception Module (DeepInception), a Multi-Scale Feature Modulation Module (MSFM), and a Spatial-Channel Attention Network (SCAN) to model meteorological features across spatial scales and to restore fine-grained precipitation details with high fidelity. In addition, MSRGAN incorporates high-resolution meteorological conditions, such as topography, temperature, and water vapor, as prior knowledge in the generation process. This fusion enhances the model's physical consistency and spatial coherence while retaining the inherent advantages of the GAN architecture. Compared with traditional interpolation methods and other deep learning models, MSRGAN achieves superior predictive accuracy and spatial structure reconstruction across precipitation intensities, with particularly notable improvements in the identification of heavy rainfall events.

The primary contributions of this study are summarized as follows:

- We propose MSRGAN, a generative adversarial network that integrates multi-source meteorological data and high-resolution topographic priors. Through the joint design of deep convolution, attention mechanisms, and residual connections, MSRGAN effectively enhances the spatial resolution and predictive consistency of precipitation maps.

- We design a Deep Multi-Scale Perception Module (DeepInception) and a Multi-Scale Feature Modulation Module (MSFM), which can simultaneously model both local and global precipitation features. These modules address the limitations of traditional models in terms of receptive field size and their difficulty in identifying long-range precipitation structures.

- We introduce a Spatial-Channel Collaborative Attention Network (SCAN) and a conditional feature fusion mechanism to enable joint modeling across spatial and channel dimensions. This design improves the model’s capability to capture complex precipitation distribution patterns and enhances the fidelity of fine-scale details.

- We conduct both quantitative and qualitative experiments on WRF simulation data over the continental United States under multiple precipitation intensity thresholds. The results demonstrate the superiority of the proposed model in extreme precipitation detection, spatial structure reconstruction, and false alarm suppression.

2. Methods

In recent years, GANs have demonstrated powerful modeling capabilities in various fields, including image super-resolution, style transfer, and medical imaging, which has motivated their application to precipitation downscaling [12,13,25,26,27,28,29]. Building on this progress, we design and develop a GAN-based super-resolution model for precipitation, termed MSRGAN. A GAN consists of two deep neural networks, a generator and a discriminator, that are trained in competition: the generator aims to produce increasingly realistic images, while the discriminator strives to distinguish real samples from generated ones. In MSRGAN, the generator is built on a convolutional neural network (CNN) architecture and learns a nonlinear mapping from low-resolution inputs to high-resolution precipitation fields, thereby enhancing spatial resolution. The discriminator, also CNN-based, is trained to classify high-resolution precipitation fields as real or generated. During training, the two networks are optimized in an adversarial loop: the generator continually improves its ability to produce realistic high-resolution outputs that deceive the discriminator, while the discriminator sharpens its ability to detect generated samples. This adversarial mechanism allows the generator to reconstruct fine-scale details missing from the low-resolution inputs and to add physically plausible small-scale features. Compared with traditional interpolation or statistical downscaling methods, MSRGAN not only enhances the spatial resolution of precipitation fields but also embeds small-scale structures consistent with real-world precipitation dynamics. MSRGAN thus offers a new downscaling approach for meteorological forecasting and climate research, contributing to more precise heavy precipitation prediction and more accurate disaster risk assessment.
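The adversarial loop described above is commonly written with binary cross-entropy. The sketch below shows the standard discriminator loss and the non-saturating generator loss on scalar discriminator outputs (probabilities that a field is real). It illustrates the generic GAN formulation only; the exact MSRGAN objective, which may add content or perceptual terms, is not given here, and the function names are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: probability the discriminator assigns to a real high-resolution field being real.
    d_fake: probability it assigns to a generated field being real.
    """
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: small when the discriminator is fooled (d_fake near 1)."""
    return -math.log(d_fake + EPS)


# A confident, correct discriminator has low loss; an uncertain one (0.5, 0.5) has higher loss.
print(discriminator_loss(0.99, 0.01), discriminator_loss(0.5, 0.5))
# The generator's loss falls as its samples look more realistic to the discriminator.
print(generator_loss(0.1), generator_loss(0.9))
```

In practice the two losses are minimized alternately: one optimizer step on the discriminator, then one on the generator.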

2.1. Definition of the Problem

The objective of this precipitation downscaling (super-resolution) study is to produce 12 km resolution precipitation fields from 50 km resolution simulation data over the Continental United States (CONUS). The low-resolution precipitation data over this region are represented as $X = \{X_1, X_2, \dots, X_N\}$ with $X_i \in \mathbb{R}^{W_l \times H_l}$, where $X_i$ denotes the precipitation field at the $i$-th time step, and $W_l$ and $H_l$ represent the width and height of the 50 km resolution grid, respectively. The corresponding 12 km resolution simulation data are denoted as $Y = \{Y_1, Y_2, \dots, Y_N\}$ with $Y_i \in \mathbb{R}^{W_h \times H_h}$, where $W_h$ and $H_h$ denote the width and height of the 12 km resolution grid, and $N$ is the temporal length of the dataset. The prediction target is denoted by $\hat{Y}$. The downscaling task can be formalized as learning a mapping function

$$\hat{Y}_{i+1:i+\tau} = f\left(X_{i-T+1:i},\, Y_{i-T+1:i}\right),$$

where $T$ represents the length of the input time window and $\tau$ denotes the prediction time horizon. Here, $Y_{i-T+1:i}$ refers to past high-resolution observations available during training, which provide fine-grained spatial context that helps guide the learning of the mapping function; no future information is included in $Y_{i-T+1:i}$. The corresponding optimization objective is

$$f^{*} = \arg\min_{f} \sum_{t=1}^{\tau} \sum_{n} \mathcal{L}\left(\hat{Y}_{i+t,n},\, Y_{i+t,n}\right),$$

where $\mathcal{L}$ denotes the loss function measuring the deviation between the predicted output $\hat{Y}_{i+t,n}$ and the reference data $Y_{i+t,n}$. Here, $t$ represents the forecast lead time (from 1 to $\tau$), and $n$ indexes spatial positions (grid points) within the domain. This framework aims to learn spatiotemporal features and to establish a nonlinear mapping from low-resolution inputs (50 km) to high-resolution outputs (12 km), capturing the nonlinear transformation of meteorological data across spatial scales.
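The input-window/horizon formulation above implies a simple sliding-window indexing of the time series. The sketch below enumerates (input steps, target steps) pairs so that every target step lies strictly after its inputs, which is one way to guarantee that no future information leaks into the inputs. The window length 4 and horizon 1 are hypothetical values chosen for illustration; 2920 is the number of 3-hourly steps in the dataset.

```python
def make_windows(num_steps: int, window: int, horizon: int):
    """Return (input_indices, target_indices) pairs for a sliding window.

    Inputs cover `window` consecutive steps ending at step i; targets cover the next
    `horizon` steps, so no target step appears among (or before) its inputs.
    """
    pairs = []
    for i in range(window - 1, num_steps - horizon):
        inputs = list(range(i - window + 1, i + 1))
        targets = list(range(i + 1, i + 1 + horizon))
        pairs.append((inputs, targets))
    return pairs


samples = make_windows(num_steps=2920, window=4, horizon=1)  # hypothetical window/horizon
print(len(samples))  # N - window - horizon + 1 = 2916
print(samples[0])    # ([0, 1, 2, 3], [4])
```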

2.2. Overall Architecture Design

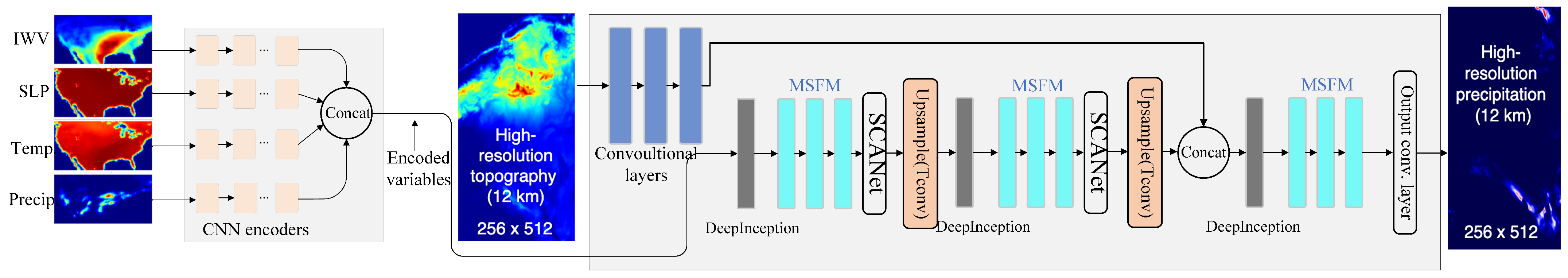

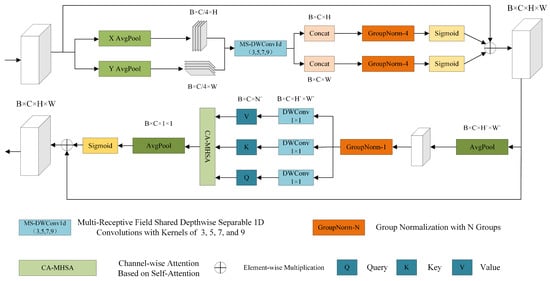

In this section, we present the detailed design of the generator in the proposed MSRGAN model. As illustrated in Figure 1, the input data, which include precipitation fields and several meteorological variables such as Integrated Water Vapor (IWV), Sea Level Pressure (SLP), and 2 m Temperature (T2), are first processed by a 1 × 1 convolution that maps the raw input channels into a standardized feature space. Similar multi-variable stacking strategies have been validated in prior downscaling research, with advantages in feature representation and modeling performance [10]. The data then pass through the DeepInception module for multi-branch feature extraction. This module builds multi-scale feature representations via parallel branches consisting of a 1 × 1 convolution, a 3 × 3 depthwise separable convolution, a 5 × 5 dilated convolution, and hybrid pooling. By cascading four DeepInception modules with residual connections, the network extracts deep spatial features from the meteorological fields.

Following feature extraction, the MSFM module performs dynamic feature calibration through a self-modulated feature aggregation mechanism. It consists of two key components: a spatial modulation unit, which constructs spatial attention weights via adaptive max pooling and variance estimation, and a channel modulation unit, which enhances local features using depthwise separable convolution. Three MSFM modules are stacked hierarchically to progressively refine the multi-scale features. To further enhance the global consistency of the feature representations, the network integrates the SCAN module, which uses a dual-branch architecture: along the spatial dimension, it constructs horizontal and vertical attention maps using hybrid depthwise convolutions; along the channel dimension, it captures inter-channel dependencies using multi-head self-attention. SCAN is applied at both upsampling stages, improving the spatial coherence and channel discriminability of the feature maps.

The model also introduces a conditional feature fusion mechanism, in which meteorological variables are encoded into conditional features by a multi-level Inception [30] structure and then concatenated with the main feature map along the channel dimension. In the terrain fusion stage, elevation data are encoded by a 3 × 3 convolution and fused with the upsampled features. Inspired by the progressive reconstruction strategy of the Laplacian Pyramid Super-Resolution Network (LapSRN) [31], the model uses two successive transposed convolutions, each upsampling by a factor of 2 (yielding 2× and then 4× the input resolution), followed by a final 3 × 3 convolution that reconstructs high-resolution precipitation fields with fine-grained spatial details.
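The two-stage upsampling can be checked against the grid sizes in Section 3.1 (128 × 64 at 50 km, 512 × 256 at 12 km) using the output-size formula of a transposed convolution, as documented for PyTorch's `ConvTranspose2d` with dilation 1. The kernel size 4, stride 2, padding 1 combination below is an assumption (a common choice that exactly doubles each dimension); the actual hyperparameters are not stated in the text.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int,
                       output_padding: int = 0) -> int:
    """Output length of a transposed convolution along one axis (dilation = 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# Hypothetical kernel/stride/padding that doubles each spatial dimension per stage.
K, S, P = 4, 2, 1
w, h = 128, 64  # 50 km grid dimensions from Section 3.1
stages = []
for _ in range(2):  # two successive 2x stages -> 4x overall
    w, h = conv_transpose_out(w, K, S, P), conv_transpose_out(h, K, S, P)
    stages.append((w, h))
print(stages)  # [(256, 128), (512, 256)], matching the 12 km grid
```

With these settings each stage maps n to 2n exactly, so no cropping or padding is needed to land on the 12 km grid.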

Figure 1.

Overall architecture of the MSRGAN generator. The core of the network is composed of several key modules as follows: the Deep Multi-Scale Perception Module (DeepInception), the Multi-Scale Feature Modulation Module (MSFM), and the Spatial-Channel Collaborative Attention Network (SCAN).

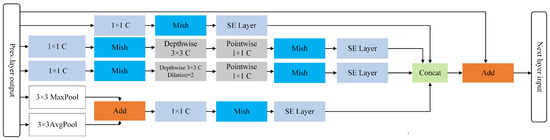

2.2.1. Deep Multi-Scale Perception Module (DeepInception)

In precipitation image super-resolution, efficiently extracting multi-scale features is crucial for reconstructing high-quality precipitation images. Traditional super-resolution methods often struggle to capture both the local details and the global patterns of precipitation fields. To address this limitation, we propose the Deep Multi-Scale Perception Module (DeepInception), which integrates established techniques into a precipitation-specific architecture to enhance feature extraction from precipitation images.

Established Foundations and Novel Contributions: Our approach builds on several well-established techniques: Inception architectures for multi-scale feature extraction, Squeeze-and-Excitation (SE) mechanisms for channel attention [32], depthwise separable convolutions [33] for computational efficiency, dilated convolutions [34] for expanded receptive fields, and residual connections for improved gradient flow. While combinations of depthwise separable and dilated convolutions have been explored in prior work [35], our key contributions are as follows: (1) a precipitation-specific multi-scale architecture that combines 1 × 1, 3 × 3 depthwise separable, and 5 × 5 dilated depthwise separable convolutions with hybrid pooling, designed for the characteristics of precipitation fields; (2) the integration of SE attention within the depthwise separable branch to enhance precipitation pattern recognition; and (3) a hybrid pooling strategy that preserves both the sharp precipitation boundaries and the smooth transitions that are critical for precipitation field reconstruction.

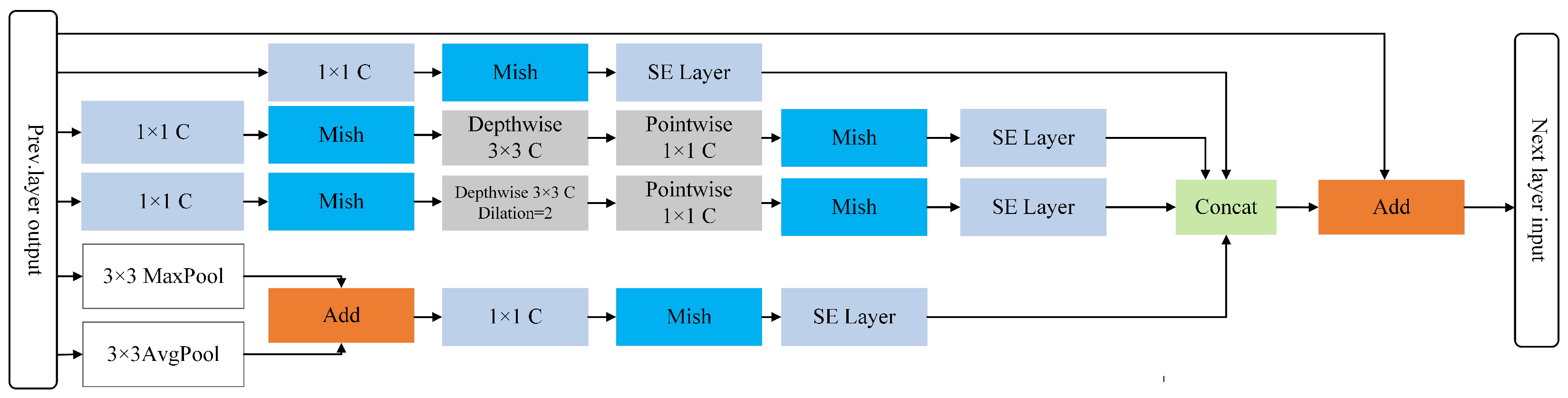

The DeepInception module is built on a modified Inception structure. As shown in Figure 2, the module consists of four parallel branches designed to capture precipitation-specific features:

- 1 × 1 convolution branch: captures low-level features and provides nonlinear transformations.

- 3 × 3 depthwise separable convolution branch: reduces the parameter count and computational cost via depthwise separable convolution, and incorporates an SE layer that adjusts channel-wise importance for precipitation pattern recognition.

- 5 × 5 dilated depthwise separable convolution branch: applies a dilation rate of 2 to a 3 × 3 depthwise separable convolution, giving an effective 5 × 5 receptive field. This dilation rate is chosen to capture precipitation cloud formations that typically span medium spatial scales, allowing the model to use a wider spatial context without increasing the number of parameters.

- Hybrid pooling branch: combines max pooling and average pooling to reflect the dual nature of precipitation data, in which both peak intensities (captured by max pooling) and regional averages (captured by average pooling) matter for reconstruction quality. This preserves key information and mitigates the loss of information that is specific to precipitation fields.

Figure 2.

Model structure diagram of the Deep Multi-Scale Perception Module. Mish is used as the activation function. In the diagram, the 3 × 3 dilated convolution (d = 2) has an effective 5 × 5 receptive field.
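The equivalence stated in the Figure 2 caption follows from the standard extent formula for a dilated kernel, k + (k − 1)(d − 1). The sketch below computes it and also shows how receptive fields grow when stride-1 layers are stacked; the stacked example is illustrative only and does not describe a specific MSRGAN configuration.

```python
def effective_kernel(kernel: int, dilation: int) -> int:
    """Spatial extent of one dilated convolution: k + (k - 1) * (d - 1)."""
    return kernel + (kernel - 1) * (dilation - 1)


def receptive_field(layers) -> int:
    """Receptive field of a stack of stride-1 layers given as (kernel, dilation) pairs."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf


print(effective_kernel(3, 2))             # 5: a 3 x 3 kernel with d = 2 spans 5 x 5
print(3 * 3, 5 * 5)                       # 9 weights per channel vs. 25 for a dense 5 x 5 kernel
print(receptive_field([(3, 2), (3, 2)]))  # two stacked dilated layers (illustrative)
```

The dilated kernel keeps the 9 weights of a 3 × 3 filter while covering a 5 × 5 area, which is the parameter saving the branch description relies on.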

The outputs of the four branches are concatenated along the channel dimension and fused through a residual connection, which helps mitigate gradient vanishing issues and enhances the transmission of deep feature representations.

Precipitation-Specific Design Rationale: The architectural choices in DeepInception are motivated by the characteristics of precipitation images. Combining different receptive field sizes addresses the multi-scale nature of precipitation patterns, from localized convective cells to large-scale weather systems. SE attention is placed only in the 3 × 3 branch because our analysis indicates that medium-scale features benefit most from channel-wise recalibration in precipitation images, while hybrid pooling preserves both extreme precipitation values and the smooth transition regions essential for meteorological accuracy.
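How max and average pooling are combined in the hybrid pooling branch is not specified in the text. The sketch below assumes a fixed element-wise blend with weight `alpha = 0.5` (a hypothetical choice; a learned weight, a sum, or channel concatenation are equally plausible). It uses stride-1 3 × 3 windows clipped at the borders so the output keeps the input size, as an Inception-style pooling branch would.

```python
def pool2d(grid, k, reducer):
    """Stride-1 k x k pooling; windows are clipped at the borders so output size == input size."""
    h, w = len(grid), len(grid[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [grid[a][b]
                      for a in range(max(0, i - r), min(h, i + r + 1))
                      for b in range(max(0, j - r), min(w, j + r + 1))]
            row.append(reducer(window))
        out.append(row)
    return out


def hybrid_pool(grid, k=3, alpha=0.5):
    """Blend max pooling (keeps peak rain rates) with average pooling (keeps smooth transitions).

    alpha is a hypothetical fixed blending weight.
    """
    mx = pool2d(grid, k, max)
    av = pool2d(grid, k, lambda v: sum(v) / len(v))
    return [[alpha * m + (1 - alpha) * a for m, a in zip(rm, ra)] for rm, ra in zip(mx, av)]


rain = [[0.0, 0.0, 0.0],
        [0.0, 9.0, 0.0],
        [0.0, 0.0, 0.0]]
print(hybrid_pool(rain)[1][1])  # 0.5 * 9.0 + 0.5 * 1.0 = 5.0
```

Pure max pooling would spread the 9.0 peak over the whole window, and pure average pooling would dilute it to 1.0; the blend retains part of both signals.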

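Assuming the input feature map $X$ has size $H \times W \times C$, the depthwise separable convolution can be expressed as follows:

$$Y = \phi\left(\mathrm{Conv}_{pw}\left(\mathrm{Conv}_{dw}(X)\right)\right),$$

where $\mathrm{Conv}_{dw}$ denotes the depthwise convolution, $\mathrm{Conv}_{pw}$ denotes the pointwise (1 × 1) convolution, and $\phi$ is the nonlinear activation function. Compared with a standard convolution, this factorization substantially reduces the number of parameters and the computational cost.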
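The saving from this factorization can be made concrete: a standard k × k convolution from C_in to C_out channels has k²·C_in·C_out weights, whereas the depthwise + pointwise pair has k²·C_in + C_in·C_out. The sketch below compares the two for a 3 × 3 kernel; the channel width of 64 is a hypothetical value, not one reported for MSRGAN.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k depthwise filter per input channel plus a 1 x 1 pointwise projection (bias omitted)."""
    return k * k * c_in + c_in * c_out


c = 64  # hypothetical channel width
std = standard_conv_params(3, c, c)        # 9 * 64 * 64 = 36864
dws = depthwise_separable_params(3, c, c)  # 9 * 64 + 64 * 64 = 576 + 4096 = 4672
print(std, dws, std / dws)
```

Because both layer types visit every spatial position once, multiply-accumulate counts scale by H × W and shrink by the same ratio (here roughly 7.9×).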

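DeepInception adopts the Squeeze-and-Excitation (SE) mechanism to enhance the model's feature selection capability for precipitation patterns. The SE module captures global channel-wise information through Global Average Pooling (GAP) and adaptively adjusts the weights of different channels via two fully connected layers. The SE module is computed as follows:

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j), \qquad s = \sigma\left(W_2\, \delta\left(W_1 z\right)\right), \qquad \tilde{x}_c = s_c \cdot x_c,$$

where $z_c$ denotes the global average pooling result of channel $c$, $W_1$ and $W_2$ are the weights of the fully connected layers, $\delta$ is the intermediate nonlinearity (ReLU in the original SE design [32]), $\sigma$ represents the Sigmoid activation function, and $\tilde{x}_c$ is the recalibrated feature of channel $c$.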
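The squeeze, excitation, and scale steps of the SE module can be traced on a tiny example. The sketch below uses nested lists for a [C][H][W] feature map, omits biases, and uses ReLU between the two fully connected layers (an assumption; the text does not name this activation). The weight matrices are hypothetical hand-picked values, not trained parameters.

```python
import math


def se_recalibrate(x, w1, w2):
    """Squeeze-and-Excitation on a feature map x of shape [C][H][W] (nested lists).

    w1: [C_r][C] reduction-layer weights; w2: [C][C_r] expansion-layer weights.
    Biases are omitted; ReLU between the layers is an assumption.
    """
    # Squeeze: global average pooling per channel -> z_c
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: s = sigmoid(W2 * relu(W1 * z))
    hidden = [max(0.0, sum(wij * zj for wij, zj in zip(wi, z))) for wi in w1]
    s = [1.0 / (1.0 + math.exp(-sum(wij * hj for wij, hj in zip(wi, hidden)))) for wi in w2]
    # Scale: multiply every spatial position of channel c by s_c
    return [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, x)], s


x = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0, mean 1
     [[3.0, 3.0], [3.0, 3.0]]]   # channel 1, mean 3
w1 = [[0.5, 0.5]]                # hypothetical: reduce 2 channels to 1
w2 = [[1.0], [-1.0]]             # hypothetical: expand back to 2 channels
y, s = se_recalibrate(x, w1, w2)
print(s)  # channel 0 is weighted more heavily than channel 1
```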

To mitigate the vanishing gradient problem, we introduce residual connections, which add the input features element-wise to the output of the DeepInception module. This structure not only improves gradient propagation but also accelerates model convergence. By combining precipitation-tailored multi-scale feature extraction, strategically placed SE attention, and residual connections, DeepInception achieves better detail restoration in precipitation image super-resolution than generic super-resolution approaches.

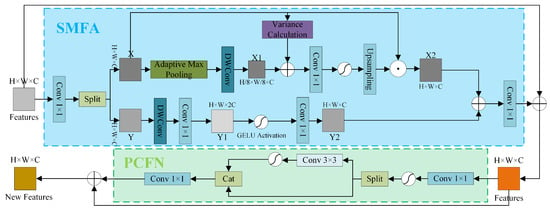

2.2.2. Multi-Scale Feature Modulation (MSFM) Module

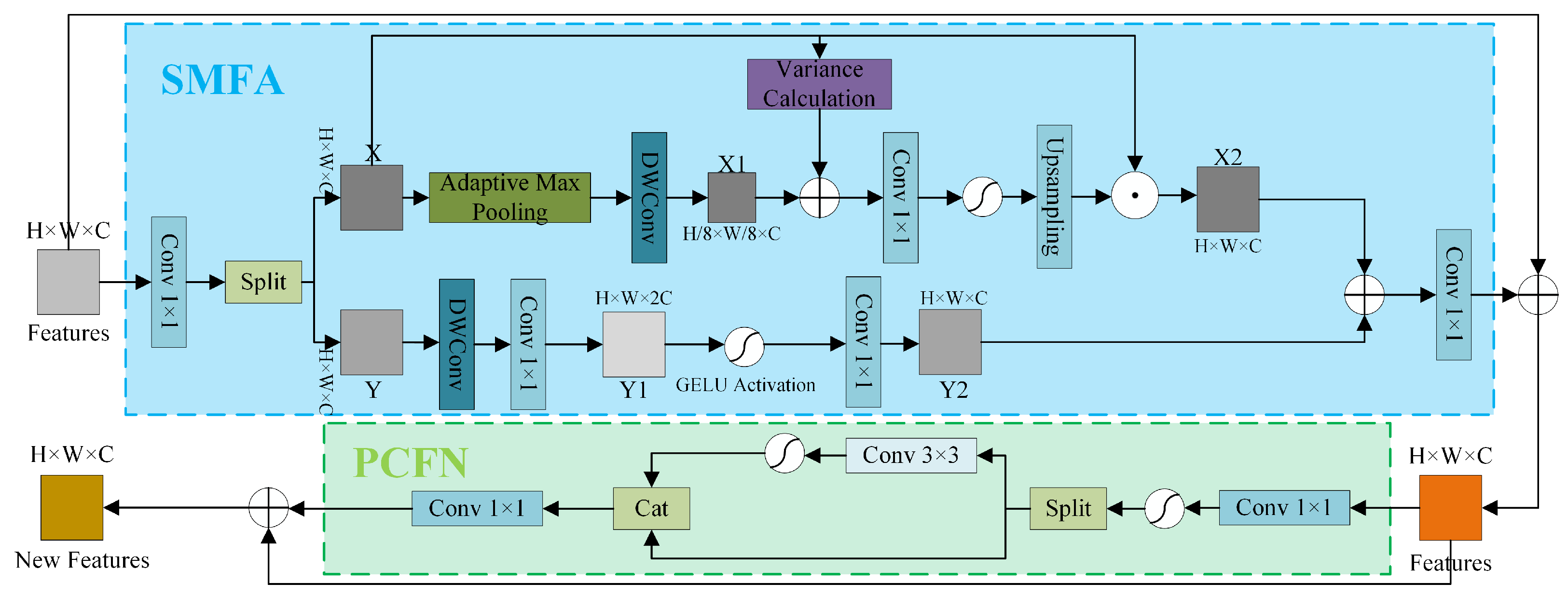

In precipitation image super-resolution tasks, the model needs not only to accurately recover spatial details but also to enhance multi-scale feature representation capabilities, in order to effectively fuse both local and global information. To address this, we propose the Multi-Scale Feature Modulation (MSFM) module, as illustrated in Figure 3. This module improves the model’s feature representation ability through adaptive feature aggregation and a dynamic feature modulation mechanism, thereby further enhancing super-resolution reconstruction performance. MSFM mainly consists of the following two components: Self-Modulated Feature Aggregation (SMFA) and the Partial Convolutional Feedforward Network (PCFN). Among them, SMFA is responsible for adaptive modulation across multi-scale features, while PCFN leverages partial convolution operations to locally enhance features, thereby improving the model’s nonlinear representational capacity.

Figure 3.

Architecture of the Multi-Scale Feature Modulation (MSFM) module, comprising SMFA and PCFN for cross-scale feature aggregation and local nonlinear enhancement in precipitation image reconstruction.

The SMFA module adopts a dual-path feature interaction mechanism to achieve the soft fusion of multi-scale features through dynamic weight allocation. Specifically, the input feature map is first split along the channel dimension into a content-aware path and a modulation path. In the content-aware path, spatial detail features are extracted using depthwise separable convolution, and a multi-scale feature pyramid is constructed via adaptive max pooling. In the modulation path, we innovatively introduce feature variance as a spatial attention signal, which, in combination with inter-scale feature statistics, is used to generate dynamic modulation coefficients.

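Given an input feature $F \in \mathbb{R}^{C \times H \times W}$, we first obtain the initial features of the two paths through a linear transformation, $[X, Y] = \mathrm{Split}\left(\mathrm{Conv}_{1\times1}(F)\right)$. Here, $Y$ is fed into the feature enhancement network DMlp, while $X$ passes through the following scale transformation, which combines adaptive max pooling with depthwise convolution:

$$X_s = \mathrm{DWConv}\left(\mathrm{AMP}(X)\right), \qquad X_v = \mathrm{Var}(X),$$

where $\mathrm{AMP}(\cdot)$ denotes adaptive max pooling and $\mathrm{Var}(\cdot)$ computes the spatial feature variance. Feature fusion is then performed using the following gating mechanism:

$$X_l = \phi\left(\mathrm{Conv}_{1\times1}\left(\alpha X_s + \beta X_v\right)\right), \qquad \hat{X} = X \odot \mathcal{U}(X_l), \qquad F_{out} = \mathrm{Conv}_{1\times1}\left(\hat{X} + \hat{Y}\right),$$

where $\alpha$ and $\beta$ are learnable parameters, $\phi$ is a nonlinear activation, $\mathcal{U}(\cdot)$ denotes upsampling to the original resolution, $\odot$ denotes element-wise multiplication, and $\hat{Y}$ denotes the features processed by DMlp.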
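The self-modulation idea in SMFA (coarse-scale statistics plus feature variance, weighted by two learnable scalars, gating the original features, then fused with the DMlp output) can be illustrated on a single channel. This is a heavily simplified, hypothetical sketch: it omits the 1 × 1 and depthwise convolutions, assumes GELU as the activation, a pooling factor of 2, nearest-neighbour upsampling, and fixed values alpha = beta = 1 standing in for the learnable parameters.

```python
import math


def gelu(v: float) -> float:
    """Exact GELU: 0.5 * v * (1 + erf(v / sqrt(2)))."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def adaptive_max_pool(grid, out_h, out_w):
    """Max over equal blocks; assumes H and W are divisible by out_h and out_w."""
    bh, bw = len(grid) // out_h, len(grid[0]) // out_w
    return [[max(grid[a][b] for a in range(i * bh, (i + 1) * bh) for b in range(j * bw, (j + 1) * bw))
             for j in range(out_w)] for i in range(out_h)]


def smfa_modulate(x, y_hat, alpha=1.0, beta=1.0, factor=2):
    """Simplified single-channel self-modulation (hypothetical sketch).

    x: modulation-path feature [H][W]; y_hat: stand-in for the DMlp output [H][W].
    """
    h, w = len(x), len(x[0])
    assert h % factor == 0 and w % factor == 0
    x_s = adaptive_max_pool(x, h // factor, w // factor)              # coarse-scale statistics
    mean = sum(map(sum, x)) / (h * w)
    x_v = sum((v - mean) ** 2 for row in x for v in row) / (h * w)    # spatial variance
    x_l = [[gelu(alpha * v + beta * x_v) for v in row] for row in x_s]
    # Nearest-neighbour upsampling back to H x W, then element-wise modulation of x
    x_hat = [[x[i][j] * x_l[i // factor][j // factor] for j in range(w)] for i in range(h)]
    # Fuse the modulated features with the local-detail features
    return [[x_hat[i][j] + y_hat[i][j] for j in range(w)] for i in range(h)]


x = [[0.0, 0.0, 0.0, 0.0],
     [0.0, 4.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
y_hat = [[0.1] * 4 for _ in range(4)]  # hypothetical DMlp output
print(smfa_modulate(x, y_hat)[1][1])
```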

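PCFN adopts a channel-grouped dynamic activation mechanism that applies different feature processing strategies during training and inference. The hidden features are divided into an activated group and a bypass group, and only the activated group is processed by depthwise convolution:

$$H = \phi\left(\mathrm{Conv}_{1\times1}(F)\right), \qquad [H_a, H_b] = \mathrm{Split}(H), \qquad F_{out} = \mathrm{Conv}_{1\times1}\left(\left[\phi\left(\mathrm{DWConv}(H_a)\right),\, H_b\right]\right),$$

where $H_a$ contains a proportion $p$ of the hidden channels, $H_b$ contains the remaining channels, and $[\cdot,\cdot]$ denotes channel-wise concatenation. The parameter $p$ thus controls the proportion of channels subjected to partial convolution.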
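The partial-convolution idea reduces to "process the first fraction p of the channels, pass the rest through unchanged, then concatenate". The sketch below shows only that split; the per-channel function `double` is a hypothetical stand-in for the depthwise convolution and activation, and p = 0.25 is an illustrative value.

```python
def partial_apply(channels, p, fn):
    """Apply `fn` to the first int(p * C) channels (activated group); pass the rest through (bypass group)."""
    k = int(len(channels) * p)
    return [fn(ch) for ch in channels[:k]] + channels[k:]


def double(ch):
    """Hypothetical stand-in for depthwise convolution + activation on one channel."""
    return [2.0 * v for v in ch]


hidden = [[float(c + 1)] * 3 for c in range(8)]  # 8 hidden channels, 3 values each
out = partial_apply(hidden, p=0.25, fn=double)
print(out[:3])  # channels 0 and 1 processed, channel 2 onward unchanged
```

Since only int(p · C) channels are convolved, the convolution's parameters and compute scale roughly with p, which is what makes the feedforward block cheaper.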

2.2.3. Spatial-Channel Attention Network (SCAN)

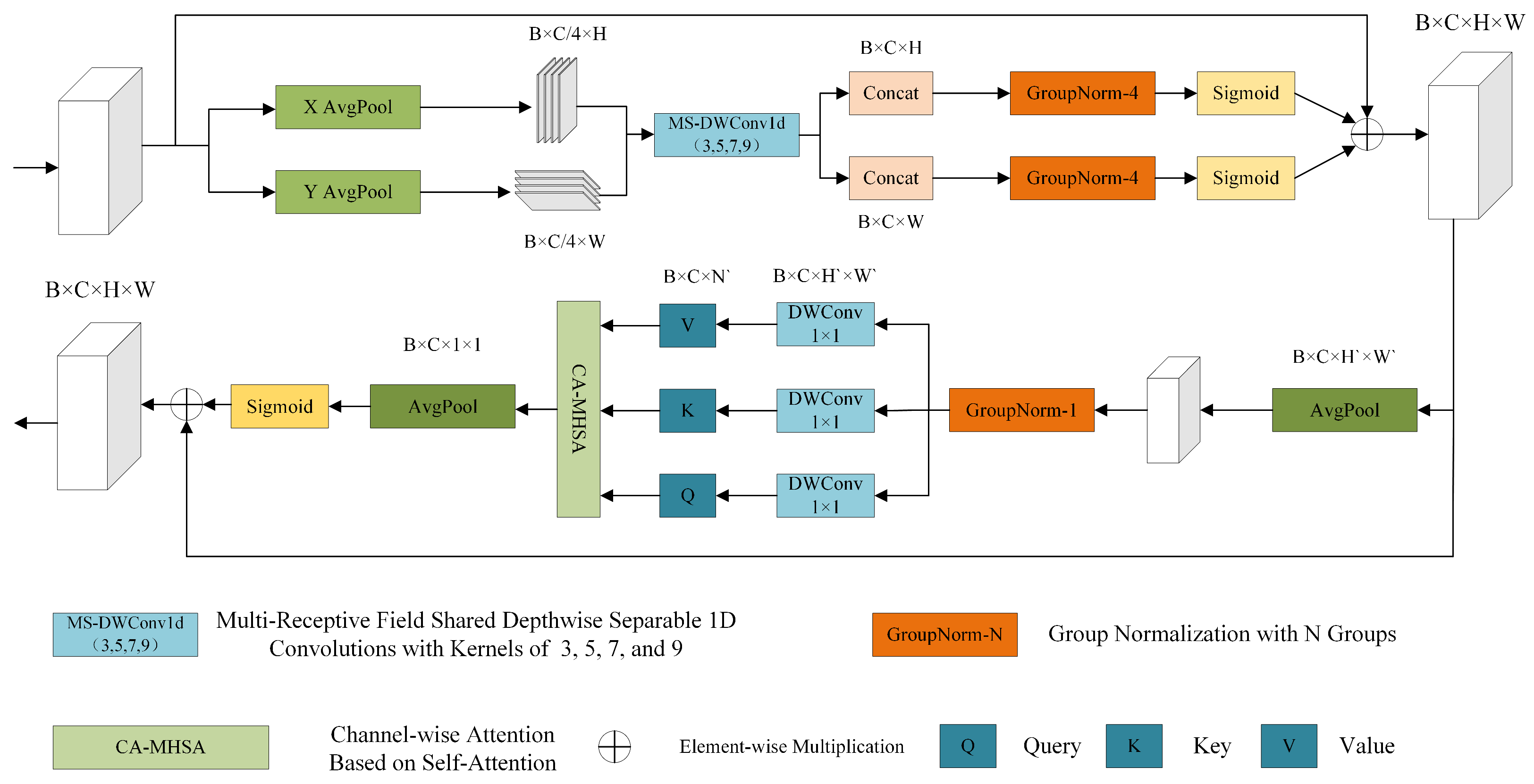

In the task of precipitation super-resolution, accurately capturing both local and global features of precipitation images is crucial. To enhance the model’s ability to identify precipitation regions, we propose a Spatial-Channel Attention Network (SCAN) module, which strengthens the interaction between spatial and channel information during feature extraction, as illustrated in Figure 4. SCAN integrates a multi-scale convolutional spatial attention mechanism with a self-attention-based channel attention mechanism. The spatial attention component models both short- and long-range spatial dependencies by introducing multi-scale depthwise convolutions along the height and width dimensions. Meanwhile, the channel attention component leverages a multi-head self-attention mechanism to model dependencies along the channel dimension, thereby improving the model’s focus on salient features.

Figure 4.

Architecture of the proposed Spatial-Channel Attention Network (SCAN). The module integrates multi-scale convolutional spatial attention and multi-head self-attention-based channel attention to enhance feature extraction in precipitation image super-resolution. The spatial attention mechanism captures both short- and long-range dependencies via local and global depthwise convolutions with varying kernel sizes (3, 5, 7, and 9), while the channel attention mechanism strengthens important feature responses across channels using self-attention.

SCAN first computes the global average features of the input feature map along the horizontal (H dimension) and vertical (W dimension) directions to extract directional information of the image. These features are then divided into four subgroups and processed using local depthwise convolutions and global depthwise convolutions with varying kernel sizes. Specifically, the kernel sizes are set to 3, 5, 7, and 9 to effectively cover precipitation areas of different spatial scales. Finally, a Softmax or Sigmoid gating mechanism is employed to fuse the multi-scale features and generate the spatial attention weights. These weights are applied to the original feature map to enhance the model’s response to precipitation regions.
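The spatial branch described above can be sketched as strip pooling followed by grouped 1-D filtering and sigmoid gating. In this hypothetical sketch, each channel is averaged along each axis to obtain a row profile and a column profile, the channels are split into four groups that use kernel sizes 3, 5, 7, and 9, each profile is filtered with a uniform kernel (a stand-in for learned depthwise weights), and the outer product of the sigmoid-gated profiles rescales the channel. The sigmoid variant of the gate is used; the separation into local and global convolutions is not modeled.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def conv1d_same(seq, kernel):
    """1-D convolution (cross-correlation) with zero padding; output length equals input length."""
    r = len(kernel) // 2
    n = len(seq)
    return [sum(kernel[t] * seq[i + t - r] for t in range(len(kernel)) if 0 <= i + t - r < n)
            for i in range(n)]


def directional_gate(channel, k):
    """Average one channel along each axis, filter both 1-D profiles, and gate the channel."""
    h, w = len(channel), len(channel[0])
    row_profile = [sum(row) / w for row in channel]                            # length H
    col_profile = [sum(channel[i][j] for i in range(h)) / h for j in range(w)]  # length W
    kernel = [1.0 / k] * k  # hypothetical uniform weights; learned in practice
    gh = [sigmoid(v) for v in conv1d_same(row_profile, kernel)]
    gw = [sigmoid(v) for v in conv1d_same(col_profile, kernel)]
    return [[channel[i][j] * gh[i] * gw[j] for j in range(w)] for i in range(h)]


def scan_spatial(features, kernel_sizes=(3, 5, 7, 9)):
    """Split channels into equal groups; group g uses kernel_sizes[g] for its 1-D filters."""
    assert len(features) % len(kernel_sizes) == 0, "channel count must divide into groups"
    group = len(features) // len(kernel_sizes)
    return [directional_gate(ch, kernel_sizes[idx // group]) for idx, ch in enumerate(features)]


feats = [[[1.0] * 9 for _ in range(9)] for _ in range(4)]  # 4 channels of a 9 x 9 map
out = scan_spatial(feats)
print(out[0][4][4], out[3][0][0])
```

Larger kernels average over wider strips, so groups with kernel 7 or 9 respond to broad rain bands while kernel 3 keeps the gate sensitive to narrow features.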

While the spatial attention mechanism enhances feature representation along the spatial dimension, it remains insufficient in facilitating information interaction across the channel dimension. To address this, we further introduce a self-attention-based channel attention mechanism. First, a pooling layer (either average pooling or max pooling) is applied to the input feature map to reduce dimensionality and computational cost. Then, convolutions are used to generate the query (Q), key (K), and value (V) vectors, which are subsequently reshaped to accommodate multi-head self-attention computation. The resulting attention matrix is used to weight the value vectors, thereby enhancing the representation of important channels. Finally, a Softmax or Sigmoid gating mechanism is applied to produce the channel attention weights, which are used to modulate the original feature map and improve the extraction of fine-grained information in precipitation regions.
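The channel branch computes attention between channels rather than between spatial positions. The sketch below assumes the pooled feature map is already flattened to a [C][N] list, uses the features themselves as Q, K, and V (identity stand-ins for the learned convolutions), splits channels evenly across heads, scales scores by the square root of N, and turns each channel's attended response into a sigmoid gate. All of these specifics are assumptions made for illustration.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def channel_attention_gates(feats, heads=2):
    """Multi-head self-attention across channels, followed by a sigmoid gate per channel.

    feats: [C][N] pooled feature map flattened per channel. Q, K and V are the features
    themselves (identity stand-ins for learned convolutions). Channels are split into
    `heads` groups, and attention is computed among the channels of each group.
    """
    c, n = len(feats), len(feats[0])
    assert c % heads == 0, "channel count must divide evenly into heads"
    d = c // heads
    gates = []
    for start in range(0, c, d):
        q = k = v = feats[start:start + d]
        for qi in q:
            scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(n) for kj in k]
            attn = softmax(scores)  # weights over the channels in this head
            mixed = [sum(attn[j] * v[j][t] for j in range(d)) for t in range(n)]
            gates.append(1.0 / (1.0 + math.exp(-sum(mixed) / n)))  # sigmoid of the mean response
    return gates


pooled = [[1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [3.0, 1.0]]  # C = 4 channels, N = 2 positions
gates = channel_attention_gates(pooled, heads=2)
modulated = [[g * val for val in row] for g, row in zip(gates, pooled)]
print(gates)
```

In a full implementation the gates would modulate the original (unpooled) feature map; the pooled map is reused here only to keep the example short.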

3. Data and Experimental Configuration

3.1. Dataset

In this study, we used regional climate model (RCM) simulations driven by the NCEP–DOE Reanalysis II (NCEP-R2) of the National Centers for Environmental Prediction and the U.S. Department of Energy. The dataset consists of one year (2005) of simulations conducted with the Weather Research and Forecasting (WRF) model, version 3.3.1 [11]. Two simulations were performed, both covering the Continental United States (CONUS) with 2920 time steps each; their spatial resolutions are 50 km and 12 km, respectively, and the temporal resolution is 3 h. The 12 km simulation has a grid of 512 × 256 cells, while the 50 km simulation has a grid of 128 × 64 cells. Both simulations share identical configurations and physical parameterizations and differ only in spatial resolution.

Because precipitation variability is influenced by a wide range of factors, capturing these influences is a core focus of this study. Motivated by the physical mechanisms of precipitation, we extend the input variable set beyond that of the super-resolution (SR) model proposed by Vandal et al. [10]. In addition to low-resolution precipitation and high-resolution topographic data, we incorporate three meteorological variables as supplementary inputs (see Table 1): Integrated Water Vapor (IWV), Sea Level Pressure (SLP), and 2 m Air Temperature (T2). All of these variables are obtained from the same WRF simulation dataset driven by NCEP-R2 reanalysis data. These atmospheric variables are strongly correlated with precipitation over time and provide richer physical context, which enhances the model’s ability to characterize precipitation distributions. Each meteorological variable exhibits clear spatial dependencies, so its data structure is somewhat analogous to that of an image. Unlike conventional image data, however, meteorological data are often sparse, dynamic, and highly nonlinear, which makes them harder for models to learn.

To ensure the validity of the input data, we applied several preprocessing steps to the precipitation data. First, a minimum precipitation threshold of 0.05 mm per 3 h was applied to each grid cell. This removes the influence of very light rain or no-rain conditions that could interfere with neural network training. Second, precipitation values were capped at the 99.5th percentile to prevent extreme events from disproportionately affecting the loss function, thereby improving training stability.
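A minimal sketch of these two preprocessing steps follows. Three details are assumptions, since the text does not specify them:

- Sub-threshold values are set to zero.
- The 99.5th percentile is computed over all input values with linear interpolation (NumPy's default convention).
- The function names are illustrative.

```python
def percentile(values, q):
    """Linearly interpolated percentile (same convention as NumPy's default)."""
    ordered = sorted(values)
    rank = (q / 100.0) * (len(ordered) - 1)
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    frac = rank - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * frac


def preprocess(precip, min_mm=0.05, cap_pct=99.5):
    """Apply the 0.05 mm per 3 h threshold and the 99.5th-percentile cap."""
    cap = percentile(precip, cap_pct)  # assumption: percentile over all raw values
    cleaned = []
    for value in precip:
        if value < min_mm:
            value = 0.0  # assumption: sub-threshold amounts are treated as no rain
        cleaned.append(min(value, cap))
    return cleaned


# Usage on hypothetical values (mm per 3 h).
sample = [float(i) for i in range(201)]
processed = preprocess(sample)
```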

Table 1.

Inputs and outputs of the newly developed precipitation downscaling model in this study.

3.2. Implementation Details

We implemented our model in PyTorch (version 2.6.1), using its GPU acceleration for efficient training and inference on large-scale data. The 3-hourly precipitation data from January to September 2005 were used for training and validation. The data from October to December 2005 were reserved for testing the model’s generalization to unseen data. The initial learning rates of both the generator and the discriminator were set to 0.0004. A cosine annealing schedule adjusted the learning rate throughout training, which can help the model converge more smoothly and avoid poor local minima. Model parameters were updated with the Adam optimizer, which provides stable gradient updates and fast convergence. The model was trained on a Tesla T4 GPU with a batch size of 8 for a total of 12,000 epochs, allowing it to fully capture the data characteristics and converge stably.
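For reference, the closed-form cosine annealing schedule is sketched below with the stated initial learning rate of 0.0004. The schedule length (12,000 epochs, with no warm restarts) and the minimum learning rate (`eta_min = 0`) are assumptions, since the text does not specify them. PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min)` documents the same closed form.

```python
import math


def cosine_annealing_lr(epoch, base_lr=4e-4, total_epochs=12_000, eta_min=0.0):
    """Learning rate at a given epoch under cosine annealing (no warm restarts).

    total_epochs and eta_min are assumed values, not settings stated in the paper.
    """
    progress = min(epoch, total_epochs) / total_epochs
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 3_000, 6_000, 12_000):
    print(epoch, f"{cosine_annealing_lr(epoch):.2e}")
```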

The choice of loss function is central to the model’s overall effectiveness. Unlike standard GANs, whose generators take random noise as input, our generator takes the coarse-resolution precipitation field and the conditioning variables as input, forming a conditional GAN (CGAN) framework. This framework allows the generator to be trained with a composite loss that combines an adversarial term with a norm-based content term.

For the generator loss, we adopted the scheme proposed by Li et al. [13], which combines a weighted mean norm loss with an adversarial loss. The symbols in this loss are defined as follows:

- Two weighting coefficients set the relative contributions of the adversarial term and the norm term.
- mb denotes the mini-batch size.
- The conditioning input comprises the coarse-grained variables: precipitation, IWV, SLP, and T2.
- Di denotes the discriminator, which applies binary cross-entropy to its Sigmoid output for real/fake classification.
- Ge denotes the generator and its trainable parameters.
- P is the precipitation at grid cell i for a given time step.
- The regional mean precipitation also appears in the loss.

The discriminator is trained with binary cross-entropy as its loss function. In this framework, the norm term preserves the low-frequency information of the image, while the adversarial term drives the generator to produce precipitation fields that closely resemble real high-resolution precipitation patterns. The weights are treated as hyperparameters and tuned while the generator and discriminator are trained jointly. Based on hyperparameter tuning, we set the adversarial weight to 1 and the norm weight to 3. After training, the generator alone is used to downscale the low-resolution precipitation data.
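A minimal sketch of a composite generator loss of this kind follows, together with the matching binary cross-entropy discriminator loss. It does not reproduce the equation of Li et al. [13]. The following are assumptions made for illustration:

- The L1 (mean absolute error) content term.
- The omission of the regional-mean normalization.
- The function names.

The weights 1 (adversarial) and 3 (norm) follow the values selected in the text.

```python
import math

EPS = 1e-7  # keeps log() finite when the discriminator saturates


def bce(prob, target):
    """Binary cross-entropy for one Sigmoid output of the discriminator."""
    prob = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def generator_loss(d_fake, generated, reference, w_adv=1.0, w_norm=3.0):
    """Weighted adversarial BCE plus weighted content term (L1 is an assumed norm).

    d_fake: discriminator probabilities for generated fields in the mini-batch.
    generated, reference: flattened high-resolution precipitation values.
    """
    # The generator is rewarded when the discriminator labels its outputs as real (target 1).
    adversarial = sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
    content = sum(abs(g - r) for g, r in zip(generated, reference)) / len(reference)
    return w_adv * adversarial + w_norm * content


def discriminator_loss(d_real, d_fake):
    """BCE with real fields labeled 1 and generated fields labeled 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term
```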

In addition, because the two simulations differ in spatial resolution, their precipitation fields are not fully consistent with each other. To reduce the effect of this inconsistency on downscaling performance, we refined the experimental design. Specifically, we first downsampled the original 12 km simulation data to 50 km. We then trained the model jointly on the downsampled data and the original 12 km precipitation data. The idea is that the model learns the relationship between data at different resolutions during training, which improves its ability to reconstruct low-resolution data. After training, we applied the model to the original 50 km simulation data to evaluate its performance in practical applications. This strategy follows Wang et al. [11], who showed that joint training on data with different resolutions can improve generalization and achieve higher accuracy in downscaling tasks. Our experimental results further confirm its effectiveness: the model accurately reconstructs high-resolution precipitation fields and maintains robust performance across precipitation intensities.
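The downsampling step can be illustrated with simple block averaging. Two choices are assumptions, because the text does not state which operator was used to coarsen the 12 km data:

- The 4 × 4 block mean below, which matches the 512 × 256 to 128 × 64 grid ratio.
- The orientation of 256 rows (latitude) by 512 columns (longitude).

```python
def block_mean_downsample(field, factor=4):
    """Average non-overlapping factor x factor blocks of a 2D grid (list of rows)."""
    height, width = len(field), len(field[0])
    assert height % factor == 0 and width % factor == 0, "grid must divide evenly"
    area = factor * factor
    return [
        [
            sum(field[r + i][c + j] for i in range(factor) for j in range(factor)) / area
            for c in range(0, width, factor)
        ]
        for r in range(0, height, factor)
    ]


# Hypothetical 12 km field: 256 rows (latitude) x 512 columns (longitude).
hr_field = [[0.0] * 512 for _ in range(256)]
lr_field = block_mean_downsample(hr_field)
print(len(lr_field), len(lr_field[0]))
```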

3.3. Evaluation Metrics

To comprehensively evaluate the predictive performance of the model, this study adopts several evaluation metrics: the Critical Success Index (CSI), Heidke Skill Score (HSS), False Alarm Ratio (FAR), Probability Density Function (PDF), and Jensen–Shannon Divergence (J-S Divergence) [36,37]. These metrics quantify, respectively, the model’s event detection, overall skill, false alarm tendency, agreement of precipitation probability distributions, and distributional differences.

The CSI is widely used for binary event verification and is especially suitable when precipitation events are sparse. It is the ratio of correctly predicted events (True Positives, TP) to the union of observed events (TP + False Negatives, FN) and predicted events (TP + False Positives, FP). This union contains TP + FN + FP cases:

$$\mathrm{CSI} = \frac{TP}{TP + FN + FP}$$

Here, TP refers to the number of correctly predicted precipitation events, FP indicates the number of falsely predicted precipitation events, and FN denotes the number of missed precipitation events. The CSI ranges from 0 to 1, and values closer to 1 indicate a stronger ability to capture precipitation events. Because CSI ignores true negatives (TN) and focuses on the detection of precipitation events, it is particularly suitable for applications where missed detections carry a higher cost.
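A sketch of how the contingency counts and CSI can be computed for one threshold follows. It operates on paired predicted and observed fields flattened to lists. The following are assumptions:

- Values greater than or equal to the threshold are treated as events.
- The function returns NaN when no events occur.
- The function names are illustrative.

```python
def contingency(pred, obs, threshold):
    """Count TP, FP, FN, TN for one precipitation threshold (>= counts as an event)."""
    tp = fp = fn = tn = 0
    for p, o in zip(pred, obs):
        pred_event, obs_event = p >= threshold, o >= threshold
        if pred_event and obs_event:
            tp += 1  # hit
        elif pred_event:
            fp += 1  # false alarm
        elif obs_event:
            fn += 1  # miss
        else:
            tn += 1  # correct non-event
    return tp, fp, fn, tn


def csi(tp, fp, fn):
    """Critical Success Index: hits over the union of observed and predicted events."""
    denom = tp + fp + fn
    return tp / denom if denom else float("nan")


# Usage with hypothetical values at the 0.5 mm threshold.
tp, fp, fn, tn = contingency([0.0, 1.0, 6.0, 7.0, 0.2], [0.0, 2.0, 6.0, 0.1, 5.0], 0.5)
score = csi(tp, fp, fn)
```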

The Heidke Skill Score (HSS) evaluates the overall classification skill by comparing the model’s performance with that of random guessing. It is computed from the full confusion matrix as follows:

$$\mathrm{HSS} = \frac{2\,(TP \cdot TN - FN \cdot FP)}{(TP + FN)(FN + TN) + (TP + FP)(FP + TN)}$$

The Heidke Skill Score (HSS) ranges from negative infinity to 1. A value of 1 indicates perfect prediction, 0 indicates no skill relative to random chance, and negative values indicate performance worse than random chance. Unlike the Critical Success Index (CSI), HSS also counts correctly predicted non-events (TN). It therefore accounts for the balance between positive and negative classes, which makes it suitable for imbalanced datasets. For example, in climate prediction tasks, HSS reflects a model’s overall ability to distinguish between normal and extreme precipitation events.
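The HSS formula translates directly into code. The usage lines reproduce the boundary cases described above: perfect prediction gives 1, chance-level prediction gives 0, and a fully inverted prediction gives a negative score. The function name is illustrative.

```python
def hss(tp, fp, fn, tn):
    """Heidke Skill Score from a 2x2 contingency table (NaN if undefined)."""
    denom = (tp + fn) * (fn + tn) + (tp + fp) * (fp + tn)
    return 2.0 * (tp * tn - fn * fp) / denom if denom else float("nan")


perfect = hss(5, 0, 0, 5)    # every event and non-event correct
chance = hss(1, 1, 1, 1)     # no better than random
inverted = hss(0, 5, 5, 0)   # every case wrong
```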

The False Alarm Ratio (FAR) measures the proportion of false positives among all predicted positive cases, reflecting the reliability of the prediction results. It is defined as follows:

$$\mathrm{FAR} = \frac{FP}{TP + FP}$$

The False Alarm Ratio (FAR) ranges from 0 to 1, and lower values indicate fewer false alarms. In resource-constrained scenarios such as flood warning systems, a high FAR may trigger unnecessary emergency responses, so FAR should be analyzed together with the Critical Success Index (CSI). For instance, if a model achieves a high CSI but also a high FAR, its event detection capability must be weighed against the cost of false alarms.
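The sketch below computes FAR and illustrates its trade-off with CSI using two hypothetical contingency tables, which are not results from this study. Both models reach a CSI of about 0.82, but their FARs differ by roughly a factor of seven.

```python
def far(tp, fp):
    """False Alarm Ratio: share of predicted events that did not occur."""
    predicted = tp + fp
    return fp / predicted if predicted else float("nan")


def csi(tp, fp, fn):
    """Critical Success Index, included so the comparison is self-contained."""
    return tp / (tp + fp + fn)


# Hypothetical models evaluated against the same 90 observed events.
model_a = {"tp": 90, "fp": 20, "fn": 0}   # catches every event, many false alarms
model_b = {"tp": 75, "fp": 2, "fn": 15}   # misses some events, few false alarms
for name, m in (("A", model_a), ("B", model_b)):
    print(name, round(csi(**m), 3), round(far(m["tp"], m["fp"]), 3))
```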

The Probability Density Function (PDF) describes the statistical distribution of precipitation intensity and indicates whether model-generated precipitation conforms to the statistical patterns of real precipitation. Let P denote the true distribution of precipitation and Q the distribution of model-predicted precipitation. Each PDF is estimated from samples using kernel density estimation:

$$\hat{p}(x) = \frac{1}{N h} \sum_{i=1}^{N} K\!\left(\frac{x - x_i}{h}\right)$$

where $\hat{p}(x)$ is the kernel density estimate, $K(\cdot)$ is the kernel function, $h$ is the bandwidth, $x_i$ denotes the sample data, and $N$ is the number of samples. The PDFs are computed using histograms or kernel density estimation to compare the probability distributions of real and model-predicted precipitation at different intensities.
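The sketch below implements kernel density estimation, as described above, in plain Python. The following are assumptions for illustration, because the text does not state which kernel or bandwidth was used:

- The Gaussian kernel.
- The normal-reference bandwidth rule h = 1.06 σ N^(-1/5).
- The sample values in the usage lines.

Precipitation is heavily skewed, so the bandwidth choice matters most in the tail.

```python
import math


def gaussian_kde(samples, bandwidth):
    """Return p_hat(x) = 1/(N h) * sum_i K((x - x_i) / h) with a Gaussian kernel K."""
    n = len(samples)
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in samples)

    return density


def normal_reference_bandwidth(samples):
    """Rule-of-thumb bandwidth h = 1.06 * sigma * N^(-1/5) (an assumed choice)."""
    n = len(samples)
    mean = sum(samples) / n
    sigma = math.sqrt(sum((s - mean) ** 2 for s in samples) / (n - 1))
    return 1.06 * sigma * n ** (-0.2)


# Hypothetical samples (mm per 3 h) for the reference and a model prediction.
truth = [0.1, 0.4, 0.8, 1.5, 2.0, 3.5, 6.0, 12.0]
pred = [0.2, 0.5, 0.7, 1.2, 1.8, 3.0, 5.0, 8.0]
p_hat = gaussian_kde(truth, normal_reference_bandwidth(truth))
q_hat = gaussian_kde(pred, normal_reference_bandwidth(pred))
for x in (1.0, 5.0, 10.0):
    print(x, round(p_hat(x), 4), round(q_hat(x), 4))
```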

The Jensen–Shannon (J-S) divergence measures the similarity between two probability distributions and is widely used to compare predicted and observed precipitation distributions. It is built on the Kullback–Leibler (KL) divergence. For the true distribution P and the predicted distribution Q, it is defined as follows:

$$D_{\mathrm{JS}}(P \,\|\, Q) = \frac{1}{2} D_{\mathrm{KL}}(P \,\|\, M) + \frac{1}{2} D_{\mathrm{KL}}(Q \,\|\, M)$$

Here, $M = \frac{1}{2}(P + Q)$ is the average of the true and predicted distributions, and the Kullback–Leibler (KL) divergence is computed as follows:

$$D_{\mathrm{KL}}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

The Jensen–Shannon (J-S) divergence is bounded between 0 and ln 2, or between 0 and 1 when base-2 logarithms are used. Values closer to 0 indicate that the predicted precipitation distribution is more similar to the true distribution, meaning that the model more accurately captures the statistical characteristics of the precipitation field. Unlike the Kullback–Leibler (KL) divergence, the J-S divergence is symmetric and always finite. This makes it easier to interpret and better suited to precipitation data modeling.
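The J-S divergence can be computed from histograms of observed and predicted precipitation that share the same bin edges. The sketch uses natural logarithms, so the maximum value is ln 2. The bin edges and sample values are hypothetical. Some libraries return the J-S distance, the square root of this divergence; SciPy's `scipy.spatial.distance.jensenshannon` is one example.

```python
import math


def kl_divergence(p, q):
    """KL(P || Q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence with natural logs (bounded by ln 2)."""
    sp, sq = sum(p), sum(q)
    p = [x / sp for x in p]  # normalize histogram counts to probabilities
    q = [x / sq for x in q]
    m = [(a + b) / 2.0 for a, b in zip(p, q)]  # mixture distribution M
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def histogram(samples, edges):
    """Counts per bin [edges[k], edges[k+1]); the last bin also includes its right edge."""
    counts = [0] * (len(edges) - 1)
    for s in samples:
        for k in range(len(counts)):
            last = k == len(counts) - 1
            if edges[k] <= s < edges[k + 1] or (last and s == edges[-1]):
                counts[k] += 1
                break
    return counts


# Hypothetical samples (mm per 3 h) binned on shared edges.
edges = [0.0, 0.5, 1.0, 2.0, 5.0, 10.0, 50.0]
truth = [0.1, 0.4, 0.8, 1.5, 2.0, 3.5, 6.0, 12.0]
pred = [0.2, 0.5, 0.7, 1.2, 1.8, 3.0, 5.0, 8.0]
print(round(js_divergence(histogram(truth, edges), histogram(pred, edges)), 4))
```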

4. Experimental Results and Analysis

To thoroughly evaluate model effectiveness, we compared the outputs of MSRGAN and six baseline methods against the reference data, using both quantitative metrics and qualitative visual assessment. The baselines are VDSR [38], LapSRN [31], ESPCN [39], DeepSD [10], Encoded-CGAN [11], and cubic spline interpolation.

VDSR: VDSR is a high-precision single-image super-resolution method that adopts a deep convolutional network structure. By cascading multiple small filters, it effectively integrates large-scale contextual information, significantly improving reconstruction accuracy.

LapSRN: LapSRN is based on a Laplacian pyramid architecture that progressively predicts high-frequency residuals and uses transposed convolutions for upsampling. It eliminates the need for bicubic interpolation preprocessing, balancing performance and efficiency while notably reducing computational cost.

ESPCN: ESPCN employs sub-pixel convolution layers to perform upsampling directly in the feature space, avoiding traditional interpolation operations. This enhances reconstruction efficiency while reducing computational and memory overhead.
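The sub-pixel rearrangement at the core of ESPCN can be sketched as follows. The index mapping matches the layout documented for PyTorch's `torch.nn.PixelShuffle`; the plain-list implementation and function name are illustrative only. For example, with r = 4 the operation would turn 16 feature maps on a 128 × 64 grid into a single map on a 512 × 256 grid.

```python
def pixel_shuffle(channels, r):
    """Rearrange C*r*r feature maps of size H x W into C maps of size rH x rW.

    Uses the layout out[c][h*r + i][w*r + j] = in[c*r*r + i*r + j][h][w].
    """
    assert len(channels) % (r * r) == 0, "channel count must be a multiple of r*r"
    c_out = len(channels) // (r * r)
    height, width = len(channels[0]), len(channels[0][0])
    out = [[[0.0] * (width * r) for _ in range(height * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):          # sub-pixel row offset
            for j in range(r):      # sub-pixel column offset
                src = channels[c * r * r + i * r + j]
                for h in range(height):
                    for w in range(width):
                        out[c][h * r + i][w * r + j] = src[h][w]
    return out


# Four 1 x 1 maps become one 2 x 2 map.
shuffled = pixel_shuffle([[[0]], [[1]], [[2]], [[3]]], 2)
```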

DeepSD: DeepSD leverages a stacked CNN architecture inspired by single-image super-resolution, progressively enhancing spatial resolution by cascading SRCNN modules. It reconstructs fine-scale climate features without interpolation, enabling accurate statistical downscaling.

Encoded-CGAN: Encoded-CGAN is trained with simulation data that incorporates multiple resolutions and spatial distributions. This enables more accurate mappings from low-resolution inputs to high-resolution outputs. Its encoder structure enhances the model’s ability to capture the spatiotemporal characteristics of precipitation, improving predictive accuracy.

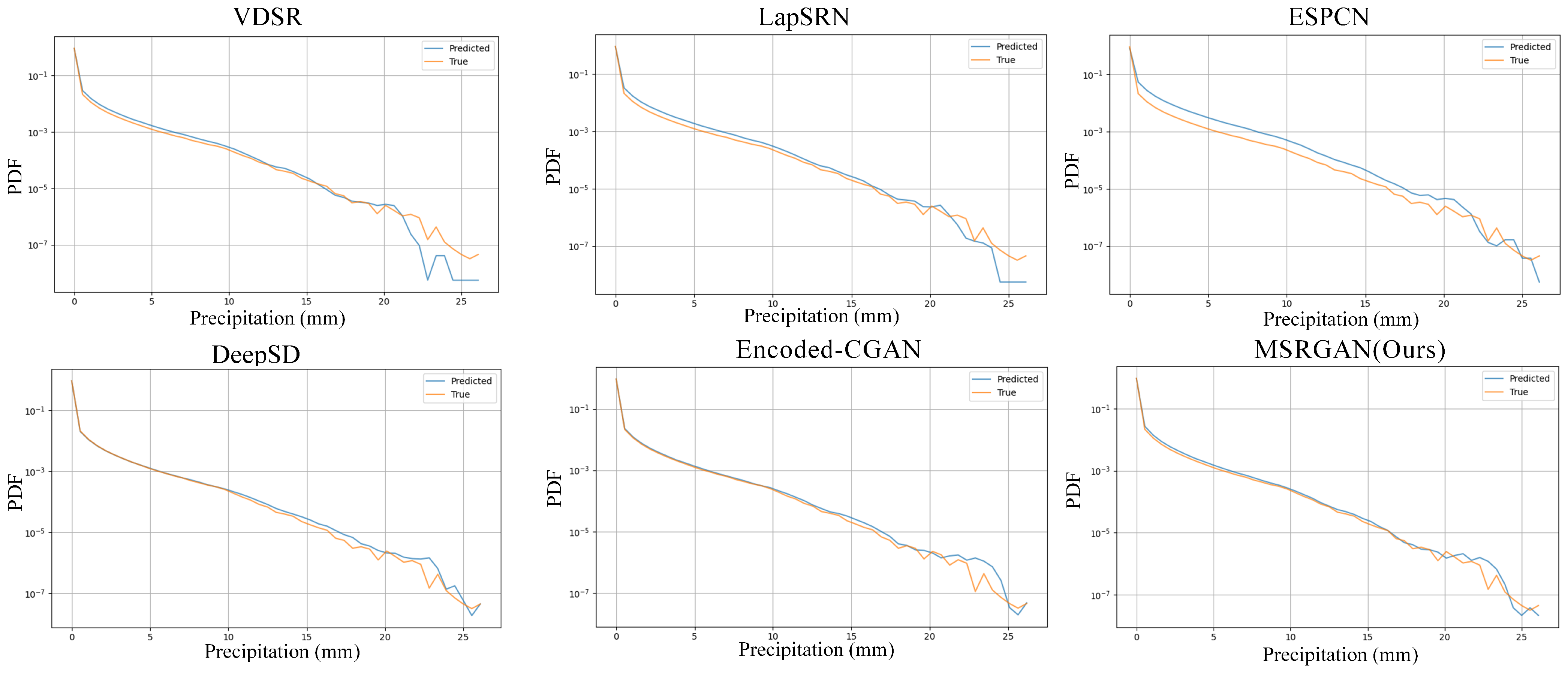

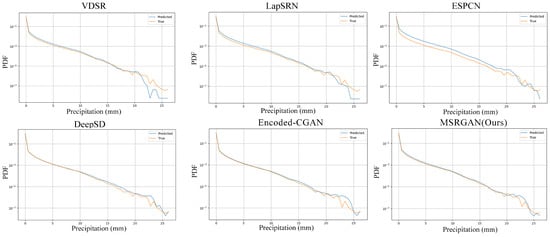

4.1. Evaluation of Downscaling Methods Using the Probability Density Function (PDF)

Figure 5 compares the probability density functions (PDFs) of predicted and true values for six super-resolution methods applied to precipitation downscaling: VDSR, LapSRN, ESPCN, DeepSD, Encoded-CGAN, and MSRGAN (ours). The PDFs are plotted on a logarithmic scale to highlight differences in low-probability regions. All methods fit the PDF similarly in the low-to-moderate intensity range, but they diverge markedly in the high-intensity range (exceeding 10 mm). Deep learning approaches such as VDSR, LapSRN, and ESPCN model the distribution tail poorly: at high precipitation intensities, their predicted densities are substantially lower than the true densities. In contrast, the GAN-based methods, particularly Encoded-CGAN and our proposed MSRGAN, better capture the heavy tail. MSRGAN’s predicted distribution stays close to the true distribution across the full range of precipitation intensities. This indicates that MSRGAN preserves the statistical properties of high-resolution precipitation fields, including heavy precipitation, during super-resolution.

Figure 5.

Comparison plot of the probability density functions (PDFs) of predicted and true values for six precipitation reconstruction methods, including Interpolator, VDSR, LapSRN, ESPCN, Encoded-CGAN, and the proposed MSRGAN. All the PDF curves are plotted on a logarithmic scale to highlight the modeling differences across different precipitation intensity ranges, particularly in the medium to high-intensity regions. This approach allows for a clearer examination of how each method handles variations in precipitation intensity, demonstrating where certain models may excel or underperform in capturing significant precipitation features.

4.2. Model Evaluation Analysis

Table 2 presents the quantitative results of various precipitation downscaling models, including traditional interpolation and six deep learning methods. We evaluate each model using the following four core metrics: CSI, HSS, FAR, and Jensen–Shannon (J-S) divergence, under three precipitation intensity thresholds (0.5 mm, 5 mm, 10 mm).

Table 2.

The performance of various models across different precipitation thresholds (0.5/5/10 mm/h) is measured using the CSI, HSS, and FAR metrics. MSRGAN leads in both CSI and HSS while maintaining a low FAR, indicating its superior ability in recognizing and accurately predicting precipitation events. The J-S distance represents the Jensen–Shannon distance between the predicted results and the actual precipitation data distribution; smaller values indicate a prediction distribution that is closer to the true distribution. Underlined numbers indicate the best performance for each metric across the models.

Across all thresholds, the proposed MSRGAN consistently achieves the highest detection accuracy. Under the light precipitation threshold (0.5 mm), it attains the highest CSI (0.8859), slightly above Encoded-CGAN and DeepSD (0.8790). At 5 mm, its CSI of 0.8088 is 12.8% higher than that of bilinear interpolation and slightly higher than that of DeepSD (0.8056). Under the 10 mm heavy precipitation threshold, MSRGAN achieves a CSI of 0.6521. This is a 90.7% improvement over VDSR and slightly above DeepSD (0.6437), showing a stronger ability to capture extreme events.

In terms of classification skill, MSRGAN achieves HSS scores of 0.9353, 0.8935, and 0.7892 at the three thresholds. Its HSS improvement over LapSRN reaches up to 20.9% at the 5 mm level. DeepSD also classifies well, with HSS scores of 0.9321, 0.8916, and 0.7830 that are close to those of MSRGAN and Encoded-CGAN, highlighting its advantage over earlier CNN-based methods in reconstructing spatial precipitation patterns. For false alarms, Encoded-CGAN achieves the lowest FAR (4.90%) under light precipitation. MSRGAN, however, strikes a better balance: it keeps a relatively low FAR (5.99%) while achieving a higher CSI. DeepSD is also competitive in FAR at 0.5 mm (5.37%), but its FAR rises notably under heavy precipitation (27.98% at 10 mm). At the 10 mm threshold, MSRGAN’s FAR (15.82%) remains competitive and its CSI remains the highest, reflecting a robust trade-off between accuracy and false alarms.
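Relative gains of the kind quoted above are computed as 100 × (new − baseline) / baseline. Applying this formula to the CSI values reported for MSRGAN and DeepSD gives margins of roughly 0.8%, 0.4%, and 1.3% at the three thresholds, which quantifies "slightly". This reads 0.8790 as DeepSD's 0.5 mm score. That the 12.8% and 90.7% figures are relative rather than absolute differences is an assumption.

```python
def relative_gain(new, baseline):
    """Relative improvement of `new` over `baseline`, in percent."""
    return 100.0 * (new - baseline) / baseline


# CSI values for MSRGAN and DeepSD as reported in the text.
pairs = {"0.5 mm": (0.8859, 0.8790), "5 mm": (0.8088, 0.8056), "10 mm": (0.6521, 0.6437)}
for threshold, (msrgan, deepsd) in pairs.items():
    print(threshold, f"{relative_gain(msrgan, deepsd):.2f}%")
```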

The J-S divergence further reveals how well each model captures distributional patterns. As shown in Table 2, traditional methods and CNN-based models (e.g., ESPCN and VDSR) diverge more from the reference data, indicating a poorer distributional fit; ESPCN shows the highest J-S divergence (0.1065). The GAN-based methods perform considerably better. Encoded-CGAN achieves the lowest J-S divergence among them (0.0064), and MSRGAN achieves 0.0200. Notably, DeepSD records a remarkably low J-S divergence of 0.0033, lower than that of Encoded-CGAN. This indicates that, despite its simpler structure, DeepSD captures the precipitation distribution with high fidelity and even outperforms the GAN-based models in this respect.

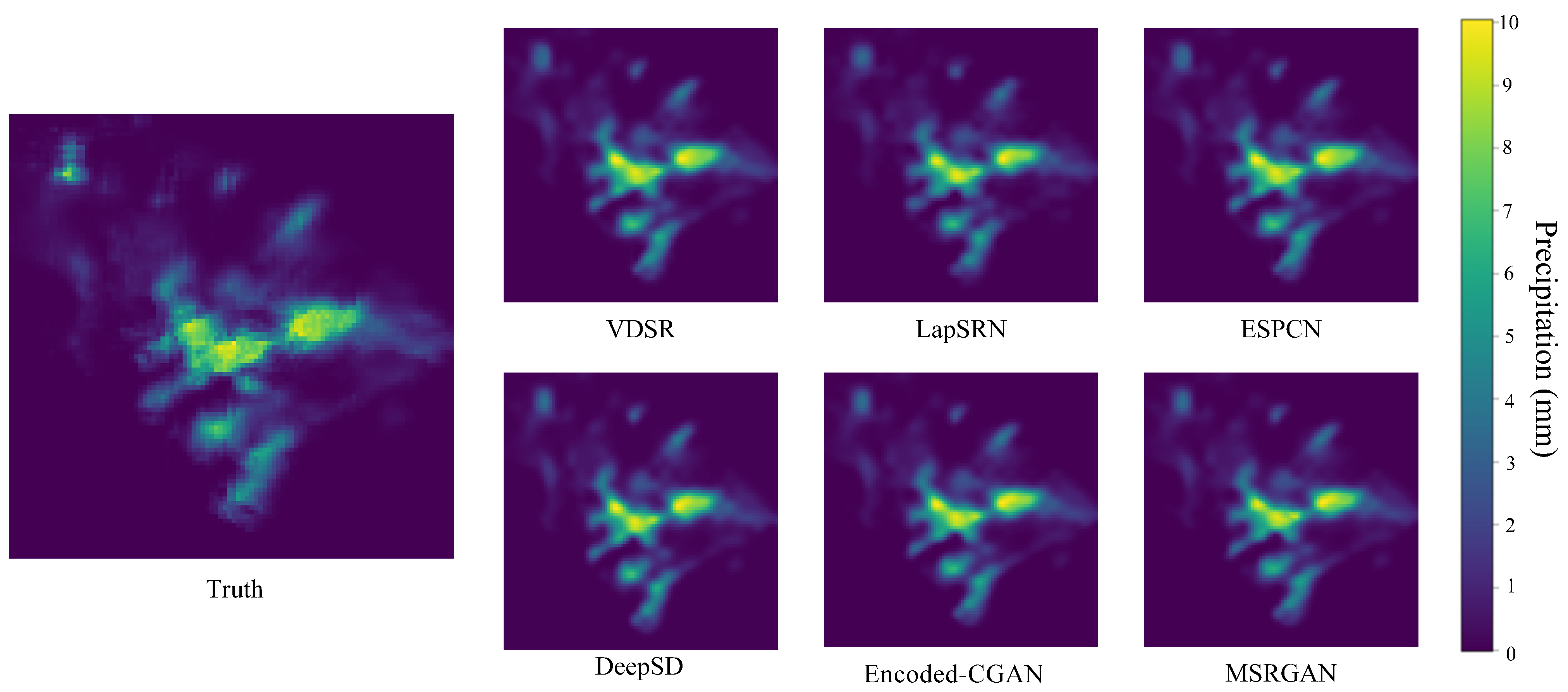

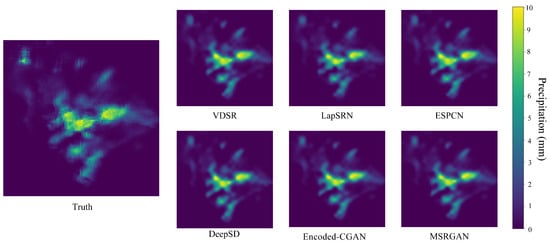

To complement the quantitative analysis, we compare visual reconstruction performance (Figure 6). Most models can roughly restore the spatial structure of the precipitation fields, particularly the heavy precipitation contours. The visual differences are subtle, especially between the GAN-based models Encoded-CGAN and MSRGAN, which suggests that their performance is highly comparable in general settings. This visual similarity reinforces the need for quantitative metrics such as CSI/HSS and J-S divergence to uncover subtle but critical differences. For instance, the predicted images from Encoded-CGAN and MSRGAN appear similar, yet MSRGAN leads in CSI and HSS (Table 2), whereas Encoded-CGAN attains a lower J-S divergence (0.0064 versus 0.0200). MSRGAN’s advantage in event detection is particularly evident in high-intensity rainfall events and in regions with complex topography, where fine-scale variability is critical.

Figure 6.

Reconstruction results of the same precipitation field using different models, with colors representing precipitation intensity (units: mm/3 h). The reference panel shows the actual high-resolution data, and the other panels show predictions from the different methods. Although the visual differences appear limited, the quantitative metrics reveal differences in performance.

In summary, MSRGAN demonstrates superior quantitative performance across multiple thresholds and metrics, and its visual outputs further support its robustness. These results collectively validate its effectiveness in both accurate precipitation detection and realistic spatial reconstruction.

4.3. Ablation Experiment

To further validate the effectiveness of the key components in the proposed network architecture, we conducted six groups of ablation experiments. In each group, a specific component was either removed or replaced, and the modified models were quantitatively evaluated on the test set. The results are summarized in Table 3.

Table 3.

Quantitative evaluation results of ablation experiments for key modules (CSI/HSS/FAR, with a threshold of 0.5 mm/h). The results demonstrate that the proposed DeepInception module, MSFM configuration, and SCAN all contribute significantly to the model’s performance. Underlined numbers indicate the best performance for each metric across the models.

First, replacing the proposed DeepInception module with a conventional Inception structure slightly decreased both CSI (from 0.8859 to 0.8789) and HSS (to 0.9312), indicating that DeepInception captures multi-scale features more effectively. Removing the DeepInception module entirely slightly increased CSI and HSS, but FAR rose notably, from 0.0484 to 0.0531. Without DeepInception, the model captures more precipitation events, which raises CSI and HSS, but it also produces more false alarms. DeepInception therefore plays a critical role in suppressing spurious detections and thereby enhances the reliability of the downscaled precipitation forecasts.

Second, the default configuration of our model includes three MSFMs before each upsampling stage. Reducing this to two modules caused a slight performance drop (CSI decreased to 0.8817). Reducing it further to one module produced a more noticeable decline in CSI (to 0.8772), although FAR reached its lowest value. Overall performance nevertheless degraded. This indicates that, although excessive fusion may increase false alarms, adequate multi-scale fusion is critical for reconstructing fine details of precipitation structures. Removing MSFM completely caused clear performance deterioration (CSI dropped to 0.8791 and FAR rose to 0.0668), further confirming the necessity of this module for feature extraction.

Finally, replacing the proposed SCAN with the classic CBAM module also degraded performance (CSI decreased from 0.8859 to 0.8820), demonstrating that SCAN enhances feature representation more effectively. In summary, each module plays an indispensable role in the proposed model, validating both the effectiveness and the necessity of its design.

5. Discussion

Future research can further explore the deep integration of multimodal data, particularly the potential of radar reflectivity data. With their high spatiotemporal resolution and real-time dynamic capture capabilities, radar data can provide crucial information on the temporal and spatial evolution of precipitation systems. By designing a spatiotemporal fusion framework that combines radar echoes with low-resolution numerical weather simulation data, the model can more accurately capture short-term variations in precipitation. Additionally, the incorporation of cross-modal attention mechanisms is expected to enhance the synergistic representation of radar data and meteorological variables, thereby improving the physical plausibility and detail fidelity of the downscaling results. Meanwhile, the introduction of physical constraints represents a promising direction—for instance, embedding mass conservation or energy balance equations into the loss function to ensure that generated results adhere to meteorological principles. This integration of data-driven and physics-informed approaches not only enhances model interpretability but also provides meteorologists with more scientifically grounded decision support.
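One simple form such a constraint could take is sketched below. It is a hypothetical illustration, not part of MSRGAN. It assumes that mass conservation means each 4 × 4 block of the downscaled field should average to the co-located coarse-resolution value. The returned mean squared penalty could be added to the generator loss with its own weight. The function names are illustrative.

```python
def block_mean(field, factor):
    """Average non-overlapping factor x factor blocks of a 2D grid (list of rows)."""
    area = factor * factor
    return [
        [
            sum(field[r + i][c + j] for i in range(factor) for j in range(factor)) / area
            for c in range(0, len(field[0]), factor)
        ]
        for r in range(0, len(field), factor)
    ]


def mass_conservation_penalty(hr_pred, lr_input, factor=4):
    """Mean squared mismatch between the block-averaged HR output and the LR input."""
    coarse = block_mean(hr_pred, factor)
    diffs = [(a - b) ** 2 for row_a, row_b in zip(coarse, lr_input) for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


# A hypothetical 4 x 8 high-resolution field that is consistent with a 1 x 2 coarse field.
hr_example = [[1.0] * 4 + [3.0] * 4 for _ in range(4)]
penalty = mass_conservation_penalty(hr_example, [[1.0, 3.0]])
```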

For fine-grained modeling of extreme precipitation events, future work can enhance the model’s ability to learn high-intensity rainfall patterns by employing dynamic loss weighting strategies. At the same time, optimizing inference efficiency through lightweight network architectures and parallel computing techniques will facilitate the deployment of the model in real-time operational meteorological systems. In terms of regional adaptability, the application of transfer learning can help migrate knowledge learned from specific regions (e.g., the continental United States) to other geographical contexts, thereby reducing data labeling costs and improving cross-regional generalization performance. Establishing a global-scale benchmark dataset for precipitation downscaling will also be essential for advancing research on model universality. In summary, MSRGAN provides an efficient and scalable solution for precipitation downscaling. With future improvements such as radar data integration, strengthened physical constraints, and enhanced real-time capability, the model holds great promise for overcoming current technical limitations and offering powerful tools for meteorological disaster warning and climate research.

6. Conclusions

This study proposes MSRGAN, a precipitation downscaling model based on Generative Adversarial Networks (GANs) that reconstructs high-resolution precipitation fields using the DeepInception, MSFM, and SCAN modules. Experimental results show that MSRGAN outperforms traditional interpolation methods and existing deep learning models in CSI and HSS while maintaining a competitive FAR. Its Jensen–Shannon divergence is also lower than that of interpolation and CNN-based super-resolution models such as ESPCN and VDSR. It performs particularly well in detecting and spatially reconstructing extreme precipitation events (e.g., at the 10 mm/h threshold). By integrating multi-source meteorological data (T2, IWV, and SLP) and topographic information, the model further enhances the physical relevance of its input features, providing reliable support for refined precipitation forecasting. However, some limitations remain. These include limited adaptability to real-time precipitation prediction, insufficient modeling of light or no-precipitation events, and unverified generalization to complex geographic regions. Addressing these issues will require broader data coverage and further model optimization.

Author Contributions

Conceptualization, Y.L. (Yida Liu) and Z.L. (Zhuang Li); methodology and software, Y.L. (Yida Liu); validation, G.C. and Y.L. (Yizhe Li); formal analysis, Z.L. (Zhuang Li); investigation, Y.L. (Yida Liu) and Q.W.; resources, Y.L. (Yizhe Li); data curation and visualization, G.C.; writing—original draft preparation, Y.L. (Yida Liu); writing—review and editing, Z.L. (Zhuang Li) and Q.W.; supervision, Y.L. (Yida Liu); project administration, Z.L. (Zhenyu Lu). All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Zhejiang Provincial Natural Science Foundation Project (NO. LZJMD25D050002).

Data Availability Statement

The data are available from the corresponding author upon request.

Acknowledgments

The authors wish to express their gratitude to Remote Sensing, as well as to the anonymous reviewers who helped improve this paper through their thorough review.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kotz, M.; Levermann, A.; Wenz, L. The effect of rainfall changes on economic production. Nature 2022, 601, 223–227.

- Lesk, C.; Coffel, E.; Horton, R. Net benefits to US soy and maize yields from intensifying hourly rainfall. Nat. Clim. Change 2020, 10, 819–822.

- Gebregiorgis, A.S.; Hossain, F. Understanding the dependence of satellite rainfall uncertainty on topography and climate for hydrologic model simulation. IEEE Trans. Geosci. Remote Sens. 2012, 51, 704–718.

- Held, I.M.; Soden, B.J. Robust responses of the hydrological cycle to global warming. J. Clim. 2006, 19, 5686–5699.

- Koizumi, K.; Ishikawa, Y.; Tsuyuki, T. Assimilation of precipitation data to the JMA mesoscale model with a four-dimensional variational method and its impact on precipitation forecasts. SOLA 2005, 1, 45–48.

- Ricciardelli, E.; Di Paola, F.; Gentile, S.; Cersosimo, A.; Cimini, D.; Gallucci, D.; Geraldi, E.; Larosa, S.; Nilo, S.T.; Ripepi, E.; et al. Analysis of Livorno heavy rainfall event: Examples of satellite-based observation techniques in support of numerical weather prediction. Remote Sens. 2018, 10, 1549.

- Xiong, L.; Liu, C.; Chen, S.; Zha, X.; Ma, Q. Review of post-processing research for remote-sensing precipitation products. Adv. Water Sci. 2021, 32, 627–637.

- Wang, H.; Wang, G.; Liu, L. Climatological beam propagation conditions for China’s weather radar network. J. Appl. Meteorol. Climatol. 2018, 57, 3–14.

- Stevens, B.; Satoh, M.; Auger, L.; Biercamp, J.; Bretherton, C.S.; Chen, X.; Düben, P.; Judt, F.; Khairoutdinov, M.; Klocke, D.; et al. DYAMOND: The DYnamics of the Atmospheric general circulation Modeled On Non-hydrostatic Domains. Prog. Earth Planet. Sci. 2019, 6, 1–17.

- Vandal, T.; Kodra, E.; Ganguly, S.; Michaelis, A.; Nemani, R.; Ganguly, A.R. DeepSD: Generating high resolution climate change projections through single image super-resolution. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1663–1672.

- Wang, J.; Liu, Z.; Foster, I.; Chang, W.; Kettimuthu, R.; Kotamarthi, V.R. Fast and accurate learned multiresolution dynamical downscaling for precipitation. Geosci. Model Dev. Discuss. 2021, 2021, 1–24. [Google Scholar] [CrossRef]

- Cheng, J.; Liu, J.; Kuang, Q.; Xu, Z.; Shen, C.; Liu, W.; Zhou, K. DeepDT: Generative adversarial network for high-resolution climate prediction. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1001105. [Google Scholar] [CrossRef]

- Li, Z.; Lu, Z.; Zhang, Y.; Li, Y. PSRGAN: Generative Adversarial Networks for Precipitation Downscaling. IEEE Geosci. Remote Sens. Lett. 2025, 22, 1001005. [Google Scholar] [CrossRef]

- Tang, J.; Niu, X.; Wang, S.; Gao, H.; Wang, X.; Wu, J. Statistical downscaling and dynamical downscaling of regional climate in China: Present climate evaluations and future climate projections. J. Geophys. Res. Atmos. 2016, 121, 2110–2129. [Google Scholar] [CrossRef]

- Taillardat, M.; Mestre, O.; Zamo, M.; Naveau, P. Calibrated ensemble forecasts using quantile regression forests and ensemble model output statistics. Mon. Weather Rev. 2016, 144, 2375–2393. [Google Scholar] [CrossRef]

- Chaney, N.W.; Herman, J.D.; Ek, M.B.; Wood, E.F. Deriving global parameter estimates for the Noah land surface model using FLUXNET and machine learning. J. Geophys. Res. Atmos. 2016, 121, 13–218. [Google Scholar] [CrossRef]

- He, X.; Chaney, N.W.; Schleiss, M.; Sheffield, J. Spatial downscaling of precipitation using adaptable random forests. Water Resour. Res. 2016, 52, 8217–8237. [Google Scholar] [CrossRef]

- Jing, W.; Yang, Y.; Yue, X.; Zhao, X. A comparison of different regression algorithms for downscaling monthly satellite-based precipitation over North China. Remote Sens. 2016, 8, 835. [Google Scholar] [CrossRef]

- Chen, C.; Chen, Q.; Qin, B.; Zhao, S.; Duan, Z. Comparison of different methods for spatial downscaling of GPM IMERG V06B satellite precipitation product over a typical arid to semi-arid area. Front. Earth Sci. 2020, 8, 536337. [Google Scholar] [CrossRef]

- Ebrahimy, H.; Aghighi, H.; Azadbakht, M.; Amani, M.; Mahdavi, S.; Matkan, A.A. Downscaling MODIS land surface temperature product using an adaptive random forest regression method and Google Earth Engine for a 19-years spatiotemporal trend analysis over Iran. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2103–2112. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307. [Google Scholar] [CrossRef]

- Sharma, S.C.M.; Mitra, A. ResDeepD: A residual super-resolution network for deep downscaling of daily precipitation over India. Environ. Data Sci. 2022, 1, e19. [Google Scholar] [CrossRef]

- Tu, T.; Ishida, K.; Ercan, A.; Kiyama, M.; Amagasaki, M.; Zhao, T. Hybrid precipitation downscaling over coastal watersheds in Japan using WRF and CNN. J. Hydrol. Reg. Stud. 2021, 37, 100921. [Google Scholar] [CrossRef]

- Jiang, Y.; Yang, K.; Shao, C.; Zhou, X.; Zhao, L.; Chen, Y.; Wu, H. A downscaling approach for constructing high-resolution precipitation dataset over the Tibetan Plateau from ERA5 reanalysis. Atmos. Res. 2021, 256, 105574.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Price, I.; Rasp, S. Increasing the accuracy and resolution of precipitation forecasts using deep generative models. In Proceedings of the International Conference on Artificial Intelligence and Statistics, PMLR, Virtual, 28–30 March 2022; pp. 10555–10571.

- Jiang, K.; Wang, Z.; Yi, P.; Wang, G.; Lu, T.; Jiang, J. Edge-enhanced GAN for remote sensing image superresolution. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5799–5812.

- Yang, F.; Ye, Q.; Wang, K.; Sun, L. Successful precipitation downscaling through an innovative transformer-based model. Remote Sens. 2024, 16, 4292.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Hu, Y.; Tian, S.; Ge, J. Hybrid convolutional network combining multiscale 3D depthwise separable convolution and CBAM residual dilated convolution for hyperspectral image classification. Remote Sens. 2023, 15, 4796.

- Wilks, D.S. Statistical Methods in the Atmospheric Sciences; Academic Press: Cambridge, MA, USA, 2011; Volume 100.

- Gneiting, T.; Raftery, A.E. Strictly proper scoring rules, prediction, and estimation. J. Am. Stat. Assoc. 2007, 102, 359–378.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).