Detection of Bacterial Leaf Spot Disease in Sesame (Sesamum indicum L.) Using a U-Net Autoencoder

Abstract

1. Introduction

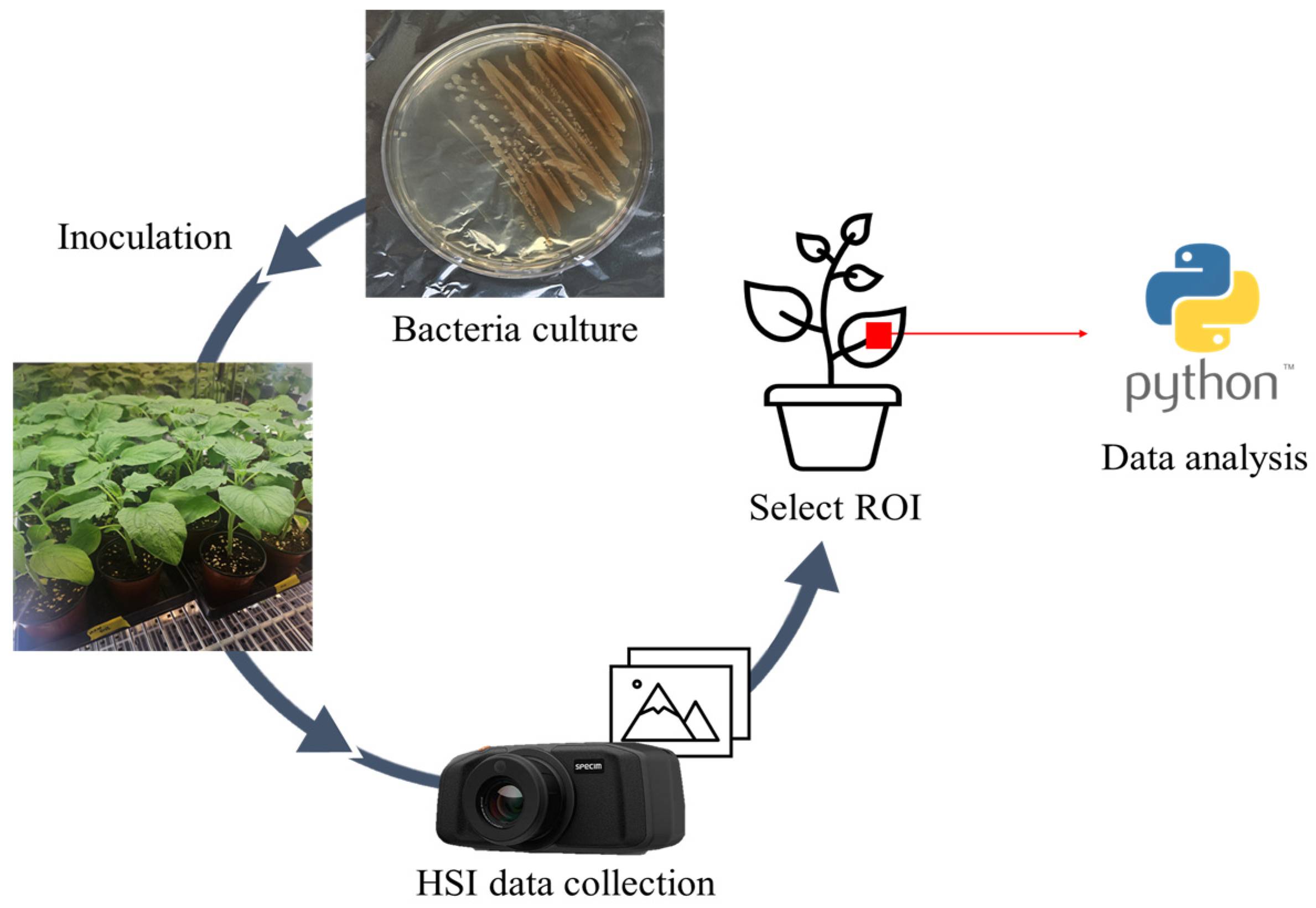

2. Materials and Methods

2.1. Plant Preparation and Hyperspectral Imaging

2.1.1. Plant Cultivation

2.1.2. Bacterial Inoculation

2.1.3. Data Acquisition

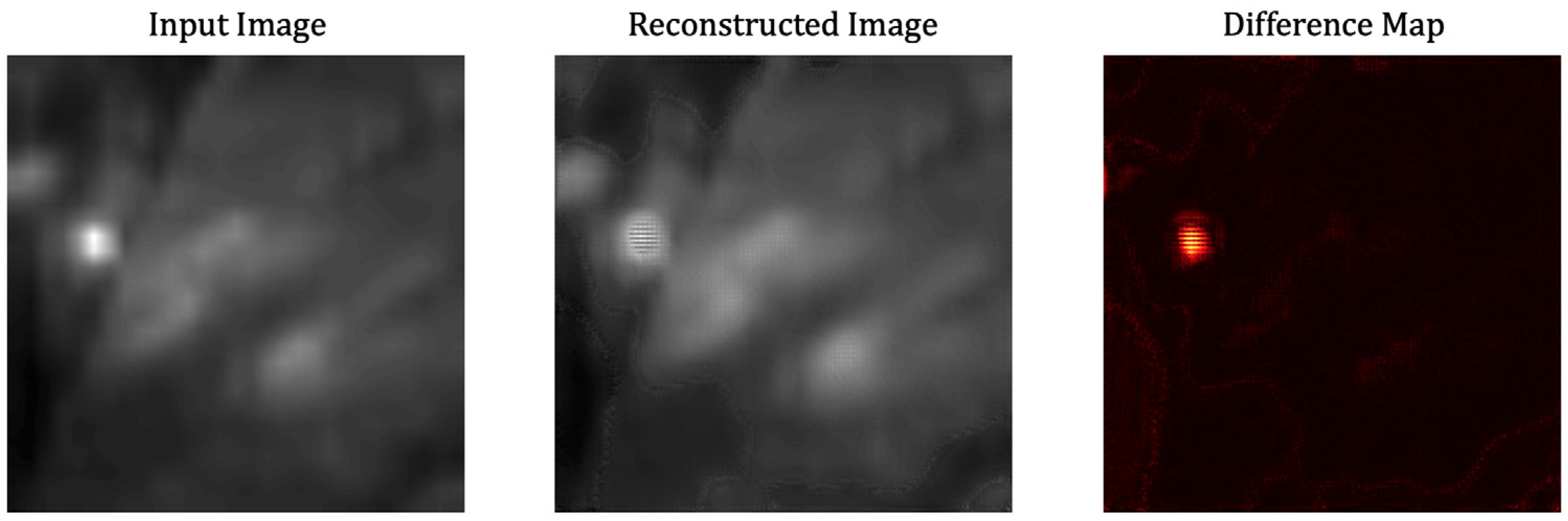

2.2. Modeling Framework for Anomaly Detection

2.2.1. Autoencoder

2.2.2. U-Net Based Autoencoder

2.2.3. Model Training and Evaluation

Algorithm 1: Band-wise preprocessing of hyperspectral images

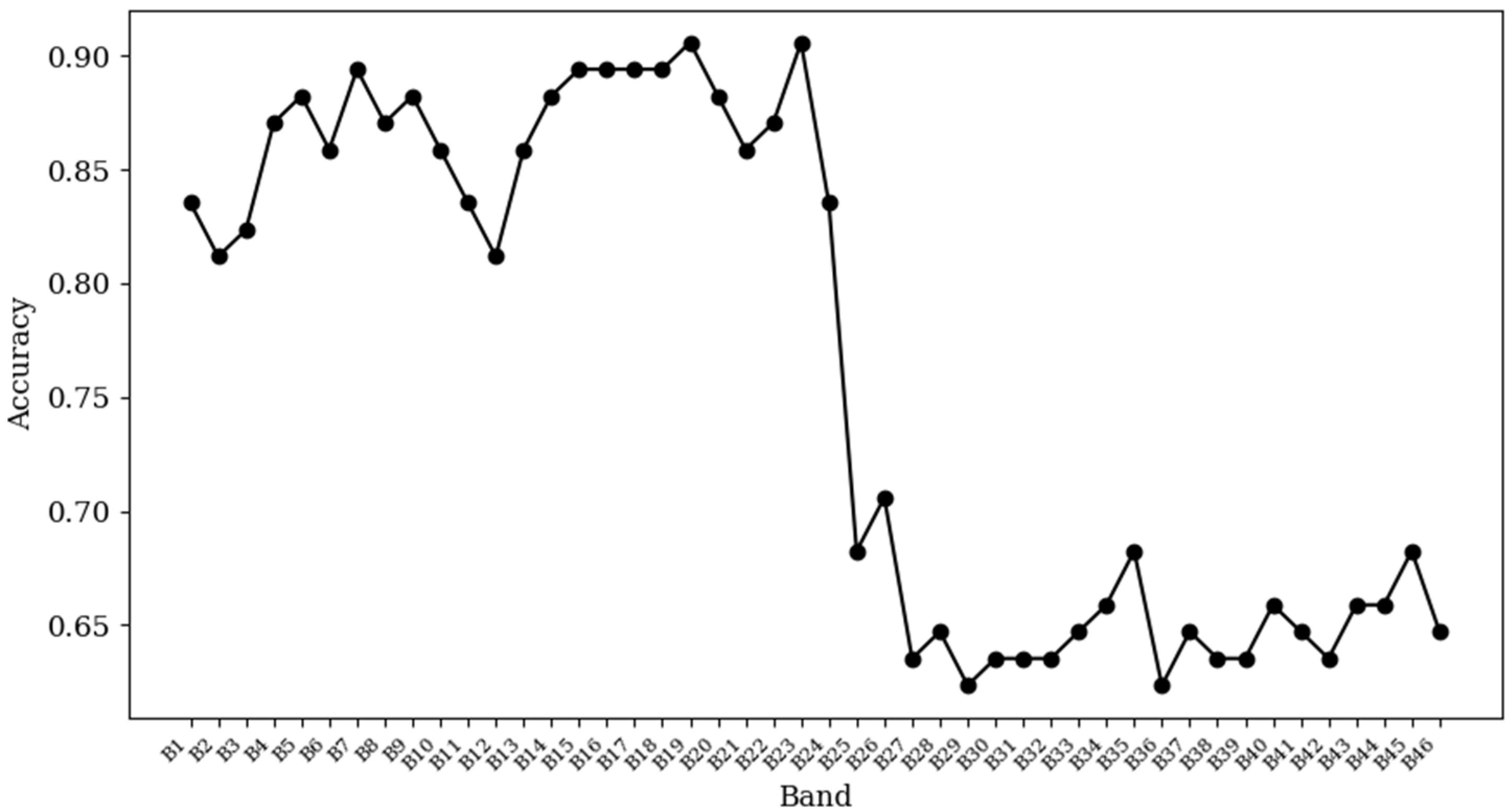

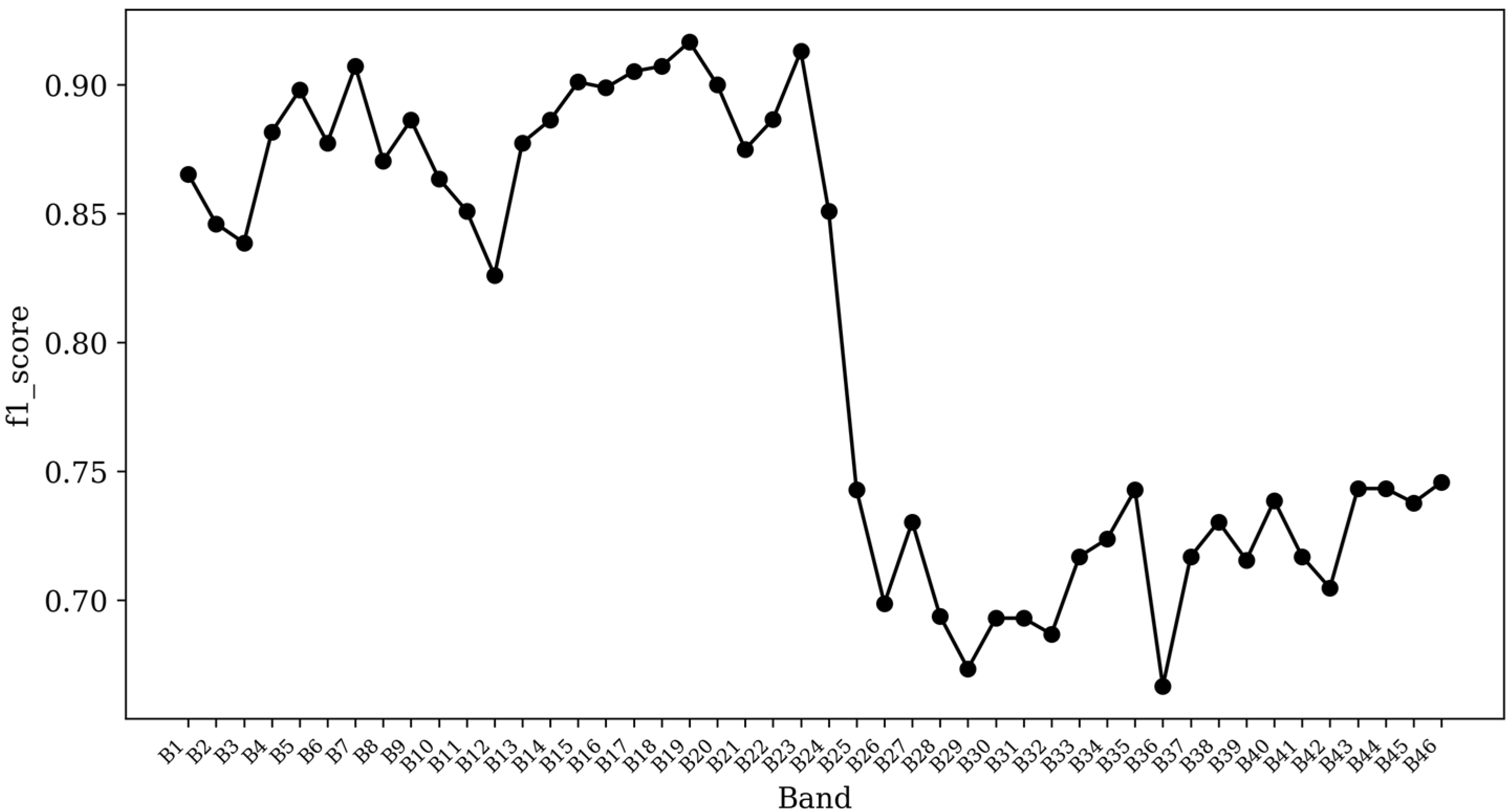

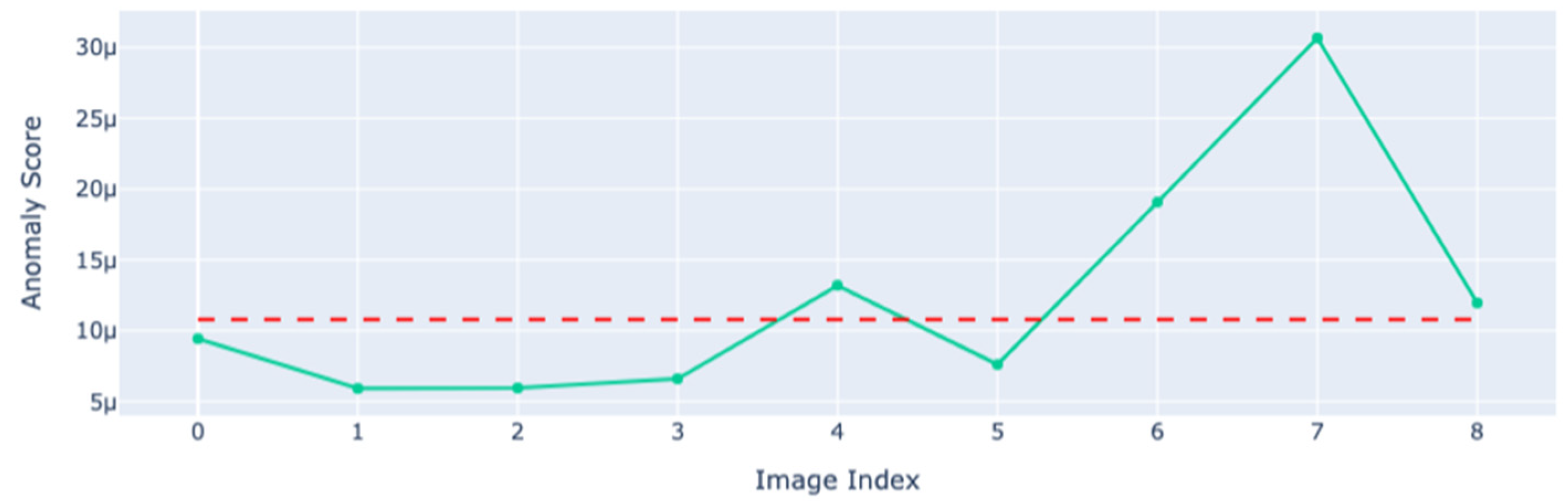

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Band | Wavelength (nm) | Band | Wavelength (nm) | Band | Wavelength (nm) | Band | Wavelength (nm) |
|---|---|---|---|---|---|---|---|
| B1 | 430.53 | B13 | 570.60 | B25 | 713.06 | B37 | 857.90 |
| B2 | 442.11 | B14 | 582.38 | B26 | 725.03 | B38 | 870.07 |
| B3 | 453.71 | B15 | 594.18 | B27 | 737.03 | B39 | 882.27 |
| B4 | 465.32 | B16 | 605.99 | B28 | 749.04 | B40 | 894.48 |
| B5 | 476.96 | B17 | 617.82 | B29 | 761.07 | B41 | 906.71 |
| B6 | 488.59 | B18 | 629.67 | B30 | 773.12 | B42 | 918.95 |
| B7 | 500.26 | B19 | 641.53 | B31 | 785.18 | B43 | 931.21 |
| B8 | 511.94 | B20 | 653.41 | B32 | 797.26 | B44 | 943.49 |
| B9 | 523.64 | B21 | 665.30 | B33 | 809.35 | B45 | 955.78 |
| B10 | 535.36 | B22 | 677.22 | B34 | 821.47 | B46 | 968.09 |
| B11 | 547.09 | B23 | 689.15 | B35 | 833.59 | | |
| B12 | 558.83 | B24 | 701.09 | B36 | 845.74 | | |
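The band table above can be turned into a small lookup for working with the 46 channels by wavelength rather than by index. The following is only a minimal sketch: the names `WAVELENGTHS_NM`, `nearest_band`, and `bands_in_range` are hypothetical helpers introduced here for illustration and are not part of the authors' code. The wavelength values are copied from the table.

```python
# Center wavelengths (nm) for bands B1..B46, copied from the band table.
WAVELENGTHS_NM = [
    430.53, 442.11, 453.71, 465.32, 476.96, 488.59, 500.26, 511.94,
    523.64, 535.36, 547.09, 558.83, 570.60, 582.38, 594.18, 605.99,
    617.82, 629.67, 641.53, 653.41, 665.30, 677.22, 689.15, 701.09,
    713.06, 725.03, 737.03, 749.04, 761.07, 773.12, 785.18, 797.26,
    809.35, 821.47, 833.59, 845.74, 857.90, 870.07, 882.27, 894.48,
    906.71, 918.95, 931.21, 943.49, 955.78, 968.09,
]


def nearest_band(target_nm):
    """Return (label, wavelength) of the band closest to target_nm.

    Hypothetical helper: labels follow the table's B1..B46 convention.
    """
    idx = min(
        range(len(WAVELENGTHS_NM)),
        key=lambda i: abs(WAVELENGTHS_NM[i] - target_nm),
    )
    return f"B{idx + 1}", WAVELENGTHS_NM[idx]


def bands_in_range(low_nm, high_nm):
    """Return labels of all bands whose center lies in [low_nm, high_nm]."""
    return [
        f"B{i + 1}"
        for i, wl in enumerate(WAVELENGTHS_NM)
        if low_nm <= wl <= high_nm
    ]


if __name__ == "__main__":
    print(nearest_band(680.0))       # band closest to 680 nm
    print(bands_in_range(950, 970))  # bands centered between 950 and 970 nm
```

A lookup of this kind keeps band selection readable (for example, asking for the band nearest a wavelength of interest) and avoids hard-coding channel indices that would silently break if the sensor configuration changed.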

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, M.; Lee, J.; Ghimire, A.; Bae, Y.; Kang, T.-A.; Yoon, Y.; Lee, I.-J.; Park, C.-W.; Kim, B.; Kim, Y. Detection of Bacterial Leaf Spot Disease in Sesame (Sesamum indicum L.) Using a U-Net Autoencoder. Remote Sens. 2025, 17, 2230. https://doi.org/10.3390/rs17132230
