A Microwave Vision-Enhanced Environmental Perception Method for the Visual Navigation of UAVs

Abstract

1. Introduction

- (1) Preservation of optical image characteristics: the optical component of the benchmark must be preserved as fully as possible, because the optical characteristics of the benchmark are crucial for accurate matching with video keyframes.

- (2) Extraction of target information from SAR images: the fusion process should focus on extracting the unique target information present in the SAR image and integrating it into the optical image. The fusion technique should therefore avoid adding redundant or excessive information that would complicate the fused result.

- We propose introducing microwave vision to assist optical vision in measuring the environment, overcoming the influence of changing illumination and cloudy weather and improving the robustness of environmental information. Moreover, under sufficient illumination and clear weather, microwave vision enhances environmental perception by exploiting multimodal visual information through SAR and optical image fusion.

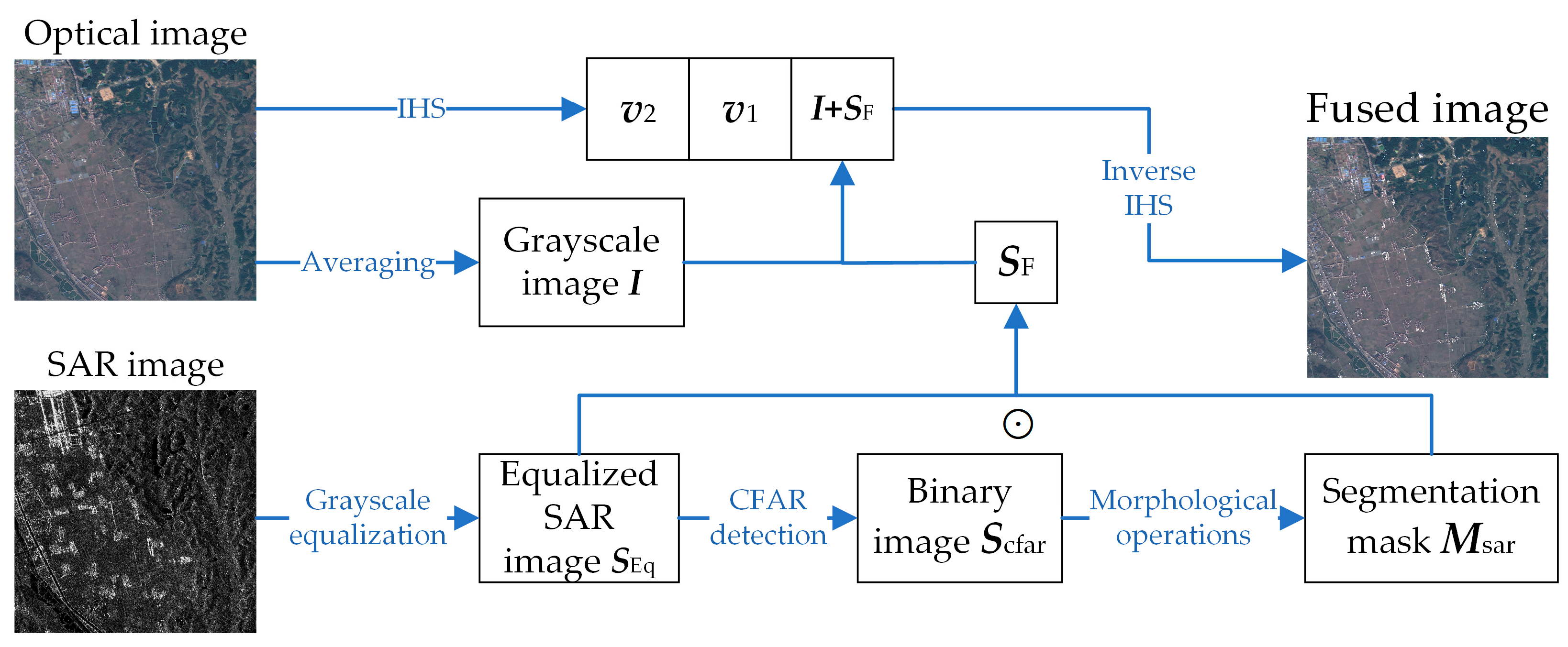

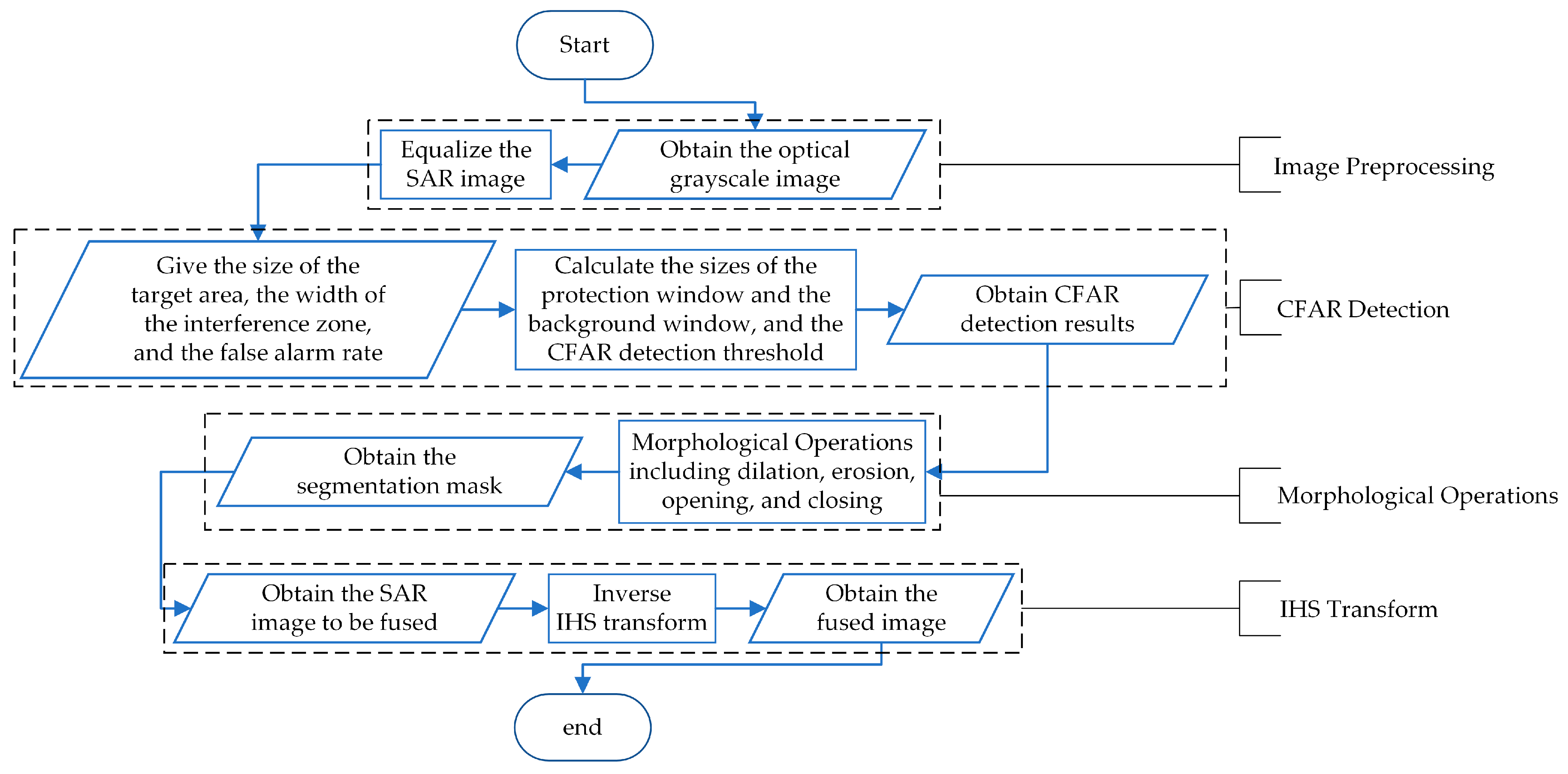

- We propose the STAF algorithm, which is based on SAR image target detection. It extracts useful target features from SAR images using CFAR detection and morphological processing, which suppresses the effect of SAR speckle noise. The SAR and optical images are then fused by the IHS transform to obtain the microwave vision-enhanced environmental perception results.
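The pipeline described above (CFAR detection on the SAR image, morphological clean-up of the detection mask, then IHS-based injection into the optical image) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the detector is a generic two-parameter (mean and standard deviation) CFAR with a square guard window, the morphology is a 3 × 3 binary opening followed by a closing, and the fusion uses the fast IHS form of Tu et al. (adding the intensity difference to each RGB band), with the new intensity taken from the SAR image only at detected target pixels. All function names and parameter values (`guard`, `outer`, `t`, `size`) are hypothetical, and the SAR image is assumed to be co-registered with the optical image and scaled to the range 0 to 255.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_mean(img: np.ndarray, size: int) -> np.ndarray:
    """Mean over an odd size x size window with edge padding; same shape as img."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    return sliding_window_view(padded, (size, size)).mean(axis=(-2, -1))


def cfar_two_parameter(sar: np.ndarray, guard: int = 9, outer: int = 21,
                       t: float = 3.0) -> np.ndarray:
    """Generic two-parameter CFAR (hypothetical parameters, odd window sizes).

    Clutter mean/std come from the ring between the guard and outer windows,
    so the pixel under test and its immediate neighbours are excluded.
    """
    x = sar.astype(float)
    n_outer, n_guard = outer * outer, guard * guard
    # Ring sums = outer-window sums minus guard-window sums.
    s1 = local_mean(x, outer) * n_outer - local_mean(x, guard) * n_guard
    s2 = local_mean(x**2, outer) * n_outer - local_mean(x**2, guard) * n_guard
    n = n_outer - n_guard
    mu = s1 / n
    sigma = np.sqrt(np.maximum(s2 / n - mu**2, 1e-12))
    return (x - mu) / sigma > t  # True where the pixel stands out from clutter


def _window_op(mask: np.ndarray, size: int, reduce) -> np.ndarray:
    pad = size // 2
    padded = np.pad(mask, pad, mode="constant", constant_values=False)
    return reduce(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def binary_erode(mask: np.ndarray, size: int) -> np.ndarray:
    return _window_op(mask, size, np.all)


def binary_dilate(mask: np.ndarray, size: int) -> np.ndarray:
    return _window_op(mask, size, np.any)


def clean_mask(mask: np.ndarray, size: int = 3) -> np.ndarray:
    """Opening removes isolated speckle hits; closing then fills small holes."""
    opened = binary_dilate(binary_erode(mask, size), size)
    return binary_erode(binary_dilate(opened, size), size)


def fast_ihs_inject(rgb: np.ndarray, sar: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Fast IHS fusion F_k = M_k + (I_new - I) with I = (R + G + B) / 3.

    Assumption: I_new equals the SAR value inside the target mask and the
    original intensity elsewhere, so non-target optical pixels are unchanged.
    """
    rgb = rgb.astype(float)
    intensity = rgb.mean(axis=2)
    delta = np.where(mask, sar.astype(float) - intensity, 0.0)
    return np.clip(rgb + delta[..., None], 0.0, 255.0)


def staf_sketch(rgb: np.ndarray, sar: np.ndarray, **cfar_kwargs):
    """Hypothetical end-to-end sketch: CFAR -> morphology -> IHS injection."""
    mask = clean_mask(cfar_two_parameter(sar, **cfar_kwargs))
    return fast_ihs_inject(rgb, sar, mask), mask
```

Restricting the intensity substitution to the detected targets is one way to honour both fusion requirements listed earlier: the optical characteristics are kept wherever no SAR target is found, and only target information is injected.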

2. Related Works

2.1. SAR-Based Navigation Techniques

2.2. SAR and Optical Image Fusion

3. Proposed Method

3.1. Microwave Vision-Enhanced Environment Perception Strategy

- (1) When the optical image cannot effectively characterize the environment, so that no useful features can be extracted from it, the SAR image obtained by microwave vision measurement is used as the environment measurement, and features extracted from it serve as the data source for subsequent visual navigation;

- (2) When the optical image is partially missing because of cloud obscuration, the SAR image obtained by microwave vision measurement is used to reconstruct the missing information and generate a complete, cloud-free image;

- (3) When the optical vision measurement yields an ideal image, microwave vision-enhanced environmental perception is achieved by fusing the SAR and optical images, exploiting the complementary feature information of the two modalities.
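The three cases above amount to a simple dispatch rule. The Python sketch below makes that rule explicit; it is an illustration rather than the authors' implementation, and its two inputs (`optical_features_usable` and `cloud_fraction`) are hypothetical quality indicators, since how each case is detected in practice is not specified here.

```python
from enum import Enum


class PerceptionMode(Enum):
    SAR_ONLY = 1              # case (1): optical image unusable, use SAR features
    CLOUD_RECONSTRUCTION = 2  # case (2): fill cloud-covered optical regions from SAR
    SAR_OPTICAL_FUSION = 3    # case (3): ideal optical image, fuse with SAR


def select_mode(optical_features_usable: bool, cloud_fraction: float) -> PerceptionMode:
    """Choose a strategy from two hypothetical indicators.

    optical_features_usable: whether useful features can be extracted from
        the optical image (the test for this is left open).
    cloud_fraction: fraction of the optical image obscured by cloud, in [0, 1].
    """
    if not 0.0 <= cloud_fraction <= 1.0:
        raise ValueError("cloud_fraction must lie in [0, 1]")
    if not optical_features_usable:
        return PerceptionMode.SAR_ONLY
    if cloud_fraction > 0.0:
        return PerceptionMode.CLOUD_RECONSTRUCTION
    return PerceptionMode.SAR_OPTICAL_FUSION
```

Checking usability first mirrors the ordering of the cases: reconstruction is only attempted when the remaining optical content is still informative.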

3.2. STAF Algorithm

3.2.1. Image Preprocessing

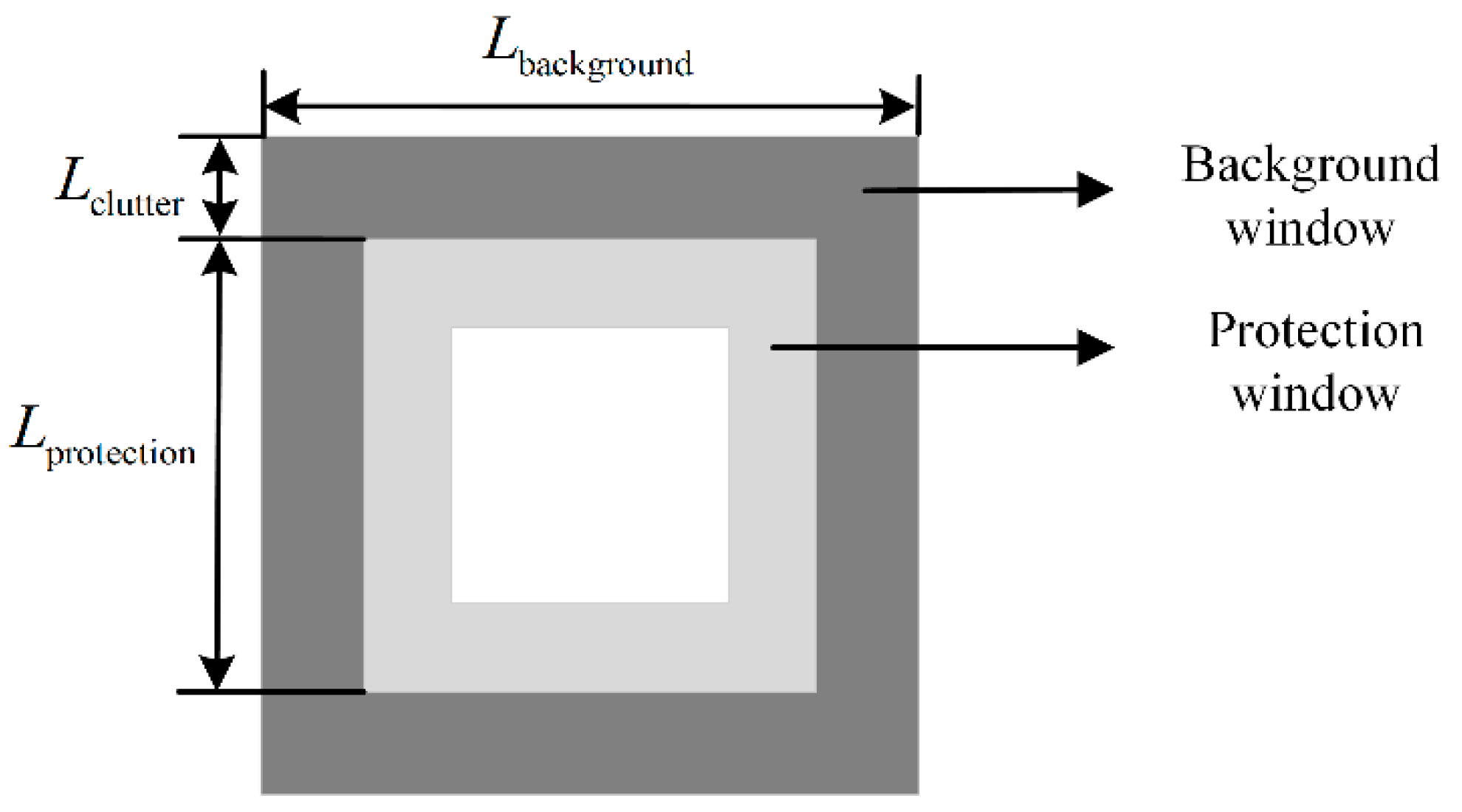

3.2.2. CFAR Detection

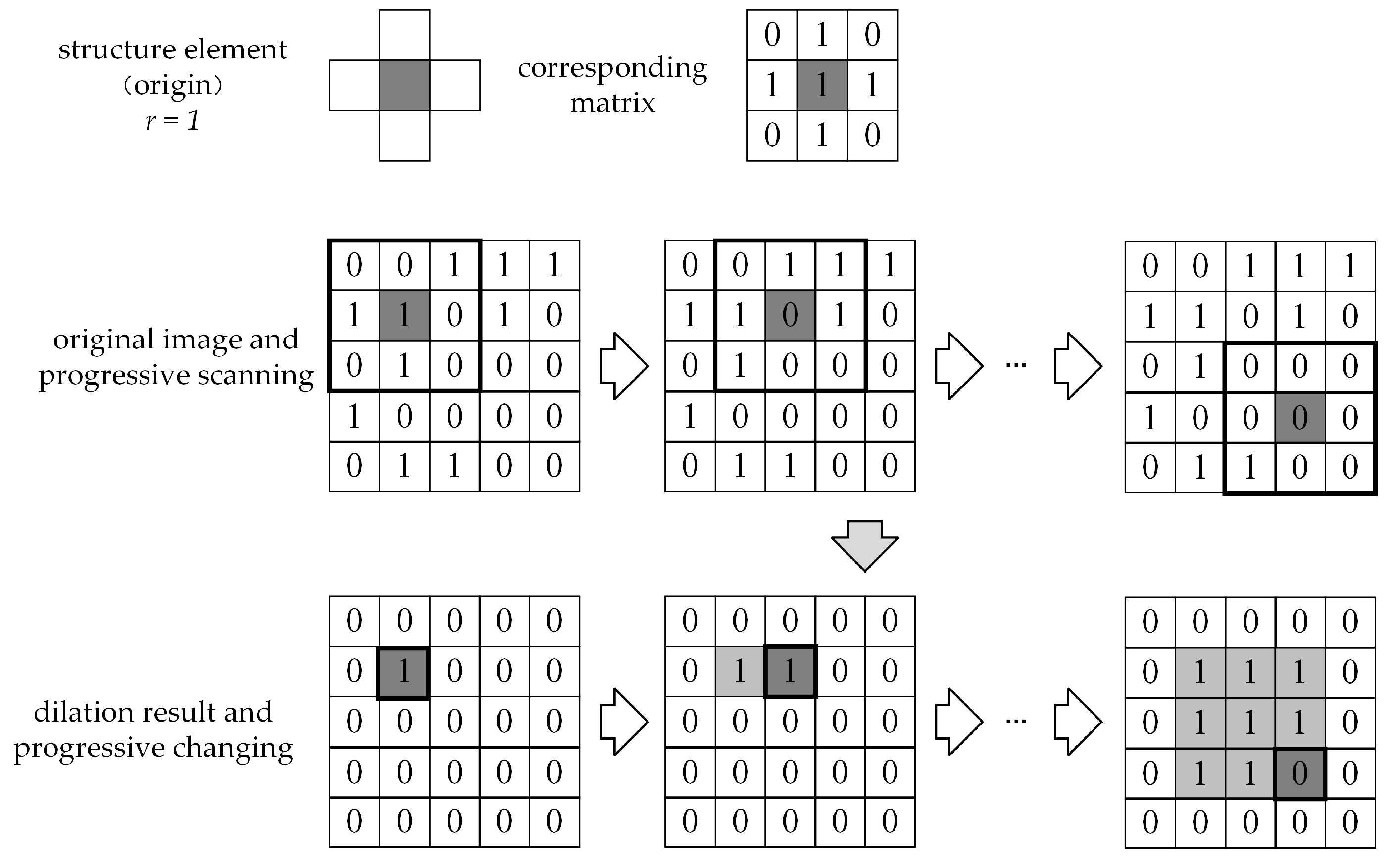

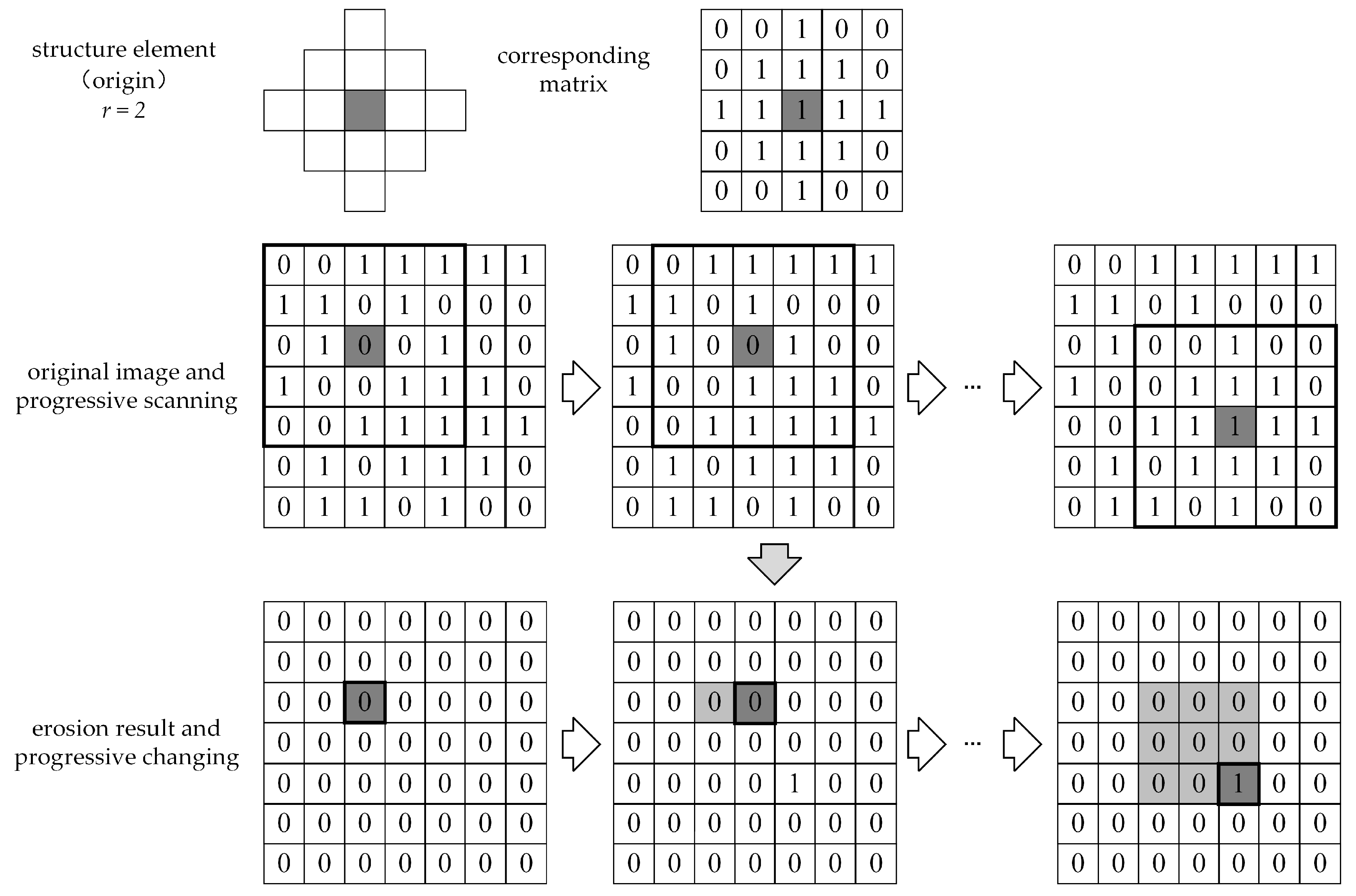

3.2.3. Morphological Operations

3.2.4. IHS Transform

4. Results

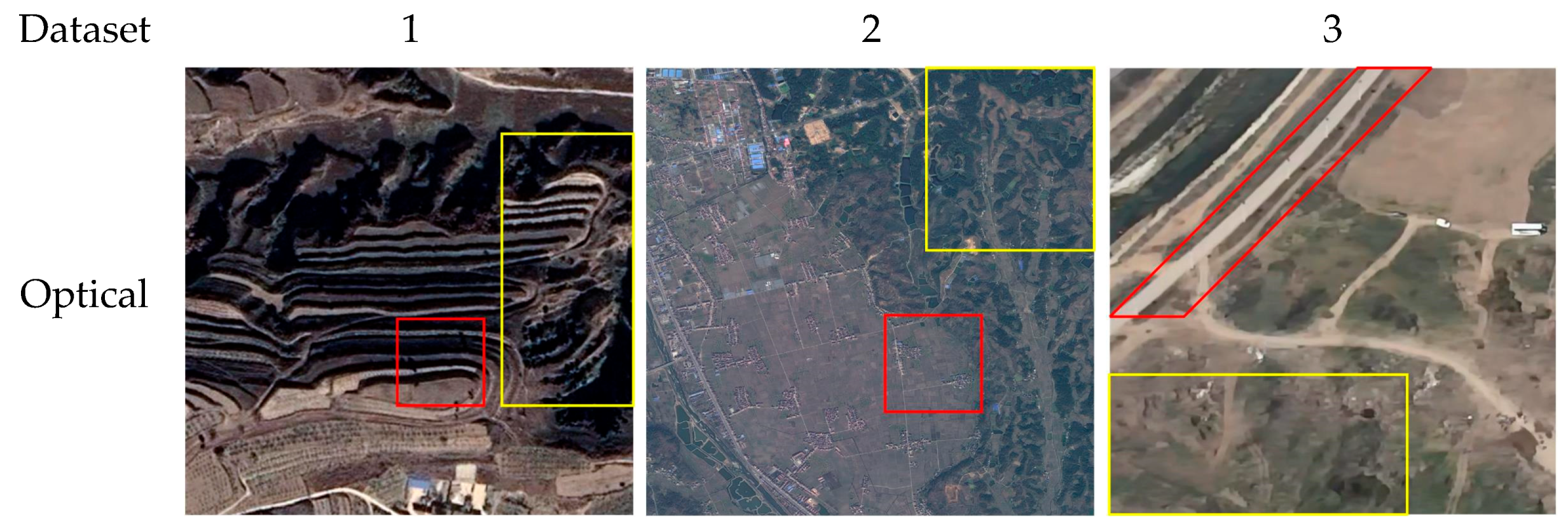

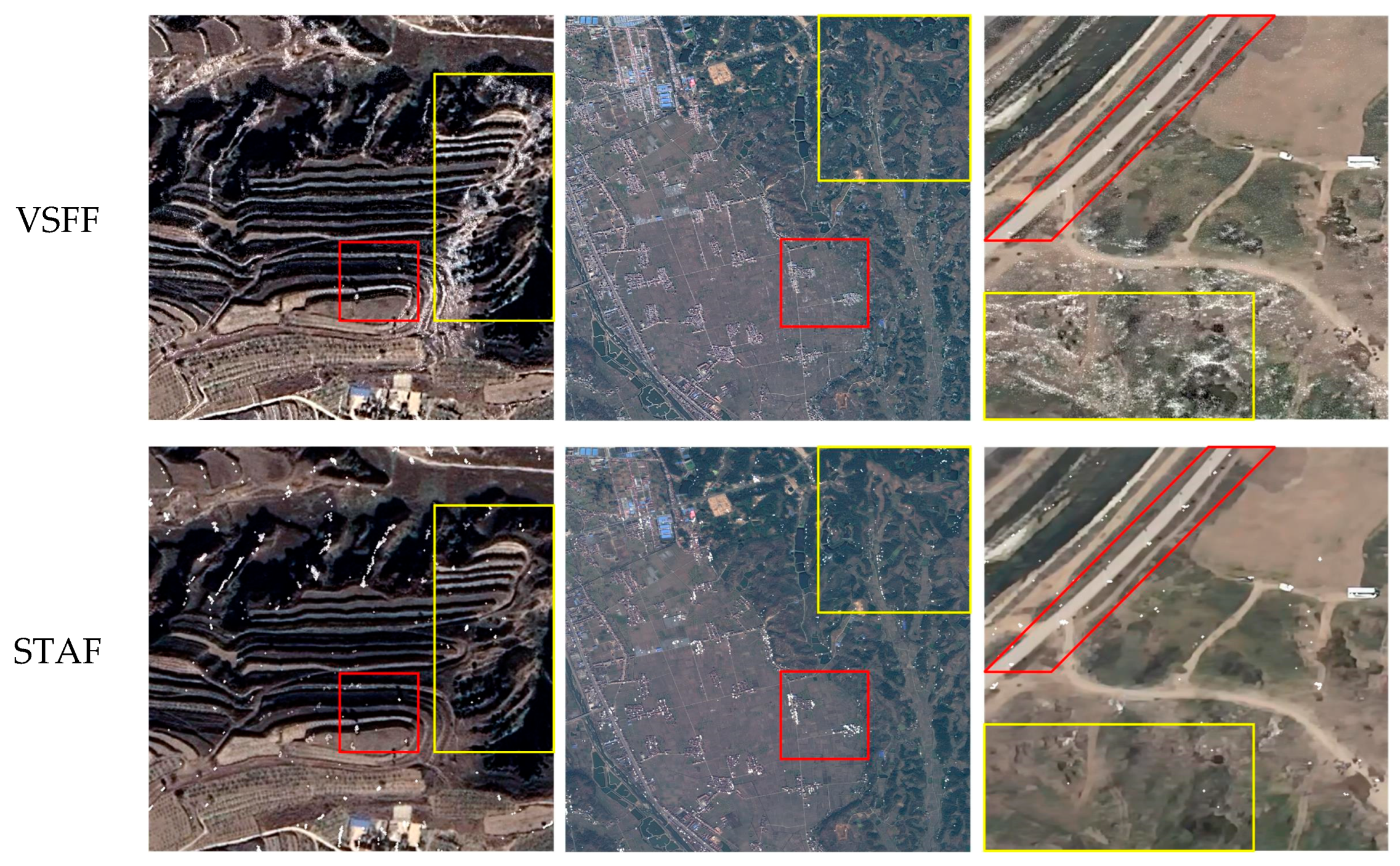

4.1. Visual Evaluation

4.1.1. Microwave Vision-Enhanced Environment Perception Method

4.1.2. Performance Comparison of Fusion Algorithms

4.2. Statistical Evaluation

4.2.1. Microwave Vision-Enhanced Environment Perception Method

4.2.2. Performance Comparison of Fusion Algorithms

- When FS > 0, the fused image retains more information from the optical image, and as FS approaches 0.5, the optical content dominates.

- When FS < 0, the fused image incorporates more information from the SAR image, and as FS approaches −0.5, the SAR content dominates.
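The FS values described above lie between −0.5 and 0.5, which is consistent with a signed form FS = MI(O, F) / (MI(O, F) + MI(S, F)) − 0.5, where MI is mutual information and O, S and F are the optical, SAR and fused images. That form is an assumption inferred from the stated range and sign convention; the fusion symmetry measure of Ramesh and Ranjith (2002) is commonly quoted as the absolute value of this quantity. The Python sketch below estimates MI from a joint grey-level histogram of single-channel images; the function names and the `bins` parameter are hypothetical.

```python
import numpy as np


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 256) -> float:
    """Plug-in mutual information (bits) from the joint grey-level histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)  # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)  # marginal of b, shape (1, bins)
    nz = p_ab > 0                          # skip empty cells (0 * log 0 = 0)
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a * p_b)[nz])))


def fusion_symmetry(optical: np.ndarray, sar: np.ndarray, fused: np.ndarray,
                    bins: int = 256) -> float:
    """Signed fusion symmetry (assumed form): > 0 leans optical, < 0 leans SAR."""
    mi_of = mutual_information(optical, fused, bins)
    mi_sf = mutual_information(sar, fused, bins)
    return mi_of / (mi_of + mi_sf) - 0.5
```

Histogram-based MI estimates are biased upward when there are few pixels per histogram cell, so coarser binning (for example `bins=32`) may give more stable FS values on small images.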

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lu, Y.; Xue, Z.; Xia, G.-S.; Zhang, L. A Survey on Vision-Based UAV Navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

- Hai, J.; Hao, Y.; Zou, F.; Lin, F.; Han, S. A Visual Navigation System for UAV under Diverse Illumination Conditions. Appl. Artif. Intell. 2021, 35, 1529–1549.

- Samma, H.; El-Ferik, S. Autonomous UAV Visual Navigation Using an Improved Deep Reinforcement Learning. IEEE Access 2024, 12, 79967–79977.

- Ceron, A.; Mondragon, I.; Prieto, F. Onboard Visual-Based Navigation System for Power Line Following with UAV. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418763452.

- Shin, K.; Hwang, H.; Ahn, J. Mission Analysis of Solar UAV for High-Altitude Long-Endurance Flight. J. Aerosp. Eng. 2018, 31, 04018010.

- Brown, A.; Anderson, D. Trajectory Optimization for High-Altitude Long-Endurance UAV Maritime Radar Surveillance. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 2406–2421.

- Lindstrom, C.; Christensen, R.; Gunther, J.; Jenkins, S. GPS-Denied Navigation Aided by Synthetic Aperture Radar Using the Range-Doppler Algorithm. Navig. J. Inst. Navig. 2022, 69, navi.533.

- Ahmed, M.M.; Shawky, M.A.; Zahran, S.; Moussa, A.; EL-Shimy, N.; Elmahallawy, A.A.; Ansari, S.; Shah, S.T.; Abdellatif, A.G. An Experimental Analysis of Outdoor UAV Localisation through Diverse Estimators and Crowd-Sensed Data Fusion. Phys. Commun. 2024, 66, 102475.

- Xu, F.; Jin, Y. From the Emergence of Intelligent Science to the Research of Microwave Vision. Sci. Technol. Rev. 2018, 36, 30–44.

- Jin, Y. Multimode Remote Sensing Intelligent Information and Target Recognition: Physical Intelligence of Microwave Vision. J. Radars. 2019, 8, 710–716.

- Xu, F.; Jin, Y. Microwave Vision and Intelligent Perception of Radar Imagery. J. Radars. 2024, 13, 285–306.

- Ye, Y.; Liu, W.; Zhou, L.; Peng, T.; Xu, Q. An Unsupervised SAR and Optical Image Fusion Network Based on Structure-Texture Decomposition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4017605.

- Pal, S.K.; Majumdar, T.J.; Bhattacharya, A.K. ERS-2 SAR and IRS-1C LISS III Data Fusion: A PCA Approach to Improve Remote Sensing Based Geological Interpretation. ISPRS J. Photogramm. Remote Sens. 2007, 61, 281–297.

- Chen, C.-M.; Hepner, G.F.; Forster, R.R. Fusion of Hyperspectral and Radar Data Using the IHS Transformation to Enhance Urban Surface Features. ISPRS J. Photogramm. Remote Sens. 2003, 58, 19–30.

- Chandrakanth, R.; Saibaba, J.; Varadan, G.; Raj, P.A. Feasibility of High Resolution SAR and Multispectral Data Fusion. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24 July 2011; pp. 356–359.

- Hong, S.; Moon, W.M.; Paik, H.Y.; Choi, G.H. Data Fusion of Multiple Polarimetric SAR Images Using Discrete Wavelet Transform (DWT). In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24 June 2002; Volume 6, pp. 3323–3325.

- Lu, Y.; Guo, L.; Li, H.; Li, L. SAR and MS Image Fusion Based on Curvelet Transform and Activity Measure. In Proceedings of the 2011 International Conference on Electric Information and Control Engineering, Wuhan, China, 15 April 2011; pp. 1680–1683.

- Hong, G.; Zhang, Y.; Mercer, B. A Wavelet and IHS Integration Method to Fuse High Resolution SAR with Moderate Resolution Multispectral Images. Photogramm. Eng. Remote Sens. 2009, 75, 1213–1223.

- Xu, J.H.C.; Cheng, X. Comparative Analysis of Different Fusion Rules for SAR and Multi-Spectral Image Fusion Based on NSCT and IHS Transform. In Proceedings of the 2015 International Conference on Computer and Computational Sciences (ICCCS), Greater Noida, India, 27 January 2015; pp. 271–274.

- Chibani, Y. Additive Integration of SAR Features into Multispectral SPOT Images by Means of the à Trous Wavelet Decomposition. ISPRS J. Photogramm. Remote Sens. 2006, 60, 306–314.

- Li, W.; Wu, J.; Liu, Q.; Zhang, Y.; Cui, B.; Jia, Y.; Gui, G. An Effective Multimodel Fusion Method for SAR and Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5881–5892.

- Fu, Y.; Yang, S.; Yan, H.; Xue, Q.; Shi, Z.; Hu, X. Optical and SAR Image Fusion Method with Coupling Gain Injection and Guided Filtering. J. Appl. Remote Sens. 2022, 16, 046505.

- Adrian, J.; Sagan, V.; Maimaitijiang, M. Sentinel SAR-Optical Fusion for Crop Type Mapping Using Deep Learning and Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2021, 175, 215–235.

- Duan, C.; Belgiu, M.; Stein, A. Efficient Cloud Removal Network for Satellite Images Using SAR-Optical Image Fusion. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6008605.

- Sun, Y.; Huang, G.; Zhao, Z.; Liu, B. SAR and Multi-spectral Image Fusion Algorithms with Different Filtering Methods. Remote Sens. Inf. 2019, 34, 114–120.

- Zhang, X.; Zhang, S.; Sun, Z.; Liu, C.; Sun, Y.; Ji, K.; Kuang, G. Cross-Sensor SAR Image Target Detection Based on Dynamic Feature Discrimination and Center-Aware Calibration. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5209417.

- Sun, Z.; Leng, X.; Zhang, X.; Zhou, Z.; Xiong, B.; Ji, K.; Kuang, G. Arbitrary-Direction SAR Ship Detection Method for Multi-Scale Imbalance. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5208921.

- Chen, M.; He, Y.; Wang, T.; Hu, Y.; Chen, J.; Pan, Z. Scale-Mixing Enhancement and Dual Consistency Guidance for End-to-End Semisupervised Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 15685–15701.

- Greco, M.; Pinelli, G.; Kulpa, K.; Samczynski, P.; Querry, B.; Querry, S. The Study on SAR Images Exploitation for Air Platform Navigation Purposes. In Proceedings of the 2011 12th International Radar Symposium (IRS), Leipzig, Germany, 7–9 September 2011; pp. 347–352.

- Greco, M.; Kulpa, K.; Pinelli, G.; Samczynski, P. SAR and InSAR Georeferencing Algorithms for Inertial Navigation Systems. In Proceedings of the Photonics Applications in Astronomy, Communications, Industry, and High-Energy Physics Experiments 2011, Wilga, Poland, 7 October 2011; Volume 8008, pp. 499–507.

- Greco, M.; Querry, S.; Pinelli, G.; Kulpa, K.; Samczynski, P.; Gromek, D.; Gromek, A.; Malanowski, M.; Querry, B.; Bonsignore, A. SAR-Based Augmented Integrity Navigation Architecture. In Proceedings of the 2012 13th International Radar Symposium, Warsaw, Poland, 23–25 May 2012; pp. 225–229.

- Nitti, D.O.; Bovenga, F.; Chiaradia, M.T.; Greco, M.; Pinelli, G. Feasibility of Using Synthetic Aperture Radar to Aid UAV Navigation. Sensors 2015, 15, 18334–18359.

- Gao, S.; Zhong, Y.; Li, W. Robust Adaptive Filtering Method for SINS/SAR Integrated Navigation System. Aerosp. Sci. Technol. 2011, 15, 425–430.

- Zhong, Y.; Gao, S.; Li, W. A Quaternion-Based Method for SINS/SAR Integrated Navigation System. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 514–524.

- Gao, S.; Xue, L.; Zhong, Y.; Gu, C. Random Weighting Method for Estimation of Error Characteristics in SINS/GPS/SAR Integrated Navigation System. Aerosp. Sci. Technol. 2015, 46, 22–29.

- Chang, L.; Hu, B.; Chen, S.; Qin, F. Comments on “Quaternion-Based Method for SINS/SAR Integrated Navigation System”. IEEE Trans. Aerosp. Electron. Syst. 2013, 49, 1400–1402.

- Toss, T.; Dämmert, P.; Sjanic, Z.; Gustafsson, F. Navigation with SAR and 3D-Map Aiding. In Proceedings of the 2015 18th International Conference on Information Fusion (Fusion), Washington, DC, USA, 6–9 July 2015; pp. 1505–1510.

- Lu, J.; Ye, L.; Han, S. A Deep Fusion Method Based on INS/SAR Integrated Navigation and SAR Bias Estimation. IEEE Sens. J. 2020, 20, 10057–10070.

- Lu, J.; Ye, L.; Han, S. Analysis and Application of Geometric Dilution of Precision Based on Altitude-Assisted INS/SAR Integrated Navigation. IEEE Trans. Instrum. Meas. 2021, 70, 9501010.

- Jiang, S.; Wang, B.; Xiang, M.; Fu, X.; Sun, X. Method for InSAR/INS Navigation System Based on Interferogram Matching. IET Radar Sonar Nav. 2018, 12, 938–944.

- Markiewicz, J.; Abratkiewicz, K.; Gromek, A.; Ostrowski, W.; Samczyński, P.; Gromek, D. Geometrical Matching of SAR and Optical Images Utilizing ASIFT Features for SAR-Based Navigation Aided Systems. Sensors 2019, 19, 5500.

- Gambrych, J.; Gromek, D.; Abratkiewicz, K.; Wielgo, M.; Gromek, A.; Samczyński, P. SAR and Orthophoto Image Registration With Simultaneous SAR-Based Altitude Measurement for Airborne Navigation Systems. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5219714.

- Ren, S.; Chang, W.; Jin, T.; Wang, Z. Automated SAR Reference Image Preparation for Navigation. Prog. Electromagn. Res. 2011, 121, 535–555.

- Kong, Y.; Hong, F.; Leung, H.; Peng, X. A Fusion Method of Optical Image and SAR Image Based on Dense-UGAN and Gram–Schmidt Transformation. Remote Sens. 2021, 13, 4274.

- Wu, K.; Gu, L.; Jiang, M. Research on Fusion of SAR Image and Multispectral Image Using Texture Feature Information. In Proceedings of the Earth Observing Systems XXVI, San Diego, CA, USA, 1 August 2021; Volume 11829, pp. 353–366.

- Chu, T.; Tan, Y.; Liu, Q.; Bai, B. Novel Fusion Method for SAR and Optical Images Based on Non-Subsampled Shearlet Transform. Int. J. Remote Sens. 2020, 41, 4590–4604.

- Fu, Y.; Yang, S.; Li, Y.; Yan, H.; Zheng, Y. A Novel SAR and Optical Image Fusion Algorithm Based on an Improved SPCNN and Phase Congruency Information. Int. J. Remote Sens. 2023, 44, 1328–1347.

- Shao, Z.; Wu, W.; Guo, S. IHS-GTF: A Fusion Method for Optical and Synthetic Aperture Radar Data. Remote Sens. 2020, 12, 2796.

- Gong, X.; Hou, Z.; Ma, A.; Zhong, Y.; Zhang, M.; Lv, K. An Adaptive Multiscale Gaussian Co-Occurrence Filtering Decomposition Method for Multispectral and SAR Image Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8215–8229.

- Chen, Y.; Bruzzone, L. Self-Supervised SAR-Optical Data Fusion of Sentinel-1/-2 Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5406011.

- Grohnfeldt, C.; Schmitt, M.; Zhu, X. A Conditional Generative Adversarial Network to Fuse SAR and Multispectral Optical Data for Cloud Removal from Sentinel-2 Images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1726–1729.

- Gao, J.; Yuan, Q.; Li, J.; Zhang, H.; Su, X. Cloud Removal with Fusion of High Resolution Optical and SAR Images Using Generative Adversarial Networks. Remote Sens. 2020, 12, 191.

- Huang, D.; Tang, Y.; Wang, Q. An Image Fusion Method of SAR and Multispectral Images Based on Non-Subsampled Shearlet Transform and Activity Measure. Sensors 2022, 22, 7055.

- Wu, R. Two-Parameter CFAR Ship Detection Algorithm Based on Rayleigh Distribution in SAR Images. Preprints 2021, 2021120280.

- Tu, T.-M.; Huang, P.S.; Hung, C.-L.; Chang, C.-P. A Fast Intensity-Hue-Saturation Fusion Technique with Spectral Adjustment for IKONOS Imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.

- Li, J.; Zhang, J.; Yang, C.; Liu, H.; Zhao, Y.; Ye, Y. Comparative Analysis of Pixel-Level Fusion Algorithms and a New High-Resolution Dataset for SAR and Optical Image Fusion. Remote Sens. 2023, 15, 5514.

- Li, X.; Zhang, G.; Cui, H.; Hou, S.; Wang, S.; Li, X.; Chen, Y.; Li, Z.; Zhang, L. MCANet: A Joint Semantic Segmentation Framework of Optical and SAR Images for Land Use Classification. Int. J. Appl. Earth Obs. 2022, 106, 102638.

- Burt, P.; Adelson, E. The Laplacian Pyramid as a Compact Image Code. IEEE Trans. Commun. 1983, 31, 532–540.

- Selesnick, I.W.; Baraniuk, R.G.; Kingsbury, N.C. The Dual-Tree Complex Wavelet Transform. IEEE Signal Process. Mag. 2005, 22, 123–151.

- Da Cunha, A.L.; Zhou, J.; Do, M.N. The Nonsubsampled Contourlet Transform: Theory, Design, and Applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

- Zhou, Z.; Wang, B.; Li, S.; Dong, M. Perceptual Fusion of Infrared and Visible Images through a Hybrid Multi-Scale Decomposition with Gaussian and Bilateral Filters. Inf. Fusion 2016, 30, 15–26.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and Visible Image Fusion Based on Visual Saliency Map and Weighted Least Square Optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Ye, Y.; Zhang, J.; Zhou, L.; Li, J.; Ren, X.; Fan, J. Optical and SAR Image Fusion Based on Complementary Feature Decomposition and Visual Saliency Features. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5205315.

- Ramesh, C.; Ranjith, T. Fusion Performance Measures and a Lifting Wavelet Transform Based Algorithm for Image Fusion. In Proceedings of the Fifth International Conference on Information Fusion. FUSION 2002. (IEEE Cat.No.02EX5997), Annapolis, MD, USA, 8–11 July 2002; Volume 1, pp. 317–320.

| Methods | EN (Dataset 1) | EN (Dataset 2) | EN (Dataset 3) |
|---|---|---|---|
| Optical vision | 6.679 | 6.339 | 6.899 |
| Microwave vision-enhanced | 7.223 | 6.367 | 6.910 |
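EN in the table above is presumably the information entropy widely used in image-fusion evaluation, EN = −Σ p_i log2 p_i, where p_i is the fraction of pixels at grey level i; higher values indicate more information content. The Python sketch below assumes that standard definition and an 8-bit single-channel image; how colour images were handled for the table is not stated here, and the function name is hypothetical.

```python
import numpy as np


def image_entropy(gray: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of the grey-level histogram of a non-negative integer image."""
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)
    p = hist / hist.sum()
    p = p[p > 0]  # empty grey levels contribute 0 (0 * log 0 = 0)
    return float(-np.sum(p * np.log2(p)))
```

A constant image gives 0 bits, and an image in which all 256 grey levels occur equally often reaches the 8-bit maximum of 8 bits.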

| Dataset | Methods | PSNR | Qabf | SSIM | NIQE |
|---|---|---|---|---|---|
| 1 | LP | −38.940 | 0.438 | 0.411 | 7.384 |
| | DTCWT | −38.408 | 0.407 | 0.424 | 6.364 |
| | NSCT | −38.369 | 0.444 | 0.426 | 6.827 |
| | HMSD | −37.041 | 0.480 | 0.485 | 7.535 |
| | WLS | −38.545 | 0.455 | 0.473 | 5.671 |
| | VSFF | −41.099 | 0.438 | 0.539 | 5.460 |
| | STAF | −26.813 | 0.458 | 0.550 | 4.845 |
| 2 | LP | −40.753 | 0.368 | 0.364 | 12.937 |
| | DTCWT | −40.766 | 0.361 | 0.359 | 13.086 |
| | NSCT | −40.767 | 0.365 | 0.365 | 12.535 |
| | HMSD | −32.670 | 0.536 | 0.443 | 10.100 |
| | WLS | −38.265 | 0.396 | 0.429 | 10.677 |
| | VSFF | −41.239 | 0.181 | 0.510 | 4.112 |
| | STAF | −26.264 | 0.243 | 0.520 | 2.890 |
| 3 | LP | −42.364 | 0.220 | 0.243 | 10.018 |
| | DTCWT | −42.382 | 0.206 | 0.241 | 9.981 |
| | NSCT | −42.367 | 0.224 | 0.248 | 10.360 |
| | HMSD | −37.126 | 0.430 | 0.415 | 8.543 |
| | WLS | −41.734 | 0.262 | 0.305 | 10.624 |
| | VSFF | −42.555 | 0.408 | 0.501 | 6.465 |
| | STAF | −22.058 | 0.278 | 0.544 | 4.195 |

| Methods | FS (Dataset 1) | FS (Dataset 2) | FS (Dataset 3) |
|---|---|---|---|
| LP | −0.122 | −0.358 | −0.165 |
| DTCWT | −0.120 | −0.356 | −0.155 |
| NSCT | −0.089 | −0.314 | −0.122 |
| HMSD | 0.051 | −0.371 | −0.067 |
| WLS | −0.125 | −0.324 | −0.231 |
| VSFF | 0.279 | 0.369 | 0.323 |
| STAF | 0.464 | 0.495 | 0.466 |

| Methods | Running time, Dataset 1 (s) | Running time, Dataset 2 (s) | Running time, Dataset 3 (s) |
|---|---|---|---|
| LP | 0.0138 | 0.0319 | 0.0115 |
| DTCWT | 0.4598 | 0.6633 | 0.2994 |
| NSCT | 3.3055 | 8.5999 | 2.7529 |
| HMSD | 4.2342 | 13.6305 | 4.2150 |
| WLS | 1.7943 | 5.2651 | 1.6287 |
| VSFF | 3.2943 | 9.4742 | 2.6192 |
| STAF | 2.4472 | 8.3759 | 2.5453 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, R.; Wu, D.; Li, P.; Zhao, C.; Zhang, J.; He, J. A Microwave Vision-Enhanced Environmental Perception Method for the Visual Navigation of UAVs. Remote Sens. 2025, 17, 2107. https://doi.org/10.3390/rs17122107

Li R, Wu D, Li P, Zhao C, Zhang J, He J. A Microwave Vision-Enhanced Environmental Perception Method for the Visual Navigation of UAVs. Remote Sensing. 2025; 17(12):2107. https://doi.org/10.3390/rs17122107

Chicago/Turabian Style: Li, Rui, Dewei Wu, Peiran Li, Chenhao Zhao, Jingyi Zhang, and Jing He. 2025. "A Microwave Vision-Enhanced Environmental Perception Method for the Visual Navigation of UAVs" Remote Sensing 17, no. 12: 2107. https://doi.org/10.3390/rs17122107

APA Style: Li, R., Wu, D., Li, P., Zhao, C., Zhang, J., & He, J. (2025). A Microwave Vision-Enhanced Environmental Perception Method for the Visual Navigation of UAVs. Remote Sensing, 17(12), 2107. https://doi.org/10.3390/rs17122107