A Review of Image- and LiDAR-Based Mapping of Shallow Water Scenarios

Abstract

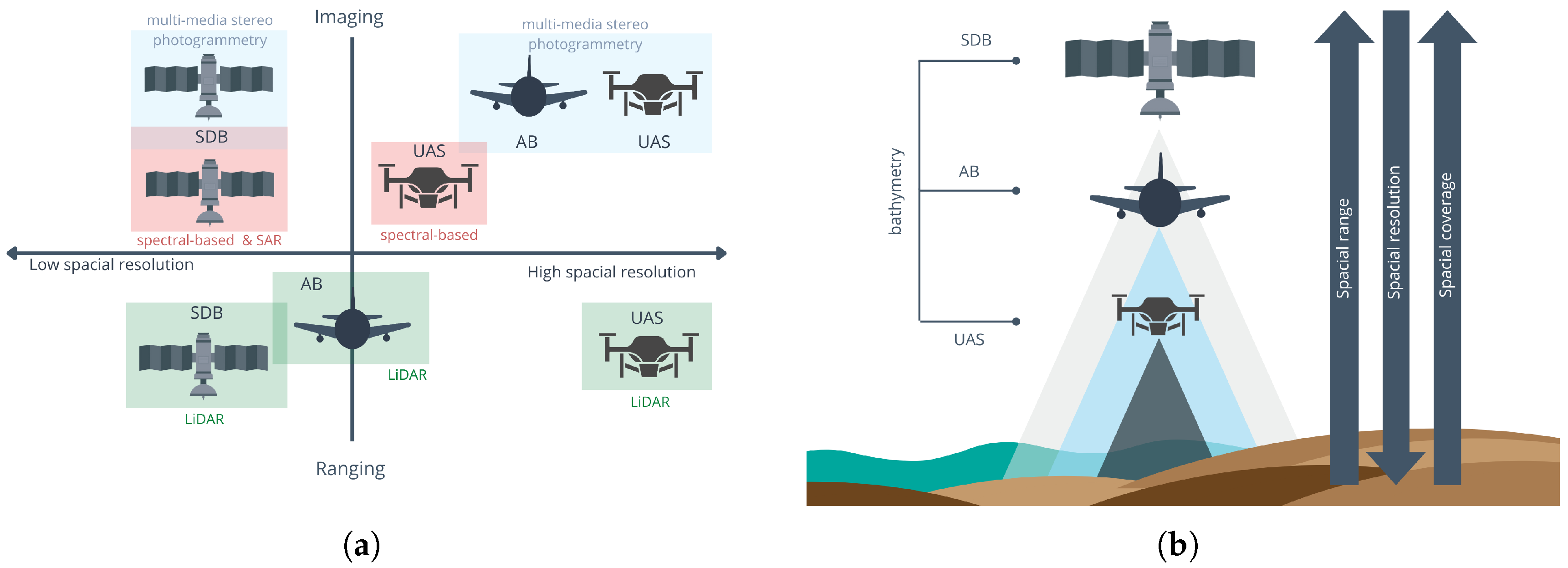

1. Introduction

| Method | Technology | Depth Range [m] | Spatial Resolution | Coverage Area | Accuracy | Influencing Factors | Advantages | Limitations | Description in Text |
|---|---|---|---|---|---|---|---|---|---|
| Image-based | UAS | up to 15 | 0.1–5 cm | Small | Very high | Turbidity, wind, sun glint | Cost-effective, readily available | Small area; affected by weather; requires a visible bottom and a calm water surface | Principles: Section 2.1; platforms: Section 2.2; processing: Section 2.3.1 |
| Image-based | AB | up to 20 | 5–25 cm | Medium | High | Turbidity, wind, sun glint | Larger spatial coverage than UAS | Requires a visible bottom and a calm water surface; high operating costs | Principles: Section 2.1; platforms: Section 2.2; processing: Section 2.3.1 |
| Image-based | SDB (optical) | up to 30 | 0.3–300 m | Large | Varying | Turbidity, sun glint, clouds | Freely available data | Lower accuracy at greater depths; ground truth needed; requires a calm water surface | Principles: Section 2.1; platforms: Section 2.2; processing: Section 2.3.2 |
| Image-based | SDB (SAR) | up to 100 | 10–1000 m | Large | Low (7 m) | Wind direction and speed, strong surface currents | Suitable for turbid waters; insensitive to sunlight and clouds | Requires specific sea conditions (regular swell) | Principles: Section 2.1; platforms: Section 2.2; processing: Section 2.3.2 |
| LiDAR-based | UAS | up to 30 | 20–50 points/m² | Small–medium | High | Water clarity, wind, rain | Lightweight sensors, high resolution | Weather-dependent; high-cost sensor | Principles: Section 3.1; platforms: Section 3.2; processing: Section 3.3.1 |
| LiDAR-based | AB | up to 30 | 50 points/m² | Medium–large | High (10 cm) | Water clarity, surface waves | Simultaneous topographic and bathymetric data | High-cost sensor and operations | Principles: Section 3.1; platforms: Section 3.2; processing: Section 3.3.1 |
| LiDAR-based | SDB | up to 70 | 70 cm | Small (profile swath) | High (15 cm) | Water clarity, bottom material | Wide depth range, freely available data | High cost, limited swath | Principles: Section 3.1; platforms: Section 3.2; processing: Section 3.3.2 |
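Unlike optical SDB, the SAR-based approach in the table does not observe the seabed directly: it infers depth from how swell shortens as it enters shallower water, which is why a regular swell is listed as a prerequisite. The core physical step can be sketched as inverting the linear (Airy) wave dispersion relation ω² = g·k·tanh(k·h) for the depth h. This is a minimal sketch that assumes the wave period T and the local wavelength L have already been extracted from the SAR image (for example, by spectral analysis of image tiles, not shown). The function names are illustrative and do not come from any specific SAR bathymetry package; published methods add further steps such as image denoising.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def depth_from_dispersion(period_s: float, wavelength_m: float) -> float:
    """Invert the linear dispersion relation omega^2 = g k tanh(k h) for depth h [m].

    Assumption: linear wave theory holds and T, L are already known.
    Returns math.inf when omega^2 / (g k) >= 1: the wave then behaves as a
    deep-water wave and carries no usable depth information.
    """
    omega = 2.0 * math.pi / period_s  # angular frequency [rad/s]
    k = 2.0 * math.pi / wavelength_m  # wavenumber [rad/m]
    ratio = omega ** 2 / (G * k)      # equals tanh(k h) under linear theory
    if ratio >= 1.0:
        return math.inf
    return math.atanh(ratio) / k


def wavelength_from_depth(period_s: float, depth_m: float) -> float:
    """Forward model (useful for testing): solve the dispersion relation for L [m]."""
    omega = 2.0 * math.pi / period_s

    def residual(k: float) -> float:
        # Strictly increasing in k for k > 0, so bisection is safe
        return G * k * math.tanh(k * depth_m) - omega ** 2

    lo, hi = 0.0, omega ** 2 / G  # start from the deep-water wavenumber
    while residual(hi) <= 0.0:    # expand until the root is bracketed
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 2.0 * math.pi / (0.5 * (lo + hi))


if __name__ == "__main__":
    T = 10.0                           # swell period [s]
    L = wavelength_from_depth(T, 8.0)  # wavelength of a 10 s swell over 8 m of water
    print(f"L = {L:.1f} m -> depth = {depth_from_dispersion(T, L):.2f} m")
```

The forward function only generates a self-consistent test case; in practice, both L and T would come from the SAR image analysis or from auxiliary wave data.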

2. Image-Based Bathymetry

2.1. Principles of Image-Based Bathymetry

2.2. Platforms for Image-Based Bathymetry

2.3. Image Processing

2.3.1. Processing Images from UAS and AB

2.3.2. Processing Images from SDB

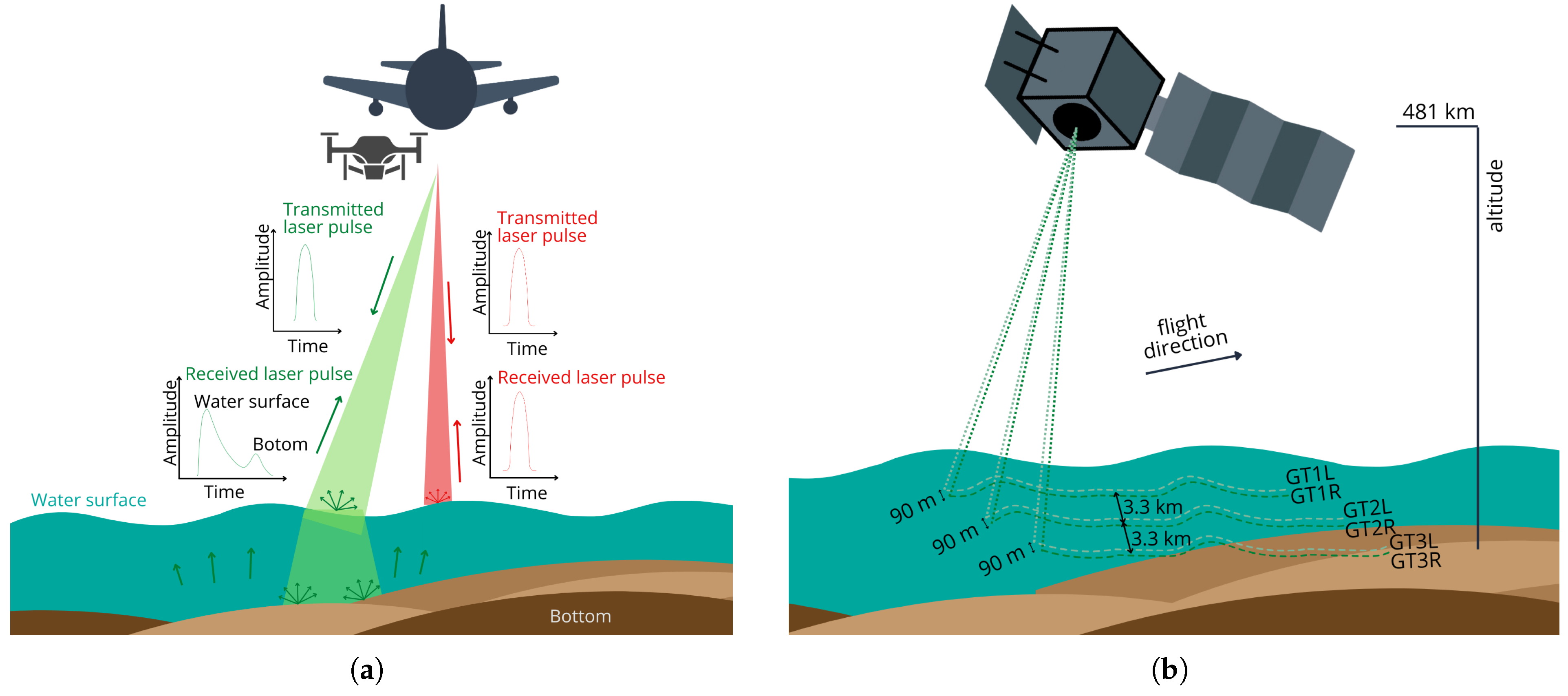

3. LiDAR-Based Bathymetry

3.1. Principles of LiDAR-Based Bathymetry

3.2. Platforms for LiDAR-Based Bathymetry

3.3. LiDAR Data Processing

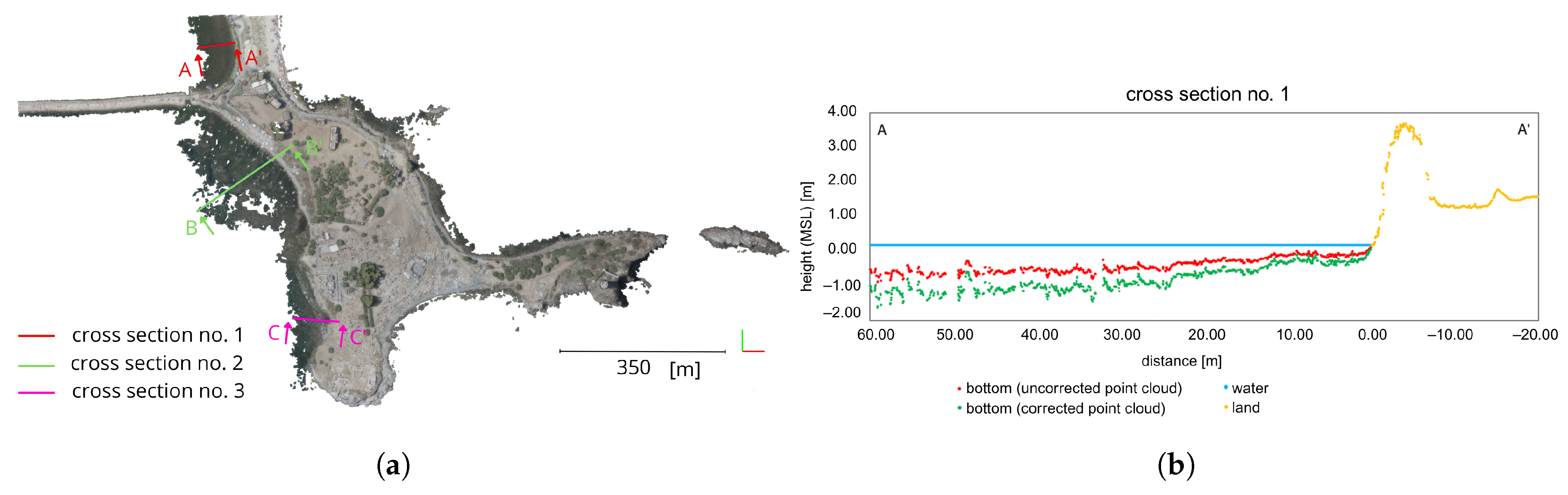

3.3.1. LiDAR Data from UAS or ALB

3.3.2. LiDAR Data from Satellite

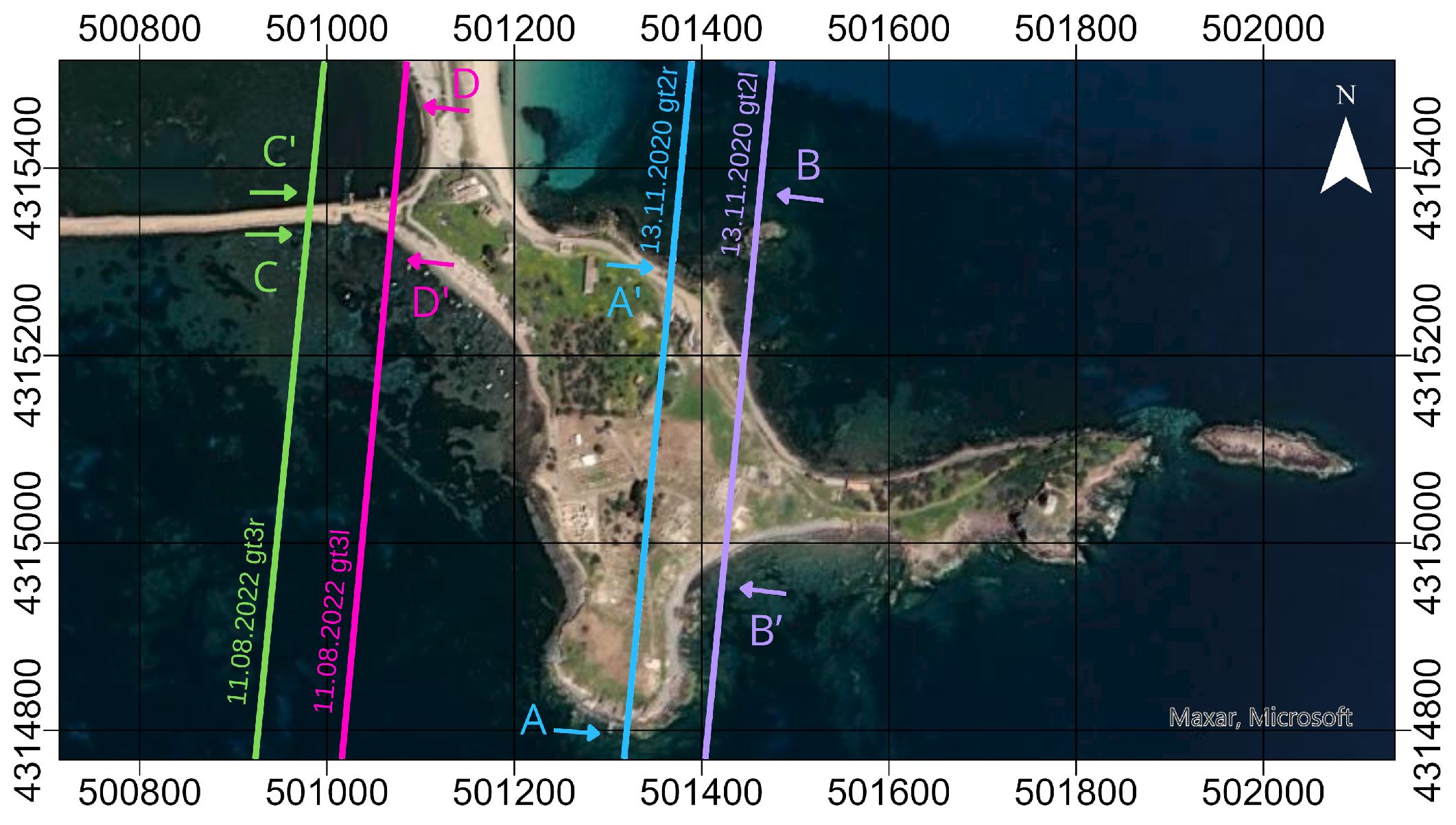

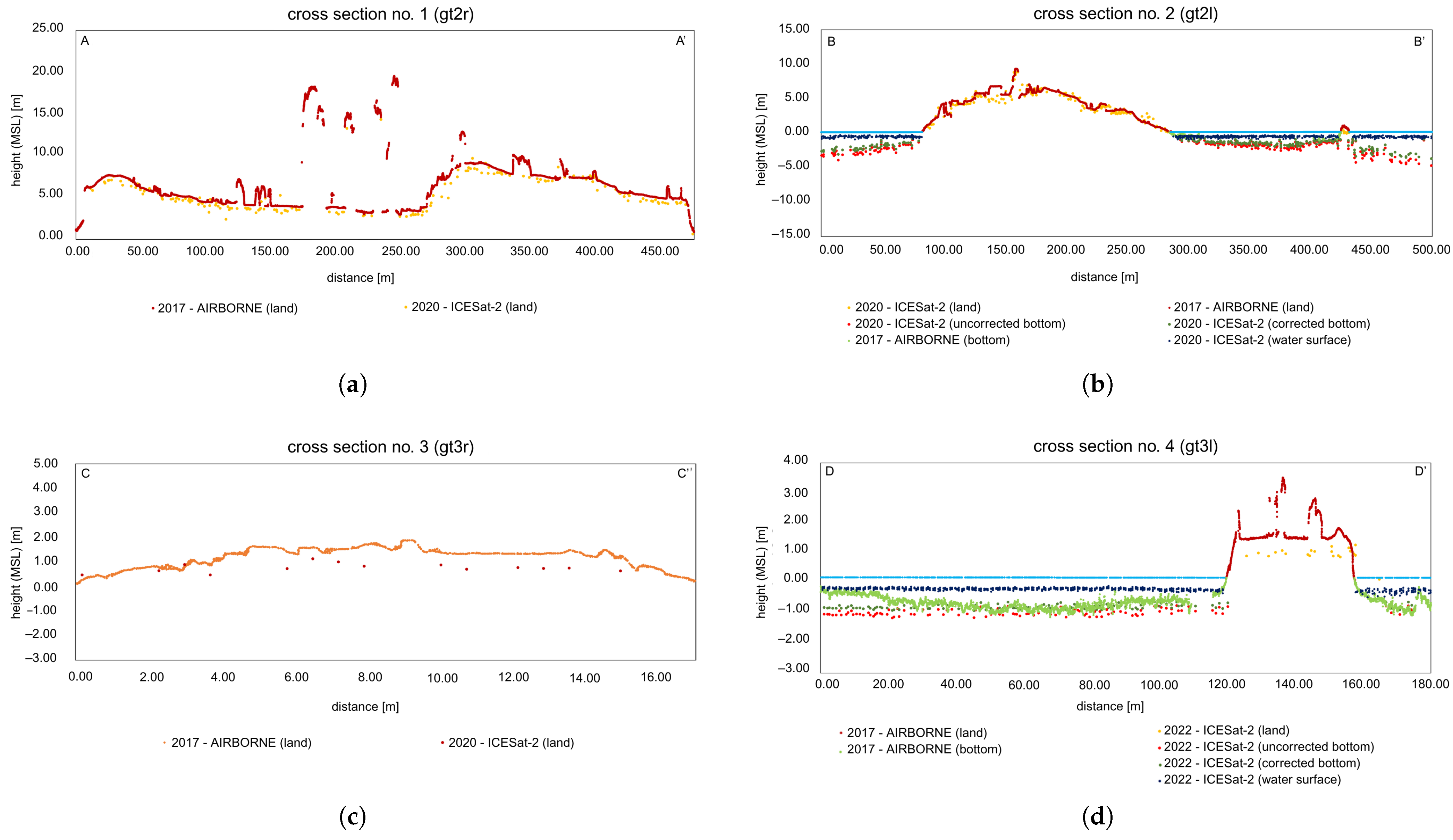

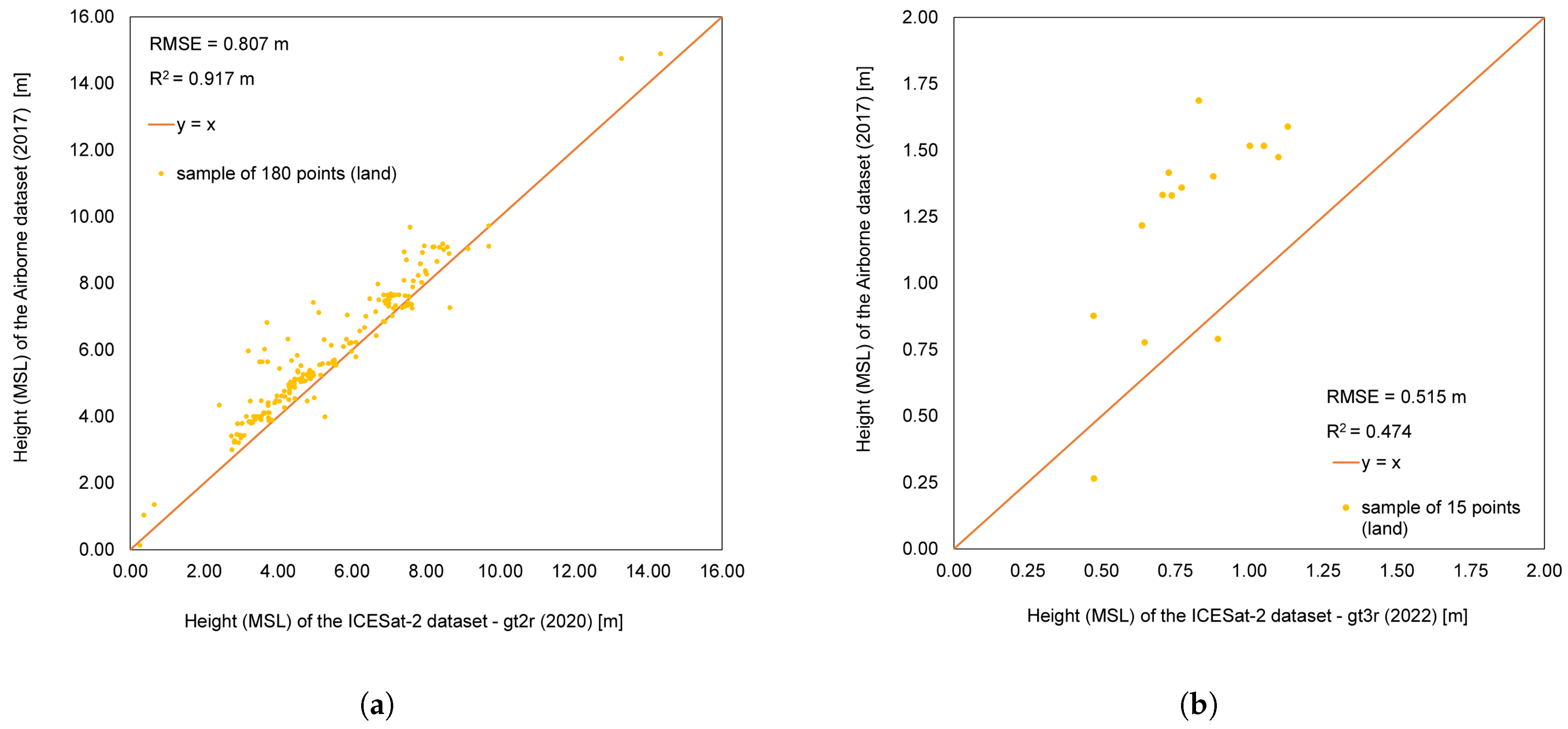

4. Experiments

4.1. Image-Based Bathymetry

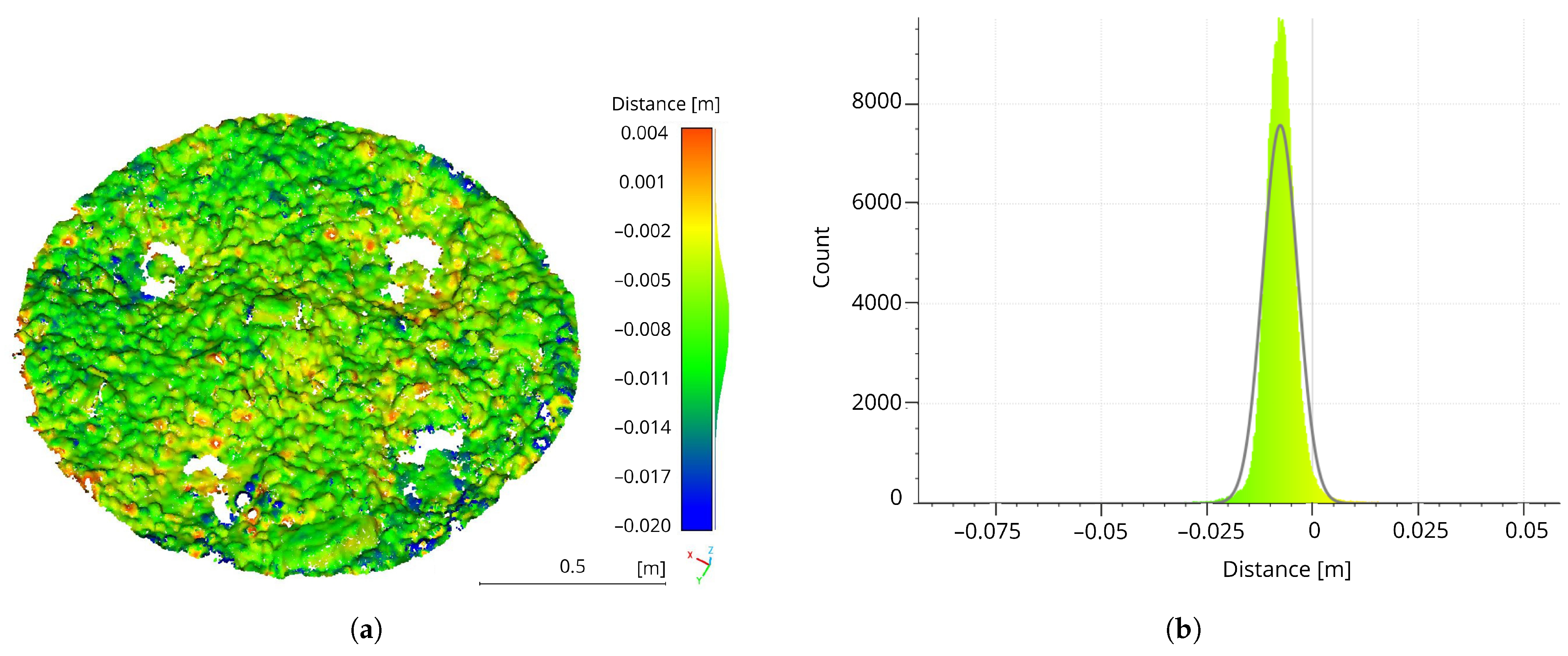

4.1.1. Baby Pool from UAS Images

4.1.2. Airborne Photogrammetry over Nora (Italy)

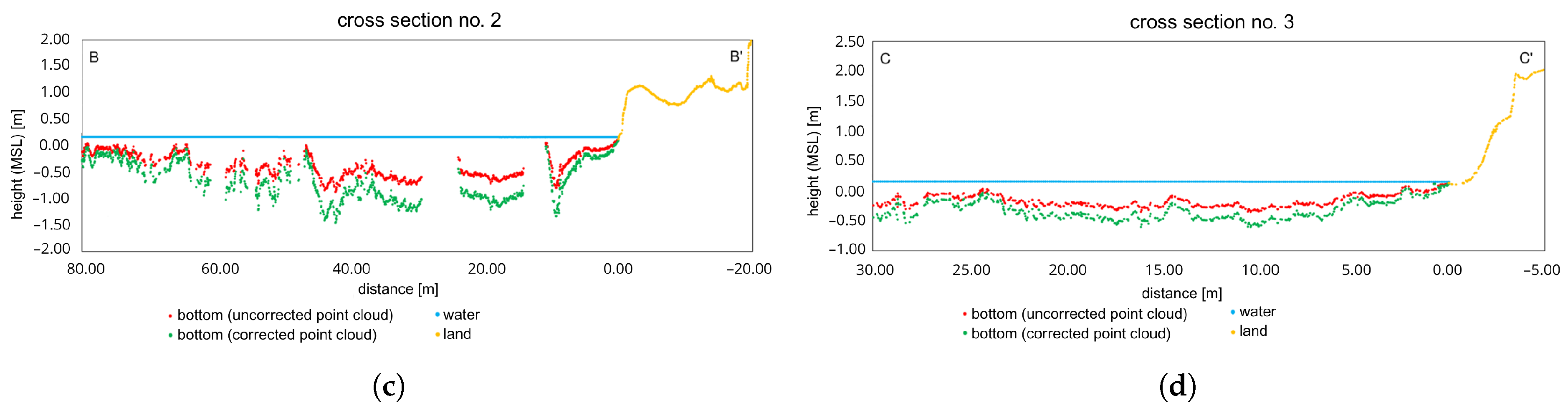

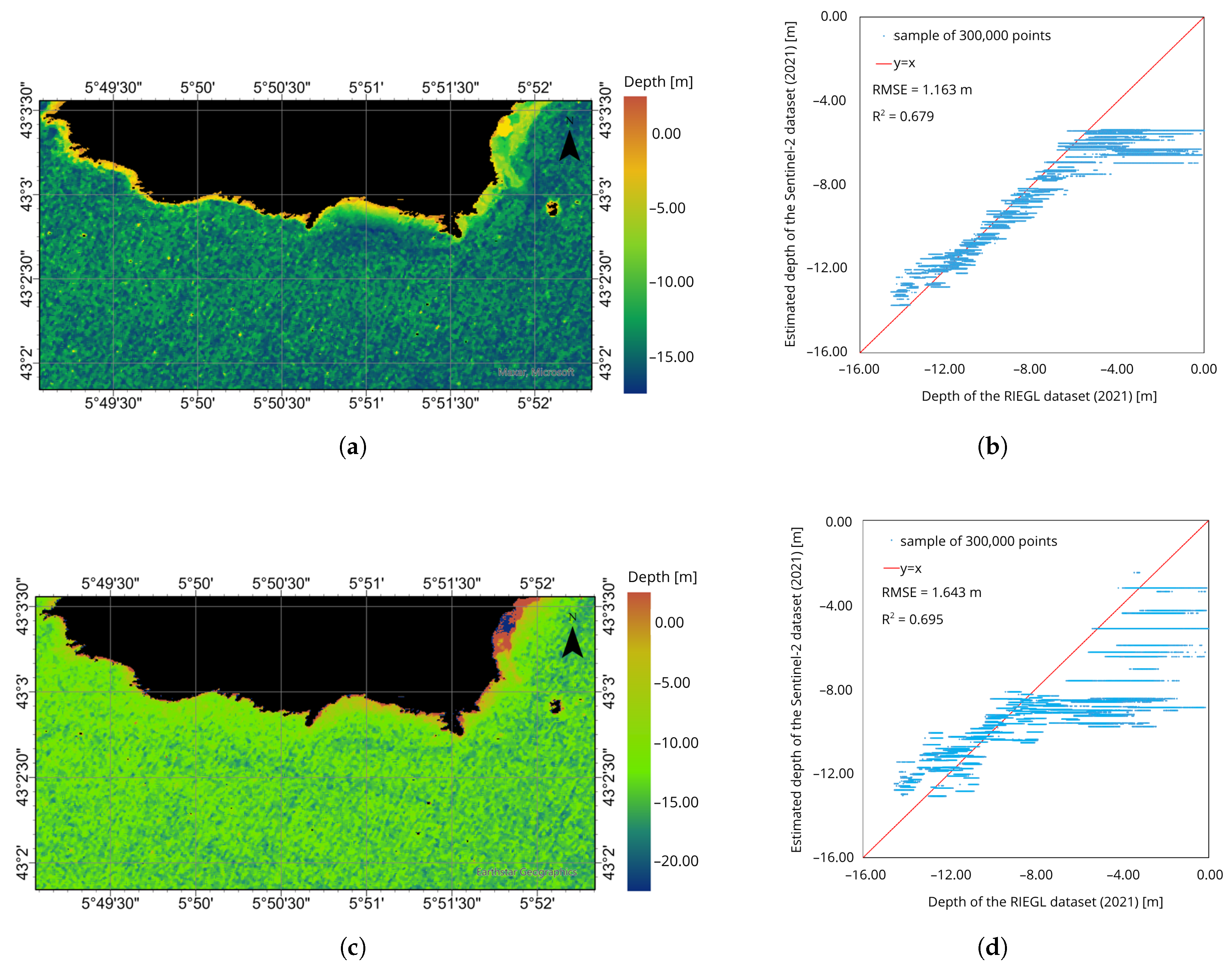

4.1.3. Satellite-Based Image Bathymetry over Les Deux Frères (France)

- Multiple Linear Regression (MLR), an extension of simple linear regression in which a linear model with several predictors is fitted by minimizing the residual sum of squares between the observed targets and the targets estimated by the linear approximation [88];
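In the SDB context, the predictors of such a model are usually per-pixel band values (often log-transformed, as in Lyzenga's linear band model) and the targets are reference depths at calibration points, which is why ground truth is needed for optical SDB. A minimal NumPy sketch of fitting and applying a multiple linear regression by ordinary least squares follows. It assumes the band values are already extracted and co-registered with the reference depths; the function names are illustrative, not those of the tool used in the experiment.

```python
import numpy as np


def fit_mlr(features: np.ndarray, depths: np.ndarray) -> np.ndarray:
    """Fit depth = b0 + b1*x1 + ... + bn*xn by ordinary least squares.

    features: (n_samples, n_predictors) matrix, e.g. log-transformed band values
              (assumption: already co-registered with the reference depths)
    depths:   (n_samples,) reference depths at the calibration points
    Returns the coefficient vector [b0, b1, ..., bn].
    """
    X = np.column_stack([np.ones(len(features)), features])  # prepend an intercept column
    coeffs, *_ = np.linalg.lstsq(X, depths, rcond=None)      # minimizes the residual sum of squares
    return coeffs


def predict_mlr(coeffs: np.ndarray, features: np.ndarray) -> np.ndarray:
    """Apply fitted coefficients to new pixels."""
    return coeffs[0] + features @ coeffs[1:]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reflectance = rng.uniform(0.01, 0.2, size=(200, 3))  # synthetic values for three bands
    x = np.log(reflectance)
    depth = 2.0 - 3.0 * x[:, 0] + 1.5 * x[:, 1]          # synthetic, noise-free relation
    print(fit_mlr(x, depth))
```

Because the synthetic depths are an exact linear function of the log-reflectances, the fitted coefficients reproduce 2.0, -3.0, 1.5 and 0.0 up to floating-point error; with real imagery, the fit depends on turbidity, bottom type, and the spread of calibration depths.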

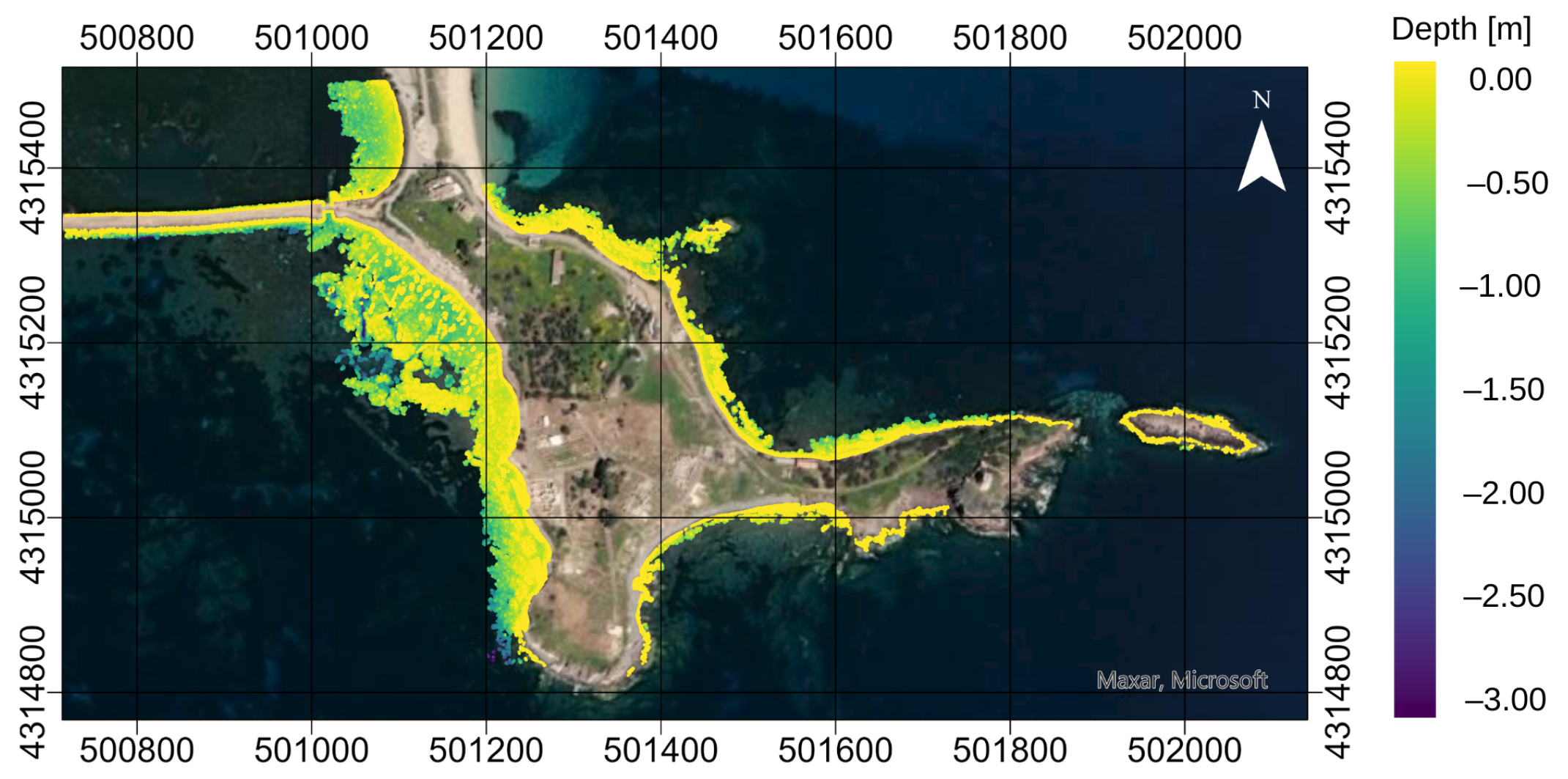

4.2. LiDAR-Based Bathymetry

4.2.1. Les Deux Frères

4.2.2. Nora

5. Discussion

6. Conclusions

- Despite the many techniques proposed for bathymetric measurement, no single method yet combines high resolution and accuracy with low time and cost requirements. Future research should therefore focus on developing new, advanced sensors for bathymetric mapping.

- Another potential research direction is the integration of data from different sensors and platforms, such as unmanned surface vehicles, underwater drones, or underwater photogrammetry. This would provide a more detailed understanding of the study area, the object being studied, and the processes taking place there.

- Processing data from different sensors, whether cameras or LiDAR, requires a customized approach and analysis. Current methods focus mainly on machine learning and deep learning algorithms. Existing methods are continuously being improved and new ones developed to achieve even greater accuracy and a more faithful representation of the actual seabed. There is also a focus on automating data processing, which can significantly improve the efficiency and speed with which results are delivered.

- Future research should also be conducted in more complex marine environments, such as coral reefs and estuaries. Studies should address varying water clarity and heterogeneous bottom texture to accurately reflect real-world conditions. The influence of dynamic water surfaces, including ripples, waves, and tides, on refraction correction and depth estimation should also be considered.

- Another important direction in the development of bathymetric technology is real-time monitoring. Up-to-date bathymetric data on seabed conditions would enable a more accurate characterization of coastal areas, including marine fauna and flora, and more effective detection of objects and potential hazards. Coastal managers could then make better-informed decisions and respond more quickly to emergencies, and the safety of maritime navigation would improve.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Irish, J.; White, T. Coastal engineering applications of high-resolution lidar bathymetry. Coast. Eng. 1998, 35, 47–71.

2. Taddia, Y.; Russo, P.; Lovo, S.; Pellegrinelli, A. Multispectral UAV monitoring of submerged seaweed in shallow water. Appl. Geomat. 2019, 12, 19–34.

3. Janowski, L.; Pydyn, A.; Popek, M.; Tysiąc, P. Non-invasive investigation of a submerged medieval harbour, a case study from Puck Lagoon. J. Archaeol. Sci. Rep. 2024, 58, 104717.

4. Jacketti, M.; Englehardt, J.D.; Beegle-Krause, C. Bayesian sunken oil tracking with SOSim v2: Inference from field and bathymetric data. Mar. Pollut. Bull. 2021, 165, 112092.

5. Kuhn, T.; Rühlemann, C. Exploration of Polymetallic Nodules and Resource Assessment: A Case Study from the German Contract Area in the Clarion-Clipperton Zone of the Tropical Northeast Pacific. Minerals 2021, 11, 618.

6. Janowski, L.; Wróblewski, R.; Rucińska, M.; Kubowicz-Grajewska, A.; Tysiąc, P. Automatic classification and mapping of the seabed using airborne LiDAR bathymetry. Eng. Geol. 2022, 301, 106615.

7. Kaamin, M.; Fadzil, M.A.F.M.; Razi, M.A.M.; Daud, M.E.; Abdullah, N.H.; Nor, A.H.M.; Ahmad, N.F.A. The Shoreline Bathymetry Assessment Using Unmanned Aerial Vehicle (UAV) Photogrammetry. J. Phys. Conf. Ser. 2020, 1529, 032109.

8. Xiong, C.B.; Li, Z.; Zhai, G.J.; Lu, H.L. A new method for inspecting the status of submarine pipeline based on a multi-beam bathymetric system. J. Mar. Sci. Technol. 2016, 24, 21.

9. Le Bas, T.P.; Mason, D.C. Automatic registration of TOBI side-scan sonar and multi-beam bathymetry images for improved data fusion. Mar. Geophys. Res. 1997, 19, 163–176.

10. Coveney, S.; Monteys, X. Integration Potential of INFOMAR Airborne LIDAR Bathymetry with External Onshore LIDAR Data Sets. J. Coast. Res. 2011, 62, 19–29.

11. Janowski, L.; Skarlatos, D.; Agrafiotis, P.; Tysiąc, P.; Pydyn, A.; Popek, M.; Kotarba-Morley, A.M.; Mandlburger, G.; Gajewski, L.; Kołakowski, M.; et al. High resolution optical and acoustic remote sensing datasets of the Puck Lagoon. Sci. Data 2024, 11.

12. Mandlburger, G. Bathymetry from Images, LiDAR, and Sonar: From Theory to Practice. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2021, 89, 69–70.

13. Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641.

14. Agrafiotis, P.G. Shallow Water Bathymetry from Active and Passive UAV-Borne, Airborne and Satellite-Borne Remote Sensing. Available online: https://dspace.lib.ntua.gr/xmlui/bitstream/handle/123456789/54847/Shallow%20water%20bathymetry%20from%20active%20and%20passive%20UAV-borne,%20airborne%20and%20satellite-borne%20remote%20sensing.pdf?sequence=1 (accessed on 12 June 2025).

15. Mandlburger, G. A review of active and passive optical methods in hydrography. Int. Hydrogr. Rev. 2022, 28, 8–52.

16. Wang, E.; Li, D.; Wang, Z.; Cao, W.; Zhang, J.; Wang, J.; Zhang, H. Pixel-level bathymetry mapping of optically shallow water areas by combining aerial RGB video and photogrammetry. Geomorphology 2024, 449, 109049.

17. Agrafiotis, P.; Demir, B. Deep learning-based bathymetry retrieval without in-situ depths using remote sensing imagery and SfM-MVS DSMs with data gaps. ISPRS J. Photogramm. Remote Sens. 2025, 225, 341–361.

18. Specht, M. Methodology for Performing Bathymetric and Photogrammetric Measurements Using UAV and USV Vehicles in the Coastal Zone. Remote Sens. 2024, 16, 3328.

19. Mandlburger, G.; Pfennigbauer, M.; Schwarz, R.; Pöppl, F. A decade of progress in topo-bathymetric laser scanning exemplified by the Pielach river dataset. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, X-1/W1-2023, 1123–1130.

20. McCarthy, M.J.; Otis, D.B.; Hughes, D.; Muller-Karger, F.E. Automated high-resolution satellite-derived coastal bathymetry mapping. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102693.

21. Duplančić Leder, T.; Baučić, M.; Leder, N.; Gilić, F. Optical Satellite-Derived Bathymetry: An Overview and WoS and Scopus Bibliometric Analysis. Remote Sens. 2023, 15, 1294.

22. Kulbacki, A.; Lubczonek, J.; Zaniewicz, G. Acquisition of Bathymetry for Inland Shallow and Ultra-Shallow Water Bodies Using PlanetScope Satellite Imagery. Remote Sens. 2024, 16, 3165.

23. Ashphaq, M.; Srivastava, P.K.; Mitra, D. Review of near-shore satellite derived bathymetry: Classification and account of five decades of coastal bathymetry research. J. Ocean. Eng. Sci. 2021, 6, 340–359.

24. He, J.; Zhang, S.; Cui, X.; Feng, W. Remote sensing for shallow bathymetry: A systematic review. Earth Sci. Rev. 2024, 258, 104957.

25. Aber, J.S.; Marzolff, I.; Ries, J.B.; Aber, S.E. Small-Format Aerial Photography and UAS Imagery: Principles, Techniques and Geoscience Applications; Academic Press: Cambridge, MA, USA, 2019.

26. Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. Shallow Water Bathymetry Mapping from UAV Imagery Based on Machine Learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W10, 9–16.

27. Louis, R.; Dauphin, G.; Zech, Y.; Joseph, A.; Gonomy, N.; Soares-Frazão, S. Assessment of UAV-based photogrammetry for bathymetry measurements in Haiti: Comparison with manual surveys and official data. In Proceedings of the 39th IAHR World Congress, Granada, Spain, 19–24 June 2022; International Association for Hydro-Environment Engineering and Research (IAHR), 2022; pp. 565–574.

28. Cui, Y.; Wang, S.; Du, Y.; Yu, Y.; Liu, G.; Ma, W.; Yin, J.; Yang, X. Shallow Sea Bathymetry Mapping from Satellite SAR Observations Using Deep Learning. In Proceedings of the 2024 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Zhuhai, China, 22–24 November 2024; pp. 1–6.

29. Rossi, L.; Mammi, I.; Pelliccia, F. UAV-Derived Multispectral Bathymetry. Remote Sens. 2020, 12, 3897.

30. Klemas, V.V. Coastal and Environmental Remote Sensing from Unmanned Aerial Vehicles: An Overview. J. Coast. Res. 2015, 315, 1260–1267.

31. Velez-Nicolas, M.; Garcia-Lopez, S.; Barbero, L.; Ruiz-Ortiz, V.; Sanchez-Bellon, A. Applications of Unmanned Aerial Systems (UASs) in Hydrology: A Review. Remote Sens. 2021, 13, 1359.

32. Lejot, J.; Gentile, V.; Demarchi, L.; Spitoni, M.; Piegay, H.; Mroz, M. Bathymetric Mapping of Shallow Rivers with UAV Hyperspectral Data. In Proceedings of the Fifth International Conference on Telecommunications and Remote Sensing, Milan, Italy, 10–11 October 2016; SCITEPRESS—Science and Technology Publications; pp. 43–49.

33. Mudiyanselage, S.D.; Wilkinson, B.; Abd-Elrahman, A. Automated High-Resolution Bathymetry from Sentinel-1 SAR Images in Deeper Nearshore Coastal Waters in Eastern Florida. Remote Sens. 2024, 16, 1.

34. Mavraeidopoulos, A.K.; Pallikaris, A.; Oikonomou, E. Satellite derived bathymetry (SDB) and safety of navigation. Int. Hydrogr. Rev. 2017, 17. Available online: https://journals.lib.unb.ca/index.php/ihr/article/view/26290 (accessed on 12 June 2025).

35. Lyons, M.; Phinn, S.; Roelfsema, C. Integrating Quickbird Multi-Spectral Satellite and Field Data: Mapping Bathymetry, Seagrass Cover, Seagrass Species and Change in Moreton Bay, Australia in 2004 and 2007. Remote Sens. 2011, 3, 42–64.

36. Figliomeni, F.G.; Parente, C. Bathymetry from Satellite Images: A Proposal for Adapting the Band Ratio Approach to IKONOS Data. Appl. Geomat. 2022, 15, 565–581.

37. Viaña-Borja, S.P.; Fernández-Mora, A.; Stumpf, R.P.; Navarro, G.; Caballero, I. Semi-automated Bathymetry Using Sentinel-2 for Coastal Monitoring in the Western Mediterranean. Int. J. Appl. Earth Obs. Geoinf. 2023, 120, 103328.

38. Gülher, E.; Alganci, U. Satellite-Derived Bathymetry Mapping on Horseshoe Island, Antarctic Peninsula, with Open-Source Satellite Images: Evaluation of Atmospheric Correction Methods and Empirical Models. Remote Sens. 2023, 15, 2568.

39. Wicaksono, P.; Djody Harahap, S.; Hendriana, R. Satellite-Derived Bathymetry from WorldView-2 Based on Linear and Machine Learning Regression in the Optically Complex Shallow Water of the Coral Reef Ecosystem of Kemujan Island. Remote Sens. Appl. Soc. Environ. 2024, 33, 101085.

40. Zhao, X.; Qi, C.; Zhu, J.; Su, D.; Yang, F.; Zhu, J. A Satellite-Derived Bathymetry Method Combining Depth Invariant Index and Adaptive Logarithmic Ratio: A Case Study in the Xisha Islands Without In-Situ Measurements. Int. J. Appl. Earth Obs. Geoinf. 2024, 134, 104232.

41. Agrafiotis, P.; Janowski, L.; Skarlatos, D.; Demir, B. MAGICBATHYNET: A Multimodal Remote Sensing Dataset for Bathymetry Prediction and Pixel-Based Classification in Shallow Waters. In Proceedings of the IGARSS 2024 - 2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 249–253.

42. Remondino, F.; Barazzetti, L.; Nex, F.; Scaioni, M.; Sarazzi, D. UAV photogrammetry for mapping and 3D modeling: Current status and future perspectives. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXVIII-1/C22, 25–31.

43. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2013, 6, 1–15.

44. Förstner, W.; Wrobel, B.P. Photogrammetric Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016.

45. Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

46. Mian, O.; Lutes, J.; Lipa, G.; Hutton, J.J.; Gavelle, E.; Borghini, S. Direct georeferencing on small unmanned aerial platforms for improved reliability and accuracy of mapping without the need for ground control points. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W4, 397–402.

47. He, F.; Zhou, T.; Xiong, W.; Hasheminnasab, S.; Habib, A. Automated Aerial Triangulation for UAV-Based Mapping. Remote Sens. 2018, 10, 1952.

48. Remondino, F.; Nocerino, E.; Toschi, I.; Menna, F. A critical review of automated photogrammetric processing of large datasets. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W5, 591–599.

49. Woodget, A.S.; Carbonneau, P.E.; Visser, F.; Maddock, I.P. Quantifying submerged fluvial topography using hyperspatial resolution UAS imagery and structure from motion photogrammetry. Earth Surf. Process. Landforms 2014, 40, 47–64.

50. Woodget, A.S.; Dietrich, J.T.; Wilson, R.T. Quantifying Below-Water Fluvial Geomorphic Change: The Implications of Refraction Correction, Water Surface Elevations, and Spatially Variable Error. Remote Sens. 2019, 11, 2415.

51. Dietrich, J.T. Bathymetric Structure-from-Motion: Extracting shallow stream bathymetry from multi-view stereo photogrammetry. Earth Surf. Process. Landforms 2016, 42, 355–364.

52. Agrafiotis, P.; Karantzalos, K.; Georgopoulos, A.; Skarlatos, D. Correcting Image Refraction: Towards Accurate Aerial Image-Based Bathymetry Mapping in Shallow Waters. Remote Sens. 2020, 12, 322.

53. Agrafiotis, P.; Skarlatos, D.; Georgopoulos, A.; Karantzalos, K. DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sens. 2019, 11, 2225.

54. Mandlburger, G.; Kölle, M.; Nübel, H.; Soergel, U. BathyNet: A Deep Neural Network for Water Depth Mapping from Multispectral Aerial Images. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2021, 89, 71–89.

55. Duplančić Leder, T.; Leder, N.; Peroš, J. Satellite Derived Bathymetry Survey Method: Example of Hramina Bay. Trans. Marit. Sci. 2019, 8, 99–108.

56. Lyzenga, D.; Malinas, N.; Tanis, F. Multispectral bathymetry using a simple physically based algorithm. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2251–2259.

57. Lumban-Gaol, Y.A.; Ohori, K.A.; Peters, R.Y. Satellite-derived bathymetry using convolutional neural networks and multispectral Sentinel-2 images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B3-2021, 201–207.

58. Lyzenga, D.R. Passive remote sensing techniques for mapping water depth and bottom features. Appl. Opt. 1978, 17, 379.

59. Stumpf, R.P.; Holderied, K.; Sinclair, M. Determination of water depth with high-resolution satellite imagery over variable bottom types. Limnol. Oceanogr. 2003, 48, 547–556.

60. Wang, L.; Liu, H.; Su, H.; Wang, J. Bathymetry retrieval from optical images with spatially distributed support vector machines. GIScience Remote Sens. 2018, 56, 323–337.

61. Zhou, S.; Liu, X.; Sun, Y.; Chang, X.; Jia, Y.; Guo, J.; Sun, H. Predicting Bathymetry Using Multisource Differential Marine Geodetic Data with Multilayer Perceptron Neural Network. Int. J. Digit. Earth 2024, 17, 2393255.

62. Pereira, P.; Baptista, P.; Cunha, T.; Silva, P.A.; Romão, S.; Lafon, V. Estimation of the nearshore bathymetry from high temporal resolution Sentinel-1A C-band SAR data: A case study. Remote Sens. Environ. 2019, 223, 166–178.

63. Santos, D.; Fernández-Fernández, S.; Abreu, T.; Silva, P.A.; Baptista, P. Retrieval of nearshore bathymetry from Sentinel-1 SAR data in high energetic wave coasts: The Portuguese case study. Remote Sens. Appl. Soc. Environ. 2022, 25, 100674.

64. Cui, A.; Ma, Y.; Zhang, J.; Wang, R. A SAR wave-enhanced method combining denoising and texture enhancement for bathymetric inversion. Int. J. Appl. Earth Obs. Geoinf. 2025, 139, 104520.

65. Wang, C.; Li, Q.; Liu, Y.; Wu, G.; Liu, P.; Ding, X. A comparison of waveform processing algorithms for single-wavelength LiDAR bathymetry. ISPRS J. Photogramm. Remote Sens. 2015, 101, 22–35.

66. Mandlburger, G. A review of airborne laser bathymetry for mapping of inland and coastal waters. Hydrogr. Nachrichten 2020, 116, 6–15.

67. International Hydrographic Organization. Standards for Hydrographic Surveys (S-44) Edition 6.1.0. 2022. Available online: https://iho.int/uploads/user/pubs/standards/s-44/S-44_Edition_6.1.0.pdf (accessed on 12 January 2025).

68. Dandabathula, G.; Hari, R.; Sharma, J.; Sharma, A.; Ghosh, K.; Padiyar, N.; Poonia, A.; Bera, A.K.; Srivastav, S.K.; Chauhan, P. A High-Resolution Digital Bathymetric Elevation Model Derived from ICESat-2 for Adam’s Bridge. Sci. Data 2024, 11, 705.

69. Xie, C.; Chen, P.; Zhang, S.; Huang, H. Nearshore Bathymetry from ICESat-2 LiDAR and Sentinel-2 Imagery Datasets Using Physics-Informed CNN. Remote Sens. 2024, 16, 511.

70. Saylam, K.; Hupp, J.R.; Averett, A.R.; Gutelius, W.F.; Gelhar, B.W. Airborne lidar bathymetry: Assessing quality assurance and quality control methods with Leica Chiroptera examples. Int. J. Remote Sens. 2018, 39, 2518–2542.

71. Guo, K.; Li, Q.; Wang, C.; Mao, Q.; Liu, Y.; Zhu, J.; Wu, A. Development of a single-wavelength airborne bathymetric LiDAR: System design and data processing. ISPRS J. Photogramm. Remote Sens. 2022, 185, 62–84.

72. Mandlburger, G. Airborne LiDAR: A Tutorial for 2025. LIDAR Mag. 2024. Available online: https://lidarmag.com/2024/12/30/airborne-lidar-a-tutorial-for-2025 (accessed on 12 June 2025).

73. Parrish, C.; Magruder, L.; Neuenschwander, A.; Forfinski-Sarkozi, N.; Alonzo, M.; Jasinski, M. Validation of ICESat-2 ATLAS Bathymetry and Analysis of ATLAS’s Bathymetric Mapping Performance. Remote Sens. 2019, 11, 1634.

74. Eren, F.; Pe’eri, S.; Rzhanov, Y.; Ward, L. Bottom characterization by using airborne lidar bathymetry (ALB) waveform features obtained from bottom return residual analysis. Remote Sens. Environ. 2018, 206, 260–274.

75. Guo, K.; Xu, W.; Liu, Y.; He, X.; Tian, Z. Gaussian Half-Wavelength Progressive Decomposition Method for Waveform Processing of Airborne Laser Bathymetry. Remote Sens. 2017, 10, 35.

76. Kogut, T.; Bakula, K. Improvement of Full Waveform Airborne Laser Bathymetry Data Processing based on Waves of Neighborhood Points. Remote Sens. 2019, 11, 1255.

77. Xing, S.; Wang, D.; Xu, Q.; Lin, Y.; Li, P.; Jiao, L.; Zhang, X.; Liu, C. A Depth-Adaptive Waveform Decomposition Method for Airborne LiDAR Bathymetry. Sensors 2019, 19, 5065.

78. Westfeld, P.; Maas, H.G.; Richter, K.; Weiß, R. Analysis and correction of ocean wave pattern induced systematic coordinate errors in airborne LiDAR bathymetry. ISPRS J. Photogramm. Remote Sens. 2017, 128, 314–325.

79. Westfeld, P.; Richter, K.; Maas, H.G.; Weiß, R. Analysis of the effect of wave patterns on refraction in airborne lidar bathymetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 133–139.

80. Xu, W.; Guo, K.; Liu, Y.; Tian, Z.; Tang, Q.; Dong, Z.; Li, J. Refraction error correction of Airborne LiDAR Bathymetry data considering sea surface waves. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102402.

81. Zhou, G.; Wu, G.; Zhou, X.; Xu, C.; Zhao, D.; Lin, J.; Liu, Z.; Zhang, H.; Wang, Q.; Xu, J.; et al. Adaptive model for the water depth bias correction of bathymetric LiDAR point cloud data. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103253.

82. Zhong, J.; Sun, J.; Lai, Z.; Song, Y. Nearshore bathymetry from ICESat-2 LiDAR and Sentinel-2 Imagery Datasets Using Deep Learning Approach. Remote Sens. 2022, 14, 4229.

83. Jung, J.; Parrish, C.E.; Magruder, L.A.; Herrmann, J.; Yoo, S.; Perry, J.S. ICESat-2 bathymetry algorithms: A review of the current state-of-the-art and future outlook. ISPRS J. Photogramm. Remote Sens. 2025, 223, 413–439.

84. Dietrich, J.T.; Parrish, C.E. Development and Analysis of a Global Refractive Index of Water Data Layer for Spaceborne and Airborne Bathymetric Lidar. Earth Space Sci. 2025, 12, e2024EA004106.

85. Ma, Y.; Xu, N.; Liu, Z.; Yang, B.; Yang, F.; Wang, X.H.; Li, S. Satellite-derived bathymetry using the ICESat-2 lidar and Sentinel-2 imagery datasets. Remote Sens. Environ. 2020, 250, 112047.

86. Chen, L.; Xing, S.; Zhang, G.; Guo, S.; Gao, M. Refraction Correction Based on ATL03 Photon Parameter Tracking for Improving ICESat-2 Bathymetry Accuracy. Remote Sens. 2024, 16, 84.

87. Dietrich, J. pyBathySfM v4.5; GitHub: San Francisco, CA, USA, 2020.

88. Manessa, M.D.M.; Kanno, A.; Sekine, M.; Haidar, M.; Yamamoto, K.; Imai, T.; Higuchi, T. Satellite-Derived Bathymetry using Random Forest algorithm and WorldView-2 imagery. Geoplanning J. Geomat. Plan. 2016, 3, 117.

89. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

90. Wu, Z.; Mao, Z.; Shen, W.; Yuan, D.; Zhang, X.; Huang, H. Satellite-derived bathymetry based on machine learning models and an updated quasi-analytical algorithm approach. Opt. Express 2022, 30, 16773.

91. Harrys, R.M. rifqiharrys/sdb_gui: SDB GUI 3.6.1 (v3.6.1). 2024. Available online: https://doi.org/10.5281/zenodo.11045690 (accessed on 12 January 2025).

92. National Snow and Ice Data Center. ATL03: Advanced Topographic Laser Altimeter System Lidar Waveform Data, Version 6. Available online: https://nsidc.org/data/atl03/versions/6 (accessed on 12 January 2025).

93. Neumann, T.A.; Martino, A.J.; Markus, T.; Bae, S.; Bock, M.R.; Brenner, A.C.; Brunt, K.M.; Cavanaugh, J.; Fernandes, S.T.; Hancock, D.W.; et al. The Ice, Cloud, and Land Elevation Satellite—2 mission: A global geolocated photon product derived from the Advanced Topographic Laser Altimeter System. Remote Sens. Environ. 2019, 233, 111325.

94. Ashphaq, M.; Srivastava, P.K.; Mitra, D. Preliminary examination of influence of Chlorophyll, Total Suspended Material, and Turbidity on Satellite Derived-Bathymetry estimation in coastal turbid water. Reg. Stud. Mar. Sci. 2023, 62, 102920.

95. Caballero, I.; Stumpf, R.P. Confronting turbidity, the major challenge for satellite-derived coastal bathymetry. Sci. Total Environ. 2023, 870, 161898.

96. Saputra, L.R.; Radjawane, I.M.; Park, H.; Gularso, H. Effect of Turbidity, Temperature and Salinity of Waters on Depth Data from Airborne LiDAR Bathymetry. In Proceedings of the 3rd International Conference on Maritime Sciences and Advanced Technology, Pangandaran, Indonesia, 5–6 August 2021; Volume 925, p. 012056.

97. Giribabu, D.; Hari, R.; Sharma, J.; Sharma, A.; Ghosh, K.; Kumar Bera, A.; Kumar Srivastav, S. Prerequisite Condition of Diffuse Attenuation Coefficient Kd(490) for Detecting Seafloor from ICESat-2 Geolocated Photons During Shallow Water Bathymetry. Hydrology 2023, 11, 11.

| Satellite Mission | Spatial Resolution [m] | Revisit Time [days] | Availability | Type |
|---|---|---|---|---|
| Sentinel-1 | 10 | 12 | Open | SAR |
| Sentinel-2 | 10 | 5 | Open | Optical |
| Landsat-8 | 30 | 16 | Open | Optical |
| QuickBird-2 | 0.61–0.72 / 2.40–2.60 ¹ | 2–3 | Commercial | Optical |
| IKONOS-2 | 0.82 / 3.20 ¹ | 3 | Commercial | Optical |
| WorldView-1 | 0.50 / – ¹ | 2–6 | Commercial | Optical |
| WorldView-2 | 0.46 / 1.80 ¹ | 1 | Commercial | Optical |
| WorldView-3 | 0.31–0.34 / 1.24–1.38 ¹ | 1–5 | Commercial | Optical |
| WorldView-4 | 0.31–1.00 / 1.24–4.00 ¹ | 1 | Commercial | Optical |
| GeoEye-1 | 0.41 / 1.65 ¹ | 3 | Commercial | Optical |
| TerraSAR-X | 1–40 | 11 | Commercial | SAR |

| Parameter | VQ-840-GE | ASTRALite EDGE | YellowScan Navigator |
|---|---|---|---|
| Weight [kg] | 9.5 | 5 | 3.7 |
| Measurement rate [kHz] | 50–100 | 20–40 | up to 50 |
| Laser wavelength [nm] | 532 | 532 | 532 |
| Operating altitude [m] | 5600 MSL | 30–50 AGL | 100 AGL |
| Depth performance [Secchi depths] | 1.8–2.0 | 1.5–2 | 2 |
| Footprint at 100 m | 100 mm | 300 mm | – |
| Scan pattern | Nearly elliptical | Linear cross-track | Non-repetitive elliptical |
| Camera | RGB | – | Embedded, global shutter |
| Camera res. [MP] | 12 | – | ND |

| Parameter | CZMIL Super Nova | VQ-880-GH | HawkEye 4X | Leica CoastalMapper |
|---|---|---|---|---|
| Weight [kg] | 287 | 70 | 250 | 180 |
| Operating altitude AGL [m] | 400–800 | 10–1600 | 400–600 / up to 1600 ¹ | 300–6000 / 600–900 ¹ |
| Wavelength [nm] | 532/1064 ¹ | 532/1064 ¹ | 532/1064 ¹ | 515/1064 ¹ |
| Measurement rate [kHz] | 210/240 ¹ | 200–700/150–900 ¹ | 35–500 | 500–1000 / up to 2000 ¹ |
| Scan pattern | Circular | Circular | Elliptical | Circular |
| Beam divergence [mrad] | 7 | 0.7–2.0/0.3 ¹ | 7 | 2.75/0.17 ¹ |
| Footprint [cm] | 280–560 | 0.7–320/0.3–48 ¹ | 280–420 | – |
| Depth performance [Secchi depths] | 3 | 1.5 | 3 | 2 ² |
| Camera | RGB/hyperspectral | RGB | RGB/RGBN | RGB and NIR |
| Camera res. [MP] | 150 | 10 | 5/80 | 250 and 150 |
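The footprint values listed above are consistent, to first order, with the small-angle relation footprint ≈ flying height × full beam divergence: for CZMIL Super Nova, 7 mrad at 400–800 m gives 2.8–5.6 m, i.e., the 280–560 cm in the table. A short sketch of this check follows, assuming a full-angle divergence and ignoring both the beam diameter at the exit aperture and off-nadir incidence (each of which makes real footprints somewhat larger); the function name is illustrative.

```python
def footprint_cm(altitude_m: float, divergence_mrad: float) -> float:
    """Approximate nadir laser footprint diameter [cm].

    Assumption: small-angle geometry with a full-angle divergence; the exit
    aperture diameter and off-nadir incidence are ignored.
    """
    # metres x milliradians = millimetres; divide by 10 to get centimetres
    return altitude_m * divergence_mrad / 10.0


if __name__ == "__main__":
    # (label, (min, max) altitude AGL [m], divergence [mrad]) as listed in the table
    cases = [
        ("CZMIL Super Nova", (400, 800), 7.0),
        ("HawkEye 4X", (400, 600), 7.0),
        ("VQ-880-GH (0.3 mrad channel)", (10, 1600), 0.3),
    ]
    for label, (h_min, h_max), div in cases:
        print(f"{label}: {footprint_cm(h_min, div):.1f}-{footprint_cm(h_max, div):.1f} cm")
```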

| Study Site | Number of Images | Number of Tie Points | Check Points RMSE [pix] | Check Scale Bars Error [mm] | Number of Points in a Dense Cloud | Ground Resolution [mm/pix] |
|---|---|---|---|---|---|---|
| Before water flooding | 34 | 54,876 | 0.357 | 0.003 | 29,758,181 | 1.06 |
| After water flooding | 39 | 53,210 | 0.272 | 0.159 | 29,006,701 | 1.16 |
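Flooding the pool after the dry survey makes the effect of refraction directly measurable: in through-water photogrammetry, the reconstructed bottom appears shallower than it is. A common first-order correction in the cited literature (e.g., the simple correction used by Woodget et al.) multiplies the apparent depth below the water surface by the refractive index of water, about 1.34, which is valid for near-vertical lines of sight; multi-view approaches such as Dietrich's pyBathySfM instead use the actual ray geometry of each camera. The sketch below implements only the simple version, assumes a flat water surface at a known elevation in the same vertical datum as the point cloud, and is not necessarily the correction applied in this experiment.

```python
import numpy as np

N_WATER = 1.34  # approximate refractive index of water for visible light


def correct_refraction(apparent_bottom_z, water_surface_z: float,
                       n_water: float = N_WATER) -> np.ndarray:
    """Small-angle refraction correction for through-water photogrammetry.

    apparent_bottom_z: bottom elevations reconstructed from the images [m]
    water_surface_z:   elevation of the water surface in the same datum [m]
                       (assumption: flat and known)
    Points at or above the water surface are returned unchanged.
    """
    z = np.array(apparent_bottom_z, dtype=float)  # copy, so the input is not modified
    apparent_depth = water_surface_z - z
    submerged = apparent_depth > 0
    # For near-vertical lines of sight, true depth ~ n_water * apparent depth
    z[submerged] = water_surface_z - n_water * apparent_depth[submerged]
    return z


if __name__ == "__main__":
    print(correct_refraction([0.60, 0.40, 0.20], water_surface_z=0.50))
```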

| Date of Acquisition | Flying Altitude [m] | Number of Images | Number of Tie Points | Ground Control Points RMSE [mm] | Check Points RMSE [mm] | Number of Points in a Dense Cloud | Ground Resolution [mm/pix] |
|---|---|---|---|---|---|---|---|
| 18 July 2017 | 633 | 29 | 122,069 | 25.9 | 70 | 110,951,382 | 28.5 |

| Data Source | Date of Acquisition | Type of Data | Point Density [points/m²] | Max. Water Penetration [m] |
|---|---|---|---|---|
| Measurement by RIEGL VQ-840-G | 2021 | Bathymetry, topography | 6–8 | approx. 17.5 |
| Litto3D program by SHOM | 2015 | Bathymetry, topography | 0.04/1 ¹ | approx. 70 |
| LiDAR HD program by IGN | 2021 | Topography | 10 | – |

| Date of Acquisition | Track Used | Description of the Track |
|---|---|---|
| 13 November 2020 | gt2l | Strong ATLAS beam |
| 13 November 2020 | gt2r | Weak ATLAS beam |
| 11 August 2022 | gt3l | Strong ATLAS beam |
| 11 August 2022 | gt3r | Weak ATLAS beam |
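ATL03 photon heights are computed as if the laser pulse travelled at its speed in air, so photons returned from below the water surface appear too deep. For near-nadir geometry, a reasonable first approximation for ICESat-2, the vertical correction reduces to scaling the apparent depth below the surface by n_air / n_water; the full correction described by Parrish et al. also accounts for the refraction angle and the resulting horizontal shift. The sketch assumes that surface and seafloor photons from the strong and weak beams listed above have already been classified (for example, from their `h_ph` heights) and that a water-surface height is available for each photon. The refractive indices are values commonly used in the ICESat-2 bathymetry literature for 532 nm; Dietrich and Parrish discuss their spatial variability.

```python
import numpy as np

N_AIR = 1.00029    # refractive index of air (commonly used value at 532 nm)
N_WATER = 1.34116  # refractive index of water (commonly used value at 532 nm)


def refraction_correct_heights(photon_h: np.ndarray, surface_h: np.ndarray) -> np.ndarray:
    """First-order (near-nadir) refraction correction for subsurface photon heights.

    photon_h:  heights of classified seafloor photons [m], e.g. from ATL03 h_ph
    surface_h: water-surface height at each photon [m]
    Assumption: vertical correction only; the horizontal shift is neglected.
    Photons at or above the surface are returned unchanged.
    """
    apparent_depth = surface_h - photon_h
    corrected_depth = np.where(apparent_depth > 0,
                               apparent_depth * N_AIR / N_WATER,  # slower light in water
                               apparent_depth)
    return surface_h - corrected_depth


if __name__ == "__main__":
    print(refraction_correct_heights(np.array([-10.0, -2.0]), np.array([0.0, 0.0])))
```

With these indices, a photon that appears 10 m below the surface is moved up to roughly 7.46 m depth.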

| Study Site | Technology | Method | Spatial Resolution | Flying Height [m] | Technology of In Situ Data | Method of In Situ Data | RMSE [m] | R² |
|---|---|---|---|---|---|---|---|---|
| Baby pool | UAS | Image-based | 2 mm | 5 | UAS | Image-based | 0.011 | 0.889 |
| Nora | AB | Image-based | 30 mm | 634 | – | – | – | – |
| Nora | SDB | LiDAR-based | 1–2 points/m² | 496,000 | AB | Image-based | 0.619 | 0.773 |
| Les Deux Frères | SDB | Image-based | 10 m | 786,000 | UAS | LiDAR | 1.163/1.643 | 0.679/0.695 |
| Les Deux Frères | UAS | LiDAR-based | 6–8 points/m² | 150 | AB | LiDAR (Litto3D) | 0.744 | 0.985 |
| Les Deux Frères | UAS | LiDAR-based | 6–8 points/m² | 150 | AB | LiDAR (HDLiDAR) | 0.794 | 0.994 |
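The accuracy figures above compare estimated depths with reference depths at co-located points. A minimal sketch of the two metrics follows, assuming the estimated and reference depths are already paired point by point. R² is computed here as the coefficient of determination 1 − SS_res/SS_tot; this is an assumption, since the table does not state whether the squared Pearson correlation of a regression fit was used instead.

```python
import numpy as np


def rmse(estimated: np.ndarray, reference: np.ndarray) -> float:
    """Root-mean-square error between paired depth estimates and references [m]."""
    diff = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def r_squared(estimated: np.ndarray, reference: np.ndarray) -> float:
    """Coefficient of determination of the estimates with respect to the references.

    Assumption: R^2 = 1 - SS_res / SS_tot (not the squared Pearson correlation).
    """
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    ss_res = np.sum((ref - est) ** 2)
    ss_tot = np.sum((ref - ref.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

RMSE carries the units of depth and tends to grow with the depth range, so values from a shallow pool and from a coastal site are not directly comparable; R² is dimensionless but sensitive to the spread of the reference depths.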

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kujawa, P.; Remondino, F. A Review of Image- and LiDAR-Based Mapping of Shallow Water Scenarios. Remote Sens. 2025, 17, 2086. https://doi.org/10.3390/rs17122086
