Optimizing Data Consistency in UAV Multispectral Imaging for Radiometric Correction and Sensor Conversion Models

Abstract

1. Introduction

2. Materials and Methods

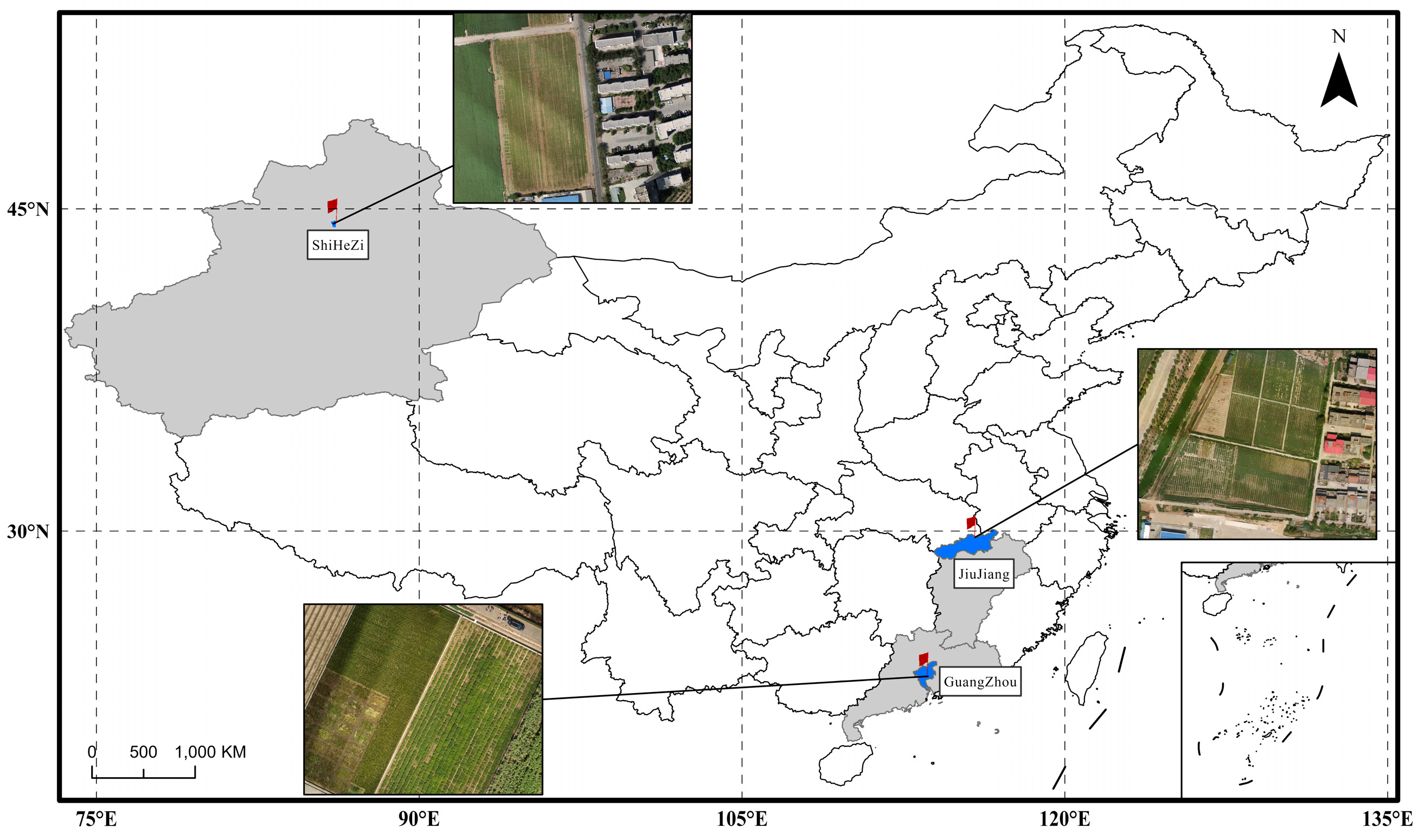

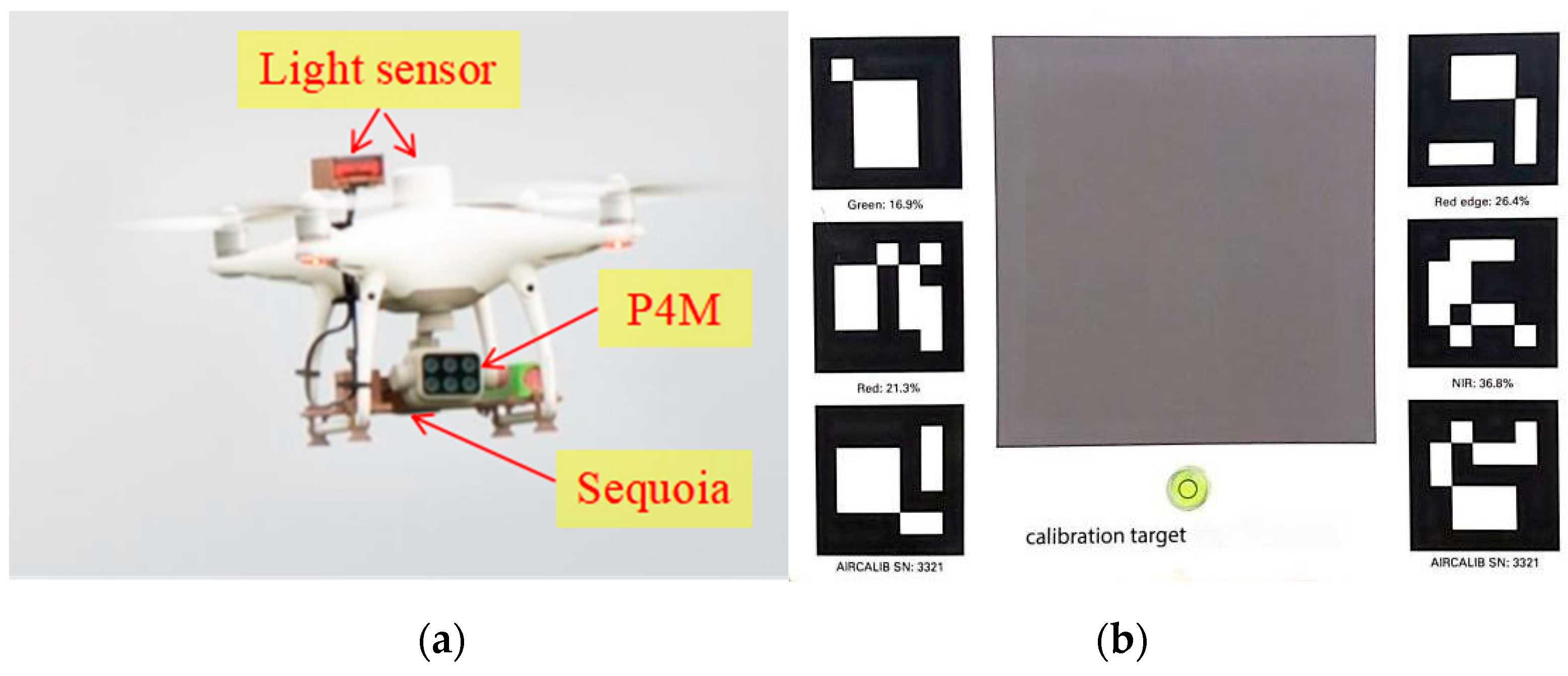

2.1. Study Area and Data Acquisition

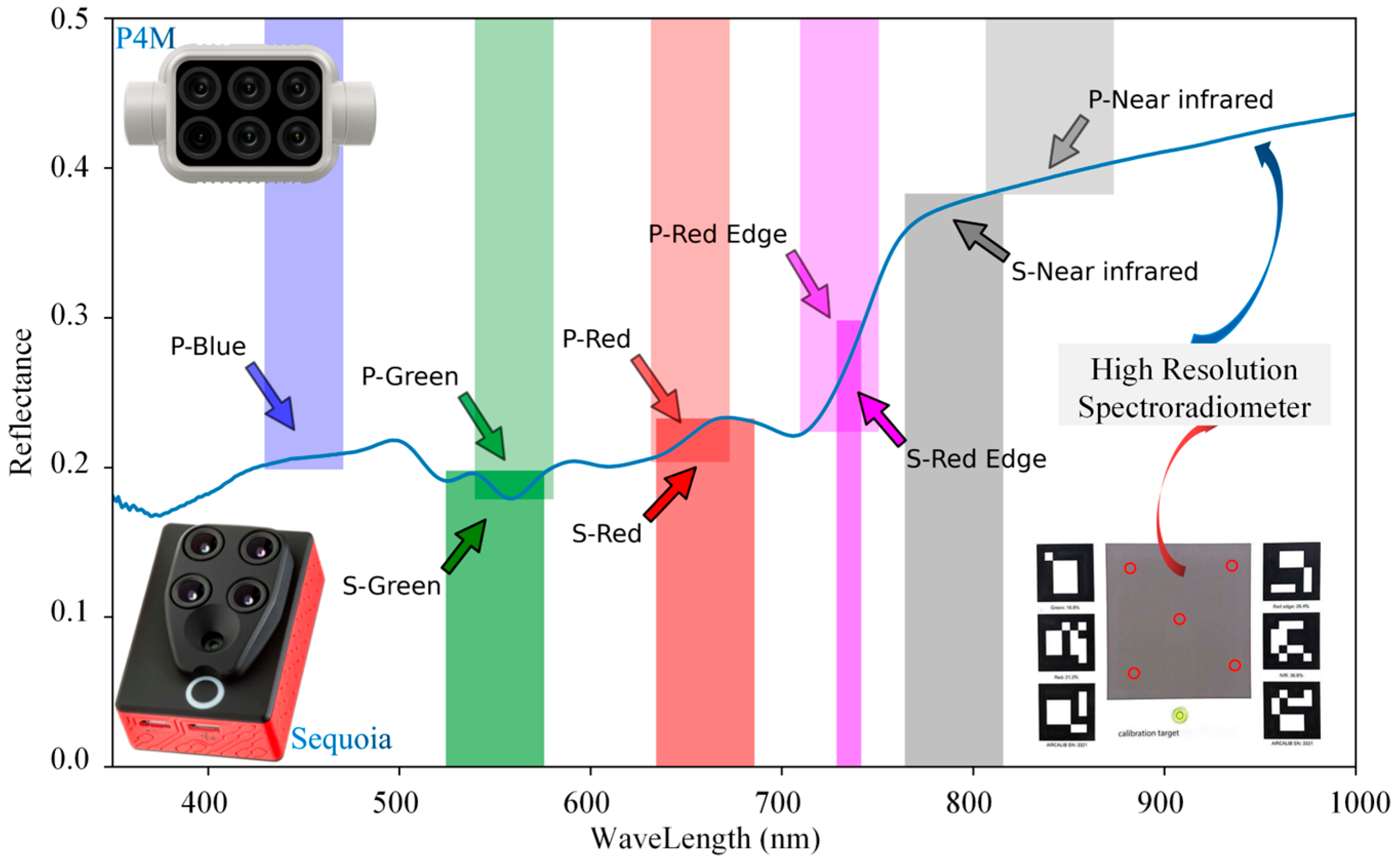

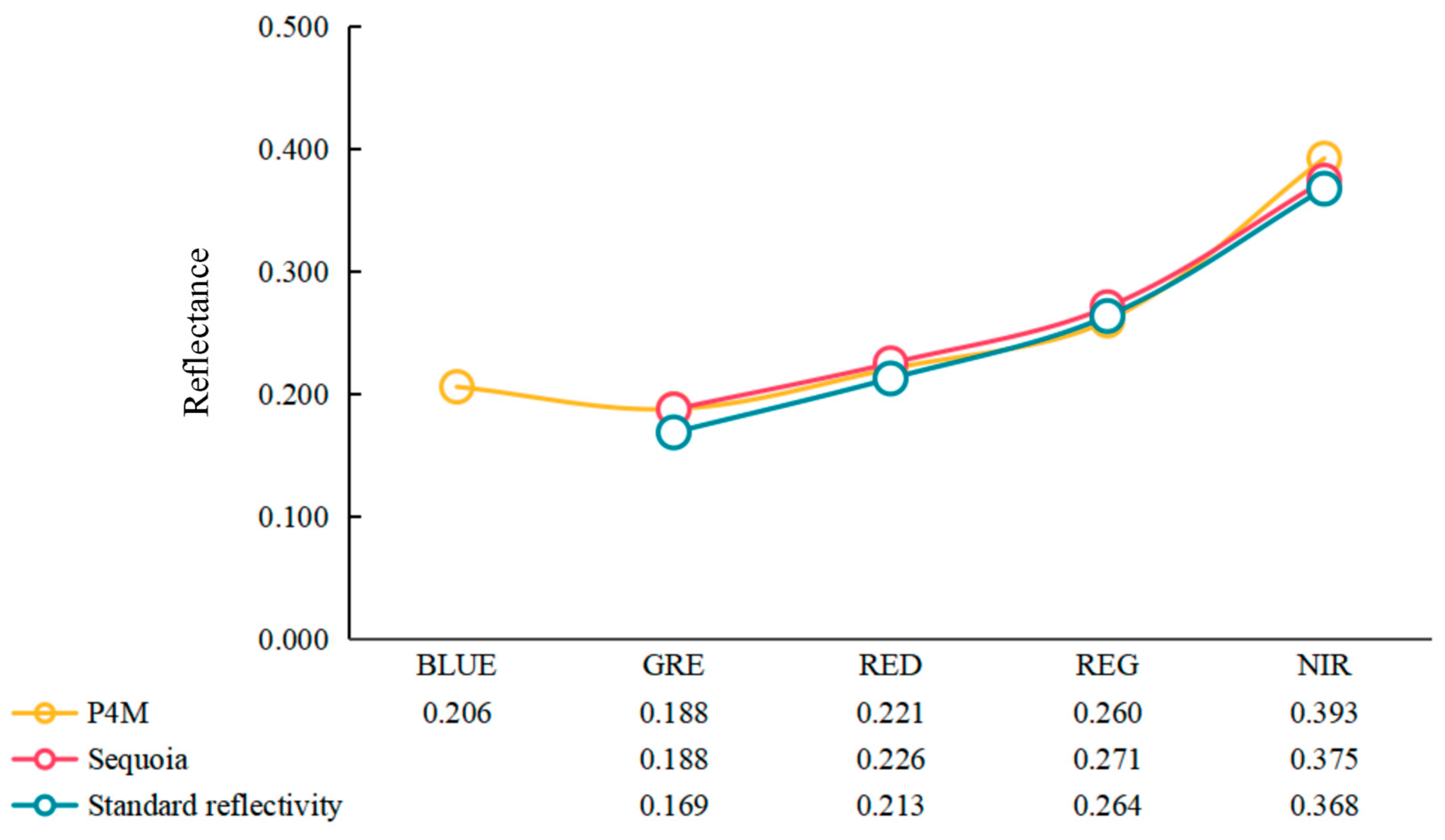

2.2. Radiometric Calibration Methodology

2.3. Image Pre-Processing

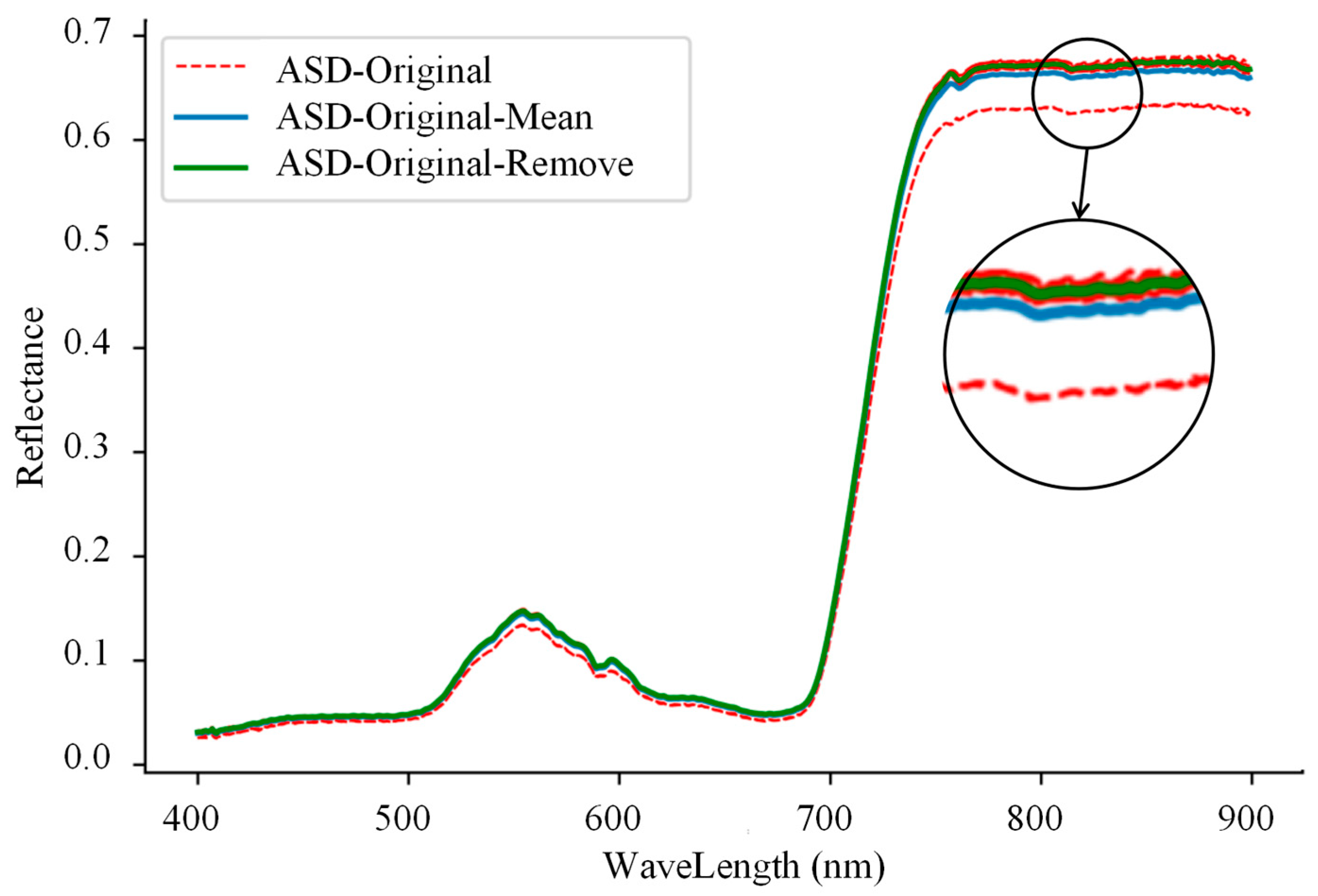

2.4. Spectral Reflectance Processing

2.5. Image Reflectance and Vegetation Index Consistency Test Method

2.6. Evaluation Method of Spectral Data and Vegetation Index Accuracy

2.7. Conversion Model and Feature Importance Analysis

3. Results

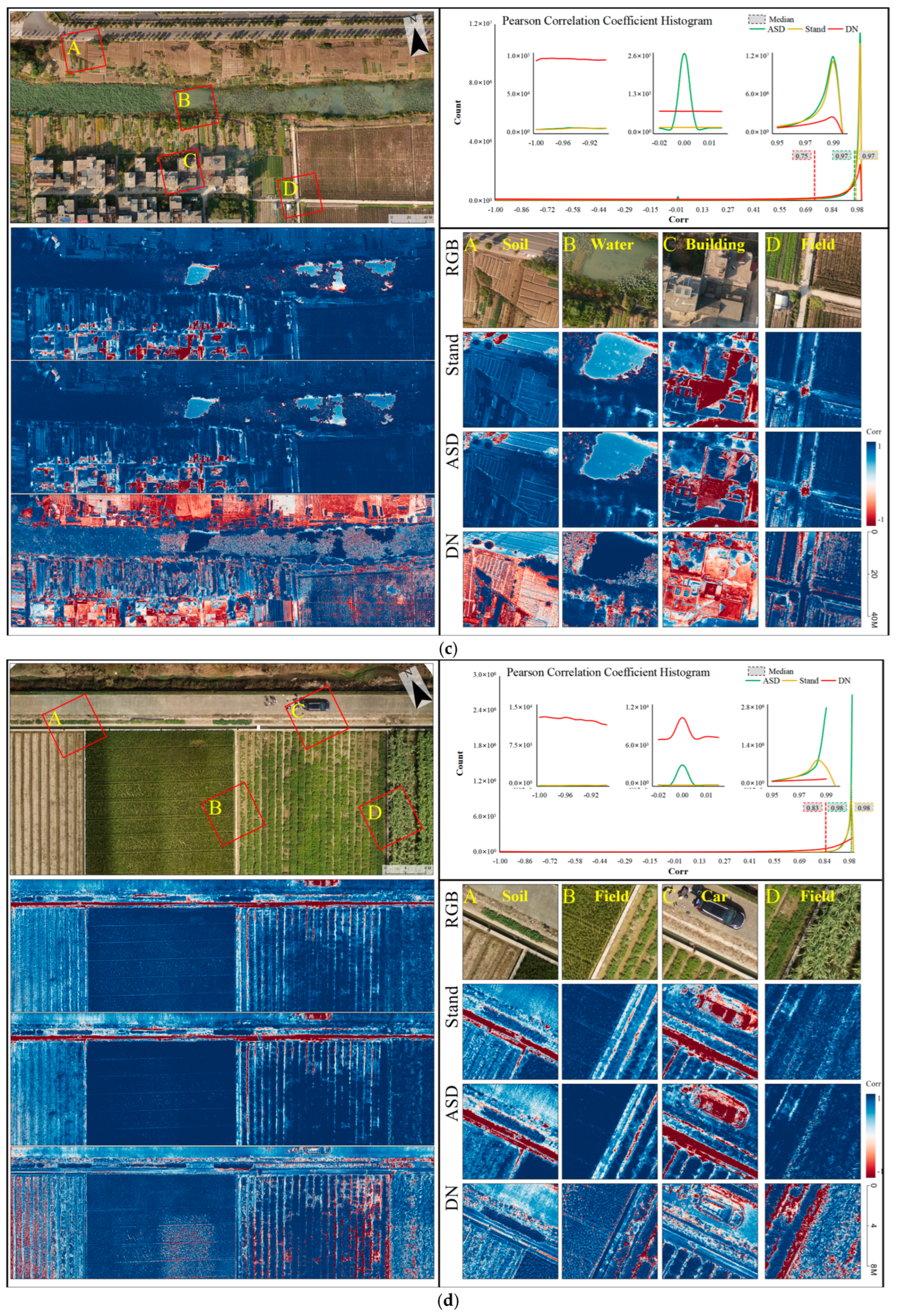

3.1. Consistency Analysis of Image Data

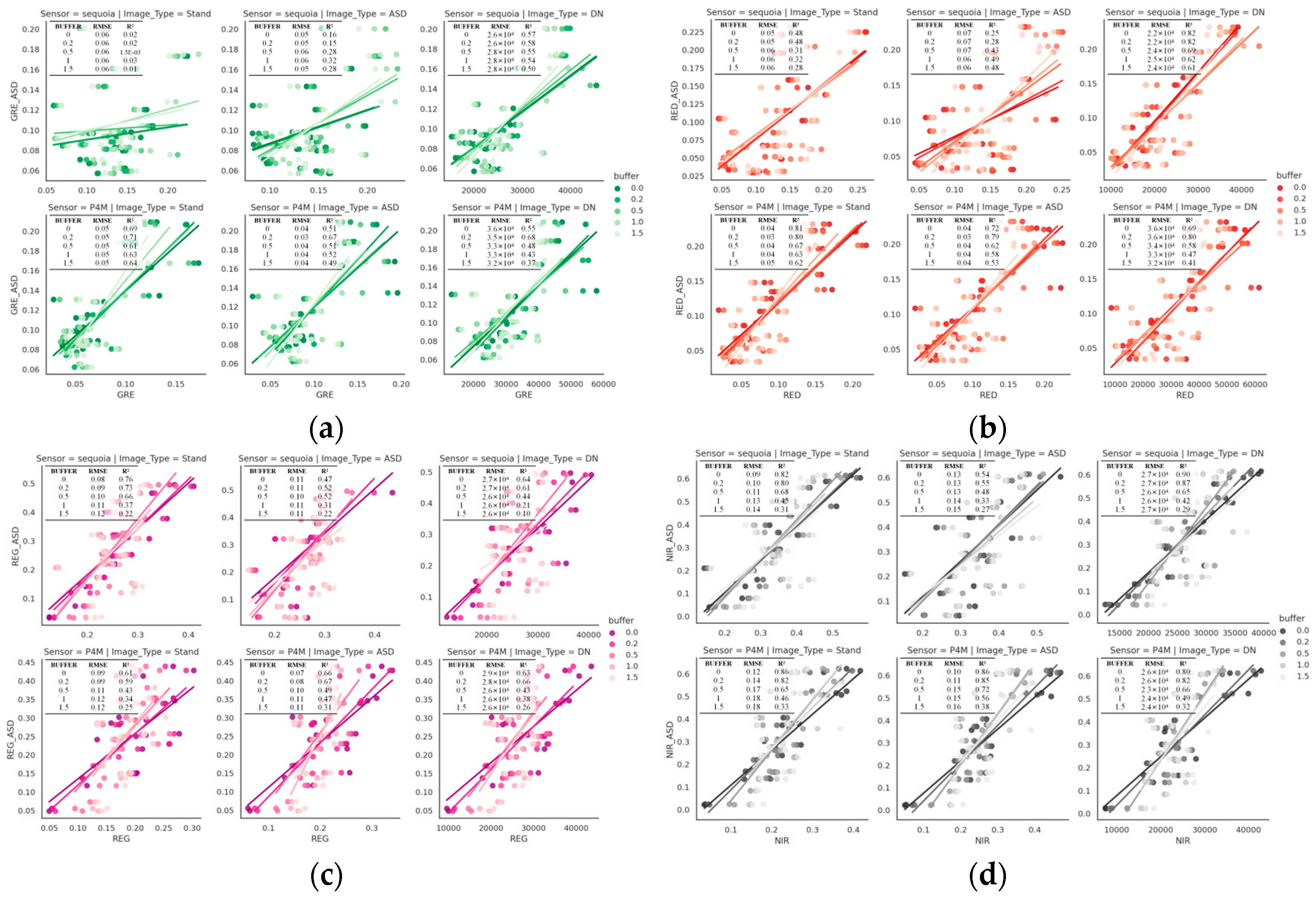

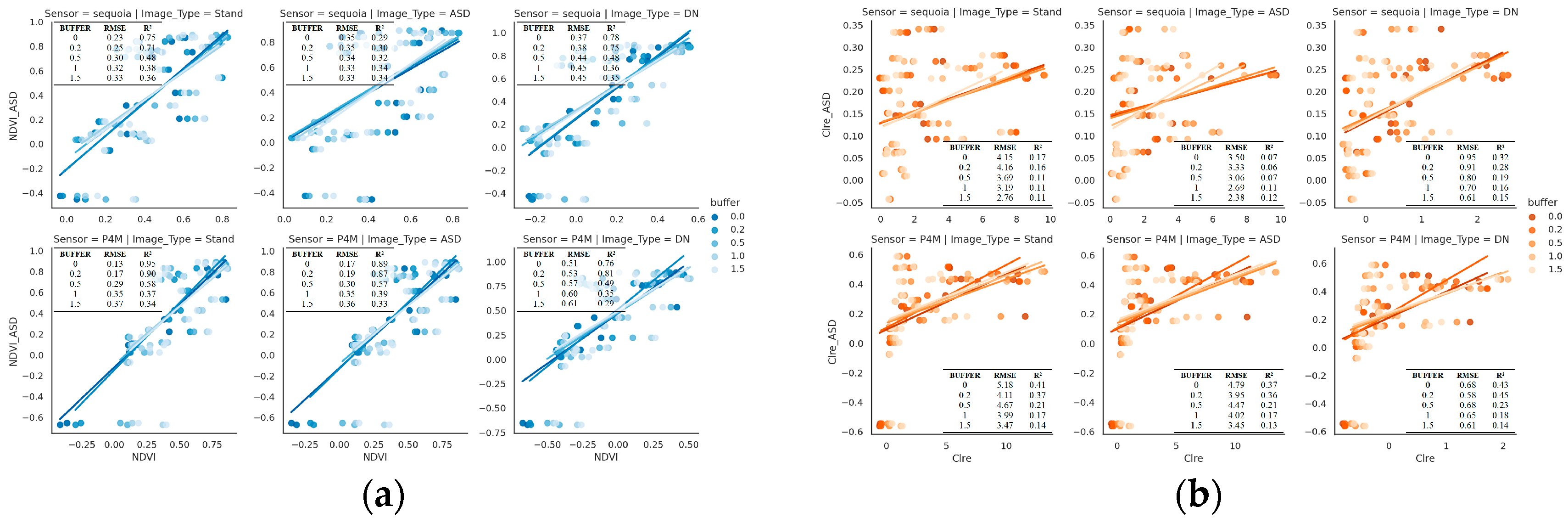

3.2. Consistency Test of Each Band Value and Vegetation Index

3.3. Spectral Data and Vegetation Index Value Accuracy Evaluation

3.4. Conversion Model Evaluation

4. Discussion

4.1. Impact of Radiometric Correction on Data Consistency

4.2. Comparative Accuracy of Sensors

4.3. Conversion Model

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Band/VI | ALL | | | ASD | | | Stand | | | DN | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 |
| GRE | 0.90 | 0.92 | 0.95 | 0.72 | 0.64 | 0.57 | 0.59 | 0.51 | 0.32 | 0.58 | 0.24 | 0.86 |
| RED | 0.88 | 0.95 | 0.97 | 0.78 | 0.73 | 0.57 | 0.74 | 0.69 | 0.37 | 0.68 | 0.34 | 0.92 |
| REG | 0.97 | 0.97 | 0.97 | 0.58 | 0.59 | 0.56 | 0.48 | 0.48 | 0.38 | 0.77 | 0.59 | 0.93 |
| NIR | 0.97 | 0.98 | 0.97 | 0.62 | 0.60 | 0.50 | 0.57 | 0.55 | 0.34 | 0.76 | 0.63 | 0.92 |
| NDVI | 0.90 | 0.90 | 0.55 | 0.95 | 0.92 | 0.54 | 0.93 | 0.90 | 0.51 | 0.41 | 0.38 | 0.31 |
| RVI | 0.90 | 0.88 | 0.57 | 0.91 | 0.88 | 0.55 | 0.89 | 0.87 | 0.52 | 0.35 | 0.35 | 0.35 |
| DVI | 0.81 | 0.94 | 0.19 | 0.79 | 0.71 | 0.37 | 0.74 | 0.68 | 0.30 | 0.43 | 0.54 | 0.13 |
| GNDVI | 0.88 | 0.85 | 0.70 | 0.93 | 0.87 | 0.71 | 0.88 | 0.82 | 0.67 | 0.30 | 0.17 | 0.26 |
| MSAVI | 0.97 | 0.98 | 0.97 | 0.78 | 0.72 | 0.47 | 0.75 | 0.70 | 0.34 | 0.76 | 0.63 | 0.92 |
| GCVI | 0.90 | 0.87 | 0.63 | 0.91 | 0.87 | 0.68 | 0.88 | 0.84 | 0.53 | 0.26 | 0.17 | 0.21 |
| RNDVI | 0.90 | 0.89 | 0.35 | 0.95 | 0.92 | 0.47 | 0.93 | 0.90 | 0.51 | 0.45 | 0.39 | 0.24 |
| NDRE | 0.77 | 0.73 | 0.24 | 0.79 | 0.71 | 0.35 | 0.78 | 0.74 | 0.27 | 0.11 | 0.02 | 0.19 |
| MSRre | 0.84 | 0.85 | 0.68 | 0.75 | 0.67 | 0.32 | 0.76 | 0.72 | 0.23 | 0.27 | 0.09 | 0.46 |
| CLre | 0.50 | 0.54 | 0.05 | 0.75 | 0.68 | 0.33 | 0.75 | 0.70 | 0.26 | 0.01 | 0.01 | 0.01 |

| Band/VI | ALL | | | ASD | | | Stand | | | DN | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 | ALL | NDVI > 0.2 | NDVI ≤ 0.2 |
| GRE | 1.46 | 1.37 | 1.18 | 0.56 | 0.55 | 0.51 | 0.64 | 0.61 | 0.64 | 0.48 | 0.40 | 0.37 |
| RED | 2.16 | 1.31 | 0.95 | 0.57 | 0.53 | 0.47 | 0.62 | 0.57 | 0.55 | 0.71 | 0.38 | 0.30 |
| REG | 0.76 | 0.81 | 0.90 | 0.44 | 0.44 | 0.44 | 0.47 | 0.46 | 0.50 | 0.25 | 0.24 | 0.29 |
| NIR | 0.74 | 0.74 | 0.90 | 0.48 | 0.47 | 0.49 | 0.49 | 0.48 | 0.54 | 0.24 | 0.21 | 0.29 |
| NDVI | 0.23 | 0.15 | 0.59 | 0.17 | 0.13 | 0.52 | 0.16 | 0.14 | 0.38 | 0.60 | 0.28 | 2.72 |
| RVI | 0.36 | 0.33 | 0.11 | 0.35 | 0.32 | 0.10 | 0.35 | 0.33 | 0.09 | 0.50 | 0.34 | 0.20 |
| DVI | 1.96 | 1.21 | 15.38 | 0.55 | 0.49 | 0.81 | 0.54 | 0.51 | 0.66 | 0.65 | 0.35 | 4.89 |
| GNDVI | 0.26 | 0.20 | 1.10 | 0.23 | 0.18 | 0.88 | 0.22 | 0.18 | 0.99 | 0.78 | 0.49 | 6.97 |
| MSAVI | 0.74 | 0.74 | 0.90 | 0.26 | 0.26 | 0.18 | 0.27 | 0.27 | 0.20 | 0.24 | 0.21 | 0.29 |
| GCVI | 0.59 | 0.54 | 1.42 | 0.62 | 0.56 | 1.16 | 0.53 | 0.49 | 1.38 | 0.92 | 0.71 | 39.36 |
| RNDVI | 0.28 | 0.18 | 5.16 | 0.21 | 0.16 | 5.93 | 0.22 | 0.18 | 3.78 | 0.65 | 0.32 | 6.79 |
| NDRE | 0.39 | 0.32 | 0.84 | 0.34 | 0.28 | 0.77 | 0.37 | 0.32 | 0.80 | 1.16 | 1.14 | 2.79 |
| MSRre | 2.02 | 2.32 | 6.17 | 0.16 | 0.17 | 0.14 | 0.18 | 0.18 | 0.17 | 0.60 | 0.58 | 1.24 |
| CLre | 0.75 | 0.56 | 2.16 | 0.43 | 0.36 | 0.82 | 0.44 | 0.40 | 0.84 | 5.25 | 4.70 | 14.08 |
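Each table in Appendix A reports every band and index three times: over all samples, over samples with NDVI > 0.2, and over samples with NDVI ≤ 0.2. A minimal Python sketch of that stratification follows. It assumes the statistic is the coefficient of determination (R²) of a least-squares line between two sensors' values. This is an assumption, because the extracted tables do not state their metric. The function names `r_squared` and `stratified_r2` and the sample values are hypothetical.

```python
from math import fsum


def r_squared(x, y):
    """R^2 of an ordinary least-squares line y ~ a + b*x.

    For a simple linear fit this equals the squared Pearson correlation.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired samples")
    mx, my = fsum(x) / n, fsum(y) / n
    sxx = fsum((xi - mx) ** 2 for xi in x)
    syy = fsum((yi - my) ** 2 for yi in y)
    sxy = fsum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        return float("nan")  # undefined when either variable is constant
    return sxy * sxy / (sxx * syy)


def stratified_r2(x, y, ndvi, threshold=0.2):
    """R^2 over all samples and over the NDVI > / <= threshold strata (hypothetical helper)."""
    groups = {
        "ALL": list(range(len(ndvi))),
        f"NDVI > {threshold}": [i for i, v in enumerate(ndvi) if v > threshold],
        f"NDVI <= {threshold}": [i for i, v in enumerate(ndvi) if v <= threshold],
    }
    result = {}
    for name, idx in groups.items():
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        # Strata with fewer than three samples get NaN instead of an error.
        result[name] = r_squared(xs, ys) if len(idx) >= 3 else float("nan")
    return result


if __name__ == "__main__":
    # Hypothetical reflectances of one band from two sensors, plus NDVI per sample.
    sensor_a = [0.04, 0.06, 0.09, 0.31, 0.36, 0.44]
    sensor_b = [0.05, 0.06, 0.11, 0.29, 0.37, 0.41]
    ndvi = [0.08, 0.12, 0.18, 0.61, 0.70, 0.78]
    for name, value in stratified_r2(sensor_a, sensor_b, ndvi).items():
        print(f"{name}: {value:.3f}")
```

An NDVI of 0.2 is a common rough boundary between bare soil and vegetated surfaces. Reporting the strata separately shows where agreement breaks down. A statistic pooled over all samples can look acceptable while one stratum is much weaker, as with several indices in the NDVI ≤ 0.2 columns above.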

References

1. Guo, W.; Qiao, H.-B.; Zhao, H.-Q.; Zhang, J.-J.; Pei, P.-C.; Liu, Z.-L. Cotton Aphid Damage Monitoring Using UAV Hyperspectral Data Based on Derivative of Ratio Spectroscopy. Spectrosc. Spectr. Anal. 2021, 41, 1543–1550.

2. Berger, K.; Verrelst, J.; Féret, J.-B.; Wang, Z.; Wocher, M.; Strathmann, M.; Danner, M.; Mauser, W.; Hank, T. Crop Nitrogen Monitoring: Recent Progress and Principal Developments in the Context of Imaging Spectroscopy Missions. Remote Sens. Environ. 2020, 242, 111758.

3. Brook, A.; De Micco, V.; Battipaglia, G.; Erbaggio, A.; Ludeno, G.; Catapano, I.; Bonfante, A. A Smart Multiple Spatial and Temporal Resolution System to Support Precision Agriculture from Satellite Images: Proof of Concept on Aglianico Vineyard. Remote Sens. Environ. 2020, 240, 111679.

4. Qiao, X.; Li, Y.-Z.; Su, G.-Y.; Tian, H.-K.; Zhang, S.; Sun, Z.-Y.; Yang, L.; Wan, F.-H.; Qian, W.-Q. MmNet: Identifying Mikania micrantha Kunth in the Wild via a Deep Convolutional Neural Network. J. Integr. Agric. 2020, 19, 1292–1300.

5. Yang, W.; Xu, W.; Wu, C.; Zhu, B.; Chen, P.; Zhang, L.; Lan, Y. Cotton Hail Disaster Classification Based on Drone Multispectral Images at the Flowering and Boll Stage. Comput. Electron. Agric. 2021, 180, 105866.

6. Liu, S.; Bai, X.; Zhu, G.; Zhang, Y.; Li, L.; Ren, T.; Lu, J. Remote Estimation of Leaf Nitrogen Concentration in Winter Oilseed Rape across Growth Stages and Seasons by Correcting for the Canopy Structural Effect. Remote Sens. Environ. 2023, 284, 113348.

7. Chen, T.; Yang, W.; Zhang, H.; Zhu, B.; Zeng, R.; Wang, X.; Wang, S.; Wang, L.; Qi, H.; Lan, Y.; et al. Early Detection of Bacterial Wilt in Peanut Plants through Leaf-Level Hyperspectral and Unmanned Aerial Vehicle Data. Comput. Electron. Agric. 2020, 177, 105708.

8. Nebiker, S.; Lack, N.; Abächerli, M.; Läderach, S. Light-Weight Multispectral UAV Sensors and Their Capabilities for Predicting Grain Yield and Detecting Plant Diseases. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 963–970.

9. Nansen, C.; Murdock, M.; Purington, R.; Marshall, S. Early Infestations by Arthropod Pests Induce Unique Changes in Plant Compositional Traits and Leaf Reflectance. Pest Manag. Sci. 2021, 77, 5158–5169.

10. Wang, C.; Chen, Q.; Fan, H.; Yao, C.; Sun, X.; Chan, J.; Deng, J. Evaluating Satellite Hyperspectral (Orbita) and Multispectral (Landsat 8 and Sentinel-2) Imagery for Identifying Cotton Acreage. Int. J. Remote Sens. 2021, 42, 4042–4063.

11. Yu, F.; Bai, J.-C.; Jin, Z.-Y.; Guo, Z.-H.; Yang, J.-X.; Chen, C.-L. Combining the Critical Nitrogen Concentration and Machine Learning Algorithms to Estimate Nitrogen Deficiency in Rice from UAV Hyperspectral Data. J. Integr. Agric. 2023, 22, 1216–1229.

12. Palmer, S.C.J.; Kutser, T.; Hunter, P.D. Remote Sensing of Inland Waters: Challenges, Progress and Future Directions. Remote Sens. Environ. 2015, 157, 1–8.

13. Barrero, O.; Perdomo, S.A. RGB and Multispectral UAV Image Fusion for Gramineae Weed Detection in Rice Fields. Precis. Agric. 2018, 19, 809–822.

14. Dunbar, M.B.; Caballero, I.; Román, A.; Navarro, G. Remote Sensing: Satellite and RPAS (Remotely Piloted Aircraft System). In Marine Analytical Chemistry; Blasco, J., Tovar-Sánchez, A., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 389–417.

15. Xu, W.; Chen, P.; Zhan, Y.; Chen, S.; Zhang, L.; Lan, Y. Cotton Yield Estimation Model Based on Machine Learning Using Time Series UAV Remote Sensing Data. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102511.

16. Gill, T.; Gill, S.K.; Saini, D.K.; Chopra, Y.; de Koff, J.P.; Sandhu, K.S. A Comprehensive Review of High Throughput Phenotyping and Machine Learning for Plant Stress Phenotyping. Phenomics 2022, 2, 156–183.

17. Chen, P.; Xu, W.; Zhan, Y.; Wang, G.; Yang, W.; Lan, Y. Determining Application Volume of Unmanned Aerial Spraying Systems for Cotton Defoliation Using Remote Sensing Images. Comput. Electron. Agric. 2022, 196, 106912.

18. Corti, M.; Cavalli, D.; Cabassi, G.; Bechini, L.; Pricca, N.; Paolo, D.; Marinoni, L.; Vigoni, A.; Degano, L.; Gallina, P.M. Improved Estimation of Herbaceous Crop Aboveground Biomass Using UAV-Derived Crop Height Combined with Vegetation Indices. Precis. Agric. 2023, 24, 587–606.

19. Lu, B.; He, Y. Optimal Spatial Resolution of Unmanned Aerial Vehicle (UAV)-Acquired Imagery for Species Classification in a Heterogeneous Grassland Ecosystem. GISci. Remote Sens. 2018, 55, 205–220.

20. Zhao, J.; Zhong, Y.; Hu, X.; Wei, L.; Zhang, L. A Robust Spectral-Spatial Approach to Identifying Heterogeneous Crops Using Remote Sensing Imagery with High Spectral and Spatial Resolutions. Remote Sens. Environ. 2020, 239, 111605.

21. Lu, H.; Fan, T.; Ghimire, P.; Deng, L. Experimental Evaluation and Consistency Comparison of UAV Multispectral Minisensors. Remote Sens. 2020, 12, 2542.

22. Blickensdörfer, L.; Schwieder, M.; Pflugmacher, D.; Nendel, C.; Erasmi, S.; Hostert, P. Mapping of Crop Types and Crop Sequences with Combined Time Series of Sentinel-1, Sentinel-2 and Landsat 8 Data for Germany. Remote Sens. Environ. 2022, 269, 112831.

23. Ranjan, A.K.; Patra, A.K.; Gorai, A.K. A Review on Estimation of Particulate Matter from Satellite-Based Aerosol Optical Depth: Data, Methods, and Challenges. Asia-Pac. J. Atmos. Sci. 2021, 57, 679–699.

24. Pahlevan, N.; Smith, B.; Alikas, K.; Anstee, J.; Barbosa, C.; Binding, C.; Bresciani, M.; Cremella, B.; Giardino, C.; Gurlin, D.; et al. Simultaneous Retrieval of Selected Optical Water Quality Indicators from Landsat-8, Sentinel-2, and Sentinel-3. Remote Sens. Environ. 2022, 270, 112860.

25. Shi, C.; Hashimoto, M.; Nakajima, T. Remote Sensing of Aerosol Properties from Multi-Wavelength and Multi-Pixel Information over the Ocean. Atmos. Chem. Phys. 2019, 19, 2461–2475.

26. Jiang, J.; Johansen, K.; Tu, Y.; McCabe, M.F. Multi-Sensor and Multi-Platform Consistency and Interoperability between UAV, Planet CubeSat, Sentinel-2, and Landsat Reflectance Data. GISci. Remote Sens. 2022, 59, 936–958.

27. von Bueren, S.K.; Burkart, A.; Hueni, A.; Rascher, U.; Tuohy, M.P.; Yule, I.J. Deploying Four Optical UAV-Based Sensors over Grassland: Challenges and Limitations. Biogeosciences 2015, 12, 163–175.

28. Zhao, D.; Huang, L.; Li, J.; Qi, J. A Comparative Analysis of Broadband and Narrowband Derived Vegetation Indices in Predicting LAI and CCD of a Cotton Canopy. ISPRS J. Photogramm. Remote Sens. 2007, 62, 25–33.

29. Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-Based Multispectral Remote Sensing for Precision Agriculture: A Comparison between Different Cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

30. Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation Monitoring Using Multispectral Sensors – Best Practices and Lessons Learned from High Latitudes; Cold Spring Harbor Laboratory Press: Cold Spring Harbor, NY, USA, 2018.

31. Zhang, J.; Huang, W.; Li, J.; Yang, G.; Luo, J.; Gu, X.; Wang, J. Development, Evaluation and Application of a Spectral Knowledge Base to Detect Yellow Rust in Winter Wheat. Precis. Agric. 2011, 12, 716–731.

32. Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

33. Pearson, R.L.; Miller, L.D. Remote Mapping of Standing Crop Biomass for Estimation of the Productivity of the Shortgrass Prairie. In Proceedings of the Remote Sensing of Environment VIII, Ann Arbor, MI, USA, 2–6 October 1972; p. 1355.

34. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

35. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

36. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A Modified Soil Adjusted Vegetation Index. Remote Sens. Environ. 1994, 48, 119–126.

37. Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote Estimation of Leaf Area Index and Green Leaf Biomass in Maize Canopies. Geophys. Res. Lett. 2003, 30, 5.

38. Fitzgerald, G.; Rodriguez, D.; O’Leary, G. Measuring and Predicting Canopy Nitrogen Nutrition in Wheat Using a Spectral Index – The Canopy Chlorophyll Content Index (CCCI). Field Crops Res. 2010, 116, 318–324.

39. Wu, C.; Niu, Z.; Tang, Q.; Huang, W. Estimating Chlorophyll Content from Hyperspectral Vegetation Indices: Modeling and Validation. Agric. For. Meteorol. 2008, 148, 1230–1241.

40. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote Estimation of Canopy Chlorophyll Content in Crops. Geophys. Res. Lett. 2005, 32, 28.

41. Sobrino, J.A.; Raissouni, N.; Li, Z. A Comparative Study of Land Surface Emissivity Retrieval from NOAA Data. Remote Sens. Environ. 2001, 75, 256–266.

42. Sobrino, J.A.; Jiménez-Muñoz, J.C.; Paolini, L. Land Surface Temperature Retrieval from LANDSAT TM 5. Remote Sens. Environ. 2004, 90, 434–440.

43. Gray, P.C.; Windle, A.E.; Dale, J.; Savelyev, I.B.; Johnson, Z.I.; Silsbe, G.M.; Larsen, G.D.; Johnston, D.W. Robust Ocean Color from Drones: Viewing Geometry, Sky Reflection Removal, Uncertainty Analysis, and a Survey of the Gulf Stream Front. Limnol. Oceanogr. Methods 2022, 20, 656–673.

44. Windle, A.E.; Silsbe, G.M. Evaluation of Unoccupied Aircraft System (UAS) Remote Sensing Reflectance Retrievals for Water Quality Monitoring in Coastal Waters. Front. Environ. Sci. 2021, 9, 674247.

| Location | Sensor | Date | Start Time of the Flight | Area Covered (ha) | Flight Altitude (m) | Ground Sample Distance (m/pixel) |
|---|---|---|---|---|---|---|
| Shihezi | Sequoia | 2 August 2022 | 12:00 p.m. | 70 | 100 | 0.107 |
| Shihezi | P4M | 2 August 2022 | 12:00 p.m. | 70 | 100 | 0.056 |
| Jiujiang | Sequoia | 16 September 2022 | 11:00 a.m. | 80 | 100 | 0.103 |
| Jiujiang | P4M | 16 September 2022 | 11:00 a.m. | 80 | 100 | 0.054 |
| Jiujiang | Sequoia | 18 October 2022 | 11:00 a.m. | 80 | 100 | 0.103 |
| Jiujiang | P4M | 18 October 2022 | 11:00 a.m. | 80 | 100 | 0.054 |
| Guangzhou | Sequoia | 26 October 2022 | 11:30 a.m. | 2 | 30 | 0.034 |
| Guangzhou | P4M | 26 October 2022 | 11:30 a.m. | 2 | 30 | 0.017 |
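For a fixed camera, nadir ground sample distance grows linearly with flight altitude (GSD = H·p/f, where H is the altitude, p the pixel pitch and f the focal length). This gives a quick consistency check on the flight table. The sketch below rescales the P4M value obtained at 100 m in Shihezi to the 30 m altitude used in Guangzhou. The function names are illustrative, and the sketch assumes flat terrain and an unchanged camera.

```python
def gsd_from_camera(altitude_m, pixel_pitch_um, focal_length_mm):
    """Nadir ground sample distance in metres per pixel: H * p / f."""
    return altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def rescale_gsd(gsd_ref_m, altitude_ref_m, altitude_new_m):
    """GSD at a new altitude for the same camera, assuming flat terrain."""
    return gsd_ref_m * altitude_new_m / altitude_ref_m


# P4M at 100 m gave 0.056 m/pixel in Shihezi; predict the value at 30 m.
predicted = rescale_gsd(0.056, 100, 30)
print(f"{predicted:.4f}")  # 0.0168
```

The prediction of about 0.0168 m/pixel agrees with the 0.017 m/pixel listed for the Guangzhou P4M flight. The same scaling applied to Sequoia (0.107 m/pixel at 100 m) gives about 0.032 m/pixel against the listed 0.034, so the check is approximate rather than exact.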

| Vegetation Index | Reference |
|---|---|
| Normalized Difference Vegetation Index | [32] |
| Ratio Vegetation Index | [33] |
| Difference Vegetation Index | [34] |
| Green Normalized Difference Vegetation Index | [35] |
| Modified Soil Adjusted Vegetation Index | [36] |
| Green Chlorophyll Vegetation Index | [37] |
| Red Edge Normalized Difference Vegetation Index | [37] |
| Normalized Difference Red Edge | [38] |
| Modified Red Edge Soil Adjusted Vegetation Index | [39] |
| Red Edge Chlorophyll Vegetation Index | [40] |
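The vegetation-index table retains only index names and references; the equations are not reproduced in this text. The sketch below computes several of the listed indices from the GRE, RED, REG and NIR bands used in Appendix A. It uses common textbook definitions, which may differ in detail from the authors' equations. For MSAVI it uses the widely used closed-form expression. Two red-edge variants are left out rather than guessed, because their band combinations differ between sources: the Red Edge Normalized Difference Vegetation Index and the Modified Red Edge Soil Adjusted Vegetation Index. The function name `vegetation_indices` is hypothetical.

```python
from math import sqrt


def vegetation_indices(gre, red, reg, nir):
    """Textbook forms of several indices named in the vegetation-index table.

    Inputs are surface reflectances (0-1) of the green (GRE), red (RED),
    red-edge (REG) and near-infrared (NIR) bands; zero-valued bands will
    raise ZeroDivisionError for the ratio indices.
    """
    return {
        "NDVI": (nir - red) / (nir + red),
        "RVI": nir / red,
        "DVI": nir - red,
        "GNDVI": (nir - gre) / (nir + gre),
        # Closed-form MSAVI; the square-root argument equals (2*NIR - 1)^2 + 8*RED >= 0.
        "MSAVI": (2 * nir + 1 - sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2,
        "GCVI": nir / gre - 1,
        "NDRE": (nir - reg) / (nir + reg),
        "CLre": nir / reg - 1,
    }


if __name__ == "__main__":
    # Hypothetical reflectances for a healthy canopy pixel.
    for name, value in vegetation_indices(gre=0.08, red=0.05, reg=0.25, nir=0.45).items():
        print(f"{name}: {value:.3f}")
```

Ratio-type indices such as RVI, GCVI and CLre are unbounded and react strongly to small denominators. This is worth keeping in mind when comparing their error magnitudes with those of the normalized indices.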

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, W.; Fu, H.; Xu, W.; Wu, J.; Liu, S.; Li, X.; Tan, J.; Lan, Y.; Zhang, L. Optimizing Data Consistency in UAV Multispectral Imaging for Radiometric Correction and Sensor Conversion Models. Remote Sens. 2025, 17, 2001. https://doi.org/10.3390/rs17122001


Chicago/Turabian Style: Yang, Weiguang, Huaiyuan Fu, Weicheng Xu, Jinhao Wu, Shiyuan Liu, Xi Li, Jiangtao Tan, Yubin Lan, and Lei Zhang. 2025. "Optimizing Data Consistency in UAV Multispectral Imaging for Radiometric Correction and Sensor Conversion Models." Remote Sensing 17, no. 12: 2001. https://doi.org/10.3390/rs17122001

APA Style: Yang, W., Fu, H., Xu, W., Wu, J., Liu, S., Li, X., Tan, J., Lan, Y., & Zhang, L. (2025). Optimizing Data Consistency in UAV Multispectral Imaging for Radiometric Correction and Sensor Conversion Models. Remote Sensing, 17(12), 2001. https://doi.org/10.3390/rs17122001