1. Introduction

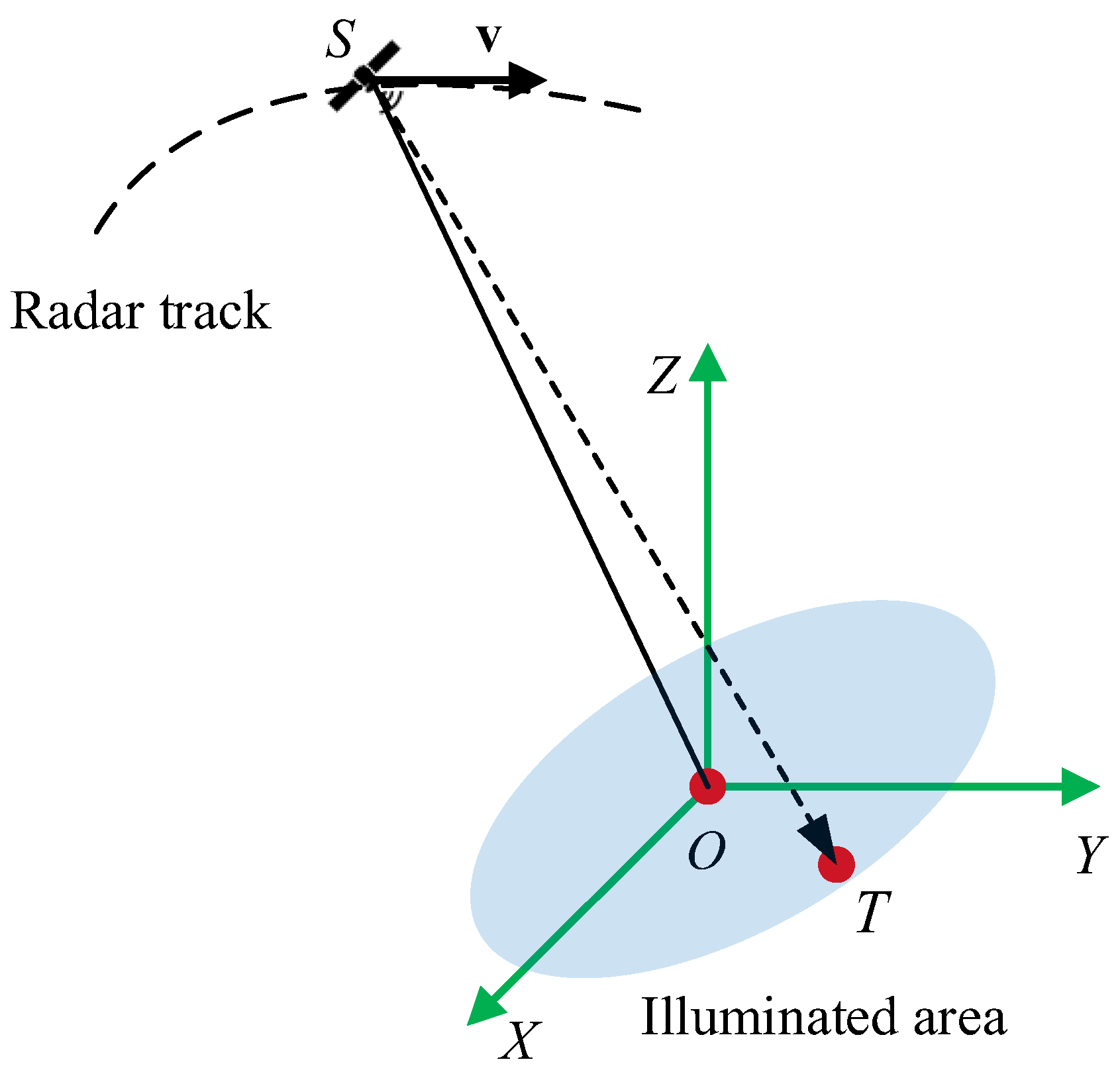

Synthetic aperture radar (SAR) is an active microwave imaging technology capable of all-weather, all-day Earth observation, demonstrating broad application prospects in disaster monitoring, resource exploration, and building condition assessment [1,2,3]. With continuous advancements in SAR technology, SAR imaging is progressively evolving toward longer synthetic times and larger swaths to meet the demands of high-efficiency and high-precision mapping. Geosynchronous SAR (GEO SAR) [4,5] is a typical long-synthetic-time large-swath SAR, and current research has yielded significant work in areas such as system analysis and design [6,7,8,9], two-dimensional (2-D) imaging processing [10,11,12,13,14,15,16], three-dimensional imaging processing, and deformation extraction [17,18,19], etc. Some preliminary equivalent experiments have been performed to validate the principle and feasibility of these SAR systems [20,21]. Furthermore, leveraging its extremely high orbital altitude, moon-based SAR (MB SAR) [22,23] possesses inherent advantages in wide-swath imaging, giving rise to moon-based SAR Earth observation technology. Current research efforts focus on system design and signal processing of MB SAR [24,25,26], elevating SAR mapping coverage to new heights and further advancing long-synthetic-time large-swath SAR technology development.

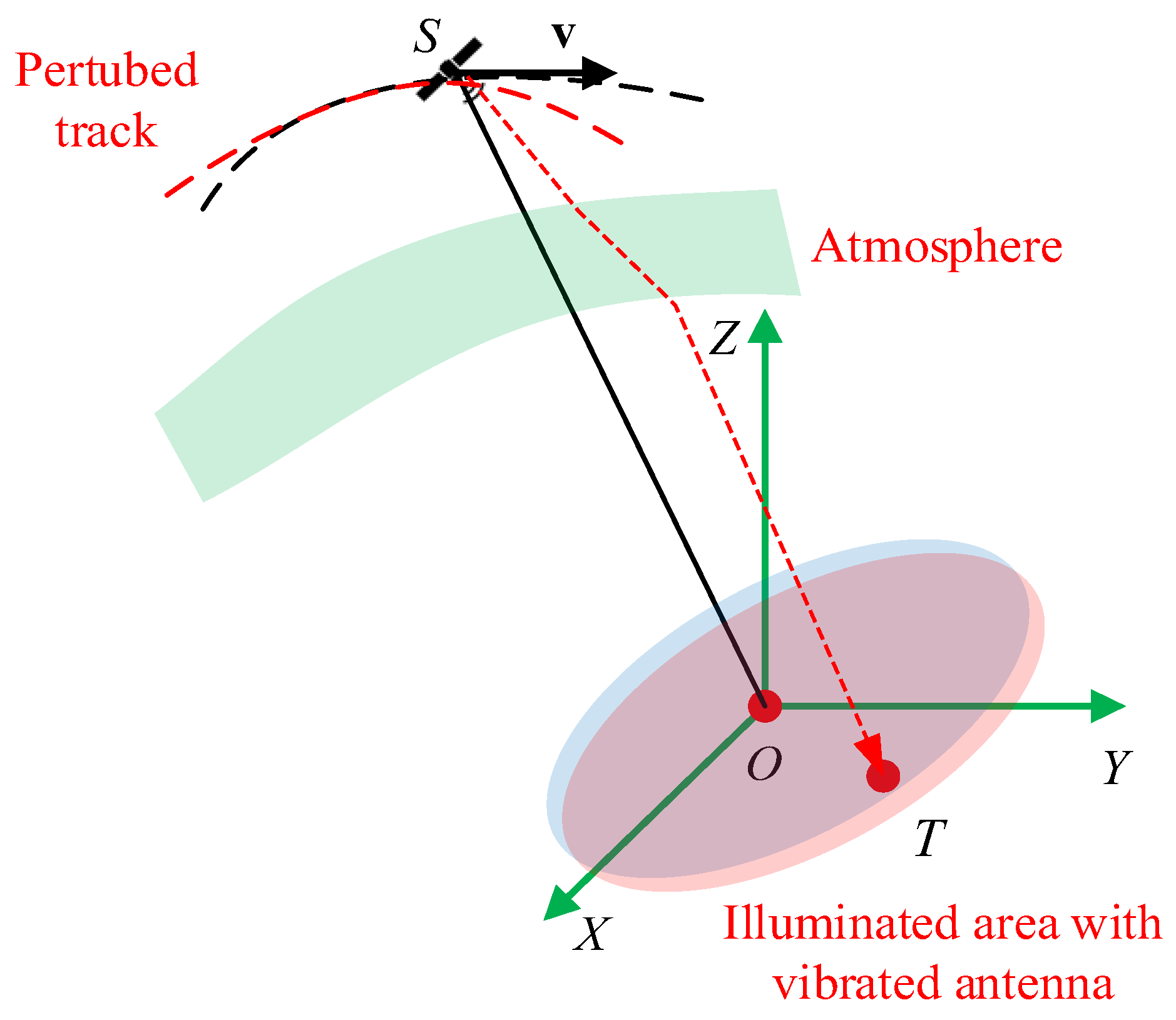

However, owing to the extremely long synthetic aperture times and large swaths, many non-ideal factors, including atmospheric impact, orbital perturbation, and antenna vibration, seriously degrade SAR images and must therefore be taken into account in SAR imaging processing. At the same time, due to the curved trajectory and the large imaging width, the impact of these non-ideal factors also varies strongly in both range and azimuth (2-D spatial variation). Analysis of and compensation for these non-ideal factors are therefore hotspots of current SAR research [27].

At present, many studies have been carried out on non-ideal factors. The sources and effects of these non-ideal factors can be summarized as follows:

Atmospheric impact [28,29,30,31,32,33,34]: The electromagnetic waves of SAR will travel through the atmosphere twice, and the atmosphere will affect the SAR signal. The atmospheric impact mainly includes three aspects: background ionosphere, ionospheric scintillation, and the tropospheric impact. The background ionosphere mainly brings polynomial phase errors, and it will degrade focusing performance by spreading the mainlobe and introducing asymmetric sidelobes. Ionospheric scintillation will bring random phase and amplitude errors, which will mainly affect focusing performance by degrading the integrated sidelobe ratio (ISLR).

Orbital perturbation [35,36,37,38]: Theoretically, the orbit information of GEO SAR or MB SAR can be obtained from the ephemeris. However, non-ideal factors such as J2 perturbation, third-body perturbation, and radiation pressure perturbation will make the real orbit deviate from the theoretical orbit, which leads to undesired orbital perturbations. Generally, orbital perturbation can be partially compensated for based on high-precision orbit determination technology. However, the precision of orbit determination is usually worse than several centimeters, which is comparable to the wavelength, so the residual error after compensation still cannot be neglected. Thus, it is necessary to deal with the residual orbital perturbation error that remains after compensation based on orbit determination technology, and this residual error is what the term “orbital perturbation error” refers to in this paper.

Antenna vibration [39,40]: Due to the large physical size of GEO SAR and MB SAR antennas, which usually span dozens of meters, SAR with a long synthetic time and large swath is faced with severe antenna vibration, which consists of translational vibration and rotational vibration. Antenna translational vibration will result in a periodic phase error, and antenna rotational vibration will cause a periodic amplitude error. Further information related to the impact of antenna vibration on SAR can be found in [40].

To deal with these non-ideal factors of SAR with a long synthetic time and large swath, several methods have been proposed, and these methods can be divided into two types. One is the SAR autofocusing method based on persistent scatterers (PSs) [41,42,43]. These methods regard the PSs as point targets and extract the errors from these point targets directly. The other type is the SAR autofocusing method based on optimal image quality [44,45,46,47]. Image quality evaluation indices, such as entropy, contrast, sharpness, or correlation with the real image, are used in this type of method. This method establishes the relationship between image quality and non-ideal factors, and the non-ideal factors can be estimated adaptively and iteratively.

However, the methods mentioned above have non-negligible shortcomings. The methods based on PSs in [41,42,43] consider only one type of non-ideal factor, and their effectiveness remains to be validated for actual SAR working conditions with multiple non-ideal factors. As for the methods based on optimal image quality in [44,45,46,47], iteration is usually required for optimization, so the computational burden becomes a non-negligible problem. In addition, the selection of a proper image quality index is also a problem for error estimation.

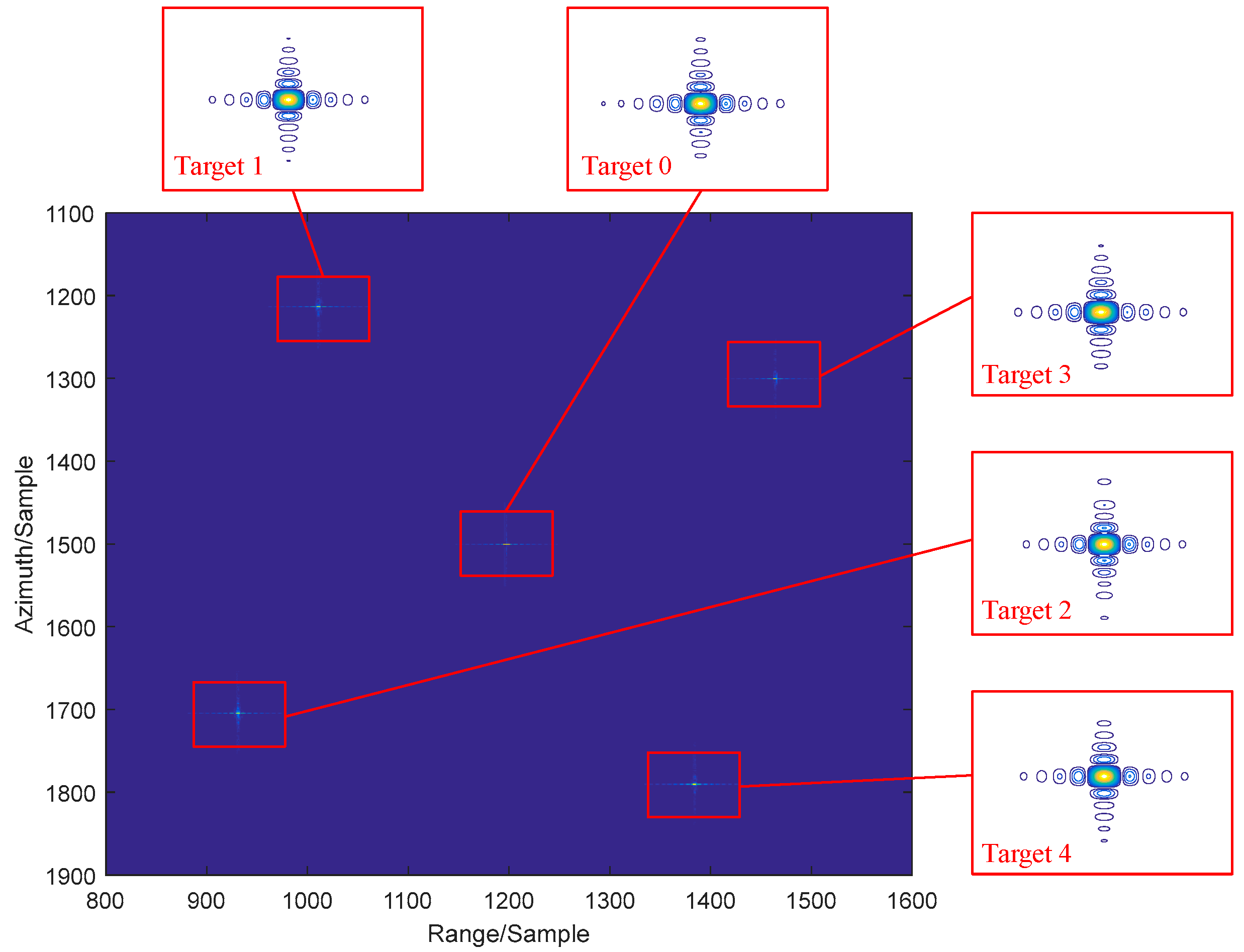

To resolve the aforementioned problems and obtain well-focused SAR images, an autofocusing method for SAR with a long synthetic time and large swath is proposed. The main contributions are twofold.

The method provides a PGA-based solution to SAR imaging with multi-source non-ideal factors. Specifically, an improved phase gradient autofocus equipped with discrete windowing and amplitude error estimation is proposed to deal with multiple types of error.

An error fusion and interpolation method is proposed to deal with the problem of error estimation with a long synthetic time and large swath. This method fuses errors among sub-apertures in the long synthetic time and can fulfill autofocus for blocks where strong scatterers are not sufficient in the large swath. The error in these blocks is interpolated with the errors in adjacent blocks which have sufficient PSs.

The steps of the proposed method are as follows. The method first applies 2-D blocking to the scene to deal with the range and azimuth spatial variability of the non-ideal factor impacts. Subsequently, synthetic aperture division is applied to reduce the temporal variability of these impacts. Afterwards, the spectral analysis (SPECAN) algorithm is used for imaging, which facilitates error compensation in the azimuth time domain. The errors are estimated with an improved PGA algorithm, which uses the phase gradient of strong points to estimate the phase error and uses their envelope to estimate the amplitude error. In addition, the discrete windowing technique is applied to extract strong points in the presence of paired echoes. The proposed method can be applied to SAR with a long synthetic time and large swath. Considering that MB SAR, which involves long-synthetic-aperture-time and large-swath imaging, is still far from practical application, this paper conducts modeling, derivation, and simulation with reference to the observation geometry of GEO SAR.

The rest of this paper is organized as follows. In Section 2, the SAR echo signal model considering non-ideal factors is discussed. In Section 3, the autofocusing method is derived and proposed in detail. Section 4 validates the proposed method via computer simulations based on the target array and the distributed target. Finally, the conclusions are delivered in Section 5.

3. An Improved Phase Gradient Autofocus Method for SAR with a Long Synthetic Time and Large Swath

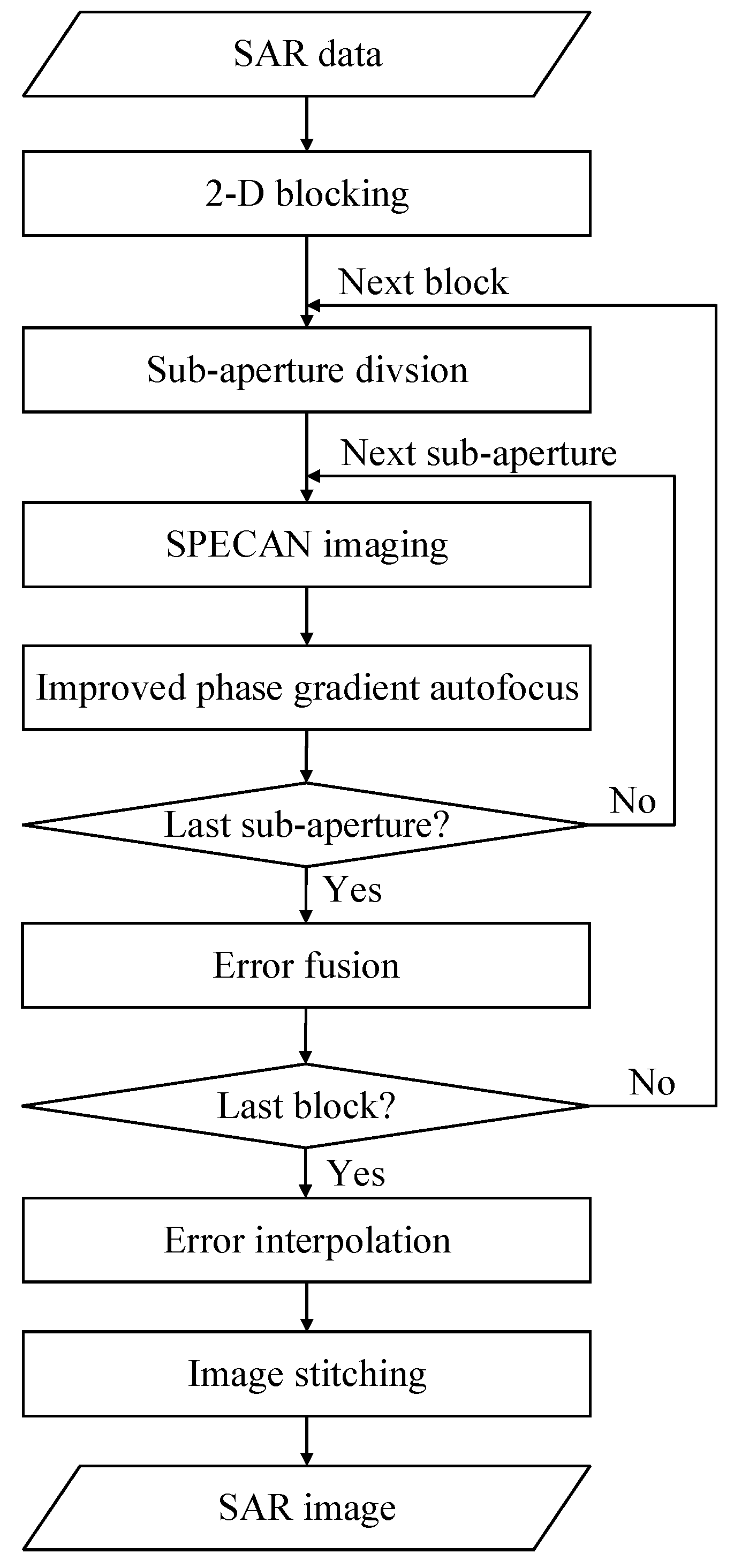

To deal with the undesired impacts on SAR imaging with a long synthetic time and large swath caused by the aforementioned multiple non-ideal factors, an autofocus method based on SPECAN imaging and an improved PGA algorithm is proposed in this section. The method involves 2-D blocking, synthetic aperture division, and discrete windowing, and the overall flowchart of the method is shown in Figure 3. The detailed processing flow of the proposed method is introduced as follows.

3.1. 2-D Blocking and Synthetic Aperture Division

As discussed before, the impacts caused by non-ideal factors show severe 2-D spatial variability, which significantly increases the difficulty of autofocus processing. To address this problem, the characteristics of the 2-D spatial variance are analyzed, and 2-D blocking is adopted. 2-D blocking is a valid approach to deal with the problem of spatial variance: after a scene with an extremely large width is divided into several small blocks, the spatial variance within each block becomes small enough to be neglected. The block size is restricted by the overall high-order phase error, and a discussion of the block size can be found in [44]. In order to facilitate the subsequent image stitching process, the blocks are set with overlap. The size of the overlapped area can be set to several tens of samples.
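As an illustration of the blocking step, the following minimal sketch generates overlapping index ranges along range and azimuth. The function names `split_with_overlap` and `block_grid`, the square block shape, and the example sizes are assumptions made for illustration only; they are not specified in the text, and the block size would in practice follow the high-order phase error criterion referred to above.

```python
def split_with_overlap(n_samples, block_len, overlap):
    """Return (start, stop) index pairs that cover range(n_samples).

    Adjacent blocks share `overlap` samples; the last block may be shorter.
    """
    if not 0 <= overlap < block_len:
        raise ValueError("overlap must be non-negative and smaller than block_len")
    step = block_len - overlap
    bounds = []
    start = 0
    while True:
        stop = min(start + block_len, n_samples)
        bounds.append((start, stop))
        if stop == n_samples:
            break
        start += step
    return bounds


def block_grid(n_range, n_azimuth, block_len, overlap):
    """Hypothetical 2-D blocking: every (range bounds, azimuth bounds) pair."""
    return [(r, a)
            for r in split_with_overlap(n_range, block_len, overlap)
            for a in split_with_overlap(n_azimuth, block_len, overlap)]


# Example: a 100 x 100 scene cut into 40-sample blocks with 10-sample overlap.
blocks = block_grid(100, 100, block_len=40, overlap=10)
```

The same one-dimensional splitter could also be reused along azimuth time to define overlapping sub-apertures.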

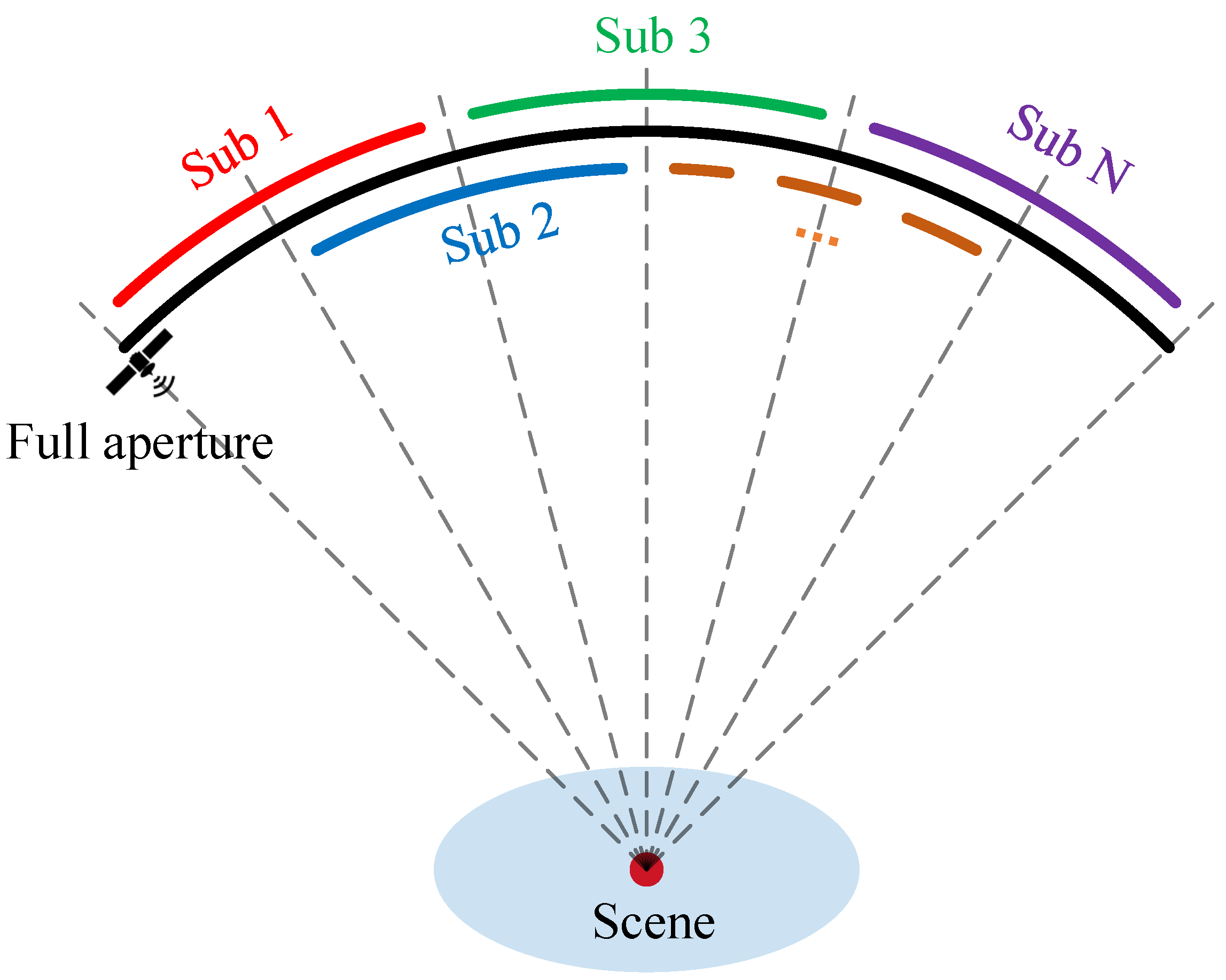

A GEO SAR satellite operates in an orbit about 36,000 km high, and MB SAR operates at an average distance of 380,000 km. These distances result in an unsatisfactory noise equivalent sigma zero (NESZ). With a full aperture time as long as hundreds of seconds, the low SNR and large errors cause strong points to be totally defocused, which leads to a failure of phase error estimation in autofocus methods that rely on strong points, such as the PGA algorithm.

Therefore, the problem of effective raw focusing should be addressed first, and dividing the full synthetic aperture into several short sub-apertures is an applicable approach. As shown in Figure 4, in each sub-aperture, the phase error is limited within an appropriate range so that the defocusing of the strong points is not too severe, raw imaging can be accomplished properly via the SPECAN algorithm, and the phase errors can be estimated separately. Adjacent sub-apertures overlap, so that error fusion can be accomplished after error estimation.

The length of each sub-aperture can be preliminarily set according to typical parameters or otherwise observed non-ideal factor parameters, and imaging can be realized with the preliminary sub-aperture time. If imaging processing fails, a shorter length can be chosen until enough almost-well-focused strong points are acquired.

3.2. SPECAN Imaging

For each sub-aperture and in each block, imaging is fulfilled with the SPECAN algorithm. The typical SPECAN algorithm consists of range compression and range cell migration correction (RCMC) in the range frequency domain, deramping in the azimuth time domain, and finally an azimuth Fourier transformation. As for SPECAN imaging for SAR with a long synthetic time and large swath, considering the non-ideal factors, range processing and RCMC can be easily performed within each sub-aperture and each block, and the result can be written as

where

and

denote the overall non-ideal amplitude and phase error, which is discussed in (15) and (16).

For the range compressed signal

, azimuth compression can be realized via a high-order phase multiplication and azimuth Fourier transformation

where

Combining (17) and (18), with some high-order terms omitted, the azimuth compressed result is

where * denotes convolution.

With the stationary phase principle, method of series inversion, and Jacobi–Anger expansion, the non-ideal phase in the azimuth frequency domain can be written as

where

represents the Bessel function of the first kind. This result shows that periodic errors in azimuth imaging manifest as paired echoes, which replicate the image along the azimuth direction with specific weights. The replication interval relates to the vibration frequency, while the weighting factors depend on vibration amplitude and initial phase. In (20), the polynomial phase error can be written as

and the random phase

is omitted, for it can hardly be described with an analytical expression, and it can be analyzed via statistical methods. Here the coefficients can be calculated with the method of series inversion:

As for the amplitude error, considering the fact that it will be estimated in the azimuth time domain by extracting the envelope of strong targets, its analytical form is not required. As a result, the final signal model after SPECAN imaging can be achieved as

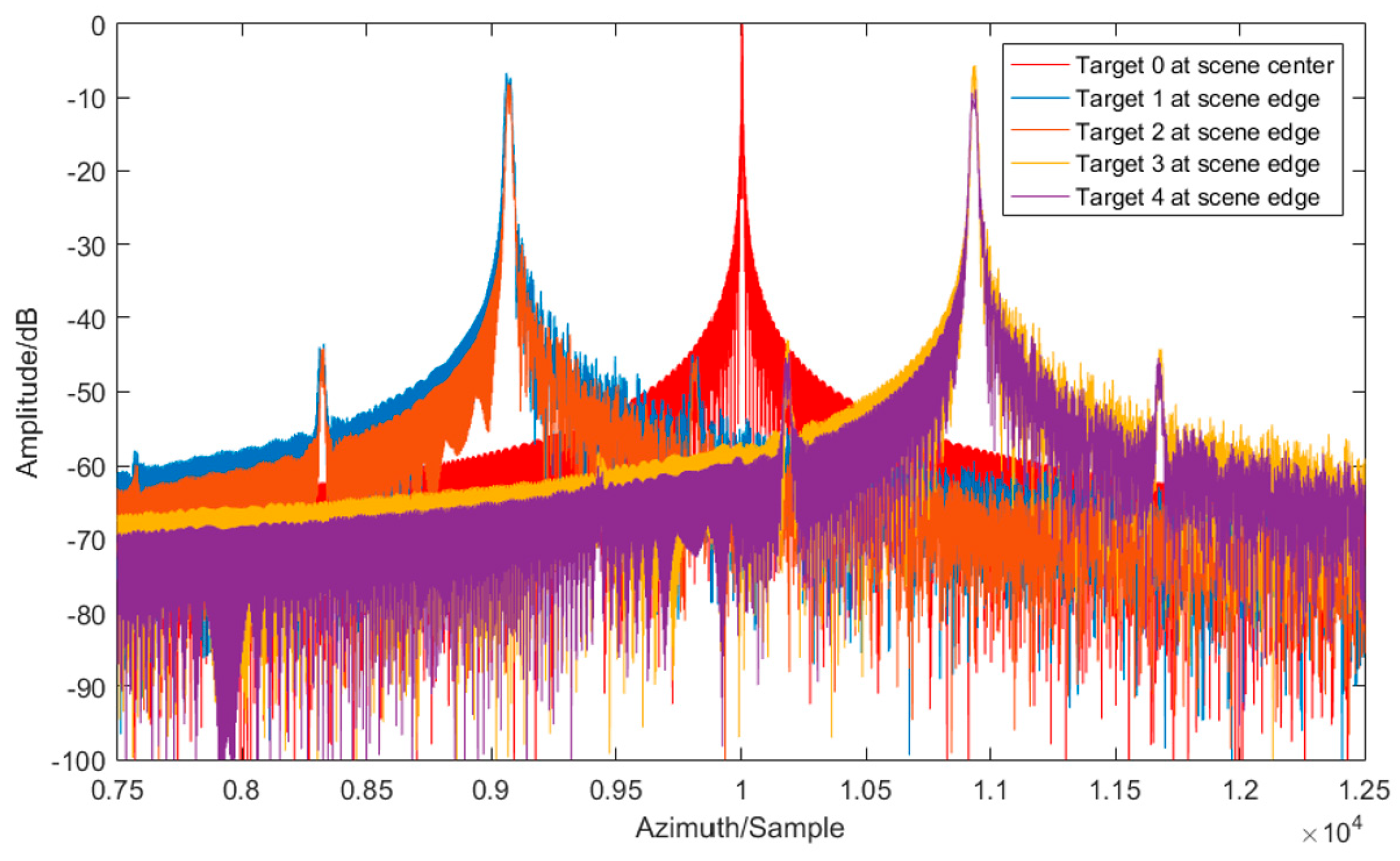

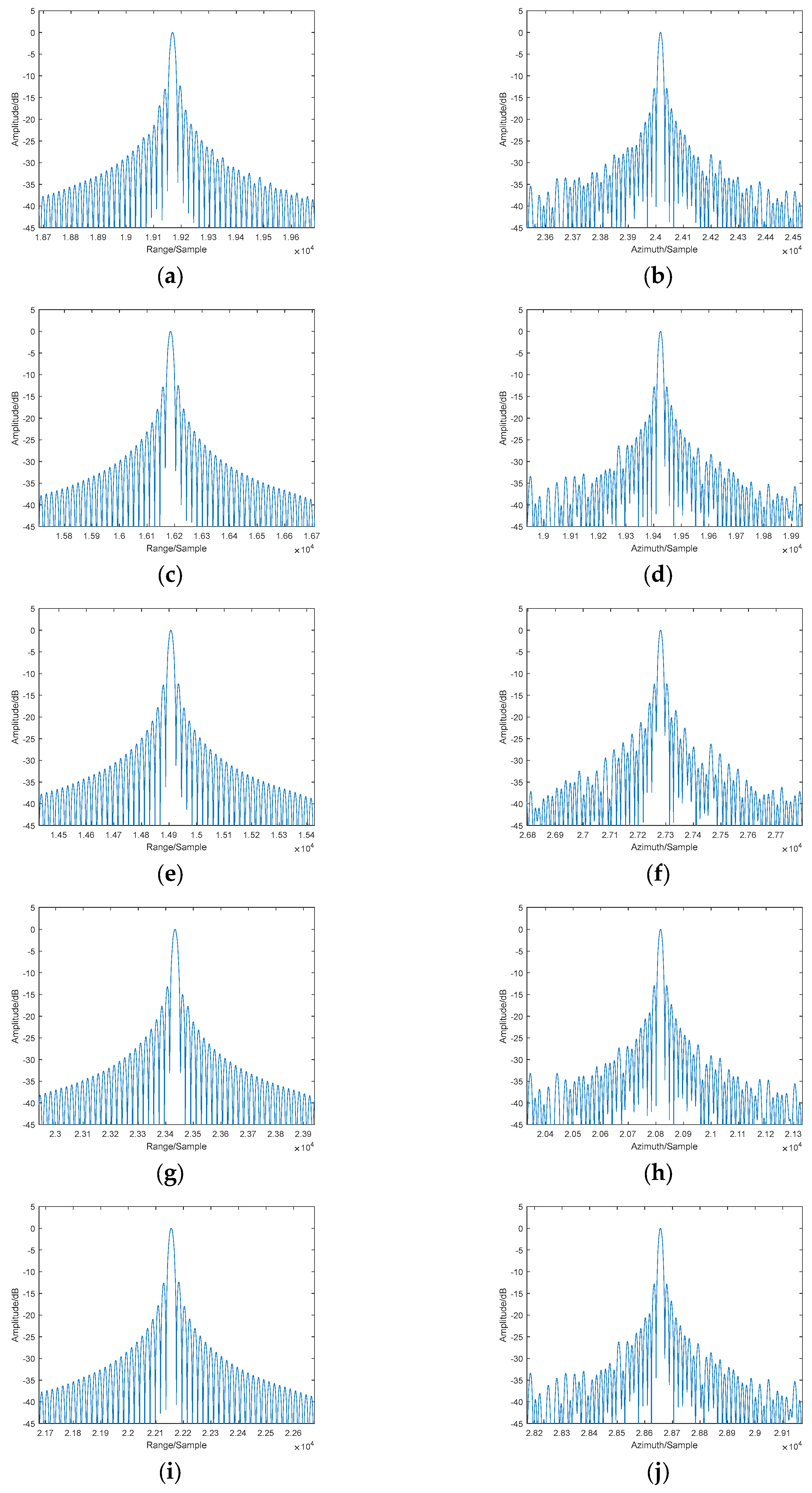

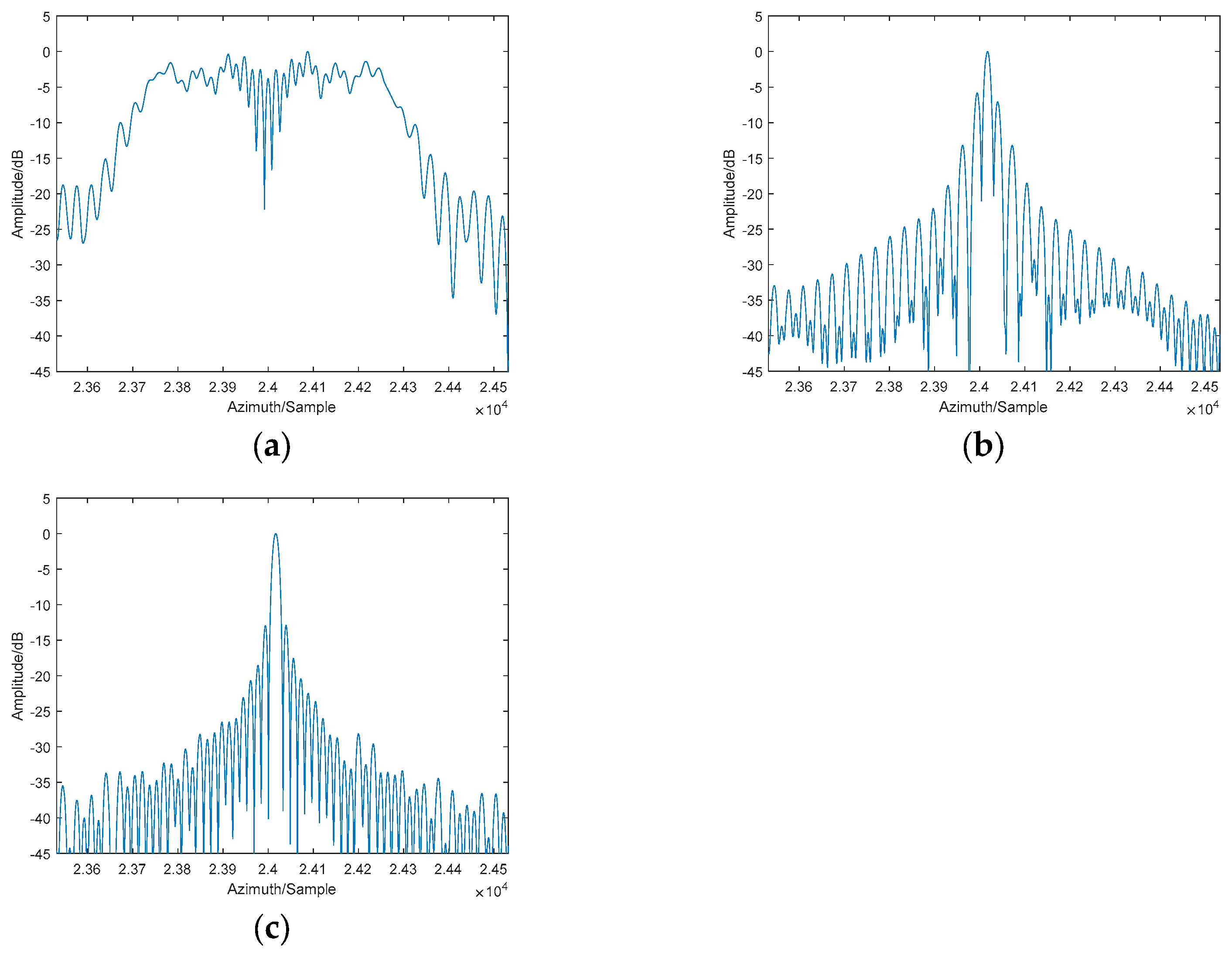

According to the SPECAN imaging result, the final image of a point target can be seen as an ideal 2-D sinc function convolved with the spectra of the errors, which will degrade the quality of the image through widening the mainlobe and causing paired echoes.
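The paired-echo behavior can be checked numerically. The sketch below is only an illustration under simplifying assumptions: a unit-amplitude signal carrying a purely sinusoidal phase error `beta * sin(.)`, with an integer number of vibration cycles in the window so that each echo falls on one DFT bin. By the Jacobi-Anger expansion, exp(j·beta·sin θ) = Σₙ Jₙ(beta)·exp(j·n·θ), so the spectrum should contain peaks only at integer multiples of the vibration frequency, weighted by Bessel functions of the first kind. The function name and parameter values are hypothetical.

```python
import numpy as np


def paired_echo_spectrum(n_samples, cycles, beta):
    """Normalized DFT of exp(j*beta*sin(2*pi*cycles*n/n_samples))."""
    n = np.arange(n_samples)
    phase_error = beta * np.sin(2 * np.pi * cycles * n / n_samples)
    return np.fft.fft(np.exp(1j * phase_error)) / n_samples


# Assumed example values: 1024 samples, 16 vibration cycles, 0.5 rad amplitude.
spectrum = paired_echo_spectrum(n_samples=1024, cycles=16, beta=0.5)

mainlobe = abs(spectrum[0])                          # weight J_0(beta)
first_pair = abs(spectrum[16]), abs(spectrum[-16])   # weights |J_{+1}|, |J_{-1}|
# Everything that is not at a multiple of the vibration frequency should vanish.
off_grid = np.abs(np.delete(spectrum, np.arange(0, 1024, 16))).max()
```

With these values the mainlobe weight is J₀(0.5) ≈ 0.938 and each first-order echo has weight J₁(0.5) ≈ 0.242, which illustrates how energy leaks from the mainlobe into the paired echoes.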

3.3. Improved Phase Gradient Autofocus

In the following autofocus processing, an improved phase gradient autofocus is applied to estimate and compensate for the errors. The proposed improved PGA differs from traditional PGA in two aspects: (1) discrete windowing, rather than the rectangular windowing in traditional PGA, is applied to deal with the paired echoes introduced by periodic errors; and (2) the envelope of strong targets is extracted to estimate the amplitude errors. The phase errors are estimated in the same way as in traditional PGA processing.

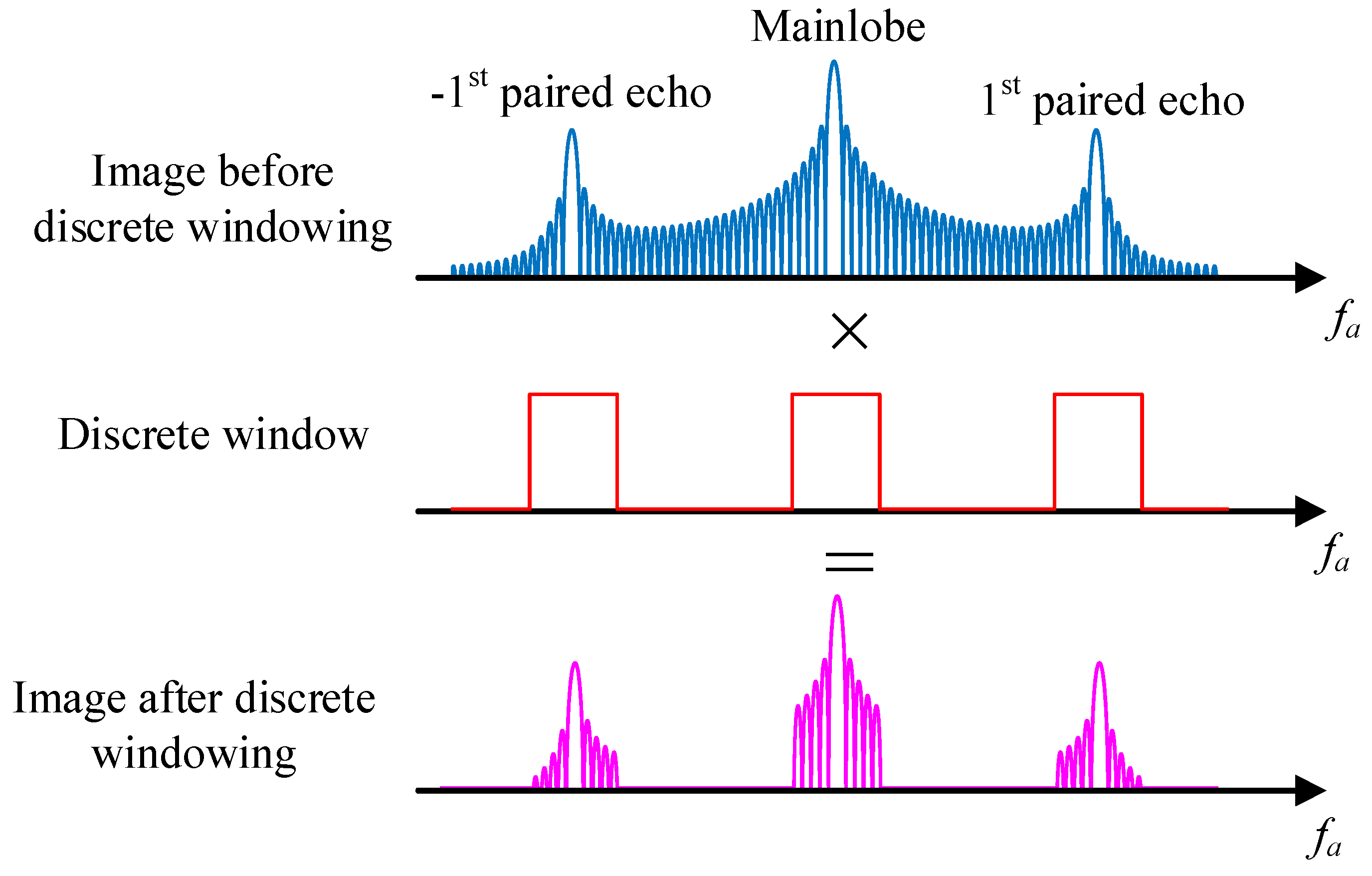

3.3.1. Discrete Windowing

The periodic phase error caused by antenna vibration will bring extra paired echo peaks in the image, thus spreading the energy of the targets. As is shown in (20), the paired echo peaks appear in the position with azimuth displacement of integer multiples of vibration frequency from the actual mainlobe position

where

is the position of the nth paired echo in the image. As a result, the power of the strong point is mainly distributed discretely in the neighborhoods around the peaks of the mainlobe and the paired echoes.

The discrete windowing method can be applied to address the problem of paired echoes. As shown in

Figure 5, the basic idea of discrete windowing is to multiply the data with a series of discrete windows which have non-zero values only near the mainlobe and the paired echo peaks, and thus include the energy of the target as much as possible.

Considering the SPECAN processing in (23), and taking the widened azimuth point spread function as a whole, the azimuth image is

where the widened azimuth point spread function is

A series of discrete windows can be defined as

where

is rectangular window function. The total number of discrete windows is

, and the width of each window

is determined by the power distribution of each peak in the same way as traditional continuous windowing. After discrete windowing, the image becomes

where

is a truncated azimuth point spread function with the center at the nth paired echo peak.
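A minimal sketch of how such a set of discrete windows might be built is given below. It assumes, for simplicity, that the paired echoes are equally spaced by `echo_spacing` bins around the mainlobe and that every window has the same half-width; in the text the width of each window is determined from the power distribution of its own peak. All names and values are hypothetical.

```python
import numpy as np


def discrete_window(n_samples, mainlobe_idx, echo_spacing, n_pairs, half_width):
    """Boolean mask that is True only near the mainlobe and its paired echoes."""
    mask = np.zeros(n_samples, dtype=bool)
    for k in range(-n_pairs, n_pairs + 1):
        center = mainlobe_idx + k * echo_spacing
        lo = max(center - half_width, 0)
        hi = min(center + half_width + 1, n_samples)
        if lo < hi:  # skip windows that fall entirely outside the image
            mask[lo:hi] = True
    return mask


# Example: mainlobe at bin 50, first-order echoes 20 bins away, 5-bin windows.
mask = discrete_window(100, mainlobe_idx=50, echo_spacing=20, n_pairs=1, half_width=2)
# windowed_line = azimuth_line * mask   # applied to one azimuth image line
```

Compared with a single wide rectangular window, this mask keeps the energy around each peak while rejecting the clutter and noise between the peaks.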

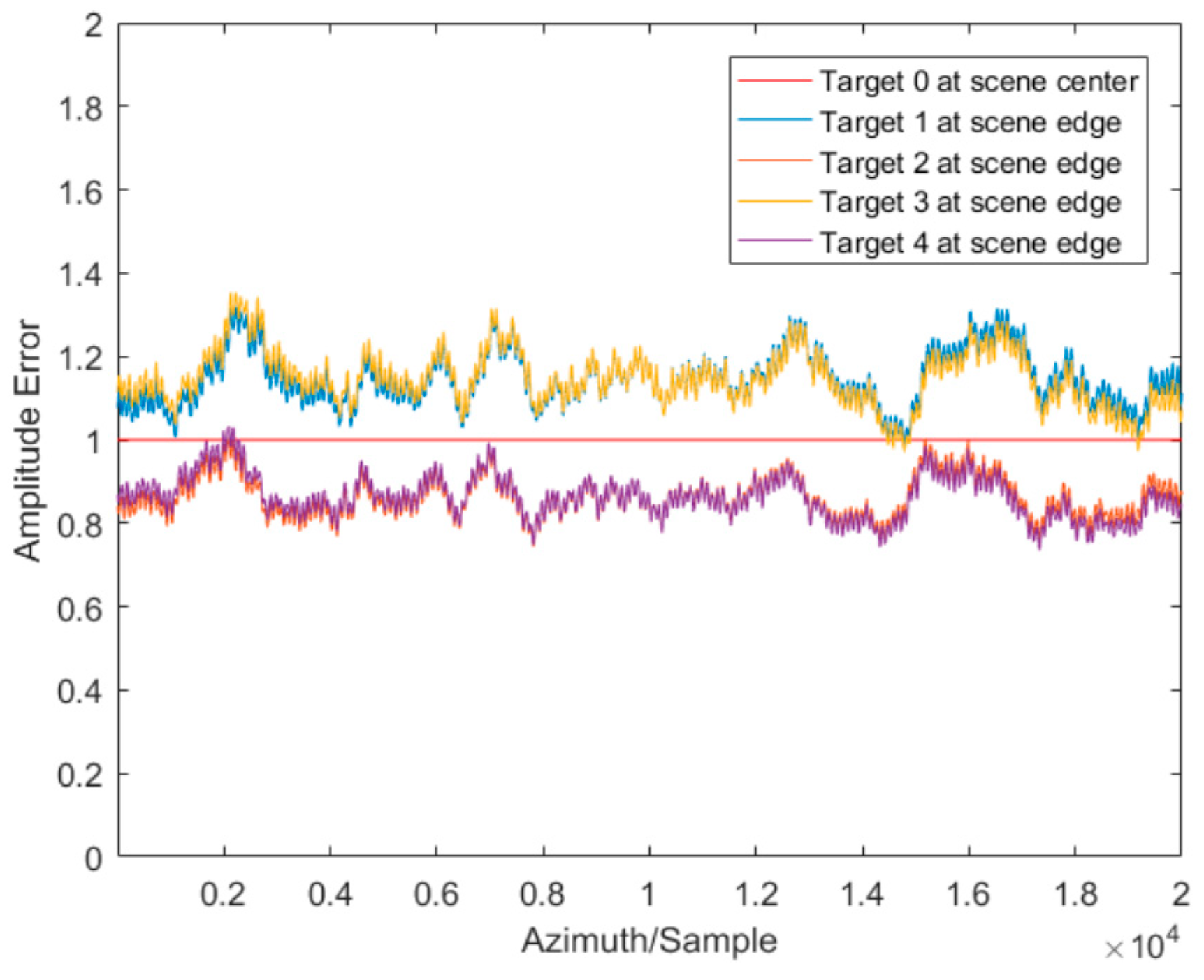

3.3.2. Amplitude Error Estimation and Compensation

The amplitude errors of SAR will bring fluctuating amplitude in the data domain, which can be shown in the IFFT result of (29) after discrete windowing and can be written as

For the sake of simplicity, the azimuth data can be rewritten with its amplitude and phase as

The fluctuating amplitude will consequently degrade the image quality. Thus, the problem of non-uniform amplitude should be addressed. An amplitude compensation procedure is added by extracting the fluctuating amplitude of each strong point to remove the impact of amplitude error.

As shown in (30), amplitude errors are multiplicative real noise in the azimuth time domain, and they will turn the constant envelope of strong point data into fluctuating forms. Thus, it can be estimated by extracting the envelope of strong point data, which can be shown in

where

is the envelope of the data and

is the average of

for azimuth time. According to the estimated amplitude errors, amplitude compensation can be fulfilled in the azimuth time domain.
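The envelope-based estimate described above can be sketched as follows for a single strong point. This is an illustrative sketch rather than the exact procedure of the method: the function names and the small `floor` guard against division by zero are assumptions, and averaging over several strong points is omitted.

```python
import numpy as np


def estimate_amplitude_error(strong_point_data):
    """Envelope of the azimuth-time data normalized by its time average."""
    envelope = np.abs(strong_point_data)
    return envelope / envelope.mean()


def compensate_amplitude(data, amplitude_error, floor=1e-6):
    """Divide out the estimated multiplicative amplitude error."""
    return data / np.maximum(amplitude_error, floor)


# Synthetic check: constant-envelope target with a periodic amplitude error.
t = np.linspace(0.0, 1.0, 200, endpoint=False)
true_error = 1.0 + 0.2 * np.cos(2 * np.pi * 3 * t)
data = 5.0 * true_error * np.exp(1j * 2.0 * t ** 2)

estimated_error = estimate_amplitude_error(data)
compensated = compensate_amplitude(data, estimated_error)
```

After compensation the envelope is constant again, while the phase of the data is left untouched for the subsequent phase error estimation.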

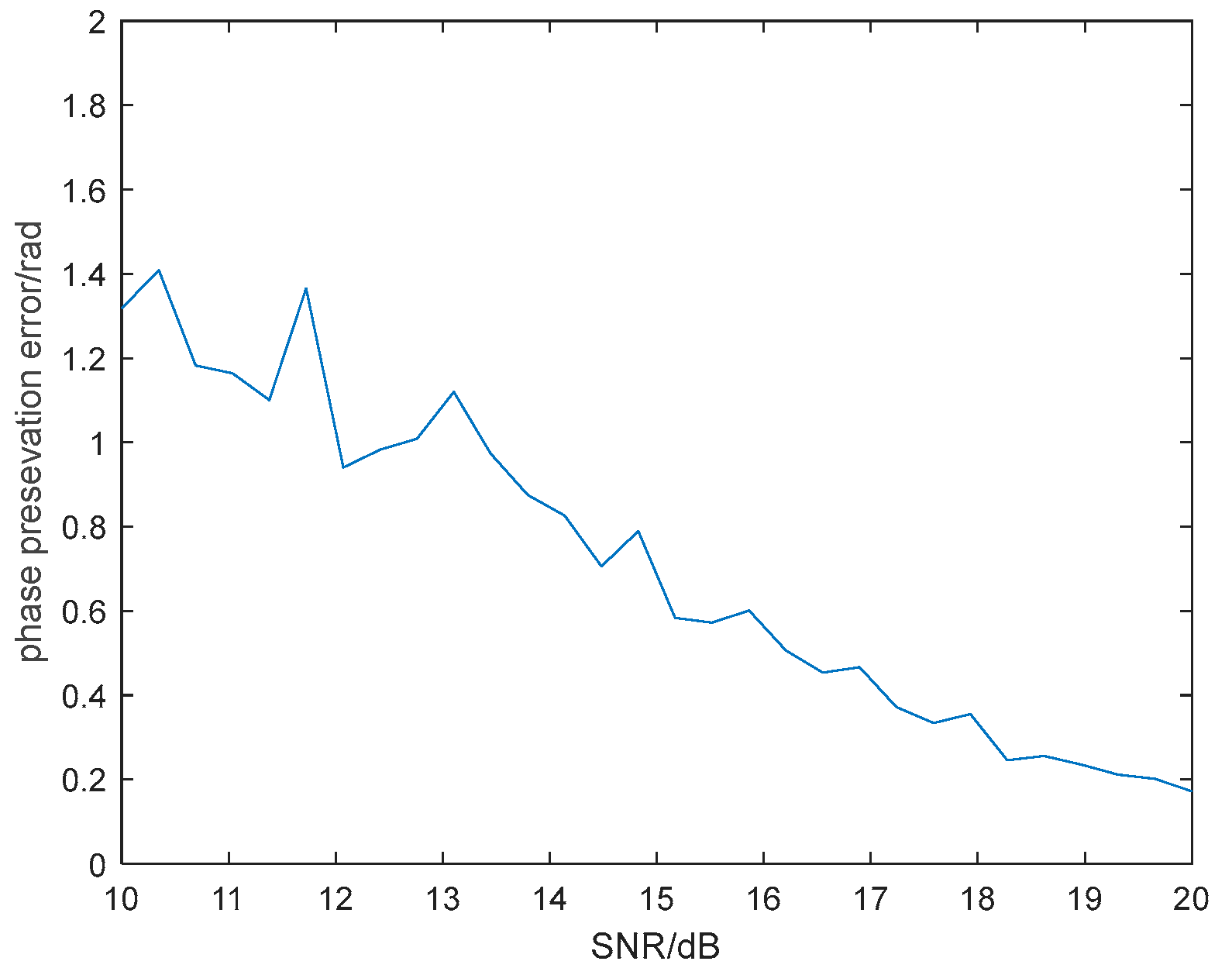

3.3.3. Phase Error Estimation and Compensation

After applying amplitude compensation, the data in the azimuth time domain become

and the phase errors

can be corrected by the PGA method. The PGA autofocus method is based on the fact that the phase gradient of the isolated well-focused strong point in the data domain should be zero. Thus, the idea of PGA autofocus is to compensate for the extra non-linear phase errors brought by non-ideal factors to realize precise focusing.

In PGA processing, a circular shift is first applied in several chosen strong points to remove the linear phase, and then the phase gradient is estimated. For strong points in

range cells, the linear unbiased minimum variance (LUMV) estimation of the phase gradient can be written as

where

is the first-order difference of

, which is the

sample of

.

The accumulative phase error is

Usually, the estimated phase error is compensated iteratively until the phase error is below a preset value.
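The phase gradient kernel can be illustrated with the commonly used form of the LUMV estimator, in which the gradient at each azimuth sample is the sum over range cells of Im{g*·ġ} divided by the sum of |g|². The sketch below assumes that windowing and circular shifting have already been applied, approximates the derivative by a first-order difference, and uses hypothetical names; it is not claimed to reproduce the exact expressions of the method.

```python
import numpy as np


def lumv_phase_gradient(g):
    """LUMV-type phase gradient estimate.

    g: complex array of shape (n_range_cells, n_azimuth) holding the
    azimuth-time data of the selected strong points.
    """
    dg = np.diff(g, axis=1)  # first-order difference along azimuth
    numerator = np.sum(np.imag(np.conj(g[:, :-1]) * dg), axis=0)
    denominator = np.sum(np.abs(g[:, :-1]) ** 2, axis=0)
    return numerator / denominator


def accumulate_phase(gradient):
    """Integrate the gradient; the first sample is taken as the phase reference."""
    return np.concatenate(([0.0], np.cumsum(gradient)))


# Synthetic check: three range cells sharing one quadratic phase error.
t = np.linspace(-1.0, 1.0, 256)
true_phase = 3.0 * t ** 2
amplitudes = np.array([1.0, 2.0, 0.5])[:, None]
g = amplitudes * np.exp(1j * true_phase)[None, :]

estimated_phase = accumulate_phase(lumv_phase_gradient(g))
```

Because the difference form returns sin(Δφ) rather than Δφ, the estimate is accurate only when the phase changes slowly between samples, which is one reason the compensation is normally iterated.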

3.4. Error Fusion for Sub-Apertures

In the procedures above, the error in each sub-aperture and each block can be estimated. Afterward, the estimated errors should be fused to realize the desired imaging processing with the whole synthetic aperture time for the entire imaging scene.

Assume that the estimated amplitude and phase errors in the nth sub-aperture for one specific block are

where

and

are the center and the length of the

sub-aperture, respectively. Correspondingly, the error in the full aperture can be fused by the following rule: for the overlapped part of sub-apertures, the fused error is an average of each sub-aperture error; and for the non-overlapped part, the fused error is the estimated error itself. This rule can be written as

where

is the length of overlapped part of two adjacent sub-apertures.
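The fusion rule can be sketched with a running sum and a coverage counter, so that samples covered by two sub-apertures are averaged and samples covered by one keep their estimate. The function name and arguments are hypothetical, and the sketch assumes that the sub-aperture estimates already share a common reference, since PGA leaves the constant and linear phase terms of each sub-aperture undetermined.

```python
import numpy as np


def fuse_subaperture_errors(estimates, starts, n_total):
    """Fuse per-sub-aperture error curves onto the full-aperture time axis.

    estimates: list of 1-D arrays, one per sub-aperture
    starts:    first full-aperture sample index of each sub-aperture
    n_total:   number of samples in the full aperture
    """
    total = np.zeros(n_total, dtype=float)
    count = np.zeros(n_total, dtype=int)
    for est, start in zip(estimates, starts):
        est = np.asarray(est, dtype=float)
        total[start:start + est.size] += est
        count[start:start + est.size] += 1
    fused = np.full(n_total, np.nan)  # NaN marks samples no sub-aperture covers
    covered = count > 0
    fused[covered] = total[covered] / count[covered]
    return fused


# Two 4-sample sub-apertures overlapping by 2 samples.
fused = fuse_subaperture_errors([[1, 1, 1, 1], [3, 3, 3, 3]], starts=[0, 2], n_total=6)
```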

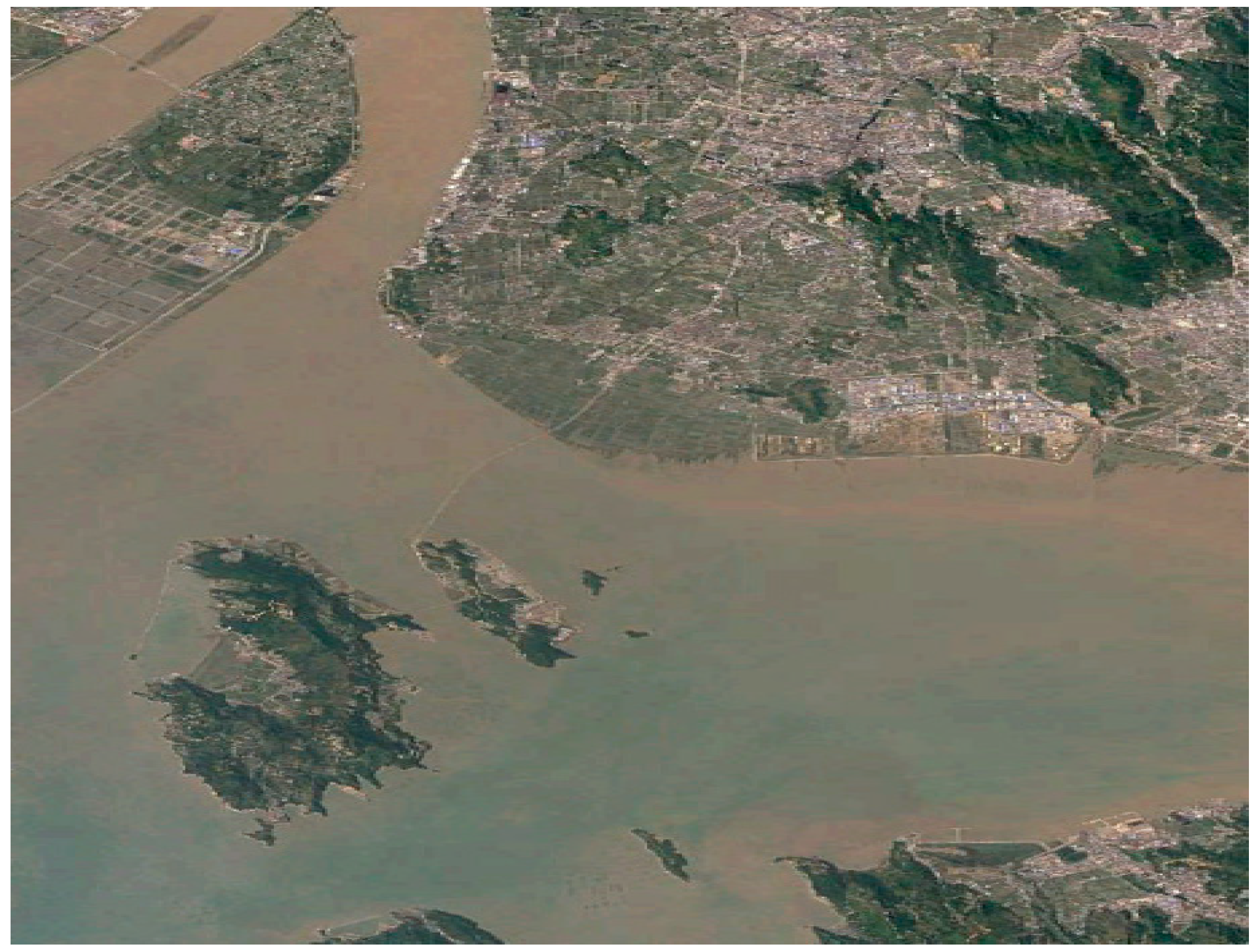

3.5. Error Interpolation for Blocks Without PSs

The error in the full aperture for a block with PSs can be estimated with the method above. However, for blocks where PSs are not sufficient, the method fails because it relies on point scatterers to estimate the phase and amplitude errors. Therefore, interpolation is used to estimate the errors for blocks without PSs.

Error of blocks without PSs can be interpolated with the aid of their adjacent blocks which have enough strong points:

where

is the error parameter of the

th block without a strong point, and

is the error parameter of the

th block with proper strong points.

and

are the coordinates of the center of the

th block with proper strong points, and

and

are the coordinates of the center of the

th block without strong points. The function

denotes the interpolation process.

Furthermore, for the random error which can be hardly modelled with parameters, interpolation can be applied directly on the phase and amplitude for each azimuth time:

where

is the error amplitude and error of the

th block without a strong point at azimuth time

, and

is the error parameter of the

th block with proper strong points.

Cubic interpolation is applied to fulfill the

function. Cubic interpolation of scattered two-dimensional data provides a smooth reconstruction of surfaces from irregularly sampled points through a triangulation-based piecewise polynomial approach. The method begins by constructing a Delaunay triangulation of the input domain, partitioning the plane into triangular elements with vertices at the original data locations. Within each triangle, a cubic polynomial function in the two coordinates can be defined as

This function is fitted to approximate the interpolated data. The polynomial contains ten coefficients that are determined by imposing two constraints. First, the interpolation conditions are enforced at each vertex to ensure exact reproduction of the input data:

Second, gradient matching conditions are applied to guarantee continuity across triangle boundaries:

where

and

shares the same vertex

. By applying these constraints, the coefficients in (39) can be determined.

The method achieves smooth visual results while maintaining local adaptability to irregular data distribution. The cubic terms in the polynomial allow for curvature variation within each element, providing better approximation than linear methods while avoiding the oscillatory behavior that can occur with higher-order global polynomials. This characteristic is well applicable in error estimation among different blocks.
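One readily available implementation of triangulation-based piecewise cubic interpolation is `scipy.interpolate.griddata` with `method="cubic"`, which builds a Delaunay triangulation and a Clough-Tocher interpolant. The sketch below uses it as a stand-in and is not necessarily the implementation used in this work. The function name and the nearest-neighbor fallback for block centers outside the convex hull of the known blocks are assumptions added for illustration.

```python
import numpy as np
from scipy.interpolate import griddata


def interpolate_block_errors(known_centers, known_values, query_centers):
    """Interpolate an error parameter from blocks with PSs to blocks without.

    known_centers: (K, 2) centers of blocks with enough strong points
    known_values:  (K,) error parameter estimated in those blocks
    query_centers: (Q, 2) centers of blocks lacking strong points
    """
    known_centers = np.asarray(known_centers, dtype=float)
    known_values = np.asarray(known_values, dtype=float)
    query_centers = np.asarray(query_centers, dtype=float)
    cubic = griddata(known_centers, known_values, query_centers, method="cubic")
    # griddata returns NaN outside the convex hull; fall back to the nearest block.
    nearest = griddata(known_centers, known_values, query_centers, method="nearest")
    return np.where(np.isnan(cubic), nearest, cubic)


# Synthetic check with an error parameter that varies linearly over the scene.
rng = np.random.default_rng(0)
corners = np.array([[0.0, 0.0], [0.0, 10.0], [10.0, 0.0], [10.0, 10.0]])
centers = np.vstack([corners, rng.uniform(0.0, 10.0, size=(30, 2))])
values = 2.0 * centers[:, 0] + 3.0 * centers[:, 1]
result = interpolate_block_errors(centers, values, [[5.0, 5.0], [20.0, 20.0]])
```

For random errors, the same call could be repeated for each azimuth time sample, with the phase and amplitude interpolated separately.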

3.6. Image Stitching

So far, the errors of full aperture in any block have been estimated. Therefore, the final desired SAR image with a full synthetic aperture for the entire imaging scene can be obtained via stitching the images of all the blocks.

The main problem in image stitching is the tile effect that appears at the edges of the image blocks. To deal with this problem, an image stitching method with image registration and edge smoothing is applied.

Since the proposed method is a PGA-based error estimation method, the first-order and constant polynomial error will not be estimated, and these errors will lead to constant phase and displacement in the final SAR image. Considering that error estimation and compensation are applied to each block, residual errors after compensation vary among the blocks. The varying residue errors will cause varying displacement and constant phase in each block:

where

denotes the error-compensated image for the

th block,

denotes the ideal image,

and

denote displacement in the range and azimuth direction, respectively, and

denotes the constant phase for the

th block. In addition, an additional block-varying processing gain

is considered, for each block is processed separately.

In the image stitching process, image registration is first applied to remove displacement. Considering that the blocks are set with overlap, registration can be fulfilled via maximizing the cross-correlation of magnitude of the overlapped area for two adjacent blocks:

With the displacement compensated for, the varying

and

can be estimated to remove the tile effect. The estimation for

can be completed by unifying the magnitude of overlapped area in different blocks, and the estimation for

can be completed via maximizing the phase-compensated summation of overlapped area of two adjacent blocks:

where

denotes the image of the

th block after registration and magnitude unification. With the block-varying displacement, magnitude, and phase compensated for, image stitching is accomplished and the image of the entire scene is finally acquired.
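The registration and unification steps for one pair of adjacent blocks can be sketched as follows. This is a simplified illustration with hypothetical function names: the displacement is restricted to integer samples and found from the peak of an FFT-based circular cross-correlation of the mean-removed magnitudes, the gain is taken as the ratio of summed magnitudes, and the constant phase is taken in closed form as the angle of the coherent sum of the reference overlap times the conjugate of the other overlap, which is one common way to align the phases of two overlapping areas.

```python
import numpy as np


def estimate_shift(ref_mag, mov_mag):
    """Integer (row, col) shift s such that np.roll(mov, s) best matches ref."""
    ref0 = ref_mag - ref_mag.mean()
    mov0 = mov_mag - mov_mag.mean()
    xcorr = np.fft.ifft2(np.fft.fft2(ref0) * np.conj(np.fft.fft2(mov0))).real
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    # Map circular peak indices to signed shifts.
    return tuple(int(p) - n if p > n // 2 else int(p)
                 for p, n in zip(peak, xcorr.shape))


def estimate_gain_and_phase(ref_overlap, mov_overlap):
    """Gain and constant phase that bring mov_overlap onto ref_overlap."""
    gain = np.abs(ref_overlap).sum() / np.abs(mov_overlap).sum()
    phase = np.angle(np.sum(ref_overlap * np.conj(mov_overlap)))
    return gain, phase


# Synthetic check: the second overlap is shifted, scaled, and phase-rotated.
rng = np.random.default_rng(1)
mov = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
ref = 2.0 * np.exp(1j * 0.7) * np.roll(mov, (3, -5), axis=(0, 1))

shift = estimate_shift(np.abs(ref), np.abs(mov))
aligned = np.roll(mov, shift, axis=(0, 1))
gain, phase = estimate_gain_and_phase(ref, aligned)
corrected = gain * np.exp(1j * phase) * aligned
```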

3.7. Algorithm Complexity Analysis

The data load for SAR imaging with a long synthetic time and large swath is very large, and algorithm complexity might be a problem. In this subsection, algorithm complexity is analyzed.

In our proposed method, range-matched filtering is firstly applied, and it is conducted in the range frequency domain. Therefore, -point fast Fourier transformation (FFT) is performed times, where and are the number of samples in the range and azimuth directions, respectively, and it demands complex number addition and complex number multiplication. Range-matched filtering and range cell migration correction (RCMC) are completed by multiplication in the range frequency domain, and it demands a total of complex number multiplication. Thereafter, range inverse FFT (IFFT) is applied, and its computation load is the same as the range FFT.

In the following azimuth processing, azimuth deramping is performed, and it is realized via a point complex number multiplication times. Here, is the number of sub-apertures. and is the number of azimuth samples in a sub-aperture. To form the rough image for autofocusing, the azimuth FFT is then applied, and its computation load is complex number additions and complex number multiplications. It should be noted that the azimuth FFT will be repeatedly performed in the subsequent autofocusing procedures for times of iteration and for each of the blocks. Therefore, the total computation load for the azimuth FFT in the error estimation iteration for all the blocks should be taken into account. Therefore, an extra number of complex number additions and complex number multiplications are included. It will be the same case for the following computation load analysis.

In the autofocus processing, azimuth windowing is conducted for the range cells with strong scatterers, and it demands complex number multiplications. Here, denotes the number of strong scatterers for error estimation in each block. For these windowing range cells, azimuth IFFT is performed, and it requires complex number additions and complex number multiplications. Thereafter, errors are estimated via the proposed improved PGA method, and its computation load is complex number additions, as well as complex number multiplications. Then, the errors are fused among the sub-apertures, and its computation load can be omitted, for it will not involve time-consuming computation based on radar data. For the same reason, the computation load of imaging stitching is also omitted. Therefore, the computation load of final imaging only involves error compensation for each block and the corresponding azimuth FFT, and it includes complex number additions, as well as complex number multiplications.

Overall, the total computation load of the proposed method can be summarized in

Table 1, and the algorithm complexity can be described as

. In actual processing of SAR data, the total computation time is usually several hours to several tens of hours, depending on the number of iterations.
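For reference, the FFT terms in such an operation count follow the standard radix-2 figures: an N-point FFT needs N·log₂N complex additions and (N/2)·log₂N complex multiplications. The helper below is a small sketch for tallying these terms; the function name and the example sizes are hypothetical and do not correspond to the parameters of the simulations.

```python
import math


def fft_op_counts(n_points, n_transforms=1):
    """Radix-2 operation counts for `n_transforms` FFTs of length `n_points`.

    Returns (complex additions, complex multiplications).
    """
    stages = math.log2(n_points)
    additions = n_transforms * n_points * stages
    multiplications = n_transforms * (n_points / 2) * stages
    return additions, multiplications


# Example: two 1024-point FFTs (sizes chosen only for illustration).
adds, mults = fft_op_counts(1024, n_transforms=2)
```

Multiplying such per-transform counts by the number of blocks, sub-apertures, and autofocus iterations shows why the repeated azimuth FFTs tend to dominate the total load.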