Robust Pose Estimation for Noncooperative Spacecraft Under Rapid Inter-Frame Motion: A Two-Stage Point Cloud Registration Approach

Abstract

1. Introduction

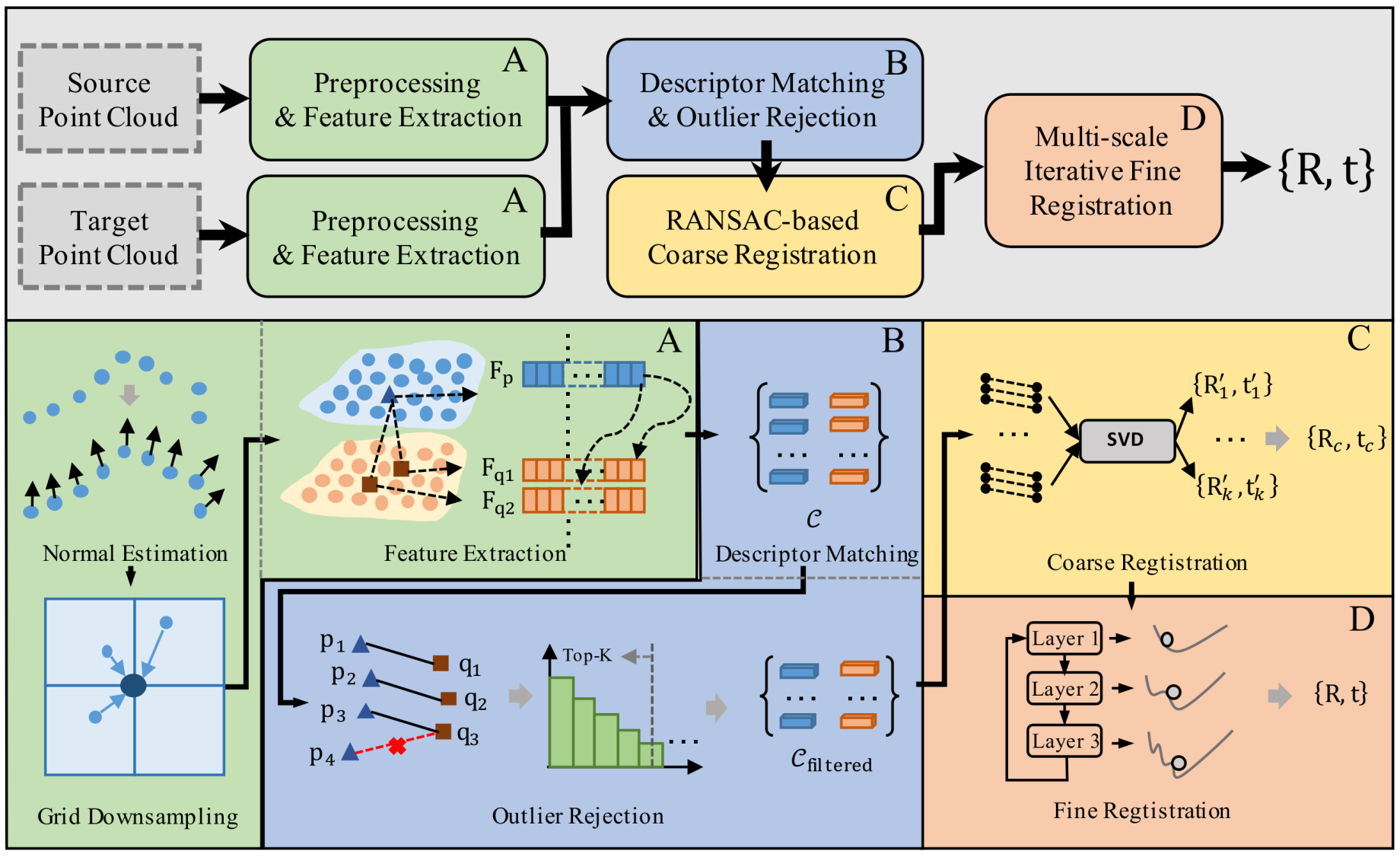

- Two-Stage Point Cloud Registration Framework: We propose a two-stage point cloud registration framework tailored to pose estimation of noncooperative spacecraft. In the coarse alignment stage, a length-invariant outlier rejection mechanism removes inconsistent correspondences, and an ICP-based fine registration stage then refines the pose. Quantitative evaluations show that this design improves robustness and accuracy under fast inter-frame motion, with the lowest average errors among the compared methods on the synthetic benchmark (rotation error 1.90°, translation error 8.44 cm).

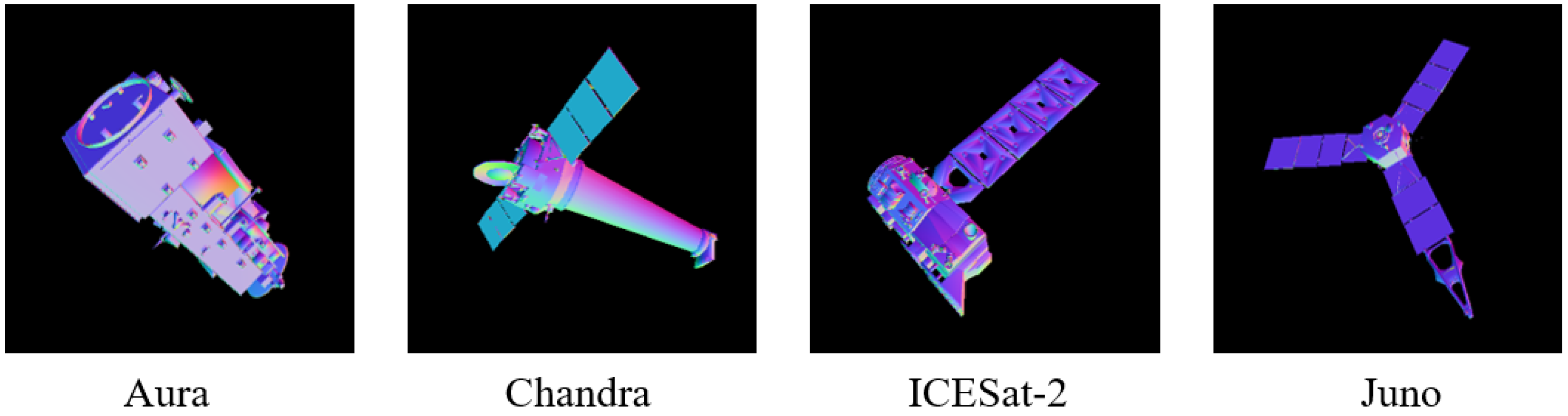

- Synthetic Benchmark Dataset for Spacecraft Pose Estimation: We construct a synthetic dataset from 8 diverse spacecraft CAD models, with 10 independently generated sequences per model and 12,000 annotated frames in total. The dataset provides accurate ground-truth poses and is designed to support rigorous benchmarking under a wide range of motion and viewing conditions.

- Practical Adaptability for Onboard Applications: The proposed framework is designed for computational efficiency and generalizability across data, which makes it suitable for onboard processing on resource-constrained spacecraft. Unlike many deep learning-based methods, it requires no large-scale training data, and its real-time performance is validated across diverse simulated time-of-flight (ToF) scenarios.
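One common way to realize a length-invariant check, assumed here rather than taken from the paper, uses the fact that a rigid motion preserves distances: if (p_i, q_i) and (p_j, q_j) are both correct correspondences, then |p_i - p_j| = |q_i - q_j| up to sensor noise. The Python sketch below counts, for each correspondence, how many others agree with it in this sense and discards weakly supported ones. The function name `length_consistency_filter` and the thresholds `tau` and `min_ratio` are hypothetical, illustrative choices.

```python
import numpy as np


def length_consistency_filter(src, dst, tau=0.05, min_ratio=0.5):
    """Return indices of correspondences src[i] <-> dst[i] that pass a
    pairwise-length (rigid-invariance) check.

    A rigid transform preserves the distance between any two points, so two
    correct matches i, j satisfy |src_i - src_j| == |dst_i - dst_j| up to noise.
    tau (same unit as the points) and min_ratio are illustrative thresholds.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    d_src = np.linalg.norm(src[:, None, :] - src[None, :, :], axis=-1)
    d_dst = np.linalg.norm(dst[:, None, :] - dst[None, :, :], axis=-1)
    compatible = np.abs(d_src - d_dst) < tau
    np.fill_diagonal(compatible, False)   # a match is not evidence for itself
    support = compatible.sum(axis=1)      # length-consistent partners per match
    return np.flatnonzero(support >= min_ratio * support.max())


# Toy check: 20 true correspondences under a rigid motion, the first 5 corrupted.
rng = np.random.default_rng(0)
src = rng.uniform(-1.0, 1.0, size=(20, 3))
c, s = np.cos(np.radians(30.0)), np.sin(np.radians(30.0))
R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
dst = src @ R.T + np.array([0.5, -0.2, 1.0])
dst[:5] += rng.uniform(2.0, 3.0, size=(5, 3))   # gross outliers
keep = length_consistency_filter(src, dst)
print(keep)
```

Because the test compares only lengths, it does not depend on the unknown rotation or translation, so a filter of this kind can run before any pose hypothesis exists.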

2. Materials and Methods

2.1. Problem Statement

2.2. Feature-Based Coarse Registration

2.2.1. Point Cloud Preprocessing

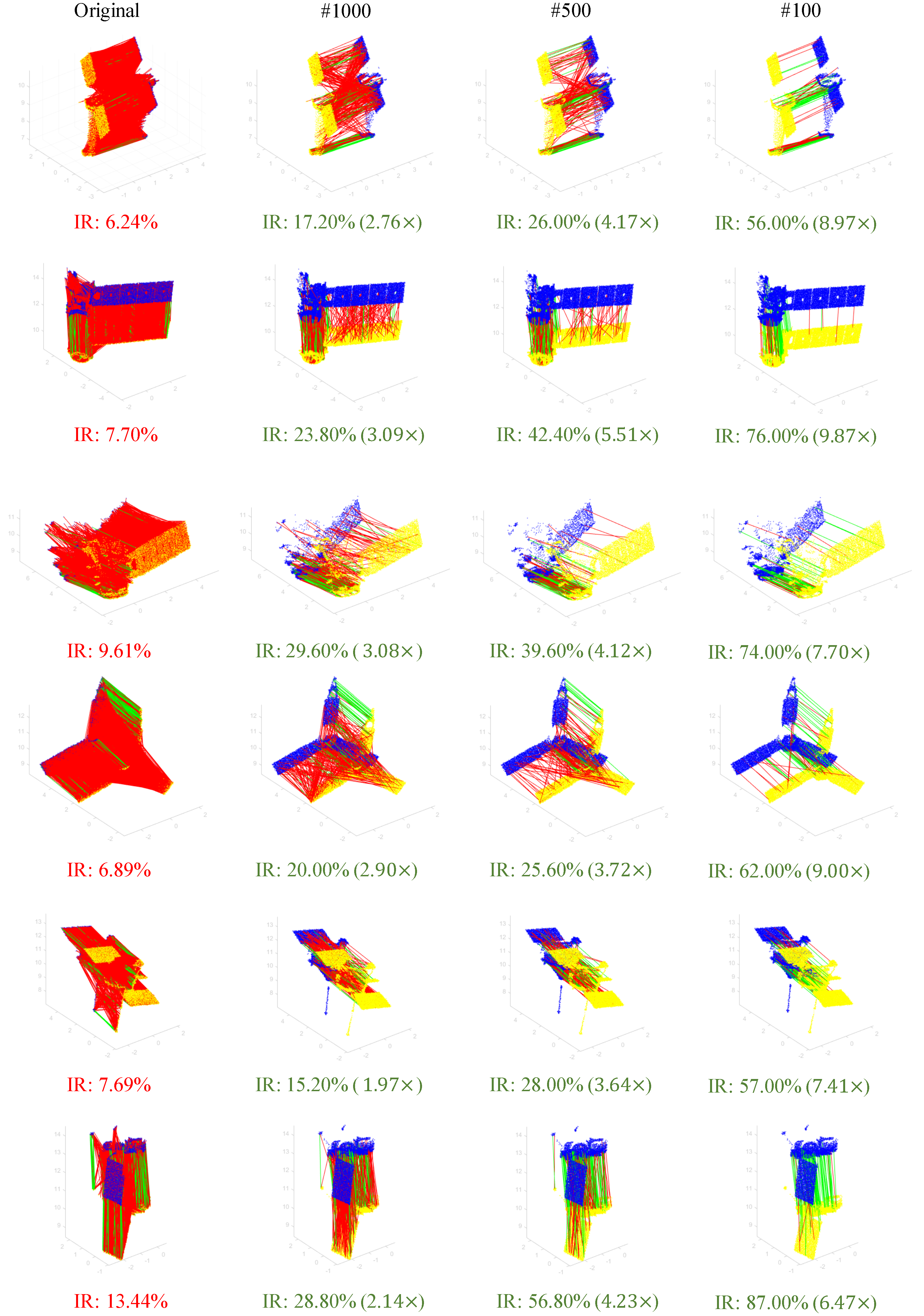

2.2.2. Feature Extraction and Matching
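Descriptor matching is frequently done by mutual nearest-neighbour search, and the sketch below assumes that rule; the matching criterion the authors actually use may differ. Tiny hand-made 2-D vectors stand in for real 3D descriptors such as FPFH (listed in the references), and `mutual_nn_matches` is a hypothetical helper.

```python
import numpy as np


def mutual_nn_matches(desc_src, desc_dst):
    """Return (i, j) pairs where desc_src[i] and desc_dst[j] are each other's
    nearest neighbour in descriptor space (brute force, O(N*M) memory)."""
    desc_src = np.asarray(desc_src, dtype=float)
    desc_dst = np.asarray(desc_dst, dtype=float)
    d2 = ((desc_src[:, None, :] - desc_dst[None, :, :]) ** 2).sum(axis=-1)
    fwd = d2.argmin(axis=1)   # best target for every source descriptor
    bwd = d2.argmin(axis=0)   # best source for every target descriptor
    i = np.flatnonzero(bwd[fwd] == np.arange(len(desc_src)))
    return np.stack([i, fwd[i]], axis=1)


src_desc = [[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [9.5, 9.5]]
dst_desc = [[1.1, 0.0], [0.1, 0.0], [9.0, 9.0]]
print(mutual_nn_matches(src_desc, dst_desc).tolist())  # [[0, 1], [1, 0], [3, 2]]
```

Source descriptor 2 is dropped: its nearest target, [9, 9], prefers source descriptor 3, so the match is not mutual.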

2.2.3. RANSAC-Based Pose Estimation
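A textbook RANSAC loop for rigid pose estimation (Fischler and Bolles, in the references) is sketched below as an illustration, not as the authors' exact configuration. Each iteration fits a pose to three random correspondences with the SVD-based Kabsch solution and counts correspondences whose residual falls below a threshold; the largest consensus set is refit at the end. The names `kabsch` and `ransac_rigid`, the 0.05 threshold (in the units of the points), and the iteration count are assumptions.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))            # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_d - R @ mu_s


def ransac_rigid(src, dst, inlier_thresh=0.05, iters=500, seed=0):
    """RANSAC over correspondences src[i] <-> dst[i] with 3-point minimal samples."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = kabsch(src[idx], dst[idx])
        residual = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = residual < inlier_thresh
        if inliers.sum() > best.sum():
            best = inliers
    R, t = kabsch(src[best], dst[best])               # refit on the consensus set
    return R, t, best


rng = np.random.default_rng(1)
src = rng.uniform(-1.0, 1.0, size=(40, 3))
a = np.radians(40.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.3, -0.1, 2.0])
dst = src @ R_true.T + t_true
dst[:10] += rng.uniform(2.0, 3.0, size=(10, 3))      # 25% gross outliers
R_est, t_est, inlier_mask = ransac_rigid(src, dst)
print(np.allclose(R_est, R_true), int(inlier_mask.sum()))
```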

2.3. Geometry-Based Fine Registration
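Since the fine stage is described as ICP-based, a minimal point-to-point ICP in the spirit of Besl and McKay (in the references) is sketched below for illustration; the authors' variant, correspondence search, and stopping rule are not assumed to match it. It alternates nearest-neighbour matching with a closed-form Kabsch update, starting from an initial pose such as a coarse RANSAC estimate. Nearest neighbours are found by brute force, which is acceptable for a few hundred points; a k-d tree would be the usual choice at ToF frame sizes. All function names and the tolerance are hypothetical.

```python
import numpy as np


def kabsch(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((src - mu_s).T @ (dst - mu_d))  # SVD of cross-covariance
    d = np.sign(np.linalg.det(Vt.T @ U.T))                   # avoid reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, mu_d - R @ mu_s


def nearest(moved, dst):
    """Brute-force nearest neighbour in dst for each row of moved, plus the RMS distance."""
    d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
    nn = d2.argmin(axis=1)
    return nn, float(np.sqrt(d2[np.arange(len(moved)), nn].mean()))


def icp(src, dst, R=None, t=None, iters=50, tol=1e-12):
    """Point-to-point ICP refining an initial pose (R, t) that maps src onto dst."""
    R = np.eye(3) if R is None else R
    t = np.zeros(3) if t is None else t
    prev_rms = np.inf
    for _ in range(iters):
        moved = src @ R.T + t
        nn, rms = nearest(moved, dst)
        if prev_rms - rms < tol:              # stop when the RMS no longer decreases
            break
        prev_rms = rms
        dR, dt = kabsch(moved, dst[nn])       # increment aligning current points to matches
        R, t = dR @ R, dR @ t + dt            # compose increment with the running pose
    return R, t


rng = np.random.default_rng(2)
dst = rng.uniform(-0.5, 0.5, size=(100, 3))
a = np.radians(2.0)
R_true = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([0.01, -0.01, 0.01])
src = (dst - t_true) @ R_true               # so that dst = src @ R_true.T + t_true
R_est, t_est = icp(src, dst)                # start from the identity pose
_, rms = nearest(src @ R_est.T + t_est, dst)
print(f"final RMS distance: {rms:.2e}")
```

Point-to-point ICP only converges to the correct pose when the initial error is small relative to the point spacing, which is the usual motivation for running it after a coarse, correspondence-based stage.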

2.4. Point Cloud Dataset Construction

- 3D vertex in world coordinates

- Camera extrinsic parameters (rotation and translation)

- Intrinsic matrix

- Pixel coordinates in the image plane
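The quantities above enter the standard pinhole camera model, z [u, v, 1]^T = K (R P_w + t), where P_w is a world-frame vertex, R and t are the extrinsic rotation and translation, K is the intrinsic matrix, and z is the depth along the optical axis. These symbols are notation assumed here. A minimal NumPy sketch with an illustrative 640 × 480 camera (focal length 500 px), not the paper's sensor model:

```python
import numpy as np


def project(P_w, R, t, K):
    """Project N x 3 world-frame vertices to pixel coordinates with a pinhole model.

    Returns (uv, depth): uv is N x 2 (u, v) in pixels, depth is the camera-frame Z.
    """
    P_c = np.asarray(P_w, dtype=float) @ R.T + t   # world -> camera: R P_w + t
    uvw = P_c @ K.T                                # homogeneous pixels: K P_c
    return uvw[:, :2] / uvw[:, 2:3], P_c[:, 2]     # divide by depth


K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 2.0])                      # target 2 m in front of the camera
vertices = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, -0.2, 0.0]])
uv, depth = project(vertices, R, t, K)
print(uv)   # rows (u, v): (320, 240), (345, 240), (320, 190)
```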

Algorithm 1. Synthetic point cloud generation.
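One simple way to turn projected CAD vertices into a synthetic ToF-like point cloud, assumed here for illustration rather than taken from Algorithm 1, is a per-pixel depth test: project every vertex, keep only the nearest vertex in each pixel, and optionally add zero-mean Gaussian noise (the noise-robustness results use levels from 1 to 5 cm). This vertex-level z-buffer ignores occlusion by triangle faces, which a full renderer would handle. The function name and its parameters are hypothetical, and units are assumed to be metres.

```python
import numpy as np


def synthetic_cloud(P_w, R, t, K, width, height, sigma=0.0, seed=0):
    """Camera-frame points of the vertices visible in a width x height image.

    Visibility is a per-pixel z-buffer over vertices only (no face occlusion).
    sigma is the std of optional isotropic Gaussian noise, in the units of P_w.
    """
    P_c = np.asarray(P_w, dtype=float) @ R.T + t
    P_c = P_c[P_c[:, 2] > 0]                          # keep points in front of the camera
    uvw = P_c @ K.T
    px = np.floor(uvw[:, :2] / uvw[:, 2:3]).astype(int)
    ok = (px[:, 0] >= 0) & (px[:, 0] < width) & (px[:, 1] >= 0) & (px[:, 1] < height)
    P_c, px = P_c[ok], px[ok]
    order = np.argsort(P_c[:, 2], kind="stable")      # nearest first
    pixel_id = px[order, 1] * width + px[order, 0]
    _, first = np.unique(pixel_id, return_index=True) # first hit per pixel = nearest
    cloud = P_c[order][first]
    if sigma > 0:
        cloud = cloud + np.random.default_rng(seed).normal(0.0, sigma, size=cloud.shape)
    return cloud


K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
verts = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.1, 0.0, 0.0]])  # first two share a pixel
cloud = synthetic_cloud(verts, np.eye(3), np.array([0.0, 0.0, 2.0]), K, 640, 480)
print(cloud)   # the hidden vertex at 3 m depth is dropped
noisy = synthetic_cloud(verts, np.eye(3), np.array([0.0, 0.0, 2.0]), K, 640, 480, sigma=0.02)
```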

3. Results

3.1. Dataset

3.2. Evaluation Metrics
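The tables report RRE in degrees and RTE in centimetres. Widely used definitions, assumed here rather than quoted from the paper, are the rotation angle of R_gt^T R_est, RRE = arccos((tr(R_gt^T R_est) - 1) / 2), and the Euclidean distance between translations, RTE = |t_gt - t_est|. A short sketch:

```python
import numpy as np


def rre_deg(R_gt, R_est):
    """Relative rotation error: angle of R_gt^T R_est in degrees."""
    cos_angle = (np.trace(R_gt.T @ R_est) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))  # clip guards rounding


def rte(t_gt, t_est):
    """Relative translation error: Euclidean distance, in the unit of the inputs."""
    return float(np.linalg.norm(np.asarray(t_gt, float) - np.asarray(t_est, float)))


a = np.radians(2.0)
R_est = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
print(rre_deg(np.eye(3), R_est))                        # about 2 degrees
print(100.0 * rte([0.0, 0.0, 0.0], [0.03, 0.04, 0.0]))  # metres -> about 5 cm
```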

3.3. Implementation Details

3.4. Comparison to Other Methods

3.4.1. Quantitative Comparison

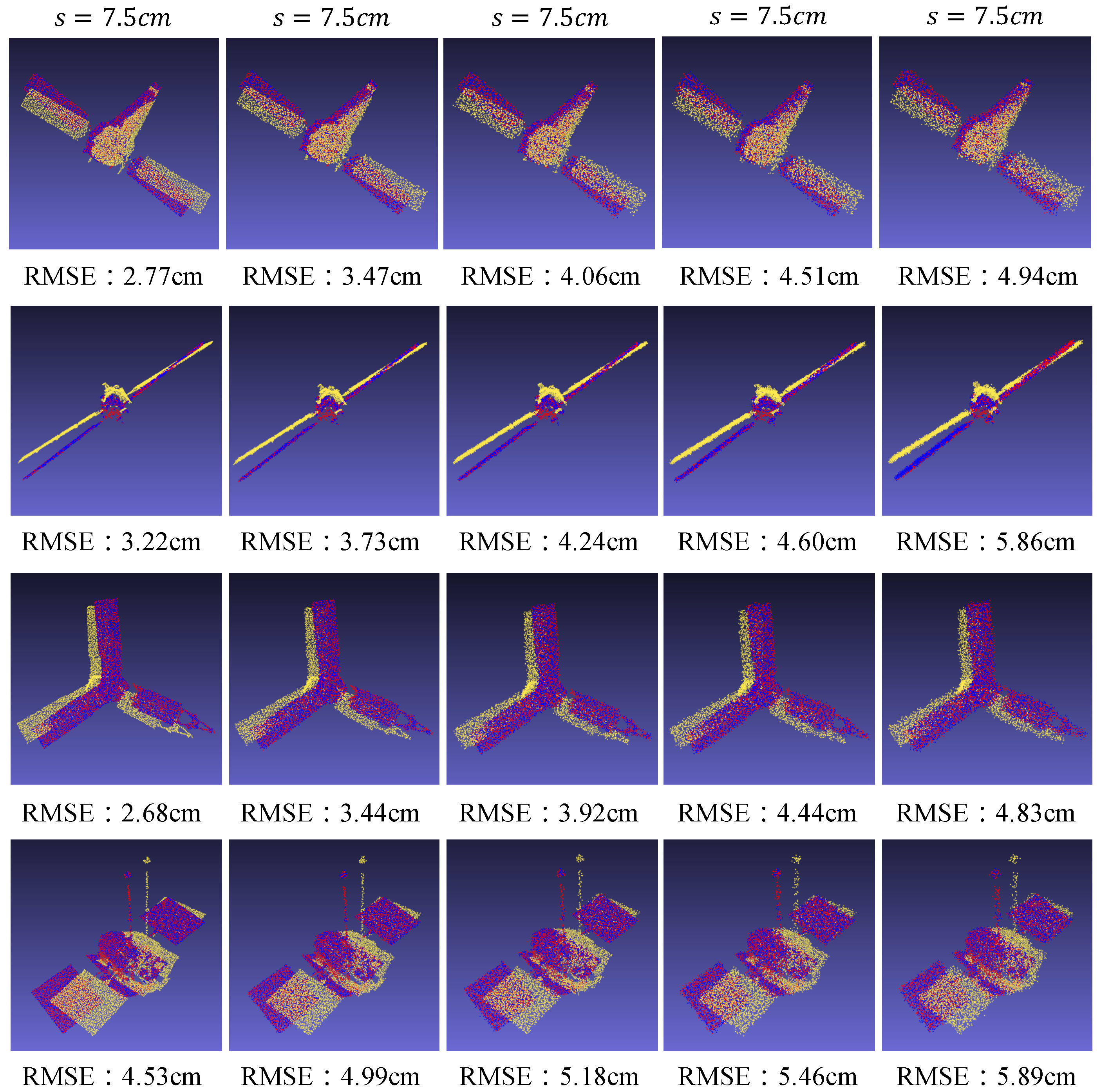

3.4.2. Robustness to Noise

3.4.3. Qualitative Results

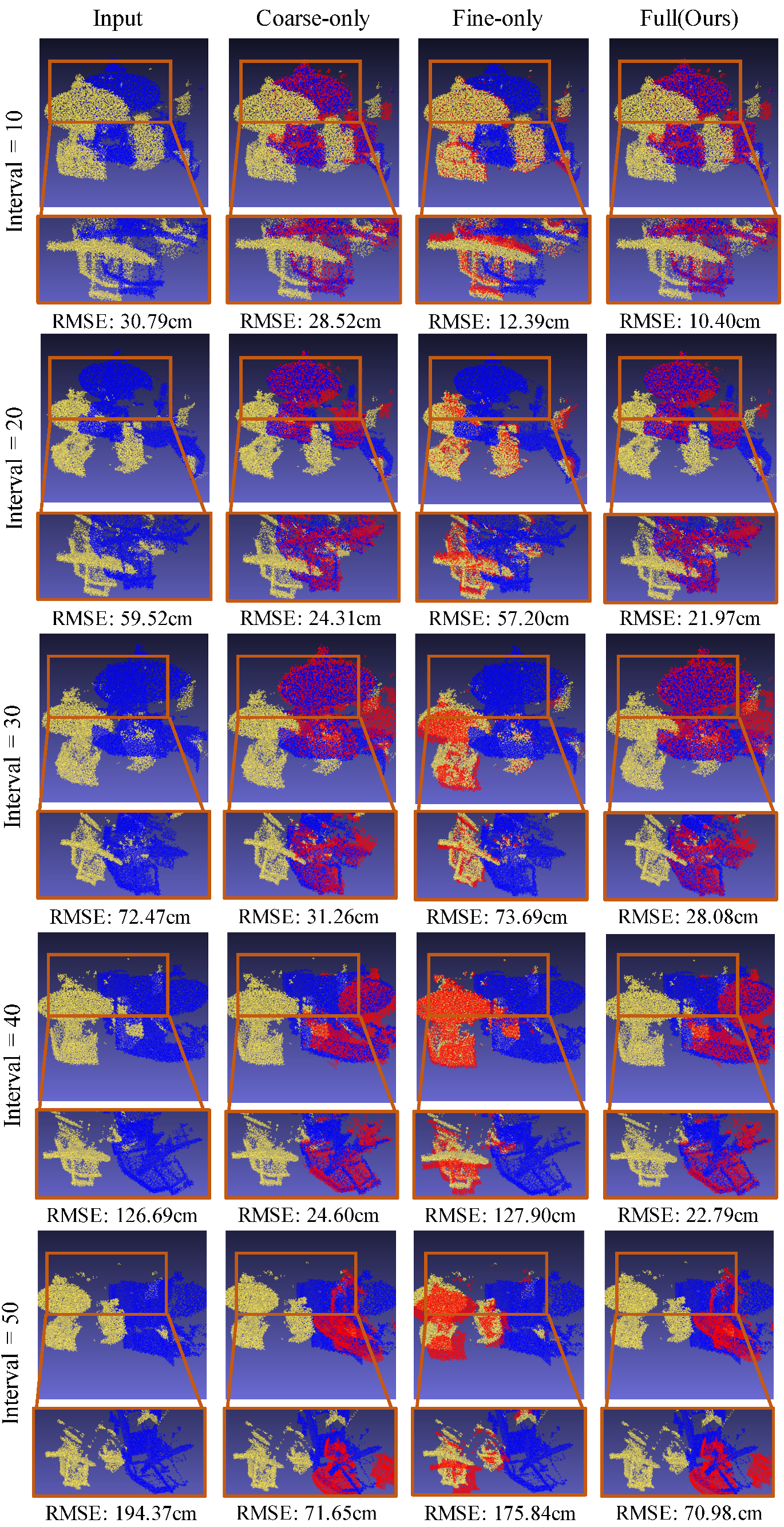

3.5. Ablation Study

3.6. Runtime Performance Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Srivastava, R.; Sah, R.; Das, K. Model predictive control of floating space robots for close proximity on-orbit operations. In Proceedings of the AIAA SCITECH 2023 Forum, National Harbor, MD, USA, 23–27 January 2023; p. 0157.

- Jawaid, M.; Elms, E.; Latif, Y.; Chin, T.J. Towards bridging the space domain gap for satellite pose estimation using event sensing. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 11866–11873.

- Zhang, Y.; Wang, J.; Chen, J.; Shi, D.; Chen, X. A Space Non-Cooperative Target Recognition Method for Multi-Satellite Cooperative Observation Systems. Remote Sens. 2024, 16, 3368.

- Hu, L.; Sun, D.; Duan, H.; Shu, A.; Zhou, S.; Pei, H. Non-cooperative spacecraft pose measurement with binocular camera and ToF camera collaboration. Appl. Sci. 2023, 13, 1420.

- Hu, J.; Li, S.; Xin, M. Real-time pose determination of ultra-close non-cooperative satellite based on time-of-flight camera. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 8239–8254.

- Pauly, L.; Rharbaoui, W.; Shneider, C.; Rathinam, A.; Gaudillière, V.; Aouada, D. A survey on deep learning-based monocular spacecraft pose estimation: Current state, limitations and prospects. Acta Astronaut. 2023, 212, 339–360.

- Gavilanez, G.; Moncayo, H. Vision-based relative position and attitude determination of non-cooperative spacecraft using a generative model architecture. Acta Astronaut. 2024, 225, 131–140.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Yuan, H.; Chen, H.; Wu, J.; Kang, G. Non-Cooperative Spacecraft Pose Estimation Based on Feature Point Distribution Selection Learning. Aerospace 2024, 11, 526.

- Pensado, E.A.; de Santos, L.M.G.; Jorge, H.G.; Sanjurjo-Rivo, M. Deep learning-based target pose estimation using lidar measurements in active debris removal operations. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 5658–5670.

- Liu, J.; Sun, W.; Yang, H.; Zeng, Z.; Liu, C.; Zheng, J.; Liu, X.; Rahmani, H.; Sebe, N.; Mian, A. Deep learning-based object pose estimation: A comprehensive survey. arXiv 2024, arXiv:2405.07801.

- Renaut, L.; Frei, H.; Nüchter, A. CNN-based Pose Estimation of a Non-Cooperative Spacecraft with Symmetries from LiDAR Point Clouds. IEEE Trans. Aerosp. Electron. Syst. 2024, 61, 5002–5016.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures; SPIE: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. In Robotics: Science and Systems; University of Washington: Seattle, WA, USA, 2009; Volume 2, p. 435.

- Zhang, J.; Yao, Y.; Deng, B. Fast and robust iterative closest point. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3450–3466.

- Rusu, R.B.; Blodow, N.; Beetz, M. Fast point feature histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

- Tombari, F.; Salti, S.; Di Stefano, L. Unique signatures of histograms for local surface description. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Proceedings, Part III 11. Springer: Berlin/Heidelberg, Germany, 2010; pp. 356–369.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Zeng, A.; Song, S.; Nießner, M.; Fisher, M.; Xiao, J.; Funkhouser, T. 3DMatch: Learning local geometric descriptors from RGB-D reconstructions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1802–1811.

- Gojcic, Z.; Zhou, C.; Wegner, J.D.; Wieser, A. The perfect match: 3D point cloud matching with smoothed densities. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5545–5554.

- Choy, C.; Park, J.; Koltun, V. Fully convolutional geometric features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8958–8966.

- Huang, S.; Gojcic, Z.; Usvyatsov, M.; Wieser, A.; Schindler, K. Predator: Registration of 3D point clouds with low overlap. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4267–4276.

- Qin, Z.; Yu, H.; Wang, C.; Guo, Y.; Peng, Y.; Xu, K. Geometric transformer for fast and robust point cloud registration. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11143–11152.

- Barath, D.; Matas, J. Graph-cut RANSAC. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 6733–6741.

- Quan, S.; Yang, J. Compatibility-guided sampling consensus for 3-D point cloud registration. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7380–7392.

- Yang, J.; Huang, Z.; Quan, S.; Qi, Z.; Zhang, Y. SAC-COT: Sample consensus by sampling compatibility triangles in graphs for 3-D point cloud registration. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5700115.

- Chen, Z.; Sun, K.; Yang, F.; Tao, W. SC2-PCR: A second order spatial compatibility for efficient and robust point cloud registration. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13221–13231.

- Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A globally optimal solution to 3D ICP point-set registration. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2241–2254.

- Bustos, A.P.; Chin, T.J. Guaranteed outlier removal for point cloud registration with correspondences. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2868–2882.

- Yang, H.; Shi, J.; Carlone, L. TEASER: Fast and certifiable point cloud registration. IEEE Trans. Robot. 2020, 37, 314–333.

- Zhou, Q. Fast global registration. In Computer Vision–ECCV 2016: 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 766–782.

- Li, P.; Wang, M.; Fu, J.; Zhang, B. Efficient pose and motion estimation of non-cooperative target based on LiDAR. Appl. Opt. 2022, 61, 7820–7829.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 652–660.

- Bechini, M.; Lavagna, M.; Lunghi, P. Dataset generation and validation for spacecraft pose estimation via monocular images processing. Acta Astronaut. 2023, 204, 358–369.

- NASA 3D Resources Website. 2024. Available online: https://nasa3d.arc.nasa.gov/models (accessed on 7 December 2024).

- Biber, P.; Straßer, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 3, pp. 2743–2748.

- Liu, W.; Wu, H.; Chirikjian, G.S. LSG-CPD: Coherent Point Drift with Local Surface Geometry for Point Cloud Registration. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15293–15302.

- Li, J.; Zhang, C.; Xu, Z.; Zhou, H.; Zhang, C. Iterative distance-aware similarity matrix convolution with mutual-supervised point elimination for efficient point cloud registration. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXIV 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 378–394.

- Yew, Z.J.; Lee, G.H. RPM-Net: Robust point matching using learned features. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11824–11833.

| Sequence | ICP RRE (°) | ICP RTE (cm) | NDT RRE (°) | NDT RTE (cm) | LSG-CPD RRE (°) | LSG-CPD RTE (cm) | RobustICP RRE (°) | RobustICP RTE (cm) | IDAM RRE (°) | IDAM RTE (cm) | RPM-Net RRE (°) | RPM-Net RTE (cm) | Ours RRE (°) | Ours RTE (cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Aura | 6.76 | 28.21 | 8.01 | 31.28 | 0.89 | 8.01 | 3.37 | 17.37 | 1.21 | 10.29 | 1.14 | 11.45 | 1.07 | 13.17 |
| Chandra | 7.87 | 26.09 | 7.17 | 31.77 | 3.00 | 12.78 | 3.23 | 7.96 | 1.96 | 15.63 | 1.99 | 10.26 | 2.02 | 4.10 |
| DeepSpace | 18.37 | 97.30 | 23.60 | 113.96 | 2.58 | 10.01 | 3.27 | 28.71 | 3.45 | 11.78 | 3.01 | 9.23 | 1.66 | 9.13 |
| ICESat-2 | 9.14 | 42.57 | 12.91 | 68.36 | 1.31 | 9.06 | 5.40 | 15.22 | 2.05 | 8.89 | 1.57 | 8.87 | 2.37 | 12.70 |
| Jason-1 | 3.27 | 13.62 | 3.59 | 12.24 | 2.62 | 8.78 | 8.99 | 5.98 | 2.78 | 12.45 | 2.26 | 7.25 | 4.08 | 7.55 |
| Juno | 6.30 | 30.16 | 2.68 | 32.86 | 1.00 | 14.32 | 2.55 | 9.37 | 2.51 | 13.69 | 1.75 | 10.23 | 0.52 | 10.40 |
| Messenger | 7.78 | 27.33 | 8.16 | 32.14 | 2.09 | 4.53 | 2.80 | 16.25 | 1.92 | 5.01 | 1.88 | 11.47 | 1.78 | 5.68 |
| Topex | 32.33 | 26.84 | 19.95 | 28.48 | 2.55 | 7.54 | 4.00 | 8.59 | 2.66 | 7.88 | 3.24 | 8.01 | 1.71 | 4.79 |
| Average | 11.48 | 36.52 | 10.76 | 43.89 | 2.01 | 9.38 | 4.20 | 13.68 | 2.32 | 10.70 | 2.10 | 9.59 | 1.90 | 8.44 |
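Each Average entry agrees with the unweighted mean of the eight per-sequence values. A quick check for the proposed method, using values copied from the table above, together with its relative reduction against the runner-up averages (LSG-CPD, 2.01° and 9.38 cm):

```python
# Per-sequence results of the proposed method, copied from the table (RRE in deg, RTE in cm).
ours = {
    "Aura": (1.07, 13.17), "Chandra": (2.02, 4.10), "DeepSpace": (1.66, 9.13),
    "ICESat-2": (2.37, 12.70), "Jason-1": (4.08, 7.55), "Juno": (0.52, 10.40),
    "Messenger": (1.78, 5.68), "Topex": (1.71, 4.79),
}
mean_rre = sum(r for r, _ in ours.values()) / len(ours)
mean_rte = sum(t for _, t in ours.values()) / len(ours)
print(f"mean RRE {mean_rre:.2f} deg, mean RTE {mean_rte:.2f} cm")  # 1.90, 8.44

# Relative reduction against the runner-up averages (LSG-CPD: 2.01 deg, 9.38 cm).
print(f"RRE -{1 - mean_rre / 2.01:.1%}, RTE -{1 - mean_rte / 9.38:.1%}")
```

The reductions come out at roughly 5% in RRE and 10% in RTE.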

| Noise level | ICP RRE (°) | ICP RTE (cm) | NDT RRE (°) | NDT RTE (cm) | LSG-CPD RRE (°) | LSG-CPD RTE (cm) | RobustICP RRE (°) | RobustICP RTE (cm) | IDAM RRE (°) | IDAM RTE (cm) | RPM-Net RRE (°) | RPM-Net RTE (cm) | Ours RRE (°) | Ours RTE (cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 cm | 11.89 | 48.92 | 10.07 | 28.51 | 1.44 | 6.31 | 3.04 | 10.61 | 2.20 | 9.58 | 1.58 | 9.22 | 1.76 | 8.02 |
| 2 cm | 11.48 | 36.52 | 10.76 | 43.89 | 2.01 | 9.38 | 4.20 | 13.68 | 2.32 | 10.70 | 2.10 | 9.59 | 1.90 | 8.44 |
| 3 cm | 9.21 | 39.37 | 8.55 | 37.45 | 2.76 | 13.02 | 4.99 | 15.88 | 3.14 | 12.23 | 3.00 | 11.05 | 2.48 | 9.54 |
| 4 cm | 9.86 | 34.17 | 8.41 | 39.12 | 3.26 | 13.85 | 5.83 | 16.47 | 5.98 | 14.69 | 4.97 | 12.89 | 1.85 | 8.93 |
| 5 cm | 11.02 | 44.86 | 9.29 | 44.51 | 5.07 | 18.46 | 7.82 | 18.60 | 7.23 | 17.85 | 6.27 | 15.61 | 3.14 | 12.24 |
| Average | 10.69 | 40.77 | 9.41 | 38.70 | 2.91 | 12.20 | 5.18 | 15.05 | 4.17 | 13.01 | 3.58 | 11.67 | 2.23 | 9.43 |

| Configuration | RRE mean (°) | RRE std (°) | RTE mean (cm) | RTE std (cm) |
|---|---|---|---|---|
| Coarse-only | 5.32 | 1.15 | 12.87 | 2.42 |
| Fine-only | 8.71 | 2.63 | 14.25 | 3.38 |
| Full (Ours) | 1.90 | 0.73 | 8.44 | 1.02 |

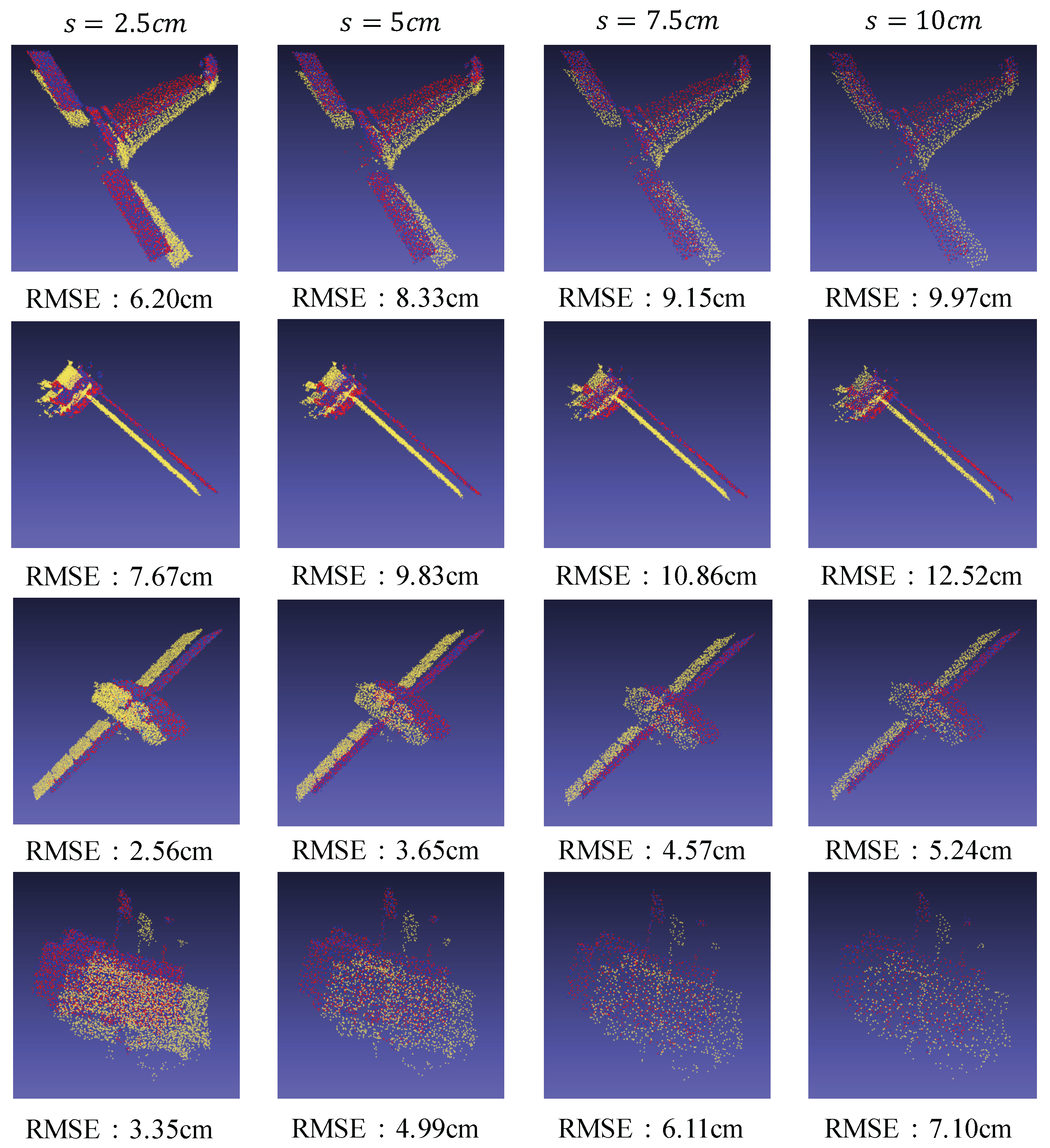

| Setting | RRE (°) | RTE (cm) | Time (ms) |
|---|---|---|---|
| s = 2.5 cm | 1.49 | 7.22 | 179 |
| s = 5 cm | 1.90 | 8.44 | 99 |
| s = 7.5 cm | 2.45 | 12.25 | 88 |
| s = 10 cm | 4.23 | 15.78 | 67 |
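In the runtime table, accuracy degrades and runtime drops as s grows. Assuming s is the voxel edge length of a downsampling step in preprocessing (an interpretation, not something this table states), the trend is consistent with coarser voxels leaving fewer points for feature computation and matching. A minimal voxel-grid downsampler that replaces the points in each voxel by their centroid illustrates the effect on point count; the function name and the random test cloud are hypothetical and do not reproduce the paper's timings.

```python
import numpy as np


def voxel_downsample(points, s):
    """Replace all points that fall in the same cubic voxel of edge s by their centroid."""
    points = np.asarray(points, dtype=float)
    keys = np.floor(points / s).astype(np.int64)            # integer voxel index per point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)                            # shape differs across NumPy versions
    sums = np.zeros((len(counts), 3))
    np.add.at(sums, inverse, points)                         # accumulate per-voxel sums
    return sums / counts[:, None]


rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(5000, 3))                # 1 m cube, units in metres
for s in (0.025, 0.05, 0.075, 0.10):
    print(f"s = {100 * s:.1f} cm -> {len(voxel_downsample(cloud, s))} points")
```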

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, M.; Xu, L. Robust Pose Estimation for Noncooperative Spacecraft Under Rapid Inter-Frame Motion: A Two-Stage Point Cloud Registration Approach. Remote Sens. 2025, 17, 1944. https://doi.org/10.3390/rs17111944

Zhao M, Xu L. Robust Pose Estimation for Noncooperative Spacecraft Under Rapid Inter-Frame Motion: A Two-Stage Point Cloud Registration Approach. Remote Sensing. 2025; 17(11):1944. https://doi.org/10.3390/rs17111944

Chicago/Turabian Style: Zhao, Mingyuan, and Long Xu. 2025. "Robust Pose Estimation for Noncooperative Spacecraft Under Rapid Inter-Frame Motion: A Two-Stage Point Cloud Registration Approach" Remote Sensing 17, no. 11: 1944. https://doi.org/10.3390/rs17111944

APA Style: Zhao, M., & Xu, L. (2025). Robust Pose Estimation for Noncooperative Spacecraft Under Rapid Inter-Frame Motion: A Two-Stage Point Cloud Registration Approach. Remote Sensing, 17(11), 1944. https://doi.org/10.3390/rs17111944