Abstract

The latest research indicates that large vision-language models (VLMs) have a wide range of applications in remote sensing. However, the vast amount of image data in this field makes it challenging to select the high-quality multimodal data needed to save computational resources and time. We therefore propose an adaptive fine-tuning algorithm for multimodal large models that filters data in two stages. First, the dataset is projected into a semantic vector space and automatically clustered with the MiniBatchKMeans algorithm, so that the data within each cluster are semantically similar. Next, for the data within each cluster, we calculate the translational difference between the original and perturbed data in the multimodal large model’s vector space and use this difference as a generalization metric. Based on this metric, we select data with high generalization potential for training. We apply this algorithm to train the InternLM-XComposer2-VL-7B model on two NVIDIA RTX 3090 GPUs, using one-third of the GeoChat multimodal remote sensing dataset. The results show that our algorithm outperforms the baseline data-selection methods. As validated by experiments, the model trained on the selected one-third of the data showed only a 1% reduction in performance across various remote sensing metrics compared with the model trained on the full dataset, while largely preserving general-purpose capabilities and reducing training time by 68.2%. Furthermore, the model achieved scores of 89.86 and 77.19 on the UCMerced and AID evaluation datasets, respectively, surpassing GeoChat by 5.43 and 5.16 points, with only a 0.91-point average decrease relative to GeoChat on the LRBEN evaluation dataset.

1. Introduction

The emergence of large language models (LLMs) has brought significant advancements to the field of artificial intelligence, demonstrating remarkable capabilities across various natural language processing tasks. For instance, models like ChatGPT [1] and GPT-4 [2] exhibit strong zero-shot and few-shot [3] learning abilities, which allow them to generalize well across many domains. However, when applied to specialized fields such as healthcare, law, and hydrology, these general-purpose models often perform worse, because limited training on domain-specific knowledge leaves them with a poor understanding of tasks in these areas.

To address this issue, researchers have begun exploring the specialized training and fine-tuning of LLMs for specific domains, and notable achievements have been made. For example, in the medical field [4], Google and DeepMind introduced Med-PaLM [5], a model designed for medical dialogue, which excels in tasks such as medical question answering, diagnostic advice, and patient education. Han et al. proposed MedAlpaca [6], a model fine-tuned on a large corpus of medical data based on Stanford Alpaca [7], aimed at serving medical question answering and consultation scenarios. Wang et al. developed BenTsao [8], which was fine-tuned using Chinese synthetic data generated from medical knowledge graphs and the literature, providing accurate Chinese medical consultation services. In the legal field, Zhou et al. introduced LaWGPT [9], which was developed through secondary pre-training and instruction fine-tuning on large-scale Chinese legal corpora, enabling robust legal question answering capabilities. In the field of hydrology, Ren et al. proposed WaterGPT [10], a model based on Qwen-7B-Chat [11] and Qwen2-7B-Chat [12], which successfully achieved knowledge-based question answering and intelligent tool invocation within the hydrology domain through extensive secondary pre-training and instruction fine-tuning on domain-specific data.

With the success of LLMs in various fields, researchers have gradually started to explore the development of domain-specific multimodal models. For instance, in the medical field, Wang et al. introduced XrayGLM [13] to address challenges in interpreting various medical images. Li et al. proposed LLaVA-Med [14], aiming to build a large language and vision model with GPT-4-level capabilities in the biomedical domain.

In the field of remote sensing, real-world tasks often require comprehensive, multi-faceted analysis, so practical applications typically rely on the collaboration of multiple tasks for accurate judgment. Despite significant advancements in deep learning [15,16] within the remote sensing field [17], most current research still focuses on single tasks and designs architectures for individual tasks [18], which limits the comprehensive processing of remote sensing images [19,20]. Multimodal large models, which can handle many tasks within a single framework, are therefore promising for the remote sensing domain.

In the field of remote sensing, significant progress has also been made by researchers. For example, Liu et al. introduced RemoteCLIP [21], the first vision-language foundation model specifically designed for remote sensing, aimed at learning robust visual features with rich semantics and generating aligned textual embeddings for various downstream tasks. Zhang et al. proposed a novel framework for the domain-specific pre-training of vision-language models, DVLM [22], and trained the GeoRSCLIP model for remote sensing. They also created a paired image-text dataset called RS5M for this purpose. Hu et al. released a high-quality remote sensing image caption dataset, RSICap [23], to promote the development of large vision-language models in the remote sensing domain and provided the RSIEval benchmark dataset for the comprehensive evaluation of these models’ performance. Kuckreja et al. introduced GeoChat [24], a multimodal model specifically designed for remote sensing, capable of handling various remote sensing images and performing visual question answering and scene classification tasks. They also proposed the RS multimodal instruction-following dataset, which includes 318 k multimodal instructions, and the geo-bench evaluation dataset for assessing the performance of multimodal models in remote sensing. Zhang et al. proposed EarthGPT [25], which seamlessly integrates multi-sensor image understanding and various remote sensing visual tasks within a single framework. EarthGPT can comprehend optical, synthetic aperture radar (SAR), and infrared images under natural language instructions and accomplish a range of tasks including remote sensing scene classification, image description, visual question answering, object description, visual localization, and object detection. Liu et al. introduced the Change-Agent platform [26], which integrates a multi-level change interpretation model (MCI) and a large language model (LLM) to provide comprehensive and interactive remote sensing change analysis, achieving state-of-the-art performance in change detection and description while offering a new pathway for intelligent remote sensing applications.

However, most current research trains directly on large multimodal datasets, which consumes substantial computational resources. Studies have shown that fine-tuning on a small amount of high-quality data can achieve comparable or even superior results. For instance, in the pure-text LLM domain, Chen et al. proposed the ALPAGASUS algorithm [27], which leverages a large language model (e.g., ChatGPT) to automatically identify and filter out low-quality instruction-tuning examples; Du et al. introduced MoDS [28], a data-selection strategy guided by the criteria of quality, diversity, and necessity; Li et al. developed Superfiltering [29], in which a smaller model pre-filters examples by their instruction-following difficulty before fine-tuning a larger model; Kung et al. presented Active Instruction Tuning [30], demonstrating that datasets with high prompt uncertainty yield stronger generalization; Yang et al. proposed a Self-Distillation method [31] to mitigate catastrophic forgetting during LLM fine-tuning; and Yu et al. introduced WaveCoder [32], which embeds examples into vector space and applies KCenterGreedy clustering to select a core subset. In the multimodal arena, Wei et al. showed that fine-tuning InstructionGPT-4 on just 6% of judiciously selected data outperforms the original MiniGPT-4 across diverse tasks [33]. For efficient adaptation in remote sensing, Chen et al. proposed RSPrompter [34], which employs a lightweight prompt generator to guide the Segment Anything Model in producing semantically aligned instance masks, and DynamicVis [35], which uses a dynamic region perception backbone coupled with multi-instance meta-embeddings to efficiently process ultra-high-resolution imagery with low latency and a minimal memory footprint. These works illustrate that specialized adaptation modules can substantially reduce fine-tuning cost while maintaining or improving performance across remote sensing tasks, but they do not address the selection of fine-tuning data.
Nevertheless, despite the clear benefits of strategic data selection, existing methods either target text-only LLMs or general multimodal tasks; none has been designed to filter and assemble high-quality instruction-tuning datasets tailored for remote sensing multimodal models. This gap prevents us from efficiently honing domain-specific capabilities without compromising the model’s overall generalization—motivating the need for a dedicated dataset-selection algorithm for remote sensing instruction fine-tuning.

To address this gap, we propose a novel adaptive fine-tuning algorithm for multimodal large models that automatically categorizes and filters remote sensing multimodal instruction datasets to identify high-quality training data within massive remote sensing datasets. The algorithm first projects the large-scale data into a semantic vector space and clusters it automatically with the MiniBatchKMeans algorithm. Within each cluster, it then perturbs the original instructions and calculates the translational difference between the original and perturbed data in the multimodal model’s vector space. This difference serves as a generalization performance metric that determines the quality of each entry. Finally, the entries are ranked by this metric, and those with the highest generalization performance are selected for training.

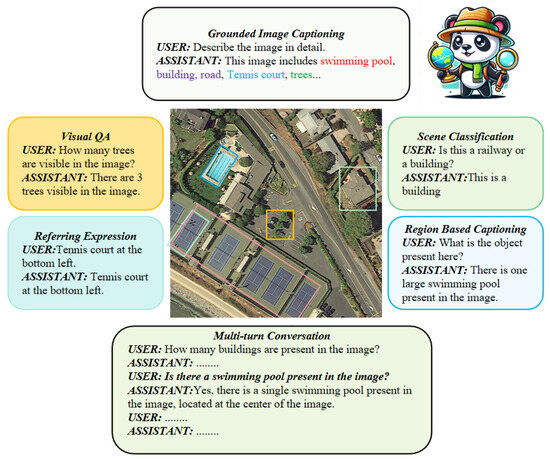

We used the RS multimodal instruction-following dataset proposed by GeoChat for training and adopted the GeoChat evaluation benchmark, together with MMBench_DEV_EN [36], MME [37], and SEEDBench_IMG [38], as evaluation datasets for the remote sensing and general domains, respectively. Comparing random selection, the WaveCoder algorithm, and our proposed algorithm on the GeoChat classification dataset, we found that our algorithm outperformed the other baseline methods, yielding the largest gain in domain capability while preserving generalization ability. Additionally, the one-third subset selected by our algorithm reduced training time by approximately two-thirds compared with training on the entire dataset, with only a 1% average decrease in remote sensing performance, while largely maintaining generalization capability. Figure 1 illustrates our multimodal model’s versatility on six key remote sensing tasks: a single high-resolution aerial image is shown in the center, surrounded by colored panels that pair user prompts with model responses for grounded image captioning, visual question answering, referring expression comprehension, scene classification, region-based captioning, and multi-turn conversation. Together, these examples show how the model fuses visual and textual cues to describe scenes, count and localize objects, classify content, and engage in interactive dialogue.

Figure 1.

Various tasks that our remote sensing multimodal large model can complete.

The main contributions of this paper are as follows:

- We propose a new quality metric for multimodal instruction fine-tuning data: the generalization performance metric.

- We introduce a novel algorithm that selects high-quality remote sensing multimodal fine-tuning datasets to achieve faster and more efficient training results.

- By training on small datasets, we compared the effects of baseline algorithms and our algorithm in both general and remote sensing domains, validating that our algorithm achieved favorable results in the remote sensing domain.

2. Dataset Creation

2.1. Training Data

The RS multimodal instruction-following dataset is designed for remote sensing image understanding. It is a curated corpus of 318,000 high-quality instruction–response pairs aligned with 120,000 unique 256 × 256 px remote sensing image tiles, built to support a unified multimodal instruction-following paradigm in the RS domain. It integrates tasks such as image captioning, visual question answering (VQA), object-attribute queries, spatial-relation reasoning, and multi-turn visual dialogue, aiming to enhance the model’s ability to handle complex reasoning, object attributes, and spatial relationships. The tiles were sourced from diverse satellite and aerial platforms, including Sentinel-2 MSI, Landsat-8 OLI, commercial sub-meter imagery, and Google Earth captures, covering a wide range of spatial resolutions (0.3 m to 10 m ground-sampling distance). The dataset generation pipeline leverages existing object-detection benchmarks to create initial image descriptions, then uses Vicuna-1.5 for LLM-driven conversation generation, and finally enriches the collection with visual question-answering and scene-classification examples drawn from dedicated RS datasets. Together, these tasks enable the comprehensive fine-tuning and evaluation of MLLMs for complex scene understanding in remote sensing. Hosted publicly on GeoX-Lab with full documentation and code, this dataset underpins state-of-the-art RS VLMs like GeoChat and RS-GPT4V, facilitating robust domain adaptation and zero-shot reasoning on unseen tasks.

2.2. Evaluation Datasets

Our evaluation datasets include two parts: the remote sensing evaluation dataset and the general multimodal evaluation dataset.

(1) Remote Sensing Evaluation Datasets:

LRBEN (the low-resolution benchmark of the RSVQA dataset, also referred to as RSVQA-LR): This benchmark pairs low-resolution remote sensing images with questions about land use and land cover, such as the presence of objects, comparisons between objects, and rural/urban classification. LRBEN is used to benchmark models’ performance in visual question answering on remote sensing images.

UC Merced Land Use Dataset: This dataset contains aerial imagery of various land use classes, such as agricultural, residential, and commercial areas. The images are high-resolution and cover 21 different classes, each with 100 images, making it suitable for scene classification tasks. It is widely used for evaluating remote sensing models’ ability to classify and understand different land use types.

AID (Aerial Image Dataset): AID is a large-scale dataset for aerial scene classification. It contains images of various scenes, such as industrial areas, residential areas, and transportation hubs, and is designed to support the development and benchmarking of algorithms for scene classification, image retrieval, and other remote sensing tasks. AID contains 10,000 images across 30 scene categories, with 220 to 420 images per category, providing a comprehensive benchmark for evaluating model performance.

(2) General Multimodal Evaluation Datasets:

MMBench_DEV_EN: MMBench is a benchmark suite for evaluating the multimodal understanding capabilities of large vision-language models (LVLMs). It contains approximately 2974 multiple-choice questions covering 20 capability dimensions. Each question has a single correct answer, which makes the evaluation results reliable and reproducible. MMBench uses a strategy called CircularEval to test models more robustly: each question is presented several times with its answer options circularly shifted, and the model is credited only if it answers correctly every time.

MME (Multimodal Evaluation): MME is a comprehensive benchmark for multimodal large language models that aims to provide a systematic, holistic evaluation. It consists of 14 subtasks that test the models’ perception and cognition abilities, and the original study evaluated 30 recent multimodal large language models on it. All MME annotations are manually designed to avoid the data leakage that might arise from using public datasets.

SEEDBench_IMG: SEED-Bench is a benchmark of multiple-choice questions with human-annotated answers for evaluating the comprehension abilities of multimodal large language models across multiple evaluation dimensions; SEEDBench_IMG is its image-only subset. It provides a comprehensive benchmark to assist researchers in developing and optimizing multimodal models.

3. Methods

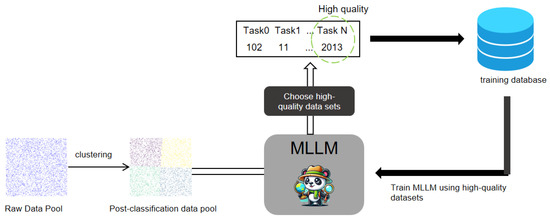

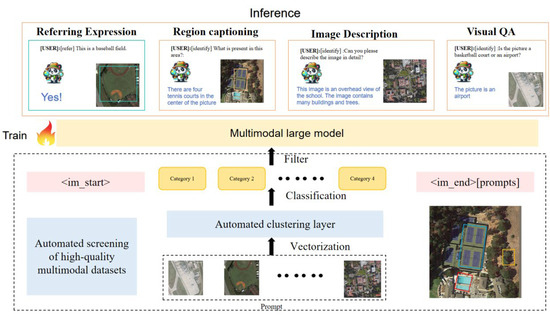

3.1. Adaptive Self-Tuning for Multimodal Models

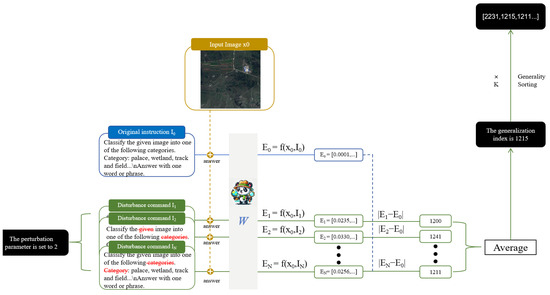

In real-world scenarios, the volume of instruction fine-tuning data is often large and continually expanding, which increases training costs. As the data volume grows, conflicts between data entries also become more pronounced and often degrade training outcomes. To address this issue, we propose an algorithm that enables large models to autonomously select data and thereby better adapt to domain-specific tasks. The core idea is to let the model identify the most generalizable task instructions on its own, so that strong performance is achieved with a reduced amount of training data. The flowchart of this process is shown in Figure 2, and the complete training and inference process of our algorithm is illustrated in Figure 3.

Figure 2.

Algorithm flow of adaptive self-tuning for multimodal models.

Figure 3.

Complete process of the adaptive self-tuning algorithm for multimodal models.

3.2. Selection of Generalizable Tasks

Selecting task instruction datasets with stronger generalization is an active research topic. For instance, Siddhant and Lipton’s work on uncertainty-based active learning [39] provides significant insights.

Inspired by these studies, we propose a new generalization measure: the vector space translation difference. Because large models predict the next word from its context, changes in the context vector affect the subsequently generated content. We therefore estimated the uncertainty of an instruction by randomly deleting words from it as a perturbation and measuring how far the model’s representation moves in vector space. Following the observation that data with higher uncertainty tend to yield better generalization after training [30], we treat this shift as a proxy for an entry’s generalization potential. Specifically, the vector space translation difference is the distance between the model’s projection vectors for the complete and the perturbed task instructions; it quantifies the model’s sensitivity to uncertain instructions and thus serves as an estimate of the instruction’s generalization value.
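The measure can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ implementation: `toy_embed` is a hypothetical stand-in that hashes the tokens of the concatenated image identifier, instruction, and answer into a bag-of-words vector, whereas the method described here uses the multimodal large model’s own projection of the image, instruction, and answer. The function names and the seed handling are likewise illustrative assumptions.

```python
import hashlib
import random

import numpy as np


def toy_embed(image_id: str, instruction: str, answer: str, dim: int = 64) -> np.ndarray:
    """Hypothetical stand-in for the multimodal model's projection.

    Hashes each whitespace token of the concatenated input into a fixed-size
    bag-of-words count vector. It ignores real image content entirely.
    """
    vec = np.zeros(dim)
    for token in f"{image_id} {instruction} {answer}".split():
        h = int(hashlib.md5(token.encode()).hexdigest(), 16)
        vec[h % dim] += 1.0
    return vec


def perturb(instruction: str, n: int, rng: random.Random) -> str:
    """Delete n randomly chosen words (all of them if n exceeds the word count)."""
    words = instruction.split()
    n = min(n, len(words))
    drop = set(rng.sample(range(len(words)), n))
    return " ".join(w for i, w in enumerate(words) if i not in drop)


def generalization_measure(image_id, instruction, answer, n, num_perturbed,
                           embed=toy_embed, seed=0):
    """Average Euclidean distance between the original projection and
    num_perturbed projections whose instructions each lost n random words."""
    rng = random.Random(seed)
    e0 = embed(image_id, instruction, answer)
    dists = []
    for _ in range(num_perturbed):
        e_m = embed(image_id, perturb(instruction, n, rng), answer)
        dists.append(np.linalg.norm(e_m - e0))
    return float(np.mean(dists))
```

For example, `generalization_measure("tile_001", "What type of scene is shown in this image", "Dense residential", n=2, num_perturbed=5)` averages the shift over five random two-word deletions. With the toy embedding, a larger value only means the bag-of-words vector moved further; the signal the method relies on comes from the model’s own representation.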

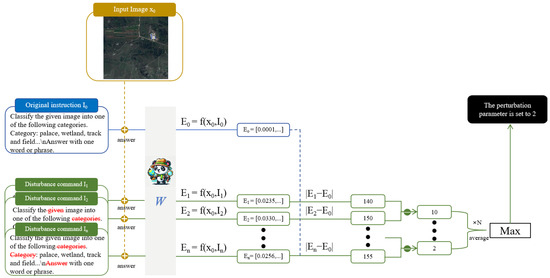

The detailed flowchart is shown in Figure 4, and the specific steps were as follows:

Figure 4.

Process of calculating the generalization index in adaptive self-tuning for multimodal models.

1. For the massive data pool X, we used the bge-large-en-v1.5 [40] model to project each data entry into a vector space and then clustered the vectors automatically with the MiniBatchKMeans algorithm. Specifically, we ran MiniBatchKMeans for a range of cluster numbers, recorded the SSE (sum of squared errors) and the silhouette coefficient for each cluster number, and selected the number of clusters with the highest silhouette coefficient. The data were thereby divided into p clusters. The specific steps were as follows:

(1) Data projection onto vector space:

$$V_i = \mathrm{BGE}(X_i)$$

Here, $X_i$ represents the ith data item in the data pool and $V_i$ represents its vector representation produced by the bge-large-en-v1.5 model, denoted $\mathrm{BGE}(\cdot)$.

(2) Calculation of the sum of squared errors (SSE):

$$\mathrm{SSE} = \sum_{j=1}^{p} \sum_{V_i \in C_j} \lVert V_i - \mu_j \rVert^2$$

Here, p represents the number of clusters, $C_j$ denotes the jth cluster, $\mu_j$ is the centroid of the jth cluster, and $V_i$ is a vector belonging to $C_j$. The SSE is the sum of the squared distances between data points and their cluster centroids and serves as one indicator of clustering quality: a smaller SSE indicates that the points within each cluster are more tightly grouped. Plotting the SSE against the number of clusters p gives a preliminary estimate of a reasonable range for the number of clusters.

(3) Calculation of the silhouette coefficient:

$$s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}$$

Here, a(i) is the average distance from data point i to all other points in the same cluster and b(i) is the average distance from data point i to the points of the nearest other cluster. The silhouette coefficient C for the entire dataset is the average of the silhouette scores s(i) over all data points:

$$C = \frac{1}{n} \sum_{i=1}^{n} s(i)$$

Here, n represents the total number of data points.

(4) Selection of the optimal number of clusters:

$$p = \arg\max_{k} C(k)$$

Here, C(k) is the silhouette coefficient obtained with k clusters, and p is the number of clusters that maximizes C(k).

2. For each cluster and for the K-th original instruction $I_0$ in it, apply a perturbation parameter n, i.e., the number of words randomly deleted from the instruction. Repeating the random deletion N times yields N perturbed instructions, denoted $I_1$ to $I_N$.

3. Concatenate the input image $X_0$ and its answer with each of $I_0$ to $I_N$, and project each concatenation into the vector space of the multimodal large model:

$$E_m = \mathrm{MLLM}(X_0, I_m, \text{answer}), \quad m = 0, 1, \ldots, N$$

where $\mathrm{MLLM}(\cdot)$ denotes the projection of the concatenated input into the model’s vector space.

4. For the instructions $I_0$ to $I_N$ and their corresponding image and answer, calculate the Euclidean distance between the original projection vector $E_0$ and each perturbed vector $E_1$ to $E_N$:

$$d_m = \lVert E_m - E_0 \rVert_2, \quad m = 1, \ldots, N$$

5. Average these distances to obtain the generalization measure of the K-th data entry under perturbation parameter n:

$$G_{n,K} = \frac{1}{N} \sum_{m=1}^{N} d_m$$

6. Finally, sort the instructions within each cluster by their generalization measure; instructions with higher measures receive higher training priority.

3.3. Selection of Optimal Disturbance Parameters

To select the optimal disturbance parameter n, we observed the relative embedding differences when adding different disturbance parameters to determine the best value for n (The process is shown in Figure 5).

Figure 5.

Process of selecting the best disturbance parameter n in the adaptive self-tuning algorithm for multimodal models.

The specific steps were as follows:

- First, for the K-th original instruction $I_0$, we sequentially applied disturbance parameters from 1 to n (i.e., randomly deleted 1 to n words), producing the disturbed instructions $I_1$ to $I_n$.

- Then, we concatenated the input image $X_0$ and the answer with each of $I_0$ to $I_n$ and projected them into the vector space of the multimodal large model to obtain the vectors $E_0$ to $E_n$:

$$E_j = \mathrm{MLLM}(X_0, I_j, \text{answer}), \quad j = 0, 1, \ldots, n$$

where $\mathrm{MLLM}(\cdot)$ denotes the projection of the concatenated input into the model’s vector space.

- For the vectors $E_0$ to $E_n$, we calculated the Euclidean distance between each perturbed vector $E_1$ to $E_n$ and the original vector $E_0$:

$$d_j = \lVert E_j - E_0 \rVert_2, \quad j = 1, \ldots, n$$

- Then, we calculated the average embedding difference $S_{n,K}$ over the K entries under disturbance parameter n, where $d_n^{(k)}$ is the distance obtained for the k-th entry:

$$S_{n,K} = \frac{1}{K} \sum_{k=1}^{K} d_n^{(k)}$$

We computed the relative embedding differences $D_{n,K}$ for disturbance parameters 1 to n in turn and selected the parameter with the maximum relative embedding difference as the optimal disturbance parameter. Here, K is the number of entries in the p-th data pool and n is the disturbance parameter.

3.4. Comparison Algorithms

Algorithm 1: Random Sampling

The random sampling method selects a random subset of the dataset for training. Because every entry has the same chance of being chosen, the subset preserves the distribution of the full dataset in expectation, which makes random sampling a standard reference point. We therefore used the random sampling algorithm as our baseline for comparison.

Algorithm 2: KCenterGreedy Clustering Algorithm

WaveCoder selects a core dataset with the KCenterGreedy algorithm. Following this approach, we used the bge-visualized-m3 [41] model to project each image–text pair into vector space and then applied the KCenterGreedy algorithm to select a representative core subset of the dataset.
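The KCenterGreedy selection can be sketched as the textbook greedy k-center procedure. This is a minimal NumPy sketch, not WaveCoder’s code: the starting index, the use of Euclidean distance, and the function name are assumptions made for illustration, and in the comparison above the input vectors would be the bge-visualized-m3 embeddings of each image–text pair.

```python
import numpy as np


def k_center_greedy(vectors, budget, first=0):
    """Greedy k-center core-set selection with Euclidean distance.

    Starts from index `first`, then repeatedly adds the point that is
    farthest from its nearest already-selected point.
    """
    vectors = np.asarray(vectors, dtype=float)
    budget = min(budget, len(vectors))
    if budget <= 0:
        return []
    selected = [first]
    # Distance from every point to its nearest selected center so far.
    min_dist = np.linalg.norm(vectors - vectors[first], axis=1)
    while len(selected) < budget:
        nxt = int(np.argmax(min_dist))
        if min_dist[nxt] == 0.0:  # all remaining points duplicate selected ones
            break
        selected.append(nxt)
        new_dist = np.linalg.norm(vectors - vectors[nxt], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return selected
```

Because each new point maximizes its distance to the current selection, KCenterGreedy favors coverage of the embedding space and uses no model feedback, which is the main difference from the perturbation-based measure.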

4. Results

4.1. Training Details

We performed LoRA [42] fine-tuning on the InternLM-XComposer2-VL-7B [43] model using the RS multimodal instruction-following dataset. The fine-tuning parameters are shown in Table 1.

Table 1.

Training parameters.

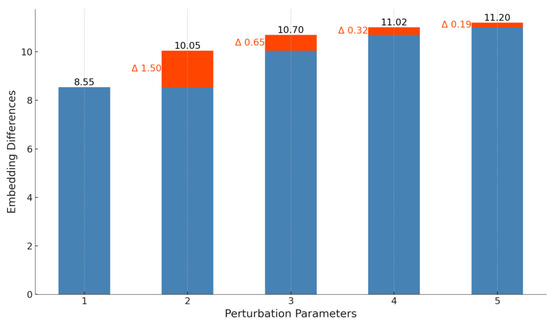

4.2. Experiment on Disturbance Parameter Settings

To validate the effectiveness of our algorithm, we used a subset of clustered data focused on classification tasks, containing 32 k entries, as the training set. We first evaluated the optimal disturbance parameter using our algorithm, and the relative vector embedding differences are shown in Figure 6.

Figure 6.

Relative vector embedding difference under different disturbance parameters.

As shown in the figure, the relative embedding difference was largest at a disturbance parameter of 2; for larger values it gradually converged, with the change shrinking and approaching 0 beyond n = 4.

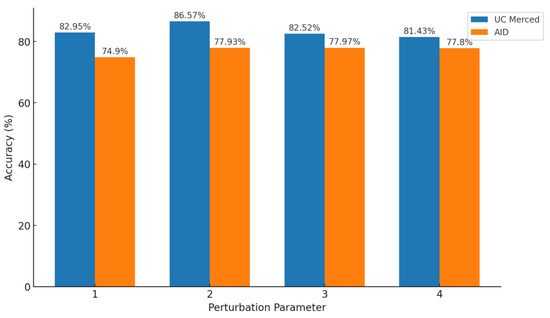

Therefore, we set the optimal disturbance parameter to 2. To further verify this, we used our algorithm to rank the generalizability of the training set with disturbance parameters from 1 to 4. We selected the top 5000 entries with the highest generalizability for training and evaluated the performance on the UC Merced and AID datasets. The results are shown in Figure 7.

Figure 7.

Model training effect under different disturbance parameters.

As Figure 7 shows, the model achieved the best training performance with a disturbance parameter of 2, reaching 86.57% accuracy on the UC Merced dataset, 4 percentage points higher than with a parameter of 1 or 3. On the AID dataset, it attained 77.93%, only 0.04 percentage points below the peak value at n = 3. We also conducted experiments on two other clusters of 25 k and 90 k entries, using our algorithm to select 5 k samples from each for training. The evaluation results in Table 2 and Table 3 confirm that with n = 2 the models achieved the best performance on RSVQA-LR, AID, and UC Merced. Overall, the best training performance was obtained with a disturbance parameter of 2.

Table 2.

Model performance on RSVQA-LR when trained on 5 K samples selected by our algorithm from a 25 K entry cluster under varying perturbation parameter n.

Table 3.

Model performance on AID and UC Merced when trained on 5 K samples selected by our algorithm from a 90 K entry cluster under varying perturbation parameter n.

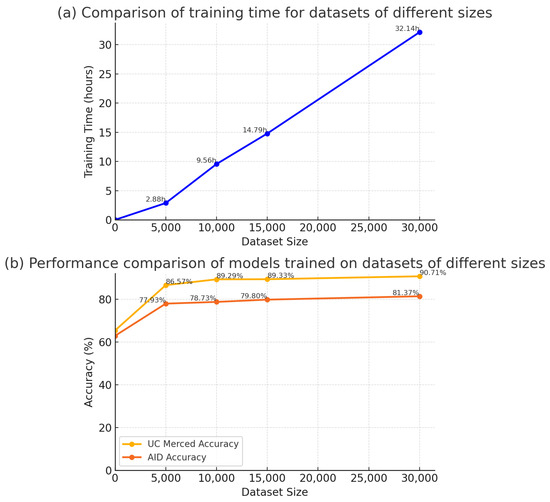

4.3. Optimal Training Data Ratio

To determine the optimal training data ratio, we conducted a detailed comparison of training durations and model performance for different data volumes (5000, 10,000, 15,000, and 32,000 samples). The experimental results are shown in Figure 8.

Figure 8.

Comparison of training time and model performance under different sizes of datasets.

As illustrated in Figure 8, increasing the training data volume improved model performance on both the AID and UC Merced datasets. With 5000 samples, the model scored 77.93 on AID and 86.57 on UC Merced; with 10,000 samples, these scores rose to 78.73 and 89.29, respectively. With 15,000 samples, the scores were 79.80 and 89.33, and with 32,000 samples, 81.37 and 90.71. More data therefore generally improve model performance, but the gain diminishes as the data volume grows.

Training time increased substantially with the data volume. For instance, training with 5000 samples took 2.88 h, while training with 32,000 samples took 32.14 h, an additional 29.26 h.

Comparing model performance and training time across data volumes, we found that with 10,000 samples the model’s performance was close to its peak, while the training time was much lower than with 15,000 or 32,000 samples. Specifically, the average performance difference between 10,000 and 32,000 samples was 2.03 points, while the training time was reduced by 22.18 h.

In summary, with 10,000 samples the model achieved high performance while substantially reducing training time and computational resources, so 10,000 samples represent the best balance between performance and computational cost in this setting. This indicates that using approximately one-third of the dataset achieves comparable training results at a substantially lower computational cost.
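The trade-off can be checked with a short calculation over the scores reported in this section. The dictionary below only restates the AID and UC Merced results given above; the training time for 10,000 samples is not reported directly and is derived here from the stated 32.14 h total and 22.18 h saving.

```python
# AID and UC Merced scores reported in Section 4.3, keyed by training-set size.
reported = {
    5_000: (77.93, 86.57),
    10_000: (78.73, 89.29),
    15_000: (79.80, 89.33),
    32_000: (81.37, 90.71),
}

# Average the two benchmarks per size and compare with the 32,000-sample run.
mean_score = {size: sum(pair) / len(pair) for size, pair in reported.items()}
full = mean_score[32_000]
gap_to_full = {size: full - score for size, score in mean_score.items()}

# Training time at 10,000 samples implied by the reported figures (hours).
hours_32k = 32.14
hours_saved_10k = 22.18
hours_10k = hours_32k - hours_saved_10k

for size in sorted(reported):
    print(f"{size:>6} samples: mean {mean_score[size]:.2f}, "
          f"gap to 32k {gap_to_full[size]:.2f}")
print(f"implied training time at 10,000 samples: {hours_10k:.2f} h")
```

The gaps shrink as the subset grows while training time rises steeply, which is the diminishing-returns pattern the choice of 10,000 samples rests on.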

4.4. Comparison of Algorithm Performance

To further validate the effectiveness of our algorithm, we compared random sampling, the KCenterGreedy clustering algorithm, and our algorithm. We selected 5000 data entries for training in each case and compared the model’s performance on the UC Merced and AID datasets. The results are shown in Table 4 (In the table below, we use red text to indicate the extent of decreases and green text to indicate the extent of increases).

Table 4.

Comparison of the training effects of different data-selection algorithms with 5000 training samples.

As shown in the table, compared with the baseline algorithm (random sampling), our algorithm improved performance by 0.50 on the UC Merced dataset and 0.67 on the AID dataset, an average improvement of 0.58. In contrast, the KCenterGreedy clustering algorithm improved by 0.64 on UC Merced but decreased by 3.90 on AID, an average decrease of 1.63 relative to the baseline. Overall, our algorithm achieved the best training performance.

To further observe the improvement of our algorithm over the baseline algorithm, we tested the training performance on a dataset of 10,000 entries and on the entire classification dataset. The results are shown in Table 5.

Table 5.

Comparison of the training effects of different data-selection algorithms at different data scales.

As shown in the table, when the dataset size was expanded to 10,000 entries, the advantage of our algorithm grew: compared with the baseline, it improved by 0.63 on AID and by 1.77 on UC Merced, an average improvement of 1.20, nearly double the 0.58 obtained with 5000 entries. This suggests that the improvement brought by our algorithm increases with the dataset size. Moreover, compared with training on the entire 32 k dataset, our algorithm using only 10 k entries scored just 1.42 points lower on UC Merced and 2.64 points lower on AID, an average decrease of 2.03. This result shows that our algorithm can closely approximate the performance of full-dataset training with one-third of the data.

Furthermore, we compared the performance of models trained with our algorithm and the baseline algorithm in general domains. The results are shown in Table 6.

Table 6.

Comparison of the general-domain performance of different data-selection algorithms at different data scales.

As shown in the table, our algorithm also retained the best general domain capabilities, demonstrating superior performance over the random sampling method on the MMBench_DEV_en, SEEDBench, and MME datasets, achieving scores of 84.38, 75.45, and 2276.30, respectively. The performance on MMBench_DEV_en and SEEDBench exceeded that of the original model, with improvements of 0.41 and 33.60, respectively. In contrast, while direct training on the 32 k dataset showed an improvement on MMBench_DEV_en, it slightly declined on SEEDBench. Overall, our method significantly enhanced performance metrics in the remote sensing domain while maintaining the model’s general capabilities, demonstrating its effectiveness and superiority.

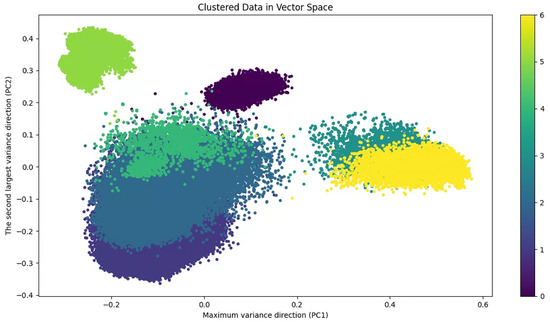

4.5. Final Performance of Our Algorithm

Using our algorithm for automatic clustering, we divided the RS multimodal instruction-following dataset into seven categories, as shown in the vector space visualization in Figure 9.

Figure 9.

RS dataset clustering in vector space.
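The clustering step can be illustrated with a minimal sketch of mini-batch k-means, the technique behind MiniBatchKMeans. With scikit-learn installed, the equivalent library call would be `MiniBatchKMeans(n_clusters=7).fit_predict(X)` from `sklearn.cluster`; to keep the example dependency-free, the version below is a from-scratch standard-library implementation using k-means++ seeding and a per-center learning rate of 1/(points assigned so far). It is not the authors' code: the synthetic vectors are placeholders for the semantic embeddings, and how the number of clusters is chosen is not modeled here (k is simply passed in).

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(centers, x):
    """Index of the center closest to x."""
    return min(range(len(centers)), key=lambda j: sq_dist(centers[j], x))

def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: pick each new center with probability proportional to D(x)^2."""
    centers = [list(rng.choice(points))]
    while len(centers) < k:
        d2 = [min(sq_dist(c, x) for c in centers) for x in points]
        total = sum(d2)
        if total == 0:  # all points coincide with existing centers
            centers.append(list(rng.choice(points)))
            continue
        r = rng.uniform(0.0, total)
        acc = 0.0
        for x, w in zip(points, d2):
            acc += w
            if w > 0 and acc >= r:
                centers.append(list(x))
                break
    return centers

def minibatch_kmeans(points, k, batch_size=32, n_iter=50, seed=0):
    """Mini-batch k-means: move each center toward sampled points with rate 1/count."""
    rng = random.Random(seed)
    centers = kmeans_pp_init(points, k, rng)
    counts = [0] * k
    for _ in range(n_iter):
        batch = rng.sample(points, min(batch_size, len(points)))
        # Assign the whole batch first, then update the centers.
        assigned = [(x, nearest(centers, x)) for x in batch]
        for x, j in assigned:
            counts[j] += 1
            eta = 1.0 / counts[j]
            centers[j] = [(1 - eta) * c + eta * xi for c, xi in zip(centers[j], x)]
    labels = [nearest(centers, x) for x in points]
    return centers, labels

# Usage on synthetic vectors: three well-separated groups of 20 "embeddings" each.
data_rng = random.Random(42)
means = [(0.0, 0.0, 0.0, 0.0), (10.0, 0.0, 0.0, 0.0), (0.0, 10.0, 0.0, 0.0)]
points = [[m + data_rng.gauss(0.0, 0.05) for m in mean] for mean in means for _ in range(20)]
centers, labels = minibatch_kmeans(points, k=3)
print(labels)
```

Each update touches only a small batch, which is what makes the mini-batch variant practical for a corpus of several hundred thousand instructions.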

We then selected 15,000 data entries from each category, totaling 105,000 entries for training. The model was trained for three epochs, and the results are shown in Table 7 and Table 8.
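The per-category quota can be sketched as ranking the samples inside each cluster by their generalization score and keeping the top entries. In the sketch below, `select_per_cluster` is a hypothetical helper, and keeping every sample of a cluster that is smaller than the quota is an assumption, since that case is not described here.

```python
from collections import defaultdict

def select_per_cluster(scores, labels, per_cluster):
    """Keep the `per_cluster` highest-scoring sample indices from every cluster."""
    by_cluster = defaultdict(list)
    for idx, (score, cluster) in enumerate(zip(scores, labels)):
        by_cluster[cluster].append((score, idx))
    selected = []
    for cluster in sorted(by_cluster):
        ranked = sorted(by_cluster[cluster], reverse=True)  # highest score first
        selected.extend(idx for _, idx in ranked[:per_cluster])
    return selected

# Toy example: two clusters, keep the top 2 of each.
scores = [0.9, 0.1, 0.5, 0.7, 0.3, 0.8]
labels = [0, 0, 0, 1, 1, 1]
print(select_per_cluster(scores, labels, per_cluster=2))  # [0, 2, 5, 3]

# With the quota used in this section: 7 clusters x 15,000 entries.
print(7 * 15_000)  # 105000
```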

Table 7.

Comparing the evaluation results of different models on AID and UC Merced datasets.

Table 8.

Comparing the evaluation results of different models on the LRBEN dataset.

As shown in Table 7 and Table 8 (our trained model’s results are in bold), the model trained with only 105 k entries achieved 77.19 on the AID dataset and 89.86 on the UC Merced dataset, which are 5.16 and 5.43 points higher than GeoChat, respectively. On the LRBEN dataset, it achieved an average of 90.90, only 0.91 points lower than GeoChat. Comparing the original models on AID, UC Merced, and LRBEN, our original model InternLM-XComposer2-VL-7B outperformed GeoChat’s original model LLaVA-1.5 by an average of 4.63 on AID and UC Merced; after training, our model outperformed GeoChat by an average of 5.3 on these datasets. On the LRBEN dataset, InternLM-XComposer2-VL-7B scored 1.72 points lower than LLaVA-1.5, whereas our final trained model scored only 0.91 points lower than GeoChat.
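The comparisons above can be checked with simple arithmetic on the reported numbers. The GeoChat scores printed below are derived by subtracting the stated gains from our scores; they are implied values, not figures read from the tables.

```python
# Scores and gains over GeoChat, as reported in the text.
ours = {"AID": 77.19, "UC Merced": 89.86}
gain_over_geochat = {"AID": 5.16, "UC Merced": 5.43}

# GeoChat scores implied by those two numbers.
implied_geochat = {name: round(ours[name] - gain_over_geochat[name], 2) for name in ours}
avg_gain = sum(gain_over_geochat.values()) / len(gain_over_geochat)

# LRBEN gap to the LLaVA-1.5 side, before (original models) and after training.
gap_before, gap_after = 1.72, 0.91

print(implied_geochat)                   # {'AID': 72.03, 'UC Merced': 84.43}
print(round(avg_gain, 1))                # 5.3
print(round(gap_before - gap_after, 2))  # 0.81
```

On AID and UC Merced the average advantage grows from 4.63 to about 5.3 after training, and on LRBEN the deficit shrinks by 0.81 points.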

These results indicate that the performance of the original model has a direct positive impact on the final training performance. However, the key finding is that by selecting high-quality, generalizable datasets, our algorithm can achieve results comparable to those obtained from training on the full dataset, using only one-third of the data. This demonstrates the effectiveness and efficiency of our method in enhancing model performance.

4.6. Ablation Study

4.6.1. Rationale for the Generalization Measure

We treat the embedding shift after word deletion as a generalization metric because the instruction fine-tuning stage primarily serves to activate the model’s capabilities. When words are randomly deleted from an instruction, the greater the change in the model’s understanding, the more critical the lost information. By performing multiple random deletions and averaging the resulting shifts, this approach more reliably identifies which samples the model considers to contain the most key information and, thus, which will yield the greatest training benefit. Table 9 lists the instructions with the highest and lowest generalization measures within a cluster for a classification task. We then used GPT-O3 to rate each instruction’s difficulty and found that higher-generalization instructions tend to be more challenging. From that cluster, we extracted the 100 samples with the highest generalization measures and the 100 with the lowest and tested them using the original InternLM-XComposer2-VL-7B model. As Table 10 demonstrates, samples with higher generalization measures produce higher model accuracy, and training on more difficult instructions delivers greater performance improvements.
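The measure described above can be sketched as follows: embed the instruction, embed several randomly word-deleted copies, and average the distances. This is a sketch under stated assumptions, not the authors' implementation. The real method uses the multimodal model’s own vector space; here `toy_embed` is a hypothetical stand-in (a hashed, normalized bag of words), the "translational difference" is assumed to be the Euclidean distance, and the deletion ratio of 15% and the 8 trials are illustrative choices.

```python
import hashlib
import math
import random

DIM = 64  # hypothetical embedding size of the toy encoder

def toy_embed(text):
    """Hypothetical stand-in for the VLM embedding: a hashed, L2-normalized bag of words."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def delete_random_words(text, ratio, rng):
    """Drop max(1, ratio * len(words)) randomly chosen words."""
    words = text.split()
    if not words:
        return text
    n_drop = min(len(words), max(1, int(len(words) * ratio)))
    drop = set(rng.sample(range(len(words)), n_drop))
    return " ".join(w for i, w in enumerate(words) if i not in drop)

def generalization_score(text, embed=toy_embed, n_trials=8, ratio=0.15, seed=0):
    """Average embedding shift (assumed Euclidean) over several random deletions."""
    rng = random.Random(seed)
    base = embed(text)
    shifts = [math.dist(base, embed(delete_random_words(text, ratio, rng)))
              for _ in range(n_trials)]
    return sum(shifts) / len(shifts)

varied = "Classify the land use shown in this aerial image into one of the given categories"
redundant = "image image image image image image image image"
print(generalization_score(varied))
print(generalization_score(redundant))  # 0.0: deleting a repeated word leaves the direction unchanged
```

The redundant instruction scores exactly zero because no single deletion removes information the toy encoder can see, which mirrors the intuition that deletions from low-information instructions barely move the embedding.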

Table 9.

Instructions with highest and lowest generalization measures in a classification cluster.

Table 10.

InternLM-XComposer2-VL-7B accuracy on high- vs. low-generalization instruction samples.

4.6.2. Comparison of Different Perturbation Methods

We carried out further experiments to compare how different perturbation strategies affect our algorithm. Specifically, in place of word deletion we applied random swaps of two words and direct Gaussian-noise injection (mean = 0, standard deviation = 0.001) into the embedding vectors. We then trained on 5 K randomly sampled examples from a cluster containing 32 K items. The resulting model performances are presented in Table 11. As shown, deleting words at random yielded the best performance, whereas both swapping words and adding noise to the embeddings performed substantially worse. We attribute this to the fact that swapping words rarely removes truly critical information and that small Gaussian perturbations in the embedding space do not induce sufficiently large changes. In contrast, word deletion provides a simple and effective way to alter the model’s interpretation of the instruction.
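The three perturbation strategies compared here can be sketched as small functions. Only the noise parameters (mean 0, standard deviation 0.001) come from the text; the function names and the choice of deleting a single word are illustrative assumptions.

```python
import random

def perturb_delete(words, rng, n=1):
    """Word deletion: drop n randomly chosen words."""
    drop = set(rng.sample(range(len(words)), min(n, len(words))))
    return [w for i, w in enumerate(words) if i not in drop]

def perturb_swap(words, rng):
    """Two-word swap: exchange the positions of two randomly chosen words."""
    out = list(words)
    if len(out) >= 2:
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out

def perturb_gaussian(embedding, rng, std=0.001):
    """Gaussian noise (mean 0, standard deviation `std`) added to every embedding coordinate."""
    return [x + rng.gauss(0.0, std) for x in embedding]

rng = random.Random(0)
words = "count the storage tanks visible in the image".split()
print(perturb_delete(words, rng))  # one word removed
print(perturb_swap(words, rng))    # same words, two positions exchanged
print(perturb_gaussian([0.5, -0.2, 0.1], rng))
```

The sketch makes the authors' explanation concrete: a swap keeps every token, so any order-insensitive part of the representation is untouched, and noise with a standard deviation of 0.001 moves each coordinate only by a comparably tiny amount, whereas a deletion actually removes content.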

Table 11.

Model performance comparison under word deletion, word swap, and Gaussian-noise perturbations.

4.6.3. Impact of the Clustering Module

To evaluate how the clustering step affected our selection algorithm, we designated the 32 K example cluster as Cluster 0 and the 25 K example cluster as Cluster 1, and applied our algorithm to each cluster separately to sample 5 K examples for training. The results are reported in Table 12 and Table 13. Data drawn from Cluster 0 yielded a large performance gain on AID and UC Merced (from 64.13% to 82.25%) but a slight drop on RSVQA-LR (64.14% → 61.34%). Conversely, data from Cluster 1 boosted RSVQA-LR markedly (64.14% → 78.57%) while causing the AID and UC Merced scores to fall (64.13% → 62.03%). This indicates that the clustering effectively grouped samples by their dominant semantic tasks.

Table 12.

AID and UC Merced performance for 5 K samples from Cluster 0 vs. Cluster 1.

Table 13.

RSVQA-LR performance for 5 K samples from Cluster 0 vs. Cluster 1.

We then carried out two further experiments:

- Mixed-Subsets Training: merge the two 5 K subsets (one from each cluster) and train on the combined 10 K set.

- Merge-then-Select: first merge all 57 K examples, then sample 10 K with our algorithm and train.
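The difference between selecting inside each cluster and selecting from the merged pool can be illustrated with hypothetical scores. The sketch shows only the mechanics: with a single global ranking, a cluster whose scores happen to be higher can crowd out the other cluster entirely. Whether this is the cause of the gap observed in this study is an assumption, not something the experiments establish.

```python
def top_k(items, k):
    """items: list of (sample_id, score); return the k highest-scoring items."""
    return sorted(items, key=lambda item: item[1], reverse=True)[:k]

def cluster_then_select(clusters, k_per_cluster):
    """Select the top k_per_cluster samples independently inside every cluster."""
    selected = []
    for items in clusters.values():
        selected.extend(top_k(items, k_per_cluster))
    return selected

def merge_then_select(clusters, k_total):
    """Pool all clusters first, then select the top k_total samples globally."""
    pooled = [item for items in clusters.values() for item in items]
    return top_k(pooled, k_total)

# Hypothetical scores: cluster 0 happens to score higher on average than cluster 1.
clusters = {
    0: [("c0-a", 0.9), ("c0-b", 0.8), ("c0-c", 0.7), ("c0-d", 0.6)],
    1: [("c1-a", 0.5), ("c1-b", 0.4), ("c1-c", 0.3), ("c1-d", 0.2)],
}
per_cluster = cluster_then_select(clusters, k_per_cluster=2)
pooled = merge_then_select(clusters, k_total=4)
print([sid for sid, _ in per_cluster])  # ['c0-a', 'c0-b', 'c1-a', 'c1-b']
print([sid for sid, _ in pooled])       # ['c0-a', 'c0-b', 'c0-c', 'c0-d']
```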

Results (Table 14 and Table 15) show that, across RSVQA-LR, AID, and UC Merced, the Cluster-then-Select approach (sampling 5 K per cluster) consistently outperformed both the Mixed-Subsets and Merge-then-Select strategies. Moreover, training on the mixed dataset always incurred a performance penalty compared with training on each cluster’s 5 K subset.

Table 14.

AID and UC Merced performance for Cluster-then-Select vs. Merge-then-Select Training.

Table 15.

RSVQA-LR Performance for Cluster-then-Select vs. Merge-then-Select Training.

4.6.4. Algorithm Efficiency Comparison

We measured both the training time and the required GPU memory for our method and the two baselines. The results are summarized in Table 16.

Table 16.

Computational time and GPU memory usage comparison for random sampling, our algorithm, and KCenterGreedy.

The symbols in Table 16 are defined as follows; D and E each appear twice because they denote time in the time column and GPU memory in the memory column:

- D is the time for the embedding model to process one sample (time column) or the GPU memory used by the embedding model (memory column);

- S is the size of the selected core subset;

- E is the time to obtain a single multimodal-model embedding (time column) or the GPU memory used by the multimodal model (memory column).

As the table shows, random sampling had the lowest cost in both time and memory but did not achieve the best training performance. Our algorithm came next in computational time, while KCenterGreedy was the most expensive. In terms of memory usage, however, our method usually required the most, followed by KCenterGreedy, with random sampling again being the cheapest.

To further evaluate our algorithm, we compared training on the entire dataset with training on a 105 k subset selected by our algorithm, both using InternLM-XComposer2-VL-7B on two 3090 GPUs for one epoch. The results are shown in Table 17, Table 18 and Table 19. Notably, training on the 105 k subset took approximately 35 h, whereas training on the full 318 k dataset required around 110 h, more than three times as long.
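The time saving quoted in the abstract and conclusions follows from these two approximate wall-clock figures; the check below uses only numbers stated in the text.

```python
subset_hours, full_hours = 35, 110        # approximate wall-clock times from the text
subset_size, full_size = 105_000, 318_000

time_reduction = 1 - subset_hours / full_hours
print(f"training-time reduction: {time_reduction:.1%}")             # 68.2%
print(f"full-data run is {full_hours / subset_hours:.2f}x longer")  # 3.14x
print(f"fraction of data used: {subset_size / full_size:.3f}")      # 0.330
```

Because both times are rounded, the 68.2% figure should be read as approximate.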

Table 17.

Comparing the evaluation results of models trained on datasets of different scales on AID and UC Merced.

Table 18.

Comparing the evaluation effects of models trained on datasets of different scales on LRBEN.

Table 19.

Comparing the evaluation effects of models trained on datasets of different scales in general fields.

As shown in Table 17 and Table 18, our algorithm outperformed both random sampling and the KCenterGreedy method in every evaluation, and, for remote sensing tasks, using the full training set offered virtually no advantage over the one-third subset selected by our approach. On the AID benchmark, the subset model even attained an accuracy 0.53 percentage points higher than the model trained on all available data. We believe this occurred because, in multi-task scenarios with highly diverse samples, merely enlarging the dataset also enlarges the pool of conflicting or redundant examples. These conflicts can introduce gradient noise and hinder optimization. Our selection algorithm appears to mitigate this issue by filtering out samples that contribute little new information or that clash with other tasks, thus yielding a cleaner training signal and more reliable performance despite, or even because of, the reduced data volume. Our algorithm reached an accuracy of 80.64 on the AID and UC Merced evaluation datasets, only 0.87% lower than training on the full dataset. On the RSVQA-LR dataset, our algorithm averaged an accuracy of 80.59, just 1.42% lower than full-dataset training.

It is worth noting that the training results on the UC Merced and AID datasets were lower than those achieved by training on a single type of dataset, as described in Section 4.3. This suggests that jointly training on datasets of different types can lead to significant data conflicts. However, our method achieved a higher score on the AID dataset than training on the entire dataset, suggesting that selecting high-quality subsets can alleviate some of these conflicts.

Moreover, in general-domain tasks, our algorithm retained better performance than training directly on the full dataset, achieving scores of 83.78, 74.92, and 2121.01 on MMBench, SEEDBench, and MME, respectively, all higher than those of the model trained on the full dataset. Additionally, on SEEDBench and MME, the accuracy loss relative to the original model caused by training on the full dataset was nearly twice that caused by our algorithm.

In summary, our algorithm reduces training time by more than two-thirds while maximizing the retention of general-domain capabilities, with only about a 1% accuracy loss in the remote sensing domain.

5. Conclusions

This study addresses the issue of data selection for multimodal large models in tasks across various domains by proposing an adaptive fine-tuning algorithm. Most current research trains directly on large-scale multimodal data, which requires substantial computational resources, whereas randomly selecting a small subset of the data leads to significant performance degradation. To resolve this, we first projected the large-scale data into a vector space and used the MiniBatchKMeans algorithm for automated clustering. Then, we measured the generalizability of the data by calculating the translational difference between the original and perturbed data in the multimodal large model’s vector space and autonomously selected data with high generalizability for training.

Our experiments, based on the InternLM-XComposer2-VL-7B model, were conducted on the remote sensing multimodal dataset proposed by GeoChat. The results show that our adaptive fine-tuning algorithm outperforms the random sampling and KCenterGreedy clustering algorithms when training on a 5000-entry dataset and achieves the best domain-specific and general performance with a 10,000-entry dataset. Ultimately, using only 105,000 data entries (one-third of the GeoChat dataset) and training on two 3090 GPUs, our model achieved 89.86 on the UC Merced dataset and 77.19 on the AID dataset, which are 5.43 and 5.16 points higher than GeoChat, respectively. On the LRBEN evaluation dataset, our model was only 0.91 points lower on average. Furthermore, comparing the models trained on the full dataset and on our one-third subset, we found that our approach reduced training time by about 68.2% while maintaining general-domain capabilities, with only a 1% average decrease in remote sensing accuracy.

In summary, our adaptive fine-tuning algorithm effectively selects high-quality data, enhancing model performance in specific domains while maintaining general performance under limited computational resources. This algorithm has significant practical value for training multimodal large models, especially in scenarios with constrained computational resources.

Nevertheless, our approach still has several limitations: (1) because the perturbation strategy relies on randomly deleting words, it may occasionally remove critical terms and thus underestimate the generalizability of some short or jargon-heavy instructions; (2) the embedding step depends on a large encoder, which limits deployment on edge devices with very restricted GPU memory; and (3) the method has been validated only on a remote sensing corpus, so its effectiveness in other multimodal domains remains to be systematically verified.

Author Contributions

Conceptualization, Y.R.; methodology, Y.R.; software, Z.H.; validation, Y.R.; formal analysis, W.L.; investigation, Z.W.; resources, W.J.; data curation, C.Q.; writing—original draft preparation, Y.R.; writing—review and editing, T.Z. and W.L.; supervision, L.J.; project administration, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (62171347), the Shaanxi Provincial Water Conservancy Fund Project (2024SLKJ-16), and the research project of Shaanxi Coal Geology Group Co., Ltd. (SMDZ-2023CX-14).

Data Availability Statement

The data presented in the GeoChat study are openly available in the GitHub repository at https://github.com/mbzuai-oryx/geochat, accessed on 13 May 2025. For further details, you can refer to the repository directly.

Conflicts of Interest

Author Zhiyang Wang was employed by the Shaanxi Water Development Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

References

- Bahrini, A.; Khamoshifar, M.; Abbasimehr, H.; Alazab, M.; Khorasani, A.; Akbari, M.; Mohseni, S.; Yang, X.; Elhoseny, M.; Khedher, L. ChatGPT: Applications, Opportunities, and Threats. In Proceedings of the 2023 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 27–28 April 2023; IEEE: New York, NY, USA, 2023; pp. 274–279.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- Ren, Y.; Li, W.; Shi, L.; Ding, J.; Du, J.; Chen, T. FUO_ED: A Dataset for Evaluating the Performance of Large Language Models in Diagnosing Complex Cases of Fever of Unknown Origin. SSRN 2024, 1–8.

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Chung, J.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; Payne, T.; et al. Large Language Models Encode Clinical Knowledge. arXiv 2022, arXiv:2212.13138.

- Han, T.; Adams, L.C.; Papaioannou, J.M.; Grundmann, T.; Makowski, M.R.; Rückert, J.; Braren, R.F.; Kaissis, G.A. MedAlpaca—An Open-Source Collection of Medical Conversational AI Models and Training Data. arXiv 2023, arXiv:2304.08247.

- Taori, R.; Gulrajani, I.; Zhang, T.; Dubois, Y.; Li, X.; Guestrin, C.; Liang, P.; Hashimoto, T.B. Stanford Alpaca: An Instruction-Following LLaMA Model. GitHub Repository, 2023.

- Wang, H.; Liu, C.; Xi, N.; Qiao, Y.; Li, H.; Zhang, Y.; Wang, Y.; Zhang, J.; Liu, T. Huatuo: Tuning LLaMA Model with Chinese Medical Knowledge. arXiv 2023, arXiv:2304.06975.

- Zhou, Z.; Shi, J.X.; Song, P.X.; Zhang, Y.; Li, J.; Chen, Y.; Wang, Y.; Liu, T. LawGPT: A Chinese Legal Knowledge-Enhanced Large Language Model. arXiv 2024, arXiv:2406.04614.

- Ren, Y.I.; Zhang, T.Y.; Dong, X.R.; Li, W.; Shi, L.; Ding, J.; Du, J.; Chen, T. WaterGPT: Training a large language model to become a hydrology expert. Water 2024, 16, 3075.

- Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Duan, Y.; Fan, Y.; Gong, Z.; Guo, J.; Han, T.; He, J.; et al. Qwen Technical Report. arXiv 2023, arXiv:2309.16609.

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; Lin, H.; et al. Qwen2 Technical Report. arXiv 2024, arXiv:2407.10671.

- Wang, R.; Duan, Y.; Li, J.; Zhang, T.; Chen, Y.; Liu, F.; Zhou, J.; Zhang, Z.; Chen, H.; Li, W.; et al. XrayGLM: The First Chinese Medical Multimodal Model for Chest Radiographs Summarization. arXiv 2023, arXiv:2408.12345.

- Li, C.; Wong, C.; Zhang, S.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; et al. Llava-Med: Training a Large Language-and-Vision Assistant for Biomedicine in One Day. In Advances in Neural Information Processing Systems 36 (NeurIPS 2023); Curran Associates, Inc.: Red Hook, NY, USA, 2024.

- Zhang, T.; Qin, C.; Li, W.; Feng, X.; Chen, K.; Liu, C.; Chen, H.; Su, H.; Qiu, J.; Tang, Z.; et al. Water Body Extraction of the Weihe River Basin Based on MF-SegFormer Applied to Landsat8 OLI Data. Remote Sens. 2023, 15, 4697.

- Chen, K.; Liu, C.; Chen, H.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; Su, H.; Qiu, J.; Tang, Z.; et al. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation Based on Visual Foundation Model. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Zhang, T.; Ji, W.; Li, W.; Qin, C.; Wang, T.; Ren, Y.; Fang, Y.; Han, Z.; Jiao, L. EDWNet: A Novel Encoder–Decoder Architecture Network for Water Body Extraction from Optical Images. Remote Sens. 2024, 16, 4275.

- Su, H.; Qiu, J.; Tang, Z.; Chen, K.; Liu, C.; Chen, H.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; et al. Retrieving Global Ocean Subsurface Density by Combining Remote Sensing Observations and Multiscale Mixed Residual Transformer. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

- Qin, C.H.; Li, W.B.; Zhang, T.Y.; Feng, X.; Chen, K.; Liu, C.; Chen, H.; Su, H.; Qiu, J.; Tang, Z.; et al. Improved DeepLabv3+ Based Flood Water Body Extraction Model for SAR Imagery. In Proceedings of the IGARSS 2024–2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; IEEE: New York, NY, USA, 2024; pp. 1196–1199.

- Zhang, T.; Li, W.; Feng, X.; Chen, K.; Liu, C.; Chen, H.; Su, H.; Qiu, J.; Tang, Z.; Qin, C.; et al. Super-Resolution Water Body Extraction Based on MF-SegFormer. In Proceedings of the IGARSS 2024–2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; IEEE: New York, NY, USA, 2024; pp. 9848–9852.

- Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; Chen, K.; Liu, C.; Chen, H.; et al. RemoteCLIP: A Vision Language Foundation Model for Remote Sensing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; et al. RS5M: A Large Scale Vision-Language Dataset for Remote Sensing Vision-Language Foundation Model. arXiv 2023, arXiv:2306.11300.

- Hu, Y.; Yuan, J.; Wen, C.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. RSGPT: A Remote Sensing Vision Language Model and Benchmark. arXiv 2023, arXiv:2307.15266.

- Kuckreja, K.; Danish, M.S.; Naseer, M.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. GeoChat: Grounded Large Vision-Language Model for Remote Sensing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 27831–27840.

- Zhang, W.; Cai, M.; Zhang, T.; Qin, C.; Li, W.; Feng, X.; Chen, K.; Liu, C.; Chen, H.; Su, H.; et al. EarthGPT: A Universal Multi-Modal Large Language Model for Multi-Sensor Image Comprehension in Remote Sensing Domain. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5917820.

- Liu, C.; Chen, K.; Zhang, H.; Su, H.; Wu, F.; Li, W.; Qin, R.; Zhang, M.; Chen, H.; Wang, C.; et al. Change-agent: Towards interactive comprehensive remote sensing change interpretation and analysis. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5635616.

- Chen, L.; Li, S.; Yan, J.; Wang, H.; Gunaratna, K.; Yadav, V.; Tang, Z.; Srinivasan, V.; Zhou, T.; Huang, H.; et al. AlpaGasus: Training A Better Alpaca with Fewer Data. arXiv 2023, arXiv:2307.08701.

- Du, Q.; Zong, C.; Zhang, J. MoDS: Model-oriented Data Selection for Instruction Tuning. arXiv 2023, arXiv:2311.15653.

- Li, M.; Zhang, Y.; He, S.; Li, Z.; Zhao, H.; Wang, J.; Cheng, N.; Zhou, T. Superfiltering: Weak-to-Strong Data Filtering for Fast Instruction-Tuning. arXiv 2024, arXiv:2402.00530.

- Kung, P.N.; Yin, F.; Wu, D.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. Active Instruction Tuning: Improving Cross-Task Generalization by Training on Prompt Sensitive Tasks. arXiv 2023, arXiv:2311.00288.

- Yang, Z.; Pang, T.; Feng, H.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. Self-Distillation Bridges Distribution Gap in Language Model Fine-Tuning. arXiv 2024, arXiv:2402.13669.

- Yu, Z.; Zhang, X.; Shang, N.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. WaveCoder: Widespread and Versatile Enhanced Instruction Tuning with Refined Data Generation. arXiv 2023, arXiv:2312.14187.

- Wei, L.; Jiang, Z.; Huang, W.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. InstructionGPT-4: A 200-Instruction Paradigm for Fine-Tuning MiniGPT-4. arXiv 2023, arXiv:2308.12067.

- Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation based on Visual Foundation Model. arXiv 2023, arXiv:2306.16269.

- Chen, K.; Liu, C.; Chen, B.; Li, W.; Zou, Z.; Shi, Z. DynamicVis: An Efficient and General Visual Foundation Model for Remote Sensing Image Understanding. arXiv 2025, arXiv:2503.16426.

- Liu, Y.; Duan, H.; Zhang, Y.; Zhang, Z.; Zhao, T.; Guo, Y.; Liu, F.; Chen, D.; Guan, Z.; Zhang, T.; et al. MMBench: Is Your Multi-Modal Model an All-Around Player? arXiv 2023, arXiv:2307.06281.

- Fu, C.; Chen, P.; Shen, Y.; Qin, Y.; Zhang, M.; Lin, X.; Yang, J.; Zheng, X.; Li, K.; Sun, X.; et al. MME: A Comprehensive Evaluation Benchmark for Multimodal Large Language Models. arXiv 2023, arXiv:2306.13394.

- Li, B.; Ge, Y.; Ge, Y.; Wang, G.; Wang, R.; Zhang, R.; Shan, Y. SEED-Bench: Benchmarking Multimodal Large Language Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 13299–13308.

- Siddhant, A.; Lipton, Z.C. Deep Bayesian Active Learning for Natural Language Processing: Results of a Large-Scale Empirical Study. arXiv 2018, arXiv:1808.05697.

- Xiao, S.; Liu, Z.; Zhang, P.; Muennighoff, N. C-Pack: Packaged Resources to Advance General Chinese Embedding. arXiv 2023, arXiv:2309.07597.

- Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-Lingual, Multi-Functionality, Multi-Granularity Text Embeddings Through Self-Knowledge Distillation. arXiv 2024, arXiv:2402.03216.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

- Dong, X.; Zhang, P.; Zang, Y.; Cao, Y.; Wang, B.; Ouyang, L.; Wei, X.; Zhang, S.; Duan, H.; Cao, M.; et al. InternLM-XComposer2: Mastering Free-Form Text-Image Composition and Comprehension in Vision-Language Large Model. arXiv 2024, arXiv:2401.16420.

- Chen, J.; Zhu, D.; Shen, X.; Li, X.; Liu, Z.; Zhang, P.; Krishnamoorthi, R.; Chandra, V.; Xiong, Y.; Elhoseiny, M. MiniGPT-v2: Large Language Model as a Unified Interface for Vision-Language Multi-Task Learning. arXiv 2023, arXiv:2310.09478.

- Bai, J.; Bai, S.; Yang, S.; Wang, S.; Tan, S.; Wang, P.; Lin, J.; Zhou, C.; Zhou, J. Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond. arXiv 2023, arXiv:2308.12966.

- Liu, H.; Li, C.; Li, Y.; Lee, Y.J. Improved Baselines with Visual Instruction Tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 26296–26306.

- Dai, W.; Li, J.; Li, D.; Tiong, A.M.H.; Zhao, J.; Wang, W.; Li, B.; Fung, P.; Hoi, S. InstructBLIP: Towards General-Purpose Vision-Language Models with Instruction Tuning. arXiv 2023, arXiv:2305.06500.

- Ye, Q.; Xu, H.; Ye, J.; Yan, M.; Hu, A.; Liu, H.; Qian, Q.; Zhang, J.; Huang, F. MPlug-OWL2: Revolutionizing Multi-Modal Large Language Model with Modality Collaboration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: New York, NY, USA, 2024; pp. 13040–13051.

- Zhan, Y.; Xiong, Z.; Yuan, Y. SkyEyeGPT: Unifying Remote Sensing Vision-Language Tasks via Instruction Tuning with Large Language Model. arXiv 2024, arXiv:2401.09712.

- Muhtar, D.; Li, Z.; Gu, F.; Zhang, X.; Xiao, P. LHRS-Bot: Empowering Remote Sensing with VGI-Enhanced Large Multimodal Language Model. arXiv 2024, arXiv:2402.02544.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).