Abstract

Analyzing wetland landscape pattern evolution is crucial for managing wetland resources. High-resolution remote sensing serves as a primary method for monitoring wetland landscape patterns. However, the complex landscape types and spatial structures of wetlands pose challenges, including interclass similarity and intraclass spatial heterogeneity, leading to the low separability of landscapes and difficulties in identifying fragmented and small objects. To address these issues, this study proposes the multilevel feature cross-fusion wetland landscape classification network (MFCFNet), which combines the global modeling capability of Swin Transformer with the local detail-capturing ability of convolutional neural networks (CNNs), helping the model discern intraclass consistency and interclass differences. To alleviate the semantic confusion caused by different-level features with semantic gaps during fusion, we introduce a deep–shallow feature cross-fusion (DSFCF) module between the encoder and the decoder. We incorporate a global–local attention block (GLAB) to aggregate global contextual information and local detail. The constructed Shengjin Lake Wetland Gaofen Image Dataset (SLWGID) is utilized to evaluate the performance of MFCFNet, which achieves an OA, mIoU, and F1 score of 93.23%, 78.12%, and 87.05%, respectively. MFCFNet is used to classify the wetland landscape of Shengjin Lake from 2013 to 2023. A landscape pattern evolution analysis is conducted, focusing on landscape transitions, area changes, and pattern characteristic variations. The method demonstrates effectiveness for the dynamic monitoring of wetland landscape patterns, providing valuable insights for wetland conservation.

1. Introduction

Wetlands, known as the “Earth’s kidneys”, are among the three major global ecosystems. Wetlands are vital for the carbon cycle, pollution degradation, water conservation, climate regulation, and biodiversity protection [1,2]. The dynamic monitoring of wetlands allows for the analysis of landscape pattern evolution, which helps gain an understanding of the status and trends of wetlands. This provides reliable data support for the protection and management of wetland resources. However, wetlands are often located in remote areas and are widely distributed, posing significant difficulties for wetland monitoring. The rapid development of remote sensing technology offers abundant observational data for dynamic wetland monitoring [3]. Remote sensing, with its fast acquisition, wide coverage, and rich data, enables researchers to conduct the long-term dynamic monitoring of wetlands, supporting the annual classification and monitoring of wetland changes, landscape patterns, and surface water [4,5,6,7].

High-resolution satellite images provide detailed feature information for wetland landscape classification, including object boundaries, vegetation types, patch shapes, and textures [8]. At the same time, the rich spatial and spectral information in these images poses challenges for wetland landscape classification because of the small interclass differences and large intraclass variability caused by complex spatial structures and widespread feature distributions [9,10]. This variation results in misclassification and omission, making wetland classification a significant challenge. Researchers have conducted extensive studies on wetland landscape classification methods. In the realm of machine learning, algorithms such as random forests (RFs) [11], classification and regression trees (CARTs) [12], and support vector machines (SVMs) [13] have been widely employed for wetland classification. However, the spectral similarity of wetland types, the complexity of vegetation composition, and the dynamic nature of water limit the effectiveness of traditional machine learning methods for wetland classification [14]. These methods struggle with nonlinear spectral–spatial relationships, making it difficult to address issues such as “same spectrum, different objects”, “same object, different spectra”, and “salt-and-pepper” noise [9].

Deep learning methods exhibit strong nonlinear mapping capabilities when addressing complex nonlinear relationships in practical problems [15]. Researchers have applied convolutional neural networks to image segmentation tasks [16,17,18]. Fully convolutional networks (FCNs) [19] replace fully connected layers with convolutional layers and introduce skip connections, increasing segmentation accuracy. However, FCNs overlook pixel relationships, resulting in poor spatial consistency. The U-Net encoder–decoder structure uses skip connections to pass low-level features to high-level layers, effectively combining contextual information and reducing detail loss [20]. The DeepLab series of networks employs an atrous spatial pyramid pooling (ASPP) module to expand the receptive field, enabling the capture of semantic information at different scales [21,22,23]. These methods are effectively applied to complex wetland classification tasks [24,25,26].

In remote sensing images, wetland areas display a broad distribution of features and intricate spatial structures, necessitating more comprehensive semantic information for fine classification tasks [10,27]. CNN-based methods effectively capture local details with small convolutional kernels, but their limited receptive fields hinder the capturing of global context, leading to blurred boundaries in complex scenes with interclass similarity and intraclass variability. Numerous researchers have turned to transformers for semantic segmentation tasks to address this challenge [28,29,30,31]. The multihead attention in the transformer enhances global context extraction, overcoming the local focus of other networks. However, its high computational complexity demands more memory for large images. The Swin Transformer improves efficiency by using windowed self-attention within nonoverlapping local windows [32] and connects information between windows via a sliding window mechanism, enhancing global feature modeling.

When high-resolution remote sensing images are used for complex wetland landscape classification, we primarily encounter the following two issues:

- (1) Interclass feature similarity and intraclass spatial heterogeneity: In high-resolution remote sensing images, features of different categories, such as forests, farmlands, and grasslands in complex wetlands, exhibit certain similarities, with texture differences being subtle. The intermingling of land types leads to blurred boundaries [33]. Additionally, the same wetland type can exhibit significant feature variations due to differing conditions, such as cultivation practices and planting types. The high spatial heterogeneity of wetlands results in marked differences in objects such as water and vegetation across regions, increasing classification uncertainty [34].

- (2) Difficulty in recognizing fragmented and small objects: Fragmented and small objects, such as tiny water bodies, small tree patches, and minor settlements, often have limited pixel representation, providing insufficient texture and shape information for accurate recognition. These objects are frequently embedded in complex backgrounds, making them prone to obscuration and mixed pixel issues at boundaries, leading to unclear segmentation. Furthermore, inadequate sample labeling and class imbalance during training complicate the classification process.

The feature variability of wetland types across extensive spatial distributions necessitates a large receptive field and the ability to extract global feature distribution information. The complex wetland structure, with its small targets and rich details, requires a focus on preserving fine-grained features to accurately capture local information [35,36,37]. The aggregation of global and local information is therefore crucial for accurately understanding the semantic structure of images. Combining a CNN with a transformer allows the strengths of each to be leveraged. WetMapFormer is a wetland mapping framework that combines a CNN and a transformer, providing precise wetland mapping for three experimental wetland sites in Canada [10]. Methods such as CMTFNet [38], CSTUnet [39], and TCNet [40] facilitate the integrated extraction and fusion of local and global features. However, current methods [41,42,43,44] that combine CNNs and transformers overlook the semantic gaps between features extracted from different levels and fail to consider the complementary use of information from adjacent feature layers. In complex wetland scenes, shallow features focus on local details and textures, whereas deep features emphasize overall semantic information, highlighting object categories and the global structure of the scene. The semantic gaps between different feature levels can lead to information conflicts or losses during integration.

High-resolution remote sensing image classification of complex wetland landscapes faces interclass feature similarity, intraclass spatial heterogeneity, and difficulties in recognizing fragmented and small objects. To address these challenges while bridging the semantic gaps between multilevel features and integrating global and local information, this study proposes a multilevel deep–shallow feature cross-fusion wetland landscape classification network (MFCFNet) for the long-term classification and analysis of the landscape pattern evolution in the Shengjin Lake wetland. The main contributions are as follows:

- (1) We propose a multilevel feature cross-fusion wetland classification network (MFCFNet). MFCFNet uses a multistage Swin Transformer as the encoder for the hierarchical feature extraction of global semantic information while employing a CNN to capture low-level features and local information. The Shengjin Lake Wetland Gaofen Image Dataset (SLWGID) is created to assess the segmentation performance of MFCFNet, achieving 93.23% OA, 78.12% mIoU, and 87.05% mF1 scores on the dataset. Moreover, MFCFNet’s architecture (multilevel feature encoding, cross-scale fusion, and the integration of global and local representations) enhances discriminative feature expression, improving adaptability to weak categories and generalization under limited annotations and class imbalance.

- (2) To bridge the semantic gaps between different hierarchical features extracted by the Swin Transformer encoder, a deep–shallow feature cross-fusion module (DSFCF) is introduced between the encoder and the decoder. This module establishes complementary information between adjacent feature layers, effectively alleviating the semantic confusion that arises during feature fusion and promoting the effective utilization and integration of features across different scales.

- (3) In the decoder, a global–local attention block (GLAB) is introduced to capture and aggregate global contextual features and the intricate details of multiscale local structures. The GLAB enhances the ability of the model to discern interclass differences among types, as well as intraclass consistency features. The GLAB alleviates the challenges of recognizing classes with high spatial heterogeneity and improves the segmentation performance for fragmented and small objects.

- (4) MFCFNet is utilized for the landscape classification of the Shengjin Lake wetland from 2013 to 2023. On the basis of the classification results over these 11 years, a landscape pattern evolution analysis is conducted, focusing on changes in landscape type areas, interclass transitions, and variations in landscape pattern characteristics.

2. Study Area and Data

2.1. Study Area

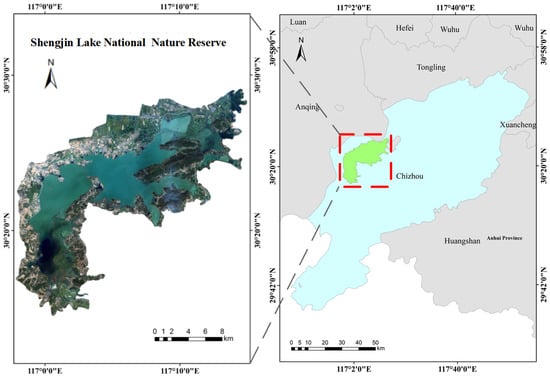

The Shengjin Lake National Nature Reserve is located in Dongzhi County, Chizhou city, Anhui Province, within the coordinates of 116°55′ to 117°15′E and 30°15′ to 30°30′N, covering a total area of 33,340 hectares [45], as shown in Figure 1. The Shengjin Lake area receives abundant rainfall and is rich in surface runoff, with well-vegetated surroundings. This wetland is a freshwater lake with high water quality and serves as a protected area focused on conserving freshwater wetland ecosystems and rare, endangered bird species [46]. An investigation of the dynamic changes in the landscape patterns of the Shengjin Lake wetland ecosystem is crucial for monitoring the ecological and environmental changes and maintaining the biodiversity. This study also provides important insights for wetland conservation management and the rational utilization of wetland resources. Therefore, we select Shengjin Lake as the study area to conduct an in-depth investigation of wetland landscape classification methods and to analyze the evolution of landscape patterns from 2013 to 2023.

Figure 1. Location of Shengjin Lake National Nature Reserve.

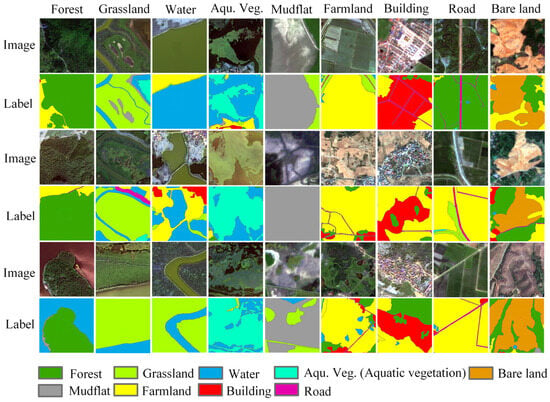

2.2. Dataset

This study used 28 Gaofen satellite images (GF-1, GF-1C, GF-2, and GF-6) covering Shengjin Lake from 2013 to 2023. After radiometric calibration, atmospheric correction, orthorectification, fusion, geometric correction, mosaicking, and clipping, a dataset of the study area over 11 years was obtained, as shown in Table 1. On the basis of the land use classification system and the objectives of landscape pattern analysis, the area was divided into nine landscape types: forest, grassland, water, aquatic vegetation, mudflat, farmland, buildings, roads, and bare land, as shown in Table 2. Typical images of these landscape types and their corresponding labels are shown in Figure 2.

Table 1. Image information.

Table 2. Description of landscape type.

Figure 2. Wetland landscape type images and labels.

In the image selection process, we initially aimed to prioritize Gaofen-series satellite images captured within the same time period of each year to minimize the impact of seasonal variations. However, due to limitations in image availability, data quality, and cloud cover, it was unavoidable to extend the acquisition periods to adjacent months for certain years. To control the potential influence of acquisition time differences, we prioritized images captured during stable water-level periods with typical vegetation characteristics and clear weather conditions. Moreover, stricter sample selection criteria were applied for seasonally sensitive categories (e.g., aquatic vegetation and grassland) to mitigate the impact of seasonal variations on classification accuracy.

For sample preparation, to ensure the diversity and representativeness of the samples, typical ground landscape types under various imaging conditions were included. For the 11 years of image data from 2013 to 2023, several areas of approximately 5000 m × 5000 m were chosen to construct the samples. These areas encompassed the typical features of different categories, ensuring that each landscape category had sufficient and balanced samples. This study employed visual interpretation to construct sample label data, classifying the land types into nine categories: forest, grassland, water, aquatic vegetation, mudflat, farmland, building, road, and bare land. The dataset consisted of 6579 images (1024 × 1024 pixels), which were randomly split into training, testing, and validation sets at a 3:1:1 ratio. Ultimately, the Shengjin Lake Wetland Gaofen Image Dataset (SLWGID) was constructed.
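The 3:1:1 random split described above can be sketched in a few lines. This is only an illustration: the tile identifiers, the fixed seed, and the rounding rule (validation takes the remainder) are assumptions, since the text does not state how the split was implemented.

```python
import random


def split_dataset(items, ratios=(3, 1, 1), seed=0):
    """Shuffle items and split them into train/test/val by integer ratios."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed: assumption, for repeatability
    total = sum(ratios)
    n = len(shuffled)
    n_train = round(n * ratios[0] / total)
    n_test = round(n * ratios[1] / total)
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    val = shuffled[n_train + n_test:]  # validation takes whatever remains
    return train, test, val


# Hypothetical tile identifiers; the real file names are not given in the text.
tiles = [f"tile_{i:04d}" for i in range(6579)]
train, test, val = split_dataset(tiles)
print(len(train), len(test), len(val))  # 3947 1316 1316
```

With 6579 tiles, an exact 3:1:1 split is not possible, so the subset sizes differ from the ideal ratio by at most one tile.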

3. Method

3.1. Network Structure

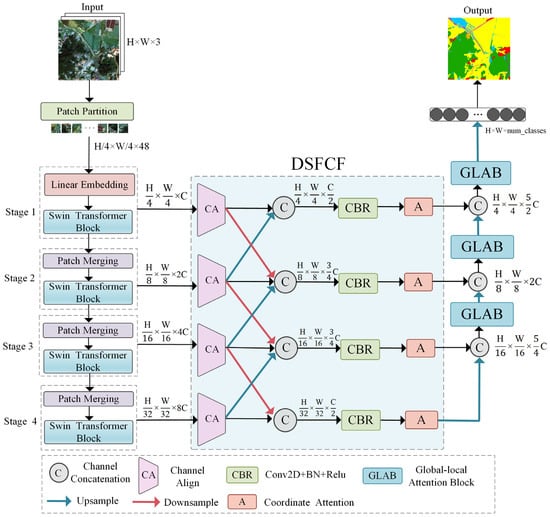

The transformer employs a multihead self-attention mechanism to perform global self-attention calculations, capturing long-range dependencies. However, the high computational complexity of the transformer demands more memory for large images. The Swin Transformer addresses this limitation by computing self-attention within local windows and using a sliding window approach to establish dependencies between neighboring windows [32]. CNNs excel at capturing local features by sliding convolutional kernels, effectively identifying edges, textures, and other characteristics within local receptive fields [18]. Considering the advantages of both the Swin Transformer and the CNN, we combine the two methods. Figure 3 shows the overall structure of MFCFNet for wetland landscape classification. MFCFNet employs four stages of Swin Transformer blocks as the encoder to extract multiscale features hierarchically.
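To make the memory argument concrete, the sketch below evaluates the two complexity expressions from the Swin Transformer paper [32]: global self-attention grows quadratically with the number of tokens, whereas window attention grows linearly. The token-map size (a 1024 × 1024 tile with 4 × 4 patches), the channel width of 96, and the window size of 8 are illustrative assumptions, not settings reported for MFCFNet.

```python
def msa_flops(h, w, c):
    """Cost of global multihead self-attention on an h x w token map."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def wmsa_flops(h, w, c, m):
    """Cost of window-based self-attention with non-overlapping m x m windows."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


# Assumed example values (not taken from the paper's configuration).
h = w = 1024 // 4   # token map of a 1024 x 1024 tile with 4 x 4 patches
c, m = 96, 8        # channel width and window size

projection = 4 * h * w * c ** 2               # term shared by both variants
global_attn = msa_flops(h, w, c) - projection
window_attn = wmsa_flops(h, w, c, m) - projection
print(global_attn // window_attn)             # equals (h * w) / m**2
```

Only the attention term differs between the two variants; its ratio is the number of tokens divided by the number of tokens per window.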

Figure 3. Overall structure of MFCFNet.

A semantic gap often exists between the deep features and shallow features generated in the encoder stage [47]. Simple skip connections during feature fusion can cause semantic confusion. We introduce a deep–shallow feature cross-fusion module (DSFCF) between the encoder and the decoder to establish connections. This module performs cross-fusion between adjacent features, allowing for the aggregation of multiscale features and establishing complementary information between neighboring feature layers, effectively mitigating semantic confusion during the fusion of features at different levels.

Wetland features are often discrete, with complex details and overlapping categories, resulting in challenges such as interclass similarity and intraclass variability, making small object recognition difficult [48]. By integrating global and local features, we can better perceive the spatial relationships of information and recognize feature differences across multiple scales. Therefore, we introduce a global–local attention block (GLAB) in the decoder. The multilevel features output from the DSFCF module are fused with the upsampled features from the decoder and then fed into the GLAB, enabling hierarchical feature representation that aggregates global context and local detail across different scales.

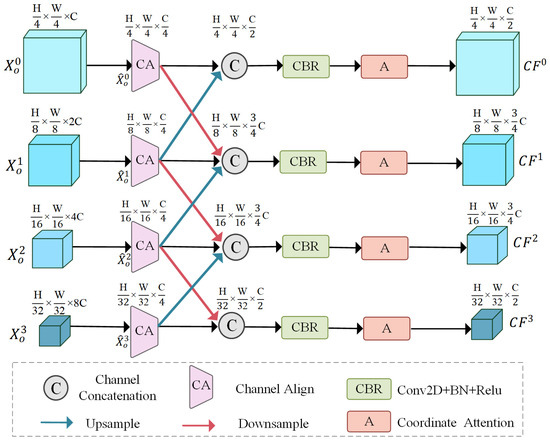

3.2. Deep–Shallow Feature Cross-Fusion Module

In the Swin Transformer encoder, the feature levels extracted at different stages reflect varying representations. Shallow features have a smaller receptive field and typically provide fine-grained information such as edges, shape contours, textures, and positions, whereas deep features, with a larger receptive field, encompass more abstract contextual semantic information. During feature fusion, the significant semantic gap between deep features and shallow features can cause semantic confusion [47]. Therefore, in this study, a deep–shallow feature cross-fusion (DSFCF) module is designed to integrate multi-scale features and mitigate this semantic gap.

The structure of the DSFCF module is shown in Figure 4. First, the feature maps output from the four levels of the Swin Transformer blocks undergo a channel alignment (CA) operation. This operation uses a 2D convolution with a 1 × 1 kernel to adjust the dimensions and distribution of feature channels. Compressing and aligning the channels across different levels helps the model retain critical features, remove redundancy, and improve feature utilization efficiency. The CA also facilitates complementary fusion between deep and shallow features, enabling the effective integration of multilevel features within the same spatial domain while reducing computational cost. After the CA operation, the channel numbers of the four levels are reduced from C, 2C, 3C, and 4C to a unified C/4, as expressed by the following formula:

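$$F_i^{CA} = \mathrm{Conv}_{1 \times 1}(F_i), \quad i = 1, 2, 3, 4$$

where $F_i$ represents the output features from the $i$-th stage of the encoder, while $F_i^{CA}$ denotes the output features after the CA (Channel Align) operation.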
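A 1 × 1 convolution mixes channels at each pixel independently, which is why it can change the channel count without touching the spatial layout. The following plain-Python sketch illustrates this on a toy feature map; the weights are hand-picked for readability rather than learned, and the bias term is omitted.

```python
def conv1x1(feature, weight):
    """Apply a 1x1 convolution (no bias) to a feature map.

    feature: list of C_in channels, each an H x W nested list.
    weight:  C_out x C_in matrix; each output channel is a weighted
             sum of the input channels at the same pixel.
    """
    c_in = len(feature)
    h, w = len(feature[0]), len(feature[0][0])
    return [
        [[sum(row[c] * feature[c][y][x] for c in range(c_in)) for x in range(w)]
         for y in range(h)]
        for row in weight
    ]


# Toy input: 4 channels on a 2 x 2 grid; channel c holds the constant c + 1.
feature = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
weight = [[0.25, 0.25, 0.25, 0.25],   # output channel 0: channel mean
          [1.0, 0.0, 0.0, -1.0]]      # output channel 1: first minus last
aligned = conv1x1(feature, weight)    # 2 channels, still 2 x 2
```

Here four input channels are compressed to two while the 2 × 2 spatial grid is preserved, mirroring the role of the CA operation.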

Figure 4. Schematic of the DSFCF.

Second, downsampling is applied to the first-, second-, and third-level branches to convert them to the same size as the feature maps of the branches at the subsequent level. Similarly, upsampling is performed on the second-, third-, and fourth-level branches to match the sizes of the feature maps of the branches at the preceding level. This process can be represented by the following equations:

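$$F_{i-1}^{D} = \mathrm{Down}(F_{i-1}^{CA}), \quad i = 2, 3, 4$$

$$F_{i+1}^{U} = \mathrm{Up}(F_{i+1}^{CA}), \quad i = 1, 2, 3$$

where $F_i^{CA}$ represents the channel-aligned $i$-th level feature, $\mathrm{Down}(\cdot)$ denotes the downsampling operation, and $\mathrm{Up}(\cdot)$ refers to the 2× bilinear interpolation upsampling operation. $F_{i-1}^{CA}$ and $F_{i+1}^{CA}$ represent the output features of the previous and subsequent branches after the channel alignment, respectively, and $F_{i-1}^{D}$ and $F_{i+1}^{U}$ represent the output features after downsampling and upsampling, respectively.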

Third, the features of the current branch are concatenated with the features from the previous and subsequent branches to complete the cross-fusion. A 3 × 3 convolution is applied to the fused features at each level to further capture the fine-grained information. Finally, a coordinate attention mechanism, which has spatial position awareness, is used to capture long-range dependencies within the spatial domain. This process can be expressed by the following equations:

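$$F_i^{out} = \mathrm{CoA}\left(\mathrm{CBR}_{3 \times 3}\left(\mathrm{Concat}\left(F_{i-1}^{D}, F_i^{CA}, F_{i+1}^{U}\right)\right)\right)$$

where $F_i^{CA}$ represents the features of the current branch, and $F_{i-1}^{D}$ and $F_{i+1}^{U}$ represent the feature maps from the previous and subsequent levels, respectively. $\mathrm{Concat}(\cdot)$ denotes the feature concatenation operation; $\mathrm{CBR}_{3 \times 3}(\cdot)$ refers to the 3 × 3 convolution, BatchNorm, and ReLU operations; $\mathrm{CoA}(\cdot)$ represents the coordinate attention mechanism; and $F_i^{out}$ indicates the result of the cross-fusion of features across different levels. For the first and fourth levels, which have only one adjacent level, the missing term is omitted from the concatenation.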
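The cross-fusion steps can be followed by tracing tensor shapes through the module. The sketch below does only this bookkeeping, under stated assumptions: encoder strides of 4, 8, 16, and 32 (the usual Swin Transformer layout), a hypothetical aligned width `c_align`, and neighbors resampled by a factor of 2. It does not implement the convolutions or the coordinate attention.

```python
def dsfcf_shapes(image_size, c_align, strides=(4, 8, 16, 32)):
    """Trace spatial sizes and concatenated channel counts per fusion level.

    strides and c_align are assumptions for illustration; the concatenated
    width is what would enter the 3x3 convolution at each level.
    """
    sizes = [image_size // s for s in strides]
    report = []
    for i, size in enumerate(sizes):
        inputs = ["current"]
        if i > 0:
            # The shallower neighbour is downsampled by 2 to reach this size.
            assert sizes[i - 1] // 2 == size
            inputs.insert(0, "previous (downsampled)")
        if i < len(sizes) - 1:
            # The deeper neighbour is upsampled by 2 to reach this size.
            assert sizes[i + 1] * 2 == size
            inputs.append("next (upsampled)")
        report.append({"level": i + 1, "size": size, "inputs": inputs,
                       "concat_channels": len(inputs) * c_align})
    return report


for row in dsfcf_shapes(image_size=1024, c_align=64):
    print(row["level"], row["size"], row["concat_channels"], row["inputs"])
```

The two interior levels receive three inputs, while the first and last levels receive two because they have only one neighbor.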

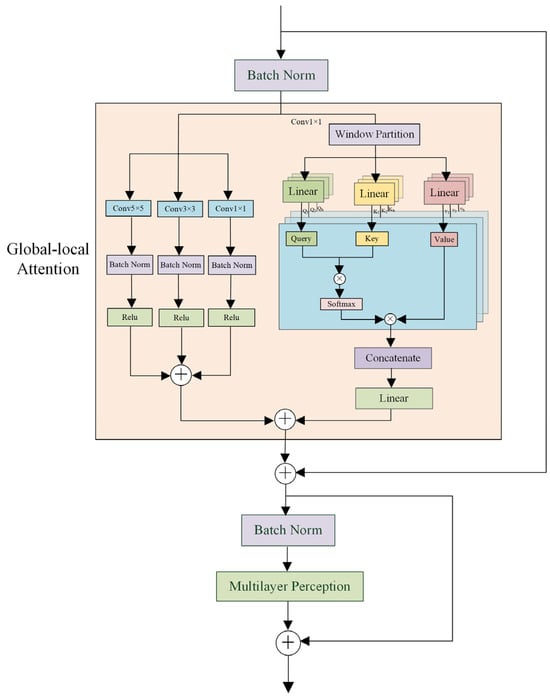

3.3. Global–Local Attention Block

High-resolution images contain much spatial and spectral information, with rich details on the texture and shape of ground objects. However, the complexity of these object features increases, leading to segmentation errors due to phenomena such as “different objects with the same spectrum” and “same object with different spectra” [49]. To better capture both global contextual feature distributions and local, multiscale structural details and to enhance the ability of the model to learn interclass differences and intraclass consistency, a global–local attention block (GLAB) is introduced in the model decoder. As shown in Figure 5, the GLAB consists of a local branch composed of multiscale convolutions and a global branch based on windowed multihead self-attention mechanisms, which extract local detail information and global spatial dependency semantic features, respectively. The local branch employs convolutional groups with kernel sizes of 5 × 5, 3 × 3, and 1 × 1 to capture spatial and spectral features at different scales, thereby fully extracting fine-grained local features, and can be represented as:

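$$F_{local} = \mathrm{Conv}_{5 \times 5}(X) + \mathrm{Conv}_{3 \times 3}(X) + \mathrm{Conv}_{1 \times 1}(X)$$

where $X$ represents the input features and $F_{local}$ represents the output features of the local branch. Through the combination of these multi-scale convolutions, the complex features of ground objects can be comprehensively analyzed, enhancing the detail extraction capability of the local branch.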
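A multiscale local branch can be illustrated on a single-channel image. In this sketch the three parallel convolutions use fixed averaging kernels in place of learned weights, and their responses are merged by element-wise summation; both choices are assumptions made for illustration, since the text does not specify how the branch outputs are combined.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation with zero padding (same output size)."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy][dx] * image[yy][xx]
            out[y][x] = acc
    return out


def box_kernel(k):
    """k x k averaging kernel, standing in for learned weights."""
    return [[1.0 / (k * k)] * k for _ in range(k)]


def local_branch(image, kernel_sizes=(1, 3, 5)):
    """Sum the responses of parallel convolutions with different kernel sizes."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for k in kernel_sizes:
        resp = conv2d_same(image, box_kernel(k))
        for y in range(h):
            for x in range(w):
                out[y][x] += resp[y][x]
    return out


# A single bright pixel shows how far each kernel size spreads information.
impulse = [[0.0] * 5 for _ in range(5)]
impulse[2][2] = 1.0
response = local_branch(impulse)
```

The corner of the response is reached only by the 5 × 5 kernel, whereas the center collects contributions from all three scales.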

Figure 5. Schematic of the GLAB.

In the global branch, a 1 × 1 convolution is applied to expand the channel dimension of the input feature map threefold, from C to 3C. To reduce the computational complexity of the self-attention module, the input features are partitioned into non-overlapping windows, with self-attention executed within each window. This approach reduces computational costs while preserving global contextual information. Within each window, the input sequence is mapped into Query (Q), Key (K), and Value (V) vectors. The attention scores are obtained by calculating the scaled dot product between Q and K and are normalized using the Softmax function to generate the attention weights. The attention weights are subsequently multiplied by V to obtain the attention output. The vectors are divided into h heads, and the self-attention for each head is computed separately, which can be expressed as follows:

$$\mathrm{head}_i = \mathrm{Softmax}\left(\frac{Q_i K_i^{T}}{\sqrt{d_k}}\right) V_i$$

where $Q_i$, $K_i$, and $V_i$ are the projections of $Q$, $K$, and $V$ onto the i-th attention head, and $\sqrt{d_k}$ is a scaling factor used to alleviate the vanishing gradient problem. The results from all the attention heads are concatenated and passed through a linear transformation to obtain the final multihead self-attention output, which can be expressed as:

$$\mathrm{MSA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O}$$

where $W^{O}$ is the learnable linear transformation matrix. By merging the global context information obtained from the global branch with the local features extracted by the local branch, a comprehensive representation of multi-scale information is achieved, which can be expressed as:

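$$F_{GL} = F_{global} + F_{local}$$

where $F_{global}$ and $F_{local}$ are the outputs of the global branch and the local branch, and $F_{GL}$ represents the result of the fusion between the global branch and the local branch. Residual connections are applied within the GLAB, and the process can be expressed as: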

$$\hat{X} = X + \mathrm{GLA}(\mathrm{BN}(X))$$

$$Y = \hat{X} + \mathrm{MLP}(\mathrm{BN}(\hat{X}))$$

Here, $\mathrm{GLA}(\cdot)$ represents the global–local attention operation, composed of both the global and local branches. $X$ refers to the features input to the block, $\mathrm{BN}$ denotes Batch Normalization, $\hat{X}$ is the result of the first residual connection, and $Y$ represents the output features after the MLP and the second residual connection. This dual-branch feature aggregation strategy not only enhances the model’s ability to capture global contextual information but also strengthens its capability to analyze local details. By closely integrating contextual information with fine-grained features, the model demonstrates improved robustness and accuracy when handling complex wetland scenarios and intricate details.
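The window partitioning and per-window attention of the global branch can be sketched in plain Python. This is a single-head illustration under simplifying assumptions: the learned Q, K, and V projections, the multihead split, and the output projection are left out, and the map size and window size are toy values.

```python
import math


def window_partition(h, w, m):
    """Group the pixel coordinates of an h x w map into non-overlapping m x m windows."""
    assert h % m == 0 and w % m == 0, "map size must be divisible by the window size"
    windows = []
    for wy in range(0, h, m):
        for wx in range(0, w, m):
            windows.append([(y, x) for y in range(wy, wy + m)
                            for x in range(wx, wx + m)])
    return windows


def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for one head; q, k, v are lists of token vectors."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        out.append([sum(wt * vj[c] for wt, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


# Toy 4 x 4 map with 2-dimensional features, attended within 2 x 2 windows.
feat = {(y, x): [float(y), float(x)] for y in range(4) for x in range(4)}
attended = {}
for coords in window_partition(4, 4, 2):
    tokens = [feat[c] for c in coords]
    # Self-attention without learned projections: Q = K = V = tokens.
    for c, vec in zip(coords, attention(tokens, tokens, tokens)):
        attended[c] = vec
```

Each token attends only to the four tokens in its own window, so the cost per token is fixed by the window size rather than by the size of the whole map.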

4. Experiments and Results

4.1. Implementation Details and Evaluation Metrics

The experiments in this study are conducted in a Python 3.8 environment using the PyTorch 1.11.0 framework on a computer equipped with an NVIDIA GeForce RTX 4070 Ti GPU (NVIDIA, Santa Clara, CA, USA). The model is trained for 100 epochs with a batch size of 4 using the AdamW optimizer, with a learning rate of 0.0003, a weight decay of 0.00025, and a cosine strategy to adjust the learning rate. The loss function is a combination of Dice loss and soft cross-entropy loss. Soft cross-entropy loss supervises the per-pixel multiclass prediction, whereas Dice loss directly measures the region overlap between the prediction and the ground truth. Combining these two loss functions to supervise model training helps improve the model’s performance. The loss function is expressed by the following formula:

$$L = L_{Dice} + L_{CE}$$

where $L_{Dice}$ and $L_{CE}$ denote the Dice loss and the soft cross-entropy loss, respectively.
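A joint Dice and soft cross-entropy loss can be sketched on a handful of pixels. Several details here are assumptions rather than the authors' settings: "soft" is interpreted as label smoothing, the smoothing factor of 0.05 is arbitrary, and the two terms are weighted equally.

```python
import math


def soft_cross_entropy(probs, labels, num_classes, smoothing=0.05):
    """Mean cross-entropy against label-smoothed targets.

    probs:  per-pixel class probabilities (each row sums to 1).
    labels: per-pixel integer class indices.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        for c in range(num_classes):
            target = (1.0 - smoothing) if c == y else 0.0
            target += smoothing / num_classes
            total -= target * math.log(max(p[c], 1e-12))
    return total / len(labels)


def dice_loss(probs, labels, num_classes, eps=1e-7):
    """One minus the mean soft Dice coefficient over classes."""
    dice_sum = 0.0
    for c in range(num_classes):
        inter = sum(p[c] for p, y in zip(probs, labels) if y == c)
        pred = sum(p[c] for p in probs)
        true = sum(1 for y in labels if y == c)
        dice_sum += (2.0 * inter + eps) / (pred + true + eps)
    return 1.0 - dice_sum / num_classes


def joint_loss(probs, labels, num_classes):
    """Equal-weight sum of the two terms (the weighting is an assumption)."""
    return (soft_cross_entropy(probs, labels, num_classes)
            + dice_loss(probs, labels, num_classes))


# Two pixels, two classes: an uninformative prediction versus a confident one.
labels = [0, 1]
uniform = [[0.5, 0.5], [0.5, 0.5]]
confident = [[0.9, 0.1], [0.1, 0.9]]
print(joint_loss(uniform, labels, 2) > joint_loss(confident, labels, 2))  # True
```

The cross-entropy term penalizes each pixel separately, while the Dice term is computed per class over all pixels, which makes it less sensitive to how many pixels a class occupies.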

To evaluate the classification accuracy of the model, this study uses overall accuracy (OA), mean intersection over union (mIoU), and the mean F1 score (mF1). The OA measures the proportion of correct predictions, the IoU assesses the overlap between the predictions and the ground truth, the mIoU averages the IoU across classes, and the mF1 averages the F1 scores across classes. The formulas are as follows:

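$$OA = \frac{\sum_{k=1}^{K} TP_k}{N}, \quad N = TP_k + FP_k + TN_k + FN_k$$

$$IoU_k = \frac{TP_k}{TP_k + FP_k + FN_k}, \quad mIoU = \frac{1}{K} \sum_{k=1}^{K} IoU_k$$

$$F1_k = \frac{2\,TP_k}{2\,TP_k + FP_k + FN_k}, \quad mF1 = \frac{1}{K} \sum_{k=1}^{K} F1_k$$

where $TP_k$, $FP_k$, $TN_k$, and $FN_k$ represent the number of true positives, false positives, true negatives, and false negatives, respectively, for the specific class indexed by k, $K$ is the number of classes, and $N$ is the total number of pixels, which is the same for every class.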
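The three metrics can be computed from a confusion matrix, as in the following sketch. The matrix layout (rows are true classes, columns are predicted classes) and the convention of scoring an absent class as 0 are assumptions made for this example.

```python
def segmentation_metrics(conf):
    """Compute OA, mIoU and mF1 from a K x K confusion matrix.

    conf[i][j] counts pixels whose true class is i and predicted class is j.
    """
    k = len(conf)
    total = sum(sum(row) for row in conf)
    ious, f1s = [], []
    for c in range(k):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # true class c, predicted otherwise
        fp = sum(conf[r][c] for r in range(k)) - tp  # predicted c, true class otherwise
        denom = tp + fp + fn
        ious.append(tp / denom if denom else 0.0)
        f1s.append(2 * tp / (2 * tp + fp + fn) if denom else 0.0)
    oa = sum(conf[c][c] for c in range(k)) / total
    return oa, sum(ious) / k, sum(f1s) / k


# Toy two-class example with 20 pixels.
oa, miou, mf1 = segmentation_metrics([[8, 2],
                                      [1, 9]])
print(f"OA={oa:.4f} mIoU={miou:.4f} mF1={mf1:.4f}")
```

Because mIoU and mF1 average over classes rather than pixels, a small class counts as much as a large one, which is why they can be well below the OA on imbalanced data.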

4.2. Comparative Experimental Results Analysis

The proposed MFCFNet is evaluated against seven typical semantic segmentation models (DeepLabV3+ [23], SegNet [50], HRFormer [51], UNetFormer [52], SegFormer [53], BANet [54], and DCSwin [55]) on the SLWGID. The comparison results of the models are quantitatively assessed, with Table 3 and Table 4 presenting the overall and category-specific evaluation metrics for each model. Compared to the other models, MFCFNet achieves higher mIoU, mF1, and OA values on SLWGID, with scores of 78.12%, 87.05%, and 93.23%, respectively. In the segmentation results for individual categories, MFCFNet records the highest IoU and F1 scores for eight of the nine categories, the exception being forest. SegNet aggregates multiscale features but struggles with interclass similarities and intraclass differences, leading to subpar performance in fine category segmentation. Compared to DeepLabV3+, MFCFNet, with DSFCF and GLAB, improves both overall and detailed semantic extraction, achieving a 10.23% increase in the mIoU and a 7.89% increase in the mF1 score. Models such as SegFormer, HRFormer, UNetFormer, and DCSwin incorporate attention mechanisms to establish global dependencies, increasing the aggregation of global and local semantic information. Although they outperform earlier CNN-based methods, their categorywise segmentation accuracy still lags behind that of MFCFNet.

Table 3. Quantitative comparison results on the SLWGID.

Table 4. Classification performance of different methods on the SLWGID.

MFCFNet performs excellently across most landscape types in Table 4, achieving the highest IoU and F1 scores in eight categories, including water, grassland, farmland, and building, demonstrating the model’s strong generalization ability in diverse wetland landscapes. While MFCFNet shows strong performance in most categories, its feature representation for the forest category is still challenged by structural diversity and scale variation. In contrast, some comparison models, such as DCSwin and BANet, may leverage global modeling or dual-path mechanisms to respond more effectively to specific types of forested areas, achieving slightly better results for this category under certain structural conditions. Nevertheless, MFCFNet still maintains a leading position in overall classification accuracy, confirming its comprehensive advantages in complex remote sensing segmentation tasks.

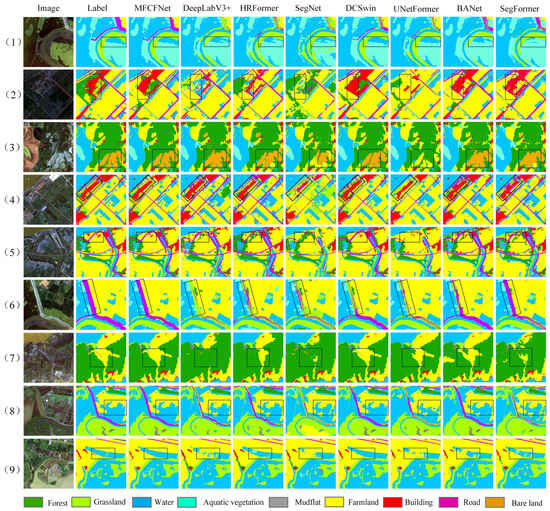

We conduct a qualitative assessment of the classification results from different models. The segmentation results of various methods on SLWGID are presented in Figure 6. Owing to the complex land cover in the Shengjin Lake area, there are significant differences within the same land cover type; for example, farmland exhibits various morphological features. Additionally, different categories, such as farmland, grassland, and forest, share similar spectral characteristics, making segmentation quite challenging. The classic CNN segmentation algorithms DeepLabV3+ and SegNet focus on local features, which makes it difficult to ensure the completeness and accuracy of the segmented objects. As shown in the segmentation results in Figure 6(3,4,6), there are significant misclassifications and blurred boundaries among farmland, forest, and bare land. In contrast, MFCFNet more effectively captures and aggregates global and local contextual information, allowing for the extraction of more consistent features within categories. HRFormer and SegFormer leverage transformers to obtain global contextual information; however, their performance in segmenting objects in close proximity suffers from overlapping semantic information. MFCFNet, by comparison, enhances the ability of the model to perceive spatial positions and boundary details. In the black-boxed areas of the figure, where farmland, forest, and grassland patches are interwoven, MFCFNet yields more complete and accurate segmentations of different categories. As shown in Figure 6(1,4,9), the segmentation boundaries for water, grassland, and farmland are more distinct and continuous in MFCFNet than in models such as SegNet, DCSwin, and SegFormer.

Figure 6. Qualitative comparison results on the SLWGID. Subfigures (1–9) show the comparison experiment results of nine test images. The black boxes highlight the differences in classification details among different comparative methods.

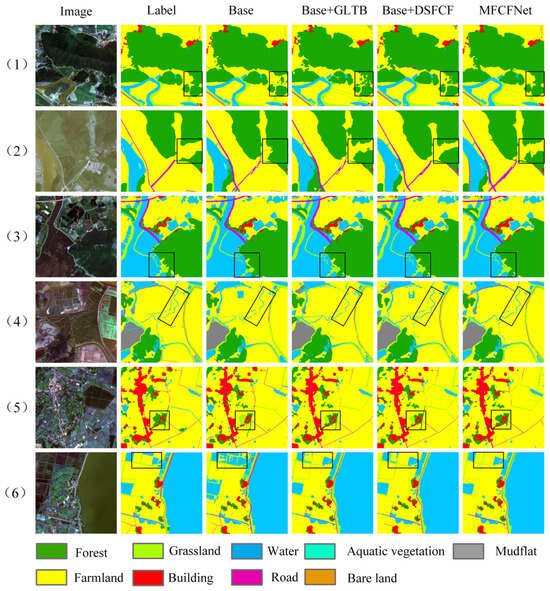

4.3. Ablation Experiments

To evaluate the effectiveness of each module in the proposed MFCFNet, we conduct ablation experiments on the SLWGID. The base model does not include the GLAB and DSFCF modules. We assess the effect of GLAB and DSFCF on segmentation performance separately.

The quantitative results are shown in Table 5. Adding the DSFCF module to the baseline model increases the mIoU by 0.63% and the mF1 score by 0.51%. When GLAB is added to the baseline, the mIoU improves by 0.35%, and the mF1 score improves by 0.40%. With both DSFCF and GLAB integrated into the baseline, the mIoU increases by 0.88%, the mF1 score increases by 0.86%, and the OA increases by 0.42%.

Table 5. Quantitative results of the ablation study on the SLWGID.

The qualitative results are shown in Figure 7. The classification results of the base model show that the forests within the black box are not extracted well, with significant confusion between forests and farmland. After incorporating the GLAB, the previously unrecognized portions of the forest are segmented. When the DSFCF module is added to the base model, the completeness of forest extraction improves significantly. A similar pattern is observed in Figure 7(2), where the model better differentiates between farmland and forest after the inclusion of both the DSFCF and GLAB. Figure 7(4,5) further demonstrate that with the addition of both modules, the ability of the model to recognize small targets, such as narrow water and small forest patches, improves significantly. Figure 7(3,6) show that the GLAB improves segmentation among forests and farmland, effectively reducing confusion between different categories. In summary, the results clearly show that adding both modules significantly improves the accuracy of feature extraction for land covers with similar characteristics and the ability of the model to recognize features with intraclass variations. This leads to more precise, distinct, and complete segmentation results than those of the base model.

Figure 7. Qualitative results of the ablation study on the SLWGID. Subfigures (1–6) show the ablation experiment results of six test images. The black boxes highlight the differences in classification details of ablation experiments.

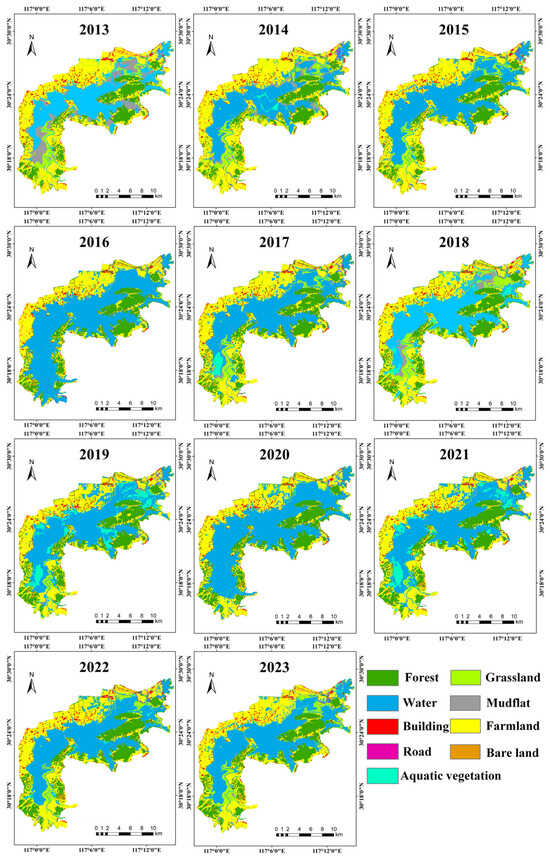

4.4. Classification Results for the Shengjin Lake Wetland from 2013 to 2023

To assess the classification performance of the model on Shengjin Lake images from 2013 to 2023, and to ensure that the landscape classification results are reliable enough for the subsequent landscape pattern analysis, two 5000 m × 5000 m plots that were not used in training were selected for the accuracy evaluation of each year’s image. The results are presented in Table 6. Except for the 2013 image, whose overall accuracy (OA) was below 90%, all images had OAs greater than 90%, with a maximum OA of 93.48%.

Table 6. Test evaluation results on Shengjin Lake images from 2013 to 2023.

We applied MFCFNet to classify the landscape of Shengjin Lake National Nature Reserve using remote sensing images from 2013 to 2023. The classification results are shown in Figure 8. MFCFNet demonstrated strong overall segmentation performance, distinguishing between different land cover types with high precision. This was particularly notable for classes with similar spectral features and close color textures, such as forests and farmland. Additionally, the model successfully extracted finer details, such as sparse patches of trees between farmland and residential buildings. Although the Shengjin Lake area features a variety of farmland types with significant within-class differences in texture and shape, the model significantly reduced misclassification of these types. The model also extracted large lakes with high integrity and effectively captured many small ponds and fine streams in the study area.

Figure 8. Landscape classification results for Shengjin Lake National Nature Reserve from 2013 to 2023.

4.5. Uncertainty Analysis of Classification Results

Although MFCFNet achieved satisfactory classification performance in the Shengjin Lake wetland, there remains a degree of uncertainty in its practical application, mainly due to the following factors:

- (1) Variations in remote sensing image quality. In multi-temporal remote sensing classification tasks, differences in image quality across years (such as spatial resolution, radiometric consistency, cloud coverage, and illumination conditions) can significantly affect classification stability, especially over long time series. Although this study prioritized cloud-free and high-quality images, limitations in satellite acquisition conditions made it difficult to avoid image quality discrepancies in certain years. For example, some images were slightly affected by haze or exhibited edge blurring, which compromised the expression of surface feature information in specific areas, leading to misclassification and reduced accuracy. These inter-annual inconsistencies in image quality were among the key factors affecting the stability and reliability of long-term wetland classification in Shengjin Lake.

- (2) Limitations in temporal sampling frequency and the influence of seasonal hydrological fluctuations. In this study, only one remote sensing image per year was selected for classification analysis. This relatively low temporal sampling frequency may have been insufficient to fully capture the seasonal dynamics of the wetland throughout the year. Certain short-term or transient changes, such as temporary water accumulation after heavy rainfall or seasonal vegetation growth and degradation, may have gone unrecorded, potentially leading to misclassification. Moreover, the Shengjin Lake wetland exhibited significant seasonal hydrological fluctuations, largely influenced by monsoonal precipitation and water regulation. Some typical landscape types, such as water, mudflats, grasslands, and aquatic vegetation, displayed substantial spectral differences between wet and dry seasons. Single-date imagery is often inadequate to reflect the full extent of these annual variations, which may result in temporal inconsistencies and the spatial misclassification of these categories, thereby affecting the overall accuracy and stability of classification results. To better capture the dynamic evolution of wetland landscapes, future work could incorporate multi-season or higher frequency imagery for classification and analysis. The use of higher temporal resolution data would improve the model’s ability to detect landscape transitions and enhance classification precision.

5. Analysis of Landscape Pattern Evolution in Shengjin Lake

5.1. Analysis of Landscape Type Transition

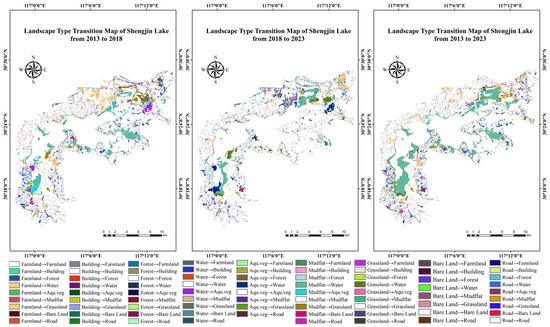

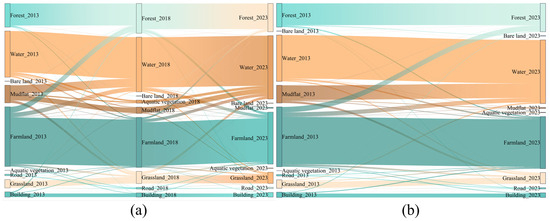

The landscape type transfer matrix quantifies area conversions between different landscape types, showing the direction and extent of change [56]. To analyze the dynamic transfer of landscape types in Shengjin Lake, we created landscape type transfer maps for the periods 2013–2018, 2018–2023, and 2013–2023, as shown in Figure 9. We conducted a transfer matrix analysis and visualized the results as a Sankey diagram (Figure 10). Additionally, we statistically analyzed the transfer area and direction, as well as the transfer-out rate and transfer-in rate, for each type from 2013 to 2023; the landscape type transfer matrices are presented in Table 7.

Figure 9. Wetland landscape type transfer map of Shengjin Lake in 2013–2018, 2018–2023, and 2013–2023.

Figure 10. Wetland landscape type transfer Sankey map of Shengjin Lake: (a) 2013–2018 and 2018–2023, (b) 2013–2023.

Table 7. Wetland landscape type transfer matrix for Shengjin Lake in 2013–2023.
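A transfer matrix of this kind can be obtained by cross-tabulating two co-registered classification maps pixel by pixel. The sketch below illustrates the idea on toy data; it is not the procedure used in this study. It assumes that the transfer-out rate is the transferred area divided by the source type's area in the earlier map, and that the transfer-in rate is the transferred area divided by the destination type's area in the later map. The function names, class names, and the pixel area of 1 hm² are hypothetical.

```python
def transfer_matrix(before, after, classes, pixel_area_hm2):
    """Cross-tabulate two co-registered label maps into an area matrix.

    matrix[a][b] = area (hm^2) that was class a in `before` and class b in `after`.
    """
    index = {name: i for i, name in enumerate(classes)}
    k = len(classes)
    matrix = [[0.0] * k for _ in range(k)]
    for row_before, row_after in zip(before, after):
        for src, dst in zip(row_before, row_after):
            matrix[index[src]][index[dst]] += pixel_area_hm2
    return matrix


def transfer_rates(matrix, src, dst):
    """Return (transfer-out rate, transfer-in rate) for the src -> dst conversion.

    Assumed definitions: out rate = moved area / initial area of src;
    in rate = moved area / final area of dst.
    """
    initial_src = sum(matrix[src])
    final_dst = sum(row[dst] for row in matrix)
    moved = matrix[src][dst]
    out_rate = moved / initial_src if initial_src else 0.0
    in_rate = moved / final_dst if final_dst else 0.0
    return out_rate, in_rate


# Toy 2 x 3 label maps (illustrative only).
classes = ["mudflat", "water", "farmland"]
before = [["mudflat", "mudflat", "water"],
          ["farmland", "farmland", "water"]]
after = [["water", "mudflat", "water"],
         ["farmland", "water", "water"]]

matrix = transfer_matrix(before, after, classes, pixel_area_hm2=1.0)
out_rate, in_rate = transfer_rates(matrix, classes.index("mudflat"), classes.index("water"))
print(matrix)
print(f"mudflat -> water: out rate {out_rate:.0%}, in rate {in_rate:.0%}")
```

Row sums of the matrix give each type's area in the earlier map and column sums give its area in the later map, which is why the two rates for the same conversion generally differ.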

According to Figure 10, the most significant transfer from 2013 to 2018 was from mudflats to water. The conversion of the mudflat area was significantly influenced by the water level of Shengjin Lake. Additionally, a notable transfer from farmland to forest occurred, mainly in the western area of the upper lake of Shengjin Lake, where the “Grain for Green” policy facilitated the conversion of farmland to forest. During the period from 2018 to 2023, the dynamic transfer of certain landscape types in the water area was quite evident. Owing to seasonal changes in the water level of the Shengjin Lake wetland, the rising water level submerged the previously exposed mudflats, grasslands, and aquatic vegetation. Additionally, there was a certain degree of transfer from forest and water to farmland. From 2013 to 2023, the most significant transfer was from mudflats to water. In December 2013, during the dry season, large mudflats were exposed due to low water levels. By April 2023, the water level had risen significantly, with nearly no mudflats visible, resulting in a substantial transfer of 2969.62 hm². The transfer from farmland to forest was also notable, covering 1094.88 hm², with a transfer-in rate of 18.89%. Additionally, farmland-to-water transfers were evident, primarily through conversion to ponds for aquaculture, with a transfer area of 872.14 hm² and a transfer-out rate of 7.13%.

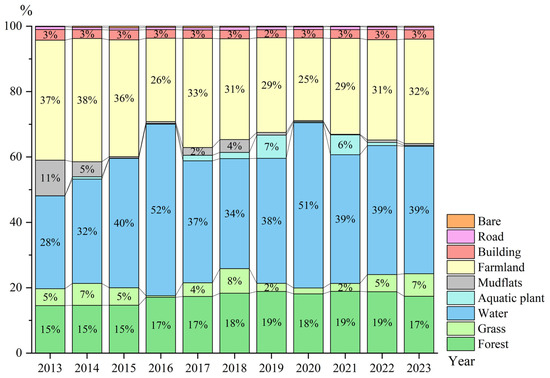

5.2. Analysis of Landscape Type Area Changes

The analysis of changes in landscape type area is key to analyzing the dynamic changes in landscape patterns [57]. We calculated the area of each landscape type from 2013 to 2023 and summarized the results in an area proportion chart, as shown in Figure 11. The Shengjin Lake area is dominated by water, farmland, and forest, with smaller areas of grassland, mudflats, buildings, roads, and bare land. The forest area remained relatively stable, increasing slowly from 15% in 2013 to 19% in 2019. This change was related primarily to the “Grain for Green” policy, which resulted in a corresponding decrease in the proportion of farmland area. However, from 2020 to 2023, the forest area slightly decreased to 17% because some mountain forests were excavated and transformed into bare land. The changes in the farmland, mudflat, and grassland areas were significantly influenced by seasonal water level changes. The areas of these three types were closely tied to the water area, tending to decrease as the water area increased, because during high water levels grasslands, mudflats, and some lakeside farmlands could become submerged. Notably, in 2016 and 2019, the proportion of water exceeded 50% because the image acquisition times in these two years coincided with extreme precipitation events that rapidly increased the water level. Consequently, the water area increased significantly, submerging large areas of grassland, mudflat, and lakeside farmland and reducing the area proportions of these three types. The reduction in farmland area was also influenced by the transformation of some farmland into ponds for aquaculture. The area proportions of roads and buildings remained relatively small and stable over the 11 years.

Figure 11. Area proportions of Shengjin Lake wetland landscape types from 2013 to 2023.
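The area proportions plotted in such a chart are pixel shares of each landscape type in a classified map. A minimal sketch of that calculation follows, assuming equal-area pixels; the function name and the two toy label maps (one low-water and one high-water acquisition) are hypothetical and only illustrate how a rising water level shifts the proportions.

```python
from collections import Counter


def area_proportions(label_map):
    """Return {class: percentage of all pixels} for one classified map."""
    counts = Counter(label for row in label_map for label in row)
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


# Hypothetical 2 x 4 label maps for a low-water and a high-water acquisition.
maps_by_image = {
    "dry-season image": [["water", "mudflat", "grassland", "farmland"],
                         ["water", "mudflat", "farmland", "forest"]],
    "wet-season image": [["water", "water", "water", "farmland"],
                         ["water", "water", "farmland", "forest"]],
}
for name, label_map in maps_by_image.items():
    shares = area_proportions(label_map)
    print(name, {k: round(v, 1) for k, v in sorted(shares.items())})
```

In the toy wet-season map, the mudflat and grassland pixels are relabeled as water, so their shares drop to zero while the water share rises, mirroring the inverse relationship described above.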

5.3. Analysis of Landscape Pattern Characteristic Changes

Landscape indices are quantitative measures that describe the spatial characteristics and dynamic changes in landscape patterns, reflecting information about the composition and spatial configuration of landscape structures [58]. In this study, we select appropriate landscape indices at both the landscape level and type level to analyze the structure and function of landscape patterns. Shengjin Lake, a typical seasonal lake wetland, features a complex water–land interlaced landscape, rich habitat types, and dynamic water level changes. Therefore, in studying the wetland landscape evolution of Shengjin Lake, it is essential to focus not only on the overall landscape pattern changes but also on the specific changes in wetland types. At the landscape level, we select NP (Number of Patches), MPS (Mean Patch Size), ED (Edge Density), LSI (Landscape Shape Index), COHESION (Cohesion Index), CONTAG (Contagion Index), IJI (Interspersion and Juxtaposition Index), SHDI (Shannon’s Diversity Index), and AI (Aggregation Index). At the type level, we select COHESION, LPI (Largest Patch Index), LSI, and AI. Together, these indices reflect the fragmentation, connectivity, boundary complexity, aggregation, and diversity of the wetland landscape in the study area, providing a comprehensive understanding of the wetland landscape structure and its dynamic changes. The names and meanings of these landscape indices are shown in Table 8.

Table 8. Landscape pattern indices and meanings.

Moreover, there are correlations between landscape indices. For example, NP and MPS measure the degree of landscape fragmentation and patch size characteristics, respectively. These two indices are negatively correlated: an increase in the number of patches is usually accompanied by a decrease in the size of individual patches, which intensifies landscape fragmentation. ED and LSI reflect the complexity of landscape edges and patch shapes, respectively, and are positively correlated, suggesting that an increase in edge length is often associated with more complex patch shapes. CONTAG and SHDI measure the aggregation of landscape types and diversity, respectively, and are negatively correlated, revealing a trend where increased landscape aggregation is accompanied by a decrease in type diversity. AI and COHESION both characterize the spatial aggregation and connectivity of patches and are positively correlated, indicating that enhanced patch aggregation contributes to improving the overall landscape connectivity. IJI measures the evenness of interspersion between different landscape types. It is positively correlated with SHDI and negatively correlated with CONTAG, meaning that when the landscape has rich and evenly distributed types, the interspersion between different types is higher, while in a more aggregated landscape, the interspersion decreases. These correlations reflect the trade-offs between fragmentation and connectivity, as well as between aggregation and diversity.
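To make the relationships between these indices concrete, the following sketch computes four landscape-level indices (NP, MPS, ED, and SHDI) from a small categorical grid. It is an illustrative simplification rather than the software used in this study: it assumes an 8-neighbour rule for delineating patches, counts only edges between unlike cells inside the grid (the outer landscape boundary is excluded), and uses a hypothetical 100 m cell size so that each cell covers 1 ha.

```python
import math
from collections import Counter, deque


def landscape_indices(grid, cell_size_m):
    """Return NP, MPS (ha), ED (m/ha), and SHDI for a categorical grid."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    n_patches = 0
    # 8-neighbour flood fill: each connected same-class region is one patch.
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            n_patches += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and not seen[ny][nx]
                                and grid[ny][nx] == grid[y][x]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    # Edge count: shared cell sides between cells of different classes.
    edges = 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols and grid[r][c] != grid[r][c + 1]:
                edges += 1
            if r + 1 < rows and grid[r][c] != grid[r + 1][c]:
                edges += 1
    area_ha = rows * cols * cell_size_m ** 2 / 10_000
    counts = Counter(v for row in grid for v in row)
    total = rows * cols
    shdi = -sum((n / total) * math.log(n / total) for n in counts.values())
    return {
        "NP": n_patches,
        "MPS": area_ha / n_patches,
        "ED": edges * cell_size_m / area_ha,
        "SHDI": shdi,
    }


# Toy landscape: W = water, F = forest, G = grassland (illustrative only).
grid = [
    ["W", "W", "F", "F"],
    ["W", "W", "F", "F"],
    ["G", "G", "F", "F"],
    ["G", "G", "W", "W"],
]
indices = landscape_indices(grid, cell_size_m=100)
print(indices)
```

The sketch also shows why NP and MPS move in opposite directions: for a fixed total area A, MPS = A / NP, so any increase in the number of patches lowers the mean patch size by construction.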

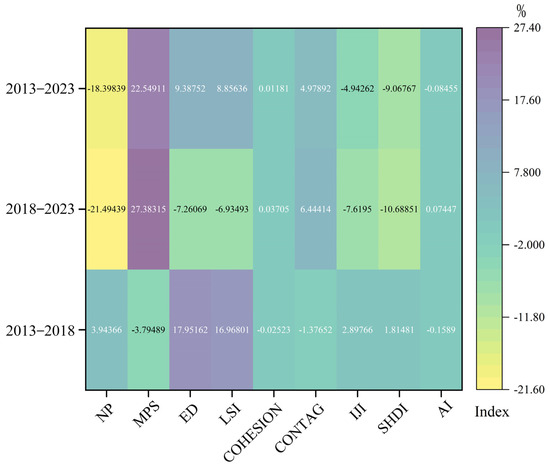

5.3.1. Landscape Index Analysis—Landscape Level

Landscape-level indices can comprehensively describe the overall changes in the landscape patterns in a study area [59]. We calculate the landscape-level indices for the years 2013, 2018, and 2023, and the results are presented in Table 9. Additionally, we analyze the changes in landscape indices during 2013–2018, 2018–2023, and 2013–2023, as shown in Figure 12.

Table 9. Wetland landscape-level indices for Shengjin Lake.

Figure 12. Change rate of the landscape-level index.

From 2013 to 2018, NP increased by 3.94% but decreased by 21.49% from 2018 to 2023, resulting in a reduction from 12,425 to 10,139 patches over the entire period from 2013 to 2023, a decrease of 18.40%. This indicates a reduction in landscape fragmentation, which is beneficial for ecological connectivity and biodiversity. MPS increased by 22.55% from 2.68 ha in 2013 to 3.29 ha in 2023, indicating improved ecological connectivity. ED initially increased, from 88.14 m/ha in 2013 to 103.97 m/ha in 2018, before decreasing to 96.42 m/ha in 2023. From 2013 to 2023, ED increased by 9.39%, indicating an increase in edge effects among landscape types and greater complexity in boundary shapes. The LSI increased from 42.62 to 46.39, with an increase of 8.86%. This finding indicates an increase in the complexity of patch shapes, which trend toward irregularity, suggesting that human activities have interfered with the landscape ecosystem, leading to more complex shapes. COHESION remained stable, increasing slightly from 99.88 in 2013 to 99.90 in 2023. CONTAG increased by 4.98% to 66.14%, reflecting high patch aggregation and stable integrity. The AI also remained high, indicating strong landscape connectivity and ecosystem stability. The SHDI initially increased before decreasing from 1.56 in 2013 to 1.42 in 2023, a reduction of 9.06%. This indicates a decrease in landscape richness and in the degree of fragmentation.
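The change rates quoted above are relative changes between two index values, and changes over consecutive periods combine multiplicatively rather than additively. The short sketch below recomputes two of them from the values given in the text; the helper names are illustrative, and because the inputs are the rounded values printed here, the last digit of a recomputed rate can differ slightly from a rate computed on unrounded data.

```python
def change_rate(start, end):
    """Relative change from start to end, in percent."""
    return (end - start) / start * 100.0


def compound_rate(*period_rates):
    """Combine consecutive percentage changes into one overall percentage change."""
    factor = 1.0
    for rate in period_rates:
        factor *= 1.0 + rate / 100.0
    return (factor - 1.0) * 100.0


# Values quoted in the text for 2013 and 2023.
reported = {"NP": (12425, 10139), "ED": (88.14, 96.42)}
for name, (v2013, v2023) in reported.items():
    print(f"{name}: {change_rate(v2013, v2023):+.2f}%")

# NP rose 3.94% (2013-2018) and then fell 21.49% (2018-2023).
print(f"NP compounded: {compound_rate(3.94, -21.49):+.2f}%")
```

Both routes give an overall NP decrease of about 18.40%, which is why the 3.94% increase and the 21.49% decrease do not simply sum to the 2013–2023 figure.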

5.3.2. Landscape Index Analysis—Type Level

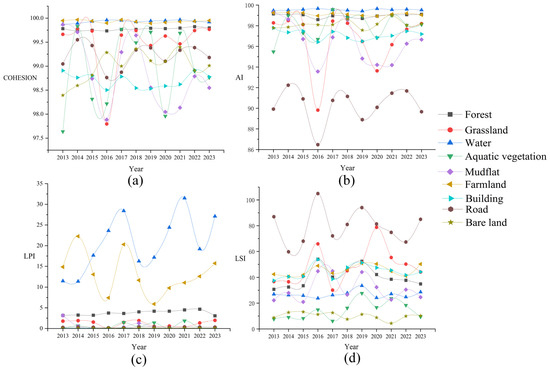

At the landscape type level, we select the cohesion index (COHESION), aggregation index (AI), largest patch index (LPI), and landscape shape index (LSI) to measure the changes in the connectivity, aggregation, dominance, and shape complexity of the nine landscape types, as shown in Figure 13.

Figure 13. Changes in the landscape-type-level index from 2013 to 2023: (a) COHESION; (b) AI; (c) LPI; (d) LSI.

The COHESION measures the connectivity of different landscape types. The COHESION of forest, water, and farmland is relatively high and remains stable, as these three land types occupy a large proportion of the total area of the study area, making them dominant landscapes. This finding indicates that the connectivity of patches within these three landscape types is high. The COHESION of aquatic vegetation, mudflats, and grasslands fluctuates significantly due to seasonal water level changes. When water levels rise, grassy and muddy patches are submerged, disrupting landscape connectivity and lowering the COHESION.

The LPI measures the dominance of landscapes within the study area. Farmland and water have higher LPIs than the other landscape types, indicating that their largest patches occupy a larger proportion of the study area and making them dominant landscapes. The LPI of water shows a fluctuating upward trend, increasing from 11.48% in 2013 to 28.41% in 2017, then decreasing to 16.26% in 2018, increasing to 31.49% in 2021, decreasing to 19.19% in 2022, and reaching 27.11% in 2023, an increase of 15.63 percentage points from 2013. This increase in the lake water area indicates that wetland conservation has improved water connectivity and biodiversity. The LPIs of forests remain stable, fluctuating between 3% and 4.5%.

The LSI describes the shape complexity of patches within landscape types. The LSI of roads is greater than that of other landscape types, primarily because roads are long, narrow, and irregular in shape, which gives them large perimeter-to-area ratios as they connect different areas. Farmland typically presents a more regular shape, whereas forest shapes, although irregular, have a smaller perimeter-to-area ratio, resulting in LSI values that are usually lower than those of roads. However, the LSI value of farmland exhibited some fluctuations between 2013 and 2023, indicating that human activities have influenced the complexity of farmland shapes. The LSI value of water remains stable, as seasonal water level changes have little impact on lake shape. Shoreline development restrictions preserve the natural form of the lake.

The AI measures the spatial aggregation of similar types of patches within the landscape. The AI values for farmland, forest, and water remain large and stable, indicating that these landscape types have highly aggregated patches. The AI trends for grasslands and mudflats are similar, showing significant decreases in 2016 and 2020. Specifically, the AI for grasslands decreased to 89.82% and 93.63%, whereas that for mudflats decreased to 93.56% and 94.20%, respectively. This decrease is attributed to climate factors and rising water levels, which submerged previously exposed patches, potentially increasing the number of small patches while reducing connectivity. Conversely, during lower water levels in other years, the exposed areas of grasslands and mudflats increased, leading to greater connectivity and higher AI values.
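The effect of patch splitting on AI can be illustrated with a small calculation. The sketch below follows the commonly used definition of the class-level aggregation index, namely the number of like adjacencies of a class (each shared cell side counted once) divided by the maximum number possible if the same cells formed a single, maximally compact patch. It is an assumption that this matches the exact software settings used in this study, and the grid and class labels are toy values.

```python
import math


def aggregation_index(grid, cls):
    """Class-level aggregation index (percent) using single-count like adjacencies."""
    rows, cols = len(grid), len(grid[0])
    cells = sum(v == cls for row in grid for v in row)
    like = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != cls:
                continue
            if c + 1 < cols and grid[r][c + 1] == cls:
                like += 1
            if r + 1 < rows and grid[r + 1][c] == cls:
                like += 1
    # Maximum like adjacencies for `cells` cells clumped into the most compact shape.
    n = math.isqrt(cells)          # side of the largest full square
    m = cells - n * n              # cells left over after filling that square
    if m == 0:
        max_like = 2 * n * (n - 1)
    elif m <= n:
        max_like = 2 * n * (n - 1) + 2 * m - 1
    else:
        max_like = 2 * n * (n - 1) + 2 * m - 2
    return 100.0 * like / max_like if max_like else 0.0


# Toy landscape: W = water, F = forest, G = grassland (illustrative only).
grid = [
    ["W", "W", "F", "F"],
    ["W", "W", "F", "F"],
    ["G", "G", "F", "F"],
    ["G", "G", "W", "W"],
]
for cls in ("F", "G", "W"):
    print(cls, round(aggregation_index(grid, cls), 2))
```

In this toy grid, forest and grassland each form one compact block and reach the maximum value, whereas water is split into two separate patches and scores lower, which is the same mechanism by which partial submergence can fragment grassland and mudflat patches and reduce their AI.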

6. Conclusions

This study explored the long-term spatiotemporal evolution of wetland landscape patterns by tackling classification challenges using a multilevel feature cross-fusion network. We applied MFCFNet to classify Shengjin Lake’s wetland landscapes from 2013 to 2023 and analyzed their evolution in terms of transitions, area changes, and pattern characteristics. The main conclusions were as follows:

- (1) MFCFNet combined the global modeling capability of the Swin Transformer with the local detail-capturing strength of CNN, enhancing the model’s ability to perceive intraclass consistency and interclass differences, thereby improving the separability of wetland landscapes. The constructed SLWGID was used to evaluate MFCFNet, achieving OA, mIoU, and F1 scores of 93.23%, 78.12%, and 87.05%, respectively.

- (2) The DSFCF bridged the semantic gap between deep and shallow features, alleviating the semantic confusion in feature fusion. The GLAB captured global spatial dependency semantic features and local detailed information. These modules enhanced the model’s accuracy in segmenting landscape types with similar features and spatial heterogeneity, while significantly improving its ability to extract fragmented and small objects.

- (3) MFCFNet was used to classify the Shengjin Lake wetland from 2013 to 2023, followed by an analysis of landscape pattern evolution. Seasonal water level fluctuations led to frequent transitions among water, mudflats, and grasslands. Forest area increased from 15% to 17%, and water expanded from 28% to 39%, while grassland, mudflats, and aquatic vegetation fluctuated. Landscape indices showed reduced fragmentation, increased boundary complexity, and improved aggregation and connectivity.

Author Contributions

Conceptualization, B.W. and S.S.; methodology, S.S. and Z.J.; software, Y.W.; validation, P.Z.; resources, C.P.; data curation, S.S.; writing—original draft preparation, S.S.; writing—review and editing, B.W.; visualization, Z.L.; project administration, S.X. and J.Q.; funding acquisition, B.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant numbers 41901282, 42101381, and 41971311), the Key Research and Development Project of Anhui Province (grant number 2022l07020027), International Science and Technology Cooperation Special (grant number 202104b11020022), Anhui Provincial Natural Science Foundation (grant number 2308085MD126), Hefei Municipal Natural Science Foundation (grant number 202323), Anhui Province Key Laboratory of Water Conservancy and Water Resources (grant number 2023SKJ06), Natural Resources Science and Technology Program of Anhui Province (grant number 2023-K-7), and Anhui Province Ecological Environment Science and Technology Project (grant number 2024hb001).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hosseiny, B.; Mahdianpari, M.; Brisco, B.; Mohammadimanesh, F.; Salehi, B. WetNet: A spatial–temporal ensemble deep learning model for wetland classification using Sentinel-1 and Sentinel-2. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4406014. [Google Scholar] [CrossRef]

- McCarthy, M.J.; Radabaugh, K.R.; Moyer, R.P.; Muller-Karger, F.E. Enabling efficient, large-scale high-spatial resolution wetland mapping using satellites. Remote Sens. Environ. 2018, 208, 189–201. [Google Scholar] [CrossRef]

- Wu, H.; Zeng, G.; Liang, J.; Chen, J.; Xu, J.; Dai, J.; Sang, L.; Li, X.; Ye, S. Responses of landscape pattern of China’s two largest freshwater lakes to early dry season after the impoundment of Three-Gorges Dam. Int. J. Appl. Earth Observ. Geoinf. 2017, 56, 36–43. [Google Scholar] [CrossRef]

- Guo, D.; Shi, W.; Qian, F.; Wang, S.; Cai, C. Monitoring the spatiotemporal change of Dongting Lake wetland by integrating Landsat and MODIS images, from 2001 to 2020. Ecol. Inform. 2022, 72, 101848. [Google Scholar] [CrossRef]

- Mao, D.; Wang, Z.; Du, B.; Li, L.; Tian, Y.; Jia, M.; Zeng, Y.; Song, K.; Jiang, M.; Wang, Y. National wetland mapping in China: A new product resulting from object-based and hierarchical classification of Landsat 8 OLI images. ISPRS J. Photogramm. Remote Sens. 2020, 164, 11–25. [Google Scholar] [CrossRef]

- Wu, Q.; Lane, C.R.; Li, X.; Zhao, K.; Zhou, Y.; Clinton, N.; Devries, B.; Golden, H.E.; Lang, M.W. Integrating LiDAR data and multi-temporal aerial imagery to map wetland inundation dynamics using Google Earth Engine. Remote Sens. Environ. 2019, 228, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Han, X.; Chen, X.; Feng, L. Four decades of winter wetland changes in Poyang Lake based on Landsat observations between 1973 and 2013. Remote Sens. Environ. 2015, 156, 426–437. [Google Scholar] [CrossRef]

- Xing, H.; Niu, J.; Feng, Y.; Hou, D.; Wang, Y.; Wang, Z. A coastal wetlands mapping approach of Yellow River Delta with a hierarchical classification and optimal feature selection framework. Catena 2023, 223, 106897. [Google Scholar] [CrossRef]

- Gao, Y.; Li, W.; Zhang, M.; Wang, J.; Sun, W.; Tao, R.; Du, Q. Hyperspectral and multispectral classification for coastal wetland using depthwise feature interaction network. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5512615. [Google Scholar] [CrossRef]

- Jamali, A.; Roy, S.K.; Ghamisi, P. WetMapFormer: A unified deep CNN and vision transformer for complex wetland mapping. Int. J. Appl. Earth Observ. Geoinf. 2023, 120, 103333. [Google Scholar] [CrossRef]

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Motagh, M. Random forest wetland classification using ALOS-2 L-band, RADARSAT-2 C-band, and TerraSAR-X imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 13–31. [Google Scholar] [CrossRef]

- Hu, Y.; Dong, Y.; Batunacun, B. An automatic approach for land-change detection and land updates based on integrated NDVI timing analysis and the CVAPS method with GEE support. ISPRS J. Photogramm. Remote Sens. 2018, 146, 347–359. [Google Scholar] [CrossRef]

- Yang, J.; Ren, G.; Ma, Y.; Fan, Y. Coastal wetland classification based on high resolution SAR and optical image fusion. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016. [Google Scholar]

- Zhou, K. Study on wetland landscape pattern evolution in the Dongping Lake. Appl. Water Sci. 2022, 12, 200. [Google Scholar] [CrossRef]

- Chen, Y.; Dong, Q.; Wang, X.; Zhang, Q.; Kang, M.; Jiang, W.; Wang, M.; Xu, L.; Zhang, C. Hybrid Attention Fusion Embedded in Transformer for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17. [Google Scholar] [CrossRef]

- Eigen, D.; Fergus, R. Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-Scale Convolutional Architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Rawat, W.; Wang, Z. Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 2017, 29, 2352–2449. [Google Scholar] [CrossRef]

- Cheng, X.; He, X.; Qiao, M.; Li, P.; Tian, Z. Enhanced contextual representation with deep neural networks for land cover classification based on remote sensing images. Int. J. Appl. Earth Observ. Geoinf. 2022, 107, 102706. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T.; Trevor, D. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.-C. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Rezaee, M.; Mahdianpari, M.; Zhang, Y.; Salehi, B. Deep convolutional neural network for complex wetland classification using optical remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3030–3039. [Google Scholar] [CrossRef]

- Pan, H. A feature sequence-based 3D convolutional method for wetland classification from multispectral images. Remote Sens. Lett. 2020, 11, 837–846. [Google Scholar] [CrossRef]

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Gill, E.; Molinier, M. A new fully convolutional neural network for semantic segmentation of polarimetric SAR imagery in complex land cover ecosystem. ISPRS J. Photogramm. Remote Sens. 2019, 151, 223–236. [Google Scholar] [CrossRef]

- Liao, X.; Tu, B.; Li, J.; Plaza, A. Class-wise graph embedding-based active learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5522813. [Google Scholar] [CrossRef]

- Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Esser, P.; Rombach, R.; Ommer, B. Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Yang, F.; Yang, H.; Fu, J.; Lu, H.; Guo, B. Learning texture transformer network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Han, Z.; Gao, Y.; Jiang, X.; Wang, J.; Li, W. Multisource remote sensing classification for coastal wetland using feature intersecting learning. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6008405. [Google Scholar] [CrossRef]

- Guo, F.; Meng, Q.; Li, Z.; Ren, G.; Wang, L.; Zhang, J.; Xin, R.; Hu, Y. Multisource feature embedding and interaction fusion network for coastal wetland classification with CNN and vision transformers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 62, 5509516. [Google Scholar]

- Wu, H.; Zhang, M.; Huang, P.; Tang, W. CMLFormer: CNN and Multi-scale Local-context Transformer network for remote sensing images semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7233–7241. [Google Scholar] [CrossRef]

- Ni, Y.; Liu, J.; Chi, W.; Wang, X.; Li, D. CGGLNet: Semantic segmentation network for remote sensing images based on category-guided global-local feature interaction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5615617. [Google Scholar] [CrossRef]

- Yang, Y.; Jiao, L.; Li, L.; Liu, X.; Liu, F.; Chen, P.; Yang, S. LGLFormer: Local-global lifting transformer for remote sensing scene parsing. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5602513. [Google Scholar] [CrossRef]

- Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and multiscale transformer fusion network for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 2004612. [Google Scholar] [CrossRef]

- Fan, L.; Zhou, Y.; Liu, H.; Li, Y.; Cao, D. Combining Swin transformer with UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5530111. [Google Scholar] [CrossRef]

- Xiang, X.; Gong, W.; Li, S.; Chen, J.; Ren, T. TCNet: Multiscale fusion of transformer and CNN for semantic segmentation of remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 3123–3136. [Google Scholar] [CrossRef]

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715. [Google Scholar] [CrossRef]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar]

- Gao, L.; Liu, H.; Yang, M.; Chen, L.; Wan, Y.; Xiao, Z.; Qian, Y. STransFuse: Fusing Swin transformer and convolutional neural network for remote sensing image semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10990–11003. [Google Scholar] [CrossRef]

- Li, H.; Li, H.; Li, C.; Wu, B.; Gao, J. Hybrid Swin transformer-CNN model for pore-crack structure identification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5909713. [Google Scholar] [CrossRef]

- Gao, Y.; Liang, Z.; Wang, B.; Wu, Y.; Liu, S. Inversion of aboveground biomass of grassland vegetation in Shengjin Lake based on UAV and satellite remote sensing images. Lake Sci. 2019, 31, 517–528. [Google Scholar]

- Yang, L.; Dong, B.; Wang, Q.; Sheng, S.W.; Han, W.Y.; Zhao, J.; Cheng, M.W.; Yang, S.W. Habitat suitability change of water birds in Shengjin Lake National Nature Reserve, Anhui Province. J. Lake Sci. 2015, 6, 1027–1034.

- Ye, Y.; Wang, M.; Zhou, L.; Lei, G.; Fan, J.; Qin, Y. Adjacent-level feature cross-fusion with 3D CNN for remote sensing image change detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5618214.

- Ju, Y.; Bohrer, G. Classification of wetland vegetation based on NDVI time series from the HLS dataset. Remote Sens. 2022, 14, 2107.

- Yang, H.; Liu, X.; Chen, Q.; Cao, Y. Mapping Dongting Lake wetland utilizing time series similarity, statistical texture, and superpixels with Sentinel-1 SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8235–8244.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. HRFormer: High-resolution transformer for dense prediction. arXiv 2021, arXiv:2110.09408.

- Wang, L.; Li, R.; Zhang, C.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Wang, L.; Li, R.; Wang, D.; Duan, C.; Wang, T.; Meng, X. Transformer meets convolution: A bilateral awareness network for semantic segmentation of very fine resolution urban scene images. Remote Sens. 2021, 13, 3065.

- Wang, L.; Li, R.; Duan, C.; Zhang, C.; Meng, X.; Fang, S. A novel transformer-based semantic segmentation scheme for fine-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6506105.

- Zhang, M.; Gong, Z.; Zhao, W.; A, D. Changes of wetland landscape pattern and its driving mechanism in Baiyangdian Lake in recent 30 years. Acta Ecol. Sin. 2016, 36, 12.

- Jiang, Z. Analysis of spatial-temporal changes of landscape pattern in Zhengzhou in recent 20 years. Jingwei World 2024, 4, 11–14.

- Chen, W.; Xiao, D.; Li, X. Classification, application, and creation of landscape indices. Chin. J. Appl. Ecol. 2002, 1, 121–125.

- Huang, M.; Yue, W.; Feng, S.; Zhang, J. Spatial and temporal evolution of habitat quality and landscape pattern in Dabie Mountain area of West Anhui Province based on InVEST model. Acta Ecol. Sin. 2020, 40, 12.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).