Combining Multi-View UAV Photogrammetry, Thermal Imaging, and Computer Vision Can Derive Cost-Effective Ecological Indicators for Habitat Assessment

Abstract

1. Introduction

- (1)

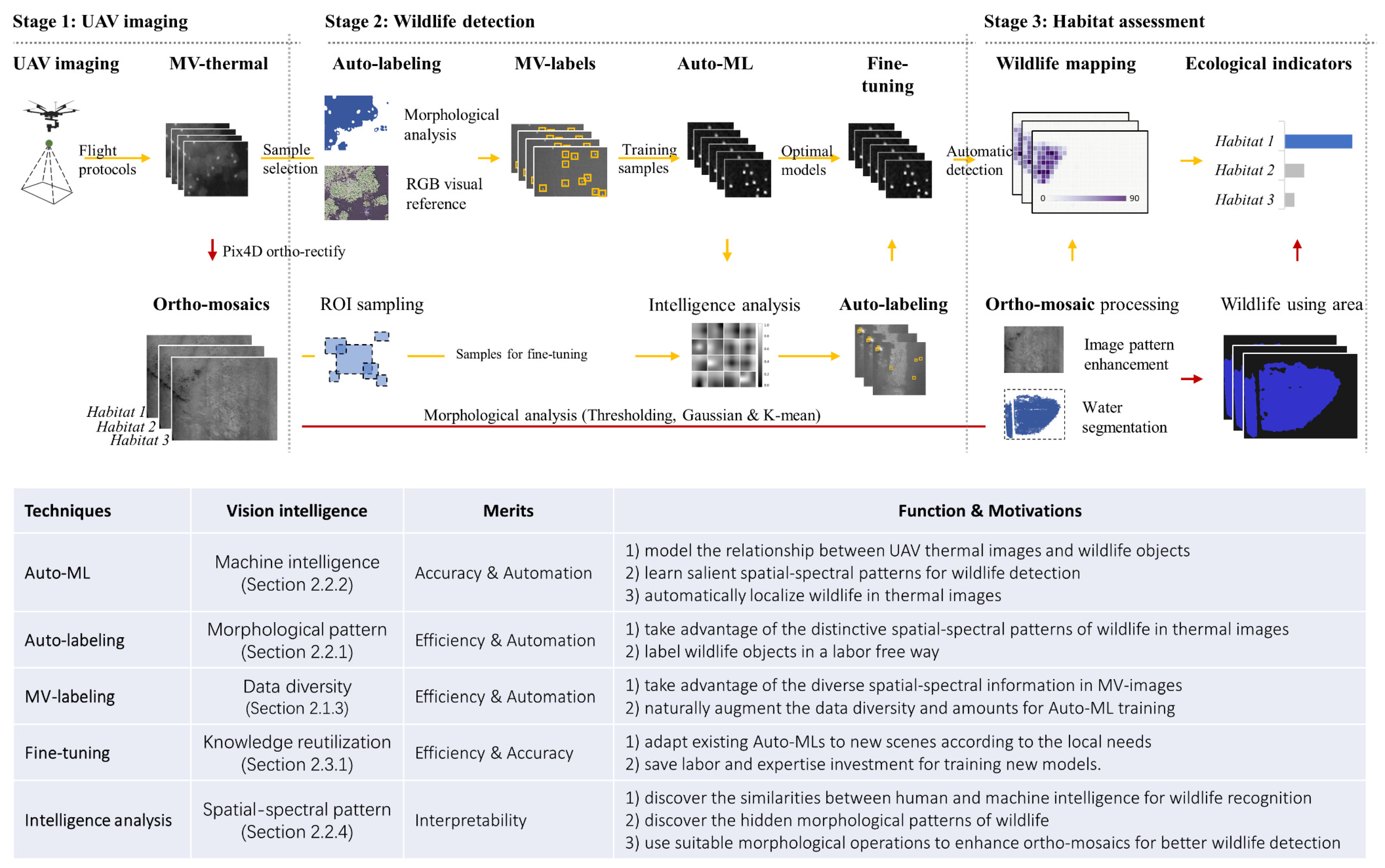

- The UAV imaging stage was designed to explore the potential of UAV photogrammetry for high-quality wildlife data collection. UAVs and thermal imaging were used and tested at different flight heights to conduct wildlife surveys in wetland habitats, and thermal ortho-mosaics were produced to detect wildlife (Section 2.1).

- (2)

- The wildlife detection stage was designed to explore the potential of thermal images, multi-view (MV) UAV image structure, and AutoML to achieve labor-free wildlife and inundation (water area) mapping (Section 2.2). Experiments with various AutoML architectures, training data sizes, and feature types were conducted to discover the optimal configuration of human labor, expertise, and computing investment for wildlife distribution mapping.

- (3)

- The habitat assessment stage was designed to explore how to transform the wildlife counts and distribution information into ecological indicators (including wildlife counts, usage area, and usage efficiency for intra- and inter-habitat comparison) to reflect the level of wildlife capacity and wildlife use (Section 2.3).
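To make the third stage more concrete, the following is a minimal sketch of how a binary wildlife detection map might be turned into the three indicators named above. The definitions used here are illustrative assumptions, not the definitions of this study: the count is taken as the number of connected detections, the usage area as the detections grown by a fixed pixel buffer, and the usage efficiency as the ratio of usage area to inundated area. The function name `habitat_indicators` and the parameters `pixel_area_m2` and `buffer_px` are hypothetical.

```python
import numpy as np
from scipy import ndimage


def habitat_indicators(wildlife_mask, water_mask, pixel_area_m2, buffer_px=3):
    """Illustrative indicators from binary maps (assumed definitions).

    wildlife_mask : 2D boolean array, True where wildlife was detected.
    water_mask    : 2D boolean array, True where the habitat is inundated.
    pixel_area_m2 : ground area covered by one pixel, in square metres.
    buffer_px     : hypothetical buffer (>= 1), in pixels, grown around detections.
    """
    wildlife_mask = np.asarray(wildlife_mask, dtype=bool)
    water_mask = np.asarray(water_mask, dtype=bool)

    # Wildlife count: one object per 4-connected group of detected pixels.
    _, count = ndimage.label(wildlife_mask)

    # Usage area (assumption): detections dilated by buffer_px pixels.
    cross = ndimage.generate_binary_structure(2, 1)
    used = ndimage.binary_dilation(wildlife_mask, structure=cross,
                                   iterations=buffer_px)
    usage_area = float(used.sum()) * pixel_area_m2

    # Usage efficiency (assumption): share of the water area that is used.
    water_area = float(water_mask.sum()) * pixel_area_m2
    efficiency = usage_area / water_area if water_area > 0 else float("nan")

    return {
        "wildlife_count": int(count),
        "usage_area_m2": usage_area,
        "water_area_m2": water_area,
        "usage_efficiency": efficiency,
    }
```

Because every quantity is expressed per habitat, values computed this way could be compared across habitats or across survey dates, provided the same buffer and pixel size are used throughout.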

2. Materials and Methods

2.1. UAV Imaging

2.1.1. Study Area

2.1.2. UAV Flight Protocols

2.1.3. MV UAV Images to Ortho-Mosaics

2.2. Wildlife Detection

2.2.1. AutoMV Labeling

- (1) Segment the image regions with pixel values larger than a local adaptive threshold, calculated as the local mean plus two times the local variance within a 16 × 16 sliding window for each pixel;

- (2) Remove image regions with area and solidity below thresholds determined by local knowledge of the wildlife shape and size;

- (3) Select the images with high labeling quality as training samples, with visual reference to the RGB images. High-quality labels are defined as images in which more than 50% of the wildlife are accurately labeled without obvious false positive labels (objects that are incorrectly labeled as birds).
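Steps (1) and (2) above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a single-band thermal image scaled to the range 0 to 1 (the variance term is scale-dependent), uses NumPy and SciPy, and computes solidity as the region area divided by the area of the convex hull of its pixel corners. The function names and the example thresholds `min_area` and `min_solidity` are hypothetical; in practice they would come from local knowledge of wildlife shape and size. Step (3) is a visual check against the RGB images and is not automated here.

```python
import numpy as np
from scipy import ndimage
from scipy.spatial import ConvexHull


def adaptive_threshold_mask(thermal, window=16, k=2.0):
    """Step (1): keep pixels above local mean + k * local variance."""
    img = np.asarray(thermal, dtype=np.float64)
    local_mean = ndimage.uniform_filter(img, size=window, mode="reflect")
    local_sq_mean = ndimage.uniform_filter(img ** 2, size=window, mode="reflect")
    # Var[x] = E[x^2] - E[x]^2, clipped to guard against rounding below zero.
    local_var = np.clip(local_sq_mean - local_mean ** 2, 0.0, None)
    return img > local_mean + k * local_var


def filter_regions(mask, min_area, min_solidity):
    """Step (2): drop regions that are too small or too irregular."""
    labels, n_regions = ndimage.label(mask)  # 4-connected components
    keep = np.zeros(labels.shape, dtype=bool)
    for region_id in range(1, n_regions + 1):
        region = labels == region_id
        rows, cols = np.nonzero(region)
        area = rows.size
        # Use the four corners of every pixel so the hull is never degenerate.
        corners = np.concatenate([
            np.stack([rows + dr, cols + dc], axis=1)
            for dr in (0, 1) for dc in (0, 1)
        ])
        hull_area = ConvexHull(corners).volume  # in 2D, .volume is the area
        solidity = area / hull_area
        if area >= min_area and solidity >= min_solidity:
            keep[region] = True
    return keep
```

A typical call would chain the two functions, for example `filter_regions(adaptive_threshold_mask(thermal), min_area=4, min_solidity=0.8)`, and then pass the resulting candidate labels to the visual quality check in step (3).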

2.2.2. Experiments with Different AutoML

2.2.3. Experiments for Optimal Model Selection

2.2.4. Intelligence Analyses

2.3. Application for Habitat Assessment

2.3.1. Wildlife Distribution by Fine-Tuning

2.3.2. Wildlife Using Area by Morphological Analysis

2.3.3. Ecological Indicators for Habitat Assessment

3. Results

3.1. Experiment for Wildlife Detection

3.2. Experiment for Intelligence Analyses

3.3. Experiment for Habitat Assessment

4. Discussion

4.1. High-Quality Wildlife Surveys

4.2. Vision Intelligence for Cost-Effective Habitat Quality Assessment

4.3. Ecological Meaning, Limitations, and Future Extension for Habitat Assessment

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Investments | Labeling (Seconds) | | Training (Minutes) |
|---|---|---|---|
| Average costs | 0.496 s per wildlife object | 0.015 s per wildlife object | 575 s |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, Q.; Zhang, L.; Drahota, J.; Woldt, W.; Varner, D.; Bishop, A.; LaGrange, T.; Neale, C.M.U.; Tang, Z. Combining Multi-View UAV Photogrammetry, Thermal Imaging, and Computer Vision Can Derive Cost-Effective Ecological Indicators for Habitat Assessment. Remote Sens. 2024, 16, 1081. https://doi.org/10.3390/rs16061081
